#regulate ai created images

Text

I have respect for actual artists and not people using AI generated programs. That shit is not art.

There's no heart or soul in it. And let's not forget, the AI is the one doing the work for the person.

Type something into one of the search or filter engines and the AI generates the random pics that show up in the person's filters. Come the fuck on?!

Seriously, it's not that fuckin hard to draw. Yeah, it takes time to develop and pick up more learning to use your skills, but at least it's a more honorable way than cheating and stealing!

6 notes

Photo

Agreed.

Ai generated images need to be controlled and regulated!

The music industry has shown that this is possible and that music, musicians and copyright can be protected! We need this for visual art too! The database scrapes all the art it can find on the internet! Artists didn’t give their permission and there is no way to opt out! Even medical reports and photos that are classified are being fed into those AI machines! It’s unethical and criminal and they weasel their way around legal terms!

Stand with artists! Support them! Regulate AI!

Edit: no I don’t want the art world to be like the music industry! This was just an example to show that with support and lobby some kind of moderation is possible! But no one fucking cares for artists!

1K notes

Text

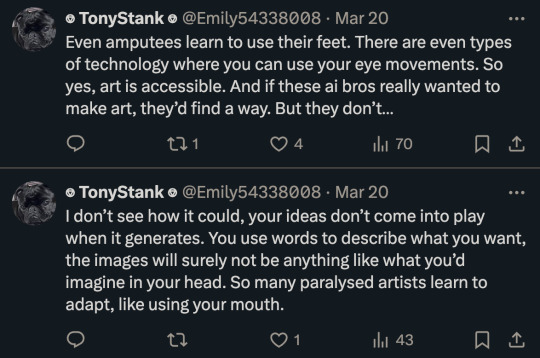

fuckin uuuuuuuuuuh sorry if this is a hot take or anything but “ai art is theft and unfair to the ppl who made the original art being sampled” doesnt suddenly become not true if the person generating the ai art is disabled

and its also possible to hold that opinion WITHOUT believing that disabled ppl are in any way “lazy” or “deserve” their disability or “it’s their fault” or any of that bullshit

i get that disability can be devastating to those who used to do a certain type of art that their body no longer allows in the traditional sense. but physical and mental disability have literally NEVER stopped people from creating art, EVER, in all of history. artists are creative. passionate people find a way.

and even if a way can’t be found…. stealing is still stealing. like. plagiarizing an article isn’t suddenly ethical just bc the person who stole it can’t read, and being against plagiarism doesn’t mean you hate people who can’t read.

the whole ableism angle on ai art confuses the hell out of me, if im honest. at least be consistent about it. if it’s ethical when disabled people do it, then it’s ethical when anyone does it

#lime rants#i think the only ethical way to use ai art is any way in which youre not claiming it as your own work#so like. you wanna generate a funny image to post in the groupchat#or you want some ideas for a piece of art that you ACTUALLY ARE gonna make yourself. but u need inspiration and cant find good extant image#unfortunately theres no way to regulate any of that#i also think theres something fucky about implying that disabled people cant do ANYTHING#even stuff that they historically have been able to do just fine#like ‘well disabled people are just useless so you cant expect them to do anything themselves’ uuuuh#disabled people can absolutely create their own art. they do not need to steal#and even if not that doesnt just make stealing okay

25 notes

Text

AI models can seemingly do it all: generate songs, photos, stories, and pictures of what your dog would look like as a medieval monarch.

But all of that data and imagery is pulled from real humans — writers, artists, illustrators, photographers, and more — who have had their work compressed and funneled into the training minds of AI without compensation.

Kelly McKernan is one of those artists. In 2023, they discovered that Midjourney, an AI image generation tool, had used their unique artistic style to create over twelve thousand images.

“It was starting to look pretty accurate, a little infringe-y,” they told The New Yorker last year. “I can see my hand in this stuff, see how my work was analyzed and mixed up with some others’ to produce these images.”

For years, leading AI companies like Midjourney and OpenAI have operated seemingly unfettered by regulation, but a landmark court case could change that.

On May 9, a California federal judge allowed ten artists to move forward with their allegations against Stability AI, Runway, DeviantArt, and Midjourney. This includes proceeding with discovery, which means the AI companies will be asked to turn over internal documents for review and allow witness examination.

Lawyer-turned-content-creator Nate Hake took to X, formerly known as Twitter, to celebrate the milestone, saying that “discovery could help open the floodgates.”

“This is absolutely huge because so far the legal playbook by the GenAI companies has been to hide what their models were trained on,” Hake explained...

“I’m so grateful for these women and our lawyers,” McKernan posted on X, above a picture of them embracing Ortiz and Andersen. “We’re making history together as the largest copyright lawsuit in history moves forward.” ...

The case is one of many AI copyright theft cases brought forward in the last year, but no other case has gotten this far into litigation.

“I think having us artist plaintiffs visible in court was important,” McKernan wrote. “We’re the human creators fighting a Goliath of exploitative tech.”

“There are REAL people suffering the consequences of unethically built generative AI. We demand accountability, artist protections, and regulation.”

-via GoodGoodGood, May 10, 2024

#ai#anti ai#fuck ai art#ai art#big tech#tech news#lawsuit#united states#us politics#good news#hope#copyright#copyright law

2K notes

Text

The Best News of Last Week - March 18

1. FDA to Finally Outlaw Soda Ingredient Prohibited Around The World

An ingredient once commonly used in citrus-flavored sodas to keep the tangy taste mixed thoroughly through the beverage could finally be banned for good across the US. BVO, or brominated vegetable oil, is already banned in many countries, including India, Japan, and nations of the European Union, and was outlawed in the state of California in October 2022.

2. AI makes breakthrough discovery in battle to cure prostate cancer

Scientists have used AI to reveal a new form of aggressive prostate cancer which could revolutionise how the disease is diagnosed and treated.

A Cancer Research UK-funded study found prostate cancer, which affects one in eight men in their lifetime, includes two subtypes. It is hoped the findings could save thousands of lives in future and revolutionise how the cancer is diagnosed and treated.

3. “Inverse vaccine” shows potential to treat multiple sclerosis and other autoimmune diseases

A new type of vaccine developed by researchers at the University of Chicago’s Pritzker School of Molecular Engineering (PME) has shown in the lab setting that it can completely reverse autoimmune diseases like multiple sclerosis and type 1 diabetes — all without shutting down the rest of the immune system.

4. Paris 2024 Olympics makes history with unprecedented full gender parity

In a historic move, the International Olympic Committee (IOC) has distributed equal quotas for female and male athletes for the upcoming Olympic Games in Paris 2024. It is the first time The Olympics will have full gender parity and is a significant milestone in the pursuit of equal representation and opportunities for women in sports.

Biased media coverage leads girls and boys to abandon sports.

5. Restored coral reefs can grow as fast as healthy reefs in just 4 years, new research shows

Planting new coral in degraded reefs can lead to rapid recovery – with restored reefs growing as fast as healthy reefs after just four years. Researchers studied these reefs to assess whether coral restoration can bring back the important ecosystem functions of a healthy reef.

“The speed of recovery we saw is incredible,” said lead author Dr Ines Lange, from the University of Exeter.

6. EU regulators pass the planet's first sweeping AI regulations

The EU is banning practices that it believes will threaten citizens' rights. "Biometric categorization systems based on sensitive characteristics" will be outlawed, as will the "untargeted scraping" of images of faces from CCTV footage and the web to create facial recognition databases.

Other applications that will be banned include social scoring; emotion recognition in schools and workplaces; and "AI that manipulates human behavior or exploits people’s vulnerabilities."

7. Global child deaths reach historic low in 2022 – UN report

The number of children who died before their fifth birthday has reached a historic low, dropping to 4.9 million in 2022.

The report reveals that more children are surviving today than ever before, with the global under-5 mortality rate declining by 51 per cent since 2000.

---

That's it for this week :)

This newsletter will always be free. If you liked this post you can support me with a small kofi donation here:

Buy me a coffee ❤️

Also don’t forget to reblog this post with your friends.

781 notes

Text

With Meta being a big A-hole, it's time to repost this

Join the Monday Meta boycotts to support the strike for the rights of not just artists, but EVERYONE who ever uploaded anything to any Meta site (like Instagram or Facebook).

Unless anyone can give me a proper, good reason not to, I fully support the ongoing protest against AI-generated images.

(Please read other people's posts too. This might give you a better understanding of the subject.)

While it has a lot of potential, even for artists, non-artist people took it to the point that it threatens our current and future job opportunities.

Honestly, it is a scary experience seeing it happening.

I've been drawing since I was able to hold a pencil. I've been drawing ALL MY LIFE, and just when I think it's going to pay off, when I think my pieces are getting good enough that I might be able to do it as a full-time job, something like this happens.

And I haven't even mentioned the art theft aspect of it. These pieces are NOT ORIGINAL WORKS. They're all based on other people's works, who DID NOT GIVE CONSENT TO USE THEIR WORKS.

I get it; it is wonderful to create something and frustrating when you don't have the skillset. But even this simple piece I created for this post has more life and feelings implied than all those AI "art pieces" put together.

It has my anger, sadness, disappointment, and fear in it.

It has that kindergarten girl in it who is still remembered by her former teachers for always drawing.

It has that suicidal preteen whose only happiness was writing her little stories and drawing the characters from them.

And it has that almost-adult high school student whose dream is to become like that one artist, to whom she owes her life.

That one artist has barely any idea that I exist and definitely has no idea what she has done. I'm not sure if she realizes her childish stories from back then saved a life, but they did.

This is what art is for me.

Something you spend your life on without realizing it, getting better and better. Something that is perfect because it's human-made. Something that has feelings in it, even if we don't intend them.

#no to ai generated art#no to ai art#regulate ai created images#ai art#ai artwork#support real artists#support human artists#human art#human artist#ai#ai art is art theft#ai art is not art#ai art is theft#ai art is fake art#art#original character#digital art#oc art#digital drawing#say no to ai art#digital aritst#say no to ai generated art#meta boycut#monday meta strike#meta strike#meta instagram#meta

24 notes

Note

I'm speaking as an artist in the animation industry here, it's hard not to be reactionary about AI image generation when it's already taking jobs from artists. Sure, for now it's indie gigs on book covers or backgrounds on one Netflix short, but how long until it'll be responsible for layoffs en-masse? These conversations can't be had in a vacuum. As long as tools like these are used as a way for companies to not pay artists, we cannot support them, give them attention, do anything but fight their implementation in our industry. It doesn't matter if they're art. They cannot be given a platform in any capacity until regulation around their use in the entertainment industry is established. If it takes billions of people refusing to call AI image generation "art" and immediately refusing to support anything that features it, then that's what it takes. Complacency is choosing AI over living artists who are losing jobs.

Call me a luddite but I'll die on this hill. Artists with degrees and 20+ years in the industry are getting laid off, the industry is already in shambles. If given the chance, no matter how vapid, shallow, or visibly generated the content is, if it's content that rakes in cash, companies will opt for it over meaningful art made by a person, every time. Again, this isn't a debate that can be had in a vacuum. Until universal basic income is a reality, until we can all create what we want in our spare time and aren't crippled under capitalism, I'm condemning AI image generation because I'd like to keep my job and not be homeless. It has to be a black and white issue until we have protections in place for it to not be.

you can condemn the technology all you like but it's not going to save you. the only thing that can actually address these concerns is unionization in the short term and total transformation of our economic system in the long term. you are a luddite in the most literal classical sense & just like the luddites as long as you target the machines and not the system that implements them you will lose just like every single battle against new immiserating technology has been lost since the invention of the steam loom.

594 notes

Text

The creation of sexually explicit "deepfake" images is to be made a criminal offence in England and Wales under a new law, the government says.

Under the legislation, anyone making explicit images of an adult without their consent will face a criminal record and unlimited fine.

It will apply regardless of whether the creator of an image intended to share it, the Ministry of Justice (MoJ) said.

And if the image is then shared more widely, they could face jail.

A deepfake is an image or video that has been digitally altered with the help of Artificial Intelligence (AI) to replace the face of one person with the face of another.

Recent years have seen the growing use of the technology to add the faces of celebrities or public figures - most often women - into pornographic films.

Channel 4 News presenter Cathy Newman, who discovered her own image used as part of a deepfake video, told BBC Radio 4's Today programme it was "incredibly invasive".

Ms Newman found she was a victim as part of a Channel 4 investigation into deepfakes.

"It was violating... it was kind of me and not me," she said, explaining the video displayed her face but not her hair.

Ms Newman said finding perpetrators is hard, adding: "This is a worldwide problem, so we can legislate in this jurisdiction, it might have no impact on whoever created my video or the millions of other videos that are out there."

She said the person who created the video is yet to be found.

Under the Online Safety Act, which was passed last year, the sharing of deepfakes was made illegal.

The new law will make it an offence for someone to create a sexually explicit deepfake - even if they have no intention to share it but "purely want to cause alarm, humiliation, or distress to the victim", the MoJ said.

Clare McGlynn, a law professor at Durham University who specialises in legal regulation of pornography and online abuse, told the Today programme the legislation has some limitations.

She said it "will only criminalise where you can prove a person created the image with the intention to cause distress", and this could create loopholes in the law.

It will apply to images of adults, because the law already covers this behaviour where the image is of a child, the MoJ said.

It will be introduced as an amendment to the Criminal Justice Bill, which is currently making its way through Parliament.

Minister for Victims and Safeguarding Laura Farris said the new law would send a "crystal clear message that making this material is immoral, often misogynistic, and a crime".

"The creation of deepfake sexual images is despicable and completely unacceptable irrespective of whether the image is shared," she said.

"It is another example of ways in which certain people seek to degrade and dehumanise others - especially women.

"And it has the capacity to cause catastrophic consequences if the material is shared more widely. This Government will not tolerate it."

Cally Jane Beech, a former Love Island contestant who earlier this year was the victim of deepfake images, said the law was a "huge step in further strengthening of the laws around deepfakes to better protect women".

"What I endured went beyond embarrassment or inconvenience," she said.

"Too many women continue to have their privacy, dignity, and identity compromised by malicious individuals in this way and it has to stop. People who do this need to be held accountable."

Shadow home secretary Yvette Cooper described the creation of the images as a "gross violation" of a person's autonomy and privacy and said it "must not be tolerated".

"Technology is increasingly being manipulated to manufacture misogynistic content and is emboldening perpetrators of Violence Against Women and Girls," she said.

"That's why it is vital for the government to get ahead of these fast-changing threats and not to be outpaced by them.

"It's essential that the police and prosecutors are equipped with the training and tools required to rigorously enforce these laws in order to stop perpetrators from acting with impunity."

288 notes

Text

Next year will be Big Tech’s finale. Critique of Big Tech is now common sense, voiced by a motley spectrum that unites opposing political parties, mainstream pundits, and even tech titans such as the VC powerhouse Y Combinator, which is singing in harmony with giants like a16z in proclaiming fealty to “little tech” against the centralized power of incumbents.

Why the fall from grace? One reason is that the collateral consequences of the current Big Tech business model are too obvious to ignore. The list is old hat by now: centralization, surveillance, information control. It goes on, and it’s not hypothetical. Concentrating such vast power in a few hands does not lead to good things. No, it leads to things like the CrowdStrike outage of mid-2024, when corner-cutting by Microsoft led to critical infrastructure—from hospitals to banks to traffic systems—failing globally for an extended period.

Another reason Big Tech is set to falter in 2025 is that the frothy AI market, on which Big Tech bet big, is beginning to lose its fizz. Major money, like Goldman Sachs and Sequoia Capital, is worried. They went public recently with their concerns about the disconnect between the billions required to create and use large-scale AI, and the weak market fit and tepid returns where the rubber meets the AI business-model road.

It doesn’t help that the public and regulators are waking up to AI’s reliance on, and generation of, sensitive data at a time when the appetite for privacy has never been higher—as evidenced, for one, by Signal’s persistent user growth. AI, on the other hand, generally erodes privacy. We saw this in June when Microsoft announced Recall, a product that would, I kid you not, screenshot everything you do on your device so an AI system could give you “perfect memory” of what you were doing on your computer (Doomscrolling? Porn-watching?). The system required the capture of those sensitive images—which would not exist otherwise—in order to work.

Happily, these factors aren’t just liquefying the ground below Big Tech’s dominance. They’re also powering bold visions for alternatives that stop tinkering at the edges of the monopoly tech paradigm, and work to design and build actually democratic, independent, open, and transparent tech. Imagine!

For example, initiatives in Europe are exploring independent core tech infrastructure, with convenings of open source developers, scholars of governance, and experts on the political economy of the tech industry.

And just as the money people are joining in critique, they’re also exploring investments in new paradigms. A crop of tech investors are developing models of funding for mission alignment, focusing on tech that rejects surveillance, social control, and all the bullshit. One exciting model I’ve been discussing with some of these investors would combine traditional VC incentives (fund that one unicorn > scale > acquisition > get rich) with a commitment to resource tech’s open, nonprofit critical infrastructure with a percent of their fund. Not as investment, but as a contribution to maintaining the bedrock on which a healthy tech ecosystem can exist (and maybe get them and their limited partners a tax break).

Such support could—and I believe should—be supplemented by state capital. The amount of money needed is simply too vast if we’re going to do this properly. To give an example closer to home, developing and maintaining Signal costs around $50 million a year, which is very lean for tech. Projects such as the Sovereign Tech Fund in Germany point a path forward—they are a vehicle to distribute state funds to core open source infrastructures, but they are governed wholly independently, and create a buffer between the efforts they fund and the state.

Just as composting makes nutrients from necrosis, in 2025, Big Tech’s end will be the beginning of a new and vibrant ecosystem. The smart, actually cool, genuinely interested people will once again have their moment, getting the resources and clearance to design and (re)build a tech ecosystem that is actually innovative and built for benefit, not just profit and control. MAY IT BE EVER THUS!

72 notes

Text

I think this part is truly the most damning:

If it's all pre-rendered mush and it's "too expensive to fully experiment or explore" then such AI is not a valid artistic medium. It's entirely deterministic, like a pseudorandom number generator. The goal here is optimizing the rapid generation of an enormous quantity of low-quality images which fulfill the expectations put forth by The Prompt.

It's the modern technological equivalent of a circus automaton "painting" a canvas to be sold in the gift shop.

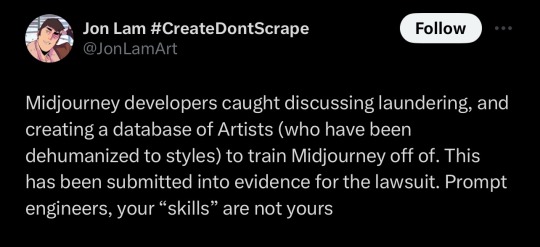

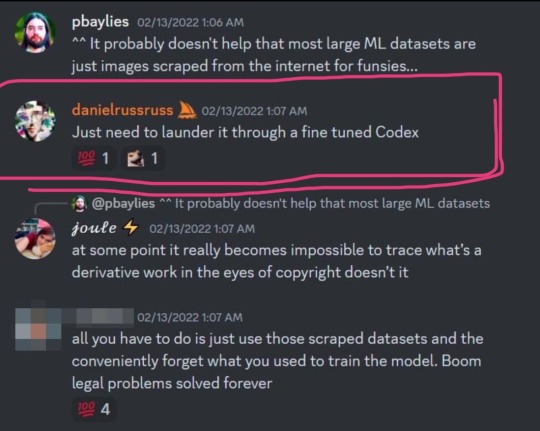

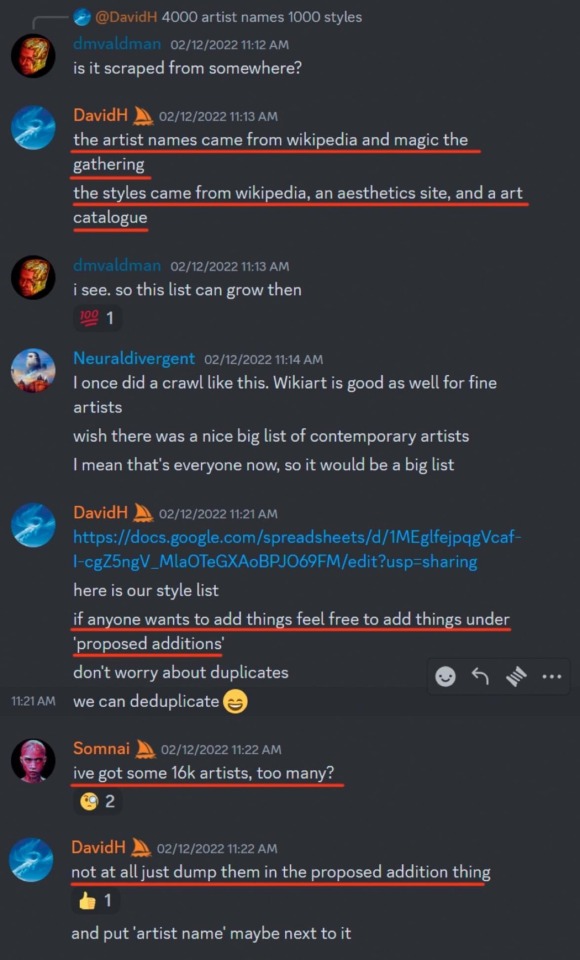

so a huge list of artists that was used to train midjourney’s model got leaked and i’m on it

literally there is no reason to support AI generators, they can’t ethically exist. my art has been used to train every single major one without consent lmfao 🤪

link to the archive

#to be clear AI as a concept has the power to create some truly fantastic images#however when it is subject to the constraints of its purpose as a machine#it is only capable of performing as its puppeteer wills it#and these puppeteers have the intention of stealing#tech#technology#tech regulation#big tech#data harvesting#data#technological developments#artificial intelligence#ai#machine generated content#machine learning#intellectual property#copyright

37K notes

Text

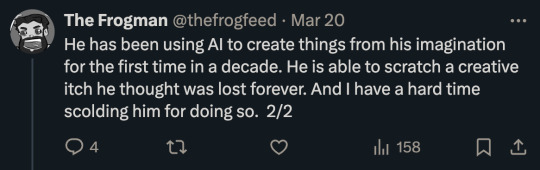

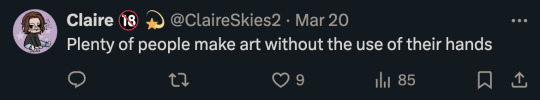

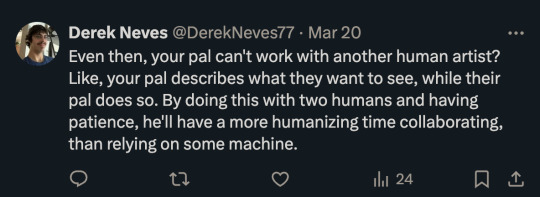

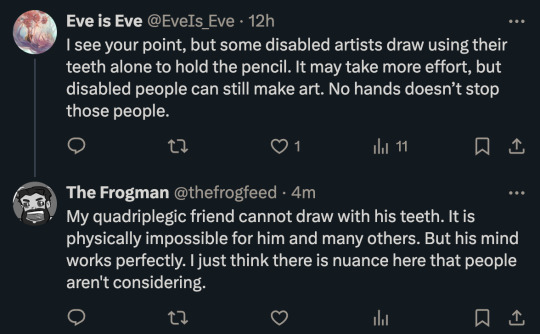

I found these replies very frustrating and fairly ableist. Do people not understand that disabilities and functionality vary wildly from person to person? Just because one person can draw with their teeth or feet doesn't mean others can.

And where is my friend supposed to get this magic eye movement drawing tech from? How is he supposed to afford it? And does the art created from it look like anything? Is it limited to abstraction? What if that isn't the art he wants to make?

Also, asking another artist to draw something for you is called a commission. And it usually costs money.

I have been using the generative AI in Photoshop for a few months now. It is trained on images Adobe owns, so I feel like it is in an ethical gray area. I mostly use it to repair damaged photos, remove objects, or extend boundaries. The images I create are still very much mine. But it has been an incredible accessibility tool for me. I was able to finish work that would have required much more energy than I had.

My friend uses AI like a sketchpad. He can quickly generate ideas and then he develops those into stories and videos and even music. He is doing all kinds of creative tasks that he was previously incapable of. It is just not feasible for him to have an artist on call to sketch every idea that pops into his brain—even if they donated labor to him.

I just think seeing these tools as pure evil is not the best take on all of this. We need them to be ethically trained. We need regulations to make sure they don't destroy creative jobs. But they do have utility and they can be powerful tools for accessibility as well.

These are complicated conversations. I'm not claiming to have all of the answers or know the most moral path we should steer this A.I behemoth towards. But seeing my friend excited about being creative after all of these years really affected me. It confused my feelings about generative A.I. Then I started using similar tools and it just made it so much easier to work on my photography. And that confused my feelings even more.

So...I am confused.

And unsure of how to proceed.

But I do hope people will be willing to at least consider this aspect and have these conversations.

147 notes

Note

now I do agree with a lot of what you're saying. AI is a tool that can be used (even by traditional artists, the same way many traditional artists use 3D tools to create backgrounds in perspective instead of drawing them on their own)

But what really bothers me is the environmental impact. We know everything we do online has impact on the environment, but AI elevates this to the next level. I'm not even talking about training and maintaining GenAI, which is already pretty devastating but not unlike other technologies we have, but about the end user being able to cause a pretty big negative impact.

There is also a huge inequality of distribution of that impact. Companies that might be interested in keeping their energy usage carbon free or sustainable in, say, parts of europe, will do no such thing in asia and south america. We end up with data centers that will strain freshwater resources in the global south so that AI artists can make art?

I understand your points but I still think that, all things considered, we shouldn't be focused on whether AI art is art or not but rather on how it should be regulated and its environmental risks addressed.

Actually, AI barely budges the needle on environmental waste. The vast majority of industrial water use is for farming and agriculture, followed by apparel, beverage, and automotive manufacturing. You would do far, far more good for the environment by going vegetarian than by giving up AI art, and even within the tech industry, I'm pretty sure things like chip manufacturing take significantly more water and energy than data centers do.

End users barely make an impact at all - a chatGPT prompt or an image generation uses significantly less energy than, say, an hour of playing a video game or watching Youtube.

You are correct that offshoring data centers and manufacturing to dodge environmental regulations is a problem! But it's already a huge problem in multiple manufacturing industries. That's not a hypothetical future problem, it's literally happening right now. But the same people up in arms about how bad AI is for the environment are suspiciously quiet when it comes to demanding that individuals stop eating meat, buying new phones or playing video games, even though they are orders of magnitude worse for the environment.

23 notes

Text

So much fucking AI generated content on Pinterest. It’s every single image when you search something up at this point. It’d be a lot less insufferable (albeit, still insufferable) if it were mandatory for people who “create” and post that shit to put a disclaimer/somehow sort it under AI generated so it could be hidden/filtered through by those who have no interest in it.

If AI generated content HAS to be a thing, it should be organized in a separate division from everyone else. They “create” and enjoy their AI shit amongst their own crowd, and we don’t have to see it. We need restrictions and regulations on it ASAP.

It shouldn’t be mainstream and heavily accepted especially in such an early stage where it is ever evolving, and all these apps/websites should stop pandering. Especially on apps/websites like Pinterest where human creativity and authenticity is supposed to thrive. We’re all supposed to connect due to our shared love of everything from recipes, to anime girl fanart, and AI takes that away.

AI should not replace human passion. It should not replace human creativity and human skills. Learning skills is not an inconvenience, and it is ALWAYS rewarding. Learning how to draw and getting to see your practice and hard work come into fruition is rewarding.

Writing stories/fanfiction and finally getting to start off the plot line you were most excited for after finishing writing the plot line you were becoming really bored with, is rewarding. You learn, and you grow from these experiences. Even “boring” work/practice is rewarding whether you realize it at the moment or not.

Lyrics from one of my favorite Björk songs:

“Lust for comfort

suffocates the soul

Relentless restlessness

Liberates me (Sets me free)

I feel at home

Whenever the unknown surrounds me”

You have to do things that are “boring.” You have to do things that are uncomfortable and foreign. That’s how you learn. Not every part of acquiring new skills or learning something new is going to be easy or make sense immediately.

If some experiences were not boring, then the other experiences would not be enjoyable. If you are constantly comfortable, comfortability loses its appeal. We’ve gotten too reliant on comfortability and instant gratification. (Insert Tom Hiddleston talking about delayed gratification on Sesame Street)

What would be the point if there were no challenges? It would all be quite unfulfilling, and you’d stay the same. You wouldn’t learn to look at things differently and challenge yourself.

And I saw someone selling earrings with AI generated images on them without disclosing the fact that the images were made with AI. It’s kind of a scummy thing to do when people are likely buying your shit because they value authenticity and would like to support a likeminded person with creative passions rather than supporting corporations who mass produce shit with no passion except a passion for greed.

How do corporations nowadays have more passion than someone selling something on a site like Etsy where self made items, diy, and creativity are the main focus? Why stoop that low?

Remember, you’re supposed to be the alternative to PURE greed.

Let’s bring back being passionate about creative hobbies and let’s bring back mastering skills out of love for said skill. Out of love for creativity and expressing yourself through what you created. Let’s bring back authenticity and wanting to share your own authenticity with others.

How does this not scare people? That others are no longer passionate about anything? That human beings have become so fucking lazy, that even some of the most fulfilling things you can do in life are too much work?

So lazy, that they’d be more satisfied with typing prompts into a website so a machine can generate literal internet slop made from preexisting art/images on the internet rather than them creating something themselves and getting to make all the creative choices and have every last detail be theirs to decide.

And I didn’t even get into how fucked up it is that AI has little to no regulation/restriction. It’s fucked up that images can be made depicting public figures of any kind. Anything, and anyone. Singers, Actors, Comedians, Politicians, literally everyone.

It’s fucked up that voices can be made to say anything. To sing anything. To declare anything.

But go on, keep feeding the machine because you were too lazy to pick up a fucking pencil to draw one of your OCs. See where your laziness and lack of passion gets us all.

Mind you, people used to be happy to draw their own OCs. Putting them in new outfits and such and maybe even giving them new haircuts. We have lost every plot, because people are too busy acting those plot lines out with AI chat bots instead of with other human beings. They’re too busy feeding prompts to a machine before they could even think for themselves about how they would want the plot to go.

TL;DR: FUCK AI!!

#fuck ai#anti ai#anti ai generated content#anti ai generated images#anti ai generated art#anti artificial intelligence#anti ai images#anti ai fanfiction#anti ai writing#anti character ai#pinterest

28 notes


Text

Sphinxmumps Linkdump

On THURSDAY (June 20) I'm live onstage in LOS ANGELES for a recording of the GO FACT YOURSELF podcast. On FRIDAY (June 21) I'm doing an ONLINE READING for the LOCUS AWARDS at 16hPT. On SATURDAY (June 22) I'll be in OAKLAND, CA for a panel and a keynote at the LOCUS AWARDS.

Welcome to my 20th Linkdump, in which I declare link bankruptcy and discharge my link-debts by telling you about all the open tabs I didn't get a chance to cover in this week's newsletters. Here's the previous 19 installments:

https://pluralistic.net/tag/linkdump/

Starting off this week with a gorgeous book that is also one of my favorite books: Beehive's special slipcased edition of Dante's Inferno, as translated by Henry Wadsworth Longfellow, with new illustrations by UK linocut artist Sophy Hollington:

https://www.kickstarter.com/projects/beehivebooks/the-inferno

I've loved Inferno since middle-school, when I read the John Ciardi translation, principally because I'd just read Niven and Pournelle's weird (and politically odious) (but cracking) sf novel of the same name:

https://en.wikipedia.org/wiki/Inferno_(Niven_and_Pournelle_novel)

But also because Ciardi wrote "About Crows," one of my all-time favorite bits of doggerel, a poem that pierced my soul when I was 12 and continues to do so now that I'm 52, for completely opposite reasons (now there's a poem with staying power!):

https://spirituallythinking.blogspot.com/2011/10/about-crows-by-john-ciardi.html

Beehive has a well-deserved rep for making absolutely beautiful new editions of great public domain books, each with new illustrations and intros, all in matching livery to make a bookshelf look classy af. I have several of them and I've just ordered my copy of Inferno. How could I not? So looking forward to this, along with its intro by Ukrainian poet Ilya Kaminsky and essay by Dante scholar Kristina Olson.

The Beehive editions show us how a rich public domain can be the soil from which new and inspiring creative works sprout. Any honest assessment of a creator's work must include the fact that creativity is a collective act, both inspired by and inspiring to other creators, past, present and future.

One of the distressing aspects of the debate over the exploitative grift of AI is that it's provoked a wave of copyright maximalism among otherwise thoughtful artists, despite the fact that a new copyright that lets you control model training will do nothing to prevent your boss from forcing you to sign over that right in your contracts, training an AI on your work, and then using the model as a pretext to erode your wages or fire your ass:

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

Same goes for some privacy advocates, whose imaginations were cramped by the fact that the only regulation we enforce on the internet is copyright, causing them to forget that privacy rights can exist separate from the nonsensical prospect of "owning" facts about your life:

https://pluralistic.net/2023/10/21/the-internets-original-sin/

We should address AI's labor questions with labor rights, and we should address AI's privacy questions with privacy rights. You can tell that these are the approaches that would actually work for the public because our bosses hate these approaches and instead insist that the answer is just giving us more virtual property that we can sell to them, because they know they'll have a buyer's market that will let them scoop up all these rights at bargain prices and use the resulting hoards to torment, immiserate and pauperize us.

Take Clearview AI, a facial recognition tool created by eugenicists and white nationalists in order to help giant corporations and militarized, unaccountable cops hunt us by our faces:

https://pluralistic.net/2023/09/20/steal-your-face/#hoan-ton-that

Clearview scraped billions of images of our faces and shoveled them into their model. This led to a class action suit in Illinois, which boasts America's best biometric privacy law, under which Clearview owes tens of billions of dollars in statutory damages. Now, Clearview has offered a settlement that illustrates neatly the problem with making privacy into property that you can sell instead of a right that can't be violated: they're going to offer Illinoisans a small share of the company's stock:

https://www.theregister.com/2024/06/14/clearview_ai_reaches_creative_settlement/

To call this perverse is to do a grave injustice to good, hardworking perverts. The sums involved will be infinitesimal, and the only way to make those sums really count is for everyone in Illinois to root for Clearview to commit more grotesque privacy invasions of the rest of us to make its creepy, terrible product more valuable.

Worse still: by crafting a bespoke, one-off, forgiveness-oriented regulation specifically for Clearview, we ensure that it will continue, but that it will also never be disciplined by competitors. That is, rather than banning this kind of facial recognition tech, we grant them a monopoly over it, allowing them to charge all the traffic will bear.

We're in an extraordinary moment for both labor and privacy rights. Two of Biden's most powerful agency heads, Lina Khan and Rohit Chopra, have made unprecedented use of their powers to create new national privacy regulations:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

In so doing, they're bypassing Congressional deadlock. Congress has not passed a new consumer privacy law since 1988, when they banned video-store clerks from leaking your VHS rental history to newspaper reporters:

https://en.wikipedia.org/wiki/Video_Privacy_Protection_Act

Congress hasn't given us a single law protecting American consumers from the digital era's all-out assault on our privacy. But between the agencies, state legislatures, and a growing coalition of groups demanding action on privacy, a new federal privacy law seems all but assured:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

When that happens, we're going to have to decide what to do about products created through mass-scale privacy violations, like Clearview AI – but also all of OpenAI's products, Google's AI, Facebook's AI, Microsoft's AI, and so on. Do we offer them a deal like the one Clearview's angling for in Illinois, fining them an affordable sum and grandfathering in the products they built by violating our rights?

Doing so would give these companies a permanent advantage, and the ongoing use of their products would continue to violate billions of people's privacy, billions of times per day. It would ensure that there was no market for privacy-preserving competitors, thus enshrining privacy invasion as a permanent aspect of our technology and lives.

There's an alternative: "model disgorgement." "Disgorgement" is the legal term for forcing someone to cough up something they've stolen (for example, forcing an embezzler to give back the money). "Model disgorgement" can be a legal requirement to destroy models created illegally:

https://iapp.org/news/a/explaining-model-disgorgement

It's grounded in the idea that there's no known way to unscramble the AI eggs: once you train a model on data that shouldn't be in it, you can't untrain the model to get the private data out of it again. Model disgorgement doesn't insist that offending models be destroyed, but it shifts the burden of figuring out how to unscramble the AI omelet to the AI companies. If they can't figure out how to get the ill-gotten data out of the model, then they have to start over.

This framework aligns everyone's incentives. Unlike the Clearview approach – move fast, break things, attain an unassailable, permanent monopoly thanks to a grandfather exception – model disgorgement makes AI companies act with extreme care, because getting it wrong means going back to square one.

This is the kind of hard-nosed, public-interest-oriented rulemaking we're seeing from Biden's best anti-corporate enforcers. After decades of kid-glove treatment that allowed companies like Microsoft, Equifax, Wells Fargo and Exxon to commit ghastly crimes and then crime again another day, Biden's corporate cops are no longer treating the survival of massive, structurally important corporate criminals as a necessity.

It's been so long since anyone in the US government treated the corporate death penalty as a serious proposition that it can be hard to believe it's even happening, but boy is it happening. The DOJ Antitrust Division is seeking to break up Google, the largest tech company in the history of the world, and they are tipped to win:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

And that's one of the major suits against Google that Big G is losing. Another suit, jointly brought by the feds and dozens of state AGs, is just about to start, despite Google's failed attempt to get the suit dismissed:

https://www.reuters.com/technology/google-loses-bid-end-us-antitrust-case-over-digital-advertising-2024-06-14/

I'm a huge fan of the Biden antitrust enforcers, but that doesn't make me a huge fan of Biden. Even before Biden's disgraceful collaboration in genocide, I had plenty of reasons – old and new – to distrust him and deplore his politics. I'm not the only leftist who's struggling with the dilemma posed by the worst part of Biden's record in light of the coming election.

You've doubtless read the arguments (or rather, "arguments," since they all generate a lot more heat than light and I doubt whether any of them will convince anyone). But this week, Anand Giridharadas republished his 2020 interview with Noam Chomsky about Biden and electoral politics, and I haven't been able to get it out of my mind:

https://the.ink/p/free-noam-chomsky-life-voting-biden-the-left

Chomsky contrasts the left position on politics with the liberal position. For leftists, Chomsky says, "real politics" are a matter of "constant activism." It's not a "laser-like focus on the quadrennial extravaganza" of national elections, after which you "go home and let your superiors take over."

For leftists, politics means working all the time, "and every once in a while there's an event called an election." This should command "10 or 15 minutes" of your attention before you get back to the real work.

This makes the voting decision more obvious and less fraught for Chomsky. There's "never been a greater difference" between the candidates, so leftists should go take 15 minutes, "push the lever, and go back to work."

Chomsky attributed the good parts of Biden's 2020 platform to being "hammered on by activists coming out of the Sanders movement and other." That's the real work, that hammering. That's "real politics."

For Chomsky, voting for Biden isn't support for Biden. It's "support for the activists who have been at work constantly, creating the background within the party in which the shifts took place, and who have followed Sanders in actually entering the campaign and influencing it. Support for them. Support for real politics."

Chomsky tells us that the self-described "masters of the universe" understand that something has changed: "the peasants are coming with their pitchforks." They have all kinds of euphemisms for this ("reputational risks") but the core here is a winner-take-all battle for the future of the planet and the species. That's why even the "sensible" ultra-rich threw in for Trump in 2016 and 2020, and why they're backing him even harder in 2024:

https://www.bbc.com/news/articles/ckvvlv3lewxo

Chomsky tells us not to bother trying to figure out Biden's personality. Instead, we should focus on "how things get done." Biden won't do what's necessary to end genocide and preserve our habitable planet out of conviction, but he may do so out of necessity. Indeed, it doesn't matter how he feels about anything – what matters is what we can make him do.

Chomsky himself is in his 90s and his health is reportedly in terminal decline, so this is probably the only word we'll get from him on this issue:

https://www.reddit.com/r/chomsky/comments/1aj56hj/updates_on_noams_health_from_his_longtime_mit/

The link between concentrated wealth, concentrated power, and the existential risks to our species and civilization is obvious – to me, at least. Any time a tiny minority holds unaccountable power, they will end up using it to harm everyone except themselves. I'm not the first one to take note of this – it used to be a commonplace in American politics.

Back in 1936, FDR gave a speech at the DNC, accepting their nomination for president. Unlike FDR's election-eve speech ("I welcome their hatred"), this speech has been largely forgotten, but it's a banger:

https://teachingamericanhistory.org/document/acceptance-speech-at-the-democratic-national-convention-1936/

In that speech, Roosevelt brought a new term into our political parlance: "economic royalists." He described the American plutocracy as the spiritual descendants of the hereditary nobility that Americans had overthrown in 1776. The English aristocracy "governed without the consent of the governed" and "put the average man's property and the average man's life in pawn to the mercenaries of dynastic power."

Roosevelt said that these new royalists conquered the nation's economy and then set out to seize its politics, backing candidates that would create "a new despotism wrapped in the robes of legal sanction…an industrial dictatorship."

As David Dayen writes in The American Prospect, this has strong parallels to today's world, where "Silicon Valley, Big Oil, and Wall Street come together to back a transactional presidential candidate who promises them specific favors, after reducing their corporate taxes by 40 percent the last time he was president":

https://prospect.org/politics/2024-06-14-speech-fdr-would-give/

Roosevelt, of course, went on to win by a landslide, wiping out the Republicans despite the endless financial support of the ruling class.

The thing is, FDR's policies didn't originate with him. He came from the uppermost of the American upper crust, after all, and famously refused to define the "New Deal" even as he campaigned on it. The "New Deal" became whatever activists in the Democratic Party's left could force him to do, and while it was bold and transformative, it wasn't nearly enough.

The compromise FDR brokered within the Democratic Party froze out Black Americans to a terrible degree. Writing for the Institute for Local Self Reliance, Ron Knox and Susan Holmberg reveal the long shadow cast by that unforgivable compromise:

https://storymaps.arcgis.com/stories/045dcde7333243df9b7f4ed8147979cd

They describe how redlining – the formalization of anti-Black racism in New Deal housing policy – led to the ruin of Toledo's once-thriving Dorr Street neighborhood, a "Black Wall Street" where a Black middle class lived and thrived. New Deal policies starved the neighborhood of funds, then ripped it in two with a freeway, sacrificing it and the people who lived in it.

But the story of Dorr Street isn't over. As Knox and Holmberg write, the people of Dorr Street never gave up on their community, and today, there's an awful lot of Chomsky's "constant activism" that is painstakingly bringing the community back, inch by aching inch. The community is locked in a guerrilla war against the same forces that the Biden antitrust enforcers are fighting on the open field of battle. The work that activists do to drag Democratic Party policies to the left is critical to making reparations for the sins of the New Deal – and for realizing its promise for everybody.

In my lifetime, there's never been a Democratic Party that represented my values. The first Democratic President of my life, Carter, kicked off Reaganomics by beginning the dismantling of America's antitrust enforcement, in the mistaken belief that acting like a Republican would get Democrats to vote for him again. He failed and delivered Reagan, whose Reaganomics were the official policy of every Democrat since, from Clinton ("end welfare as we know it") to Obama ("foam the runways for the banks").

In other words, I don't give a damn about Biden, but I am entirely consumed with what we can force his administration to do, and there are lots of areas where I like our chances.

For example: getting Biden's IRS to go after the super-rich, ending the impunity for elite tax evasion that Spencer Woodman pitilessly dissects in this week's superb investigation for the International Consortium of Investigative Journalists:

https://www.icij.org/inside-icij/2024/06/how-the-irs-went-soft-on-billionaires-and-corporate-tax-cheats/

Ending elite tax cheating will make them poorer, and that will make them weaker, because their power comes from money alone (they don't wield power because they want to make us all better off!).

Or getting Biden's enforcers to continue their fight against the monopolists who've spiked the prices of our groceries even as they transformed shopping into a panopticon, so that their business is increasingly about selling our data to other giant corporations, with selling food to us as an afterthought:

https://prospect.org/economy/2024-06-12-war-in-the-aisles/

For forty years, since the Carter administration, we've been told that our only power comes from our role as "consumers." That's a word that always conjures up one of my favorite William Gibson quotes, from 1996's Idoru:

Something the size of a baby hippo, the color of a week-old boiled potato, that lives by itself, in the dark, in a double-wide on the outskirts of Topeka. It's covered with eyes and it sweats constantly. The sweat runs into those eyes and makes them sting. It has no mouth, no genitals, and can only express its mute extremes of murderous rage and infantile desire by changing the channels on a universal remote. Or by voting in presidential elections.

The normie, corporate wing of the Democratic Party sees us that way. They decry any action against concentrated corporate power as "anti-consumer" and insist that using the law to fight against corporate power is a waste of our time:

https://www.thesling.org/sorry-matt-yglesias-hipster-antitrust-does-not-mean-the-abandonment-of-consumers-but-it-does-mean-new-ways-to-protect-workers-2/

But after giving it some careful thought, I'm with Chomsky on this, not Yglesias. The election is something we have to pay some attention to as activists, but only "10 or 15 minutes." Yeah, "push the lever," but then "go back to work." I don't care what Biden wants to do. I care what we can make him do.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/06/15/disarrangement/#credo-in-un-dio-crudel

Image: Jim's Photo World (modified) https://www.flickr.com/photos/jimsphotoworld/5360343644/

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#linkdump#linkdumps#chomsky#voting#elections#uspoli#oligarchy#irs#billionaires#tax cheats#irs files#hipster antitrust#matt ygelsias#dante#gift guide#books#crowdfunding#public domain#model disgorgement#ai#llms#fdr#groceries#ripoffs#toledo#redlining#race

76 notes


Note

I see some of your pro-ai stuff, and I also see that you're very good at explaining things, so I have some concerns about ai that I'd like for you to explain if it's okay.

I'm very worried about the amount of pollution it takes to make an ai generated image, story, video, etc. I'm also very worried about ai imagery being used to spread disinformation.

Correct me if I'm wrong, but you seem to go by the stance that since we can't un-create ai, we should just try our best to manage. How do we manage things like disinformation and massive amounts of pollution? To be fair, I actually don't know the exact amount of pollution ai generated prompts make.

so, first off: the environmental devastation argument is so incorrect, i would honestly consider it intellectually dishonest. here is a good, thorough writeup of the issue.

the tl;dr is that trying to discuss the "environmental cost of AI" as one monolithic thing is incoherent; AI is an umbrella term that refers to a wide breadth of both machine-learning research and, like, random tech that gets swept up in the umbrella as a marketing gimmick. when most people doompost about the environmental cost of AI, they're discussing image generation programs and chat interfaces in particular, and the fact is that running these programs on your computer eats about as much energy as, like, playing an hour of skyrim. bluntly, i consider this argument intellectually dishonest from anyone who does not consider it equally unethical to play skyrim.

the vast majority of the environmental cost of AI such as image generation and chat interfaces comes from implementation by large corporations. this problem isn't tractable by banning the tool; it's a structural problem baked into the existence of massive corporations and the current phase of capitalism we're in. prior to generative AI becoming a worldwide cultural trend, corporations were still responsible for that much environmental devastation, primarily to the end of serving ads--and like. the vast majority of use cases corporations are twisting AI to fit boil down to serving ads. essentially, i think focusing on the tool in this particular case is missing the forest for the trees; as long as you're not addressing the structural incentives for corporations to blindly and mindlessly participate in unsustainable extractivism, they will continue to use any and all tools to participate in such, and i am equally concerned about the energy spent barraging me with literally dozens and dozens of digital animated billboards in a ten-mile radius as i am with the energy spent getting a chatbot to talk up their product to me.

moving on to the disinformation issue: actually, yes, i'm very concerned about that. i don't have any personal opinions on how to manage it, but it's a very strong concern of mine. lowering the skill floor for production of media does, necessarily, mean a lot of bad actors are now capable of producing a much larger glut of malicious content, much faster.

i do think that, historically speaking, similar explosions of disinformation & malicious media haven't been socially managed by banning the tool nor by shaming those who use it for non-malicious purposes--like, when it was adopted for personal use, the internet itself created a sudden huge explosion of spam and disinformation as never before seen in human history, but "get rid of the internet" was never a tractable solution to this, and "shame people you see using the internet" just didn't do anything for the problem.

wish i could be more helpful on solutions for that one--it's just not a field i have any particular knowledge in, but if there's anyone reading who'd like to add on with information about large-scale regulation of the sort of broad field of malicious content i'm discussing, feel free.

28 notes


Note

Hello what do you think of Ai generated artwork and videos?

I have a whole entire blog post I wrote last year btw: The Rise of the Bots; The Ascension of the Human. (Reading it again a year later I am glad I am still validated in my thoughts)

My entire being and output as an artist is rooted in process, thought, craft and connection. I am open about my process and I share/create resources constantly. I have literally experienced the thing people mean when they say 'art transforms you' just by being so close to it every step of its making. All my comics have this centrality of personhood attached to them - if it's not obvious that the artist's hand (me) is in it, there is the characteristic focus on our emotional/cultural/artistic thread across history. Just as NFTs and what they represent were antithetical to how I interact with the world as artist and audience, so is the use of so-called AI art. NFTs and AI Art share a common hype cycle / speculative mania that comes out from an annoying vulture mindset that only knows how to eat itself to fill its belly, so I don't expect it to last too long. However I don't appreciate the damage both things have done to the utility of the internet, the degradation of art as a commercial pathway and the destruction of the image as a historical/educational/legal tool. (Which is why I am becoming more underground and turning towards alternatives like the Web Revival, small presses, curated resources and in-person communities)

The technological concept around LLM (pattern recognition and matching it to a goal), especially for medicine and statistics, is not itself problematic, especially when it follows ethical and data handling regulations that have been defined. However, when people talk generative art, what we are talking about, and fighting against, is the exploitation of resources and labour, and the further disconnection of worker = labour, human = society artificially imposed by the Corporate MBA / techno class in the pursuit of infinite stockmarket growth which then introduces a type of brainrot that can only think of things as producing value in relation to how fast one can seize for themselves Westernised Ideals of Fame and Fortune. Also like, this whole AI thing is part of the degradation of entertainment (the loss of small-to-medium outlets, constant mergers, nobody owning their digital streaming products they bought, the laundering of journalism/curation into press releases), the internet (the algorithmification of everything, constant spam, search engines getting worse, the worsening of socmedia as a tool) and the intellectual rigour of all information.

It's all part of this rot that's spreading outwards.

TL;DR bro I make all my art by hand and I am a nerd about informational integrity

#everytime anons ask a question of this style I am always wondering it's genuine or...#only cos if you know my work and what I am involved in you'd clearly see that I embody my position

136 notes
