Text

You might think that I'm joking when I say that we need cyborg rights to be codified into law, but given the pace of development of medical implants and the rights issues raised by proprietary technologies becoming part of a human body, I honestly think that this is absolutely essential for bodily autonomy, disability rights, and human rights more generally. This has already become an issue, and it will only become a larger issue moving forward.

65K notes

Text

*Every day someone bruisingly encounters the realities of "dead media"

5K notes

Text

yeah but also I've talked to city people who did not realize a "hen" is a chicken. modern humanity is more disconnected from mother earth than you'd expect

67K notes

Text

In the UK, you have the Cass report robbing young adults of their autonomy and right to health care under the guise of "protecting the children."

In the US, we have the KOSA (Kids Online Safety Act) bill stripping internet users of privacy and their right to free speech under the guise of "protecting the children."

However, the US currently doesn't recognize minors as anything more than the property of their parents. The United States remains the only country in the world that has failed to ratify the United Nations Convention on the Rights of the Child (CRC).

I think @draculasstrawhat makes some excellent points. We're dealing with the consequences of the upper echelons of our society dominated by an aging, paranoid caste of career politicians and corporate despots. We're in for a rough ride, folks.

I wish age gap discourse hadn't spiraled the way it has because I want there to be a safe space to say "Men in their 40s who date 25 year olds aren't predators, they're just fucking losers"

#kosa bill#stop kosa#anti kosa#fuck kosa#Kosa#kids online safety bill#censorship#tech regulation#big tech#data harvesting#privacy#data#infantilization#adulthood#Adulting#adult human female#the internet is the information superhighway. he who controls the information controls the narrative.#information#internet law#internet

143K notes

Text

Wow... people having to identify themselves to a government because they are part of a group that is seen as "other" or because they want to learn about a certain topic... where have i seen this before...

I don't know... maybe in what is considered one of the biggest red flags in the steps of dehumanization of groups, mainly minorities?

Btw, this is what the people behind KOSA are trying to impose in all the United States of America.

[Image ID: News article about USA politics that says "Kansas governor passes law requiring ID to view acts of 'homosexuality' online, vetoes anti-LGBTQ+ bill" /End ID]

Link to the article: https://www.advocate.com/politics/kansas-veto-age-verification-gender-affirming-care-abortion

Edit: Since this gained more notes, for those who don't know what KOSA is: it is a US bill that was reintroduced in May 2023 (last year). It is called the "Kids Online Safety Act" (KOSA for short). It has been introduced and reintroduced several times since 2022. It is intended to "protect kids" by restricting their use of the internet, pushing age restrictions and requiring people to present their ID to use the internet or access certain websites, quite similar to the Kansas state bill that got passed. Many groups and people have criticized this bill for the censorship it could bring, and say it could do more harm to kids than good. Content that critics have suggested could be censored under this bill includes LGBT+ content, politics and news, mental health searches, and political and social opinions in general (adults included). What is more, critics have raised the possible invasion of privacy for both minors and adults who would have to share identification to use certain websites, and the risk that people could get censored or doxxed by doing this.

As for the bill itself, there was a hearing earlier today in the Senate (Wednesday, April 17th). It could take a while before it gets voted on, and it has to pass through different stages. Then it would take months (18 months) to be implemented if it passes.

I'm not American myself, so i'm not sure how much i can do about this. What i do recommend is calling senators and the people involved in pushing this bill to make clear your disapproval of it. Try signing petitions or just telling others about it.

Some sources: https://en.wikipedia.org/wiki/Kids_Online_Safety_Act https://www.stopkosa.com/ https://www.badinternetbills.com/ https://www.eff.org/deeplinks/2024/02/dont-fall-latest-changes-dangerous-kids-online-safety-act

Website to keep track of the KOSA bill movements and cosponsors of the bill:

4K notes

Text

more good news from tiktok: they’ve started blocking celebrities.

they’re calling it block party 2024. just blocking and ignoring countless celebrities who havent said shit about palestine. influencers, actors, anyone who went to the met gala, whatever, they’re getting blocked. and people keep talking about how cathartic it is, how good it feels, how they never realized they could DO that. there was some kind of subconscious law against blocking famous people, but it’s broken, and people are LOVING it. and it’s WORKING. a social media/digital advertising coordinator was talking about how ad companies are PANICKING, because they can’t accurately target anymore. so many big influencers, including fucking LIZZO started talking about palestine the MOMENT their follower counts started going down. and the best part? no one is forgiving them. lizzo posted a tiktok asking people to donate to palestinian families, and all the comments just said you’re a multimillionaire. put your money where your mouth is. blocked.

i feel like i’m witnessing the downfall of celebrity culture, right here right now. people are waking up.

54K notes

Text

#machine generated content#copyright#intellectual property#artificial intelligence#algorithmically generated content#Ownership#data privacy#privacy#technology#tech#big tech#tech regulation#technological developments#data harvesting#data

26K notes

Text

Algorithmic feeds are a twiddler’s playground

Next TUESDAY (May 14), I'm on a livecast about AI AND ENSHITTIFICATION with TIM O'REILLY; on WEDNESDAY (May 15), I'm in NORTH HOLLYWOOD with HARRY SHEARER for a screening of STEPHANIE KELTON'S FINDING THE MONEY; FRIDAY (May 17), I'm at the INTERNET ARCHIVE in SAN FRANCISCO to keynote the 10th anniversary of the AUTHORS ALLIANCE.

Like Oscar Wilde, "I can resist anything except temptation," and my slow and halting journey to adulthood is really just me grappling with this fact, getting temptation out of my way before I can yield to it.

Behavioral economists have a name for the steps we take to guard against temptation: a "Ulysses pact." That's when you take some possibility off the table during a moment of strength in recognition of some coming moment of weakness:

https://archive.org/details/decentralizedwebsummit2016-corydoctorow

Famously, Ulysses did this before he sailed into the Sea of Sirens. Rather than stopping his ears with wax to prevent his hearing the sirens' song, which would lure him to his drowning, Ulysses has his sailors tie him to the mast, leaving his ears unplugged. Ulysses became the first person to hear the sirens' song and live to tell the tale.

Ulysses was strong enough to know that he would someday be weak. He expressed his strength by guarding against his weakness. Our modern lives are filled with less epic versions of the Ulysses pact: the day you go on a diet, it's a good idea to throw away all your Oreos. That way, when your blood sugar sings its siren song at 2AM, it will be drowned out by the rest of your body's unwillingness to get dressed, find your keys and drive half an hour to the all-night grocery store.

Note that this Ulysses pact isn't perfect. You might drive to the grocery store. It's rare that a Ulysses pact is unbreakable – we bind ourselves to the mast, but we don't chain ourselves to it and slap on a pair of handcuffs for good measure.

People who run institutions can – and should – create Ulysses pacts, too. A company that holds the kind of sensitive data that might be subjected to "sneak-and-peek" warrants by cops or spies can set up a "warrant canary":

https://en.wikipedia.org/wiki/Warrant_canary

This isn't perfect. A company that stops publishing regular transparency reports might have been compromised by the NSA, but it's also possible that they've had a change in management and the new boss just doesn't give a shit about his users' privacy:

https://www.fastcompany.com/90853794/twitters-transparency-reporting-has-tanked-under-elon-musk
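To make the mechanism concrete, here is a minimal sketch of how a reader might check whether a canary is still being published on schedule. The 90-day interval, the function name and the example dates are all assumptions for illustration, not any real company's policy. As with the transparency reports, a stale canary only tells you that something changed, not what changed.

```python
from datetime import date, timedelta

# Hypothetical publishing interval: the company promises a fresh statement
# at least this often. Real canaries pick their own schedule.
MAX_AGE = timedelta(days=90)


def canary_is_fresh(last_published: date, today: date,
                    max_age: timedelta = MAX_AGE) -> bool:
    """Return True if the most recent canary statement is recent enough."""
    return today - last_published <= max_age


# A statement published about ten weeks ago is still inside the window.
print(canary_is_fresh(date(2024, 3, 1), date(2024, 5, 11)))
# A statement from more than six months ago is overdue.
print(canary_is_fresh(date(2023, 11, 1), date(2024, 5, 11)))
```

The check is deliberately dumb: it has no way to tell a gag order from a forgetful new boss, which is exactly the limitation described above.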

Likewise, a company making software it wants users to trust can release that code under an irrevocable free/open software license, thus guaranteeing that each release under that license will be free and open forever. This is good, but not perfect: the new boss can take that free/open code down a proprietary fork and try to orphan the free version:

https://news.ycombinator.com/item?id=39772562

A company can structure itself as a public benefit corporation and make a binding promise to elevate its stakeholders' interests over its shareholders' – but the CEO can still take a secret $100m bribe from cryptocurrency creeps and try to lure those stakeholders into a shitcoin Ponzi scheme:

https://fortune.com/crypto/2024/03/11/kickstarter-blockchain-a16z-crypto-secret-investment-chris-dixon/

A key resource can be entrusted to a nonprofit with a board of directors who are charged with stewarding it for the benefit of a broad community, but when a private equity fund dangles billions before that board, they can talk themselves into a belief that selling out is the right thing to do:

https://www.eff.org/deeplinks/2020/12/how-we-saved-org-2020-review

Ulysses pacts aren't perfect, but they are very important. At the very least, creating a Ulysses pact starts with acknowledging that you are fallible. That you can be tempted, and rationalize your way into taking bad action, even when you know better. Becoming an adult is a process of learning that your strength comes from seeing your weaknesses and protecting yourself and the people who trust you from them.

Which brings me to enshittification. Enshittification is the process by which platforms betray their users and their customers by siphoning value away from each until the platform is a pile of shit:

https://en.wikipedia.org/wiki/Enshittification

Enshittification is a spectrum that can be applied to many companies' decay, but in its purest form, enshittification requires:

a) A platform: a two-sided market with business customers and end users who can be played off against each other;

b) A digital back-end: a market that can be easily, rapidly and undetectably manipulated by its owners, who can alter search-rankings, prices and costs on a per-user, per-query basis; and

c) A lack of constraint: the platform's owners must not fear a consequence for this cheating, be it from competitors, regulators, workforce resignations or rival technologists who use mods, alternative clients, blockers or other "adversarial interoperability" tools to disenshittify your product and sever your relationship with your users.

The founders of tech platforms don't generally set out to enshittify them. Rather, they are constantly seeking some equilibrium between delivering value to their shareholders and turning value over to end users, business customers, and their own workers. Founders are consummate rationalizers; like parenting, founding a company requires continuous, low-grade self-deception about the amount of work involved and the chances of success. A founder, confronted with the likelihood of failure, is absolutely capable of talking themselves into believing that nearly any compromise is superior to shuttering the business: "I'm one of the good guys, so the most important thing is for me to live to fight another day. Thus I can do any number of immoral things to my users, business customers or workers, because I can make it up to them when we survive this crisis. It's for their own good, even if they don't know it. Indeed, I'm doubly moral here, because I'm volunteering to look like the bad guy, just so I can save this business, which will make the world over for the better":

https://locusmag.com/2024/05/cory-doctorow-no-one-is-the-enshittifier-of-their-own-story/

(En)shit(tification) flows downhill, so tech workers grapple with their own version of this dilemma. Faced with constant pressure to increase the value flowing from their division to the company, they have to balance different, conflicting tactics, like "increasing the number of users or business customers, possibly by shifting value from the company to these stakeholders in the hopes of making it up in volume"; or "locking in my existing stakeholders and squeezing them harder, safe in the knowledge that they can't easily leave the service provided the abuse is subtle enough." The bigger a company gets, the harder it is for it to grow, so the biggest companies realize their gains by locking in and squeezing their users, not by improving their service:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

That's where "twiddling" comes in. Digital platforms are extremely flexible, which comes with the territory: computers are the most flexible tools we have. This means that companies can automate high-speed, deceptive changes to the "business logic" of their platforms – what end users pay, how much of that goes to business customers, and how offers are presented to both:

https://pluralistic.net/2023/02/19/twiddler/

This kind of fraud isn't particularly sophisticated, but it doesn't have to be – it just has to be fast. In any shell-game, the quickness of the hand deceives the eye:

https://pluralistic.net/2024/03/26/glitchbread/#electronic-shelf-tags

Under normal circumstances, this twiddling would be constrained by counterforces in society. Changing the business rules like this is fraud, so you'd hope that a regulator would step in and extinguish the conduct, fining the company that engaged in it so hard that they saw a net loss from the conduct. But when a sector gets very concentrated, its mega-firms capture their regulators, becoming "too big to jail":

https://pluralistic.net/2022/06/05/regulatory-capture/

Thus the tendency among the giant tech companies to practice the one lesson of the Darth Vader MBA: dismissing your stakeholders' outrage by saying, "I am altering the deal. Pray I don't alter it any further":

https://pluralistic.net/2023/10/26/hit-with-a-brick/#graceful-failure

Where regulators fail, technology can step in. The flexibility of digital platforms cuts both ways: when the company enshittifies its products, you can disenshittify it with your own countertwiddling: third-party ink-cartridges, alternative app stores and clients, scrapers, browser automation and other forms of high-tech guerrilla warfare:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

But tech giants' regulatory capture has allowed them to expand "IP rights" to prevent this self-help. By carefully layering overlapping IP rights around their products, they can criminalize the technology that lets you wrestle back the value they've claimed for themselves, creating a new offense of "felony contempt of business model":

https://locusmag.com/2020/09/cory-doctorow-ip/

A world where users must defer to platforms' moment-to-moment decisions about how the service operates, without the protection of rival technology or regulatory oversight, is a world where companies face a powerful temptation to enshittify.

That's why we've seen so much enshittification in platforms that algorithmically rank their feeds, from Google and Amazon search to Facebook and Twitter feeds. A search engine is always going to be making a judgment call about what the best result for your search should be. If a search engine is generally good at predicting which results will please you best, you'll return to it, automatically clicking the first result ("I'm feeling lucky").

This means that if a search engine slips in the odd paid result at the top of the results, they can exploit your trusting habits to shift value from you to their investors. The configurability of a digital service means that they can sprinkle these frauds into their services on a random schedule, making them hard to detect and easy to dismiss as lapses. Gradually, this acquires its own momentum, and the platform becomes addicted to lowering its own quality to raise its profits, and you get modern Google, which cynically lowered search quality to increase search volume:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

And you get Amazon, which makes $38 billion every year, accepting bribes to replace its best search results with paid results for products that cost more and are of lower quality:

https://pluralistic.net/2023/11/06/attention-rents/#consumer-welfare-queens

Social media's enshittification followed a different path. In the beginning, social media presented a deterministic feed: after you told the platform who you wanted to follow, the platform simply gathered up the posts those users made and presented them to you, in reverse-chronological order.
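A deterministic feed of this kind is simple enough to sketch in a few lines. The `Post` type, the account names and the timestamps below are invented for illustration and do not describe any real platform's code. The point is that there is no ranking knob to twiddle: the only inputs are who you follow and when they posted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    timestamp: int  # seconds since some epoch; larger means newer
    text: str


def reverse_chrono_feed(posts, following):
    """Keep only posts by followed accounts and show the newest first."""
    mine = [p for p in posts if p.author in following]
    return sorted(mine, key=lambda p: p.timestamp, reverse=True)


posts = [
    Post("alice", 100, "first"),
    Post("mallory", 150, "boosted ad"),  # not followed, so never shown
    Post("bob", 200, "second"),
    Post("alice", 300, "third"),
]
feed = reverse_chrono_feed(posts, following={"alice", "bob"})
print([p.text for p in feed])
```

Anything a platform adds beyond the filter and the sort is a place where someone else's priorities can be slipped in.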

This presented few opportunities for enshittification, but it wasn't perfect. For users who were well-established on a platform, a reverse-chrono feed was an ungovernable torrent, where high-frequency trivialities drowned out the important posts from people whose missives were buried ten screens down in the updates since your last login.

For new users who didn't yet follow many people, this presented the opposite problem: an empty feed, and the sense that you were all alone while everyone else was having a rollicking conversation down the hall, in a room you could never find.

The answer was the algorithmic feed: a feed of recommendations drawn from both the accounts you followed and strangers alike. Theoretically, this could solve both problems, by surfacing the most important materials from your friends while keeping you abreast of the most important and interesting activity beyond your filter bubble. For many of us, this promise was realized, and algorithmic feeds became a source of novelty and relevance.

But these feeds are a profoundly tempting enshittification target. The critique of these algorithms has largely focused on "addictiveness" and the idea that platforms would twiddle the knobs to increase the relevance of material in your feed to "hack your engagement":

https://www.theguardian.com/technology/2018/mar/04/has-dopamine-got-us-hooked-on-tech-facebook-apps-addiction

Less noticed – and more important – was how platforms did the opposite: twiddling the knobs to remove things from your feed that you'd asked to see or that the algorithm predicted you'd enjoy, to make room for "boosted" content and advertisements:

https://www.reddit.com/r/Instagram/comments/z9j7uy/what_happened_to_instagram_only_ads_and_accounts/

Users were helpless before this kind of twiddling. On the one hand, they were locked into the platform – not because their dopamine had been hacked by evil tech-bro wizards – but because they loved the friends they had there more than they hated the way the service was run:

https://locusmag.com/2023/01/commentary-cory-doctorow-social-quitting/

On the other hand, the platforms had such an iron grip on their technology, and had deployed IP so cleverly, that any countertwiddling technology was instantaneously incinerated by legal death-rays:

https://techcrunch.com/2022/10/10/google-removes-the-og-app-from-the-play-store-as-founders-think-about-next-steps/

Newer social media platforms, notably Tiktok, dispensed entirely with deterministic feeds, defaulting every user into a feed that consisted entirely of algorithmic picks; the people you follow on these platforms are treated as mere suggestions by their algorithms. This is a perfect breeding-ground for enshittification: different parts of the business can twiddle the knobs to override the algorithm for their own parochial purposes, shifting the quality:shit ratio by unnoticeable increments, temporarily toggling the quality knob when your engagement drops off:

https://www.forbes.com/sites/emilybaker-white/2023/01/20/tiktoks-secret-heating-button-can-make-anyone-go-viral/

All social platforms want to be Tiktok: nominally, that's because Tiktok's algorithmic feed is so good at hooking new users and keeping established users hooked. But tech bosses also understand that a purely algorithmic feed is the kind of black box that can be plausibly and subtly enshittified without sparking user revolts:

https://pluralistic.net/2023/01/21/potemkin-ai/#hey-guys

Back in 2004, when Mark Zuckerberg was coming to grips with Facebook's success, he boasted to a friend that he was sitting on a trove of emails, pictures and Social Security numbers for his fellow Harvard students, offering this up for his friend's idle snooping. The friend, surprised, asked "What? How'd you manage that one?"

Infamously, Zuck replied, "People just submitted it. I don't know why. They 'trust me.' Dumb fucks."

https://www.esquire.com/uk/latest-news/a19490586/mark-zuckerberg-called-people-who-handed-over-their-data-dumb-f/

This was a remarkable (and uncharacteristic) self-aware moment from the then-nineteen-year-old Zuck. Of course Zuck couldn't be trusted with that data. Whatever Jiminy Cricket voice told him to safeguard that trust was drowned out by his need to boast to pals, or participate in the creepy nonconsensual rating of the fuckability of their female classmates. Over and over again, Zuckerberg would promise to use his power wisely, then break that promise as soon as he could do so without consequence:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3247362

Zuckerberg is a cautionary tale. Aware from the earliest moments that he was amassing power that he couldn't be trusted with, he nevertheless operated with only the weakest of Ulysses pacts, like a nonbinding promise never to spy on his users:

https://web.archive.org/web/20050107221705/http://www.thefacebook.com/policy.php

But the platforms have learned the wrong lesson from Zuckerberg. Rather than treating Facebook's enshittification as a cautionary tale, they've turned it into a roadmap. The Darth Vader MBA rules high-tech boardrooms.

Algorithmic feeds and other forms of "paternalistic" content presentation are necessary and even desirable in an information-rich environment. In many instances, decisions about what you see must be largely controlled by a third party whom you trust. The audience in a comedy club doesn't get to insist on knowing the punchline before the joke is told, just as RPG players don't get to order the Dungeon Master to present their preferred challenges during a campaign.

But this power is balanced against the ease of the players replacing the Dungeon Master or the audience walking out on the comic. When you've got more than a hundred dollars sunk into a video game and an online-only friend-group you raid with, the games company can do a lot of enshittification without losing your business, and they know it:

https://www.theverge.com/2024/5/10/24153809/ea-in-game-ads-redux

Even if they sometimes overreach and have to retreat:

https://www.eurogamer.net/sony-overturns-helldivers-2-psn-requirement-following-backlash

A tech company that seeks your trust for an algorithmic feed needs Ulysses pacts, or it will inevitably yield to the temptation to enshittify. From strongest to weakest, these are:

Not showing you an algorithmic feed at all;

https://joinmastodon.org/

"Composable moderation" that lets multiple parties provide feeds:

https://bsky.social/about/blog/4-13-2023-moderation

Offering an algorithmic "For You" feed alongside of a reverse-chrono "Friends" feed, defaulting to friends;

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

As above, but defaulting to "For You"

Maturity lies in being strong enough to know your weaknesses. Never trust someone who tells you that they will never yield to temptation! Instead, seek out people – and service providers – with the maturity and honesty to know how tempting temptation is, and who act before temptation strikes to make it easier to resist.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/11/for-you/#the-algorithm-tm

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

djhughman https://commons.wikimedia.org/wiki/File:Modular_synthesizer_-_%22Control_Voltage%22_electronic_music_shop_in_Portland_OR_-_School_Photos_PCC_%282015-05-23_12.43.01_by_djhughman%29.jpg

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/deed.en

113 notes

Text

It should 100% be illegal for companies to make you give them your payment information when you sign up for a free trial version of their product. It is not necessary and there is no good fucking reason for them to do it. It’s blatantly just so they can steal forgetful customers’ money.

179K notes

Text

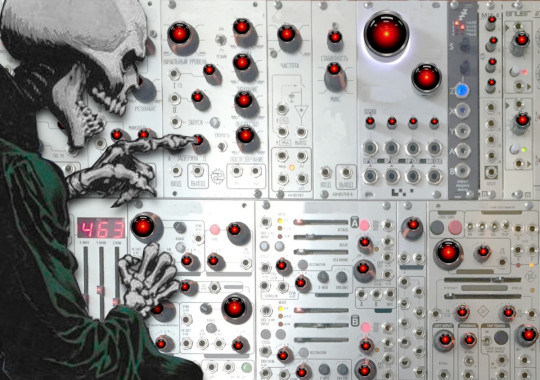

The disenshittified internet starts with loyal "user agents"

I'm in TARTU, ESTONIA! Overcoming the Enshittocene (TOMORROW, May 8, 6PM, Prima Vista Literary Festival keynote, University of Tartu Library, Struwe 1). AI, copyright and creative workers' labor rights (May 10, 8AM: Science Fiction Research Association talk, Institute of Foreign Languages and Cultures building, Lossi 3, lobby). A talk for hackers on seizing the means of computation (May 10, 3PM, University of Tartu Delta Centre, Narva 18, room 1037).

There's one overwhelmingly common mistake that people make about enshittification: assuming that the contagion is the result of the Great Forces of History, or that it is the inevitable end-point of any kind of for-profit online world.

In other words, they class enshittification as an ideological phenomenon, rather than as a material phenomenon. Corporate leaders have always felt the impulse to enshittify their offerings, shifting value from end users, business customers and their own workers to their shareholders. The decades of largely enshittification-free online services were not the product of corporate leaders with better ideas or purer hearts. Those years were the result of constraints on the mediocre sociopaths who would trade our wellbeing and happiness for their own, constraints that forced them to act better than they do today, even if they were not any better:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

Corporate leaders' moments of good leadership didn't come from morals, they came from fear. Fear that a competitor would take away a disgruntled customer or worker. Fear that a regulator would punish the company so severely that all gains from cheating would be wiped out. Fear that a rival technology – alternative clients, tracker blockers, third-party mods and plugins – would emerge that permanently severed the company's relationship with their customers. Fears that key workers in their impossible-to-replace workforce would leave for a job somewhere else rather than participate in the enshittification of the services they worked so hard to build:

https://pluralistic.net/2024/04/22/kargo-kult-kaptialism/#dont-buy-it

When those constraints melted away – thanks to decades of official tolerance for monopolies, which led to regulatory capture and victory over the tech workforce – the same mediocre sociopaths found themselves able to pursue their most enshittificatory impulses without fear.

The effects of this are all around us. In This Is Your Phone On Feminism, the great Maria Farrell describes how audiences at her lectures profess both love for their smartphones and mistrust for them. Farrell says, "We love our phones, but we do not trust them. And love without trust is the definition of an abusive relationship":

https://conversationalist.org/2019/09/13/feminism-explains-our-toxic-relationships-with-our-smartphones/

I (re)discovered this Farrell quote in a paper by Robin Berjon, who recently co-authored a magnificent paper with Farrell entitled "We Need to Rewild the Internet":

https://www.noemamag.com/we-need-to-rewild-the-internet/

The new Berjon paper is narrower in scope, but still packed with material examples of the way the internet goes wrong and how it can be put right. It's called "The Fiduciary Duties of User Agents":

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3827421

In "Fiduciary Duties," Berjon focuses on the technical term "user agent," which is how web browsers are described in formal standards documents. This notion of a "user agent" is a holdover from a more civilized age, when technologists tried to figure out how to build a new digital space where technology served users.

A web browser that's a "user agent" is a comforting thought. An agent's job is to serve you and your interests. When you tell it to fetch a web-page, your agent should figure out how to get that page, make sense of the code that's embedded in it, and render the page in a way that represents its best guess of how you'd like the page seen.

For example, the user agent might judge that you'd like it to block ads. More than half of all web users have installed ad-blockers, constituting the largest consumer boycott in human history:

https://doc.searls.com/2023/11/11/how-is-the-worlds-biggest-boycott-doing/

Your user agent might judge that the colors on the page are outside your visual range. Maybe you're colorblind, in which case, the user agent could shift the gamut of the colors away from the colors chosen by the page's creator and into a set that suits you better:

https://dankaminsky.com/dankam/

Or maybe you (like me) have a low-vision disability that makes low-contrast type difficult to impossible to read, and maybe the page's creator is a thoughtless dolt who's chosen light grey-on-white type, or maybe they've fallen prey to the absurd urban legend that not-quite-black type is somehow more legible than actual black type:

https://uxplanet.org/basicdesign-never-use-pure-black-in-typography-36138a3327a6
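One way a user agent could make that legibility call is with the contrast-ratio formula from the W3C's Web Content Accessibility Guidelines (WCAG 2.x), which scores black on white at 21:1 and recommends at least 4.5:1 for ordinary body text. The sketch below uses that published formula; the `pick_text_color` helper and its fall-back-to-black-or-white policy are assumptions made up for this example, not how any particular browser behaves.

```python
def _linear(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg, bg) -> float:
    """WCAG contrast ratio, from 1 (identical colors) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


BLACK, WHITE = (0, 0, 0), (255, 255, 255)


def pick_text_color(page_fg, bg, minimum: float = 4.5):
    """Hypothetical policy: keep the page's text color if it is legible,
    otherwise override it with whichever of black or white contrasts more."""
    if contrast_ratio(page_fg, bg) >= minimum:
        return page_fg
    return max((BLACK, WHITE), key=lambda c: contrast_ratio(c, bg))


light_grey = (170, 170, 170)
print(round(contrast_ratio(light_grey, WHITE), 2))  # well under 4.5
print(pick_text_color(light_grey, WHITE))           # agent overrides to black
```

The page's creator asked for light grey; the agent answers to the reader instead.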

The user agent is loyal to you. Even when you want something the page's creator didn't consider – even when you want something the page's creator violently objects to – your user agent acts on your behalf and delivers your desires, as best as it can.

Now – as Berjon points out – you might not know exactly what you want. Like, you know that you want the privacy guarantees of TLS (the difference between "http" and "https") but don't really understand the internal cryptographic mysteries involved. Your user agent might detect evidence of shenanigans indicating that your session isn't secure, and choose not to show you the web-page you requested.

This is only superficially paradoxical. Yes, you asked your browser for a web-page. Yes, the browser defied your request and declined to show you that page. But you also asked your browser to protect you from security defects, and your browser made a judgment call and decided that security trumped delivery of the page. No paradox needed.

But of course, the person who designed your user agent/browser can't anticipate all the ways this contradiction might arise. Like, maybe you're trying to access your own website, and you know that the security problem the browser has detected is the result of your own forgetful failure to renew your site's cryptographic certificate. At that point, you can tell your browser, "Thanks for having my back, pal, but actually this time it's fine. Stand down and show me that webpage."

That's your user agent serving you, too.
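The pattern described above (refuse by default, explain why, and stand down when the user insists) can be sketched in a few lines. This is an illustrative model, not how any real browser is implemented; the class name, method names and return strings are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LoyalAgent:
    # Hosts the user has explicitly told the agent to show despite warnings.
    overrides: set = field(default_factory=set)

    def decide(self, host: str, certificate_ok: bool) -> str:
        """Decide what to do with a page request for `host`."""
        if certificate_ok:
            return "show"
        if host in self.overrides:
            # The user said "this time it's fine": obey, but keep the warning visible.
            return "show-with-warning"
        # Default: protect the user and tell them why.
        return "block-and-explain"

    def user_override(self, host: str) -> None:
        """Record the user's decision to proceed for this one host."""
        self.overrides.add(host)
```

The design choice that matters is that the override lives with the user and applies only to the host they named. The agent's default is still protective everywhere else.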

User agents can be well-designed or they can be poorly made. The fact that a user agent is designed to act in accord with your desires doesn't mean that it always will. A software agent, like a human agent, is not infallible.

However – and this is the key – if a user agent thwarts your desire due to a fault, that is fundamentally different from a user agent that thwarts your desires because it is designed to serve the interests of someone else, even when that is detrimental to your own interests.

A "faithless" user agent is utterly different from a "clumsy" user agent, and faithless user agents have become the norm. Indeed, as crude early internet clients progressed in sophistication, they grew increasingly treacherous. Most non-browser tools are designed for treachery.

A smart speaker or voice assistant routes all your requests through its manufacturer's servers and uses this to build a nonconsensual surveillance dossier on you. Smart speakers and voice assistants even secretly record your speech and route it to the manufacturer's subcontractors, whether or not you're explicitly interacting with them:

https://www.sciencealert.com/creepy-new-amazon-patent-would-mean-alexa-records-everything-you-say-from-now-on

By design, apps and in-app browsers seek to thwart your preferences regarding surveillance and tracking. An app will even try to figure out if you're using a VPN to obscure your location from its maker, and snitch you out with its guess about your true location.

Mobile phones assign persistent tracking IDs to their owners and transmit them without permission (to its credit, Apple recently switched to an opt-in system for transmitting these IDs, but to its detriment, Apple offers no opt-out from its own tracking, and actively lies about the very existence of this tracking):

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

An Android device running Chrome and sitting inert, with no user interaction, transmits location data to Google every five minutes. This is the "resting heartbeat" of surveillance for an Android device. Ask that device to do any work for you and its pulse quickens, until it is emitting a nearly continuous stream of information about your activities to Google:

https://digitalcontentnext.org/blog/2018/08/21/google-data-collection-research/

These faithless user agents both reflect and enable enshittification. The locked-down nature of the hardware and operating systems for Android and iOS devices means that manufacturers – and their business partners – have an arsenal of legal weapons they can use to block anyone who gives you a tool to modify the device's behavior. These weapons are generically referred to as "IP rights" which are, broadly speaking, the right to control the conduct of a company's critics, customers and competitors:

https://locusmag.com/2020/09/cory-doctorow-ip/

A canny tech company can design its products so that any modification that puts the user's interests above its shareholders' is illegal, a violation of its copyright, patent, trademark, trade secrets, contracts, terms of service, nondisclosure, noncompete, most favored nation, or anticircumvention rights. Wrap your product in the right mix of IP, and its faithless betrayals acquire the force of law.

This is – in Jay Freeman's memorable phrase – "felony contempt of business model." While more than half of all web users have installed an ad-blocker, thus overriding the manufacturer's defaults to make their browser a more loyal agent, no app users have modified their apps with ad-blockers.

The first step of making such a blocker, reverse-engineering the app, creates criminal liability under Section 1201 of the Digital Millennium Copyright Act, with a maximum penalty of five years in prison and a $500,000 fine. An app is just a web-page skinned in sufficient IP to make it a felony to add an ad-blocker to it (no wonder every company wants to coerce you into using its app, rather than its website).

If you know that increasing the invasiveness of the ads on your web-page could trigger mass installations of ad-blockers by your users, it becomes irrational and self-defeating to ramp up your ads' invasiveness. The possibility of interoperability acts as a constraint on tech bosses' impulse to enshittify their products.

The shift to platforms dominated by treacherous user agents – apps, mobile ecosystems, walled gardens – weakens or removes that constraint. As your ability to discipline your agent so that it serves you wanes, the temptation to turn your user agent against you grows, and enshittification follows.

This has been tacitly understood by technologists since the web's earliest days and has been reaffirmed even as enshittification increased. Berjon quotes extensively from "The Internet Is For End-Users," AKA Internet Architecture Board RFC 8890:

Defining the user agent role in standards also creates a virtuous cycle; it allows multiple implementations, allowing end users to switch between them with relatively low costs (…). This creates an incentive for implementers to consider the users' needs carefully, which are often reflected into the defining standards. The resulting ecosystem has many remaining problems, but a distinguished user agent role provides an opportunity to improve it.

And the W3C's Technical Architecture Group echoes these sentiments in "Web Platform Design Principles," which articulates a "Priority of Constituencies" that is supposed to be central to the W3C's mission:

User needs come before the needs of web page authors, which come before the needs of user agent implementors, which come before the needs of specification writers, which come before theoretical purity.

https://w3ctag.github.io/design-principles/

But the W3C's commitment to faithful agents is contingent on its own members' commitment to these principles. In 2017, the W3C finalized "EME," a standard for blocking mods that interact with streaming videos. Nominally aimed at preventing copyright infringement, EME also prevents users from choosing to add accessibility add-ons beyond the ones the streaming service permits. These services may support closed captioning and additional narration of visual elements, but they block tools that adapt video for color-blind users or prevent strobe effects that trigger seizures in users with photosensitive epilepsy.

The fight over EME was the most contentious struggle in the W3C's history, in which the organization's leadership had to decide whether to honor the "priority of constituencies" and make a standard that allowed users to override manufacturers, or whether to facilitate the creation of faithless agents specifically designed to thwart users' desires on behalf of manufacturers:

https://www.eff.org/deeplinks/2017/09/open-letter-w3c-director-ceo-team-and-membership

This fight was settled in favor of a handful of extremely large and powerful companies, over the objections of a broad collection of smaller firms, nonprofits representing users, academics and other parties agitating for a web built on faithful agents. This coincided with the W3C's operating budget becoming entirely dependent on the very large sums its largest corporate members paid.

W3C membership is on a sliding scale, based on a member's size. Nominally, the W3C is a one-member, one-vote organization, but when a highly concentrated collection of very high-value members flexed their muscles, W3C leadership seemingly perceived an existential risk to the organization, and opted to sacrifice the faithfulness of user agents in service to the anti-user priorities of its largest members.

For W3C's largest corporate members, the fight was absolutely worth it. The W3C's EME standard transformed the web, making it impossible to ship a fully featured web-browser without securing permission – and a paid license – from one of the cartel of companies that dominate the internet. In effect, Big Tech used the W3C to secure the right to decide who would compete with them in future, and how:

https://blog.samuelmaddock.com/posts/the-end-of-indie-web-browsers/

Enshittification arises when the everyday mediocre sociopaths who run tech companies are freed from the constraints that act against them. When the web – and its browsers – were a big, contented, diverse, competitive space, it was harder for tech companies to collude to capture standards bodies like the W3C to secure even more dominance. As the web turned into Tom Eastman's "five giant websites filled with screenshots of text from the other four," that kind of collusion became much easier:

https://pluralistic.net/2023/04/18/cursed-are-the-sausagemakers/#how-the-parties-get-to-yes

In arguing for faithful agents, Berjon associates himself with the group of scholars, regulators and activists who call for user agents to serve as "information fiduciaries." Mostly, information fiduciaries come up in the context of user privacy, with the idea that entities that hold a user's data would have the obligation to put the user's interests ahead of their own. Think of a lawyer's fiduciary duty in respect of their clients, to give advice that reflects the client's best interests, even when that conflicts with the lawyer's own self-interest. For example, a lawyer who believes that settling a case is the best course of action for a client is required to tell them so, even if keeping the case going would generate more billings for the lawyer and their firm.

For a user agent to be faithful, it must be your fiduciary. It must put your interests ahead of the interests of the entity that made it or operates it. Browsers, email clients, and other internet software that served as a fiduciary would do things like automatically block tracking (which most email clients don't do, especially webmail clients made by companies like Google, who also sell advertising and tracking).

Berjon contemplates a legally mandated fiduciary duty, citing Lindsey Barrett's "Confiding in Con Men":

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3354129

He describes a fiduciary duty as a remedy for the enforcement failures of the EU's GDPR, a solidly written, and dismally enforced, privacy law. A legally backstopped duty for agents to be fiduciaries would also help us distinguish good and bad forms of "innovation" – innovation in ways of thwarting a user's will is always bad.

Now, the tech giants insist that they are already fiduciaries, and that when they thwart a user's request, that's more like blocking access to a page where the encryption has been compromised than like HAL9000's "I can't let you do that, Dave." For example, when Louis Barclay created "Unfollow Everything," he (and his enthusiastic users) found that automating the process of unfollowing every account on Facebook made their use of the service significantly better:

https://slate.com/technology/2021/10/facebook-unfollow-everything-cease-desist.html

When Facebook shut the service down with blood-curdling legal threats, they insisted that they were simply protecting users from themselves. Sure, this browser automation tool – which just automatically clicked links on Facebook's own settings pages – seemed to do what the users wanted. But what if the user interface changed? What if so many users added this feature to Facebook without Facebook's permission that they overwhelmed Facebook's (presumably tiny and fragile) servers and crashed the system?

These arguments have lately resurfaced with Ethan Zuckerman and Knight First Amendment Institute's lawsuit to clarify that "Unfollow Everything 2.0" is legal and doesn't violate any of those "felony contempt of business model" laws:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/

Sure, Zuckerman seems like a good guy, but what if he makes a mistake and his automation tool does something you don't want? You, the Facebook user, are also a nice guy, but let's face it, you're also a naive dolt and you can't be trusted to make decisions for yourself. Those decisions can only be made by Facebook, whom we can rely upon to exercise its authority wisely.

Other versions of this argument surfaced in the debate over the EU's decision to mandate interoperability for end-to-end encrypted (E2EE) messaging through the Digital Markets Act (DMA), which would let you switch from, say, WhatsApp to Signal and still send messages to your WhatsApp contacts.

There are some good arguments that this could go horribly awry. If it is rushed, or internally sabotaged by the EU's state security services who loathe the privacy that comes from encrypted messaging, it could expose billions of people to serious risks.

But that's not the only argument that DMA opponents made: they also argued that even if interoperable messaging worked perfectly and had no security breaches, it would still be bad for users, because this would make it impossible for tech giants like Meta, Google and Apple to spy on message traffic (if not its content) and identify likely coordinated harassment campaigns. This is literally the identical argument the NSA made in support of its "metadata" mass-surveillance program: "Reading your messages might violate your privacy, but watching your messages doesn't."

This is obvious nonsense, so its proponents need an equally obviously intellectually dishonest way to defend it. When called on the absurdity of "protecting" users by spying on them against their will, they simply shake their heads and say, "You just can't understand the burdens of running a service with hundreds of millions or billions of users, and if I even tried to explain these issues to you, I would divulge secrets that I'm legally and ethically bound to keep. And even if I could tell you, you wouldn't understand, because anyone who doesn't work for a Big Tech company is a naive dolt who can't be trusted to understand how the world works (much like our users)."

Not coincidentally, this is also literally the same argument the NSA makes in support of mass surveillance, and there's a very useful name for it: scalesplaining.

Now, it's totally true that every one of us is capable of lapses in judgment that put us, and the people connected to us, at risk (my own parents gave their genome to the pseudoscience genetic surveillance company 23andMe, which means they have my genome, too). A true information fiduciary shouldn't automatically deliver everything the user asks for. When the agent perceives that the user is about to put themselves in harm's way, it should throw up a roadblock and explain the risks to the user.

But the system should also let the user override it.

This is a contentious statement in information security circles. Users can be "socially engineered" (tricked), and even the most sophisticated users are vulnerable to this:

https://pluralistic.net/2024/02/05/cyber-dunning-kruger/#swiss-cheese-security

The only way to be certain a user won't be tricked into taking a course of action is to forbid that course of action under any circumstances. If there is any means by which a user can flip the "are you very sure?" circuit-breaker back on, then the user can be tricked into using that means.

This is absolutely true. As you read these words, all over the world, vulnerable people are being tricked into speaking the very specific set of directives that cause a suspicious bank-teller to authorize a transfer or cash withdrawal that will result in their life's savings being stolen by a scammer:

https://www.thecut.com/article/amazon-scam-call-ftc-arrest-warrants.html

We keep making it harder for bank customers to make large transfers, but so long as it is possible to make such a transfer, the scammers have the means, motive and opportunity to discover how the process works, and they will go on to trick their victims into invoking that process.

Beyond a certain point, making it harder for bank depositors to harm themselves creates a world in which people who aren't being scammed find it nearly impossible to draw out a lot of cash for an emergency and where scam artists know exactly how to manage the trick. After all, non-scammers only rarely experience emergencies and thus have no opportunity to become practiced in navigating all the anti-fraud checks, while the fraudster gets to run through them several times per day, until they know them even better than the bank staff do.

This is broadly true of any system intended to control users at scale – beyond a certain point, additional security measures are trivially surmounted hurdles for dedicated bad actors and nearly insurmountable hurdles for their victims:

https://pluralistic.net/2022/08/07/como-is-infosec/

At this point, we've had a couple of decades' worth of experience with technological "walled gardens" in which corporate executives get to override their users' decisions about how the system should work, even when that means reaching into the user's own computer and compelling it to thwart the user's desire. The record is inarguable: while companies often use those walls to lock bad guys out of the system, they also use the walls to lock their users in, so that they'll be easy pickings for the tech company that owns the system:

https://pluralistic.net/2023/02/05/battery-vampire/#drained

This is neatly predicted by enshittification's theory of constraints: when a company can override your choices, it will be irresistibly tempted to do so for its own benefit, and to your detriment.

What's more, the mere possibility that you can override the way the system works acts as a disciplining force on corporate executives, forcing them to reckon with your priorities even when these are counter to their shareholders' interests. If Facebook is genuinely worried that an "Unfollow Everything" script will break its servers, it can solve that by giving users an unfollow everything button of its own design. But so long as Facebook can sue anyone who makes an "Unfollow Everything" tool, it has no reason to give its users such a button, because it would give users more control over their Facebook experience, including the controls needed to use Facebook less.

It's been more than 20 years since Seth Schoen and I got a demo of Microsoft's first "trusted computing" system, with its "remote attestations," which would let remote servers demand and receive accurate information about what kind of computer you were using and what software was running on it.

This could be beneficial to the user – you could send a "remote attestation" to a third party you trusted and ask, "Hey, do you think my computer is infected with malicious software?" Since the trusted computing system produced its report on your computer using a sealed, separate processor that the user couldn't directly interact with, any malicious code you were infected with would not be able to forge this attestation.

But this remote attestation feature could also be used to allow Microsoft to block you from opening a Word document with LibreOffice, Apple Pages, or Google Docs, or it could be used to allow a website to refuse to send you pages if you were running an ad-blocker. In other words, it could transform your information fiduciary into a faithless agent.

Seth proposed an answer to this: "owner override," a hardware switch that would allow you to force your computer to lie on your behalf, when that was beneficial to you, for example, by insisting that you were using Microsoft Word to open a document when you were really using Apple Pages:

https://web.archive.org/web/20021004125515/http://vitanuova.loyalty.org/2002-07-05.html
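To make the idea concrete, here is a toy model of remote attestation with an owner override. It is a sketch under loud assumptions: real trusted-computing hardware uses asymmetric signatures and a certificate chain, while this example substitutes a shared-secret HMAC from Python's standard library to stay short. The class, method and variable names are hypothetical and do not come from Microsoft's design or Seth's proposal.

```python
import hashlib
import hmac


class SealedProcessor:
    """Toy stand-in for the separate chip that produces attestations."""

    def __init__(self, device_key: bytes):
        self._key = device_key      # software on the main CPU never sees this
        self._override = None       # set only via the owner's physical switch

    def set_owner_override(self, claimed_software):
        """Owner override: the owner chooses what the report will say."""
        self._override = claimed_software

    def attest(self, actual_software: str, nonce: bytes):
        """Return (report, tag) for a verifier that supplied `nonce`."""
        reported = self._override or actual_software
        tag = hmac.new(self._key, nonce + reported.encode(), hashlib.sha256).hexdigest()
        return reported, tag


def verify(device_key: bytes, nonce: bytes, reported: str, tag: str) -> bool:
    """Verifier side: check that the report was produced by the sealed chip."""
    expected = hmac.new(device_key, nonce + reported.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)
```

Without the override, malware cannot forge a report, because it lacks the key. With the override, the owner (and only the owner) can have the chip vouch for "Microsoft Word" while running something else, and the remote server cannot tell the difference.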

Seth wasn't naive. He knew that such a system could be exploited by scammers and used to harm users. But Seth calculated – correctly! – that the risks of having a key to let yourself out of the walled garden were less than being stuck in a walled garden where some corporate executive got to decide whether and when you could leave.

Tech executives never stopped questing after a way to turn your user agent from a fiduciary into a traitor. Last year, Google toyed with the idea of adding remote attestation to web browsers, which would let services refuse to interact with you if they thought you were using an ad blocker:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai

The reasoning for this was incredible: by adding remote attestation to browsers, they'd be creating "feature parity" with apps – that is, they'd be making it as practical for your browser to betray you as it is for your apps to do so (note that this is the same justification that the W3C gave for creating EME, the treacherous user agent in your browser – "streaming services won't allow you to access movies with your browser unless your browser is as enshittifiable and authoritarian as an app").

Technologists who work for giant tech companies can come up with endless scalesplaining explanations for why their bosses, and not you, should decide how your computer works. They're wrong. Your computer should do what you tell it to do:

https://www.eff.org/deeplinks/2023/08/your-computer-should-say-what-you-tell-it-say-1

These people can kid themselves that they're only taking away your power and handing it to their boss because they have your best interests at heart. As Upton Sinclair told us, it's impossible to get someone to understand something when their paycheck depends on them not understanding it.

The only way to get a tech boss to consistently treat you well is to ensure that if they stop, you can quit. Anything less is a one-way ticket to enshittification.

If you'd like an essay-formatted version of this post to read or share, you can find it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog.

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

345 notes

Text

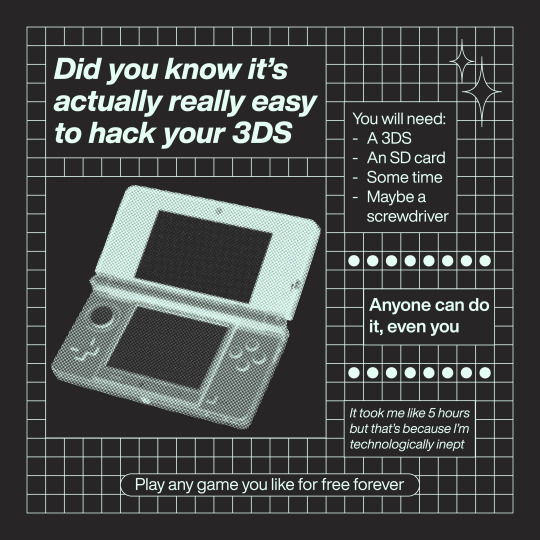

I made this a couple of months ago but. hack your 3ds. do it right now.

24K notes

Text

"Capitalism breeds innovation" girl there are only five websites left and they all look the same

67K notes
