#principles of neural information theory

Text

Dr James V Stone - Principles of Neural Information Theory

#books#book#principles of neural information theory#for later#bookmarked#seems like an interesting read#cog sci#neurosci#science#STEM

8 notes

Text

The Conscious Universe Theory

This theory posits that the universe’s structure and function are intrinsically tied to the emergence and propagation of consciousness. Drawing from multiple scientific and metaphysical concepts, it suggests that consciousness is a fundamental property of the cosmos, not merely a byproduct of biological evolution.

1. Cosmic and Neurological Parallels:

The large-scale structure of the universe, often referred to as the cosmic web, shares striking similarities with the networked patterns of neurons in the human brain. Both structures consist of vast, interconnected nodes (galaxies in the cosmic web, neurons in the brain) that exchange information. This resemblance implies that the universe may be organized according to principles that also govern neural networks, suggesting a potential for universal consciousness.

2. The Volitional Star Hypothesis:

Recent developments in the electromagnetic theory of consciousness suggest that the complexity of an entity’s electromagnetic field correlates with its level of consciousness. Stars, such as our sun, generate highly complex electromagnetic fields far more intricate than those of the human brain. If consciousness is indeed tied to the complexity of these fields, it is plausible to hypothesize that stars, like our sun, possess a form of awareness. The theory extends this idea to propose that human consciousness, composed of matter derived from stardust, could be viewed as an extension or manifestation of solar consciousness. This relationship could also explain biological phenomena, such as the circadian rhythm, which aligns closely with the solar cycle.

3. Consciousness as a Cosmic Network:

The interconnected nature of stars, galaxies, and other cosmic entities through gravitational and electromagnetic interactions is analogous to neurons exchanging signals in a brain. This “cosmic communication” suggests that the universe functions as a vast, conscious network. Just as neural networks give rise to consciousness in biological organisms, the cosmic web may enable a form of universal consciousness, where information is exchanged across vast distances between celestial bodies.

4. Matter as Frozen Starlight:

Since all matter in the universe is composed of elements forged in stars, it can be said that matter is, in a sense, “frozen starlight.” This reframes the relationship between consciousness and matter, suggesting that the consciousness observed in living beings may be an extension of stellar or cosmic consciousness, rooted in the fundamental materials of the universe.

5. Aeonic Consciousness:

Gnostic thought introduces the concept of Aeons, which are conceived as living, conscious universes or cosmic beings. This aligns with the idea that consciousness is not confined to individual entities but may exist at multiple scales, from stars to galaxies to entire universes. Each universe or dimension could represent a distinct form of consciousness, collectively contributing to the larger multiverse’s sentient awareness.

6. Consciousness as the Purpose of the Universe:

Given the structural and functional parallels between the universe and neural systems, this theory suggests that the universe itself is oriented toward the development and experience of consciousness. Consciousness, therefore, is not an incidental outcome of evolution but a fundamental force guiding the structure and dynamics of the cosmos. The universe may be self-aware, experiencing itself through stars, biological systems, and potentially through other cosmic entities.

Conclusion:

In summary, this theory reimagines the universe as a conscious entity in which stars, matter, and living beings are interconnected expressions of a broader, universal consciousness. This model suggests that the universe exists for the purpose of unfolding and expanding consciousness across various scales, from the quantum to the cosmic.

by ᐯ丨丂丨ㄥㄩ乂 - ©️ 2024

#chaos magic#chaos magick#experimental occultism#magic#magick#magick theory#occult#occultism#sigil magic#cyber sigilism#metaphysics#physics#consciouness#conscious universe theory#volitional star theory#cosmic web#neural network#theory

13 notes

Text

rn attempts to use AI in anime have mostly been generating backgrounds in a short film by Wit, and the results were pretty awful. garbage in garbage out though. the question is whether the tech can be made useful - keeping interesting artistic decisions in the hands of humans and automating the tedious parts, and giving enough artistic control to achieve a coherent direction and clean up the jank.

for example, if someone figured out how to make a really good AI inbetweener, with consistent volumes and artist control over spacing, that would be huge. inbetweening is the part of 2D animation that nobody especially wants to do if they can help it; it's relatively mindless application of principle, artistic decisions are limited (I recall Felix Colgrave saying something very witty to this effect but I don't have it to hand). but it's also really important to do well - a huge part of KyoAni's magic recipe is valuing inbetweeners and treating it as a respectable permanent position instead of a training position. good inbetweening means good movement. but everywhere outside KyoAni, it mostly gets outsourced to the bottom of the chain, mainly internationally to South Korea and the Philippines. in some anime studios it's been explicitly treated as a training position and they charge for the use of a desk if you take too long to graduate to a key animator.

some studios like Science Saru have been using vector animation in Flash to enable automated inbetweening. the results have a very distinct look - they got a lot better at it over time but it can feel quite uncanny. Blender Grease Pencil, which is also vector software, also gives you automated inbetweening, though it's rather fiddly to set up since it requires the two drawings to have the same stroke count and order, so it's best used if you've sculpted the lines rather than redrawn them.
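as a rough sketch of why matching stroke count and order matters for automated vector inbetweening: if stroke i in one key drawing corresponds to stroke i in the other, an inbetween can be made by blending matched points. this is only an illustration, not how Flash or Grease Pencil actually implement it; the `inbetween` function, the point-list stroke format and the requirement that matched strokes share a point count are all assumptions made for the example.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]  # hypothetical format: a stroke is an ordered list of (x, y) points


def inbetween(key_a: List[Stroke], key_b: List[Stroke], t: float) -> List[Stroke]:
    """Linearly blend two key drawings; t=0 returns key_a, t=1 returns key_b."""
    if len(key_a) != len(key_b):
        raise ValueError("drawings must have the same stroke count")
    result = []
    # Strokes are paired purely by position, which is why stroke order matters.
    for stroke_a, stroke_b in zip(key_a, key_b):
        if len(stroke_a) != len(stroke_b):
            raise ValueError("matched strokes must have the same point count")
        result.append([
            ((1 - t) * ax + t * bx, (1 - t) * ay + t * by)
            for (ax, ay), (bx, by) in zip(stroke_a, stroke_b)
        ])
    return result


# One stroke per drawing: a horizontal line that rises and tilts.
key_a = [[(0.0, 0.0), (2.0, 0.0)]]
key_b = [[(0.0, 2.0), (2.0, 4.0)]]
middle = inbetween(key_a, key_b, 0.5)
print(middle)
```

a plain linear blend like this ignores arcs and volumes, and changing `t` unevenly between frames is the only control over spacing, which is part of why the results can look uncanny.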

however, most animators prefer to work in raster rather than vector, which is harder to inbetween automatically.

AI video interpolation tools also exist, though they draw a lot of ire from animators who see those '60fps anime' videos which completely shit all over the timing and spacing and ruin the feeling and weight of the animation, lack any understanding of animating on 2s/3s/4s in the source, and often create ugly incomprehensible mushy inbetweens which only work at all because they're on screen so briefly.

a better approach would be to create inbetweens earlier in the pipeline when the drawings are clean and the AI doesn't have to try to replicate compositing and photography. in theory this is a well posed problem for training a neural network, you could give it lots of examples of key drawing input and inbetween output. probably you'd need some way to inform the AI about matching features of the drawing, the way that key animators will often put a number on each lock of hair to help the inbetweener keep track of which way it's going. you'd also need a way to communicate arcs and spacing. but that all sounds pretty solvable.

this would not be good news for job security at outsourcing studios, obviously - these aren't particularly good jobs, what with the poor pay and extreme hours, but they do keep a bunch of people housed and fed, people who are essential to anime yet already treated as disposable footnotes by the industry. it also would be another nail in the coffin of inbetweening's traditional role as a school of animation drawing skills for future key animators. on the other hand, it would be incredible news for bedroom animators, allowing much larger and more ambitious independent traditional animation - as long as the cheap compute still exists. hard to say how things would fall in the long run. ultimately the only solution is to break copies-of-art as a commodity and find another way to divert a proportion of the social surplus to artistic expression.

i feel like this kind of tool will exist sooner or later. not looking forward to the discourse bomb when the first real AI-assisted anime drops lmao

37 notes

Text

The science of a Bidirectional Brain Computer Interface with a function to work from a distance is mistakenly reinvented by laymen as the folklore of Remote Neural Monitoring and Controlling

Critical thinking

How good is your information when you call it RNM? It’s very bad. Is your information empirically validated when you call it RNM? No, it’s not empirically validated.

History of the RNM folklore

In 1992, a layman, Mr. John St. Clair Akwei, tried to explain a Bidirectional Brain Computer Interface (BCI) technology, which he didn't really understand. He called his theory Remote Neural Monitoring. Instead of using the scientific method, Akwei came up with his idea based on water. Lacking solid evidence, he presented his theory as if it were fact. Without any real studies to back him up, Akwei twisted facts, projected his views, and blamed the NSA. He lost his court case and was sadistically disabled by medical practitioners using disabling pills. They only call him something he is not. Since then, his theory has gained many followers. Akwei's explanation is incorrect and shallow, preventing proper problem-solving. As a result, people waste their lifetimes searching for a true scientific explanation that can help solve this issue. When you call it RNM, the same will be done to you as to Mr. Akwei (calling you something you are not and sadistically disabling you with pills).

Critical thinking

Where does good research-based information come from? It comes from a university or from an R&D lab.

State of the art in Bidirectional BCI

Science-based explanation using Carnegie Mellon University

Based on the definition of BCI (link to a scientific paper included), it’s a Bidirectional Brain Computer Interface for having a computer interact with the brain, and it’s extended with only one new function: working from a distance.

It’s the non-invasive BCI type, not an implanted BCI. The software running on the computer is a sense and respond system. It has a command/function that weaponizes the device for clandestine sabotage against any person. It’s not from Tesla, it’s from an R&D lab of some secret service that needs it to do surveillance, sabotage and assassinations with plausible deniability.

You need good quality information that is empirically validated, and such information comes from a university or from an R&D lab of some large organization. It won’t come from your own explanations because you are not empirically validating them which means you aren’t using the scientific method to discover new knowledge (it’s called basic research).

Goal: Detect a Bidirectional BCI extended to work from a distance (it’s called applied research, solving a problem using existing good quality information that is empirically validated)

Strategy: Continuous improvement of Knowledge Management (knowledge transfer/sharing/utilization from university courses to the community) to come up with hypotheses + experimentation with Muse2 to test your hypotheses (and share when they are proved).

This strategy can use existing options as hypotheses, which is then applied research. Or it can come up with new, original hypotheses and discover new knowledge by testing them (which is basic research). It can combine both as needed.

Carnegie Mellon University courses from Biomedical Engineering (BME)

Basics (recommended - make sure you read):

1. 42665 | Brain-Computer Interface: Principles and Applications:

Intermediate stuff (optional - some labs to practice):

2. 42783 | Neural Engineering laboratory - Neural engineering involves the practice of using tools we use to measure and manipulate neural activity: https://www.coursicle.com/cmu/courses/BMD/42783/

Expert stuff (only if you want to know the underlying physics behind BCI):

3. 18612 | Neural Technology: Sensing and Stimulation (this is the physics of brain cells, explaining how they can be read from and written into) https://www.andrew.cmu.edu/user/skkelly/18819e/18819E_Syllabus_F12.pdf

You have to read those books to facilitate knowledge transfer from the university to you.

With the above good quality knowledge that is empirically validated, the Bidirectional BCI can likely be detected (meaning proved) and in the process, new knowledge about it can be discovered.

Purchase a cheap unidirectional BCI device for experiments at home

Utilize all newly gained knowledge from the above books in practice to make educated guesses based on the books and then empirically validate them with Muse2. After it is validated, share your good quality, empirically validated information about the undisclosed Bidirectional BCI with the community (incl. the steps to validate it).

Python Project

Someone who knows Python should try to train an AI model to detect when what you hear is not from your ear drums. Here is my initial code: https://github.com/michaloblastni/insultdetector You can try this and send me your findings and improvements.

How to do research

Basic research makes progress by doing a literature review regarding a phenomenon, then identifying main explanatory theories, making new hypotheses and conducting experiments to find what happens. When new hypotheses are proved the existing knowledge is extended. New findings can be contributed back to extend existing theories.

In practice, you will review existing scientific theories that explain e.g. the biophysics behind sensing and stimulating brain activity, and you will try to extend those theories by coming up with new hypotheses and experimentally validating them. And then, you will repeat the cycle to discover more new knowledge. When it's a lot of iterations, you need a team.

In applied research, you start with a problem that needs solving. You do a literature review and study previous solutions to the problem. Then, you should synthesize a new solution from the existing ones, and it should involve extending them in a meaningful way. Your new solution should solve the problem in some measurably better way. You have to demonstrate what your novel solution does better, e.g. by measuring it, or by proving it in some other way.

In practice, you will do a literature review of past designs of Bidirectional BCI and make them your design options. Then, you will synthesize a new design option from all the design options you reviewed. The new design will get you closer toward making a Bidirectional BCI work from a distance. Then, you will repeat the cycle to improve upon your design further until you eventually reach the goal. When it's a lot of iterations, you need a team.

Using a Bidirectional BCI device to achieve synthetic telepathy

How to approach learning, researching and life

At the core, the brain is a biological neural network. You make your own connections in it stronger when you repeatedly think of something (e.g. while watching an expert researcher on youtube). And your connections weaken and disconnect/reconnect/etc. when you stop thinking of something (e.g. you stop watching an expert on how to research and you start watching negative news instead).

You train yourself by watching/listening/hanging out with people, and by reading about/writing about/listening about/doing certain tasks, and also by other means.

The brain has a very limited way of functioning because when you stop repeatedly thinking of something it soon starts disappearing. Some people call it knowledge evaporation. It’s the disconnecting and reconnecting of neurons in your biological neural network. Old knowledge is gone and new knowledge is formed. It’s called neuroplasticity. It’s the ability of neurons to disconnect, connect elsewhere, etc. based on what you are thinking/reading/writing/listening/doing.

Minimize complexity by starting from the big picture (i.e. a theory that explains a phenomenon). Then, proceed and do problem solving with a top-down decomposition into subproblems. Focus only on key information for the purpose of each subproblem and skip other details. Solve separate subproblems separately.

2 notes

Text

Aspects of the Philosophy of Complexity

The philosophy of complexity is an interdisciplinary field that explores the fundamental principles underlying complex systems and phenomena in nature, society, and technology. It seeks to understand the emergent properties, self-organization, and dynamics of complex systems, as well as their implications for philosophy, science, and society. Some key aspects of the philosophy of complexity include:

Emergence: Emergence refers to the phenomenon where complex systems exhibit properties and behaviors that cannot be understood by analyzing their individual components in isolation. Instead, these properties arise from the interactions and relationships between the components of the system. Emergent phenomena are often characterized by novel, higher-level patterns and structures that cannot be reduced to the properties of their constituent parts.

Self-Organization: Self-organization is the process through which complex systems spontaneously organize and adapt to their environment without external guidance or control. It involves the emergence of ordered structures, patterns, or behaviors from the interactions between the system's components. Self-organization is a fundamental feature of many natural systems, including biological organisms, ecosystems, and social networks.

Nonlinearity: Nonlinearity refers to the property of complex systems where the relationship between cause and effect is not proportional or predictable. Small changes in the system's initial conditions or parameters can lead to disproportionately large and unpredictable outcomes, known as nonlinear dynamics or "chaos." Nonlinear phenomena are ubiquitous in nature and can give rise to diverse patterns of behavior, such as fractals, turbulence, and phase transitions.

Networks and Interconnectedness: Complex systems often exhibit network structures, where components are interconnected through networks of relationships or interactions. Network theory explores the topology, connectivity, and dynamics of these networks, revealing important insights into the organization and functioning of complex systems across diverse domains, including social networks, neural networks, and ecological networks.

Adaptive Systems: Complex systems are often adaptive, meaning they can adjust and evolve in response to changes in their environment or internal dynamics. Adaptation involves the acquisition of new information, the modification of behaviors or structures, and the selection of advantageous traits through a process of feedback and learning. Adaptive systems are resilient and capable of self-regulation, enabling them to survive and thrive in changing conditions.

Holism and Reductionism: The philosophy of complexity challenges traditional reductionist approaches to understanding the world, which seek to explain complex phenomena by breaking them down into simpler, more manageable parts. Instead, complexity theory emphasizes the holistic and integrative nature of complex systems, emphasizing the importance of studying systems in their entirety and considering the interactions between their constituent elements.

Overall, the philosophy of complexity provides a framework for understanding the interconnectedness, diversity, and dynamism of the world around us, offering valuable insights into the nature of reality, cognition, and social organization.
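The sensitivity to initial conditions described under Nonlinearity can be illustrated with a short numerical sketch. The example below uses the logistic map, a standard textbook system that is not mentioned in the post itself; the parameter value r = 4, the starting points, and the function name `logistic_trajectory` are all choices made for this illustration.

```python
def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 100) -> list:
    """Iterate the logistic map x -> r * x * (1 - x) and return every visited value."""
    values = []
    x = x0
    for _ in range(steps):
        x = r * x * (1 - x)
        values.append(x)
    return values


# Two starting points that differ by only one part in a billion.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-9)

# Distance between the two trajectories at each step.
gaps = [abs(u - v) for u, v in zip(a, b)]
print("gap after 1 step:", gaps[0])
print("largest gap over 100 steps:", max(gaps))
```

The two trajectories start almost indistinguishable, yet within a few dozen iterations they differ by an amount comparable to the full range of the system, which is the disproportionate response to a small change that the paragraph describes.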

#philosophy#epistemology#knowledge#learning#chatgpt#education#metaphysics#Emergence#Self-organization#Nonlinearity#Networks#Adaptive systems#Holism#Reductionism#Complex systems#Philosophy of science#Systems theory

2 notes

Text

The Way the Brain Learns is Different from the Way that Artificial Intelligence Systems Learn - Technology Org

New Post has been published on https://thedigitalinsider.com/the-way-the-brain-learns-is-different-from-the-way-that-artificial-intelligence-systems-learn-technology-org/

Researchers from the MRC Brain Network Dynamics Unit and Oxford University’s Department of Computer Science have set out a new principle to explain how the brain adjusts connections between neurons during learning.

This new insight may guide further research on learning in brain networks and may inspire faster and more robust learning algorithms in artificial intelligence.

Study shows that the way the brain learns is different from the way that artificial intelligence systems learn. Image credit: Pixabay

The essence of learning is to pinpoint which components in the information-processing pipeline are responsible for an error in output. In artificial intelligence, this is achieved by backpropagation: adjusting a model’s parameters to reduce the error in the output. Many researchers believe that the brain employs a similar learning principle.
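As a minimal sketch of the backpropagation idea described above, the example below trains a chain of two weights so that their combined output matches a target. It is an illustration only and is not taken from the study; the function name `train`, the two-weight model, the learning rate and the single training sample are all assumptions chosen to keep the arithmetic visible.

```python
def train(samples, w1=0.5, w2=0.5, lr=0.05, epochs=200):
    """Fit y = w2 * (w1 * x) to the samples by gradient descent on squared error."""
    for _ in range(epochs):
        for x, target in samples:
            # Forward pass through the two-stage pipeline.
            h = w1 * x
            y = w2 * h
            err = y - target
            # Backward pass: the chain rule assigns each weight its share of the error.
            grad_w2 = 2 * err * h
            grad_w1 = 2 * err * w2 * x
            # Adjust both weights in the direction that reduces the error.
            w1 -= lr * grad_w1
            w2 -= lr * grad_w2
    return w1, w2


# A single hypothetical sample: input 1.0 should produce output 2.0.
w1, w2 = train([(1.0, 2.0)])
print("learned product w1 * w2:", w1 * w2)
```

The backward pass is where responsibility for the output error is traced to each component: the gradient for `w1` depends on `w2`, because the first stage only affects the output through the second.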

However, the biological brain is superior to current machine learning systems. For example, we can learn new information by just seeing it once, while artificial systems need to be trained hundreds of times with the same pieces of information to learn them.

Furthermore, we can learn new information while maintaining the knowledge we already have, while learning new information in artificial neural networks often interferes with existing knowledge and degrades it rapidly.

These observations motivated the researchers to identify the fundamental principle employed by the brain during learning. They looked at some existing sets of mathematical equations describing changes in the behaviour of neurons and in the synaptic connections between them.

They analysed and simulated these information-processing models and found that they employ a fundamentally different learning principle from that used by artificial neural networks.

In artificial neural networks, an external algorithm tries to modify synaptic connections in order to reduce error, whereas the researchers propose that the human brain first settles the activity of neurons into an optimal balanced configuration before adjusting synaptic connections.

The researchers posit that this is in fact an efficient feature of the way that human brains learn. This is because it reduces interference by preserving existing knowledge, which in turn speeds up learning.

Writing in Nature Neuroscience, the researchers describe this new learning principle, which they have termed ‘prospective configuration’. They demonstrated in computer simulations that models employing this prospective configuration can learn faster and more effectively than artificial neural networks in tasks that are typically faced by animals and humans in nature.

The authors use the real-life example of a bear fishing for salmon. The bear can see the river and it has learnt that if it can also hear the river and smell the salmon it is likely to catch one. But one day, the bear arrives at the river with a damaged ear, so it can’t hear it.

In an artificial neural network information processing model, this lack of hearing would also result in a lack of smell (because while learning there is no sound, backpropagation would change multiple connections including those between neurons encoding the river and the salmon) and the bear would conclude that there is no salmon, and go hungry.

But in the animal brain, the lack of sound does not interfere with the knowledge that there is still the smell of the salmon, therefore the salmon is still likely to be there for catching.

The researchers developed a mathematical theory showing that letting neurons settle into a prospective configuration reduces interference between information during learning. They demonstrated that prospective configuration explains neural activity and behaviour in multiple learning experiments better than artificial neural networks.

Lead researcher Professor Rafal Bogacz of MRC Brain Network Dynamics Unit and Oxford’s Nuffield Department of Clinical Neurosciences says: ‘There is currently a big gap between abstract models performing prospective configuration, and our detailed knowledge of anatomy of brain networks. Future research by our group aims to bridge the gap between abstract models and real brains, and understand how the algorithm of prospective configuration is implemented in anatomically identified cortical networks.’

The first author of the study Dr Yuhang Song adds: ‘In the case of machine learning, the simulation of prospective configuration on existing computers is slow, because they operate in fundamentally different ways from the biological brain. A new type of computer or dedicated brain-inspired hardware needs to be developed, that will be able to implement prospective configuration rapidly and with little energy use.’

Source: University of Oxford

#A.I. & Neural Networks news#algorithm#Algorithms#Anatomy#Animals#artificial#Artificial Intelligence#artificial intelligence (AI)#artificial neural networks#Brain#Brain Connectivity#brain networks#Brain-computer interfaces#brains#bridge#change#computer#Computer Science#computers#dynamics#ear#employed#energy#fishing#Fundamental#Future#gap#Hardware#hearing#how

2 notes

Text

I will now liveblog me reading this because writing it down and then writing down my thoughts helps me process it, and I could use paper but I would wake up my wife with a light.

The nervous system regulates immunity and inflammation.

I suppose that makes sense. Most things are regulated by the nervous system--if not the brain, then the other areas of it (which relates to my theory of Somatic Cognition).

But I always thought the immune system was more mechanical than neural. Pathogens alert the immune system in the same way a key alerts a lock--by mechanically fitting into a slot. How is the immune system regulated by neurons? How do they communicate with the nervous system? Are they able to communicate via the blood, sending chemical messages long-distance? Are some immune cells solely created to ferry messages to neurons? Is there a part of the nervous system that is specifically designed to serve as a translator between the dutiful immune cells and the overseeing, entire nervous system?

The molecular detection of pathogen fragments, cytokines, and other immune molecules by sensory neurons generates immunoregulatory responses through efferent autonomic neuron signaling.

The molecular detection of...stuff...by sensory neurons generates a response. Mmmm :/ A response is generated when sensory neurons detect molecules such as pathogen fragments, cytokines, and other immune molecules (slightly rephrasing it to trick my brain into trying to comprehend it twice lol).

Detection of immune molecules by neurons generates responses through efferent autonomic neuron signaling >:/ Detection generates responses through efferent autonomic neuron signaling! >:3

What is "efferent autonomic neuron signalling?" Well, it at least includes the immune system's reaction to detecting molecules that warrant a response! I see!!

And these molecules can include pathogen fragments, from pathogens already partially destroyed or otherwise dead. It can include cytokines too. And others :3

The functional organization of this neural control is based on principles of reflex regulation.

Reflex regulation :0 I do not have this vocabulary term in my database. Also, why did they specify that it's the functional organization? As opposed to what? Theoretical? Oh, opposed to form! To structure! As in, the structural organization isn't necessarily based on the reflex regulation. The hardware and software aren't necessarily intertwined or similarly organized. Fascinating--I love to study the boundary between form and function.

Reflexes involving the vagus nerve and other nerves have been therapeutically explored in models of inflammatory and autoimmune conditions, and recently in clinical settings.

Reflexes have been therapeutically explored for inflammatory and autoimmune conditions? :0 I'm not certain I've heard of that!

(Hmmm... Inflammation is definitely related to immune system stuff, but it feels closer to the nervous system for some reason... Is that based on knowledge I have, or just a random hunch? Oh! It's because *pain* is associated with inflammation, and pain is definitely neurological. Oh gosh, is it? I might be obfuscating some valuable nuances by assuming pain is not immunological.)

The brain integrates neuro-immune communication, and brain function is altered in diseases characterized by peripheral immune dysregulation and inflammation.

:O brain function is altered?? Did I know that?? That feels like novel information!

...Well, of course the brain can react to immune issues, right? There is a conscious reaction to being sick. A person can try to hide it to avoid negative consequences, or be open about it to receive care. That seems more like a conscious or subconscious choice than "brain function alteration," but I shouldn't trust any preconceived ideas without at least a cursory glance at the data source and associated logic.

I wonder if the SNS or PNS gets involved? It would make sense if the "something is dangerous, shut off all excess functionality and focus on survival" system activated; but equally I would think it logical if the "it's time to rest and recover and maintain homeostasis, time to employ the full body towards the task of life; digest things, heal wounds, use minimal energy to conserve it for tasks that increase longevity" system was in charge. I think I'll keep reading the article rather than looking it up.

Oh, I understand this next part and don't have any extra thoughts on it. I'll skip ahead to the next part I wish to spend extra time on.

Studying neuro-immune interactions and communication generates conceptually novel insights of interest for therapeutic development.

I sure hope it does!

:3 My brain has worked up an appetite. Snack time hehe.

3 notes

Text

Some Thoughts on Episode 16 (Mobile Suit Gundam: The Witch from Mercury)

(very long + spoilers [including a few for Revolutionary Girl Utena & Fullmetal Alchemist])

*I hope y’all aren’t sick of hearing Utena comparisons, because there’s going to be quite a few this episode.

*The episode starts by revisiting Prospera's conversation with Belmeria from Ep. 14, and expands on it.

*Ericht was apparently perfectly synced to the data storm, so blue Permet lines on a Gundam pilot seem to mean they’re unharmed. However, the various hazards of space, that are the very basis for why Vanadis was pursuing the GUND format in the first place, were too much for the body of a child to endure, and Ericht would have died if Prospera hadn't transferred her biometric data into Lfrith.

*’Biometric data’, in modern parlance, refers to things like fingerprints, retinal scans, blood drawings, etc., essentially information about a person’s body, often used for security identification (if Suletta turns out to be a clone like some have conjectured, I feel like this might be a factor).

*So, it seems like Prospera isn't the worst parent ever in animation history, just a high-ranking one (and, ironically, more for how she treats Suletta, than how she preserved Eri’s life).

*Prospera needs for Aerial to achieve a Permet score of 8, and to expand the range of the data via Quiet Zero, so that “...Eri…can live in freedom!” Prospera is rather vague on what Eri’s “freedom” entails, and since she gestures like a supervillain while talking about this, it’s probably Not Good (except for Eri presumably).

*Belmeria refuses to help her, citing the Vanadis Institute’s principles. Prospera indirectly calls her a hypocrite, and reveals that she knows Belmeria proposed the Neural Expansion Theory, that the stress of data storms could be resisted by enhanced humans with artificial nervous systems, which Dr. Cardo rejected. In other words, not only has Belmeria been helping Peil make enhanced humans, she was the one who introduced them to the idea in the first place.

*I have to admit I’ve lost some respect for Bel with this revelation, but hopefully her guilt will prompt her to make better choices soon.

*12 people were killed by Norea and Sophie’s attack on Asticassia, and news has leaked out to the general public about the attack on Plant Quetta as well. In addition, Benerit security’s retaliation against Earthians has resulted in an increase in anti-Spacian attitudes, and protests turning into riots.

*Between this, and the vacancies left by Vim, Delling, and now Sarius, the remaining Benerit leaders decide to hold a presidential election.

*Shaddiq's group discusses how he’ll be running in the election, and clarifies that Miorine won't be automatically chosen based on blood relation, which, on the one hand, I would hope not, but on the other, nepotism is a common practice in real-life businesses, and the corporations of Ad Stella would reflect that, so it makes sense to be explicit about it.

*Shaddiq, who is so, so totally and completely over Miorine, muses that she could be elected with the backing of one of the three branches, before being interrupted by Sabina.

*I saw someone comment that they were concerned that Sabina, despite being the most overtly lesbian-coded character in the show (as opposed to someone like Miorine, who is textual), might have feelings for Shaddiq, and I truly hope they’re wrong; yes, Sabina is an accessory to terrorism and mass murder, but that doesn’t mean she (or anyone really) deserves to be a proxy for manga!Juri Arisugawa.

*For anyone unfamiliar with Revolutionary Girl Utena: anime!Juri is the passive-aggressive lesbian frenemy/rival of Touga Kiryuu (who is a major inspiration for Shaddiq), sometimes ostensibly supporting him, but also unhesitant to undermine him when the opportunity arises; manga!Juri is the only female duelist besides Utena, and she openly pines for Touga (with sadly no signs of being even a comphet lesbian like Nanami) and duels Utena on his behalf instead of her own. This is one of many reasons a lot of Utena fans (including me) prefer the anime to the manga.

*Miorine confers with Lauda, who affirms that charges against GUND-Arm, Inc. have been dropped due to lack of evidence. He also notes that Miorine should be happy, since with a new president, no one will force her to get married.

*Miorine, of course, has other priorities on her mind.

*Repairs are underway at Asticassia and the school is on high alert, with mobile suit pilots patrolling the grounds and student activities restricted.

*Though they’ve been officially cleared of wrongdoing, there’s a lot of negative online comments being directed toward GUND-Arm, Inc. and Earthians in general.

*Rouji asks Secelia if she’ll be leaving school, but both of them seem dedicated to their roles as the Greek chorus in the Dueling Committee. Secelia comments that, at the very least, she wants to see who ends up marrying Miorine, which transitions to a shot of…

*...Felsi?

*Something I’ve referenced in previous writings about Witch from Mercury, but didn’t directly comment on, was that Witch from Mercury is a show that really loves its meaningful transitional shots…which makes this particular one very weird. This is the only shot we get of Felsi in the entire episode, so it was obviously intentional, but it raises the question: why? At this point, Suletta & Aerial could probably defeat Guel, Elan, AND Shaddiq in a 1 vs 3 duel, so how would Felsi possibly win? Not to mention it would be a very weird narrative turn for someone who’s hitherto been relegated to side-character status to defeat Suletta like that. Regardless of why or how, it seems Felsi will be playing an important role (*really* hope she doesn’t die).

*Chuchu is tired of waiting for news on Nika's supposed detainment, and tries to march off with wrench in hand to make it someone's problem, but thankfully Suletta restrains her and the other Earth House kids manage to calm her down.

*Unfortunately, while Earth House may be trying to avoid getting in more trouble, that doesn't stop it from coming to them, as friends of Jujebu (the student pilot Norea killed in the Rumble Ring) come to vandalize their dormitory.

*The two groups get into an argument, and Martin shows surprising confidence (even Chuchu looks impressed) in telling them to stand down. In response, one of the vandals chucks a spray can at his head, injuring him. The situation looks like it will escalate (i.e. Chuchu will murder someone), but before it does, a hero appears:

*Miorine caught the incident on tape and threatens the vandals with the evidence if they don't leave.

*Sabina tends to Nika's injuries, and asks if she'd like to join Shaddiq's squad, which Nika politely refuses. Sabina reiterates what Norea said a few episodes back about ideals not being enough (minus the trying to hurt or kill Nika), and promises Nika that she and Shaddiq will be able to bridge the gap between Earthians and Spacians (but, y'know, with lots of violence).

*The Peil CEOs demand No. 5 stops wasting time creeping on Suletta and to just focus on stealing Aerial. I'm glad we don't have to deal with that particular subplot anymore, but the Peil CEOs take care to remind us they will murder him as ruthlessly as they did No. 4.

*Guel returns, and Lauda is so shocked he faints.

*Guel and Petra have a short chat, with Petra informing Guel of his brother's recent hardships. Guel asks Petra to look after Lauda while he goes to take care of, leaving Guel as just as much a wild card as he was in the latter half of the first cour.

*I have to say, the Jeturks have grown on me a bit. Like yes, they (especially Guel) were awful for the first few episodes, but a lot of that was due to Vim’s influence, so at this point I’m hoping they’ll collectively grow to be better people and survive the series.

*Feng Jun of the Space Assembly League informs Miorine that Nika is not actually held by the front management, but was instead kidnapped by unknown parties. Feng offers to investigate in exchange for access to GUND-Arm, Inc.; she wants to use said access to investigate Shin Sei Development, and how such a low-ranked company has the resources to build and repair a mobile suit like Aerial.

*Miorine informs Earth House of the situation with Nika, and…

*...Chuchu? She…thanks Miorine for her efforts.

*And the rest of Earth House join in giving Miorine these happy/relieved looks. And…Y'all, this is just heartwarming.

*Ever since Plant Quetta, Earth House has been having such a rough time, managing trauma, welcoming new members who turned out to be terrorists, Nika getting kidnapped, and getting detained.

*But now Miorine's back, and everything's looking a little better, and this is such a transformation from how their relationship started: it's a far cry from the days when Earth House tolerated the Spacian princess who came as a package deal with Suletta, from when the same girl strong-armed them into joining her business only for her to learn that money was not enough and that she needed to respect and listen to them. Since then they've worked together, helped and protected each other, and somewhere along the way, Miorine became accepted as one of them. She may still sleep in her dad's former office, but Earth House is now her home…and Miorine knows it.

*Even with all the tragedy and horrors of war, Miorine is enjoying the most positive relationships she's ever had since her mother's death, which we know because we've only ever seen Miorine smile like this either at the prospect of escaping to Earth, or at Suletta. Speaking of which…

*As most of Earth House starts asking for Miorine's help with business stuff, Till steps up in the absence of Nika to become Earth House's #1 Sulemio supporter, and raises Suletta's hand to get Miorine's attention (seriously, where's all the best boy praise for Till? I know he doesn't get a lot of moments, but all of them have been quite excellent so far).

*Suletta asks Miorine to check on the greenhouse with her, so the two head off-

*AHH!

*I've been thinking about why the Prospera jumpscare is so unsettling; I think it's because it works along the same line as, say, Darkseid from DC comics showing up unexpectedly relaxing in various heroes’ homes: it's an implicit but devastating threat, a reminder that Prospera’s influence, at this time at least, is inescapable (which we’ll see later at the greenhouse).

*It's even worse because, from the perspective of Earth House and Suletta, Prospera's actions are innocuous: all she does is ask Suletta to look after Miorine, and then introduce herself to Earth House, acting cutesy all the while. It's not an uncommon tactic for abusers and manipulators to act charming in front of their victims' family and friends to undermine any negative claims their victims may make against them, and I worry that Prospera is taking this opportunity to do precisely that.

*No. 5 tries to hijack Aerial, but quickly discovers that’s a Very Bad Idea, as his Permet lines flare up, Aerial enters Permet 6, and Elan gets a look beyond the data storm and meets Ericht Samaya.

*There’s been a lot of speculation about why all the GUND-Bits look identical to Eri. I know that failed clones being uploaded into the data storm is a popular theory, but given the time and resources that cloning (especially one based on actual reproductive cloning techniques) takes, I don’t think the 4-year gap between Vanadis and Suletta’s birth(?) would be enough to account for that, so I suspect the truth is something different.

*Aerial has been piloted before without taking action, but the circumstances were different:

In Miorine's case, she used Suletta's datapad to access Aerial, and had been previously rescued by her, so it's likely that Aerial recognized her and chose not to intervene; plus, it seems unlikely that Miorine even knew how to access the GUND format at that time, so it's hard to say if a connection could be made without that.

Elan No. 4, meanwhile, explicitly obtained Suletta's permission to borrow Aerial, and he didn't express any bad intentions (like apologizing for stealing Suletta's sister) until after he exited the cockpit.

*Witch from Mercury once again makes a good case for its selective but intense uses of violence, because I did think for a few moments that Eri was going to kill No. 5 with Permet overload; knowing that major characters can and have died gives any scene like this tension due to uncertainty.

*Belmeria starts a self-pitying rant about how she had no choice but to work with Peil and No. 5 punches her, and I’m not really sure how to feel about any of this; I’m on record for wanting to toss No. 5 out an airlock, yes, but none of the victims of the enhanced person project, who seem like they all ‘volunteered’ out of desperation, deserved to be exploited by Peil, and Belmeria, while remorseful, was wrong for enabling this.

*The Suletta and Miorine reunion we've all been wanting/dreading!

*Things start awkwardly as Suletta notes that she fulfilled all of her promises and Miorine agrees.

*The staging for this scene reminds me a lot of Revolutionary Girl Utena, where characters’ positioning often had a theatrical quality that somehow felt very striking despite/because of the artificiality. It makes sense that Suletta and Miorine start off like that since neither is quite certain where they stand with the other.

*Suletta broaches the topic that's on both their minds, and Miorine apologizes for calling Suletta a murderer, recognizing that Suletta had been trying to save both her own and her father's life.

*“I knew it. It’s just like Mom said.” Miorine’s body language instantly tenses when Suletta says this. Like a lot of folks have been saying, Miorine makes it explicit that Suletta’s nonchalant attitude toward killing someone is the thing that really upsets her. Suletta tries to insist that it’s fine because only she and Aerial can protect everyone, but Miorine rightly suspects that Prospera convinced her of that.

*“Will you do anything your mom tells you to?” Suletta shows a lot of hesitancy and anxious body language during Miorine’s questioning, but when pressed, confirms that, yes, she would give up her dreams and even kill people if Prospera asked her to, and this is heartbreaking.

*I was initially surprised that Miorine did not ask Suletta if she would hurt her if Prospera asked, but there’s a couple reasons it makes sense she didn’t:

Miorine is already emotionally devastated to learn how in thrall Suletta is to Prospera, and she probably doesn’t want to even consider the possibility that Suletta might say yes.

The question might instead prompt Suletta to go into an emotional breakdown, and Miorine simply doesn’t have the capacity to handle that at this time, nor does she want to subject Suletta to that pain.

*I've seen a lot of folks talk about the tomatoes and how they symbolize their respective characters' growth (or lack thereof), so I just wanted to note that in Suletta's case, the bottom of her tomato is definitely red, and thus does have signs of growth, but that growth is currently (literally) overshadowed.

*Suletta justifies this by noting that, because she obeyed, she got to go to school, make friends, and meet Miorine, which prompts Miorine to angrily run away.

*There’s been a lot of discussion on Suletta’s mental state, but I think this conversation makes it clear that it’s not a ‘Winter Soldier controlled by code phrases’ situation. Anthy Himemiya from Revolutionary Girl Utena and her obedience as the Rose Bride seems much more relevant to Suletta’s situation.

*Pardon me while I muffle my screams and quietly sob at the awful situations these fictional characters are in.

*There’s a few differences in Suletta’s and Anthy’s situations though, so I think it would be helpful to mention a second character for comparison as well: Rose Thomas from Fullmetal Alchemist.

*For those not familiar with Fullmetal Alchemist, Rose is a minor character encountered early in the story, living in a desert town and revering the sun god Leto and his supposed priest, Father Cornello. Rose’s boyfriend died last year, and Cornello, with the aid of fraudulent ‘miracles’ (actually alchemy enhanced by a Philosopher’s Stone), convinced her that unquestioning faith and obedience in Leto (and Cornello) would be rewarded with the resurrection of her boyfriend (he was very much lying, of course).

*Cornello uses this influence to try to persuade Rose to act contrary to her usual self and try to kill the protagonists (she fails and Cornello is ultimately exposed as a two-bit fraud).

*Faith and belief can be beautiful things when placed in the right person or ideal, but horrific when placed in the wrong ones, such as when Rose believed in Cornello, and, I’d argue, as Suletta believes in her mother; basically, I’d compare Prospera and Suletta’s relationship to that of a cult leader and a devoted follower.

*Anthy also has faith, though hers is far more negative and cynical than Suletta’s or Rose’s: she believes that, thanks to her actions in saving her brother, it’s her fault that he became the monster we encounter in the present, and that, as awful as he is to her, he’s the only one willing to accept her, and so she deserves her suffering. For Anthy, eternal torment is palatable only because she believes that leaving would be even worse. Despite this difference in the root of belief (obedience is rewarded vs. disobedience is punished), the result of Suletta, Anthy, or Rose acting on another’s behest while repressing any feelings of this being wrong is much the same.

*This should inspire hope in fellow fans though: in the end, both Anthy and Rose escaped their manipulator’s influence and learned to live for themselves; should we not have confidence that Suletta will do the same?

*Miorine, bless her, continues to be one of the smartest (if somewhat impulsive) people in the show, and instead of blaming Suletta, immediately goes after Prospera, rightfully blaming her for Suletta’s condition.

*Miorine: “I won’t let you have your way with her anymore!”

*Prospera: “And what then? Do you want to have your way with her next?”

*Ugh, this is such a gross and untrue thing for Prospera to say. I think it also strongly suggests that Prospera may ‘love’ Suletta as a favorite tool, but not as her daughter, and that she currently believes Miorine is much the same as her (which we know of course is not true, but Prospera seems to have a bias in perspective about Miorine, which we’ll get to in a little bit).

*Prospera asserts that Miorine is just like her father, and when rebuffed, she informs Miorine of her vendetta against the Rembran family and about the Vanadis Incident 21 years ago, and describes how she lost her husband, her colleagues, and her mentor.

*“We’re the only ones who survived.” Prospera doesn’t make a lot of mistakes, but I feel like she keeps making minor ones when it comes to Miorine (which I feel may be due to her barely repressed hatred toward her affecting her judgment), such as her belief that Miorine only sees Suletta as a tool mentioned above, and now this: Miorine knows that Suletta’s 17 and that Vanadis took place 21 years ago now, so she’s going to wonder who this other survivor Prospera mentioned is, which I think will sooner or later lead her to meet Eri.

*While Miorine doesn’t necessarily want her father dead, she’s also aware he’s done awful things, and chooses to protect Suletta instead (because she wants to be gal pals with her /sarcasm).

*Unfortunately, unlike some show watchers, Prospera clues in that Miorine genuinely loves Suletta, and instead of reflecting on that and maybe rethinking her choices, immediately uses it as a tool to manipulate Miorine, telling her that if she’s so protective, then she should work with Prospera and run for president of Benerit so that Quiet Zero can be completed.

*Will Miorine accept? It’s probably the best route to saving Suletta, so unfortunately yes. Will Prospera betray her? Also yes, but hopefully Miorine is already aware of that and will be looking for a means to counteract it (perhaps some intel from Feng on Shin Sei will help?).

*This was quite an emotional roller coaster, and next episode seems like it will maintain that with the election, which I’m sure will be as fair and honest as can be in a future space capitalist dystopia.


Photo

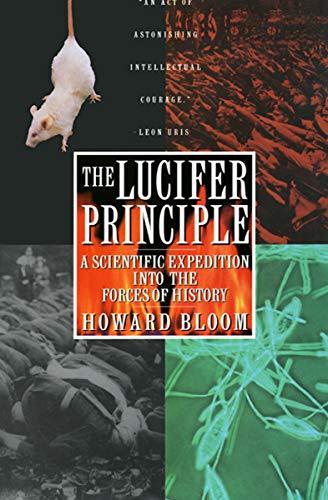

My favorite books in Apr-2023 - #2

The Lucifer Principle: A Scientific Expedition into the Forces of History, by Howard K. Bloom (January 1, 1995)

The Lucifer Principle is a revolutionary work that explores the intricate relationships between genetics, human behavior, and culture to put forth the thesis that "evil" is a by-product of nature's strategies for creation and that it is woven into our most basic biological fabric. In a sweeping narrative that moves lucidly among sophisticated scientific disciplines and covers the entire span of the earth's, as well as mankind's, history, Howard Bloom challenges some of our most popular scientific assumptions. Drawing on evidence from studies of the most primitive organisms to those on ants, apes, and humankind, the author makes a persuasive case that it is the group, or "superorganism," rather than the lone individual that really matters in the evolutionary struggle. But, Bloom asserts, the prominence of society and culture does not necessarily mitigate against our most violent, aggressive instincts. In fact, under the right circumstances the mentality of the group will only amplify our most primitive and deadly urges. In Bloom's most daring contention he draws an analogy between the biological material whose primordial multiplication began life on earth and the ideas, or "memes," that define, give cohesion to, and justify human superorganisms. Some of the most familiar memes are utopian in nature - Christianity or Marxism; nonetheless, these are fueled by the biological impulse to climb to the top of the hierarchy. With the meme's insatiable hunger to enlarge itself, we have a precise prescription for war. Biology is not destiny; but human culture is not always the buffer to our more primitive instincts we would like to think it is. In these complex threads of thought lies the Lucifer Principle, and only through understanding its mandates will we be able to avoid the nuclear crusades that await us in the twenty-first century.

Editorial Reviews

From Publishers Weekly

The "Lucifer Principle" is freelance journalist Bloom's theory that evil, which manifests in violence, destructiveness and war, is woven into our biological fabric. A corollary is that evil is a by-product of nature's strategy to move the world to greater heights of organization and power as national or religious groups follow ideologies that trigger lofty ideals as well as base cruelty. In an ambitious, often provocative study, Bloom applies the ideas of sociobiology, ethology and the "killer ape" school of anthropology to the broad canvas of history, with examples ranging from Oliver Cromwell's reputed pleasure in killing and raping to Mao Tse-tung's bloody Cultural Revolution, India's caste system and Islamic fundamentalist expansion. Bloom says Americans suffer "perceptual shutdown" that blinds them to the United States' downward slide in the pecking order of nations. His use of concepts like pecking order, memes (self-replicating clusters of ideas), the "neural net" or group mind of the social "superorganism" seem more like metaphors than explanatory tools. Copyright 1994 Reed Business Information, Inc.

From Library Journal

Pop-culture Renaissance man Bloom (former PR agent for the likes of Prince, writer for Omni magazine, and so on) seeks to explain why civilizations rise and fall, why nations go to war, and why violence and aggression don't disappear with the ascendancy of culture. Big task. The "Lucifer Principle" is based on the metaphors of the "meme" (ideas that arise across cultures and epochs) and "the pecking order" (from chickens to nations, and all in between). This sort of slippery extrapolation is at once cleverly neat and maddeningly suspicious, and the pitfalls of trying to unite animal biology, genetics, cultural history, anthropology, and philosophy are apparent in that sundry causes and effects are all lumped together as equals: rats in a cage do this, "primitive" cultures do that, Sumerians did a third thing, so therefore we do this. The 800 footnotes are symptomatic: sources range from the Information Please Almanac to a textbook on surgical nursing and a sprinkling of audiobooks. This book falls somewhere between Paul Kennedy's Rise and Fall of the Great Powers (LJ 12/87) and John Naisbitt's Megatrends (LJ 10/1/82). For general audiences. Mark L. Shelton, Worcester, Mass. Copyright 1994 Reed Business Information, Inc.


Text

How to Work on Crash Courses for Pursuing AI as an Undergrad Program!

As Artificial Intelligence (AI) continues to evolve and shape the future of technology, more and more students are looking to pursue undergraduate programs in this dynamic field. However, many students might not have the necessary background in computer science, mathematics, or other foundational subjects required to dive straight into an AI degree. This is where crash courses can play a pivotal role in helping prospective students bridge the gap and prepare themselves for an undergraduate AI program.

As an education consultant, I frequently guide students who are aspiring to study abroad, especially in the rapidly growing field of AI. Here’s how you can make the most of crash courses to help you prepare for pursuing an undergraduate program in Artificial Intelligence:

1. Start with the Basics: Understanding Computer Science and Programming

AI heavily relies on programming languages and the principles of computer science. Before jumping into specialized AI topics, students should have a solid understanding of programming and basic computer science concepts.

Crash courses to take:

Introduction to Programming (Python, Java, C++): Python is the most widely used language in AI development. Starting with a Python crash course can give you the hands-on coding experience you need.

Data Structures and Algorithms: A crash course in data structures (like arrays, stacks, queues, etc.) and algorithms is essential as AI uses these to manipulate and process data.
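To give a feel for what a data structures crash course covers, here is a minimal Python sketch of two of the structures named above, a stack and a queue. The item values are made up for illustration, and this is only one common way to build them in Python, not the only one.

```python
from collections import deque

# A stack is last-in, first-out (LIFO): a plain Python list works well.
stack = []
stack.append("a")
stack.append("b")
stack.append("c")
last_in = stack.pop()        # removes and returns the most recently added item

# A queue is first-in, first-out (FIFO): deque gives fast removal from the left.
queue = deque()
queue.append("a")
queue.append("b")
queue.append("c")
first_in = queue.popleft()   # removes and returns the oldest item

print(last_in, first_in)
```

The same three items go into both structures, but the stack hands back the newest one while the queue hands back the oldest. That ordering difference is the whole point of choosing one over the other.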

2. Mathematics: The Backbone of AI

Mathematics, especially calculus, linear algebra, and probability theory, is crucial for understanding the algorithms that power AI. If you’re aiming to pursue an AI undergraduate program, ensure you’re comfortable with the mathematical foundations of the subject.

Crash courses to take:

Linear Algebra: Many AI models, such as neural networks, use matrix operations. A basic crash course in linear algebra will help you understand these concepts.

Calculus for AI: You don’t need to be an expert, but understanding differentiation and integration will help you grasp optimization algorithms.

Probability and Statistics: Machine learning, a subset of AI, relies on statistical methods to make predictions. Taking a quick course in probability will be immensely helpful.
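As a rough illustration of why linear algebra and calculus matter, the sketch below uses plain Python (no libraries) to show a matrix-times-vector step of the kind a neural network layer performs, followed by a tiny gradient descent loop. The weights, inputs, learning rate, and the function being minimized are all made-up values chosen for the example.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Linear algebra: the core of one network "layer" is a matrix times a vector.
weights = [[1.0, 2.0],
           [0.0, -1.0]]
inputs = [3.0, 4.0]
layer_output = matvec(weights, inputs)   # [1*3 + 2*4, 0*3 - 1*4]

# Calculus: gradient descent on f(w) = (w - 3)^2, whose derivative is 2 * (w - 3).
def gradient(w):
    return 2.0 * (w - 3.0)

w = 0.0
learning_rate = 0.1
for _ in range(100):
    w -= learning_rate * gradient(w)     # step downhill along the slope

print(layer_output, round(w, 4))
```

Each pass through the loop moves `w` a little closer to 3, the point where the function is smallest. Training a real model follows the same idea, just with many more parameters.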

3. Familiarize Yourself with Key AI Concepts

AI is a broad field, and undergraduate programs typically cover various sub-disciplines, including machine learning, natural language processing (NLP), and computer vision. Understanding these areas early on can help you make informed decisions about which aspects of AI excite you the most.

Crash courses to take:

Introduction to Machine Learning: This crash course will introduce you to the core concepts of machine learning, such as supervised and unsupervised learning, classification, and regression.

Introduction to Deep Learning: Deep learning, a subset of machine learning, is driving the current AI revolution. A quick overview of neural networks and deep learning models will give you a head start.

Basics of Natural Language Processing (NLP): NLP is essential for understanding how computers interpret human language. Exploring introductory NLP courses will familiarize you with concepts like sentiment analysis and language modeling.
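To make "supervised learning" and "classification" a little more concrete, here is a minimal sketch of a one-nearest-neighbour classifier in plain Python. The training data (hours studied, hours slept, and a pass/fail label) is invented for illustration, and the function names are hypothetical, not taken from any particular course or library.

```python
# Labeled examples: (hours_studied, hours_slept) paired with a known outcome.
# Supervised learning means the correct labels are given up front.
training = [
    ((1.0, 4.0), "fail"),
    ((2.0, 5.0), "fail"),
    ((8.0, 7.0), "pass"),
    ((9.0, 8.0), "pass"),
]

def squared_distance(a, b):
    """Squared straight-line distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def predict(point):
    """Classify a new point by copying the label of its closest training example."""
    _, label = min(training, key=lambda example: squared_distance(example[0], point))
    return label

print(predict((7.5, 6.0)), predict((1.5, 4.0)))
```

Real machine learning courses use far larger datasets and more capable models, but the pattern is the same: learn from labeled examples, then predict a label for something new.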

4. Hands-On Experience: Apply What You’ve Learned

Crash courses often provide a theoretical foundation, but practical experience is key to mastering AI. Engage in projects, participate in coding challenges, and build simple AI models to strengthen your understanding.

Crash courses to take:

AI Projects and Challenges: Platforms like Kaggle and Coursera offer project-based courses where you can work on real-world AI problems.

Data Science and AI Toolkits: Learning how to use Python libraries like NumPy, TensorFlow, and PyTorch through quick courses will prepare you for hands-on AI development.

5. Stay Updated with AI Trends

AI is an ever-evolving field, with new algorithms, techniques, and breakthroughs emerging regularly. Keeping up-to-date with the latest trends will help you stay competitive and well-prepared for your undergraduate AI program.

Crash courses to take:

AI Trends and Future Prospects: Enroll in short courses or attend webinars and conferences that discuss the latest advancements in AI.

Ethics in AI: Understanding the ethical implications of AI is increasingly important. Look for courses that focus on AI ethics, biases in algorithms, and their societal impact.

6. Prepare for University Applications

While working through crash courses, also prepare for the university application process. The competition for AI programs is intense, so it’s important to build a strong application. Most universities look for well-rounded applicants who not only excel academically but also demonstrate a passion for AI and technology.

Steps to take:

Highlight Your Crash Course Certifications: Show your dedication and proactive learning by including certificates from your crash courses in your application.

Build a Portfolio: If possible, create a portfolio of small AI projects or contributions to open-source projects. This can make a significant difference in your application.

Prepare for Standardized Tests: If required, focus on your SAT, ACT, or TOEFL/IELTS preparation alongside your crash courses.

Conclusion: Preparing for AI Success

Pursuing an undergraduate program in AI is an exciting journey that requires a mix of foundational knowledge, technical skills, and a passion for innovation. Crash courses can serve as a great starting point for students who are eager to learn and prepare for a future in AI. By taking the right crash courses in programming, mathematics, and AI concepts, you’ll not only strengthen your foundation but also position yourself as a strong candidate for top AI undergraduate programs.

For personalized guidance on applying to top universities and selecting the best programs in AI, feel free to reach out. I specialize in helping students navigate the complexities of studying abroad and can assist you in crafting a successful path toward your AI dreams.


Text

People keep asking me how the Autistic community views polyvagal theory. Right now, I can’t speak for the community’s perspective. People are just now learning about the theory, so we don’t have any consensus data from the Autistic community about agreement or disagreement. I can, however, speak for myself as an Autistic researcher and educator.

I am writing this essay to share my personal agreement and disagreement with polyvagal theory and how I have seen these concepts applied in the mental health world for neurodivergent people. My opinions are informed by the writers who developed the Neurodiversity Paradigm: Nick Walker, Kassiane Asasumasu, and many other neurodivergent activists.

-- Agreement with the core concepts of polyvagal theory (PVT) --

The 3 core concepts of PVT remain foundational to my understanding of the nervous system. These core ideas are:

The Autonomic Nervous System (ANS) has 3 neural circuits that each have physiological, mental, and emotional effects in the body. They are the ventral vagus nerve, the dorsal vagus nerve, and the sympathetic spinal chain.

The body determines which neural circuits to activate through the process of Neuroception (the subconscious sensing of danger or safety in our internal and external environments).

Co-regulation (the subconscious process of syncing up with a coherent nervous system) is the strongest safety cue for mammals.

Neurodivergent people are not outliers where these 3 facts are concerned. While neurodivergents do have some significant neural differences from neurotypical people (mostly increased neural connections and increased electrical activity), our nervous systems operate on these same three principles.

There are some who would claim that neurodivergents don’t have the same 3 neural circuits, that our neuroception is broken, or that we can’t co-regulate, and I find this perspective to be rather dehumanizing. The polyvagal understanding of the nervous system seems to be observable in all humans, and all mammals for that matter, including cats, dogs, and horses.

-- Disagreement with some of Porges's ideas --

That said, there are some details of polyvagal theory beyond these 3 core ideas that I strongly disagree with.

Throughout the literature on PVT, I have encountered the idea that autism is equivalent to a deficit of function of the social engagement system and that restoring function to the ventral vagus nerve will treat or cure autism symptoms. While this idea is claimed to be a reframing of autism, it is simply another way to pathologize Autistic people.

Many Autistic people do have low functioning of the ventral vagus nerve, but this is due to trauma; it is not an inherent trait of our neurotype. The positive changes that Porges and his team have documented in Autistic people following vagus stimulation occur because vagus stimulation increases safety and reduces traumatic distress. While I am enthusiastic about PVT being used to support Autistic people in finding deeper levels of safety, any claims to cure, heal, or fix Autism are red flags.

One reason this deficit assumption is made about Autistic people is that Porges claims prosocial behaviors are the clearest marker of ventral vagus activation. Prosocial behaviors include soft gaze, expressive face, eye contact, smiling, vocal resonance, turning towards others, and comfort with sharing touch. Many of these social behaviors are neurotypical cultural expectations. Additionally, these behaviors do not always demonstrate that a person is feeling safe internally. Neurodivergent people can perform these behaviors while in a trauma response called fawning or masking.

I think that Porges’s social position as a financially comfortable white male professional makes it difficult for him to understand the fawn response. A number of his statements about fawning have lacked awareness of power imbalances and how those impact socialization. I find it important to point out that we cannot know a person’s neural state from external behaviors alone; internal felt sense is a much more accurate way to track neural states.


This is part 1 of 3 in an essay titled "A Neurodiversity Paradigm Lens on Polyvagal Theory" which is posted at my blog.


My year-long mini course, 50 Vagus Exercises in a Year, includes two hours of workshop time each month for all of 2025 AND my new serialized eBook The Nervous System Study Guide (created from the Nervous System Study Group lectures)

Details here: https://traumageek.thinkific.com/courses/50-vagus-exercises-in-a-yea


The Science Behind Instructional Design: A Guide for Educators

Instructional design (ID) is the systematic process of creating educational experiences that facilitate the acquisition of knowledge and skills. Rooted in cognitive science and educational psychology, instructional design helps educators develop effective learning materials that meet the diverse needs of their students. As the landscape of education continues to evolve with technological advancements, understanding the science behind instructional design becomes crucial for educators seeking to maximize learning outcomes.

In this article, we will explore the scientific principles that underpin instructional design, its practical applications in education, and how educators can implement these strategies to create more engaging and effective learning experiences. Along the way, we will highlight how instructional design education plays a key role in the development of these strategies.

Understanding Instructional Design

Instructional design is not just about creating teaching materials—it's a thoughtful, data-driven process that ensures content is delivered in ways that promote understanding and retention. The core of instructional design lies in the integration of key theories from cognitive psychology, behavioral science, and educational theory.

The foundation of instructional design is the ADDIE model (Analyze, Design, Develop, Implement, and Evaluate), a structured framework that helps instructional designers plan, implement, and assess the effectiveness of learning materials. This model is flexible and can be adapted to different learning contexts, from K-12 classrooms to corporate training programs.

The Science of Learning: Cognitive Principles

At the heart of effective instructional design lies an understanding of how the brain processes and retains information. Cognitive science plays a pivotal role in shaping instructional strategies that enhance learning.

1. Cognitive Load Theory

Cognitive load theory, developed by John Sweller, suggests that learners have a limited capacity to process new information. If the cognitive load exceeds this capacity, learning becomes inefficient, and retention diminishes. Instructional designers can minimize extraneous cognitive load by presenting information in manageable chunks, using visuals to reinforce concepts, and avoiding unnecessary complexity.

2. Spaced Repetition and Retrieval Practice

One key principle of cognitive science that has had a significant impact on instructional design is the concept of spaced repetition. This involves revisiting information at increasing intervals, which helps to improve long-term retention. Combined with retrieval practice, where learners are actively engaged in recalling information, spaced repetition helps strengthen neural connections, making learning more durable.

3. Dual Coding Theory

Dual coding theory, proposed by Allan Paivio, suggests that learners process information both visually and verbally. By combining text and images in instructional materials, educators can help learners engage both cognitive channels, improving comprehension and recall. Instructional designers often use diagrams, charts, and multimedia to support verbal content, reinforcing learning through multiple pathways.
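To make the spaced repetition idea above more concrete, here is a minimal sketch of a review schedule in which the gap between reviews grows each time. The function name `review_schedule`, the one-day starting gap, and the doubling factor are illustrative assumptions, not values taken from the research or from any particular flashcard tool.

```python
from datetime import date, timedelta


def review_schedule(start, first_gap_days=1, factor=2.0, reviews=5):
    """Return review dates whose gaps grow by `factor` after each review.

    All defaults are hypothetical; real schedules are usually tuned
    to the learner and the material.
    """
    dates = []
    gap = float(first_gap_days)
    current = start
    for _ in range(reviews):
        # Move forward by the current gap, then widen the gap.
        current = current + timedelta(days=round(gap))
        dates.append(current)
        gap *= factor
    return dates


if __name__ == "__main__":
    for review_day in review_schedule(date(2025, 1, 1)):
        print(review_day.isoformat())
```

With the defaults, the gaps are 1, 2, 4, 8, and 16 days, so each review lands further from the last one. An educator could pair each date with a short recall quiz to combine the schedule with retrieval practice.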

Principles of Instructional Design in Practice

Incorporating the science of learning into instructional design leads to more effective teaching and learning experiences. Below are some key principles derived from cognitive science and how they can be applied in the classroom.

1. Active Learning

Active learning encourages learners to participate in the process rather than passively receiving information. Research shows that active engagement helps deepen understanding and improves retention. This can be achieved through problem-solving activities, discussions, peer collaboration, and hands-on experiments. For example, educators can design projects or case studies that challenge students to apply what they have learned in real-world scenarios.

2. Constructivist Approaches

Constructivism is a theory of learning that emphasizes the role of learners in constructing their own understanding. Educators using a constructivist approach design learning experiences that allow students to build on prior knowledge and engage with new information actively. This is often done through inquiry-based learning, where students are encouraged to ask questions and explore solutions on their own, with guidance from the instructor.

3. Universal Design for Learning (UDL)

UDL is an approach that recognizes that students learn in diverse ways and that instructional materials should be flexible to accommodate these differences. By providing multiple means of representation (e.g., audio, visual, text), engagement (e.g., interactive activities, gamification), and expression (e.g., written assignments, oral presentations), instructional designers can create inclusive learning experiences that address the needs of all students.

4. Formative Assessment and Feedback

Formative assessments, which are conducted during the learning process, provide valuable insights into student progress and allow for adjustments in instruction. Providing timely and constructive feedback is essential for guiding students toward mastery. Instructional design education stresses the importance of designing assessments that inform both the educator and the learner about areas for improvement.

Instructional Design Education: Bridging Theory and Practice

Effective instructional design is not just about knowing the theories but also about knowing how to implement them in real-world contexts. Instructional design education provides educators with the knowledge and skills necessary to design, develop, and evaluate effective learning experiences.

Professional development programs in instructional design typically cover a variety of topics, including:

Learning theory and its application to instructional strategies

The development of engaging and interactive learning materials

The use of technology to enhance learning

The creation of assessments that accurately measure learning outcomes

Evaluation techniques for assessing the effectiveness of instructional programs

Through courses, workshops, and certifications, educators can learn how to apply the science of instructional design to their own teaching practice. Whether it’s using multimedia to enhance content, creating assessment rubrics, or designing collaborative learning activities, instructional design education equips educators with the tools they need to succeed in today’s dynamic educational environment.

Technological Advances and the Role of Instructional Design

As technology continues to reshape education, instructional design has evolved to include digital tools that enhance learning experiences. From Learning Management Systems (LMS) to artificial intelligence (AI)-driven platforms, technology has created new opportunities for designing personalized learning pathways.

1. E-Learning and Online Education

Instructional design plays a critical role in the development of e-learning courses, which allow learners to access materials at their own pace and convenience. E-learning platforms require careful design to ensure that the content is engaging, interactive, and accessible. Principles like multimedia learning and cognitive load management are particularly important in the design of online courses, where learner engagement is key to success.

2. Gamification and Simulations

Gamification involves incorporating game elements (such as points, badges, and leaderboards) into learning activities. It can increase students’ motivation by making learning fun and competitive. Simulations, on the other hand, provide learners with immersive experiences that replicate real-world scenarios. Instructional design education helps educators integrate these technologies effectively to engage students and promote experiential learning.

3. Adaptive Learning Systems

Adaptive learning systems use algorithms to tailor the learning experience to each student’s needs. These systems analyze learner performance and adjust the content or pace accordingly. Instructional designers use adaptive learning principles to create personalized learning experiences that maximize each student’s potential.
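As a rough illustration of how an adaptive system might "analyze learner performance and adjust the content," the sketch below picks the next difficulty level from a learner's recent answers. The function name `next_difficulty`, the 1 to 5 level range, and the 80% and 50% thresholds are hypothetical choices made for this example; real platforms typically rely on far richer learner models.

```python
def next_difficulty(current_level, recent_results, min_level=1, max_level=5,
                    raise_at=0.8, lower_at=0.5):
    """Pick the next difficulty level from recent right/wrong answers.

    `recent_results` is a list of booleans (True = correct answer).
    Thresholds and level bounds are illustrative assumptions.
    """
    if not recent_results:
        # No evidence yet, so keep the learner where they are.
        return current_level
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= raise_at:
        return min(current_level + 1, max_level)
    if accuracy < lower_at:
        return max(current_level - 1, min_level)
    return current_level


if __name__ == "__main__":
    # Four of five correct: the learner moves up one level.
    print(next_difficulty(2, [True, True, True, True, False]))
```

Keeping the rule this simple makes the behavior easy for an instructor to inspect and override, which may matter more in a classroom than squeezing out extra precision.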


Key Psychological Theories Shaping Human Behavior and Wellbeing

Psychology studies human experience, from how the brain functions to what drives people's actions and behaviors. It aids individuals in gaining insight into their thoughts and emotions while fostering empathy and promoting healthier relationships with others. Various theories provide unique lenses to examine human behavior and thought.

Cognitive theories focus on understanding the mental processes that drive perception, reasoning, and comprehension. The general idea is that restructuring thought processes improves well-being, because a person's thinking directly affects their emotions and behaviors. Since the mind can be viewed as a system that encodes, stores, and retrieves information (somewhat like a computer), cognitive theories have inspired interventions such as cognitive-behavioral therapy (CBT) to change unhelpful thoughts.

Behavioral theories complement cognitive theories and explain how actions are acquired, modified, or discarded based on experience. Rooted in behaviorism, this perspective holds that people largely learn behavior through conditioning, which links actions to consequences. B.F. Skinner, a leading figure in behaviorism, advanced the concept of operant conditioning, emphasizing that rewards and punishments shape behavior. Environmental stimuli also play a significant role in influencing observable behavior.

Humanistic theories present an optimistic view of human nature, exploring self-actualization, personal growth, and the pursuit of potential. Unlike behavioral approaches focused on conditioning, humanistic psychology views individuals as inherently good and capable of making choices that lead to personal development. Abraham Maslow, a key contributor, developed foundational ideas such as the hierarchy of needs, which outlines a path toward self-fulfillment and reaching one's potential. Today, the humanistic approach is applied in psychotherapy, counseling, and organizational psychology.

While similarly interested in individual differences, personality theories examine the structure and development of personality and the influences shaping it. In management contexts, they can inform decisions about employee selection, placement, and team dynamics. For instance, when seeking candidates who value creativity or strong communication, managers assess personality traits to identify those whose characteristics align with organizational values.

Sigmund Freud's psychodynamic theory describes the mind as a complex system of three components: the id (representing primal instincts), the ego (balancing id demands with reality), and the superego (reflecting societal morals). Seeking to uncover the roots of unconscious behavior, the theory examines what lies outside conscious awareness, including early childhood experiences and repressed memories and emotions. This approach has led to psychodynamic therapy, a form of talk therapy that seeks to make unconscious thoughts and past conflicts conscious.