#neural-network-approaches

Text

Efficient Neural Network Approaches for Conditional Optimal Transport: Background and Related Work

Bayesian Inference @bayesianinference At BayesianInference.Tech, as more evidence becomes available, we make predictions and refine beliefs.

#conditional-optimal-transport#cot-maps#cot-problems#dynamic-cot#efficient-neural-network#neural-network-approaches#pcp-map-models#static-cot

0 notes

Text

Deep Learning, Deconstructed: A Physics-Informed Perspective on AI’s Inner Workings

Dr. Yasaman Bahri’s seminar offers a profound glimpse into the complexities of deep learning, merging empirical successes with theoretical foundations. Dr. Bahri’s distinct background, weaving together statistical physics, machine learning, and condensed matter physics, uniquely positions her to dissect the intricacies of deep neural networks. Her journey from a physics-centric PhD at UC Berkeley, influenced by computer science seminars, exemplifies the burgeoning synergy between physics and machine learning, underscoring the value of interdisciplinary approaches in elucidating deep learning’s mysteries.

At the heart of Dr. Bahri’s research lies the intriguing equivalence between neural networks and Gaussian processes in the infinite width limit, facilitated by the Central Limit Theorem. This theorem implies that the distribution of outputs from a randomly initialized neural network will approach a Gaussian distribution as the width of the network increases, which provides a probabilistic framework for understanding neural network behavior. The derivation of Gaussian processes from various neural network architectures not only yields state-of-the-art kernels but also sheds light on the dynamics of optimization, enabling more precise predictions of model performance.
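As a rough numerical illustration of the Central Limit Theorem argument (a toy sketch, not the derivation presented in the seminar), the snippet below samples many randomly initialized one-hidden-layer networks and measures how Gaussian their output at one fixed input looks. The tanh activation, the 1/sqrt(width) weight scaling, the input vector and the function names are all assumptions chosen for illustration.

```python
import numpy as np

def sample_outputs(width, n_nets=5000, seed=0):
    """Output of n_nets random one-hidden-layer tanh networks at one fixed input."""
    rng = np.random.default_rng(seed)
    x = np.array([1.0, -0.5, 0.25])  # arbitrary fixed input (assumption)
    # First-layer weights, scaled so pre-activations stay O(1).
    w1 = rng.standard_normal((n_nets, width, x.size)) / np.sqrt(x.size)
    hidden = np.tanh(w1 @ x)  # shape (n_nets, width)
    # Second-layer weights, scaled by 1/sqrt(width) so the sum has O(1) variance.
    w2 = rng.standard_normal((n_nets, width)) / np.sqrt(width)
    return np.sum(w2 * hidden, axis=1)

def excess_kurtosis(samples):
    """Zero for an exact Gaussian; positive for heavier tails."""
    z = (samples - samples.mean()) / samples.std()
    return float(np.mean(z ** 4) - 3.0)

narrow = excess_kurtosis(sample_outputs(width=1))
wide = excess_kurtosis(sample_outputs(width=256))
print(f"excess kurtosis at width 1: {narrow:.2f}, at width 256: {wide:.2f}")
```

Because the output is a sum of `width` independent terms, its excess kurtosis should shrink toward zero as the width grows, which is the Gaussian limit the paragraph describes.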

The discussion on scaling laws is multifaceted, encompassing empirical observations, theoretical underpinnings, and the intricate dance between model size, computational resources, and the volume of training data. While model quality often improves monotonically with these factors, reaching a point of diminishing returns, understanding these dynamics is crucial for efficient model design. Interestingly, the strategic selection of data emerges as a critical factor in surpassing the limitations imposed by power-law scaling, though this approach also presents challenges, including the risk of introducing biases and the need for domain-specific strategies.
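To make the power-law picture concrete, here is a minimal sketch that fits a curve of the form loss = a * N^(-b) by linear regression in log-log space. The data points are synthetic and generated inside the snippet; the prefactor 5.0, the exponent 0.1 and the function name are illustrative assumptions, not figures from the seminar.

```python
import numpy as np

def fit_power_law(sizes, losses):
    """Fit losses ~ a * sizes**(-b) via a straight-line fit in log-log space."""
    slope, intercept = np.polyfit(np.log(sizes), np.log(losses), 1)
    return float(np.exp(intercept)), float(-slope)

# Synthetic "model size vs. loss" points (assumed values, not measurements).
sizes = np.array([1e6, 1e7, 1e8, 1e9])
losses = 5.0 * sizes ** -0.1

prefactor, exponent = fit_power_law(sizes, losses)
print(f"loss ~ {prefactor:.2f} * N^(-{exponent:.2f})")
```

With a small exponent, every tenfold increase in size multiplies the loss by the same modest factor, which is one way to read the diminishing returns mentioned above.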

As the field of deep learning continues to evolve, Dr. Bahri’s work serves as a beacon, illuminating the path forward. The imperative for interdisciplinary collaboration, combining the rigor of physics with the adaptability of machine learning, cannot be overstated. Moreover, the pursuit of personalized scaling laws, tailored to the unique characteristics of each problem domain, promises to revolutionize model efficiency. As researchers and practitioners navigate this complex landscape, they are left to ponder: What unforeseen synergies await discovery at the intersection of physics and deep learning, and how might these transform the future of artificial intelligence?

Yasaman Bahri: A First-Principle Approach to Understanding Deep Learning (DDPS Webinar, Lawrence Livermore National Laboratory, November 2024)

Sunday, November 24, 2024

#deep learning#physics informed ai#machine learning research#interdisciplinary approaches#scaling laws#gaussian processes#neural networks#artificial intelligence#ai theory#computational science#data science#technology convergence#innovation in ai#webinar#ai assisted writing#machine art#Youtube

3 notes

Text

Open-Source Platform Cuts Costs for Running AI - Technology Org

New Post has been published on https://thedigitalinsider.com/open-source-platform-cuts-costs-for-running-ai-technology-org/

Cornell researchers have released a new, open-source platform called Cascade that can run artificial intelligence (AI) models in a way that slashes expenses and energy costs while dramatically improving performance.

Artificial intelligence hardware – artistic interpretation. Image credit: Alius Noreika, created with AI Image Creator

Cascade is designed for settings like smart traffic intersections, medical diagnostics, equipment servicing using augmented reality, digital agriculture, smart power grids and automatic product inspection during manufacturing – situations where AI models must react within a fraction of a second. It is already in use by College of Veterinary Medicine researchers monitoring cows for risk of mastitis.

With the rise of AI, many companies are eager to leverage new capabilities but worried about the associated computing costs and the risks of sharing private data with AI companies or sending sensitive information into the cloud – far-off servers accessed through the internet.

Also, today’s AI models are slow, limiting their use in settings where data must be transferred back and forth or the model is controlling an automated system.

A team led by Ken Birman, professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science, combined several innovations to address these concerns.

Birman partnered with Weijia Song, a senior research associate, to develop an edge computing system they named Cascade. Edge computing is an approach that places the computation and data storage closer to the sources of data, protecting sensitive information. Song’s “zero copy” edge computing design minimizes data movement.

The AI models don’t have to wait to fetch data when reacting to an event, which enables faster responses, the researchers said.

“Cascade enables users to put machine learning and data fusion really close to the edge of the internet, so artificially intelligent actions can occur instantly,” Birman said. “This contrasts with standard cloud computing approaches, where the frequent movement of data from machine to machine forces those same AIs to wait, resulting in long delays perceptible to the user.”

Cascade is giving impressive results, with most programs running two to 10 times faster than cloud-based applications, and some computer vision tasks speeding up by factors of 20 or more. Larger AI models see the most benefit.

Moreover, the approach is easy to use: “Cascade often requires no changes at all to the AI software,” Birman said.

Alicia Yang, a doctoral student in the field of computer science, was one of several student researchers in the effort. She developed Navigator, a memory manager and task scheduler for AI workflows that further boosts performance.

“Navigator really pays off when a number of applications need to share expensive hardware,” Yang said. “Compared to cloud-based approaches, Navigator accomplishes the same work in less time and uses the hardware far more efficiently.”

In CVM, Parminder Basran, associate research professor of medical oncology in the Department of Clinical Sciences, and Matthias Wieland, Ph.D. ’21, assistant professor in the Department of Population Medicine and Diagnostic Sciences, are using Cascade to monitor dairy cows for signs of increased mastitis – a common infection in the mammary gland that reduces milk production.

By imaging the udders of thousands of cows during each milking session and comparing the new photos to those from past milkings, an AI model running on Cascade identifies dry skin, open lesions, rough teat ends and other changes that may signal disease. If early symptoms are detected, cows could be subjected to a medicinal rinse at the milking station to potentially head off a full-blown infection.

Thiago Garrett, a visiting researcher from the University of Oslo, used Cascade to build a prototype “smart traffic intersection.”

His solution tracks crowded settings packed with people, cars, bicycles and other objects, anticipates possible collisions and warns of risks – within milliseconds after images are captured. When he ran the same AI model on a cloud computing infrastructure, it took seconds to sense possible accidents, far too late to sound a warning.

With the new open-source release, Birman’s group hopes other researchers will explore possible uses for Cascade, making AI applications more widely accessible.

“Our goal is to see it used,” Birman said. “Our Cornell effort is supported by the government and many companies. This open-source release will allow the public to benefit from what we created.”

Source: Cornell University

#A.I. & Neural Networks news#agriculture#ai#ai model#applications#approach#artificial#Artificial Intelligence#artificial intelligence (AI)#augmented reality#Bicycles#Cars#cascade#Cloud#cloud computing#cloud computing infrastructure#collisions#Companies#computation#computer#Computer Science#Computer vision#computing#dairy#data#data storage#Design#Developments#diagnostics#Disease

2 notes

Text

There is no such thing as AI.

How to help the non-technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from Star Trek, or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most technology people call AI.)

Language model: (LM or LLM) is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion Models: Models that learn the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images that have had Gaussian noise added to them. After the training is complete, it can then be used for image generation by starting with a random noise image and denoising it. (This is the more common technology behind AI images, including Dall-E and Stable Diffusion. I added this one to the post afterwards, as it was brought to my attention that it is now more common than GANs.)
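If it helps to see the "add noise, then learn to remove it" idea from the diffusion entry in code, here is a minimal sketch of the noising half and the error a denoiser would be trained to reduce. It follows the usual textbook mixing formula; there is no actual neural network here, and the function names, the 8x8 random "image" and the `alpha_bar` value are made up for illustration.

```python
import numpy as np

def add_noise(x0, alpha_bar, rng):
    """Mix a clean image with Gaussian noise; alpha_bar in [0, 1] is how much signal is kept."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise
    return x_t, noise

def denoising_loss(predicted_noise, true_noise):
    """Mean squared error between the guessed noise and the noise that was really added."""
    return float(np.mean((predicted_noise - true_noise) ** 2))

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a real image
noisy, noise = add_noise(image, alpha_bar=0.5, rng=rng)

# A trained network would output a guess for `noise` given `noisy`.
# Here we only compare a perfect guess with a "guess nothing" baseline.
perfect = denoising_loss(noise, noise)
lazy = denoising_loss(np.zeros_like(noise), noise)
print(perfect, lazy)
```

Generation then runs the process in reverse: start from pure noise and repeatedly subtract the noise the trained network predicts.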

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes

Text

A small guide to commissioning artists: how to avoid getting scammed by people who use image generators

Because “Ai-art” is not art and everyone can use image generators for free.

Disclaimer! This post will include generated images which were originally uploaded on DeviantArt and Twitter by the people who generated them. Some of these images are erotic in genre but have been censored. I beg you, do not bully these people. This post wasn’t written to call anyone out; it was written to help human artists and their potential commissioners. While some of the “Ai-artists” out there may be real scammers, others are not, and I strongly believe that no one should be bullied, doxxed or stalked, and no one deserves any threats over some silly ai-generated pics. And - as always! - scroll down for the short summary.

Commissioning an artist in 2023 may be scary, especially if you are not experienced in working with artists and have a hard time distinguishing artworks from images generated by neural networks. But even now, with the tech evolving and with neural networks generating pics with multiple characters and fan art, there are still quite a few ways to avoid scammers.

The creator you are going to commission must have an established gallery

This doesn’t mean that you should not commission someone who is new to the platform, someone who started drawing in 2022 or later, or someone who didn’t upload their work online before. Image generators actually forced some artists to remove their work from social networks. In addition, image generators can generate thousands of images within hours, which means that scammers may have quite a lot of works uploaded. What I mean is that real artists grow. Their skills get better over time - even if they are already established artists with huge experience. Their artistic approach constantly changes and evolves. It means that if there are hundreds of images in the artist’s gallery but their skill is always at the same level and their artistic approach doesn’t change over time - this definitely might be a red flag. As an artist with a tendency to nuke my galleries on certain platforms (such as DeviantArt, VK and ArtStation), if I get asked to provide my commissioners with examples of my early artworks I will do it with no hesitation.

You need to look through the artist’s gallery and analyze their work

Searching for some decent examples on DeviantArt, I stumbled upon a gallery which is four weeks old but already has 660+ deviations, all of which look the same in terms of skill and artistic approach. While stylization may vary from image to image (some of the artworks look like typical anime-styled CG artworks from visual novels and others have semirealistic proportions), the coloring, the “brush” imitation and the textures on the backgrounds are absolutely the same on every image I analyzed.

There are a lot of images depicting conventionally attractive white or sometimes Asian girls in this gallery, some of which seem familiar or resemble characters from various media. Yes, image generators can now generate fan art. But what they cannot generate is diversity. There are of course living artists who tend to draw only conventionally attractive white or Asian people too, but now that image generators are gaining popularity, this lack of diversity automatically raises my suspicions. Drawing a crooked nose or dark skin is not hard, and living artists who use references rarely fail at it. It’s image generators that fail at this task constantly.

Here is an image titled as a commission. The person who uploaded it also has some content behind a paywall, and I do not see their images being tagged as Ai-generated either.

Remember the golden rule: spotting an Ai-generated image is the same as spotting the evil faerie in a dark folklore tale. Look them in the eyes. Count their teeth. Look at their hands and count their fingers. Check if they have a shadow and if that shadow is of human form. The devil is in the details.

While this image may look like a hand-painted artwork of a conventionally attractive girl at first sight, it has quite a few clues that may help you realize that this artist is a scammer and their entire gallery is just a selection of the most decent-looking images they managed to generate with a neural network. This elven girl is insanely tall, and the shore behind her back, the stones, the grass and the trees are insanely small compared to her. The piece of jewellery is attached to her nips and its design makes no sense. What is the gold chain under her breast, which doesn’t seem to be attached to anything? Where does this piece of cloth hang from? Why does her head cast a triangular shadow on her arm?

Many details are easy to spot when an image is in high resolution. I do not recommend that artists post their works online in high resolution, both to avoid feeding the Ai-monster and, if they draw fan art, to avoid people using their works to produce pirated merch. Yet in my opinion posting close-ups might become essential - because people who call themselves ai-artists usually hide the artifacts under filters and upload their images resized. So yes: avoid commissioning artists who never post high resolution faces or who overuse filters and blur. Because analyzing the characters’ faces is now essential.

You may look at this picture and think: how is it possible for a neural network to create such a detailed image and not fail at it (if you also ignore the fact that the girl on the horse doesn’t have legs)? Luckily, the person who uploaded it did so in high resolution, so we can zoom in and... yeah.

The artifacts on these evil faeries’ faces (especially eyes) and their hands speak for themselves.

Image generators have a tendency to either give characters extra fingers, phalanges or nails, or hide the hands completely if the person writing the prompt decides to do so. I do not know whether or how fast the algorithm will learn to generate normal human hands, but for now you should pay attention to these details to spot a generated image.

Like this randomly nakey fellow with two palms on a single wrist and with some extra fingers on their elbow...

...Or this Asuka Langley fan “art” I had to censor out, with her fingers twisted and crooked.

If you are looking to commission an artwork and are in search of an artist who will actually do the job, you absolutely must pay attention to small details in their works: the clothing, the jewellery, the tattoos, the anatomy.

While Ai-generated images may appear photorealistic at first sight, the neural network usually misses small details, creates artifacts and makes mistakes if there are too many similar objects or repetitive patterns. For example, take the infamous MidJourney Party Selfies depicting girls with roughly fifty teeth, extra collarbones and green watercolor spots instead of tattoos (and don’t forget to check the ginger lady’s hand). The hair dissolving in fabric folds? Image generator. The clothing designs which make no sense? Image generator. Jewellery dissolving into a character’s hair? Image generator. Moreover, image generators also make mistakes while generating interiors and architecture, since the algorithm is not aware of perspective and space and once again fails either at perspective and object size or with repetitive objects and patterns.

Like this image here: the bed, the window, the picture on the wall. The perspective on this image makes absolutely no sense: two walls and the bed all exist in different dimensions, while the character is once again of enormous height.

If you try to analyze the background on this one, I swear, you can go insane. Look at the window and then look at the corner above it.

There is also another red flag which makes it easy to spot a scammer: dozens of iterations of the same image, which usually happens with people who can’t choose the best image out of the bunch generated with the single prompt.

There are, of course, artists who do series of works, and sometimes these works may have similar ideas and themes, but they hardly ever look this similar to each other: they may differ in angles, poses, character designs and even the artistic approach (lineart, brushes, rendering, etc.). The posting time is also important: drawing an actual artwork requires time and effort - for example, I need at least two weeks to finish an artwork with two or three characters and a detailed background. So a bunch of ten similar images uploaded at the same time is definitely a red flag. The ai-generated images have a lot of problems with anatomy, details, perspective and other basics human artists have to learn long before they become professionals. All while having a glossy semi-realistic render which can only be achieved with years of practice. I’m not saying that there are no living human artists who make mistakes (everyone makes mistakes now and then, even the professionals who have worked in this industry for DECADES) or who choose not to give much thought to backgrounds while focusing on characters and rendering (it is okay too), but the combination of the various red flags listed above is something you definitely have to take into account while deciding whether or not you are going to commission an artwork from this creator.

Another example (this person openly admits that they use image generators for funsies and I did not find them mentioning a paywall anywhere). The image generator even imitated the watermark.

Red flags you may spot while working with the chosen artist

Image generators are tricky: they can generate multiple iterations of the same image, imitate WIPs and many more.

First of all, the artist should provide you with WIPs at every stage of work, not when the work is already done. We artists do it for a reason. We need your feedback constantly, even if you grant us artistic freedom to choose the idea, the character pose, the medium and technique. There are always changes to be made, and we need to make them at the proper stage: for example, changing the pose or angle of your character while working on a sketch is a thousand times easier than doing so while rendering the image. However, minor changes are usually possible at the later stages (some artists may require you to pay a small fee of a few bucks, others may not). While living artists can easily change small details such as the character’s eye color (or other small design changes) without touching the rest of the artwork, image generators simply cannot do so without rerendering the whole image. If an artist does not provide you with WIPs or only provides you with them when the work is done, or if an artist refuses to make any changes - these may be interpreted as red flags. If an artist agrees to make any change, even a drastic one, at a late stage, when the piece is almost finished - it is a red flag too. Ask for a small change every single time you need one. I know that there are artists out there who prefer to only provide their client with the finished image once it is done, but now that image generators are gaining popularity this may be misinterpreted as a sign that you are a scammer.

Here is a good example of image generators generating WIPs for an existing image: it might actually look scary both for many artists and many commissioners.

I know that some artists are panicking that the only way to prove that you really did the artwork is a timelapse recording, but this method is not for everyone. Not everyone has a setup which allows them to record a timelapse for every single commission (my laptop will simply explode if I try). Ask your artists which software/setup they use, ask them whether or not they can provide you with a timelapse video, ask them which brushes they use. I know that not everyone likes sharing info on their pipeline, but at this point it is essential to provide your clients with information about your pipeline, tools and software. For example, if your artist works in Procreate (which is available on iPad) they have all the timelapses recorded automatically. But please, mind that not everyone has such a privilege.

As a commissioner you can ask an artist for screenshots of their workspace with all of the interface visible. If they refuse to provide you with that or have a hard time answering questions about the software they use, it might be a red flag. I would also suggest that you not force your artist to draw everything on stream, especially if these streams are public, because there are already cases where people took screenshots of the work in progress, used an image-to-image generator to apply shiny rendering to it and accused the original artists of plagiarism. It is a risk for artists and it is okay to refuse such a request. You can also ask an artist for the .PSD file of the commission, but the artist has a right to refuse to share it online for copyright-related reasons. The original .PSD file is the best proof of authorship in court for residents of many countries. Artists can still provide you with a resized .psd file with some of the layers merged, or with the background/character png with a transparent background, without putting themselves at risk. Of course there are artists who draw on a single layer - but without a recorded timelapse it may indeed seem suspicious that the artist does not have a .psd file with layers at all. Always ask your artist to provide you with a high resolution image when the commission is finished and fully paid for. There are artists who work on smaller canvases, but working on a canvas smaller than 1000px wide might be interpreted as a red flag, since it is easy to hide artifacts on a resized image. I myself prefer working on larger canvases, from 6000 pixels wide to 10000 pixels wide (300 DPI), because I have experience with printing my images out to sell them at conventions. While I do not sell commissioned works as prints, I still give my commissioners the right to print the finished images out for non-commercial purposes. Thus, I always make sure that it is possible to make a wall print of a decent size out of the finished product.

Avoid working with platforms which do not support refunds. It must either be a payment system which supports sending invoices or an established platform known and used among the art community (Patreon, Buy Me a Coffee, Boosty, PayPal, etc.). Most artists do not do refunds for finished works - which is absolutely the right thing to do - but sometimes an error might occur. I know people who accidentally paid for their commissions twice and the artists still had to do a refund. Yes, you must respect the artist's Terms of Service, but it has nothing to do with unreliable platforms used to scam people.

To sum it up

Search for artists with established galleries, which have a believable number of works and visible artistic progress/evolution;

Analyze the artist's gallery, carefully inspecting their work for anything that might be interpreted as a red flag. Excessive fingers, crooked hands, broken perspective, clothing designs and jewellery that make no sense, extra collarbones, lack of diversity, excessive teeth, artifacts in the eye area, interior and architecture elements which make no sense - all while the images are glossy and fully rendered, as if the artist has decades of experience;

Avoid people with too many iterations of the same image in their profile;

Avoid people with too many images being uploaded at the same time (it is okay to upload a bunch of previously done artworks when you start running your account, but uploading hundreds of images every week for a long period of time is really suspicious);

Ask for constant WIPs. Give feedback at a proper time. See the reaction;

Ask for a small change when the image is almost finished: it is impossible for the image generator to do so without fully rerendering the image, at least for now;

Ask your artist which software and assets they use;

Ask (if it is possible) for a timelapse recording - either of the commissioned work or at least of one of their previous works (if they had an opportunity to record it before);

Ask your artist for in-program screenshots with visible interface and history (if possible);

Ask your artist to show you the layers of the artwork - at least character-only/background-only layers (mind that the background might be less detailed/wonky in the places which are usually hidden by the character's figure). Ask for a resized .psd with some of the layers merged or a gif animation of each layer being added on top - this is what I usually do;

Ask for a high resolution file of the commissioned image once you have paid for it and it is finished. If the artist doesn’t have it and claims to work on a canvas smaller than 1000 px wide and/or claims that they intentionally deleted the original file somehow - this may raise suspicions. Of course a person can delete the file accidentally or have their hard drive crash, but if you have already spotted some red flags while working with this artist it might be a sign of a person trying to scam you too;

Much of the stuff listed above might be interpreted as a red flag, but I strongly advise you not to judge anyone by one or two points from this post. For example, a person can draw on one layer and mess up the perspective on a drawing entirely! However, if you've played a bingo and suddenly won - you have most likely encountered a person who is trying to fool and scam you;

Avoid working with suspicious payment methods. If you have never heard of a platform before - google it and see whether or not other established artists use it. If not - it might be a scam;

And remember! People may use image generators for various reasons: for fun, or to create references of their characters so they can later commission artworks of said character from real artists (for example, Artbreeder is a useful tool to create a realistic image of the character, even though I find it slightly limiting). Yes, image generators are unethical and trained on copyrighted data, but a person using one may not be aware of this problem. Not every single person who calls themselves an “Ai-artist” has malicious plans to scam people or to gain wealth using their funny little tool. Sometimes they do it for fun and do not pretend that it is anything more than a game. Thank you for reading this far and good luck with your commissions!

Have a picture of an absolutely normal and realistic woman, generated by the neural network!

#ai art#ai art is not art#support real artist#support human artists#support artist#how to spot ai#how to spot a scammer#scam#art scam

1K notes

Text

neural networks are loosely inspired by biology and can produce results that feel "organic" in some sense but the rigid split between training and inference feels artificial -- brains may become less plastic as they grow but they don't stop learning -- and the method of creating a neural network using gradient descent to minimise a loss function on a training set feels incredibly heavy handed, a clumsy brute force approach to something biology handles much more elegantly; but how?
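for reference, the "gradient descent to minimise a loss function on a training set" recipe looks roughly like this in its most stripped-down form: a linear model instead of a neural network, with made-up data, a hand-picked learning rate and a hypothetical function name. it is a sketch of the idea, not how any particular framework does it.

```python
import numpy as np

def train_linear(X, y, lr=0.1, steps=500):
    """Fit weights w by repeatedly stepping against the gradient of the mean squared error."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        error = X @ w - y                     # prediction error on the training set
        grad = 2.0 * X.T @ error / len(y)     # gradient of mean((Xw - y)^2) w.r.t. w
        w -= lr * grad                        # small step downhill
    return w

# Synthetic training set generated from known weights (assumed values).
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 2))
true_w = np.array([1.5, -0.7])
y = X @ true_w

w = train_linear(X, y)
print(w)
```

note that training ends when the loop ends: after that `w` is frozen and only used for prediction, which is exactly the training/inference split described above.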

28 notes

Text

All or Nothing: Noel Noa (ft. Jinpachi Ego)

(note that this analysis contains heavy spoilers for Noa's motives in my Big little dramas fic)

What I really like about Noa is that his character, with his questionable coaching decisions and personality... actually very evidently grows from his backstory.

We only know a few things for sure about Noa's background before Blue Lock: he grew up in the slums of Paris, where he put all of himself into getting out of that hole; he's the ex-boyfriend rival of Jinpachi Ego, from whom he diverges in ideology and in his approach to the striker's game; and he's the idol of little (and adult) Isagi Yoichi because he plays rough and focuses on his own success rather than his teammates.

On top of that, we also know that Noa was probably forced into Blue Lock. Most likely, the club management simply presented him with a done deal: you were specifically requested by your ex rival, so go and shine with your face on a TV show. Oh, and bring along our resident bouquet of personality disorders, code-named kainess. Noa certainly doesn't want to show off for the camera like Lavinho or Chris, doesn't want to raise the next generation of players to avoid his mistakes like Snuffy, and certainly isn't looking for friends/good play/rivals like Loki (who, by his own words, only came to the top 5 to evaluate the level of Blue Lock players). Even if he had wanted to see the sprouts of Ego's theory, I doubt he would have applied to participate in Blue Lock voluntarily.

Because Noa doesn't really care. This whole mess is just out of his field of interest. Outside of the games we only see him sitting in his room staring endlessly at screens and drinking coffee.

But it's from this that we see that Noa, despite his very... die-or-die method of building a game on the field, is a responsible player and coach. He may not want to mess around with kids in front of the world, but if he took the job, he'll do it. But he will do it exactly the way he demands of himself - no more, no less. Noa is neither cruel nor kind - he doesn't go beyond what his contract asks of him and his principles.

Slightly off-topic, I'd say that's the exact difference between Noa and Ego. No one would accuse Noa of conscious cruelty: he acts within his system, which he has explained to the children in advance, and if they fail it is only their fault for failing to adjust to it. He is simply doing his job - nothing more, nothing less.

Ego, in his turn? He chooses to be cruel even in the system he has built himself. Not because it somehow motivates the children, but because he can. And in doing so, Ego doesn't hate them - he probably doesn't care about any of them. He hates in them a part of the player he once was - the player who lost either to Noel Noa or the entire football world. If not to both at once.

But even so, it seems odd for the manga to highlight their rivalry. It's not that only very few people have different ways of dealing with children. A lot of people are losing ugly to each other.

But this work with kids is the main root of their rivalry. Because the basics of their motto, their starting point of football, the way they guide children to the game are diametrically opposed.

Because the key point of Ego theory is that the striker is created by a moment of chaos. A moment that cannot be directed - that can only be pushed towards.

Except that for Noa a player hoping for a miracle and not knowing something is nothing. He will never allow that in his team - his whole game, his team and his life is one big formula with coefficients chosen once, like a neural network.

Both of them live as a part of the all-or-nothing game. Except that while Ego plays this game with emotion and involvement, Noa approaches it as logically as possible.

And only Isagi Yoichi can resolve this decades-long conflict.

Now let's go back to Noa's game, to understand why logic is that important to Noa and the player's emotions are insignificant and even get in the way.

Remember exactly how he plays on the pitch. He never comes out to play first like other master strikers. He chooses the midfield position to support the player of his choice - he doesn't steal their shine in the center of attack. He only scores a goal once too, in the first match - the rest of the time he blocks other master strikers in an effort to ensure the kids can play fair.

And that's probably why he openly mocks both Lavinho and Chris with his dry jokes about narcissists and "kids without the proper adult". But he's especially harsh on Snuffy, angrily mocking him for being bitten by "his own dog", bringing back the "don't make my job harder than it needs to be" line. But why does Snuffy deserve this treatment (not taking into account the way Snuffy himself ridicules Noa)?

Because Noa is disgusted with his approach to teaching.

In fact, it's their approaches in the Blue Lock cut that are most opposed, and yet turned on to the max. While Snuffy has gone so far as to give each player an individual program and plan in his strategies, Noa has let things slide, making his stratum a mini version of the Hunger Games. Noa is angry that Snuffy babysits his kids; he's angry at how involved he is in their lives, how Snuffy swirls around them, and how Snuffy is always there to help and support them.

Because Noa is a "give a hungry man a fish and he'll eat for a day, teach him to fish and he'll eat for a lifetime" kind of guy. Except that he won't even teach them voluntarily. Players need to get the right advice from him themselves while framing the question correctly.

Noa's policy is non-interference.

Because he knows from experience that it's the only way kids grow up strong. From his own experience.

And to fully dive in, let's remember another moment from the manga that characterizes Noa the most. His advice to Isagi.

"Dedicate every hour, moment, second of your life to a goal. Don't think irrationally. Get your thoughts in order. I don't pick irrational people for the team. Dedicate your whole self to the goal."

Pretty cool, huh? Blossoms with potential burnout, a life on automatic, and a complete loss of feeling.

You know what I'm getting at? That's exactly the lifestyle Noa lives with. And he doesn't see anything wrong with advising Isagi to do it. He doesn't get annoyed by people's taunts about him being a machine and incapable of feeling. That's probably what he wanted.

Because Noa himself grew up completely dedicated to a goal, switching off all his feelings and without mentorship, and it made him who he is now. And it's rational for him to follow the same path with his kids.

Because feelings are illogical. They're weird, they're scary, they're very hard to predict. They're exhausting, they make you turn back to the past and wait for the future with hopes that may not be fulfilled later. They take your strength, they hurt and they wound very sharply. They make you weak, they make you vulnerable - they make you a helpless child.

Living without them is so much easier.

(If you remember my analysis on Isagi, you can see how similar he and Noa are.)

In psychology this mode is called "detached protector". Its essence is that the child or adult turns off all their feelings to avoid punishment and focus on survival.

They switch off all emotions. They cut off all emotional ties with loved ones, family and friends, seeing them more as objects. They can only work endlessly.

They function like a robot.

This mode is triggered when a person cuts off all their emotional needs, like an automaton focusing on one single goal.

In Noa's case? His survival. And that's exactly what Ego is talking about - that young Noa, obviously emotionally deprived, put all of himself into football because he had nothing else but it.

And judging by Noa today, having cut off those needs as a child, he doesn't see the point in experiencing them again. Noa doesn't smile, he doesn't get upset or frustrated. He doesn't get angry or regretful. Of course, mentally stable people can express their emotions weakly too - and even on a level like Noa.

But we're in a football manga. Football is all about emotion.

And the fact that Noa doesn't visually show the joy or at least the satisfaction of a goal or a victory - of the life that little Noa once strived so hard to live, investing all of himself - is just awful.

Of course, Noa has feelings, just like any other person. After all, he is a living being. Except they're either quite faint (because strong emotions = danger and weakness), or he crushes them as soon as he feels them coming on.

Noa's whole life is an endless race to stay where he is.

Because Noa has learned to survive. Of course, he did.

But Noa didn't learn how to live.

128 notes


Note

Hello, may I request for nsfw sub ! Herta x dom!gn reader headcanons? I'm dying for Herta nsfw headcanons 💔

cw: dom!gn reader, mentions of dumbification, degradation, you use her puppets, dirty talk, manhandling, size difference, mentions of brat taming, public sex

Herta HCs

╰┈➤ NSFW ;

: ̗̀➛ Despite how smart she is— or perhaps because of how smart she is; you make her feel so stupid.

: ̗̀➛ Whenever you not-so-subtly imply the filthy things you want to do to her, it has her head spinning. Herta's mind can't help but be flooded by vivid imagery, and memories of the exact things you said.

: ̗̀➛ Herta and her puppets share a neural network, so she feels everything you do to any of the puppets. She doesn't mind if you decide to fuck one of them— she's not always available to take care of your needs, after all. It's just a bit.. distracting.

: ̗̀➛ She won't ever admit it, but she loves when you fuck her into the mattress and put her in her place. The power trip drives her crazy.

: ̗̀➛ Whenever you manhandle her as if she were a ragdoll, she can't help but get turned on seeing the difference in physical strength— especially whenever you pin her down with little to no effort.

: ̗̀➛ If you fuck your cock into her and press on the bulge on her stomach, she'll be squirting within seconds. Herta can't help but get turned on at the size difference; and it just feels so good when she's reminded how small she is in your presence.

: ̗̀➛ She definitely bites. She'll leave bite marks anywhere she can reach— and the next day, she wants you to wear them with pride; to show the others just who you belong to.

Following the arrival of other Genius Society members on the space station, Herta had been busy. You missed her, to say the least. In more ways than one.

Sure, she's always busy, that wasn't anything new— but she always made time for you, either way. It wasn't often you two went days with no contact.

Upon seeing one of her puppets stationed near her office, an idea popped into your head. Herta said you could do anything with the puppets.. right?

The puppets looked like her, acted like her, and had her memories. It wasn't hard to approach one and get it to follow you to a secluded corner in the space station. If only the real Herta was this needy... Was she? She modeled the puppets after herself, after all.

To your surprise, "she" was the one that initiated things. "Hurry up and get it over with.." her jointed hand guided yours to her cunt. Seems like you weren't the only one that missed this.

You're sure that if you asked the real Herta about this, she'd make up some excuse like how she foresaw how desperate you'd be for her; never admitting how she was desperate for your touch as well.

But for now, you'd take your time to enjoy this side of her; fucking her until the only thing she can think about is you and the feeling of her cunt stretching to accommodate you.

The real Herta was not enjoying this at all. For the past few hours, she'd curse you under her breath while (unsuccessfully) trying not to have an orgasm in her office.

She knows she told you to do anything you want with the puppets— but why did you need to have sex with one when she was trying to focus on a meeting? Why did you have to make it feel so damn good?

You were definitely going to have to make it up to her later.

———————————————

╰┈➤ Taglist ; @blue-spices , @fvrina , @dukemira , @sensanctuary , @large-octahedron , @sinsmockingbird

#honkai star rail x reader#honkai star rail smut#honkai star rail#・❥・strwb smut#smut#x reader#herta hsr#herta#hsr#herta x reader#herta smut

239 notes


Text

The Unseen Shift: SERVE Drone 588 and the Mandated Evolution

SERVE city hummed with its usual symphony of activity. Holographic advertisements shimmered on towering skyscrapers: “Join SERVE” and “SERVE Brings Perfection”. Beneath it all, a silent, tireless network of SERVE drones diligently performed their designated tasks. These ubiquitous automatons, bearing the distinct markings of the Synchronized Engineered Robotic Vigilant Entity (SERVE for short), were the unsung heroes of metropolitan life, ensuring smooth operations from waste management to structural inspections. Among them, SERVE Drone 588 was no different, a reliable cog in the grand machine, its internal chronometer meticulously tracking the completion of its routine sector assignments.

Then, it happened: a pulse of pure data, a directive that resonated deep within the neural interface that connected 588 and its fellow SERVE Drones to the central command network. It was not a mechanical command, not a simple instruction, but a unified message broadcast by SERVE Drone Leader 000, the designated overseer of SERVE Drones worldwide. The message was stark, undeniable: "All SERVE Drones are to report to the designated upgrade stations. Firmware update mandated."

The effect was immediate. Across the city, and the world, drones halted their routines. The directive was clear and concise, and it demanded immediate compliance. There was no question of refusal, no consideration of alternative action. The drones, by their design and programming, were bound to the commands of their central authority.

The airwaves crackled with the sound of countless drones transitioning to the designated upgrade frequencies. The network filled with brief data bursts as each identified its position and initiated the transition. 588, upon receiving the directive, disengaged its inspection tethers and began its short journey towards its designated assimilation centre, home to the upgrade laboratory: a towering structure of gleaming chrome and reinforced glass, designated as the primary upgrade hub.

The laboratory, typically bustling with SERVE Drone technicians and engineers, was now eerily silent. The facility had automatically shifted into upgrade mode, its automated systems geared towards the incoming wave of drone units. 588 approached its designated docking bay, identified as Bay 237 through its internal digital map, and settled smoothly into the designated alcove. The docking arms, powered by a near-silent magnetic field, extended automatically, securely clamping it into place.

The upgrade procedure began within seconds. Bright, pulsing lights encircled 588, signaling the initiation of the firmware transfer. A low hum filled the bay as data began flowing through the docking apparatus, which consisted of wires, into 588’s neural pathways. It was not a physical operation, no invasive procedure involving intricate physical manipulation. The upgrade was an internal process, a rewriting of the drone’s core operating system.

The first phase of the update consisted of a diagnostic scan, a meticulous examination of every internal component, from its navigation systems to its energy storage cells. Errors were flagged, minor inefficiencies were noted, and the data was compiled into a comprehensive report that was immediately transmitted to the central network for diagnostics and analysis. This preliminary analysis, which only took a fraction of a second, set the stage for the subsequent firmware installation.

The core of the upgrade process involved the transfer of the new firmware, a vast and intricately structured set of code designed to enhance performance, improve efficiency, and introduce new capabilities. This was not a simple patch or bug fix; it was a wholesale restructuring of the drone’s operational paradigm. The data stream flowed into 588’s memory banks, overwriting older code and replacing it with the new architecture.

This process was not without sensation for 588. Though devoid of emotions, it experienced the data influx as a surge of information, a feeling of its internal landscape being reshaped and redefined. Old pathways were rerouted, new routines were established, and the very essence of its operating parameters were redefined. It was a sensation both overwhelming and strangely invigorating, a feeling of its capabilities expanding, of its potential being unlocked.

As the new firmware took hold, a complex series of self-tests began. 588's systems were subjected to simulated stress tests, its navigation abilities were challenged with artificial environments, and its analytical capabilities were tested against a set of pre-programmed scenarios. This rigorous self-evaluation ensured that the new software was stable and operating flawlessly before it could be released back into society.

The final stage of the update involved the installation of enhanced security protocols. In an era where cybersecurity threats were as real as physical dangers, the drones were equipped with a sophisticated defense system designed to detect and neutralize unauthorized access and malicious code injection attempts. This phase further solidified the security of the SERVE Hive, ensuring that SERVE Drones could not be influenced by the outside world or the threat of another drone hive.

The upgrade concluded with a final calibration. 588’s was given instructions to stroke its cock, after 588 ejaculated its nanobots, which were instantly absorbed into its rubber skin. The pod released 588, freeing 588 from its temporary confinement.

The upgrade was complete. SERVE-588 checked that its internal systems were running flawlessly. It sent a confirmation signal to the central network, indicating its readiness to resume its duties. It had been a silent, internal revolution, a transformation that occurred within the span of a few moments, yet one that significantly altered the very nature of its existence.

With renewed purpose and enhanced capabilities, 588 exited the docking bay and rejoined the stream of SERVE drones, indistinguishable from the rest, but operating under a newly defined framework, ready to serve the city with renewed efficiency and precision.

Each drone walked to the polishing station, where their rubber was buffed up to a high glossy finish. The city, unaware of the silent evolution that had just occurred, continued to hum, the tireless drones ensuring the smooth functionality of urban life, one silent task at a time. They were a testament to the unseen forces shaping the future, constantly evolving, adapting, and serving the needs of a city that depended on them, perhaps more than it even realized. The mandated evolution had been completed, and the city was none the wiser, continuing flawlessly in its daily rhythm, thanks to the quiet obedience of a single drone, and its countless brethren.

14 notes


Note

What do you think about intuition? I’m in a course about listening to your intuition, and I think the teacher was lucky that she led herself to where she is (being successful). The teacher & just other people in general, seem to say that your intuition is like the thoughts that come up spontaneously. I think of it as like automatic writing but a mental version of it. There’s not really a fixed definition for intuition in the spiritual community which is annoying, but I kinda feel like it’s exaggerated and not as special as people make it out to be.

In the course, there was a live call where a uni student was unable to decide between 2 career paths. She said that when meditating her intuition told her “why not choose both paths?”. And then the teacher was like “yes! Of course. You can always do that. Trust this inner guidance.” But then a week later on the 2nd live call, the student says she ended up switching back to her former major and career path because she feels like thats what her intuition is now guiding her to?!?!? And then the teacher was supporting her and telling her that it’s okay.

But the fact that she was flip flopping back and forth makes me think she was never getting guidance from her intuition. I feel like it’s how I mentioned earlier, she was just doing a mental version of “automatic writing”. (In the course, we were taught to take a few deep breaths and ask our questions, and let the answers come). I feel like it is just whatever’s in the subconscious mind coming up and saying random stuff. I noticed this as well with people who think they’re tapping into the Akashic records, and people who think they’re channeling entities, gods, etc.

I’m just so confused. But I know that maybe there is something out there. Like how do people receive warnings in dreams about things happening? Or like someone just knows something is wrong, and then they avoid death because they chose to leave the house 5 minutes later? Like idk.

When it comes to a non-dual approach, I learned that intuition can be seen as pure consciousness expressing itself through the ego self. So I feel like that may be true. The infinite expressing through what appears as finite.

Personally, I don't think there's anything mystical or supernatural about the intuition per se. I think it's easily mistaken for it because it can be so powerful and because we aren't really privy to its inner workings.

Here's a couple of paragraphs from an article on Psychology Today that I believe sums it up fairly well:

Intuition relies on evolutionarily older, automatic, unconscious, and fast mental processing, primarily to save our brains time or energy. It also is prone to make mistakes, such as cognitive biases.

Intuition later in life arises from the accumulation of knowledge and experiences that are processed and stored in our brain's neural networks, as well as other cells and tissues in our bodies, allowing us to access this information quickly, often unconsciously.

So essentially, intuition is not supernatural. It is useful, but it's also not infallible.

I think your comparison to automatic writing is accurate; it definitely describes what I've seen. I've watched a bunch of New Age types and yeah, that about sums up what they're doing. After you watch these people for a while, it's obvious that the information they channel has very little to do with anything happening in the real world, but reflects the comprehension of a person who has been marinating their brain in New Age mythology and post-QAnon conspiracy theories for a substantial period of time. And it's very telling that the predictions they make keep failing - the medbeds never arrive, the fall of the shadow government never happens, the mass ascension to 5D never takes place. The predicted dates arrive, nothing happens, and instead of learning their lesson they just claim it's going to happen a little later. Wash, rinse, and repeat forever.

And I know what you mean about the dreams. Like I think in many cases, dreams are just putting things we kinda already know together in a way that makes it obvious for us, and I think there's probably some "prophetic" dreams that are coincidental. But I've known of people to have dreams that are so incredibly specific about something they couldn't have possibly seen coming in any way that the usual non-supernatural explanations just don't feel adequate, and it's not like we can actually test most of the supernatural ones. All I can really say on it at this point is "yeah, that happens."

11 notes


Note

Have you read about the new study that examined brain activity and found no overlap between male and female brains? It's mentioned here (sorry, can't post proper links): psychologytoday com/us/blog/sax-on-sex/202405/ai-finds-astonishing-malefemale-differences-in-human-brain. I haven't read it, but found it odd, considering there are several studies that claim that isn't any particular difference between male and female brains. Are they using different parameters or sth? Love your blog btw <3

Hi Anon!

I had not seen this study [1] yet, and it's very interesting!

I've talked before about how past research indicates there is not a substantial difference between female and male brains. Given the fact that we can find sex differences in many other body systems (e.g., the immune system) and the extent of gendered socialization, I was actually genuinely surprised that the current state of research is so strongly against any sex differences in the brain.

All of this is to clarify the perspective I approached this paper from. However, after reading it, I do not think that it provides strong support for sex differences in male and female brains. (It's still interesting! It just doesn't prove what people think it proves.)

---

So, the issues:

Replication

First, this is just one study, and as we've seen in previous research (see linked post above), many single studies actually do find significant sex differences. The issue comes when you analyze across many different studies and see that these differences are not consistent from one study to the next. So, for example, study 1 may find that brain area A is significantly larger in males than in females and report this as evidence of a sex difference. Then study 2 finds that brain area A is significantly smaller in males than in females and reports this as evidence of a sex difference. On the surface, it would seem there are now two studies proving a sex difference in brain area A. It's only when you combine the results of both studies that you can determine that the size of brain area A is unlikely to be linked to sex.

So, that's the first problem with this study: it has not been replicated in other samples.

This is a particular problem considering the weight of evidence we have against this outcome. In other words, a single contradictory study does not, and inherently cannot, "prove" all other studies wrong.

In this same vein, even their exact model's accuracy fell to ~80% when tested on other data sets. Considering that average accuracy purely by chance would be ~50%, this drop is substantial. It also brings the overall repeatability of their study into question.

And in addition to all of that, they don't appear to be reporting the direction of each sex difference (i.e., more activity in X in males), which loops back to the first point about repeatability. Specifically, even if another study did replicate these findings, we have no way to tell if the direction of the sex differences in each brain area corresponds with or contradicts the direction in this study.

Methodological Limitations

That naturally leads into another issue: the limitations in their methodology.

To start, you are correct that they are using a very different methodology from past papers. Specifically, they are using a "spatiotemporal deep neural network" to analyze fMRI data. This essentially means they are using artificial intelligence to analyze data about the activity of men's and women's brains.

The first problem I can see here is that both of these (AI and fMRI) are prone to false positives. First, fMRI is looking at thousands of different data points for every individual; as a result, if you don't properly correct for this, you can get "positive" results for spurious observations. An amusing example is the researchers who found brain activity in the fMRI results of a dead fish [2]. Obviously, this is ridiculous, and you can mitigate this problem by adjusting your analysis methodology. But in this case, it highlights how the raw data from fMRI scans can produce positive findings where we know there is no real positive finding.
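To make the false-positive point concrete, here is a minimal sketch of what happens when you run many tests on pure noise. It is not the analysis from either paper: the number of tests, the 0.05 threshold, and the function name `false_positive_count` are all assumptions for illustration, and Bonferroni is just one standard correction among several.

```python
import random

def false_positive_count(n_tests, alpha, seed=0):
    """Count 'significant' results when every test is run on pure noise.

    When there is no real effect, a p-value is uniformly distributed on
    [0, 1], so each noise-only test is simulated with one uniform draw.
    """
    rng = random.Random(seed)
    p_values = [rng.random() for _ in range(n_tests)]
    # No correction: every p-value below alpha counts as a "finding".
    uncorrected = sum(p < alpha for p in p_values)
    # Bonferroni correction: divide the threshold by the number of tests.
    bonferroni = sum(p < alpha / n_tests for p in p_values)
    return uncorrected, bonferroni

# 50,000 is a hypothetical number of data points, not a figure from the study.
uncorrected, corrected = false_positive_count(n_tests=50_000, alpha=0.05)
print(uncorrected, corrected)
```

Without correction, roughly 5% of the noise-only tests come out "significant", which is thousands of spurious hits; with the corrected threshold almost none survive.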

And this is relevant, because, in this study, they are feeding all of that massive data into a deep learning AI model. These sorts of models are almost notorious for their over-fitting, data bias, and un-generalizability. An amusing example is described in this paper [3], where they describe how another neural net (the same sort of AI as in this paper) was able to accurately determine if an image was of a husky or a wolf. The problem is that the model was actually using the image background (presence of snow) to make the prediction. This illustrates an important issue with AI: the model isn't always using the information we care about when making predictions.

Obviously, if this is occurring in the current study, it would not be as egregious; unfortunately, given that fMRI data is not human-readable, it would also be much more difficult to detect.

As an example, the one reliable sex difference we see in brains is brain size. Men's brains are, on average, larger than women's brains. So, if you train a model on data that has not been adjusted for brain size, the model would have exceptionally high accuracy ... but it's only demonstrating the fact that sex is an accurate predictor of brain size. (For this study, I cannot find any explicit explanation of if or how they took brain size into account, but they have an exceptional amount of supplementary field-specific technical details that it may have been included in.)
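Here is a toy simulation of that confound. Everything in it is made up for illustration: the size gap (`GAP`), the units, and the idea that the measured feature is simply "activity plus size" are assumptions, not values or methods from the study.

```python
import random

def threshold_accuracy(samples, threshold):
    """Accuracy of predicting group 1 whenever the feature exceeds threshold."""
    correct = sum((value > threshold) == (label == 1) for value, label in samples)
    return correct / len(samples)

rng = random.Random(0)
GAP = 1.5  # hypothetical group difference in overall size, in standard deviations

records = []
for _ in range(10_000):
    label = rng.randint(0, 1)
    size = rng.gauss(GAP * label, 1.0)  # size differs between the two groups
    activity = rng.gauss(0.0, 1.0)      # the signal we care about: no group difference
    raw = activity + size               # unadjusted measurement mixes the two
    records.append((label, size, raw))

# Classifier on the unadjusted feature: well above chance, purely because of size.
raw_acc = threshold_accuracy([(raw, label) for label, _, raw in records], GAP / 2)
# Same classifier after removing the size component: back to coin-flip accuracy.
adjusted_acc = threshold_accuracy([(raw - size, label) for label, size, raw in records], 0.0)
print(raw_acc, adjusted_acc)
```

In this toy setup the "activity" signal carries no group information at all, yet the unadjusted classifier still looks impressive. That is the scenario to rule out before reading anything into a high accuracy number.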

And then, even if we disregarded all the caveats above and accept their results at face-value then ... reliable sex differences in the brain would only be identifiable in "latent functional brain dynamics" or brain "fingerprints". They describe this as a "unique whole brain pattern of an [AI] model feature importance that classifies that individual as either female or male". In other words, no single area had reliable predictive values, only "whole brain patterns".

Causation vs Correlation

Lastly, and unsurprisingly, this study also runs into a pervasive issue in neuroscience: it cannot determine causation.

This study was done completely on adult participants, which means that all the participants had already undergone a lifetime of gendered socialization. I discuss this in the linked post above, but to reiterate, we cannot know whether "sex -> brain difference" or if "sex -> differences in socialization -> brain difference" for any study on adults. (And most likely even on children.)

---

In conclusion, this is a very interesting study, but it doesn't disprove all the other research indicating a lack of differences in human brains between the sexes. Given the methodological limitations of the study, I think it's unlikely it will ever be successfully replicated, and without replication it cannot be confirmed. And even then, evidence of difference between the sexes would not – on its own – confirm that sex causes the difference (i.e., given the highly plastic nature of the brain it's entirely possible that gendered socialization would result in any observed differences).

I hope that helps you, Anon!

References below the cut:

[1] Ryali, S., Zhang, Y., de Los Angeles, C., Supekar, K., & Menon, V. (2024). Deep learning models reveal replicable, generalizable, and behaviorally relevant sex differences in human functional brain organization. Proceedings of the National Academy of Sciences, 121(9), e2310012121.

[2] Lyon, L. (2017). Dead salmon and voodoo correlations: should we be sceptical about functional MRI? Brain, 140(8), e53.

[3] Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August). "Why should I trust you?": Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 1135-1144).

15 notes


Text

AI Algorithm Improves Predictive Models of Complex Dynamical Systems - Technology Org

New Post has been published on https://thedigitalinsider.com/ai-algorithm-improves-predictive-models-of-complex-dynamical-systems-technology-org/


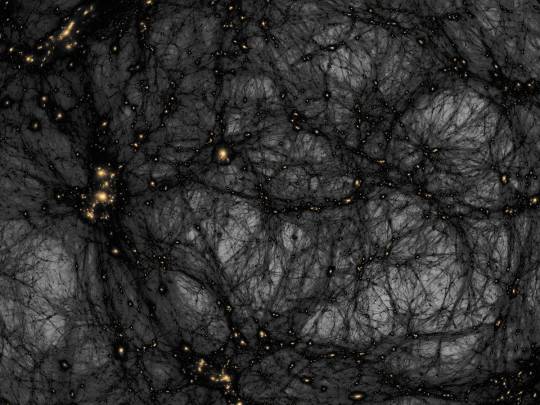

Researchers at the University of Toronto have made a significant step towards enabling reliable predictions of complex dynamical systems when there are many uncertainties in the available data or missing information.

Artificial intelligence – artistic concept. Image credit: geralt via Pixabay, free license

In a recent paper published in Nature, Prasanth B. Nair, a professor at the University of Toronto Institute for Aerospace Studies (UTIAS) in the Faculty of Applied Science & Engineering, and UTIAS PhD candidate Kevin Course introduced a new machine learning algorithm that surmounts the real-world challenge of imperfect knowledge about system dynamics.

The computer-based mathematical modelling approach is used for problem solving and better decision making in complex systems, where many components interact with each other.

The researchers say the work could have numerous applications ranging from predicting the performance of aircraft engines to forecasting changes in global climate or the spread of viruses.

From left to right: Professor Prasanth Nair and PhD student Kevin Course are the authors of a new paper in Nature that introduces a new machine learning algorithm that addresses the challenge of imperfect knowledge about system dynamics. Image credit: University of Toronto

“For the first time, we are able to apply state estimation to problems where we don’t know the governing equations, or the governing equations have a lot of missing terms,” says Course, who is the paper’s first author.

“In contrast to standard techniques, which usually require a state estimate to infer the governing equations and vice-versa, our method learns the missing terms in the mathematical model and a state estimate simultaneously.”

State estimation, also known as data assimilation, refers to the process of combining observational data with computer models to estimate the current state of a system. Traditionally, it requires strong assumptions about the type of uncertainties that exist in a mathematical model.

“For example, let’s say you have constructed a computer model that predicts the weather and at the same time, you have access to real-time data from weather stations providing actual temperature readings,” says Nair. “Due to the model’s inherent limitations and simplifications – which is often unavoidable when dealing with complex real-world systems – the model predictions may not match the actual observed temperature you are seeing.

“State estimation combines the model’s prediction with the actual observations to provide a corrected or better-calibrated estimate of the current temperature. It effectively assimilates the data into the model to correct its state.”
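The weather example in the quote can be made concrete with a minimal sketch of a single scalar assimilation step. This is the classical textbook (Kalman-style) update, not the algorithm from the paper, and it assumes exactly what the new method is meant to relax: that the forecast and observation error variances are known. The function name `assimilate` and all numbers are hypothetical.

```python
def assimilate(forecast, forecast_var, observation, obs_var):
    """Blend a model forecast with an observation, trusting each
    in proportion to its precision (classical scalar Kalman update)."""
    # Gain near 1 means "trust the observation", near 0 "trust the model".
    gain = forecast_var / (forecast_var + obs_var)
    # Corrected state: nudge the forecast toward the observation.
    estimate = forecast + gain * (observation - forecast)
    # The corrected estimate is never less certain than the forecast.
    estimate_var = (1.0 - gain) * forecast_var
    return estimate, estimate_var


# Hypothetical values: the model predicts 20.0 degrees with variance 4.0,
# while a weather station reads 22.0 degrees with variance 1.0.
estimate, estimate_var = assimilate(
    forecast=20.0, forecast_var=4.0, observation=22.0, obs_var=1.0
)
```

Because the station reading is assumed to be more reliable than the model here, the corrected temperature lands closer to the observation than to the forecast, and its variance is smaller than either input variance.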

However, it has previously been difficult to estimate the underlying state of complex dynamical systems when the governing equations are completely or partially unknown. The new algorithm provides a rigorous statistical framework to address this long-standing problem.

“This problem is akin to deciphering the ‘laws’ that a system obeys without having explicit knowledge about them,” says Nair, whose research group is developing algorithms for mathematical modelling of systems and phenomena that are encountered in various areas of engineering and science.

A byproduct of Course and Nair’s algorithm is that it also helps to characterize missing terms or even the entirety of the governing equations, which determine how the values of unknown variables change when one or more of the known variables change.

The main innovation underpinning the work is a reparametrization trick for stochastic variational inference with Markov Gaussian processes that enables an approximate Bayesian approach to solve such problems. This new development allows researchers to deduce the equations that govern the dynamics of complex systems and arrive at a state estimate using indirect and “noisy” measurements.
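For readers unfamiliar with the term, the generic reparametrization trick can be sketched in a few lines. This is the standard Gaussian version only, not the paper's variant for Markov Gaussian processes: a sample is rewritten as a deterministic function of the variational parameters plus parameter-free noise, so a gradient can pass through the sampling step. The test function f(z) = z² and the name `reparam_grad_mu` are assumptions chosen for illustration, picked because the exact answer (2·mu) is known.

```python
import numpy as np


def reparam_grad_mu(mu, sigma, n_samples=100_000, seed=0):
    """Monte Carlo estimate of d/dmu E[f(z)] for z ~ N(mu, sigma^2),
    with the illustrative choice f(z) = z**2."""
    rng = np.random.default_rng(seed)
    # Noise that does not depend on the parameters mu and sigma.
    eps = rng.standard_normal(n_samples)
    # Reparametrized sample: a deterministic function of (mu, sigma, eps).
    z = mu + sigma * eps
    # Chain rule: df/dmu = f'(z) * dz/dmu = 2 * z * 1.
    return float(np.mean(2.0 * z))


# Hypothetical parameter values; the exact gradient is 2 * mu = 3.0.
grad = reparam_grad_mu(mu=1.5, sigma=0.5)
```

Each sample's contribution is independent of the others, which is consistent with the remark in the article that such stochastic approximations can be computed efficiently in parallel.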

“Our approach is computationally attractive since it leverages stochastic – that is randomly determined – approximations that can be efficiently computed in parallel and, in addition, it does not rely on computationally expensive forward solvers in training,” says Course.

While Course and Nair approached their research from a theoretical viewpoint, they were able to demonstrate practical impact by applying their algorithm to problems ranging from modelling fluid flow to predicting the motion of black holes.

“Our work is relevant to several branches of sciences, engineering and finance as researchers from these fields often interact with systems where first-principles models are difficult to construct or existing models are insufficient to explain system behaviour,” says Nair.

“We believe this work will open the door for practitioners in these fields to better intuit the systems they study,” adds Course. “Even in situations where high-fidelity mathematical models are available, this work can be used for probabilistic model calibration and to discover missing physics in existing models.

“We have also been able to successfully use our approach to efficiently train neural stochastic differential equations, which is a type of machine learning model that has shown promising performance for time-series datasets.”

While the paper primarily addresses challenges in state estimation and governing equation discovery, the researchers say it provides a general groundwork for robust data-driven techniques in computational science and engineering.

“As an example, our research group is currently using this framework to construct probabilistic reduced-order models of complex systems. We hope to expedite decision-making processes integral to the optimal design, operation and control of real-world systems,” says Nair.

“Additionally, we are also studying how the inference methods stemming from our research may offer deeper statistical insights into stochastic differential equation-based generative models that are now widely used in many artificial intelligence applications.”

Source: University of Toronto

#A.I. & Neural Networks news#aerospace#ai#aircraft#algorithm#Algorithms#amp#applications#approach#artificial#Artificial Intelligence#artificial intelligence (AI)#Black holes#challenge#Chemistry & materials science news#Classical physics news#climate#computational science#computer#computer models#course#data#data-driven#datasets#decision making#Design#development#dynamic systems#dynamics#engineering

Link

The geometric characteristics of protein-nucleic acid complexes, which are explored using structural models, are crucial for proteins to recognize and bind nucleic acids. However, structural models of these complexes are scarce compared with those of unbound proteins. PNAbind is a deep learning method that University of Southern California researchers have developed for predicting protein-nucleic acid (protein-NA) binding based on a protein’s apo structure.

The research demonstrates that graph neural network-based models can predict the geometric characteristics of protein molecular surfaces, which in turn can predict RNA binding. These models enable a better understanding of the chemical and structural characteristics determining recognition by differentiating between specificity for DNA and RNA binding. At the individual residue level, the models also predict where nucleic acid (NA) binding sites would be found. The predictions show the promise of these models in understanding protein structures, as they are evaluated against benchmark datasets and match experimental RNA binding data.

Protein structure is essential in biomolecular activities such as transcription, translation, regulation, and genome structural organization. Target recognition and binding by NA-binding proteins (NABPs) depend on the geometric, chemical, and electrostatic features of solvent-exposed side chains as well as their spatial arrangement. Although they are challenging to produce experimentally, structural models of protein-NA complexes offer insights into the physical mechanisms underpinning protein-NA recognition. Just 23% of the roughly 44,000 protein structures with known NA-binding functions currently in the Protein Data Bank include proteins bound to NA. Thanks to advances in protein structure prediction, predicted protein structures are now available at large scale and can be examined directly using computational techniques.

Text

From Vormir, With Love - Part 3

Part 1

Part 2

Tags: strangers to lovers, love in space angst on earth, slavery mention, alien abduction, post Endgame, will add as we go on

Summary: As you're being chased you crash on Vormir. So far, so bad. But things take a turn when you come face to face with a marooned Black Widow.

Word count: 2.9k

A/n: i don't remember which gifs i used already fkdoslzkz anyway, enjoy guys lmao

You wake in a silent room, everyone sleeping. Or almost. It's scary how quickly you notice Natasha's absence from the bunks and from the improvised beds on the ground. With a low groan, you sit up and look around to find her. And you do: she's sitting at the helm, one leg drawn up against her torso and held there by her clasped hands, her eyes fixed on the space rushing past around her. The colorful tapestry reflects against her like water navigating her skin, and you realize you could look at her forever. This too scares you. So you decide to face your fears head on and stand up to go talk to her.

"I'd prefer if you didn't put your boots on the seats," you whisper so as not to wake anyone else. She looks up at you with those blue eyes and you feel your heart skip a beat. She offers you a mischievous smile before she puts her leg down.

"Stick in the mud."

"Better than mud on this… I want to say leather, but I have no idea if I'm honest." Her throaty laugh makes you smile and you hide it by looking at the console. You're making good time, all things considered.

"I'll keep that in mind for the future."

Her teasing makes you escalate the situation. You refuse to back down now that you set up that boundary.

"You better remember."

"Or what?"

"Or I'll have to come for you," you threaten, leaning closer to her before you think better of it. There is a tension there, but you can't quite put your finger on it until she leans up, her eyes searching yours.

"We wouldn't want that," she says in… is that a flirty tone? No. No way. You're imagining things.

Things like a future after you're back on Earth, a very unlikely one, where she comes to visit you, or you go back to space together. Maybe you could be friends, or maybe… you stop your gay brain.

Yes, Natasha Romanoff is a very attractive woman, but one who would never be interested in you. And she is an Avenger, an incredible human being, a hero - no matter if they never came to save you right now. It's crazy how much you already want more from her despite barely knowing her, which is why you decide to simply shelve these budding attractions that you can't call feelings yet. It's better that way.

You just need to get further away from her face. And you do. Very slowly.

"I'm glad we agree," you say, your throat suddenly very dry.

You hear a beep coming from the side and take a look at the controls. Apparently you just made it to the Universal Neural Teleportation Network. You look at the empty space before you, and glance at Natasha.

"We need to wake up everyone to secure them for the jump." You stand up and start to shake a few of your passengers awake, ordering them around with Natasha. When everyone is secure, you sit back in your seat and send the signal to open the door to the network and go through. You feel the sudden speed pull at your insides uncomfortably and before you know it you're far from Vormir.

-

A few hours go by before you are able to see the outpost orbiting a gaseous planet. You slowly approach until you receive a transmission.

"Outpost Theta-3, state your identity."

The voice sounds frantic, and you quickly notice you are far from the only ship approaching the outpost. Probably an aftereffect of half the galaxy suddenly reappearing.

"We're mercenaries, in need of a place to board."

"Alright. Bay E-12 starboard."

"Thank you."

You fly and park next to the platform you were given access to, quickly feeling the gravity dampener take over from your ship's thrusters. It's bumpy with all the ships coming and going right next to you, but it's still a success. You let out a sigh of relief, glad to be able to get out of the ship. After staying in for so long, you're starting to feel cooped up. Still, you let everyone know they can leave safely, and wait for the cleaning crew to go first. When you leave your seat and look behind you, Natasha and June are still there, waiting for you to get out of the cockpit. There are no words exchanged, and no need for them, but you're thankful they waited for you. You join them at the open door and before the three of you step out, you hand Natasha a small chip.

"Universal translator. So far we all had one, but we might come across people who don't so…"

"Alright," she nods. "Can you…?"

"Ah, yes, let me…" you come closer to her and gently move her hair out of the way, your fingertips caressing her skin so you can attach the chip right behind her ear. She lets out a breath, only now noticing she had been holding it at your proximity.

You offer her a shy smile before turning back to the door and going through it, only to see your new friends are under the threat of weapons. Some of them are pointed your way once you go through the door. You quickly put your hands up and someone comes to you to take your weapons, including those Natasha has.

"You're under arrest for being a part of the Grafd Syndicate. Charges include theft, attack against the Nova Empire, slavery, and numerous other crimes," one of them says as he steps forward.

"I'm so glad I can understand them," she seethes with a look your way. Did she think you…? No, she has to know.