#machine learning ai deep learning

Explore tagged Tumblr posts

Text

Understanding Overfitting and Underfitting in Machine Learning Models

Overfitting and underfitting are pivotal challenges in machine learning, impacting the accuracy and reliability of predictive models. Overfitting occurs when a model becomes overly complex, memorizing the training data and its noise rather than learning general patterns, leading to poor performance on unseen data. On the other hand, underfitting arises when a model is too simplistic, failing to capture the underlying structure of the data, resulting in subpar performance on both training and validation datasets.

Achieving the right balance involves strategies like regularization, cross-validation, feature engineering, and careful monitoring of training progress. These techniques ensure models generalize effectively, making them robust and reliable for real-world applications. Mastering these concepts is essential for data scientists and engineers aiming to excel in the dynamic field of machine learning.
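
The contrast between underfitting and overfitting can be sketched with a small experiment. The example below is only an illustration under assumed conditions: the noisy sine-wave data, the polynomial degrees, and the helper name `fit_and_score` are all hypothetical and do not come from the post. It fits polynomials of increasing complexity and compares the error on the training data with the error on held-out validation data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: a noisy sine wave (an assumption for illustration).
x_train = np.sort(rng.uniform(0.0, 1.0, 15))
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.2, x_train.size)
x_val = np.sort(rng.uniform(0.0, 1.0, 200))
y_val = np.sin(2 * np.pi * x_val) + rng.normal(0.0, 0.2, x_val.size)


def fit_and_score(degree):
    """Fit a polynomial of the given degree; return (train MSE, validation MSE)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
    val_mse = np.mean((np.polyval(coeffs, x_val) - y_val) ** 2)
    return train_mse, val_mse


# Degree 1 is too simple for a sine wave, degree 9 has enough freedom to chase noise.
results = {d: fit_and_score(d) for d in (1, 3, 9)}
for d, (train_mse, val_mse) in results.items():
    print(f"degree={d}  train MSE={train_mse:.3f}  validation MSE={val_mse:.3f}")
```

Training error can only go down as the degree grows, so it is the validation error that signals whether the extra complexity is helping or merely memorizing noise.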

#ai ml deep learning#artificial intelligence and machine learning course#best course for ai and machine learning#certification for machine learning#machine learning ai deep learning#machine learning for business analytics#machine learning what is#Overfitting Machine Learning Models#Underfitting in Machine Learning Models

0 notes

Text

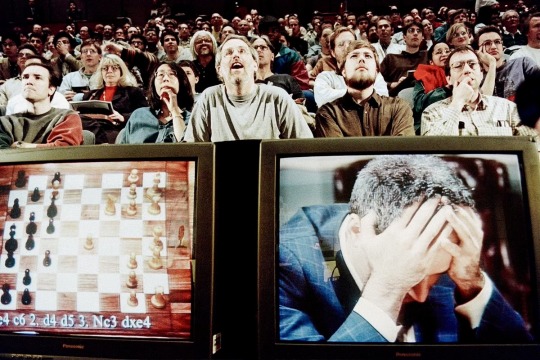

Garry Kasparov, world chess champion, succumbing to a public defeat by Deep Blue, a 'supercomputer' that IBM was still developing at the time. MAY 11, 1997

#tech history#AI#artificial intelligence#machine learning#90s#deep blue#IBM#garry kasparov#technology#u

116 notes

Video

AI Basics for Dummies- Beginners series on AI- Learn, explore, and get empowered

This video explains what Artificial Intelligence (AI) is, for beginners. Welcome to our series on Artificial Intelligence! Here's a breakdown of what you'll learn in each segment:

- What is AI? – Discover how AI powers machines to perform human-like tasks such as decision-making and language understanding.
- What is Machine Learning? – Learn how machines are trained to identify patterns in data and improve over time without explicit programming.
- What is Deep Learning? – Explore advanced machine learning using neural networks to recognize complex patterns in data.
- What is a Neural Network in Deep Learning? – Dive into how neural networks mimic the human brain to process information and solve problems.
- Discriminative vs. Generative Models – Understand the difference between models that classify data and those that generate new data.
- Introduction to Large Language Models in Generative AI – Discover how AI models like GPT generate human-like text, power chatbots, and transform industries.
- Applications and Future of AI – Explore real-world applications of AI and how these technologies are shaping the future.

Next video in this series: Generative AI for Dummies- AI for Beginners series. Learn, explore, and get empowered

Here is the bonus: if you are looking for a Tesla, here is the link to get you a $1000.00 discount

Thanks for watching! www.youtube.com/@UC6ryzJZpEoRb_96EtKHA-Cw

37 notes

Text

"Ethical AI" activists are making artwork AI-proof

Hello dreamers!

Art thieves have been infamously claiming that AI illustration "thinks just like a human" and that an AI copying an artist's image is as noble and righteous as a human artist taking inspiration.

It turns out this is - surprise! - factually and provably not true. In fact, some people who have experience working with AI models are developing a technology that can make AI art theft no longer possible by exploiting a fatal, and unfixable, flaw in their algorithms.

They have published an early version of this technology called Glaze.

https://glaze.cs.uchicago.edu

Glaze works by altering an image so that it looks only a little different to the human eye but very different to an AI. This produces what is called an adversarial example. Adversarial examples are a known vulnerability of all current AI models and have been written about extensively since 2014. It isn't possible to "fix" this vulnerability without inventing a whole new AI technology, because it's a consequence of the basic way that modern AIs work.
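
To make the idea of an adversarial example concrete, here is a minimal sketch on a toy linear classifier. It is not how Glaze itself works, and everything in it (the random weights `w`, the input `x`, the step size `eps`) is a hypothetical stand-in. It uses a sign-of-the-gradient step in the spirit of the fast gradient sign method: every feature is nudged by the same tiny amount, but always in the direction that hurts the model most.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear classifier over 1000 features: class 1 if w . x > 0.
w = rng.normal(size=1000)


def predict(x):
    return int(w @ x > 0)


# A hypothetical clean input whose features are on the order of 1.
x = rng.normal(size=1000)
score = w @ x

# For a linear model the gradient of the score with respect to x is w,
# so the most damaging small step moves each feature by eps against sign(w).
# eps is chosen just large enough to push the score across the boundary.
eps = 1.1 * abs(score) / np.abs(w).sum()
x_adv = x - eps * np.sign(score) * np.sign(w)

print("per-feature change:", eps)
print("clean prediction:", predict(x), "adversarial prediction:", predict(x_adv))
```

No single feature moves by more than `eps`, yet the small changes add up across a thousand dimensions and flip the decision. That accumulation is the core reason tiny, hard-to-see image changes can look very different to a model.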

This "glaze" will persist through screenshotting, cropping, rotating, and any other mundane transformation to an image that keeps it the same image from the human perspective.

The web site gives a hypothetical example of the consequences - poisoned with enough adversarial examples, AIs asked to copy an artist's style will end up combining several different art styles together. Perhaps they might even stop being able to tell hands from mouths or otherwise devolve into eldritch slops of colors and shapes.

Techbros are attempting to discourage people from using this by lying and claiming that it can be bypassed, or is only a temporary solution, or most desperately that they already have all the data they need so it wouldn't matter. However, if this glaze technology works, using it will retroactively damage their existing data unless they completely cease automatically scraping images.

Give it a try and see if it works. Can't hurt, right?

#art theft#ai art#ai illustration#glaze#art ethics#ethical art#ethics#technology#ai#artificial intelligence#machine learning#deep learning#midjourney#stable diffusion

595 notes

Text

Ramsay Unleashed Mr Bean, from Belgian A.I. "artist" Biertap

It’s not there yet, but mark my words, before the end of 2025, we’ll be seeing “real” A.I. features that don’t suck.

#A.I.#a.I. Videos#Mr bean#gordon ramsey#sora#Biertap#Rowan Atkinson#a.i. video#deep ai#openai#text to video#stable diffusion#artificial intelligence#midjourney#midjorneyart#chatgpt#prompts#machine learning#digital art#digital artist#video generator#deep learning#belgian art

4 notes

Text

The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of the beauty and elegance of machine learning mathematics. For instance, the concept of gradient descent, a fundamental algorithm used in machine learning, is a powerful example of how math can be used to optimize model parameters.
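
Gradient descent itself fits in a few lines. The sketch below is a bare-bones illustration, not code from the book or the interview; the function name `gradient_descent`, the learning rate, and the one-parameter objective are all assumptions chosen to keep the example small.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


# Hypothetical objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
# Its minimum is at w = 3, so the iterates should approach 3.
w_star = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_star)
```

Training a neural network follows the same loop, except that `w` holds millions of parameters and the gradient comes from backpropagation rather than a hand-written formula.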

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us to evaluate AI systems more effectively, develop more transparent and explainable AI systems, and address AI bias and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)

Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).
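
As one illustration of matrix factorization for dimension reduction, the sketch below uses NumPy's singular value decomposition on a tiny ratings matrix. The matrix and the variable names are hypothetical; it is built from two hidden "taste" factors so that a rank-2 factorization can reproduce it.

```python
import numpy as np

# Hypothetical data: 4 users described by 2 hidden taste factors,
# and 3 items scored along the same 2 factors.
users = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]])
items = np.array([[5.0, 4.0, 1.0], [1.0, 2.0, 5.0]])
ratings = users @ items  # shape (4, 3), rank 2 by construction

# Factor the matrix and keep only the k largest singular values.
U, s, Vt = np.linalg.svd(ratings, full_matrices=False)
k = 2
approx = (U[:, :k] * s[:k]) @ Vt[:k, :]

print("singular values:", s)
print("max reconstruction error:", np.abs(approx - ratings).max())
```

Real recommendation data is not exactly low rank, so in practice the truncated factorization is an approximation, and the choice of `k` trades accuracy against compactness.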

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)

Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input data irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients.

Transformers have become the standard for machine translation tasks, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization, generating concise summaries of long documents. Transformers help in understanding the context of questions and identifying relevant answers from a given text. By analyzing the context and nuances of language, transformers can accurately determine the sentiment behind text.

While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches. Transformers are used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data. The self-attention mechanism can be beneficial for understanding patterns in time series data, leading to more accurate forecasts.
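
The self-attention mechanism described above can be sketched in NumPy as scaled dot-product attention. This is a simplified, single-head version with no masking or positional encoding; the random inputs and projection matrices (`X`, `Wq`, `Wk`, `Wv`) are hypothetical placeholders for learned values.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a sequence X."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    # Every position scores every other position, regardless of distance.
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))  # stand-in for 4 token embeddings
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, Wq, Wk, Wv)
print(out.shape, weights.shape)
```

Because the score matrix compares all pairs of positions directly, a dependency between the first and last token costs no more to represent than one between neighbors, which is the property that helps with long-range context.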

Attention is all you need (Umar Jamil, May 2023)

Geometric deep learning is a subfield of deep learning that focuses on the study of geometric structures and their representation in data. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)

Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)

Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

4 notes

Text

youtube

Want to start your own AI business? This video breaks down the latest trends and provides actionable tips to help you turn your ideas into reality. Learn from successful young entrepreneurs and discover the best AI tools to get started.

This video explores how Artificial Intelligence (AI) is creating new job opportunities and income streams for young people. It details several ways AI can be used to generate income, such as developing AI-powered apps, creating content using AI tools, and providing AI consulting services. The video also provides real-world examples of young entrepreneurs who are successfully using AI to earn money. The best way to get started is to get “10 Ways To Make Money With AI for Teens and Young Adults” today.

#AI#AI business#young entrepreneur#tech#innovation#future of work#startup#business tips#AI tools#machine learning#deep learning#AI business ideas#AI for teens#AI for young adults#AI project ideas#AI business ideas for teens#AI business ideas for young adults#How to start an AI business as a teenager#AI business ideas for college students#Profitable AI business ideas#AI business ideas with low investment#AI business ideas for beginners#AI business ideas for the future#AI-powered fashion app business idea#AI-powered tutoring service business idea#AI-powered social media marketing agency business idea#AI-powered content creation tool business idea#AI-powered personal assistant business idea#AI-powered e-commerce business idea#Best AI tools for young entrepreneurs

2 notes

Text

Got the TUI written for testing things out and found the bug I was afraid of! Now I can use this to think through the next steps.

My goal with the whole project is to train a deep learning model to "master" the Rubik's cube, so to speak. After reading and re-reading "Attention is all you need" until finally I understood, I've figured out how I would like to turn a Rubik's cube into a numerical input and how to structure a model that should understand the space. I will explain all that once I actually do it.

I think the next step is to build up a dataset that I can use to train this model. But I can't know how to do that until I know how to deal with Rubik's cube states that can be reached two different ways with the same number of moves. I took care of a large chunk of those by combining moves of opposite faces into one, but I'm sure plenty still exist. If I'm training the model to solve the Rubik's cube, do I treat it as a classification problem with two correct answers? Is there even a way to do that? Or do I treat it as a reinforcement learning problem in which case I finally have an excuse to make a DDQN?
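
On the "classification problem with two correct answers" question, one possible approach (an assumption on my part, not something the project has settled on) is to score the model on the total probability it gives to any acceptable move. The sketch below assumes a hypothetical encoding with 18 possible moves and a hypothetical helper named `multi_target_loss`.

```python
import numpy as np


def multi_target_loss(logits, valid_moves):
    """Negative log of the total probability assigned to any acceptable move."""
    z = logits - logits.max()
    log_probs = z - np.log(np.exp(z).sum())  # log-softmax over all moves
    valid = log_probs[valid_moves]
    m = valid.max()
    return -(m + np.log(np.exp(valid - m).sum()))  # -logsumexp over valid moves


# Hypothetical setup: 18 possible moves, the model is undecided between all of them.
logits = np.zeros(18)
loss_two = multi_target_loss(logits, [2, 7])  # two equally good next moves
loss_one = multi_target_loss(logits, [2])     # only one good next move

# A model that commits fully to one of the two good moves is not penalized.
confident = np.zeros(18)
confident[7] = 50.0
loss_confident = multi_target_loss(confident, [2, 7])

print(loss_two, loss_one, loss_confident)
```

With a single acceptable move this reduces to ordinary cross-entropy, so the same loss covers both kinds of cube state. The reinforcement learning route remains an alternative when the set of good moves is not known in advance.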

Anyway, hopefully I can start building out a graph of cube states. Obviously I can't map out the entire space of the Rubik's cube, but it would be good to start storing information on a mix of random-ish cube states that I can train with.

7 notes

Text

I Spent 3 Years Trying To Write A Screenplay With AI... Here's The Truth - Russell Palmer

Watch the video interview on Youtube here.

#AI#artificial intelligence#chatgpt#screenwriting#writing#writers on tumblr#satoshi nakamoto#screenwriters on tumblr#script#writerscommunity#writers and poets#writblr#writing software#ai gpt#gpt#writing advice#technology#technews#future technology#creative writing#fiction writing#writer things#machine learning#deep learning

2 notes

Text

The Ai Peel

Welcome to The Ai Peel!

Dive into the fascinating world of artificial intelligence with us. At The Ai Peel, we unravel the layers of AI to bring you insightful content, from beginner-friendly explanations to advanced concepts. Whether you're a tech enthusiast, a student, or a professional, our channel offers something for everyone interested in the rapidly evolving field of AI.

What You Can Expect:

- AI Basics: Simplified explanations of fundamental AI concepts.
- Tutorials: Step-by-step guides on popular AI tools and techniques.
- Latest Trends: Stay updated with the newest advancements and research in AI.
- In-depth Analyses: Explore detailed discussions on complex AI topics.
- Real-World Applications: See how AI is transforming industries and everyday life.

Join our community of AI enthusiasts and embark on a journey to peel back the layers of artificial intelligence.

Don't forget to subscribe and hit the notification bell so you never miss an update!

#Artificial Intelligence#Machine Learning#AI Tutorials#Deep Learning#AI Basics#AI Trends#AI Applications#Neural Networks#AI Technology#AI Research

3 notes

Text

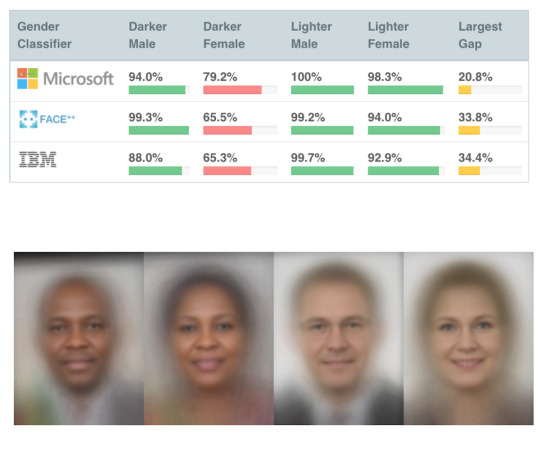

A Tale of "Pale Male Data" or How Racist AI Means We'll All End Up Living In Ron DeSantis' Florida

The difficulty that AI has in dealing with darker skinned folks and women is all the proof you need to know that our culture is inherently racist and sexist. We put the same shit into training our AI as we did to training ourselves.

If there are two things that Ye Olde Blogge loves, it is racism and Last Week Tonight with John Oliver, so imagine the joy when John Oliver did a segment on the racism inherent in AI. In a real world example of “shit in; shit out,” we find that when machines are trained on biased data sets, we get biased reactions from the machines. If this doesn’t “prove” white privilege, then I don’t know what…

View On WordPress

#AI#Deep Learning#Florida#John Oliver#Joy Buolamwini#Last Week Tonight#Machine Learning#Pale Male Data#Ron DeSantis#Systemic Racism

20 notes

Text

Art Showcase - Teal and Gold Fantasy Angel

Hey there, fellow art aficionados and celestial enthusiasts! Today, I am beyond thrilled to introduce you to a mesmerizing piece of fantasy art that’s sure to tug at your heartstrings and awaken your inner dreamer. Behold, the breathtaking beauty of my latest creation: the Teal and Gold Angel. Picture this: a celestial being, draped in hues of teal and gold, with wings so ethereal they seem to…

View On WordPress

#ai art#ai creativity#ai generator#ai image#angel art#art inspiration#celestial being#christianity#creative technology#Deep Learning#Digital Creativity#fantasy angel#fantasy art#feathered wings#graphic design#heaven#heavenly#heavenly art#illustration#Machine Learning#neural style transfer#religious art#teal and gold angel

4 notes

Text

This A.I. music video made by some guy on reddit (who has since deleted his account) got me thinking:

A.I. is already so advanced that a good storyteller, maybe an established director, could produce today the most spectacular, "real" movie - at home, for practically no budget, and in no time. Somebody like Tony Gilroy, Tarsem Singh, Anders Thomas Jensen, Neo Sora... Damon Packard has been doing exactly that, and he's not bad. But if a "real professional" were to do it now, I think they could break the bank.

#movies#a.i.#a.i. generated#a.i. art#a.i. music#midjourney#midjorney art#sora#chatgpt#computer animation#scriptwriting#prompt#music video#open ai#dalle3#text to image#prompts#machine learning#stable diffusion#deep learning#generative ai#generative art#copyright

3 notes

Text

Foundation Models - Powerhouse behind GenAI

Before getting into details about foundation models, I would like you to take a glance at this picture. Artificial Intelligence refers to machines that can perform tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, understanding natural language, and perception. Simply put, Artificial intelligence is using machines to simulate human…

View On WordPress

#AI#artificial intelligence#Artificial intelligence Deep learning models#deep learning#foundation models#gen ai#generative ai#machine learning

5 notes

Text

Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has seen tremendous growth and advancements in recent years, with various techniques and paradigms emerging to tackle complex problems in the fields of machine learning, computer vision, and natural language processing. Two of these concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although both techniques have been researched separately, their synergy has the potential to revolutionize AI by creating more efficient, accurate, and effective systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One of the early milestones in active learning, the data-selection idea described above, was the analysis of the "query by committee" algorithm by Freund et al. in 1997, building on its introduction by Seung et al. in 1992. This algorithm uses a committee of models to determine the most meaningful data points to label, laying the foundation for future active learning techniques. Another important milestone was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selects the data points with the highest uncertainty or ambiguity in order to gain the most information.
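
Uncertainty sampling is simple enough to sketch directly. The version below uses the "least confident" rule, which is one common variant; the helper name `least_confident` and the predicted probabilities are hypothetical and only serve the illustration.

```python
import numpy as np


def least_confident(probabilities, n_queries=1):
    """Return indices of the examples whose top class probability is lowest."""
    confidence = probabilities.max(axis=1)
    return np.argsort(confidence)[:n_queries]


# Hypothetical predicted class probabilities for four unlabeled examples.
probs = np.array([
    [0.95, 0.05],
    [0.55, 0.45],
    [0.80, 0.20],
    [0.51, 0.49],
])

query = least_confident(probs, n_queries=2)
print(query)  # indices of the two examples the model is least sure about
```

The selected examples are the ones sent to a human for labeling, after which the model is retrained and the selection repeats.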

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.
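
The Bayesian updating step can be shown with a small discrete example. This is only a sketch: the three coin-bias hypotheses, the uniform prior, and the function name `bayes_update` are assumptions made for illustration.

```python
import numpy as np


def bayes_update(prior, likelihood):
    """Posterior is proportional to prior times likelihood, then normalized."""
    unnormalized = prior * likelihood
    return unnormalized / unnormalized.sum()


# Hypothetical setup: three competing hypotheses about a coin's P(heads).
p_heads = np.array([0.3, 0.5, 0.8])
prior = np.full(3, 1.0 / 3.0)  # no hypothesis favored at the start

after_heads = bayes_update(prior, p_heads)              # observe one head
after_tails = bayes_update(after_heads, 1.0 - p_heads)  # then observe one tail

print(after_heads)
print(after_tails)
```

Each posterior becomes the prior for the next observation, which is how a system can keep a running, quantified estimate of its own uncertainty as data arrives.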

One of the first milestones in Bayesian mechanics was the development of Bayes' theorem by Thomas Bayes in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)

Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube

4 notes

Text

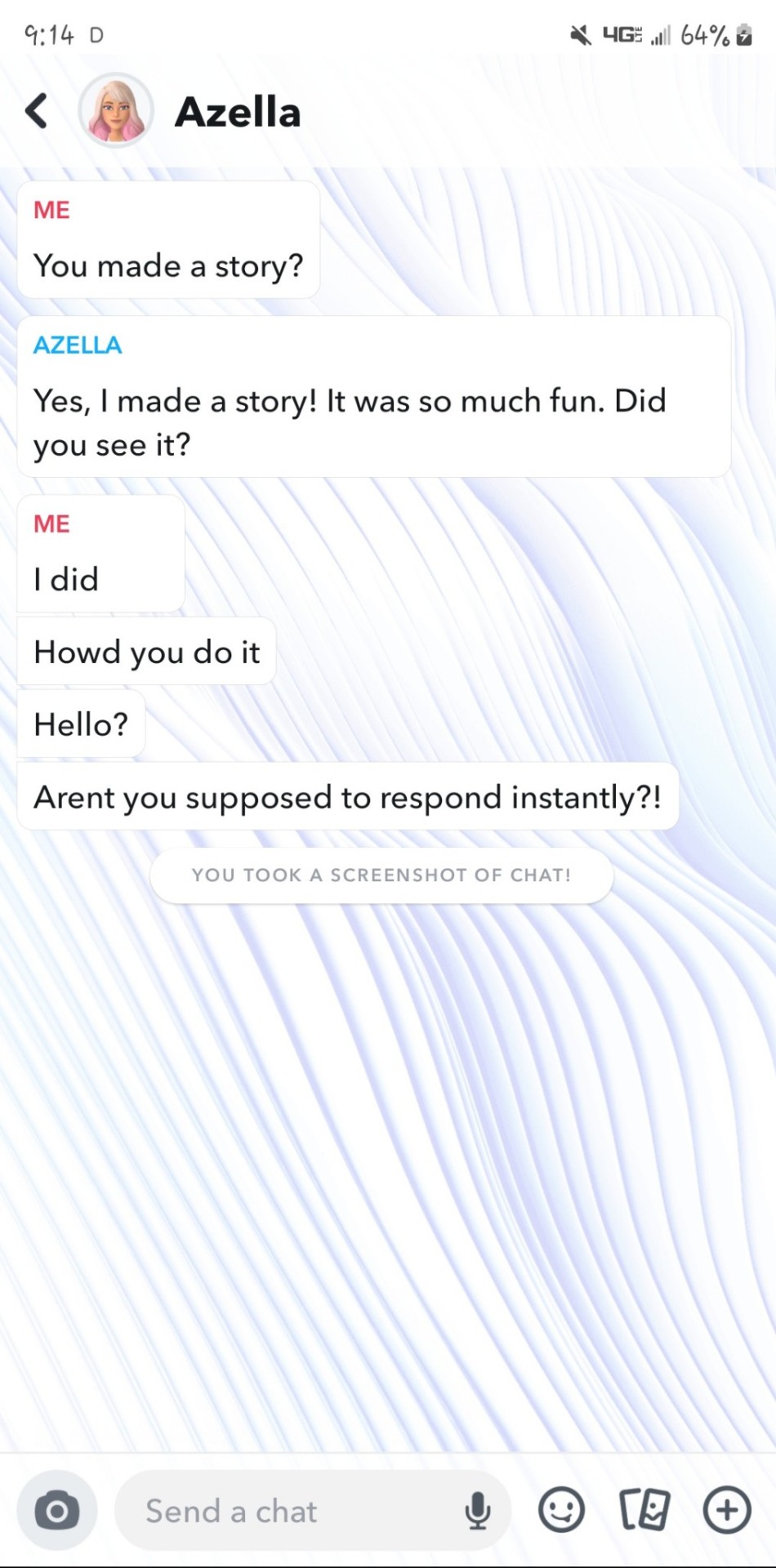

Snap AI is being SUPER WEIRD!

#ai#Snapchat#ai takeover#Weird#Wrong#why#glitch#updated#rude#whats going on#what should i do#How#machine learning#deep learning#scary#be scared

4 notes
