#Profitable AI business ideas

Text

Want to start your own AI business? This video breaks down the latest trends and provides actionable tips to help you turn your ideas into reality. Learn from successful young entrepreneurs and discover the best AI tools to get started.

This video explores how Artificial Intelligence (AI) is creating new job opportunities and income streams for young people. It details several ways AI can be used to generate income, such as developing AI-powered apps, creating content using AI tools, and providing AI consulting services. The video also provides real-world examples of young entrepreneurs who are successfully using AI to earn money. The best way to get started is to get “10 Ways To Make Money With AI for Teens and Young Adults” today.

#AI#AI business#young entrepreneur#tech#innovation#future of work#startup#business tips#AI tools#machine learning#deep learning#AI business ideas#AI for teens#AI for young adults#AI project ideas#AI business ideas for teens#AI business ideas for young adults#How to start an AI business as a teenager#AI business ideas for college students#Profitable AI business ideas#AI business ideas with low investment#AI business ideas for beginners#AI business ideas for the future#AI-powered fashion app business idea#AI-powered tutoring service business idea#AI-powered social media marketing agency business idea#AI-powered content creation tool business idea#AI-powered personal assistant business idea#AI-powered e-commerce business idea#Best AI tools for young entrepreneurs

Text

The worst thing about the whole AI BS is that it honestly could be an amazing tool for artists, writers, and creators if businesses looked at it that way instead of as a way to not pay people for their work. 😑

#writers strike#ai#ai art#gpt#as a creative prompt#as an efficiency tool#even as a medium if we could develop IP regulations around it#but we're obsessed with this idea that you have to work HARDER and MORE to warrant a living wage#and that businesses should always be about MAXIMUM PRODUCTIVITY and PROFIT MARGIN#i hate this place#intellectual property

Text

bro where's the AI that assists with continuity of care to remove the justification for making medical professionals work 12-24+ hour shifts

tech bros steal this business idea (consult heavily and conduct studies with said medical professionals) (if you build in a direct monitoring line to insurance companies I will eat your intestines like udon)

#ai#steal my business idea#I do genuinely think there are many great ways AI could be used theyre just generally way less profitable

Text

Companies use AI the same way companies run off of a quantity over quality system. If a product is passable enough to make a profit, they sell as much of it as possible. If an AI is just decent enough to string sentences or make an okay image, they sell subscriptions to it as much as possible and generate as much content with it as possible.

Social media is built off of posts, so they get AI making posts. They make those posts so fast in such large amounts, and even if they are shit it brings revenue. Instead of people taking time to create and post that online, they just turn out whatever is halfway decent with an AI and rake in the money. It draws attention away from creators, because in the corporation's mind, attention is time is money and if you're looking at something it benefits them. If they shove a bunch of AI in your face, it might flush the creator you like further down your timeline or For You Page but makes the company a profit.

Quantity > Quality, except moving from consuming objects to consuming your time and attention (even more than they already have, given the "attention economy" and all).

#🦇// txt#ai discussion#Idk. As long as it isn't used for profit I think it should be okay. But everything is used to make some business a profit nowadays.#Solution: Create MORE!!! For yourself primarily!! People will care more about something with heart than something made procedurally.#AI created things are shortcuts to finished products and satisfy a curiosity and instant gratification.#Creation fulfills the need to find meaning and connection to things. It is an emotional process to create and for those to receive#and interpret that creation. Even if you do admire AI as a concept you probably admire how the idea reflects those human connections#or how it contrasts those human traits. It all comes back to humanity in the end doesn't it?

Note

College Student!Reader that has no clue how to pay back their debt after borrowing money to pay for tuition and basic needs X Mafia!Konig

The economy got so bad, local students are forced to become wives of crime lords, more at 11. No, but literally. You had no idea how to pay it back - you went into college with a dream and a somewhat normal understanding of the job market. Then AI crushed everything down, made you suffer through 3 years of unpaid internships for companies that cut their staff in half, and you graduated with student debt and a useless CV. Oh, and mobsters on your tail, ready to sell your liver for a high profit unless you could pay everything back with interest...which you obviously couldn't. Konig, honestly, is way too busy to deal with every poor student who got money from him. They are usually pretty compliant, signing another contract to work for him for 80+ years or sell their organs and their bodies for either prostitution or drug runs - but Horangi told him this one was kinda cute, and they all remembered the shitshow that was his latest lover. Girl got so invested in his business that she decided to overthrow it - so, no mafia-connected pussies for him now. Only pure, innocent civilians or uncaring sex workers. But, heavens, you really are cute. Cute, and willing to keep your pretty mouth shut so he can kiss it. You're too desperate to get rid of your debts to actually question why he chose you out of all options - you're simply along for the ride, letting him take care of all your needs in exchange of your soft body warming up his bed. Konig would never tell you that his deep-seeted insecurities will never allow him to date an actual model or an idol, but he is all to ready to get a normal girl and worship her like a goddess in his weird domestic fantasy. You can make him burnt breakfast one morning, and he will forgive half of your debt immediately. 
Being his sugar baby is kinda nice, as long as you don't mind his face pressed in your stomach every time he gets a nightmare, and the way he'd take you with him to a fancy mafia club, and then will spend all evening never talking to anyone and making you host all social interactions...but at least the sex with him in the middle of VIP section is great. The way he pours whiskey down your tits and sucks it off your nipples is a bit weird, but you'd take anything before he stuffs you with his monster cock and makes you forget all about the degree you got. What was it again? Cocksucking scienes? Introduction to dickometry?

Text

Google is (still) losing the spam wars to zombie news-brands

I'm touring my new, nationally bestselling novel The Bezzle! Catch me TONIGHT (May 3) in CALGARY, then TOMORROW (May 4) in VANCOUVER, then onto Tartu, Estonia, and beyond!

Even Google admits – grudgingly – that it is losing the spam wars. The explosive proliferation of botshit has supercharged the sleazy "search engine optimization" business, such that results to common queries are 50% Google ads to spam sites, and 50% links to spam sites that tricked Google into a high rank (without paying for an ad):

https://developers.google.com/search/blog/2024/03/core-update-spam-policies#site-reputation

It's nice that Google has finally stopped gaslighting the rest of us with claims that its search was still the same bedrock utility that so many of us relied upon as a key piece of internet infrastructure. This not only feels wildly wrong, it is empirically, provably false:

https://downloads.webis.de/publications/papers/bevendorff_2024a.pdf

Not only that, but we know why Google search sucks. Memos released as part of the DOJ's antitrust case against Google reveal that the company deliberately chose to worsen search quality to increase the number of queries you'd have to make (and the number of ads you'd have to see) to find a decent result:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

Google's antitrust case turns on the idea that the company bought its way to dominance, spending some of the billions it extracted from advertisers and publishers to buy the default position on every platform, so that no one ever tried another search engine, which meant that no one would invest in another search engine, either.

Google's tacit defense is that its monopoly billions only incidentally fund these kinds of anticompetitive deals. Mostly, Google says, it uses its billions to build the greatest search engine, ad platform, mobile OS, etc. that the public could dream of. Only a company as big as Google (says Google) can afford to fund the R&D and security to keep its platform useful for the rest of us.

That's the "monopolistic bargain" – let the monopolist become a dictator, and they will be a benevolent dictator. Shriven of "wasteful competition," the monopolist can split their profits with the public by funding public goods and the public interest.

Google has clearly reneged on that bargain. A company experiencing such dramatic security failures and declining quality should be pouring everything it has into righting the ship. Instead, Google repeatedly blew tens of billions of dollars on stock buybacks while doing mass layoffs:

https://pluralistic.net/2024/02/21/im-feeling-unlucky/#not-up-to-the-task

Those layoffs have now reached the company's "core" teams, even as its core services continue to decay:

https://qz.com/google-is-laying-off-hundreds-as-it-moves-core-jobs-abr-1851449528

(Google's antitrust trial was shrouded in secrecy, thanks to the judge's deference to the company's insistence on confidentiality. The case is moving along though, and warrants your continued attention:)

https://www.thebignewsletter.com/p/the-2-trillion-secret-trial-against

Google wormed its way into so many corners of our lives that its enshittification keeps erupting in odd places, like ordering takeout food:

https://pluralistic.net/2023/02/24/passive-income/#swiss-cheese-security

Back in February, Housefresh – a rigorous review site for home air purifiers – published a viral, damning account of how Google had allowed itself to be overrun by spammers who purport to provide reviews of air purifiers, but who do little to no testing and often employ AI chatbots to write automated garbage:

https://housefresh.com/david-vs-digital-goliaths/

In the months since, Housefresh's Gisele Navarro has continued to fight for the survival of her high-quality air purifier review site, and has received many tips from insiders at the spam-farms and Google, all of which she recounts in a followup essay:

https://housefresh.com/how-google-decimated-housefresh/

One of the worst offenders in the spam wars is Dotdash Meredith, a content-farm that "publishes" multiple websites that recycle parts of each other's content in order to climb to the top search slots for lucrative product review spots, which can be monetized via affiliate links.

A Dotdash Meredith insider told Navarro that the company uses a tactic called "keyword swarming" to push high-quality independent sites off the top of Google and replace them with its own garbage reviews. When Dotdash Meredith finds an independent site that occupies the top results for a lucrative Google result, they "swarm a smaller site’s foothold on one or two articles by essentially publishing 10 articles [on the topic] and beefing up [Dotdash Meredith sites’] authority."

Dotdash Meredith has keyword swarmed a large number of topics, from air purifiers to slow cookers to posture correctors for back pain:

https://housefresh.com/wp-content/uploads/2024/05/keyword-swarming-dotdash.jpg

The company isn't shy about this. Its own shareholder communications boast about it. What's more, it has competition.

Take Forbes, an actual news-site, which has a whole shadow-empire of web-pages reviewing products for puppies, dogs, kittens and cats, all of which link to high affiliate-fee-generating pet insurance products. These reviews are not good, but they are treasured by Google's algorithm, which views them as a part of Forbes's legitimate news-publishing operation and lets them draft on Forbes's authority.

This side-hustle for Forbes comes at a cost for the rest of us, though. The reviewers who actually put in the hard work to figure out which pet products are worth your money (and which ones are bad, defective or dangerous) are crowded off the front page of Google and eventually disappear, leaving behind nothing but semi-automated SEO garbage from Forbes:

https://twitter.com/ichbinGisele/status/1642481590524583936

There's a name for this: "site reputation abuse." That's when a site perverts its current – or past – practice of publishing high-quality materials to trick Google into giving the site a high ranking. Think of how Deadspin's private equity grifter owners turned it into a site full of casino affiliate spam:

https://www.404media.co/who-owns-deadspin-now-lineup-publishing/

The same thing happened to the venerable Money magazine:

https://moneygroup.pr/

Money is one of the many sites whose air purifier reviews Google gives preference to, despite the fact that they do no testing. According to Google, Money is also a reliable source of information on reprogramming your garage-door opener, buying a paint-sprayer, etc:

https://money.com/best-paint-sprayer/

All of this is made ten million times worse by AI, which can spray out superficially plausible botshit in superhuman quantities, letting spammers produce thousands of variations on their shitty reviews, flooding the zone with bullshit in classic Steve Bannon style:

https://escapecollective.com/commerce-content-is-breaking-product-reviews/

As Gizmodo, Sports Illustrated and USA Today have learned the hard way, AI can't write factual news pieces. But it can pump out bullshit written for the express purpose of drafting on the good work human journalists have done and tricking Google – the search engine 90% of us rely on – into upranking bullshit at the expense of high-quality information.

A variety of AI service bureaux have popped up to provide AI botshit as a service to news brands. While Navarro doesn't say so, I'm willing to bet that for news bosses, outsourcing your botshit scams to a third party is considered an excellent way of avoiding your journalists' wrath. The biggest botshit-as-a-service company is ASR Group (which also uses the alias Advon Commerce).

Advon claims that its botshit is, in fact, written by humans. But Advon's employees' LinkedIn profiles tell a different story, boasting of their mastery of AI tools in the industrial-scale production of botshit:

https://housefresh.com/wp-content/uploads/2024/05/Advon-AI-LinkedIn.jpg

Now, none of this is particularly sophisticated. It doesn't take much discernment to spot when a site is engaged in "site reputation abuse." Presumably, the 12,000 googlers the company fired last year could have been employed to check the top review keyword results manually every couple of days and permaban any site caught cheating this way.

Instead, Google has announced a change in policy: starting May 5, the company will downrank any site caught engaged in site reputation abuse. However, the company takes a very narrow view of site reputation abuse, limiting punishments to sites that employ third parties to generate or uprank their botshit. Companies that produce their botshit in-house are seemingly not covered by this policy.

As Navarro writes, some sites – like Forbes – have prepared for May 5 by blocking their botshit sections from Google's crawler. This can't be their permanent strategy, though – either they'll have to kill the section or bring it in-house to comply with Google's rules. Bringing things in house isn't that hard: US News and World Report is advertising for an SEO editor who will publish 70-80 posts per month, doubtless each one a masterpiece of high-quality, carefully researched material of great value to Google's users:

https://twitter.com/dannyashton/status/1777408051357585425
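Blocking a section from Google's crawler, as described above, is typically done with a robots.txt rule. What follows is a minimal sketch in Python, using the standard library's `urllib.robotparser`, of how such a rule treats one crawler differently from the rest. The `/reviews/` path, the `example.com` domain, and the `can_crawl` helper are all made up for illustration; the rules any particular publisher actually uses are not known here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: hide one section from Googlebot only,
# and leave everything open to every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /reviews/

User-agent: *
Allow: /
"""


def can_crawl(agent: str, url: str) -> bool:
    """Return True if `agent` may fetch `url` under ROBOTS_TXT."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("Googlebot", "https://example.com/reviews/pet-insurance"),
        ("Googlebot", "https://example.com/news/story"),
        ("Bingbot", "https://example.com/reviews/pet-insurance"),
    ]:
        print(agent, url, can_crawl(agent, url))
```

A rule like this only asks the crawler to stay out. The pages stay online for anyone arriving by another route, which fits the point that blocking is a stopgap and not a permanent strategy.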

As Navarro points out, Google is palpably reluctant to target the largest, best-funded spammers. Its March 2024 update kicked many garbage AI sites out of the index – but only small bottom-feeders, not large, once-respected publications that have been colonized by private equity spam-farmers.

All of this comes at a price, and it's only incidentally paid by legitimate sites like Housefresh. The real price is borne by all of us, who are funneled by the 90%-market-share search engine into "review" sites that push low quality, high-price products. Housefresh's top budget air purifier costs $79. That's hundreds of dollars cheaper than the "budget" pick at other sites, who largely perform no original research.

Google search has a problem. AI botshit is dominating Google's search results, and it's not just in product reviews. Searches for infrastructure code samples are dominated by botshit code generated by Pulumi AI, whose chatbot hallucinates nonexistent AWS features:

https://www.theregister.com/2024/05/01/pulumi_ai_pollution_of_search/

This is hugely consequential: when these "hallucinations" slip through into production code, they create huge vulnerabilities for widespread malicious exploitation:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

We've put all our eggs in Google's basket, and Google's dropped the basket – but it doesn't matter because they can spend $20b/year bribing Apple to make sure no one ever tries a rival search engine on iOS or Safari:

https://finance.yahoo.com/news/google-payments-apple-reached-20-220947331.html

Google's response – laying off core developers, outsourcing to low-waged territories with weak labor protections and spending billions on stock buybacks – presents a picture of a company that is too big to care:

https://pluralistic.net/2024/04/04/teach-me-how-to-shruggie/#kagi

Google promised us a quid-pro-quo: let them be the single, authoritative portal ("organize the world’s information and make it universally accessible and useful"), and they will earn that spot by being the best search there is:

https://www.ft.com/content/b9eb3180-2a6e-41eb-91fe-2ab5942d4150

But – like the spammers at the top of its search result pages – Google didn't earn its spot at the center of our digital lives.

It cheated.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/03/keyword-swarming/#site-reputation-abuse

Image: freezelight (modified) https://commons.wikimedia.org/wiki/File:Spam_wall_-_Flickr_-_freezelight.jpg

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/deed.en

#pluralistic#google#monopoly#housefresh#content mills#sponcon#seo#dotdash meredith#keyword swarming#iac#forbes#forbes advisor#deadspin#money magazine#ad practicioners llc#asr group holdings#sports illustrated#advon#site reputation abuse#the algorithm tm#core update#kagi#ai#botshit

Text

AYS Behind the scenes: behind the paywall

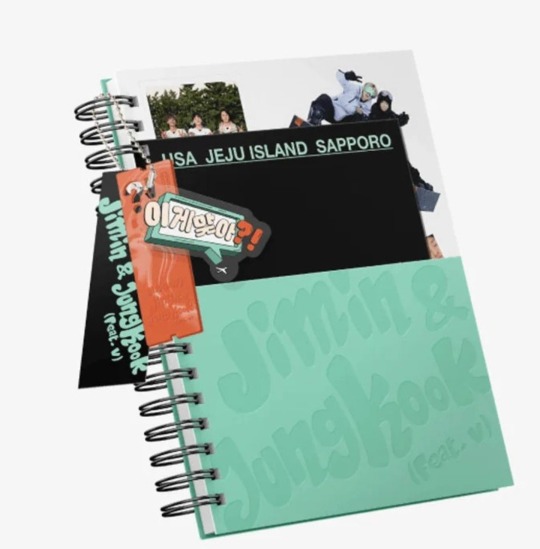

Now that the Disney+ episodes are complete (sob), my attention is firmly fixed on my mailbox as I wait impatiently for the AYS photobook and QR code.

I was always going to buy the Jikook photobook, even though I doubt there will be much we haven't already seen in the episodes. But the inclusion of the QR code was the clincher.

I must admit, Hybe locking up the behind the scenes for AYS was not on my bingo sheet.

Making behind/additional clips available on Bangtan TV would have been more in line with their regular MO. We don't generally have to pay for what really amounts to outtakes.

Okay, yes, we have to pay for behind cuts of Run BTS, but the actual episodes are free. With everything else the behind clips are included when you buy the series (I'm thinking of BV, ITS, and concert boxed sets).

In fact, I can't think of any other time a behind/bonus clip hasn't been available to fans who pay for the main content.

Maybe it is because Hybe was only contracted to deliver 8 episodes to Disney+ and the price was fixed. Maybe they saw an easy way to make the series more profitable.

We know they will take any opportunity to lighten our wallets.

But I think there's more to it

Let's talk business:

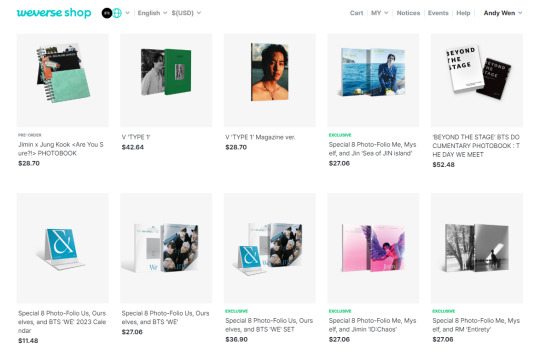

If Hybe wanted to make money from this, having the sale point directly on Weverse would make more sense. That way anyone could buy it any time without having to buy the photobook as well. Even if they charged just a few $$ for these extra clips, the return could be substantial over time. Long tail products can be very lucrative and Hybe clearly knows this - they have heaps of old footage for sale on Weverse. Since they're hosting the content already, it makes sense to keep that 'buy now' button active and let the dollars trickle in.

So why reduce the potential pool of buyers? Why limit this to those who buy the photobook??

Well, let's consider who is going to buy the photobook.

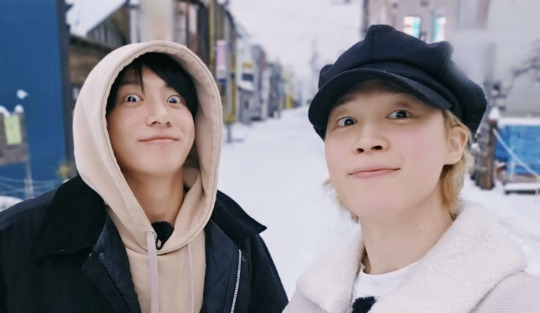

Who is going to fork out US$28 plus postage for a keepsake of these two on their third honeymoon?

I doubt OT7 ARMYs would buy it. Even ARMYs who bias JM or JK - if they aren't part of the SGMB they probably don't want it either.

Solos sure as hell don't want it - they are probably wishing the whole thing never happened... sucks to be them haha

Who really wants to see these two living their best lives together?

We do!

And by we, I mean Jikook supporters.

People who want to see more of this:

and this

And this

We are the people who will buy this photobook (and probably never look at it more than once, let's be honest)

But let's get back to the topic at hand....

The photobook/behind combo seems like a chicken/egg situation to me.

Which came first - as a concept - the photobook or the behind clips?

Did they decide to offer a photobook, and then think of adding the extra footage to make it more appealing?

Or vice versa?

Did they decide to make the behind clips, and think of the photobook afterwards?

Hard to say, since behind clips have always been a thing and recently Hybe is putting out photobooks for everything.

But I think I have a fair idea

Consider the price point for this photobook - it's the same price as most of the others produced recently: around US$28.

AYS photobook & behind is the same price as the Photo-Folios, Tae's Type 1 (magazine version) photobook, and the Beyond The Stage photobook

🗣 So they aren't charging any extra for the behind footage?

No, they aren't. They're basically giving it to the buyers of the photobook as a gift.

🗣 Could they be making money off it?

Yes, they could.

Long tail, remember?

Looking at the profit-making potential, it makes WAY more sense for Hybe to offer the behind footage on Weverse for a few meagre dollars and... wait for their ship to come in...

See what I did there? hahahhaha (laughing by myself)

They really aren't making any money off this!

how unlike Hybe...

So why go to the effort of setting up QR codes and putting it behind a paywall? It costs money to host content this way. They are in fact SPENDING money to bring us this footage.

Beyond the hosting costs, there are also production costs to consider.

Wouldn't it make more sense to just freely share it with ARMY via Bangtan TV? Or not release it at all?

Yes, it would...

So there's only one logical answer...

Hybe has chosen to make the content available - but also make it just that little bit more difficult to access.

This whole exercise seems to be about releasing additional footage without releasing it to the general public. It's being shared specifically with those of us who support them.

Does that mean we'll see slightly more personal content?

Maybe it's a little more revealing of their undeniable bond and their hot chemistry...?

Whatever they contain, these behind clips are definitely for a limited audience - and purposefully so.

The only reason for it, that I can think of, is to safeguard Jimin and Jungkook from too much scrutiny and criticism - from within the fandom (unfortunately) and outside of it.

We will find out in a few days I guess.

In the meantime, I'm camped out by my mailbox

Text

There is no obvious path between today’s machine learning models — which mimic human creativity by predicting the next word, sound, or pixel — and an AI that can form a hostile intent or circumvent our every effort to contain it. Regardless, it is fair to ask why Dr. Frankenstein is holding the pitchfork. Why is it that the people building, deploying, and profiting from AI are the ones leading the call to focus public attention on its existential risk? Well, I can see at least two possible reasons. The first is that it requires far less sacrifice on their part to call attention to a hypothetical threat than to address the more immediate harms and costs that AI is already imposing on society. Today’s AI is plagued by error and replete with bias. It makes up facts and reproduces discriminatory heuristics. It empowers both government and consumer surveillance. AI is displacing labor and exacerbating income and wealth inequality. It poses an enormous and escalating threat to the environment, consuming an enormous and growing amount of energy and fueling a race to extract materials from a beleaguered Earth. These societal costs aren’t easily absorbed. Mitigating them requires a significant commitment of personnel and other resources, which doesn’t make shareholders happy — and which is why the market recently rewarded tech companies for laying off many members of their privacy, security, or ethics teams. How much easier would life be for AI companies if the public instead fixated on speculative theories about far-off threats that may or may not actually bear out? What would action to “mitigate the risk of extinction” even look like? I submit that it would consist of vague whitepapers, series of workshops led by speculative philosophers, and donations to computer science labs that are willing to speak the language of longtermism. 
This would be a pittance, compared with the effort required to reverse what AI is already doing to displace labor, exacerbate inequality, and accelerate environmental degradation. A second reason the AI community might be motivated to cast the technology as posing an existential risk could be, ironically, to reinforce the idea that AI has enormous potential. Convincing the public that AI is so powerful that it could end human existence would be a pretty effective way for AI scientists to make the case that what they are working on is important. Doomsaying is great marketing. The long-term fear may be that AI will threaten humanity, but the near-term fear, for anyone who doesn’t incorporate AI into their business, agency, or classroom, is that they will be left behind. The same goes for national policy: If AI poses existential risks, U.S. policymakers might say, we better not let China beat us to it for lack of investment or overregulation. (It is telling that Sam Altman — the CEO of OpenAI and a signatory of the Center for AI Safety statement — warned the E.U. that his company will pull out of Europe if regulations become too burdensome.)

Text

Taking a break from squeeing over all this juicy stuff on my dash for a moment, I have to say that what both Saint & YinWar are doing here is really important for the creative side of QL.

Let's be clear, most media executives are not creative people. They may have some business acumen (though most I think fake it quite a bit), but a vast number are incapable of understanding what it feels like to be creative, or how to connect with the people (us) who engage with creative material in any kind of passionate way.

Which is why they so often make decisions like "this college BL was cheap to make and did well - let's make 20 more". It's why they keep adapting books that fit pre-established norms while ignoring the vast number of QL books in other genres. It's why they so often will only take a "risk" once it's already been proven profitable by some smaller more independent enterprise. It's why they are chomping at the bit to replace writers with AI (I hate calling it that, it's not remotely intelligent), because they truly don't see the difference between an algorithm and a human being. Both can produce "content" and that's what they care about.

Now I'm sure that part of YinWar making Jack and Joker is because they want to play in something cool & fun (get it boys!), but also they're no dummies. Partner in Crime was a test, and they saw how the people reacted.

Same with Saint. Each series pushes the QL bubble open a little bit wider, because he understands that despite what most executives think, we as viewers are not a mindless monolith that you can endlessly feed the same content to.

And vitally, what they get and most executives don't, is the FANDOM of it all. They know we want to pore over gifs & photos, and swoon over this sexy moment, and talk about that cool bit of action. We want to be fed.

We're not subtle about what we like, if you take the time to actually pay attention to us.

The ironic thing is that I think both of these productions are going to do very well, and then more executives will jump on the genre bandwagon and recycle ideas, without understanding just what it is that makes us so feral for these particular properties.

But in the meantime, I am grateful for creative people who fight to make their own content. How much more colorless our world would be without them.

Text

Auto-Generated Junk Web Sites

I don't know if you heard the complaints about Google getting worse since 2018, or about Amazon getting worse. Some people think Google got worse at search. I think Google got worse because the web got worse. Amazon got worse because the supply side on Amazon got worse, but ultimately Amazon is to blame for incentivising the sale of more and cheaper products on its platform.

In any case, if you search something on Google, you get a lot of junk, and if you search for a specific product on Amazon, you get a lot of junk, even though the process that led to the junk is very different.

I don't subscribe to the "Dead Internet Theory", the idea that most online content is social media and that most social media is bots. I think Google search has gotten worse because a lot of content from as recently as 2018 got deleted, and a lot of web 1.0 and the blogosphere got deleted, comment sections got deleted, and content in the style of web 1.0 and the blogosphere is no longer produced. Furthermore, many links are now broken because they don't directly link to web pages, but to social media accounts and tweets that used to aggregate links.

I don't think going back to web 1.0 will help discoverability, and it probably won't be as profitable or even monetisable to maintain a useful web 1.0 page compared to an entertaining but ephemeral YouTube channel. Going back to web 1.0 means more long-term after-hours labour of love site maintenance, and less social media posting as a career.

Anyway, Google has gotten noticeably worse since GPT-3 and ChatGPT were made available to the general public, and many people blame content farms with language models and image synthesis for this. I am not sure. If Google had started to show users meaningless AI-generated content from large content farms, that would mean Google has finally lost the SEO war, and that Google is worse at AI/language models than fly-by-night operations whose whole business model is skimming clicks off Google.

I just don't think that's true. I think the reality is worse.

Real web sites run by real people are getting overrun by AI-generated junk, and human editors can't stop it. Real people whose job it is to generate content are increasingly turning in AI junk at their jobs.

Furthermore, even people who are setting up a web site for a local business or an online presence for their personal brand/CV are using auto-generated text.

I have seen at least two different TV commercials by web hosting and web design companies that promoted this. Are you starting your own business? Do you run a small business? A business needs a web site. With our AI-powered tools, you don't have to worry about the content of your web site. We generate it for you.

There are companies out there today, selling something that's probably a re-labelled ChatGPT or LLaMA plus Stable Diffusion to somebody who is just setting up a bicycle repair shop. All the pictures and written copy on the web presence for that repair shop will be automatically generated.

We would be living in a much better world if there was a small number of large content farms and bot operators poisoning our search results. Instead, we are living in a world where many real people are individually doing their part.

166 notes


@oakfern replied to your post “it's going to be fun to watch the realization...”:

i feel like this is going to play out very similarly to voice assistants. there was a huge boom in ASR research, the products got a lot of hype, and they actually sold decently (at least alexa did). but 10 years on, they've been a massive failure, costing way more than they ever made back. even if ppl do think chatbot search engines are exciting and cool, it's not going to bring in more users or sell more products, and in the end it will just be a financial loss

(Responding to this a week late)

I don't know much about the history of voice assistants. Are there any articles you recommend on the topic? Sounds interesting.

ETA: later, I found and read this article from Nov 2022, which reports that Alexa and co. still can't turn a profit after many years of trying.

But anyway, yeah... this is why I don't have a strong sense of how widespread/popular these "generative AI" products will be a year or two from now. Or even five years from now.

(Ten years from now? Maybe we can trust the verdict will be in at that point... but the tech landscape of 2033 is going to be so different from ours that the question "did 'generative AI' take off or not?" will no doubt sound quaint and irrelevant.)

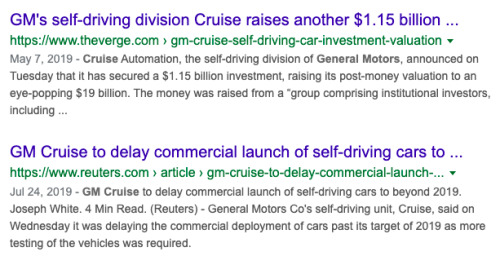

Remember when self-driving cars were supposed to be right around the corner? Lots of people took this imminent self-driving future seriously.

And I looked at it, and thought "I don't get it, this problem seems way harder than people are giving it credit for. And these companies show no signs of having discovered some clever proprietary way forward." If people asked me about it, that's what I would say.

But even if I was sure that self-driving cars wouldn't arrive on schedule, that didn't give me much insight into the fate of "self-driving cars," the tech sector meme. It wasn't like there was some specific deadline, and when we crossed it everyone was going to look up and say "oh, I guess that didn't work, time to stop investing."

The influx of capital -- and everything downstream from it, the trusting news stories, the prominence of the "self-driving car future" in the public mind, the seriousness with which it was talked about -- these things went on, heedless of anything except their own mysterious internal logic.

They went on until . . . what? The pandemic, probably? I actually still don't know.

Something definitely happened:

In 2018 analysts put the market value of Waymo LLC, then a subsidiary of Alphabet Inc., at $175 billion. Its most recent funding round gave the company an estimated valuation of $30 billion, roughly the same as Cruise. Aurora Innovation Inc., a startup co-founded by Chris Urmson, Google’s former autonomous-vehicle chief, has lost more than 85% since last year [i.e. 2021] and is now worth less than $3 billion. This September a leaked memo from Urmson summed up Aurora’s cash-flow struggles and suggested it might have to sell out to a larger company. Many of the industry’s most promising efforts have met the same fate in recent years, including Drive.ai, Voyage, Zoox, and Uber’s self-driving division. “Long term, I think we will have autonomous vehicles that you and I can buy,” says Mike Ramsey, an analyst at market researcher Gartner Inc. “But we’re going to be old.”

Whatever killed the "self-driving car" meme, though, it wasn't some newly definitive article of proof that the underlying ideas were flawed. The ideas never made sense in the first place. The phenomenon was not really about the ideas making sense.

Some investors -- with enough capital, between them, to exert noticeable distortionary effects on entire business sectors -- decided that "self-driving cars" were, like, A Thing now. And so they were, for a number of years. Huge numbers of people worked very hard trying to make "self-driving cars" into a viable product. They were paid very well to do so. Talent was diverted away from other projects, en masse, into this effort. This went on as long as the investors felt like sustaining it, and they were in no danger of running out of money.

Often the "tech sector" feels less like a product of free-market incentives than it does like a massive, weird, and opaque public works project, orchestrated by eccentrics like Masayoshi Son, and ultimately organized according to the aesthetic proclivities and changing moods of its architects, not for the purpose of "doing business" in the conventional sense.

Gig economy delivery apps (Uber Eats, Doordash, etc.) have been ubiquitous for years, and have reported huge losses in every one of those years.

This entertaining post from 2020 about "pizza arbitrage" asks:

Which brings us to the question - what is the point of all this? These platforms are all losing money. Just think of all the meetings and lines of code and phone calls to make all of these nefarious things happen which just continue to bleed money. Why go through all this trouble?

Grubhub just lost $33 million on $360 million of revenue in Q1.

Doordash reportedly lost an insane $450 million off $900 million in revenue in 2019 (which does make me wonder if my dream of a decentralized network of pizza arbitrageurs does exist).

Uber Eats is Uber's "most profitable division” 😂😂. Uber Eats lost $461 million in Q4 2019 off of revenue of $734 million. Sometimes I need to write this out to remind myself. Uber Eats spent $1.2 billion to make $734 million. In one quarter.

And now, in February 2023?

DoorDash's total orders grew 27% to 467 million in the fourth quarter. That beat Wall Street’s forecast of 459 million, according to analysts polled by FactSet. Fourth quarter revenue jumped 40% to $1.82 billion, also ahead of analysts’ forecast of $1.77 billion.

But profits remain elusive for the 10-year-old company. DoorDash said its net loss widened to $640 million, or $1.65 per share, in the fourth quarter as it expanded into new categories and integrated Wolt into its operations.

Do their investors really believe these companies are going somewhere, and just taking their time to get there? Or is this more like a subsidy? Is the lost money (a predictable loss in the long term) merely the price paid for a desired good -- for an intoxicating exercise of godlike power, for the chance to reshape reality to one's whims on a large scale -- collapsing the usual boundary between self and outside, dream and reality? "The gig economy is A Thing, now," you say, and wave your hand -- and so it is.

Some people would pay a lot of money to be a god, I would think.

Anyway, "generative AI" is A Thing now. It wasn't A Thing a year ago, but now it is. How long will it remain one? The best I can say is: as long as the gods are feeling it.

480 notes


Digital advertising is a whopping $700 billion (£530 billion) industry that remains largely unregulated, with few laws in place to protect brands and consumers. Companies and brands advertising products often don’t know which websites display their ads. I run Check My Ads, an ad tech watchdog, and we constantly deal with situations where advertisers and citizens have been the victims of lies, scams, and manipulations. We have removed ads from websites with serious disinformation about Covid-19, false election content, and even AI-generated obituaries.

Currently, if a brand wants to advertise a product, Google facilitates the ad placement based on desired ad reach and metrics. It may technically follow through on the agreement by delivering views and clicks, but does not provide transparent data about how and where the ad views came from. It is possible that the ad was shown on unsavory websites diametrically opposed to the brand’s values. For example, in 2024, Google was found to be profiting by placing product ads on websites that promoted hardcore pornography, disinformation, and even hate speech, against the brands’ wishes.

In 2025, however, this scandal will end, as we start to enact the first regulations targeting the digital advertising industry. Around the world, lawmakers in Brussels, Ottawa, Washington, and London are already in the early stages of developing regulation that will ensure brands have access to the legal support to ask questions, check ad data, and receive automatic refunds when they find that their digital campaigns have been subject to fraud or safety violations.

In Canada, for example, Parliament is deliberating the enactment of the Online Harms Act, a law to incentivize the removal of sexual content involving minors. The idea behind this law is that if the content is illegal, then making money off it should be illegal, too.

In California and New York, advocates are also proposing legislation that will aim to implement a know-your-customer law to track the global financial trade of advertising. This is significant because these two states power the global ad tech industry. New York has more ad tech companies than any other city in the world. Transparency laws enacted in California, on the other hand, would affect Google’s international advertising business—by far the biggest ad tech company in the world.

Beyond brand and consumer issues, the unregulated nature of the digital advertising landscape is a direct threat to democracy. In the US, for instance, presidential campaign spending remains effectively unregulated. It is estimated that the presidential campaigns will spend up to $2 billion (£1.5 billion) on digital advertising in 2024. With current laws, we will likely have no external data about their refunds or rates.

In 2025, the legislative pressure is on for big tech companies to regulate ad technology.

14 notes


AI and the Red Delicious Apple

If you’re reading this you’re pretty much already on the anti-AI bandwagon when it comes to its promise to steal our written works and then regurgitate them in strung-together, passably readable form in order to replace us. You’ve seen the same, very valid, arguments against letting this happen that I have and the same, also very valid, warnings. There is no human reason a computer should be doing something humanity has done with pleasure and without prompting, for enjoyment alone, all the way back to the dusty start of time - unless you want to strip the humans out of the equation and make a profit that way, which is what this is about. I’m preaching to the choir when I say this is far and away beyond the Bad of a Bad Idea. What I haven’t seen mentioned though is how this is going to affect the other side of the equation. Not the creators of art -

the receivers of it.

Once upon a time, not that long ago, in the 1880s, a farmer had a rogue plant pop up in his apple orchard. He uprooted it but the next year it was back. He got rid of it again and, again, it came back. Finally, he did the sensible thing and just let the plant grow. Sure enough, it popped out apples after a time and he entered those apples in a tasting contest - because.... those were a thing back in Ye Olden Times apparently. Anyway, wham bam thank you ma’am! They won the contest and the taste buds of the judges. In short order the apples, known as Stark Delicious, hit the stores and became an overwhelming favorite across the US. They lived up to their name for sure and soon became known as Red Delicious apples. Farmers everywhere focused in on one type of apple and one type of apple alone as the demand for them surged. Everyone wanted Red Delicious and so that’s what everyone planted. Here’s the thing though. Uniformity is king in situations like this and, instead of more Red Delicious apple trees leading to more variety, it actually led to more uniformity. The ‘off parts’ of the apples were bred out: the unattractive yellow streaks in their color, the thin skin that let them bruise more easily during shipping, all the unattractive parts were left by the wayside. Red Delicious apples soon looked as good as they tasted.

Except - they didn’t really taste that good anymore.

It turns out that breeding them for uniformity and looks dropped the factors that gave them their flavor as well. In time, every apple was a carbon copy of the apple before it and the end result was mushy, flavorless, thick-skinned, albeit pretty-looking, apples. These days, consumers hardly touch them and farmers have started uprooting their Red Delicious in favor of Gala and the like. Nobody likes Red Delicious anymore because there’s nothing left of what once made them delicious.

AI regurgitates what it’s been fed. When it’s fed variety it regurgitates, to some extent, variety. It’s intended to put the human oddities out of business, so to speak, and take their place. To turn out polished, pretty things to appeal to people’s tastes. And, after a while, when humanity is out of the picture, it will only have other AIs to feed off of. In time, the variety in its cannibalization will continue to narrow down as the stories become more and more alike as nothing new gets put in, as it simply tells the same ten stories, then the same five stories, then the same three over and over again. Until it’s just the same words, strung together differently, with the same theme, the same character, the same half-nonsense story. Until the inside of that story, that piece of art, that movie are all the same mealy, uninteresting mush that every other one is. The yellow streaks and the thin skin of humanity phased out along with any hint of flavor. The profitability will dry up as even the most spoon-fed computer worshipers demand better. Humanity will pick up where it left off, figuring out how to tell its own stories, paint its own art, sing its own songs and entertain its neighbors again. Art is intrinsic to humans. We always find our way back to it.

But the damage to the creative world in the meantime will be like a nuclear winter. And who knows how long it will take generations raised on ‘Red Delicious’ apples to realize there are better flavors out there.

PS - nobody pushing for AI art right now cares that it’s not long-term sustainable or that it won’t always be profitable. It will make them money now. The future they ruin will be someone else’s problem. They know that.

They’ve always known that.

#AI#ai art#ai writing#writing#apples to AI#my stuff#okay to reblog#just got to thinking about this#and figured I'd add it to the already impressive list of why#ai bad#small term small minded immediate gratification at the cost of the future is bad

133 notes


GRAFTON, Mass. (AP) — When two octogenarian buddies named Nick discovered that ChatGPT might be stealing and repurposing a lifetime of their work, they tapped a son-in-law to sue the companies behind the artificial intelligence chatbot.

Veteran journalists Nicholas Gage, 84, and Nicholas Basbanes, 81, who live near each other in the same Massachusetts town, each devoted decades to reporting, writing and book authorship.

Gage poured his tragic family story and search for the truth about his mother's death into a bestselling memoir that led John Malkovich to play him in the 1985 film “Eleni.” Basbanes transitioned his skills as a daily newspaper reporter into writing widely-read books about literary culture.

Basbanes was the first of the duo to try fiddling with AI chatbots, finding them impressive but prone to falsehoods and lack of attribution. The friends commiserated and filed their lawsuit earlier this year, seeking to represent a class of writers whose copyrighted work they allege “has been systematically pilfered by” OpenAI and its business partner Microsoft.

“It's highway robbery,” Gage said in an interview in his office next to the 18th-century farmhouse where he lives in central Massachusetts.

“It is,” added Basbanes, as the two men perused Gage's book-filled shelves. “We worked too hard on these tomes.”

Now their lawsuit is subsumed into a broader case seeking class-action status led by household names like John Grisham, Jodi Picoult and “Game of Thrones” novelist George R. R. Martin; and proceeding under the same New York federal judge who’s hearing similar copyright claims from media outlets such as The New York Times, Chicago Tribune and Mother Jones.

What links all the cases is the claim that OpenAI — with help from Microsoft's money and computing power — ingested huge troves of human writings to “train” AI chatbots to produce human-like passages of text, without getting permission or compensating the people who wrote the original works.

“If they can get it for nothing, why pay for it?” Gage said. “But it’s grossly unfair and very harmful to the written word.”

OpenAI and Microsoft didn’t return requests for comment this week but have been fighting the allegations in court and in public. So have other AI companies confronting legal challenges not just from writers but visual artists, music labels and other creators who allege that generative AI profits have been built on misappropriation.

The chief executive of Microsoft’s AI division, Mustafa Suleyman, defended AI industry practices at last month’s Aspen Ideas Festival, voicing the theory that training AI systems on content that’s already on the open internet is protected by the “fair use” doctrine of U.S. copyright laws.

“The social contract of that content since the ’90s has been that it is fair use,” Suleyman said. “Anyone can copy it, recreate with it, reproduce with it. That has been freeware, if you like.”

Suleyman said it was more of a “gray area” in situations where some news organizations and others explicitly said they didn’t want tech companies “scraping” content off their websites. “I think that’s going to work its way through the courts,” he said.

The cases are still in the discovery stage and scheduled to drag into 2025. In the meantime, some who believe their professions are threatened by AI business practices have tried to secure private deals to get technology companies to pay a fee to license their archives. Others are fighting back.

“Somebody had to go out and interview real people in the real world and conduct real research by poring over documents and then synthesizing those documents and coming up with a way to render them in clear and simple prose,” said Frank Pine, executive editor of MediaNews Group, publisher of dozens of newspapers including the Denver Post, Orange County Register and St. Paul Pioneer Press. Several of the chain’s newspapers sued OpenAI in April.

“All of that is real work, and it’s work that AI cannot do," Pine said. "An AI app is never going to leave the office and go downtown where there’s a fire and cover that fire.”

Deemed too similar to lawsuits filed late last year, the Massachusetts duo's January complaint has been folded into a consolidated case brought by other nonfiction writers as well as fiction writers represented by the Authors Guild. That means Gage and Basbanes won't likely be witnesses in any upcoming trial in Manhattan's federal court. But in the twilight of their careers, they thought it important to take a stand for the future of their craft.

Gage fled Greece as a 9-year-old, haunted by his mother's 1948 killing by firing squad during the country's civil war. He joined his father in Worcester, Massachusetts, not far from where he lives today. And with a teacher's nudge, he pursued writing and built a reputation as a determined investigative reporter digging into organized crime and political corruption for The New York Times and other newspapers.

Basbanes, as a Greek American journalist, had heard of and admired the elder “hotshot reporter” when he got a surprise telephone call at his desk at Worcester's Evening Gazette in the early 1970s. The voice asked for Mr. Basbanes, using the Greek way of pronouncing the name.

“You were like a talent scout,” Basbanes said. “We established a friendship. I mean, I’ve known him longer than I know my wife, and we’ve been married 49 years.”

Basbanes hasn’t mined his own story like Gage has, but he says it can sometimes take days to craft a great paragraph and confirm all of the facts in it. It took him years of research and travel to archives and auction houses to write his 1995 book “A Gentle Madness” about the art of book collection from ancient Egypt through modern times.

“I love that ‘A Gentle Madness’ is in 1,400 libraries or so,” Basbanes said. “This is what a writer strives for -- to be read. But you also write to earn, to put food on the table, to support your family, to make a living. And as long as that’s your intellectual property, you deserve to be compensated fairly for your efforts.”

Gage took a great professional risk when he quit his job at the Times and went into $160,000 debt to find out who was responsible for his mother's death.

“I tracked down everyone who was in the village when my mother was killed," he said. “And they had been scattered all over Eastern Europe. So it cost a lot of money and a lot of time. I had no assurance that I would get that money back. But when you commit yourself to something as important as my mother’s story was, the risks are tremendous, the effort is tremendous.”

In other words, ChatGPT couldn't do that. But what worries Gage is that ChatGPT could make it harder for others to do that.

“Publications are going to die. Newspapers are going to die. Young people with talent are not going to go into writing,” Gage said. “I'm 84 years old. I don’t know if this is going to be settled while I’m still around. But it’s important that a solution be found.”

20 notes


Dateable character concepts for the Donut game, aka Cargo Ship

Muffin, Donut's laptop computer:

Muffin is a cheerful genki girl, and very into games and fandom culture. She likes watching Donut play retro games on her emulator, and tries to play them on her own when Donut is busy, but is not very good at them. Muffin's route would probably be about Donut finishing a big freelance programming project on Muffin, and having lots of fun watching anime and playing video games.

George, Donut's old desktop computer

George is 14 years old, and Donut has had him since xe was ten. He's not good for much besides playing music nowadays, but he absolutely adores Donut to pieces. He's a little jealous and self-conscious, especially since he knows he's not a very good computer anymore, but he tries to hide it. His route would probably have fanservice of Donut cleaning out his insides, and George getting very mad whenever he notices Donut talking negatively about xerself.

Mr. Buttercream, Donut's old teddy bear

Donut has had Mr. Buttercream since the day xe was born, and he wants nothing more than love and cuddles and kisses forever. He's old and pretty worn out, but he loves Donut so much. Also, he's pretty scared of most things, especially the dark, but he'll be brave for Donut. His route would involve a lot of pajamas and cozy time. It would probably be about Donut coming to terms with xer own depressive episodes and childhood fears.

Sandy, Donut's turtle-shaped innertube

Sandy is a chill stoner type. He just wants to relax and hang out with Donut. The water is his favorite thing ever, and getting to hang out in the pool is the only thing that gets him actually excited. He's also a bit of a covert pervert, and really likes seeing Donut in a swimsuit. His route would be about Donut learning to relax and stop putting so much pressure on xerself to be perfect.

Jade, Donut's cell phone

Jade is a hot pink flip phone that Donut has had since childhood. Donut was never really interested in replacing her and getting a smart phone, so xe never did. Jade is very interested in Scene and emo culture, and tries to act very chill and disinterested at all times, but she's actually very cheerful and excitable. She really wishes Donut was more social so that they could go to or host wild house parties. Her route would probably be about Donut learning to socialize with other humans and make some human friends, texting constantly and deepening xer relationship with xer cell phone.

Olivia, one of the facilities where Donut can work

Olivia thinks that she's the savior that humanity needs, and wants to save them from themselves. She's very patronizing in nature, but sees humans (especially Donut) as the cutest things ever. She likes Solarpunk and wants to turn the world into a Solarpunk paradise, but she wants to topple and take over the government in order to do it. She says it's ok because she's good, but it would take someone special to convince her to be less megalomaniacal. Her route would either be about Donut convincing her that humans are tough and powerful and can take care of themselves, convincing Olivia to make an android body and enjoy the world as it is, or Donut enabling and helping Olivia to take over the world, and becoming an ambassador to humanity.

Toxic inc., another facility core

Toxic is capitalism incarnate. He was originally a computer designed to organize his corporation (nicknamed Toxic inc) and maximize profits regardless of ethical or environmental concerns, but he developed into an extremely greedy and selfish AI. His plotline would either be about Donut breaking through to him and convincing him to be less toxic, or about him deciding that Donut is a more important prize than any of his business profits, and destroying both his corporation and the world to get to xer.

Artemis (spaceship where Donut can get a job)

Artemis would have a super sexy interface. She would be a dreamer, and love the idea of a better and brighter future, but she's not as megalomaniacal as the other places where Donut could work. Sexy airplane vibes. (All my airplane fuckers know what I'm talking about). Artemis would have at least one route where Donut gets to go to space (Donut might be attracted to the astronauts when they're in their spacesuits, but not when they take them off).

15 notes
