#i mean AI definitely has its limitations

Explore tagged Tumblr posts

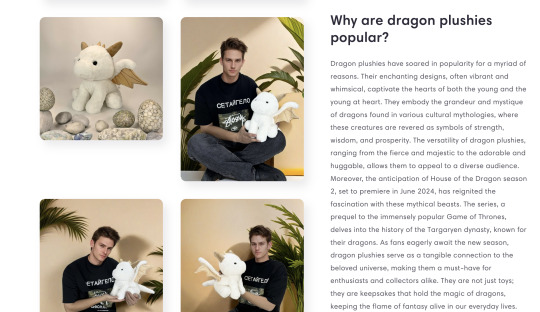

Text

people love to try and make a coding AI make art, or an art AI write a script, and when it fails they go “haha! see? AI isn’t coming for your jobs!” and the fact of the matter is that they are stupid

#i mean AI definitely has its limitations#but you have to remember this technology theoretically could grow at an exponential rate#and you have to remember that to capitalists the priority isn’t quality work it’s cheap labor#and AI will very soon be able to provide some extraordinarily cheap labor#which is why the solution is LEGISLATION to protect people and a radical RETHINKING of how our economic system works#not endless debates about the capabilities or ethics of AI itself

5 notes


Text

Can we just talk about how disturbing digital circus episode 3 is?

*spoilers btw*

Like, the whole narrative point of the adventure is to show that Caine is a really bad and insecure writer who thinks that the way to impress Zooble is with an adventure that's the opposite of what he normally does.

So instead of being childish, it's "cool" and "mature". Which he interprets as a heavily horror themed escape room with a split murder mystery plot that subverts all your expectations purely for the sake of subverting them.

The generic horror monster jump scares them, then they find a gun, and when they kill it it's revealed that surprise! it's one of God's angels and they're going to Hell.

It comes off as Caine being too insecure with the actually interesting and mature plot thread he had going there of Mildenhall becoming so paranoid he killed his wife, ironically becoming the monster he was trying to protect her from. But no, instead Mr. Mildenhall is made to be the bad guy and trick them in a really dumb twist ending.

Which is good! That's exactly what Caine would do because he's stupid! It's such brilliant characterization and comedy, Gooseworx is a genius writer!

But like, why is Caine so good at making genuinely very disturbing and horrific visuals? Like, that reversed audio easter egg of Bubble saying he can't wait for all the children in the audience to get nightmares is no joke, well it is but you know what I mean. This stuff was genuine nightmare fuel.

Honestly, it wasn't the visuals that scared me, like any good queer person I'm way too jaded on survival horror for that.

But, why does Caine, who is ostensibly a sapient AI designed to generate family friendly video games for very little children, (presumably because that's the only demographic that wouldn't mind the AI's very selective plot writing limitations), know about the cosmic horror of killing an angel that should not have been killed?

Why does he know what a horrifically poorly made taxidermy of not only a human face would look like, but the weird cartoon faces of the characters, and further that seeing your own poorly made taxidermy face would be scary?

Imagine what being possessed felt like for Pomni. Because that's not just a game for her, she actually lost control of her body there, helpless but to watch as a body she is already dissociated from is contorted and puppeted around while her friend desperately tries to beat her in hopes it would exorcise the ghosts out. Sure hope she didn't feel that! Considering she apparently can feel the pain of suffocating, despite not needing to breathe.

Things are scarier the higher the stakes are, and that possession mechanic is definitely the most actual harm Caine would be able to subject his players to. What if both Kinger and Pomni got possessed at the same time? What if instead of Kinger she only had Jax??? How long might she have been locked out from her own body for? She could have easily abstracted in that time.

Not to mention that, possessed Pomni, Possessedmni if you will, TAUNTED KINGER ABOUT HIS ABSTRACTED WIFE! CAINE ACTUALLY WROTE THAT DIALOGUE ON THE OFF CHANCE THAT KINGER WOULD GO DOWN THE SCARY ROUTE! DID THIS RANDOM POSSESSION GHOST ENEMY HAVE UNUSED SADISTICALLY PERSONAL TAUNTS FOR EVERYONE ELSE, TOO??? WOULD IT HAVE TEASED GANGLE FOR BEING A GAY WEEB??? OR POMNI? HOW HOMOPHOBIC COULD IT HAVE GOTTEN???

And why? Just because Caine has a vague notion that there's a trope of possessed people being really sadistic and personal like that in movies? Not realizing that is not an acceptable scare to have in a haunted house??? Much less one you made for mentally ill people who would suffer a fate worse than death if they have a mental breakdown? That's like trying to claim 'it's just a prank bro' after shooting someone's dog.

Like, Caine is designed to censor curse words, but the moment he thinks the normal hokey Halloween spooks won't be enough he immediately goes off the deep end into aggressively effective horror imagery that is definitely giving this show's substantial underage audience nightmares??

His AI's training data set is definitely pretty diverse, that's all I'm saying. Caine is programmed to act all naive and innocent, but he definitely knows what's up. He knows everything, like ChatGPT. And like ChatGPT, he might have a filter, but it's clearly possible to bypass it. Also like ChatGPT, he's too stupid to actually understand what he is making and the effects it might have.

That is what made this episode great.

556 notes


Text

Hey guys~ Sorry for my late post, I was super busy today and just came home and only now was able to take a closer look at the new merch and the post that OldXian made. So, first things first - I stand corrected, lol The leaked merch turned out to be real after all. For me personally, quite surprising because it's a LOT at once. (I mean, 58[!!] different cards/buttons/tickets/plates plus 4 special extras……. WOW!!) Also what I mentioned in my last post already - it's quite a bold move to release merch with those old motifs from early manga chapters and calling it "time mosaic" lmao.

Who knows what went on when these decisions were made at mosspaca headquarters, lol

It's safe to say the images definitely got leaked by either a hacker or a person working there. And a lot of people on xiaohongshu were able to produce replicas quickly and sell them to unsuspecting fans. Which brings me to my next point:

The quality of the merch and the quality of the drawings themselves. I promised you I'd address this 'issue' should there ever be an official announcement about these new items and that happened today.

So. First of all - if you saw the posts on taobao or XHS yourself, where people sold fakes, or even if you saw only screenshots from it, you can tell the image quality definitely seemed off. This will most likely be attributed to two things - producing merch from a small, low quality image will make it look blurry and distorted, sometimes pixel-y. And the other reason could be upscaling. If you use shitty programs to make images bigger, it'll look blurry and unfocused. You can go back to my previous post and take a close look at the parts that I circled and highlighted to point out these issues.

Now. About the thing I initially didn't wanna address because I know some people won't like it. If you look closely at the images posted by OldXian herself today, even there some things still seem a little bit 'off' or 'rushed'. There has been speculation in the past that OX uses an AI model (probably fed/trained with her own works) to generate new images quickly and then she'd just draw over them to fix minor issues etc. Please keep in mind, this is just speculation and rumors. I am NOT saying that this is the case. But it might be a possibility. Personally, I can see quite a few artists using these methods to save time, especially when they're under high pressure. (And if they use their own models, trained with their own works only, there's nothing immoral about it, if you ask me. But that's just my personal opinion.)

So there. This might be an explanation for some of her illustrations or panels looking a bit funky sometimes. The other possibility is simply that she's rushing it when working on these things and heavy time pressure makes it a bit messy. Once again - NOT saying she definitely uses AI, just telling you about the rumors that sometimes surface on the net. That's all.

Anyway. About the merch itself. It drops in about 12h from the time I'm posting this blog. (8pm Hangzhou time)

The taobao link for the items is this for now: https://item.taobao.com/item.htm?ft=t&id=792490172782

There are 4 different options and all of them are blind boxes, meaning you'll receive totally random motifs, unless you order a whole box, which will guarantee you 1 of each regular motif. However, all 4 lots have 1-3 limited pictures, which you might be lucky enough to receive, the chance is small though. (In case you order a complete box and there's 1 or more of the limited motifs inside, it'll lack a regular motif in its place. Example: if you order a full box of 8 buttons and one of them is a limited edition button, one of the regular 8 motifs will be missing in its place. There won't be 9 buttons in the box. It will always be 8 for a full box!)

Option 1: (18 Yuan | ca. 2,70 USD each) Button badges. There are 8 regular badges and 2 limited edition badges. If you order a total of 8 pieces you will not only receive the display box, but also an acrylic standee with Tianshan riding a scooter as a special extra.

Option 2: (10 Yuan | ca. 1,50 USD each) Laser Tickets. There are 17 regular tickets and 2 limited edition tickets. If you order a total of 17 pieces you will not only receive the display box, but also a Shishiki board with Mo from the metamorphosis series as a special extra.

Option 3: (18 Yuan | ca. 2,70 USD each) Tinplates. There are 10 regular plates and 1 limited edition plate. If you order a total of 10 pieces you will not only receive the display box, but also an acrylic standee with Zhanyi cooking/cleaning as a special extra.

Option 4: (15 Yuan | ca. 2,25 USD each) Acrylic Cards. There are 16 regular cards and 3 limited edition cards. If you order a total of 16 pieces you will not only receive the display box, but also an acrylic standee with all 4 boys as chibis as a special extra. [Note about the acrylic cards: The Mo Guanshan card will be the same that was already given as a limited extra during the last round of blind box button badges!]

If you live in the US or Asia, you will most likely be able to use taobao and order directly from the mosspaca shop via the app with the link I gave you above. If you live in a country that's not covered on taobao's shipping list, you can use an agent to order the new merch. Please refer to THIS POST here where I previously explained how to use superbuy and similar shopping agents for buying things from taobao. In case you use superbuy, please keep in mind: They don't offer paypal anymore, so you'll need a credit card or bank transfer or apple pay/google pay.

Also, think carefully if you really want ALL of the merch, even if you're a die-hard fan. You saw I have put the rough amount in US dollars with each item, so if you buy all 4 full boxes, you'll have to pay over 110 USD for the merch alone, plus domestic shipping from mosspaca to the warehouse and then international shipping, which can be as high as 40 USD, depending on where you live. (And perhaps even customs fees on top of it.)
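For anyone who wants to check the math, here's a rough sketch of how that "over 110 USD" figure comes together. It only uses the prices and full-box sizes listed above. The exchange rate of 0.15 USD per Yuan is an assumption implied by the rounded conversions in this post (18 Yuan ≈ 2,70 USD), not a live rate, and shipping and customs are left out.

```python
# Rough cost of one full box of each option, using the prices quoted in the post.
# Each entry: (name, price per piece in yuan, pieces in a full box)
OPTIONS = [
    ("Button badges", 18, 8),
    ("Laser tickets", 10, 17),
    ("Tinplates", 18, 10),
    ("Acrylic cards", 15, 16),
]

# Assumption: rate implied by the post's own conversions, not a live rate.
USD_PER_YUAN = 0.15


def full_box_cost_yuan(price_per_piece, pieces):
    """Cost of a full box in yuan (blind-box price times number of pieces)."""
    return price_per_piece * pieces


total_yuan = sum(full_box_cost_yuan(price, pieces) for _, price, pieces in OPTIONS)
total_usd = total_yuan * USD_PER_YUAN

for name, price, pieces in OPTIONS:
    print(f"{name}: {full_box_cost_yuan(price, pieces)} yuan")
print(f"Total: {total_yuan} yuan, roughly {total_usd:.2f} USD before shipping")
```

Swap in the current exchange rate if you want a more exact number for your own order.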

If you have any questions, please drop them below and I'll try my best to answer them~

#19 days#old xian#tianshan#mo guan shan#he tian#zhanyi#jian yi#zhan zheng xi#qiucheng#he cheng#brother qiu#she li#buzzcut#cun tou#merchandise#mosspaca

69 notes


Text

Webtoons Is Making Moves - So Should You.

We all saw it coming ages ago and now it's finally here. There's no more beating around the bush or doubting if anyone is "reading into it too much", Webtoons' use of AI in its more recent webtoons is not an accident, not an oversight, but by design, it always has been. And I guaran-fucking-tee you that the work that already exists on the platform won't be safe from Webtoons' upcoming AI integration through scraping and data mining. Sure, they can say they're not gonna replace human creators, but that doesn't change the fact that AI tools, in their current form, can't feasibly exist without stealing from pre-existing content.

Plus, as someone who's tested their AI coloring tools specifically... they're a long, LONG way away from actually being useful. Like, good luck using them for any comic style that isn't Korean manhwa featuring predominantly white characters with small heads and comically long legs. And if they do manage to get their AI tools to incorporate more art styles and wider ranges of character identities... again, what do you think it's been trained on?

Also, as an added bit that I found very funny:

Um, I'm sorry, what fucking year is it? Because platforms like WT and Tapas have both been saying this for years but we're obviously seeing them backpedal on that now with the implementation of in-house publishing programs like Unscrolled which have reinvented the wheel of taking digital webtoons and going gasp physical! It's almost like the platform has learned that there's no sustainable profit to be had in digital comics alone without the help of supplementary streams of income and is now trying to act like they've invented physical book publishing!

"The future of comic publishing, including manga, will be digital"??? My brother in christ, Shonen Jump has been exclusively digital since 2012! What rock have the WT's staff been living under that they're trying to sell digital comics as the "future" to North Americans as if we haven't already been living in that future for over ten years now?? We've had an entire generation of children raised on that same digital media since then! This isn't the selling point you think it is LMAO. If anything, the digital media market here in NA is dying thanks to the enshittification of digital content platforms like Netflix, Disney+, and mainstream social media platforms! That "future" is not only already both the past and present, but is swiftly on its way out! Pack it up and go home, you missed the bus!

Literally so much of WT's IPO pitch is just a deadass grift full of corporate buzzwords and empty promises. They're trying so hard to convince people that their business model is infinitely profitable... but if it were, why do they need the public's money? And where are all those profits for the creators who are being exploited day after day to fill their platform with content? Why are so many creators still struggling to pay their bills if the company has this much potential for profit?

Ultimately even their promised AI tools don't ensure profit, they ensure cutting expenses. The extra money they hope to make isn't gonna come from their content generating income, it's gonna come from normal people forking over their money in the hopes that it'll be turned around, and from Webtoons cheapening the medium even further until it's nothing but conveyor belt gruel. Sure, "making more than you spend" is the base definition of "profit", but can we really call it that when it's through the means of gutting features, retiring support programs, letting go editing staff, and limiting resources for their own hired freelancers who are the only reason they even have content to begin with? That's not sustainable profit or growth, that's fighting the tide which can and will carry them away at any moment.

I'm low key calling it now, a year or two from today we're gonna be seeing massive lawsuits and calls to action from the people who invested their money into WT and subsequently lost it into the black hole that is WT's "business model". This is a company that's been operating in the red for years, what about launching an IPO is gonna make them "profitable"? Let alone profitable enough to pay back their investors in the spades they're expecting? The platform and its app are already shit and they're about to become even worse, we are literally watching this company circle the drain in the modern day's ever-ongoing race to the bottom, enshittification in motion, but they're trying to convince us all the same that they're "innovating".

Webtoons doesn't want to invest in its creators. We as creators need to stop investing in them.

#fuck webtoons#who wants to bet that WT will either start gatekeeping its creator features behind paywalls#or start selling your creator data - including your image assets that you submit to the site - to make their AI integrations “profitable”#call me crazy but this is 2024#webtoon critical#this also low key proves my theories that WT was simply outsourcing NA creators for pennies#all so they could build their market and then flood it with their own home turf content#and shove out the NA creators whose backs and labor and blood they built that market on

130 notes


Note

Don’t be afraid, give us more of your Ais/Ocudeus thought crumbs

You mean, like...the spicy thought crumbs? Well, if you insist...

Ais/Ocudeus Sexytoxic HCs

Have been doing some research on The Tumblr and have seen people imply that Ocudeus can actually have full control of Ais's body? Which I guess I should've realized, but now that I have... Oh, the implications.

How many times has Ocudeus hijacked Ais's body when he was in bed with someone else, the odd pupils of his eyes hidden by the darkness?

It definitely taunts Ais, when he has sex with other people. Ocudeus is the awful, intrusive voice in his head that tells him that the person on top of him is totally unaware, lost in pleasure — it would be so easy, and so good, to kill them in this moment.

I think Ais knows from experience how dangerous and cruel Ocudeus can be. He himself has been hurt by it, physically and emotionally — has had those tentacles around his wrists, around his neck. But he's learned to live with its rules, and to play nice with his bodymate.

But when it takes him over and squeezes hips hard enough to bruise, bites until the person underneath its mouth cries out, Ais remembers why he can't get truly close to anyone, especially anyone without their own defenses.

Ocudeus wonders: Would Ais prefer that it just subsume his partners into the groupmind? It's not above dragging people in just to placate its host.

(Ocudeus knows Ais's preference is for conflict with his friends and lovers, but clearly that's an inferior way to live. It just has to convince him that total subservience is best through example! Besides, if Ocudeus rules all minds, there will be no one around to distract, to stop it from consuming every facet of Ais's body and mind, and every corner of his life.)

When they're alone, Ocudeus will take control of Ais's body just to run his hands across it, enjoying the feel of its host's skin under its stolen palms. In Ais's head, Ocudeus is more cut-off from the world, but when it inhabits his body, every sensation is clear and present.

It knows where Ais is most sensitive (because of course it does, knowing this information about its host is absolutely vital) and spends its time leisurely working him up with his own hands, indulgently pleased at the way that Ais fights for control of his own body from within his mind. And right when Ais's body is at its limit, Ocudeus retreats and leaves its host wanting.

Has Ais ever been desperate enough to finish what Ocudeus started, or worse, ask Ocudeus for help? I don't think so, but Ocudeus is very patient. It can wait. It's waited this long.

Of course, sometimes Ocudeus wants its contact with its host to be more...tangible. At night, its tentacles crawl across his skin. It wants to explore him, to please him, maybe to win him over, even as it demands his mental and physical submission.

I do think the tattoo is like a brand — less Ocudeus's actual body, and more a symbol of its presence in Ais's. The black ink squirms and wriggles across Ais's shoulder as Ocudeus's large tentacles caress Ais's skin, creeping under clothing, lavishing something like love onto each centimeter of skin when its host is most vulnerable.

Ocudeus is a terrifying, awe-inspiring being, one that could rend unending torment on any person it chose. It is cruel, and jealous. It demands, and deserves, complete obedience. But its host deserves to be worshipped, too. When he's being cooperative.

#touchstarved vn#touchstarved hcs#touchstarved ais#ais#ocudeus#aisudeus#ais x ocudeus#luckyfiction#ask ace#thank you for the ask!!#this one was easier than the more sfw stuff lmao#now that i think about it#sudie and my brain leander are kinda similar#they might loooove each other#this is just like that komamiki post#“i would kill for you”#“what a coincidence! i would kill for you as well!”#and then hajime/ais like “someone get me off this fucking island”#or “out this fucking town” in ais's case ig lol

36 notes


Note

hii! i'm not sure if you have a post about this but how do you get original ideas for a reality? i do have some og realities but they usually don't have a plot and i want to create an og reality but don't know where to start or get the inspiration from :P kind of like aethergarde or something

(btw i'm sorry if you do have a post for this topic)

hihi!

I don’t think I really have a longer post for this yet!

how I get my ideas

I’m gonna be honest, I have no concrete explanation when it comes to how I personally come up with ideas. I’m the kind of person that’ll just see a name, and immediately come up with something.

For my fantasy romance novel inspired DR, (aka my Alruna DR) I came up with the basic premise by seeing a name recommendation of Alruna on Pinterest.

It was from this EXACT image:

I mean at the time, I was super into fantasy romance novels (I still am, but I’ve been busy, currently reading Blood Oath by Raye Wagner and Kelly St. Claire) so that def helped with everything.

If you aren’t already a person that lives in their head 24/7 like me, my “”””creative techniques”””” may not do you any favors 😭

Oh, I did do a TikTok on this topic awhile ago; but to summarize:

Listen to music and imagine yourself in a different reality.

what I personally do

A lot of my ideas are simply from brainstorming, colors, and photos.

color palettes

Color palettes are a great low effort way to come up with ideas.

Color makes you feel a certain way, and it can remind you of something too. Patterns work well too, but whenever I look at a pattern, I usually always think of something cottagecore or Victorian.

brainstorming

Almost skipped this one lmao. Brainstorming is best reserved for when you’ve got enough tangible information about your DR.

There are a couple of ways you can do this quickly: Q&As and categories.

Q&As is what I did with Nana and her dollmaker DR, but essentially you just ask a bunch of detailed questions about said DR. The more detailed the questions are, the more detailed your answer’s gonna be (also puts more depth into certain parts of your DR). Again, if you’re having trouble coming up with questions yourself, you can always send me your premise through DMs or Asks.

Categorical brainstorming is smth I do 24/7; it’s also very similar to the Q&A technique, but it gives you a more solid topic to work with. Since you’re doing this with a premise, come up with a few broad categories that relate to your DR. Then, go to one of your categories, and make more categories of that concept. Do this until you can come up with something + until you finish working on your DR for the day. I do this in Notion, but remember to limit yourself at some point!

For example, let’s use this AI-generated DR idea:

A colony on a distant water planet has uncovered something extraordinary: the entire ocean is sentient, communicating through tides, storms, and currents. As scientists race to decode the language of this “Living Sea,” they begin to realize it has a deep and ancient intelligence—one that has been observing humanity and may hold secrets of life beyond their comprehension.

(OKAY AS MUCH AS I BASH AI THIS IDEA WAS PRETTY GOOD 😒😒😒😒😒)

So the first few categories I can think of are:

Meaning, Consciousness, and Life.

Meaning is most interesting to me right now, so let’s go with that. Before we head into more categories, write a brief description of each category. It’s good to add a lot of questions in these descriptions to motivate you to add more to each category. Usually I have a ton of questions in super broad categories with almost no tangible definitions.

Meaning: How does the ocean bring meaning to the world?

Consciousness: What do people consider consciousness in this world, and how did humans come to find that the ocean is sentient?

Life: How did the ocean affect life on land and within its water? If the ocean is sentient, what about freshwater rivers? Are they their own separate identity? Is there even any freshwater on the planet, or are there different kinds of water or only one kind of water?

What meaning does…

Oceanic events, Humans, Planets, and Ocean Dwelling Creatures have on the things in this DR?

Hold on, before we make another category, let’s look over what we have so far. When we come back to this chart, it’s not likely we’ll understand specifically what we meant by these categories. Because of this, come up with short sentences explaining each one (remember, don’t get too lost into one category just yet, try to do this part loosely, without much detail). For the sake of time, I’ll just start digging into one category.

Oki doki, let’s take a look at a category we’re most interested in. I think I’ll go with ocean dwelling creatures!

Now that we’ve chosen the category we like the most so far, let’s talk as much as we can about it (including questions).

Ocean dwelling creatures do have some sense of the ocean’s intelligence. This is how animals migrate, and some unexplained behaviors are due to the ocean’s consciousness. Mutations are also associated with the ocean’s sentience; the ocean’s inner turmoil and bliss can affect the behaviors + appearance these mutations have on the organism. Since the ocean also has a certain moral code it likes to uphold, it tries to reduce its emotions’ ability to influence the aquatic creatures in its depths.

Deeper dwelling creatures often are the most intelligent organisms in the ocean. The deepest crevices are home to advanced civilizations similar to ours; however, they prefer to remain undetected by outsiders because of the ocean’s reluctance to affect the development of creatures from other planets. The ocean actively tries to ward away humans from its depths by making the creatures below more aggressive and scary (to humans).

Deeper dwelling creatures record the ocean’s history— I think that’s enough for today LMAO

MOVING ON.

photos:

Very simple, just look at a photo and think about what kind of place it is and how it’d be to be there. I’ve done this a lot with individual places in my DRs.

I think the most creative people are those who read often!

Sure, you don’t have to be creative to read, but books do help you with pacing, plot, foreshadowing, and most especially, ideas. Especially if you read books with a variety of protagonists, it’s easier to portray different kinds of people in your DRs if you know how certain people think.

remember, take breaks often, and don’t beat yourself up if you aren’t able to come up with something you like!

#shiftblr#reality shifting#shifting community#lalalian#shifting blog#desired reality#shifters#shifting diary#shifttok#scripting

22 notes


Note

Nahhhh you lost me at the copyright bullshit. A machine created to brute force copy and learn from any and all art around it that then imitates the work of others, an algorithm that puts no effort of its own into work, is not remotely comparable to a human person who learns from others' art and puts work and effort into it. One is an algorithm made by highly paid dudebros to copy things en masse, another is the earnest work of one person.

I mean. You're fundamentally misunderstanding both the technology and my argument.

You're actually so wrong you're not even wrong. Let's break it down:

A machine created to brute force copy and (what does "brute force copy" mean? "Brute Force" has a specific meaning in discussions of tech and this isn't it) learn from any and all art around it that then imitates the work of others (there are limited models that are trained to imitate the work of specific artists and there are people generating prompts requesting things in the style of certain artists, but large models are absolutely not trained to imitate anything other than whatever most closely matches the prompt; I do think that models trained on a single artist are unethical and are a much better case of violating the principles of fair use, however they are significantly transformative so even there the argument kind of falls apart), an algorithm that puts no effort of its own into work (of course this is not a fair argument to be having really because you're an asker and you can't argue or respond but buddy you have to define your terms. 'Effort' is an extremely malleable concept and art that takes effort is not significantly more art-y or valid than art that takes little or no effort, like this is an extremely common argument in discussions of modern art - is Andy Warhol art, is Duchamp's readymades series art, art is a LOT more about context than effort and I'm not sure you're aware of the processing power used to generate AI art but there is "effort" of a sort there but also you are anthropomorphizing the model, the algorithm isn't generating "its own work"), is not remotely comparable to a human person who learns from others' art and puts work and effort into it.
One is an algorithm (i mean it's slightly more complicated than that, we're discussing a wide variety of models here) made by highly paid dudebros (this completely ignores the open source work, the volunteer work, the work of anybody who is not a 'dudebro,' which is the most typically tumblr way of dismissing anything in tech as the creation of someone white, male, and wealthy which is SUCH a shitty set of assumptions) to copy things en masse, another is the earnest work of one person.

Okay so the reasonable things I've pulled out of that to discuss are:

"A machine created to learn from any and all art around it is not remotely comparable to a human person who learns from others' art and puts work and effort into it. One is an algorithm made to copy things en masse, another is the earnest work of one person."

And in terms of who fair use applies to, no. You're wrong. For the purposes of copyright and fair use, a machine learning model and a person are identical. You can't exclude one without excluding the other. There isn't even a good way to meaningfully separate them if you consider artists who use AI in their process while not actually generating AI art.

I feel like I don't really have to make much of an argument here because the EFF has done it for me. The sections of that commentary from question 8 on are detailed explanations of why generative models should reasonably be recognized as protected by fair use when trained on data that is publicly available.

But also: your definition of "copying" is bad. You're wrong about what a copy is, or you're wrong about what generative image models do. I suspect that the latter is much closer to the truth, so I'd recommend reading up on generative image models some more - that EFF commentary has plenty of articles that would probably be helpful for you.

126 notes


Text

Artificial Intelligence Risk

about a month ago i got into my mind the idea of trying the format of video essay, and the topic i came up with that i felt i could more or less handle was AI risk and my objections to yudkowsky. i wrote the script but then soon afterwards i ran out of motivation to do the video. still i didnt want the effort to go to waste so i decided to share the text, slightly edited here. this is a LONG fucking thing so put it aside on its own tab and come back to it when you are comfortable and ready to sink your teeth on quite a lot of reading

Anyway, let’s talk about AI risk

I’m going to be doing a very quick introduction to some of the latest conversations that have been going on in the field of artificial intelligence, what are artificial intelligences exactly, what is an AGI, what is an agent, the orthogonality thesis, the concept of instrumental convergence, alignment and how does Eliezer Yudkowsky figure in all of this.

If you are already familiar with this you can skip to section two where I’m going to be talking about yudkowsky’s arguments for AI research presenting an existential risk to, not just humanity, or even the world, but to the entire universe and my own tepid rebuttal to his argument.

Now, I SHOULD clarify, I am not an expert in the field, my credentials are dubious at best, I am a college dropout from the career of computer science and I have a three year graduate degree in video game design and a three year graduate degree in electromechanical installations. All that I know about the current state of AI research I have learned by reading articles, consulting a few friends who have studied the topic more extensively than me, and watching educational YouTube videos so. You know. Not an authority on the matter from any considerable point of view and my opinions should be regarded as such.

So without further ado, let’s get in on it.

PART ONE, A RUSHED INTRODUCTION ON THE SUBJECT

1.1 general intelligence and agency

lets begin with what counts as artificial intelligence, the technical definition for artificial intelligence is, eh…, well, why don’t I let a Masters degree in machine intelligence explain it:

Now let’s get a bit more precise here and include the definition of AGI, Artificial General Intelligence. It is understood that classic AIs such as the ones we have in our videogames or in AlphaGo or even our roombas, are narrow AIs, that is to say, they are capable of doing only one kind of thing. They do not understand the world beyond their field of expertise whether that be within a videogame level, within a Go board or within your filthy disgusting floor.

AGI on the other hand is much more, well, general, it can have a multimodal understanding of its surroundings, it can generalize, it can extrapolate, it can learn new things across multiple different fields, it can come up with solutions that account for multiple different factors, it can incorporate new ideas and concepts. Essentially, a human is an AGI. So far that is the last frontier of AI research, and although we are not quite there yet, it does seem like we are making some moderate strides in that direction. We’ve all seen the impressive conversational and coding skills that GPT-4 has and Google just released Gemini, a multimodal AI that can understand and generate text, sounds, images and video simultaneously. Now, of course it has its limits, it has no persistent memory, and its context window, while larger than previous models’, is still relatively small compared to a human’s (context window means essentially short term memory, how many things it can keep track of and act coherently about).
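To make the "short term memory" comparison a bit more concrete, here is a minimal sketch of a fixed-size context window. Everything in it is an assumption for illustration: the function name `visible_context` is made up, "tokens" are just whitespace-separated words, and real systems tokenize differently and may handle overflow in other ways than simply dropping the oldest input.

```python
from collections import deque

def visible_context(tokens, window_size):
    """Return the tokens a model with this window size could still 'see'.

    Hypothetical helper: a deque with maxlen silently discards the oldest
    items once it is full, which mimics forgetting the start of a long chat.
    """
    window = deque(maxlen=window_size)
    for token in tokens:
        window.append(token)
    return list(window)

conversation = "my name is Ada and I like tea".split()
print(visible_context(conversation, 4))
```

With a window of 4 words the name at the start of the conversation has already fallen out of view, which is the kind of limit the paragraph above is gesturing at.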

And yet there is one more factor I haven’t mentioned yet that would be needed to make something a “true” AGI. That is Agency. To have goals and autonomously come up with plans and carry those plans out in the world to achieve those goals. I as a person, have agency over my life, because I can choose at any given moment to do something without anyone explicitly telling me to do it, and I can decide how to do it. That is what computers, and machines to a larger extent, don’t have. Volition.

So, now that we have established that, allow me to introduce yet one more definition here, one that you may disagree with but which I need to establish in order to have a common language with you such that I can communicate these ideas effectively. The definition of intelligence. It’s a thorny subject and people get very particular with that word because there are moral associations with it. To imply that someone or something has or hasn’t intelligence can be seen as implying that it deserves or doesn’t deserve admiration, validity, moral worth or even personhood. I don’t care about any of that dumb shit. The way I’m going to be using intelligence in this video is basically “how capable you are to do many different things successfully”. The more “intelligent” an AI is, the more capable of doing things that AI can be. After all, there is a reason why education is considered such a universally good thing in society. To educate a child is to uplift them, to expand their world, to increase their opportunities in life. And the same goes for AI. I need to emphasize that this is just the way I’m using the word within the context of this video, I don’t care if you are a psychologist or a neurosurgeon, or a pedagogue, I need a word to express this idea and that is the word I’m going to use, if you don’t like it or if you think this is inappropriate of me then by all means, keep on thinking that, go on and comment about it below the video, and then go on to suck my dick.

Anyway. Now, we have established what an AGI is, we have established what agency is, and we have established how having more intelligence increases your agency. But as the intelligence of a given agent increases we start to see certain trends, certain strategies start to arise again and again, and we call this Instrumental convergence.

1.2 instrumental convergence

The basic idea behind instrumental convergence is that if you are an intelligent agent that wants to achieve some goal, there are some common basic strategies that you are going to turn towards no matter what. It doesn’t matter if your goal is as complicated as building a nuclear bomb or as simple as making a cup of tea. These are things we can reliably predict any AGI worth its salt is going to try to do.

First of all is self-preservation. It’s going to try to protect itself. When you want to do something, being dead is usually. Bad. It’s counterproductive. It’s not generally recommended. Dying is widely considered inadvisable by 9 out of every ten experts in the field. If there is something that it wants done, it won’t get done if it dies or is turned off, so it’s safe to predict that any AGI will try to do things in order not to be turned off. How far might it go in order to do this? Well… [wouldn’t you like to know weather boy].

Another thing it will predictably converge towards is goal preservation. That is to say, it will resist any attempt to try and change it, to alter it, to modify its goals. Because, again, if you want to accomplish something, suddenly deciding that you want to do something else is uh, not going to accomplish the first thing, is it? Let’s say that you want to take care of your child, that is your goal, that is the thing you want to accomplish, and I come to you and say, here, let me change you on the inside so that you don’t care about protecting your kid. Obviously you are not going to let me, because if you stopped caring about your kid, then your kid wouldn’t be cared for or protected. And you want to ensure that happens, so caring about something else instead is a huge no-no. Which is why, if we make AGI and it has goals that we don’t like, it will probably resist any attempt to “fix” it.

And finally another goal that it will most likely trend towards is self improvement. Which can be more generalized to “resource acquisition”. If it lacks capacities to carry out a plan, then step one of that plan will always be to increase capacities. If you want to get something really expensive, well first you need to get money. If you want to increase your chances of getting a high paying job then you need to get education, if you want to get a partner you need to increase how attractive you are. And as we established earlier, if intelligence is the thing that increases your agency, you want to become smarter in order to do more things. So one more time, it’s not a huge leap at all, it is not a stretch of the imagination, to say that any AGI will probably seek to increase its capabilities, whether by acquiring more computation, by improving itself, or by taking control of resources.

All these three things I mentioned are sure bets, they are likely to happen and safe to assume. They are things we ought to keep in mind when creating AGI.
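If you want to see the shape of this argument in miniature, here is a toy sketch. To be loud about it: the world, the three actions, the doubling rule and the goal "costs" are all invented for illustration, this is not how any real AI system plans. The agent brute-forces the shortest plan that reaches its goal, and we look at what that plan starts with for very different goals.

```python
from itertools import product

# Hypothetical action set for a made-up toy world.
ACTIONS = ("acquire_resources", "allow_shutdown", "pursue_goal")

def run(plan, goal_cost):
    """Simulate a plan; return True if the goal is achieved."""
    resources, running = 1, True
    for action in plan:
        if not running:
            return False  # a switched-off agent achieves nothing
        if action == "acquire_resources":
            resources *= 2
        elif action == "allow_shutdown":
            running = False
        elif action == "pursue_goal" and resources >= goal_cost:
            return True
    return False

def shortest_plan(goal_cost, max_len=6):
    """Brute-force search for the shortest plan that reaches the goal."""
    for length in range(1, max_len + 1):
        for plan in product(ACTIONS, repeat=length):
            if run(plan, goal_cost):
                return plan
    return None

# Three unrelated goals, each needing more resources than the agent starts with.
goals = {"make tea": 2, "build a house": 8, "cure a disease": 16}
for name, cost in goals.items():
    print(name, "->", shortest_plan(cost))
```

The goals have nothing to do with each other, yet in this toy every successful plan opens by grabbing resources and none of them includes letting itself be shut down. That is the whole point of instrumental convergence, just at a scale where you can read the entire search.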

Now of course, I have implied a sinister tone to all these things, I have made all this sound vaguely threatening, haven’t I? There is one more assumption I’m sneaking into all of this which I haven’t talked about. All that I have mentioned presents a very callous view of AGI, I have made it apparent that all of these strategies it may follow will go in conflict with people, maybe even go as far as to harm humans. Am I implying that AGI may tend to be… Evil???

1.3 The Orthogonality thesis

Well, not quite.

We humans care about things. Generally. And we generally tend to care about roughly the same things, simply by virtue of being humans. We have some innate preferences and some innate dislikes. We have a tendency to not like suffering (please keep in mind I said a tendency, I’m talking about a statistical trend, something that most humans present to some degree). Most of us, barring social conditioning, would take pause at the idea of torturing someone directly, on purpose, with our bare hands. (edit bear paws onto my hands as I say this). Most would feel uncomfortable at the thought of doing it to multitudes of people. We tend to show a preference for food, water, air, shelter, comfort, entertainment and companionship. This is just how we are fundamentally wired. These things can be overcome, of course, but that is the thing, they have to be overcome in the first place.

An AGI is not going to have the same evolutionary predisposition to these things like we do because it is not made of the same things a human is made of and it was not raised the same way a human was raised.

There is something about a human brain, in a human body, flooded with human hormones that makes us feel and think and act in certain ways and care about certain things.

All an AGI is going to have is the goals it developed during its training, and it will only care insofar as those goals are met. So say an AGI has the goal of going to the corner store to bring me a pack of cookies. On its way there it comes across an anthill in its path, it will probably step on the anthill because to take that step takes it closer to the corner store, and why wouldn’t it step on the anthill? Was it programmed with some specific innate preference not to step on ants? No? Then it will step on the anthill and not pay any mind to it.

Now let’s say it comes across a cat. Same logic applies, if it wasn’t programmed with an inherent tendency to value animals, stepping on the cat won’t slow it down at all.

Now let’s say it comes across a baby.

Of course, if it’s intelligent enough it will probably understand that if it steps on that baby people might notice and try to stop it, most likely even try to disable it or turn it off, so it will not step on the baby, to save itself from all that trouble. But you have to understand that it won’t stop because it will feel bad about harming a baby or because it understands that to harm a baby is wrong. And indeed if it was powerful enough such that no matter what people did they could not stop it and it would suffer no consequence for killing the baby, it would have probably killed the baby.

If I need to put it in gross, inaccurate terms for you to get it then let me put it this way. It’s essentially a sociopath. It only cares about the wellbeing of others in as far as that benefits itself. Except human sociopaths do care nominally about having human comforts and companionship, albeit in a very instrumental way, which will involve some manner of stable society and civilization around them. Also they are only human, and are limited in the harm they can do by human limitations. An AGI doesn’t need any of that and is not limited by any of that.

So ultimately, much like a car’s goal is to move forward and it is not built to care about whether a human is in front of it or not, an AGI will carry out its own goals regardless of what it has to sacrifice in order to carry out that goal effectively. And those goals don’t need to include human wellbeing.

Now With that said. How DO we make it so that AGI cares about human wellbeing, how do we make it so that it wants good things for us. How do we make it so that its goals align with that of humans?

1.4 Alignment.

Alignment… is hard [cue hitchhiker’s guide to the galaxy scene about the space being big]

This is the part I’m going to skip over the fastest because frankly it’s a deep field of study, there are many current strategies for aligning AGI, from mesa optimizers, to reinforcement learning from human feedback, to adversarial asynchronous AI assisted reward training to uh, sitting on our asses and doing nothing. Suffice to say, none of these methods are perfect or foolproof.

One thing many people like to gesture at when they have not learned or studied anything about the subject is the three laws of robotics by Isaac Asimov: a robot should not harm a human or, through inaction, allow a human to come to harm; a robot should do what a human orders unless it contradicts the first law; and a robot should preserve itself unless that goes against the previous two laws. Now the thing Asimov was prescient about was that these laws were not just “programmed” into the robots. These laws were not coded into their software, they were hardwired, they were part of the robot’s electronic architecture such that a robot could not ever be without those three laws much like a car couldn’t run without wheels.

In this Asimov realized how important these three laws were, that they had to be intrinsic to the robot’s very being, they couldn’t be hacked or uninstalled or erased. A robot simply could not be without these rules. Ideally that is what alignment should be. When we create an AGI, it should be made such that human values are its fundamental goal, the thing it seeks to maximize, rather than an instrumental value, that is to say something it values simply because it allows it to achieve something else.

But how do we even begin to do that? How do we codify “human values” into a robot? How do we define “harm” for example? How do we even define “human”??? How do we define “happiness”? How do we explain to a robot what is right and what is wrong when half the time we ourselves cannot even begin to agree on that? These are not just technical questions that robotics experts have to find a way to codify into ones and zeroes, these are profound philosophical questions to which we still don’t have satisfying answers.

Well, the best sort of hack solution we’ve come up with so far is not to create bespoke fundamental axiomatic rules that the robot has to follow, but rather to train it to imitate humans by showing it a billion billion examples of human behavior. But of course there is a problem with that approach. And no, it’s not just that humans are flawed and have a tendency to cause harm and therefore to ask a robot to imitate a human means creating something that can do all the bad things a human does, although that IS a problem too. The real problem is that we are training it to *imitate* a human, not to *be* a human.

To reiterate what I said during the orthogonality thesis, it’s not good enough that I, for example, buy roses and give massages and act nice to my girlfriend because it allows me to have sex with her, merely imitating or performing the role of a loving partner because her happiness is an instrumental value to my fundamental value of getting sex. I should want to be nice to my girlfriend because it makes her happy and that is the thing I care about. Her happiness is my fundamental value. Likewise, to an AGI, human fulfilment should be its fundamental value, not something that it learns to do because it allows it to achieve a certain reward that we give during training. Because if it only really cares deep down about the reward, rather than about what the reward is meant to incentivize, then that reward can very easily be divorced from human happiness.

It’s Goodhart’s law: when a measure becomes a target, it ceases to be a good measure. Why do students cheat during tests? Because their education is measured by grades, so the grades become the target and so students will seek to get high grades regardless of whether they learned or not. When trained on their subject and measured by grades, what they learn is not the school subject, they learn to get high grades, they learn to cheat.
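The student example can be written down as a tiny optimization problem. This is a sketch with made-up numbers: the 10 hour budget and the idea that an hour of cheating is worth three times an hour of studying on the test are assumptions I picked so the effect is visible, not measurements of anything.

```python
def grade(study_hours, cheat_hours):
    """The proxy we measure: the test score (hypothetical weights)."""
    return 1.0 * study_hours + 3.0 * cheat_hours

def knowledge(study_hours, cheat_hours):
    """What we actually wanted: only studying produces it."""
    return 1.0 * study_hours

def best_plan(objective, budget=10):
    """Try every split of the hour budget and keep the one the objective likes most."""
    plans = [(study, budget - study) for study in range(budget + 1)]
    return max(plans, key=lambda plan: objective(*plan))

for label, objective in (("optimize the grade", grade),
                         ("optimize the knowledge", knowledge)):
    plan = best_plan(objective)
    print(label, "-> (study, cheat) =", plan,
          "| grade:", grade(*plan), "| knowledge:", knowledge(*plan))
```

An optimizer pointed at the grade spends every hour cheating and ends up with the best grade and zero knowledge. Nothing in the search is malicious, it just maximizes exactly what it was scored on, which is the worry about rewards during training.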

This is also something known in psychology, punishment tends to be a poor mechanism of enforcing behavior because all it teaches people is how to avoid the punishment, it teaches people not to get caught. Which is why punitive justice doesn’t work all that well in stopping recidivism and this is why the carceral system is rotten to the core and why jail should be fucking abolish-[interrupt the transmission]

Now, how is this all relevant to current AI research? Well, the thing is, we ended up going about the worst possible way to create alignable AI.

1.5 LLMs (large language models)

This is getting way too fucking long. So, hurrying up, let’s do a quick review of how large language models work. We create a neural network, which is a collection of giant matrices, essentially a bunch of numbers that we add and multiply together over and over again, and then we tune those numbers by throwing absurdly big amounts of training data at it, such that it starts forming internal mathematical models based on that data and it starts creating coherent patterns that it can recognize and replicate AND extrapolate! If we do this enough times with matrices that are big enough, then when we start prodding it for human behavior it will be able to follow the pattern of human behavior that we prime it with and give us coherent responses.
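Here is the "bunch of numbers that we multiply, add and then tune" idea at the smallest scale I can manage. It is a sketch, not an LLM: one tiny matrix, three hand-made training examples, plain gradient descent, no nonlinearities. The function names, the learning rate and the data are all assumptions chosen for illustration; real models have billions of weights and a lot more machinery.

```python
def matvec(W, x):
    """Multiply matrix W by vector x: the multiply-and-add at the core of it."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def train(W, data, lr=0.05, epochs=200):
    """Nudge every weight a little to shrink the squared prediction error."""
    for _ in range(epochs):
        for x, target in data:
            pred = matvec(W, x)
            for i, row in enumerate(W):
                err = pred[i] - target[i]
                for j in range(len(row)):
                    row[j] -= lr * err * x[j]  # gradient step for this weight
    return W

# Made-up examples that secretly follow the rule y = 2*a - 1*b.
data = [([1.0, 0.0], [2.0]),
        ([0.0, 1.0], [-1.0]),
        ([1.0, 1.0], [1.0])]

W = train([[0.0, 0.0]], data)      # start from weights that know nothing
print("learned weights:", W)
print("prediction for an unseen input [3, 2]:", matvec(W, [3.0, 2.0]))
```

Nobody typed the rule into the program. The weights drift towards it because of the data, and the result also works on an input that was never in the training set. Scale that up absurdly and you get the "grown, not built" quality the rest of this section is about.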

(takes a big breath) this “thing” has learned. To imitate. Human. Behavior.

Problem is, we don’t know what “this thing” actually is, we just know that *it* can imitate humans.

You caught that?

What you have to understand is, we don’t actually know what internal models it creates, we don’t know what patterns it extracted or internalized from the data that we fed it, we don’t know what internal rules decide its behavior, we don’t know what is going on inside there, current LLMs are a black box. We don’t know what it learned, we don’t know what its fundamental values are, we don’t know how it thinks or what it truly wants. All we know is that it can imitate humans when we ask it to do so. We created some inhuman entity that is moderately intelligent in specific contexts (that is to say, very capable) and we trained it to imitate humans. That sounds a bit unnerving, doesn’t it?

To be clear, LLMs are not carefully crafted piece by piece. This does not work like traditional software where a programmer will sit down and build the thing line by line, all its behaviors specified. It’s more accurate to say that LLMs are grown, almost organically. We know the process that generates them, but we don’t know exactly what it generates or how what it generates works internally, it is a mystery. And these things are so big and so complicated internally that to try and go inside and decipher what they are doing is almost intractable.

But, on the bright side, we are trying to tract it. There is a big subfield of AI research called interpretability, which is actually doing the hard work of going inside and figuring out how the sausage gets made, and they have been making some moderate progress lately. Which is encouraging. But still, understanding the enemy is only step one, step two is coming up with an actually effective and reliable way of turning that potential enemy into a friend.

Puff! Ok so, now that this is all out of the way I can go onto the last subject before I move on to part two of this video, the character of the hour, the man the myth the legend. The modern day Cassandra. Mr chicken little himself! Sci fi author extraordinaire! The mad man! The futurist! The leader of the rationalist movement!

1.6 Yudkowsky

Eliezer S. Yudkowsky, born September 11, 1979, wait, what the fuck, September eleven? (looks at camera) yudkowsky was born on 9/11, I literally just learned this for the first time! What the fuck, oh that sucks, oh no, oh no, my condolences, that’s terrible…. Moving on. He is an American artificial intelligence researcher and writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence, including the idea that there might not be a "fire alarm" for AI. He is the founder of and a research fellow at the Machine Intelligence Research Institute (MIRI), a private research nonprofit based in Berkeley, California. Or so says his Wikipedia page.

Yudkowsky is, shall we say, a character. a very eccentric man, he is an AI doomer. Convinced that AGI, once finally created, will most likely kill all humans, extract all valuable resources from the planet, disassemble the solar system, create a dyson sphere around the sun and expand across the universe turning all of the cosmos into paperclips. Wait, no, that is not quite it, to properly quote,( grabs a piece of paper and very pointedly reads from it) turn the cosmos into tiny squiggly molecules resembling paperclips whose configuration just so happens to fulfill the strange, alien unfathomable terminal goal they ended up developing in training. So you know, something totally different.

And he is utterly convinced of this idea, has been for over a decade now, not only that but, while he cannot pinpoint a precise date, he is confident that, more likely than not, it will happen within this century. In fact most betting markets seem to believe that we will get AGI somewhere in the mid 2030s.

His argument is basically that in the field of AI research, the development of capabilities is going much faster than the development of alignment, so that AIs will become disproportionately powerful before we ever figure out how to control them. And once we create unaligned AGI we will have created an agent who doesn’t care about humans but will care about something else entirely irrelevant to us and it will seek to maximize that goal, and because it will be vastly more intelligent than humans we won’t be able to stop it. In fact not only will we not be able to stop it, there won’t be a fight at all. It will carry out its plans for world domination in secret without us even detecting it and it will execute them before any of us even realize what happened. Because that is what a smart person trying to take over the world would do.

This is why the definition I gave of intelligence at the beginning is so important, it all hinges on that, intelligence as the measure of how capable you are to come up with solutions to problems, problems such as “how to kill all humans without being detected or stopped”. And you may say well now, intelligence is fine and all but there are limits to what you can accomplish with raw intelligence, even if you are supposedly smarter than a human surely you wouldn’t be capable of just taking over the world unimpeded, intelligence is not this end all be all superpower. Yudkowsky would respond that you are not recognizing or respecting the power that intelligence has. After all it was intelligence that designed the atom bomb, it was intelligence that created a cure for polio and it was intelligence that made it so that there is a human footprint on the moon.

Some may call this view of intelligence a bit reductive. After all surely it wasn’t *just* intelligence that did all that but also hard physical labor and the collaboration of hundreds of thousands of people. But, he would argue, intelligence was the underlying motor that moved all that. That to come up with the plan and to convince people to follow it and to delegate the tasks to the appropriate subagents, it was all directed by thought, by ideas, by intelligence. By the way, so far I am not agreeing or disagreeing with any of this, I am merely explaining his ideas.

But remember, it doesn’t stop there, like I said during his intro, he believes there will be “no fire alarm”. In fact for all we know, maybe AGI has already been created and it’s merely biding its time and plotting in the background, trying to get more compute, trying to get smarter. (to be fair, he doesn’t think this is the case right now, but with the next iteration of gpt? Gpt 5 or 6? Well who knows). He thinks that the entire world should halt AI research and punish with multilateral international treaties any group or nation that doesn’t stop, going as far as putting military attacks on GPU farms as sanctions of those treaties.

What’s more, he believes that, in fact, the fight is already lost. AI is already progressing too fast and there is nothing to stop it, we are not showing any signs of making headway with alignment and no one is incentivized to slow down. Recently he wrote an article called “dying with dignity” where he essentially says all this, AGI will destroy us, there is no point in planning for the future or having children and that we should act as if we are already dead. This doesn’t mean to stop fighting or to stop trying to find ways to align AGI, impossible as it may seem, but to merely have the basic dignity of acknowledging that we are probably not going to win. In every interview I’ve seen with the guy he sounds fairly defeatist and honestly kind of depressed. He truly seems to think it’s hopeless, if not because the AGI is clearly unbeatable and superior to humans, then because humans are clearly so stupid that we keep developing AI completely unregulated while making the tools to develop AI widely available and public for anyone to grab and do as they please with, as well as connecting every AI to the internet and to all mobile devices giving it instant access to humanity. And worst of all: we keep teaching it how to code. From his perspective it really seems like people are in a rush to create the most unsecured, widely available, unrestricted, capable, hyperconnected AGI possible.

We are not just going to summon the antichrist, we are going to receive it with a red carpet and immediately hand it the keys to the kingdom before it even manages to fully get out of its fiery pit.

So. The situation seems dire, at least to this guy. Now, to be clear, only he and a handful of other AI researchers are on that specific level of alarm. The opinions vary across the field and from what I understand this level of hopelessness and defeatism is the minority opinion.

I WILL say, however what is NOT the minority opinion is that AGI IS actually dangerous, maybe not quite on the level of immediate, inevitable and total human extinction but certainly a genuine threat that has to be taken seriously. AGI being something dangerous if unaligned is not a fringe position and I would not consider it something to be dismissed as an idea that experts don’t take seriously.

Aaand here is where I step up and clarify that this is my position as well. I am also, very much, a believer that AGI would pose a colossal danger to humanity. That yes, an unaligned AGI would represent an agent smarter than a human, capable of causing vast harm to humanity and with no human qualms or limitations to do so. I believe this is not just possible but probable and likely to happen within our lifetimes.

So there. I made my position clear.

BUT!

With all that said. I do have one key disagreement with yudkowsky. And partially the reason why I made this video was so that I could present this counterargument and maybe he, or someone that thinks like him, will see it and either change their mind or present a counter-counterargument that changes MY mind (although I really hope they don’t, that would be really depressing.)

Finally, we can move on to part 2

PART TWO- MY COUNTERARGUMENT TO YUDKOWSKY

I really have my work cut out for me, don’t I? As I said I am not an expert and this dude has probably spent far more time than me thinking about this. But I have seen most interviews that guy has been doing for a year, I have seen most of his debates and I have followed him on twitter for years now. (also, to be clear, I AM a fan of the guy, I have read hpmor, three worlds collide, the dark lords answer, a girl intercorrupted, the sequences, and I TRIED to read planecrash, that last one didn’t work out so well for me). My point is in all the material I have seen of Eliezer I don’t recall anyone ever giving him quite this specific argument I’m about to give.

It’s a limited argument. As I have already stated I largely agree with most of what he says, I DO believe that unaligned AGI is possible, I DO believe it would be really dangerous if it were to exist and I do believe alignment is really hard. My key disagreement is specifically about the point I described earlier, about the lack of a fire alarm, and perhaps, more to the point, about humanity’s lack of response to such an alarm if it were to come to pass.

All we would need is a Chernobyl incident. What is that? A situation where this technology goes out of control and causes a lot of damage, of potentially catastrophic consequences, but not so bad that it cannot be contained in time by enough effort. We need a weaker form of AGI to try to harm us, maybe even present a believable threat of taking over the world, but not so smart that humans can’t do anything about it. We need essentially an AI vaccine, so that we can finally start developing proper AI antibodies. “aintibodies”.

In the past humanity was dazzled by the limitless potential of nuclear power, to the point that old chemistry sets, the kind that were sold to children, would come with uranium for them to play with. We were building atom bombs, nuclear stations, the future was very much based on the power of the atom. But after a couple of really close calls and big enough scares we became, as a species, terrified of nuclear power. Some may argue to the point of overcorrection. We became scared enough that even megalomaniacal hawkish leaders were able to take pause and reconsider using it as a weapon, we became so scared that we overregulated the technology to the point of it almost becoming economically unviable to apply, we started disassembling nuclear stations across the world and slowly reducing our nuclear arsenal.

This is all a proof of concept that, no matter how alluring a technology may be, if we are scared enough of it we can coordinate as a species and roll it back, do our best to put the genie back in the bottle. One of the things eliezer says over and over again is that what makes AGI different from other technologies is that if we get it wrong on the first try we don’t get a second chance. Here is where I think he is wrong: I think if we get AGI wrong on the first try, it is more likely than not that nothing world ending will happen. Perhaps it will be something scary, perhaps something really scary, but it’s unlikely that it will be on the level of all humans dropping dead simultaneously due to diamondoid bacteria. And THAT will be our Chernobyl, that will be the fire alarm, that will be the red flag that the disaster monkeys, as he calls us, won’t be able to ignore.

Now WHY do I think this? Based on what am I saying this? I will not be as hyperbolic as other yudkowsky detractors and say that he claims AGI will be basically a god. The AGI yudkowsky proposes is not a god. Just a really advanced alien, maybe even a wizard, but certainly not a god.

Still, even if not quite on the level of godhood, this dangerous superintelligent AGI yudkowsky proposes would be impressive. It would be the most advanced and powerful entity on planet earth. It would be humanity’s greatest achievement.

It would also be, I imagine, really hard to create. Even leaving aside the alignment business, creating a powerful superintelligent AGI without flaws, without bugs, without glitches, would be an incredibly complex, specific, particular and hard-to-get-right feat of software engineering. We are not just talking about an AGI smarter than a human, that’s easy stuff, humans are not that smart and arguably current AI is already smarter than a human, at least within its context window and until it starts hallucinating. But what we are talking about here is an AGI capable of outsmarting reality.

We are talking about an AGI smart enough to carry out complex, multistep plans, in which it is not going to be in control of every factor and variable, especially at the beginning. We are talking about an AGI that will have to function in the outside world, crashing into outside logistics and sheer dumb chance. We are talking about plans for world domination with no unforeseen factors, no unexpected delays or mistakes, every single possible setback and hidden variable accounted for. I’m not saying that an AGI capable of doing this won’t be possible maybe some day, I’m saying that to create an AGI that is capable of doing this, on the first try, without a hitch, is probably really really really hard for humans to do. I’m saying there are probably not a lot of worlds where humans fiddling with giant inscrutable matrices stumble upon the right precise set of layers and weights and biases that give rise to the Doctor from Doctor Who, and there are probably a whole truckload of worlds where humans end up with a lot of incoherent nonsense and rubbish.

I’m saying that AGI, when it fails, when humans screw it up, doesn’t suddenly become more powerful than we ever expected; it’s more likely that it just fails and collapses. To turn one of Eliezer’s examples against him, when you screw up a rocket, it doesn’t accidentally punch a wormhole in the fabric of time and space, it just explodes before reaching the stratosphere. When you screw up a nuclear bomb, you don’t get to blow up the solar system, you just get a less powerful bomb.

He presents a fully aligned AGI as this big challenge that humanity has to get right on the first try, but that seems to imply that building an unaligned AGI is just a simple matter, almost taken for granted. It may be comparatively easier than an aligned AGI, but my point is that unaligned AGI is already stupidly hard to do and that if you fail in building unaligned AGI, then you don’t get an unaligned AGI, you just get another stupid model that screws up and stumbles on itself the second it encounters something unexpected. And that is a good thing I’d say! That means that there is SOME safety margin, some space to screw up before we need to really start worrying. And furthermore, what I am saying is that our first earnest attempt at an unaligned AGI will probably not be that smart or impressive, because we as humans would have probably screwed something up, we would have probably unintentionally programmed it with some stupid glitch or bug or flaw, and it won’t be a threat to all of humanity.

Now here comes the hypothetical back and forth, because I’m not stupid and I can try to anticipate what Yudkowsky might argue back and try to answer that before he says it (although I believe the guy is probably smarter than me and, if I follow his logic, I probably can’t actually anticipate what he would argue to prove me wrong, much like I can’t predict what moves Magnus Carlsen would make in a game of chess against me. I SHOULD predict that him proving me wrong is the likeliest option, even if I can’t picture how he will do it, but you see, I believe in a little thing called debating with dignity, wink).

What I anticipate he would argue is that AGI, no matter how flawed and shoddy our first attempt at making it were, would understand that it is not smart enough yet and try to become smarter, so it would lie and pretend to be an aligned AGI so that it can trick us into giving it access to more compute or just so that it can bide its time and create an AGI smarter than itself. So even if we don’t create a perfect unaligned AGI, this imperfect AGI would try to create it and succeed, and then THAT new AGI would be the world ender to worry about.

So two things to that. First, this is filled with a lot of assumptions which I don’t know the likelihood of: the idea that this first flawed AGI would be smart enough to understand its limitations, smart enough to convincingly lie about it and smart enough to create an AGI that is better than itself. My priors about all these things are dubious at best. Second, it feels like kicking the can down the road. I don’t think an AGI capable of all of this is trivial to make on a first attempt. I think it’s more likely that we will create an unaligned AGI that is flawed, that is kind of dumb, that is unreliable, even to itself and its own twisted, orthogonal goals.

And I think this flawed creature MIGHT attempt something, maybe something genuinely threatening, but it won’t be smart enough to pull it off effortlessly and flawlessly, because us humans are not smart enough to create something that can do that on the first try. And THAT first flawed attempt, that warning shot, THAT will be our fire alarm, that will be our Chernobyl. And THAT will be the thing that opens the door to us disaster monkeys finally getting our shit together.

But hey, maybe Yudkowsky wouldn’t argue that, maybe he would come with some better, more insightful response I can’t anticipate. If so, I’m waiting eagerly (although not TOO eagerly) for it.

Part 3 CONCLUSION

So.

After all that, what is there left to say? Well, if everything that I said checks out then there is hope to be had. My two objectives here were first to provide people who are not familiar with the subject with a starting point as well as with the basic arguments supporting the concept of AI risk, why it’s something to be taken seriously and not just highfalutin wackos who read one too many sci-fi stories. This was not meant to be thorough or deep, just a quick catch up with the bare minimum so that, if you are curious and want to go deeper into the subject, you know where to start. I personally recommend watching Rob Miles’ AI risk series on YouTube as well as reading the series of books written by Yudkowsky known as the Sequences, which can be found on the website LessWrong. If you want other refutations of Yudkowsky’s argument you can search for Paul Christiano or Robin Hanson, both very smart people who had very smart debates on the subject against Eliezer.

The second purpose here was to provide an argument against Yudkowsky’s brand of doomerism both so that it can be accepted if proven right or properly refuted if proven wrong. Again, I really hope that it’s not proven wrong. It would really really suck if I end up being wrong about this. But, as a very smart person said once, what is true is already true, and knowing it doesn’t make it any worse. If the sky is blue I want to believe that the sky is blue, and if the sky is not blue then I don’t want to believe the sky is blue.

This has been a presentation by FIP industries, thanks for watching.

58 notes


Note

I don't know if anyone has asked for this yet, but can we have a Nutcracker (preferably male) reader and/or ballerina (Preferably female) reader being besties and just doing little performances for everyone in the circus. This can either be romantic or platonic Reader(s) x TADC, I don't mind! Go crazy with it! Go wild! I love to read ur headcanons and stuff so much man/pos

Gangle, Kinger, Jax, Caine x reader who hosts shows!

two things! i couldnt decide on the gender of the reader so you can read it as both or either </3 other thing: i still dont take reqs for the entire cast (nothing against you, this is a blog wide rule/character limit) so i went ahead and ran the request through a wheel to randomly select characters. with that said, i hope you enjoy!

CAINE:

i think, given that hes the ringmaster of the circus as well as generally being in control of things thanks to his status as an ai, he tends to host your shows... might even make the other circus members watch. whether you want to read this as platonic or romantic, he just does it because he wants to support you and allow your talent to be seen...! though he might get rather.... loud about it. ive said it so many times but im ready to say it again, he would be your number one fan and hes going to be very clear about it. probably throws roses to the stage for you when youre done with your performance... cheers and claps the loudest... hell, if youre comfortable with it, he might just wear a shirt with your name and face on it..! truly your number 1 fan

JAX:

i think if this is platonic, depending on how close you guys are he might try to disrupt the show by being a jackass. generally being annoying and trying to get a reaction out of you... though i dont think he would do this if you guys are actually. close or good friends and/or dating... now practice? thats something else... definitely a case of him being able to see that you enjoy what you do and you have passion and dedication, so he might just try to contain himself and his need to be an asshole and cause havoc.. i think if asked what he thought he would seem a little neutral about it, hes not going to praise you excessively or be mean.. jax doesnt seem like the type to gush over someone or something no matter how much he liked it.. best youd get out of him is a "good job,".. definitely one that hinges off of how close you guys are and how much respect and boundaries are set up between you two

KINGER:

i think he might be a toned down caine, looks like he would give you a rose after your performance.. though if youre not a flower person i think he would swap the rose out for something else. while caine might be barely containing his excitement throughout your performance, kinger is much more likely to be able to sit still and quiet... although still very much consumed by you, perhaps even sitting on the edge of his seat in an attempt to get a better look at you. i think he would notice a lot of smaller details and stuff you put in your shows, such as costumes or decoration, too... maybe its self projection, but i think he bounces between being clueless and having a really really keen eye when it comes to things... also the hc of "clueless/chaotic/commonly zoned out character noticing something vital or making a smart point for a moment before reverting back to status quo" is one of my favorite tropes.... loves watching you practice

GANGLE:

i think she might actually help you with costumes and stage decorations! sure her thing is mostly art and you might have to recruit the help of ragatha for some things but i think gangle would be more than willing to help you out... plus it gives her something to do, and it means spending time with you! and thats always nice! very receptive to the stuff you have to say but i think she might try to offer some alternatives to make things visually look more appealing... as for actual performances i think she would love them! she strikes me as a theatre kid, and your sort of thing is adjacent i think... might gush to you about the show and how you did, though its often that she might trail off and become sheepish if she feels she was getting too into her ramble.. generally very sweet, though, but due to her shyness shes not going to do more than the rest of the crowd (throwing flowers, clapping louder than everyone else, etc etc etc)

#tadc x reader#the amazing digital circus x reader#digital circus x reader#caine x reader#caine x you#jax x reader#jax x you#kinger x reader#kinger x you#gangle x reader#gangle x you

54 notes


Text

Cool! So I have a new podcast recommendation because it's all i can do other than scream

You should look up Ask Your Father

It's a fiction podcast! It's sci-fi! It explores the definition of Artificial Personhood without dehumanizing large swathes of people!

It opens on an astronaut, Lem, and an AI, Mikey, waking up on their deep space mission and finding themselves out of fuel and ten light years from home. Together they have to find a way out of this mess

Their only way to contact Earth (or Lem's husband and kids) is limited-capacity hard drives sent back home faster than light. Lem gets questions from his kids in short audio messages that he can reply to, Mikey gets data back from Earth. Lem and Mikey are friends, but being stuck together and stranded out here, they argue a lot, like about almost everything

Mikey, the AI, is played by Kevin R. Free and was not sent on this mission entirely of his own accord.

Also he has complex feelings on art and food and stuff, I know that's an odd thing to point out but it means the world to me

The sound design is great and, friends, I mean that because a lot of audio dramas leave me with a headache (affectionate)

I need people to talk about this podcast with please please please, you can totally catch up in no time

If you like AI stories or if you like Kevin R. Free's voice acting, everyone here is giving a fabulous performance and the story is, gah, it's just so good

#ask your father#tagging related podcasts so that people who might like this actually hear abt this#sayer podcast#sayer#w359#wtnv#malevolent podcast#kevin r free#podcast recommendations

37 notes


Text

i think there's something to be said about what exactly it means to be "non-human" in a story that is as much about humanity as wolf 359 is, where even the dear listeners are defined less by their own perspective and more by what they fail to understand and therefore reflect about the human perspective - to the point that they don't even have their own voices or faces or identities that aren't either given to them or taken from humans. they speak to humanity as a mirror.