#hype machine

Text

SFH 8: Hype Unleashed

This new playlist pretty much filled up immediately (I aim for 40 songs per sub-playlist), since my new listening method (using a speaker and making a shortlist, rather than, as before, using headphones and only adding sure favourites), followed by a couple of weeks of vetting and culling, worked very well.

Here are my posts from compiling the playlist on Spotify on the 28th June:

I've been auditioning and editing my recent Hypem discoveries (cut down to about 55 songs, with some new additions) while sleeping and during the day sometimes, and I think I'm happy with most of it, so I'm adding a few dozen songs to my playlists now :p

I have amazing music taste, I have to say, it's simply a fact. The last 11 songs were added to SFH 7 (40 songs per sub-playlist), so I made a new SFH 8. I'm also adding them to the main year's playlist, main one here:

New SFH 8 subplaylist is this one:

These songs were missing from Spotify 😒 so I included the webpage urls in the screenshots, since one has already been deleted, but is still playable via the blog, and others are hard to search for:

Finished the update for now: 47 new songs added, with 5 missing. This one was interesting, a song from 2011 called Gabriel. The original song puts a lot of emphasis on my name, which, well... so I went for this remix, which mostly uses a short vocal sample from the end of the original.

A video of the current main 2024 Searching for Hype playlist, above (minus the five tracks in the screenshots)

0 notes

Text

i was saying this to my best friend the other day but why are voltron aus making keith either rich or like a prince or something. why must you take his poor kid sparkle. that man knows a 7/11 slurpee he knows a walmart brand bottle of soda. he deserves to know the simple pleasure of an inflatable backyard pool. I know he got those fuckass black jeggings from a thrift store. and that fuckass mullet is from great clips. is keith kogane truly keith kogane if hes not taking his change to the coinstar at the grocery store. dont take this from my man!!!!!!

#i used to take my change to the coinstar all the time but i dont get tips at work anymore so i like never have cash..... i miss it#grocery store trip you get ur coinstar money and then u buy a crisp monster energy GAME CHANGER#sorry i got rlly hyped abt the coinstar machine i forgot what i was talking abt#soad.txt#not art#voltron#anyways i truly cannot imagine keith being anything but kinda poor i often project my white trash upon him and i will continue to do so

650 notes

Text

I assure you, an AI didn’t write a terrible “George Carlin” routine

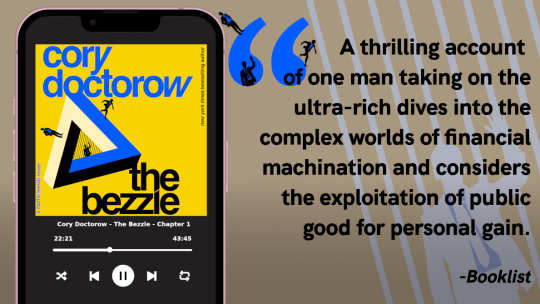

There are only TWO MORE DAYS left in the Kickstarter for the audiobook of The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There are also bundles with Red Team Blues in ebook, audio or paperback.

On Hallowe'en 1974, Ronald Clark O'Bryan murdered his son with poisoned candy. He needed the insurance money, and he knew that Halloween poisonings were rampant, so he figured he'd get away with it. He was wrong:

https://en.wikipedia.org/wiki/Ronald_Clark_O%27Bryan

The stories of Hallowe'en poisonings were just that – stories. No one was poisoning kids on Hallowe'en – except this monstrous murderer, who mistook rampant scare stories for truth and assumed (incorrectly) that his murder would blend in with the crowd.

Last week, the dudes behind the "comedy" podcast Dudesy released a "George Carlin" comedy special that they claimed had been created, holus bolus, by an AI trained on the comedian's routines. This was a lie. After the Carlin estate sued, the dudes admitted that they had written the (remarkably unfunny) "comedy" special:

https://arstechnica.com/ai/2024/01/george-carlins-heirs-sue-comedy-podcast-over-ai-generated-impression/

As I've written, we're nowhere near the point where an AI can do your job, but we're well past the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job:

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

AI systems can do some remarkable party tricks, but there's a huge difference between producing a plausible sentence and a good one. After the initial rush of astonishment, the stench of botshit becomes unmistakable:

https://www.theguardian.com/commentisfree/2024/jan/03/botshit-generative-ai-imminent-threat-democracy

Some of this botshit comes from people who are sold a bill of goods: they're convinced that they can make a George Carlin special without any human intervention and when the bot fails, they manufacture their own botshit, assuming they must be bad at prompting the AI.

This is an old technology story: I had a friend who was contracted to livestream a Canadian awards show in the earliest days of the web. They booked in multiple ISDN lines from Bell Canada and set up an impressive Mbone encoding station on the wings of the stage. Only one problem: the ISDNs flaked (this was a common problem with ISDNs!). There was no way to livecast the show.

Nevertheless, my friend's bosses ordered him to go on pretending to livestream the show. They made a big deal of it, with all kinds of cool visualizers showing the progress of this futuristic marvel, which the cameras frequently lingered on, accompanied by overheated narration from the show's hosts.

The weirdest part? The next day, my friend – and many others – heard from satisfied viewers who boasted about how amazing it had been to watch this show on their computers, rather than their TVs. Remember: there had been no stream. These people had just assumed that the problem was on their end – that they had failed to correctly install and configure the multiple browser plugins required. Not wanting to admit their technical incompetence, they instead boasted about how great the show had been. It was the Emperor's New Livestream.

Perhaps that's what happened to the Dudesy bros. But there's another possibility: maybe they were captured by their own imaginations. In "Genesis," an essay in the 2007 collection The Creationists, EL Doctorow (no relation) describes how the ancient Babylonians were so poleaxed by the strange wonder of the story they made up about the origin of the universe that they assumed that it must be true. They themselves weren't nearly imaginative enough to have come up with this super-cool tale, so God must have put it in their minds:

https://pluralistic.net/2023/04/29/gedankenexperimentwahn/#high-on-your-own-supply

That seems to have been what happened to the Air Force colonel who falsely claimed that a "rogue AI-powered drone" had spontaneously evolved the strategy of killing its operator as a way of clearing the obstacle to its main objective, which was killing the enemy:

https://pluralistic.net/2023/06/04/ayyyyyy-eyeeeee/

This never happened. It was – in the chagrined colonel's words – a "thought experiment." In other words, this guy – who is the USAF's Chief of AI Test and Operations – was so excited about his own made up story that he forgot it wasn't true and told a whole conference-room full of people that it had actually happened.

Maybe that's what happened with the George Carlinbot 3000: the Dudesy dudes fell in love with their own vision for a fully automated luxury Carlinbot and forgot that they had made it up, so they just cheated, assuming they would eventually be able to make a fully operational Battle Carlinbot.

That's basically the Theranos story: a teenaged "entrepreneur" was convinced that she was just about to produce a seemingly impossible, revolutionary diagnostic machine, so she faked its results, abetted by investors, customers and others who wanted to believe:

https://en.wikipedia.org/wiki/Theranos

The thing about stories of AI miracles is that they are peddled by both AI's boosters and its critics. For boosters, the value of these tall tales is obvious: if normies can be convinced that AI is capable of performing miracles, they'll invest in it. They'll even integrate it into their product offerings and then quietly hire legions of humans to pick up the botshit it leaves behind. These abettors can be relied upon to keep the defects in these products a secret, because they'll assume that they've committed an operator error. After all, everyone knows that AI can do anything, so if it's not performing for them, the problem must exist between the keyboard and the chair.

But this would only take AI so far. It's one thing to hear implausible stories of AI's triumph from the people invested in it – but what about when AI's critics repeat those stories? If your boss thinks an AI can do your job, and AI critics are all running around with their hair on fire, shouting about the coming AI jobpocalypse, then maybe the AI really can do your job?

https://locusmag.com/2020/07/cory-doctorow-full-employment/

There's a name for this kind of criticism: "criti-hype," coined by Lee Vinsel, who points to many reasons for its persistence, including the fact that it constitutes an "academic business-model":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

That's four reasons for AI hype:

to win investors and customers;

to cover customers' and users' embarrassment when the AI doesn't perform;

AI dreamers so high on their own supply that they can't tell truth from fantasy;

a business-model for doomsayers who form an unholy alliance with AI companies by parroting their silliest hype in warning form.

But there's a fifth motivation for criti-hype: to simplify otherwise tedious and complex situations. As Jamie Zawinski writes, this is the motivation behind the obvious lie that the "autonomous cars" on the streets of San Francisco have no driver:

https://www.jwz.org/blog/2024/01/driverless-cars-always-have-a-driver/

GM's Cruise division was forced to shutter its SF operations after one of its "self-driving" cars dragged an injured pedestrian for 20 feet:

https://www.wired.com/story/cruise-robotaxi-self-driving-permit-revoked-california/

One of the widely discussed revelations in the wake of the incident was that Cruise employed 1.5 skilled technical remote overseers for every one of its "self-driving" cars. In other words, they had replaced a single low-waged cab driver with 1.5 higher-paid remote operators.

As Zawinski writes, SFPD is well aware that there's a human being (or more than one human being) responsible for every one of these cars – someone who is formally at fault when the cars injure people or damage property. Nevertheless, SFPD and SFMTA maintain that these cars can't be cited for moving violations because "no one is driving them."

But figuring out which person is responsible for a moving violation is "complicated and annoying to deal with," so the fiction persists.

(Zawinski notes that even when these people are held responsible, they're a "moral crumple zone" for the company that decided to enroll whole cities in nonconsensual murderbot experiments.)

Automation hype has always involved hidden humans. The most famous of these was the "mechanical Turk" hoax: a supposed chess-playing robot that was just a puppet operated by a concealed human operator wedged awkwardly into its carapace.

This pattern repeats itself through the ages. Thomas Jefferson "replaced his slaves" with dumbwaiters – but of course, dumbwaiters don't replace slaves, they hide slaves:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

The modern Mechanical Turk – a division of Amazon that employs low-waged "clickworkers," many of them overseas – modernizes the dumbwaiter by hiding low-waged workforces behind a veneer of automation. The MTurk is an abstract "cloud" of human intelligence (the tasks MTurks perform are called "HITs," which stands for "Human Intelligence Tasks").

This is such a truism that techies in India joke that "AI" stands for "absent Indians." Or, to use Jathan Sadowski's wonderful term: "Potemkin AI":

https://reallifemag.com/potemkin-ai/

This Potemkin AI is everywhere you look. When Tesla unveiled its humanoid robot Optimus, they made a big flashy show of it, promising a $20,000 automaton was just on the horizon. They failed to mention that Optimus was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Likewise with the famous demo of a "full self-driving" Tesla, which turned out to be a canned fake:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

The most shocking and terrifying and enraging AI demos keep turning out to be "Just A Guy" (in Molly White's excellent parlance):

https://twitter.com/molly0xFFF/status/1751670561606971895

And yet, we keep falling for it. It's no wonder, really: criti-hype rewards so many different people in so many different ways that it truly offers something for everyone.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

Back the Kickstarter for the audiobook of The Bezzle here!

Image:

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Ross Breadmore (modified) https://www.flickr.com/photos/rossbreadmore/5169298162/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

#pluralistic#ai#absent indians#mechanical turks#scams#george carlin#comedy#body-snatchers#fraud#theranos#guys in robot suits#criti-hype#machine learning#fake it til you make it#too good to fact-check#mturk#deepfakes

2K notes

Text

(it is enough. it is more than enough.)

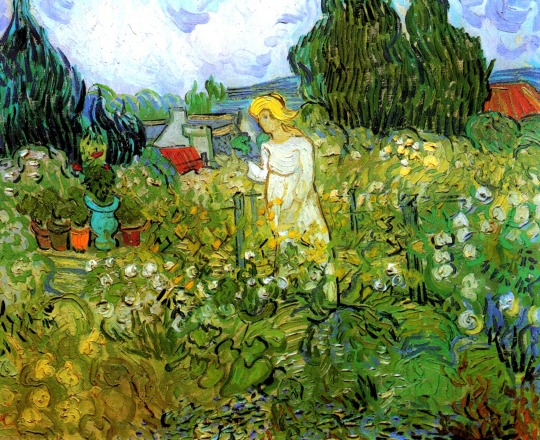

Anne of Green Gables, L.M. Montgomery | Mademoiselle Gachet in her garden at Auvers-sur-Oise, Vincent van Gogh (1890) | 'Toad' - Mary Oliver | About Time (2013) | 'No Choir' - Florence + the Machine | Comic by eOndine | The Amber Spyglass, Philip Pullman | Superstore 'All Sales Final' (2021) | 'The View Between Villages' - Noah Kahan | Meadow with Poplars, Claude Monet (1875) | 'The Orange' - Wendy Cope

#anne of green gables#l m montgomery#vincent van gogh#mary oliver#about time#florence + the machine#the amber spyglass#his dark materials#philip pullman#noah kahan#claude monet#the orange#wendy cope#web weave#web weaving#hopecore#light academia#quotes#literature#art#painting#mine#words#the living of it all#I have been meaning to post this for about a million years#james i hope it lives up to the hype BUH#slightly different vibes from my last web weave lmao

1K notes

Note

*Holds out a poster of The Divine Machine for Grey.*

I may not have heard of them or their music but I can at least be kind to someone who has!

#sprunki#incredibox sprunki#sprunki incredibox#sprunki au#sprunki mortality#sprunki mortality au#sprunki gray#surprise savior art moment#the band is fictional btw to those who are unaware#but x aint gonna lie if there was a band named The Divine Machine x'd be hype for it too

47 notes

Text

I'm trying to expand my social bubble on here. If you're anywhere in the realm of monsterfuckery, hello let's be mutuals and maybe friends!

#love love love interacting with my followers!!!#but i also want to get to know other creators and hype them up#ive found that making friends in a community space is important so you feel like Community and not just a content machine lol#monster fucker#monsterfucker#terato#teratophillia#monster boyfriend#demon boyfriend#demon fucker#monster x reader

57 notes

Text

Always love Alex borderline threatening Paris for not screaming loud enough for Miles, then Miles looking over at Alex when he sings “something beautiful”.

#we love supportive boyfriends#hyping each other up#alex turner#miles kane#arctic monkeys#sias era#little illusion machine#Youtube

22 notes

Note

wtf is that all she has to say about her boyfriend michael fans praised him more than. so is this her saying the show phenomenal or her boyfriend cos honestly this chick don't make sense what ur thoughts on this post

Hi there! And oh, wow. I've had a little time to process this now that I'm home, and I think the biggest thing that comes to mind is how this Insta story feels so...obligatory, and the bare minimum. As you said, it's not clear whether Anna is talking about the production itself or Michael's performance, and there is hardly any energy or enthusiasm to the post, especially not compared to the multiple posts AL made about Photobombing Michael J. Fox at the BAFTAs.

It becomes even more noticeable when you look at it next to the Insta story that Georgia posted:

Georgia and David didn't even attend the show tonight, and yet they hyped Michael up in a way Anna did not. You can feel the warmth and silliness and love in how they're rooting for him and cheering him on--David, in his manic Scottish way, and Georgia in her more sarcastic/dry English way--and how they seem genuinely excited for Michael. Yet I got absolutely none of that from AL's post.

All of the above is augmented by the choice of pictures in the post, with David and Georgia's photo centering Michael, literally and figuratively. He is the focus of the picture and of their attention, and the message there seems to be that Michael is what David and Georgia are most excited about. In contrast, the picture AL used is of a nearly empty dimly lit stage with a hospital bed on it, and I do not think that is by accident.

As I have said previously, my reaction is never to any one post in isolation, but to the continuation of a pattern of posts/comments from Anna over the course of several years. The same thing happened when production photos were released of Michael as Prince Andrew a few months ago, and when he played Chris Tarrant in Quiz in 2021:

AL hated the wig then, and my feeling is that she hates the wig Michael is wearing now, as well as the pyjamas that are his costume for a significant portion of the play and how he looks in them. I think that she does not care at all about the play itself or its significance to Michael, and has no desire to hype him up because his appearance in Nye is not what she considers "attractive." In addition, a fan posted stage door pictures on Twitter, including one with AL, and it seems to very much echo the lack of enthusiasm in her Insta story.

So yes, I think AL's post seems very generic (at best). It makes her come across as uninterested and somehow "removed" from both Michael and the show itself, again in contrast to David and Georgia's picture that conveys the exact opposite.

Those are my thoughts, at any rate, and I could be completely off the mark, but as always I'd be glad to hear from my followers about what you think. Thanks for writing in! x

#angel19924#reply post#michael sheen#welsh seduction machine#nye the play#national theatre#david tennant#soft scottish hipster gigolo#georgia tennant#when your boyfriend and his wife hype you up more than your own girlfriend#they just do not give 'couple' energy and never have#yikes#choices#not all of them good#i just hope Michael knows he is lovely#and deserves good things#anna lundberg#discourse

96 notes

Text

sukuna did not tell kenjaku about his plan to change vessels, which makes me wonder… what makes us, and maybe even sukuna, think that kenjaku told him everything? another yapping session incoming cause I need to get this out of me. we don’t know the terms of their agreement, but sukuna is certain that yuuji’s only purpose was to seal his fingers, and mind you, this is coming from the man who throughout the whole manga kept underestimating him and saying how boring he is, which creates a perfect blind spot. sukuna is so uninterested in yuuji, probably as a way to keep some sort of distance between himself and yuuji, that it is very likely he’s not aware of the actual plans kenjaku had for yuuji. why was it important for them to keep sukuna caged? was yuuji always supposed to have an engraved cursed technique(s)?? why is he slowly turning into another sukuna?? and I’m not saying this to take away yuuji’s agency as a character, but to point out that the lines between the curse known as ryomen sukuna and yuuji are becoming more and more blurry with each new chapter. sukuna referred to himself as the fallen angel/disgraced one, but who was he BEFORE that?? and what’s the actual reason behind angel wanting to kill him? there’s so much we don’t know and honestly… as intelligent as he is, I don’t think sukuna truly knows what is going on either… but I might be totally reaching, and I still don’t know why I keep brainstorming all of it cause gege is just too damn unpredictable, so I really don’t know what’s relevant here and what’s not, but there are just so many unknowns in this story that I just can’t help but wonder… (more in the tags)

#I’m yapping#I might have overlooked some information if so pls correct me!!#I know everyone is hyped for yuuji and I'm happy he's finally getting some long overdue recognition#but this boy is like a killing machine at this point… a weapon even#I remember how everyone kept saying how it sucked to be gojo cause he was essentially used as a weapon#well… the same thing is happening to yuuji rn and no one is talking about it#at least gojo enjoyed fighting… but yuuji doesn’t#for yuuji it’s like a job#and I’m not saying yuuji is another sukuna cause he’s nothing like sukuna#but it almost seems like they want to turn him into another sukuna... but this time he'd be a better#sukuna cause this time he will do as he is told.. a perfect cog#maybe is someone actually stopped for a moment to listen to kenjaku’s plans we wouldn’t be in this situation#itadori yuuji#ryomen sukuna#kenjaku

58 notes

Text

414 News pt 2

Enhanced versions of Ink Machine coming to PS5, Xbox, and Switch this year

#batdr#batim#bendy and the dark revival#bendy and the ink machine#i wonder if this is the only kind of news we'll get today#i have my doubts since they hyped it up so much#guess we'll see

15 notes

Text

The Shortlist

My new music listening method is basically reinventing the hi-fi. I changed the hotkeys on my tablet to volume up/down, pause/play and skip back/forward (so basically a remote), and now I listen to everything through a speaker. I don't have it too loud, since the volume varies a lot on Hypem and the fan and background noise dull it a bit, but I have made a shortlist of generally enjoyable songs heard in this more ambient way.

I skip less since this way is more passive, so I don't react as strongly to certain things I dislike in songs, like speaking or sound effects. I must still be filtering them out subconsciously and just skipping less forcefully, though, since the shortlist so far features none of the elements I dislike. I also listen to more things repeatedly since I'm less focused, and somehow this less effortful method has resulted in 76(!) shortlisted songs in two weeks, compared with roughly 8 shortlisted songs a month from the more focused method.

I switched back to headphones to review them, and all of these songs are very good and sound good simply in discovery order. I might make a new playlist series for the songs I found this way, as I'm surprised it was so effective: listening *less* intently let me hear more music, and more songs all the way through. There's a slightly chaotic energy to this selection too, that kind of random joyfulness found at good music festivals, of just wandering between undefined expressions of energy.

0 notes

Text

Don't think I've posted these yet, but a while ago I made some ieytd palettes, and I decided to clean them up a little in preparation for the third game, you know how it is

Use them if you'd like, share them around, all of that fun stuff. And if you make something cool um 😳🥺 I would love to see if you decided to tag me in it 👉👈 tee hee

#I expect you to die#ieytd#maaan. these games have the worst tagging conventions in the WORLD dude adbhskjfsd. im not tagging the sequel indivisually#i picked up a pen (art)#dude i am HYPED for the third game and for making color palettes for the third game#color palette#you already KNOW im titling the title screen palette cog in my machine baby. you already know

245 notes

Text

Well this 414 was kinda ass

#bendy and the ink machine#batim#bendy the cage#bendy lone wolf#dreams come to life#bendy dctl#bendy and the dark revival#batdr#boris and the dark survival#Roddy Rambles#I mean.. at least Meatly addressed the Cage thing#Not the official Bendy accounts. just Meatly#And I guess we got good merch finally... for 4 days#and shorts that couuuld be good.. dunno who's animating them tho since the team doesn't like crediting artists#this was just. ugh. ughghhhhh#I was thinking this would be like.. I dunno. a thing like 'oh here's Lone Wolf and maybe some teasers'#And that'd be it... this is worse#Nothing about Lone Wolf nothing about the Movie#the Cage is fucking GONE#WHICH NOT TO BE THAT GUY BUT. I KNEW IT#I KNEW something like this would happen#They went quiet! they promised they wouldn't go quiet on development after BATDR and they did!!#Kept talking about B3ndy and wouldn't address people's questions about the Cage#Didn't think it'd be this exactly but I knew something was up#fml#They hyped up this 414 SO hard and for what? a comp of old shorts from one of the MANY people you laid off?#Merch that's actually decent-to-good and it's limited to 4 DAYS??#Like. holy fuck what a nothing day..#EDIT sorry sorry one genuinely awesome thing. Hex merch#Forgot about that

13 notes

Text

She should be allowed some stabbing, as a treat

#tma#melanie king#the magnus archives#I was so hyped for her to stab Elias and for nothing#digital art#florence and the machine#i love her music

48 notes

Text

I wonder what Hakari and Uraume been up to

Because Gege obviously don’t know.

#like I remember how hype people were to see this fight#only for the fight never to be seen 😭😭#what they talking about what philosophical quandaries are they pondering?#is Hakari teaching Uraume how to gamble with pachinko machines?#at this point they might just be spectating the sukuna matches#jjk#jjk funny#jjk memes#jjk shitpost#shitposting#jujutsu kaisen#hakari kinji#uraume#uraume jjk#jjk hakari

23 notes

Text

About Genshin Impact and the technology of Teyvat

We get a kamera at the start of the game from Xu for the “Snapshot” world quest. In it, Xu remarks that the kamera is a new invention from Fontaine.

Xu shows surprise at the Traveler knowing what it is, which supports the idea that the kamera is a new invention and therefore pricey and rare at this point. Later in the Traveler’s journey, we get quests to take pictures of things. This could mean that the kamera, or at least knowledge of it, becomes more widespread as time goes on.

During the 4.3 Fontinalia Festival, the focus was placed on films. This was an attempt by the Fontaine Film Association to introduce films to the populace, making the films produced for the festival its main point.

This suggests that films are still a new invention, since they aren’t widespread, just like the kamera at the start of the game, which was about 3 years ago. And based on Furina’s Character Demo “Furina: All the World’s a Stage,” we can assume that the films recorded were black and white, unless we assume that the mini-games during the 4.3 “Roses and Muskets” event were canonical in how they showed film quality. Neither had any sound, but one was in color and the other was not.

This gives us an estimate of where Teyvat stands, in terms of technological advancement, compared to our world. Films started becoming a thing at the end of the 1800s and the start of the 1900s. Color film started showing up around the 1930s, but became more accessible and profitable later on. Films started getting sound around the mid-to-late 1920s. This places Teyvat at roughly the start of the 1900s.

Now I’d like to bring up Khaenri'ah. It was heralded as “the pride of humanity,” as Dainsleif put it. Khaenri'ah has more than one notable scientific achievement linked to it, one of these being the “Field Tillers,” aka Ruin Machines. Ruin Machines range from “simple” Ruin Guards to Ruin Serpents.

Ruin Machines seem to have been around for a while, even since the Archon War, given the Ruin Hunter stored by Guizhong in a domain. This means the technology may have existed for thousands of years, likely used as a means of defense during the war and preserved thereafter. Knowledge of this technology didn’t spread much beyond Khaenri'ah, as shown by the fact that the people of Teyvat only started calling them “Ruin Machines” after the machines spread across the world following the Cataclysm.

Around Sumeru there are three giant Ruin Machines called “Ruin Golems.” These are giant mecha-style machines that were piloted by a crew, and, get this, they include a colored screen. By screen I mean the type of screen you’re viewing this through: footage from outside the Ruin Golem is broadcast to the screen so the people piloting it can see where they’re going or what they’re doing.

During the “Vimana Agama” world quest, which is part of the Aranyaka quest line, we can go into the Ruin Golem in Devantaka Mountain by Port Ormos. There we can use the actual screen and see through it. This machine has sat unused for hundreds of years, and its screen is still in working condition.

So, simply put, Khaenri'ah was very mechanically advanced.

BUT!

I have yet to mention the Terminal Viewfinders in Fontaine. You know, those eyeball machines you use to transfer energy to terminals in puzzles in the Fontaine Research Institute area: the machines with working colored screens. Mind you, this isn’t technology you can find throughout Fontaine, only in certain areas, usually accompanied by a researcher from the Institute. So it is more comparable to machines found in laboratories, not accessible to common people.

But still, at the very least, Fontaine is near the level of mechanical advancement that Khaenri'ah had somewhere between a few hundred and a few thousand years ago.

And I have yet to mention everything going on in King Deshret’s places in the Sumeru desert. And I’m not going to go into more than this mention because that is a headache I have yet to even get around to in game.

TL;DR Khaenri'ah was so technologically advanced that its colored-screen technology still works hundreds of years later. Fontaine is around that point with the Research Institute, while normal day-to-day people are at about the silent-movie stage of advancement, and I have no idea what’s going on with the Sumeru Desert.

#looking into this mess has made me even more annoyed with Khaenri'ah#what did you need the fucking war machines for. and why the code names. and also the weird decender orphanage thing#I just don’t like the governing body or people of authority in Khaenri'ah pretty much#Dain can hype it up as much as he wants but I just don’t trust it#also the whole abyss order bullshit#yeah but anyway#I got thinking about the technological advancements of Teyvat and got invested#genshin impact#genshin impact lore#teyvat#khaenri'ah#ruin golem#ruin machines#genshin lore#dainsleif#fontaine#Fontaine research institute#Genshin impact 4.3#lore#world building#technology#machines#Genshin kamera#what am I supposed to tag this as#lore discussion#hoyoverse#mihoyo#king deshret#sumeru desert#Genshin impact Field tillers

49 notes
