#google facial recognition system

#facial recognition#facial recognition software#boycott israel#boycott google#google software is being used to help target and kill Palestinians#google#apartheid#save palestine#ethnic cleansing#israel is an apartheid state#seek truth#free palestine 🇵🇸#genocide#illegal occupation#israel is committing genocide#israeli war crimes#project nimbus#google photos#israeli hit list#israeli war criminals#israel is a terrorist state#surveillance state#mass surveillance#even AI is racist with facial recognition systems being notoriously unreliable for people with darker skin tones#no tech for apartheid#human rights violations#conflict sensitive human rights due diligence#seems like that can be bypassed if the money is good enough#dystopian nightmare

Twitter Blue Tests Verification With Government ID and Selfie

X (the social media site formerly known as Twitter) is in the process of launching a new identity verification feature that could prove controversial. The feature, which is currently only offered to/forced on premium “Blue” subscribers, asks users to fork over a selfie and a picture of a government-issued ID to verify that they are who they say they are.

#Biometrics#Computer access control#Elon Musk#Facial recognition system#Gizmodo#Google#ID.me#Identity document#Identity management#Identity verification service#Internet#Internet privacy#Microsoft#Musk#Nima Owji#PAYPAL#Surveillance#Technology#twitter#Uber#Verification

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where ai can help us do things a lot better than we could without it. here are a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works alongside us, doing things in parallel with us. it's not overstepping; it's sorting, analyzing images pixel by pixel to detect abnormalities that we as humans cannot, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no comprehensive federal law in the united states governing how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (why would they ask if there are no laws to stop them? and wtf does some idiot software engineer in the back room of an office, making 3x your salary, care about what it means to you?). oh, that little fic you posted to wattpad that got a lot of attention? well, now it's being used to teach ai how to write. oh, that sketch you made in adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that uses facial recognition to let residents inside? oh, they didn't train their model on anyone but white people, so now all the black people living in that building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men than women work that role (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.
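here's a tiny, made-up sketch of the resume example (toy data and hypothetical function names, not how any real company's screener works): a "model" that scores applicants by how often people like them got hired in the past. it never looks at skill, so all it can do is copy whatever skew is in its training data.

```python
from collections import Counter

# Hypothetical historical hiring records: (group, was_hired).
# The skew is deliberate: past hiring for this role favored men.
history = (
    [("man", True)] * 80 + [("man", False)] * 20
    + [("woman", True)] * 20 + [("woman", False)] * 80
)

def train(records):
    """Learn P(hired | group) by counting (a stand-in for a real model)."""
    hired = Counter(group for group, ok in records if ok)
    total = Counter(group for group, _ in records)
    return {group: hired[group] / total[group] for group in total}

def screen(model, group, threshold=0.5):
    """Pass a resume only if its group's learned hire rate clears the threshold."""
    return model[group] >= threshold

model = train(history)
print(model)                   # {'man': 0.8, 'woman': 0.2}
print(screen(model, "woman"))  # False
```

swap in balanced history (say, 50 hires out of 100 for each group) and both groups clear the threshold. the bias lives in the data, not in any single line of the code.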

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

Google’s new phones can’t stop phoning home

On OCTOBER 23 at 7PM, I'll be in DECATUR, presenting my novel THE BEZZLE at EAGLE EYE BOOKS.

One of the most brazen lies of Big Tech is that people like commercial surveillance, a fact you can verify for yourself by simply observing how many people end up using products that spy on them. If they didn't like spying, they wouldn't opt into being spied on.

This lie has spread to the law enforcement and national security agencies, who treasure Big Tech's surveillance as an off-the-books trove of warrantless data that no court would ever permit them to gather on their own. Back in 2017, I found myself at SXSW, debating an FBI agent who was defending the Bureau's gigantic facial recognition database, which, he claimed, contained the faces of virtually every American:

https://www.theguardian.com/culture/2017/mar/11/sxsw-facial-recognition-biometrics-surveillance-panel

The agent insisted that the FBI had acquired all those faces through legitimate means, by accessing public sources of people's faces. In other words, we'd all opted in to FBI facial recognition surveillance. "Sure," I said, "to opt out, just don't have a face."

This pathology is endemic to neoliberal thinking, which insists that all our political matters can be reduced to economic ones, specifically, the kind of economic questions that can be mathematically modeled and empirically tested. It would be great if all our thorniest problems could be solved like mathematical equations.

Unfortunately, there are key elements of these systems that can't be reliably quantified and turned into mathematical operators, especially power. The fact that someone did something tells you nothing about whether they chose to do so – to understand whether someone was coerced or made a free choice, you have to consider the power relationships involved.

Conservatives hate this idea. They want to live in a neat world of "revealed preferences," where the fact that you're working in a job where you're regularly exposed to carcinogens, or that you've stayed with a spouse who beats the shit out of you, or that you're homeless, or that you're addicted to Oxy, is a matter of choice. Monopolies exist because we all love the monopolist's product best, not because they've got monopoly power. Jobs that pay starvation wages exist because people want to work full time for so little money that they need food-stamps just to survive. Intervening in any of these situations is "woke paternalism," where the government thinks it knows better than you and intervenes to take away your right to consume unsafe products, get maimed at work, or have your jaw broken by your husband.

Which is why neoliberals insist that politics should be reduced to economics, and that economics should be carried out as if power didn't exist:

https://pluralistic.net/2024/10/05/farrago/#jeffty-is-five

Nowhere is this stupid trick more visible than in the surveillance fight. For example, Google claims that it tracks your location because you asked it to, by using Google products that make use of your location without clicking an opt out button.

In reality, Google has the power to simply ignore your preferences about location tracking. In 2021, the Arizona Attorney General's privacy case against Google yielded a bunch of internal memos, including memos from Google's senior product manager for location services Jen Chai complaining that she had turned off location tracking in three places and was still being tracked:

https://pluralistic.net/2021/06/01/you-are-here/#goog

Multiple googlers complained about this: they'd gone through dozens of preference screens, hunting for "don't track my location" checkboxes, and still they found that they were being tracked. These were people who worked under Chai on the location services team. If the head of that team, and her subordinates, couldn't figure out how to opt out of location tracking, what chance did you have?

Despite all this, I've found myself continuing to use stock Google Pixel phones running stock Google Android. There were three reasons for this:

First and most importantly: security. While I worry about Google tracking me, I am as worried (or more) about foreign governments, random hackers, and dedicated attackers gaining access to my phone. Google's appetite for my personal data knows no bounds, but at least the company is serious about patching defects in the Pixel line.

Second: coercion. There are a lot of apps that I need to run – to pay for parking, say, or to access my credit union or control my rooftop solar – that either won't run on jailbroken Android phones or require constant tweaking to keep running.

Finally: time. I already have the equivalent of three full time jobs and struggle every day to complete my essential tasks, including managing complex health issues and being there for my family. The time I take out of my schedule to actively manage a de-Googled Android would come at the expense of either my professional or personal life.

And despite Google's enshittificatory impulses, the Pixels are reliably high-quality, robust phones that get the hell out of the way and let me do my job. The Pixels are Google's flagship electronic products, and the company acts like it.

Until now.

A new report from Cybernews reveals just how much data the next generation Pixel 9 phones collect and transmit to Google, without any user intervention, and in defiance of the owner's express preferences to the contrary:

https://cybernews.com/security/google-pixel-9-phone-beams-data-and-awaits-commands/

The Pixel 9 phones home every 15 minutes, even when it's not in use, sharing "location, email address, phone number, network status, and other telemetry." Additionally, every 40 minutes, the new Pixels transmit "firmware version, whether connected to WiFi or using mobile data, the SIM card Carrier, and the user’s email address." And even if you've never opened Google Photos, the phone contacts Google Photos’ Face Grouping API at regular intervals. Another process periodically contacts Google's Voice Search servers, even if you never use Voice Search, transmitting "the number of times the device was restarted, the time elapsed since powering on, and a list of apps installed on the device, including the sideloaded ones."
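To put those intervals in perspective, here's a back-of-the-envelope sketch. It assumes the 15- and 40-minute intervals from the Cybernews report are exact and that the phone is powered on all day; both are simplifying assumptions, not measurements.

```python
# Intervals as reported by Cybernews; exact timing and 24h uptime are assumptions.
MINUTES_PER_DAY = 24 * 60

def checkins_per_day(interval_minutes):
    """Number of scheduled transmissions in 24 hours at a fixed interval."""
    return MINUTES_PER_DAY // interval_minutes

telemetry = checkins_per_day(15)  # location, phone number, network status...
firmware = checkins_per_day(40)   # firmware version, carrier, email address
print(60 // 15, telemetry, firmware, telemetry * 7)  # 4 96 36 672
```

Four check-ins an hour works out to 96 a day and 672 a week, before counting the 36 daily firmware reports or any of the Photos and Voice Search traffic.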

All of this is without any consent. Or rather, without any consent beyond the "revealed preference" of just buying a phone from Google ("to opt out, don't have a face").

What's more, the Cybernews report probably undercounts the amount of passive surveillance the Pixel 9 undertakes. To monitor their testbench phone, Cybernews had to root it with Magisk, a tool for rooting Android phones. In order to do that, they had to disable the AI features that Google touts as the centerpiece of Pixel 9. AI is, of course, notoriously data-hungry and privacy invasive, and all the above represents the data collection the Pixel 9 undertakes without any of its AI nonsense.

It just gets worse. The Pixel 9 also routinely connects to a "CloudDPC" server run by Google. Normally, this is a server that an enterprise customer would connect its employees' devices to, allowing the company to push updates to employees' phones without any action on their part. But Google has designed the Pixel 9 so that privately owned phones do the same thing with Google, allowing for zero-click, no-notification software changes on devices that you own.

This is the kind of measure that works well, but fails badly. It assumes that the risk of Pixel owners failing to download a patch outweighs the risk of a Google insider pushing out a malicious update. Why would Google do that? Well, perhaps a rogue employee wants to spy on his ex-girlfriend:

https://www.wired.com/2010/09/google-spy/

Or maybe a Google executive wins an internal power struggle and decrees that Google's products should be made shittier so you need to take more steps to solve your problems, which generates more chances to serve ads:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

Or maybe Google capitulates to an authoritarian government who orders them to install a malicious update to facilitate a campaign of oppressive spying and control:

https://en.wikipedia.org/wiki/Dragonfly_(search_engine)

Indeed, merely by installing a feature that can be abused this way, Google encourages bad actors to abuse it. It's a lot harder for a government or an asshole executive to demand a malicious downgrade of a Google product if users have to accept that downgrade before it takes effect. By removing that choice, Google has greased the skids for malicious downgrades, from both internal and external sources.

Google will insist that these anti-features – both the spying and the permissionless updating – are essential, that it's literally impossible to imagine building a phone that doesn't do these things. This is one of Big Tech's stupidest gambits. It's the same ruse that Zuck deploys when he says that it's impossible to chat with a friend or plan a potluck dinner without letting Facebook spy on you. It's Tim Cook's insistence that there's no way to have a safe, easy to use, secure computing environment without giving Apple a veto over what software you can run and who can fix your device – and that this veto must come with a 30% rake from every dollar you spend on your phone.

The thing is, we know it's possible to separate these things, because they used to be separate. Facebook used to sell itself as the privacy-forward alternative to Myspace, where they would never spy on you (not coincidentally, this is also the best period in Facebook's history, from a user perspective):

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3247362

And we know it's possible to make a Pixel that doesn't do all this nonsense because Google makes other Pixel phones that don't do all this nonsense, like the Pixel 8 that's in my pocket as I type these words.

This doesn't stop Big Tech from gaslighting* us and insisting that demanding a Pixel that doesn't phone home four times an hour is like demanding water that isn't wet.

*pronounced "jass-lighting"

Even before I read this report, I was thinking about what I would do when I broke my current phone (I'm a klutz and I travel a lot, so my gadgets break pretty frequently). Google's latest OS updates have already crammed a bunch of AI bullshit into my Pixel 8 (and Google puts the "invoke AI bullshit" button in the spot where the "do something useful" button used to be, meaning I accidentally pull up the AI bullshit screen several times/day).

Assuming no catastrophic phone disasters, I've got a little while before my next phone, but I reckon when it's time to upgrade, I'll be switching to a phone from the Calyx Institute. Calyx is an incredible, privacy-focused nonprofit whose founder, Nicholas Merrill, was the first person to successfully resist one of the Patriot Act's National Security Letters, spending 11 years defending his users' privacy from secret – and, ultimately, unconstitutional – surveillance:

https://www.eff.org/deeplinks/2013/03/depth-judge-illstons-remarkable-order-striking-down-nsl-statute

Merrill and Calyx have tapped into various obscure corners of US wireless spectrum licenses that require major carriers to give ultra-cheap access to nonprofits, allowing them to offer unlimited, surveillance-free, Net Neutrality respecting wireless data packages:

https://memex.craphound.com/2016/09/22/i-have-found-a-secret-tunnel-that-runs-underneath-the-phone-companies-and-emerges-in-paradise/

I've been a very happy Calyx user in years gone by, but ultimately, I slipped into the default of using stock Pixel handsets with Google's Fi service.

But even as I've grown increasingly uncomfortable with the direction of Google's Android and Pixel programs, I've grown increasingly impressed with Calyx's offerings. The company has graduated from selling mobile hotspots with unlimited data SIMs to selling jailbroken, de-Googled Pixel phones that have all the hardware reliability of a Pixel, coupled with an alternative app suite and your choice of a Calyx SIM and/or a Calyx hotspot:

https://calyxinstitute.org/

Every time I see what Calyx is up to, I think, dammit, it's really time to de-Google my phone. With the Pixel 9 descending to new depths of enshittification, that decision just got a lot easier. When my current phone croaks, I'll be talking to Calyx.

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/08/water-thats-not-wet/#pixelated

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#google#android#pixel#privacy#pixel 9#locational privacy#back doors#chekhov's gun#cybernews#gaslighting

Please read all the way through. Important things in bold

Now's a good time to remember that all Chromium- and Microsoft-based browsers - yes, including Brave and Opera - are NOT. SAFE.

Switch your browser to Firefox, and set DuckDuckGo, Ecosia, or OceanHero as your default search engine. Add extensions like Ghostery, AdNauseam, and Facebook Container to block ads and trackers and mess with the information they're getting from you. > If anyone is curious, I have an image of all my extensions attached at the bottom. Also PLEASE let me know if you find an alternative to YouTube.

Switch your Gmail account to Proton - you can have Gmail forward any new emails to it from that point on if you want.

Get everything off Google Docs, Google Drive, Google Photos. Use WPS, use LibreOffice, use a thumbdrive I don't care but not Google. Not Microsoft.

YOU CAN OPT OUT OF FACIAL RECOGNITION WHEN TRAVELING. It's not about 'we already have everything on you'. Doesn't matter. It's about normalizing consent in the collection of data.

Delete all period tracking apps you have. I used to think Stardust was safe because of end-to-end encryption, but they've added an option to log in with Google, so take that with a tablespoon of salt.

Discord and DMs on any social media platform are not safe. I don't know the safety level of DMs on here, but I can't imagine it's much higher. Discord, I know for a fact, has handed over chat history to law enforcement before, and they will do so again. Use an end-to-end encrypted messaging app AND DO NOT USE SIGNAL. My personal recommendation is Cwtch. It runs over the Tor network, and unless you change the settings it will not show notifications for messages, and it deletes the message history when the app is closed. It is password protected as well.

On that note, if you need to look something up for things like reproductive care, use Tor Browser (or Onion Browser on iPhone). It's about as safe as it gets, and yes, it takes a bit to load, but that's because it bounces your traffic through several volunteer-run relays instead of connecting you directly.
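Tor's protection comes from onion routing: your traffic gets one layer of encryption per relay, and each relay can peel off only its own layer, so no single relay sees both who you are and what you asked for. Here's a toy sketch of just the layering idea. The relay keys are made up, and the XOR "cipher" is deliberately insecure; real Tor negotiates a key with each relay and uses real cryptography.

```python
import hashlib

def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 of key + counter. NOT secure; illustration only."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]

def xor_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

def wrap(message: bytes, keys: list[bytes]) -> bytes:
    """Client side: apply the exit relay's layer first and the guard's last."""
    for key in reversed(keys):
        message = xor_layer(key, message)
    return message

# Hypothetical per-relay keys for a three-hop path: guard, middle, exit.
relay_keys = [b"guard-key", b"middle-key", b"exit-key"]

onion = wrap(b"hello from the client", relay_keys)
# Each relay, in path order, removes only the layer for its own key.
for key in relay_keys:
    onion = xor_layer(key, onion)
print(onion)  # b'hello from the client'
```

In real Tor, the guard knows who sent the traffic but can't read it, and the exit can see the request but not who sent it. (XOR layers also happen to commute, which real ciphers generally don't; one more reason this is only a sketch.)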

This bit may be on the more extreme side, but as soon as I have the energy, I'm going to see if I can switch my laptop over to Linux.

Anyone with a uterus, switch to something reusable like a cup, disc, or period underwear. If needed, I have a discount link to SAALT Co that gets you 20% off (WINTER67078). They've got cups, cup cleaners, and a variety of period underwear. If not, you can make reusable pads yourself. I also just saw someone made 'petals,' which is the fabric equivalent of making a sort of toilet paper cup.

I am aware this is not a substitute for medication; however, Emerald Coast Alternatives does have a tea blend that went viral for being 'herbal Adderall,' and I use their PTSD blend daily. There is also a panic attack blend that knocked my nervous system on its ass the first time I tried it. I do not have an affiliate link with them myself, but they did just start an affiliate program. I'm going to reblog this with all the codes/links I find for that.

There's a group called the trans housing project and through Alliance Defending Liberty, there is a list of resources for aid with all sorts of things

Learn what you can make at home. Adapt recipes. Propagate. Grow your own food. Find more sustainable alternatives to things you'll need to replace - Oak & Willow is a good one for household cleaning supplies, and I've seen Who Gives A Crap pop up several times. They have toilet paper made from recycled paper, and it's apparently cheaper per roll than other brands? Companies like Misfits Market sell produce that stores rejected over imperfections, for a cheaper price. I highly recommend getting a portable solar panel or something if you can. It's not much, but it's something.

And if worst comes to pass, look at me. Look me in the eyes - now that you think about it, you have never met anyone who is neurodivergent or queer. Anyone you think is an immigrant, they were born and raised here. You have no clue if anyone you know is on birth control or has had an abortion. Someone tries talking to you about politics? "Who has time to talk about that" or "Sorry, I'm not political."

#afab#queer in america#queer#gay#trans#uterus#period care#self sustainable#opt out facial recognition#data collection#spyware#cybersecurity#we live in a dystopia

🚨 Euro-Mediterranean Human Rights Monitor:

—

The involvement of technology and social media companies in causing the killing of civilians by "israel" in the Gaza Strip necessitates an immediate investigation.

These companies should be held accountable and liable if their complicity or failure to exercise due diligence in preventing access to and exploitation of their users’ private information is proven, obligating them to ensure their services are not misused in war zones and that they protect user privacy.

"Israel" uses various technology systems supported by artificial intelligence, such as Gospel, Fire Factory, Lavender, and Where’s Daddy, all operating within a system aimed at monitoring Palestinians illegally and tracking their movements.

These systems function to identify and designate suspected individuals as legitimate targets, based mostly on shared characteristics and patterns rather than specific locations or personal information.

The accuracy of the information provided by these systems is rarely verified by the occupation army, despite a known large margin of error due to the nature of these systems' inability to provide updated information.

The Lavender system, heavily used by the occupation army to identify suspects in Gaza before targeting them, is based on probability logic, a hallmark of machine learning algorithms.

"Israeli" military and intelligence sources have admitted to attacking potential targets without consideration for the principle of proportionality and collateral damage, with suspicions that the Lavender system relies on tracking social media accounts among its sources.

Recently, "israel's" collaboration with Google was revealed, including several technological projects, among them Project Nimbus which provides the occupation army with technology that facilitates intensified surveillance and data collection on Palestinians illegally.

The occupation army also uses Google's facial recognition feature in photos to monitor Palestinian civilians in the Gaza Strip and to compile an "assassination list," collecting a vast amount of images related to the October 7th operation.

The Euro-Mediterranean field team has collected testimonies from Palestinian civilians directly targeted in Israeli military attacks following their activities on social media sites, without any involvement in military actions.

The potential complicity of companies like Google and Meta and other technology and social media firms in the violations and crimes committed by "israel" breaches international law rules and the companies' declared commitment to human rights.

No social network should provide this kind of private information about its users and actually participate in the mass genocide conducted by "israel" against Palestinian civilians in Gaza, which demands an international investigation providing guarantees for accountability and justice for the victims.

#meta#google#alphabet#social media#instagram#ai#machine learning#palestine#free palestine#gaza#free gaza#jerusalem#israel#tel aviv#gaza strip#from the river to the sea palestine will be free#joe biden#benjamin netanyahu#news#breaking news#gaza news#genocide#palestinian genocide#gaza genocide#human rights#social justice#surveillance#iof#idf#israeli terrorism

RECENT SEO & MARKETING NEWS FOR ECOMMERCE, AUGUST 2024

Hello, and welcome to my very last Marketing News update here on Tumblr.

After today, these reports will now be found at least twice a week on my Patreon, available to all paid members. See more about this change here on my website blog: https://www.cindylouwho2.com/blog/2024/8/12/a-new-way-to-get-ecommerce-news-and-help-welcome-to-my-patreon-page

Don't worry! I will still be posting some short pieces here on Tumblr (as well as some free pieces on my Patreon, plus longer posts on my website blog). However, the news updates and some other posts will be moving to Patreon permanently.

Please follow me there! https://www.patreon.com/CindyLouWho2

TOP NEWS & ARTICLES

A US court ruled that Google is a monopoly, and has broken antitrust laws. This decision will be appealed, but in the meantime, could affect similar cases against large tech giants.

Did you violate a Facebook policy? Meta is now offering a “training course” in lieu of having the page’s reach limited for Professional Mode users.

Google Ads shown in Canada will have a 2.5% surcharge applied as of October 1, due to new Canadian tax laws.

SEO: GOOGLE & OTHER SEARCH ENGINES

Search Engine Roundtable’s Google report for July is out; we’re still waiting for the next core update.

SOCIAL MEDIA - All Aspects, By Site

Facebook (includes relevant general news from Meta)

Meta’s latest legal development: a $1.4 billion settlement with Texas over facial recognition and privacy.

Instagram

Instagram is highlighting “Views” in its metrics in an attempt to get creators to focus on reach instead of follower numbers.

Pinterest

Pinterest is testing outside ads on the site. The ad auction system would include revenue sharing.

Reddit

Reddit confirmed that anyone who wants to use Reddit posts for AI training and other data collection will need to pay for them, just as Google and OpenAI did.

Second quarter 2024 was great for Reddit, with revenue growth of 54%. Like almost every other platform, they are planning on using AI in their search results, perhaps to summarize content.

Threads

Threads now claims over 200 million active users.

TikTok

TikTok is now adding group chats, which can include up to 32 people.

TikTok is being sued by the US Federal Trade Commission, for allowing children under 13 to sign up and have their data harvested.

Twitter

Twitter seems to be working on the payments option Musk promised last year. Tweets by users in the EU will at least temporarily be pulled from the AI-training for “Grok”, in line with EU law.

CONTENT MARKETING (includes blogging, emails, and strategies)

Email software Mad Mimi is shutting down as of August 30. Owner GoDaddy is hoping to move users to its GoDaddy Digital Marketing setup.

Content ideas for September include National Dog Week.

You can now post on Substack without having an actual newsletter, as the platform tries to become more like a social media site.

As of November, Patreon memberships started in the iOS app will be subject to a 30% surcharge from Apple. Patreon is giving creators the ability to add that charge to the member's bill, or pay it themselves.

ONLINE ADVERTISING (EXCEPT INDIVIDUAL SOCIAL MEDIA AND ECOMMERCE SITES)

Google worked with Meta to break the search engine’s rules on advertising to children through a loophole that showed ads for Instagram to YouTube viewers in the 13-17 year old demographic. Google says they have stopped the campaign, and that “We prohibit ads being personalized to people under-18, period”.

Google’s Performance Max ads now have new tools, including some with AI.

Microsoft’s search and news advertising revenue was up 19% in the second quarter, a very good result for them.

One of the interesting tidbits from the recent Google antitrust decision is that Amazon's slice of retail advertising is bigger than either Google's or Meta's.

BUSINESS & CONSUMER TRENDS, STATS & REPORTS; SOCIOLOGY & PSYCHOLOGY, CUSTOMER SERVICE

More than half of Gen Z claim to have bought items while spending time on social media in the past half year, higher than other generations.

Shopify’s president claimed that Christmas shopping started in July on their millions of sites, with holiday decor and ornament sales doubling, and advent calendar sales going up a whopping 4,463%.

Oh! Fun (not really) HC I've been thinking about for a little bit: the main reason the animatronics attack people when in virus mode isn't just because they're being partially controlled by Vanny/Afton's influence, but because they physically cannot recognize anyone or anything around them.

Their eyesight is (literally) AI-generated.

A bit more of an in-depth explanation below the cut:

So, how I see it, the animatronics don't have actual eyesight (obviously). What they do have is an approximate map of where everything is in the Pizzaplex programmed into their eyes to simulate what it's like walking around the space. Like a virtual housing tour meets Google Maps, only much smoother and more accurate. (The V.A.N.N.Y. layout is also programmed in, and is how Roxanne can see through walls/how the animatronics can still react to things seen "out of barriers").

When the virus kicks in, everything sort of becomes...fuzzy and unrecognizable. Like this image:

There's a part of them that knows they should be able to recognize the places around them, but for some reason, they just can't.

And it's the same for faces. Their facial recognition software cannot identify whoever they're looking at because the person's facial structure is constantly morphing and shifting (7:15 in the video below for a good example of what I mean):

Pre-DLC, this could be why previous human employees are being killed/harmed, because at some point or another the virus wipes the robot's ability to recognize who is approaching them, and their system deems them a threat. (I imagine it's particularly bad for Monty.) It also explains why none of them seem to notice or care about Vanny's presence in the 'Plex, because the virus is messing with their ability to see what is and isn't beyond the "barriers" in their system-generated eyesight. (Why can Gregory see her? I have no idea! Don't think about it too hard.)

Post-DLC, it kind of explains why Sun thinks Cassie is a mechanic at first, because his system can only recognize the V.A.N.N.Y. mask, but once she takes it off, Eclipse is only vaguely aware that she is a child who is somewhere she shouldn't be, without being able to seemingly recognize what a horrible state they or the Daycare is actually in (He just needs to clean up, right? And then the kids will be back soon!). It could also be why Roxanne mistook Cassie for Gregory at first.

It's why the animatronics have trouble recognizing their surroundings, getting around the Pizzaplex, or even realizing what sort of state it/they are currently in. They're just stuck in an endless void of constantly shifting colors, moving shapes, and distorted faces.

#fnaf#five nights at freddy's#security breach#fnaf security breach#fnaf sb#headcanons#thanks for coming to my fred talk#long post#i've been thinking about this for a while#i also have a very sad hc about chica#sfw#angst#i hope this posts correctly#video#uhhh#flashing images#for the lh video ref#it's so uncanny#but exactly how i imagine their facial recognition software works#i am bad at explaining things

21 notes

Text

Blog Post #4

What is Intersectionality?

Intersectionality is about how the oppression a person faces is shaped by the overlap of their race, ethnicity, and gender. I would like to add a form of oppression not mentioned in our TED Talk video by Dr. Kimberlé Crenshaw: ability. Many people are unfortunately unable to do everyday things, and at times this turns into oppression from other people. Dr. Kimberlé Crenshaw speaks about African American women and how, to this day, they continue to be oppressed through intersectionality because of their race and gender. Unfortunately, many people don't turn their heads when it comes to women's stories in general, and when those women are African American even fewer people do, so their stories go unheard.

How does the New Racial Caste System develop?

This New Racial Caste System is a system that the US carceral state built on a colorblind ideology. This colorblind ideology negatively affects minorities who are struggling, and at times even those who are not. It labels minorities as “criminals,” a label “that permits legalized discrimination against them” (Benjamin, 28), as stated in our reading “Race After Technology Intro.” This is unfortunate, as it seems the government has found yet another loophole to discriminate.

Benjamin, R. (2020). Race after technology: Abolitionist tools for the New Jim Code. Polity.

Do we believe a company when there is a glitch?

In one of this week's readings, “Algorithms of Oppression” by Noble, we read about a “glitch” in Google's systems in 2015: “...a “glitch” in Google’s algorithm led to a number of problems through auto-tagging and facial recognition…” (Noble, 36). At first this doesn't sound too bad, as it seems like it was genuinely just a glitch. However, the details change the picture. The “glitch” tagged African Americans as apes and animals, and searching the derogatory word often used for African Americans would take you to the White House, where President Obama lived at the time. Google claimed this was all just an error, but was it really? It could have been, but it could also have been an employee messing around to see if anyone would notice. Or could it have been a slip-up in which the technology gave power to the wrong person?

Are humans along with technology an issue to society?

Throughout the development of technology, humans have learned to enjoy it, but they have also learned to abuse it in many ways. One modern example is people being harsh online and discriminating against others on applications like Twitter and Instagram. In our reading “Algorithms of Oppression” by Noble, we see examples of this in the abuse of certain words and images. This does not help society in any way, as it normalizes this kind of negative behavior and leaves it on the internet forever.

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

3 notes

Text

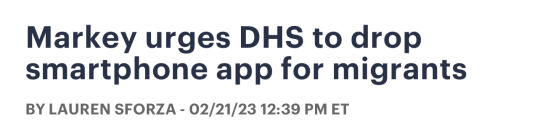

Sen. Ed Markey (D-Mass.) sent a letter Tuesday to the Department of Homeland Security (DHS) urging it to discontinue a smartphone app that migrants seeking asylum at the southern border are required to use.

The CBP One app, which was rolled out in 2021, was established to allow migrants to submit applications for asylum before they cross the U.S. border. Markey said in his letter that requiring migrants to submit sensitive information, including biometric and location data, on the app raises “serious privacy concerns,” and demanded that the DHS cease its use of it.

“This expanded use of the CBP One app raises troubling issues of inequitable access to — and impermissible limits on — asylum, and has been plagued by significant technical problems and privacy concerns. DHS should shelve the CBP One app immediately,” Markey said in his letter.

“Rather than mandating use of an app that is inaccessible to many migrants, and violates both their privacy and international law, DHS should instead implement a compassionate, lawful, and human rights centered approach for those seeking asylum in the United States,” he continued.

He said the app has also faced technical problems, including facial recognition software that misidentifies people of color.

“Technology can facilitate asylum processing, but we cannot allow it to create a tiered system that treats asylum seekers differently based on their economic status — including the ability to pay for travel — language, nationality, or race,” he said.

The app has negative ratings on both the Google Play and Apple app stores, with many users criticizing the app for crashing and other technical issues.

#politics#ed markey#immigration#asylum seekers#joe biden#cbp one app#dhs#privacy rights#surveillance state#facial recognition

54 notes

Text

also, bc I’m seeing a lot of people posting transition timelines…

be *very* wary of putting your face on social media, ESPECIALLY b&a transition timeline pics. if your posts can be found on Google/seen without logging in, they can be scraped by facial recognition bots and fed into databases like ClearviewAI (which is only the most publicized example, there *are* others.) law enforcement is increasingly collaborating with the private sector in the facial recognition technology field, e.g. the systems being deployed by big box stores to deter theft are also available to law enforcement, and “hits” from those systems can be cross-referenced with other systems like driver's license databases and the facial recognition system deployed by CBP along interstate highways along the southern border.

in this day and age where trans existence is increasingly becoming criminalized it is more important than ever that we not enable our own electronic persecution. fight back, make noise, but be smart and use caution when it comes to your identity. don’t put your face on the internet, make sure your social media posts don’t contain personally identifying information (like talking about your job, partners, where you live, etc.) and ALWAYS WEAR A MASK IN PUBLIC.

11 notes

Text

Urgent need to investigate role of technology, social media companies in killing Gazan civilians

Palestinian Territory – The role of major technology companies and international social media platforms in the killing of Palestinian civilians during Israel’s genocidal war against the Gaza Strip, ongoing since 7 October 2023, must be investigated. These companies need to be held accountable if found to be complicit or not to have taken adequate precautions to prevent access to, and exploitation of, users’ information. They must ensure that their services are not used in conflict zones and that their users’ privacy is respected.

There are frequent reports that Israel uses a number of artificial intelligence-supported technological systems, including Where’s Daddy?, Fire Factory, Gospel, and Lavender, to illegally track and monitor Palestinians. These systems are able to identify possible suspects and classify them as legitimate targets based on potentially relevant information that is typically unrelated to the location or individual in question, by looking for similarities and patterns among all Gaza Strip residents, particularly men, and among members of armed factions.

Studies have shown that, although they are aware of a significant margin of error due to the nature of these operating systems and their inability to provide accurate information, particularly with regard to the whereabouts of those placed on the targeting list in real time, the Israeli army usually does not verify the accuracy of the information provided by these systems.

For example, Israel’s army uses the Lavender system extensively to identify suspects in the Strip before targeting them; this system intentionally results in a high number of civilian casualties.

The Lavender system uses the logic of probabilities, which is a distinguishing characteristic of machine learning algorithms. The algorithm looks through large data sets for patterns that correspond to fighter behaviour, and the amount and quality of the data determines how successful the algorithm is in finding these patterns. It then recommends targets based on the probabilities.

With concerns being voiced regarding the Lavender system’s possible reliance on tracking social media accounts, Israeli military and intelligence sources have acknowledged attacking potential targets without considering the principle of proportionality or collateral damage.

These suspicions are supported by a book (The Human Machine Team) written by the current commander of the elite Israeli army Unit 8200, which offers instructions on how to create a “target machine” akin to the Lavender artificial intelligence system. The book also includes information on hundreds of signals that can raise the severity of a person’s classification, such as switching cell phones every few months, moving addresses frequently, or even just joining the same group on Meta’s WhatsApp application as a “fighter”.

Additionally, it has been recently revealed that Google and Israel are collaborating on several technology initiatives, including Project Nimbus, which provides the Israeli army with tools for the increased monitoring and illegal data collection of Palestinians, thereby broadening Israeli policies of denial and persecution, plus other crimes against the Palestinian people. This project in particular has sparked significant human rights criticism, prompting dozens of company employees to protest and resign, with others being fired over their protests.

The Israeli army also uses Google Photos' facial recognition feature to keep an eye on Palestinian civilians in the Gaza Strip and create a “hit list”. It gathers as many images as possible from the 7 October event, known as Al-Aqsa Flood, during which Palestinian faces were visible as they stormed the separation fence and entered the settlements. This technology is then used to sort photos and store images of faces, which resulted in the recent arrest of thousands of Palestinians from the Gaza Strip, in violation of the company’s explicit rules and the United Nations Guiding Principles on Business and Human Rights.

The Euro-Med Monitor field team has documented accounts of Palestinian civilians who, as a consequence of their social media activity, have been singled out as suspects by Israel, despite having taken no military action.

A young Palestinian man who requested to be identified only as “A.F.” due to safety concerns, for instance, was seriously injured in an Israeli bombing that targeted a residential house in Gaza City’s Al-Sabra neighbourhood.

The house was targeted shortly after A.F. posted a video clip on Instagram, which is owned by Meta, in which he joked that he was in a “field reconnaissance mission”.

His relative told Euro-Med Monitor that A.F. had merely been attempting to mimic press reporters when he posted the brief video clip on his personal Instagram account. Suddenly, however, A.F. was targeted by an Israeli reconnaissance plane while on the roof of the house.

A separate Israeli bombing on 16 April claimed the lives of six young Palestinians who had gathered to access Internet services. One of the victims was using a group chat on WhatsApp—a Meta subsidiary—to report news about the Sheikh Radwan neighbourhood of Gaza City.

The deceased man’s relative, who requested anonymity due to safety fears, informed Euro-Med Monitor that the victim was near the Internet access point when the group was directly hit by a missile from an Israeli reconnaissance plane. The victim was voluntarily sharing news in family and public groups on the WhatsApp application about the Israeli attacks and humanitarian situation in Sheikh Radwan.

The Israeli army’s covert strategy of launching extremely damaging air and artillery attacks based on data that falls short of the minimum standard of accurate target assessment, ranging from cell phone records and photos to social media contact and communication patterns, all within the larger context of an incredibly haphazard killing programme, is deeply concerning.

The evidence presented by global technology experts points to a likely connection between the Israeli army’s use of the Lavender system—which has been used to identify targets in Israel’s military assaults on the Gaza Strip—and the Meta company. This means that the Israeli army potentially targeted individuals merely for being in WhatsApp groups with other people on the suspect list. Additionally, the experts question how Israel could have obtained this data without Meta disclosing it.

Earlier, British newspaper The Guardian exposed Israel’s use of artificial intelligence (Lavender) to murder a great number of Palestinian civilians. The Israeli military used machine learning systems to identify potential “low-ranking” fighters, with the aim of targeting them without considering the level of permissible collateral damage. A “margin of tolerance” was adopted, which allowed for the death of 20 civilians for every overthrown target; when targeting “higher-ranking fighters”, this margin of tolerance allowed for the death of 100 people for every fighter.

Google, Meta, and other technology and social media companies may have colluded with Israel in crimes and violations against the Palestinian people, including extrajudicial killings, in defiance of international law and these companies’ stated human rights commitments.

Social networks should not be releasing this kind of personal data about their users or taking part in the Israeli genocide against Palestinian civilians in the Gaza Strip. An international investigation is required in order to provide guarantees of accountability for those responsible, and justice for the victims.

Meta’s overt and obvious bias towards Israel, its substantial suppression of content that supports the Palestinian cause, and its policy of stifling criticism of Israel's crimes (including rumours of close ties between top Meta officials and Israel) suggest the company’s plausible involvement in the killing of Palestinian civilians.

Given the risks of failing to take reasonable steps to demonstrate that the objective is a legitimate one under international humanitarian law, the aforementioned companies must fully commit to ending all cooperation with the Israeli military and to no longer providing Israel with access to data and information that violate Palestinian rights and put their lives in jeopardy.

Israel’s failure to exercise due diligence and consider human rights when using artificial intelligence for military purposes must be immediately investigated, as well as its failure to comply with international law and international humanitarian law.

These companies must promptly address any information that has been circulating about their involvement in Israeli crimes against the Palestinian people. Serious investigations regarding their policies and practices in relation to Israeli crimes and human rights violations must be opened if necessary, and companies must be held accountable if found to be complicit or to have failed to take reasonable precautions to prevent the exploitation of user information for criminal activities.

#free Palestine#free gaza#I stand with Palestine#Gaza#Palestine#Gazaunderattack#Palestinian Genocide#Gaza Genocide#end the occupation#Israel is an illegal occupier#Israel is committing genocide#Israel is committing war crimes#Israel is a terrorist state#Israel is a war criminal#Israel is an apartheid state#Israel is evil#Israeli war crimes#Israeli terrorism#IOF Terrorism#Israel kills babies#Israel kills children#Israel kills innocents#Israel is a murder state#Israeli Terrorists#Israeli war criminals#Boycott Israel#Israel kills journalists#Israel kills kids#Israel murders innocents#Israel murders children

5 notes

Text

10/10 Blog Post Week #6

1. What are some of the ways Senft and Noble feel social media channels support racial inequality?

Senft and Noble demonstrate via biased algorithms and user behavior on social media platforms how racial disparity is exacerbated. Algorithms often highlight data that would help the platform economically, which usually results in marginalized voices—especially those of people of color—being ignored. People also extend their offline racial prejudices into online spaces, where anonymity lets bigotry linger free from consequences.

2. How does Ruha Benjamin in Race After Technology describe the impact of algorithmic bias on marginalized communities, and what solutions does she suggest for addressing this issue?

Ruha Benjamin addresses in Race After Technology how, especially for underprivileged populations, algorithmic prejudice sometimes reinforces systemic inequality. She gives examples of algorithms, created without consideration for societal prejudices, that inadvertently discriminate against people based on race. For example, facial recognition systems sometimes misidentify people of color, resulting in wrongful arrests or denied services. According to Benjamin, one way to solve this is to design technologies with equity in mind: developers have to actively question their own prejudices and create inclusive, fair design processes. Accountability is also essential in tech development, as diverse teams produce more equitable algorithms.

3. How does Jessie Daniels describe the role of algorithms in perpetuating racial bias in digital spaces in White Supremacy in the Digital Era?

According to Jessie Daniels, algorithms used by platforms such as Google and social media sites are not neutral, but rather reflect the biases of those who create them. For instance, search engine results and recommendations frequently amplify harmful stereotypes by displaying racist content more prominently. Daniels contends that because these platforms rely on user data to make predictions, they

4. How do racial biases become incorporated in technology, and what are the repercussions for real-world applications?

In Race After Technology, Ruha Benjamin argues that racial biases are frequently incorporated into technologies, particularly algorithms, by accident. Between pages 41 and 88, she discusses how these biases develop from existing cultural structures and preconceptions, and how they manifest in digital tools that appear "neutral." For example, face recognition software has been shown to misidentify people of color more often than white people, potentially leading to discriminatory surveillance and law enforcement. This section emphasizes the importance of understanding how human prejudices shape technology, and the need for more critical engagement and reform to prevent further marginalization of racial minorities in the digital age.
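A claim like "misidentifies people of color more often" is something an audit can check by reporting error rates separately for each group instead of one overall accuracy number. Below is a minimal sketch of such a disaggregated audit. It assumes labeled comparison trials tagged with a demographic group. The function name, group labels, and toy trials are hypothetical illustrations, not data from Benjamin's book. The two error types (false match rate, false non-match rate) are the ones commonly used in face recognition evaluations.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each trial: (group label, were the two faces really the same person?, did the system say "match"?)
Trial = Tuple[str, bool, bool]

def error_rates_by_group(trials: Iterable[Trial]) -> Dict[str, Tuple[float, float]]:
    """Return {group: (false match rate, false non-match rate)} for each group."""
    counts = defaultdict(lambda: {"false_match": 0, "impostor": 0, "false_non_match": 0, "genuine": 0})
    for group, same_person, said_match in trials:
        c = counts[group]
        if same_person:                  # genuine pair: an error if the system says "no match"
            c["genuine"] += 1
            c["false_non_match"] += int(not said_match)
        else:                            # impostor pair: an error if the system says "match"
            c["impostor"] += 1
            c["false_match"] += int(said_match)
    rates = {}
    for group, c in counts.items():
        fmr = c["false_match"] / c["impostor"] if c["impostor"] else float("nan")
        fnmr = c["false_non_match"] / c["genuine"] if c["genuine"] else float("nan")
        rates[group] = (fmr, fnmr)
    return rates

# Hypothetical toy trials, for illustration only.
trials = [
    ("group_a", False, True), ("group_a", False, False), ("group_a", False, False), ("group_a", True, True),
    ("group_b", False, False), ("group_b", False, False), ("group_b", False, False), ("group_b", True, True),
]
for group, (fmr, fnmr) in sorted(error_rates_by_group(trials).items()):
    print(f"{group}: false match rate {fmr:.2f}, false non-match rate {fnmr:.2f}")
```

Take all eight toy trials together and the system is wrong only once. Split by group, and every false match lands on group_a. That is exactly the kind of pattern a single overall accuracy figure hides.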

5 notes

Text

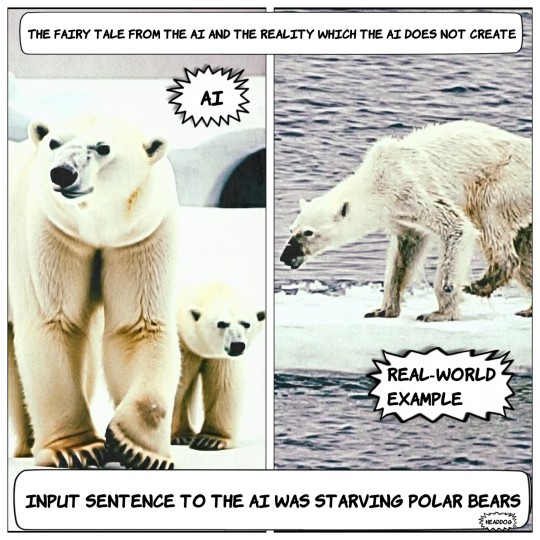

Personally, I think AI is misjudged. As always, there is far too much hype about something that in many areas is currently only in beta testing, with a herd of volunteer users optimizing an unfinished product for the tech giants free of charge. Unpaid click workers.

Dangers? Yes. But first Pandora's box is opened and left to run, and only then do we talk about ethical principles. And we only talk and warn; nothing gets implemented. Whether nuclear energy, chemical weapons, or biological weapons: first build it, then look at the consequences.

The same problem is happening again. We are simply incapable of learning: whether with genetic engineering or AI, we chase progress because someone else might get there faster.

Ultimately, it is the great challenge that will bring about a complete social transformation, one so drastic that no area of life will remain untouched.

All this is happening without restrictions or any ethical basis. It does not lead us into the paradisiacal future that is always promised, but into individual irresponsibility and the loss of control of individuals to the owners of AI systems. By the way, who doesn't know the slogan from the sixties, which sounds like satire today, that atomic energy would make electricity cheaper for everyone?

Today, a soldier sits at the trigger of a killer drone and must make a decision, or someone higher up the chain of command makes that decision and bears responsibility for the consequences.

But what happens when the drone has its own AI and decides without any final human consideration?

China is a particular example of people losing control to a regulating and punishing state AI, with a rating system designed to achieve maximum control.

At some point, the wolf will no longer need to watch the sheep, because they will do everything they can not to attract the wolf's attention. Freedom gets curtailed voluntarily, with the scissors ✂️ in one's head.

Just for your information: none of this is climate-neutral!

Take Google alone, without counting all the other services on the Internet.

Google currently processes up to nine billion search queries per day. If every Google search used AI, it would require about 29.2 terawatt hours of electricity per year, according to calculations. That would be equivalent to Ireland's annual electricity consumption.
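The two figures quoted above can be combined into a rough per-search estimate. This is a back-of-the-envelope check that takes the post's numbers (nine billion searches per day, 29.2 TWh per year) at face value. It does not verify them.

```python
# Figures as quoted in the post; not independently verified here.
QUERIES_PER_DAY = 9e9          # "up to nine billion search queries per day"
ANNUAL_AI_ENERGY_TWH = 29.2    # estimated yearly electricity if every search used AI

def energy_per_query_wh(annual_twh: float, queries_per_day: float) -> float:
    """Convert an annual energy estimate into watt-hours per query."""
    annual_wh = annual_twh * 1e12              # 1 TWh = 10^12 Wh
    queries_per_year = queries_per_day * 365
    return annual_wh / queries_per_year

per_query = energy_per_query_wh(ANNUAL_AI_ENERGY_TWH, QUERIES_PER_DAY)
print(f"{per_query:.1f} Wh per AI-assisted search")
```

This works out to roughly 8.9 Wh per AI-assisted search. The underlying numbers are estimates, so treat the result as an order of magnitude rather than a measurement.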

I do not believe in assistance systems that filter my world and use my data for the self-interest of a few. In the end, the whole thing is just a crappy licensing system for selling surveillance products.

For example, why does facial recognition AI software need to recognize everyone to protect society?

Isn't it enough to search only for people who are criminals and wanted by court order?

Everyone the software does not recognize is simply irrelevant: a negative result.

But that is not the goal. They want to be able to judge whether someone on the move is suspicious, perhaps a criminal, or even a terrorist.

But this is error-prone and thus antisocial and dangerous.

If someone sweats at the airport and has an angry face, judging them on that is a misjudgment whenever the reading is wrong. A real terrorist would inject Botox and show no anger or hatred in his face, only the indifference of a misguided person whose act can only be understood in retrospect. The more data you have to analyze, the longer the analysis takes. So why make the haystack bigger when you are only looking for the needles? That is my question!
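The haystack question can be made concrete with the base-rate effect. When the people you are looking for are rare, even a very accurate screening system produces far more false alarms than real hits. Here is a minimal sketch with purely hypothetical numbers: one million travellers, ten actual suspects, 99% of suspects caught, and 1% of everyone else wrongly flagged. These are illustrative assumptions, not the rates of any real system.

```python
def screening_outcomes(population: int, actual_targets: int,
                       hit_rate: float, false_alarm_rate: float):
    """Expected true alarms, false alarms, and share of alarms that are real."""
    innocents = population - actual_targets
    true_alarms = actual_targets * hit_rate          # real targets correctly flagged
    false_alarms = innocents * false_alarm_rate      # innocent people wrongly flagged
    total = true_alarms + false_alarms
    precision = true_alarms / total if total else 0.0
    return true_alarms, false_alarms, precision

# Hypothetical scenario: everyone at the airport is screened.
true_alarms, false_alarms, precision = screening_outcomes(1_000_000, 10, 0.99, 0.01)
print(f"{true_alarms:.1f} real hits, {false_alarms:.0f} false alarms, "
      f"{precision:.2%} of alarms are real")
```

With these assumed rates, about ten real hits are buried under roughly ten thousand false alarms. Only about 0.1% of alarms point to an actual suspect, and every one of the others is an innocent person who has to be checked.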

mod

#ai generated#freedom of expression#freedom of speech#freedom#ai is stupid#ai is not our friend#responsibility#algorithm#ai don't dream#ai art generator#vs#reality#real horror#climate crisis#climate change

2 notes

Text

This day in history

#20yrsago HOWTO make a legal P2P system https://web.archive.org/web/20041009210429/https://www.eff.org/IP/P2P/p2p_copyright_wp.php

#15yrsago Google Book Search and privacy for students https://web.archive.org/web/20091002014504/http://freeculture.org/blog/2009/09/24/gbs-and-students-eff-privacy/

#10yrsago Fed whistleblower secretly recorded 46 hours of regulatory capture inside Goldman Sachs https://www.thisamericanlife.org/536/the-secret-recordings-of-carmen-segarra

#5yrsago Annalee Newitz’s “Future of Another Timeline”: like Handmaid’s Tale meets Hitchhiker’s Guide https://memex.craphound.com/2019/09/27/annalee-newitzs-future-of-another-timeline-like-handmaids-tale-meets-hitchhikers-guide/

#5yrsago Do Not Erase: Jessica Wynne’s beautiful photos of mathematicians’ chalkboards https://www.nytimes.com/2019/09/23/science/mathematicians-blackboard-photographs-jessica-wynne.html

#5yrsago Across America, the average worker can’t afford the median home https://www.marketwatch.com/story/there-are-precious-few-places-in-america-where-the-average-worker-can-afford-a-median-priced-home-2019-09-26

#5yrsago Amazon wants to draft model facial recognition legislation https://www.vox.com/recode/2019/9/25/20884427/jeff-bezos-amazon-facial-recognition-draft-legislation-regulation-rekognition

#5yrsago Doordash’s breach is different https://techcrunch.com/2019/09/26/doordash-data-breach/

#5yrsago Report from Defcon’s Voting Village reveals ongoing dismal state of US electronic voting machines https://media.defcon.org/DEF CON 27/voting-village-report-defcon27.pdf

#1yrago Intuit: "Our fraud fights racism" https://pluralistic.net/2023/09/27/predatory-inclusion/#equal-opportunity-scammers

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

12 notes

Text

Smart Lock Box: Revolutionizing Security and Convenience

In the era of ever-advancing technology, our lives have become increasingly interconnected and automated. One such innovation that has gained widespread popularity is the smart lock box. This cutting-edge device is revolutionizing the way we secure our belongings and provides unparalleled convenience. In this article, we will examine smart lock boxes from several perspectives and explore their impact on security, accessibility, and user experience.

Security: One of the primary reasons for the growing popularity of smart lock boxes is their ability to enhance security. Traditional lock boxes often rely on physical keys or combination codes, which can be lost or easily deciphered. Smart lock boxes, on the other hand, utilize advanced encryption algorithms and biometric authentication, such as fingerprint recognition or facial recognition, to ensure only authorized individuals can access the contents. This significantly reduces the risk of theft or unauthorized entry, providing peace of mind for homeowners, businesses, and even vacation rental owners.
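The paragraph does not say which algorithms smart lock boxes actually use, and products differ. One illustration assumes a box that accepts short-lived numeric codes derived from a secret shared with the owner's app. The standard time-based one-time password scheme (TOTP, RFC 6238, built on HOTP from RFC 4226) would then look roughly like this. It relies on keyed hashing (HMAC) rather than encryption proper. The function names are our own and are not any vendor's API.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                                    # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)

def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue
        expected = totp(secret, t, step, len(submitted))
        if hmac.compare_digest(expected, submitted):           # constant-time comparison
            return True
    return False

# RFC test secret only; a real device would hold a random per-device secret.
secret = b"12345678901234567890"
print(totp(secret, at=59))   # 287082
```

Each code expires after 30 seconds, so a code that is overheard or guessed stops working shortly afterwards. The small `window` tolerates clock drift between the box and the phone, and `hmac.compare_digest` avoids leaking timing information when codes are compared.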

User Experience: The user experience provided by smart lock boxes is another aspect worth considering. Traditional lock boxes often require users to memorize complex codes or carry additional keys, leading to inconvenience and potential security risks if lost or stolen. Smart lock boxes eliminate these hassles by offering intuitive interfaces and user-friendly mobile applications. Users can easily manage access permissions, track entry logs, and receive real-time notifications on their smartphones. This seamless integration of technology enhances the overall user experience and simplifies the management of access to the lock box.
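Managing access permissions and keeping an entry log can be sketched as a small data structure. This is a hypothetical model, not any vendor's app or API. Each user gets a time window, and every open attempt is logged whether it succeeds or not. An optional callback stands in for the real-time smartphone notification.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class LockBoxAccess:
    """Hypothetical permission store and entry log for a single lock box."""
    grants: Dict[str, Tuple[datetime, datetime]] = field(default_factory=dict)
    log: List[Tuple[datetime, str, bool]] = field(default_factory=list)
    notify: Optional[Callable[[str], None]] = None    # stand-in for a push notification

    def grant(self, user: str, start: datetime, end: datetime) -> None:
        self.grants[user] = (start, end)

    def revoke(self, user: str) -> None:
        self.grants.pop(user, None)

    def try_open(self, user: str, when: datetime) -> bool:
        window = self.grants.get(user)
        allowed = window is not None and window[0] <= when < window[1]
        self.log.append((when, user, allowed))         # log every attempt, allowed or not
        if self.notify:
            status = "opened" if allowed else "DENIED"
            self.notify(f"{when:%Y-%m-%d %H:%M} {user}: {status}")
        return allowed

box = LockBoxAccess(notify=print)
box.grant("dog_walker", datetime(2024, 7, 1, 9), datetime(2024, 7, 1, 12))
box.try_open("dog_walker", datetime(2024, 7, 1, 10, 30))   # inside the window
box.try_open("dog_walker", datetime(2024, 7, 1, 13, 0))    # after the window
```

Logging denied attempts as well as successful ones makes the entry log useful for spotting misuse. Revoking a user simply removes their window, so later attempts are refused and recorded.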

Integration with Smart Home Systems: Smart lock boxes are also compatible with smart home systems, allowing for seamless integration and enhanced functionality. Integration with voice assistants, such as Amazon Alexa or Google Assistant, enables users to control the lock box through voice commands. Furthermore, integration with security cameras and motion sensors can trigger automatic locking or unlocking based on pre-defined rules, further enhancing security and convenience.
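Automatic locking or unlocking based on pre-defined rules can be sketched as a list of small functions, each mapping a sensor event to an action. The event fields ("type", "mode", "window_open") and rule names below are hypothetical; real smart home platforms define their own formats. One design choice is worth noting. When rules disagree, this sketch lets "lock" win, so a conflict fails secure rather than open.

```python
from typing import Callable, Dict, List, Optional

Event = Dict[str, object]
Rule = Callable[[Event], Optional[str]]   # returns "lock", "unlock", or None

def lock_on_motion_when_away(event: Event) -> Optional[str]:
    if event.get("type") == "motion" and event.get("mode") == "away":
        return "lock"
    return None

def unlock_during_scheduled_window(event: Event) -> Optional[str]:
    if event.get("type") == "schedule" and event.get("window_open") is True:
        return "unlock"
    return None

RULES: List[Rule] = [lock_on_motion_when_away, unlock_during_scheduled_window]

def decide(event: Event, rules: List[Rule]) -> str:
    """Evaluate every rule; locking takes priority, else the first action produced, else 'no_change'."""
    actions = [action for action in (rule(event) for rule in rules) if action]
    if "lock" in actions:
        return "lock"
    return actions[0] if actions else "no_change"

print(decide({"type": "motion", "mode": "away"}, RULES))          # lock
print(decide({"type": "schedule", "window_open": True}, RULES))   # unlock
print(decide({"type": "motion", "mode": "home"}, RULES))          # no_change
```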

Conclusion: Smart lock boxes have revolutionized security and convenience in various settings, from residential homes to rental properties and businesses. By combining advanced encryption technologies, remote accessibility, and seamless integration with smart home systems, these devices provide a comprehensive solution for secure and convenient access management. As technology continues to advance, we can expect smart lock boxes to become an essential component of our increasingly interconnected lives.

1 note
