#facial recognition

Explore tagged Tumblr posts

Text

me fr but I have prosopagnosia :,)

whenever i read books i cannot actually imagine what the characters look like, they're all somewhat like this.

#prosopagnosia#facial recognition#all for the game#:’)#andreil#neil josten#andrew minyard#aftg#this is so pretty#<333

2K notes

·

View notes

Text

So, this is scary as hell, Google's developing tech to scan your face as a form of age identification, and it's yet another reason why we need to stop the various bad internet bills like EARN IT, STOP CSAM, and especially KOSA.

Because, that's why they're doing this, and that sort of invasive face scanning is what everybody's been warning people they're going to do if they pass, so the fact they're running up to push it through should alarm everyone.

And, as of this posting on 12/27/2023, it's been noted that Chuck Schumer wants to try and start pushing these bills through as soon as the new year starts, and some whisperings have been made even of all these bad bills being merged under STOP CSAM into one deadly super-bill.

So, if you live in the US call your senators, and even if you don't please boost this, we need to stop this now.

#censorship#online censorship#internet censorship#surveilance#privacy#facial recognition#signal boost

6K notes

·

View notes

Text

Private-sector Trumpism

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in CHICAGO with PETER SAGAL on WEDNESDAY (Apr 2), and in BLOOMINGTON on FRIDAY (Apr 4). More tour dates here.

Trumpism is a mixture of grievance, surveillance, and pettiness: "I will never forgive your mockery, I have records of you doing it, and I will punish you and everyone who associates with you for it." Think of how he's going after the (cowardly) BigLaw firms:

https://abovethelaw.com/2025/03/skadden-makes-100-million-settlement-with-trump-in-pro-bono-payola/

Trump is the realization of decades of warning about ubiquitous private and public surveillance – that someday, all of this surveillance would be turned to the systematic dismantling of human rights and punishing of dissent.

23 years ago, I was staying in London with some friends, scouting for a flat to live in. After at day in town, I came back and we ordered a curry and had a nice chat. I mentioned how discomfited I'd been by all the CCTV cameras that had sprouted at the front of every private building, to say nothing of all the public cameras installed by local councils and the police. My friend dismissed this as a kind of American, hyper-individualistic privacy purism, explaining that these cameras were there for public safety – to catch flytippers, vandals, muggers, boy racers tearing unsafely through the streets. My fear about having my face captured by all these cameras was little more than superstitious dread. It's not like they were capturing my soul.

Now, I knew that my friend had recently marched in one of the massive demonstrations against Bush and Blair's illegal invasion plans for Iraq. "Look," I said, "you marched in the street to stand up and be counted. But even so, how would you have felt if – as a condition of protesting – you were forced to first record your identity in a government record-book?" My friend had signed petitions, he'd marched in the street, but even so, he had to admit that there would be some kind of chilling effect if your identity had to be captured as a condition of participating in public political events.

Trump has divided the country into two groups of people: "citizens" (who are sometimes only semi-citizens) and immigrants (who have no rights):

https://crookedtimber.org/2025/03/29/trumps-war-on-immigrants-is-the-cancellation-of-free-society/#fn-53926-1

Trump has asserted that he can arrest and deport immigrants (and some semi-citizens) for saying things he doesn't like, or even liking social media posts he disapproves of. He's argued that he can condemn people to life in an offshore slave-labor camp if he doesn't like their tattoos. It is tyranny, built on ubiquitous surveillance, fueled by spite and grievance.

One of Trumpism's most important tenets is that private institutions should have the legal right to discriminate against minorities that he doesn't like. For example, he's trying to end the CFPB's enforcement action against Townstone, a mortgage broker that practiced rampant racial discrimination:

https://prospect.org/justice/2025-03-28-trump-scrambles-pardon-corporate-criminals-townstone-boeing-cfpb/

By contrast, Trump abhors the idea that private institutions should be allowed to discriminate against the people he likes, hence his holy war against "DEI":

https://www.cnbc.com/2025/03/29/trump-administration-warns-european-companies-to-comply-with-anti-dei-order.html

This is the crux of Wilhoit's Law, an important and true definition of "conservativism":

Conservatism consists of exactly one proposition, to wit: There must be in-groups whom the law protectes but does not bind, alongside out-groups whom the law binds but does not protect.

https://crookedtimber.org/2018/03/21/liberals-against-progressives/#comment-729288

Wilhoit's definition is an important way of framing how conservatives view the role of the state. But there's another definition I like, one that's more about how we relate to one-another, which I heard from Steven Brust: "Ask, 'What's more important: human rights or property rights?' Anyone who answers 'property rights are human rights' is a conservative."

Thus the idea that a mortgage broker or an employer or a banker or a landlord should be able to discriminate against you because of the color of your skin, your sexual orientation, your gender, or your beliefs. If "property rights are human rights," then the human right not to rent to a same-sex couple is co-equal with the couple's human right to shelter.

The property rights/human rights distinction isn't just a way to cleave right from left – it's also a way to distinguish the left from liberals. Liberals will tell you that 'it's not censorship if it's done privately' – on the grounds that private property owners have the absolute right to decide which speech they will or won't permit. Charitably, we can say that some of these people are simply drawing a false equivalence between "violating the First Amendment" and "censorship":

https://pluralistic.net/2022/12/04/yes-its-censorship/

But while private censorship is often less consequential than state censorship, that isn't always true, and even when it is, that doesn't mean that private censorship poses no danger to free expression.

Consider a thought experiment in which a restaurant chain called "No Politics At the Dinner Table Cafe" buys up every eatery in town, and then maintains its monopoly by sewing up exclusive deals with local food producers, and then expands into babershops, taxis and workplace cafeterias, enforcing a rule in all these spaces that bans discussions of politics:

https://locusmag.com/2020/01/cory-doctorow-inaction-is-a-form-of-action/

Here we see how monopoly, combined with property rights, creates a system of censorship that is every bit as consequential as a government rule. And if all of those facilities were to add AI-backed cameras and mics that automatically monitored all our conversations for forbidden political speech, then surveillance would complete the package, yielding private censorship that is effectively indistinguishable from government censorship – with the main difference being that the First Amendment permits the former and prohibits the latter.

The fear that private wealth could lead to a system of private rule has been in America since its founding, when Thomas Jefferson tried (unsuccessfully) to put a ban on monopolies into the US Constitution. A century later, Senator John Sherman wrote the Sherman Act, the first antitrust bill, defending it on the Senate floor by saying:

If we would not submit to an emperor we should not submit to an autocrat of trade.

https://pluralistic.net/2022/02/20/we-should-not-endure-a-king/

40 years ago, neoliberal economists ended America's century-long war on monopolies, declaring monopolies to be "efficient" and convincing Carter, then Reagan, then all their successors (except Biden) to encourage monopolies to form. The US government all but totally suspended enforcement of its antitrust laws, permitting anticompetitive mergers, predatory pricing, and illegal price discrimination. In so doing, they transformed America into a monopolist's playground, where versions of the No Politics At the Dinner Table Cafe have conquered every sector of our economy:

https://www.openmarketsinstitute.org/learn/monopoly-by-the-numbers

This is especially true of our speech forums – the vast online platforms that have become the primary means by which we engage in politics, civics, family life, and more. These platforms are able to decide who may speak, what they may say, and what we may hear:

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

These platforms are optimized for mass surveillance, and, when coupled with private sector facial recognition databases, it is now possible to realize the nightmare scenario I mooted in London 23 years ago. As you move through both the virtual and physical world, you can be identified, your political speech can be attributed to you, and it can be used as a basis for discrimination against you:

https://pluralistic.net/2023/09/20/steal-your-face/#hoan-ton-that

This is how things work at the US border, of course, where border guards are turning away academics for having anti-Trump views:

https://www.nytimes.com/2025/03/20/world/europe/us-france-scientist-entry-trump-messages.html

It's not just borders, though. Large, private enterprises own large swathes of our world. They have the unlimited property right to exclude people from their properties. And they can spy on us as much as they want, because it's not just antitrust law that withered over the past four decades, it's also privacy law. The last consumer privacy law Congress bestirred itself to pass was 1988's "Video Privacy Protection Act," which bans video-store clerks from disclosing your VHS rentals. The failure to act on privacy – like the failure to act on monopoly – has created a vacuum that has been filled up with private power. Today, it's normal for your every action – every utterance, every movement, every purchase – to be captured, stored, combined, analyzed, and, of course sold.

With vast property holdings, total property rights, and no privacy law, companies have become the autocrats of trade, able to regulate our speech and association in ways that can no longer be readily distinguished state conduct that is at least theoretically prohibited by the First Amendment.

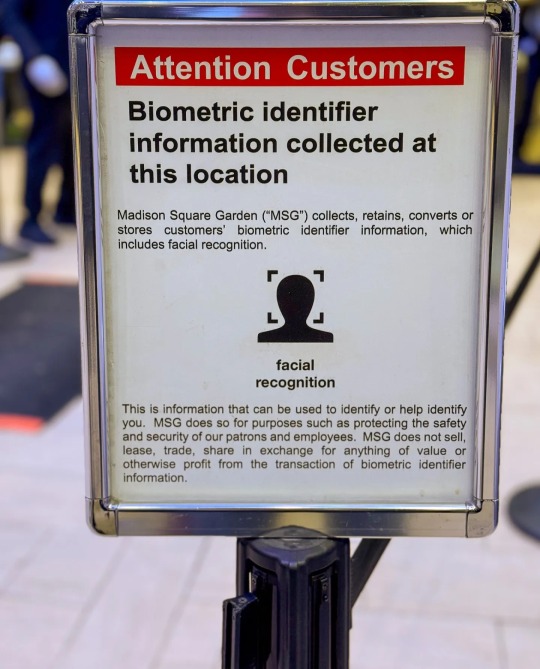

Take Madison Square Garden, a corporate octopus that owns theaters, venues and sport stadiums and teams around the country. The company is notoriously vindictive, thanks to a spate of incidents in which the company used facial recognition cameras to bar anyone who worked at a law-firm that was suing the company from entering any of its premises:

https://www.nytimes.com/2022/12/22/nyregion/madison-square-garden-facial-recognition.html

This practice was upheld by the courts, on the grounds that the property rights of MSG trumped the human rights of random low-level personnel at giant law firms where one lawyer out of thousands happened to be suing the company:

https://www.nbcnewyork.com/news/local/madison-square-gardens-ban-on-lawyers-suing-them-can-remain-in-place-court-rules/4194985/

Take your kid's Girl Scout troop on an outing to Radio City Music Hall? Sure, just quit your job and go work for another firm.

But that was just for starters. Now, MSG has started combing social media to identify random individuals who have criticized the company, and has added their faces to the database of people who can't enter their premises. For example, a New Yorker named Frank Miller has been banned for life from all MSG properties because, 20 years ago, he designed a t-shirt making fun of MSG CEO James Dolan:

https://www.theverge.com/news/637228/madison-square-garden-james-dolan-facial-recognition-fan-ban

This is private-sector Trumpism, and it's just getting started.

Take hotels: the entire hotel industry has collapsed into two gigachains: Marriott and Hilton. Both companies are notoriously bad employers and at constant war with their unions (and with nonunion employees hoping to unionize in the face of flagrant, illegal union-busting). If you post criticism online of both hotel chains for hiring scabs, say, and they add you to a facial recognition blocklist, will you be able to get a hotel room?

After more than a decade of Uber and Lyft's illegal predatory pricing, many cities have lost their private taxi fleets and massively disinvested in their public transit. If Uber and Lyft start compiling dossiers of online critics, could you lose the ability to get from anywhere to anywhere, in dozens of cities?

Private equity has rolled up pet groomers, funeral parlors, and dialysis centers. What happens if the PE barons running those massive conglomerates decide to exclude their critics from any business in their portfolio? How would it feel to be shut out of your mother's funeral because you shit-talked the CEO of Foundation Partners Group?

https://kffhealthnews.org/news/article/funeral-homes-private-equity-death-care/

More to the point: once this stuff starts happening, who will dare to criticize corporate criminals online, where their speech can be captured and used against them, by private-sector Trumps armed with facial recognition and the absurd notion that property rights aren't just human rights – they're the ultimate human rights?

The old fears of Thomas Jefferson and John Sherman have come to pass. We live among autocrats of trade, and don't even pretend the Constitution controls what these private sector governments can do to us.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/31/madison-square-garden/#autocrats-of-trade

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#monopoly#monopolies#monopolism#madison square gardens#autocrats of trade#facial recognition#property rights#human rights#property rights vs human rights

316 notes

·

View notes

Text

Air Canada is poised to roll out facial recognition technology at the gate, making it the first Canadian airline to deploy the software in a bid to streamline the boarding process. Starting Tuesday, customers who board most domestic Air Canada flights at Vancouver International Airport will be able to walk onto the plane without presenting any physical pieces of identification, such as a passport or driver's licence, the country's largest airline said. Participants in the program, which is voluntary, can upload a photo of their face and a scan of their passport to the airline's app.

Continue reading

Tagging: @newsfromstolenland

474 notes

·

View notes

Photo

Facial Recognition

7 October 2018.

593 notes

·

View notes

Text

Makeup that stops facial recognition software

Isn't it super cool? You could design so many characters in cyberpunk settings with make-up looks like this and it'll actually have a lore reason

#and that's definitely totally the oooonly reason why i'd share this#haha ha#makeup#makeup artist#cyberpunk#face recognition#facial recognition#dystopian#dystopia#makeup tips#dystopian fashion

84 notes

·

View notes

Text

A concert on Monday night at New York’s Radio City Music Hall was a special occasion for Frank Miller: his parents’ wedding anniversary. He didn’t end up seeing the show — and before he could even get past security, he was informed that he was in fact banned for life from the venue and all other properties owned by Madison Square Garden (MSG).

After scanning his ticket and promptly being pulled aside by security, Miller was told by staff that he was barred from the MSG properties for an incident at the Garden in 2021. But Miller says he hasn’t been to the venue in nearly two decades.

“They hand me a piece of paper letting me know that I’ve been added to a ban list,” Miller says. “There’s a trespass notice if I ever show up on any MSG property ever again,” which includes venues like Radio City, the Beacon Theatre, the Sphere, and the Chicago Theatre.

He was baffled at first. Then it dawned on him: this was probably about a T-shirt he designed years ago. MSG Entertainment won’t say what happened with Miller or how he was picked out of the crowd, but he suspects he was identified via controversial facial recognition systems that the company deploys at its venues.

In 2017, 1990s New York Knicks star Charles Oakley was forcibly removed from his seat near Knicks owner and Madison Square Garden CEO James Dolan. The high-profile incident later spiraled into an ongoing legal battle. For Miller, Oakley was an “integral” part of the ’90s Knicks, he says. With his background in graphic design, he made a shirt in the style of the old team logo that read, “Ban Dolan” — a reference to the infamous scuffle.

A few years later, in 2021, a friend of Miller’s wore a Ban Dolan shirt to a Knicks game and was kicked out and banned from future events. That incident spawned ESPN segments and news articles and validated what many fans saw as a pettiness on Dolan and MSG’s part for going after individual fans who criticized team ownership.

But this week, Miller wasn’t wearing a Ban Dolan shirt; he wasn’t even at a Knicks game. His friend who was kicked out for the shirt tagged him in social media posts as the designer when it happened, but Miller, who lives in Seattle, hadn’t attended an event in New York in years.

(continue reading)

#politics#ban dolan#charles oakley#james dolan#madison square garden#facial recognition#msg#oligarchy#biometrics#biometric identifiers#radio city music hall#frank miller#surveillance state#surveillance capitalism

41 notes

·

View notes

Text

Dazzle camouflage but for people.

Of course, a good surveillance state would put out this sort of rumor along with pictures and have it all be complete and utter bullshit and then be able to ID and arrest a bunch of suckers, so...keep that in mind, I guess.

#facial recognition#facial recognition software#protest safety#protest ideas#antifascist#surveillance state

43 notes

·

View notes

Text

Friendly reminder that juggalo/ICP face paint messes with facial recognition :)

30 notes

·

View notes

Text

Protecting sex workers means protecting our privacy. Protecting sex workers means putting a stop to facial recognition technology.

Facial recognition technology poses a real danger to sex workers, by giving people a new way to discover our true identities. In this article I share my personal struggle with accepting how easily I can be stalked or harmed and the harm the rise of facial recognition image search websites has done to sex workers in general.

43 notes

·

View notes

Text

Hypothetical AI election disinformation risks vs real AI harms

I'm on tour with my new novel The Bezzle! Catch me TONIGHT (Feb 27) in Portland at Powell's. Then, onto Phoenix (Changing Hands, Feb 29), Tucson (Mar 9-12), and more!

You can barely turn around these days without encountering a think-piece warning of the impending risk of AI disinformation in the coming elections. But a recent episode of This Machine Kills podcast reminds us that these are hypothetical risks, and there is no shortage of real AI harms:

https://soundcloud.com/thismachinekillspod/311-selling-pickaxes-for-the-ai-gold-rush

The algorithmic decision-making systems that increasingly run the back-ends to our lives are really, truly very bad at doing their jobs, and worse, these systems constitute a form of "empiricism-washing": if the computer says it's true, it must be true. There's no such thing as racist math, you SJW snowflake!

https://slate.com/news-and-politics/2019/02/aoc-algorithms-racist-bias.html

Nearly 1,000 British postmasters were wrongly convicted of fraud by Horizon, the faulty AI fraud-hunting system that Fujitsu provided to the Royal Mail. They had their lives ruined by this faulty AI, many went to prison, and at least four of the AI's victims killed themselves:

https://en.wikipedia.org/wiki/British_Post_Office_scandal

Tenants across America have seen their rents skyrocket thanks to Realpage's landlord price-fixing algorithm, which deployed the time-honored defense: "It's not a crime if we commit it with an app":

https://www.propublica.org/article/doj-backs-tenants-price-fixing-case-big-landlords-real-estate-tech

Housing, you'll recall, is pretty foundational in the human hierarchy of needs. Losing your home – or being forced to choose between paying rent or buying groceries or gas for your car or clothes for your kid – is a non-hypothetical, widespread, urgent problem that can be traced straight to AI.

Then there's predictive policing: cities across America and the world have bought systems that purport to tell the cops where to look for crime. Of course, these systems are trained on policing data from forces that are seeking to correct racial bias in their practices by using an algorithm to create "fairness." You feed this algorithm a data-set of where the police had detected crime in previous years, and it predicts where you'll find crime in the years to come.

But you only find crime where you look for it. If the cops only ever stop-and-frisk Black and brown kids, or pull over Black and brown drivers, then every knife, baggie or gun they find in someone's trunk or pockets will be found in a Black or brown person's trunk or pocket. A predictive policing algorithm will naively ingest this data and confidently assert that future crimes can be foiled by looking for more Black and brown people and searching them and pulling them over.

Obviously, this is bad for Black and brown people in low-income neighborhoods, whose baseline risk of an encounter with a cop turning violent or even lethal. But it's also bad for affluent people in affluent neighborhoods – because they are underpoliced as a result of these algorithmic biases. For example, domestic abuse that occurs in full detached single-family homes is systematically underrepresented in crime data, because the majority of domestic abuse calls originate with neighbors who can hear the abuse take place through a shared wall.

But the majority of algorithmic harms are inflicted on poor, racialized and/or working class people. Even if you escape a predictive policing algorithm, a facial recognition algorithm may wrongly accuse you of a crime, and even if you were far away from the site of the crime, the cops will still arrest you, because computers don't lie:

https://www.cbsnews.com/sacramento/news/texas-macys-sunglass-hut-facial-recognition-software-wrongful-arrest-sacramento-alibi/

Trying to get a low-waged service job? Be prepared for endless, nonsensical AI "personality tests" that make Scientology look like NASA:

https://futurism.com/mandatory-ai-hiring-tests

Service workers' schedules are at the mercy of shift-allocation algorithms that assign them hours that ensure that they fall just short of qualifying for health and other benefits. These algorithms push workers into "clopening" – where you close the store after midnight and then open it again the next morning before 5AM. And if you try to unionize, another algorithm – that spies on you and your fellow workers' social media activity – targets you for reprisals and your store for closure.

If you're driving an Amazon delivery van, algorithm watches your eyeballs and tells your boss that you're a bad driver if it doesn't like what it sees. If you're working in an Amazon warehouse, an algorithm decides if you've taken too many pee-breaks and automatically dings you:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

If this disgusts you and you're hoping to use your ballot to elect lawmakers who will take up your cause, an algorithm stands in your way again. "AI" tools for purging voter rolls are especially harmful to racialized people – for example, they assume that two "Juan Gomez"es with a shared birthday in two different states must be the same person and remove one or both from the voter rolls:

https://www.cbsnews.com/news/eligible-voters-swept-up-conservative-activists-purge-voter-rolls/

Hoping to get a solid education, the sort that will keep you out of AI-supervised, precarious, low-waged work? Sorry, kiddo: the ed-tech system is riddled with algorithms. There's the grifty "remote invigilation" industry that watches you take tests via webcam and accuses you of cheating if your facial expressions fail its high-tech phrenology standards:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

All of these are non-hypothetical, real risks from AI. The AI industry has proven itself incredibly adept at deflecting interest from real harms to hypothetical ones, like the "risk" that the spicy autocomplete will become conscious and take over the world in order to convert us all to paperclips:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Whenever you hear AI bosses talking about how seriously they're taking a hypothetical risk, that's the moment when you should check in on whether they're doing anything about all these longstanding, real risks. And even as AI bosses promise to fight hypothetical election disinformation, they continue to downplay or ignore the non-hypothetical, here-and-now harms of AI.

There's something unseemly – and even perverse – about worrying so much about AI and election disinformation. It plays into the narrative that kicked off in earnest in 2016, that the reason the electorate votes for manifestly unqualified candidates who run on a platform of bald-faced lies is that they are gullible and easily led astray.

But there's another explanation: the reason people accept conspiratorial accounts of how our institutions are run is because the institutions that are supposed to be defending us are corrupt and captured by actual conspiracies:

https://memex.craphound.com/2019/09/21/republic-of-lies-the-rise-of-conspiratorial-thinking-and-the-actual-conspiracies-that-fuel-it/

The party line on conspiratorial accounts is that these institutions are good, actually. Think of the rebuttal offered to anti-vaxxers who claimed that pharma giants were run by murderous sociopath billionaires who were in league with their regulators to kill us for a buck: "no, I think you'll find pharma companies are great and superbly regulated":

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

Institutions are profoundly important to a high-tech society. No one is capable of assessing all the life-or-death choices we make every day, from whether to trust the firmware in your car's anti-lock brakes, the alloys used in the structural members of your home, or the food-safety standards for the meal you're about to eat. We must rely on well-regulated experts to make these calls for us, and when the institutions fail us, we are thrown into a state of epistemological chaos. We must make decisions about whether to trust these technological systems, but we can't make informed choices because the one thing we're sure of is that our institutions aren't trustworthy.

Ironically, the long list of AI harms that we live with every day are the most important contributor to disinformation campaigns. It's these harms that provide the evidence for belief in conspiratorial accounts of the world, because each one is proof that the system can't be trusted. The election disinformation discourse focuses on the lies told – and not why those lies are credible.

That's because the subtext of election disinformation concerns is usually that the electorate is credulous, fools waiting to be suckered in. By refusing to contemplate the institutional failures that sit upstream of conspiracism, we can smugly locate the blame with the peddlers of lies and assume the mantle of paternalistic protectors of the easily gulled electorate.

But the group of people who are demonstrably being tricked by AI is the people who buy the horrifically flawed AI-based algorithmic systems and put them into use despite their manifest failures.

As I've written many times, "we're nowhere near a place where bots can steal your job, but we're certainly at the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job"

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

The most visible victims of AI disinformation are the people who are putting AI in charge of the life-chances of millions of the rest of us. Tackle that AI disinformation and its harms, and we'll make conspiratorial claims about our institutions being corrupt far less credible.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/02/27/ai-conspiracies/#epistemological-collapse

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#disinformation#algorithmic bias#elections#election disinformation#conspiratorialism#paternalism#this machine kills#Horizon#the rents too damned high#weaponized shelter#predictive policing#fr#facial recognition#labor#union busting#union avoidance#standardized testing#hiring#employment#remote invigilation

146 notes

·

View notes

Text

Some police services in Canada are using facial recognition technology to help solve crimes, while other police forces say human rights and privacy concerns are holding them back from employing the powerful digital tools. It’s this uneven application of the technology — and the loose rules governing its use — that has legal and AI experts calling on the federal government to set national standards. “Until there’s a better handle on the risks involved with the use of this technology, there ought to be a moratorium or a range of prohibitions on how and where it can be used,” says Kristen Thomasen, law professor at the University of British Columbia. As well, the patchwork of regulations on emerging biometric technologies has created situations in which some citizens’ privacy rights are more protected than others. “I think the fact that we have different police forces taking different steps raises concerns (about) inequities and how people are treated across the country, but (it) also highlights the continuing importance of some kind of federal action to be taken,” she said.

Continue Reading

Tagging: @newsfromstolenland

309 notes

·

View notes

Text

^^ quiz if you’re not sure (10-20 mins, it tells you your decile at the end)

#poll#polls#tumblr polls#pollblr#augmented polls#face blindness#faceblind#face blind#facial recognition#prosopagnosia

28 notes

·

View notes

Text

"Nineteen Eighty-Four was not supposed to be an instruction manual."

Yet so much Orwell's writings ring true today. Big Brother Watch is determined to make Nineteen Eighty-Four fiction again.

#Big Brother Watch#George Orwell#Big Brother#1984#Nineteen Eighty Four#thought police#thoughtcrime#authoritarianism#totalitarianism#thought control#Telescreen#facial recognition#Ministry of Truth#misinformation#disinformation#debanking#privacy#Newspeak#freedom of thought#freedom of speech#free speech#safetyism#censorship#religion is a mental illness

49 notes

·

View notes

Text

MPs in Hungary have voted to ban Pride events and allow authorities to use facial recognition software to identify attenders and potentially fine them, in what Amnesty International has described as a “full-frontal attack” on LGBTQ+ people.

The legislation – the latest by the prime minister, Viktor Orbán, and his rightwing populist party to target LGBTQ+ rights – was pushed through parliament on Tuesday. Believed to be the first of its kind in the EU’s recent history, the nationwide ban passed by 136 votes to 27 after it was submitted to parliament one day earlier.

It amends the country’s law on assembly to make it an offence to hold or attend events that violate Hungary’s contentious “child protection” legislation, which bars any “depiction or promotion” of homosexuality to minors under the age of 18.

The legislation was condemned by Amnesty International, which described it as the latest in a series of discriminatory measures the Hungarian authorities have taken against LGBTQ+ people.

“The spurious justification for the passing of this law – that events and assemblies would be ‘harmful to children’ – is based on harmful stereotypes and deeply entrenched discrimination, homophobia and transphobia,” it said in a statement.

“This law is a full-frontal attack on the LGBTI community and a blatant violation of Hungary’s obligations to prohibit discrimination and guarantee freedom of expression and peaceful assembly,” it said, adding that the ban would turn the clock back 30 years in Hungary by undermining hard-won rights.

Hadja Lahbib, the EU commissioner for equality, suggested the new law contravened the values of the 27-nation bloc, posting: “Everyone should be able to be who they are, live & love freely. The right to gather peacefully is a fundamental right to be championed across the European Union. We stand with the LGBTQI community – in Hungary & in all member states.

After lawmakers first submitted the bill on Monday, the organisers of Budapest Pride said the law was aimed at turning the LGBTQ+ minority into a “scapegoat” in order to silence critics of Orbán’s government.

“This is not child protection, this is fascism,” organisers wrote. Budapest Pride will mark its 30th anniversary this year, bringing together thousands of people to make visible the community’s struggle for freedom, safety and equal rights even as those in power continually seek to dehumanise them, it noted.

“The government is trying to restrict peaceful protests with a critical voice by targeting a minority,” it added. “Therefore, as a movement, we will fight for the freedom of all Hungarians to demonstrate.”

Organisers said they planned to go ahead with the march in Budapest, despite the law’s stipulation thatthose who attend a prohibited event could face fines of up to 200,000 Hungarian forints (£425).

As the vote was held, opposition MPs ignited smoke bombs, filling the parliamentary chamber with thick plumes of colourful smoke.

Opposition MPs set off flares in the Hungarian parliament. Photograph: Boglárka Bodnár/AP

After the law’s adoption, a spokesperson for Budapest Pride, Jojó Majercsik, told the Associated Press that the organisation had received an outpouring of support.

“Many, many people have been mobilised,” Majercsik said. “It’s a new thing, compared with the attacks of the last years, that we’ve received many messages and comments from people saying: ‘Until now I haven’t gone to Pride, I didn’t care about it, but this year I’ll be there and I’ll bring my family.’”

Since returning to lead the country in 2010, Orbán has faced criticism for weakening democratic institutions, including accusations of gradually undermining the rule of law.

His government, in turn, has sought to portray itself as a champion of traditional family values, unleashing a crackdown that has drawn parallels with Russia as it adopts measures such as blocking same-sex couples from adopting children and barring any mention of LGBTQ+ issues in school education programmes.

Tamás Dombos, a project coordinator at the Hungarian LGBTQ+ rights group Háttér Society, described Orbán’s assault on minorities as a tactic aimed at distracting voters.

“It’s a very common strategy of authoritarian governments not to talk about the real issues that people are affected by: the inflation, the economy, the terrible condition of education and healthcare,” said Dombos.

Orbán, he continued, “has been here with us for 15 years lying into people’s faces, letting the country rot basically, and then coming up with these hate campaigns”.

The restrictions on Pride come as Orbán is facing an unprecedented challenge from a former member of the Fidesz party’s elite, Péter Magyar, in advance of next year’s elections, leading some to suggest the ban was aimed at winning over far-right voters.

“It is easy to win votes by restricting the rights of a minority in a conservative society,” Szabolcs Hegyi of the Hungarian Civil Liberties Union (TASZ) told Agence France-Presse.

He warned, however, that the curtailing of civil liberties, seemingly for political gain, was a slippery slope. “Eventually, you can get to a situation where virtually no one can protest except those who are not critical of the government’s position

#article#LGBTQ#hungary#pride#police state#facial recognition#privacy#police#censorship#discrimination#europe#the guardian

10 notes

·

View notes

Text

> THREAD: The official narrative of how Luigi Mangione was apprehended doesn’t add up. Evidence suggests a deeper surveillance operation involving real-time facial recognition technology. Let’s delve into the inconsistencies and explore the implications. 🧵 Image Image >Considering Mangione’s efforts to conceal his identity, it’s improbable that a fast-food employee could identify him based solely on limited public images. We’re talking about a high-pressure, fast-paced environment where employees process hundreds of customers daily. >But what if the real key to his capture wasn’t human recognition at all? I’ll bet you didn’t know McDonald’s kiosk cameras have facial recognition technology: pointjupiter.com/work/mcdonalds/ >Given the integration of this technology, it’s not unlikely that federal agencies can access these systems for surveillance with real-time facial recognition across multiple venues. The NSA and other agencies already have a track record of using private surveillance networks. >Admitting the feds are running real-time facial recognition surveillance across the country would spark outrage. Instead, they sell a more "believable" narrative that a heroic employee saved the day. >If this is true, it means federal agencies have access to live camera feeds in private businesses, and they’re using AI to scan and identify individuals in real time. Surveillance isn’t limited to fugitives – it could extend to anyone, anywhere.

>This has massive implications for privacy and civil liberties. If McDonald’s can be used as a hub for mass surveillance, what about other chains? Grocery stores? Gas stations? The infrastructure is already there. Image >Kroger has faced scrutiny for using facial recognition tech too – and it’s even more dystopian. Not only are they scanning faces, but they’re linking that data to shopping profiles and potentially altering prices in real time based on your data. aclu.org/news/privacy-t… >This isn’t just about marketing – it’s surveillance capitalism on steroids. Corporations are turning our faces into data points to manipulate our spending, while the government secretly piggybacks off that infrastructure to track civilians. >We should demand transparency. If facial recognition is being used at this scale, we have a right to know. How much data are these companies collecting? Who else has access to this data, such as third parties and law enforcement? How are these technologies regulated, if at all? >We’re at a crossroads. If we don’t push back now, this kind of tech will become the norm. Surveillance will be baked into every facet of our lives, from shopping to dining to simply walking down the street. Image >The Mangione case and Kroger’s practices show us the future: a world where our faces are not just tracked but exploited, both by corporations seeking profit and governments seeking control. >We need transparency and accountability: Clear limits on the use of facial recognition tech, protections against price manipulation based on profiling, and strong oversight of government access to private surveillance networks. >This isn’t just a McDonald’s or Kroger issue – it’s a systemic shift. Facial recognition is becoming the foundation of a surveillance economy, and we must demand better protections now.

17 notes

·

View notes