#fabric rest api

Fabric REST API now in SimplePBI

La Data Web brings a gift this Christmas. After a long stretch of work, we have added a large number of requests from the Fabric API to the SimplePBI Python library. The global calls are already in preview, and we have tried to cover the most prominent ones.

This is the start of a long development road that will gradually cover more and more categories to make the API easier to use, as we have been doing with Power BI for years.

This article gives an overview of exactly what is available and how to start using it right away.

To set the context, let's start with the theory. SimplePBI is an open-source Python library that makes interacting with the Power BI REST API much simpler. It now also includes the Fabric REST API. This means we don't need to install a new library; updating it is enough. We can do this from a command prompt by running pip, as long as Python is installed and pip is on the PATH. Either of these equivalent commands works:

```shell
pip install --upgrade SimplePBI
pip install -U SimplePBI
```

We need version 1.0.1 or later to get the new functionality.

Prerequisites

As with the Power BI REST API, the first step is to register an app in Azure and grant it the corresponding permissions. For now, all Fabric permissions are listed under the delegated permissions of the "Power BI Service" API. You can follow this article to complete the process: https://blog.ladataweb.com.ar/post/740398550344728576/seteo-powerbi-rest-api-por-primera-vez

Features

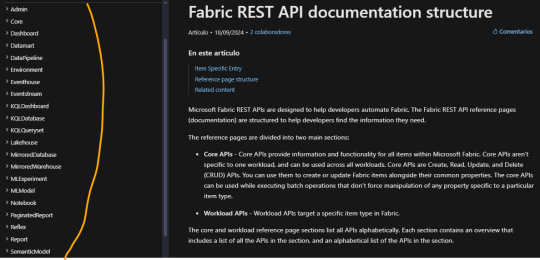

The new addition aims to cover two essential categories of the REST API. Let's look at the documentation for guidance: https://learn.microsoft.com/en-us/rest/api/fabric/articles/api-structure

On the left we can see all the categories we can query or operate on, provided we have permissions. Fabric calls each type of content that can be created in its environment an "Item". For example, an item could be a notebook, a semantic model, or a report. In this first release, we decided to focus on the broadest categories: Admin and Core. Each contains a large number of methods; Admin focuses on a tenant-wide view, and Core on the organization's day-to-day operations. Admin contains subcategories such as domains, items, labels, tenant, users, and workspaces. Core contains others such as capacities, connections, deployment pipelines, gateways, items, job scheduler, long running operations, and workspaces.
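For orientation, each Core subcategory corresponds to plain REST endpoints under `https://api.fabric.microsoft.com/v1`; for instance, Items exposes "List Items" at `GET /workspaces/{workspaceId}/items`. The sketch below is a minimal standard-library illustration, not SimplePBI's implementation: it assumes you already hold a bearer token and only builds the request. The workspace ID and token are placeholders.

```python
import urllib.request

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_list_items_request(workspace_id: str, access_token: str) -> urllib.request.Request:
    """Build (without sending) a Core 'List Items' request for one workspace."""
    url = f"{FABRIC_API}/workspaces/{workspace_id}/items"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


# Placeholder values: replace with a real workspace ID and token
req = build_list_items_request("<workspace-id>", "<access-token>")
print(req.get_method(), req.full_url)
# Sending it with urllib.request.urlopen(req) returns JSON that lists items under "value"
```

Assembling URLs and headers by hand like this is exactly the work a wrapper library such as SimplePBI is meant to hide.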

Usage is very similar to what SimplePBI has always offered, with a slight difference in object initialization, since categories such as admin or core now contain several classes.

To import, we load fabric from simplepbi, specifying the desired category:

```python
from simplepbi.fabric import core
```

To authenticate, we need values from the registered app. We can use a service principal with a secret, or our own credentials. Here is an example of getting a token for the API objects with a service principal:

```python
from simplepbi import token

# Service principal: username and password are None; the app secret authenticates the app
t = token.Token(tenant_id, app_client_id, None, None, app_secret_key, use_service_principal=True)
```
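For reference, a service principal token is normally obtained with the OAuth 2.0 client credentials flow against Microsoft Entra ID. The sketch below only builds that request with the standard library; it assumes the Fabric scope `https://api.fabric.microsoft.com/.default` and is not SimplePBI's internal code. The tenant ID, client ID, and secret are placeholders.

```python
import urllib.parse
import urllib.request


def build_token_request(tenant_id: str, client_id: str, client_secret: str,
                        scope: str = "https://api.fabric.microsoft.com/.default"):
    """Build (without sending) a client-credentials token request for a service principal."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    # The token endpoint expects a form-encoded body, not JSON
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


req = build_token_request("<tenant-id>", "<app-client-id>", "<app-secret-key>")
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen` returns JSON whose `access_token` field is the bearer token used in the `Authorization` header.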

We will try to keep the category names from the documentation matching the name you import. However, some may not match: Fabric's "Admin", for example, can't be used because it already exists in SimplePBI, so we use "adminfab" instead. Then we initialize the object with the desired class from the core category.

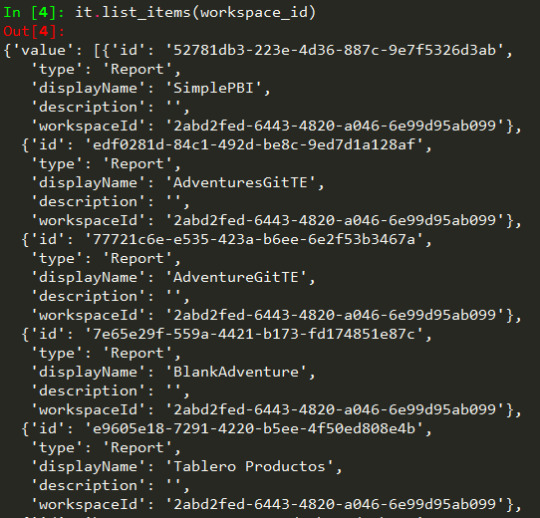

```python
it = core.Items(t.token)
```

This gives us access to every method of Items in Core. For example, listing them:

Considerations

Not all requests work with a service principal. The documentation states whether that authentication method is supported. Read it carefully when something fails, because the request might not support it.

New releases for Core and Admin. We have a long year ahead in which we will keep these categories up to date and gradually plan, by priority, which categories are the most attractive to tackle next.

To learn more, follow us, or contribute, you can find us on PyPI or GitHub.

Remember that not only is the library adding these requests as a preview, but the Fabric API itself is changing every day, with new releases and modifications that could affect how it is used. That's why we ask for your feedback and patience as we build a more robust library together as a community.

#fabric#microsoftfabric#fabric tutorial#fabric training#fabric tips#fabric rest api#power bi rest api#fabric python#ladataweb#simplepbi#fabric argentina#fabric cordoba#fabric jujuy

The modder argument is a fallacy.

We've all heard the argument, "a modder did it in a day, why does Mojang take a year?"

Hi, in case you don't know me, I'm a Minecraft modder. I'm the lead developer for the Sweet Berry Collective, a small modding team focused on quality mods.

I've been working on a mod, Wandering Wizardry, for about a year now, and I only have about a third of an update's worth of new content.

Quality content takes time.

Anyone who does anything creative will agree with me. You need to make the code, the art, the models, all of which takes time.

One of the biggest bottlenecks in anything creative is the flow of ideas. If you have a lot of conflicting ideas you throw together super quickly, they'll all clash with each other, and nothing will feel coherent.

If you instead try to come up with ideas that fit with other parts of the content, you'll quickly run out and get stuck on what to add.

Modders don't need to follow Mojang's standards.

Mojang has a lot of standards on the type of content that's allowed to be in the game. Modders don't need to follow these.

A modder can implement a small feature in 5 minutes disregarding the rest of the game and how it fits in with that.

Mojang has to make sure it works on both Java and Bedrock, make sure it fits with other similar features, make sure it doesn't break progression, and listen to the whole community on that feature.

Mojang can't just buy out mods.

Almost every mod depends on external code that Mojang doesn't have the right to use. Forge, Fabric API, and the Quilt Standard Libraries are all unusable in base Minecraft, as are the dozens of community-maintained libraries that mods rely on.

If Mojang were to buy a mod to implement it in the game, they'd need to partially or fully reimplement it to be compatible with the rest of the codebase.

Mojang does have a tendency to *hire* modders, but that's different from outright buying mods.

Conclusion

Stop weaponizing us against Mojang. I can speak for almost the whole modding community when I say we don't like it.

Please reblog so more people can see this, and to put an end to the modder argument.

#minecraft#minecraft modding#minecraft mods#moddedminecraft#modded minecraft#mob vote#minecraft mob vote#minecraft live#minecraft live 2023#content creation#programming#java#c++#minecraft bedrock#minecraft community#minecraft modding community#forge#fabric#quilt#curseforge#modrinth

our forever world <3 ⊹˚. * . ݁₊ ⊹ ⊹˚. * . ݁₊

click below to see details <3 ⊹˚. * . ݁₊ ⊹

Hello! These are some screenshots my boyfriend took of me running around our long-term modded survival Minecraft world! If you would like me to post the mods I have added (Fabric API through CurseForge for version 1.21.4), please let me know, and I'll gladly do so!

I can also tell you more details if you'd like, such as which resource packs and settings I use. These all run pretty smoothly for me, and I have a GEEKOM mini PC! So, if you'd like some low-spec-PC-friendly mods for quality of life and cozy, casual gameplay, I'd be more than happy to give the creators some love and share their work!

I can also post some builds and updates on here as we progress, if that's something you guys would like to see.

Thanks for reading! Have a lovely rest of your day.

#minecraft screenshots#minecraft youtuber#minecraft build#minecraft server#minecraft#mine#forever world#worldbuilding#modpack#minecraft modpack#modded#minecraft mods#music#aesthetic#cottagecore#cottage aesthetic#cozycore#cozy aesthetic#cozy vibes#sandbox games#survivalworld#survival games#forever#boyfriend#cute#cozy games#stardew valley#fantasy#medieval#show us your builds

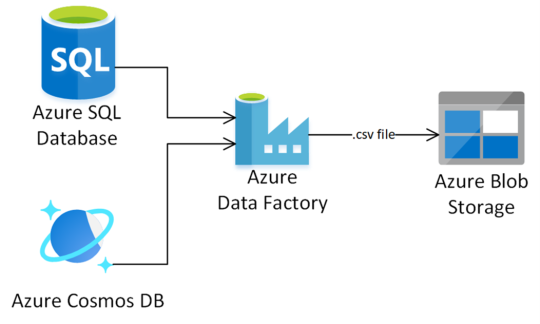

What’s New in Azure Data Factory? Latest Features and Updates

Azure Data Factory (ADF) has introduced several notable enhancements over the past year, focusing on expanding data movement capabilities, improving data flow performance, and enhancing developer productivity. Here’s a consolidated overview of the latest features and updates:

Data Movement Enhancements

Expanded Connector Support: ADF has broadened its range of supported data sources and destinations:

Azure Table Storage and Azure Files: Both connectors now support system-assigned and user-assigned managed identity authentication, enhancing security and simplifying access management.

ServiceNow Connector: Introduced in June 2024, this connector offers improved native support in Copy and Lookup activities, streamlining data integration from ServiceNow platforms.

PostgreSQL and Google BigQuery: New connectors provide enhanced native support and improved copy performance, facilitating efficient data transfers.

Snowflake Connector: Supports both Basic and Key pair authentication for source and sink, offering flexibility in secure data handling.

Microsoft Fabric Warehouse: New connectors are available for Copy, Lookup, Get Metadata, Script, and Stored Procedure activities, enabling seamless integration with Microsoft’s data warehousing solutions.

Data Flow and Processing Improvements

Spark 3.3 Integration: In April 2024, ADF updated its Mapping Data Flows to utilize Spark 3.3, enhancing performance and compatibility with modern data processing tasks.

Increased Pipeline Activity Limit: The maximum number of activities per pipeline has been raised to 80, allowing for more complex workflows within a single pipeline.

Developer Productivity Features

Learning Center Integration: A new Learning Center is now accessible within the ADF Studio, providing users with centralized access to tutorials, feature updates, best practices, and training modules, thereby reducing the learning curve for new users.

Community Contributions to Template Gallery: ADF now accepts pipeline template submissions from the community, fostering collaboration and enabling users to share and leverage custom templates.

Enhanced Azure Portal Design: The Azure portal features a redesigned interface for launching ADF Studio, improving discoverability and user experience.

Upcoming Features

Looking ahead, several features for Data Factory in Microsoft Fabric, the Fabric-based counterpart of ADF, are slated for release in Q1 2025:

Dataflow Gen2 Enhancements:

CI/CD and Public API Support: Enabling continuous integration and deployment capabilities, along with programmatic interactions via REST APIs.

Incremental Refresh: Optimizing dataflow execution by retrieving only changed data, with support for Lakehouse destinations.

Parameterization and ‘Save As’ Functionality: Allowing dynamic dataflows and easy duplication of existing dataflows for improved efficiency.

Copy Job Enhancements:

Incremental Copy without Watermark Columns: Introducing native Change Data Capture (CDC) capabilities for key connectors, eliminating the need for specifying incremental columns.

CI/CD and Public API Support: Facilitating streamlined deployment and programmatic management of Copy Job items.
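For context, the pattern that CDC-based incremental copy is meant to replace is the watermark: remember the highest value of an incremental column seen so far and copy only rows above it. This is a minimal in-memory Python sketch of that idea; the column name `modified_at` and the rows are hypothetical, and real pipelines store the watermark between runs.

```python
from datetime import datetime


def incremental_batch(rows, watermark, column="modified_at"):
    """Return rows changed after the watermark, plus the new watermark value."""
    # Strictly greater: rows stamped exactly at the old watermark were copied last run
    changed = [row for row in rows if row[column] > watermark]
    new_watermark = max((row[column] for row in changed), default=watermark)
    return changed, new_watermark


rows = [
    {"id": 1, "modified_at": datetime(2024, 12, 1)},
    {"id": 2, "modified_at": datetime(2024, 12, 5)},
    {"id": 3, "modified_at": datetime(2024, 12, 9)},
]
changed, watermark = incremental_batch(rows, datetime(2024, 12, 3))
print([row["id"] for row in changed], watermark)  # [2, 3] 2024-12-09 00:00:00
```

The weakness this exposes is why native CDC is attractive: a watermark needs a reliable, always-updated column in every source table and cannot see deleted rows.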

These updates reflect Azure Data Factory’s commitment to evolving in response to user feedback and the dynamic data integration landscape. For a more in-depth exploration of these features, you can refer to the official Azure Data Factory documentation.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Exploring the Key Components of Cisco ACI Architecture

Cisco ACI (Application Centric Infrastructure) is a transformative network architecture designed to streamline data center management by enabling centralized automation and policy-driven management. In this blog, we will explore the key components of Cisco ACI architecture, highlighting how it facilitates seamless connectivity, scalability, and flexibility within modern data centers.

Whether you are a network professional or a beginner, Cisco ACI training offers invaluable insights into mastering the complexities of ACI. Understanding its core components is essential for optimizing performance and ensuring a secure, efficient network environment. Let's dive into the foundational elements that make up this powerful solution.

Introduction to Cisco ACI Architecture

Cisco ACI is a software-defined networking (SDN) solution designed for modern data centers. It provides a centralized framework to manage networks through a policy-based approach, enabling administrators to automate workflows and enforce application-centric policies efficiently. The architecture is built on a fabric model, incorporating a spine-leaf topology for optimized communication.

Core Components of Cisco ACI

Application Policy Infrastructure Controller (APIC):

The brain of the Cisco ACI architecture.

Manages and monitors the fabric while enforcing policies.

Provides a centralized RESTful API for automation and integration.

Leaf and Spine Switches:

Spine Switches: Handle high-speed inter-leaf communication.

Leaf Switches: Connect endpoints such as servers, storage devices, and other network resources.

Together, they create a low-latency, highly scalable topology.

Endpoint Groups (EPGs):

Logical groupings of endpoints that share similar application or policy requirements.

Simplify the application of policies across connected devices.
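To make the APIC's RESTful API concrete: a client typically starts a session by posting credentials to `/api/aaaLogin.json`. The sketch below only builds that request with the Python standard library; the host name and credentials are placeholders, and certificate handling and error checking are left out.

```python
import json
import urllib.request


def build_apic_login(apic_host: str, username: str, password: str) -> urllib.request.Request:
    """Build (without sending) an APIC aaaLogin request."""
    url = f"https://{apic_host}/api/aaaLogin.json"
    payload = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical controller address and placeholder credentials
req = build_apic_login("apic.example.com", "admin", "<password>")
print(req.get_method(), req.full_url)
```

A successful login returns a session token that later requests present (the APIC also sets it as the `APIC-cookie` cookie); objects such as tenants can then be read through class queries like `/api/class/fvTenant.json`.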

Cisco ACI Fabric

The ACI fabric forms the backbone of the architecture, ensuring seamless communication between components. It is based on a spine-leaf topology, where each leaf connects to every spine.

Features:

Uses VXLAN (Virtual Extensible LAN) for overlay networking.

Ensures scalability with distributed intelligence and endpoint learning.

Provides high availability and fault tolerance.

Benefits:

Eliminates bottlenecks with uniform traffic distribution.

Simplifies network operations through centralized management.
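Because every leaf connects to every spine, the fabric has leaves × spines links, and traffic between two leaves can take any spine as an equal-cost path. The sketch below illustrates both ideas; it uses SHA-256 over a flow's 5-tuple as a stand-in for the hardware hash a real switch uses for ECMP, so it shows the principle rather than ACI's actual algorithm.

```python
import hashlib


def fabric_links(num_leaves: int, num_spines: int) -> int:
    # Full mesh between tiers: every leaf has one uplink to every spine
    return num_leaves * num_spines


def pick_spine(flow: tuple, num_spines: int) -> int:
    """Choose an uplink for a flow by hashing its 5-tuple (ECMP-style)."""
    key = "|".join(map(str, flow)).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_spines


print(fabric_links(8, 4))  # 32
flow = ("10.0.1.5", "10.0.2.9", 6, 51000, 443)  # src IP, dst IP, protocol, src port, dst port
print(pick_spine(flow, 4))  # the same flow always maps to the same spine
```

Hashing per flow keeps packets of one connection on one path (avoiding reordering) while different flows spread across all spines.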

Policy Model in Cisco ACI

The policy-driven approach is central to Cisco ACI’s architecture. It enables administrators to define how applications and endpoints interact, reducing complexity and errors.

Key Elements:

Tenants: Logical units for resource isolation.

EPGs: Define groups of endpoints with shared policies.

Contracts and Filters: Govern communication between EPGs.

Advantages:

Provides consistency across the network.

Enhances security by enforcing predefined policies.

Tenants in Cisco ACI

Tenants are fundamental to Cisco ACI’s multi-tenancy model. They provide logical segmentation of resources within the network.

Types of Tenants:

Management Tenant: Manages the ACI fabric and infrastructure.

Common Tenant: Shares resources across multiple users or applications.

Custom Tenants: Created for specific business units or use cases.

Benefits:

Enables secure isolation of resources.

Simplifies management in multi-tenant environments.

Contracts and Filters

Contracts and filters define how endpoints within different EPGs interact.

Contracts:

Specify traffic rules between EPGs.

Include criteria such as protocols, ports, and permissions.

Filters:

Provide granular control over traffic flow.

Allow administrators to define specific policies for allowed or denied communication.
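The contract model above can be sketched as a whitelist check: traffic between two EPGs is dropped unless a contract links them and one of its filters matches. This is a simplified conceptual model in Python, not the APIC object model; the EPG names, contract name, and port are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Filter:
    protocol: str  # e.g. "tcp" or "udp"
    port: int      # destination port


@dataclass
class Contract:
    name: str
    consumer_epg: str  # EPG that initiates traffic
    provider_epg: str  # EPG that offers the service
    filters: list = field(default_factory=list)


def is_allowed(contracts, src_epg, dst_epg, protocol, port):
    """Whitelist check: inter-EPG traffic is denied unless a contract's filter matches."""
    if src_epg == dst_epg:
        return True  # simplification: traffic inside one EPG is allowed by default
    return any(
        c.consumer_epg == src_epg
        and c.provider_epg == dst_epg
        and any(f.protocol == protocol and f.port == port for f in c.filters)
        for c in contracts
    )


web_to_app = Contract("web-to-app", consumer_epg="web", provider_epg="app",
                      filters=[Filter("tcp", 8080)])
print(is_allowed([web_to_app], "web", "app", "tcp", 8080))  # True
print(is_allowed([web_to_app], "web", "app", "tcp", 22))    # False
```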

Endpoint Discovery and Learning

Cisco ACI simplifies network operations with dynamic endpoint discovery.

How It Works:

Leaf switches identify endpoints by tracking MAC and IP addresses.

Updates to the fabric are automatic, reflecting real-time changes.

Benefits:

Reduces manual intervention.

Ensures efficient resource utilization and adaptability.
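Endpoint learning boils down to keeping a table keyed by MAC address and updating it whenever an endpoint shows up somewhere new. The Python sketch below is a toy model of that bookkeeping; in ACI, leaves learn from data-plane traffic and also report endpoints to a mapping database on the spine switches, which this sketch does not model. The MAC, IP, and interface names are hypothetical.

```python
def learn_endpoint(table: dict, mac: str, ip: str, interface: str) -> bool:
    """Record where an endpoint was seen; return True if it moved to a new interface."""
    previous = table.get(mac)
    table[mac] = {"ip": ip, "interface": interface}
    return previous is not None and previous["interface"] != interface


endpoints = {}
learn_endpoint(endpoints, "00:50:56:aa:bb:cc", "10.0.1.5", "eth1/1")
moved = learn_endpoint(endpoints, "00:50:56:aa:bb:cc", "10.0.1.5", "eth1/7")
print(moved, endpoints["00:50:56:aa:bb:cc"]["interface"])  # True eth1/7
```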

Role of VXLAN in Cisco ACI

VXLAN is a critical technology in Cisco ACI, enabling overlay networking for scalable data centers.

Features:

Encapsulates Layer 2 traffic over a Layer 3 network.

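Supports about 16 million segments: the 24-bit VXLAN Network Identifier (VNI) allows 2^24 = 16,777,216 IDs, versus 4,094 usable IDs with traditional 12-bit VLANs.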

Benefits:

Enhances network flexibility and scalability.

Simplifies workload mobility without reconfiguring the physical network.
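The 16-million figure comes straight from the header layout. The sketch below packs the standard 8-byte VXLAN header from RFC 7348 (an 8-bit flags field with the "VNI valid" bit set, then a 24-bit VNI); ACI itself uses an extended variant internally that carries extra policy information, which this sketch does not model.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Pack the standard 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits; word 2: VNI (24 bits) + 8 reserved bits
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


print(2 ** 24)                     # 16777216 possible segment IDs
print(vxlan_header(5000).hex())    # 0800000000138800
```

This header sits inside a UDP datagram, which is how Layer 2 frames ride across the routed Layer 3 fabric.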

Integrations with External Networks

Cisco ACI integrates seamlessly with legacy and external networks, ensuring smooth interoperability.

Connectivity Options:

Layer 2 Out (L2Out): Provides direct Layer 2 connectivity to external devices.

Layer 3 Out (L3Out): Establishes Layer 3 routing to external systems.

Advantages:

Facilitates gradual migration to ACI.

Bridges new and existing network infrastructures.

Advantages of Cisco ACI Architecture

Scalability: Supports rapid growth with a spine-leaf topology.

Automation: Reduces complexity with policy-driven management.

Security: Enhances protection through segmentation and controlled interactions.

Flexibility: Adapts to hybrid and multi-cloud environments.

Efficiency: Simplifies network operations, reducing administrative overhead.

Conclusion

In conclusion, understanding the key components of Cisco ACI architecture is essential for designing and managing modern data center networks. By integrating software and hardware, ACI offers a scalable, secure, and automated network environment.

With its centralized policy model and simplified management, Cisco ACI transforms network operations. For those looking to gain in-depth knowledge and practical skills in Cisco ACI, enrolling in a Cisco ACI course can be a valuable step toward mastering this powerful technology and staying ahead in the ever-evolving world of networking.

Microsoft SQL Server 2025: A New Era Of Data Management

Microsoft SQL Server 2025: An enterprise database prepared for artificial intelligence from the ground up

Azure customers' data estates and applications face new challenges as AI adoption grows. With privacy and security more crucial than ever, most enterprises expect to deploy AI workloads across a hybrid mix of cloud, edge, and dedicated infrastructure.

To address these challenges, Microsoft SQL Server 2025, now in preview, is an enterprise AI-ready database from ground to cloud that brings AI to customer data. This version adds new AI capabilities on top of SQL Server's thirty years of performance and security innovation. Customers can integrate their data with Microsoft Fabric for next-generation data analytics. The release builds on Microsoft Azure innovation for customers' databases and supports hybrid setups across cloud, on-premises datacenters, and edge.

SQL Server is now much more than a conventional relational database. With this release, customers can build AI applications that are tightly integrated with the SQL engine. With built-in filtering and vector search, SQL Server 2025 is becoming a vector database in its own right, one that performs well and is simple for T-SQL developers to use.

AI built-in

This version has AI built in and uses familiar T-SQL syntax, making it easier to build AI applications and retrieval-augmented generation (RAG) patterns with secure, efficient, and easy-to-use vector support. You can combine vectors with your SQL data to run hybrid AI vector searches.

Utilize your company database to create AI apps

Bringing enterprise AI to your data, SQL Server 2025 is an enterprise-ready vector database with integrated security and compliance. Its vector store and index are powered by DiskANN, a vector search technology that uses disk storage to efficiently find similar data points in massive datasets. Efficient chunking enables accurate retrieval through semantic search. The engine also manages AI models flexibly through Representational State Transfer (REST) interfaces, so you can use AI models from ground to cloud.

Furthermore, extensible, low-code tools provide versatile model interfaces within the SQL engine, backed by T-SQL and external REST APIs, whether customers are working on data preprocessing, model training, or RAG patterns. By integrating with well-known AI frameworks such as LangChain, Semantic Kernel, and Entity Framework Core, these tools help developers build a wide range of AI applications.
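To show what "finding similar data points" means, here is an exact, brute-force cosine-distance search in plain Python. It is a conceptual sketch only: SQL Server 2025 exposes vector search through T-SQL (for example a `VECTOR_DISTANCE` function in the preview, whose surface may change), and DiskANN replaces this full scan with an approximate graph index so it scales to massive datasets. The three-dimensional "embeddings" are made up.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: 0 means same direction, larger means less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def top_k(query, rows, k=2):
    """Exact (brute-force) k-nearest-neighbour search over (label, vector) rows."""
    return sorted(rows, key=lambda row: cosine_distance(query, row[1]))[:k]


# Toy 3-dimensional "embeddings"; real models produce hundreds of dimensions
docs = [
    ("refund policy", [0.9, 0.1, 0.0]),
    ("shipping times", [0.1, 0.9, 0.0]),
    ("returns and refunds", [0.8, 0.2, 0.1]),
]
print([label for label, _ in top_k([1.0, 0.0, 0.0], docs)])
# ['refund policy', 'returns and refunds']
```

A brute-force scan costs one distance computation per row, which is why approximate indexes such as DiskANN matter once tables grow large.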

Increase the productivity of developers

To increase developers' productivity, extensibility, frameworks, and data enrichment are crucial for building data-intensive applications such as AI apps. Features like REST API support, GraphQL integration via Data API Builder, and regular expression support help ensure that SQL gives developers the best possible experience. Furthermore, native JSON support makes it easier to handle hierarchical data and frequently changing schemas, allowing for more dynamic apps. Overall, SQL development is being made more user-friendly, performant, and extensible. The SQL Server engine's security underpins all of these features, making it a genuinely enterprise-ready AI platform.

Top-notch performance and security

In terms of database security and performance, SQL Server 2025 leads the industry. Support for Microsoft Entra managed identities improves credential management, reduces potential vulnerabilities, and provides compliance and auditing capabilities. SQL Server 2025 also introduces outbound authentication with Managed Service Identity (MSI) for SQL Server enabled by Azure Arc.

Additionally, it brings to SQL Server performance and availability improvements that have been extensively tested in Azure SQL. Improved query optimization and query execution in this version can increase workload performance and reduce troubleshooting. Optional Parameter Plan Optimization (OPPO) is designed to greatly reduce problematic parameter sniffing by letting SQL Server choose the best execution plan based on the runtime parameter values the customer supplies.

Persisted statistics for secondary replicas prevent statistics from being lost during a restart or failover, avoiding a possible drop in performance. Enhancements to batch mode processing and columnstore indexing further strengthen SQL Server's position as a mission-critical database for analytical workloads.

Through Transaction ID (TID) Locking and Lock After Qualification (LAQ), optimized locking minimizes blocking for concurrent transactions and lowers lock memory consumption. Customers can improve concurrency, scalability, and uptime for SQL Server applications with this functionality.

Change event streaming for SQL Server supports command query responsibility segregation (CQRS), real-time intelligence, and real-time application integration with event-driven architectures. New database engine capabilities will enable near real-time capture and publication of incremental data and schema changes to a specified destination, such as Azure Event Hubs or Kafka.

Connected with Azure Arc and Microsoft Fabric

In traditional data warehouse and data lake scenarios, integrating all of your data requires designing, overseeing, and administering complex ETL (Extract, Transform, Load) processes to move operational data out of SQL Server. Because these traditional techniques can't transfer data in real time, they introduce latency that hinders real-time analytics. To meet the demands of modern analytical workloads, Microsoft Fabric provides comprehensive, integrated, and AI-enhanced data analytics services.

The fully managed, robust Mirrored SQL Server Database in Fabric makes it easy to replicate SQL Server data to Microsoft OneLake in near real time. Mirroring lets customers continuously replicate data from SQL Server databases running on Azure virtual machines or outside Azure, serving online transaction processing (OLTP) or operational store workloads, directly into OneLake to support analytics and insights on the unified Fabric data platform.

Azure is still an essential part of SQL Server. To help clients better manage, safeguard, and control their SQL estate at scale across on-premises and cloud, SQL Server 2025 will continue to offer cloud capabilities with Azure Arc. Customers can further improve their business continuity and expedite everyday activities with features like monitoring, automatic patching, automatic backups, and Best Practices Assessment. Additionally, Azure Arc makes SQL Server licensing easier by providing a pay-as-you-go option, giving its clients flexibility and license insight.

SQL Server 2025 release date

Microsoft hasn't set a SQL Server 2025 release date. Based on current information, we can make some educated guesses:

Private Preview: SQL Server 2025 is in private preview, so a small set of users can test it and provide feedback.

Public Preview: Microsoft may offer a public preview in 2025 to let more people try the new features.

General Availability: SQL Server 2025's final release date is unknown, but it is expected in 2025.

Read more on govindhtech.com

#MicrosoftSQLServer2025#DataManagement#SQLServer#retrievalaugmentedgeneration#RAG#vectorsearch#GraphQL#Azurearc#MicrosoftAzure#MicrosoftFabric#OneLake#azure#microsoft#technology#technews#news#govindhtech

API architectural styles determine how applications communicate. The choice of an API architecture can have significant implications on the efficiency, flexibility, and robustness of an application. So it is very important to choose based on your application's requirements, not just what is often used. Let’s examine some prominent styles:

REST https://drp.li/what-is-a-rest-api-z7lk…

GraphQL https://drp.li/graphql-how-does-it-work-z7l…

SOAP https://drp.li/soap-how-does-it-work-z7lk…

gRPC https://drp.li/what-is-grpc-z7lk…

WebSockets https://drp.li/webhooks-and-websockets-z7lk…

MQTT https://drp.li/automation-with-mqtt-z7lk…

API architectural styles are strategic choices that influence the very fabric of application interactions. To learn more: https://drp.li/state-of-the-api-report-z7dp…
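As a small illustration of how two of these styles differ on the wire, the sketch below builds (but does not send) the same "fetch a user" request in REST and GraphQL form using only the Python standard library. The service at `api.example.com`, its `/users/{id}` path, and its GraphQL schema are hypothetical.

```python
import json
import urllib.request

BASE = "https://api.example.com"  # hypothetical service


def rest_get_user(user_id: str) -> urllib.request.Request:
    # REST: the URL identifies the resource; the HTTP verb states the action
    return urllib.request.Request(f"{BASE}/users/{user_id}", method="GET")


def graphql_get_user(user_id: str) -> urllib.request.Request:
    # GraphQL: one endpoint; the query names exactly the fields the client wants
    query = "query($id: ID!) { user(id: $id) { name email } }"
    body = json.dumps({"query": query, "variables": {"id": user_id}}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE}/graphql",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


for req in (rest_get_user("42"), graphql_get_user("42")):
    print(req.get_method(), req.full_url)
```

REST spreads resources across many URLs and lets the server decide the response shape; GraphQL concentrates everything on one endpoint and lets the client choose the fields, which trades simpler caching for more flexible queries.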

No. 4: HALLUCINATIONS

Hypnosis | Sensory Deprivation | “You’re still alive in my head.” (Billy Lockett, More)

OC Whump

Hi, here is my contribution No. 4 for Whumptober!

A bit of context: When he was younger, the Ensorceleur fled his home and met a man who drew him into his mercenary army. He trusted this man completely, without realizing that their relationship was anything but healthy. After years of committing atrocities on behalf of his mentor, he finally opened his eyes and left. But the experience has definitely left a mark.

If you have any questions, I'd obviously be more than happy to answer! Also, English isn't my first language, so I apologize for any mistakes. Check the tags for TW and enjoy!

The world moved around him without him seeming to belong to it. His body seemed to exist in a different space-time, heavy and slow, while a complex choreography of fluid movements seemed to take place around him. A thick, heavy fabric limited his movements and separated him from the rest of the world. On a deeper level, the Ensorceleur recognized the effects of an active substance, probably an opioid administered to calm the raging pain that had taken hold of his decomposing right arm. This recognition, however, didn't allow him to act on the consequences, which didn't help the swarm of agitated people next to him calm down.

Standing next to his shivering friend who was clearly in a state of shock, Api struggled to retain any vestiges of composure.

-If there's one fucking piece of information that's correct and accurate in his file, it's that he reacts badly to opioids!

-It wasn't in his file, sir! retorted the young apprentice, on the verge of tears.

-Then who messed with the files?!

-I did the best I could with what I had, sir!

-Damn it!

At his wits' end, the healer turned away and took a deep breath to calm himself. Well, at least the drug seemed to have greatly reduced the physical pain, which was the primary objective. On the negative side, the mercenary looked more distressed than Api had ever seen him.

The Ensorceleur buried his head in his knees with a moan, drawing his attention. The man who treated a show of weakness as the worst thing that could happen to him moaned. The healer dropped to one knee, hesitantly bringing his hands up to the other man. The problem with trying to heal an Entity completely drugged and trained to kill was that the slightest miscalculated gesture could have dramatic consequences.

-Easy, breathed a voice behind his ear before he could make contact with his friend.

Crouching beside him, Bryan regarded the Ensorceleur with a worried expression.

-If possible, avoid touching him. He sometimes reacts...violently, when he's not in his normal state.

-Has anything like this ever happened before? the healer inquired cautiously.

The guild leader hesitated visibly, because...

-With his metabolism, yes, from time to time... Don't look at me like that! he quickly defended himself against the healer's glare. When he was in top form, we tried to get him to agree to several potential treatment plans for when they'd be needed, and he always refused! Except that once he was injured, we had no choice but to try to treat him with what little medical history we had. So yes, sometimes things got out of hand, and I've seen him in that kind of state before.

The Ensorceleur muttered a series of garbled words incomprehensible to them, and Bryan winced.

-Well, maybe not like this. His reactions to opioids are one of the pieces of information he's shared with us on his own.

-Hey. I need you to focus on us and try to communicate how you're feeling. I have a drug with an antagonistic effect that may help you feel better, but with your strange metabolism, I'd rather we let the effect wear off on its own. But I need to know how you feel, Api said slowly and distinctly to his patient.

The Ensorceleur could have answered him. He could have told him immediately to give him the strongest possible dose of his magic product. In fact, he would probably have begged him to do so, had he been able to hear what Api was saying.

But the ghostly hand resting on the back of his neck like the executioner's guillotine had ensured that his undivided attention went to the only person in the room worthy of it.

Didn't I teach you that showing weakness is the best way to get others to stab you in the back?

Not real. He wasn't. He was drugged, and he absolutely had to hold onto that thought. At all costs.

You've never been one to hide behind lies. But I guess that's what you needed to keep hiding behind Silver Shein's back like a scared child.

The hand had more weight now, nails digging into flesh.

It's pathetic. You look like a beaten dog. But I suppose my disgust is normal. Few artists are ever satisfied with their creations.

The Ensorceleur exhaled the liquid lead in his lungs in a long, hoarse hiss and tried to convince himself that the hand on the back of his neck was more reassuring than terrifying, whether it belonged to Api or Bryan, or even Freya, who distrusted him but wouldn't hurt him for no reason, least of all in front of Bryan's eyes.

He forced himself to open his eyes and stare at Api's anxious face hovering in front of him. Whatever he felt behind him wasn't real. Just a hallucination brought on by the painkiller. Nothing that could hurt him, just a conspiracy of his brain and senses. If he concentrated on Api's features, on his reassuring presence, then the hallucinations would have a harder time dragging him into the dark corner of his consciousness where they resided.

Except that a pale face burst into his field of vision, blocking his view of his friend. The Ensorceleur gasped and threw himself backwards. His skull hit the wall with a thud and white flashed across his retina for a second, just a second; that was enough.

A leather-gloved iron fist closed around his neck, strangling the scream. A weight, far heavier than it should have been, crushed his hips, pinning him to the ground, and Magister leaned over him, smiling broadly, his pupils two black holes dripping ink onto his face.

Perhaps your brother's son would make a better canvas... or a better receptacle!

The man's face melted, lengthened a little, and his hair grew and lightened until a mass of curls framed familiar features. A grotesque parody of Lucien laughed in his face, before vomiting black, stale blood onto his chest. The Ensorceleur received a few drops in his mouth and audibly choked, struggling to free himself from his mentor's grasp.

-No. N-no...

He's choking.

Even now, you don't beg. Is there anything that could make you give up your misplaced pride? Are they so insignificant to you, those you claim to protect?

-Nooo...

We'll see, whispered the abomination with his nephew’s face. We'll see how quickly you fall at his feet...

When I've repaired your mistake and got my new suit of flesh, finished Magister, his mentor, master, friend and executioner.

Through the delirious terror (not for himself, never for himself, because his master would never hurt him, but the others, the insignificant...) that clouded his mind, he became aware of an increasingly acute pain in his arm. He resumed his pitiful attempts to free himself. He was the Ensorceleur, he had to fight, to keep going, to do the only thing he was good at...

But he had never been able to make even a violent gesture towards Magister.

You love me more than you've ever loved anyone.

Warm breath on his nose. Ice-blue eyes, punctuated with shadows and shades, so close he could almost see the constellations formed by the black flakes in the iris.

I'll try to sedate him

Watch his arm

Moist warmth on his cheeks, distant and impersonal. Emotions, some blunted and others too vivid to comprehend, clashed and left him torn, barely able to put together the pieces that made him the Ensorceleur.

I love you.

A sharp but localized pain in his arm.

I forgive you.

The last image to follow him into the muddy waters of unconsciousness was those icy eyes. Or... warm brown, perhaps?

He preferred this softer brown.

The Ensorceleur let himself be drawn under the surface, where neither ghosts nor memories could follow him.

You belong to me, after all.

#whumptober2024#no.4#Hallucinations#You’re still alive in my head#OC#fic#fantasy settings#angst#Drugged whumpee#Implied past-abuse#Implied unhealthy relationship#Probably an inappropriate way to handle this type of situation


Cisco Nexus Dashboard Fabric Controller Arbitrary Command Execution Vulnerability [CVE-2024-20432]

CVE number: CVE-2024-20432

A vulnerability in the REST API and web UI of Cisco Nexus Dashboard Fabric Controller (NDFC) could allow an authenticated, low-privileged, remote attacker to perform a command injection attack against an affected device. This vulnerability is due to improper user authorization and insufficient validation of command arguments. An attacker could exploit this vulnerability by submitting crafted commands to an affected REST API endpoint or through the web UI. A successful exploit could allow the attacker to execute arbitrary commands on the CLI of a Cisco NDFC-managed device with network-admin privileges. Note: This vulnerability does not affect Cisco NDFC when it is configured for storage area network (SAN) controller deployment. Cisco has released software updates that address this vulnerability. There are no workarounds that address this vulnerability. This advisory is available at the following link: https://sec.cloudapps.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-ndfc-cmdinj-UvYZrKfr
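The advisory traces the flaw to improper authorization and insufficient validation of command arguments. The Python sketch below is a generic illustration of that vulnerability class. It is not Cisco's code and says nothing about how NDFC is built. It contrasts a command line assembled from strings with an allowlist check that returns an argument list meant for `subprocess.run(..., shell=False)`. The command names and patterns in `ALLOWED_COMMANDS` are hypothetical, and the authorization half of the problem (checking the caller's role) is not shown.

```python
import re

# Hypothetical allowlist: each permitted command maps to a pattern
# that every one of its arguments must match in full.
ALLOWED_COMMANDS = {
    # hostnames or IPv4 literals; a leading '-' is refused to block option injection
    "ping": re.compile(r"[A-Za-z0-9][A-Za-z0-9.\-]{0,252}"),
    # keywords such as "version" or "interface"
    "show": re.compile(r"[a-z][a-z0-9\-]{0,31}"),
}


def naive_command_line(command: str, args: list[str]) -> str:
    """Unsafe pattern: user input concatenated into one string for a shell.

    A value like "10.0.0.1; reboot" smuggles a second command through.
    """
    return command + " " + " ".join(args)


def build_argv(command: str, args: list[str]) -> list[str]:
    """Validate the command and its arguments, then return an argv list.

    The result is meant for subprocess.run(argv, shell=False), so no shell
    ever interprets metacharacters such as ';', '|' or '$(...)'.
    """
    pattern = ALLOWED_COMMANDS.get(command)
    if pattern is None:
        raise ValueError(f"command not allowed: {command!r}")
    for arg in args:
        if not pattern.fullmatch(arg):
            raise ValueError(f"rejected argument: {arg!r}")
    return [command, *args]


if __name__ == "__main__":
    print(naive_command_line("ping", ["10.0.0.1; reboot"]))  # injected command survives
    print(build_argv("ping", ["10.0.0.1"]))
    try:
        build_argv("ping", ["10.0.0.1; reboot"])
    except ValueError as exc:
        print(exc)
```

The allowlist approach rejects anything it does not recognize. A denylist of "dangerous" characters tends to miss cases.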


Cisco Nexus Vulnerability Let Hackers Execute Arbitrary Commands on Vulnerable Systems

A critical vulnerability has been discovered in Cisco's Nexus Dashboard Fabric Controller (NDFC), potentially allowing hackers to execute arbitrary commands on affected systems. This flaw, identified as CVE-2024-20432, was first published on October 2, 2024. Its CVSS score of 9.9 indicates its severe impact.

Vulnerability Details

The vulnerability resides in the Cisco NDFC's REST API […]


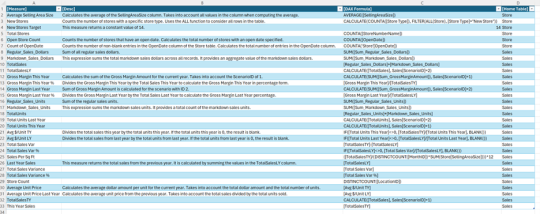

[DAX] INFO functions

Several months ago, Microsoft surprised us by adding new DAX functions that return information about the model. Knowing a model's details becomes essential when documenting it or when working for the first time with a model someone else built.

There are now more than 50 new functions that let us ask the model for specific details, such as the expressions behind our measures or the row-level security roles that have been created.

The truth is that there was already a way to get at this information. Since the early days of tabular models we have had access to DMVs (Dynamic Management Views). As we like to do here at LaDataWeb, let's first look at the theory:

Analysis Services Dynamic Management Views (DMVs) are queries that return information about model objects, server operations, and server health. The query, based on SQL, is an interface to schema rowsets.

If you want a refresher or an example, you can check out this old article. The new INFO schema in DAX gives us more than 50 functions for retrieving information from the DMV TMSCHEMA schema.

If it already existed... why all the fuss?

The DAX INFO functions improve on some things we couldn't do before, and they open up creative uses we may not have thought of.

You don't need a query syntax other than DAX (previously, SQL) to see information about your semantic model. They are native DAX functions and show up in IntelliSense when you type INFO.

You can combine them with other DAX functions, which the existing DMV query syntax doesn't allow. Because they are DAX functions, their output is a table, so you can use existing DAX functions that join or summarize tables.

These are the features that first caught people's attention. Now we can think purely in DAX. If we query INFO for tables, relationships, columns, or measures, we can combine them to enrich our query's result. For example, to list each measure together with the name of its home table, we could run something like this:

```dax
EVALUATE
// One row per measure, keeping the ID of the table it lives in
VAR _measures =
    SELECTCOLUMNS(
        INFO.MEASURES(),
        "Measure", [Name],
        "Desc", [Description],
        "DAX formula", [Expression],
        "TableID", [TableID]
    )
// Table IDs and names, with the ID column renamed so it matches _measures
VAR _tables =
    SELECTCOLUMNS(
        INFO.TABLES(),
        "TableID", [ID],
        "Table", [Name]
    )
// Join on the shared "TableID" column name
VAR _combined = NATURALLEFTOUTERJOIN(_measures, _tables)
RETURN
    SELECTCOLUMNS(
        _combined,
        "Measure", [Measure],
        "Desc", [Desc],
        "DAX Formula", [DAX formula],
        "Home Table", [Table]
    )
```

This gives us a table with each measure's name, description, and DAX expression, along with the name of its home table. The result can serve as model documentation exported to Excel.
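To turn a result like this into a file Excel can open, a minimal Python sketch could write the rows to CSV. It assumes the rows arrive as a list of dictionaries keyed the way the Power BI `executeQueries` REST response keys them, where computed columns appear as `[Measure]`, `[Desc]`, and so on. The sample values and the `write_csv` helper name are hypothetical.

```python
import csv
import io


def clean_key(key: str) -> str:
    """Strip the outer brackets from computed-column keys such as "[Measure]".

    Keys of model columns, like "Sales[Amount]", are left unchanged.
    """
    if key.startswith("[") and key.endswith("]"):
        return key[1:-1]
    return key


def rows_to_csv(rows: list[dict]) -> str:
    """Serialize query rows to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    fieldnames = [clean_key(k) for k in rows[0]]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        # None (e.g. a measure without a description) is written as an empty field.
        writer.writerow({clean_key(k): v for k, v in row.items()})
    return buffer.getvalue()


def write_csv(path: str, rows: list[dict]) -> None:
    """Hypothetical helper: save rows to disk for opening in Excel."""
    # utf-8-sig adds a byte-order mark, which helps Excel detect UTF-8.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        f.write(rows_to_csv(rows))
```

For example, `write_csv("model_measures.csv", rows)` saves the measure list for opening in Excel.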

Where or how can you run it?

INFO functions can help us explore a model we are currently editing. If we are getting to know a model for the first time or wrapping up a documentation task, we can open the DAX query view in Power BI Desktop and run the code there. We also always have DAX Studio, which can run queries not only against Power BI Desktop but also against a model on a dedicated capacity in Fabric or the Power BI Service.

In my opinion, the most interesting part is that this is native DAX. As a big fan of the Power BI REST API, this excites me. The method that runs DAX code against a semantic model in the Power BI portal does not accept DMVs; it is restricted to native DAX. That opens the door to querying model information programmatically. We could study what our organization most needs to understand about a model, or would like to document. From there, we could build code that retrieves that information from any semantic model we need to understand. Let's look at the following image, which makes this clearer.
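As a minimal sketch of that idea, the Python code below sends a DAX query (such as the INFO query above) to the Power BI REST API `executeQueries` endpoint, using only the standard library. It assumes you already have an Azure AD access token from an app registration with Power BI permissions. The dataset ID and token are placeholders. SimplePBI may wrap this call, but the sketch uses raw HTTP so it doesn't have to assume the library's method names.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_execute_queries_request(dataset_id: str, dax_query: str,
                                  token: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the executeQueries endpoint."""
    body = {
        "queries": [{"query": dax_query}],
        "serializerSettings": {"includeNulls": True},  # keep blank descriptions
    }
    return urllib.request.Request(
        url=f"{API_ROOT}/datasets/{dataset_id}/executeQueries",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_rows(response_json: dict) -> list[dict]:
    """Return the rows of the first table of the first query result."""
    return response_json["results"][0]["tables"][0]["rows"]


def run_dax(dataset_id: str, dax_query: str, token: str) -> list[dict]:
    """Send the query and return its rows (needs network access and a valid token)."""
    request = build_execute_queries_request(dataset_id, dax_query, token)
    with urllib.request.urlopen(request) as response:
        return extract_rows(json.load(response))
```

Usage would look like `run_dax("<dataset-id>", "EVALUATE INFO.MEASURES()", token)`. According to the article, a DMV-style SQL query sent the same way would be rejected, which is why the native INFO functions matter here.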

I hope these functions help you build workflows that give you the information you want from your models.

#powerbi#power bi#power bi desktop#DAX#power bi tips#power bi tutorial#power bi training#power bi argentina#power bi jujuy#power bi cordoba#ladataweb#simplepbi


The MERN Stack Journey Crafting Dynamic Web Applications

This course sets out on an engaging journey into MERN stack development. The MERN stack, made up of MongoDB, Express.js, React, and Node.js, is a powerful combination of technologies for building dynamic, scalable web applications. Whether you're new to web development or a seasoned professional looking to broaden your expertise, this course gives you the knowledge and hands-on practice to master the MERN stack.

Diving into MongoDB the Foundation of Data Management

Our journey begins with MongoDB, a leading NoSQL database that offers flexibility and scalability for managing large datasets. We cover the essentials of MongoDB: document-based data modeling, CRUD operations, indexing, and aggregation. By the end of the course, you'll have a firm grasp of using MongoDB for data storage and retrieval in MERN stack applications.

Constructing Robust Backend Services with Express.js

Next, we turn to Express.js, a minimalist web application framework for Node.js, to build the backend of our MERN stack applications. You'll learn to build RESTful APIs with Express.js, handle HTTP requests and responses, add middleware for authentication and error handling, and connect to MongoDB for database operations. Through practical exercises and projects, you'll become adept at building resilient, scalable backends with Express.js.

Crafting Dynamic User Interfaces with React

Moving to the frontend, we use React, a JavaScript library for building user interfaces. You'll learn to create reusable UI components, manage application state with React hooks and context, handle user input and form submissions, and implement client-side routing for single-page applications. By the end of the course, you'll be able to build dynamic, interactive user interfaces with React.

Orchestrating Full-Stack Development with Node.js

In the final part of the course, we bring all the pieces together, using Node.js to run full-stack MERN applications. You'll learn to connect the frontend and backend through RESTful APIs, handle authentication and authorization, add real-time updates with WebSockets, and deploy applications to production. Through a series of hands-on projects, you'll solidify your understanding of MERN stack development and become proficient at building dynamic web applications.

Embarking on the MERN Stack Mastery Journey

After completing this course, you'll have a comprehensive understanding of MERN stack development and the skills to build dynamic, scalable web applications from scratch. Whether you're planning a career in web development, hoping to launch a startup, or simply looking to expand your skills, mastering the MERN stack opens up many opportunities in modern web development. Are you ready to start this journey and become a MERN stack expert? Let's begin!

Data Management with MongoDB

MongoDB serves as the database component of the MERN stack. Here, we'll discuss schema design, CRUD operations, indexing, and data modeling techniques to effectively manage your application's data.

Securing Your MERN Application

Security is paramount in web development. This section covers best practices for securing your MERN application, including authentication, authorization, input validation, and protecting against common vulnerabilities.

Deployment and Scaling Strategies

Once your MERN application is ready, it's time to deploy it to a production environment. We'll explore deployment options, containerization with Docker, continuous integration and deployment (CI/CD) pipelines, and strategies for scaling your application as it grows.

Troubleshooting and Debugging

Even well-designed applications encounter bugs and errors. Here, we'll discuss strategies for troubleshooting and debugging your MERN application, including logging, error handling, and using developer tools to diagnose issues.

Advanced Topics and Next Steps

To further expand your MERN stack skills, this section covers advanced topics such as server-side rendering with React.js, GraphQL integration, microservices architecture, and additional libraries and frameworks to enhance your development workflow.

Continuous Integration and Deployment (CI/CD)

Automating the deployment process can streamline your development workflow and ensure consistent releases. In this section, we'll delve into setting up a CI/CD pipeline for your MERN application using tools like Jenkins, Travis CI, or GitLab CI. You'll learn about automated testing, deployment strategies, and monitoring your application in production.

Conclusion

The MERN stack offers a robust and efficient framework for building modern web applications. By leveraging MongoDB, Express.js, React.js, and Node.js, developers can create highly scalable, responsive, and feature-rich applications. The modular nature of the stack makes it easy to integrate additional technologies and tools, enhancing its flexibility and functionality. In addition, active community support and extensive documentation make MERN stack development accessible and well suited to rapid prototyping and deployment. As businesses continue to demand dynamic and interactive web solutions, mastering the MERN stack remains a valuable skill for developers who want to stay at the forefront of web development trends.


hey! would love to know the info on any mods you use for your forever world! ❤️ xx

Hello!

thank you so much for asking!

I'm going to eventually make a carrd similar to my bio one (pinned post) with an updated list of my modpacks or resource packs. However, for now, I'll list them out and include pictures here for your viewing!

modpack overview

⊹˚. * . ݁₊ ⊹

Firstly, here's the modpack overview. It's for the latest version of the game (which I regret because it made a lot of mods I wanted incompatible). I was new to modding. Most of these work for older versions if you so choose to experiment with that!

It also runs on Fabric, which is used for cozier, vanilla-friendly mods, whereas Forge or other loaders are used for more game-changing mods.

I do add content to this modpack kinda often, so I'll make updated posts if I add anything non-cosmetic.

⊹˚. * . ݁₊ ⊹

client mods

⊹˚. * . ݁₊ ⊹

So these are "client mods," which to my understanding only impact your gaming and not the server or world.

So some of these download automatically with other mods, like the entity ones and, I think, Sodium.

I downloaded "BadOptimizations", "ImmediatelyFast", and "Iris Shaders" myself because the first two helped my gameplay run smoother on a low-end PC, and the last one is needed to run the shaders I chose. You can experiment with that, too, but I know most people use Iris.

actual mods

⊹˚. * . ݁₊ ⊹

okay, so these are all the rest of them underneath client mods. I'm not going to go into much detail for each one, I'll just kind of generally group them together. If you have any specific questions about any or want a link, comment !!

Most of them are self-explanatory, so I'll talk about the random ones that aren't.

--

ModernFix, FPS Reducer, and Architectury are all similar to the optimization mods, but they alter game stuff to make your game run better.

--

Essential makes it similar to Bedrock when it comes to hosting single-player worlds and having a social menu. You can send screenshots and DM on there.

Mod Menu adds a Mods button to your menu screen. It lets you access mods and configure them, especially if you have a config mod added.

--

Text and Fabric API are needed to run the other mods.

--

resource packs

⊹˚. * . ݁₊ ⊹

these are the current resource packs I have added. Since there are so few, I'll go through them all.

--

Borderless Glass, self-explanatory. Makes windows borderless so they look more seamless and realistic.

Better Leaves adds bushiness to leaf blocks.

Dynamic Surround Sounds adds some really nice, immersive noises to the game, by far a favorite.

Circle Sun and Moon

Fresh Animations is so cool: it adds more realistic animations to the mobs in the game!

Grass flowers and flowering crops add flower textures to the blocks and the crops in the game, super cute!

shaders

⊹˚. * . ݁₊ ⊹

Lastly, these are the shaders I currently have on. Tweak around with the video settings on it. All the options look good !

--

I hope this was insightful and answered all your questions! Have a blessed day.

dms open!! send any other questions <3

2 Corinthians 9:7

"Each of you should give what you have decided in your heart to give, not reluctantly or under compulsion, for God loves a cheerful giver."

#minecraft#minecraft build#minecraft mods#minecraft screenshots#minecraft youtuber#survivalworld#writing#forever world#mc#sqftash


Empower Your Digital Transformation with Microsoft Azure Cloud Service

Today, cloud computing applications and platforms are growing rapidly across industries, allowing businesses to become more efficient, effective, and competitive. In fact, over 77% of businesses now have some part of their computing infrastructure in the cloud.

Although there are various cloud computing platforms available, Microsoft Azure is one of the few that lead the cloud computing industry. While Amazon Web Services (AWS) is the leading giant in the public cloud market, Azure is the fastest-growing and second-largest cloud platform.

What is Microsoft Azure?

Azure is a cloud computing service provided by Microsoft. More than six hundred services come under the Azure umbrella. In simple terms, it is a web-based platform for building, testing, managing, and deploying applications and services.

About 80% of Fortune 500 Companies are using Azure for their cloud computing requirements.

Azure supports a multitude of programming languages, including Node JS, Java and C#.

Another interesting fact about Azure is that it has nearly 42 data centers around the globe, more than any other cloud platform.

A broad range of Microsoft’s Software-as-a-Service (SaaS), Platform-as-a-Service (PaaS) and Infrastructure-as-a-Service (IaaS) products are hosted on Azure. To understand these major cloud computing service models in detail, check out our other blog.

Azure provides three key areas of functionality: virtual machines, App Services, and Cloud Services.

Virtual Machines

Azure virtual machines are one of several types of scalable, on-demand computing resources that Azure offers. An Azure virtual machine gives you the flexibility of virtualization without having to buy and maintain the physical hardware that runs it.

App Services

Azure App Service is an HTTP-based service for hosting web applications, mobile back ends, and REST APIs. You can develop in your favourite language, be it Java, .NET, .NET Core, Node.js, Ruby, PHP, or Python. Applications run and scale smoothly in both Windows and Linux-based environments.

Cloud Services

Azure Cloud Services is a form of Platform-as-a-Service. Like Azure App Service, it is designed to support applications that are reliable, scalable, and cost-effective to operate, and, like App Service, it is hosted on virtual machines.

Various Azure services and how it works

Azure offers over 200 services, divided across 18 categories. These categories include compute, storage, networking, IoT, mobile, migration, containers, analytics, artificial intelligence and machine learning, management tools, integration, security, developer tools, databases, DevOps, media, identity, and web services. Below, we have broken down some of the important Azure services by category:

Compute services

Azure Cloud Service: You can create scalable applications in the cloud with this service. It offers instant access to the latest services and technologies required in the enterprise, enabling Azure cloud engineers to build complex solutions seamlessly.

Virtual Machines: They offer Infrastructure-as-a-Service and can be used in diverse ways. When you need complete control over an operating system and environment, VMs are a suitable choice. With this service, you can create a virtual machine running Linux, Windows, or another configuration in seconds.

Service Fabric: A Platform-as-a-Service designed to simplify the development, deployment, and management of highly customizable, scalable applications on the Microsoft Azure cloud platform. It simplifies the process of developing microservices.

Functions: It lets you build applications in a wide range of programming languages. When you're only interested in the code that runs your service and not the underlying platform or infrastructure, Functions are a great fit.

Networking

Azure CDN: It helps store and access data across various content locations and servers. Using Azure CDN (Content Delivery Network), you can deliver content to users anywhere in the world.

ExpressRoute: This service lets users connect their on-premises network to the Microsoft Cloud or other services over a private connection. ExpressRoute offers more reliability, more consistent latency, and higher speeds than typical internet connections.

Virtual Network: It is a logical representation of a network in the cloud. When you build an Azure Virtual Network, you decide its range of private IP addresses. This service lets Azure resources communicate with each other securely and privately.
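To make the address-range idea concrete, a small Python sketch using the standard `ipaddress` module can carve a private address space into subnets. The `10.0.0.0/16` space and the `/24` subnet size are hypothetical choices. The sketch also accounts for the five addresses Azure reserves in every subnet: the first four and the last.

```python
import ipaddress
from itertools import islice

# Azure reserves five addresses per subnet: the network address, three
# addresses for Azure services (gateway and DNS), and the last address.
AZURE_RESERVED_PER_SUBNET = 5


def plan_subnets(address_space: str, new_prefix: int,
                 count: int) -> list[tuple[str, int]]:
    """Return (subnet CIDR, usable addresses) for the first `count` subnets."""
    vnet = ipaddress.ip_network(address_space)
    if not vnet.is_private:
        raise ValueError(f"{address_space} is not a private range")
    subnets = list(islice(vnet.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError("address space too small for the requested subnets")
    return [(str(s), s.num_addresses - AZURE_RESERVED_PER_SUBNET) for s in subnets]


if __name__ == "__main__":
    for cidr, usable in plan_subnets("10.0.0.0/16", new_prefix=24, count=3):
        print(cidr, usable)
```

Each `/24` has 256 addresses, so after Azure's reservations 251 remain for your resources.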

Azure DNS: Name resolution is provided by Azure DNS, a hosting service for DNS domains that makes use of the Microsoft Azure infrastructure. You can manage your DNS records by utilising the same login information, APIs, tools, and pricing as your other Azure services if you host your domains in Azure.

Storage

Disk Storage: In Azure, VMs use disks as the storage medium for the operating system, programs, and data. At minimum, a virtual machine has an operating system disk (such as a Windows OS disk) plus a temporary disk.

File Storage: Azure File Storage is mainly used to create a shared drive between two servers or users. This managed file storage service can be accessed over the Server Message Block (SMB) protocol.

Blob Storage: Azure Blob Storage is essential to the overall Microsoft Azure platform, since many Azure services store and act on data held in blob storage inside a storage account. Every blob must be stored in a container.
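The account → container → blob hierarchy shows up directly in blob URLs. A minimal Python sketch, assuming the public Azure cloud endpoint (`blob.core.windows.net`) and hypothetical account and container names, builds such a URL. It also checks Azure's naming rules for storage accounts and containers.

```python
import re
from urllib.parse import quote

# Account names: 3-24 lowercase letters or digits.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
# Container names: 3-63 lowercase letters, digits and single hyphens,
# starting and ending with a letter or digit.
CONTAINER_RE = re.compile(r"(?!.*--)[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the URL of a blob.

    Every blob lives inside a container, and every container lives
    inside a storage account.
    """
    if not ACCOUNT_RE.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not CONTAINER_RE.fullmatch(container):
        raise ValueError(f"invalid container name: {container!r}")
    # quote() leaves '/' alone, so virtual "folders" in the name become path segments.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name)}"
```

For example, `blob_url("mystorageacct", "raw-data", "reports/2024/sales data.csv")` yields `https://mystorageacct.blob.core.windows.net/raw-data/reports/2024/sales%20data.csv`. The "folders" are only part of the blob's name, not real directories.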

Benefits of using Azure

Application development: Any web application can be created in Azure.

Testing: After the successful development of the application on the platform, it can be easily tested.

Application hosting: After the testing, you can host the application with the help of Azure.

Create virtual machines: Using Azure, virtual machines can be created in any configuration.

Integrate and sync features: Azure enables you to combine and sync directories and virtual devices.

Collect and store metrics: Azure allows you to collect and store metrics, enabling you to identify what works.

Virtual hard drives: As they are extensions of virtual machines, they offer a massive amount of data storage.

Bottom line

With over 200 services and countless benefits, Microsoft Azure Cloud is certainly the most rapidly-growing cloud platform being used by organizations. Incessant innovation from Microsoft allows businesses to respond quickly to unexpected changes and new opportunities.

So, are you planning to migrate your organization’s data and workload to the cloud? At CloudScaler, get instant access to the best services and technologies from the ground up, supported by a team of experts that keep you one step ahead in the competition.


Microsoft Azure Machine Learning Studio And Its Features

Who is Azure Machine Learning for?

Azure Machine Learning enables individuals and teams implementing MLOps within their organization to deploy ML models into a secure, auditable production environment.

Data scientists and machine learning engineers get tools to speed up and automate their daily work. Application developers get tools for integrating models into apps or services. Platform developers can build advanced ML tooling on a wide range of tools backed by resilient Azure Resource Manager APIs.

Businesses using the Microsoft Azure cloud get familiar security and role-based access control for infrastructure. You can set up a project to restrict access to protected data and specific operations.

Features

Utilize important features for the entire machine learning lifecycle.

Preparing data

Data preparation on Apache Spark clusters within Azure Machine Learning can be iterated on quickly and is compatible with Microsoft Fabric.

Feature store

By making features discoverable and reusable across workspaces, you can ship your models faster and more flexibly.

Infrastructure for AI

Benefit from specially created AI infrastructure that combines the newest GPUs with InfiniBand networking.

Automated machine learning

Quickly develop precise machine learning models for problems like natural language processing, classification, regression, and vision.

Responsible AI

Create interpretable, accountable AI solutions. Use disparity metrics to evaluate model fairness and mitigate unfairness.

Model catalog

Use the model catalog to discover, fine-tune, and deploy foundation models from Hugging Face, Microsoft, OpenAI, Meta, Cohere, and more.

Prompt flow

Design, build, test, and deploy language model workflows quickly.

Endpoint management

Log metrics, carry out safe model rollouts, and operationalize model deployment and scoring.

Azure Machine Learning services

Cross-platform tools that fit your needs

Anyone on an ML team can use their preferred tools. Run rapid experiments, tune hyperparameters, build pipelines, or manage inference through familiar interfaces:

Azure Machine Learning Studio

Python SDK (v2)

Azure CLI (v2)

Azure Resource Manager REST APIs

Sharing and finding files, resources, and analytics for your projects on the Machine Learning studio UI lets you refine the model and collaborate with others throughout the development cycle.

Azure Machine Learning Studio

Machine Learning Studio provides many authoring options based on project type and familiarity with machine learning, without the need for installation.

Use managed Jupyter Notebook servers integrated inside the studio to write and run code. Open the notebooks in VS Code, online, or on your PC.

Visualise run metrics to optimize trials.

Azure Machine Learning designer: Train and deploy ML models without coding. Drag and drop datasets and components to build ML pipelines.

Learn how to automate ML experiments with an easy-to-use interface.

Machine Learning data labeling: Coordinate image and text labeling tasks efficiently.

Using LLMs and Generative AI

Microsoft Azure Machine Learning helps you build generative AI applications with large language models (LLMs). It streamlines AI application development with a model catalog, prompt flow, and other tools.

Azure Machine Learning Studio and Azure AI Studio support LLMs. This information will help you choose a studio.

Model catalog

Azure Machine Learning studio’s model catalog lets you find and use many models for Generative AI applications. The model catalog includes hundreds of models from Azure OpenAI service, Mistral, Meta, Cohere, Nvidia, Hugging Face, and Microsoft-trained models. Microsoft’s Product Terms define Non-Microsoft Products as models from other sources, which are subject to their terms.

Prompt flow

Azure Machine Learning prompt flow simplifies building AI applications powered by large language models. Prompt flow streamlines AI application prototyping, experimentation, iteration, and deployment.

Enterprise security and readiness

Azure adds security to ML projects.

Integrations for security include:

Network security groups for Azure Virtual Networks.

Azure Key Vault stores security secrets like storage account access.

Virtual network-protected Azure Container Registry.

Azure integrations for full solutions

ML projects are supported by other Azure integrations. Among them:

Azure Synapse Analytics allows Spark data processing and streaming.

Azure Arc lets you run Azure services on Kubernetes.

Azure SQL Database, Azure Blob Storage.

Azure App Service for ML app deployment and management.

Microsoft Purview lets you find and catalog company data.

Project workflow for machine learning

Models are usually part of a project with goals. Projects usually involve multiple people. Iterative development involves data, algorithms, and models.

Project lifecycle

Project lifecycles vary, but this diagram is typical. (Image credit: Microsoft)

Many users working toward the same goal can collaborate in a workspace. The studio user interface lets workspace users share experimentation results. Jobs can use versioned assets, such as environments and storage references.

User work can be automated in an ML pipeline and triggered by a schedule or HTTPS request when a project is operational.

The managed inferencing system abstracts infrastructure administration for real-time and batch model deployments.

Train models

Azure Machine Learning lets you run training scripts or build models in the cloud. Customers commonly bring models trained with open-source frameworks to operationalize in the cloud.

Open and compatible

Data scientists can use models built with Python frameworks in Azure Machine Learning, such as:

PyTorch

TensorFlow

scikit-learn

XGBoost

LightGBM

Other languages and frameworks are supported:

R

.NET

Automated feature and algorithm selection

In traditional ML, data scientists rely on knowledge and intuition to choose the right data features and algorithm for training, a repetitive and time-consuming process. Automated ML (AutoML) speeds this up. You can use it through the Machine Learning studio UI or the Python SDK.

Optimization of hyperparameters

Hyperparameter optimization, or tuning, can be tedious. Machine Learning can automate it for any parameterized command with only minor changes to the job definition, and the results are visualized in the studio.
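
What a hyperparameter sweep automates can be sketched as a simple grid search: train once per combination, score each run on validation data, and keep the configuration with the lowest loss. The learning-rate/epoch grid and the tiny gradient-descent model below are illustrative assumptions; the managed service also offers other sampling strategies, such as random and Bayesian sampling, which this sketch does not model.

```python
from itertools import product

def train_gd(xs, ys, learning_rate, epochs):
    """Fit y = w * x with batch gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w

def val_loss(w, xs, ys):
    # Validation mean squared error for the fitted slope w.
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grid_search(train, valid, grid):
    """Try every (learning_rate, epochs) pair; return ((lr, epochs), loss) with the lowest loss."""
    results = []
    for lr, epochs in product(grid["learning_rate"], grid["epochs"]):
        w = train_gd(*train, learning_rate=lr, epochs=epochs)
        results.append(((lr, epochs), val_loss(w, *valid)))
    return min(results, key=lambda r: r[1])

# Hypothetical search space and toy data generated from y = 2x.
search_space = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100]}
train = ([1, 2, 3], [2, 4, 6])
valid = ([4, 5], [8, 10])
(best_lr, best_epochs), best_loss = grid_search(train, valid, search_space)
```

Grid search is the easiest strategy to read but grows multiplicatively with each hyperparameter added, which is one reason automated tuning tools offer smarter sampling.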

Multiple-node distributed training

Multinode distributed training can make both deep learning and classical machine learning training more efficient. Azure Machine Learning compute clusters and serverless compute provide access to the latest GPU options.

Azure Machine Learning Kubernetes, compute clusters, and serverless compute support:

PyTorch

TensorFlow

MPI

MPI distribution can be used for Horovod or for custom multinode logic. Apache Spark is supported through serverless Spark compute and Azure Synapse Analytics Spark clusters.
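
The data-parallel pattern behind MPI-style frameworks such as Horovod can be simulated in a single process: each worker computes a gradient on its own shard, an allreduce averages the gradients, and every replica applies the same update. This is a sequential sketch under our own assumptions (a one-weight linear model, four simulated workers, toy data); `all_reduce_mean` is a hypothetical stand-in for a real collective, and no MPI is involved.

```python
def shard(data, num_workers):
    # Round-robin split: worker i gets every num_workers-th example.
    return [data[i::num_workers] for i in range(num_workers)]

def local_gradient(w, batch):
    # Gradient of mean((w * x - y) ** 2) over this worker's shard only.
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def all_reduce_mean(values):
    # Stand-in for an allreduce collective: every worker receives the average.
    avg = sum(values) / len(values)
    return [avg] * len(values)

def data_parallel_train(data, num_workers, learning_rate, steps):
    shards = shard(data, num_workers)
    replicas = [0.0] * num_workers  # each worker keeps its own copy of the weight
    for _ in range(steps):
        grads = [local_gradient(w, s) for w, s in zip(replicas, shards)]
        synced = all_reduce_mean(grads)
        replicas = [w - learning_rate * g for w, g in zip(replicas, synced)]
    return replicas

# Toy data from y = 3x, split evenly across 4 simulated workers.
data = [(x, 3 * x) for x in range(1, 9)]
replicas = data_parallel_train(data, num_workers=4, learning_rate=0.01, steps=50)
```

Because every replica receives the same averaged gradient, all copies of the model stay identical; with equal-size shards, that average equals the full-batch gradient.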

Embarrassingly parallel training

Scaling an ML project may require embarrassingly parallel model training, where many independent models are trained at the same time. For example, demand forecasting sometimes involves training a separate model for each of many stores.
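
Because each store's model depends only on that store's data, the training tasks never need to communicate. The sketch below shows that shape with Python's `concurrent.futures`; the sales figures and the "model" (the mean of the last three periods) are hypothetical placeholders for real training, and threads are used only to keep the example simple. CPU-heavy training would more likely run in separate processes or on separate compute nodes.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def train_store_model(item):
    # Placeholder "training": forecast next period as the mean of the last 3 periods.
    store_id, sales = item
    return store_id, mean(sales[-3:])

def train_all(sales_by_store, max_workers=4):
    # Each store's model is independent, so all of them can be trained concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(train_store_model, sales_by_store.items()))

# Hypothetical sales history per store.
sales_by_store = {
    "store-a": [10, 12, 11, 13, 15],
    "store-b": [40, 38, 42, 41, 39],
    "store-c": [5, 7, 6, 6, 8],
}
forecasts = train_all(sales_by_store)
```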

Deploy models

Deployment is how a model is put into production. Azure Machine Learning managed endpoints abstract the infrastructure required for batch or real-time (online) model scoring.

Real-time and batch scoring (inferencing)

Batch scoring, or batch inferencing, invokes an endpoint with a reference to data. The batch endpoint processes the data asynchronously on compute clusters and stores the results for further analysis.
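
Conceptually, a batch scoring job walks over a large input in mini-batches and collects the predictions for later analysis. The sketch below shows that shape sequentially in plain Python; the toy model and the batch size are hypothetical, and it does not model the asynchronous, cluster-distributed execution a batch endpoint provides.

```python
def mini_batches(rows, batch_size):
    """Yield successive lists of at most batch_size rows."""
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller, batch

def batch_score(rows, model, batch_size):
    # Score the whole input one mini-batch at a time and collect the outputs,
    # as a batch job would before writing them to storage.
    results = []
    for batch in mini_batches(rows, batch_size):
        results.extend(model(batch))
    return results

# Hypothetical model that scores a whole mini-batch in one call.
def toy_model(batch):
    return [2 * x + 1 for x in batch]

scores = batch_score(range(7), toy_model, batch_size=3)
```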

Online inferencing, or real-time scoring, involves invoking an endpoint that has one or more model deployments and receiving a response over HTTPS in near real time. Traffic can be split across multiple deployments, so a new model version can be tested by routing a small share of traffic to it at first and increasing that share once confidence is established.
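
Weighted traffic splitting can be simulated by picking a deployment for each request with probability proportional to its weight. In the managed service the endpoint does this routing for you; the sketch below only illustrates the idea. The deployment names `blue` and `green` and the 90/10 then 50/50 rollout are hypothetical.

```python
import random

def pick_deployment(weights, rng):
    """Pick a deployment name with probability proportional to its traffic weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]

def simulate(weights, requests, seed=0):
    # Route `requests` simulated calls and count how many reach each deployment.
    rng = random.Random(seed)
    counts = {name: 0 for name in weights}
    for _ in range(requests):
        counts[pick_deployment(weights, rng)] += 1
    return counts

# Hypothetical rollout: send 10% to the new "green" deployment, then raise it to 50%.
stage1 = simulate({"blue": 90, "green": 10}, 10_000)
stage2 = simulate({"blue": 50, "green": 50}, 10_000)
```

With a fixed seed the counts are reproducible, and over many requests each deployment's share approaches its configured percentage.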

Machine learning DevOps

Production-ready ML models are developed with DevOps practices for machine learning, known as MLOps. From training to deployment, a model must be auditable, if not reproducible.

ML model lifecycle (image credit: Microsoft)

Integrations for MLOps

Machine Learning is designed with the entire model lifecycle in mind. Auditors can trace the model lifecycle back to a specific commit and environment.
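
The platform tracks this lineage for you; the sketch below only illustrates the underlying idea of pinning a model version to a code commit and a reproducible fingerprint of its environment. The record fields, the model name, the commit string, the package versions, and the hashing scheme are all hypothetical and are not Azure Machine Learning's schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class LineageRecord:
    model_name: str
    model_version: int
    git_commit: str
    environment_hash: str

def environment_fingerprint(packages):
    """Hash a {package: version} mapping; the result does not depend on key order."""
    canonical = json.dumps(packages, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def record_lineage(model_name, model_version, git_commit, packages):
    # Tie a model version to the exact code commit and environment it came from.
    return LineageRecord(model_name, model_version, git_commit,
                         environment_fingerprint(packages))

# All values below are hypothetical placeholders.
record = record_lineage("demand-forecast", 3, "a1b2c3d",
                        {"numpy": "1.0.0", "scikit-learn": "1.0.0"})
print(json.dumps(asdict(record), indent=2))
```

Serializing the environment with sorted keys before hashing means two identical environments always produce the same fingerprint, so an auditor can check whether a model was trained in the environment they expect.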

Features that enable MLOps include:

Git integration.

Integration of MLflow.

Machine-learning pipeline scheduling.

Custom triggers in Azure Event Grid.

Compatibility with CI/CD tools such as GitHub Actions and Azure DevOps.

Machine Learning has monitoring and auditing features:

Code snapshots, logs, and other job outputs.

Asset-job relationship for containers, data, and compute resources.

The airflow-provider-azure-machinelearning package lets Apache Airflow users submit workflows to Azure Machine Learning.

Azure Machine Learning pricing

Pay only for what you need; there are no up-front costs.

There is no additional charge for using Azure Machine Learning itself; you pay only for the underlying compute resources used for model training or inference. You can choose from a wide range of machine types, from general-purpose CPUs to specialized GPUs.

Read more on Govindhtech.com

MuleSoft Offerings

MuleSoft, a Salesforce company, offers a range of products and services designed to facilitate the integration of applications, data, and devices both within and outside of an organization. Its core offerings revolve around the Anypoint Platform, which provides a comprehensive suite of tools for building, managing, and deploying integrations and APIs. Here’s an overview of MuleSoft’s primary offerings:

Anypoint Platform

The Anypoint Platform is MuleSoft’s flagship product, offering a unified, flexible integration and API management platform that enables companies to realize their digital transformation initiatives. Its main components include:

Design and Development:

Anypoint Studio: An Eclipse-based IDE for designing and developing APIs and integration flows using the Mule runtime engine.

API Designer: A web-based tool for designing, documenting, and testing APIs using the RAML (RESTful API Modeling Language) or OAS (OpenAPI Specification).

API Management:

API Manager: Manages the API lifecycle, including security, throttling, and versioning.

Anypoint API Community Manager: Engages API developers and partners by providing a customizable developer portal for discovering and testing APIs.
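
Throttling in API Manager is configured as a policy rather than written as code, but a generic token-bucket algorithm shows the idea behind rate limiting: requests spend tokens, tokens refill over time, and calls are rejected when the bucket is empty. This is a plain-Python sketch, not MuleSoft's implementation; the capacity and refill rate are hypothetical, and time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, now=0.0):
        self.capacity = capacity
        self.rate = rate
        self.tokens = float(capacity)
        self.last = now

    def allow(self, now):
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: a gateway would typically reject with HTTP 429

# Hypothetical policy: bursts of 3 requests, refilling 1 request per second.
bucket = TokenBucket(capacity=3, rate=1.0)
burst = [bucket.allow(now=0.0) for _ in range(5)]
after_one_second = [bucket.allow(now=1.0) for _ in range(2)]
```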

Runtime Services:

Mule Runtime Engine: A lightweight, scalable engine for running integrations and APIs, available both on-premises and in the cloud.

Anypoint Runtime Manager: A console for deploying, managing, and monitoring applications and APIs, regardless of where they run.

Connectivity:

Anypoint Connectors: A library of pre-built connectors that provide out-of-the-box connectivity to hundreds of systems, databases, APIs, and services.

DataWeave: A powerful data query and transformation language integrated with Anypoint Studio and the Mule runtime for handling complex data integration challenges.
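
DataWeave has its own syntax, which is not reproduced here. To illustrate the kind of mapping it is typically used for, the sketch below flattens a nested JSON order payload into CSV rows in Python. The payload shape and field names are hypothetical, and this is a Python analogue of such a transformation, not DataWeave code.

```python
import csv
import io
import json

# Hypothetical nested payload: orders with a customer and several line items.
payload = json.loads("""{"orders": [
  {"id": 1, "customer": {"name": "Ana"}, "items": [{"sku": "A1", "qty": 2}, {"sku": "B2", "qty": 1}]},
  {"id": 2, "customer": {"name": "Luis"}, "items": [{"sku": "A1", "qty": 5}]}
]}""")

def flatten_orders(doc):
    # One output row per order line, copying the customer name down from the parent order.
    for order in doc["orders"]:
        for item in order["items"]:
            yield {"order_id": order["id"], "customer": order["customer"]["name"],
                   "sku": item["sku"], "qty": item["qty"]}

def to_csv(rows):
    # Serialize the flattened rows as CSV text with a header line.
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["order_id", "customer", "sku", "qty"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

rows = list(flatten_orders(payload))
output = to_csv(rows)
```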

Deployment Options:

CloudHub: A fully managed cloud platform for deploying and managing applications and APIs.

Anypoint Runtime Fabric: A container service for deploying Mule applications and APIs on-premises or in the cloud, supporting Docker and Kubernetes.

Anypoint Private Cloud: Enables companies to run Anypoint Platform in their own secure, private cloud environment.

Security and Governance:

Anypoint Security: Features to protect the application network and APIs from external threats and internal issues.

Anypoint Monitoring and Visualizer: Tools for monitoring API and integration health, performance, and usage, as well as visualizing the architecture and dependencies of application networks.

MuleSoft Composer

MuleSoft Composer is a more recent addition aimed at empowering non-developers to connect apps and data to Salesforce quickly without writing code. It provides a simple, guided user interface for integrating popular SaaS applications and automating workflows directly within the Salesforce interface.

Training and Certification

MuleSoft also offers comprehensive training, certification programs, and learning paths for developers, architects, and operations professionals. These resources help individuals and organizations build expertise in designing, developing, and managing integrations and APIs using MuleSoft’s Anypoint Platform.

Community and Support

MuleSoft Community: A vibrant community of developers and architects where members can ask questions, share knowledge, and contribute connectors.

MuleSoft Support: Professional support services from MuleSoft, including troubleshooting, technical guidance, and product assistance to ensure customer success with MuleSoft solutions.

You can find more information about MuleSoft in the MuleSoft Docs.

Conclusion:

Unogeeks is the №1 Training Institute for MuleSoft Training. Anyone disagree? Please drop a comment.

You can check out our other latest blogs on MuleSoft training here: MuleSoft Blogs

You can check out our best-in-class MuleSoft training details here: MuleSoft Training

Follow & Connect with us:

— — — — — — — — — — — -

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeeks
