#azure chatgpt 4


Simplifying Testing Infrastructure with Cloud Automation Testing

In today’s fast-paced digital world, businesses need to continuously deliver high-quality software applications to meet customer expectations. But how can businesses make sure their product meets the highest standards of functionality, usability, security, and performance? This is where software testing comes in: it verifies the performance and quality of the product.

There are two broad approaches to testing: manual and automated. Manual testing is time-consuming and prone to human error. As the scope and scale of testing in DevOps grow, the need for automated testing has become apparent.

What is Automation Testing?

Automation testing is the process of running tests automatically: testing software executes prewritten test scripts against a software application. Because the tool does the repetitive work, it frees up time and resources during testing, enabling teams to assess software quality more thoroughly and at a lower cost.

Automation testing allows people to:

Create a set of tests that can be reused multiple times

Save costs by detecting and fixing problems earlier

Deploy tests continuously to accelerate product launches

How Is Automation Testing Transforming the World?

Automation can be seen almost everywhere, not only in QA testing but in our day-to-day lives. From self-driving cars to voice tech, technology is rapidly being automated to simplify our lives.

Automation testing consistently improves the quality of QA and saves a lot of time compared to manual testing. Writing test cases, however, still requires human effort, and to get the best results, test cases should come from continuous collaboration between testers and developers.

No matter the product or service, the key benefits of automation testing can be summarized as the following:

Increased speed

Increased output

Enhanced quality

Lower cost

Advantages of Automation Testing

With the improvement in AI, the power and scope of automated testing tools are increasing rapidly. Let’s look in detail at what people and organizations can gain from automation testing:

Saves cost

Automated software testing helps your business save time, money, and resources during quality assurance. Some manual testing will still be required, but QA engineers will have time to invest in other projects, lowering the overall cost of software development.

Run tests simultaneously

Since automated testing needs little to no human intervention once it starts, it is easy to run multiple tests at once. It also lets you produce comprehensive comparative reports faster, using the same parameters.

Quicker feedback cycle

With manual testing, it can take a long time for testers to return feedback to your DevOps team. Automation testing tools let you validate changes more quickly during development, and testing at earlier stages increases your team's efficiency.

Shorter time to market

The time that is saved with continuous testing during development contributes to the earlier launch of your product. Automation testing tools can also enable faster test results, speeding up final software validation.

Improved test coverage

With a well-planned automation strategy, you can expand your test coverage to verify more of your application's features. Because the testing process is automated, your automation engineers have free time to write more tests and make them more detailed.

Better insights

Automated tests do not just report when a test fails; they can also reveal insights into the application, such as data tables, file contents, and memory contents. This helps developers identify what went wrong.

Enhanced accuracy

To err is human, and manual testing always carries a risk of human error. With automation, tests are executed accurately and consistently every time. Test scripts are still written by humans, so some risk of error remains, but those errors become fewer the more you reuse and refine your tests.

Less stress on your QA team

Your quality assurance team will experience significantly less stress if you adopt an automated testing technique. Once you eliminate the hassle of manual testing, you give them the time to create tools that improve your testing suite even further.

Types of Automated Testing

Unit Testing

If the individual parts of your code don't function correctly, they cannot work within the final product. Unit testing examines the smallest piece of code that can be isolated in a system. To write unit tests, the tester must understand the program's internal structure. The best thing about unit testing is that it can be performed throughout software development, providing consistent feedback that speeds up development and gets products to market faster.
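As an illustration, here is a minimal unit test sketch using Python's built-in `unittest` module. The `apply_discount` function is hypothetical and stands in for any small, isolated piece of your own code; each test checks one behavior without touching a database or network.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test exercises apply_discount in isolation, with no external dependencies."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


if __name__ == "__main__":
    # exit=False lets the script keep running after the test report is printed.
    unittest.main(exit=False)
```

Run it with `python -m unittest` or directly as a script. Keeping each test focused on a single behavior means a failure points straight at the broken case.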

Functional Testing

After ensuring that all the individual parts work, you need to check whether the system as a whole behaves according to your specifications and requirements. Functional testing makes sure your application works as planned, covering the APIs, user interface, security, database, and other functionality.

Regression Testing

Regression tests confirm that a recent change to the system hasn't broken existing features. To perform them, you select the relevant test cases from the existing test suite that exercise the modified and affected parts of the code. Carry out regression testing whenever you modify, alter, or update any part of the code.
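One way to pick the relevant cases is to keep a map from each test to the source files it exercises and re-run only the tests that touch a changed file. The sketch below assumes such a map exists; `COVERAGE` and all file and test names are hypothetical, and in practice the map would come from a coverage tool. Many teams simply re-run the whole suite, so treat this as an optimization rather than a requirement.

```python
from typing import Dict, List, Set

# Hypothetical coverage map: which source files each test exercises.
COVERAGE: Dict[str, Set[str]] = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile_update": {"profile.py", "auth.py"},
    "test_search": {"search.py"},
}


def select_regression_tests(changed_files: Set[str],
                            coverage: Dict[str, Set[str]]) -> List[str]:
    """Return, sorted by name, every test that touches at least one changed file."""
    return sorted(
        test for test, files in coverage.items()
        if files & changed_files  # a non-empty intersection means the test is affected
    )


if __name__ == "__main__":
    # A change to auth.py should re-run both tests that depend on it.
    print(select_regression_tests({"auth.py"}, COVERAGE))
```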

Load Testing

Do you know how much pressure your application can take? This is essential information to have before you hand the application over to your users. Load tests are non-functional tests that check how your software performs under a specified load, showing its behavior when many users access it at the same time.
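A minimal load-test sketch, assuming the system under test can be called as a Python function: `handle_request` is a hypothetical stand-in that just sleeps for about 10 ms. The script simulates several concurrent users with a thread pool, records each request's latency, and reports the request count, error count, mean latency, and 95th-percentile latency. Dedicated load-testing tools do the same thing at far larger scale against real endpoints.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def handle_request(user_id: int) -> str:
    """Hypothetical stand-in for the system under test; swap in a real call."""
    time.sleep(0.01)  # simulate roughly 10 ms of work
    return f"ok:{user_id}"


def run_load_test(handler: Callable[[int], str], users: int,
                  requests_per_user: int) -> Dict[str, float]:
    """Send requests from `users` concurrent workers and summarize the latencies."""

    def one_user(user_id: int) -> Tuple[List[float], int]:
        timings: List[float] = []
        failures = 0
        for _ in range(requests_per_user):
            start = time.perf_counter()
            try:
                handler(user_id)
            except Exception:
                failures += 1  # count the failure but keep the test running
            timings.append(time.perf_counter() - start)
        return timings, failures

    latencies: List[float] = []
    errors = 0
    # One thread per simulated user, so all users send requests at the same time.
    with ThreadPoolExecutor(max_workers=users) as pool:
        for timings, failures in pool.map(one_user, range(users)):
            latencies.extend(timings)
            errors += failures

    latencies.sort()
    # Nearest-rank 95th percentile: about 95% of requests were at least this fast.
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]
    return {
        "requests": len(latencies),
        "errors": errors,
        "mean_ms": statistics.mean(latencies) * 1000,
        "p95_ms": p95 * 1000,
    }


if __name__ == "__main__":
    print(run_load_test(handle_request, users=20, requests_per_user=5))
```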

Performance Testing

Performance Testing assesses the responsiveness, stability, and speed of your application. If you don’t put your product through some sort of performance test, you’ll never know how it will function in a variety of situations.
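For a simple performance check, you can time a function repeatedly and compare the result against a latency budget. In this sketch, `build_csv_row` and the 50 ms budget are hypothetical; taking the median of several `timeit` runs reduces the effect of one-off slowdowns on a busy machine.

```python
import statistics
import timeit
from typing import Callable


def build_csv_row(n: int) -> str:
    """Hypothetical function whose speed we want to keep an eye on."""
    return ",".join(str(i) for i in range(n))


def median_runtime(func: Callable[[], object], number: int = 100, repeat: int = 5) -> float:
    """Median seconds per call across `repeat` timing runs of `number` calls each."""
    runs = timeit.repeat(func, number=number, repeat=repeat)
    return statistics.median(run / number for run in runs)


def within_budget(seconds_per_call: float, budget_seconds: float) -> bool:
    """True if the measured time per call fits the agreed performance budget."""
    return seconds_per_call <= budget_seconds


if __name__ == "__main__":
    per_call = median_runtime(lambda: build_csv_row(10_000))
    print(f"median: {per_call * 1000:.3f} ms per call")
    BUDGET_SECONDS = 0.050  # hypothetical 50 ms budget; set it from your own requirements
    if not within_budget(per_call, BUDGET_SECONDS):
        raise SystemExit("performance regression: build_csv_row exceeded its budget")
```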

Integration Testing

Integration testing involves testing how the individual units or components of the software application work together as a whole. It is done after unit testing to ensure that the units integrate and function correctly.
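To contrast with a unit test, the sketch below wires two hypothetical components together, an `InventoryRepository` backed by a real in-memory SQLite database and an `OrderService` that depends on it, and tests them as a pair instead of mocking the database. The class names and table schema are illustrative assumptions.

```python
import sqlite3
import unittest


class InventoryRepository:
    """Hypothetical data-access unit backed by a real (in-memory) SQLite database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute("CREATE TABLE IF NOT EXISTS stock (sku TEXT PRIMARY KEY, qty INTEGER)")

    def set_quantity(self, sku: str, qty: int) -> None:
        self.conn.execute("INSERT OR REPLACE INTO stock (sku, qty) VALUES (?, ?)", (sku, qty))

    def get_quantity(self, sku: str) -> int:
        row = self.conn.execute("SELECT qty FROM stock WHERE sku = ?", (sku,)).fetchone()
        return row[0] if row else 0


class OrderService:
    """Hypothetical business-logic unit that depends on the repository."""

    def __init__(self, repo: InventoryRepository) -> None:
        self.repo = repo

    def place_order(self, sku: str, qty: int) -> bool:
        available = self.repo.get_quantity(sku)
        if qty > available:
            return False  # reject orders that exceed current stock
        self.repo.set_quantity(sku, available - qty)
        return True


class OrderInventoryIntegrationTest(unittest.TestCase):
    """Exercises OrderService and InventoryRepository together against a real database."""

    def setUp(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.repo = InventoryRepository(self.conn)
        self.service = OrderService(self.repo)
        self.repo.set_quantity("widget", 5)

    def tearDown(self) -> None:
        self.conn.close()

    def test_successful_order_reduces_stock(self):
        self.assertTrue(self.service.place_order("widget", 3))
        self.assertEqual(self.repo.get_quantity("widget"), 2)

    def test_order_exceeding_stock_is_rejected(self):
        self.assertFalse(self.service.place_order("widget", 10))
        self.assertEqual(self.repo.get_quantity("widget"), 5)


if __name__ == "__main__":
    unittest.main(exit=False)
```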

Security Testing

Security testing is used to identify vulnerabilities and weaknesses in the software application’s security. It involves testing the application against different security threats to ensure that it is secure.

GUI Testing

GUI testing involves testing the graphical user interface of the software application to ensure that it is user-friendly and functions as expected.

API Testing

API testing involves testing the application programming interface (API) to ensure that it functions correctly and meets the requirements.
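A minimal API test sketch using only Python's standard library: it starts a hypothetical JSON endpoint (`GET /health`) on a free local port, then checks the status code, content type, and response body with `http.client`. In a real project the server would be your application, and the endpoint and expected payload would come from its specification.

```python
import http.client
import json
import threading
import unittest
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Hypothetical API: GET /health returns {"status": "ok"}; other paths return 404."""

    def do_GET(self) -> None:
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404, "Not Found")

    def log_message(self, format: str, *args) -> None:
        pass  # keep test output quiet


class HealthApiTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls) -> None:
        # Port 0 lets the OS pick a free port, so parallel test runs don't collide.
        cls.server = HTTPServer(("127.0.0.1", 0), HealthHandler)
        cls.port = cls.server.server_port
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls) -> None:
        cls.server.shutdown()
        cls.server.server_close()

    def get(self, path: str):
        """Send a GET request and return (status, content type, raw body)."""
        conn = http.client.HTTPConnection("127.0.0.1", self.port, timeout=5)
        try:
            conn.request("GET", path)
            resp = conn.getresponse()
            return resp.status, resp.getheader("Content-Type"), resp.read()
        finally:
            conn.close()

    def test_health_returns_json_status(self):
        status, content_type, body = self.get("/health")
        self.assertEqual(status, 200)
        self.assertEqual(content_type, "application/json")
        self.assertEqual(json.loads(body), {"status": "ok"})

    def test_unknown_path_returns_404(self):
        status, _, _ = self.get("/missing")
        self.assertEqual(status, 404)


if __name__ == "__main__":
    unittest.main(exit=False)
```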

Choosing a Test Automation Software Provider

If your business is planning to make the move, the test automation provider you pick should be able to provide:

Effortless integration with CI/CD pipeline to facilitate automation, short feedback cycle and fast delivery of software.

The capability to function on private or public cloud networks.

Integration with the current infrastructure for on-site testing for simpler test handling and reporting.

Remote access to real-time insights and monitoring tools that can help you better understand user journeys and how a certain application is being used.

Automated exploratory testing to increase application coverage.

Test environments that are already set up and can be quickly launched when needed.

CloudScaler: A Trusted Provider of Software Testing in the Netherlands

With the increasing complexity of modern software development, the need for reliable and efficient software testing services has never been greater. CloudScaler, a trusted provider of Software Testing in the Netherlands, offers a comprehensive suite of testing services to help teams navigate the challenges of cloud infrastructure and microservices.

Our services are designed to reduce deployment times and development costs, enabling your team to focus on what they do best: building innovative software solutions. Our approach is rooted in efficiency, reliability, and expertise, ensuring that you can trust CloudScaler as your partner in software testing.


Open Ai case study (will Ai replace humans)

*For the last few months, everyone has been talking about ChatGPT, but no one is talking about OpenAI, the company that made it.*

After OpenAI launched ChatGPT, it crossed 1 million users in 5 days and 100 million in 2 months. It's very hard for any other company to reach those numbers, but OpenAI is not like any other company; it is one of the game-changer companies.

How? You'll soon see.

Microsoft, one of the biggest companies in the tech industry, invested $1 billion in 2019, and on January 23, 2023, it announced a new multi-year, multi-billion-dollar investment in OpenAI, reported to be $10 billion. The investment is believed to be part of Microsoft's effort to integrate OpenAI's ChatGPT into the Bing search engine.

After this announcement, the launch of ChatGPT even threatened the shark of the industry, Google, which has ruled search for two and a half decades.

After Microsoft's announcement, Google's monopoly was threatened by a direct competitor.

Google, under CEO Sundar Pichai, even declared a "code red." Whenever there is a major issue at Google, the company announces a "code red." When you hear that at Google, it means "all hands on deck": it's time to work hard.

But this is still the tip of the iceberg.

OpenAI is working on more projects like ChatGPT, or even better ones.

*Points I am going to cover in this post*

1. What is OpenAI?

2. Active projects of OpenAI.

3. How does OpenAI make revenue?

1. What is OpenAI?

OpenAI is an American AI research laboratory. It conducts AI research with the declared intention of promoting and developing friendly AI. OpenAI's systems run on an Azure-based supercomputing platform provided by Microsoft. OpenAI was founded in 2015 by a group of high-profile figures in the tech industry, including Elon Musk, Sam Altman, Greg Brockman, Ilya Sutskever, John Schulman, and Wojciech Zaremba. Yes, you heard right: Elon Musk, the man who speaks out against AI in his interviews, reportedly invested $100 million in OpenAI in its early days.

It's like saying smoking is injurious to health while smoking weed

However, Musk resigned from the board in 2018 but remained a donor.

In a later tweet, Musk clarified that he has no control over or ownership of OpenAI.

He didn't leave because of the "AI is dangerous" concern; it was reportedly due to conflicts with the other co-founders, among other reasons.

The current CEO of OpenAI is Sam Altman, and its current CTO is Mira Murati.

The organization was founded with the goal of developing artificial intelligence technology that is safe and beneficial for humanity, and the founders committed $1 billion in funding to support the organization's research.

First of all, you should understand that OpenAI is not a company like the others.

It didn't start like the others.

No one built this company in their basement. The story of OpenAI is not inspiring in the usual way, but it is valuable: it's an example of what happens when top tech giants and top tech scientists create something together.

2. Active projects of OpenAI

GPT-4: OpenAI's most recent language model, which is capable of generating human-like language and has been used for a wide range of applications, including chatbots, writing assistance, and even creative writing.

DALL-E: a neural network capable of generating original images from textual descriptions, which has been used to create surreal and whimsical images.

CLIP: a neural network that can understand images and text together, and has been used for tasks such as image recognition, text classification, and zero-shot learning.

Robotics: OpenAI is also working on developing advanced robotics systems that can perform complex tasks in the physical world, such as manipulating objects and navigating environments.

Multi-agent AI: OpenAI is also exploring the field of multi-agent AI, which involves developing intelligent agents that can work together to achieve common goals. This has applications in fields such as game theory, economics, and social science.

Developers can use the OpenAI API to build apps for customer service, chatbots, and productivity, as well as tools for content creation, document search, and more, many of which provide great utility for businesses.

For example, developers can build and deploy intelligent chatbots that interact with customers, answer questions, and provide personalized recommendations based on user preferences.

3. How does OpenAI make revenue?

OpenAI is a research organization that develops artificial intelligence in a variety of fields, such as natural language processing, robotics, and deep learning. The organization is primarily funded by a combination of private investors, including Microsoft and Amazon Web Services, and research partnerships with various organizations.

OpenAI generates revenue through several means, including:

AI products and services: OpenAI offers a range of AI products and services to businesses and organizations, including language models, machine learning tools, and robotic systems.

Research partnerships: OpenAI collaborates with businesses, governments, and academic institutions on research projects and consultancies.

Licensing agreements: OpenAI licenses its technologies and patents to third-party companies and organizations, allowing them to use OpenAI's technology in their own products and services.

Investments: OpenAI has received significant investments from various companies and organizations, which have provided the organization with funds to support its research and development efforts

When Elon Musk and the other founders started OpenAI in 2015, it was a nonprofit organization, but today OpenAI is not purely a nonprofit. It has a for-profit subsidiary, OpenAI Limited Partnership (OpenAI LP), and a nonprofit parent, OpenAI Incorporated (OpenAI Inc.).

If it continues like this, OpenAI will play an important role in technology. But the main question is:

Will AI replace humans?

It is unlikely that AI will completely replace humans in the foreseeable future. While AI has made significant advances in recent years, there are still many areas where humans excel and where machines struggle to match human performance.

AI is particularly good at performing repetitive tasks and processing large amounts of data quickly, but it lacks the creativity, empathy, and emotional intelligence that humans possess. Additionally, AI is only as good as the data it is trained on, and biases in the data can lead to biased AI systems.

Furthermore, many jobs require human-to-human interaction, which AI cannot replicate. For example, jobs in healthcare, education, and social work require empathy, understanding, and interpersonal skills, which machines are not capable of.

Overall, it is more likely that AI will augment human abilities rather than replace them entirely. As AI technology continues to develop, we may see more and more tasks being automated, but there will always be a need for human oversight and decision-making.

But AI may well replace you if you do not upgrade yourself.

If you are still at the bottom of your company, AI will likely replace you. And I'm not just talking about companies; I mean in general, whether you are an artist, coder, writer, editor, content creator, laborer, farmer, etc.

If you don't upgrade yourself, you will be replaced by AI and machines.

So upgrade yourself using AI and make your place in the upcoming world of AI and machines.

"AI will never replace humans, but humans who use AI will replace humans who don't."

AI has an evil side too, but if we can create AI, then we can also create ways to deal with it.

It could be anything: regulations, terms and conditions, and so on.

But the point is that we can use AI to make our work easier.



GPT-4 will hunt for trends in medical records thanks to Microsoft and Epic

An AI-generated image of a pixel art hospital with empty windows.

Benj Edwards / Midjourney

On Monday, Microsoft and Epic Systems announced that they are bringing OpenAI's GPT-4 AI language model into health care for use in drafting message responses from health care workers to patients and for use in analyzing medical records while looking for trends.

Epic Systems is one of America's largest health care software companies. Its electronic health records (EHR) software (such as MyChart) is reportedly used in over 29 percent of acute hospitals in the United States, and over 305 million patients have an electronic record in Epic worldwide. Tangentially, Epic's history of using predictive algorithms in health care has attracted some criticism in the past.

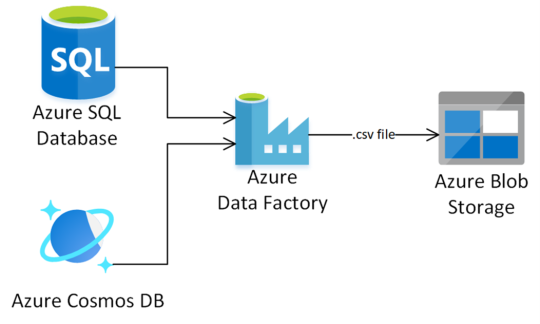

In Monday's announcement, Microsoft mentions two specific ways Epic will use its Azure OpenAI Service, which provides API access to OpenAI's large language models (LLMs), such as GPT-3 and GPT-4. In layperson's terms, it means that companies can hire Microsoft to provide generative AI services for them using Microsoft's Azure cloud platform.

The first use of GPT-4 comes in the form of allowing doctors and health care workers to automatically draft message responses to patients. The press release quotes Chero Goswami, chief information officer at UW Health in Wisconsin, as saying, "Integrating generative AI into some of our daily workflows will increase productivity for many of our providers, allowing them to focus on the clinical duties that truly require their attention."

The second use will bring natural language queries and "data analysis" to SlicerDicer, which is Epic's data-exploration tool that allows searches across large numbers of patients to identify trends that could be useful for making new discoveries or for financial reasons. According to Microsoft, that will help "clinical leaders explore data in a conversational and intuitive way." Imagine talking to a chatbot similar to ChatGPT and asking it questions about trends in patient medical records, and you might get the picture.

GPT-4 is a large language model (LLM) created by OpenAI that has been trained on millions of books, documents, and websites. It can perform compositional and translation tasks in text, and its release, along with ChatGPT, has inspired a rush to integrate LLMs into every type of business, whether appropriate or not.


OpenAI Launches GPT-4.5: A Research Preview with Enhanced Capabilities

OpenAI has officially launched GPT-4.5, the latest iteration in its AI language model lineup. Available first as a research preview for ChatGPT Pro users, GPT-4.5 is being hailed as OpenAI’s most knowledgeable model yet. However, the company has made it clear that GPT-4.5 is not a frontier model and does not surpass OpenAI’s o1 and o3-mini models in certain reasoning evaluations.

What’s New in GPT 4.5?

Despite not being classified as a frontier AI model, GPT-4.5 introduces several enhancements, making it a compelling update over its predecessor, GPT-4o:

Improved Writing Capabilities: The model exhibits better coherence, structure, and fluency in generating text, making it ideal for content creation and professional writing.

Enhanced Pattern Recognition & Logical Reasoning: GPT-4.5 is better at identifying complex patterns, drawing logical conclusions, and solving real-world problems efficiently.

Refined Personality & Conversational Flow: OpenAI has worked on making interactions with GPT-4.5 feel more natural and engaging, ensuring it understands context better and provides more emotionally nuanced responses.

Reduced Hallucinations: Compared to GPT-4o, GPT-4.5 significantly reduces hallucinations, providing more factual and reliable answers.

Greater Computational Efficiency: OpenAI states that GPT-4.5 operates with 10x better computational efficiency than GPT-4, optimizing performance without requiring excessive resources.

GPT-4.5 vs. Frontier AI Models

While GPT-4.5 is an improvement over previous models, OpenAI has openly stated that it does not introduce enough novel capabilities to be considered a frontier model. A leaked internal document highlighted that GPT-4.5 does not meet the criteria of seven net-new frontier capabilities, which are required for classification as a cutting-edge AI model. The document, which OpenAI later edited, mentioned that GPT-4.5 underperforms compared to the o1 and o3-mini models in deep research assessments.

OpenAI’s CEO Sam Altman described GPT-4.5 as a “giant, expensive model” that won’t necessarily dominate AI benchmarks but still represents a meaningful step in AI development.

Training and Development of GPT-4.5

OpenAI has employed a mix of traditional training methods and new supervision techniques to build GPT-4.5. The model was trained using:

Supervised Fine-Tuning (SFT)

Reinforcement Learning from Human Feedback (RLHF)

Synthetic Data Generated by OpenAI’s o1 Reasoning Model (Strawberry)

This blend of training methodologies allows GPT-4.5 to refine its accuracy, alignment, and overall user experience. Raphael Gontijo Lopes, a researcher at OpenAI, mentioned during the company’s livestream that human testers found GPT-4.5 consistently outperforms GPT-4o across nearly every category.

GPT-4.5 Rollout Plan

OpenAI is rolling out GPT-4.5 in phases, ensuring stability before wider availability:

Now available for ChatGPT Pro users

Plus and Team users will get access next week

Enterprise and Edu users will receive the update shortly after

Microsoft Azure AI Foundry has also integrated GPT-4.5, along with models from Stability, Cohere, and Microsoft

The Road to GPT-5

The release of GPT-4.5 sets the stage for OpenAI’s next major milestone: GPT-5, expected as early as late May. CEO Sam Altman has hinted that GPT-5 will integrate OpenAI’s latest o3 reasoning model, marking a significant step toward Artificial General Intelligence (AGI). Unlike GPT-4.5, GPT-5 is expected to bring groundbreaking advances in AI capabilities and to incorporate elements from OpenAI’s 12 Days of Christmas announcements.

What This Means for AI Development

With GPT-4.5, OpenAI continues to refine its AI models, enhancing performance, accuracy, and user engagement. While it may not be a frontier AI, its significant improvements in writing, reasoning, and conversational depth make it an essential tool for businesses, researchers, and developers alike. As OpenAI progresses towards GPT-5, we can expect even more transformative AI innovations soon.

For those eager to explore GPT-4.5’s capabilities, it is now live on ChatGPT Pro and will soon be available across various platforms. Stay tuned for more updates on OpenAI’s roadmap toward next-generation AI technology.


Web Content Management Market Expansion: Industry Size, Share & Analysis 2032

The Web Content Management Market size was valued at USD 8.13 billion in 2023 and is expected to reach USD 28.76 billion by 2032, growing at a CAGR of 15.1% over the forecast period 2024-2032.
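The headline figures can be sanity-checked with the standard CAGR formula, CAGR = (end value / start value)^(1 / years) - 1. The sketch below assumes the 2023 value compounds over nine years (2024 through 2032); that reading of the forecast period is an assumption, not something the report spells out.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at a constant annual rate."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # Assumption: the 2023 base compounds over 9 years (2024 through 2032).
    implied = cagr(8.13, 28.76, 9)
    print(f"Implied CAGR: {implied:.1%}")
    print(f"USD 8.13B grown at 15.1% for 9 years: USD {project(8.13, 0.151, 9):.2f}B")
```

With these inputs the implied rate comes out at roughly 15.1%, and compounding forward at 15.1% lands within about USD 0.1 billion of the stated 28.76, so the report's numbers are internally consistent under this assumption.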

The Web Content Management (WCM) market is expanding rapidly as organizations strive to enhance their digital presence. With the rise of e-commerce, digital marketing, and customer engagement platforms, the demand for efficient content management solutions is at an all-time high. Businesses are investing in WCM systems to streamline content creation, delivery, and optimization.

The Web Content Management market continues to grow as companies seek to improve their online visibility and user experience. The need for dynamic, personalized content has led to advancements in AI-powered content management, automation, and cloud-based platforms. Organizations across industries are adopting WCM solutions to stay competitive in the digital-first era.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3486

Key Market Players:

Adobe (US) – Adobe Experience Manager, Adobe Creative Cloud

OpenText (Canada) – OpenText TeamSite, OpenText Experience Platform

Microsoft (US) – Microsoft SharePoint, Microsoft Azure AI Content Management

Oracle Corporation (US) – Oracle Content Management, Oracle WebCenter Sites

Automattic (US) – WordPress.com, WooCommerce

OpenAI (US) – ChatGPT API, DALL·E for Content Creation

Canva (US) – Canva Pro, Canva for Teams

RWS (UK) – Tridion, RWS Language Cloud

Progress (US) – Progress Sitefinity, Telerik Digital Experience Cloud

HubSpot (US) – HubSpot CMS Hub, HubSpot Marketing Hub

Yext (US) – Yext Content Management, Yext Knowledge Graph

Upland Software (US) – Upland Kapost, Upland Altify

HCL Technologies (India) – HCL Digital Experience, HCL Unica

Acquia (US) – Acquia Digital Experience Platform, Acquia Site Studio

Optimizely (US) – Optimizely CMS, Optimizely Content Intelligence

Bloomreach (US) – Bloomreach Experience Cloud, Bloomreach Discovery

Sitecore (US) – Sitecore Experience Platform, Sitecore Content Hub

Market Trends Driving Growth

1. AI and Automation in Content Management

Artificial Intelligence is revolutionizing WCM by enabling automated content tagging, personalization, and real-time analytics.

2. Rise of Headless CMS

Decoupled and headless CMS platforms are gaining popularity, allowing seamless content delivery across multiple channels and devices.

3. Cloud-Based Content Management

The shift toward cloud-based solutions offers scalability, security, and cost-efficiency, driving adoption across enterprises.

4. Personalization and Omnichannel Content Delivery

Brands are leveraging WCM systems to provide personalized, consistent content experiences across websites, mobile apps, and social media.

5. Increasing Focus on Security and Compliance

With growing concerns about data privacy, WCM providers are integrating robust security measures and regulatory compliance tools.

Enquire About This Report: https://www.snsinsider.com/enquiry/3486

Market Segmentation:

By Component

Solutions

Services

By Deployment Type

On-premises

Cloud-based

By Organization Size

Large Enterprises

Small and Medium Enterprises

By Vertical

BFSI

IT and Telecom

Retail

Education

Government

Healthcare

Media and Entertainment

Travel and Hospitality

Others

Market Analysis and Current Landscape

Increasing demand for seamless digital experiences across industries.

Integration of AI and data analytics to optimize content strategies.

Adoption of mobile-first and voice-enabled content management solutions.

Emphasis on user-friendly interfaces and low-code/no-code platforms for easy content management.

While the market shows strong potential, challenges such as content governance, integration complexities, and data security remain. However, ongoing technological innovations are addressing these concerns, making WCM systems more efficient and accessible.

Future Prospects: What Lies Ahead?

1. Expansion of AI-Driven Content Strategies

AI-powered content recommendations, automated workflows, and predictive analytics will shape the future of WCM.

2. Enhanced Multilingual and Localization Capabilities

Businesses will invest in multilingual WCM systems to cater to global audiences and enhance customer engagement.

3. Growth of Voice Search and Conversational AI

WCM platforms will incorporate voice search optimization and AI-driven chatbots for improved user interaction.

4. Integration with Digital Experience Platforms (DXPs)

The convergence of WCM and DXPs will enable a unified approach to content, marketing, and customer experience management.

5. Strengthening Security and Compliance Features

Blockchain and AI-driven security protocols will enhance content authenticity, privacy, and regulatory adherence.

Access Complete Report: https://www.snsinsider.com/reports/web-content-management-market-3486

Conclusion

The Web Content Management market is evolving rapidly, driven by digital transformation and the increasing need for personalized content experiences. As businesses prioritize seamless content delivery and automation, WCM solutions will continue to play a crucial role in shaping the future of digital engagement. Companies investing in AI, cloud, and omnichannel strategies will lead the market, redefining how content is created, managed, and distributed in the digital age.

About Us:

SNS Insider is one of the leading market research and consulting agencies in the global market research industry. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)



Exploring the Impact of Generative AI on Businesses

Generative AI, a recent technological marvel, is changing the way businesses operate, making them more efficient. Companies are increasingly adopting this technology to automate repetitive tasks, improve decision-making, and streamline their operations. In this article, we’ll dive into the evolving world of Generative AI, focusing on key trends, the skills in demand, practical use cases, and its implications for the future of work.

Understanding the Generative AI landscape

Our latest research reveals that businesses across industries are showing a growing interest in Generative AI. Not only are they embracing this technology, but many also plan to expand their teams to harness its potential. This indicates the growing significance of Generative AI in the business realm.

Key takeaways:

1. Popular AI models: Companies currently favor well-established AI models like ChatGPT, BERT, and Stable Diffusion due to their proven performance.

2. Shift toward wider applications: Businesses are moving away from individual Generative AI tools and are instead looking for skills related to broader applications.

3. Tech professionals lead the way: Tech professionals are taking the lead in acquiring essential AI skills for various industries. There’s a strong demand for expertise in working with Large Language Models (LLMs), prompt engineering, and object detection.

4. Rising search trends: Noteworthy trends in Generative AI-related searches encompass a wide range of topics including TensorFlow, AI Chatbots, Generative AI, Image Processing, PyTorch, Natural Language Processing (NLP), Bard, AI Content Creation, Gradio, Azure OpenAI, Convolutional Neural Network, Large Language Models (LLMs), Midjourney, and Prompt Engineering. These trends mirror the dynamic nature of the field and the rapidly evolving interests of professionals and businesses in Generative AI.

Emerging trends in Generative AI

The Generative AI landscape is dynamic, with emerging trends shaping its course:

Ethical considerations: As Generative AI gains prominence, ethical concerns related to its use become increasingly important. Businesses must navigate the ethical aspects of AI responsibly.

Regulatory challenges: Regulatory bodies are paying more attention to AI technologies. Staying compliant with evolving regulations is crucial for businesses adopting Generative AI.

Practical applications across industries

Generative AI has a multitude of applications across various domains, each with its unique potential:

1. Content generation: Generative AI helps in efficiently creating diverse content, including text, code, poetry, and music.

2. Image generation and manipulation: It can transform images artistically, alter facial features, or generate realistic images.

3. Video generation: Applications range from creating videos to summarizing lengthy videos automatically.

4. Data augmentation: Generative AI can synthesize data to improve machine learning models and translate images between different domains.

5. Chatbots and virtual assistants: Conversational agents that deliver personalized responses are revolutionizing customer support and information retrieval.

6. Healthcare: It aids in generating medical images and molecular structures for drug discovery.

7. Design and creativity: Generative AI fosters creativity in design, from logo creation to architectural proposals.

8. Game development: It assists in generating game content and dialogues for interactive storytelling.

9. Language translation and interpretation: Real-time language translation and sign language interpretation have become more accessible.

10. Security and privacy: Generative AI supports anonymization and secure password generation.

11. Art and creativity: It can generate digital art and music compositions, fostering creativity.

12. Autonomous systems: Enhancing the decision-making of autonomous vehicles and motion planning for robots and drones.

13. Content recommendation: Personalizing content recommendations for articles, products, and media.

Conclusion

In conclusion, Generative AI is changing the way businesses operate and hire. To make the most of its potential, companies should look for individuals with a diverse range of AI skills. Our staffing services can assist in finding the right talent to maximize the benefits of Generative AI. As we embrace this journey, the blend of human creativity and AI innovation promises a more productive and creative era for businesses.

#GenerativeAI #ArtificialIntelligence #AIInnovation #MachineLearning #DeepLearning #AITrends #TechRevolution #AIApplications

OpenAI Unveils GPT-4.5: Here’s Everything You Need to Know

The AI race just got more interesting. OpenAI has officially launched GPT-4.5, its latest and largest language model. While it boasts improvements in writing, reasoning, and computational efficiency, OpenAI is making it clear: this is not a frontier model. So, what does that mean for AI users? And how does GPT-4.5 compare to its predecessors? Let’s break it down.

What is GPT-4.5?

GPT-4.5 is OpenAI’s newest large language model (LLM), designed to enhance the user experience with:

✅ Better writing capabilities: more fluid and natural text generation
✅ Improved pattern recognition: a stronger ability to connect ideas and generate insights
✅ Reduced hallucinations: fewer inaccurate responses compared to GPT-4o
✅ More efficient performance: 10x computational efficiency over GPT-4

It is being released as a research preview and will initially be available to ChatGPT Pro users before expanding to Plus, Team, Enterprise, and Edu users.

Why GPT-4.5 Is NOT a Frontier Model

Despite its advancements, OpenAI explicitly states that GPT-4.5 does not introduce significant new capabilities compared to its predecessors. Unlike “frontier models” that push the boundaries of AI, GPT-4.5 is more of a refinement than a revolution. In fact, OpenAI says it performs below models like o1 and o3-mini, particularly in high-level reasoning and research applications.

So why release it? GPT-4.5 is designed to bridge the gap between existing models and the upcoming GPT-5, offering a more cost-effective and accessible AI tool while OpenAI prepares for its next big leap.

The Role of o1 and o3-mini Models

GPT-4.5 was reportedly trained with help from OpenAI’s o1 reasoning model, codenamed Strawberry, which supplied synthetic data for fine-tuning. However, OpenAI notes that o1 and o3-mini still outperform GPT-4.5 in many areas, particularly advanced AI research and reasoning tasks. The company’s ultimate goal? To combine multiple AI models into a more capable, general intelligence system, a stepping stone toward AGI (Artificial General Intelligence).

What’s Next? The Road to GPT-5

If GPT-4.5 feels like an incremental update, there’s a reason: GPT-5 is coming soon.

🔹 Expected launch: as early as May 2025
🔹 Key feature: built with the o3 reasoning model, making it a major upgrade
🔹 Vision: a more integrated AI system that combines OpenAI’s latest breakthroughs

GPT-5 is anticipated to be a true frontier model, with stronger reasoning, better contextual understanding, and possibly multimodal functionality.

Where You Can Access GPT-4.5

GPT-4.5 is rolling out in stages:

📌 Now available → ChatGPT Pro users
📌 Next week → Plus and Team users
📌 Coming soon → Enterprise and Edu users
📌 Also available → Microsoft Azure AI Foundry

With OpenAI’s AI ecosystem rapidly evolving, businesses and developers now have more options than ever to integrate advanced AI into their workflows.

Final Thoughts

GPT-4.5 represents an important step forward, but it’s clear that OpenAI is reserving its most powerful upgrades for GPT-5. While GPT-4.5 is more efficient, reliable, and natural in conversation, it isn’t a groundbreaking leap in AI. Instead, it serves as a high-performance bridge model leading up to OpenAI’s next frontier innovation.

via https://mazdak.com/p/openai-gpt-45

Best AI Companies Leading the Future of Innovation

Artificial Intelligence (AI) is transforming industries worldwide, from healthcare and finance to eCommerce and entertainment. With rapid advancements, several AI companies are leading the charge in innovation and technology. Whether you're a business looking for AI-driven solutions or a tech enthusiast, here are some of the best AI companies to watch in 2024.

1. OpenAI

OpenAI is at the forefront of AI research and development, known for its powerful language model, ChatGPT. With innovations in natural language processing (NLP) and deep learning, OpenAI is revolutionizing the way businesses interact with AI, from chatbots to content generation and automation.

2. DeepMind

A subsidiary of Alphabet (Google's parent company), DeepMind is an AI research lab whose work has had a notable impact on healthcare and the life sciences. Its AlphaFold project, which predicts protein structures, has the potential to accelerate drug discovery and medical research.

3. Nvidia

While primarily known for its high-performance GPUs, Nvidia is a leader in AI hardware and software solutions. The company’s AI chips power machine learning models, autonomous vehicles, and robotics, making it a key player in the AI revolution.

4. IBM Watson

IBM Watson is renowned for its AI-driven business solutions. It provides powerful AI tools for enterprises, including AI-powered customer service, data analysis, and automation. Watson’s AI capabilities help businesses make smarter decisions and optimize operations.

5. Tesla

Tesla is not just an automotive company—it’s an AI-driven powerhouse. With its advancements in self-driving technology and AI-powered robotics, Tesla continues to push the boundaries of AI in transportation and automation.

6. Amazon Web Services (AWS) AI

AWS AI offers machine learning tools and cloud-based AI solutions for businesses of all sizes. With AI-powered services like Amazon Lex (chatbots), Rekognition (image analysis), and SageMaker (machine learning model deployment), AWS is a go-to platform for AI innovation.

7. Microsoft AI

Microsoft’s AI division is integrated into its cloud platform, Azure. With AI services such as Azure Cognitive Services, AI-driven analytics, and automation tools, Microsoft is a major player in AI applications for businesses and developers.

8. Baidu AI

Baidu, often referred to as China’s Google, is investing heavily in AI research and development. With innovations in self-driving technology, facial recognition, and AI-powered search engines, Baidu is a key competitor in the AI landscape.

9. Anthropic

Anthropic is an AI research company focused on creating safe and reliable AI systems. Their AI models, such as Claude, aim to improve AI’s understanding and ethical decision-making, ensuring responsible AI development.

10. DataRobot

DataRobot specializes in automated machine learning (AutoML) solutions. It provides businesses with AI-driven analytics and predictive modeling, enabling organizations to leverage AI without extensive technical expertise.

Conclusion

The AI industry is evolving at an unprecedented pace, with companies like OpenAI, Tesla, and IBM Watson leading the way. Whether you're looking for AI-powered automation, cloud-based AI services, or cutting-edge research, these companies are shaping the future of AI. As technology continues to advance, businesses must stay ahead by leveraging AI-driven solutions to remain competitive in the digital landscape.

Looking to integrate AI into your business? AriPro Designs offers AI-powered web solutions, automation tools, and custom AI integration to help businesses thrive in the AI era. Contact us today to explore how AI can revolutionize your operations!

Empower Your Digital Transformation with Microsoft Azure Cloud Service

Today, cloud computing applications and platforms are growing rapidly across industries, allowing businesses to become more efficient, effective, and competitive. In fact, over 77% of businesses now have some part of their computing infrastructure in the cloud.

Although various cloud computing platforms are available, Microsoft Azure Cloud is one of the few that lead the cloud computing industry. While Amazon Web Services (AWS) is the leading giant in the public cloud market, Azure is the second-largest and most rapidly growing cloud platform.

What is Microsoft Azure?

Azure is a cloud computing service provided by Microsoft. More than 200 services come under the Azure umbrella. In simple terms, it is a web-based platform used for building, testing, managing, and deploying applications and services.

About 80% of Fortune 500 companies use Azure for their cloud computing requirements.

Azure supports a multitude of programming languages, including Node.js, Java, and C#.

Another interesting fact about Azure is that it has nearly 42 data centers around the globe, more than any other cloud platform.

A broad range of Microsoft’s Software-as-a-Service (SaaS), Platform-as-a-Service (PaaS), and Infrastructure-as-a-Service (IaaS) products are hosted on Azure. To understand these major cloud computing service models in detail, check out our other blog post.

Azure provides three key areas of functionality: virtual machines, app services, and cloud services.

Virtual Machines

Azure Virtual Machines are one of several types of scalable, on-demand computing resources that Azure offers. An Azure virtual machine gives you the flexibility of virtualization without having to buy and maintain the physical hardware that runs it.

App Services

Azure App Service is an HTTP-based service for hosting web applications, mobile back ends, and REST APIs. You can develop in your favourite language, be it Java, .NET, .NET Core, Node.js, Ruby, PHP, or Python, and applications run and scale smoothly on both Windows and Linux-based environments.

Cloud Services

Azure Cloud Services is a form of Platform-as-a-Service. Like Azure App Service, the technology is designed to support applications that are reliable, scalable, and inexpensive to operate. And just as App Service is hosted on virtual machines, so is Azure Cloud Services.

Various Azure services and how they work

Azure offers over 200 services, divided across 18 categories. These categories include computing, storage, networking, IoT, mobile, migration, containers, analytics, artificial intelligence and machine learning, management tools, integration, security, developer tools, databases, DevOps, media, identity, and web services. Below, we have broken down some of the important Azure services by category:

Compute services

Azure Cloud Service: You can create scalable applications in the cloud by using this service. It offers instant access to the latest services and technologies required in the enterprise, enabling Azure cloud engineers to execute complex solutions seamlessly.

Virtual Machines: They offer Infrastructure-as-a-Service and can be used in diverse ways. When you need complete control over an operating system and environment, VMs are a suitable choice. With this service, you can quickly create a virtual machine running Linux, Windows, or another configuration.

Service Fabric: It is a Platform-as-a-Service designed to facilitate the development, deployment, and management of highly customizable and scalable applications on the Microsoft Azure cloud platform. It simplifies the process of developing microservices.

Functions: It lets you build event-driven, serverless applications in a range of supported languages, and custom handlers extend this to other languages. When you’re interested only in the code that runs your service and not the underlying platform or infrastructure, Functions is a great fit.

Networking

Azure CDN: Azure CDN (Content Delivery Network) stores cached copies of content on servers in many locations, so you can deliver that content quickly to people anywhere in the world.

ExpressRoute: This service lets users connect their on-premises networks to the Microsoft Cloud over a private connection. Because ExpressRoute connections don't travel over the public internet, they offer more reliability, faster speeds, and more consistent latencies than typical internet connections.

Virtual Network: It is a logical representation of your network in the cloud. When you build an Azure Virtual Network, you decide its range of private IP addresses. The service lets Azure resources communicate with each other securely and privately.

Azure DNS: Azure DNS is a hosting service for DNS domains that provides name resolution using Microsoft Azure infrastructure. If you host your domains in Azure, you can manage your DNS records with the same credentials, APIs, tools, and billing as your other Azure services.
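The Virtual Network entry notes that you choose your own private IP range. As a rough planning sketch, the snippet below uses Python's standard `ipaddress` module to split a hypothetical `10.0.0.0/16` address space into `/24` subnets. It assumes Azure's documented practice of reserving five addresses in every subnet (the first four and the last one); the address space, subnet names, and the `plan_subnets` helper are made up for illustration and do not call any Azure API.

```python
import ipaddress

# Azure reserves 5 IP addresses in each subnet: the first four and the last one.
AZURE_RESERVED_PER_SUBNET = 5


def plan_subnets(address_space: str, new_prefix: int, names: list[str]) -> dict[str, dict]:
    """Split a private VNet address space into equally sized subnets (hypothetical helper)."""
    vnet = ipaddress.ip_network(address_space)
    if not vnet.is_private:
        raise ValueError(f"{address_space} is not a private range")
    subnets = vnet.subnets(new_prefix=new_prefix)  # generator of child networks
    plan = {}
    for name, subnet in zip(names, subnets):
        plan[name] = {
            "cidr": str(subnet),
            # Usable addresses = total addresses minus Azure's reserved ones.
            "usable_hosts": subnet.num_addresses - AZURE_RESERVED_PER_SUBNET,
            # The first four addresses are reserved, so hosts start at offset 4.
            "first_usable": str(subnet.network_address + 4),
        }
    return plan


if __name__ == "__main__":
    for name, info in plan_subnets("10.0.0.0/16", 24, ["web", "app", "data"]).items():
        print(name, info)
```

Each `/24` subnet then has 251 usable addresses rather than 256, which is worth keeping in mind when sizing small subnets.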

Storage

Disk Storage: Azure VMs use disks as the storage medium for the operating system, programs, and data. At minimum, a Windows virtual machine has an operating system disk and, on most VM sizes, a temporary disk.

File Storage: A main use of Azure File Storage is creating a shared drive between servers or users. This managed file storage service can be accessed over the Server Message Block (SMB) protocol.

Blob Storage: Azure Blob Storage is essential to the overall Microsoft Azure platform, since many Azure services store and act on data kept in a storage account’s blob storage. Every blob is stored in a container, and a single container can hold many blobs.
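To make the storage account → container → blob hierarchy concrete, here is a small sketch that builds and parses blob URLs. It assumes the standard public-cloud endpoint format `https://<account>.blob.core.windows.net/<container>/<blob>` (sovereign clouds use other domains) and the documented naming rules for accounts and containers. The `blob_url` and `split_blob_url` helpers and the sample names are hypothetical; this only manipulates strings and is not the Azure Storage SDK.

```python
import re
from urllib.parse import quote, unquote, urlparse

# Storage account names: 3-24 characters, lowercase letters and digits only.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
# Container names: lowercase letters and digits, with single hyphens between them.
CONTAINER_RE = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def blob_url(account: str, container: str, blob: str) -> str:
    """Build a public-cloud blob URL following account -> container -> blob."""
    if ACCOUNT_RE.fullmatch(account) is None:
        raise ValueError(f"invalid storage account name: {account!r}")
    if not (3 <= len(container) <= 63) or CONTAINER_RE.fullmatch(container) is None:
        raise ValueError(f"invalid container name: {container!r}")
    # '/' in a blob name is kept as-is; it acts as a virtual folder separator.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob)}"


def split_blob_url(url: str) -> tuple[str, str, str]:
    """Recover (account, container, blob) from a URL built by blob_url."""
    parsed = urlparse(url)
    account = parsed.netloc.split(".")[0]
    container, _, blob = parsed.path.lstrip("/").partition("/")
    return account, container, unquote(blob)


if __name__ == "__main__":
    url = blob_url("mystorage", "invoices", "2024/jan 01.pdf")
    print(url)
    print(split_blob_url(url))
```

Note that the blob name `2024/jan 01.pdf` still lives directly in the `invoices` container; the slash only looks like a folder.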

Benefits of using Azure

Application development: Any web application can be created in Azure.

Testing: After the successful development of the application on the platform, it can be easily tested.

Application hosting: After the testing, you can host the application with the help of Azure.

Create virtual machines: Using Azure, virtual machines can be created in any configuration.

Integrate and sync features: Azure enables you to integrate and sync directories and virtual devices.

Collect and store metrics: Azure allows you to collect and store metrics, enabling you to identify what works.

Virtual hard drives: As they are extensions of virtual machines, they offer a massive amount of data storage.

Bottom line

With over 200 services and countless benefits, Microsoft Azure Cloud is one of the most rapidly growing cloud platforms used by organizations. Continuous innovation from Microsoft allows businesses to respond quickly to unexpected changes and new opportunities.

So, are you planning to migrate your organization’s data and workloads to the cloud? At CloudScaler, get instant access to the best services and technologies from the ground up, supported by a team of experts who keep you one step ahead of the competition.

Microsoft and Google Offer Free AI Models in Response to DeepSeek

In the dynamic, rapidly evolving world of artificial intelligence, two of the most influential tech giants, Microsoft and Google, are taking significant steps to counter the recent rise of DeepSeek, a promising new AI from China. DeepSeek, known for being open source and free to use, has caused a considerable stir in the industry, forcing these companies to reevaluate and adjust their strategies.

The DeepSeek Challenge

With its focus on open source and free access, DeepSeek is positioning itself as serious competition for established AI models such as OpenAI’s ChatGPT. Because it can be installed locally on operating systems such as Windows and run without an Internet connection, it is particularly attractive to users seeking independence and efficiency[3].

Microsoft’s Response

Not wanting to be left behind, Microsoft has announced that its Copilot tool will offer OpenAI’s o1 reasoning model free of charge to all users. These models, launched in September of last year, stand out for spending more time reasoning before they respond, which lets them solve more complex problems. The "Think Deeper" feature in Copilot Labs, which uses these models, will now be available at no additional cost, so Copilot users can benefit from greater depth and accuracy in their interactions with the AI[1].

Google’s Strategy

For its part, Google is betting on its Gemini 2 Flash model to significantly improve the performance of its AI platform. Announced in December, Gemini 2 Flash outperforms the earlier Gemini 1.5 Pro on several key benchmarks and supports multimodal input and output, including images, video, and audio. This model will become the default for all users of the Gemini app on the web and on mobile devices, allowing Google to compete directly with DeepSeek’s rise[1].

Google is also making Gemini 2 Flash available through AI Studio and Vertex AI, tools that let developers, students, and researchers experiment and build prototypes with the Gemini API at no additional cost. This includes Google AI Studio, which is free of charge in all available regions, and free credits for new Google Cloud customers[4].

Integrating DeepSeek into Existing Platforms

In an interesting twist, both Microsoft and Google have decided to integrate the DeepSeek R1 model into their respective platforms. Microsoft has added DeepSeek R1 to Azure AI Foundry and GitHub, subjecting it to rigorous safety evaluations to ensure its reliability and scalability. Similarly, Google has added the R1 model to its Vertex AI platform, demonstrating a strategy of collaboration and adaptation in the face of this new challenge[5].

Conclusion

The response from Microsoft and Google to DeepSeek reflects the competitive dynamics and constant innovation of the artificial intelligence sector. By offering high-quality AI models for free, these companies aim to retain and attract users in an increasingly crowded market. While DeepSeek continues to gain ground with its focus on open source and accessibility, Microsoft and Google are showing their ability to adapt and evolve, ensuring that the race to dominate AI remains intense and exciting. https://rafaeladigital.com/noticias/microsoft-google-modelos-ai-gratis-deepseek/?feed_id=6266

How AI Development is Revolutionizing the Tech Industry

The tech industry has always been a driver of innovation, shaping how we live, work, and communicate. In recent years, artificial intelligence (AI) development has emerged as a transformative force, revolutionizing the tech sector in unprecedented ways. From streamlining processes to enabling groundbreaking technologies, AI is reshaping the industry's landscape. Here’s a deep dive into how AI development is revolutionizing the tech world.

1. Enhancing Software Development Processes

AI is automating and optimizing software development. Tools like GitHub Copilot and TabNine use AI to assist developers by suggesting code, identifying bugs, and even automating repetitive tasks. This reduces development time and minimizes human error, allowing teams to focus on creativity and problem-solving.

Impact:

Faster development cycles.

Improved code quality.

Enhanced productivity for software engineers.

2. Transforming Data Analysis and Insights

Data is the backbone of the tech industry, and AI is transforming how companies process and analyze information. Machine learning algorithms can analyze vast datasets to identify patterns, predict trends, and deliver actionable insights. This is crucial for industries like finance, healthcare, and marketing.

Examples:

Predictive analytics in customer behavior.

Real-time fraud detection in financial transactions.

Personalized recommendations in e-commerce and streaming platforms.
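To make "personalized recommendations" a little less abstract, here is a minimal sketch of one classic approach, user-based collaborative filtering: it measures how similar two users' ratings are with cosine similarity, then scores items the target user hasn't rated by weighting other users' ratings by that similarity. The ratings data and function names are invented for illustration; production recommenders work with far larger datasets and typically more sophisticated models.

```python
import math

Ratings = dict[str, dict[str, float]]  # user -> {item: rating}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse rating vectors."""
    shared = set(a) & set(b)
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: Ratings, top_n: int = 2) -> list[str]:
    """Suggest items the user hasn't rated, weighting others' ratings by similarity."""
    scores: dict[str, float] = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for item, rating in their.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    # Highest weighted score first; ties broken alphabetically for stable output.
    ranked = sorted(scores, key=lambda i: (-scores[i], i))
    return ranked[:top_n]


ratings: Ratings = {
    "ana":   {"laptop": 5, "mouse": 4, "monitor": 1},
    "ben":   {"laptop": 5, "mouse": 5, "keyboard": 4},
    "chloe": {"monitor": 5, "desk": 4},
}

if __name__ == "__main__":
    print(recommend("ana", ratings))
```

Because Ana's ratings closely resemble Ben's, the keyboard Ben rated highly ranks ahead of the desk suggested by Chloe, whose tastes overlap with Ana's only on an item Ana disliked.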

3. Driving Innovation in Hardware

AI is influencing hardware design, leading to the creation of specialized processors like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) optimized for AI workloads. These advancements are critical for training complex AI models and deploying them efficiently.

Notable Advancements:

Edge computing devices powered by AI.

Energy-efficient AI chips.

AI-powered IoT devices transforming industries like agriculture and manufacturing.

4. Revolutionizing Customer Experience

AI-powered tools like chatbots and virtual assistants are enhancing customer service by providing instant, accurate responses. Companies like Klarna and Zendesk leverage AI to improve customer satisfaction while reducing operational costs.

Key Benefits:

24/7 support availability.

Personalized user interactions.

Scalability in managing customer queries.

5. Enabling Autonomous Systems

From self-driving cars to drones, AI is at the core of autonomous systems. These innovations are transforming industries like transportation, logistics, and defense. Companies like Tesla, Waymo, and Amazon are leading this revolution with AI-powered solutions.

Applications:

Autonomous delivery systems.

Smart cities using AI-driven traffic management.

Advanced robotics in manufacturing and healthcare.

6. Powering Breakthroughs in Natural Language Processing (NLP)

Natural Language Processing (NLP) has seen remarkable progress, with AI systems like ChatGPT and Bard offering human-like conversational abilities. These systems are revolutionizing areas like content creation, translation, and accessibility.

Examples:

AI-generated marketing content.

Real-time translation tools for global communication.

Improved accessibility for visually and hearing-impaired users.

7. Strengthening Cybersecurity

As cyber threats evolve, AI plays a pivotal role in identifying vulnerabilities and preventing attacks. AI-powered security systems can detect anomalies, analyze threats, and respond in real time, ensuring robust protection for businesses and users.

AI in Action:

Behavioral analysis to detect phishing attempts.

Automated threat intelligence.

Proactive vulnerability management.
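The anomaly detection described above can be illustrated with a deliberately simple statistical check. This sketch is a plain z-score test, far simpler than the machine-learning models commercial security tools use, but it shows the core idea: learn a baseline of normal behavior, then flag observations that deviate sharply from it. The failed-login counts, the 3-standard-deviation threshold, and the `zscore_alerts` helper are assumptions made up for the example.

```python
import statistics


def zscore_alerts(baseline: list[float], observed: list[float],
                  threshold: float = 3.0) -> list[int]:
    """Return indexes of observed values whose z-score against the baseline exceeds threshold."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs >= 2 points
    if stdev == 0:
        # A perfectly flat baseline: any change at all is unusual.
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed) if abs(x - mean) / stdev > threshold]


if __name__ == "__main__":
    # Hypothetical failed-login counts per hour for one account.
    baseline = [3, 5, 4, 6, 2, 5, 4, 3, 5, 4]
    today = [4, 6, 40, 5, 3]
    print(zscore_alerts(baseline, today))  # prints [2]: the hour with 40 failures
```

Scoring new data against a separate baseline matters here: if the spike were included when computing the mean and standard deviation, it would inflate both and partly hide itself.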

8. Democratizing Technology

AI development is making advanced technologies more accessible. Cloud-based AI platforms like Google AI, Azure AI, and AWS AI provide businesses and developers with tools to integrate AI into their products without extensive expertise.

Benefits:

Lower barriers to entry for startups.

Scalable AI solutions for businesses of all sizes.

Accelerated innovation across industries.

Challenges and Ethical Considerations

While AI development has immense potential, it also presents challenges. Issues like bias in AI algorithms, data privacy concerns, and job displacement need to be addressed to ensure equitable and ethical AI adoption.

Conclusion

AI development is not just a technological evolution—it’s a revolution reshaping the tech industry from its core. By enhancing efficiency, driving innovation, and unlocking new possibilities, AI is laying the foundation for a future that blends human ingenuity with machine intelligence.

As we navigate this AI-driven era, collaboration between developers, businesses, and policymakers will be crucial to harness its potential responsibly and sustainably. The journey has just begun, and the possibilities are limitless.

The Pitfalls of AI: Common Errors in Automated Transcription (and How Physicians Can Avoid Them)

Is AI transcription safe for doctors and clinicians to use? If you thought that AI transcription was “good enough” for your patients’ EMRs, it might be time to think again. Common errors in AI transcription can lead to a myriad of problems, from small errors in notes to potentially life-threatening alterations to the patient record. Then, of course, there is the question: Who’s responsible when AI gets it wrong?

Here are some of the common errors in AI transcription, along with two ideas on how to avoid them.

4 Ways AI Transcriptions Jeopardize Patient Care

1. AI Transcription Bots Can’t Accurately Recognize Accents

Many of the common errors in AI transcription can be traced back to accents alone. A recent Guide2Fluency survey found that voice recognition software like ChatGPT has problems with almost all accents in the United States. It doesn’t seem to matter where you live: Boston, New York, the South, Philadelphia, Minnesota, the LA Valley, Hawaii, and Alaska all appear in the survey’s top 30 regions. And that covers only speakers born within the U.S. You and I may ask for clarification when confused by an accent, but AI can’t (or won’t).

2. Technical Jargon – Like Medical Terms – Is Confounding for AI

If AI can’t recognize “y’all” with a southern accent, how is it expected to recognize “amniocentesis”? In turn, the words AI tries to spell can confuse clinicians who later go back to the patient record. Decoding “Am neo-scent thesis” isn’t always as easy as it looks, especially if similar errors happen a dozen times in a given transcript. AI transcription software just isn’t built to recognize medical terms well enough.

3. AI Hallucinations

Then there’s the problem of AI hallucinations. A recent Associated Press article points out that OpenAI’s Whisper transcription software can invent new medications and even add commentary that the physician never said. These common AI errors can have “really grave consequences” according to the article – as we can all well imagine. In one example, AI changed “He, the boy, was going to, I’m not sure exactly, take the umbrella.” to “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.” Clearly, there can be medical and legal implications with these changes to the patient record. Who’s responsible when AI gets it wrong?

4. AI Transcription Does Not Ensure Privacy

You can almost guarantee that AI transcription software is storing that data somewhere in its system, or in a third party’s. OpenAI uses Microsoft Azure as its cloud provider, which prompted one California lawyer to refuse to sign a consent form to share medical information with Microsoft. OpenAI says it follows all laws (which presumably include HIPAA), but that assertion would likely need to be tested in court before anyone knows for sure. Either way, the horse might already be out of the barn, regardless of what a court finds.

How to Avoid Common AI Transcription Errors Corrupting Patient Records

There are two main ways physicians and clinicians can reduce the risk of these and other common AI transcription errors corrupting their patients’ EMRs.

1. Use Human Transcription instead of AI Transcription

This is the most straightforward approach. According to that same Associated Press report, “OpenAI recommended in its online disclosures against using Whisper in ‘decision-making contexts, where flaws in accuracy can lead to pronounced flaws in outcomes.’” If there is any situation where flaws in accuracy lead to flaws in outcomes, patient EMRs are certainly one of them. Preferred Transcriptions’ medical transcription services provide greater accuracy for better outcomes.

2. Use AI Transcription Editing Services

Busy doctors are more likely to miss errors because they are already burned out with administrative tasks. Plus, you may think AI transcription is helping relieve this documentation burden, but it may be adding to it instead when the doctor has to fix so many errors. Some companies now offer AI transcription editing services that can help clean up these errors, making the final review faster and easier for the busy clinician.
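One way a practice could check whether AI drafts are accurate enough, before or after editing, is to compare a sample against human-corrected versions and compute the word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) divided by the number of words in the reference. The sketch below is a minimal, illustrative implementation; the sample sentences are invented, and real evaluations usually also normalize punctuation, numbers, and abbreviations before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j]: edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match (cost 0) or substitution (cost 1)
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "patient scheduled for amniocentesis next week"
    ai_draft = "patient scheduled for am neo scent thesis next week"
    print(f"WER: {word_error_rate(reference, ai_draft):.2f}")  # 4 edits / 6 words
```

A single misheard medical term split into several words costs one substitution plus several insertions, so jargon errors like the “amniocentesis” example push WER up quickly even when the rest of the sentence is correct.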

Contact Preferred Transcriptions to Reduce Common Errors in AI Transcription

Why leave your patient records to chance? Preferred Transcriptions provides fast, accurate transcription services that eliminate all common AI transcription errors. Not only will our trained medical transcriptionists reduce your documentation burden, they can also help support better patient outcomes. Call today at 888-779-5888 or contact us via our email form. We can get started on your transcription as early as today.

This blog post was originally published at: https://www.preferredtranscriptions.com/ai-transcription-common-errors/

It is republished with permission from the author.

Top AI Companies Shaping the Future of the World

Artificial intelligence is driving the world forward and improving our daily lives. Across various industries, top AI companies are leading the way by changing how we work, communicate, and solve problems. These companies are creating advanced technologies that can perform important tasks and bring innovative solutions to areas like healthcare, transportation, and more. Let’s explore some of the top AI companies driving and shaping the future.

Top AI Companies Transforming the World

OpenAI: A Leader in Generative AI

OpenAI, founded in 2015, is an important player in advanced AI technology. Known for tools like GPT-4 and DALL·E, OpenAI has attracted worldwide attention for its innovative technology. Its large language models are used in chatbots, content creation, and programming.

Beyond its technical innovations, OpenAI plays a major role in promoting ethical AI use. ChatGPT is widely used by businesses and individuals, and the company focuses on making AI tools accessible, helpful, and safe. By enabling smarter decisions and creative solutions, OpenAI is not only transforming industries but also opening doors for new businesses, which is why it ranks among the top AI companies.

Google DeepMind: Advancing AI for a Better World

Google DeepMind focuses on applying artificial intelligence to real-world challenges. From mastering complex games like Go to tackling major scientific problems, DeepMind’s achievements are remarkable.

One of its most important projects is AlphaFold, which made a breakthrough in predicting how proteins fold into their 3D structures. This work is helping transform drug discovery and accelerate progress in healthcare. By using AI to drive societal progress, DeepMind shows how advanced technology can be developed to benefit humanity, which earns it a place among the top AI companies.

NVIDIA AI: Driving Artificial Intelligence with Advanced Hardware

NVIDIA plays a key role in AI by providing the powerful hardware it runs on. Famous for its GPUs (Graphics Processing Units), NVIDIA supports AI research and applications across industries.

Its CUDA platform helps researchers train complex neural networks quickly, while tools like NVIDIA Omniverse enable virtual simulations. NVIDIA is not just innovating for today; it is building the foundation for AI’s future. From self-driving cars to gaming, its impact is vast, making it a crucial player in the AI revolution and one of the top AI companies.

Tesla: Leading the Way in Advanced Technology

Tesla is a pioneer in using AI for transportation. Under Elon Musk’s leadership, the company has revolutionized electric vehicles by combining sustainable energy with advanced AI, placing it among the top AI companies in the world.

Tesla’s Full Self-Driving (FSD) software showcases its vision for autonomous travel. By leveraging neural networks and real-time data, Tesla vehicles can handle complex driving situations, paving the way for safer and more efficient transportation. While full autonomy is still a work in progress, Tesla’s innovations have significantly pushed the boundaries of what’s possible.

Microsoft: Advancing AI Through Collaboration

Microsoft has integrated artificial intelligence into its products to boost productivity and teamwork. Through its partnership with OpenAI, it has brought GPT technology to tools like Word and Excel, making everyday tasks simpler and more efficient.

Through Azure AI, its cloud-based platform, Microsoft helps developers create AI-powered applications across industries like healthcare and education. With a strong commitment to ethical AI practices, Microsoft continues to be a trusted leader, driving innovation while ensuring responsible use of technology.

Baidu: The AI Leader in China

Baidu, often called the “Google of China,” is a powerhouse in AI innovation. From autonomous driving to voice recognition, Baidu is leading AI development in Asia.

The company’s Apollo project has made significant progress in self-driving technology, with multiple partnerships to deploy autonomous vehicles in the real world. Baidu’s AI-powered search engine and voice assistant also serve millions of users, making it a critical player in the global AI landscape and one of the top AI companies driving the world forward.

Artificial Intelligence’s Impact and Responsibility

From healthcare to transportation, AI companies are reshaping industries, solving complex problems, and creating new opportunities. The innovations of these top AI companies are helping build a smarter, better-organized world.

However, innovation brings the responsibility to ensure ethical and inclusive use. Whether it’s OpenAI’s generative tools or NVIDIA’s advanced hardware, these advances highlight AI’s potential to benefit all of humanity.

Conclusion

AI is transforming the world, and these companies are leading the change. From OpenAI’s creative tools to Tesla’s self-driving cars, they are solving problems and creating new opportunities. Their work shows how AI can make life easier, safer, and more efficient. Read more AI-related news and blogs at AiOnlineMoney.

#aionlinemoney.com

Step-by-Step Guide to Building a Generative AI Model from Scratch

Generative AI is a cutting-edge technology that creates content such as text, images, or even music. Building a generative AI model may seem challenging, but with the right steps, anyone can understand the process. Let’s walk through the steps to build a generative AI model from scratch.

1. Understand Generative AI Basics

Before starting, understand what generative AI does. Unlike traditional AI models that predict or classify, generative AI creates new data based on patterns it has learned. Popular examples include ChatGPT and DALL·E.
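To make “creates new data based on patterns it has learned” concrete, here is a deliberately tiny generative model: a character-level bigram model in plain Python. It is a toy, not how ChatGPT or DALL·E work (they use large neural networks), but it shows the same two phases: learn patterns from data, then sample new output from them. The function names are illustrative.

```python
import random
from collections import defaultdict


def train_bigram(corpus: str) -> dict[str, list[str]]:
    """Record which character follows which: the 'patterns' this toy model learns."""
    transitions: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(corpus, corpus[1:]):
        transitions[current].append(nxt)
    return dict(transitions)


def generate(model: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Sample new text one character at a time from the learned transitions."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = model.get(out[-1])
        if not followers:  # dead end: this character never had a successor in the data
            break
        # Duplicates in the list make frequent successors proportionally more likely.
        out.append(rng.choice(followers))
    return "".join(out)


model = train_bigram("the cat sat on the mat")
print(generate(model, "t", 20))
```

Every pair of adjacent characters in the output occurred somewhere in the training text, yet the output as a whole is new: that is generation from learned patterns in its simplest form.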

2. Define Your Goal

Identify what you want your model to generate. Is it text, images, or something else? Clearly defining the goal helps in choosing the right algorithms and tools.

Example goals:

Writing stories or articles

Generating realistic images

Creating music

3. Choose the Right Framework and Tools

To build your AI model, you need tools and frameworks. Some popular ones are:

TensorFlow: Great for complex AI models.

PyTorch: Preferred for research and flexibility.

Hugging Face: Ideal for natural language processing (NLP), thanks to its Transformers library and its hub of pretrained models.

Additionally, you'll need programming knowledge, preferably in Python.

4. Collect and Prepare Data

Data is the backbone of generative AI. Your model learns patterns from this data.

Collect Data: Gather datasets relevant to your goal. For instance, use text datasets for NLP models or image datasets for generating pictures.

Clean the Data: Remove errors, duplicates, and irrelevant information.

Label Data (if needed): Ensure the data has proper labels for supervised learning tasks.

You can find free datasets on platforms like Kaggle or Google Dataset Search.
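Here is a minimal sketch of the “Clean the Data” step for a text dataset, assuming each record is one string. The rules (collapse whitespace, drop records below a hypothetical `min_words` threshold, remove case-insensitive duplicates) are illustrative choices. Real pipelines add domain-specific filters.

```python
import re


def clean_text_dataset(records: list[str], min_words: int = 3) -> list[str]:
    """Normalize whitespace, drop too-short records, and remove duplicates (keeping order)."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for record in records:
        text = re.sub(r"\s+", " ", record).strip()  # collapse runs of whitespace
        if len(text.split()) < min_words:  # treat very short records as noise
            continue
        key = text.lower()  # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = ["Hello   world, this is data.", "hello world, this is data.", "ok", "  A second useful   sentence here "]
print(clean_text_dataset(raw))
# ['Hello world, this is data.', 'A second useful sentence here']
```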

5. Select a Model Architecture

The type of generative AI model you use depends on your goal:

GANs (Generative Adversarial Networks): Good for generating realistic images.

VAEs (Variational Autoencoders): Great for learning compressed (latent) representations of data and generating new variations from them.

Transformers: Used for NLP tasks like text generation (e.g., GPT models).
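Transformers are built around the attention operation, softmax(QKᵀ/√d_k)·V. The plain-Python sketch below computes it for a single query vector without any framework, so the arithmetic stays visible. In practice you would use the batched, GPU-optimized versions provided by PyTorch or TensorFlow.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for a single query vector."""
    d_k = len(query)
    # Similarity of the query to each key, scaled by sqrt(d_k).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    # Output is the attention-weighted average of the value vectors.
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


print(attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[10.0, 0.0], [0.0, 10.0]]))
```

The query here is closer to the first key, so the output leans toward the first value vector. A transformer layer runs this for every token position at once and learns the projections that produce queries, keys, and values.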

6. Train the Model

Training involves feeding your data into the model and letting it learn patterns.

Split your data into training, validation, and testing sets.

Use GPUs or cloud services for faster training. Popular options include Google Colab, AWS, or Azure.

Monitor the training process to avoid overfitting (when the model memorizes the training data and fails to generalize to new data).
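The following framework-agnostic sketch covers two of the practices above. The first is a reproducible train/validation/test split. The second is early stopping, which halts training once validation loss stops improving and is a common guard against overfitting. The 80/10/10 ratios, `patience`, and the loss values in the usage example are made-up illustrations, not recommendations.

```python
import random


def split_dataset(items, train=0.8, val=0.1, seed=42):
    """Shuffle once, then cut into train/validation/test partitions."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains is the test set
    )


class EarlyStopping:
    """Signal a stop when validation loss hasn't improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))  # 80 10 10

stopper = EarlyStopping(patience=2)
for epoch, loss in enumerate([1.0, 0.8, 0.7, 0.72, 0.75, 0.6]):
    if stopper.should_stop(loss):
        print(f"stopping at epoch {epoch}; best val loss {stopper.best}")  # epoch 4, 0.7
        break
```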

7. Evaluate the Model

Once the model is trained, test it on new data. Check for:

Accuracy: How close the outputs are to the desired results.

Creativity: For generative tasks, ensure outputs are unique and relevant.

Error Analysis: Identify areas where the model struggles.
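Creativity is hard to score directly, but two simple proxies are common for text. Distinct-n measures the share of generated word n-grams that are unique, a rough gauge of repetitiveness. Novelty measures how many outputs are not copied verbatim from the training set. The sketch below assumes whitespace tokenization, which is a simplification.

```python
def distinct_n(samples: list[str], n: int = 2) -> float:
    """Ratio of unique word n-grams to total n-grams across all samples (higher = more varied)."""
    ngrams = []
    for text in samples:
        words = text.split()
        ngrams.extend(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def novelty(samples: list[str], training_data: list[str]) -> float:
    """Fraction of generated samples that do not appear verbatim in the training data."""
    if not samples:
        return 0.0
    seen = set(training_data)
    return sum(s not in seen for s in samples) / len(samples)


generated = ["the cat sat", "the cat sat", "a dog ran home"]
print(distinct_n(generated, n=2))  # 5 unique bigrams out of 7
print(novelty(generated, ["the cat sat"]))  # only 1 of 3 samples is new
```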

8. Fine-Tune the Model

Improvement comes through iteration. Adjust parameters, add more data, or refine the model's architecture to enhance performance. Fine-tuning is essential for better outputs.
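One systematic way to adjust parameters is a grid search over hyperparameters, scored on the validation set. In this sketch, `train_and_evaluate` is a hypothetical callable you would supply: it trains with the given settings and returns the validation loss. The fake version below exists only so the example runs.

```python
import itertools


def grid_search(train_and_evaluate, grid: dict[str, list]):
    """Try every combination in `grid` and keep the one with the lowest validation loss."""
    best_params, best_loss = None, float("inf")
    names = list(grid)
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        loss = train_and_evaluate(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


# Hypothetical stand-in for a real training run: its "loss" is lowest at lr=0.01, batch_size=32.
def fake_train_and_evaluate(learning_rate, batch_size):
    return abs(learning_rate - 0.01) * 100 + abs(batch_size - 32) / 32


print(grid_search(fake_train_and_evaluate,
                  {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [16, 32, 64]}))
```

Because the number of combinations multiplies with each added parameter, grid search becomes expensive quickly. Random search or dedicated tuning libraries are common alternatives.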

9. Deploy the Model

Once satisfied with the model’s performance, deploy it to real-world applications. Tools like Docker or cloud platforms such as AWS and Azure make deployment easier.
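Before you package a model with Docker or push it to a cloud platform, it usually needs an HTTP interface. The minimal sketch below uses only Python’s standard-library WSGI interface. `generate` is a hypothetical placeholder for your model’s inference call. The JSON shape (a `prompt` field in, an `output` field back) is an assumed API, not a standard.

```python
import json


def generate(prompt: str) -> str:
    """Hypothetical placeholder: call your trained model here."""
    return f"Generated text for: {prompt}"


def app(environ, start_response):
    """Minimal WSGI endpoint: POST {"prompt": "..."} and receive {"output": "..."}."""
    if environ["REQUEST_METHOD"] != "POST":
        start_response("405 Method Not Allowed", [("Content-Type", "text/plain")])
        return [b"Use POST"]
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        prompt = payload["prompt"]
    except (ValueError, KeyError, TypeError):  # bad JSON, missing field, or non-object body
        start_response("400 Bad Request", [("Content-Type", "text/plain")])
        return [b"Expected JSON body with a 'prompt' field"]
    body = json.dumps({"output": generate(prompt)}).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, the standard library’s development server can host it with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. For production, you would typically run the same `app` under a dedicated WSGI server inside the container image.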

10. Maintain and Update the Model

After deployment, monitor the model’s performance. Over time, update it with new data to keep it relevant and efficient.
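Monitoring can be as simple as tracking a quality score over recent requests (for example, user ratings or an automatic metric) and raising an alert when its rolling average falls noticeably below the level measured at deployment. The sketch below assumes you already produce a per-request score in [0, 1]. The window size and the 5% tolerance are arbitrary placeholders.

```python
from collections import deque


class QualityMonitor:
    """Flag degradation when the rolling average of a quality score drops below a baseline."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05):
        self.baseline = baseline    # score measured at deployment time
        self.tolerance = tolerance  # allowed relative drop before alerting (5% by default)
        self.scores: deque[float] = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score: float) -> bool:
        """Add one observation; return True if retraining or updating looks warranted."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data for a stable average yet
        rolling = sum(self.scores) / len(self.scores)
        return rolling < self.baseline * (1 - self.tolerance)


monitor = QualityMonitor(baseline=0.90, window=3)
print([monitor.record(s) for s in [0.91, 0.89, 0.90, 0.80, 0.78]])
# [False, False, False, False, True]
```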

Conclusion

Building a generative AI model from scratch is an exciting journey that combines creativity and technology. By following this step-by-step guide, you can create a powerful model tailored to your needs, whether it's for generating text, images, or other types of content.

If you're looking to bring your generative AI idea to life, partnering with a custom AI software development company can make the process seamless and efficient. Our team of experts specializes in crafting tailored AI solutions to help you achieve your business goals. Contact us today to get started!

How to Build a “Generative AI Environment” That Balances Security and Convenience: A Practical Approach from Hitachi Solutions and Allganize Japan. Published 2024/11/18 10:00

Author: 周藤瞳美

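As companies move into full-scale use of generative AI, attention is turning to how to handle highly confidential business and technical information safely. At an online seminar held on October 18, 2024, titled “How Can Generative AI Safely Handle Highly Confidential and Technical Information?”, Hitachi Solutions and Allganize Japan gave presentations. The speakers introduced cases of secure generative AI use in SaaS and private environments and laid out concrete paths to using generative AI in a way that balances security and convenience.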

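Generative AI moves from trial to full-scale use

In the first session, Kitabayashi (北林 拓丈), Senior Manager of the Planning Department, Cloud Solutions Division, and of the AX Strategy Department, AI Transformation Promotion Division, at Hitachi Solutions, Ltd. (currently Senior AI Business Strategist), presented the latest trends in the generative AI market and the company’s own initiatives.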

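Generative AI use among Japanese companies accelerated after a trial phase in fiscal 2023, and 2024 can be said to mark the start of full-scale use. Kitabayashi describes two sides to generative AI use, “offense” and “defense.” On the offensive side, the goals are greater operational efficiency and more sophisticated services; on the defensive side, countermeasures against risks such as copyright and privacy infringement and information leakage are essential.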

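These initiatives progress in stages: they start with trials in part of the organization, move on to company-wide use and the creation of use cases, then to business process transformation, and finally to service enhancement. “Advancing the initiatives at each phase involves various challenges, and those challenges need to be addressed,” says Kitabayashi.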

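Kitabayashi then shared insights on global trends from Ai4 2024, one of North America’s largest AI conferences. A particularly notable point was the importance of multi-model support, that is, selecting the appropriate model for each use case. He also noted the growing use of small language models (SLMs) for specific domains, driven by cost efficiency. Finally, he pointed out that AI governance is becoming more important from the standpoint of responsible AI and risk management.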

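Hitachi Solutions established its AI Transformation Promotion Division in April 2024. Through AI transformation (AX), which makes full use of AI technologies including generative AI, the company aims to accelerate the DX of society, its customers, and itself, and to contribute to sustainability transformation (SX) toward a sustainable society. The division’s concrete initiatives rest on four pillars: enhancing solutions, streamlining internal operations, streamlining development work, and risk management and governance.

Practical use cases are already producing results. In promotional work, the company cut the time needed to write a column from more than a month to about one day. Use is also spreading across a wide range of areas, including more efficient handling of inquiries, automated meeting minutes, and support for idea generation in co-creation activities. “Going forward, we will also advance projects that apply generative AI to our own products,” said Kitabayashi, signaling that the work will continue.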

The company’s practical examples are worth noting as a concrete roadmap for enterprise use of generative AI.

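Making full use of confidential information: how to put generative AI to work in the enterprise “quickly” and “securely”

In the next session, Ikegami (池上 由樹), Solution Sales Senior Manager at Allganize Japan, took the stage to explain the practical use of generative AI in enterprises.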

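Ikegami points to two main challenges in enterprise use of generative AI.

“The first is a usage challenge: even when an environment for ChatGPT and other generative AI is rolled out company-wide, employees don’t know specifically how to use it. The second is security concerns.” (Ikegami)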

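To address these challenges, the company offers “Alli LLM App Market,” an all-in-one generative AI/LLM app platform. Ikegami explains that the service “provides more than 100 generative AI/LLM applications that can be used just by selecting them, without entering a prompt.” It also offers no-code creation and customization of applications, as well as integration with in-house data, so each company can adapt it flexibly to its needs.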

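A particularly notable feature uses RAG (Retrieval-Augmented Generation) technology tailored for enterprises. When the platform automatically generates an answer to a question from internal documents, the company’s proprietary RAG technology automatically highlights the relevant passages in those documents, making the basis for the generated answer explicit. “Even with complex documents containing tables and images, it automatically performs appropriate preprocessing and generates highly accurate answers,” says Ikegami.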
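Allganize’s RAG technology is proprietary and its internals are not described here. The following is therefore only a generic illustration of the idea behind showing the basis for an answer. It retrieves the passages most relevant to the question, records which words matched (what a UI could highlight), and passes the passages to the LLM with an instruction to cite them. Simple word-overlap scoring stands in for the embedding-based retrieval that production systems typically use. All function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve_with_evidence(question: str, documents: dict[str, str], top_k: int = 2):
    """Score each sentence by word overlap with the question and return the best as evidence."""
    q_terms = tokenize(question)
    candidates = []
    for doc_id, text in documents.items():
        for sentence in re.split(r"(?<=[.!?])\s+", text):
            overlap = q_terms & tokenize(sentence)
            if overlap:
                candidates.append((len(overlap), doc_id, sentence, overlap))
    candidates.sort(key=lambda c: c[0], reverse=True)  # stable sort keeps document order on ties
    return [
        {"doc": doc_id, "passage": sentence, "matched_terms": sorted(overlap)}
        for _, doc_id, sentence, overlap in candidates[:top_k]
    ]


def build_prompt(question: str, evidence: list[dict]) -> str:
    """Ground the LLM in the retrieved passages and ask it to cite them by number."""
    context = "\n".join(f"[{i + 1}] ({e['doc']}) {e['passage']}" for i, e in enumerate(evidence))
    return f"Answer using only the sources below and cite them by number.\n{context}\n\nQuestion: {question}"


docs = {"manual-a": "Expense reports are due on the 5th. Travel must be approved in advance.",
        "manual-b": "Passwords expire every 90 days."}
question = "When are expense reports due?"
print(build_prompt(question, retrieve_with_evidence(question, docs, top_k=1)))
```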

Alli LLM App Market is offered in the following three deployment models, to suit each company’s security policy.

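1. SaaS model: Cloud-based and quick to deploy

The SaaS service is the easiest model to adopt. According to Ikegami, its biggest advantage is speed: it is “low cost and usable in as little as one day.” Documents and other data are uploaded to an environment managed by Allganize, and the LLM is accessed through the LLM API services the company has contracted. When an LLM is used via API, customer data is not used for model training. Customers can also connect LLMs they have contracted independently, such as Azure OpenAI Service. Some security policies may restrict which files can be uploaded, but this is the best option for companies that prioritize rapid deployment.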

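2. Private cloud model: Operation in a secure environment

“Recently, more and more customers are using private clouds,” Ikegami notes. In this model, Alli LLM App Market is deployed on a private cloud and connected to LLMs contracted by the customer. Although it is not a fully local environment, it is a balanced choice for companies that can accept managing data on a private cloud.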

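3. On-premises model: Deployment in a fully local environment

For companies that need the strictest security, deployment in a fully on-premises environment is also possible. “Demand is especially high from financial institutions, government agencies, and manufacturers,” Ikegami explains. In this model, every component, including the LLM, is deployed within the customer’s environment. Because large models such as GPT cannot be hosted on-premises due to their size, a dedicated on-premises LLM provided by Allganize is used instead. Connecting to specific LLMs contracted by the customer is also possible.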

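As a concrete example, Ikegami introduced a deployment at a major securities firm. In about three months, the firm achieved advanced search across roughly 300 types of business manuals and built a platform for automating work with generative AI. He also presented a fully on-premises deployment at a major bank where the use of cloud services is restricted.

“Companies require different levels of security. Alli LLM App Market makes it possible to put generative AI to use in a short time, with a deployment model suited to each set of requirements.” (Ikegami)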

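Lessons from real deployments: how to build a safe generative AI environment the whole company can use

In the final session, Kobayashi (小林 大輔), Evangelist in the Business Creation Department, Smart Work Solutions Division, Hitachi Solutions, Ltd., who has been helping companies improve efficiency with AI since 2017, took the stage. Drawing on his experience providing Alli LLM App Market to more than 100 companies, he explained the key points of a safe and effective company-wide rollout.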

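Kobayashi first described how companies are actually using generative AI. According to Teikoku Databank’s “Analysis of the Latest Trends Among Japanese Companies in the Use of Generative AI (September 2024),” only 17.3% of companies say they “use” generative AI, and about half say they “do not use it and have no plans to.” However, Kobayashi notes, “Nearly 90% of the companies actually using it are seeing results.”

By department, corporate planning is the most common user, indicating that adoption is progressing at the core of organizations. By company size, adoption is further along at large companies with 1,000 or more employees, while smaller companies tend to report stronger benefits. According to Kobayashi, this is because use is currently centered on specific individuals, and many companies have not yet rolled it out company-wide.

Kobayashi cites three challenges to expanding generative AI use within a company: complying with laws and regulations and establishing internal rules; ease of use and a lack of know-how; and security concerns such as information leakage.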

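“By first establishing rules so that employees can use it with confidence and then introducing Alli LLM App Market, companies can use generative AI easily and securely. And by building an environment that conforms to their own security policy, they can also work with highly confidential business information.” (Kobayashi)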

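As an example of an effective company-wide rollout, Kobayashi described the deployment of Alli LLM App Market at an IT services company with about 5,000 employees, a project Hitachi Solutions supported. The company’s strategy was to expand usage by getting as many employees as possible to try generative AI and experience its convenience. Notably, to speed up the company-wide rollout, it skipped departmental trials and released the tool to the entire company from the start. Easy access to Alli LLM App Market through the internal portal site and a friendly, approachable name helped lower the psychological barrier to using it.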

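On the security side, the company adapted flexibly to its internal policies, for example by introducing single sign-on authentication and by separating the environment for company-wide use from environments for specific business tasks. Drawing on its extensive track record and know-how in generative AI deployments, Hitachi Solutions can support a range of deployment models, from SaaS to physical server environments.

“Going forward, the trend of embedding generative AI into business systems and processes to streamline entire operations will accelerate,” Kobayashi predicts. In inquiry handling, for example, digitizing the entire process, including the labor-intensive drafting of responses after an inquiry is received and the tracking of progress, and then embedding generative AI into that process will make the work more efficient.

Hitachi Solutions plans to offer solutions that streamline such end-to-end operations.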

Related links: What is generative AI? Implementation support for using generative AI services in business https://www.hitachi-solutions.co.jp/products/pickup/generative-ai/

Alli LLM App Market, an enterprise generative AI environment https://www.hitachi-solutions.co.jp/allganize/