#exascale computing


Powering the Future

Sanjay Kumar Mohindroo. skm.stayingalive.in

How High-Performance Computing Ignites Innovation Across Disciplines. Explore how HPC and supercomputers drive breakthrough research in science, finance, and engineering, fueling innovation and transforming our world. High-Performance Computing (HPC) and supercomputers are the engines that power modern scientific, financial,…

#AI Integration#Data Analysis#Energy Efficiency#Engineering Design#Exascale Computing#Financial Modeling#High‑Performance Computing#HPC#Innovation#News#Parallel Processing#Sanjay Kumar Mohindroo#Scientific Discovery#Simulation#Supercomputers


The Evolution and Impact of Supercomputers and Servers in the Modern World

Introduction

Supercomputers represent the pinnacle of computational power, evolving from massive, room-sized machines to sleek, compact devices with immense processing capabilities. These advancements have drastically transformed scientific research, industry, and even daily life. In parallel, server technology has undergone a rapid evolution, supporting the backbone of global networks and data…

#artificial intelligence#cloud computing#compact supercomputers#Cray supercomputers#Cray-1#Cryptography#exascale computing#Fugaku supercomputer#future of computing#Google Sycamore#high-performance computing#history of supercomputers#HPC applications#IBM 7030 Stretch#machine learning#massively parallel processing#NVIDIA DGX Station#parallel processing#personalized medicine#Q-bit theory#Q-bits#quantum algorithms#Quantum Computing#quantum servers#quantum supremacy#scientific simulations#server technology#Seymour Cray#supercomputing#technological advancements


Exascale Computing Market Size, Share, Analysis, Forecast, and Growth Trends to 2032: The Race to One Quintillion Calculations Per Second

The Exascale Computing Market was valued at USD 3.47 billion in 2023 and is expected to reach USD 29.58 billion by 2032, growing at a CAGR of 26.96% from 2024 to 2032.
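The growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes the 2023 valuation is the starting point and that growth compounds once a year over 2024 to 2032 (nine periods); the report does not state its exact convention, and the function name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # USD billions, taken from the market figures above; 2023 -> 2032 is 9 periods.
    rate = cagr(3.47, 29.58, 2032 - 2023)
    print(f"Implied CAGR: {rate:.1%}")
```

Under this assumption the implied rate comes out at about 26.9% per year, close to the quoted 26.96%.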

The Exascale Computing Market is undergoing a profound transformation, unlocking unprecedented levels of computational performance. With the ability to perform at least one quintillion (10^18, a billion billion) floating-point operations per second, exascale systems are enabling breakthroughs in climate modeling, genomics, advanced materials, and national security. Governments and tech giants are investing aggressively, fueling a race for exascale dominance that’s reshaping industries and redefining innovation timelines.
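To put the 10^18 figure in perspective, here is a back-of-the-envelope sketch: the time needed to finish a fixed workload scales inversely with sustained speed. The workload size (10^21 operations) is a hypothetical example, and the sketch assumes each machine sustains its peak rate, which real applications rarely do.

```python
PETA = 10**15  # operations per second at petascale
EXA = 10**18   # operations per second at exascale (one quintillion)


def seconds_to_finish(total_ops: float, ops_per_second: float) -> float:
    """Idealized run time, assuming the machine sustains its peak rate."""
    return total_ops / ops_per_second


if __name__ == "__main__":
    workload = 1e21  # hypothetical job size: 10^21 floating-point operations
    for name, rate in (("petascale", PETA), ("exascale", EXA)):
        hours = seconds_to_finish(workload, rate) / 3600
        print(f"{name}: {hours:,.2f} hours")
```

The same job that would tie up a petascale machine for more than eleven days finishes in under twenty minutes at exascale, which is the practical meaning of the thousandfold jump.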

This revolutionary computing paradigm is being rapidly adopted across sectors seeking to harness its immense data-crunching potential. From predictive simulations to AI-powered discovery, exascale capabilities are opening new frontiers in science, defense, and enterprise. Exascale's impact is now expanding beyond research labs into commercial ecosystems, paving the way for smarter infrastructure, precision medicine, and real-time global analytics.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6035

Market Key Players:

Hewlett Packard Enterprise (HPE) [HPE Cray EX235a, HPE Slingshot-11]

International Business Machines Corporation (IBM) [IBM Power System AC922, IBM Power System S922LC]

Intel Corporation [Intel Xeon Max 9470, Intel Max 1550]

NVIDIA Corporation [NVIDIA GH200 Superchip, NVIDIA Hopper H100]

Cray Inc. [Cray EX235a, Cray EX254n]

Fujitsu Limited [Fujitsu A64FX, Tofu interconnect D]

Advanced Micro Devices, Inc. (AMD) [AMD EPYC 64C 2.0GHz, AMD Instinct MI250X]

Lenovo Group Limited [Lenovo ThinkSystem SD650 V3, Lenovo ThinkSystem SR670 V2]

Atos SE [BullSequana XH3000, BullSequana XH2000]

NEC Corporation [SX-Aurora TSUBASA, NEC Vector Engine]

Dell Technologies [Dell EMC PowerEdge XE8545, Dell EMC PowerSwitch Z9332F]

Microsoft [Microsoft Azure NDv5, Microsoft Azure HPC Cache]

Amazon Web Services (AWS) [AWS Graviton3, AWS Nitro System]

Sugon [Sugon TC8600, Sugon I620-G30]

Google [Google TPU v4, Google Cloud HPC VM]

Alibaba Cloud [Alibaba Cloud ECS Bare Metal Instance, Alibaba Cloud HPC Cluster]

Market Analysis

The exascale computing landscape is characterized by high-stakes R&D, global governmental collaborations, and fierce private sector competition. With countries like the U.S., China, and members of the EU launching national initiatives, the market is shaped by a mix of geopolitical strategy and cutting-edge technology. Key players are focusing on developing energy-efficient architectures, innovative software stacks, and seamless integration with artificial intelligence and machine learning platforms. Hardware giants are partnering with universities, startups, and defense organizations to accelerate deployments and overcome system-level challenges such as cooling, parallelism, and power consumption.

Market Trends

Surge in demand for high-performance computing in AI and deep learning

Integration of exascale systems with cloud and edge computing ecosystems

Government funding and national strategic investments on the rise

Development of heterogeneous computing systems (CPUs, GPUs, accelerators)

Emergence of quantum-ready hybrid systems alongside exascale architecture

Adoption across healthcare, aerospace, energy, and climate research sectors

Market Scope

Supercomputing for Scientific Discovery: Empowering real-time modeling and simulations at unprecedented speeds

Defense and Intelligence Advancements: Enhancing cybersecurity, encryption, and strategic simulations

Precision Healthcare Applications: Supporting drug discovery, genomics, and predictive diagnostics

Sustainable Energy Innovations: Enabling complex energy grid management and fusion research

Smart Cities and Infrastructure: Driving intelligent urban planning, disaster management, and IoT integration

As global industries shift toward data-driven decision-making, the market scope of exascale computing is expanding dramatically. Its capacity to manage and interpret massive datasets in real time is making it essential for competitive advantage in a rapidly digitalizing world.

Market Forecast

The trajectory of the exascale computing market points toward rapid scalability and broader accessibility. With increasing collaborations between public and private sectors, we can expect a new wave of deployments that bridge research and industry. The market is moving from proof-of-concept to full-scale operationalization, setting the stage for widespread adoption across diversified verticals. Upcoming innovations in chip design, power efficiency, and software ecosystems will further accelerate this trend, creating fertile ground for startups and enterprise adoption alike.

Access Complete Report: https://www.snsinsider.com/reports/exascale-computing-market-6035

Conclusion

Exascale computing is no longer a vision of the future; it is the powerhouse of today’s digital evolution. As industries keep pace with computational innovation, those embracing exascale capabilities will lead the next wave of transformation. With its profound impact on science, security, and commerce, the exascale computing market is not just growing; it is redefining the very nature of progress. Businesses, researchers, and nations prepared to ride this wave will find themselves at the forefront of a smarter, faster, and more resilient future.

About Us:

SNS Insider is one of the leading market research and consulting agencies worldwide. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315-636-4242 (US) | +44 20 3290 5010 (UK)


Aiming exascale at black holes - Technology Org

New Post has been published on https://thedigitalinsider.com/aiming-exascale-at-black-holes-technology-org/


In 1783, John Michell, a rector in northern England, “proposed that the mass of a star could reach a point where its gravity prevented the escape of most anything, even light. The same prediction emerged from [founding IAS Faculty] Albert Einstein’s theory of general relativity. Finally, in 1968, physicist [and Member (1937) in the School of Math/Natural Sciences] John Wheeler gave such phenomena a name: black holes.”

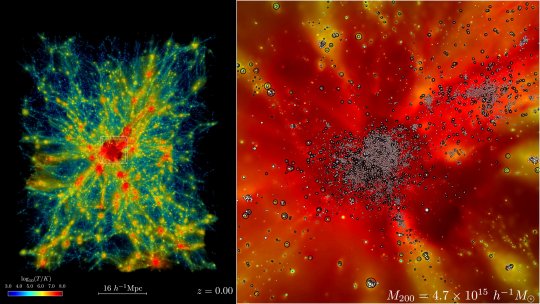

As plasma, matter turned into ionized gas, falls into the black hole at the center, energy is released through a process called accretion. This simulation, run on the Frontier supercomputer, shows the plasma temperature (yellow = hottest) during accretion. Image credit: Chris White and James Stone, Institute for Advanced Study

Despite initial skepticism that such astrophysical objects could exist, observations now estimate that there are 40 quintillion (or 40 thousand million billion) black holes in the universe. These black holes are important because the matter that falls into them “doesn’t just disappear quietly,” says James Stone, Professor in the School of Natural Sciences.

“Instead, matter turns into plasma, or ionized gas, as it rotates toward a black hole. The ionized particles in the plasma ‘get caught in the gravitational field of a black hole, and as they are pulled in they release energy,’ he says. That process is called accretion, and scientists think the energy released by accretion powers many processes on scales up to the entire galaxy hosting the black hole.”

To explore this process, Stone uses general relativistic radiation magnetohydrodynamics (MHD). But the equations behind MHD are “so complicated that analytic solutions — finding solutions with pencil and paper — [are] probably impossible.” Instead, by running complex simulations on high-performance computers like Polaris and Frontier, Stone and his colleagues are working to understand how radiation changes black hole accretion.
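For orientation only, the much simpler non-relativistic ideal MHD system is shown below in conservative form, in units where the magnetic permeability is 1 (a convention common in astrophysical codes). Here $\rho$ is density, $\mathbf{v}$ velocity, $\mathbf{B}$ the magnetic field, $p$ gas pressure, $\gamma$ the adiabatic index, and $\mathsf{I}$ the identity tensor. Stone's general relativistic radiation MHD adds curved spacetime, radiation transport, and their coupling to the gas on top of this nonlinear system, which is why numerical simulation is the practical route.

```latex
\begin{aligned}
\frac{\partial \rho}{\partial t} + \nabla\cdot(\rho\mathbf{v}) &= 0,\\
\frac{\partial (\rho\mathbf{v})}{\partial t}
  + \nabla\cdot\left(\rho\mathbf{v}\mathbf{v} - \mathbf{B}\mathbf{B} + P^{*}\mathsf{I}\right) &= 0,\\
\frac{\partial E}{\partial t}
  + \nabla\cdot\left[(E + P^{*})\,\mathbf{v} - \mathbf{B}\,(\mathbf{B}\cdot\mathbf{v})\right] &= 0,\\
\frac{\partial \mathbf{B}}{\partial t} - \nabla\times(\mathbf{v}\times\mathbf{B}) &= 0,
\qquad \nabla\cdot\mathbf{B} = 0,\\
P^{*} = p + \tfrac{1}{2}|\mathbf{B}|^{2},
\qquad
E &= \frac{p}{\gamma - 1} + \tfrac{1}{2}\rho|\mathbf{v}|^{2} + \tfrac{1}{2}|\mathbf{B}|^{2}.
\end{aligned}
```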

“The code created by Stone’s team to investigate black hole accretion can be applied to other astrophysical phenomena. Stone mentions that he ‘can use the same […] code for MHD simulations to follow the motion of cosmic rays,’ high-energy particles also produced by black holes.”

Source: Institute for Advanced Study


#albert einstein#Astronomy news#billion#black hole#Black holes#code#computers#cosmic rays#energy#exascale#Faculty#Fundamental physics news#Galaxy#gas#general relativity#gravity#Hosting#how#IAS#it#Light#Link#mass#math#matter#natural#objects#Other#paper#particles


The AI Arms Race: The High Cost of Powering the Coming Revolution


New project investigates mysteries of Sun's atmosphere

The sun's activity has a profound impact on satellites, humans in space and technology on Earth.

To understand the physical processes behind the sun's activity, it is vital for any simulation to capture the fundamental interplay between the sun's radiation and conditions in the vastly different layers of the sun's atmosphere (the photosphere, the chromosphere and corona), the complex coupling between them, and how magnetic flux emergence drives eruptions and flares.

No model can currently do this, but one is needed to interpret the cutting-edge observations produced by new facilities and to provide a step-change in our understanding of how the solar atmosphere works.

The Solar Atmospheric Modeling Suite, or SAMS, project aims to build a next-generation modeling tool for the solar atmosphere—making a code that can be run on anything from laptops to the latest supercomputers.

The project team is led by the University of Exeter and includes the universities of Warwick, Sheffield and Cambridge.

"For a long time the UK was leading the way in simulating the atmosphere of the sun, but in recent years we have been eclipsed," said Professor Andrew Hillier, from the University of Exeter.

"This project will put us right back as one of the leaders in this area."

For this project, the team will build a modeling suite with detailed physics-based documentation to promote ease of use.

The suite will be open source, with world-leading physics capabilities designed to keep the UK's solar physics community at the forefront of international research while advancing research by groups around the world.

It will also enable full exploitation of next-generation observations and exascale computing.

This project will also provide training for early career researchers on the complex underlying physics of the solar atmosphere and how to model this with SAMS.

Dr. Erwin Verwichte, Associate Professor (Reader), University of Warwick, said, "Warwick has built a world-leading reputation in numerical modeling of plasma physics.

"Our simulation codes, whether applied to fusion research, the sun or space weather, are used by researchers across the world.

"The SAMS code will be built on top of that heritage and signifies a key stepping stone in simulating and expanding our knowledge of the sun's atmosphere."

Professor Grahame Blair, STFC Executive Director of Programs, said, "This substantial investment demonstrates our commitment to maintaining the UK's leading role in solar physics research.

"Understanding the complex dynamics of our sun is vital not just for scientific advancement, but for protecting our technology infrastructure, satellite networks, power grids and communications systems on Earth from the impacts of space weather."

IMAGE: Observations of a sunspot taken on 04/10/2017 in H-alpha using the New Solar Telescope at Big Bear Solar Observatory. Credit: Juie Shetye (NMSU), Erwin Verwichte (Warwick), Kevin Reardon (NSO)


What is Exascale Computing? #Computing #Exascale https://www.altdatum.com/what-is-exascale-computing/?feed_id=135306&_unique_id=6864224c6914f


D-Wave's Advantage2: Powering the Future of Quantum Computing

D-Wave Unveils Advantage2 Quantum Computer

The general availability of D-Wave's Advantage2 quantum computer marks a turning point for D-Wave and for quantum computing. The company's sixth-generation quantum computer can be used on-premises or in the cloud, and it aims to solve complex industrial problems faster and better.

What is D-Wave's Advantage2 Quantum Computer?

Advantage2 is a quantum computer with more than 4,400 qubits that uses quantum annealing, unlike general-purpose quantum processors. General-purpose machines remain largely experimental, while annealing systems are designed to handle large-scale optimisation problems. These include scheduling, machine learning, route planning, and other tasks with many competing constraints and options that conventional computers struggle to handle at scale. D-Wave markets Advantage2 as a commercial system for AI, materials modelling, and optimisation.

Advantage2 Technical Innovations and Features

Hardware and architectural upgrades make Advantage2 faster, more accurate, and easier to operate. These include:

Enhanced Qubit Connectivity: The Advantage2 processor's 20-way connectivity and Zephyr topology allow it to handle more complex problems.

Energy Scale and Noise: The system provides 40% more energy scale and 75% less noise than its predecessor, resulting in better solutions for complex computations.

Doubled Coherence: Quantum state stability (coherence) has doubled, improving computing speed and reliability and reducing the processor's time-to-solution.

Fast Anneal: A feature called “fast anneal” lets the quantum processor compute quickly while avoiding environmental disruptions, a common issue in quantum systems.

Energy Efficiency: Despite its improved performance, Advantage2 maintains the 12.5-kilowatt power footprint of D-Wave's first commercial system.
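Quantum annealers such as Advantage2 are given optimisation problems as a quadratic unconstrained binary optimisation (QUBO) or equivalent Ising model and search for low-energy assignments. The sketch below is a classical stand-in, not D-Wave's hardware or its Ocean SDK: plain simulated annealing on a QUBO that encodes Max-Cut for a hypothetical four-node ring graph. The function names, cooling schedule, and parameters are illustrative assumptions.

```python
import math
import random

# Hypothetical illustration: classical simulated annealing on a QUBO.
# This is NOT D-Wave's API; it only mimics the kind of problem an annealer solves.


def maxcut_qubo(edges):
    """Build a QUBO whose minimum energy equals minus the maximum cut size."""
    Q = {}
    for i, j in edges:
        # Each edge contributes -(x_i + x_j - 2 * x_i * x_j): -1 if cut, else 0.
        Q[(i, i)] = Q.get((i, i), 0) - 1
        Q[(j, j)] = Q.get((j, j), 0) - 1
        Q[(i, j)] = Q.get((i, j), 0) + 2
    return Q


def qubo_energy(x, Q):
    """E(x) = sum of Q[(i, j)] * x_i * x_j over the stored terms (x_i in {0, 1})."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def simulated_annealing(Q, n, steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Single-bit-flip Metropolis search with a geometric cooling schedule."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    energy = qubo_energy(x, Q)
    best_x, best_e = x[:], energy
    for step in range(steps):
        # Temperature falls geometrically from t_start to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1  # propose flipping bit i
        new_energy = qubo_energy(x, Q)
        delta = new_energy - energy
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            energy = new_energy  # accept the move
            if energy < best_e:
                best_x, best_e = x[:], energy
        else:
            x[i] ^= 1  # reject: undo the flip
    return best_x, best_e


if __name__ == "__main__":
    ring = [(0, 1), (1, 2), (2, 3), (3, 0)]  # hypothetical 4-node ring graph
    assignment, energy = simulated_annealing(maxcut_qubo(ring), n=4)
    print("partition:", assignment, "cut size:", -energy)
```

Simulated annealing lowers a temperature so that uphill moves become rarer over time; quantum annealing instead evolves a physical quantum system, but both aim at the minimum of the same kind of energy function.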

This energy efficiency and the faster solution times should appeal to enterprise and government clients seeking computational benefits without high operating costs.

Accessibility and Hybrid Solvers

Advantage2 is available on D-Wave's Leap cloud platform, which provides real-time, remote access to the quantum system and to hybrid solvers. Leap is available in over 40 countries with 99.9% uptime, sub-second response times, and SOC 2 Type 2 compliance to meet organisational and security needs. Beyond cloud access, D-Wave also offers on-premises installation. One system will be hosted by Davidson Technologies in Huntsville, Alabama, for quantum research related to national security. The Jülich Supercomputing Centre in Germany is adding another, which will be linked to JUPITER, Europe's only exascale supercomputer, to facilitate collaboration between AI and quantum research.

The Leap quantum cloud service also provides hybrid solvers, which combine classical and quantum processing to tackle problems too large for the quantum processor alone. The latest version supports up to two million variables and constraints, making it useful for manufacturing, materials research, and logistics.

Applications and Early User Engagement

Advantage2 targets computationally difficult problems that traditional computers struggle to solve. Early users have run more than 20 million problems on Advantage2 prototypes through Leap since mid-2022, and utilisation rose 134% in six months. This growth is driven by organisations exploring applications in diverse fields:

Drug Discovery and Pharmaceutical Development: Japan Tobacco used a prototype to show that Advantage2 systems can provide high-quality, low-energy samples that improve generative AI architectures for drug discovery.

Materials Science and Research: The Jülich Supercomputing Centre and Los Alamos National Laboratory (LANL) have used early versions for magnetism, condensed matter physics, and AI benchmarks. LANL is studying analogue quantum computers for research on magnetic materials and condensed matter theory.

National Security: Davidson Technologies hosts an Advantage2 system on-premises for mission-critical challenges and quantum research.

AI and Optimisation: Businesses are using the technology to improve mobile networks, labour scheduling, and car production.

D-Wave's focus on real-world integration aims to turn quantum computing into a production technology embedded in commercial and government operations. The company claims to be one of the few quantum hardware vendors with a commercially available system.

#Advantage2Dwave#DWave#Advantage2#LANL#DWaveAdvantage2QuantumComputer#technology#TechnologyNews#technews#news#govindhtech#technologytrends


High Performance Computing Market: Trends, Drivers, and Future Outlook

The High Performance Computing Market is undergoing rapid expansion and transformation, driven by the increasing demand for complex data processing, advanced simulations, and the emergence of artificial intelligence (AI) and big data analytics. HPC systems, which encompass a wide range of high-performance servers and microservers, are becoming indispensable for industries that require extensive computational power for research, simulations, and operational efficiency. These systems play a crucial role in scientific discoveries, financial modeling, healthcare advancements, and various other domains that demand superior processing capabilities.

Read High Performance Computing Market Report Today –

httpstt.com/report/high-performance-computing-market

High-Performance Computing Market Size and Growth

The global High Performance Computing Market was valued at USD 54.64 billion in 2023 and is projected to grow significantly, reaching USD 4.28 billion by 2032. The market is expected to grow from USD 58.85 billion in 2024 at a compound annual growth rate (CAGR) of 7.7% during the forecast period (2025-2032). Several factors are fueling this growth, including the increasing need for virtualization, greater computing power, expanding IT infrastructure, improved scalability, and rising demand for reliable data storage solutions.

Key Market Data:

Market Size Value in 2023: USD 54.64 billion

Market Size Value in 2024: USD 58.85 billion

Growth Rate (CAGR): 7.7%

Base Year: 2024

Forecast Period: 2025-2032
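The key figures can be related through compound growth: a value V0 growing at rate r for n years becomes V0 × (1 + r)^n. The sketch below assumes the 7.7% CAGR applies to the 2024 figure of USD 58.85 billion over the eight forecast years 2025 to 2032; the report does not spell out its convention, so treat the output as an illustrative consistency check rather than the report's own projection. The function name `project` is hypothetical.

```python
def project(value: float, rate: float, years: int) -> float:
    """Value after `years` of annual compounding at `rate` (0.077 means 7.7%)."""
    return value * (1 + rate) ** years


if __name__ == "__main__":
    base_2024 = 58.85  # USD billions, from the key market data above
    for year in range(2025, 2033):
        print(year, f"USD {project(base_2024, 0.077, year - 2024):.2f} billion")
```

Comparing the 2032 line of this output with the report's own 2032 figure is a quick way to check that the stated numbers are internally consistent; the 2023 to 2024 step (54.64 × 1.077 ≈ 58.85) already matches the 7.7% rate.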

High Performance Computing Market Segmentation

The High Performance Computing Market is segmented based on multiple factors, allowing for a comprehensive analysis of various aspects that influence market growth.

By Component:

Solutions: Servers, Storage, Networking Devices, Software

Services: Design and Consulting, Integration and Deployment

By Computation Type:

Parallel Computing

Distributed Computing

Exascale Computing

By Deployment Type:

On-Premises

Cloud

By Organization Size:

Small and Medium-Sized Enterprises (SMEs)

Large Enterprises

By Vertical:

Banking, Financial Services, and Insurance (BFSI)

Education and Research

Energy and Utilities

Government and Defense

Healthcare and Life Sciences

Manufacturing

Media and Entertainment

Other Application Areas

Key Market Drivers

Growing Demand for AI and Big Data Analytics: The explosion of data and the need for sophisticated analytics are propelling the demand for HPC solutions. These systems enable organizations to process vast datasets, perform intricate simulations, and extract valuable insights for strategic decision-making.

Advancements in Cloud-Based HPC Solutions: Cloud-based HPC is gaining traction due to its flexibility, scalability, and cost-effectiveness. Cloud solutions offer organizations on-demand access to HPC resources without the need for significant upfront capital investments, making them particularly advantageous for SMEs.

Market Restraints

Complexity of Integration: Integrating HPC systems with existing IT infrastructure poses significant challenges. Ensuring seamless compatibility and achieving optimal performance require specialized expertise and careful planning.

Data Security and Compliance Concerns: Handling sensitive data in HPC environments presents security risks and regulatory compliance challenges. Organizations must implement robust security measures to prevent unauthorized access and adhere to industry regulations.


High-Performance Computing Market Competitive Landscape

The global HPC market features a mix of established enterprises and emerging players. Key market participants include:

Advanced Micro Devices, Inc. (USA)

NEC Corporation (Japan)

Hewlett Packard Enterprise (USA)

Qualcomm Incorporated (USA)

Fujitsu Limited (Japan)

Intel Corporation (USA)

IBM Corporation (USA)

Microsoft Corporation (USA)

Dell Technologies Inc. (USA)

Dassault Systemes SE (France)

Lenovo Group Ltd (China)

These companies are focusing on innovative HPC solutions that integrate AI, cloud computing, and advanced technologies to enhance performance and efficiency.

Recent Developments

Advancements in server technology, including multi-core processors and AI accelerators, are improving HPC performance.

Increasing adoption of cloud-based HPC solutions is offering businesses greater flexibility and cost-effectiveness.

Substantial investments in R&D are driving innovation in storage and data management systems, addressing security and integration challenges.

Future Outlook

The High Performance Computing Market is poised for continuous growth, driven by advancements in AI, big data analytics, and cloud computing. Despite challenges such as integration complexity and data security, ongoing technological developments are expected to overcome these hurdles. As organizations increasingly adopt HPC to gain a competitive edge, the market will continue to evolve, offering enhanced computational capabilities and new opportunities for industry expansion.


Immersion Cooling Market Size, Share, Growth Drivers, and Forecast to 2033

"Immersion Cooling Market" - Research Report, 2025-2033 delivers a comprehensive analysis of the industry's growth trajectory, covering historical trends, current market conditions, and essential metrics including production costs, market valuation, and growth rates. The report analyzes market size, share, and growth by type (Single-Phase Immersion Cooling, Two-Phase Immersion Cooling) and by application (High Performance Computing, Artificial Intelligence, Edge Computing, Cryptocurrency Mining, Cloud Computing, Others), with regional insights and a forecast to 2033. The market is projected to expand at a CAGR of 17.3% from 2024 to 2033. Our comprehensive market research report (127+ pages) offers exclusive insights, vital statistics, trends, and competitive analysis to help you succeed in the information and technology sector.

Immersion Cooling Market: Is it Worth Investing In? (2025-2033)

Global Immersion Cooling market size is anticipated to be worth USD 354.16 million in 2024, projected to reach USD 1782.78 million by 2033 at a 17.3% CAGR.

The Immersion Cooling market is expected to demonstrate strong growth between 2025 and 2033, driven by 2024's positive performance and strategic advancements from key players.

The leading key players in the Immersion Cooling market include:

Fujitsu

Green Revolution Cooling (GRC)

Submer Technologies

3M

Supermicro

Equinix

Digital Realty

NTT

Allied Control

Asperitas

Midas Green Technologies

Iceotope Technologies

LiquidCool Solutions

Downunder Geosolutions

DCX Liquid Cooling Company

Solvay

Liqit

Exascaler

Qcooling

Horizon Computing Solutions

Wiwynn

PRASA Infocom & Power Solutions

Request a Free Sample Copy @ https://www.marketgrowthreports.com/enquiry/request-sample/103825

Report Scope

This report offers a comprehensive analysis of the global Immersion Cooling market, providing insights into market size, estimations, and forecasts. Using sales volume (K Units) and revenue (USD millions) data, the report covers the historical period from 2020 to 2025 and provides forecasts through 2033, with 2024 as the base year.

For granular market understanding, the report segments the market by product type, application, and player. Additionally, regional market sizes are provided, offering a detailed picture of the global Immersion Cooling landscape.

Gain valuable insights into the competitive landscape through detailed profiles of key players and their market ranks. The report also explores emerging technological trends and new product developments, keeping you at the forefront of industry advancements.

This research empowers Immersion Cooling manufacturers, new entrants, and related industry chain companies by providing critical information. Access detailed data on revenues, sales volume, and average price across various segments, including company, type, application, and region.


Understanding Immersion Cooling Product Types & Applications: Key Trends and Innovations in 2025

By Product Types:

Single-Phase Immersion Cooling

Two-Phase Immersion Cooling

By Application:

High Performance Computing

Artificial Intelligence

Edge Computing

Cryptocurrency Mining

Cloud Computing

Others

Emerging Immersion Cooling Market Leaders: Where's the Growth in 2025?

North America (United States, Canada and Mexico)

Europe (Germany, UK, France, Italy, Russia and Turkey etc.)

Asia-Pacific (China, Japan, Korea, India, Australia, Indonesia, Thailand, Philippines, Malaysia and Vietnam)

South America (Brazil, Argentina, Colombia etc.)

Middle East and Africa (Saudi Arabia, UAE, Egypt, Nigeria and South Africa)

Share any questions before purchasing this report at: https://www.marketgrowthreports.com/enquiry/request-sample/103825

This report offers a comprehensive analysis of the Immersion Cooling market, considering both the direct and indirect effects from related industries. We examine the pandemic's influence on the global and regional Immersion Cooling market landscape, including market size, trends, and growth projections. The analysis is further segmented by type, application, and consumer sector for a granular understanding.

Additionally, the report provides a pre- and post-pandemic assessment of key growth drivers and challenges within the Immersion Cooling industry. A PESTEL analysis is also included, evaluating political, economic, social, technological, environmental, and legal factors influencing the market.

We understand that your specific needs might require tailored data. Our research analysts can customize the report to focus on a particular region, application, or specific statistics. Furthermore, we continuously update our research, triangulating your data with our findings to provide a comprehensive and customized market analysis.

COVID-19 Changed Us? An Impact and Recovery Analysis

This report examines the pandemic's specific repercussions on the Immersion Cooling Market. We tracked both the direct and cascading effects of the pandemic, examining how it reshaped market size, trends, and growth across international and regional landscapes. Segmented by type, application, and consumer sector, this analysis provides a comprehensive view of the market's evolution, incorporating a PESTEL analysis to understand key influencers and barriers. Ultimately, this report aims to provide actionable insights into the market's recovery trajectory. The final report will add an analysis of the impact of the Russia-Ukraine war and COVID-19 on the Immersion Cooling industry.

To learn how the COVID-19 pandemic and the Russia-Ukraine war will affect this market, request a sample.

Detailed TOC of Global Immersion Cooling Market Research Report, 2025-2033

1 Report Overview

1.1 Study Scope

1.2 Global Immersion Cooling Market Size Growth Rate by Type: 2020 VS 2024 VS 2033

1.3 Global Immersion Cooling Market Growth by Application: 2020 VS 2024 VS 2033

1.4 Study Objectives

1.5 Years Considered

2 Global Growth Trends

2.1 Global Immersion Cooling Market Perspective (2020-2033)

2.2 Immersion Cooling Growth Trends by Region

2.2.1 Global Immersion Cooling Market Size by Region: 2020 VS 2024 VS 2033

2.2.2 Immersion Cooling Historic Market Size by Region (2020-2025)

2.2.3 Immersion Cooling Forecasted Market Size by Region (2025-2033)

2.3 Immersion Cooling Market Dynamics

2.3.1 Immersion Cooling Industry Trends

2.3.2 Immersion Cooling Market Drivers

2.3.3 Immersion Cooling Market Challenges

2.3.4 Immersion Cooling Market Restraints

3 Competition Landscape by Key Players

3.1 Global Top Immersion Cooling Players by Revenue

3.1.1 Global Top Immersion Cooling Players by Revenue (2020-2025)

3.1.2 Global Immersion Cooling Revenue Market Share by Players (2020-2025)

3.2 Global Immersion Cooling Market Share by Company Type (Tier 1, Tier 2, and Tier 3)

3.3 Players Covered: Ranking by Immersion Cooling Revenue

3.4 Global Immersion Cooling Market Concentration Ratio

3.4.1 Global Immersion Cooling Market Concentration Ratio (CR5 and HHI)

3.4.2 Global Top 10 and Top 5 Companies by Immersion Cooling Revenue in 2024

3.5 Immersion Cooling Key Players Head Office and Area Served

3.6 Key Players Immersion Cooling Product Solution and Service

3.7 Date of Entry into Immersion Cooling Market

3.8 Mergers & Acquisitions, Expansion Plans

4 Immersion Cooling Breakdown Data by Type

4.1 Global Immersion Cooling Historic Market Size by Type (2020-2025)

4.2 Global Immersion Cooling Forecasted Market Size by Type (2025-2033)

5 Immersion Cooling Breakdown Data by Application

5.1 Global Immersion Cooling Historic Market Size by Application (2020-2025)

5.2 Global Immersion Cooling Forecasted Market Size by Application (2025-2033)

6 North America

6.1 North America Immersion Cooling Market Size (2020-2033)
6.2 North America Immersion Cooling Market Growth Rate by Country: 2020 VS 2024 VS 2033
6.3 North America Immersion Cooling Market Size by Country (2020-2025)
6.4 North America Immersion Cooling Market Size by Country (2025-2033)
6.5 United States
6.6 Canada

7 Europe

7.1 Europe Immersion Cooling Market Size (2020-2033)
7.2 Europe Immersion Cooling Market Growth Rate by Country: 2020 VS 2024 VS 2033
7.3 Europe Immersion Cooling Market Size by Country (2020-2025)
7.4 Europe Immersion Cooling Market Size by Country (2025-2033)
7.5 Germany
7.6 France
7.7 U.K.
7.8 Italy
7.9 Russia
7.10 Nordic Countries

8 Asia-Pacific

8.1 Asia-Pacific Immersion Cooling Market Size (2020-2033)
8.2 Asia-Pacific Immersion Cooling Market Growth Rate by Region: 2020 VS 2024 VS 2033
8.3 Asia-Pacific Immersion Cooling Market Size by Region (2020-2025)
8.4 Asia-Pacific Immersion Cooling Market Size by Region (2025-2033)
8.5 China
8.6 Japan
8.7 South Korea
8.8 Southeast Asia
8.9 India
8.10 Australia

9 Latin America

9.1 Latin America Immersion Cooling Market Size (2020-2033)
9.2 Latin America Immersion Cooling Market Growth Rate by Country: 2020 VS 2024 VS 2033
9.3 Latin America Immersion Cooling Market Size by Country (2020-2025)
9.4 Latin America Immersion Cooling Market Size by Country (2025-2033)
9.5 Mexico
9.6 Brazil

10 Middle East & Africa

10.1 Middle East & Africa Immersion Cooling Market Size (2020-2033)
10.2 Middle East & Africa Immersion Cooling Market Growth Rate by Country: 2020 VS 2024 VS 2033
10.3 Middle East & Africa Immersion Cooling Market Size by Country (2020-2025)
10.4 Middle East & Africa Immersion Cooling Market Size by Country (2025-2033)
10.5 Turkey
10.6 Saudi Arabia
10.7 UAE

11 Key Players Profiles

12 Analyst's Viewpoints/Conclusions

13 Appendix

13.1 Research Methodology
13.1.1 Methodology/Research Approach
13.1.2 Data Source
13.2 Disclaimer
13.3 Author Details
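Section 3.4.1 of the outline refers to two standard market-concentration measures, CR5 and HHI. As a rough sketch of how they are usually computed: CR5 is the combined percentage share of the five largest firms, and the Herfindahl-Hirschman Index (HHI) is the sum of every firm's squared percentage share. The shares below are made up purely for illustration; they are not figures from this report.

```python
# Hypothetical market shares in percent for ten firms (illustrative only;
# these are NOT figures from the immersion cooling report).
shares = [25, 20, 15, 10, 8, 7, 5, 4, 3, 3]
assert sum(shares) == 100, "shares should cover the whole market"


def concentration_ratio(shares, n=5):
    """CRn: combined share of the n largest firms."""
    return sum(sorted(shares, reverse=True)[:n])


def herfindahl_hirschman_index(shares):
    """HHI: sum of squared percentage shares (10,000 for a pure monopoly)."""
    return sum(s * s for s in shares)


print("CR5:", concentration_ratio(shares))
print("HHI:", herfindahl_hirschman_index(shares))
```

With these invented shares, CR5 is 78 percent and HHI is 1,522. Squaring the shares is what makes HHI more sensitive than CR5 to a single dominant player.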

Request a Free Sample Copy of the Immersion Cooling Report 2025 @ https://www.marketgrowthreports.com/enquiry/request-sample/103825

About Us: Market Growth Reports is a unique organization that offers expert analysis and accurate data-based market intelligence, aiding companies of all shapes and sizes to make well-informed decisions. We tailor inventive solutions for our clients, helping them tackle any challenges that are likely to emerge from time to time and affect their businesses.


Seamless Integration of aarna.ml GPU CMS with DDN EXAScaler for High-Performance AI Workloads

Managing external storage for GPU-accelerated AI workloads can be complex, especially when ensuring that storage volumes are provisioned correctly, isolated per tenant, and automatically mounted to the right compute nodes. With aarna.ml GPU Cloud Management Software (GPU CMS), this entire process is streamlined through seamless integration with DDN EXAScaler.

End-to-End Automation with No Manual Steps

With aarna.ml GPU CMS, end users don’t need to manually log into multiple systems, configure storage mounts, or worry about compatibility between compute and storage. The DDN EXAScaler integration is fully automated, allowing users to simply specify:

Everything else, from tenant-aware provisioning, storage policy enforcement, and network isolation to automatic mount point creation, is handled seamlessly by aarna.ml GPU CMS.

Simple and Efficient Flow

The process starts with the NCP admin (cloud provider admin) importing the entire GPU infrastructure (compute, storage, E⇔W network, N⇔S network) into the software and setting up a new tenant. Once the tenant is created, the tenant user can allocate a GPU bare-metal or VM instance and request external storage from DDN.

The tenant simply provides:

Once these inputs are provided, aarna.ml GPU CMS handles all interactions with DDN, including:

This zero-touch integration eliminates any need for the tenant to interact with the DDN portal directly.

Real-Time Validation Across Systems

To ensure transparency and operational assurance, the NCP admin or tenant admin can view all configured storage volumes directly within aarna.ml GPU CMS. For additional verification, they can also cross-check the automatically created tenants, networks, policies, and mount points directly in the DDN admin portal.

All configurations are performed via APIs with no manual intervention.

Full Tenant Experience

Once the storage is provisioned, the tenant user can log directly into their allocated GPU compute node and immediately access the mounted DDN EXAScaler storage volume. Whether for large-scale AI training data, model checkpoints, or inference, this automated mount ensures data is available where and when the user needs it.

Key Benefits

The aarna.ml GPU CMS provides the following key benefits:

API-Driven Consistency: All configurations, from mount points to network overlays, are performed through automated APIs, ensuring accuracy and compliance with tenant policies.

This post was originally published on https://www.aarna.ml


WEF schedules Quantum Technology conference as Europe moves more into quantum-666?

COGwriter

The European-based World Economic Forum (WEF) has announced a virtual quantum conference it has set for next week:

Innovation and Quantum Technology

27 March, 15:00 CET

Quantum technology is transforming industries and solving some of the world’s most complex challenges. From breakthroughs in healthcare and finance to advancements in artificial intelligence and data security, quantum innovation is redefining what’s possible. …

Explore Strategic Intelligence: Quantum Economy

Quantum technologies will impact many critical industries and inspire new business models. Diverse technologies such as quantum computing, quantum communications, quantum sensing, and quantum materials have given rise to new industry players and promising solutions for end users, greater sustainability, and new answers to hitherto unsolved problems. Quantum technology will eventually permeate and affect every key sector of the economy and take us into a period likely to be referred to as the “post-quantum era.” …

The World Economic Forum, committed to improving the state of the world, is the International Organization for Public-Private Cooperation. The Forum engages the foremost political, business, cultural and other leaders of society to shape global, regional and industry agendas.

Yes, the WEF is correct that quantum technologies will impact many.

European nations are getting more involved with quantum tech as the following shows:

IBM and Basque Government to Launch Europe’s First Quantum Computing Hub in Spain

March 20, 2025

IBM … and the Basque Government have announced plans to install Europe’s first IBM Quantum System Two in San Sebastián, Spain. This initiative is part of the BasQ project, which seeks to position the Basque Country as a center for quantum technology development.

The IBM Quantum System Two will be housed at the IBM Euskadi Quantum Computational Center on the Ikerbasque Foundation’s main campus in San Sebastián. The system is expected to be operational by the end of 2025.

The system will be powered by a 156-qubit IBM Quantum Heron processor, designed to execute complex algorithms beyond the capabilities of classical computers. It will leverage Qiskit software to run quantum circuits with up to 5,000 two-qubit gate operations, expanding the potential for computational research in various fields. https://www.tipranks.com/news/ibm-and-basque-government-to-launch-europes-first-quantum-computing-hub-in-spain
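One way to see why a 156-qubit processor is described as going beyond classical machines is to estimate what a naive classical simulation would need to store. The sketch below assumes a full state-vector simulation in which each of the 2^n complex amplitudes takes 16 bytes (complex128); this is an illustrative assumption, and specialized simulation techniques can do far better for some circuits.

```python
# Memory for a naive full state-vector simulation of n qubits.
# Assumption: every one of the 2**n complex amplitudes is stored as a
# complex128 value (16 bytes). Smarter simulation methods exist for some
# circuits, so this only illustrates how the naive approach scales.
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to hold all 2**n_qubits amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


for n in (30, 50, 156):
    print(f"{n:>3} qubits: {state_vector_bytes(n):.3e} bytes")
```

Each added qubit doubles the memory, so 30 qubits fit in 16 GiB while 156 qubits would need 2^160 bytes, on the order of 10^48.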

Spain is not the only place in Europe that these quantum supercomputers are planned for.

Notice the following from last Fall:

New JUPITER module strengthens leading position of Europe’s upcoming Exascale Supercomputer

The journey towards Europe’s first exascale supercomputer, JUPITER, at Forschungszentrum Jülich is progressing at a robust pace. A major milestone has just been reached with the completion of JETI, the second module of this groundbreaking system. By doubling the performance of JUWELS Booster—currently the fastest supercomputer in Germany—JETI now ranks among the world’s most powerful supercomputers, as confirmed today at the Supercomputing Conference SC in Atlanta, USA. The JUPITER Exascale Transition Instrument, JETI, is already one-twelfth of the power of the final JUPITER system, setting a new benchmark on the TOP500 list.

18 November 2024

Built by the Franco-German team ParTec-Eviden, Europe’s first exascale supercomputer, JUPITER, will enable breakthroughs in the use of artificial intelligence (AI) and take scientific simulations and discoveries to a new level. Procured by the European supercomputing initiative EuroHPC Joint Undertaking (EuroHPC JU), it will be operated by the Jülich Supercomputing Centre (JSC), one of three national supercomputing centres within the Gauss Centre for Supercomputing (GCS). Since the middle of this year, JUPITER has been gradually installed at Forschungszentrum Jülich. Currently, the modular high-performance computing facility, known as the Modular Data Centre (MDC), is being delivered to house the supercomputer. The hardware for JUPITER’s booster module will occupy 125 racks, which are currently being pre-installed at Eviden’s flagship factory in Angers, France, and will then be shipped to Jülich ready for operation.

The final JUPITER system will be equipped with approximately 24,000 NVIDIA GH200 Grace Hopper Superchips, specifically optimized for computationally intensive simulations and the training of AI models. This will enable JUPITER to achieve more than 70 ExaFLOP/s in lower-precision 8-bit calculations, making it one of the world’s fastest systems for AI. The current JETI pilot system contains 10 racks, which is exactly 8 percent of the size of the full system. In a trial run using the Linpack Benchmark for the TOP500 list, JETI achieved a performance of 83 petaflops, which is equivalent to 83 million billion operations per second (1,000 PetaFLOP is equal to 1 ExaFLOP). With this performance JETI ranks 18th on the current TOP500 list of the world’s fastest supercomputers, doubling the performance of the current German flagship supercomputer JUWELS Booster, also operated by JSC. …

In order to equip Europe with a world-leading supercomputing infrastructure, the EuroHPC JU has already procured nine supercomputers, located across Europe. No matter where in Europe they are located, European scientists and users from the public sector and industry can benefit from these EuroHPC supercomputers via the EuroHPC Access Calls to advance science and support the development of a wide range of applications with industrial, scientific and societal relevance for Europe. https://www.mynewsdesk.com/partec/pressreleases/new-jupiter-module-strengthens-leading-position-of-europes-upcoming-exascale-supercomputer-3355162?utm_source=rss&utm_medium=rss&utm_campaign=Alert&utm_content=pressrelease

The JUPITER Exascale Transition Instrument, JETI, is already one-twelfth of the power of the final JUPITER system, setting a new benchmark on the TOP500 list. 11/19/24 https://www.hpcwire.com/off-the-wire/new-jupiter-module-strengthens-leading-position-of-europes-upcoming-exascale-supercomputer/?utm_source=twitter&utm_medium=social&utm_term=hpcwire&utm_content=05dac910-6268-4a88-b01b-1f0dd233eca9
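The JETI and JUPITER figures in the press release can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted above. Note that "one-twelfth" is a rounded figure, and that the 70 ExaFLOP/s value is a lower bound at 8-bit precision, so it is not directly comparable to the Linpack result; the per-superchip number is just one approximate figure divided by another.

```python
# Checking the JETI/JUPITER figures quoted above with plain arithmetic.
PETA = 10**15
EXA = 10**18

jeti_racks = 10
booster_racks = 125                 # racks for JUPITER's booster module
jeti_linpack = 83 * PETA            # 83 petaflops on Linpack
jupiter_fp8 = 70 * EXA              # "more than 70 ExaFLOP/s" at 8-bit precision
superchips = 24_000                 # "approximately 24,000" GH200 superchips

rack_share = jeti_racks / booster_racks
print(f"JETI rack share: {rack_share:.0%}")                  # the quoted 8 percent
print(f"83 PF = {jeti_linpack:,} operations per second")     # 83 million billion
print(f"12 x JETI (Linpack): {12 * jeti_linpack / EXA:.3f} EF")
print(f"FP8 per superchip: {jupiter_fp8 / superchips / PETA:.2f} PF")
```

Twelve times JETI's 83 petaflops comes to about 0.996 ExaFLOP/s, which is consistent with the "one-twelfth" description of a roughly 1 ExaFLOP/s Linpack target.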

Exascale: the Engine of Discovery

Exascale computing will have a profound impact on everyday life in the coming decades. At 1,000,000,000,000,000,000 operations per second, exascale supercomputers will be able to quickly analyze massive volumes of data and more realistically simulate the complex processes and relationships behind many of the fundamental forces of the universe.

accessed 12/19/24 https://www.exascaleproject.org/what-is-exascale/
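The written-out number above is easy to misread, so here is a small check that it equals 10^18 (a quintillion, or a "billion-billion"), plus a comparison of how long a hypothetical workload of 10^21 operations would take at petascale and exascale rates. The workload size is an arbitrary assumption chosen for illustration, and the timing ignores real-world efficiency losses.

```python
# The figure quoted above, parsed from its written-out form.
quoted = "1,000,000,000,000,000,000"
ops_per_second = int(quoted.replace(",", ""))

assert ops_per_second == 10**18           # one exa-operation per second
assert ops_per_second == 10**9 * 10**9    # a "billion-billion"
assert ops_per_second == 1_000 * 10**15   # 1,000 peta-operations per second

# Hypothetical workload of 10**21 operations, chosen only for illustration.
workload = 10**21
for label, rate in (("petascale", 10**15), ("exascale", 10**18)):
    seconds = workload / rate
    print(f"{label}: {seconds:,.0f} s (about {seconds / 86_400:.2f} days)")
```

At a sustained 10^15 operations per second the job takes about 11.6 days; at 10^18 it takes about 17 minutes.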

The “much more” would seem to include military applications as well as the surveillance and control of people and buying and selling.

This sounds like another step to 666.

The processing speed is so fast that it is mind-boggling.

The British Broadcasting Corporation (BBC) warned about this type of computer years ago:

What is the quantum apocalypse and should we be scared?

27 January 2022

Imagine a world where encrypted, secret files are suddenly cracked open – something known as “the quantum apocalypse”.

Put very simply, quantum computers work completely differently from the computers developed over the past century. In theory, they could eventually become many, many times faster than today’s machines.

That means that faced with an incredibly complex and time-consuming problem – like trying to decrypt data – where there are multiple permutations running into the billions, a normal computer would take many years to break those encryptions, if ever.

But a future quantum computer, in theory, could do this in just seconds. …

Data thieves

A number of countries … are working hard and investing huge sums of money to develop these super-fast quantum computers with a view to gaining strategic advantage in the cyber-sphere.

Every day vast quantities of encrypted data – including yours and mine – are being harvested without our permission and stored in data banks, ready for the day when the data thieves’ quantum computers are powerful enough to decrypt it. …

“Everything we do over the internet today,” says Harri Owen, chief strategy officer at the company PostQuantum, “from buying things online, banking transactions, social media interactions, everything we do is encrypted.

“But once a functioning quantum computer appears that will be able to break that encryption… it can almost instantly create the ability for whoever’s developed it to clear bank accounts, to completely shut down government defence systems – Bitcoin wallets will be drained.” https://www.bbc.com/news/technology-60144498
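To give a sense of scale for the BBC's point that a normal computer "would take many years," here is a rough classical brute-force estimate. It assumes, hypothetically, a 128-bit key, one key test per operation, and a sustained exascale rate of 10^18 tests per second. It does not model quantum algorithms at all; it only shows why trying every key classically is hopeless even on the fastest conventional machines.

```python
# Rough brute-force estimate: how long would a classical machine need to try
# every key in a keyspace? Assumptions (not from the BBC article):
#  - one key test per operation, and
#  - a sustained rate of 10**18 tests per second (an exascale machine).
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(key_bits: int, tests_per_second: float) -> float:
    """Worst-case years to exhaust a keyspace of 2**key_bits keys."""
    return (2 ** key_bits) / tests_per_second / SECONDS_PER_YEAR


for bits in (56, 80, 128):
    print(f"{bits}-bit key: {brute_force_years(bits, 1e18):.3g} years")
```

Under these assumptions a 56-bit key falls in well under a second, while a 128-bit key would take on the order of 10^13 years, which is why attention has shifted to what quantum algorithms might change.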

Monitoring the buying and selling of everything is something that quantum or other computers can do.

The Bible shows that a European power will arise that will control buying and selling:

16 He causes all, both small and great, rich and poor, free and slave, to receive a mark on their right hand or on their foreheads, 17 and that no one may buy or sell except one who has the mark or the name of the beast, or the number of his name. 18 Here is wisdom. Let him who has understanding calculate the number of the beast, for it is the number of a man: His number is 666. (Revelation 13:16-18)

When the Apostle John penned the above, such control of buying and selling was not possible.

However, with supercomputers, AI, and digital payments, it is now.

The European Union has already announced it was launching surveillance software (see ‘The EU is allowing the linking of face recognition databases to create a mega surveillance system’ 666 infrastructure being put into place).

And, now, it looks to be closer to having the supercomputer hardware to make it more of a reality.

There is also an office in the EU to enforce its financial rules.

When it was announced, we put together the following video:


EU Setting Up 666 Enforcer?

The European Union is in the process of establishing the European Public Prosecutor’s Office. This is a major, first-of-its-kind move, with the EU setting up a Europe-wide prosecutor’s office that will have power to investigate and charge people for financial crimes committed against the EU. It looks like this type of office may end up persecuting those who do not have the mark of the Beast when they “buy or sell” as that will later be considered a financial crime in Europe. What does 666 mean? How has that name been calculated? How can we be certain that this is a prophecy for Europe and not Islam? Is the appointment of this new office of significant prophetic importance? Dr. Thiel addresses these issues and more by pointing to scriptures, news items, and historical accounts.

Here is a link to the sermonette video: EU Setting Up 666 Enforcer?

The European Public Prosecutor’s Office officially started operations on 1 June 2021 (https://www.eppo.europa.eu/en/background).

So, yes, there is an enforcer.

Now there is hardware, more surveillance software, and massively faster computation ability.

Persecution will come.

Jesus warned Christians to flee persecution:

23 When they persecute you in this city, flee to another. For assuredly, I say to you, you will not have gone through the cities of Israel before the Son of Man comes (Matthew 10:23).

Surveillance software on supercomputers may well be a factor in fleeing from multiple cities.

Europe in particular is moving more and more towards becoming an Orwellian totalitarian state (watch also Is Germany Becoming Orwell’s 1984?).

When will this fully be in place?

After the Gospel of the Kingdom of God has sufficiently reached the world the end comes and the Beast will rise up (Matthew 24:14-22, Revelation 13, 17:12-13).

We are getting closer to that day.

It looks like quantum supercomputers are another step towards 666.

Related Items:

Is Germany Becoming Orwell’s 1984?
In his novel 1984 George Orwell described a totalitarian government that controlled all aspects of human existence. “Big Brother is Watching You” was the reality of this government. George Orwell introduced “Newspeak”, a language that was designed to limit the range of thought. He also defined his concept of “Doublethink”, which is the ability to hold two contradictory beliefs at once and yet accept both as reality. In 1984, the Thought Police were tasked with actually controlling what people can even think, let alone what they can say! The year 1984 has come and gone, but has George Orwell’s “Big Brother” government finally started to arrive? Are many governments in the world today adopting many of the ideas and tactics outlined in George Orwell’s book? If so, what government or governments are leading the way? Has Germany just put in its own version of “Thought Police”? Think about this: there is now a German Government initiative to report your family for wrong think. Dr. Thiel quotes Luke 12:53 in our Bible and then goes on to further shine the light of Biblical prophecy on the answers to these ‘666’ matters. Answers from the verses of the Bible that bring prophecy to life are in this video.

Preparing for the ‘Short Work’ and The Famine of the Word
What is the ‘short work’ of Romans 9:28? Who is preparing for it? Here is a link to a related video sermon titled: The Short Work. Here is a link to another: Preparing to Instruct Many.

Internet Censorship and Prophecy
Are concerns about internet censorship limited to nations such as Russia, China, Iran, and North Korea? But what about the USA, Canada, and Germany? What about the European Union? What about internet media companies such as Facebook, Google, YouTube, or email services like MailChimp? Has the attempt to control information been made by various ones over the centuries? Was the New Testament affected by it? What about the church throughout the centuries? Has the Bible already been partially censored? Which Bible prophecies point to coming Internet censorship? What about the Book of Amos? What about the coming 666 Beast and final Antichrist? Is there anything that can be done about this? Should Philadelphian Christians be working on anything now? Will preaching the Gospel of the Kingdom of God vs. a highly media-supported alternative lead to a ‘famine of the word’? More internet censorship is coming as various statements in the Bible support.

Europa, the Beast, and Revelation
Where did Europe get its name? What might Europe have to do with the Book of Revelation? What about “the Beast”? Is an emerging European power “the daughter of Babylon”? What is ahead for Europe? Here are links to related videos: European history and the Bible, Europe In Prophecy, The End of European Babylon, and Can You Prove that the Beast to Come is European? Here is a link to a related sermon in the Spanish language: El Fin de la Babilonia Europea.

The European Union and the Seven Kings of Revelation 17
Could the European Union be the sixth king that now is, but is not? Here is a link to the related sermon video: European Union & 7 Kings of Revelation 17:10.

Must the Ten Kings of Revelation 17:12 Rule over Ten Currently Existing Nations?
Some claim that these passages refer to a gathering of 10 currently existing nations together, while one group teaches that this is referring to 11 nations getting together. Is that what Revelation 17:12-13 refers to? The ramifications of misunderstanding this are enormous. Here is a link to a related sermon in the Spanish language: ¿Deben los Diez Reyes gobernar sobre diez naciones? A related sermon in the English language is titled: Ten Kings of Revelation and the Great Tribulation.

European Technology and the Beast of Revelation
Will the coming European Beast power use and develop technology that will result in the taking over of the USA and its Anglo-Saxon allies? Is this possible? What does the Bible teach? Here is a related YouTube video: Military Technology and the Beast of Revelation.

Persecutions by Church and State
This article documents some that have occurred against those associated with the COGs and some prophesied to occur. Will those with the cross be the persecutors or the persecuted? This article has the shocking answer. There are also three video sermons you can watch: Cancel Culture and Christian Persecution, The Coming Persecution of the Church, and Christian Persecution from the Beast. Here is information in the Spanish language: Persecuciones de la Iglesia y el Estado.

Orwell’s 1984 by 2024?
In 1949, the late George Orwell wrote a disturbing book, “Nineteen Eighty-Four,” about a totalitarian government. Despite laws that are supposed to protect freedom of speech and religion, we are seeing governments taking steps consistent with those that George Orwell warned against. We are also seeing this in the media, academia, and in private companies like Google, Facebook, and Twitter. With the advent of technology, totalitarianism beyond what Orwell wrote is possible. Does the Bible teach the coming of a totalitarian state similar to George Orwell’s? What about the Antichrist and 666? Will things get worse? What is the solution? Dr. Thiel answers these questions and more in this video.

The Mark of Antichrist
What is the mark of Antichrist? What have various ones claimed? Here is a link to a related sermon: What is the ‘Mark of Antichrist’?

Mark of the Beast
What is the mark of the Beast? Who is the Beast? What have various ones claimed the mark is? What is the ‘Mark of the Beast’?

The Large Hadron Collider has Military Potential
Some say this European project is only peaceful. So why is it working on capturing antimatter? Here is a link to a related video: Could the Large Hadron Collider lead to destruction?

Two Horned Beast of Revelation and 666
Who is 666? This article explains how the COG views this, and compares this view with that of Ellen White. Here is a link to a prophetic video: Six Financial Steps Leading to 666?



#the future is quantum #ai artwork #ai artist #artists on tumblr #deepsouth #ai #machinelearning #deep learning #neuroscience #neuromorphic computing #exascale #supercomputer #technology #technology news


Record-breaking run on Frontier sets new bar for simulating the universe in exascale era

The universe just got a whole lot bigger—or at least in the world of computer simulations, that is. In early November, researchers at the Department of Energy's Argonne National Laboratory used the fastest supercomputer on the planet to run the largest astrophysical simulation of the universe ever conducted.

The achievement was made using the Frontier supercomputer at Oak Ridge National Laboratory. The calculations set a new benchmark for cosmological hydrodynamics simulations and provide a new foundation for simulating the physics of atomic matter and dark matter simultaneously. The simulation size corresponds to surveys undertaken by large telescope observatories, a feat that until now has not been possible at this scale.

"There are two components in the universe: dark matter—which as far as we know, only interacts gravitationally—and conventional matter, or atomic matter," said project lead Salman Habib, division director for Computational Sciences at Argonne.

"So, if we want to know what the universe is up to, we need to simulate both of these things: gravity as well as all the other physics including hot gas, and the formation of stars, black holes and galaxies," he said. "The astrophysical 'kitchen sink' so to speak. These simulations are what we call cosmological hydrodynamics simulations."

Not surprisingly, the cosmological hydrodynamics simulations are significantly more computationally expensive and much more difficult to carry out compared to simulations of an expanding universe that only involve the effects of gravity.

"For example, if we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you're talking about looking at huge chunks of time—billions of years of expansion," Habib said. "Until recently, we couldn't even imagine doing such a large simulation like that except in the gravity-only approximation."

The supercomputer code used in the simulation is called HACC, short for Hardware/Hybrid Accelerated Cosmology Code. It was developed around 15 years ago for petascale machines. In 2012 and 2013, HACC was a finalist for the Association for Computing Machinery's Gordon Bell Prize in computing.

Later, HACC was significantly upgraded as part of ExaSky, a special project led by Habib within the Exascale Computing Project, or ECP. The project brought together thousands of experts to develop advanced scientific applications and software tools for the upcoming wave of exascale-class supercomputers capable of performing more than a quintillion, or a billion-billion, calculations per second.

As part of ExaSky, the HACC research team spent the last seven years adding new capabilities to the code and re-optimizing it to run on exascale machines powered by GPU accelerators. A requirement of the ECP was for codes to run approximately 50 times faster than they could before on Titan, the fastest supercomputer at the time of the ECP's launch. Running on the exascale-class Frontier supercomputer, HACC was nearly 300 times faster than the reference run.

The novel simulation achieved its record-breaking performance by using approximately 9,000 of Frontier's compute nodes, powered by AMD Instinct MI250X GPUs. Frontier is located at ORNL's Oak Ridge Leadership Computing Facility, or OLCF.
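The speed and scale figures above can be put side by side with a little arithmetic. The two ECP numbers come from the text. The Frontier node count (9,408) and the four MI250X modules per node are assumptions taken from Frontier's publicly reported configuration, not from this article, so treat the derived percentages and GPU count as approximate.

```python
required_speedup = 50      # ECP target relative to the Titan reference run
achieved_speedup = 300     # "nearly 300 times" on Frontier (approximate)
nodes_used = 9_000         # "approximately 9,000" Frontier compute nodes
frontier_nodes = 9_408     # assumption: Frontier's publicly reported node count
gpus_per_node = 4          # assumption: four MI250X modules per Frontier node

print(f"Achieved / required speedup: {achieved_speedup / required_speedup:.0f}x")
print(f"Share of Frontier's nodes: {nodes_used / frontier_nodes:.0%}")
print(f"Approximate MI250X count: {nodes_used * gpus_per_node:,}")
```

Under these assumptions, HACC beat the ECP requirement by about six times while using roughly 96 percent of the machine, or on the order of 36,000 MI250X GPUs.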

IMAGE: A small sample from the Frontier simulations reveals the evolution of the expanding universe in a region containing a massive cluster of galaxies from billions of years ago to the present day (left). Red areas show hotter gases, with temperatures reaching 100 million kelvin or more. Zooming in (right), star tracer particles track the formation of galaxies and their movement over time. Credit: Argonne National Laboratory, U.S. Dept. of Energy


In early November 2024, researchers at the Department of Energy's Argonne National Laboratory used Frontier, the fastest supercomputer on the planet, to run the largest astrophysical simulation of the universe ever conducted. This movie shows the formation of the largest object in the Frontier-E simulation. The left panel shows a 64x64x76 Mpc/h subvolume of the simulation (roughly 1e-5 the full simulation volume) around the large object, with the right panel providing a closer look. In each panel, we show the gas density field colored by its temperature. In the right panel, the white circles show star particles and the open black circles show AGN particles. Credit: Argonne National Laboratory, U.S. Dept. of Energy
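The caption's numbers imply a rough size for the whole simulation box. The sketch below multiplies out the 64x64x76 Mpc/h subvolume and divides by the stated "roughly 1e-5" fraction. Because that fraction is approximate, and the real box need not be a cube, the "equivalent cube side" is only an order-of-magnitude illustration, not the published box size.

```python
sub_x, sub_y, sub_z = 64, 64, 76        # Mpc/h, from the caption
sub_volume = sub_x * sub_y * sub_z      # (Mpc/h)^3
fraction = 1e-5                         # "roughly 1e-5" of the full volume

implied_full_volume = sub_volume / fraction
# Side length of a cube with the same volume (illustrative only).
implied_cube_side = implied_full_volume ** (1 / 3)

print(f"Subvolume: {sub_volume:,} (Mpc/h)^3")
print(f"Implied full volume: {implied_full_volume:.2e} (Mpc/h)^3")
print(f"Equivalent cube side: {implied_cube_side:,.0f} Mpc/h")
```

The subvolume is 311,296 (Mpc/h)^3, which implies a full volume of about 3.1 x 10^10 (Mpc/h)^3, comparable to a cube a few thousand Mpc/h on a side.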
