#data visualization tools examples

Text

6 Best Data Visualization Software for Exploring Data | Data Visualization Tools

In this video, we'll be taking a look at 6 of the best data visualization tools for exploring data. These tools allow you to explore your data in a way that is both intuitive and visually stunning. If you're looking for ways to improve your business intelligence skills, then you need to check out these data visualization tools.

By using these tools, you'll be able to make sense of your data and make important decisions based on it. So put on your data visualization hat and let's take a look at some of the best options out there!

#data visualization tools 2022#data visualization tools#data visualization#top bi tools 2022#data visualization tools examples#business intelligence tools#business intelligence#top 5 bi tools#tableau#power bi#sisense#business analytics#adaptive insights#bi for beginners#bi tools review#tools for business intelligence#how to choose a bi tool#bi platforms#business intelligence tutorial#best data visualization tools#data analytics#data visualisation


Text

Example of Bad Data Visualization

This is a clear example of bad data visualization. The issue here is with the colors used to show the wickets and the average. Both are shown in different shades of red on the same bar, making it hard to tell them apart.

#data visualization#data visualization examples#bad data visualization#data visualization tools#business success#technology


Text

Unveiling the Magic of D3.js: Empowering Modern Data Visualization

Explore the rapid expansion of the digital universe in 2023. Discover the power of D3.js for cutting-edge data visualization as global data production soars.


Text

https://ezinsights.ai/effective-data-visualization-examples-and-techniques/

#effective data visualization examples#guide to data visualization#how to data visualization#types of data visualization techniques#why use data visualization tools#data visualization tips and tricks


Text

Data storytelling is the ability to convey important information and significant insights from data using a combination of textual and visual narrative techniques. It's a skill that helps provide context and deeper understanding around metrics in a document or dashboard, inspires your viewers to act, and aids the decision-making process. Think of it as another effective way to share how your business is growing, and to motivate others to care about an innovation, when the raw numbers alone can't. For more, please visit the website.

#augmented analytics#what is analytics#tools data visualization#data visualization examples#germany vs brazil#data storytelling


Photo

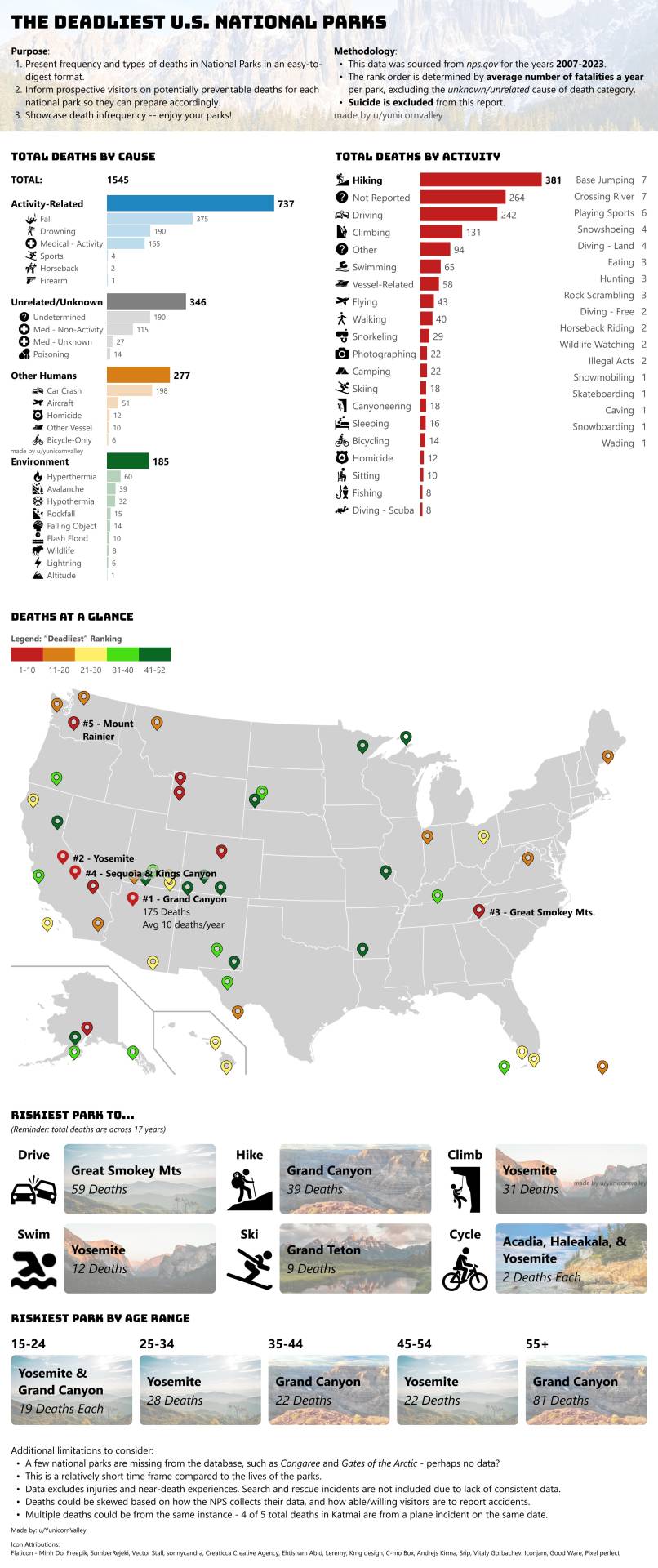

A Visual Guide of the Deadliest National Parks.

by YunicornValley

Hi all. I made this visual guide as a response to “The Safest (and Most Dangerous) National Parks” guide posted a couple days ago.

I enjoy the idea and don’t want to rag on their obvious enthusiasm and thoughtfulness. However, I found their scoring methodology subjective, and it could mislead potential visitors into thinking parks are more dangerous than they are.

For example, they score up to 4 points per type of dangerous wildlife, but there have only been 8 confirmed wildlife deaths across all National Parks in the last 17 years. They score more points for good cell service and wifi, despite a higher score meaning a more dangerous park. And they sourced pennlive.com for their Search-and-Rescue data – this is a local news website, not a data source. Some National Parks do provide SAR numbers, but not for a consistent time range, and not across all parks.

Therefore, I wanted to provide a visual using only the data from the Mortality and Annual Visitation spreadsheets provided by the NPS. I appreciate the previous visual was trying to fill in the gaps for injuries, near-death experiences, and unreported data, and we’ll never know those true numbers.

Although the numbers may be underreported, the mortality data does give us a good idea of where to be careful. Falls occur most often in canyons and high-elevation hiking areas. Drowning can occur anywhere people swim or cross rivers. And driving, much like day-to-day life, is always a dangerous activity.

I hope you’ll find this visual entertaining, informative, but also optimistic. 1,545 people have died in these parks across 17 years. The number of U.S. car accident fatalities in 2022 alone is 45,514.

We should always be prepared, research the weather and environment, and practice activities safely. But please don’t be anxious and enjoy your parks!

Tools used: Excel and Figma

Sources:

Other post: https://www.reddit.com/r/coolguides/comments/1ddlozt/a_cool_guide_to_the_most_and_least_dangerous_us/?utm_source=share&utm_medium=web3x&utm_name=web3xcss&utm_term=1&utm_content=share_button, https://www.reddit.com/r/NationalPark/comments/1ddoxhn/the_safest_and_most_dangerous_national_parks_in/

NPS mortality data: https://www.nps.gov/aboutus/mortality-data.htm

NPS visitation numbers data: https://www.nps.gov/aboutus/visitation-numbers.htm

Car Crash stats: https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/813560


Note

Hello! First, I wanted to say thank you for your post about updating software and such. I really appreciated your perspective as someone with ADHD. The way you described your experiences with software frustration was IDENTICAL to my experience, so your post made a lot of sense to me.

Second, (and I hope my question isn't bothering you lol) would you mind explaining why it's important to update/adopt the new software? Like, why isn't there an option that doesn't involve constantly adopting new things? I understand why they'd need to fix stuff like functional bugs/make it compatible with new tech, but is it really necessary to change the user side of things as well?

Sorry if those are stupid questions or they're A Lot for a tumblr rando to ask, I'd just really like to understand because I think it would make it easier to get myself to adopt new stuff if I understand why it's necessary, and the other folks I know that know about computers don't really seem to understand the experience.

Thank you so much again for sharing your wisdom!!

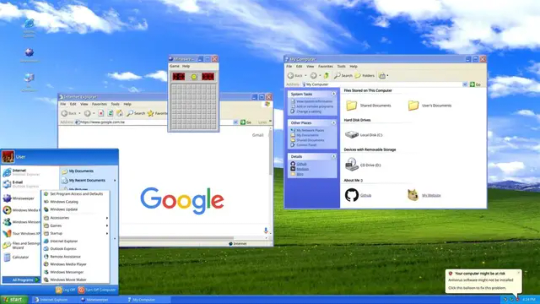

A huge part of it is changing technologies and changing norms; I brought up Windows 8 in that other post and Win8 is a *great* example of user experience changing to match hardware, just in a situation that was an enormous mismatch with the market.

Win8's much-beloathed tiles came about because Microsoft seemed to be anticipating a massive pivot to tablet PCs in nearly all applications. The welcome screen was designed to be friendly to people who were using handheld touchscreens who could tap through various options, and it was meant to require more scrolling and less use of a keyboard.

But most people who the operating system went out to *didn't* have touchscreen tablets or laptops, they had a desktop computer with a mouse and a keyboard.

When that was released, it was Microsoft attempting to keep up with (or anticipate) market trends - they wanted something that was like "the iPad for Microsoft" so Windows 8 was meant to go with Microsoft Surface tablets.

We spent the first month of Win8's launch making it look like Windows 7 for our customers.

You can see the same thing with the centered taskbar on Windows 11; that's very clearly supposed to mimic the dock on Apple computers (only you can't pin it anywhere but the bottom of the screen, which sucks).

Some of the visual changes are just trends and various companies trying to keep up with one another.

With software like Adobe I think it's probably based on customer data. The tool layout and the menu dropdowns are likely based on what people are actually looking for, and change based on what other tools people are using. That's likely true for most programs you use - the menu bar at the top of the screen in Word is populated with the options that people use the most; if a function you used to click on all the time is now buried, there's a possibility that people use it less these days for any number of reasons. (I'm currently being driven mildly insane by Teams moving the "attach file" button under a "more" menu instead of as an icon next to the "send message" button, and what this tells me is either that more users are putting emojis in their messages than attachments, or Microsoft WANTS people to put more emojis than attachments in their messages).

But focusing on the operating system, since that's the big one:

The thing about OSs is that you interact with them so frequently that any little change seems massive and you get REALLY frustrated when you have to deal with that, but version-to-version most OSs don't change all that much visually and they also don't get released all that frequently. I've been working with windows machines for twelve years and in that time the only OSs that Microsoft has released were 8, 10, and 11. That's only about one OS every four years, which just is not that many. There was a big visual change in the interface between 7 and 8 (and 8 and 8.1, which is more of a 'panicked backing away' than a full release), but otherwise, realistically, Windows 11 still looks a lot like XP.

The second one is a screenshot of my actual computer. The only change I've made to the display is to pin the taskbar to the left side instead of keeping it centered and to fuck around a bit with the colors in the display customization. I haven't added any plugins or tools to get it to look different.

This is actually a pretty good demonstration of things changing based on user behavior too - XP didn't come with a search field in the task bar or the start menu, but later versions of Windows OSs did, because users had gotten used to searching things more in their phones and browsers, so then they learned to search things on their computers.

There are definitely nefarious reasons that software manufacturers change their interfaces. Microsoft has included ads in home versions of their OS and pushed searches through the Microsoft store since Windows 10, as one example. That's shitty and I think it's worthwhile to find the time to shut that down (and to kill various assistants and background tools and stop a lot of stuff that runs at startup).

But if you didn't have any changes, you wouldn't have any changes. I think it's handy to have a search field in the taskbar. I find "settings" (which is newer than control panel) easier to navigate than "control panel." Some of the stuff that got added over time is *good* from a user perspective - you can see that there's a little stopwatch pinned at the bottom of my screen; that's a tool I use daily that wasn't included in previous versions of the OS. I'm glad it got added, even if I'm kind of bummed that my Windows OS doesn't come with Spider Solitaire anymore.

One thing that's helpful to think about when considering software is that nobody *wants* to make clunky, unusable software. People want their software to run well, with few problems, and they want users to like it so that they don't call corporate and kick up a fuss.

When you see these kinds of changes to the user experience, it often reflects something that *you* may not want, but that is desirable to a *LOT* of other people. The primary example I can think of here is trackpad scrolling direction; at some point it became common for trackpads to scroll in the opposite direction that they used to; now the default direction is the one that feels wrong to me, because I grew up scrolling with a mouse, not a screen. People who grew up scrolling on a screen seem to feel that the new direction is a lot more intuitive, so it's the default. Thankfully, that's a setting that's easy to change, so it's a change that I make every time I come across it, but the change was made for a sensible reason, even if that reason was opaque to me at the time I stumbled across it and continues to irritate me to this day.

I don't know. I don't want to defend Windows all that much here because I fucking hate Microsoft and definitely prefer using Linux when I'm not at work or using programs that I don't have on Linux. But the thing is that you'll see changes with Linux releases as well.

I wouldn't mind finding a tool that made my desktop look 100% like Windows 95, that would be fun. But we'd probably all be really frustrated if there hadn't been any interface changes since MS-DOS (and people have DEFINITELY been complaining about UX changes at least since then).

Like, I talk about this in terms of backward compatibility sometimes. A lot of people are frustrated that their old computers can't run new software well, and that new computers use so many resources. But the flipside of that is that pretty much nobody wants mobile internet to work the way that it did in 2004 or computers to act the way they did in 1984.

Like. People don't think about it much these days but the "windows" of the Windows Operating system represented a massive change to how people interacted with their computers that plenty of people hated and found unintuitive.

(also take some time to think about the little changes that have happened that you've appreciated or maybe didn't even notice. I used to hate the squiggly line under misspelled words but now I see the utility. Predictive text seems like new technology to me but it's really handy for a lot of people. Right clicking is a UX innovation. Sometimes you have to take the centered task bar in exchange for the built-in timer deck; sometimes you have to lose color-coded files in exchange for a right click.)


Text

Welcome to the Omen Archive!

We're pleased to see you. You may note the “pardon our moon dust” banner at the top of our site. We’ve been weighing site readiness against a desire to launch, and finally felt we had reached the tipping point. This doesn’t, however, mean that things are complete: there is a long list of projects on the docket. Some are below.

We intend, in time, to add:

A single campaign-at-a-glance page

Character cards (previous levels)

Character level-up summaries for each level

Character damage taken

Fearne’s Wild Shapes

Chetney’s Hybrid Transformations

Chetney’s toys

Laudna’s Hunger of the Shadow

Imogen’s dreams

Laudna’s “capable”s

Ruidus flares

FCG’s baked goods

Delilah appearances

Campaign calendar

Dynamic character/party inventories

Visited locations list

Improved ability to sort existing lists and tables

Additional data visualizations (graphs, etc.)

Export data to machine readable formats, such as Excel or JSON

Many of these projects are currently in progress.

We have recently added:

Character cards (current levels)

FCG berserks

If there are additional stats you’d like to see collected, please email us at [email protected], as well as (if relevant) some notes about how you tend to use that type of data (for example, running some analysis of your own, cross-checking lore, researching fanfiction, etc.). We’re always happy to add new things to the list where we can.

Keep visiting the site for updates, and keep an eye on our social media for announcements of additions and changes to our stats and interface.

-

ETA: We're happy to see people getting excited about discovering new data via our site, but there's one important detail we want to be sure to clarify. Abundant credit is due to the team at CritRoleStats for not only lending us all of the pre-Episode 82 data on our site, but also for providing a partial foundation for some of the above categories where we hope to expand. While some of the data we eventually add to the site will be new from the ground up, thus far a lot of our work has been providing new interfaces and visualizations for engaging with the work the CritRoleStats team has already done!

ETA: This post is being edited to reflect progress in adding new data and tools to the site.


Text

An App Does Not a Master Naturalist Make

Originally posted on my website at https://rebeccalexa.com/app-not-master-naturalist/ - I had written this as an op-ed and sent it to WaPo, but they had no interest, so you get to read it here instead!

I have mixed feelings about Michael Coren’s April 25 Washington Post article, “These 4 free apps can help you identify every flower, plant and tree around you.” His ebullience at exploring some of the diverse ecological community around him made me grin, because I know exactly what it feels like. There’s nothing like that sense of wonder and belonging when you go outside and are surrounded by neighbors of many species, instead of a monotonous wall of green, and that is a big part of what led me to become a Master Naturalist.

When I moved from the Midwest to the Pacific Northwest in 2006, I felt lost because I didn’t recognize many of the animals or plants in my new home. So I set about systematically learning every species that crossed my path. Later, I began teaching community-level classes on nature identification to help other people learn skills and tools for exploring their local flora, fauna, and fungi.

Threeleaf foamflower (Tiarella trifoliata)

Let me be clear: I love apps. I use Merlin routinely to identify unknown bird songs, and iNaturalist is my absolute favorite ID app, period. But these tools are not 100% flawless.

For one thing, they’re only as good as the data you provide them. iNaturalist’s algorithms, for example, rely on a combination of photos (visual data), date and time (seasonal data), and GPS coordinates (location data) to make initial identification suggestions. These algorithms sift through the 135-million-plus observations uploaded to date, finding observations that have similar visual, seasonal, and location data to yours.

There have been many times over the years where iNaturalist isn’t so sure. Take this photo of a rather nondescript clump of grass. Without seed heads to provide extra clues, the algorithms offer an unrelated assortment of species, with only one grass. I’ve gotten that “We’re not confident enough to make a recommendation” message countless times over my years of using the app, often suggesting species that are clearly not what I’m looking at in real life.

Because iNaturalist usually offers up multiple options, you have to decide which one is the best fit. Sometimes it’s the first species listed, but sometimes it’s not. This becomes trickier if all the species that are suggested look alike. Tree-of-Heaven (Ailanthus altissima), smooth sumac (Rhus glabra) and eastern black walnut (Juglans nigra) all have pinnately compound, lanceolate leaves, and young plants of these three species can appear quite similar. If all you know how to do is point and click your phone’s camera, you aren’t going to be able to confidently choose which of the three plants is the right one.

Coren correctly points out that both iNaturalist and Pl@ntNet do offer more information on suggested species—if people are willing to take the time to look. Too many assume ID apps will give an easy, instant answer. In watching my students use the app in person, I see almost everyone just pick the first species in the list. It’s not until I demonstrate how to access the additional content for each species offered that anyone thinks to question the algorithms’ suggestions.

While iNaturalist is one of the tools I incorporate into my classes, I emphasize that apps in general are not to be used alone, but in conjunction with field guides, websites, and other resources. Nature identification, even on a casual level, requires critical thinking and observation skills if you want to make sure you’re correct. Coren’s assertion that you only need a few apps demonstrates a misunderstanding of a skill that takes time and practice to develop properly—and accurately.

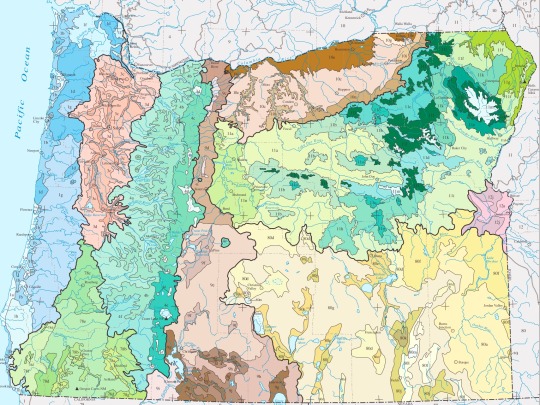

Speaking of oversimplification, apps are not a Master Naturalist in your pocket, and that statement, while meant as a compliment, does a disservice to the thousands of Master Naturalists across the country. While the training curricula vary from state to state, they are generally based in learning how organisms interact within habitats and ecosystems, often drawing on a synthesis of biology, geology, hydrology, climatology, and other natural sciences. A Master Naturalist could tell you not only what species you’re looking at, but how it fits into this ecosystem, how its adaptations are different from a related species in another ecoregion, and so forth.

Map showing Level III and IV ecoregions of Oregon, the basis of my training as an Oregon Master Naturalist.

In spite of my criticisms, I do think that Coren was absolutely onto something when he described the effects of using the apps. Seeing the landscape around you turn from a green background to a vibrant community of living beings makes going outside a more exciting, personal experience. I and my fellow nature nerds share an intense curiosity about the world around us. And that passion, more than any app or other tool, is fundamental to becoming a citizen naturalist, Master or otherwise.

Did you enjoy this post? Consider taking one of my online foraging and natural history classes or hiring me for a guided nature tour, checking out my other articles, or picking up a paperback or ebook I’ve written! You can even buy me a coffee here!

#iNaturalist#plant apps#Seek#Merlin#nature#wildlife#plant identification#apps#botany#biology#science#scicomm#science communication#Google Lens#naturalist#Master Naturalist#conservation#environment


Text

Regardless of what companies and investors may say, artificial intelligence is not actually intelligent in the way most humans would understand it. To generate words and images, AI tools are trained on large databases of training data that is often scraped off the open web in unimaginably large quantities, no matter who owns it or what biases come along with it.

When a user then prompts ChatGPT or DALL-E to spit out some text or visuals, the tools aren’t thinking about the best way to represent those prompts because they don’t have that ability. They’re comparing the terms they’re presented with the patterns they formed from all the data that was ingested to train their models, then trying to assemble elements from that data to reflect what the user is looking for. In short, you can think of it like a more advanced form of autocorrect on your phone’s keyboard, predicting what you might want to say next based on what you’ve already written and typed out in the past.

If it’s not clear, that means these systems don’t create; they plagiarize. Unlike a human artist, they can’t develop a new artistic style or literary genre. They can only take what already exists and put elements of it together in a way that responds to the prompts they’re given. There’s good reason to be concerned about what that will mean for the art we consume, and the richness of the human experience.

[...]

AI tools will not eliminate human artists, regardless of what corporate executives might hope. But it will allow companies to churn out passable slop to serve up to audiences at a lower cost. In that way, it allows a further deskilling of art and devaluing of artists because instead of needing a human at the center of the creative process, companies can try to get computers to churn out something good enough, then bring in a human with no creative control and a lower fee to fix it up. As actor Keanu Reeves put it to Wired earlier this year, “there’s a corporatocracy behind [AI] that’s looking to control those things. … The people who are paying you for your art would rather not pay you. They’re actively seeking a way around you, because artists are tricky.”

To some degree, this is already happening. Actors and writers in Hollywood are on strike together for the first time in decades. That’s happening not just because of AI, but because of how the movie studios and streaming companies took advantage of the shift to digital technologies to completely remake the business model so workers would be paid less and have less creative input. Companies have already been using AI tools to assess scripts, and that’s one example of how further consolidation paired with new technologies is leading companies to prioritize “content” over art. The actors and writers worry that if they don’t fight now, those trends will continue, and that won’t just be bad for them, but for the rest of us too.


Text

JavaScript Fundamentals

I have recently completed a course that extensively covered the foundational principles of JavaScript, and I'm here to provide you with a concise overview. This post will enable you to grasp the fundamental concepts without the need to enroll in the course.

Prerequisites: Fundamental HTML Comprehension

Before delving into JavaScript, it is imperative to possess a basic understanding of HTML. Knowledge of CSS, while beneficial, is not mandatory, as it primarily pertains to the visual aspects of web pages.

Manipulating HTML Text with JavaScript

When it comes to modifying text using JavaScript, the innerHTML property is the go-to tool. Let's break down the process step by step:

Initiate the process by selecting the HTML element whose text you intend to modify. This selection can be accomplished by employing various DOM (Document Object Model) element selection methods offered by JavaScript (I'll talk about them in a second).

Optionally, you can store the selected element in a variable (we'll get into variables shortly).

Assign your desired content to the element's innerHTML property to replace the existing text.

Element Selection: IDs or Classes

You have the opportunity to enhance your element selection by assigning either an ID or a class:

Assigning an ID:

To uniquely identify an element, the .getElementById() function is your go-to choice. Here's an example in HTML and JavaScript:

HTML:

<button id="btnSearch">Search</button>

JavaScript:

document.getElementById("btnSearch").innerHTML = "Not working";

This code snippet will alter the text within the button from "Search" to "Not working."
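The three steps above can be sketched as a small reusable helper. This is only an illustration: the name `setButtonLabel` is hypothetical, and `textContent` is used in place of `innerHTML` on the assumption that only plain text is being set. The element is passed in as a parameter so the sketch does not depend on any particular page.

```javascript
// Hypothetical helper: replaces the visible text of an element.
// textContent treats the value as plain text, so any markup in `label`
// is not parsed as HTML (unlike innerHTML).
function setButtonLabel(element, label) {
  element.textContent = label;
  return element;
}

// In a browser you would pass a real element, for example:
// setButtonLabel(document.getElementById("btnSearch"), "Not working");

// Outside a browser, a plain object can stand in for the element.
const fakeButton = { textContent: "Search" };
setButtonLabel(fakeButton, "Not working");
console.log(fakeButton.textContent); // prints "Not working"
```

Storing the element in a variable first (step two) is what makes a helper like this possible: the same element can be passed around and updated more than once.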

Assigning a Class:

For broader selections of elements, you can assign a class and use the .querySelector() method with a class selector such as ".myClass". Keep in mind that .querySelector() returns only the first matching element; to select every matching element, use .querySelectorAll(). In contrast, .getElementById() always targets the single element with the given ID and is very commonly used.
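Here is a rough sketch of working with every element that shares a class. `querySelectorAll` is the standard DOM method that returns all matches, while `querySelector` returns only the first one. The helper name `relabelAll` and the `.result` class are made up for illustration, and `root` stands in for `document`.

```javascript
// Hypothetical helper: sets the text of every element matching a selector.
// `root` is anything with a querySelectorAll method, normally `document`.
function relabelAll(root, selector, text) {
  const matches = root.querySelectorAll(selector);
  matches.forEach((element) => {
    element.textContent = text;
  });
  return matches.length;
}

// Browser usage (assumes elements with class="result" exist on the page):
// relabelAll(document, ".result", "Loading");

// A stand-in for `document` so the sketch also runs outside a browser.
const fakeItems = [{ textContent: "a" }, { textContent: "b" }];
const fakeRoot = { querySelectorAll: () => fakeItems };
const changedCount = relabelAll(fakeRoot, ".result", "Loading");
console.log(changedCount); // prints 2
```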

Variables

Let's keep it simple: What's a variable? Well, think of it as a container where you can put different things—these things could be numbers, words, characters, or even true/false values. These various types of stuff that you can store in a variable are called DATA TYPES.

Now, some programming languages are pretty strict about mentioning these data types. Take C and C++, for instance; they're what we call "statically typed" languages, and every variable's data type has to be declared up front.

But here's where JavaScript stands out: When you create a variable in JavaScript, you don't have to specify its data type or anything like that. JavaScript is pretty laid-back when it comes to data types.

So, how do you make a variable in JavaScript?

There are three main keywords you need to know: var, let, and const.

But if you're just starting out, here's what you need to know:

const: Use this when the variable should never be reassigned after you create it. It's like a constant, as the name suggests.

var and let: Use these when you plan to change the value stored in the variable as your program runs.

Note that var is rarely used nowadays; let is preferred because it is block-scoped.

Check this out:

let Variable1 = 3;

var Variable2 = "This is a string";

const Variable3 = true;

Notice how we can store all sorts of stuff without worrying about declaring their types in JavaScript. It's one of the reasons JavaScript is a popular choice for beginners.
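To make the difference between let and const concrete, here is a small sketch (the variable names are invented for the example). It shows reassigning a let variable, the error you get when reassigning a const, and the same variable holding values of different types.

```javascript
let counter = 3;          // let: the value may be reassigned later
counter = counter + 1;

const siteName = "Demo";  // const: reassignment is not allowed

let reassignError = null;
try {
  // Assigning to a const binding throws a TypeError at runtime.
  siteName = "Other";
} catch (error) {
  reassignError = error;
}

// The same variable can hold values of different types over time.
let anything = 42;
const firstType = typeof anything;   // "number"
anything = "now a string";
const secondType = typeof anything;  // "string"

console.log(counter, reassignError instanceof TypeError, firstType, secondType);
// prints: 4 true number string
```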

Arrays

Arrays are basically just a group of values stored in one container (and what is a container? A variable, so an array is also just a variable). Again, since JavaScript is relaxed about data types, it is not an error to store values of different data types in the same array.

For example:

let myArray = [1, 2, 4, "Name"];
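As a quick sketch of what you can do with such an array (the name `mixed` is just for illustration): read items by index, check the length, append another value, and inspect the type of each item.

```javascript
const mixed = [1, 2, 4, "Name"];

const firstItem = mixed[0];                 // indexes start at 0
const lastItem = mixed[mixed.length - 1];   // last index is length - 1

mixed.push(true);                           // append a value of yet another type

// typeof reports the type of each stored value.
const itemTypes = mixed.map((item) => typeof item);

console.log(firstItem, lastItem, mixed.length, itemTypes.join(","));
// prints: 1 Name 5 number,number,number,string,boolean
```

Note that `const` only stops the variable from being reassigned; the array's contents can still change, which is why `push` works here.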

Objects in JavaScript

Objects play a significant role, especially in the world of OOP (object-oriented programming), which we'll talk about in another post. For now, let's focus on understanding what objects are and how they mirror real-world objects.

In our everyday world, objects possess characteristics or properties. Take a car, for instance; it boasts attributes like its color, speed rate, and make.

So, how do we represent a car in JavaScript? A regular variable won't quite cut it, and neither will an array. The answer lies in using an object.

const Car = {

color: "red",

speedRate: "200km",

make: "Range Rover"

};

In this example, we've encapsulated the car's properties within an object called Car. This structure is not only intuitive but also aligns with how real-world objects are conceptualized and represented in JavaScript.
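Once the object exists, its properties can be read and changed. A minimal sketch, using a lowercase `car` variable and a made-up `year` property that are not part of the example above:

```javascript
const car = {
  color: "red",
  speedRate: "200km",
  make: "Range Rover",
};

const carMake = car.make;          // dot notation
const carColor = car["color"];     // bracket notation, same kind of lookup

// const prevents reassigning `car`, but its properties can still change.
car.color = "blue";
car.year = 2020;                   // hypothetical extra property added later

console.log(carMake, carColor, car.color, Object.keys(car).length);
// prints: Range Rover red blue 4
```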

Variable Scope

There are three variable scopes: global scope, local (block) scope, and function scope. Let's break it down in plain terms.

Global Scope: Think of global scope as the wild west of variables. When you declare a variable here, it's like planting a flag that says, "I'm available everywhere in the code!" No need for any special enclosures or curly braces.

Local Scope: Picture local scope as a cozy room with its own rules. When you create a variable inside a pair of curly braces, like this:

//Not here

{ const Variable1 = true;

//Variable1 can only be used here

}

//Neither here

Variable1 becomes a room-bound secret. You can't use it anywhere else in the code. (This applies to variables declared with let or const; var ignores curly braces unless they belong to a function.)

Function Scope: When you declare a variable inside a function (don't worry, we'll cover functions soon), it's a member of an exclusive group. This means you can only name-drop it within that function.

So, variable scope is all about where you place your variables and where they're allowed to be used.
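The three scopes can be seen side by side in one small sketch. All names here are invented for the example; `typeof` is used on the out-of-scope names because it reports "undefined" for a name that is not visible instead of throwing an error.

```javascript
const appName = "demo"; // global scope: visible everywhere below

function describeScopes() {
  const insideFunction = "function-scoped"; // only visible in this function

  if (true) {
    let blockLet = "block";  // let/const stay inside these braces
    var functionVar = "var"; // var ignores the block and belongs to the function
  }

  return {
    seesGlobal: appName,
    seesVar: functionVar,
    seesBlockLet: typeof blockLet, // blockLet is out of scope here
    insideFunction,
  };
}

const scopes = describeScopes();
console.log(scopes.seesGlobal, scopes.seesVar, scopes.seesBlockLet, typeof insideFunction);
// prints: demo var undefined undefined
```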

Adding in user input

To capture user input in JavaScript, you can use various methods and techniques depending on the context, such as web forms, text fields, or command-line interfaces. We’ll only talk for now about HTML forms.

HTML Forms:

You can create HTML forms using the <form> element and capture user input using various input elements like text fields, radio buttons, checkboxes, and more.

JavaScript can then be used to access and process the user's input.

Functions in JavaScript

Think of a function as a helpful individual with a specific task. Whenever you need that task performed in your code, you simply call upon this capable "person" to get the job done.

Declaring a Function: Declaring a function is straightforward. You define it like this:

function functionName()

{

// The code that defines what the function does goes here

}

Then, when you need the function to carry out its task, you call it by name:

functionName();
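Functions usually become more useful once they accept input and hand back a result. A short sketch, with a made-up function name and argument:

```javascript
// Hypothetical example: a function that takes a parameter and returns a value.
function formatGreeting(name) {
  return "Hello, " + name + "!";
}

// Calling the function with an argument; the returned value is stored.
const greeting = formatGreeting("Ada");
console.log(greeting); // prints "Hello, Ada!"
```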

Using Functions in HTML: Functions are often used in HTML to handle events. But what exactly is an event? It's when a user interacts with something on a web page, like clicking a button, following a link, or interacting with an image.

Event Handling: JavaScript helps us determine what should happen when a user interacts with elements on a webpage. Here's how you might use it:

HTML:

<button onclick="FunctionName()" id="btnEvent">Click me</button>

JavaScript:

function FunctionName() {

var toHandle = document.getElementById("btnEvent");

// Once I've identified my button, I can specify how to handle the click event here

}

In this example, when the user clicks the "Click me" button, the JavaScript function FunctionName() is called, and you can specify how to handle that event within the function.
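As an alternative to the onclick attribute, a handler can also be attached from JavaScript with the standard `addEventListener` method. The sketch below is one possible way to fill in the handler body; the helper name `attachClickCounter` and the click-counting behaviour are assumptions made for illustration.

```javascript
// Hypothetical helper: counts clicks on a button and shows the count.
function attachClickCounter(button) {
  let clicks = 0;
  button.addEventListener("click", () => {
    clicks += 1;
    button.textContent = "Clicked " + clicks + " times";
  });
}

// Browser usage:
// attachClickCounter(document.getElementById("btnEvent"));

// EventTarget and Event also exist in recent Node.js versions, so a bare
// EventTarget can stand in for the button outside a browser.
const fakeEventButton = new EventTarget();
attachClickCounter(fakeEventButton);
fakeEventButton.dispatchEvent(new Event("click"));
fakeEventButton.dispatchEvent(new Event("click"));
console.log(fakeEventButton.textContent); // prints "Clicked 2 times"
```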

Arrow functions are a shorter way of writing functions that was introduced in ES6.
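For a quick feel of the syntax, here is the same small function written both ways (the names are invented for this sketch):

```javascript
// Regular function declaration
function double(value) {
  return value * 2;
}

// Equivalent arrow function stored in a constant
const doubleArrow = (value) => value * 2;

// Arrow functions are common as short callbacks, for example with map.
const doubledList = [1, 2, 3].map((value) => value * 2);

console.log(double(4), doubleArrow(4), doubledList.join(","));
// prints: 8 8 2,4,6
```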

If Statements

These simple constructs come into play in your code, no matter how advanced your projects become.

If Statements Demystified: Let's break it down. "If" is precisely what it sounds like: if something holds true, then do something. You define a condition within parentheses, and if that condition evaluates to true, the code enclosed in curly braces executes.

If statements are your go-to tool for handling various scenarios, including error management, addressing specific cases, and more.

Writing an If Statement:

if (Variable === "help") {

console.log("Send help"); // The console.log() function outputs information to the console

}

In this example, if the condition inside the parentheses (in this case, checking if the Variable is equal to "help") is true, the code within the curly braces gets executed.

Else and Else If Statements

Else: When the "if" condition is not met, the "else" part kicks in. It serves as a safety net, ensuring your program doesn't break and allowing you to specify what should happen in such cases.

Else If: Now, what if you need to check for a particular condition within a series of possibilities? That's where "else if" steps in. It allows you to examine and handle specific cases that require unique treatment.
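Putting the three together might look like the sketch below. The function name respond and the replies are made up for illustration:

```javascript
// Hypothetical helper: choose a reply based on what the user typed.
function respond(userInput) {
  if (userInput === "help") {
    return "Send help";
  } else if (userInput === "hello") {
    return "Hi there";
  } else {
    // Safety net: runs when none of the conditions above were true
    return "Sorry, I did not understand that";
  }
}

console.log(respond("help"));  // Send help
console.log(respond("hello")); // Hi there
console.log(respond("???"));   // Sorry, I did not understand that
```

The conditions are checked from top to bottom, and only the first matching branch runs.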

Styling Elements with JavaScript

This is the beginner-friendly approach to changing the style of elements in JavaScript. It involves selecting an element using its ID or class, then using the element's .style property (for example, .style.backgroundColor) to set the desired styling property.

Example:

Let's say you have an HTML button with the ID "myButton," and you want to change its background color to red using JavaScript. Here's how you can do it:

HTML: <button id="myButton">Click me</button>

JavaScript:

// Select the button element by its ID

const buttonElement = document.getElementById("myButton");

// Change the background color property

buttonElement.style.backgroundColor = "red";

In this example, we first select the button element by its ID using document.getElementById("myButton"). Then, we use .style.backgroundColor to set the background color property of the button to "red." This straightforward approach allows you to dynamically change the style of HTML elements using JavaScript.

#studyblr#code#codeblr#css#html#javascript#java development company#python#study#progblr#programming#studying#comp sci#web design#web developers#web development#website design#ui ux design#reactjs#webdev#website#tech


Text

Sight vs. Senses: Exploring perception in Only Friends.

Because many people seem to be confused by this scene and don't know how to interpret it or simply classify it as “creepy”, I have decided to share my interpretation of how this scene uses cinematographic techniques and symbolism to influence the visual and general narrative of the series. Let's talk about Top and Mew's relationship, cinematography, visual narrative, symbolism, cinematographic techniques and the difference between vision-based perception and sense-based perception as inherent concepts in Top and Mew's relationship.

1. An introduction to cinematography, narrative and the use of visual metaphors.

Cinematography is a central element when creating movies or series, it can be used creatively to support the narrative and emotions of the story. At the same time, it contributes to create a visual narrative through the choice of camera angles, framing, camera movements and composition of images.

Visual narrative: refers to the way stories are told using primarily visual elements, such as images, composition, colors, symbolism, and camera movements, rather than relying primarily on dialogue or writing. It's about transmitting information, emotions and messages through what is seen on the screen in movies, television series, photographs or any other visual medium.

Visual narrative uses visual elements to convey emotions, themes and meanings that affect the perception of a story. Among the visual elements that are used to enrich the narrative we find visual metaphors.

Visual metaphors are visual elements in a cinematic work that represent abstract concepts, emotions, or themes. These metaphors can be subtle or prominent and are used to enrich the narrative and understanding of the story.

With this concept in mind, we can say that the date in the restaurant is a visual metaphor to address the concept of perception.

Perception is the process by which a person interprets and integrates the sensory information they receive from their environment to form an understanding of the world around them. It involves the ability to take sensory data, such as what you see, hear, smell, touch, or taste, and turn them into meaningful and understandable experiences.

In the restaurant, diners cannot see the food they're consuming until the lights are turned on at the end of the meal. This reflects a lack of literal vision, as they cannot use their sense of sight to identify food. Due to the lack of vision, diners must rely on their other senses, such as taste and smell, to experience food. This scene highlights the importance of trusting feelings and intuitions rather than relying solely on what can be seen with the eyes.

2. Narrative, cinematographic techniques and perception.

Another element that enriches visual narrative and, finally, narrative in general are cinematographic techniques. Visual narrative can use a variety of cinematic and visual techniques to guide the audience through a story, create atmosphere, reveal characters and their emotions, and convey underlying themes or messages. For example, the moment when Top asks Mew to use his senses and the camera focuses on Mew's face is a cinematic technique called “close up” and the following moment when the camera loses focus is a cinematic technique called “generalized blurring”.

Close up in cinematography and photography refers to the moment in which the camera gets very close to the subject, object or face of a character, filling most of the frame with that element. The main objective of a close up is to highlight details and facial expressions, allowing the viewer to concentrate on those elements with greater clarity and focus.

Generalized blurring in cinematography is a powerful tool in the hands of filmmakers to enrich the visual and emotional experience of a film. In this technique, the entire image is deliberately blurred or out of focus. It's used for various purposes like to set a specific mood in a scene or to represent the emotional or psychological states of the characters, among other uses.

In the restaurant scene, these techniques are used to concentrate on Mew's emotional or psychological state after Top asked him to use his senses and not his sight to perceive the food. Based on these cinematic techniques, we can infer that something important is happening in Mew's mind. In fact, judging by what happens after the scene, we can infer that something very important happened in the restaurant and that meant a change in Mew's attitude. What changed? The way Mew perceives Top.

3. Vision-based perception and sense-based perception.

In EP1, Mew told Top that he knew who was honest (about dating him) because “his sense was always right”. Basically, Mew is able to see people's intentions and, ultimately, their essence through sense-based perception.

Sense-based perception: this form of perception involves all human senses, such as sight, hearing, smell, touch and taste. It's based on the information captured through these senses to form a complete and rich understanding of the environment and people. Sense-based perception can be deeper and more accurate than other types of perception, as it involves multiple sensory modalities.

However, this isn't the type of perception that Mew has been using with Top until now. Mew is usually extra careful and suspicious around Top. His behaviour is usually guided by the mental image (of someone considered promiscuous) he formed of Top based on his immediate appearance. This type of perception is more related to what can be seen immediately and, finally, it's a type of perception related to the use of sight.

Vision-based perception focuses primarily on visual information. This is the most common form of perception in humans and refers to the ability to observe, interpret and understand the world through sight. Visual information is important, but it's often used alongside other senses to gain a complete understanding. In real life, things aren't always what they seem at first glance, and what is seen doesn't always reflect the underlying reality.

An example of how Mew is usually guided by vision-based perception with Top can be found in EP2. When Top tells Mew about his trauma, Mew's immediate reaction is to laugh and not believe it. This is because what Top is telling Mew doesn't correspond to the mental image that Mew has formed of Top. The fact that Top can't sleep alone doesn't correspond to what Mew perceives based on his sight of Top.

Because vision-based perception is limited and must be used alongside other senses, this way of perceiving isn't enough to perceive the complete reality or, in this case, Top's essence.

Mew never stopped perceiving Top based on the apparent, based on the observable. We know this because Mew has never been around Top without his glasses (except in the water sports and shower scene) until EP5, episode in which Mew finally spends time with Top when he has broken glasses. The change in Mew's perception coincides with the episode in which his glasses break, coincidence? No, symbolism.

Mew's glasses: In this context, glasses could represent limited perception or distorted vision of reality.

In EP5, Top asks Mew three times to perceive him based on all his senses. Each time, Mew can't wear his glasses or Top suggests that he stop wearing them.

Situation #1: At the café, Top tells Mew that he wants to be clearly seen (although Mew can see him clearly while wearing his glasses) and recommends that he try surgery. This time, Top suggests that Mew should stop wearing his glasses so he can perceive his essence/true self, while being guided by his sense-based perception.

Situation #2: At the restaurant, Top reminds Mew of what he said in the bookstore about sense-based perception and asks him to be guided by it. This is, again, a request to see Top as he really is and not based on a mental image.

That's why everything becomes blurry, it involves ignoring appearances or what's obvious in front of your eyes. Sense-based perception involves seeing Top's essence (and the affection he truly feels for Mew) and ignoring that which is easily perceptible to vision such as signs of lies. In this case, the generalized blur is a metaphor for saying that love is blind (in a cheesy way).

When Mew starts perceiving Top based on his senses, he loses control and shows how in love he really is with Top. Even all the people who were in the restaurant disappear because Mew and Top are not focusing on what can be perceived by sight, they're focusing on seeing the essence (and the love they feel for each other). They are on a different plane from the other diners, who therefore disappear.

I guess you can also say that people disappear because Mew is in love and he doesn't care about others, but I'd say it's more than that. It's a matter of perception. It has nothing to do with seeing, it has to do with looking and perceiving the essence of the person in front. It's not a passive activity, it's an active activity that requires collecting information based on all the senses.

No, I don't think it's a dream.

Situation #3: Before Top and Mew have their first time, Mew takes off his glasses and Top asks if he can see him. However, we know Mew can't see clearly without his glasses, so Top is actually asking him if he can perceive him based on his senses: whether he can see his intentions and his deep love for him. That's why he keeps telling him that he loves him; he tries to replace the sense of sight with the sense of hearing.

4. The end of vision-based perception.

If we are guided by the idea that Mew's glasses are a symbol that limits his perception to vision-based perception, then without glasses Mew will be able to see clearly. Mew will see through Top's lies, and that's why, in EP5, Mew gets rid of his glasses and discovers Top's infidelity.

As you may have noticed, the restaurant scene is the climax of a topic that has been worked on, in relation to Top and Mew, since the beginning of the series. The restaurant scene marks a milestone in the relationship of both of them and the turning point in the narrative, this thanks to the way in which metaphors and cinematographic techniques shape the narrative. Clearly, this is one of my favorite scenes in the entire series and I think it has a high level of complexity and interpretation.

If you want to read more of my analyses, you can read my Mew analysis here. Stay tuned, I'll do a Ray analysis soon.

#only friends the series#only friends series ep 5#only friends series#only friends#ofts#ofts meta#mew#mew meta#book#top#force#meta#gmmtv#only friend series#thai bl#only friends analysis#topmew#forcebook


Text

The Great Data Cleanup: A Database Design Adventure

As a budding database engineer, I found myself in a situation that was both daunting and hilarious. Our company's application was running slower than a turtle in peanut butter, and no one could figure out why. That is, until I decided to take a closer look at the database design.

It all began when my boss, a stern woman with a penchant for dramatic entrances, stormed into my cubicle. "Listen up, rookie," she barked (despite the fact that I was quite experienced by this point). "The marketing team is in an uproar over the app's performance. Think you can sort this mess out?"

Challenge accepted! I cracked my knuckles, took a deep breath, and dove headfirst into the database, ready to untangle the digital spaghetti.

The schema was a sight to behold—if you were a fan of chaos, that is. Tables were crammed with redundant data, and the relationships between them made as much sense as a platypus in a tuxedo.

"Okay," I told myself, "time to unleash the power of database normalization."

First, I identified the main entities—clients, transactions, products, and so forth. Then, I dissected each entity into its basic components, ruthlessly eliminating any unnecessary duplication.

For example, the original "clients" table was a hot mess. It had fields for the client's name, address, phone number, and email, but it also inexplicably included fields for the account manager's name and contact information. Data redundancy alert!

So, I created a new "account_managers" table to store all that information, and linked the clients back to their account managers using a foreign key. Boom! Normalized.

Next, I tackled the transactions table. It was a jumble of product details, shipping info, and payment data. I split it into three distinct tables—one for the transaction header, one for the line items, and one for the shipping and payment details.

"This is starting to look promising," I thought, giving myself an imaginary high-five.

After several more rounds of table splitting and relationship building, the database was looking sleek, streamlined, and ready for action. I couldn't wait to see the results.

Sure enough, the next day, when the marketing team tested the app, it was like night and day. The pages loaded in a flash, and the users were practically singing my praises (okay, maybe not singing, but definitely less cranky).

My boss, who was not one for effusive praise, gave me a rare smile and said, "Good job, rookie. I knew you had it in you."

From that day forward, I became the go-to person for all things database-related. And you know what? I actually enjoyed the challenge. It's like solving a complex puzzle, but with a lot more coffee and SQL.

So, if you ever find yourself dealing with a sluggish app and a tangled database, don't panic. Grab a strong cup of coffee, roll up your sleeves, and dive into the normalization process. Trust me, your users (and your boss) will be eternally grateful.

Step-by-Step Guide to Database Normalization

Here's the step-by-step process I used to normalize the database and resolve the performance issues. I used an online database design tool to visualize this design. Here's what I did:

Original Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerName varchar

AccountManagerPhone varchar

Step 1: Separate the Account Managers information into a new table:

AccountManagers Table:

AccountManagerID int

AccountManagerName varchar

AccountManagerPhone varchar

Updated Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerID int

Step 2: Separate the Transactions information into a new table:

Transactions Table:

TransactionID int

ClientID int

TransactionDate date

ShippingAddress varchar

ShippingPhone varchar

PaymentMethod varchar

PaymentDetails varchar

Step 3: Separate the Transaction Line Items into a new table:

TransactionLineItems Table:

LineItemID int

TransactionID int

ProductID int

Quantity int

UnitPrice decimal

Step 4: Create a separate table for Products:

Products Table:

ProductID int

ProductName varchar

ProductDescription varchar

UnitPrice decimal

After these normalization steps, the database structure was much cleaner and more efficient. Here's how the relationships between the tables would look:

Clients --< Transactions --< TransactionLineItems >-- Products

AccountManagers --< Clients

By separating the data into these normalized tables, we eliminated data redundancy, improved data integrity, and made the database more scalable. The application should now perform significantly better, as the database can efficiently retrieve and process the data it needs.
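To make Step 1 concrete, here is a small JavaScript sketch that performs the same split on in-memory rows. This is only an illustration: the sample data and the function name splitAccountManagers are invented, the other client columns are left out for brevity, and in a real project the change would be made in the database itself.

```javascript
// Made-up sample rows shaped like the original Clients table
// (address, phone and email columns omitted for brevity).
const originalClients = [
  { ClientID: 1, ClientName: "Acme", AccountManagerName: "Dana", AccountManagerPhone: "555-0100" },
  { ClientID: 2, ClientName: "Globex", AccountManagerName: "Dana", AccountManagerPhone: "555-0100" },
  { ClientID: 3, ClientName: "Initech", AccountManagerName: "Lee", AccountManagerPhone: "555-0101" },
];

// Hypothetical helper: move manager details into their own list and
// leave only an AccountManagerID (the foreign key) on each client.
function splitAccountManagers(rows) {
  const managerIds = new Map(); // "name|phone" -> AccountManagerID
  const accountManagers = [];

  const clients = rows.map((row) => {
    const key = row.AccountManagerName + "|" + row.AccountManagerPhone;
    if (!managerIds.has(key)) {
      const id = accountManagers.length + 1;
      managerIds.set(key, id);
      accountManagers.push({
        AccountManagerID: id,
        AccountManagerName: row.AccountManagerName,
        AccountManagerPhone: row.AccountManagerPhone,
      });
    }
    return {
      ClientID: row.ClientID,
      ClientName: row.ClientName,
      AccountManagerID: managerIds.get(key),
    };
  });

  return { clients, accountManagers };
}

const normalized = splitAccountManagers(originalClients);
console.log(normalized.accountManagers.length);      // 2
console.log(normalized.clients[1].AccountManagerID); // 1
console.log(normalized.clients[2].AccountManagerID); // 2
```

Dana's name and phone number are now stored once instead of twice, which is exactly the redundancy the new AccountManagers table removes.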

Conclusion

After a whirlwind week of wrestling with spreadsheets and SQL queries, the database normalization project was complete. I leaned back, took a deep breath, and admired my work.

The previously chaotic mess of data had been transformed into a sleek, efficient database structure. Redundant information was a thing of the past, and the performance was snappy.

I couldn't wait to show my boss the results. As I walked into her office, she looked up with a hopeful glint in her eye.

"Well, rookie," she began, "any progress on that database issue?"

I grinned. "Absolutely. Let me show you."

I pulled up the new database schema on her screen, walking her through each step of the normalization process. Her eyes widened with every explanation.

"Incredible! I never realized database design could be so... detailed," she exclaimed.

When I finished, she leaned back, a satisfied smile spreading across her face.

"Fantastic job, rookie. I knew you were the right person for this." She paused, then added, "I think this calls for a celebratory lunch. My treat. What do you say?"

I didn't need to be asked twice. As we headed out, a wave of pride and accomplishment washed over me. It had been hard work, but the payoff was worth it. Not only had I solved a critical issue for the business, but I'd also cemented my reputation as the go-to database guru.

From that day on, whenever performance issues or data management challenges cropped up, my boss would come knocking. And you know what? I didn't mind one bit. It was the perfect opportunity to flex my normalization muscles and keep that database running smoothly.

So, if you ever find yourself in a similar situation—a sluggish app, a tangled database, and a boss breathing down your neck—remember: normalization is your ally. Embrace the challenge, dive into the data, and watch your application transform into a lean, mean, performance-boosting machine.

And don't forget to ask your boss out for lunch. You've earned it!


Text

ChatGPT developer OpenAI’s approach to building artificial intelligence came under fire this week from former employees who accuse the company of taking unnecessary risks with technology that could become harmful.

Today, OpenAI released a new research paper apparently aimed at showing it is serious about tackling AI risk by making its models more explainable. In the paper, researchers from the company lay out a way to peer inside the AI model that powers ChatGPT. They devise a method of identifying how the model stores certain concepts—including those that might cause an AI system to misbehave.

Although the research makes OpenAI’s work on keeping AI in check more visible, it also highlights recent turmoil at the company. The new research was performed by the recently disbanded “superalignment” team at OpenAI that was dedicated to studying the technology’s long-term risks.

The former group’s coleads, Ilya Sutskever and Jan Leike—both of whom have left OpenAI—are named as coauthors. Sutskever, a cofounder of OpenAI and formerly chief scientist, was among the board members who voted to fire CEO Sam Altman last November, triggering a chaotic few days that culminated in Altman’s return as leader.

ChatGPT is powered by a family of so-called large language models called GPT, based on an approach to machine learning known as artificial neural networks. These mathematical networks have shown great power to learn useful tasks by analyzing example data, but their workings cannot be easily scrutinized as conventional computer programs can. The complex interplay between the layers of “neurons” within an artificial neural network makes reverse engineering why a system like ChatGPT came up with a particular response hugely challenging.

“Unlike with most human creations, we don’t really understand the inner workings of neural networks,” the researchers behind the work wrote in an accompanying blog post. Some prominent AI researchers believe that the most powerful AI models, including ChatGPT, could perhaps be used to design chemical or biological weapons and coordinate cyberattacks. A longer-term concern is that AI models may choose to hide information or act in harmful ways in order to achieve their goals.

OpenAI’s new paper outlines a technique that lessens the mystery a little, by identifying patterns that represent specific concepts inside a machine learning system with help from an additional machine learning model. The key innovation is in refining the network used to peer inside the system of interest by identifying concepts, to make it more efficient.

OpenAI proved out the approach by identifying patterns that represent concepts inside GPT-4, one of its largest AI models. The company released code related to the interpretability work, as well as a visualization tool that can be used to see how words in different sentences activate concepts, including profanity and erotic content, in GPT-4 and another model. Knowing how a model represents certain concepts could be a step toward being able to dial down those associated with unwanted behavior, to keep an AI system on the rails. It could also make it possible to tune an AI system to favor certain topics or ideas.

Even though LLMs defy easy interrogation, a growing body of research suggests they can be poked and prodded in ways that reveal useful information. Anthropic, an OpenAI competitor backed by Amazon and Google, published similar work on AI interpretability last month. To demonstrate how the behavior of AI systems might be tuned, the company's researchers created a chatbot obsessed with San Francisco's Golden Gate Bridge. And simply asking an LLM to explain its reasoning can sometimes yield insights.

“It’s exciting progress,” says David Bau, a professor at Northeastern University who works on AI explainability, of the new OpenAI research. “As a field, we need to be learning how to understand and scrutinize these large models much better.”

Bau says the OpenAI team’s main innovation is in showing a more efficient way to configure a small neural network that can be used to understand the components of a larger one. But he also notes that the technique needs to be refined to make it more reliable. “There’s still a lot of work ahead in using these methods to create fully understandable explanations,” Bau says.

Bau is part of a US government-funded effort called the National Deep Inference Fabric, which will make cloud computing resources available to academic researchers so that they too can probe especially powerful AI models. “We need to figure out how we can enable scientists to do this work even if they are not working at these large companies,” he says.

OpenAI’s researchers acknowledge in their paper that further work needs to be done to improve their method, but also say they hope it will lead to practical ways to control AI models. “We hope that one day, interpretability can provide us with new ways to reason about model safety and robustness, and significantly increase our trust in powerful AI models by giving strong assurances about their behavior,” they write.


Text

when people characterise artists who are wary of generative AI as entitled babies who think they're so much more special than the professions automation has come for in the past, my thinking is like....

I do actually think there's a difference between automation taking over dangerous or simply mind-numbing work and it taking over work that people genuinely want to do

I have done a type of data entry that could be almost entirely replaced with automation based on existing technologies and where the only negative side effect of that would be the people struggling to find other work. Frankly when I was doing that work, I was thinking "this ought to be automated"

In other words, the only problem with automating most of that work is that we have chosen to build a society where everyone must work or starve. It should be a good thing that we can replace dangerous and mind-numbing labour with machines! But if our society is entirely built up around the idea that full-time work should be performed by everyone physically able to perform it, it becomes a problem instead of a boon

If we lived in a world that wasn't "work or starve" people would still want to make art. And I don't want to imply that what I'm saying applies only to art -- people would still want to translate between different languages, for example. People would still want to perform a lot of the highly context-sensitive labour that computers are -- at least for now -- ill-suited to perform

Beyond that, there's the philosophical idea that art -- whether it be visual, written, music, etc -- is a way humans communicate ideas to other humans. What does generative AI communicate?

(I do think AI tools for speeding up some work would be fine and probably will end up as the norm sometime in the future. As in, among the tools digital artists already use in Photoshop or animation programs, there will be ones based on machine learning.)


Note

hi! what do you make of ai art? im conflicted cause i see how its great for disabled people in many ways, but then i look back at the work people put into becoming artists and mastering the craft and feel many things lol i wish i could look at it similarly like i look at producers for example, where you have a vision and tools and you know how to use it well so you dont need the musical training background to be creative, but i cant help but feel like its more complicated with visual art? that theres a whole other side besides having a vision and good understanding of a shortcut tool. im very very torn and also sorry for all that on your succession blog but knowing youre a fantastic artist whos recently been dealing with this sort of impossibility to make art i wonder if you have some insight in this area.

sending love!

i appreciate u wanting to know my opinion on a Hot Topic such as this! i dunno man i have an aversion to any definition of art of any kind that requires effort or skill as essential features that make the art “real”. i think a lot of what is happening with AI discourse is that people are appropriately appalled by the way capitalism mangles creative output and even what kind of relationships artists can have with their work and with the rest of the world. i do not have a problem with a machine that digests and reconfigures information — a machine is just a machine. if one copied the way i make texture with colored pencil and produced an approximation of a new original work by me, i would be fascinated by what reactions i might have to it. would i feel threatened by it? would i be flattered? what might it open up for me, to see my work broken into a particular machine’s data? this is just a dream, though. i see many artists understandably frightened by what the exploiter class may choose to do with their new toys (and what they are already doing to us with them). it just sucks to see that very plain class antagonism passed over with arguments about the “purity” of human-made art, how it is somehow apparent to any observer when a work is truly endowed with a “soul” (if these arguments sound eerily like fascist aesthetic principles, it’s because they are fascistic).

and then to see people cheering for their own doom with this thing of mr. game of thrones & co suing chatGPT, complete with condescending explanations of how it’s not going to hurt fanfic writers because the problem these multimillionaires have is actually with people monetizing their work, and the true humble Fan would ne’er ask a but penny. do people really not see how this is making the divide between the “artist” and the “common person” greater? it is so goddamn expensive to survive right now, and the wealthy are using fear of technology as a tool to prevent you from making money, and yes, making art at all. only those with enough capital to protect their intellectual property with the force of the law are allowed to express themselves through art. yes, i think it should be well within your rights to bind and sell (for money, yes, money) your game of thrones fanfiction. so many of us are living in poverty right now, bombarded by entertainment but prevented from ever chewing it up or spitting it out. ed roth’s rat fink character had it right. fuck mickey mouse. like, we’re actually back to saying “fuck mickey mouse” being really cool. put him in a blender full of data, have it put him into a beach scene with BBW anime versions of lara croft and princess peach. intellectual property is a historically recent phenomenon. it is a tool to make the rich richer and get you well and squarely fucked. theoretically, yeah, it sounds good to have your work and livelihood honored and protected, but just like they’re trying to replace artists and actors and writers with AI, every single tool becomes a weapon in the hands of the rich. the hell people are worried they need more punishing copyright law to fix is already here. the woman who designed care bears & strawberry shortcake never saw a penny from it. AI art is only a threat in the hands of the corporations that happily do these things in the first place.

anyways. lol. i’m not very technologically minded in my own art practice — i’m not naturally drawn to new technology as a part of my work, and find many of the results i’ve seen from current AI art tech to be kind of aesthetically unpleasant. artwork contains unpleasantness, though. i’m not really interested in arguments over what artwork “should” contain, only what it does. i think the best AI art i’ve seen (ie: the stuff i’ve enjoyed the most) has been from alan resnick:

it is so terrifically disquieting. it leans into what makes AI-generated BBW lara croft kind of difficult to actually jack off to. the overlapping lines of bodies, the nonsense text. but then, if this work has merit, is that because alan resnick is uniquely special, thus proving the point that the technology is only valid in the hands of a “real artist”? can mr. resnick be said to be the “artist” of these images at all, because he trained a program to his own style and input interesting ideas? does he deserve lots of money for his work creating iconic adult swim shorts like this house has people in it? well sure

or would this art only have value if somebody put a tremendous amount of labor into it? you know. my mother used to tell me, “hard work beats talent when talent doesn’t work”. she said she should nail the phrase to my forehead, like martin luther at the church doors. having very recently become disabled & chronically ill, i don’t believe it anymore. i believe we should be able to use technology to make ourselves more free. we should not be so financially insecure that we are threatened by anyone expressing themselves with something we made. the ultra-wealthy are threatened by infringement because they need everybody else to stay poor, and the poor are threatened because they do not want to be poor any longer. it’s got nothing to do with strange scrambled pictures. if i could take pictures of every work of art i’ve ever loved and put it into a machine that mixes it up and turns it into a monster, i would do it just for a bittersweet laugh at it.
