#aws s3 monitoring


Text

Amazon S3 Bucket Feature Tutorial Part2 | Explained S3 Bucket Features for Cloud Developer

Full Video Link Part1 - https://youtube.com/shorts/a5Hioj5AJOU Full Video Link Part2 - https://youtube.com/shorts/vkRdJBwhWjE Hi, a new #video on #aws #s3bucket #features #cloudstorage is published on #codeonedigest #youtube channel. @java #java #awsc

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers of all sizes and industries can use Amazon S3 to store and protect any amount of data. Amazon S3 provides management features so that you can optimize, organize, and configure access to your data to meet your specific business,…


#amazon s3 bucket features#amazon s3 features#amazon web services#aws#aws cloud#aws cloudtrail#aws cloudwatch#aws s3#aws s3 bucket#aws s3 bucket creation#aws s3 bucket features#aws s3 bucket tutorial#aws s3 classes#aws s3 features#aws s3 interview questions and answers#aws s3 monitoring#aws s3 tutorial#cloud computing#s3 consistency#s3 features#s3 inventory report#s3 storage lens#simple storage service (s3)#simple storage service features

0 notes

Note

any podcast recommendations for guys Going Through It. im a sucker for whump and i’ve already listened to TMA and Malevolent sooo

Fiction Podcasts: Characters Going Through It / Experiencing the Horrors

Gore warning for most, here's 15 to get you started:

I am in Eskew: (Horror) David Ward is arguably the Guy Going Through It. Stories from a man living in something that very much wants to be a city, and a private investigator who was, in her words, "hired to kill a ghost". Calmly recounted stories set to Eskew's own gentle, persistent rain. The audio quality's a bit naff but the writing is spectacular. If you like the writing, also check out The Silt Verses, which is a brilliant show by the same creators.

VAST Horizon: (Sci-Fi, Horror, Thriller/Suspense Elements) And Dr. Nolira Ek is arguably the Gal Going Through it. An agronomist wakes from cryo to discover the ship she's on is dead in the water, far from their destination, and seemingly empty, barring the ship's malfunctioning AI, and an unclear reading on the monitors. I think you'll like this one. Great sound design, amazing acting, neat worldbuilding, and plenty of awful situations.

Dining in the Void: (Horror, Sci-Fi) So, the initial pacing on this one is a little weird, but stick with it. A collection of notable people are invited to a dinner aboard a space station, and find not only are they trapped there, but they're on a timer until total station destruction: unless they can figure out who's responsible. And there's someone else aboard to run a few games, just to make things more interesting. The games are frequently torturous. If that wasn't clear.

The White Vault: (Horror) By the same creators as VAST Horizon, this one follows a group sent to a remote arctic research base to diagnose and repair a problem. Trapped inside by persistent snow and wind, they discover something very interesting below their feet. Really well made show. The going through it is more spread out but there's a lot of it happening.

Archive 81: (Horror, Weird Fiction, Mystery and Urban Fantasy Elements) A young archivist is commissioned to digitize a series of tapes containing strange housing records from the 1990s. He has an increasingly bad time. Each season is connected but a bit different, so if S1 (relatively short) doesn't catch your ear, hang in for S2. You've got isolation, degradation of relationships, dehumanisation, and a fair amount of gore. And body horror on a sympathetic character is so underdone.

The Harrowing of Minerva Damson: (Fantasy, Horror) In an alternate version of our own world with supernatural monsters and basic magic, an order of women knights dedicated to managing such problems has survived all the way to the world wars, and one of them is doing her best with what she's got in the middle of it all.

SAYER: (Horror, Sci-Fi) How would you like to be the guy going through it? A series of sophisticated AI guide you soothingly through an array of mundane and horrible tasks.

WOE.BEGONE: (Sci-Fi) I don't keep up with this one any more, but I think Mike Walters goes through enough to qualify it. Even if it's frequently his own fault. A guy gets immediately in over his head when he begins to play an augmented reality game of an entirely different sort. Or, the time-travel murder game.

Janus Descending: (Sci-Fi, Horror, Tragedy) A xenobiologist and a xenoanthropologist visit a dead city on a distant world, and find something awful. You hear her logs first-to-last, and his last-to-first, which is interesting framing but also makes the whole thing more painful. The audio equivalent of having your heart pulled out and ditched at the nearest wall. Listen to the supercut.

The Blood Crow Stories: (Horror) A different story every season. S1 is aboard a doomed cruise ship set during WWII, S2 is a horror western, S3 is cyberpunk with demons, and S4 is golden age cinema with a ghostly influence.

Mabel: (Supernatural, Horror, Fantasy Elements) The caretaker of a dying woman attempts to contact her granddaughter, leaving a series of increasingly unhinged voicemails. Supernatural history transitioning to poetic fae lesbian body horror.

Jar of Rebuke: (Supernatural) An amnesiac researcher with difficulties staying dead investigates strange creatures, eats tasty food, and even makes a few friends while exploring the town they live in. A character who doesn't stay dead creates a lot of scenarios for dying in interesting ways.

The Waystation: (Sci-Fi, Horror) A space station picks up an odd piece of space junk which begins to have a bizarre effect on some of the crew. The rest of it? Doesn't react so well to this spreading strangeness. Some great nailgun-related noises.

Station Blue: (Psychological Horror) A drifting man takes a job as a repair technician and maintenance guy for an antarctic research base, ahead of the staff's arrival. He recounts how he got there, as his time in the base and some bizarre details about it begin to get to him. People tend to either quite like this one or don't really get the point of it, but I found it a fascinating listen.

The Hotel: (Horror) Stories from a "Hotel" which kills people, and the strange entities that make it happen. It's better than I'm making it sound, well-made with creative deaths, great sound work, and a strange staff which suffer as much as the guests. Worth checking out.

210 notes


Text

so part of me wants to blame this entirely on wbd, right? bloys said he was cool with the show getting shopped around, so assuming he was telling the truth (not that im abt to start blindly trusting anything a CEO says lol), that means it’s not an hbo problem. and we already know wbd has an awful track record with refusing to sell their properties—altho unlike coyote v acme, s3 of ofmd isn’t a completed work and therefore there isn’t the same tax writeoff incentive to bury the thing. i just can’t see any reason to hold on to ofmd except for worrying about image, bc it would be embarrassing if they let this show go with such a devoted fanbase and recognizable celebrities and it went somewhere else and did really well (which it would undoubtedly do really well, we’ve long since proven that). it feels kinda tinfoil hat of me to be making assumptions abt what’s going on in wbd behind the scenes, but i also feel like there are hints that i’m onto something w my suspicions: suddenly cracking down on fan merch on etsy doesn’t seem like something a studio looking to sell their property would bother with, and we know someone was paying to track the viewing stats on ofmd’s bbc airing, which isn’t finished yet, so i’d expect whoever is monitoring that to not make a decision abt buying ofmd until the s2 finale dropped.

but also i think part of me just wants there to be a clear villain in the situation. it’s kinda comforting to have a face to blame, a clear target to shake my fist at. but the truth is that the entire streaming industry is in the shitter. streaming is not pulling in the kind of profit that investors were promised, and we’re seeing the bubble that was propped up w investor money finally start to pop. studios aren’t leaving much room in their budgets for acquiring new properties, and they’re whittling down what they already have. especially w the strikes last year, they’re all penny pinching like hell. and that’s a much harder thing to rage against than just one studio or one CEO being shitty. that’s disheartening in a way that’s much bigger and more frightening than if there was just one guy to blame.

my guess is that the truth of the situation is probably somewhere in the middle. wbd is following the same shitty pattern they’ve been following since the merger, and it’s just a hard time for anyone trying to get their story picked up by any studio. ofmd is just one of many shows that are unlucky enough to exist at this very unstable time for the tv/streaming industry.

when i think abt it that way, tho, i’m struck by how lucky we are that ofmd even got to exist at all. if the wbd merger had happened a year earlier, or if djenks and tw tried to pitch this show a year later, there’s no way this show would’ve been made. s1 was given the runtime and the creative freedom needed to tell the story the way the showrunners wanted to, and the final product benefited from it so much that it became a huge hit from sheer gay word of mouth. and for all the imperfections with s2—the shorter episode order, the hard 30 minute per episode limit, the last-minute script changes, the finale a butchered mess of the intended creative vision—the team behind ofmd managed to tell a beautiful story despite the uphill battle they undoubtedly were up against. they ended the season with the main characters in a happy place. ed and stede are together, and our last shot of ed isn’t of him sobbing uncontrollably (like i rlly can’t stress enough how much i would have never been able to acknowledge the existence of this show again if s1 was all we got)

like. y’all. we were this close to a world where ofmd never got to exist. for me, at least, the pain of an undue cancellation is worth getting to have this story at all. so rather than taking my comfort in the form of righteous anger at david zaslav or at wbd or at the entire streaming industry as a whole, i’m trying to focus on how lucky i am to get to have the show in the first place.

bc really, even as i’m reeling in grief to know this is the end of the road for ofmd, a part of me still can’t quite wrap my head around that this show is real. a queer romcom about middle-aged men, a rejection of washboard abs and facetuned beauty standards, a masterful deconstruction and criticism of toxic masculinity, well-written female characters who get to shine despite being in a show that is primarily about manhood and masculinity, diverse characters whose stories never center around oppression and bigotry, a casually nonbinary character, violent revenge fantasies against oppressors that are cathartic but at the same time are not what brings the characters healing and joy, a queer found family, a strong theme of anti colonialism throughout the entire show. a diverse writers room that got to use their perspectives and experiences to inform the story. the fact that above all else, this show is about the love story between ed and stede, which means the character arcs, the thoughts, the feelings, the motivations, the backstories, and everything else that make up the characters of ed and stede are given the most focus and the most care.

bc there rlly aren’t a lot of shows where a character like stede—a flamboyant and overtly gay middle-aged man who abandoned his family to live his life authentically—gets to be the main character of a romcom, gets to be the hero who the show is rooting for.

and god, there definitely aren’t a lot of shows where a character like ed—a queer indigenous man who is famous, successful, hyper-competent, who feels trapped by rigid standards of toxic hypermasculinity, who yearns for softness and gentleness and genuine interpersonal connection and vulnerability, whose mental health struggles and suicidal intentions are given such a huge degree of attention and delicate care in their depiction, who messes up and hurts people when he’s in pain but who the show is still endlessly sympathetic towards—gets to exist at all, much less as the romantic lead and the second protagonist of the show.

so fuck the studios, fuck capitalism, fuck everything that brought the show to an end before the story was told all the way through. because the forces that are keeping s3 from being made are the same forces that would’ve seen the entire show canceled before it even began. s3 is canceled, and s2 suffered from studio meddling, but we still won. we got to have this show. we got to have these characters. there’s been so much working against this show from the very beginning but here we are, two years later, lives changed bc despite all odds, ofmd exists. they can’t take that away from us. they can’t make us stop talking abt or stop caring abt this show. i’m gonna be a fan of this show til the day i die, and the studios hate that. they hate that we care about things that don’t fit into their business strategy, they hate that not everyone will blindly consume endless IP reboots and spin-offs and cheap reality tv.

anyway i dont rlly have a neat way to end this post. sorta just rambling abt my feelings. idk, i know this sucks but im not rlly feeling like wallowing in it. i think my gratitude for the show is outweighing my grief and anger, at least for right now. most important thing tho is im not going anywhere. and my love for this show is certainly not fucking going anywhere.

#ofmd#our flag means death#save ofmd#s3 renewal hell#txt#mine#og#studio crit#edward teach#stede bonnet#gentlebeard

325 notes


Video

youtube

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.
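The least-privilege advice above can be sketched as two JSON policy documents, built here in Python. This is a minimal sketch, not the policy used in the video: the bucket name `example-bucket` and the helper names are hypothetical, and the action list is an assumption covering only the upload, download, delete, and per-bucket listing operations the tutorial describes.

```python
import json


def s3_least_privilege_policy(bucket: str) -> dict:
    """Permissions policy limited to one bucket (bucket name is a placeholder)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Upload, download, and delete apply to objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def ec2_trust_policy() -> dict:
    """Trust policy that lets the EC2 service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_least_privilege_policy("example-bucket"), indent=2))
    print(json.dumps(ec2_trust_policy(), indent=2))
```

A policy this narrow would not permit a bare `aws s3 ls`, which lists every bucket in the account and needs the separate `s3:ListAllMyBuckets` permission; listing a single bucket with `aws s3 ls s3://example-bucket` stays within it.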

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install AWS CLI and Configure
- Install AWS CLI on the EC2 instance if not pre-installed.
- Verify access by running `aws s3 ls` to list available buckets.

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
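The file operations in Step 5 can be sketched as a small Python wrapper that builds the corresponding `aws s3 cp` and `aws s3 rm` command lines. The bucket name, object key, and file paths below are placeholders, and the helper functions are hypothetical names introduced for illustration. The sketch defaults to a dry run that only prints each command, since actually running them assumes the AWS CLI is installed and the instance has an IAM role attached.

```python
import subprocess


def upload_cmd(local_path: str, bucket: str, key: str) -> list:
    """Build `aws s3 cp <local> s3://<bucket>/<key>` (EC2 to S3)."""
    return ["aws", "s3", "cp", local_path, f"s3://{bucket}/{key}"]


def download_cmd(bucket: str, key: str, local_path: str) -> list:
    """Build `aws s3 cp s3://<bucket>/<key> <local>` (S3 to EC2)."""
    return ["aws", "s3", "cp", f"s3://{bucket}/{key}", local_path]


def delete_cmd(bucket: str, key: str) -> list:
    """Build `aws s3 rm s3://<bucket>/<key>`."""
    return ["aws", "s3", "rm", f"s3://{bucket}/{key}"]


def run(cmd: list, dry_run: bool = True) -> int:
    """Print the command in dry-run mode; otherwise execute it with the AWS CLI."""
    if dry_run:
        print(" ".join(cmd))
        return 0
    return subprocess.run(cmd, check=True).returncode


if __name__ == "__main__":
    # Placeholder names; replace with your own bucket and paths.
    run(upload_cmd("app.log", "example-bucket", "logs/app.log"))
    run(download_cmd("example-bucket", "logs/app.log", "app-copy.log"))
    run(delete_cmd("example-bucket", "logs/app.log"))
```

Passing each command as a list rather than a single shell string avoids quoting problems when a path or key contains spaces.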

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube#aws iamiam role awsawsaws permissionaws iam rolesaws cloudaws s3identity & access managementaws iam policyDownloadand Delete Files in Amazon#IAMrole#AWS#cloudolus#S3#EC2

2 notes


Text

Centralizing AWS Root access for AWS Organizations customers

Security teams will be able to centrally manage AWS root access for member accounts in AWS Organizations with a new feature being introduced by AWS Identity and Access Management (IAM). Now, managing root credentials and carrying out highly privileged operations is simple.

Managing root user credentials at scale

Historically, accounts on Amazon Web Services (AWS) were created with root user credentials, which granted unrestricted access to the account. Powerful as it was, this root access also posed serious security risks.

The root user of every AWS account needed to be protected by implementing additional security measures like multi-factor authentication (MFA). These root credentials had to be manually managed and secured by security teams. Credentials had to be stored safely, rotated on a regular basis, and checked to make sure they adhered to security guidelines.

This manual approach became laborious and error-prone as customers’ AWS environments grew. For instance, large enterprises with hundreds or thousands of member accounts struggled to secure root access consistently across every account. The manual intervention added operational overhead, delayed account provisioning, hindered full automation, and increased security risk. Improperly secured root access could lead to account takeovers and unauthorized access to critical resources.

Additionally, security teams had to collect and use root credentials if particular root actions were needed, like unlocking an Amazon Simple Storage Service (Amazon S3) bucket policy or an Amazon Simple Queue Service (Amazon SQS) resource policy. This only made the attack surface larger. Maintaining long-term root credentials exposed users to possible mismanagement, compliance issues, and human errors despite strict monitoring and robust security procedures.

Security teams started looking for a scalable, automated solution. They required a method to programmatically control AWS root access without requiring long-term credentials in the first place, in addition to centralizing the administration of root credentials.

Centrally manage root access

AWS solves the long-standing problem of managing root credentials across many accounts with the new capability to centrally manage root access. It introduces two features: central management of root credentials and root sessions. Together, they give security teams a secure, scalable, and compliant way to control root access across all member accounts in AWS Organizations.

First, let’s talk about centrally managing root credentials. You can now centrally manage and safeguard privileged root credentials for all AWS Organizations accounts with this capability. Managing root credentials enables you to:

Eliminate long-term root credentials: To ensure that no long-term privileged credentials are left open to abuse, security teams can now programmatically delete root user credentials from member accounts.

Prevent credential recovery: In addition to deleting the credentials, it also stops them from being recovered, protecting against future unwanted or unauthorized AWS root access.

Establish secure accounts by default: Using extra security measures like MFA after account provisioning is no longer necessary because member accounts can now be created without root credentials right away. Because accounts are protected by default, long-term root access security issues are significantly reduced, and the provisioning process is made simpler overall.

Assist in maintaining compliance: By centrally identifying and tracking the state of root credentials for every member account, root credentials management enables security teams to show compliance. Meeting security rules and legal requirements is made simpler by this automated visibility, which verifies that there are no long-term root credentials.

But how can certain root operations still be carried out on those accounts? Root sessions, the second feature being introduced today, provide a secure alternative to maintaining long-term root access.

Security teams can now obtain temporary, task-scoped root access to member accounts, doing away with the need to manually retrieve root credentials anytime privileged activities are needed. Without requiring permanent root credentials, this feature ensures that operations like unlocking S3 bucket policies or SQS queue policies may be carried out safely.

Key advantages of root sessions include:

Task-scoped root access: In accordance with the best practices of least privilege, AWS permits temporary AWS root access for particular actions. This reduces potential dangers by limiting the breadth of what can be done and shortening the time of access.

Centralized management: Instead of logging into each member account separately, you may now execute privileged root operations from a central account. Security teams can concentrate on higher-level activities as a result of the process being streamlined and their operational burden being lessened.

Conformity to AWS best practices: Organizations that utilize short-term credentials are adhering to AWS security best practices, which prioritize the usage of short-term, temporary access whenever feasible and the principle of least privilege.

This new feature does not grant full root access. It provides temporary credentials scoped to one of five specific actions. The first three become available when central management of root credentials is enabled; the last two become available when root sessions are enabled.

Auditing root user credentials: Read-only access to review root user information.

Re-enabling account recovery: Reactivating account recovery without root credentials.

Deleting root user credentials: Removing console passwords, access keys, signing certificates, and MFA devices.

Unlocking an S3 bucket policy: Editing or deleting an S3 bucket policy that denies all principals.

Unlocking an SQS queue policy: Editing or deleting an Amazon SQS resource policy that denies all principals.
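To make the "denies all principals" case concrete, the sketch below builds a hypothetical locked-out S3 bucket policy and a simplified check for it. The bucket name and both helper functions are assumptions for illustration, not part of any AWS SDK. The check looks only for an unconditional `Deny` with a wildcard principal, so it ignores `NotPrincipal`, conditions, and narrower action lists that a real policy analysis would need to consider.

```python
def locked_bucket_policy(bucket: str) -> dict:
    """Hypothetical bucket policy that denies every principal all S3 actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
            }
        ],
    }


def denies_all_principals(policy: dict) -> bool:
    """Simplified check: is there an unconditional Deny with a wildcard principal?"""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Deny":
            continue
        if "Condition" in statement:  # conditional denies are out of scope here
            continue
        principal = statement.get("Principal")
        if principal == "*" or principal == {"AWS": "*"}:
            return True
    return False


if __name__ == "__main__":
    print(denies_all_principals(locked_bucket_policy("example-bucket")))
```

A policy like this blocks ordinary identities in the account as well, which is why editing or deleting it previously required the root user.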

Accessibility

With the exception of AWS GovCloud (US) and AWS China Regions, which do not have root accounts, all AWS Regions offer free central management of root access. You can access root sessions anywhere.

It can be used via the AWS SDK, AWS CLI, or IAM console.

What is root access?

The root user, who has full access to all AWS resources and services, is the first identity formed when you create an account with Amazon Web Services (AWS). By using the email address and password you used to establish the account, you can log in as the root user.

Read more on Govindhtech.com

#AWSRoot#AWSRootaccess#IAM#AmazonS3#AWSOrganizations#AmazonSQS#AWSSDK#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes


Text

How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like AWS SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.
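As a rough illustration of what such a tuning service automates, here is a local random search in plain Python. It is a swapped-in stand-in, not the SageMaker or Google Cloud API: the `validation_loss` function is a made-up toy objective replacing a real training run, and the search ranges are arbitrary assumptions.

```python
import math
import random


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Toy stand-in for a training run; lowest near lr=0.01 and batch_size=64."""
    return (math.log10(learning_rate) + 2) ** 2 + ((batch_size - 64) / 64) ** 2


def random_search(trials: int, seed: int = 0):
    """Sample hyperparameters at random and keep the best (loss, params) pair."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best


if __name__ == "__main__":
    loss, params = random_search(trials=50)
    print(f"best loss {loss:.4f} with {params}")
```

A managed tuning service runs the same kind of loop with each trial as a separate training job, often in parallel and with smarter strategies than uniform random sampling.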

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging, with AWS CloudTrail for auditing API activity.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.

2 notes


Text

Navigating AWS: A Comprehensive Guide for Beginners

In the ever-evolving landscape of cloud computing, Amazon Web Services (AWS) has emerged as a powerhouse, providing a wide array of services to businesses and individuals globally. Whether you're a seasoned IT professional or just starting your journey into the cloud, understanding the key aspects of AWS is crucial. With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries. This blog will serve as your comprehensive guide, covering the essential concepts and knowledge needed to navigate AWS effectively.

1. The Foundation: Cloud Computing Basics

Before delving into AWS specifics, it's essential to grasp the fundamentals of cloud computing. Cloud computing is a paradigm that offers on-demand access to a variety of computing resources, including servers, storage, databases, networking, analytics, and more. AWS, as a leading cloud service provider, allows users to leverage these resources seamlessly.

2. Setting Up Your AWS Account

The first step on your AWS journey is to create an AWS account. Navigate to the AWS website, provide the necessary information, and set up your payment method. This account will serve as your gateway to the vast array of AWS services.

3. Navigating the AWS Management Console

Once your account is set up, familiarize yourself with the AWS Management Console. This web-based interface is where you'll configure, manage, and monitor your AWS resources. It's the control center for your cloud environment.

4. AWS Global Infrastructure: Regions and Availability Zones

AWS operates globally, and its infrastructure is distributed across regions and availability zones. Understand the concept of regions (geographic locations) and availability zones (one or more isolated data centers within a region). This distribution ensures redundancy and high availability.
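The relationship between the two shows up in their names: a standard availability zone name is its region name plus a letter suffix, such as `us-east-1a` in `us-east-1`. The sketch below groups zone names by region on that assumption. The helper names are hypothetical, and Local Zone names follow a different pattern that this sketch does not handle.

```python
def region_of(az_name: str) -> str:
    """Return the region for a standard AZ name such as 'us-east-1a'.

    Assumes the standard pattern of region name plus one trailing letter;
    Local Zone names follow a different pattern and are not handled here.
    """
    if len(az_name) < 2 or not az_name[-1].isalpha() or not az_name[-2].isdigit():
        raise ValueError(f"not a standard availability zone name: {az_name!r}")
    return az_name[:-1]


def group_by_region(az_names: list) -> dict:
    """Group availability zone names under their region."""
    groups = {}
    for az in az_names:
        groups.setdefault(region_of(az), []).append(az)
    return groups


if __name__ == "__main__":
    zones = ["us-east-1a", "us-east-1b", "eu-west-1a"]
    for region, members in group_by_region(zones).items():
        print(region, members)
```

Spreading instances across several zones in the same region is what provides the redundancy described above.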

5. Identity and Access Management (IAM)

Security is paramount in the cloud. AWS Identity and Access Management (IAM) enables you to manage user access securely. Learn how to control who can access your AWS resources and what actions they can perform.

6. Key AWS Services Overview

Explore fundamental AWS services:

Amazon EC2 (Elastic Compute Cloud): Virtual servers in the cloud.

Amazon S3 (Simple Storage Service): Scalable object storage.

Amazon RDS (Relational Database Service): Managed relational databases.

7. Compute Services in AWS

Understand the various compute services:

EC2 Instances: Virtual servers for computing capacity.

AWS Lambda: Serverless computing for executing code without managing servers.

Elastic Beanstalk: Platform as a Service (PaaS) for deploying and managing applications.

8. Storage Options in AWS

Explore storage services:

Amazon S3: Object storage for scalable and durable data.

EBS (Elastic Block Store): Block storage for EC2 instances.

Amazon S3 Glacier: Low-cost storage for data archiving.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Top AWS Training Institute.

9. Database Services in AWS

Learn about managed database services:

Amazon RDS: Managed relational databases.

DynamoDB: NoSQL database for fast and predictable performance.

Amazon Redshift: Data warehousing for analytics.

10. Networking Concepts in AWS

Grasp networking concepts:

Virtual Private Cloud (VPC): Isolated cloud networks.

Route 53: Domain registration and DNS web service.

CloudFront: Content delivery network for faster and secure content delivery.

11. Security Best Practices in AWS

Implement security best practices:

Encryption: Ensure data security in transit and at rest.

IAM Policies: Control access to AWS resources.

Security Groups and Network ACLs: Manage traffic to and from instances.

12. Monitoring and Logging with AWS CloudWatch and CloudTrail

Set up monitoring and logging:

CloudWatch: Monitor AWS resources and applications.

CloudTrail: Log AWS API calls for audit and compliance.

13. Cost Management and Optimization

Understand AWS pricing models and manage costs effectively:

AWS Cost Explorer: Analyze and control spending.

14. Documentation and Continuous Learning

Refer to the extensive AWS documentation, tutorials, and online courses. Stay updated on new features and best practices through forums and communities.

15. Hands-On Practice

The best way to solidify your understanding is through hands-on practice. Create test environments, deploy sample applications, and experiment with different AWS services.

In conclusion, AWS is a dynamic and powerful ecosystem that continues to shape the future of cloud computing. By mastering the foundational concepts and key services outlined in this guide, you'll be well-equipped to navigate AWS confidently and leverage its capabilities for your projects and initiatives. As you embark on your AWS journey, remember that continuous learning and practical application are key to becoming proficient in this ever-evolving cloud environment.

Becoming an AWS Solutions Architect: A Comprehensive Guide

Businesses increasingly rely on cloud computing platforms to cut costs, automate processes, and innovate. Amazon Web Services (AWS), one of the earliest cloud platforms, offers a broad range of services that small, medium, and large enterprises can use. To manage and optimize these services effectively, businesses hire AWS Solutions Architects. So what does an AWS Solutions Architect do in a business, and what does it take to become one?

What is an AWS Solutions Architect?

An AWS Solutions Architect is a technical professional who designs, deploys, and operates scalable, secure, and high-performing cloud applications on Amazon Web Services. The architect works with development teams and clients so that the architecture meets the business requirements and makes the best use of AWS capabilities.

AWS Solutions Architects are knowledgeable in cloud architecture, system design, networking, and security. They provide best-practice recommendations on cost savings, performance, and disaster recovery, and they help organizations get the most value from AWS in pursuit of their goals.

Key Responsibilities of an AWS Solutions Architect

The work of an AWS Solutions Architect spans a wide range of tasks. The core responsibilities are:

Cloud Solution Design: Solutions Architects work with stakeholders to gather business requirements and design cloud solutions that satisfy them. This involves selecting suitable AWS services, combining them into a coherent architecture, and designing highly available and fault-tolerant systems.

AWS Resource Monitoring and Management: They oversee the deployment and management of AWS resources such as EC2 instances, S3 buckets, and RDS databases, and ensure that resources scale and are optimized to match anticipated demand.

Security and Compliance: AWS Solutions Architects build cloud infrastructure with security best practices in mind. They use encryption, access management, and identity practices to protect sensitive information, and they help the organization meet its compliance requirements.

Cost Optimization: Managing costs is one of the biggest challenges of cloud deployment. AWS Solutions Architects analyze usage patterns and suggest ways to reduce spending without affecting performance, such as selecting cost-effective services, rightsizing instances, or purchasing Reserved Instances.

Troubleshooting and Support: AWS Solutions Architects identify and fix cloud infrastructure issues. They collaborate with operations and development teams to address technical issues, ensuring the cloud environment remains up and running.

Skills and Certifications for AWS Solutions Architects

To become an AWS Solutions Architect, the candidate must possess technical as well as soft skills. Some of the most important skills and certifications are listed below:

Technical Skills

AWS Services: Strong knowledge of AWS services such as EC2, S3, Lambda, CloudFormation, VPC, and RDS.

Cloud Architecture: Experience designing scalable, highly available, and fault-tolerant architectures on AWS.

Networking and Security: Clear knowledge of networking fundamentals, firewalls, load balancers, and security controls in the AWS environment.

DevOps Tools: Knowledge of automation tools such as Terraform, Ansible, and CloudFormation for managing infrastructure.

Soft Skills

Problem-Solving: Solutions Architects need strong analytical skills to break down complex issues and develop workable solutions.

Communication: The role requires clear communication with development teams, stakeholders, and clients.

Project Management: The role involves working across several projects at once, so tasks must be prioritized and managed well.

Certifications

Experience matters most, but certifications help demonstrate knowledge and a commitment to learning. AWS offers several relevant certifications:

AWS Certified Solutions Architect – Associate: The associate-level certification, covering the fundamentals of designing architectures on AWS.

AWS Certified Solutions Architect – Professional: The professional-level certification, reflecting more advanced knowledge of AWS services and architecture design.

AWS Certified Security – Specialty: A certification focused on AWS security practices.

Career Path and Opportunities for AWS Solutions Architects

Demand for AWS Solutions Architects is steadily gaining momentum as more and more businesses shift to the cloud. Most start out as cloud engineers, systems architects, or DevOps engineers. With the right skills, an AWS Solutions Architect can transition to senior positions such as cloud architect, enterprise architect, or even Chief Technology Officer (CTO).

Also, many AWS Solutions Architects work as independent consultants for various companies, while others are employed by large companies, consulting firms, or tech companies. The profession offers ample opportunities for advancement as well as work-life balance.

Conclusion

Becoming an AWS Solutions Architect takes a mix of technical expertise, problem-solving ability, and in-depth knowledge of AWS services and cloud computing principles. By designing cost-effective, secure, and adaptable cloud architectures, AWS Solutions Architects enable cloud success for companies. With more companies moving to the cloud every day, talented AWS Solutions Architects can look forward to strong demand in a rapidly expanding field.

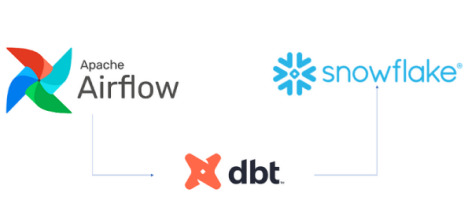

Building Data Pipelines with Snowflake and Apache Airflow

1. Introduction to Snowflake

Snowflake is a cloud-native data platform designed for scalability and ease of use, providing data warehousing, data lakes, and data sharing capabilities. Unlike traditional databases, Snowflake’s architecture separates compute, storage, and services, making it highly scalable and cost-effective. Some key features to highlight:

Zero-Copy Cloning: Allows you to clone data without duplicating it, making testing and experimentation more cost-effective.

Multi-Cloud Support: Snowflake works across major cloud providers like AWS, Azure, and Google Cloud, offering flexibility in deployment.

Semi-Structured Data Handling: Snowflake can handle JSON, Parquet, XML, and other formats natively, making it versatile for various data types.

Automatic Scaling: Automatically scales compute resources based on workload demands without manual intervention, optimizing cost.

2. Introduction to Apache Airflow

Apache Airflow is an open-source platform used for orchestrating complex workflows and data pipelines. It’s widely used for batch processing and ETL (Extract, Transform, Load) tasks. You can define workflows as Directed Acyclic Graphs (DAGs), making it easy to manage dependencies and scheduling. Some of its features include:

Dynamic Pipeline Generation: You can write Python code to dynamically generate and execute tasks, making workflows highly customizable.

Scheduler and Executor: Airflow includes a scheduler to trigger tasks at specified intervals, and different types of executors (e.g., Celery, Kubernetes) help manage task execution in distributed environments.

Airflow UI: The intuitive web-based interface lets you monitor pipeline execution, visualize DAGs, and track task progress.

3. Snowflake and Airflow Integration

The integration of Snowflake with Apache Airflow is typically achieved using the SnowflakeOperator, a task operator that enables interaction between Airflow and Snowflake. Airflow can trigger SQL queries, execute stored procedures, and manage Snowflake tasks as part of your DAGs.

SnowflakeOperator: This operator allows you to run SQL queries in Snowflake, which is useful for performing actions like data loading, transformation, or even calling Snowflake procedures.

Connecting Airflow to Snowflake: To set this up, you need to configure a Snowflake connection within Airflow. Typically, this includes adding credentials (username, password, account, warehouse, and database) in Airflow’s connection settings.

Example code for setting up the Snowflake connection and executing a query:

```python
from datetime import datetime

from airflow import DAG
from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator

default_args = {
    'owner': 'airflow',
    'start_date': datetime(2025, 2, 17),
}

with DAG('snowflake_pipeline', default_args=default_args, schedule_interval=None) as dag:
    run_query = SnowflakeOperator(
        task_id='run_snowflake_query',
        sql="SELECT * FROM my_table;",
        snowflake_conn_id='snowflake_default',  # The connection ID in Airflow
        warehouse='MY_WAREHOUSE',
        database='MY_DATABASE',
        schema='MY_SCHEMA',
    )
```

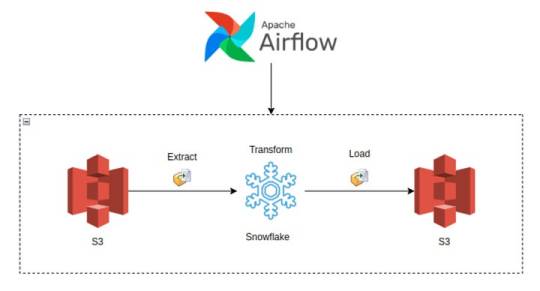

4. Building a Simple Data Pipeline

As a practical example of an ETL pipeline, let’s create a pipeline that:

Extracts data from a source (e.g., a CSV file in an S3 bucket),

Loads the data into a Snowflake staging table,

Performs transformations (e.g., cleaning or aggregating data),

Loads the transformed data into a production table.

Example DAG structure:

```python
from datetime import datetime

from airflow import DAG
from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator
# S3ToSnowflakeOperator ships with the Snowflake provider, not the Amazon one;
# newer provider releases replace it with CopyFromExternalStageToSnowflakeOperator.
from airflow.providers.snowflake.transfers.s3_to_snowflake import S3ToSnowflakeOperator

with DAG('etl_pipeline', start_date=datetime(2025, 2, 17), schedule_interval='@daily') as dag:
    # Extract data from S3 to Snowflake staging table
    extract_task = S3ToSnowflakeOperator(
        task_id='extract_from_s3',
        schema='MY_SCHEMA',
        table='staging_table',
        stage='MY_S3_STAGE',  # assumed name of an external stage pointing at the bucket
        file_format="(type = 'CSV', skip_header = 1)",  # assumed CSV layout
        s3_keys=['my-file.csv'],  # keys are relative to the stage location
        snowflake_conn_id='snowflake_default',
    )

    # Run the transformation and load the result into the production table
    transform_task = SnowflakeOperator(
        task_id='transform_data',
        # "conditions" is a placeholder for your own filter predicate
        sql='''INSERT INTO production_table SELECT * FROM staging_table WHERE conditions;''',
        snowflake_conn_id='snowflake_default',
    )

    extract_task >> transform_task  # Define task dependencies
```

5. Error Handling and Monitoring

Airflow provides several mechanisms for error handling:

Retries: You can set the retries argument in tasks to automatically retry failed tasks a specified number of times.

Notifications: You can use the email_on_failure or custom callback functions to notify the team when something goes wrong.

Airflow UI: Monitoring is easy with the UI, where you can view logs, task statuses, and task retries.

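Example of setting retries and notifications:

```python
from datetime import datetime

from airflow import DAG
from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator


def my_failure_callback(context):
    # Airflow passes the task context; here we only log which task failed
    print(f"Task failed: {context['task_instance'].task_id}")


with DAG('data_pipeline_with_error_handling', start_date=datetime(2025, 2, 17)) as dag:
    task = SnowflakeOperator(
        task_id='load_data_to_snowflake',
        sql="SELECT * FROM my_table;",
        snowflake_conn_id='snowflake_default',
        retries=3,
        email_on_failure=True,
        on_failure_callback=my_failure_callback,  # Custom failure function
    )
```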
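A failure callback usually does a little more than print a task ID. The sketch below shows one way such a callback might build a notification message from the context Airflow hands it. The names notify_on_failure and format_failure_message are hypothetical, and the fake context only imitates the task_instance and exception entries so the snippet runs without Airflow installed.

```python
from types import SimpleNamespace


def format_failure_message(context: dict) -> str:
    """Summarize a failed task from the callback context."""
    ti = context["task_instance"]
    error = context.get("exception")
    return f"Task {ti.dag_id}.{ti.task_id} failed on try {ti.try_number}: {error}"


def notify_on_failure(context: dict) -> None:
    # In a real pipeline this might post to chat or a paging tool;
    # printing keeps the sketch free of external dependencies.
    print(format_failure_message(context))


# Stand-in for the context Airflow would pass to the callback
fake_context = {
    "task_instance": SimpleNamespace(
        dag_id="data_pipeline_with_error_handling",
        task_id="load_data_to_snowflake",
        try_number=4,
    ),
    "exception": RuntimeError("warehouse suspended"),
}
notify_on_failure(fake_context)
```

A function like this could be passed as on_failure_callback on a task or in default_args so every task in the DAG reports failures the same way.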

6. Scaling and Optimization

Snowflake’s Automatic Scaling: Snowflake can automatically scale compute resources based on the workload. This ensures that data pipelines can handle varying loads efficiently.

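Parallel Execution in Airflow: You can split your tasks into multiple parallel branches to improve throughput. The task_concurrency argument (renamed max_active_tis_per_dag in Airflow 2.2) caps how many instances of a given task run at once, which helps keep parallel work within resource limits.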

Task Dependencies: By optimizing task dependencies and using Airflow’s ability to run tasks in parallel, you can reduce the overall runtime of your pipelines.

Resource Management: Snowflake supports automatic suspension and resumption of compute resources, which helps keep costs low when there is no processing required.
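To see why parallel branches shorten a pipeline, here is a rough sketch that computes the total runtime of a small DAG from per-task durations. The task names and durations are made up, and the model assumes unlimited workers, which a real Airflow deployment will not have.

```python
from graphlib import TopologicalSorter


def pipeline_runtime(durations: dict, upstream: dict) -> float:
    """Earliest finish time of the whole DAG, assuming unlimited parallel workers."""
    finish = {}
    # static_order() yields each task only after all of its upstream tasks
    for task in TopologicalSorter(upstream).static_order():
        start = max((finish[dep] for dep in upstream.get(task, ())), default=0.0)
        finish[task] = start + durations[task]
    return max(finish.values())


# Hypothetical task durations in minutes
durations = {"extract": 5, "load_a": 10, "load_b": 8, "merge": 3}

# Both loads depend only on extract, so they can run side by side
parallel = {"load_a": {"extract"}, "load_b": {"extract"}, "merge": {"load_a", "load_b"}}

# The same tasks chained one after another
sequential = {"load_a": {"extract"}, "load_b": {"load_a"}, "merge": {"load_b"}}

print(pipeline_runtime(durations, parallel))
print(pipeline_runtime(durations, sequential))
```

The parallel layout finishes when the slowest branch does, so trimming unnecessary dependencies between tasks is often the cheapest speed-up available.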

Qlik SaaS: Transforming Data Analytics in the Cloud

In the era of digital transformation, businesses need fast, scalable, and efficient analytics solutions to stay ahead of the competition. Qlik SaaS (Software-as-a-Service) is a cloud-based business intelligence (BI) and data analytics platform that offers advanced data integration, visualization, and AI-powered insights. By leveraging Qlik SaaS, organizations can streamline their data workflows, enhance collaboration, and drive smarter decision-making.

This article explores the features, benefits, and use cases of Qlik SaaS and why it is a game-changer for modern businesses.

What is Qlik SaaS?

Qlik SaaS is the cloud-native version of Qlik Sense, a powerful data analytics platform that enables users to:

Integrate and analyze data from multiple sources

Create interactive dashboards and visualizations

Utilize AI-driven insights for better decision-making

Access analytics anytime, anywhere, on any device

Unlike traditional on-premise solutions, Qlik SaaS eliminates the need for hardware management, allowing businesses to focus solely on extracting value from their data.

Key Features of Qlik SaaS

1. Cloud-Based Deployment

Qlik SaaS runs entirely in the cloud, providing instant access to analytics without requiring software installations or server maintenance.

2. AI-Driven Insights

With Qlik Cognitive Engine, users benefit from machine learning and AI-powered recommendations, improving data discovery and pattern recognition.

3. Seamless Data Integration

Qlik SaaS connects to multiple cloud and on-premise data sources, including:

Databases (SQL, PostgreSQL, Snowflake)

Cloud storage (Google Drive, OneDrive, AWS S3)

Enterprise applications (Salesforce, SAP, Microsoft Dynamics)

4. Scalability and Performance Optimization

Businesses can scale their analytics operations without worrying about infrastructure limitations. Dynamic resource allocation ensures high-speed performance, even with large datasets.

5. Enhanced Security and Compliance

Qlik SaaS offers enterprise-grade security, including:

Role-based access controls

End-to-end data encryption

Compliance with industry standards (GDPR, HIPAA, ISO 27001)

6. Collaborative Data Sharing

Teams can collaborate in real-time, share reports, and build custom dashboards to gain deeper insights.

Benefits of Using Qlik SaaS

1. Cost Savings

By adopting Qlik SaaS, businesses eliminate the costs associated with on-premise hardware, software licensing, and IT maintenance. The subscription-based model ensures cost-effectiveness and flexibility.

2. Faster Time to Insights

Qlik SaaS enables users to quickly load, analyze, and visualize data without lengthy setup times. This speeds up decision-making and improves operational efficiency.

3. Increased Accessibility

With cloud-based access, employees can work with data from any location and any device, improving flexibility and productivity.

4. Continuous Updates and Innovations

Unlike on-premise BI solutions that require manual updates, Qlik SaaS receives automatic updates, ensuring users always have access to the latest features.

5. Improved Collaboration

Qlik SaaS fosters better collaboration by allowing teams to share dashboards, reports, and insights in real time, driving a data-driven culture.

Use Cases of Qlik SaaS

1. Business Intelligence & Reporting

Organizations use Qlik SaaS to track KPIs, monitor business performance, and generate real-time reports.

2. Sales & Marketing Analytics

Sales and marketing teams leverage Qlik SaaS for:

Customer segmentation and targeting

Sales forecasting and pipeline analysis

Marketing campaign performance tracking

3. Supply Chain & Operations Management

Qlik SaaS helps optimize logistics by providing real-time visibility into inventory, production efficiency, and supplier performance.

4. Financial Analytics

Finance teams use Qlik SaaS for:

Budget forecasting

Revenue and cost analysis

Fraud detection and compliance monitoring

Final Thoughts

Qlik SaaS is revolutionizing data analytics by offering a scalable, AI-powered, and cost-effective cloud solution. With its seamless data integration, robust security, and collaborative features, businesses can harness the full power of their data without the limitations of traditional on-premise systems.

As organizations continue their journey towards digital transformation, Qlik SaaS stands out as a leading solution for modern data analytics.

Prevent Subdomain Takeover in Laravel: Risks & Fixes

Introduction

Subdomain takeover is a serious security vulnerability that occurs when an attacker gains control of an unused or misconfigured subdomain. If your Laravel application has improperly removed subdomains or relies on third-party services like GitHub Pages or AWS, it may be at risk. In this blog, we will explore the causes, risks, and how to prevent subdomain takeover in Laravel with practical coding examples.

A compromised subdomain can lead to phishing attacks, malware distribution, and reputational damage. Let’s dive deep into how Laravel developers can safeguard their applications against this threat.

🔍 Related: Check out more cybersecurity insights on our Pentest Testing Corp blog.

What is Subdomain Takeover?

A subdomain takeover happens when a subdomain points to an external service that has been deleted or is no longer in use. Attackers exploit this misconfiguration by registering the service and gaining control over the subdomain.

Common Causes of Subdomain Takeover:

Dangling DNS Records: A CNAME record still points to an external service that is no longer active.

Unused Subdomains: Old test or staging subdomains that are no longer monitored.

Third-Party Services: If a subdomain was linked to GitHub Pages, AWS, or Heroku and the service was removed without updating the DNS settings.

How to Detect a Subdomain Takeover Vulnerability

Before diving into the fixes, let’s first identify if your Laravel application is vulnerable.

Manual Detection Steps:

Check for dangling subdomains: Run the following command in a terminal:

nslookup subdomain.example.com

If the lookup fails to resolve while the subdomain's DNS record still points to an external service, the subdomain may be vulnerable.

Verify the HTTP response: If visiting the subdomain returns a "404 Not Found" or an error stating that the service is unclaimed, it is at risk.
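The HTTP check above can be partly automated by looking for the error text that hosting providers show for unclaimed sites. The sketch below matches a response body against a few such markers. The marker strings are assumptions based on commonly reported error pages and may change at any time, so treat a match as a hint to investigate, not as proof.

```python
from typing import Optional

# Assumed error-page markers; providers can change this wording without notice
UNCLAIMED_FINGERPRINTS = {
    "GitHub Pages": "There isn't a GitHub Pages site here",
    "Amazon S3": "NoSuchBucket",
    "Heroku": "No such app",
}


def match_unclaimed_service(body: str) -> Optional[str]:
    """Return the service whose 'unclaimed' marker appears in the body, if any."""
    lowered = body.lower()
    for service, marker in UNCLAIMED_FINGERPRINTS.items():
        if marker.lower() in lowered:
            return service
    return None


# Example bodies, shortened for illustration
print(match_unclaimed_service("<Error><Code>NoSuchBucket</Code></Error>"))
print(match_unclaimed_service("<h1>Welcome to our app</h1>"))
```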

Automated Detection Using Our Free Tool

We recommend scanning your website using our free Website Security Scanner to detect subdomain takeover risks and other security vulnerabilities.

📷 Image 1: Screenshot of our free tool’s webpage:

Screenshot of the free tools webpage where you can access security assessment tools.

How to Prevent Subdomain Takeover in Laravel

Now, let’s secure your Laravel application from subdomain takeover threats.

1. Remove Unused DNS Records

If a subdomain is no longer in use, remove its DNS record from your domain provider.

For example, in Cloudflare DNS, go to: Dashboard → DNS → Remove the unwanted CNAME or A record

2. Claim Third-Party Services Before Deleting

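If a subdomain points to GitHub Pages, AWS S3, or Heroku, remove or update its DNS record before you delete the service. Deleting the service first leaves a dangling record that an attacker can claim.

Example: If your subdomain points to a GitHub Pages site, keep the site and its custom-domain setting in place until the CNAME record has been removed.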

3. Implement a Subdomain Ownership Validation

Modify Laravel’s routes/web.php to prevent unauthorized access:

```php
Route::get('/verify-subdomain', function () {
    $host = request()->getHost();
    $allowedSubdomains = ['app.example.com', 'secure.example.com'];

    if (!in_array($host, $allowedSubdomains)) {
        abort(403, 'Unauthorized Subdomain Access');
    }

    return 'Valid Subdomain';
});
```

This route returns a 403 response when the request's host is not in the predefined list. Note that it only guards this one route; to enforce the allow-list across the whole app, the same check would need to live in middleware.

4. Use Wildcard TLS Certificates

If you manage multiple subdomains, use wildcard SSL certificates to secure them.

Example nginx.conf setup for Laravel apps:

```nginx
server {
    listen 443 ssl;
    server_name *.example.com;

    ssl_certificate /etc/ssl/certs/example.com.crt;
    ssl_certificate_key /etc/ssl/private/example.com.key;
}
```

5. Automate Monitoring for Subdomain Takeovers

Set up a cron job to check for unresolved CNAME records:

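```bash
#!/bin/bash
# Alert when the subdomain no longer resolves
if host subdomain.example.com | grep -q "not found"; then
    # The recipient address is redacted in the source; substitute your own
    echo "Potential Subdomain Takeover Risk Detected!" | mail -s "Alert" "[email protected]"
fi
```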

This script will notify administrators if a subdomain becomes vulnerable.
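If you track more than one subdomain, the same check is easy to run over a list. Here is a minimal Python sketch under that assumption. The function name find_unresolved and the host names are hypothetical, and the resolver is passed in as a parameter so the example runs with a fake one instead of touching the network.

```python
import socket
from typing import Callable, Iterable, List


def find_unresolved(
    hosts: Iterable[str],
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> List[str]:
    """Return the hosts that fail DNS resolution."""
    unresolved = []
    for host in hosts:
        try:
            resolve(host)
        except socket.gaierror:
            unresolved.append(host)
    return unresolved


def fake_resolver(host: str) -> str:
    # Simulates one dangling record without real DNS lookups
    if host == "old-staging.example.com":
        raise socket.gaierror("Name or service not known")
    return "192.0.2.10"


watched = ["app.example.com", "old-staging.example.com"]
print(find_unresolved(watched, resolve=fake_resolver))
```

A failed lookup is only one signal. A CNAME pointing at a shared hosting platform may still resolve after the site behind it is gone, so it is worth pairing this with a check of the HTTP response.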

Test Your Subdomain Security

To ensure your Laravel application is secure, use our free Website Security Checker to scan for vulnerabilities.

📷 Image 2: Screenshot of a website vulnerability assessment report generated using our free tool to check website vulnerability:

An Example of a vulnerability assessment report generated with our free tool, providing insights into possible vulnerabilities.

Conclusion

Subdomain takeover is a critical vulnerability that can be easily overlooked. Laravel developers should regularly audit their DNS settings, remove unused subdomains, and enforce proper subdomain validation techniques.

By following the prevention techniques discussed in this blog, you can significantly reduce the risk of subdomain takeover. Stay ahead of attackers by using automated security scans like our Website Security Checker to protect your web assets.

For more security tips and in-depth guides, check out our Pentest Testing Corp blog.

🚀 Stay secure, stay ahead!

Cloud Cost Optimization: Strategies for Maximizing Value While Minimizing Spend

Cloud computing offers organizations tremendous flexibility, scalability, and cost-efficiency. However, without proper management, cloud expenses can spiral out of control. Companies often find themselves paying for unused resources or inefficient architectures that inflate their cloud costs. Effective cloud cost optimization helps businesses reduce waste, improve ROI, and maintain performance.

At Salzen Cloud, we understand that optimizing cloud costs is crucial for businesses of all sizes. In this blog post, we’ll explore strategies for maximizing cloud value while minimizing spend.

1. Right-Sizing Cloud Resources

One of the most common sources of unnecessary cloud spending is over-provisioned resources. It’s easy to overestimate the required capacity when planning for cloud services, but this can lead to wasted compute power, storage, and bandwidth.

Best Practices:

Regularly monitor resource utilization: Use cloud-native monitoring tools (like AWS CloudWatch, Azure Monitor, or Google Cloud Operations Suite) to track the usage of compute, storage, and network resources.

Resize instances based on demand: Opt for flexible, scalable resources like AWS EC2 Auto Scaling, Azure Virtual Machine Scale Sets, or Google Compute Engine’s Managed Instance Groups to adjust resources dynamically based on your needs.

Perform usage reviews: Conduct quarterly audits to ensure that your cloud resources are appropriately sized for your workloads.
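As a rough illustration of a usage review, the sketch below turns a series of CPU utilization samples into a sizing hint. The 20% and 80% thresholds and the function name rightsizing_hint are assumptions chosen for the example, not provider recommendations; real reviews should also look at memory, disk, and network.

```python
from statistics import mean
from typing import Sequence


def rightsizing_hint(cpu_samples: Sequence[float], low: float = 20.0, high: float = 80.0) -> str:
    """Suggest a sizing action from CPU utilization percentages."""
    avg, peak = mean(cpu_samples), max(cpu_samples)
    if peak < low:
        # Even the busiest moment leaves most of the instance idle
        return "downsize"
    if avg > high:
        # The instance is busy most of the time
        return "upsize"
    return "keep"


# Hypothetical utilization samples for three instances
print(rightsizing_hint([5, 8, 12, 10]))
print(rightsizing_hint([85, 90, 95]))
print(rightsizing_hint([30, 50, 70]))
```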

2. Leverage Reserved Instances and Savings Plans

Cloud providers offer pricing models like Reserved Instances (RIs) and Savings Plans that provide significant discounts in exchange for committing to a longer-term contract (usually one or three years).

Best Practices:

Analyze your long-term cloud usage patterns: Identify workloads that are predictable and always on (e.g., production servers) and reserve these resources to take advantage of discounted rates.

Choose the right commitment level: With services like AWS EC2 Reserved Instances, Azure Reserved Virtual Machines, or Google Cloud Committed Use Discounts, choose the term and commitment level that match your needs.

Combine RIs with Auto Scaling: While Reserved Instances provide savings, Auto Scaling helps accommodate variable workloads without overpaying for unused resources.
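Whether a reservation pays off depends on how many hours the workload actually runs. The sketch below compares the two options for a given utilization. The hourly prices are made-up numbers, and the model assumes a reservation is billed for every hour whether or not the instance is used.

```python
HOURS_PER_MONTH = 730  # common approximation for an average month


def break_even_utilization(on_demand_hourly: float, reserved_hourly: float) -> float:
    """Fraction of the month a workload must run before reserving is cheaper."""
    return reserved_hourly / on_demand_hourly


def cheaper_option(utilization: float, on_demand_hourly: float, reserved_hourly: float) -> str:
    """Compare monthly cost of on-demand (pay per hour used) with reserved (pay always)."""
    on_demand_cost = on_demand_hourly * HOURS_PER_MONTH * utilization
    reserved_cost = reserved_hourly * HOURS_PER_MONTH
    return "reserved" if reserved_cost < on_demand_cost else "on-demand"


# Hypothetical prices: 0.10 per hour on demand, 0.06 effective per hour reserved
print(break_even_utilization(0.10, 0.06))
print(cheaper_option(0.5, 0.10, 0.06))
print(cheaper_option(0.9, 0.10, 0.06))
```

With these example prices, a workload running half the month is cheaper on demand, while an always-on server is a good candidate for a reservation.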

3. Optimize Storage Costs

Storage is another major contributor to cloud costs, especially when data grows rapidly or is stored inefficiently. Managing storage costs effectively requires regularly assessing your storage usage and ensuring that you're using the most appropriate types of storage for your needs.

Best Practices:

Use the right storage class: Choose the most cost-effective storage class based on your data access patterns. For example, Amazon S3 Standard for frequently accessed data, and S3 Glacier or Azure Blob Storage Cool Tier for infrequently accessed data.

Implement data lifecycle policies: Set up automatic policies to archive or delete obsolete data, reducing the amount of storage required. Use tools like AWS S3 Lifecycle Policies or Azure Blob Storage Lifecycle Management to automate this process.

Consolidate and deduplicate data: Use data deduplication techniques and ensure that you are not storing redundant data across multiple buckets or services.
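A lifecycle policy is just a small configuration document. The sketch below builds one in the shape S3 expects for a bucket lifecycle configuration, moving objects to cheaper storage classes and then expiring them. The prefix, rule ID, and day counts are assumptions for illustration; the dictionary is only printed here, not sent to AWS.

```python
import json


def build_lifecycle_configuration(prefix: str) -> dict:
    """Lifecycle rules: infrequent access after 30 days, archive after 90, delete after 365."""
    return {
        "Rules": [
            {
                "ID": "archive-then-expire",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 365},
            }
        ]
    }


lifecycle = build_lifecycle_configuration("logs/")
print(json.dumps(lifecycle, indent=2))
```

A document like this could be applied with the AWS SDK or CLI; scoping the rule with a prefix keeps it from touching data that must stay in standard storage.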

4. Take Advantage of Spot Instances and Preemptible VMs

For workloads that are flexible or can tolerate interruptions, Spot Instances (AWS), Preemptible VMs (Google Cloud), and Azure Spot Virtual Machines provide a great opportunity to save money. These instances are available at a significantly lower price than standard instances but can be terminated by the cloud provider with little notice.

Best Practices:

Leverage for non-critical workloads: Use Spot Instances or Preemptible VMs for workloads like batch processing, big data analytics, or development environments.

Build for fault tolerance: Design your applications to be fault-tolerant, allowing them to handle interruptions without downtime. Utilize services like AWS Auto Scaling or Google Kubernetes Engine for managing containerized workloads on Spot Instances.

5. Use Cloud Cost Management Tools

Most cloud providers offer built-in tools to monitor, track, and optimize cloud spending. These tools can provide deep insights into where and how costs are accumulating, enabling you to take actionable steps toward optimization.

Best Practices:

Enable cost tracking and budgeting: Use AWS Cost Explorer, Azure Cost Management, or Google Cloud Cost Management to track spending, forecast future costs, and set alerts when your spending exceeds budget thresholds.

Tagging for cost allocation: Implement a consistent tagging strategy across your resources. By tagging resources with meaningful identifiers, you can categorize and allocate costs to specific projects, departments, or teams.

Analyze and optimize recommendations: Take advantage of cost optimization recommendations provided by cloud platforms. For instance, AWS Trusted Advisor and Azure Advisor provide actionable recommendations for reducing costs based on usage patterns.
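Once resources are tagged, allocating cost is a simple grouping exercise. The sketch below sums spend per tag value from a list of billing line items. The item layout, tag key, and amounts are invented for the example; real billing exports have their own formats.

```python
from collections import defaultdict
from typing import Dict, Iterable


def cost_by_tag(line_items: Iterable[dict], tag_key: str) -> Dict[str, float]:
    """Sum cost per value of the given tag; untagged resources get their own bucket."""
    totals: Dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing line items
line_items = [
    {"resource": "ec2-web", "cost": 120.0, "tags": {"team": "web"}},
    {"resource": "s3-assets", "cost": 30.0, "tags": {"team": "web"}},
    {"resource": "rds-main", "cost": 45.5, "tags": {"team": "data"}},
    {"resource": "ebs-orphan", "cost": 10.0, "tags": {}},
]
print(cost_by_tag(line_items, "team"))
```

The "untagged" bucket is worth watching: it shows how much spend cannot yet be attributed to anyone.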

6. Automate Scaling and Scheduling

Many organizations leave resources running after hours or during off-peak times, which leads to unnecessary costs. Automating scaling and resource scheduling can help eliminate waste.

Best Practices:

Schedule resources to turn off during non-peak hours: Use AWS Instance Scheduler, Azure Automation, or Google Cloud Scheduler to automatically shut down development or staging environments during nights and weekends.

Auto-scale your infrastructure: Set up auto-scaling for your cloud infrastructure to automatically adjust the resources based on traffic demands. This helps ensure you're only using the resources you need when you need them.
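The scheduling rule itself is usually tiny. Here is a sketch of the decision a scheduler might make for a development environment, assuming it should run only on weekdays between 08:00 and 19:00 local time. The hours and the function name should_be_running are assumptions; the actual start and stop calls are left out.

```python
from datetime import datetime


def should_be_running(now: datetime, start_hour: int = 8, stop_hour: int = 19) -> bool:
    """True during weekday working hours, False on nights and weekends."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and start_hour <= now.hour < stop_hour


# A Monday morning, the same Monday late evening, and a Saturday morning
print(should_be_running(datetime(2025, 2, 17, 10, 0)))
print(should_be_running(datetime(2025, 2, 17, 22, 0)))
print(should_be_running(datetime(2025, 2, 22, 10, 0)))
```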

7. Monitor and Optimize Network Costs

Network costs are often overlooked but can be significant, especially for businesses with high data transfer volumes. Cloud providers often charge for data transfer across regions, availability zones, or between services.

Best Practices:

Optimize data transfer across regions: Minimize the use of cross-region data transfer by ensuring that your applications and data are located in the same region.

Use Content Delivery Networks (CDNs): Leverage CDNs (like AWS CloudFront, Azure CDN, or Google Cloud CDN) to cache static content closer to users and reduce the cost of outbound data transfer.

Consider dedicated connections: If you’re transferring large volumes of data between on-premises infrastructure and the cloud, look into solutions like AWS Direct Connect or Azure ExpressRoute to reduce data transfer costs.

8. Adopt a Cloud-Native Architecture

Using a cloud-native architecture that leverages serverless technologies and microservices can drastically reduce infrastructure costs. Serverless offerings like AWS Lambda, Azure Functions, or Google Cloud Functions automatically scale based on demand and only charge for actual usage.

Best Practices:

Embrace serverless computing: Move event-driven workloads or batch jobs to serverless platforms to eliminate the need for provisioning and managing servers.

Use containers for portability and scaling: Adopt containerization technologies like Docker and Kubernetes to run applications in a flexible and cost-efficient way, scaling only when needed.
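To judge whether serverless is cheaper for a workload, it helps to estimate the bill from its two usual components: a per-request charge and a charge for compute time scaled by memory. The sketch below does that arithmetic. The prices passed in are placeholders, not current list prices, and free tiers are ignored.

```python
def serverless_monthly_cost(
    requests: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate monthly cost as request charges plus GB-seconds of compute."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


# Placeholder prices: check your provider's pricing page for real values
estimate = serverless_monthly_cost(
    requests=1_000_000,
    avg_duration_s=0.2,
    memory_gb=0.5,
    price_per_million_requests=0.20,
    price_per_gb_second=0.00001,
)
print(round(estimate, 2))
```

Because cost scales with both duration and memory, shaving execution time or trimming the memory setting reduces the bill directly.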

9. Continuous Review and Improvement

Cloud cost optimization isn’t a one-time effort; it’s an ongoing process. The cloud is dynamic, and your usage patterns, workloads, and pricing models are constantly evolving.

Best Practices:

Regularly review your cloud environment: Schedule quarterly or bi-annual cloud cost audits to identify new optimization opportunities.

Stay updated on new pricing models and features: Cloud providers frequently introduce new pricing models, services, and discounts. Make sure to keep an eye on these updates and adjust your architecture accordingly.

Conclusion

Cloud cost optimization is not just about cutting expenses; it’s about ensuring that your organization is using cloud resources efficiently and effectively. By applying strategies like right-sizing resources, leveraging reserved instances, automating scaling, and utilizing cost management tools, you can drastically reduce your cloud spend while maximizing value.

At Salzen Cloud, we specialize in helping businesses optimize their cloud environments to maximize performance and minimize costs. If you need assistance with cloud cost optimization or any other cloud-related services, feel free to reach out to us!

AWS Data Analytics Training | AWS Data Engineering Training in Bangalore

What’s the Most Efficient Way to Ingest Real-Time Data Using AWS?

AWS provides a suite of services designed to handle high-velocity, real-time data ingestion efficiently. In this article, we explore the best approaches and services AWS offers to build a scalable, real-time data ingestion pipeline.

Understanding Real-Time Data Ingestion

Real-time data ingestion involves capturing, processing, and storing data as it is generated, with minimal latency. This is essential for applications like fraud detection, IoT monitoring, live analytics, and real-time dashboards.

Key Challenges in Real-Time Data Ingestion

Scalability – Handling large volumes of streaming data without performance degradation.

Latency – Ensuring minimal delay in data processing and ingestion.

Data Durability – Preventing data loss and ensuring reliability.

Cost Optimization – Managing costs while maintaining high throughput.

Security – Protecting data in transit and at rest.

AWS Services for Real-Time Data Ingestion

1. Amazon Kinesis

Kinesis Data Streams (KDS): A highly scalable service for ingesting real-time streaming data from various sources.

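Kinesis Data Firehose (since renamed Amazon Data Firehose): A fully managed service that delivers streaming data to destinations like S3, Redshift, or OpenSearch Service.

Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink): A service for processing and analyzing streaming data, originally with SQL and now with Apache Flink applications.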

Use Case: Ideal for processing logs, telemetry data, clickstreams, and IoT data.
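As a rough sketch of how a producer might write to Kinesis Data Streams, the snippet below groups events into batches for the PutRecords API, which accepts up to 500 records per call. The function names, the `device_id` partition-key field, and the stream name are hypothetical. `client` is assumed to be a boto3 Kinesis client (created with `boto3.client("kinesis")`), passed in so the batching logic can be exercised without AWS access.

```python
import json
from typing import Any, Dict, Iterable, List

MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def build_records(events: Iterable[Dict[str, Any]], key_field: str = "device_id") -> List[Dict[str, Any]]:
    """Turn event dicts into PutRecords entries (key_field is a hypothetical field name)."""
    return [
        {
            "Data": json.dumps(event).encode("utf-8"),
            # Records that share a partition key go to the same shard, which keeps their order.
            "PartitionKey": str(event[key_field]),
        }
        for event in events
    ]


def put_events(client: Any, stream_name: str, events: Iterable[Dict[str, Any]]) -> int:
    """Send events in batches; return how many records Kinesis reported as failed."""
    records = build_records(events)
    failed = 0
    for start in range(0, len(records), MAX_RECORDS_PER_CALL):
        batch = records[start:start + MAX_RECORDS_PER_CALL]
        response = client.put_records(StreamName=stream_name, Records=batch)
        failed += response.get("FailedRecordCount", 0)
    return failed
```

A production producer would normally retry the records reported as failed. This sketch only counts them.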

2. Amazon Managed Streaming for Apache Kafka (Amazon MSK)

Amazon MSK provides a fully managed Apache Kafka service, allowing seamless data streaming and ingestion at scale.

Use Case: Suitable for applications requiring low-latency event streaming, message brokering, and high availability.

3. AWS IoT Core

For IoT applications, AWS IoT Core enables secure and scalable real-time ingestion of data from connected devices.

Use Case: Best for real-time telemetry, device status monitoring, and sensor data streaming.

4. Amazon S3 with Event Notifications

Amazon S3 can be used as a real-time ingestion target when paired with event notifications, triggering AWS Lambda, SNS, or SQS to process newly added data.

Use Case: Ideal for ingesting and processing batch data with near real-time updates.
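To illustrate the S3 event notification pattern, here is a minimal sketch of a Lambda handler that extracts the bucket and key of each newly created object from the notification payload. The handler body is a placeholder, and the bucket and key names in the usage note are made up. The record layout (`Records[].s3.bucket.name`, `Records[].s3.object.key`) follows the S3 notification message format.

```python
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus


def extract_s3_objects(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for object-created records in an S3 notification."""
    objects = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue  # skip deletes and other event types
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Lambda entry point: a placeholder that only counts newly created objects."""
    objects = extract_s3_objects(event)
    return {"objects_seen": len(objects)}
```

For example, a notification for a hypothetical key `logs/my+file.json` in bucket `raw-data` would yield the pair `("raw-data", "logs/my file.json")`.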

5. AWS Lambda for Event-Driven Processing

AWS Lambda can process incoming data in real-time by responding to events from Kinesis, S3, DynamoDB Streams, and more.

Use Case: Best for serverless event processing without managing infrastructure.
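A Lambda function triggered by a Kinesis stream receives record payloads base64-encoded under `Records[].kinesis.data`. The sketch below decodes them, assuming each payload is a JSON object. The `temp` field and the threshold of 30 are invented for illustration.

```python
import base64
import json
from typing import Any, Dict, List


def decode_kinesis_records(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Decode the base64 payload of each Kinesis record, assuming JSON payloads."""
    decoded = []
    for record in event.get("Records", []):
        raw = base64.b64decode(record["kinesis"]["data"])
        decoded.append(json.loads(raw))
    return decoded


def handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Lambda entry point: count readings and flag hot ones (hypothetical rule)."""
    readings = decode_kinesis_records(event)
    alerts = [r for r in readings if r.get("temp", 0) > 30]
    return {"processed": len(readings), "alerts": len(alerts)}
```

Keeping the decoding in its own function makes it straightforward to unit-test the handler with hand-built events before deploying it.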

6. Amazon DynamoDB Streams

DynamoDB Streams captures real-time changes to a DynamoDB table and can integrate with AWS Lambda for further processing.

Use Case: Effective for real-time notifications, analytics, and microservices.
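The following sketch shows one way a Lambda function might summarize DynamoDB Streams records. Each record carries an `eventName` (INSERT, MODIFY, or REMOVE) and item images in DynamoDB's typed attribute format. Which images are present depends on the stream view type configured on the table, so the fallback order here is an assumption. Only the `S`, `N`, and `BOOL` types are handled.

```python
from typing import Any, Dict, List


def simplify(image: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Convert DynamoDB-typed attributes to plain values (S, N, and BOOL only)."""
    plain = {}
    for name, typed in image.items():
        if "S" in typed:
            plain[name] = typed["S"]
        elif "N" in typed:
            plain[name] = float(typed["N"])  # numbers are serialized as strings
        elif "BOOL" in typed:
            plain[name] = typed["BOOL"]
    return plain


def summarize_changes(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return one {'action', 'item'} summary per stream record."""
    changes = []
    for record in event.get("Records", []):
        data = record["dynamodb"]
        # Prefer the new image, then the old one, then just the keys.
        image = data.get("NewImage") or data.get("OldImage") or data.get("Keys", {})
        changes.append({"action": record["eventName"], "item": simplify(image)})
    return changes
```

A notification or analytics step could then branch on the `action` value of each summary.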

Building an Efficient AWS Real-Time Data Ingestion Pipeline

Step 1: Identify Data Sources and Requirements

Determine the data sources (IoT devices, logs, web applications, etc.).

Define latency requirements (milliseconds, seconds, or near real-time?).

Understand data volume and processing needs.

Step 2: Choose the Right AWS Service

For high-throughput, scalable ingestion → Amazon Kinesis or MSK.

For IoT data ingestion → AWS IoT Core.

For event-driven processing → Lambda with DynamoDB Streams or S3 Events.
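The selection guidance in Step 2 can be paraphrased as a small lookup. This is only an illustration of the decision logic as stated above, not an official sizing tool. The function name and the `source` values are hypothetical.

```python
from typing import List


def suggest_services(source: str, event_driven: bool = False) -> List[str]:
    """Map a workload description to the services suggested in Step 2."""
    if source == "iot":
        return ["AWS IoT Core"]
    if event_driven:
        return ["AWS Lambda with DynamoDB Streams or S3 events"]
    # Default case: high-throughput, scalable stream ingestion.
    return ["Amazon Kinesis", "Amazon MSK"]
```

Real selection would also weigh the latency and volume requirements gathered in Step 1.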

Step 3: Implement Real-Time Processing and Transformation

Use Kinesis Data Analytics or AWS Lambda to filter, transform, and analyze data.

Store processed data in Amazon S3, Redshift, or OpenSearch Service for further analysis.

Step 4: Optimize for Performance and Cost

Plan capacity for traffic spikes: use on-demand capacity mode in Kinesis Data Streams or MSK Serverless, or scale shards and brokers in provisioned deployments.

Use Kinesis Firehose to buffer and batch data before storing it in S3, reducing costs.

Implement data compression and partitioning strategies in storage.
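As a sketch of the compression and partitioning ideas in Step 4, the code below gzips a batch of events as newline-delimited JSON and builds a time-partitioned object key. The Hive-style `year=/month=/day=/hour=` layout, the prefix, and the batch identifier are assumptions chosen for illustration. Uploading the bytes to S3 is left out.

```python
import gzip
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def partitioned_key(prefix: str, ts: datetime, batch_id: str) -> str:
    """Build a Hive-style, time-partitioned object key (the layout is an assumption)."""
    return (
        f"{prefix}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}/"
        f"{batch_id}.json.gz"
    )


def compress_batch(events: List[Dict[str, Any]]) -> bytes:
    """Serialize events as newline-delimited JSON and gzip the result."""
    body = "\n".join(json.dumps(e, sort_keys=True) for e in events) + "\n"
    return gzip.compress(body.encode("utf-8"))


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    sample = [{"id": i, "status": "ok"} for i in range(1000)]
    blob = compress_batch(sample)
    print(partitioned_key("events", now, "batch-0001"), len(blob), "bytes")
```

Partitioning keys by time lets query engines skip objects outside the requested range, and writing fewer, larger compressed objects reduces both storage and request costs.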

Step 5: Secure and Monitor the Pipeline

Use AWS Identity and Access Management (IAM) for fine-grained access control.

Monitor ingestion performance with Amazon CloudWatch and AWS X-Ray.
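For the fine-grained access control mentioned in Step 5, a producer can be limited to writing to a single stream. The sketch below builds such an IAM policy document as a Python dictionary. The region, account ID, and stream name in the usage line are placeholders, and the `Sid` value is arbitrary.

```python
import json


def producer_policy(region: str, account_id: str, stream_name: str) -> dict:
    """Return an IAM policy that allows writes to one Kinesis stream only."""
    stream_arn = f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteToOneStream",
                "Effect": "Allow",
                "Action": ["kinesis:PutRecord", "kinesis:PutRecords"],
                "Resource": stream_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own region, account, and stream.
    print(json.dumps(producer_policy("us-east-1", "123456789012", "demo-stream"), indent=2))
```

Scoping `Resource` to one stream ARN, rather than `*`, keeps a compromised producer from writing to other streams.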

Best Practices for AWS Real-Time Data Ingestion

Choose the Right Service: Select an AWS service that aligns with your data velocity and business needs.

Use Serverless Architectures: Reduce operational overhead with Lambda and managed services like Kinesis Firehose.

Plan for Scale: Use Kinesis on-demand capacity (or resharding in provisioned mode) and enough Kafka partitions to spread load across consumers.

Minimize Costs: Optimize data batching, compression, and retention policies.

Ensure Security and Compliance: Implement encryption, access controls, and AWS security best practices.

Conclusion

AWS provides a comprehensive set of services to efficiently ingest real-time data for various use cases, from IoT applications to big data analytics. By leveraging Amazon Kinesis, AWS IoT Core, MSK, Lambda, and DynamoDB Streams, businesses can build scalable, low-latency, and cost-effective data pipelines. The key to success is choosing the right services, optimizing performance, and ensuring security to handle real-time data ingestion effectively.


Visualpath offers AWS Data Engineering training, including a Data Engineering course in Hyderabad, with experienced trainers and real-time projects that help students build practical and interview skills. Recorded sessions are accessible 24/7.

For more information about AWS Data Engineering training:

Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/online-aws-data-engineering-course.html

#AWS Data Engineering Course#AWS Data Engineering training#AWS Data Engineer certification#Data Engineering course in Hyderabad#AWS Data Engineering online training#AWS Data Engineering Training Institute#AWS Data Engineering training in Hyderabad#AWS Data Engineer online course#AWS Data Engineering Training in Bangalore#AWS Data Engineering Online Course in Ameerpet#AWS Data Engineering Online Course in India#AWS Data Engineering Training in Chennai#AWS Data Analytics Training


Looking for DevOps Training in Pune? Join CloudWorld Now!

In today's fast-paced tech world, the demand for skilled DevOps professionals is soaring. Organizations are looking for ways to integrate development and operations to streamline processes, automate workflows, and improve collaboration across teams. If you're eager to boost your career in the IT industry, enrolling in DevOps training in Pune is a fantastic way to gain the skills necessary to meet this growing demand.

CloudWorld is a leading DevOps training institute in Pune, offering top-notch DevOps courses in Pune that provide comprehensive knowledge and hands-on experience. Whether you're new to DevOps or seeking to advance your skills, CloudWorld is the best choice for DevOps classes in Pune.

Why Choose DevOps Training in Pune?

Pune is a hub for tech professionals, making it the perfect city to pursue specialized training programs. As a top-tier DevOps training institute in Pune, CloudWorld offers in-depth training on various tools and techniques used in modern DevOps workflows. The training is designed to equip you with the skills needed to work with CI/CD pipelines, automation, cloud infrastructure, containerization, and more.

By completing a DevOps course in Pune, you'll be prepared for a wide range of roles, including DevOps Engineer, Cloud Architect, and Automation Specialist. The city's thriving IT ecosystem ensures that you have ample career opportunities to choose from.

What to Expect from DevOps Training in Pune at CloudWorld?

Expert Trainers: CloudWorld boasts a team of certified trainers with years of real-world experience. Their expertise in the field ensures you receive the best DevOps training classes in Pune.

Comprehensive Curriculum: The DevOps course in Pune covers essential topics such as:

Introduction to DevOps and Agile methodologies

Continuous Integration (CI) & Continuous Delivery (CD)

Infrastructure as Code (IaC)

Automation tools like Jenkins, Ansible, Chef, and Puppet

Docker & Kubernetes for containerization and orchestration

Monitoring and Logging

Cloud platforms (AWS, Azure, Google Cloud)

Hands-On Projects: The DevOps classes in Pune at CloudWorld offer practical exposure through real-time projects. You'll work on live projects, building your confidence in handling DevOps tools and technologies.

AWS DevOps Classes: CloudWorld specializes in AWS DevOps classes in Pune, ensuring you gain hands-on experience with AWS services like EC2, S3, RDS, Lambda, and more. These skills are highly sought after in the IT industry.

Placement Assistance: One of the standout features of CloudWorld is its DevOps classes in Pune with placement support. The institute collaborates with top companies and offers assistance to help you land your dream job in the DevOps field. Whether you're a fresher or an experienced professional, their dedicated placement team works to connect you with employers who are looking for DevOps talent.

Benefits of Choosing CloudWorld for DevOps Training

Flexible Learning Options: CloudWorld offers both online and offline training options, allowing you to choose a schedule that fits your lifestyle.

Affordable Fee Structure: The DevOps training in Pune is priced competitively to ensure that quality education is accessible to everyone.

Post-Course Support: CloudWorld offers continued guidance even after course completion, helping you stay updated with the latest trends in the DevOps industry.

Conclusion

If you're searching for the best DevOps classes in Pune, CloudWorld stands out as the top choice. With expert trainers, a comprehensive curriculum, hands-on projects, and placement assistance, CloudWorld offers everything you need to jump-start your DevOps career.

Don't miss out on the opportunity to enhance your skills and secure your place in one of the fastest-growing fields in the tech industry. Enroll in DevOps training in Pune today and take the first step toward a successful future in DevOps!

#online devops training in pune#devops training in pune#devops classes in pune#devops course in pune#best devops classes in pune#aws devops classes in pune#best devops training institute in pune#devops classes in pune with placement


The Role of an AWS Solutions Architect: Key Skills, Responsibilities, and Career Prospects

In today's digital world, cloud computing sits at the heart of business operations. Amazon Web Services (AWS) is among the most widely adopted and versatile cloud platforms, which has created strong demand for professionals who can design and manage cloud solutions. One role that has grown in importance is the AWS Solutions Architect.

What is an AWS Solutions Architect?

An AWS Solutions Architect is a professional who designs, deploys, and manages applications and systems on the AWS platform. These architects work with companies to identify needs and then use the AWS suite of services to create secure, scalable, and cost-effective solutions. The role demands a strong understanding of AWS services, architectural best practices, and the requirements of the business or industry being served.

Key Responsibilities of an AWS Solutions Architect

Designing Cloud Solutions: The primary duty of an AWS Solutions Architect is to design cloud solutions that are scalable and dependable. This includes selecting the AWS services best suited to the company's needs, building security and compliance into the system design, and delivering an architecture that performs well at low cost.

Client and Stakeholder Collaboration: Solutions Architects usually work directly with clients to understand their technical and business requirements. They also work with other stakeholders, providing technical guidance so that the delivered solution is tailored to the business requirements.

Security and Compliance: Security is a high priority in cloud computing, and AWS Solutions Architects ensure that their designs meet industry standards and regulatory requirements. They use encryption, access controls, and network security protocols to secure systems and data.

Optimization and Cost Management: AWS services are flexible and come in various configurations. A cloud architect designing an AWS solution should optimize cloud resources for both performance and cost. This is achieved through choosing the correct pricing models, resource allocation management, and regular review of the architecture to determine the potential areas of improvement.

Troubleshooting and Support: An AWS Solutions Architect should be able to diagnose issues and provide ongoing support for cloud-based applications and systems. This includes monitoring system performance, identifying potential problems, and applying solutions as needed.

Essential Skills for an AWS Solutions Architect

Deep knowledge of AWS services: An AWS Solutions Architect must know the core AWS services well, such as EC2, S3, RDS, Lambda, and VPC, and must know how to combine them into comprehensive cloud solutions.

Architectural best practices: They must be expert in designing secure, scalable, and highly available cloud architectures. Familiarity with the AWS Well-Architected Framework is a plus.