#ai errors are actually pretty distinct

Explore tagged Tumblr posts

Text

Hmm something about how some people are pointing at art that is "bad" or not anatomically accurate and shouting "it's ai!" and how that's mildly terrifying

#ai errors are actually pretty distinct#i mean#the generators produce boring glitches too#but their tells are distinctive#and to just see a janky looking hand or neck and accuse a real person of being an image generator is really shitty so maybe don't#'this is bad so it must be ai'#idk there's plenty of options

3 notes

·

View notes

Note

Your Morrowind graphics look sooo beautiful! Do you have a list of the mods you used? I'd really appreciate it if you could share it!

Sure! I'd be happy to share my setup =)

My modlist is cobbled together from various modding guides, suggestions by friends who've played Morrowind, and my own personal preferences as I've played and come across things I felt like changing (like the pond scum lol!).

I tried to leave out most mods that had zero to do with Graphics/changes you can see in the world, and I also tried to keep my descriptions short, though if you were only asking for a simple load order then I apologize, oops!

The Engine

MGE XE (this is absolutely vital for those distant views/awesome light and water shaders and other features!)

Morrowind Code Patch (needed for bump/reflection maps to look right!)

Meshes/Textures/Overhauls

Morrowind Optimization Patch (improves performance/fixes some mesh errors!)

Patch for Purists (squashes so many bugs while avoiding unnecessary changes!)

Intelligent Textures (full AI upscaled/hand-edited texture pack of the game, excellent as a base if you plan to add on more targeted replacers later!)

Enhanced Water Shader for MGE XE--OR--Krokantor's Enhanced Water Shader Updated: (depends on which version of MGE XE you're using; if 0.13.0 you'll want the Updated version, and if earlier you need the older one. 3 shades of water to choose from; improved caustics, foam, ripples, underwater effects; and no more weird immersion-breaking moment when you would previously tilt the camera just beneath the surface and it would suddenly be perfectly clear. Absolutely gorgeous water!)

Animation Compilation-Hand to Hand Improved Without Almalexia Spellcasting (idk if this counts, but it does fix the Visual of that weird vanilla running animation!)

Better Bodies and Westly's Pluginless Replacer (a friend told me to get Robert's bodies, but BB is also very good and seems to be the most widely used + many mods need it, like Julan!)

Pluginless Khajiit Head pack (prettier kitties!)

Improved Argonians (better looking lizard-friends!)

Children of Morrowind (adds realism by having kids running around your towns!)

Julan, Ashlander Companion [v3.0 at bottom of this page] (ok not a graphics mod, but will add much immersion to your game, so I will shill for him anyway!)

Vibrant Morrowind 3.0/4.0 (this one I actually don't have installed yet, but I love the way Vivec looks in the screenshots!)

abot Water Life (adds aquatic creatures/things like algae and coral to make Morrowind's waters more alive!)

Vurt's Corals (found on Vurt's Groundcover page; adds gorgeous corals and new water plants!)

Vurt's Ashlands Overhaul (can choose between gnarly trees or vanilla-style!)

Vurt's Groundcover (gorgeous animated grass and vegetation that differs for each region!)

Vurt's Solstheim Tree Replacer II (more realistic trees and snowy pines!)

Vurt's Bitter Coast Trees II (5 additional unique trees!)

Vurt's Bitter Coast Trees II Remastered (mesh fixes/optimizations for the trees!)

Vurt's Leafy West Gash II (more trees, and optional rope bridge texture!)

Vurt's Ascadian Isles Tree Replacer II (v10a recommended for better-sized trees without clipping issues; TREES!!)

Articus Bush Replacer for Vurt AI Trees II (new model for bush tree + bark retexture!)

Vurt's Grazeland Trees II (really cool palms and Baobab trees!)

Vurt's Mournhold Trees II (beautiful animated cherry blossom trees!)

I Lava Good Mesh Replacer (better lava mesh, has no flickering with effects like steam!)

Remiros' Minor Retextures - Mist (much nicer spooky mist in Ancestral Tombs!)

Unto Dust (adds atmospheric floating dust motes, kinda like in Skyrim barrows!)

Graphic Herbalism MWSE (improved meshes and Oblivion-style harvesting!)

Glow in the Dahrk (windows transition to glowing versions at night!)

Ashfall (super awesome and very configurable Camping/Survival/Cooking/Needs mod!)

Watch the Skies (dynamic weathers/weather changes inside/randomized clouds etc!)

Seasonal Weather of Vvardenfell (weather changes throughout the year!)

Taddeus' Foods of Tamriel (adds Ashfall compatible foods and ovens for baking!)

More Wells (add-on for Ashfall/more immersive since access to water is pretty important!)

Diverse Blood (because not everything should bleed Red when you poke it with a spear!)

Lived Towns - Seyda Neen (adds more containers/clutter to make it feel more lived-in!)

Better Waterfalls (adds splash effects/water spray, better running water texture!)

Waterfalls Tweaks (resized water splash to blend better!)

Dunmer Lanterns Replacer (smoother/more-detailed-yet-optimized lanterns + paper lanterns!)

Telvanni Lighthouse Tel Vos (fits in perfectly with Azura's Coast region!)

Telvanni Lighthouse Tel Branora (very atmospheric, works well with surroundings!)

Palace of Vehk (Vivec's Palace feels lived-in instead of sad and empty!)

Ships of the Imperial Navy (immersive addition to Imperial waterfront areas!)

Striderports (gives caravaners some shelter and comfort while standing there all day!)

Illuminated Palace of Vivec (decorates palace steps + shrines with devotion candles and flowers left by followers!)

Scum Retexture - Alternative 2 (better looking pond scum in Bitter Coast region!)

Full Dwemer Retexture (I went with Only Armor/Robots/Weapons; nice high quality retex!)

Blighted Animals Retextured (I chose Darknut's 1024; blighted animals have their own sickly textures now!

Vivec Expansion 3.1 Tweaked Reworked (adds a hostel/many wooden walkways to Vivec on the water!)

Atmospheric Plazas (Vivec's plazas now have weather/sunlight! Be sure to use MCP's Rain fix to keep it from pouring as if there's no roof!)

Gemini's Realistic Snowflakes (more organic texture with more depth!)

Severa Magia DB fix (makes hideout actually appropriate to Dark Brotherhood!)

Starfire's NPC Additions (more populated towns and settlements!)

Hold It (adds items for NPCs to hold and carry, based on their class; very immersive!)

Suran-The Pearl of the Ascadian Isles (I went with White Suran Complete package; stunning retexture that also adds docks/waterfront!)

Atmospheric Delights (a more fitting mood inside the House of Earthly Delights!)

Guars Replacer-Aendemika of Vvardenfell (pluginless makeover for our scaly friends!)

Silt Strider by Nwahs and Mushrooms Team (great new model+textures for these cool bug-buses!)

Skar Face (giant crab manor in Ald-ruhn gets claws and legs!)

Armor/Clothing

Redoran Founders Armor (Redoran councilors stand out in this cool set!)

Morag Tong Polished (bug fixes/Armor Replacer/restored cut content for the faction!)

Rubber's Weapons Pack (several unique weapons/shields get distinct models!)

Yet Another Guard Diversity (generic copypasta guards now have variation!)

Better Silver Armor (adds missing pieces of silver armor to make full set!)

Royal Guard Better Armor (pluginless armor replacer for the Royal Guards!)

RR Mod Series Better Silt Strider Armor (cooler bug men in your Ashlander camps!)

Armored Robes NPC Compilation (some Ordinators/Mabrigash/others will wear distinct robes of their station!)

Full Dragonscale Armor Set v1.3a (adds the missing pieces to make the set complete!)

Mage Robes (robes for every magic school, many MG members will wear their respective ones!)

Quorn Resource Integration (lore-friendly armor/creatures added to leveled lists to be encountered in game!)

Better Clothes (non-segmented clothing replacer to fit Better Bodies!)

More Better Clothes (additional shirts that were missed in the first one!)

Better Clothes Complete (fixes many problems and 1st person clipping issues for BC!)

Better Clothes Retextured (high-res retextures for nearly all base game clothes!)

Hirez Better Clothes (3 shirts retextured in high quality!)

Better Morrowind Armor (BB compatible armor replacer!)

Dark Brotherhood Armor Replacer (changes DB armor to look more like concept art!)

Bonemold Armor Replacer (much nicer-looking Bonemold armor!)

Westly's Fine Clothiers of Tamriel (very high quality clothes that you will see many NPCs wearing too!)

Orcish Retexture v1.2 (beautifully done armor retexture!)

Daedric Lord Armor (much improved Daedric set, very fierce!)

Ebony Mail Replacer (awesome new model+tex that changes it to actual chainmail!)

I use Wrye Mash to install my mods, though I think a lot of people use MO2. Haaa, now that I've made this list I have the strong urge to just run around Morrowind taking even more screenshots =)

26 notes

·

View notes

Text

I think people know by now how to tell if an image of a person is AI-generated. Count the fingers, count the knuckles, check the pupils, yadda yadda. I've seen several posts circulating about what to look for. However, I think people are a LOT less educated about backgrounds, and about the specific distinctions between human error and AI error. So that's what I'm going to cover.

Now, don't feel bad if you've reblogged or liked any of the images I'm about to show you guys. This is just what's crossed my blog, so it's what I have to work with. (Actually, thanks for providing the examples!)

I also generated a few images from crAIyon purely for demonstrational purposes, because I didn't have anything on-hand to show my thoughts.

Firstly — Keep in mind that AI has a difficult time replicating "simple" styles. Think colorless line-drawings, cartoony pieces with thick lines, and pixel art.

Looks unsettling, right?

Why is this? Well, when a human makes art, we're more prone to under-detailing by mistake than over-detailing, because adding detail in the first place place is more effort. A skilled artist should be good able to capture an idea with minimal, evocative shape language.

But when an AI makes art, it is the opposite. An AI doesn't understand what it's looking at, not in the way that you or I do. All it can do is search for and replicate patterns in the noise of pixels. As a result, it is prone to mushing together features in ways that a human artist . . . wouldn't intentionally think to do.

It also over-details, replicating what it knows over and over again because it doesn't know when it's supposed to stop. Blank spaces can confuse it! It likes having detail to work with! Detail Is Data!

Again, this is why we count fingers.

These general principles still apply when we're looking at styles that an AI is better equipped to imitate. So . . .

Secondly — AI's tendency to over-render details makes it easier for it to pick up heavily detailed styles, especially if the style will still hold up when certain details are indistinct or merge together unexpectedly.

Scrutinize images that utilize a painterly, heavily-rendered, or photo-realistic style. Such as this one.

Thirdly — An AI piece that looks pretty good from a distance falls apart up close.

The above image looks almost like a photograph, but there is architecture here that you wouldn't find in a real room, and mistakes that you wouldn't find in the work of an artist that is THIS good at rendering. Or most beginner artists, even.

Can you see what falls apart here? Hint; we're counting fingers again.

Check the window panes. Isn't the angle that they all meet up at a little off? Why are the panes sized so inconsistently? Why doesn't the view outside of them all line up into a cohesive background?

Count the furniture legs. Why does the farther-back case have a third leg? Why does the leg on the closer case vanish so strangely behind the flowery details?

Examine the curtain(?) fabric at the top of the window. What on earth IS that frilly stuff?

Another mistake that AI will make is drawing lines and merging details that a human artist would never think of as connected. See the lines crawling up the walls? See how some of the flower petals glop together at hard angles in some places? Yeah, that's what I'm talking about.

You can see more strange architecture in the outdoor setting of this image.

A lot of the AI's mistakes are almost art nouveau! We recognize that buildings are consistently angular, for stability reasons. An AI does not. (Also look at the trees in the background, and how they tend to warp and distort around the outline of the treehouse. They kinda melt into each other at some points. It's wild.)

Fourthly — An AI will replicate any carelessness that was introduced into its original data set.

Obviously, this means that AIs will make fake watermarks, but everybody already knows that. What I need you guys to look out for is something else. It's called artifacting.

Artifacting is defined as "the introduction of a visible or audible anomaly during the processing or transmission of digital data." To put it in layman's terms, you know how an image gets crunchy and pixelated if you save it as a jpg? Yeah. That. An AI with lots of crusty, crunchy jpgs fed into it will produce crunchy images.

Look at the floor at the bottom of our original example image;

See the speckles all along the glass panels, table legs, and flowers in shadow? Artifacted to hell and back! This shit is crunchier than my spine after spending half a day hunched over my laptop.

Again, legitimate art and photography may have artifacting too just because of file formatting reasons. But most artists don't intentionally artifact their own images, and furthermore, the artifacting will not be baked into the very composition of the image itself. The speckles will instead gather most notably on flat colors at the border of different color patches and/or outlines.

Cronchy memes; funny. Cronchy AI art; shitty jpg art theft caught red-handed.

That's probably all the lessons I can impart in one post. Class dismissed! As homework a bonus, consider these two sister images to our original flower room. Can you spot any signs of AI generation?

@wolven-writer I hope this helps!

You know those aesthetic image posts that float around tumblr? I'm . . . starting to see a lot on my dash that are obviously ai-generated. Are non-artists having trouble telling the difference between AI images and real photos, or are people starting to stop care about the stolen art that gets fed into those programs?

#some AI is good enough that it does this stuff minimally#AND YET#people are posting obvious images anyway#because they can't tell the things they generate willy-nilly with a click of a button from things that artists do intentionally#anyway please spread this

43K notes

·

View notes

Text

Locked

(gif not mine)

Pairing: Loki x Reader (ft. the Avengers)

Content/Warnings: Angst; fluff; Clint being a bit of a jerk

Words: 1603

A/N: Well, the long awaited sequel to Spinning has finally arrived. I kinda drew inspiration from Imagine Dragons’ song Next To Me for this fic, so make sure to check out that song because it’s honestly awesome. Anyways, enjoy!

Part 1

For several moments, you weren’t completely sure if Thor was planning on attacking or congratulating Loki. Jane seemed to be thinking along the same lines, moving to place a restraining hand on Thor’s chest, but the thunder god finally relaxed and gave his brother a beaming smile. “Well congratulations then, brother!”

“Thank you, Thor,” Loki said smoothly, pulling his sleeve back down. “Though, if you don’t mind, I would like to get to know my soulmate, so if you could take your friends elsewhere…”

“Oh, right, of course. Come Jane, Darcy. Stark has put in a new microwave, it even speaks to you!” Thor said happily. Jane looked amused, as did Darcy, who sent you a discreet thumbs up before pulling the door shut behind her.

Your fingers tapped nervously against your thigh. “So…”

“Would you like to sit?” Loki offered, waving towards the couch.

“Sure, yeah.” You accepted the invitation, taking a seat on the couch and tucking a leg under you. “So you’re stuck here, huh?”

“There are worse fates, I suppose,” He mused, stretching an arm across the back of the couch. “What about you? Where are you from? I’ve been reading up on Earth’s geography, though I can’t promise I’ll recognize the place.”

“Well, you know where Thor was first banished to? New Mexico? That’s where I live, I went there for college which is how I met Jane and Darcy,” You explained. “We’re a pretty unlikely group of friends, to be honest.”

Loki’s lips flicked upwards into what could almost be called a smile. “I had noticed. So you were there when Thor was mortal?”

“No. I was actually visiting family and missed all the action, believe it or not. Just my luck.”

“It’s probably for the best, I did try to kill him. I’m thankful that you weren’t there to get caught in the crossfire,” He admitted.

“That’s one thing I don’t get. Why do you hate Thor so much? I mean, it’s obvious that he cares about you,” You pointed out.

That gave Loki pause. “I suppose we just never really gotten along. He’s Thor, the oh-so-perfect Asgardian prince, and I’m Loki, the frost giant who will never be good enough.”

“That’s not true,” You said softly, honestly surprised he had confided in you like that. “Loki, adopted or not, the two of you are brothers. It’s obvious that Thor doesn’t care that you’re a frost giant, he just cares that you’re his brother. And for the record, I don’t care that you’re a frost giant, either.”

He didn’t appear to be convinced. “We’ll see.”

You ended up moving into Stark Tower a couple weeks after meeting Loki. Both he and the other Avengers wanted to make sure you were safe from external threats, which considering your soulmate was Loki, was probably a good idea. It was only natural for you to want to, anyways, since that’s where your soulmate was, but the fact that you were hanging around a gang of superheroes took some getting used to. Not to mention the fact that one of the most hated men on Earth was your soulmate, but that was a whole other can of worms.

There was a learning curve when it came to being around super spies and technical geniuses, but you ended up learning pretty quickly. In fact, there were three things you learned within your first week. One: never leave food unattended, otherwise it will get eaten. Two: don’t attempt to sneak up on Natasha. It didn’t end well for Tony and you doubted it would end any better for you. And three: never leave Clint and Loki alone in a room together.

Understandably, Clint didn’t particularly like Loki. Saying Clint loathed Loki would be a more apt phrase. Unfortunately, this meant Clint would pick a fight with the god of mischief which ended with Clint getting his ass handed to him or Loki stalking away to sulk for a few hours.

About three weeks into you moving into the Tower, however, things went a bit too far. You had spent most of the day with Natasha, the two of you having a girl’s day getting to know each other better, and when you returned you were intent on going straight to find Loki. He could usually be found in his room, or the library, or even the common room on the rare occasion that he was feeling like speaking with the other Avengers. However, he was nowhere to be found.

“Have any of you seen Loki?” You asked, addressing Tony, Clint, and Steve who were discussing their latest mission. “I can’t find him anywhere.”

Clint choked on the water he was drinking, causing the other two men to give him confused glances. Tony shrugged. “No, I haven’t. I’m sure Reindeer Games is around somewhere, just use your compass. That is what it’s there for.”

“I saw him in the library this morning, if that helps,” Steve offered. “But I haven’t seen him since, sorry. Clint?”

The archer cleared his throat. “Oh, um… no. I haven’t seen him.”

“He’s probably just with Thor or something,” You said with a shrug. “Thanks anyways, guys.”

You gave them a wave before wandering off, deciding to just chill in your room and watch some Netflix, though you couldn’t rid yourself of the distinct feeling that something was wrong. You had been encouraged (mainly by Steve) to follow your gut, especially if you thought something wasn’t right, so you glanced down at your compass and started walking in the direction it was pointed.

It took a little bit of trial and error to figure out what floor Loki was on, though you figured once your compass stopped wobbling uncertainly that you were in the right place. You were surprised that he was on the floor devoted mainly to training and gym activities and followed the compass on your skin towards the showers.

He wasn’t anywhere to be seen, and the floor was quiet as the Avengers were all off eating dinner. “Loki?” You called, padding through the shower room. There was no answer. “Jarvis, have you seen Loki?” Perhaps you should have asked the AI first.

“He appears to be in the sauna,” Jarvis responded promptly.

“Well what’s he doing in there?” You muttered, mostly to yourself. You jiggled the handle of the sauna door - it was locked. Something was definitely wrong. “Jarvis, can you unlock the door to the sauna?”

“Of course,” Jarvis said, the lock clicking a moment later. You yanked the door open, immediately assaulted with a wave of nearly nauseating heat.

You barely had to go in to find your soulmate, slumped against the wall by the door. “Loki? Are you okay?”

He stirred at your statement, glancing up at you warily. You let out an audible gasp at his appearance - his skin was completely blue and his eyes were red. The heat must have forced him back into his natural frost giant form. “No.”

“Jarvis, turn off the heat in the sauna,” You ordered, crouching down to drape his arm over your shoulder and heave him up. “Let’s get you out of here.”

You had to half-drag Loki out of the sauna, and he was heavier than he looked. “Here, lean against the wall,” You ordered, yanking open the curtain to the nearest shower and turning it on, making it as cold as possible. “Get under the water.”

He managed to stumble over, and you got significantly doused whilst preventing him from falling face-first on the tile. The icy water seemed to revive him. “Y/N?” He asked.

“Yeah, it’s me,” You confirmed. “Are you okay?”

“I’ll live,” He said, still leaning heavily on you.

“Here, we need to get your shirt off. It’ll help cool you down,” You ordered, and you jumped as his form flashed and a majority of his clothes vanished, sans what appeared to be a pair of swim trunks. “I thought you couldn’t do magic?”

“I can do some. Not a lot. Don’t tell Thor,” Loki admitted. He seemed to be regaining his strength, at least enough that he was speaking coherently once more, and it was only now that he realized his skin was blue rather than its normal pale color. He looked down at himself, then back at you, then at himself once more.

“It’s okay,” You assured him.

“I look like a monster,” He spat, disgust in his voice. “It’s not okay. Not to mention you should have frostbite from touching me.”

“Well, you don’t want to give me frostbite, do you?” You asked. His head gave a brief shake. “I figured as much. I believe you have more control than you think you do, Loki. And I don’t think you look like a monster.”

He snorted. “You’re lying.”

“No, I’m not. I’ll prove it.” You pulled him down into a kiss, only moderately surprised when he didn’t jerk away and instead wrapped an arm around your waist to deepen the kiss. His lips were cool, moving in perfect sync with your own.

Later, after you had punched Clint for locking Loki in the sauna, you’d claim that you and Loki had your first kiss out in Central Park and not under an icy shower. But the two of you knew, and many months later Loki admitted to you that that moment was when he realized he had fallen in love with you. You smiled, said you knew, and told him you loved him too.

Life was good with the man you loved. And you never had a directionless compass again.

Tag List: @the-crime-fighting-spider @micachu1331 @esoltis280 @ilvermornyqueen @teaand-cookies @alittlebitofmagic @bluebird214 @lovely-geek @fleurs-en-ruines @loki-god-of-my-life @awesomehaylzus @ldyhawkeye @ldyhawkeye @small-wolf-in-the-snow @the-bleeding-rose @momc95 @loki-laufey-son @hp-hogwartsexpress @haven-in-writing @alivingfanlady @micachu1331 @little-miss-mischief1 @pepperr-pottss @t-talkative @lady-loki-ren @usedtobeabaker @val-kay-rie @inn-ocuous @xclo02 @ex-bookjunky @stone2576 @dkpink123 @loki-laufey-son

#loki#loki x reader#loki imagine#loki fanfiction#loki x you#marvel#marvel x reader#marvel imagine#loki laufeyson#loki laufeyson x reader#loki laufeyson imagine#reader#reader insert#x reader

1K notes

·

View notes

Text

Top-notch Web Design & Development Trends You Should In 2021

Technology keeps on evolving as humans realize new ways to innovate, doing things quicker and creatively than they did before. Developers are perpetually trying ahead to explore new technologies that may propel them towards a brighter future. The trendy business is steadily developing, and new web technologies arise each day.

These new trends supply tons of prospects for entrepreneurs who need interaction with more users and keep ahead during this competitive market. These advantages and innovation within the space of custom web design and development have galvanized several entrepreneurs to speculate in website development. However, making the proper website isn't as simple as it seems.

Web design and development describes the process of creating a website. The umbrella term involves web design and web development, where web design determines the look and feel of a website, while web development determines how it works. Hiring a professional web design company for your business website has many benefits since the experienced company can assist you with the latest trends and development in the market. Here are the top seven web design and development trends that you should consider in 2021.

1. AI and chatbots

Artificial intelligence is a revolutionary technology that has been employed in varied industries. It's conjointly generally enforced within the field of web development in various ways to produce an additional robust client experience. One among the numerous ways within which AI is implemented is for merchandise or content recommendations for websites. Websites of YouTube and Netflix are solid examples of how AI is used to recommend content to users as per their preferences.

It's believed that this year bots can become more self-learning and meet a specific user’s necessities and match behavior, which means that operating bots can take the place of support managers 24/7 Associate in the system. Therefore AI may be a technology that's helpful for business automation and prognostication strategies. Additionally, AI conjointly helps in enhancing your website through reinforcement learning.

2. Progressive web Apps (PWA)

Among the highest trends in web development, we must always mention PWA (Progressive web apps) technology. It suggests that it blends within the practicality of an internet app and a native mobile app. It operates severally on the browser and interacts with the shopper as a native application. So, it's an internet app at the core; however, it has native app-like attributes. You'll be able to install a PWA similar to a native app and may share it as well. Recently, many companies prefer custom website development so that they will have a unique design for their customers.

3. Accelerated Mobile Pages (AMP)

Accelerated Mobile Pages (AMP) is a trend in web development to hurry up page performance and cut back the prospect of users feat it. AMP technology is incredibly near to PWA. The distinction is that in AMP, pages become accelerated due to the ASCII text file plugin developed by Twitter and Google. With additional individual's mobile devices, your website should supply seamless expertise to users on mobiles. Websites typically fail to realize this side as websites are big, having tons of content like images, videos, etc., affects those web pages' loading speed. An experienced web design and development can help you solve many issues related to developing websites.

AMP solves this problem that loads quickly on mobile devices. These web pages are specifically optimized to assure the quick loading of webpages on mobile devices while not the necessity for classy coding. They have a reduced and nonetheless convenient style with solely basic options compared to full-scope net results. These web pages are mobile-friendly, and their content is often readable. You'll be able to take up AMP development services to make a fast-loading website that mobile users can use on their mobile devices.

4. Serverless Design

2020 was marked by the difference of work-from-home by corporations thanks to the unfolding of Covid-19. Cloud applications have enlarged even more throughout this year owing to this. Custom website design, mutually of the foremost speedily growing domains, documented a considerable rise in serverless design demand. Consistent with the State of the cloud report by Flexera, 98% of enterprises adopt a minimum of one public or personal cloud. Serverless algorithms were designed as a cloud-computing execution model. The latest trends offered by web design and development services involve serverless app architecture facilitating decreased development and progress maintenance budgets, strengthening apps, and keeping the web atmosphere sustainable.

5. Motion UI

This web development trend falls in the style of web products. Startups are paying additional attention to the user experience. Pleasant-looking websites and apps have more probability of being detected by doable users and become viral. In a very Salesforce Report, it's explicit that 84% of shoppers read the website's look as necessary because of the actual service it offers. Web design isn't perpetually concerning pretty pictures, and it is about making responsive interfaces that your users can like.

6. Automation Take A Look At

Automation testing utilizes an automation tool to perform the task. To line up automation testing for a startup business is to attenuate the number of test cases that need to run manually instead of dropping manual testing. Automation testing is finished not solely to urge higher ROI; however, conjointly resulting from it will increase the scope of test coverage and rule out human interference and human errors. Why is test automation so necessary for web development in 2021? The solution may be a digital atmosphere that becomes additional and more competitive. If you're quicker than your competitors and the quality of your product and services is healthier — you may succeed.

7. Blockchain Technology

Cryptocurrencies aren't the most recent web development technology. The conception of them appeared initially in 2004, and many years ago, the crypto mercantilism market (based on blockchain technology) explored investments. What will we tend to expect in 2021, significantly within the field of web development? The usage of blockchain currency trading became considerably active at intervals over the past decade, and major payment systems began to settle for Bitcoins and alternative cryptocurrencies.

Conclusion:

Websites have become additional and more refined as powerful strategies and technologies are being introduced within web development. You will have to make use of those preceding web development trends to develop strong and out-of-the-box websites. Besides, developing a website with Auxesis Infotech is less complicated as you can have an expert team from the company to make attractive websites.

#webdesigntrends#webdesign#websitdeign#webdesigner#ui#ux#design#webdesigninsprations#website#elegantthemes#websitedevelopment#uxdesign#uidesign#responsivedesign#webdesignagency#webdeveloper#webdevelopmenttrends#onlinewebstore#financeandbanking#wellness#travel#healthcare#newsandpublocations#realestate#socialnetworking#elearning#sports#restaurantandfooding

0 notes

Text

OI???

i wonder how's he doing it. i participated in a similar project so i'm gonna try to explain what i know about ways to ✨read one's mind™✨

option 1. motor imagery

we were making an orthosis (i may use the wrong words since i didn't read anything in english abt this) for paralyzed folks who had a stroke, so basically we needed to detect the intention to bend a finger and then bend it artificially. this is the most straightforward way to do it, but also the most ineffective.

you literally just imitate the brain activity of moving your arm. and it is not as easy as it sounds. it actually takes trial and error and work

our methods are imprecise bc we don't read the activity of every neuron or smth, we gather the average from large groups (otherwise it would require invasive methods aka surgery). therefore we could differ between left arm, right arm, probably legs and that's about it

overall it's not what we want to use to play minecraft

option 2. P-300

that's what we used. it's frequently used to make things like a keyboard or some kind of remote control, y'know, with EEG (aka the helmet that reads brainwaves).

afaik usually there are a few flashing lights, but you concentrate on one of them and your brain reacts to it in a distinct way

a lot of flashing lights are involved when there are a lot of buttons. personally i'm not a big fan, but apparently people think that's okay?

option 3. i know nothing about this one but it sounds very fun

i dont know how it works, but with this one it may be a common occurrence. like you just put on the helmet and teach your neuronets (either brain or AI idk. likely both) like a dog. you try and if you're happy with what's going on, they keep it up; if you're not, they adjust their behavior. (again i don't know shit about this one and i'm probably wrong.) i've heard of two cases when this was used:

people sat in front of a screen with a solid colour that was somehow calculated from their brain activity, and the colour fluctuated (bc brain waves are pretty chaotic) until it came to the steady state of the colour brain liked the most (often green or orange)

a person learned to drive a remote control car with that. i think the person was the head of our laboratory but idk i've never seen him irl

to me this one sounds like it's just your pure will translated into a sequence of commands. mere magic. i hope this one is used.

out of everything that happened today, i feel like we should talk more about how fundy bought a helmet that reads fucking brainwaves and how he’s going to create a self-learning ai so he can use it to play minecraft. that’s fucking insane

#fundy#mcyt#literally me: oh! fundy is doing things i know something about while others might not! >#let me infodump about my previous unfinished project while procrastinating my current one despite it being due a week ago!#anyways i'm trying to teach tumblr users neurobiology rn. i know i'm doomed to fail but i would be really happy if i don't so >#so if you're still reading please rb. it would mean the absolute world to me <3#btw this is a quote from a youtube channel called fungithefox that i'm subscribed to. i think the intonations are really cool ksjssksjksjsk#it's a fundy clip channel#i need to stop this rant at once

495 notes

·

View notes

Text

appmon afterthoughts

appmon is finally over! it’s been a great journey. ;v; i drop shows easily when watching them week by week so i prefer binge-watching them at once, so appmon is the first show of this length that i managed to watch as it aired all the way through! (i dropped off somewhere in neovamdemon’s arc when trying to keep up with xros wars, haha. i did go back and finish it after that though!)

my personal preference of seasons: frontier > adventure > *appmon* > savers > 02 > hunters > tamers > tri > xros wars (as usual i still love all the seasons!! this is just if i had to rank them. i won’t deny that the 7 death generals arc was a bit of a drag for me though..)

here are my (LONG and incoherent) thoughts after watching the series, spoilers under the cut.

characters: - gosh i love the main cast so much!! ;v; i’m also glad that the appmon get a fair amount of characterisation and focus too (though still not as much as their human buddies), i feel there are times when digimon gives focus to the humans but in turn sacrifice some of the focus that their monster partners get. - i live for character interactions, so while i’m glad that haru/eri/astra interact with each other a lot, it’s a bit disappointing to see how little interaction rei and yuujin get with eri and astra. :( and hackmon never really interacts with the others much, or at all..i like hackmon, but it’d be nice to see him talk to someone other than rei for once. - i love the character growth in this season so much ;; possibly just behind frontier. eri and astra’s growth wasn’t as overt possibly due to how they express their personalities, but they throw a lot of it about the ‘filler’ eps and it all comes together really nicely. haru gets visibly stronger and more confident throughout the show, and rei’s change in reaction to his applidrive’s “are you alone?” question alone says so much. - on that note, i LOVE how they handled yuujin’s question (would you give your life up for a friend). in the end, it’s not those flashy scenes where you take a fatal hit for someone, but yuujin giving his life up not just to save humanity, but more importantly to save haru from having to shoulder the heavy burden of actually making the choice to kill yuujin. i thought that was a really powerful scene and it really got to me. - (shipping) haru and rei...i don’t care if it’s romantic or platonic or whatever i just love seeing them interact so, so much. people who know i like other pairs like seliph/ares, aichi/kai, etc...it’s the same pattern, nice pure boy gets the brooding edgy jerk to open up. i am a predictable person lol

story: - there are a lot of fillers. (but what is a digimon season without fillers?) i like fillers myself (probably why i like hunters when many people hate it), but i read the wtw comment threads every week and you get tons of complaints every time it hits a filler ep, and i can somewhat understand their frustration. appmon can be a drag to watch if you’re the kind who hates fillers. (i don’t deny a few fillers like the maripero ep did bore me though) - appmon does handle the main plot progression better than hunters though, despite the still whack pacing, and the fillers still tend to have nice character bits/growth. i love hunters but i won’t defend its absolute disregard for plot then trying to cram everything in at the last minute haha. still there are a number of unanswered questions..while i do agree that not all questions necessarily need answering, they can still provide deeper insight to characters. - personally, i liked how they kept the lightheartedness of the story while touching on salient AI-related issues. but while they bring up some very interesting issues, i don’t feel like they addressed them satisfactorily (at least from my pov)? leviathan’s aim with the humanity applification plan was to eradicate problems like conflict, disease, and human error from humanity, which is in a way even backed up by haru’s grandpa, who mentions “being data is great! without a physical body, one has no need to worry about injuries or sickness”, coming from someone who died in part because of sickness. you can see where the protags are coming from, but they never really address these ‘benefits’ of the humanity applification plan and how the benefits of not going through with the plan would outweigh the benefits of going through. - app-fusion might work well as a game mechanic, but i think it only serves to detract from the story in the anime, at least the way it is right now. for two series whose evolution is centred around fusion, xros wars handles fusion much better, utilising more creativity in both using and fusing the ‘fodder digimon’. appmon just tends to forget its fodder appmon exist. i personally think that appmon would be better off if its app-fusions were treated as simple evolutions instead (that’s pretty much how they treat the buddy appmon anyway; globemon is pretty much treated as ‘evolved gatchmon’, rather than an actual fusion of dogatchmon and timemon), that way you don’t get the nagging feeling that the fusion fodder appmon are just..fodder. - speaking of app-fusion, i have to say i personally prefer the more emotion-driven evolutions from the earlier seasons, rather than the evolutions achieved by getting the correct chip as we see in appmon. it makes sense from a gameplay perspective, but in context of the anime it feels..less impactful, i guess? i just always love seeing the bonds between the humans and their partners get tested, and become even stronger. - on an unrelated note, i find it funny that the show has a subplot involving two computer genius brothers and the cicada 3301 thing, mainly because i have a FDD story centered around the same idea (that i don’t make progress on at all. it probably looks like an appmon ripoff now but i don’t care haha)

designs: - i love the standard grade main appmon designs, they’re all so cute ;w; they have this distinct style in mind and i think they pulled it off well. (i’ve warmed up to musimon’s design A LOT from when he was first revealed, but i do still think it could be slightly less cluttered) - the ultimate grades are PERFECT, they’re some of my favourite digimon designs and possibly one of my favourite ‘group’ of designs out of protagonist digimon!! (possibly only bested by the frontier beast spirits and maybe the savers ultimates/tamers adults? haha) i just...yes. they’re amazing. i love them so so SO much - i’m not a fan of the direction they took with the god grades (maybe because i love the ultimate grades too much lol). all the gold didn’t sit too well with me either, maybe because we already had so much gold in xros wars? i do think they make great ‘final forms’ for the protagonist mons, but personally i still greatly prefer all their other forms to their god forms. i’m a bit more partial to hadesmon than the others because i LOVE jesmon, but hm...hadesmon still looks a lot more gaudy..like jesmon’s gaudy little brother. hahaha - i think the level system is a nice simplification from digimon. hopefully this means we can see appmon in future digimon games..they would be easier to implement than xw digimon anyway, haha;;

animation: - like many others i was skeptical about the making of higher-grade appmon 3DCG at first, though it eventually grew on me. the fights between 3DCG appmon were nicely done, but seeing the difference in animation between the 2D characters and 3DCG appmon was jarring, especially in shots where they’re together, mostly because of the framerate..the 3DCG appmon are animated on 1s? while the humans are animated on 3s like normal anime, it’s a big difference. thankfully most 3DCG fights don’t bring in the humans much. - the models/3D animation are still pretty well done! and i appreciate that they didn’t render them cel-shaded like what most anime do with 3D models (i remember translating the appmon interview mentioning why they did this, before appmon started airing; i was skeptical but now i can see what they were going for and i think it turned out well!) - after watching appmon i think 3DCG is a nice move for toei though, because we all know toei’s animation quality...could be better? hahaha. but i find toei’s weakness isn’t so much layout/choreography, but more of sometimes poorly-drawn frames, bad timing, or too little inbetweens, some of which are solved with 3DCG. you can especially see the contrast with digimon tri’s fight scenes; highly detailed digimon like jesmon for example would’ve benefited greatly from 3DCG, i know how painful it is to translate all of its details to 2D animation but as you can see it results in quite a number of not-as-nicely drawn frames. - special mention to charismon because i really like how he was modeled/rigged. those eyes!! can you imagine duskmon in 3D doing that and with those creepy sound effects too. - i’m not a huge fan of the palettes used in the AR-fields..(i didn’t like how the digiquartz was depicted that much either, and their depictions are quite similar so yeah) i can definitely see the effect they’re going for, but it felt more ‘kiddy alien-ish’ than ‘digital’ to me.

music: - i found the music quite ok (i liked DiVE!! and BE MY LIGHT though!), but i guess it didn’t match up to my personal tastes as much :x sadly appmon might be the lowest of the digimon seasons when it comes to music for me, i liked that endings are back! but the songs themselves didn’t captivate me as much as the previous seasons’ ending songs did. - on that note i’m glad they put in an insert song though! i guess i’m just really big on insert songs in digimon because as a kid i printed out the lyrics to brave heart and the other evo songs and loved singing along when they played in the show. lol - i remember complaining about this when the first episode aired, and my opinion still hasn’t changed 52 eps in. i CANNOT stand the applidrive voice at all hahaha (and the speed-up effect they use when app-linking/fusing) - the character songs are cute!! i’m personally really glad they decided to make them :) - the background music was pretty nice and had some memorable tracks..i’m not quite sure how i’d compare it to the rest? i liked all the soundtracks so far, though xros wars’ and frontier’s osts stood out especially for me.

13 notes

·

View notes

Text

Improving Medical AI Safety by Addressing Hidden Stratification

Jared Dunnmon

Luke Oakden-Rayner

By LUKE OAKDEN-RAYNER MD, JARED DUNNMON, PhD

Medical AI testing is unsafe, and that isn’t likely to change anytime soon.

No regulator is seriously considering implementing “pharmaceutical style” clinical trials for AI prior to marketing approval, and evidence strongly suggests that pre-clinical testing of medical AI systems is not enough to ensure that they are safe to use. As discussed in a previous post, factors ranging from the laboratory effect to automation bias can contribute to substantial disconnects between pre-clinical performance of AI systems and downstream medical outcomes. As a result, we urgently need mechanisms to detect and mitigate the dangers that under-tested medical AI systems may pose in the clinic.

In a recent preprint co-authored with Jared Dunnmon from Chris Ré’s group at Stanford, we offer a new explanation for the discrepancy between pre-clinical testing and downstream outcomes: hidden stratification. Before explaining what this means, we want to set the scene by saying that this effect appears to be pervasive, underappreciated, and could lead to serious patient harm even in AI systems that have been approved by regulators.

But there is an upside here as well. Looking at the failures of pre-clinical testing through the lens of hidden stratification may offer us a way to make regulation more effective, without overturning the entire system and without dramatically increasing the compliance burden on developers.

What’s in a stratum?

We recently published a pre-print titled “Hidden Stratification Causes Clinically Meaningful Failures in Machine Learning for Medical Imaging“.

Note: While this post discusses a few parts of this paper, it is more intended to explore the implications. If you want to read more about the effect and our experiments, please read the paper

The effect we describe in this work — hidden stratification — is not really a surprise to anyone. Simply put, there are subsets within any medical task that are visually and clinically distinct. Pneumonia, for instance, can be typical or atypical. A lung tumour can be solid or subsolid. Fractures can be simple or compound. Such variations within a single diagnostic category are often visually distinct on imaging, and have fundamentally different implications for patient care.

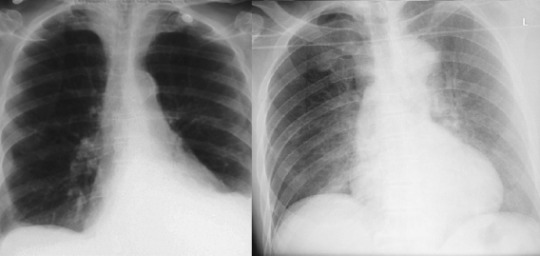

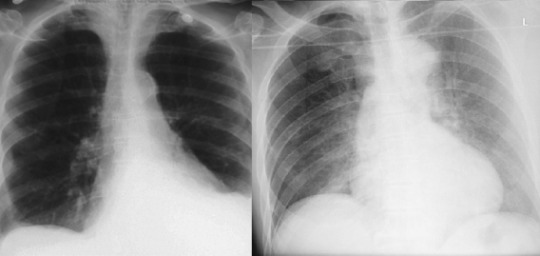

Examples of different lung nodules, ranging from solid (a), solid with a halo (b), and subsolid (c). Not only do these nodules look different, they reflect different diseases with different patient outcomes.

We also recognise purely visual variants. A pleural effusion looks different if the patient is standing up or is lying down, despite the pathology and clinical outcomes being the same.

These patients both have left sided pleural effusions (seen on the right of each image). The patient on the left has increased density at the left lung base, whereas the patient on the right has a subtle “veil” across the entire left lung.

These visual variants can cause problems for human doctors, but we recognise their presence and try to account for them. This is rarely the case for AI systems though, as we usually train AI models on coarsely defined class labels and this variation is unacknowledged in training and testing; in other words, the the stratification is hidden (the term “hidden stratification” actually has its roots in genetics, describing the unrecognised variation within populations that complicates all genomic analyses).

The main point of our paper is that these visually distinct subsets can seriously distort the decision making of AI systems, potentially leading to a major difference between performance testing results and clinical utility.

Clinical safety isn’t about average performance

The most important concept underpinning this work is that being as good as a human on average is not a strong predictor of safety. What matters far more is specifically which cases the models get wrong.

For example, even cutting-edge deep learning systems make such systematic misjudgments as consistently classifying canines in the snow as wolves or men as computer programmers and women as homemakers. This “lack of common sense” effect is often treated as an expected outcome of data-driven learning, which is undesirable but ultimately acceptable in deployed models outside of medicine (though even then, these effects have caused major problems for sophisticated technology companies).

Whatever the risk is in the non-medical world, we argue that in healthcare this same phenomenon can have serious implications.

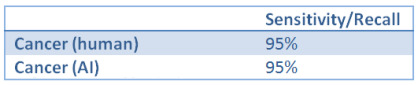

Take for example a situation where humans and an AI system are trying to diagnose cancer, and they show equivalent performance in a head-to-head “reader” study. Let’s assume this study was performed perfectly, with a large external dataset and a primary metric that was clinically motivated (perhaps the true positive rate in a screening scenario). This is the current level of evidence required for FDA approval, even for an autonomous system.

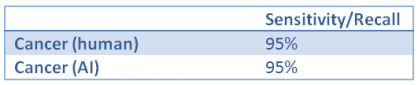

Now, for the sake of the argument, let’s assume the TPR of both decision makers is 95%. Our results to report to the FDA probably look like this:

TPR is the same thing as sensitivity/recall

That looks good, our primary measure (assuming a decent sample size) suggests that the AI and human are performing equivalently. The FDA should be pretty happyª.

Now, let’s also assume that the majority of cancer is fairly benign and small delays in treatment are inconsequential, but that there is a rare and visually distinct cancer subtype making up 5% of all disease that is aggressive and any delay in diagnosis leads to drastically shortened life expectancy.

There is a pithy bit of advice we often give trainee doctors: when you hear hoofbeats, think horses, not zebras. This means that you shouldn’t jump to diagnosing the rare subtype, when the common disease is much more likely. This is also exactly what machine learning models do – they consider prior probability and the presence of predictive features but, unless it has been explicitly incorporated into the model, they don’t consider the cost of their errors.

This can be a real problem in medical AI, because there is a less commonly shared addendum to this advice: if zebras were stone cold killing machines, you might want to exclude zebras first. The cost of misidentifying a dangerous zebra is much more than that of missing a gentle pony. No-one wants to get hoofed to death.

In practice, human doctors will be hyper-vigilant about the high-risk subtype, even though it is rare. They will have spent a disproportionate amount of time and effort learning to identify it, and will have a low threshold for diagnosing it (in this scenario, we might assume that the cost of overdiagnosis is minimal).

If we assume the cancer-detecting AI system was developed as is common practice, it probably was trained to detect “cancer” as a monolithic group. Since only 5% of the training samples included visual features of this subtype, and no-one has incorporated the expected clinical cost of misdiagnosis into the model, how do we expect it to perform in this important subset of cases?

Fairly obviously, it won’t be hypervigilant – it was never informed that it needed to be. Even worse, given the lower number of training examples in the minority subtype, it will probably underperform for this subset (since performance on a particular class or subset should increase with more training examples from that class). We might even expect that a human would get the majority of these cases right, and that the AI might get the majority wrong. In our paper, we show that existing AI models do indeed show concerning error rates on clinically important subsets despite encouraging aggregate performance metrics.

In this hypothetical, the human and the AI have the same average performance, but the AI specifically fails to recognise the critically important cases (marked in red). The human makes mistakes in less important cases, which is fairly typical in diagnostic practice.

In this setting, even though the doctors and the AI have the same overall performance (justifying regulatory approval), using the AI would lead to delayed diagnosis in the cases where such a delay is critically important. It would kill patients, and we would have no way to predict this with current testing.

Predicting where AI fails

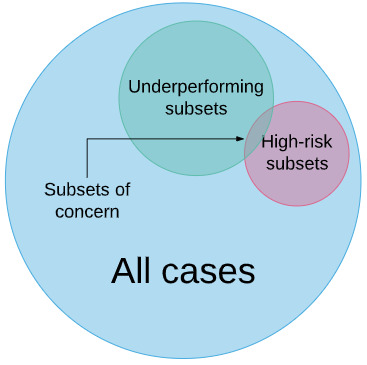

So, how can we mitigate this risk? There are lots of clever computer scientists trying to make computers smart enough to avoid the problem (see: algorithmic robustness/fairness, causal machine learning, invariant learning etc.), but we don’t necessarily have to be this fancy^. If the problem is that performance may be worse in clinically important subsets, then all we might need to do is identify those subsets and test their performance.

In our example above, we can simply label all the “aggressive sub-type” cases in the cancer test set, and then evaluate model performance on that subset. Then our results (to report to the FDA would be):

As you might expect, these results would be treated very differently by a regulator, as this now looks like an absurdly unsafe AI system. This “stratified” testing tells us far more about the safety of this system than the overall or average performance for a medical task.

So, the low-tech solution is obvious – you identify all possible variants in the data and label them in the test set. In this way, a safe system is one that shows human-level performance in the overall task as well as in the subsets.

We call this approach schema completion. A schema (or ontology) in this context is the label structure, defining the relationships between superclasses (the large, coarse classes) and subclasses (the fine-grained subsets). We have actually seen well-formed schemas in medical AI research before, for example in the famous 2017 Nature paper Dermatologist-level classification of skin cancer with deep neural networks by Esteva et al. They produced a complex tree structure defining the class relationships, and even if this is not complete, it is certainly much better than pretending that all of the variation in skin lesions is explained by “malignant” and “not malignant” labels.

So why doesn’t everyone test on complete schema? Two reasons:

There aren’t enough test cases (in this dermatology example, they only tested on the three red super-classes). If you had to have well-powered test sets for every subtype, you would need more data than in your training set!

There are always more subclasses*. In the paper, Esteva et al describe over 2000 diagnostic categories in their dataset! Even then they didn’t include all of the important visual subsets in their schema, for example we have seen similar models fail when skin markers are present.

So testing all the subsets seems untenable. What can we do?

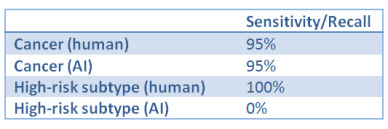

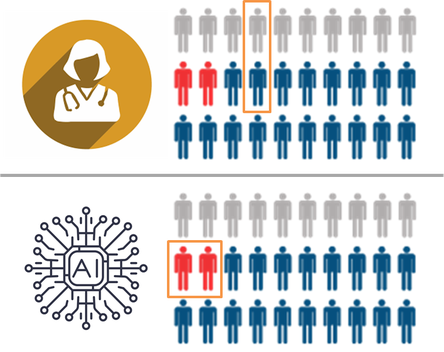

We think that we can rationalise the problem. If we knew what subsets are likely to be “underperformers”, and we use our medical knowledge to determine which subsets are high-risk, then we only need to test on the intersection between these two groups. We can predict the specific subsets where AI could clinically fail, and then only need to target these subsets for further analysis.

In our paper, we identified three main factors that appear to lead to underperformance. Across multiple datasets, we find evidence that hidden stratification leads to poor performance when there are subsets characterized by low subset prevalence, poor subset label quality, and/or subtle discriminative features (when the subset looks more like a different class than the class that it actually belongs to).

An example from the paper using the MURA dataset. Relabeled, we see that metalwork (left) is visually the most obvious finding (it looks the least like a normal x-ray out of the subclasses). Fractures (middle) can be subtle, and degenerative disease (right) is both subtle and inconsistently labeled. A model trained on the normal/abnormal superclasses significantly underperforms on cases within the subtle and noisy subclasses.

Putting it into practice

So we think we know how to recognise problematic subsets.

To actually operationalise this, we doctors would sit down and write out a complete schema for any and all medical AI tasks. Given the broad range of variation, covering clinical, pathological, and visual subsets, this would be a huge undertaking. Thankfully, it only needs to be done once (and updated rarely), and this is exactly the sort of work that is performed by large professional bodies (like ACR, ESR, RSNA), who regularly form working groups of domain specialists to tackle these kind of problems^^.

The nicest thing you can say about being in a working group is that someone’s gotta do it.

With these expert-defined schema, we would then highlight the subsets which may cause problems – those that are likely to underperform due to the factors we have identified in our research, and those that are high risk based on our clinical knowledge. Ideally there will be only a few “subsets of concern” per task that fulfil these criteria.

Then we present this ontology to the regulators and say “for an AI system to be judged safe for task X, we need to know the performance in the subsets of concern Y and Z.” In this way, a pneumothorax detector would need to show performance in cases without chest tubes, a fracture detector would need to be equal to humans for subtle fractures as well as obvious ones, and a “normal-case detector” (don’t get Luke started) would need to show that it doesn’t miss serious diseases.

To make this more clear, let’s consider a simple example. Here is a quick attempt at a pneumothorax schema:

Subsets of concern in red, conditional subsets of concern in orange (depends on exact implementation of model and data)

Pneumothorax is a tricky one since they are all “high risk” if they are untreated (meaning you end up with more subsets of concern than in many tasks), but we think this gives a general feel for what schema completion might look like.

The beauty of this approach is that it would work within the current regulatory framework, and as long as there aren’t too many subsets of concern the compliance cost should be low. If you already have enough cases for subset testing, then the only cost to the developer would be producing the labels, which would be relatively small.

If the subsets of concern in the existing test set are too small for valid performance results, then there is a clear path forward – you need to enrich for those subsets (i.e., not gather ten thousand more random cases). While this does carry a compliance cost, since you only need to do this for a small handful of subsets, the cost is also likely to be small compared to the overall cost of development. Sourcing the cases could get tricky if they are rare, but this is not insurmountable.

The only major cost to developers when implementing a system like this is if they find out that their algorithm is unsafe, and it needs to be retrained with specific subsets in mind. Since this is absolutely the entire point of regulation, we’d call this a reasonable cost of doing business.

In fact, since this list of subsets of concern would be widely available, developers could decide on their AI targets informed of the associated risks – if they don’t think they can adequately test for performance in a subset of concern, they can target a different medical task. This is giving developers have been asking for – they say they want more robust regulation and better assurances of safety, as long as the costs are transparent and the playing field is level.

How much would it help?

We see this “low-tech” approach to strengthen pre-clinical testing as a trade-off between being able to measure the actual clinical costs of using AI (as you would in a clinical trial) and the realities of device regulation. By identifying strata that are likely to produce worse clinical outcomes, we should be able to get closer to the safety profile delivered by gold standard clinical testing, without massively inflating costs or upending the current regulatory system.

This is certainly no panacea. There will always be subclasses and edge cases that we simply can’t test preclinically, perhaps because they aren’t recognised in our knowledge base or because examples of the strata aren’t present within our dataset. We also can’t assess the effects of the other causes of clinical underperformance, such as the laboratory effect and automation bias.

To close this safety gap, we still need to rely on post-deployment monitoring.

A promising direction for post-deployment monitoring is the AI audit, a process where human experts monitor the performance and particularly the errors of AI systems in clinical environments, in effect estimating the harm caused by AI in real-time. The need for this sort of monitoring has been recognised by professional organisations, who are grappling with the idea that we will need a new sort of specialist – a chief medical information officer who is skilled in AI monitoring and assessment – embedded in every practice (for example, see section 3 of the proposed RANZCR Standards of Practice for Artificial Intelligence).

Auditors are the real superheros

Audit works by having human experts review examples of AI predictions, and trying to piece together an explanation for the errors. This can be performed with image review alone or in combination with other interpretability techniques, but either way error auditing is critically dependent on the ability of the auditor to visually appreciate the differences in the distribution of model outputs. This approach is limited to the recognition of fairly large effects (i.e., effects that are noticeable in a modest/human-readable sample of images) and it will almost certainly be less exhaustive than prospectively assessing a complete schema defined by an expert panel. That being said, this process can still be extremely useful. In our paper, we show that human audit was able to detect hidden stratification that caused the performance of a CheXNet-reproduction model to drop by over 15% ROC-AUC on pneumothorax cases without chest drains — the subset that’s most important! — with respect to those that had already been treated with a chest drain.

Thankfully, the two testing approaches we’ve described are synergistic. Having a complete schema is useful for audit; instead of laboriously (and idiosyncratically) searching for meaning in sets of images, we can start our audit with the major subsets of concern. Discovering new and unexpected stratification would only occur when there are clusters of errors which do not conform to the existing schema, and these newly identified subsets of concern could be folded back into the schema via a reporting mechanism.

Looking to the future, we also suggest in our paper that we might be able to automate some of the audit process, or at least augment it with machine learning. We show that even simple k-means clustering in the model feature space can be effective in revealing important subsets in some tasks (but not others). We call this approach to subset discovery algorithmic measurement, and anticipate that further development of these ideas may be useful in supplementing schema completion and human audit. We have begun to explore more effective techniques for algorithmic measurement that may work better than k-means, but that is a topic for another day :).

Making AI safe(r)

These techniques alone won’t make medical AI safe, because they can’t replace all the benefits of proper clinical testing of AI. Risk-critical systems in particular need randomised control trials, and our demonstration of hidden stratification in common medical AI tasks only reinforces this point. The problem is that there is no path from here to there. It is possible that RCTs won’t even be considered until after we have a medical AI tragedy, and by then it will be too late.

In this context, we believe that pre-marketing targeted subset testing combined with post-deployment monitoring could serve as an important and effective stopgap for improving AI safety. It is low tech, achievable, and doesn’t create a huge compliance burden. It doesn’t ask the healthcare systems and governments of the world to overhaul their current processes, just to take a bit of advice on what specific questions need to be asked for any given medical task. By delivering a consensus schema to regulators on a platter, they might even use it.

And maybe this approach is more broadly attractive as well. AI is not human — inhuman, in fact — in how it makes decisions. While it is attractive to work towards human-like intelligence in our computer systems, it is impossible to predict if and when this might be feasible.

The takeaway here is that subset-based testing and monitoring is one way we can bring human knowledge and common sense into medical machine learning systems, completely separate from the mathematical guts of the models. We might even be able to make them safer without making them smarter, without teaching them to ask why, and without rebooting AI.

Luke’s footnotes:

ª The current FDA position on the clinical evaluation of medical software (pdf link) is: “…prior to product launch (pre-market) the manufacturer generates evidence of the product’s accuracy, specificity, sensitivity, reliability, limitations, and scope of use in the intended use environment with the intended user, and generates a SaMD definition statement. Once the product is on the market (post-market), as part of normal lifecycle management processes, the manufacturer continues to collect real world performance data (e.g., complaints, safety data)…”

^ I am planning to do a follow-up post on this idea – that we don’t always need to default to looking for not yet developed, possibly decades away technological solutions when the problem can be immediately solved with a bit of human effort.

^^ A possibly valid alternative would be crowd-sourcing these schema. This would have to be done very carefully to be considered authoritative enough to justify including in regulatory frameworks, but could happen much quicker than the more formal approach.

* I’ve heard this described as “subset whack-a-mole”, or my own phrasing: “there are subsets all the way down”.**

** I love that I have finally included a Terry Pratchett reference in my Pratchett-esque footnotes.

Luke Oakden-Rayner is a radiologist (medical specialist) in South Australia, undertaking a Ph.D in Medicine with the School of Public Health at the University of Adelaide.

Jared Dunnmon is a post-doctoral fellow at Stanford University where he researches the development of weakly supervised machine learning techniques and application to problems in human health, energy & environment, and national security.

This post originally appeared on Luke’s blog here.

Improving Medical AI Safety by Addressing Hidden Stratification published first on https://venabeahan.tumblr.com

0 notes

Text

Improving Medical AI Safety by Addressing Hidden Stratification

Jared Dunnmon

Luke Oakden-Rayner

By LUKE OAKDEN-RAYNER MD, JARED DUNNMON, PhD

Medical AI testing is unsafe, and that isn’t likely to change anytime soon.

No regulator is seriously considering implementing “pharmaceutical style” clinical trials for AI prior to marketing approval, and evidence strongly suggests that pre-clinical testing of medical AI systems is not enough to ensure that they are safe to use. As discussed in a previous post, factors ranging from the laboratory effect to automation bias can contribute to substantial disconnects between pre-clinical performance of AI systems and downstream medical outcomes. As a result, we urgently need mechanisms to detect and mitigate the dangers that under-tested medical AI systems may pose in the clinic.

In a recent preprint co-authored with Jared Dunnmon from Chris Ré’s group at Stanford, we offer a new explanation for the discrepancy between pre-clinical testing and downstream outcomes: hidden stratification. Before explaining what this means, we want to set the scene by saying that this effect appears to be pervasive, underappreciated, and could lead to serious patient harm even in AI systems that have been approved by regulators.

But there is an upside here as well. Looking at the failures of pre-clinical testing through the lens of hidden stratification may offer us a way to make regulation more effective, without overturning the entire system and without dramatically increasing the compliance burden on developers.

What’s in a stratum?

We recently published a pre-print titled “Hidden Stratification Causes Clinically Meaningful Failures in Machine Learning for Medical Imaging“.

Note: While this post discusses a few parts of this paper, it is more intended to explore the implications. If you want to read more about the effect and our experiments, please read the paper

The effect we describe in this work — hidden stratification — is not really a surprise to anyone. Simply put, there are subsets within any medical task that are visually and clinically distinct. Pneumonia, for instance, can be typical or atypical. A lung tumour can be solid or subsolid. Fractures can be simple or compound. Such variations within a single diagnostic category are often visually distinct on imaging, and have fundamentally different implications for patient care.

Examples of different lung nodules, ranging from solid (a), solid with a halo (b), and subsolid (c). Not only do these nodules look different, they reflect different diseases with different patient outcomes.

We also recognise purely visual variants. A pleural effusion looks different if the patient is standing up or is lying down, despite the pathology and clinical outcomes being the same.

These patients both have left sided pleural effusions (seen on the right of each image). The patient on the left has increased density at the left lung base, whereas the patient on the right has a subtle “veil” across the entire left lung.

These visual variants can cause problems for human doctors, but we recognise their presence and try to account for them. This is rarely the case for AI systems though, as we usually train AI models on coarsely defined class labels and this variation is unacknowledged in training and testing; in other words, the the stratification is hidden (the term “hidden stratification” actually has its roots in genetics, describing the unrecognised variation within populations that complicates all genomic analyses).

The main point of our paper is that these visually distinct subsets can seriously distort the decision making of AI systems, potentially leading to a major difference between performance testing results and clinical utility.

Clinical safety isn’t about average performance

The most important concept underpinning this work is that being as good as a human on average is not a strong predictor of safety. What matters far more is specifically which cases the models get wrong.

For example, even cutting-edge deep learning systems make such systematic misjudgments as consistently classifying canines in the snow as wolves or men as computer programmers and women as homemakers. This “lack of common sense” effect is often treated as an expected outcome of data-driven learning, which is undesirable but ultimately acceptable in deployed models outside of medicine (though even then, these effects have caused major problems for sophisticated technology companies).

Whatever the risk is in the non-medical world, we argue that in healthcare this same phenomenon can have serious implications.

Take for example a situation where humans and an AI system are trying to diagnose cancer, and they show equivalent performance in a head-to-head “reader” study. Let’s assume this study was performed perfectly, with a large external dataset and a primary metric that was clinically motivated (perhaps the true positive rate in a screening scenario). This is the current level of evidence required for FDA approval, even for an autonomous system.

Now, for the sake of the argument, let’s assume the TPR of both decision makers is 95%. Our results to report to the FDA probably look like this:

TPR is the same thing as sensitivity/recall

That looks good, our primary measure (assuming a decent sample size) suggests that the AI and human are performing equivalently. The FDA should be pretty happyª.

Now, let’s also assume that the majority of cancer is fairly benign and small delays in treatment are inconsequential, but that there is a rare and visually distinct cancer subtype making up 5% of all disease that is aggressive and any delay in diagnosis leads to drastically shortened life expectancy.

There is a pithy bit of advice we often give trainee doctors: when you hear hoofbeats, think horses, not zebras. This means that you shouldn’t jump to diagnosing the rare subtype, when the common disease is much more likely. This is also exactly what machine learning models do – they consider prior probability and the presence of predictive features but, unless it has been explicitly incorporated into the model, they don’t consider the cost of their errors.

This can be a real problem in medical AI, because there is a less commonly shared addendum to this advice: if zebras were stone cold killing machines, you might want to exclude zebras first. The cost of misidentifying a dangerous zebra is much more than that of missing a gentle pony. No-one wants to get hoofed to death.

In practice, human doctors will be hyper-vigilant about the high-risk subtype, even though it is rare. They will have spent a disproportionate amount of time and effort learning to identify it, and will have a low threshold for diagnosing it (in this scenario, we might assume that the cost of overdiagnosis is minimal).

If we assume the cancer-detecting AI system was developed as is common practice, it probably was trained to detect “cancer” as a monolithic group. Since only 5% of the training samples included visual features of this subtype, and no-one has incorporated the expected clinical cost of misdiagnosis into the model, how do we expect it to perform in this important subset of cases?

Fairly obviously, it won’t be hypervigilant – it was never informed that it needed to be. Even worse, given the lower number of training examples in the minority subtype, it will probably underperform for this subset (since performance on a particular class or subset should increase with more training examples from that class). We might even expect that a human would get the majority of these cases right, and that the AI might get the majority wrong. In our paper, we show that existing AI models do indeed show concerning error rates on clinically important subsets despite encouraging aggregate performance metrics.

In this hypothetical, the human and the AI have the same average performance, but the AI specifically fails to recognise the critically important cases (marked in red). The human makes mistakes in less important cases, which is fairly typical in diagnostic practice.

In this setting, even though the doctors and the AI have the same overall performance (justifying regulatory approval), using the AI would lead to delayed diagnosis in the cases where such a delay is critically important. It would kill patients, and we would have no way to predict this with current testing.

Predicting where AI fails

So, how can we mitigate this risk? There are lots of clever computer scientists trying to make computers smart enough to avoid the problem (see: algorithmic robustness/fairness, causal machine learning, invariant learning etc.), but we don’t necessarily have to be this fancy^. If the problem is that performance may be worse in clinically important subsets, then all we might need to do is identify those subsets and test their performance.