#Spinnaker deployments

Text

SRE Technologies: Transforming the Future of Reliability Engineering

In the rapidly evolving digital landscape, the need for robust, scalable, and resilient infrastructure has never been more critical. Enter Site Reliability Engineering (SRE) technologies—a blend of software engineering and IT operations aimed at creating a bridge between development and operations, enhancing system reliability and efficiency. As organizations strive to deliver consistent and reliable services, SRE technologies are becoming indispensable. In this blog, we’ll explore the latest trends in SRE technologies that are shaping the future of reliability engineering.

1. Automation and AI in SRE

Automation is the cornerstone of SRE, reducing manual intervention and enabling teams to manage large-scale systems effectively. With advancements in AI and machine learning, SRE technologies are evolving to include intelligent automation tools that can predict, detect, and resolve issues autonomously. Predictive analytics powered by AI can foresee potential system failures, enabling proactive incident management and reducing downtime.

Key Tools:

PagerDuty: Integrates machine learning to optimize alert management and incident response.

Ansible & Terraform: Automate infrastructure as code, ensuring consistent and error-free deployments.

2. Observability Beyond Monitoring

Traditional monitoring focuses on collecting data from pre-defined points, but it often falls short in complex environments. Modern SRE technologies emphasize observability, providing a comprehensive view of the system’s health through metrics, logs, and traces. This approach allows SREs to understand the 'why' behind failures and bottlenecks, making troubleshooting more efficient.

Key Tools:

Grafana & Prometheus: For real-time metric visualization and alerting.

OpenTelemetry: Standardizes the collection of telemetry data across services.

3. Service Mesh for Microservices Management

With the rise of microservices architecture, managing inter-service communication has become a complex task. Service mesh technologies, like Istio and Linkerd, offer solutions by providing a dedicated infrastructure layer for service-to-service communication. These SRE technologies enable better control over traffic management, security, and observability, ensuring that microservices-based applications run smoothly.

Benefits:

Traffic Control: Advanced routing, retries, and timeouts.

Security: Mutual TLS authentication and authorization.
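To make the traffic-control idea concrete, here is a minimal sketch of the retry-with-timeout behavior that a mesh proxy applies on behalf of a service. A real mesh such as Istio or Linkerd configures this declaratively in the proxy layer rather than in application code; the function `call_with_retries` and the `FlakyService` stand-in below are hypothetical names used only for illustration.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[float], T],
    attempts: int = 3,
    timeout_s: float = 1.0,
    backoff_s: float = 0.0,
) -> T:
    """Call operation(timeout_s) up to `attempts` times.

    Retries only on TimeoutError and ConnectionError, sleeping with
    exponential backoff between attempts. Re-raises the last error
    if every attempt fails.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return operation(timeout_s)
        except (TimeoutError, ConnectionError) as exc:
            last_error = exc
            if attempt < attempts - 1:
                time.sleep(backoff_s * (2 ** attempt))
    assert last_error is not None
    raise last_error


class FlakyService:
    """Hypothetical upstream that times out a fixed number of times."""

    def __init__(self, failures_before_success: int) -> None:
        self.remaining = failures_before_success
        self.calls = 0

    def __call__(self, timeout_s: float) -> str:
        self.calls += 1
        if self.remaining > 0:
            self.remaining -= 1
            raise TimeoutError(f"no response within {timeout_s}s")
        return "ok"
```

Keeping the retry policy outside the business logic is the point of the mesh: the same policy can then be applied uniformly to every service without code changes.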

4. Chaos Engineering for Resilience Testing

Chaos engineering is gaining traction as an essential SRE technology for testing system resilience. By intentionally introducing failures into a system, teams can understand how services respond to disruptions and identify weak points. This proactive approach ensures that systems are resilient and capable of recovering from unexpected outages.

Key Tools:

Chaos Monkey: Simulates random instance failures to test resilience.

Gremlin: Offers a suite of tools to inject chaos at various levels of the infrastructure.
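The core loop of a chaos experiment can be sketched in a few lines. The example below is a simulation only: it picks random "instances" from an in-memory set and checks a steady-state condition afterwards. It does not use the Chaos Monkey or Gremlin APIs, and the function name and the `min_healthy` threshold are assumptions made for illustration.

```python
import random
from typing import Iterable, Optional


def run_chaos_experiment(
    instances: Iterable[str],
    min_healthy: int,
    kills: int,
    seed: Optional[int] = None,
) -> dict:
    """Terminate `kills` random instances and check the steady state.

    The steady-state hypothesis here is simply that at least
    `min_healthy` instances survive the experiment.
    """
    pool = sorted(set(instances))
    rng = random.Random(seed)
    victims = rng.sample(pool, k=min(kills, len(pool)))
    survivors = set(pool) - set(victims)
    return {
        "terminated": victims,
        "survivors": survivors,
        "steady_state_ok": len(survivors) >= min_healthy,
    }


if __name__ == "__main__":
    result = run_chaos_experiment({"web-1", "web-2", "web-3"}, min_healthy=2, kills=1)
    print(result["terminated"], result["steady_state_ok"])
```

In a real experiment the steady-state check would be a production signal such as request success rate, and the blast radius would start small and grow only as confidence increases.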

5. CI/CD Integration for Continuous Reliability

Continuous Integration and Continuous Deployment (CI/CD) pipelines are critical for maintaining system reliability in dynamic environments. Integrating SRE practices into CI/CD pipelines allows teams to automate testing and validation, ensuring that only stable and reliable code makes it to production. This integration also supports faster rollbacks and better incident management, enhancing overall system reliability.

Key Tools:

Jenkins & GitLab CI: Automate build, test, and deployment processes.

Spinnaker: Provides advanced deployment strategies, including canary releases and blue-green deployments.
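As a rough illustration of what a canary release decides, the sketch below compares the error rate of a canary against the baseline and returns a verdict. This is a deliberately simplified threshold check, not Spinnaker's canary analysis, which evaluates many metrics statistically. The names `WindowStats` and `canary_verdict` and the default thresholds are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowStats:
    """Request and error counts observed over one comparison window."""

    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(
    baseline: WindowStats,
    canary: WindowStats,
    max_rate_increase: float = 0.01,
    min_requests: int = 100,
) -> str:
    """Return "continue", "rollback", or "promote" for the canary."""
    if canary.requests < min_requests:
        # Too little traffic to judge; keep the canary running.
        return "continue"
    if canary.error_rate > baseline.error_rate + max_rate_increase:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    print(canary_verdict(WindowStats(1000, 10), WindowStats(200, 10)))
```

A blue-green deployment makes the same kind of decision once, at cutover, whereas a canary repeats it as traffic is shifted gradually.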

6. Site Reliability as Code (SRaaC)

As SRE evolves, the concept of Site Reliability as Code (SRaaC) is emerging. SRaaC involves defining SRE practices and configurations in code, making it easier to version, review, and automate. This approach brings a new level of consistency and repeatability to SRE processes, enabling teams to scale their practices efficiently.

Key Tools:

Pulumi: Allows infrastructure and policies to be defined using familiar programming languages.

AWS CloudFormation: Automates infrastructure provisioning using templates.
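One way to picture reliability practices defined in code is a service level objective that lives in the repository next to the service, where it can be versioned and reviewed. The sketch below is an assumption about what such a definition might look like; the `Slo` class and `error_budget_remaining` function are hypothetical and not part of Pulumi or CloudFormation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """A reviewable, versionable availability objective."""

    name: str
    target: float  # e.g. 0.99 means 99% of requests must succeed


def error_budget_remaining(slo: Slo, total_requests: int, failed_requests: int) -> float:
    """Return the unspent fraction of the error budget.

    1.0 means untouched, 0.0 means exhausted, negative means overspent.
    """
    allowed_failures = (1 - slo.target) * total_requests
    if allowed_failures == 0:
        return 1.0 if failed_requests == 0 else 0.0
    return 1 - failed_requests / allowed_failures


if __name__ == "__main__":
    checkout = Slo(name="checkout-availability", target=0.99)
    print(error_budget_remaining(checkout, total_requests=10_000, failed_requests=25))
```

Because the objective is ordinary code, a change to the target goes through the same review and history as any other change.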

7. Enhanced Security with DevSecOps

Security is a growing concern in SRE practices, leading to the integration of DevSecOps—embedding security into every stage of the development and operations lifecycle. SRE technologies are now incorporating automated security checks and compliance validation to ensure that systems are not only reliable but also secure.

Key Tools:

HashiCorp Vault: Manages secrets and encrypts sensitive data.

Aqua Security: Provides comprehensive security for cloud-native applications.

Conclusion

The landscape of SRE technologies is rapidly evolving, with new tools and methodologies emerging to meet the challenges of modern, distributed systems. From AI-driven automation to chaos engineering and beyond, these technologies are revolutionizing the way we approach system reliability. For organizations striving to deliver robust, scalable, and secure services, staying ahead of the curve with the latest SRE technologies is essential. As we move forward, we can expect even more innovation in this space, driving the future of reliability engineering.


Text

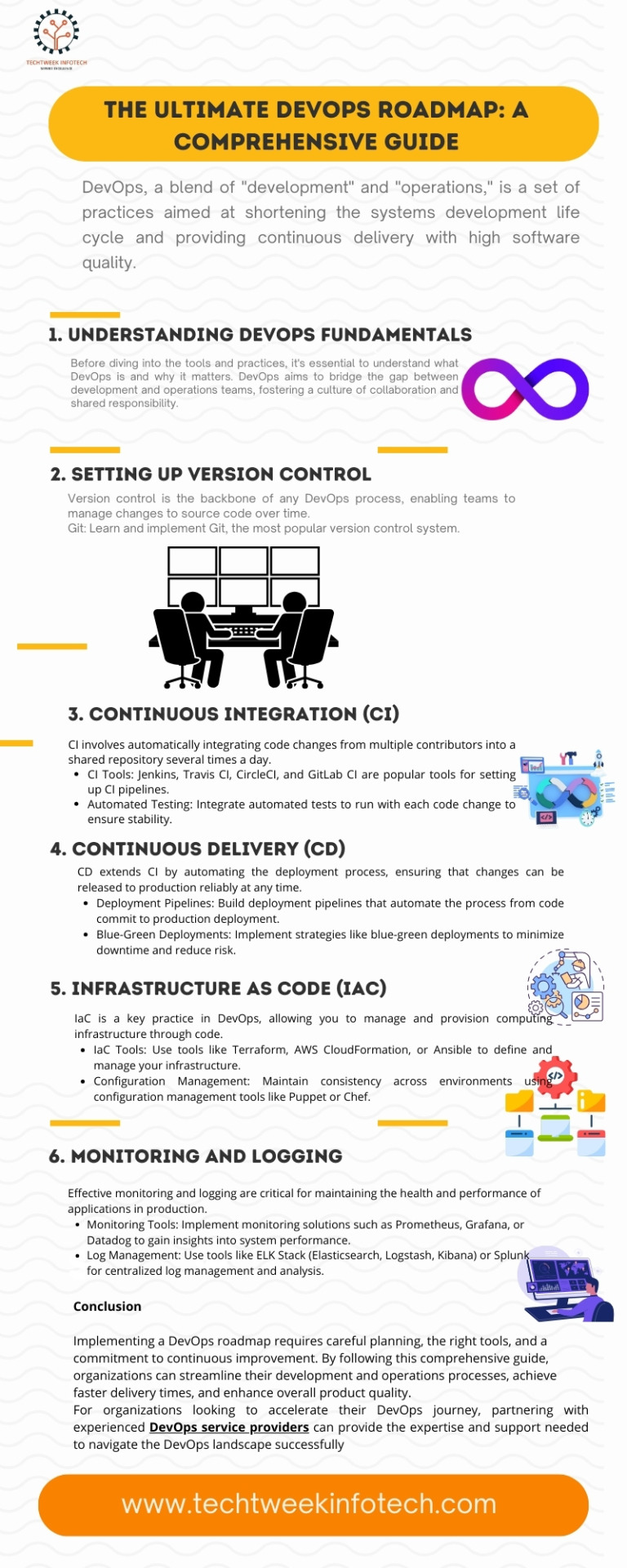

Introduction

The DevOps approach has revolutionized the way software development and operations teams collaborate, significantly improving efficiency and accelerating the delivery of high-quality software. Understanding the DevOps roadmap is crucial for organizations looking to implement or enhance their DevOps practices. This roadmap outlines the key stages, skills, and tools necessary for a successful DevOps transformation.

Stage 1: Foundation

1.1 Understanding DevOps Principles: Before diving into tools and practices, it's essential to grasp the core principles of DevOps. This includes a focus on collaboration, automation, continuous improvement, and customer-centricity.

1.2 Setting Up a Collaborative Culture: DevOps thrives on a culture of collaboration between development and operations teams. Foster open communication, shared goals, and mutual respect.

Stage 2: Toolchain Setup

2.1 Version Control Systems (VCS): Implement a robust VCS like Git to manage code versions and facilitate collaboration.

2.2 Continuous Integration (CI): Set up CI pipelines using tools like Jenkins, GitLab CI, or Travis CI to automate code integration and early detection of issues.

2.3 Continuous Delivery (CD): Implement CD practices to automate the deployment of applications. Tools like Jenkins, CircleCI, or Spinnaker can help achieve seamless delivery.

2.4 Infrastructure as Code (IaC): Adopt IaC tools like Terraform or Ansible to manage infrastructure through code, ensuring consistency and scalability.

Stage 3: Automation and Testing

3.1 Test Automation: Incorporate automated testing into your CI/CD pipelines. Use tools like Selenium, JUnit, or pytest to ensure that code changes do not introduce new bugs.

3.2 Configuration Management: Use configuration management tools like Chef, Puppet, or Ansible to automate the configuration of your infrastructure and applications.

3.3 Monitoring and Logging: Implement monitoring and logging solutions like Prometheus, Grafana, ELK Stack, or Splunk to gain insights into application performance and troubleshoot issues proactively.

Stage 4: Advanced Practices

4.1 Continuous Feedback: Establish feedback loops that combine user feedback with performance data from monitoring tools like New Relic or Nagios, enabling continuous improvement.

4.2 Security Integration (DevSecOps): Integrate security practices into your DevOps pipeline using tools like Snyk, Aqua Security, or HashiCorp Vault to ensure your applications are secure by design.

4.3 Scaling and Optimization: Continuously optimize your DevOps processes and tools to handle increased workloads and enhance performance. Implement container orchestration using Kubernetes or Docker Swarm for better scalability.

Stage 5: Maturity

5.1 DevOps Metrics: Track key performance indicators (KPIs) such as deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate to measure the effectiveness of your DevOps practices.
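The four KPIs named above can be computed from a plain list of deployment records. The sketch below assumes a hypothetical `Deployment` record with commit, deploy, and restore timestamps; real pipelines would pull these from the CI/CD system and the incident tracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional, Sequence


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set once a failure is resolved


def devops_metrics(deployments: Sequence[Deployment], period_days: int) -> dict:
    """Compute deployment frequency, lead time, change failure rate, and MTTR."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    recovered = [d for d in failures if d.restored_at is not None]
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    recovery_hours = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600 for d in recovered
    ]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "mean_lead_time_hours": mean(lead_hours),
        "change_failure_rate": len(failures) / len(deployments),
        "mttr_hours": mean(recovery_hours) if recovery_hours else None,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    history = [
        Deployment(t0, t0 + timedelta(hours=2)),
        Deployment(t0, t0 + timedelta(hours=4), failed=True,
                   restored_at=t0 + timedelta(hours=5)),
    ]
    print(devops_metrics(history, period_days=2))
```

Tracking the four numbers together matters: deployment frequency and lead time measure speed, while change failure rate and MTTR measure stability.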

5.2 Continuous Learning and Improvement: Encourage a culture of continuous learning and improvement. Stay updated with the latest DevOps trends and best practices by participating in conferences, webinars, and training sessions.

5.3 DevOps as a Service: Consider offering DevOps as a service to other teams within your organization or to external clients. This can help standardize practices and further refine your DevOps capabilities.

Conclusion

Implementing a DevOps roadmap requires careful planning, the right tools, and a commitment to continuous improvement. By following this comprehensive guide, organizations can streamline their development and operations processes, achieve faster delivery times, and enhance overall product quality.

For organizations looking to accelerate their DevOps journey, partnering with experienced DevOps service providers can provide the expertise and support needed to successfully navigate the DevOps landscape.


Text

Spinnaker Deployment Bag

I sewed a bag to facilitate deployment of the spinnaker and its sock. The bag has a 24″ diameter stainless steel hoop sewn into the opening and the top has an elastic band for closing. The bag also has two clips that can be attached to the lifeline.


Text

DevOps Tools and Toolchains


DevOps tools and toolchains are crucial components in the DevOps ecosystem, helping teams automate, integrate, and manage various aspects of the software development and delivery process. These tools enable collaboration, streamline workflows, and enhance the efficiency and effectiveness of DevOps practices.

Version Control Systems (VCS): Tools like Git and SVN are fundamental for managing source code, enabling versioning, branching, and merging. They facilitate collaboration among developers and help maintain a history of code changes.

Continuous Integration (CI) Tools: Jenkins, Travis CI, CircleCI, and GitLab CI/CD are popular CI tools. They automate the process of integrating code changes, running tests, and producing build artifacts. This ensures that code is continuously validated and ready for deployment.

Configuration Management Tools: Tools like Ansible, Puppet, and Chef automate the provisioning and management of infrastructure and application configurations. They ensure consistency and reproducibility in different environments.

Containerization and Orchestration: Docker is a widely used containerization tool that packages applications and their dependencies. Kubernetes is a powerful orchestration platform that automates the deployment, scaling, and management of containerized applications.

Continuous Deployment (CD) Tools: Tools like Spinnaker and Argo CD facilitate automated deployment of applications to various environments. They enable continuous delivery by automating the release process.

Monitoring and Logging Tools: Tools like Prometheus, Grafana, ELK Stack (Elasticsearch, Logstash, Kibana), and Splunk provide visibility into application and infrastructure performance. They help monitor metrics, logs, and events to identify and resolve issues.

Collaboration and Communication Tools: Platforms like Slack, Microsoft Teams, and Jira facilitate communication and collaboration among team members. They enable seamless sharing of updates, notifications, and project progress.

Infrastructure as Code (IaC) Tools: Terraform, AWS CloudFormation, and Azure Resource Manager allow teams to define and manage infrastructure using code. This promotes automation, versioning, and reproducibility of environments.

Continuous Testing Tools: Tools like Selenium, JUnit, and Mocha automate testing processes, including unit tests, integration tests, and end-to-end tests. They ensure that code changes do not introduce regressions.

Security and Compliance Tools: Tools like SonarQube, OWASP ZAP, and Nessus help identify and mitigate security vulnerabilities and ensure compliance with industry standards and regulations.

In summary, DevOps tools and toolchains form the backbone of DevOps practices, enabling teams to automate, integrate, and manage various aspects of the software development lifecycle. These tools promote collaboration, efficiency, and reliability, ultimately leading to faster and more reliable software delivery.


Text

Senior DevOps Engineer - Remote

Company: Spokeo

JOB DESCRIPTION

Spokeo is a people search engine that both enlightens and empowers our customers. With over 12 billion records and 14 million visitors per month, we reconnect friends, reunite families, prevent fraud, and more.

We are seeking a Senior DevOps Engineer who understands and embraces the DevOps philosophy, can work closely with Scrum teams in an Agile workflow and is comfortable with cloud-based infrastructure. If you're excited about building big data architectures and creating a PaaS, then you should talk to us.

Deliverables, including the estimated share of an average week spent on each item (subject to change):

- Contribute cross-functionally with Scrum teams to develop deployment strategies for new and existing services

- Evaluate and implement technologies that improve efficiency, robustness, performance, and security at scale

- Develop creative solutions that automate system functions, reducing the manual and unplanned effort

- Analyze, design, implement, and validate strategies for streamlined CI/CD workflows

- Design and implement automated dynamic environments to support the needs of delivery teams

- Apply principles of best-practice, self-organization, autonomy, and continuous improvement to self and team

Requirements:

- Must have experience designing, building, and managing solutions built on cloud-based infrastructures; AWS preferred

- Have worked at least one year on teams practicing agile methodology, using Scrum and/or Kanban

- Have excellent customer service and communication skills (verbal and written) to effectively collaborate with a diverse team of people across Business and Engineering Teams

- Be able to demonstrate Systems Administration fundamentals, including mastery of one or more Linux distributions, virtualization, storage, networking, SQL / NoSQL databases, scripting, and ability to troubleshoot high-usage, high-throughput systems.

- Have written quality code to solve complex automation problems in at least one of these languages: Bash, Python, Ruby, Perl, or Go

- Have hands-on development experience utilizing at least one Configuration Management system: Ansible, Chef, Puppet, SaltStack, etc.

- Have architected or developed a programmable infrastructure using IaC tools such as Terraform and CloudFormation

- Understand SDLC and CI/CD models, with the ability to architect the workflow using tools like Git, Jenkins, Spinnaker, etc.

- Be able to gather and interpret metrics from monitoring tools like New Relic, CloudWatch, Elastic Stack, and Ganglia to make informed troubleshooting and performance-tuning decisions.

- Have experience supporting big data, ETL, and analytics platforms, such as Redshift, EMR / Hadoop, Apache Spark, DynamoDB, Apache Airflow, Hitachi Vantara / Pentaho, Tableau, etc.

- Be highly self-motivated and ready to learn new concepts and technologies

- Candidates with a B.S. degree in Computer Science, Information Systems, or a related field preferred

- Hands-on experience designing and delivering containerized microservice architectures, EKS preferred

Spokeo offers a bonus program, equity plans, and 401K matching for qualified roles. Twice a year, we do discretionary, merit-based salary increases. Additional benefits include 100% coverage for medical/dental/vision for all employees and unlimited PTO.

Spokeo extends written offers to candidates who successfully complete their selection process. Spokeo’s offers include a base salary, participation in a company bonus program, stock options, and comprehensive benefits. A final offer will depend on several factors, including, but not limited to, marketplace competition, job leveling, the candidate’s experience, skills, etc.

Privacy Notice for Candidates: https://www.spokeo.com/recruiting-policy

Spokeo is an equal-opportunity employer. Applicants will receive consideration for employment without regard to race, color, religion, sex, national origin, sexual orientation, gender identity, disability, or protected veteran status. Spokeo fosters a business culture where ideas and decisions from all people help us grow, innovate, create the best products, and be relevant in a rapidly changing world.

Recruiters or staffing agencies: Spokeo is not obligated to compensate any external recruiter or search firm who presents a candidate or their resume or profile to a Spokeo employee without 1) a current, fully executed agreement on file, and 2) being assigned to the open position (as a search) via our applicant tracking solution.

APPLY ON THE COMPANY WEBSITE

To get free remote job alerts, please join our Telegram channel “Global Job Alerts” or follow us on Twitter for the latest job updates.

Disclaimer:

- This job opening was available on the respective company website as of 6th July 2023. The opening may have expired by the time you check the post.

- Candidates are requested to study and verify all the job details before applying and contact the respective company representative in case they have any queries.

- The owner of this site has provided all the available information regarding the location of the job i.e. work from anywhere, work from home, fully remote, remote, etc. However, if you would like to have any clarification regarding the location of the job or have any further queries or doubts; please contact the respective company representative. Viewers are advised to do full requisite enquiries regarding job location before applying for each job.

- Authentic companies never ask for payments for any job-related processes. Please carry out financial transactions (if any) at your own risk.

- All the information and logos are taken from the respective company website.


Text

The 10 Best DevOps Tools to Look Out For in 2023

As the demand for efficient software delivery continues to rise, DevOps tools play a pivotal role in enabling organizations to streamline their processes, enhance collaboration, and achieve faster and more reliable releases. In this article, we will explore the 10 best DevOps tools to look out for in 2023. These tools are poised to make a significant impact on the DevOps landscape, empowering organizations to stay competitive and meet the evolving needs of software development.

1. Jenkins:

Jenkins remains a staple in the DevOps toolchain. With its extensive plugin ecosystem, it offers robust support for continuous integration (CI) and continuous delivery (CD) pipelines. Jenkins allows for automated building, testing, and deployment, enabling teams to achieve faster feedback cycles and reliable software releases.

2. GitLab CI/CD:

Ranked among the top CI/CD tools, GitLab CI/CD simplifies the software delivery process with its user-friendly interface, robust pipeline configuration, and scalable container-based execution. Its seamless integration of version control and CI/CD capabilities makes it an ideal choice for organizations seeking efficient and streamlined software delivery.

3. Kubernetes:

Kubernetes has emerged as the de facto standard for container orchestration. With its ability to automate deployment, scaling, and management of containerized applications, Kubernetes simplifies the process of managing complex microservices architectures and ensures optimal resource utilization.

4. Ansible:

Ansible is a powerful automation tool that simplifies infrastructure provisioning, configuration management, and application deployment. With its agentless architecture, Ansible offers simplicity, flexibility, and scalability, making it an ideal choice for managing infrastructure as code (IaC).

5. Terraform:

Terraform is an infrastructure provisioning tool that enables declarative definition and management of cloud infrastructure. It allows teams to automate the creation and modification of infrastructure resources across various cloud providers, ensuring consistent and reproducible environments.

6. Prometheus:

Prometheus is a leading open-source monitoring and alerting tool designed for cloud-native environments. It provides robust metrics collection, storage, and querying capabilities, empowering teams to gain insights into application performance and proactively detect and resolve issues.

7. Spinnaker:

Spinnaker is a multi-cloud continuous delivery platform that enables organizations to deploy applications reliably across different cloud environments. With its sophisticated deployment strategies and canary analysis, Spinnaker ensures smooth and controlled software releases with minimal risk.

8. Vault:

Vault is a popular tool for secrets management and data protection. It offers a secure and centralized platform to store, access, and manage sensitive information such as passwords, API keys, and certificates, ensuring strong security practices across the DevOps pipeline.

9. Grafana:

Grafana is a powerful data visualization and monitoring tool that allows teams to create interactive dashboards and gain real-time insights into system performance. With support for various data sources, Grafana enables comprehensive visibility and analysis of metrics from different systems.

10. SonarQube:

SonarQube is a widely used code quality and security analysis tool. It provides continuous inspection of code to identify bugs, vulnerabilities, and code smells, helping teams maintain high coding standards and ensure the reliability and security of their software.

Conclusion:

The DevOps landscape is constantly evolving, and in 2023, these 10 DevOps tools are poised to make a significant impact on software development and delivery. From CI/CD automation to infrastructure provisioning, monitoring, and security, these tools empower organizations to optimize their processes, enhance collaboration, and achieve faster and more reliable software releases. By leveraging these tools, organizations can stay ahead of the curve and meet the increasing demands of the dynamic software development industry.

Partnering with a reputable DevOps development company amplifies the impact of these tools, providing expertise in implementation and integration. By leveraging their knowledge, organizations can optimize processes, enhance collaboration, and achieve faster, more reliable software releases.

#app development#software#software development#DevOps development company#DevOps development#DevOps Tools


Text

Armory announces plugins for Spinnaker for faster and more secure continuous deployment

http://i.securitythinkingcap.com/SkWV7S


Text

9 essential skills for AWS DevOps Engineers

AWS DevOps engineers cover a lot of ground. The good ones maintain a cross-disciplinary skill set that touches upon cloud, development, operations, continuous delivery, data, security, and more.

Here are the skills that AWS DevOps Engineers need to master to rock their role.

1. Continuous delivery

For this role, you’ll need a deep understanding of continuous delivery (CD) theory, concepts, and real-world application. You’ll not only need experience with CD tools and systems, but you’ll need intimate knowledge of their inner workings so you can integrate different tools and systems together to create fully functioning, cohesive delivery pipelines. Committing, merging, building, testing, packaging, and deploying code all come into play within the software release process.

If you’re using the native AWS services for your continuous delivery pipelines, you’ll need to be familiar with AWS CodeDeploy, AWS CodeBuild, and AWS CodePipeline. Other CD tools and systems you might need to be familiar with include GitHub, Jenkins, GitLab, Spinnaker, Travis, or others.

2. Cloud

An AWS DevOps engineer is expected to be a subject matter expert on AWS services, tools, and best practices. Product development teams will come to you with questions on various services and ask for recommendations on what service to use and when. As such, you should have a well-rounded understanding of the varied and numerous AWS services, their limitations, and alternate (non-AWS) solutions that might serve better in particular situations.

With your expertise in cloud computing, you’ll architect and build cloud-native systems, wrangle cloud systems’ complexity, and ensure that best practices are followed when utilizing a wide variety of cloud service offerings. When designing and recommending solutions, you’ll also weigh the pros and cons of using IaaS services versus PaaS and other managed services. If you want to go beyond this blog and master the skill, you must definitely visit AWS DevOps Course and get certified!

3. Observability

Logging, monitoring, and alerting, oh my! Shipping a new application to production is great, but it’s even better if you know what it’s doing. Observability is a critical area of work for this role. An AWS DevOps engineer should ensure that an application and its systems implement appropriate monitoring, logging, and alerting solutions. APM (Application Performance Monitoring) can help unveil critical insights into an application’s inner workings and simplify debugging custom code. APM solutions include New Relic, AppDynamics, Dynatrace, and others. On the AWS side, you should have deep knowledge of Amazon CloudWatch (including CloudWatch Agent, CloudWatch Logs, CloudWatch Alarms, and CloudWatch Events), AWS X-Ray, Amazon SNS, Amazon Elasticsearch Service, and Kibana. You might utilize tools and systems in this space, including Syslog, logrotate, Logstash, Filebeat, Nagios, InfluxDB, Prometheus, and Grafana.

4. Infrastructure as code

An AWS DevOps Engineer will ensure that the systems under her purview are built repeatably, using Infrastructure as Code (IaC) tools such as CloudFormation, Terraform, Pulumi, and AWS CDK (Cloud Development Kit). Using IaC ensures that cloud objects are documented as code, version controlled, and can be reliably replaced using an appropriate IaC provisioning tool.
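The idea behind IaC provisioning can be sketched as a comparison between a desired state, kept in version control, and the actual state in the cloud account. The function below is a conceptual illustration only and does not reflect how CloudFormation, Terraform, Pulumi, or AWS CDK work internally; `plan_changes` and the resource names are hypothetical.

```python
from typing import Any, Dict, List


def plan_changes(
    desired: Dict[str, Dict[str, Any]],
    actual: Dict[str, Dict[str, Any]],
) -> Dict[str, List[str]]:
    """Return which resources must be created, updated, or deleted
    to make `actual` match `desired`."""
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name
        for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    desired = {"bucket": {"versioning": True}, "queue": {"fifo": False}}
    actual = {"bucket": {"versioning": False}, "old-vm": {"size": "small"}}
    print(plan_changes(desired, actual))
```

When the actual state already matches the desired state, the plan is empty, which is what makes repeated runs safe.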

5. Configuration Management

On the IaaS (Infrastructure as a Service) side for virtual machines, once EC2 instances have been launched, their configuration and setup should be codified with a Configuration Management tool. Some of the more popular options in this space include Ansible, Chef, Puppet, and SaltStack. For organizations with most of their infrastructure running Windows, you might find PowerShell Desired State Configuration (DSC) as the tool of choice in this space.

6. Containers

Many modern organizations are migrating away from the traditional deployment models of apps being pushed to VMs and to a containerized system landscape. In the containerized world, configuration management becomes much less important, but there is also a whole new world of container-related tools that you’ll need to be familiar with. These tools include Docker Engine, Docker Swarm, systemd-nspawn, LXC, container registries, Kubernetes (which includes dozens of tools, apps, and services within its ecosystem), and many more.

7. Operations

IT operations are most often associated with logging, monitoring, and alerting. You need to have these things in place to properly operate, run, or manage production systems. We covered these in our observability section above. Another large facet of the Ops role is responding to, troubleshooting, and resolving issues as they occur. To effectively respond to issues and resolve them quickly, you’ll need to have experience working with and troubleshooting operating systems like Ubuntu, CentOS, Amazon Linux, Red Hat Enterprise Linux, and Windows. You’ll also need to be familiar with common middleware software like web servers (Apache, Nginx, Tomcat, Node.js, and others), load balancers, and other application environments and runtimes.

Database administration can also be an important function of a (Dev)Ops role. To be successful here, you’ll need to have knowledge of data stores such as PostgreSQL and MySQL. You should also be able to read and write some SQL code. And increasingly, you should be familiar with NoSQL data stores like Cassandra, MongoDB, AWS DynamoDB, and possibly even a graph database or two!

8. Automation

Eliminating toil is the ethos of the site reliability engineer, and this mission is very much applicable to the DevOps engineer role. In your quest to automate everything, you’ll need experience and expertise with scripting tools and languages such as Bash, GNU utilities, Python, JavaScript, and, on the Windows side, PowerShell. You should be familiar with cron, AWS Lambda (AWS’s serverless function service), CloudWatch Events, SNS, and others.

9. Collaboration and communication

Last (but not least) is the cultural aspect of DevOps. While the term “DevOps” can mean a dozen different things to a dozen people, one of the best starting points for talking about this shift in our industry is CAMS: culture, automation, measurement, and sharing. DevOps is all about breaking down barriers between IT operations and development. In this modern DevOps age, we no longer have developers throwing code “over the wall” to operations. We now strive to be one big happy family, with every role invested in the success of the code, the applications, and the value delivered to customers. This means that (Dev)Ops engineers must work closely with software engineers. This necessitates excellent communication and collaboration skills for any person who wishes to fill this keystone role of a DevOps engineer.

What is AWS DevOps?

AWS offers various flexible services that allow organizations to create and release services more efficiently and reliably through the implementation of DevOps techniques.

These services simplify provisioning and managing infrastructure, deploying application code, automating the software release process, and monitoring the performance of your application and infrastructure.


Text

Gennaker vs code zero


This is a much larger furled gennaker than the Code Zero. This webinar is perfect for shorthanded sailors, performance cruisers, or those looking for more.

I have heard people say very good things about the Code D (Delta voile), and if what customers say on the Doyle site deserves some credibility, it seems that is also the case there. Broad reaches are, in percentage terms, less frequent than upwind or deep downwind legs. Our cruising experts discuss various styles of Code Zeros. This sail permits both things and will not collapse like a spinnaker when too near the wind.

I have sailed many years with a Code 0 and it is an incredible sail from upwind to 120/130° TW, but I could not run with it. The boat goes very well with the self-tacking ACL North Sails at an AWA from 30 to 90, even in light to moderate winds. The Code 0 will do better in light winds from AWA 40 to 90, but further downwind you need a gennaker or a spinnaker.

In fact you will have a sail that can do both things relatively well. Of course they are exaggerating a bit: it would not be as efficient as a Code 0 upwind nor as efficient as a spinnaker downwind, but from a cruiser's perspective the difference would not be much. With this new kind of sail you can have the two in one, and even have it on an easily deployable furler.

As Doyle says: The Utility Power Sail, UPS, gives cruising sailors the speed and power of a traditional spinnaker and the ability to sail at wind angles as close as 35 degrees. To sail fast in light winds against the wind you need a gennaker; to run you need a spinnaker or an asymmetric spinnaker. Grouped collectively under the name reacher, they are ideal for those hot zero- to 10-knot days, when they can take you from drifting to moving.

MAKE YOUR OWN SAIL BAG If hoisting a gennaker or a code zero is something you.

Among the different monikers are the gennaker, reacher, screacher (a term used primarily for multihulls), drifter, light-air genoa and cruising code zero (the flattest variation). These sails are set on roller furling systems like Harken's Code Zero or Facnor's FX Code Zero. This sail is precisely about that: having fewer sails.

SECURING THE SPINNAKER HALYARD As a general rule, all three corners of the.

Asymmetrical spinnaker or gennaker: Called by either name.

The effect of this fibre layout is to reduce the load on the Mylar film, helping the sail to retain its shape longer. Yes it can, but the sail has to be modified. Our ZigZag fibre layout ensures efficient support and distribution of the loads over the radial panels in your sail.


Stormlite is of a similar weight and finish to the equivalent Nylon spinnaker cloth, but its Polyester weave gives it greater stability under load. Contender Sailcloth has responded to the high demand for increasingly lighter but form-stable sails with a series of laminate fabrics suitable for yachtsmen from the performance-oriented cruiser through to the professional racing teams. Clearly, mounting a Code 0 or 1, a gennaker, or an asymmetric spinnaker on a furler solves the size and handling problem. If you do prefer a woven fabric, we suggest you ask your sailmaker about Contender Stormlite polyester sailcloth. For a boat of that size (and smaller ones as well, for that matter) I would consider a furling Code 1. Using Contender ZL Code Zero laminate, your sailmaker can make you a sail which is very stable but still light, pliable and easy to handle. That is why we recommend our ZL Code Zero laminate, designed especially for this type of sail. We are thinking of replacing the Marlow torsion rope with one manufactured by Future Fibres in an attempt to reduce the amount of twist in the rope when we furl. Our boat is a Van de Stadt 47 foot cruising yacht with a big fractional rig. Nylon spinnaker fabric is not sufficiently stable for these sails. Code zeros and gennakers are always either fully furled or fully unfurled, and historically people have been content to tie or cleat off the furling line when the sail is furled. However, customers increasingly like the security of using a lock to prevent an accidental unfurl. We use a custom modified Harken code zero furler for a top-down furl on our Quantum Vision V3 of 2100 sq ft. A screecher and a code zero are the same thing. Gennaker is just a general term for a potential downwind sail, a cross between a genoa and an asymmetrical spinnaker. Excellent series of furlers for sailing yachts of 24 to 42. The term gennaker can cover a code zero, screecher, or reaching spinnaker.
Get to know affordable and reliable NS gennaker furlers (asymmetrical spinnaker furling systems). Is your performance cruiser or racing yacht equipped with a non-overlapping jib and/or a bowsprit? Then a rolling Code Zero or an asymmetrical reaching spinnaker can considerably improve the performance of your yacht in lighter weather conditions. Code zero is another name for a gennaker. Great performance on beam/broad reach courses.


Text

Julie Bradley - Author of “Escape from the Ordinary”, retired from the US Army at 40, to sail around the world for 8 years with her husband.

Julie Bradley was born with adventure genes and shares her passions in her books “Escape from the Ordinary” and “Crossing Pirate Waters”. Both are true stories about an 8 year adventure of sailing around the world with her husband, Glen.

After twenty years in the Army as a Military Intelligence Officer she traded her uniform and worldly possessions to pursue the dream that had inspired her to keep going through the long separations of military deployments.

During those years her stack of Cruising World, Sail and Latitude 38 magazines grew to hoarder proportions until the big purge of all belongings to buy their new home: a French built Amel blue-water sailboat.

Julie shares more about her passion for adventure and sailing and what it was like selling everything she owned to sail around the world.

New episodes of the Tough Girl Podcast go live every Tuesday at 7am UK time - Make sure to subscribe so you don’t miss out.

To support the mission to increase the number of female role models in the media, sign up as a Patron - www.patreon.com/toughgirlpodcast. Thank you.

*Content Warning - during this episode - addiction to alcohol is talked about.

Show Notes

Julie in her own words

Living out on the water with her husband

Joining the army after high school

Getting hooked on an adventure

Julie's decision to leave the army after 20 years

Sailing around the world with her husband for eight years

Not worrying about the money

Julie and her husband's background in sailing

Delivering a friend's boat

Being a company commander and sailing at the same time

Selling everything and buying a sailboat

Sailing in the South Pacific

What it was like to sail through the storm

How did they spend their days on the boat

Writing her books Escape from the Ordinary (Escape Series Book 1) and Crossing Pirate Waters (Escape Series Book 2)

How she lost control of her addiction to alcohol

Keeping a close relationship with her husband

Deciding to stop sailing

Final words of advice

Social Media

Website: www.juliebradleyauthor.com

Facebook: @julie.bradley.798

Twitter: @redjuliebradley

Books: Escape from the Ordinary (Escape Series Book 1)

Meet Glen and Julie, sailors who follow their dream and discover that reality can be even bigger than imagined. From Force 10 storms in the North Atlantic to the crystal blue waters and native dancers of French Polynesia, Escape from the Ordinary (book 1 of the Escape Series) opens a window to adventures in extraordinary places not found in travel brochures.

Told with keen observations and sparkling with wry humor, Julie describes the terrors and pleasures of living a life of total independence on a sailboat where even simple decisions can have big consequences. This exhilarating, true story will thrill those planning to sail off into the sunset as well as armchair adventurers. Escape from the Ordinary reminds you of the unlimited possibilities in life and offers inspiration to go “all in” on your own dreams.

Crossing Pirate Waters (Escape Series Book 2)

You don’t have to know a spinnaker from a mainsail to enjoy this adventure book. Join Glen and Julie as they continue around the world through less traveled, dangerous areas on the far side of the world.

Turmoil in the Mideast convinces Glen and Julie to linger in the South Pacific visiting primitive villages on remote islands. But hang on tight, because to finish their voyage they must leave friendly shores and navigate through trouble in the Indian Ocean, Gulf of Aden and the Red Sea before arriving in Europe.

Full of dry wit and compelling descriptions, Julie takes the reader along for the ride of a lifetime in this sequel (book 2 of the Escape Series) to her previous bestseller, Escape from the Ordinary.

Check out this episode!

#podcast#women#sports#health#motivation#challenges#change#adventure#active#wellness#explore#grow#support#encourage#running#swimming#triathlon#exercise#weights


Link

Many developers, DevOps engineers, and SREs are clearly excited about using Spinnaker to modernize their overall software delivery process. The primary reasons for this excitement are that Spinnaker is open-source software and that it is the only tool in the marketplace to offer hybrid and multicloud deployment.

At the same time, many organizations are hesitant to move forward with Spinnaker for continuous delivery because it has a reputation of being difficult to get right.

If you are considering adopting Spinnaker to transform your continuous delivery, I am confident that you can be successful. Simplifying Spinnaker isn’t easy, but it can be done.

Our many customers around the world have seen compelling success – driving down costs, slashing delivery errors, and improving cycle time. They have mastered the challenges of scaling Spinnaker, even though they had plenty of questions along the way.

That’s the purpose of this blog – to highlight the top four challenges you should address to ensure a successful transformation to Spinnaker Continuous Delivery (CD) – and move quickly from your very first service in production with Spinnaker to your first 100 applications.

Remember that adopting any product with the potential of Spinnaker, and updating any process as important as software delivery, brings with it some complexity and potential for error. You’re not alone – most companies have struggled with the same difficulties that you have now – and have overcome them.

Nearly every company today must transform their software delivery process to meet their business goals. Leverage the learning of the Spinnaker early adopters to eliminate the risk of being buried underneath an avalanche of work as you adopt Spinnaker and transform your Continuous Delivery processes across your organization.

Integrate Spinnaker with your Existing Tools

Spinnaker is a great product and saves time, energy, and effort in moving your services and applications through your deployment process, culminating with the apps running successfully in production.

However, Spinnaker doesn’t exist in a vacuum. You probably already have a well developed tool chain, perhaps using GitHub for source control, Jenkins for build, Jira for tickets, Slack for communication, JFrog Artifactory for binaries, and perhaps many others.

The first thing companies struggle with is configuring Spinnaker completely so that it fits well into the existing tool chain. Some of the integrations are simple, others are more complex. Unfortunately, Spinnaker doesn’t provide an “easy button” for any of the integrations. And extending your existing processes to include a new tool like Spinnaker always takes more thought and effort than we’d like.

So before you install Spinnaker or begin thinking about creating your first few pipelines, consider how Spinnaker will fit into your overall tool chain and how it will fit in with the processes that are adjacent to the deployment step in your SDLC.

Design Spinnaker CD Pipelines for Broad Adoption

Once Spinnaker is integrated into your tool chain and word gets around that you have enabled Spinnaker, you’ll be a hero because Spinnaker reduces work for everyone involved.

At the same time, you’ll likely be deluged with requests for assistance – especially for “onboarding” new applications on to Spinnaker. The process for building new pipelines for applications isn’t really onerous, but Spinnaker on its own doesn’t provide an easy way for individuals to do this work on their own.

Further, it is highly likely that you won’t use the same deployment strategy across all your different applications. When should you use the different strategies available to you? How should you set up Spinnaker to utilize a dark, canary, blue/green, rolling, or highlander deployment? How do you modify any of these strategies to your specific environment? Not all these questions have obvious answers when you’re getting started.

Once you have experience with the first few applications, and have the pipelines happily moving changes through the process, you should consider how to streamline the process of deploying new applications. Can you set up the system so that new teams can onboard their applications without you providing significant assistance?

Each organization is of course different. Some want to have full control by a central team; other times the individual development teams should have the ability to control the process for each service they build. Still other times the company wants to outsource the whole thing to an outside team so their inside teams can concentrate on their highest value.

Whichever option is good for you, consider the onboarding process or you will have difficulty moving past the first few applications toward broad deployment.

Handle Governance and Security Concerns in Continuous Delivery

Making Spinnaker operational is one thing. Making sure that the system is secure and follows your organization’s rules and regulations is also critically important before you move to wide adoption.

There are many subtopics under the general heading of governance, many more than I can cover in a single blog. The good news is that Spinnaker is built for large-scale operations, so you can effectively handle just about every security and governance concern that you might have.

In order to ensure your specific governance requirements with Spinnaker are met for a broad range of services and applications, the top items that you should address are: secrets management, user onboarding and access control, audit, and policy compliance.

Different organizations have different strategies for secrets management, some heavily using Git, others using a product like Vault from Hashicorp. You must configure Spinnaker to fit into your security and secrets processes before you’re able to broadly deploy it. You definitely don’t want to manage your passwords and other secrets within Spinnaker itself.

User onboarding and permissions are a second area to consider. All but the smallest organizations have some centralized user management in place, and you’ll want to integrate Spinnaker into the system you’ve chosen, whether it be LDAP or something different.

Audit is the next element that typically impedes organizations from broad deployment. Ensuring that you can track the successes and failures of all the different changes that are pushed into production is important for every company, not just those in regulated industries.

And finally, general policy compliance is a must for organization-wide adoption of any product or process, especially one in as important an area as software deployment. Whether the policies are as simple as blackout windows (at which times completed software can move into production) or as complex as who within the organization can or must approve any move, and under what circumstances a move can be approved, there must be a simple way to ensure that the rules are followed (and of course, the compliance must be documented and easily verified).
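To make the blackout-window idea concrete, here is a minimal sketch in Python of how such a rule could be checked before a deployment proceeds. It is not Spinnaker configuration or a Spinnaker API; the `BlackoutWindow` class, the `is_deploy_allowed` function, and the Friday-afternoon policy are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class BlackoutWindow:
    """A recurring weekly period during which production deploys are blocked."""
    weekday: int  # 0 = Monday ... 6 = Sunday, matching datetime.weekday()
    start: time   # inclusive
    end: time     # exclusive


def is_deploy_allowed(when: datetime, windows: list[BlackoutWindow]) -> bool:
    """Return False if `when` falls inside any blackout window, True otherwise."""
    for window in windows:
        same_day = when.weekday() == window.weekday
        if same_day and window.start <= when.time() < window.end:
            return False
    return True


# Hypothetical policy: no production deploys on Fridays from 15:00 to 23:59.
POLICY = [BlackoutWindow(weekday=4, start=time(15, 0), end=time(23, 59))]

if __name__ == "__main__":
    friday_afternoon = datetime(2024, 3, 1, 16, 30)
    monday_morning = datetime(2024, 3, 4, 10, 0)
    print(is_deploy_allowed(friday_afternoon, POLICY))
    print(is_deploy_allowed(monday_morning, POLICY))
```

Keeping the policy as data, separate from the check, is what makes compliance easy to document and verify: the list of windows can be reviewed and versioned on its own.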

Trust an Experienced Navigator for your CD Transformation

Once Spinnaker is in place for more than a few applications, you’ll see demand for it increase quickly. And with more users, there will be more need for support. Many of the questions will be simple, but you will want to be sure that your team has someone available at all times to have their questions answered – you don’t want to be first, second, and third level support to your Spinnaker installation.

In addition to simple support questions, a healthy percentage of requests will be around the best way to use different Spinnaker capabilities, and which approach enabled by Spinnaker would be recommended for your specific situation. These types of best practice questions should be answered by someone with specific knowledge of your situation and deep knowledge of and experience with Spinnaker. Of course, you could let everyone experiment and build best practices over time, but it’s much more efficient to leverage best practices from someone who has plenty of experience. Addressing this challenge is required before you can really start to scale past your first 100 applications. Don’t feel that you need to go it alone – work with an external company like OpsMx that you can trust and that has led multiple CD transformations with Spinnaker.

Check out our professional services that will help you to enhance and customize Spinnaker for your enterprise requirements.

Now is the Time to Start

Organizations that are successful with Spinnaker achieve impressive results. They dramatically improve the speed at which changes are deployed into production. And they are able to simultaneously slash costs, reduce errors in production, improve the experience of their end users, and better maintain compliance with standards and regulations.

Spinnaker is proven in organizations around the world, many of whom are OpsMx customers. They confronted the same anxiety that you may be having as you begin your Spinnaker rollout. We helped them get past the initial Spinnaker struggles and would be excited to work with you as well.

#Audit Service#AWS#CD pipeline#Compliance#Continuous Delivery#Jenkins#Professional Service#Spinnaker#Spinnaker deployments#multicloud deployment


Link

Enterprises looking to modernize their CI/CD process should consider the Spinnaker continuous delivery tool for its cloud-native capabilities compared with Jenkins.


Text

Deploying Spring Boot Apps to AWS with Netflix Nebula and Spinnaker: Part 2 of 2


Part One of this post examined enterprise deployment tools and introduced two of Netflix’s open-source deployment tools, the Nebula Gradle plugins, and Spinnaker. In Part Two, we will deploy a production-ready Spring Boot application, the Election microservice, to multiple Amazon EC2 instances, behind an Elastic Load Balancer (ELB). We will use a fully automated DevOps workflow. The build, test,…

View On WordPress

#AWS#CD#CI#Continuous Delivery#continuous deployment#Debian#GitOps#hashicorp#Jenkins#Packer#Spinnaker#Spring#Spring Boot


Text

[CI/CD] Spinnaker Introduction

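Spinnaker Introduction: the concepts behind Spinnaker.

Before using Spinnaker for the first time, let's understand what Spinnaker is, why you would use it, and what benefits it brings.

Netflix released this open-source project to handle releases after CI. First, let's look at how Spinnaker describes itself on GitHub.

Spinnaker is an open source, multi-cloud continuous delivery platform for releasing software changes with high velocity and confidence.

The key point in one sentence: it is a delivery platform that can deploy quickly to many different clouds. The figure below shows the development cycle that Spinnaker covers.

Spinnaker Adapted Development Cycle

And Spinnaker…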

View On WordPress


Text

What are the essential skills for AWS DevOps engineers?

AWS DevOps engineers cover a lot of ground. The good ones maintain a cross-disciplinary skill set that includes cloud, development, operations, continuous delivery, data, security, and more.

The abilities that AWS DevOps Engineers must master in order to excel in their position are listed below.

1. Continuous delivery:

If you want to be successful in this role, you must possess a deep understanding of continuous delivery (CD) theory, concepts, and their actual application. You will require both familiarity with CD technologies and systems as well as a thorough understanding of their inner workings in order to integrate diverse tools and systems to create fully effective, coherent delivery pipelines. Committing, merging, building, testing, packaging, and releasing code are only a few of the tasks involved in the software release process.

If you intend to use the native AWS services for your continuous delivery pipelines, you must be familiar with AWS CodeDeploy, AWS CodeBuild, and AWS CodePipeline. You might also need to be familiar with other CD tools and systems, such as GitHub, Jenkins, GitLab, Spinnaker, and Travis.
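As a rough illustration of what a "coherent delivery pipeline" means, the Python sketch below runs a fixed sequence of stages and stops at the first one that fails. It does not use any real CD tool's API; `run_pipeline` and the stage names are hypothetical, and each stage is stubbed with a function that simply reports success or failure.

```python
from typing import Callable

# A stage is a name plus an action that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: list[str] = []
    for name, action in stages:
        if not action():
            print(f"stage '{name}' failed; stopping pipeline")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Stubbed stages: "package" is made to fail to show the early stop.
    stages: list[Stage] = [
        ("build", lambda: True),
        ("test", lambda: True),
        ("package", lambda: False),
        ("release", lambda: True),
    ]
    print(run_pipeline(stages))
```

Real tools add much more (parallel stages, artifacts, approvals, retries), but the ordering and the fail-fast behaviour are the core of what you are wiring together.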

2. Cloud:

An AWS DevOps engineer should be familiar with AWS resources, services, and best practices. Product development teams will get in touch with you with questions regarding various services as well as requests for guidance on when and which service to use. You should therefore be knowledgeable about the vast array of AWS services, their limitations, and other (non-AWS) options that might be more suitable in specific situations.

Your understanding of cloud computing will help you plan and develop cloud native systems, manage the complexity of cloud systems, and ensure best practices are followed when leveraging a variety of cloud service providers. You'll think about the benefits and drawbacks of using IaaS services as opposed to PaaS and other managed services while planning and making recommendations.

3. Observability:

Logging, monitoring, and alerting, oh my! Releasing a new application into production is great, but knowing what it is doing once it is there is even better. Observability is an important area of study for this role. An AWS DevOps engineer is in charge of implementing appropriate monitoring, logging, and alerting solutions for an application and the platforms it runs on. Application performance monitoring (APM) can make it easier to debug custom code and provide insight into how an application behaves. There are many APM tools, including New Relic, AppDynamics, Dynatrace, and others. On the AWS side, you should be highly familiar with Amazon CloudWatch (including CloudWatch Agent, CloudWatch Logs, CloudWatch Alarms, and CloudWatch Events), AWS X-Ray, Amazon SNS, Amazon Elasticsearch Service, and Kibana. Other tools used in this field include syslog, logrotate, Logstash, Filebeat, Nagios, InfluxDB, Prometheus, and Grafana.

4. Infrastructure as code:

AWS DevOps engineers use Infrastructure as Code (IaC) tools such as CloudFormation, Terraform, Pulumi, and AWS CDK (Cloud Development Kit) to ensure that the systems they are responsible for are built in a repeatable manner. The use of IaC ensures that cloud objects are documented in code, version-controlled, and able to be reliably recreated with the appropriate IaC provisioning tool.

5. Configuration management:

On the IaaS (Infrastructure as a Service) side, a configuration management tool should be used to document and configure EC2 instances after they have been launched. Some of the more popular options in this area are Ansible, Chef, Puppet, and SaltStack. In businesses where Windows is the preferred infrastructure, PowerShell Desired State Configuration (DSC) may be the best tool for the job.

6. Containers:

Many contemporary enterprises are switching from the conventional deployment methodologies of pushing software to VMs to a containerized system environment. Configuration management loses a lot of importance in the containerized environment, but you'll also need to be conversant with a whole new set of container-related technologies. These tools include Kubernetes (which has a large ecosystem of tools, apps, and services), Docker Engine, Docker Swarm, systemd-nspawn, LXC, container registries, and many others.

7. Operations:

Logging, monitoring, and alerting are the most frequently mentioned components of IT operations. To effectively operate, run, or manage production systems, you must have these things in place. They were discussed in the observability section above. Responding to, debugging, and resolving problems as they arise is a significant part of the Ops function. To respond to problems quickly and efficiently, you need experience working with and troubleshooting operating systems including Ubuntu, CentOS, Amazon Linux, Red Hat Enterprise Linux, and Windows. You'll also need to be knowledgeable about middleware such as web servers (Apache, nginx, Tomcat, Node.js), load balancers, and other application environments and runtimes.

Database administration can also be an important part of a (Dev)Ops role. To succeed here, you'll need to be familiar with data stores like PostgreSQL and MySQL, and you should be able to read and write a little SQL. Increasingly, you should also be familiar with NoSQL data stores like Cassandra, MongoDB, AWS DynamoDB, and perhaps even one or more graph databases.

8. Automation:

The site reliability engineer's goal of eliminating toil applies just as well to the DevOps engineer. In your drive to automate everything, you'll need knowledge of and proficiency with scripting languages and tools such as bash, GNU utilities, Python, JavaScript, and, on the Windows side, PowerShell. You should also be familiar with cron, AWS Lambda (the serverless functions service), CloudWatch Events, SNS, and similar services.

9. Working together and communicating:

The cultural aspect of DevOps is last, but certainly not least. Although the word "DevOps" can mean different things to different people, CAMS (culture, automation, measurement, and sharing) is one of the best places to start when discussing this change in our industry. The main goal of DevOps is to eliminate barriers between IT operations and development. In the new DevOps era, developers no longer "throw code over the wall" to operations. We now want to be one big happy family, with everyone playing a part in the success of the code, the applications, and the value delivered to customers. As a result, software developers and (Dev)Ops engineers must collaborate closely. The ability to collaborate and communicate effectively is essential for any candidate for a DevOps engineer position.

Conclusion:

You need a blend of inborn soft skills and technical expertise to be successful in the DevOps job path. Together, these two essential characteristics support your development. Your technical expertise will enable you to build a DevOps system that is incredibly productive. Soft skills, on the other hand, will enable you to deliver the highest level of client satisfaction.

With the help of Great Learning's cloud computing courses, you may advance your skills and land the job of your dreams by learning DevOps and other similar topics.

If you are brand-new to the DevOps industry, the list of required skills may appear overwhelming to you. However, these are the main DevOps engineer abilities that employers are searching for, so mastering them will give your CV a strong advantage. We hope that this post was able to provide some clarity regarding the DevOps abilities necessary to develop a lucrative career.

#devops#cloud#aws#programming#cloudcomputing#technology#developer#linux#python#coding#azure#software#iot#cybersecurity#kubernetes#it#css#javascript#java#devopsengineer#tech#ai#datascience#docker#softwaredeveloper#webdev#machinelearning#programmer#bigdata#security


Text

DevOps vs SRE – What’s the difference?

With the growing complexity of application development, organizations are increasingly adopting methodologies that enable reliable, scalable software. DevOps and SRE (Site Reliability Engineering) are two methods for improving the product release cycle by enhancing collaboration, automation, and monitoring. Both approaches use automation and collaboration to help teams develop resilient and dependable software, but there are significant distinctions in what they offer and how they work.

This article explores the purpose of each. We’ll look at the advantages, disadvantages, and major aspects of both approaches.

What is DevOps?

DevOps is an approach to software development. It adheres to lean and agile practices, which distinguishes it from other methods. DevOps concentrates on enabling continuous delivery, frequent releases, and an automated way of developing apps and software. The DevOps approach comprises the norms and technology practices that allow planned work to flow rapidly into production.

The DevOps process has the following goals in mind:

Speed up the time to get products to the market.

Reduce the time to develop software.

Increase the responsiveness to market demands.

DevOps combines operations and development teams to deploy software smoothly and efficiently. It is based on creating an environment of close communication and an extremely high degree of automation. Under DevOps, the team responsible for writing the code is also accountable for maintaining it once it has been put into production. This means that the traditionally separate operations and development teams collaborate to improve software releases.

What are the advantages of DevOps?

First, DevOps improves software delivery speed by making minor changes and releasing them more often. So, companies can get products to market quicker. Updating and fixing are quicker and easier, while the software’s stability is increased. Furthermore, even small modifications are easy to roll back in a short time when needed. Another benefit is that the software delivery is safer.

What DevOps does and how

DevOps is a fantastic way to create an environment of collaboration right from the beginning. The focus is on teams that must work together to get the code into production and then maintain it. This means the DevOps team is accountable for creating the code, fixing the bugs, and everything else associated with the code. The DevOps process is built around five core principles:

Eliminate silos. The DevOps team’s mission is to share information between operations and development. This means that more insight is gained, and better communication is encouraged.

Accept failure and fail quickly. The DevOps process identifies ways to minimize risk, so that the same errors are unlikely to occur twice. The team uses test automation to spot flaws earlier in the release cycle.

Introduce changes gradually. The DevOps team frequently makes small, incremental changes instead of deploying massive modifications to production. This makes it simpler to examine changes and identify problems.

Utilize automation and tools. The team constructs the release pipeline using automation tools. This speeds up the process, improves accuracy, and reduces the risk of human error. Unnecessary manual effort is minimized.

Monitor everything. DevOps uses data to evaluate the effect of every action taken. The four most frequently used metrics for measuring the effectiveness of the DevOps process are the lead time for changes, the deployment frequency, the time to restore service, and the change failure rate.
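To show how the four metrics relate to raw deployment data, here is a small Python sketch. The `Deployment` record, its field names, and the `delivery_metrics` function are assumptions made for this example; real teams usually pull these values from their CI/CD and incident tooling, and definitions vary slightly between organizations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def delivery_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four metrics over a reporting period of `period_days` days."""
    if not deploys:
        raise ValueError("at least one deployment is required")
    failures = [d for d in deploys if d.failed]
    restore_hours = [
        _hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "lead_time_hours": mean(_hours(d.deployed_at - d.committed_at) for d in deploys),
        "deploys_per_day": len(deploys) / period_days,
        "time_to_restore_hours": mean(restore_hours) if restore_hours else 0.0,
        "change_failure_rate": len(failures) / len(deploys),
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    day, hour = timedelta(days=1), timedelta(hours=1)
    sample = [
        Deployment(t0, t0 + 4 * hour),
        Deployment(t0 + day, t0 + day + 2 * hour, failed=True,
                   restored_at=t0 + day + 3 * hour),
        Deployment(t0 + 2 * day, t0 + 2 * day + 6 * hour),
        Deployment(t0 + 3 * day, t0 + 3 * day + 4 * hour),
    ]
    print(delivery_metrics(sample, period_days=4))
```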

To function effectively, DevOps teams need some powerful tools to run their workflow. They use version control for all of their code (with tools such as GitHub and GitLab), continuous integration and delivery software (Jenkins, Spinnaker, etc.), deployment automation tools, test automation tools (Selenium, etc.), and incident management tools (PagerDuty, Opsgenie, etc.).

Read more: Top 30 Most Effective DevOps Tools for 2022

What is Site Reliability Engineering (SRE)?

The concept of Site Reliability Engineering was first introduced in 2003. It was initially developed to provide a framework for developers building large-scale software. Today, SRE is carried out by experts with solid development backgrounds who apply engineering methods to common problems that arise when running systems in production. An SRE is like a systems engineer who is also responsible for operations. The role blends system operations responsibilities with software development and software engineering. It covers a range of duties: writing and building code, distributing the code, and finally running the code in production.

The primary goal of SRE is to create a stable and super-scalable system or a software program. In the past, operations staff and software engineers were two groups with different types of work. They dealt with problems in different ways. Site Reliability Engineering goes beyond the conventional approach, and its collaborative nature has been gaining in popularity.

SRE employs 3 Service Level Commitments to measure how well a system performs:

Service level agreements (SLAs) define the reliability, performance, and latency that end users expect.

Service level objectives (SLOs) are the target values and goals that SRE teams set and must achieve in order to meet SLAs.

Service level indicators (SLIs) measure specific parameters and aspects that indicate how well a system conforms to its SLOs. Typical SLIs include request latency, system throughput, lead time, deployment frequency, mean time to restore (MTTR), availability, and error rate.
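The relationship between an SLI and an SLO can be sketched in a few lines of Python. This is an illustrative example only: the `Slo` class, the helper functions, and the 99.9% and 0.1% targets are assumptions, not values taken from any particular SLA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """A target value that a measured SLI is compared against."""
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, sli: float) -> bool:
        if self.higher_is_better:
            return sli >= self.target
        return sli <= self.target


def availability(successful: int, total: int) -> float:
    """Availability SLI: fraction of requests served successfully."""
    return successful / total if total else 1.0


def error_rate(successful: int, total: int) -> float:
    """Error-rate SLI: fraction of requests that failed."""
    return (total - successful) / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical measurement window: 1,000,000 requests, 500 of them failed.
    successful, total = 999_500, 1_000_000

    availability_slo = Slo("availability", target=0.999)
    error_rate_slo = Slo("error rate", target=0.001, higher_is_better=False)

    print(availability_slo.is_met(availability(successful, total)))
    print(error_rate_slo.is_met(error_rate(successful, total)))
```

The SLI is what you measure, the SLO is the internal target it is checked against, and the SLA is the external commitment that the SLO is chosen to protect.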

What are the advantages of Site Reliability Engineering?

Firstly, SRE significantly improves uptime. The approach focuses on keeping the platform or service running no matter what. Tasks such as disaster prevention, risk mitigation, reliability, and redundancy are of the utmost importance. The SRE team’s primary objective is to find the most effective ways to stop problems before they cause service interruptions. This is especially important when dealing with massive systems. Another advantage is that Site Reliability Engineering helps brands reduce manual work, which gives developers more time to innovate. Flaws are identified and fixed quickly.

What SRE does and how

The function of Site Reliability Engineering in a business is fairly simple: the SRE team ensures that the service or platform is available to users whenever they wish to use it.

What are the responsibilities of SRE?

SRE eliminates silos differently than DevOps. SRE teams help developers create more reliable systems because they focus on both operations and development. As a result, developers have much better context for supporting systems in production.

SRE relies on metrics to improve the efficiency of the system. This perspective on reliability is extremely useful in deciding whether a change is ready to be released into production. The core of SRE is three measures: SLO (service-level objective), SLA (service-level agreement), and SLI (service-level indicator).

Site Reliability Engineering handles support escalation issues. It also encourages customers to take part in and report on incident reviews.

The SRE team evaluates and validates the new features and updates and develops the system’s documentation.

SRE tools

SRE teams depend on automating routine tasks, using tools and methods that standardize operations across the software life cycle. A few tools and technologies that aid in Site Reliability Engineering include:

Containers package applications in a unified environment across multiple deployment platforms, enabling cloud-native development.

Kubernetes is a well-known container orchestrator that can manage containers running across several environments effectively.

Cloud platforms allow you to create scalable, flexible, and reliable applications in distributed environments. The most popular platforms are Microsoft Azure, Amazon AWS and Google Cloud.

Tools for project planning and management let you control IT operations across distributed teams. A few of the most well-known tools are JIRA and Pivotal Tracker.

Source control tools such as Subversion and GitHub remove the boundaries between operators and developers, allowing seamless collaboration and application delivery.

DevOps vs SRE – What makes them different?

DevOps is about writing and deploying code. SRE, on the other hand, is more comprehensive, with the team taking a wider end-user perspective while working on the system.

A DevOps team works on an app or product using an agile approach. They develop, test, deploy, and monitor apps in a way that is fast, controlled, and high-quality. An SRE team regularly provides the development team with feedback. Its goal is to harness operations data and software engineering, mostly by automating IT operations tasks, all of which can speed up software delivery. A DevOps team’s mission is to make the whole company more efficient.

The goal of SRE is to streamline IT operations by using methodologies that were previously employed only by software engineers. Site Reliability Engineering focuses on keeping the application or platform available to customers (it focuses on the customers’ requirements through prioritizing SLA, SLI and SLO metrics). On the other hand, DevOps concentrates on the processes that help successfully deploy a product. Below are the distinctions between DevOps and SRE.

The role of the developer team

DevOps combines the skillsets of developers and IT operations engineers. SRE solves the problems of IT operations using the developer’s mindset and tools.

Skills

DevOps teams primarily work with code. They write it, test it, and push it into production to deliver software that solves someone’s problem. They also set up and run a CI/CD pipeline. Site Reliability Engineering takes a broader approach. The team conducts analyses to determine why something went wrong, and does whatever it can to stop the issue from continuing or recurring.

SRE vs DevOps – any similarities?

SRE and DevOps indeed have a lot in common, since both are methodologies put in place to monitor production and ensure that operations management works according to plan. They share a common goal: to achieve better results for complex distributed systems. Both agree that changes are necessary for improvement. Both focus on people working together as a team with shared duties. Both hold that keeping everything functioning is everyone’s responsibility. Ownership is shared, from initial code writing to software builds to deployment to production and maintenance. In both SRE and DevOps, engineers write code and optimize it before deployment to production.

In summary, DevOps and SRE should collaborate toward the same goal.

How does SRE support DevOps principles and philosophies?

DevOps and SRE aren’t competing methods. Rather, SRE is a practical way to address most DevOps concerns.

In this section, we’ll look at how teams can use SRE to carry out the philosophies and concepts of DevOps:

Eliminating organizational silos

DevOps aims to ensure that departments and software teams aren’t isolated from one another and that they share a common objective.

SRE helps in this process by establishing shared project responsibility between teams. With SRE, each team uses the same tools, techniques, and codebase to support:

Uniformity

Continuous collaboration

Implementing gradual change

DevOps embraces slow, gradual change to allow for continuous improvement. SRE enables teams to carry out regular, small updates that minimize the impact of changes on application availability and stability.
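
One common way to apply gradual change is a staged (canary) rollout, where a new version receives a growing share of traffic only while it stays healthy. The Python sketch below shows that decision logic under stated assumptions: the function name next_rollout_step, the traffic steps, and the tolerance value are all hypothetical and not tied to any specific deployment tool.

```python
from typing import Sequence


def next_rollout_step(
    current_percent: int,
    canary_error_rate: float,
    baseline_error_rate: float,
    steps: Sequence[int] = (1, 5, 25, 50, 100),
    tolerance: float = 0.001,
) -> int:
    """Return the next traffic percentage for the new version.

    Returns 0 to signal a rollback when the canary's error rate exceeds
    the baseline by more than the allowed tolerance.
    """
    if canary_error_rate > baseline_error_rate + tolerance:
        return 0  # the change is hurting reliability: roll back
    for step in steps:
        if step > current_percent:
            return step  # advance to the next, larger slice of traffic
    return 100  # already fully rolled out


if __name__ == "__main__":
    # Healthy canary at 5% traffic: move on to the next step.
    print(next_rollout_step(5, canary_error_rate=0.002, baseline_error_rate=0.002))
    # Unhealthy canary: signal a rollback.
    print(next_rollout_step(5, canary_error_rate=0.010, baseline_error_rate=0.002))
```

Keeping each step small means a bad change affects only a fraction of users before it is caught, which is the point of making changes gradually.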

Furthermore, SRE teams use CI/CD tools for change management and continuous testing to ensure effective deployment of changes to code.

Accepting failure as normal

Both SRE and DevOps treat failures and errors as inevitable. While DevOps aims to manage runtime errors and enable teams to learn from them, SRE enforces error management through service level commitments (SLx) to ensure that all failures are dealt with.

SRE also puts a cost on risk, typically through an error budget, which lets teams test failure limits for reevaluation and innovation.
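
In SRE practice, this cost of risk is often expressed as an error budget: the amount of failure an SLO permits over a period. The following Python sketch shows the arithmetic, assuming a request-based availability SLO. The function names and the example figures are illustrative assumptions.

```python
def allowed_failures(slo_target: float, total_requests: int) -> float:
    """Error budget: how many requests may fail without breaching the SLO."""
    return (1.0 - slo_target) * total_requests


def budget_remaining(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget still unspent (negative if overspent)."""
    budget = allowed_failures(slo_target, total_requests)
    if budget <= 0:
        # A 100% target leaves no room for failure at all.
        return 0.0
    return 1.0 - failed_requests / budget


def can_release(slo_target: float, total_requests: int, failed_requests: int) -> bool:
    """Simple policy: risky changes are allowed only while budget remains."""
    return budget_remaining(slo_target, total_requests, failed_requests) > 0


if __name__ == "__main__":
    # A 99.9% SLO over 1,000,000 requests tolerates roughly 1,000 failures.
    print(f"budget: {allowed_failures(0.999, 1_000_000):.0f} failed requests")
    print(f"remaining: {budget_remaining(0.999, 1_000_000, 250):.0%}")
    print("release allowed:", can_release(0.999, 1_000_000, 250))
```

A team that has spent its budget would, under this policy, pause feature releases and focus on reliability work until the budget recovers.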

Making use of tools and automation

Both DevOps and SRE use automation to improve processes and service delivery. SRE allows teams to use the same tools and services via flexible application programming interfaces (APIs). While DevOps encourages the use of technology that automates processes, SRE makes sure that every team member can access up-to-date automation tools and technologies.

Measure everything

Since both DevOps and SRE support automation, you’ll need to continuously monitor the developed systems to ensure every process runs as planned.

DevOps collects metrics via a feedback loop. SRE, however, enforces measurement by providing SLIs, SLOs, and SLAs. Because operations are defined by software, SRE monitors toil and reliability, ensuring consistent service delivery.

Summing up DevOps vs SRE

DevOps and SRE are frequently described as two sides of the same coin, with SRE tools and techniques supporting DevOps philosophies and practices. SRE applies software engineering principles to automate and improve IT operations functions, for example:

Disaster response

Capacity planning

Monitoring

A DevOps model, by contrast, allows the quick release of software products through collaboration between operations and development teams.

Of the companies that have implemented DevOps, 50% have already adopted SRE to increase reliability. One reason is that SRE principles provide better monitoring and control of dynamic applications that depend on automation.

In the end, both methodologies aim to improve the end-to-end cycle of an IT ecosystem: DevOps through the application lifecycle, and SRE through management of the operations lifecycle.
