#Microsoft Server operating system

Text

Microsoft Windows Server 2022 Licensing: What You Need to Know

Understand the licensing options for Microsoft Windows Server 2022, including different editions, pricing, and how to choose the right license for your business.

#Windows Server 2022 installation guide#Windows Server 2022 enterprise edition#Buy Windows Server 2022 license#Microsoft Server operating system

0 notes

Text

!Important Warning!

Recently, some mods containing malware have been uploaded to various sites.

The Sims After Dark Discord server has posted the following info regarding the issue:

+++

Malware Update: What We Know Now

To recap, here are the mods we know for sure were affected by the recent malware outbreak:

"Cult Mod v2" uploaded to ModTheSims by PimpMySims (impostor account)

"Social Events - Unlimited Time" uploaded to CurseForge by MySims4 (single-use account)

"Weather and Forecast Cheat Menu" uploaded to The Sims Resource by MSQSIMS (hacked, real account)

"Seasons Cheats Menu" uploaded to The Sims Resource by MSQSIMS (hacked, real account)

Because this malware uses an .exe file, we believe that anyone using a Mac or Linux device is completely unaffected.

If the exe file was downloaded and executed on your Windows device, it has likely stolen a vast amount of your data and saved passwords from your operating system, your internet browser (Chrome, Edge, Opera, Firefox, and more all affected), Discord, Steam, Telegram, and certain crypto wallets. Thank you to anadius for decompiling the exe.

To quickly check if you have been compromised, press Windows + R on your keyboard to open the Run window. Enter %AppData%/Microsoft/Internet Explorer/UserData in the prompt and hit OK. This will open up the folder the malware was using. If there is a file in this folder called Updater.exe, you have unfortunately fallen victim to the malware. We are unaware at this time whether the malware has any function that would delete the file at a later time to cover its tracks.

To quickly remove the malware from your computer, Overwolf has put together a cleaner program to deal with it. This program should work even if you downloaded the malware outside of CurseForge. Download SimsVirusCleaner.exe from their GitHub page and run it. Once it has finished, it will tell you whether any files have been removed.

+++
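If you'd rather script the check than click through the Run window, here is a minimal Python sketch of the same test. It only looks for the Updater.exe file in the folder named above (on Windows, %AppData% is the APPDATA environment variable); it does not detect anything else and is no replacement for the cleaner. The function and constant names are just for illustration.

```python
import os
from pathlib import Path

# Folder and file named in the warning; %AppData% maps to the APPDATA environment variable on Windows.
SUSPECT_SUBDIR = Path("Microsoft") / "Internet Explorer" / "UserData"
SUSPECT_FILE = "Updater.exe"


def find_suspect_updater(appdata=None):
    """Return the path to Updater.exe if it exists in the suspect folder, else None.

    appdata defaults to the APPDATA environment variable (only set on Windows).
    """
    if appdata is None:
        appdata = os.environ.get("APPDATA")
    if not appdata:
        return None  # not on Windows, or APPDATA is unset
    candidate = Path(appdata) / SUSPECT_SUBDIR / SUSPECT_FILE
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    hit = find_suspect_updater()
    if hit:
        print(f"Found {hit}: this machine was likely compromised.")
    else:
        print("No Updater.exe found in the suspect folder.")
```

A clean result only means that one file isn't there; it can't rule out that the malware removed it.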

For more information, please check the Sims After Dark server's news channel, or see https://scarletsrealm.com/malware-mod-information/

TwistedMexi made a mod to help detect and block such mods in the future: https://www.patreon.com/posts/98126153

CurseForge has taken action and added mechanisms to prevent such files from being uploaded, so downloading there should be safe.

In general, be careful where you download from and what you download, and do not download my mods anywhere other than my own sites and my CurseForge page.

2K notes


Text

guys,, don't forget to do your research (AI)

researching ai before using it is crucial for several reasons, ensuring that you make informed decisions and use the technology responsibly.

it actually makes me angry that people are too lazy or perhaps ignorant to spend 15ish minutes reading and researching to understand the implications of this new technology (same with people ignorantly using vapes, ugh!). this affects you, your health, the people around you, the environment, society, and has legal implications.

first, understanding the capabilities and limitations of ai helps set realistic expectations. knowing what ai can and cannot do allows you to use it effectively without overestimating its potential. for example, if you are using ai as a study tool, you must be aware that it may not explain complex concepts accurately or in enough detail. additionally! you must be aware of the effects it can have on your learning and how it can discourage you from learning with your class/peers/teacher.

second, ai systems often rely on large datasets, which can raise privacy concerns. researching how an ai handles data and what measures are in place to protect your information helps safeguard your privacy.

third, ai algorithms can sometimes exhibit bias due to the data they are trained on. understanding the sources of these biases and how they are addressed can help you choose ai tools that promote fairness and avoid perpetuating discrimination.

fourth, the environmental impact of ai, such as the energy consumption of data centers, is a growing concern. researching the environmental footprint of ai technologies can help you select solutions that are more sustainable and environmentally friendly.

!google and microsoft ai use renewable and efficient energy to power their data centres. ai also powers blue river technology, carbon engineering and xylem (only applying herbicides to weeds, combatting climate change, and water-management systems). (ai magazine)

!training large-scale ai models, especially language models, consumes massive amounts of electricity and water, leading to high carbon emissions and resource depletion. ai data centers consume significant amounts of electricity and produce electronic waste, contributing to environmental degradation. generative ai systems require enormous amounts of fresh water for cooling processors and generating electricity, which can strain water resources. the proliferation of ai servers leads to increased electronic waste, harming natural ecosystems. additionally, ai operations that rely on fossil fuels for electricity production contribute to greenhouse gas emissions and climate change.

fifth, being aware of the ethical implications of ai is important. ensuring that ai tools are used responsibly and ethically helps prevent misuse and protects individuals from potential harm.

finally, researching ai helps you stay informed about best practices and the latest advancements, allowing you to make the most of the technology while minimizing risks. by taking the time to research and understand ai, you can make informed decisions that maximize its benefits while mitigating potential downsides.

impact on critical thinking

ai can both support and hinder critical thinking. on one hand, it provides access to vast amounts of information and tools for analysis, which can enhance decision-making. on the other hand, over-reliance on ai can lead to a decline in human cognitive skills, as people may become less inclined to think critically and solve problems independently.

benefits of using ai in daily life

efficiency and productivity: ai automates repetitive tasks, freeing up time for more complex activities. for example, ai-powered chatbots can handle customer inquiries, allowing human employees to focus on more strategic tasks.

personalization: ai can analyze vast amounts of data to provide personalized recommendations, such as suggesting products based on past purchases or tailoring content to individual preferences.

healthcare advancements: ai is used in diagnostics, treatment planning, and even robotic surgeries, improving patient outcomes and healthcare efficiency.

enhanced decision-making: ai can process large datasets quickly, providing insights that help in making informed decisions in business, finance, and other fields.

convenience: ai-powered virtual assistants like siri and alexa make it easier to manage daily tasks, from setting reminders to controlling smart home devices.

limitations of using ai in daily life

job displacement: automation can lead to job losses in certain sectors, as machines replace human labor.

privacy concerns: ai systems often require large amounts of data, raising concerns about data privacy and security.

bias and fairness: ai algorithms can perpetuate existing biases if they are trained on biased data, leading to unfair or discriminatory outcomes.

dependence on technology: over-reliance on ai can reduce human skills and critical thinking abilities.

high costs: developing and maintaining ai systems can be expensive, which may limit access for smaller businesses or individuals.

further reading

mit horizon, kpmg, ai magazine, bcg, techopedia, technology review, microsoft, science direct-1, science direct-2

my personal standpoint is that people must educate themselves and be mindful of not only what ai they are using, but how they use it. we should not become reliant - we are our own people! balancing the use of ai with human skills and critical thinking is key to harnessing its full potential responsibly.

🫶nene

instagram | pinterest | blog site

#student#study blog#productivity#student life#chaotic academia#it girl#it girl aesthetic#becoming that girl#that girl#academia#nenelonomh#science#ai#artificial intelligence#research#it girl mentality#it girl energy#pinterest girl#study#studying#100 days of studying#study aesthetic#study hard#study inspiration#study inspo#study community#study notes#study tips#study space#study with me

74 notes


Text

The Story of KLogs: What happens when a Mechanical Engineer codes

Since i no longer work at Warehouse Automation Startup (WAS for short) and haven't for many years, i feel as though i should recount the tale of the most bonkers program i ever wrote, but first we need to establish some background

WAS has its HQ very far away from the big customer site and i worked as a Field Service Engineer (FSE) on site. so i learned early on that if a problem needed to be solved fast, WE had to do it. we never got many updates on what was coming down the pipeline for us or what issues were being worked on. this made us very independent

As such, we got good at reading the robot logs ourselves. it took too much time to send the logs off to HQ for analysis and get back what the problem was. we can read. now GETTING the logs is another thing.

the early robots we cut our teeth on used 2.4 GHz wifi to communicate with FSEs, so dumping the logs was as simple as pushing a button in a little application and it would spit out a txt file

later on our robots were upgraded to use a 2.4 mHz xbee radio to communicate with us. which was FUCKING SLOW. and log dumping became a much more tedious process. you had to connect, go to logging mode, and then the robot would vomit all the logs in the past 2 min OR the entirety of its memory bank (only 2 options) into a terminal window. you would then save the terminal window and open it in a text editor to read them. it could take up to 5 min to dump the entire log file and if you didn't dump fast enough, the ACK messages from the control server would fill up the logs and erase the error as the memory overwrote itself.

this missing logs problem was a Big Deal for software who now weren't getting every log from every error so a NEW method of saving logs was devised: the robot would just vomit the log data in real time over a DIFFERENT radio and we would save it to a KQL server. Thanks Daddy Microsoft.

now what's KQL, you may be asking. why, it's Microsoft's very own SQL clone! it's Kusto Query Language. never mind that the system uses a SQL database for daily operations. let's use this proprietary Microsoft thing because they are paying us

so yay, problem solved. we now never miss the logs. so how do we read them if they are split up line by line in a database? why with a query of course!

select * from tbLogs where RobotUID = [64CharLongString] and timestamp > [UnixTimeCode]

if this makes no sense to you, CONGRATULATIONS! you found the problem with this setup. Most FSEs were BAD at SQL, which meant they didn't read logs anymore. If you do understand what the query is, CONGRATULATIONS! you see why this is Very Stupid.

You could not search by robot name. each robot had some arbitrarily assigned 64-character string as an identifier and the timestamps were not set to local time. so you had to run a lookup query to find the right UID and do some time zone math to figure out what part of the logs to read. oh yeah and you had to download KQL to view them. so now we had both SQL and KQL on our computers

NOBODY in the field liked this.
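For anyone who never had to do it, here's roughly what that manual routine amounts to, as a Python sketch (3.9+ for zoneinfo): map the robot name to its UID, convert a local time to a UTC Unix timestamp, and drop both into the query. The lookup table, robot name, and time zone are made-up placeholders (the real lookup was its own database query), and this only builds the query text; it doesn't connect to anything.

```python
from datetime import datetime
from zoneinfo import ZoneInfo

# Hypothetical stand-in for the name -> UID lookup query; real UIDs were 64 characters long.
ROBOT_UIDS = {
    "robot-042": "a" * 64,
}


def local_to_unix(local_str, tz_name):
    """Convert a local wall-clock time like '2019-03-05 14:30' in tz_name to Unix seconds (UTC)."""
    naive = datetime.strptime(local_str, "%Y-%m-%d %H:%M")
    aware = naive.replace(tzinfo=ZoneInfo(tz_name))  # attach the site's time zone, DST included
    return int(aware.timestamp())


def build_log_query(robot_name, local_str, tz_name):
    """Build the post's tbLogs query for one robot, starting at a local time."""
    uid = ROBOT_UIDS[robot_name]  # raises KeyError for an unknown robot name
    since = local_to_unix(local_str, tz_name)
    # Illustration only: real code should pass values as query parameters, not via string formatting.
    return f"select * from tbLogs where RobotUID = '{uid}' and timestamp > {since}"
```

For example, 14:30 on 2019-03-05 in America/Chicago is 20:30 UTC, because that date falls before the switch to daylight saving time. That is exactly the kind of math nobody wanted to do by hand at a robot.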

But Daddy Microsoft comes to the rescue

see, we didn't JUST get KQL as part of that deal. we got the entire Microsoft cloud suite. and some people (like me) had been automating emails and stuff with Power Automate

This is Microsoft Power Automate. it's Microsoft's version of Scratch but it has hooks into everything Microsoft. SharePoint, Teams, Outlook, Excel, it can integrate with all of it. i had been using it to send an email once a day with a list of all the robots in maintenance.

this gave me an idea

and i checked

and Power Automate had hooks for KQL

KLogs is actually short for Kusto Logs

I did not know how to program in Power Automate but damn it anything is better than writing KQL queries. so i got to work. and about 2 months later i had a BEHEMOTH of a Power Automate program. it lagged the webpage and many times when i tried to edit something my changes wouldn't take and i would have to click in very specific ways to ensure none of my variables were getting nuked. i don't think this was the intended purpose of Power Automate but this is what it did

the KLogger would watch a list of Teams chats and when someone typed "klogs" or pasted a copy of an ERROR message, it would spring into action.

it would extract the robot name from the message and the timestamp from Teams

it would look up the name in the database to find the 64-character UID and the location that robot was assigned to

it would reply to the message in teams saying it found a robot name and was getting logs

it would run a KQL query for the database and get the control system logs, then export them into a CSV

it would save the CSV with a .xls extension into a folder in SharePoint (it would make a new folder for each day and location if it didn't have one already)

it would send ANOTHER message in teams with a LINK to the file in SharePoint

it would then enter a loop and scour the robot logs looking for the keyword ESTOP to find the error. (it did this because Kusto was SLOWER than the xbee radio and had up to a 10 min delay on syncing)

if it found the error, it would adjust its start and end timestamps to capture it and export the robot logs book-ended from the event by ~1 min. if it didn't, it would use the timestamp from when it was triggered +/- 5 min
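The windowing rule is simple enough to write down. Here's a minimal Python sketch of it, assuming the logs arrive as (unix_seconds, text) pairs and that fetch_logs is some hypothetical function that re-runs the Kusto query. The retry count and delay are guesses; only the ~1 min and +/- 5 min margins come from the description.

```python
import time

# Margins from the post: ~1 min around a found ESTOP, +/- 5 min around the trigger otherwise.
ESTOP_MARGIN_S = 60
FALLBACK_MARGIN_S = 5 * 60


def log_window(log_lines, trigger_ts):
    """Return (start, end) Unix timestamps for the robot-log export.

    log_lines: iterable of (unix_seconds, text) pairs (assumed shape).
    trigger_ts: Unix time of the Teams message that woke the bot up.
    """
    estops = [ts for ts, text in log_lines if "ESTOP" in text]
    if estops:
        # Book-end the ESTOP event(s) by about a minute on each side.
        return min(estops) - ESTOP_MARGIN_S, max(estops) + ESTOP_MARGIN_S
    # No ESTOP seen: fall back to the trigger time +/- 5 min.
    return trigger_ts - FALLBACK_MARGIN_S, trigger_ts + FALLBACK_MARGIN_S


def wait_for_window(fetch_logs, trigger_ts, attempts=10, delay_s=60, sleep=time.sleep):
    """Re-run fetch_logs() until an ESTOP appears or attempts run out.

    fetch_logs is a hypothetical callable returning (unix_seconds, text) pairs;
    polling exists because Kusto could lag the robot by up to ~10 min.
    """
    for attempt in range(attempts):
        lines = list(fetch_logs())
        if any("ESTOP" in text for _, text in lines):
            return log_window(lines, trigger_ts)
        if attempt < attempts - 1:
            sleep(delay_s)  # give Kusto time to sync before asking again
    return log_window([], trigger_ts)
```

Passing sleep in as a parameter is just so the loop can be exercised without actually waiting ten minutes.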

it saved THOSE logs to SharePoint the same way as before

it would send ANOTHER message in teams with a link to the files

it would then check if the error was 1 of 3 very specific types of error with the camera. if it was, it would extract the base64 jpg image saved in KQL as a byte array, do the math to convert it, and save that as a jpg in SharePoint (and link it of course)

and then it would terminate. and if it encountered an error anywhere in all of this, i had logic where it would spit back an error message in Teams as plaintext explaining what step failed and the program would close gracefully
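The post doesn't say exactly how the camera images were stored beyond "base64 jpg ... as a byte array", so this Python sketch of the conversion step assumes the column comes back as a list of byte values that spell out a base64 string. It decodes that and checks for the JPEG start-of-image marker (FF D8) before trusting the result. The function name is made up for illustration.

```python
import base64

JPEG_SOI = b"\xff\xd8"  # JPEG start-of-image marker: every JPEG file begins with these two bytes


def byte_array_to_jpeg(byte_values):
    """Turn a list of byte values (0-255) holding base64 text into raw JPEG bytes.

    Assumes the stored bytes are the ASCII characters of a base64 string.
    """
    b64_text = bytes(byte_values)  # list of ints -> bytes, e.g. b"/9j/..."
    raw = base64.b64decode(b64_text, validate=True)  # reject anything that isn't clean base64
    if not raw.startswith(JPEG_SOI):
        raise ValueError("decoded data does not look like a JPEG")
    return raw
```

Writing the result with open(path, "wb") gives a normal .jpg; the flow itself saved it to SharePoint instead.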

I deployed it without asking anyone at one of the sites that was struggling. i just pointed it at their chat and turned it on. it had a bit of a rocky start (spammed chat) but man did the FSE's LOVE IT.

about 6 months later software deployed their answer to reading the logs: a webpage that acted as a nice GUI to the KQL database. much better than a CSV file

it still needed you to scroll through a big drop-down of robot names and enter a timestamp, but i noticed something. all that did was just change part of the URL and refresh the webpage

SO I MADE KLOGS 2 AND HAD IT GENERATE THE URL FOR YOU AND REPLY TO YOUR MESSAGE WITH IT. (it also still did the control server and jpg stuff). There's a non-zero chance that klogs was still in use long after i left that job
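Generating the link is the easy part once you know the page just reads its settings from the URL. Here's a Python sketch; the base URL and the parameter names (robot, start, end) are made up, since the real viewer's URL format isn't in the post.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode

# Hypothetical: the real log viewer's address and query parameter names are not known.
VIEWER_BASE = "https://logviewer.example.com/logs"


def viewer_url(robot_name, start, end):
    """Build a log-viewer link for one robot between two timezone-aware datetimes."""
    params = {
        "robot": robot_name,
        "start": start.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "end": end.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
    }
    # urlencode percent-escapes the values (spaces become "+", ":" becomes "%3A").
    return f"{VIEWER_BASE}?{urlencode(params)}"
```

Letting urlencode do the escaping avoids hand-building query strings, which is exactly the kind of thing that breaks on a robot name with a space in it.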

now i don't recommend anyone use power automate like this. it's clunky and weird. i had to make a variable called "Carrage Return" which was a blank text box that i pressed enter in one time, because it was incapable of understanding \n or generating a new line in any capacity OTHER than this (thanks support forum).

i'm also sure this is probably giving the actual programmer people anxiety. imagine working at a company and then some rando you've never seen but only heard about as "the FSE who's really good at root causing stuff", in a department that does not do any coding, managed to, in their spare time, build and release an entire workflow piggybacking on your work without any oversight, code review, or permission.....and everyone liked it

#comet tales#lazee works#power automate#coding#software engineering#it was so funny whenever i visited HQ because i would go “hi my name is LazeeComet” and they would go “OH i've heard SO much about you”

63 notes


Text

ever wonder why spotify/discord/teams desktop apps kind of suck?

i don't do a lot of long form posts but. I realized that so many people aren't aware that a lot of the enshittification of using computers in the past decade or so has a lot to do with embedded webapps becoming so frequently used instead of creating native programs. and boy do i have some thoughts about this.

for those who are not blessed/cursed with computer knowledge: basically, most (graphical) programs used to be native programs (ever since we started widely using a graphical interface instead of just a text-based terminal). these are apps that feel like the settings app on your computer, and they're one of the reasons windows and mac programs look different (bc each os has its own design language!). this was the standard for a long long time - your emails were served to you in a special email application like thunderbird or outlook, your documents were processed in something like microsoft word (again. On your own computer!). same goes for calendars, calculators, spreadsheets, and a whole bunch more - crucially, your computer didn't depend on the internet to do basic things, but being connected to the web was very much an appreciated luxury!

that leads us to the eventual rise of webapps that we are all so painfully familiar with today - gmail dot com/outlook, google docs, google/microsoft calendar, and so on. as html/css/js technology grew beyond just displaying text, images and such, it became clear that it could be a lot more convenient to just run programs on some server somewhere, and serve the front end on a web interface for anyone to use. this is really very convenient!!!! it Also means a huge concentration of power (notice how suddenly google is one company providing you the SERVICE) - you're renting instead of owning. which means google is your landlord - the services you use every day are first and foremost means of hitting the year over year profit quota. it's a pretty sweet deal to have a free email account in exchange for ads! email accounts used to be paid (simply because the provider had to store your emails somewhere, which takes up storage space, which is physical hard drives), but now the standard as of hotmail/yahoo/gmail is to just provide a free service and shove ads in as much as you need to.

webapps can do a lot of things, but they didn't immediately replace software like skype or code editors or music players - software that requires heavier system interaction or snappy audio/visual responses. in 2013, the electron framework came out - a way of packaging up a bundle of html/css/js into a neat little crossplatform application that could be downloaded and run like any other native application. there were significant upsides to this - web developers could suddenly use their webapp skills to build desktop applications that ran on any computer as long as it could support chrome*! the first application built on electron was the late code editor atom (rest in peace), but soon a whole lot of companies took note! some notable contemporary applications that use electron, or a similar webapp-embedded-in-a-little-chrome as a base, are:

microsoft teams

notion

vscode

discord

spotify

anyone! who has paid even a little bit of attention to their computer - especially when using older/budget computers - knows just how much having chrome open can slow down your computer (firefox as well to a lesser extent. because its just built better <3)

whenever you have one of these programs open on your computer, it's running in a one-tab chrome browser. there is a whole extra chrome open just to run your discord. if you have discord, spotify, and notion open all at once, along with chrome itself, that's four chromes. needless to say, this uses a LOT of resources to deliver applications that are often much less polished and less integrated with the rest of the operating system. it also means that if you have no internet connection, sometimes the apps straight up do not work, since many of them rely heavily on being connected to their servers, where the heavy lifting is done.

taking this idea to the very furthest is the concept of chromebooks - dinky little laptops that were created to only run a web browser and webapps - simply a vessel to access the google dot com mothership. they have gotten better at running offline android/linux applications, but often the $200 chromebooks that are bought in bulk have almost no processing power of their own - why would you even need it? you have everything you could possibly need in the warm embrace of google!

all in all the average person in the modern age, using computers in the mainstream way, owns very little of their means of computing.

i started this post as a rant about the electron/webapp framework because i think that it sucks and it displaces proper programs. and now ive swiveled into getting pissed off at software services, which is honestly the core issue. and i think things can be better!!!!!!!!!!! but to think about better computing culture one has to imagine living outside of capitalism.

i'm not the one to try to explain permacomputing specifically because there's already wonderful literature ^ but if anything here interested you, read this!!!!!!!!!! there is a beautiful world where computers live for decades and do less but do it well. and you just own it. come frolic with me Okay ? :]

*when i say chrome i technically mean chromium. but functionally it's the same thing

347 notes


Text

WIRES>]; ATTACK ON ISRAEL WAS A FALSE FLAG EVENT

_Israel with over 10,000 Spys in the military imbedded inside IRAN. Saudi Arabia and world Militaries.... Israels INTELLIGENCE Agencies, including MOSSAD which is deeply connected to CIA, MI6 .. > ALL knew the Hamas was going to attack Israel several weeks before and months ago including several hours before the attack<

_The United States knew the attack was coming was did Australia, UK. Canada, EU INTELLIGENCE...... Several satellites over Iran, Israel, Palestine and near all captured thousands of troops moving towards Israel all MAJOR INTELLIGENCE AGENCIES knew the attack was coming and news reporters (Israeli spys) in Palestine all knew the attack was coming and tried to warn Israel and the military///// >

>EVERYONE KNEW THE ATTACK WAS COMING,, INCLUDING INDIA INTELLIGENCE WHO TRIED TO CONTACT ISRAEL ( but Israel commanders and President blocked ALL calls before the attack)

_WARNING

>This attack on Israel was an inside Job, with the help of CIA. MOSSAD, MI6 and large parts of the funding 6 billion $$$$$$$ from U.S. to Iran funded the operations.

_The weapons used came from the Ukraine Black market which came from NATO,>the U.S.

The ISRAELI President and Prime minister Netanyahu ALL STOOD DOWN before the attacks began and told the Israeli INTEL and military commanders to stand down<

___

There was no intelligence error. Israeli intensionally let the stacks happen<

_______

FOG OF WAR

Both the deep state and the white hats wanted these EVENTS to take place.

BOTH the [ ds] and white hats are fighting for the future control of ISRAEL

SOURCES REPORT> " INSIDE OF ISRAELI BANKS , INTELLIGENCE AGENCIES AND UNDERGROUND BASES LAY THE WORLD INFORMATION/DATA/SERVERS ON HUMAN TRAFFICKING WORLD OPERATIONS CONNECTED TO PEDOPHILE RINGS.

]> [ EPSTEIN] was created by the MOSSAD

with the CIA MI6 and EPSTEIN got his funding from MOSSAD who was Ghislaine Maxwells father> Israeli super spy Robert Maxwell_ ( who worked for, cia and mi6 also)/////

____

The past 2 years in Israel the military has become divided much like the U.S. military who are losing hope in the government leaders and sectors. Several Revolts have tried to start but were ended quickly.

🔥 Major PANIC has been hitting the Israeli INTEL, Prime minister and military commanders community as their corruption and crimes keep getting EXPOSED and major PANIC is happening as U S. IS COMING CLOSER TO DROPPING THE EPSTEIN FILES. EPSTEIN LIST AND THE MAJOR COUNTRIES WHO DEALT WITH EPSTEIN> ESPECIALLY ISRAEL WHO CREATED EPSTEIN w/cia/mi6

_

_

____

Before EPSTEIN was arrested, he was apprehended several times by the military intelligence ALLIANCE and he was working with white hats and gave ALL INFORMATION ON CIA. MI6 . MOSSAD. JP MORGAN. WORLD BANKS. GATES. ETC ETC ECT EX ECT E TO X..>> ISRAEL<<BIG TECH

GOOGLE. FACEBOOK YOUTUBE MICROSOFT and their connection to world deep state cabal military intelligence and world control by the Elites and Globalist,<

_

This massive coming THE STORM is scaring the CIA. MOSSAD KAZARIAN MAFIA. MI6 ETC ECT . ect etc AND THEY ARE TRYING TO DESTROY ALL THE MILITARY INTELLIGENCE EVIDENCE INSIDE ISRAEL AND UNDERGROUND BUNKERS TO CONCEAL ALL THE EVIDENCE OF THE WORLD HUMAN TRAFFICKING TRADE

_ THE WORLD BIG TECH FACEBOOK GOOGLE YOUTUBE CONTROL

_THE WORLD MONEY LAUNDERING SYSTEM THAT IS CONNECTED FROM ISRAEL TO UKRAINE TO THE U S. TO NATO UN. U.S. INDUSTRIAL MILITARY COMPLEX SYSTEM

MAJOR PANIC IS HAPPENING IN ISRAEL AS THE MILITARY WAS PLANNING A 2024 COUP IN ISRAEL TO OVER THROW THE DEEP STATE MILITARY AND REGIMEN THAT CONNECTED TO CIA.MI6 > CLINTON'S ROCKEFELLERS.>>

( Not far from where Jesus once walked.... The KAZARIAN Mafia. The cabal, dark Families began the practice of ADRENOCHROME and there satanic rituals to the god of moloch god of child sacrifice ..

Satanism..... This is why satanism is pushed through the world and world shopping centers and music and movies...)

- David Wilcock

Something definitely doesn't seem right and destroying evidence has been going on for a long time, think Oklahoma City bombing, 9/11's building 7 and even Waco Texas was about destroying evidence. Is this possible? Think about it and you decide. 🤔

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourself#reeducate yourselves#think for yourselves#think about it#think for yourself#do your homework#do some research#do your own research#ask yourself questions#question everything#you decide

107 notes


Note

Hey so what's Linux and what's it do

It’s an operating system like macOS or Windows. It runs on like 90% of servers but very few people use it on desktop. I do because I’m extra like that I guess.

It’s better than Windows in some ways since it doesn’t have a bunch of ads and shit baked into it. Plus it’s free (both in the sense of not paying and the sense of freedom [like all the code is public so you know what’s running on your system, can change whatever you want if you have the skills to, etc]). Also it’s more customizable.

The downside is a lot of apps don't run on it (games that have really invasive anti-cheat [cough Valorant cough], Adobe products, Microsoft Office), although a shit ton of Windows-only games do run totally fine. There are some alternatives, like LibreOffice instead of MS Office (also anything in a browser runs fine, so Google Docs too). GIMP as a Photoshop replacement does exist; it's fine for basic stuff but I've heard it's not great for advanced stuff.

#also linux users are like vegans in that if they are one they’ll tell you they are#and also tell you why you should be#oh also android and chromeos are both based on linux#linux#sorry this post ended up so long lol

55 notes


Note

literally annoyed that all coastal states (including my dumb glove shaped state) aren't 90% hydro/wind

i might not be an engineer but i am with you there

*drags soapbox out and jumps on top*

DO YOU KNOW HOW INFURIATING IT IS TO HAVE EVERYONE SAY “ELECTRIFY EVERYTHING” KNOWING FULL GODDAMN WELL THAT THE GRID 1) CANNOT SUPPORT IT AND 2) IS DRASTICALLY NOT BASED ON RENEWABLE ENERGY?!?!?!

Don’t get me wrong I love electric cars, I love heat pump systems, I love buildings and homes that can say they are fossil fuel free! Really! I do!

But it means FUCK ALL when you have!!!! Said electricity!!!! Sourced by fossil fuels!!!! I said this in my tags on the other post but New York City! Was operating on *COAL*!!!!! Up until like 5 years ago.

WE ARE SITTING IN THE MIDDLE OF A RIVER.

Not to mention the ocean which like. You ever been to the beach?! You know what there’s a whole hell of a lot of at the beach? Wind!!!!!!!! And yet we have literal campaigns saying “save our oceans! Say no to wind power!”

Idk bruh I feel like the fish are gonna be less happy in a boiling ocean than needing to swim around a giant turbine but. I’m not a fuckin fish so.

NOT TO MENTION (I am fully waving my hands around like a crazy person because this is the main thing that gets me going)

THE ELECTRICAL GRID OF THE UNITED STATES HAS NOT BEEN UPDATED ON LARGE SCALE LEVELS SINCE IT WAS BUILT IN THE 1950s AND 60s.

It is not DESIGNED to handle every building in the city of [random map location] Chicago being off of gas and completely electrified. It's not!!! The plants cannot handle it as they are now!

So not only do we not have renewable sources because somebody in Iowa doesn’t want to replace their corn field with a solar field/a rich Long Islander doesn’t want to replace their ocean view with a wind turbine! We also are actively encouraging people to put MORE of a strain on the grid with NO FUCKING SOLUTION TO MEET THAT DEMAND!

I used to deal with this *all* the time in my old job when I was working with smaller buildings - they ALWAYS needed an electrical upgrade from the street and like. The utility only has so many wires going to that building. And it's not planning on bringing in more for the most part!

(I am now vibrating with rage) and THEN you have the fuckin AI bros! Who have their data centers in the middle of nowhere because that’s a great place to have a lot of servers that you need right? Yeah sure, you know what those places don’t have? ELECTRICAL INFRASTRUCTURE TO SUPPORT THE STUPID AMOUNT OF POWER AI NEEDS!!!!!!!

Now the obvious solution is that the AI bros of Google and Microsoft and whoever the fuck just use their BILLIONS OF FUCKING DOLLARS IN PROFIT to be good neighbors and upgrade the fucking systems because truly what is the downside to that everybody fucking wins!

But what do I know. I'm just your friendly neighborhood engineer.

*hops down from soapbox*

#Kate I’m so sorry#you did not realize that you touched on one of my top three major soapbox points#but the state of the grid and lack of renewables in the year 2024 is truly something I could scream about for hours#and ask Reina!!!#I HAVE!!!#😅#friendly neighborhood engineer#answered asks#hookedhobbies#long post

11 notes


Text

Managed VPS Hosting Plans: Full Control and Support

When it comes to hosting, many businesses find themselves in need of solutions that offer flexibility, control, and reliability. Managed VPS (Virtual Private Server) hosting provides the perfect balance between shared and dedicated hosting, making it a popular choice for companies of all sizes. With managed VPS hosting, businesses get the benefits of a dedicated server without the high costs, along with the peace of mind that comes with professional support.

Understanding Managed VPS Hosting

Managed VPS hosting offers a blend of performance and ease of use, making it ideal for businesses that lack the technical expertise or resources to manage a server on their own. One of the key features of managed VPS is that the hosting provider handles the server's technical aspects, including updates, security patches, and backups. This allows businesses to focus on what matters most—growing their business—while leaving the server management to the experts.

Flexibility and Control with Cheap Linux VPS Hosting

For many businesses, Linux-based hosting is a cost-effective and powerful solution. Cheap Linux VPS hosting offers excellent scalability and performance, making it ideal for developers, small businesses, and enterprises. With Linux VPS hosting, users can benefit from open-source technology that provides flexibility to install the applications and software they need. Additionally, it’s known for its stability and security, ensuring that businesses have a reliable foundation for their online operations.

Linux VPS hosting is often the preferred choice for developers who need full control over their hosting environment. Managed plans ensure that even those who lack server management skills can still leverage the power of a VPS. From configuring firewalls to managing security protocols, managed VPS hosting allows businesses to tailor their hosting solutions to their specific needs without the technical hassle.

The Power of the Best Windows VPS Servers

While Linux hosting is popular, many businesses prefer Windows VPS servers because they are already familiar with the Windows operating system. Windows VPS hosting integrates seamlessly with Microsoft applications, making it a strong choice for businesses that rely on software such as Microsoft Exchange, SQL Server, or ASP.NET. With managed support, companies can focus on using their software efficiently while the hosting provider handles the server's technical aspects.

With full control over the server environment, Windows VPS hosting allows businesses to customize their server settings, choose the level of resources they need, and scale as their company grows. Managed support ensures that Windows VPS servers run smoothly, providing businesses with a reliable and powerful hosting solution.

Cheap Windows VPS Server: Affordable and Efficient

For businesses on a budget, a cheap Windows VPS server offers an affordable solution without sacrificing performance. These plans are designed for businesses that want an economical option that still provides robust features and excellent support. Managed VPS hosting keeps the server running optimally, so businesses can focus on running their operations.

In conclusion, whether you’re looking for Linux or Windows hosting, AKL Web Host offers a range of Managed VPS Hosting Plans to meet your needs. With full control over your server environment and expert support, AKL Web Host ensures that your hosting is reliable, secure, and tailored to your business requirements.

#windows vps server#cheap windows vps server#cheap linux vps hosting#unlimited web hosting plan#best web hosting for ecommerce#best dedicated hosting services for 2024#best windows vps servers#best wordpress hosting#cheap wordpress hosting#cheap dedicated server#Windows VPS Server#Cheap Windows VPS Server#Best Windows VPS Servers#Cheap linux VPS hosting#Hosting with free SSL certificate

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing
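Load balancing spreads incoming requests across several servers. As a minimal sketch of the simplest strategy, round-robin, the Python below hands each request to the next healthy backend in turn. The `RoundRobinBalancer` class and the backend names are hypothetical; dedicated load balancers layer health probes, weighting, and session persistence on top of this basic idea.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each request to the next healthy backend in a fixed rotation (hypothetical helper)."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        # Stop routing to a backend, e.g. after a failed health check.
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        # Resume routing to a known backend once it recovers.
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self) -> str:
        # Look at each backend at most once, so an all-down pool fails fast instead of looping forever.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

With three backends, four calls return `app1`, `app2`, `app3`, `app1`; after `mark_down("app2")` the balancer skips that server until `mark_up` restores it.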

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
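To make unit testing concrete, here is a minimal sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical unit under test, not code from any particular project; each test method checks one behavior in isolation, including the error case. Kept in a CI pipeline, the same tests also serve as regression tests, since they fail if a later change breaks existing behavior.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce price by percent (0-100), rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    # Each test checks exactly one behavior of the unit.
    def test_typical_discount(self) -> None:
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self) -> None:
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_out_of_range_percent_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)
```

Saved as `test_discount.py` (a hypothetical file name), the suite runs with `python -m unittest test_discount`.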

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
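Latency, jitter, and packet loss testing usually comes down to a few calculations over per-packet data. The sketch below assumes you already have, for each RTP packet, the sender's timestamp and the local arrival time converted to milliseconds; the function names and sample values are hypothetical. Jitter follows the running estimate defined in RFC 3550, which smooths the change in transit time between consecutive packets with a gain of 1/16. Loss is estimated from gaps in sequence numbers and, for simplicity, ignores 16-bit sequence-number wraparound.

```python
def interarrival_jitter(send_times_ms: list[float], recv_times_ms: list[float]) -> float:
    """RFC 3550-style interarrival jitter estimate, in milliseconds.

    send_times_ms: sender timestamps of consecutive packets (already converted to ms)
    recv_times_ms: local arrival times of the same packets, in the same order
    """
    if len(send_times_ms) != len(recv_times_ms):
        raise ValueError("need one arrival time per sent packet")
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        # D(i-1, i): difference in transit time between this packet and the previous one.
        d = (recv_times_ms[i] - recv_times_ms[i - 1]) - (send_times_ms[i] - send_times_ms[i - 1])
        # Exponential smoothing with gain 1/16, as specified in RFC 3550.
        jitter += (abs(d) - jitter) / 16
    return jitter


def packet_loss_percent(seq_numbers: list[int]) -> float:
    """Share of packets missing between the lowest and highest sequence number seen."""
    if not seq_numbers:
        return 0.0
    expected = max(seq_numbers) - min(seq_numbers) + 1
    received = len(set(seq_numbers))  # duplicates count once
    return 100.0 * (expected - received) / expected
```

For packets sent every 20 ms that arrive at 100, 120, 145, and 160 ms, `interarrival_jitter` returns about 0.61 ms, and the sequence numbers `[1, 2, 4, 5]` give 20% loss.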

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

Best Shared Hosting Plans for Small Businesses

The success of a small business website depends heavily on the reliability of its web hosting service. With so many options available, choosing the best shared hosting can seem like a hard task, but the process becomes simpler once you understand your company's requirements and match them with the appropriate hosting options.

Whether you want a plan that is affordable, scalable, or backed by specialized technical support, it is essential to find a shared hosting plan for your small business that meets your objectives without sacrificing performance or security. Read on to learn how to choose the best shared hosting plan to advance your online presence in 2024.

Which shared hosting plan is most suitable for a small business?

Choosing the right web hosting plan is essential for small businesses that want to build a strong online presence. Given the many choices available, it helps to understand the main characteristics and advantages of each type of hosting before deciding. The most common types of hosting for small businesses are described below.

Windows Shared Hosting

Windows shared hosting is a web hosting service in which many websites are hosted on a single server running the Windows operating system. It is well suited to businesses that rely on Microsoft technologies such as ASP.NET, MSSQL, and Windows Server. Windows shared hosting offers advantages comparable to those of Linux shared hosting, such as affordability and ease of use.

However, because of the licensing costs associated with Microsoft technology, Windows hosting is often more expensive than Linux hosting. Windows hosting may also not support every Linux-based technology, so businesses that depend on specific Linux-based tools may need to consider other hosting solutions. Even so, Windows shared hosting remains a popular option for organizations looking for a hosting solution that is both dependable and easy to use.

Shared Linux hosting

Linux shared hosting is a type of web hosting service in which many websites are hosted on a single server running the Linux operating system. The websites on the server share resources such as disk space, bandwidth, and computing power, which keeps those resources well utilized. Linux shared hosting is a budget-friendly solution that offers the reliability and security the Linux operating system is well known for.

Because it is reasonably priced and offers a wide range of features, Linux shared hosting is an appealing choice for small business websites. One of its most significant advantages is support for popular programming languages such as PHP, Perl, and Python, which makes it an excellent option for small businesses planning to build dynamic, interactive websites or web applications.

Sharing server resources with the other websites hosted on the same server also helps keep prices down. Because the environment is shared, however, performance may suffer if other websites on the server experience heavy traffic or resource usage. Despite these limitations, Linux shared hosting remains an affordable and dependable option for small businesses that want to build a strong online presence.

WordPress Hosting

WordPress hosting is a specialized web hosting service designed exclusively for websites built on the WordPress content management system (CMS). It improves the performance, security, and manageability of WordPress websites, and there are several reasons it is considered one of the best hosting options for a small business. First, it provides a user-friendly interface, which makes it simple for small business owners without technical expertise to manage their websites.

This ease of use saves money, because businesses can manage their websites without dedicated IT staff. WordPress hosting also typically includes automatic updates and backups, which are vital for keeping a small business website secure and intact. These features give small business owners peace of mind by helping protect against data loss and cyber threats.

Reputable web hosting providers such as Dollar2Host often include enhanced security measures, such as malware detection and removal, firewalls, and distributed denial-of-service (DDoS) protection, to further protect WordPress websites. WordPress hosting is also scalable, so small businesses can easily expand their websites as their requirements grow, handling additional traffic and content without downtime or performance problems.

Cloud Hosting

Cloud hosting is a form of web hosting that serves websites from a network of virtual servers. This makes it scalable and flexible, because resources can be scaled up or down quickly as traffic changes. Because websites do not depend on a single physical server, cloud hosting also offers high levels of stability. Its combination of scalability and reliability makes it an excellent choice for small business websites.

Pricing is based on a pay-as-you-go model, so organizations pay only for the resources they actually use. Compared with traditional hosting, which often requires companies to pay for unused resources, this can reduce costs. Cloud hosting also offers security features such as regular data backups and built-in safeguards, which help protect websites against cyber threats and data loss. Overall, cloud hosting is a versatile and trustworthy option for small businesses trying to build a strong online presence.
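The "scale up or down with traffic" and pay-as-you-go ideas can be illustrated with a little arithmetic. The sketch below borrows the ratio used by the Kubernetes Horizontal Pod Autoscaler, `ceil(current * current_load / target_load)`, clamped to a minimum and maximum instance count; it leaves out that autoscaler's tolerance band and stabilization windows, and each cloud host applies its own scaling policy. The load figures and hourly rate are hypothetical placeholders.

```python
import math


def desired_instances(current: int, current_load: float, target_load: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Target-tracking rule: resize the pool so average load per instance moves toward target_load."""
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    # Same ratio as the Kubernetes HPA, without its tolerance band or stabilization window.
    desired = math.ceil(current * current_load / target_load)
    # Clamp to the range you are willing to run (and pay for).
    return max(min_instances, min(max_instances, desired))


def pay_as_you_go_cost(instances_per_hour: list[int], hourly_rate: float) -> float:
    """Bill only for the instance-hours actually used; hourly_rate is a hypothetical price."""
    return round(sum(instances_per_hour) * hourly_rate, 2)
```

For example, two instances averaging 90% CPU against a 60% target scale to three, while quiet hours shrink the pool back toward the minimum, and the bill only reflects the instance-hours that actually ran.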

Choosing the Right Hosting Plan for Your Small Business: What Should You Look for?

Several important factors should be considered when selecting a shared hosting plan for a small business to make sure it meets the company's requirements. The following guidelines can help you choose the best shared hosting plan for your small business:

Scalability

Check whether the web hosting plan supports scalability. As your company grows, you may need to upgrade your plan to handle increased traffic and data storage.

Security Features

Look for web hosting plans that provide a comprehensive set of security features, including free SSL certificates, DDoS protection, malware scanning, and regular backups. These features are essential for protecting your website and your customers' information.

Storage Space and Bandwidth

Consider how much bandwidth and storage space the shared hosting plan provides, and make sure it is enough for your website, given the size of your files and the volume of traffic you expect.
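A rough way to check whether a plan's bandwidth allowance is adequate is to multiply average page size by expected page views. The sketch below does this in Python; the 1.5× headroom for traffic spikes is an assumption, and the page size, visitor, and plan-limit figures are placeholders rather than recommendations.

```python
def monthly_bandwidth_gb(avg_page_size_mb: float, monthly_visitors: int,
                         pages_per_visit: float, headroom: float = 1.5) -> float:
    """Rough monthly data-transfer estimate in GB.

    headroom (default 1.5, an assumption) pads the estimate for traffic spikes;
    1 GB is taken as 1024 MB.
    """
    transfer_mb = avg_page_size_mb * monthly_visitors * pages_per_visit
    return round(transfer_mb * headroom / 1024, 1)


def plan_is_adequate(estimate_gb: float, plan_limit_gb: float) -> bool:
    """True if the estimate fits within the plan's advertised monthly bandwidth."""
    return estimate_gb <= plan_limit_gb
```

A site with 2 MB pages, 5,000 monthly visitors, and 4 pages per visit comes out at about 58.6 GB per month with headroom, which you can then compare against the limit stated in the plan.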

Personalized Email

Make sure the shared hosting plan you select lets you create personalized email addresses on your own domain. Using them in your communications adds professionalism and helps build your brand identity.

Customer Support

Look for shared hosting providers that offer dependable customer support, preferably 24/7. This is especially important if your website runs into problems that need immediate attention.

Reviews and Reputation

Research shared hosting providers for small businesses to learn what other users say about their services, and favor providers with a solid reputation for dependability, performance, and customer support.

Conclusion

Selecting the most suitable hosting for your small business is essential for building a strong online presence. Start by understanding your needs, then prioritize factors such as price, scalability, and security. Whether you host your website with WordPress hosting, Linux shared hosting, Windows shared hosting, or cloud hosting, choosing a trustworthy provider is essential.

Dollar2Host is a reliable hosting service provider that offers small businesses a variety of hosting options designed specifically for them. With features such as robust security, scalable resources, and good customer support, Dollar2Host may be an excellent choice for hosting your company's website.

Dollar2Host (Dollar2host.com): We provide expert web hosting services for your needs.

Expert Power Platform Services | Navignite LLP

Looking to streamline your business processes with custom applications? With over 10 years of extensive experience, our agency specializes in delivering top-notch Power Apps services that transform the way you operate. We harness the full potential of the Microsoft Power Platform to create solutions that are tailored to your unique needs.

Our Services Include:

Custom Power Apps Development: Building bespoke applications to address your specific business challenges.

Workflow Automation with Power Automate: Enhancing efficiency through automated workflows and processes.

Integration with Microsoft Suite: Seamless connectivity with SharePoint, Dynamics 365, Power BI, and other Microsoft tools.

Third-Party Integrations: Expertise in integrating Xero, QuickBooks, MYOB, and other external systems.

Data Migration & Management: Secure and efficient data handling using tools like XrmToolBox.

Maintenance & Support: Ongoing support to ensure your applications run smoothly and effectively.

Our decade-long experience includes working with technologies like Azure Functions, Custom Web Services, and SQL Server, ensuring that we deliver robust and scalable solutions.

Why Choose Us?

Proven Expertise: Over 10 years of experience in Microsoft Dynamics CRM and Power Platform.

Tailored Solutions: Customized services that align with your business goals.

Comprehensive Skill Set: Proficient in plugin development, workflow management, and client-side scripting.

Client-Centric Approach: Dedicated to improving your productivity and simplifying tasks.

Boost your productivity and drive innovation with our expert Power Apps solutions.

Contact us today to elevate your business to the next level!

#artificial intelligence#power platform#microsoft power apps#microsoft power platform#powerplatform#power platform developers#microsoft power platform developer#msft power platform#dynamics 365 platform

Cheap VPS Hosting Services in India – SpectraCloud

SpectraCloud provides cheap VPS hosting services in India for anyone looking for simple, cost-effective compute power for their projects. Its VPS hosting runs on SpectraCloud virtual machines, and multiple virtual server types are available for use cases ranging from personal websites to highly scalable applications such as video streaming and gaming. You can choose between shared CPU and dedicated CPU offerings based on your anticipated usage.

VPS hosting offers an optimal balance between affordability and performance, making it well suited to small and medium-sized enterprises. If you're looking for a trustworthy, cost-effective VPS hosting option in India, SpectraCloud stands out as a leading choice. With a range of VPS server plans designed to meet different business requirements, SpectraCloud guarantees excellent value for your investment.

What is VPS Hosting?

VPS hosting is a web hosting solution in which a single physical server is divided into several virtual servers. Each virtual server runs independently, providing the advantages of a dedicated server at a more affordable price. With VPS hosting, you can tailor your environment: modify server settings, install applications, and allocate resources based on your unique needs.

Why Choose VPS Hosting?

The main benefit of VPS hosting is its adaptability. Unlike shared hosting, where many websites use the same server resources, VPS hosting allocates dedicated resources to your site or application. This leads to improved performance, stronger security, and more control over server settings.

For companies in India, where budget considerations are typically crucial, VPS hosting presents an excellent choice. It provides a superior level of performance compared to shared hosting, all while avoiding the high expenses linked to dedicated servers.

SpectraCloud: Leading the Way in Low-Cost VPS Hosting in India

SpectraCloud has positioned itself as a leader in the VPS hosting market in India by offering affordable, high-quality VPS server plans. Its services cater to businesses of all sizes, from startups to established enterprises, with a range of options that fit different budgets and needs.

1. Variety of VPS Server Plans

SpectraCloud offers a wide range of VPS server plans, so there is something for everyone. Whether you're running a small website, an e-commerce platform, or a large-scale application, SpectraCloud has a plan to suit your needs. Its VPS plans are customizable, allowing you to choose the amount of RAM, storage, and processing power that fits your specific requirements. This flexibility means you pay only for what you need, making it an economical choice for businesses looking to optimize their hosting expenses.

2. Best VPS for Windows Hosting

For businesses that require a Windows environment, SpectraCloud offers the Best VPS for Windows Hosting in India. Windows VPS hosting is essential for running applications that require Windows Server, such as ASP.NET websites, Microsoft Exchange, and SharePoint. SpectraCloud's Windows VPS plans are designed for high performance and reliability, ensuring that your Windows-based applications run smoothly and efficiently.

Windows VPS hosting comes with the Windows operating system pre-installed, and you can choose from different versions depending on your needs. SpectraCloud also provides full administrator access, so you can configure your server the way you want.

3. Affordable and Low-Cost VPS Hosting

SpectraCloud's commitment to providing affordable VPS hosting is evident in its competitive pricing. The company understands that businesses need cost-effective solutions that do not compromise on quality. By offering low-cost VPS hosting plans, SpectraCloud ensures that businesses can access top-tier hosting services without breaking the bank.

Their low-cost VPS hosting plans start at prices that are accessible to even the smallest businesses. Despite the affordability, these plans come with robust features such as SSD storage, high-speed network connectivity, and advanced security measures. This combination of affordability and quality makes SpectraCloud a preferred choice for businesses seeking budget-friendly VPS Hosting in India.

Key Features of SpectraCloud VPS Hosting

1. High Performance and Reliability

SpectraCloud VPS hosting is built on powerful hardware and cutting-edge technology. Their servers are equipped with SSD storage, which ensures faster data retrieval and improved website loading times. With SpectraCloud, you can expect minimal downtime and consistent performance, which is crucial for maintaining the smooth operation of your business.

2. Full Root Access

One of the significant advantages of SpectraCloud VPS hosting is the full root access it provides. This means you have complete control over your server, allowing you to install software, configure settings, and manage your hosting environment according to your preferences. Full root access is particularly beneficial for businesses that need to customize their server to meet specific requirements.

3. Scalable Resources

As your business grows, your hosting needs will change. SpectraCloud offers scalable VPS hosting plans that let you upgrade your resources as needed. Whether you need more RAM, storage, or processing power, SpectraCloud makes it easy to scale up your VPS plan without experiencing any downtime. This scalability ensures that your hosting solution can grow with your business.

4. Advanced Security

Security is a top priority for SpectraCloud. Their VPS Hosting Plans come with advanced security features to protect your data and applications. This includes regular security updates, firewalls, and DDoS protection. By choosing SpectraCloud, you can rest assured that your business data is safe from cyber threats.

5. 24/7 Customer Support

SpectraCloud's customer support team is available 24/7 to assist you with any issues or questions you may have. Its knowledgeable, friendly support staff can help with everything from server setup to troubleshooting technical problems, so you always have someone to turn to if you encounter an issue with your VPS hosting.

Conclusion:

In a competitive market like India, finding the right VPS hosting provider can be tough. However, SpectraCloud stands out with a perfect balance of affordability, performance, and reliability. The company's diverse range of VPS server plans, coupled with its expertise in Windows VPS hosting and commitment to cost-effective solutions, makes it the first choice for businesses of all sizes.

Whether you're a startup looking for budget-friendly hosting options or an established enterprise in need of a scalable and reliable VPS solution, SpectraCloud has a plan to meet your needs. With robust features, advanced security, and excellent customer support, SpectraCloud ensures you have the hosting foundation you need for your business to succeed. Choose SpectraCloud for your VPS Hosting needs in India and experience the benefits of top-notch hosting services without spending a fortune.

#spectracloud#vps hosting#vps hosting services#vps server plans#web hosting services#hosting services provider#cheap hosting services#affordable hosting services#cheap vps server

The AI Power Conundrum: Will Renewables Save the Day?

The rapid advancement of artificial intelligence (AI) technologies is transforming industries and driving unprecedented innovation. However, the surge in AI applications comes with a significant challenge: the growing amount of electricity needed to run these systems. As AI adoption accelerates, the question arises: can renewable energy rise to meet these increasing energy needs?

Understanding the AI Power Demand

AI systems, particularly those involving deep learning and large-scale data processing, consume vast amounts of electricity. Data centers housing AI servers are notorious for their high energy requirements, often leading to increased carbon emissions. As AI continues to evolve, the demand for energy is expected to skyrocket, posing a substantial challenge for sustainability.

The Role of Renewable Energy

Renewable energy sources, such as solar, wind, and hydroelectric power, present a viable solution to the AI power conundrum. These sources produce clean, sustainable energy that can help offset the environmental impact of AI technologies. By harnessing renewable energy, tech companies can significantly reduce their carbon footprint while meeting their power needs.

Tech Giants Leading the Way

Several tech giants are already paving the way by integrating renewable energy into their operations. Companies like Google, Amazon, and Microsoft are investing heavily in renewable energy projects to power their data centers. For instance, Google has committed to operating on 24/7 carbon-free energy by 2030. These initiatives demonstrate the potential for renewable energy to support the massive energy demands of AI.

Challenges and Opportunities

While the integration of renewable energy is promising, it comes with its own set of challenges. Renewable energy sources can be intermittent, depending on weather conditions, which can affect their reliability. However, advancements in energy storage solutions and smart grid technologies are addressing these issues, ensuring a more stable and dependable supply of renewable energy.

The synergy between AI and renewable energy also presents unique opportunities. AI can optimize the performance of renewable energy systems, predict energy demand, and enhance energy efficiency. This symbiotic relationship has the potential to accelerate the adoption of renewable energy and create a more sustainable future.

Conclusion

The AI power conundrum is a pressing issue that demands innovative solutions. Renewable energy emerges as a crucial player in addressing this challenge, offering a sustainable path forward. As tech companies continue to embrace renewable energy, the future of AI power looks promising, with the potential to achieve both technological advancement and environmental sustainability.

By integrating renewable energy into the AI ecosystem, we can ensure that the growth of AI technologies does not come at the expense of our planet. The collaboration between AI and renewable energy is not just a possibility; it is a necessity for a sustainable future.


Exploring Kerberos and its related attacks

Introduction

In the world of cybersecurity, authentication is the linchpin upon which secure communications and data access rely. Kerberos, a network authentication protocol developed by MIT, has played a pivotal role in securing networks, particularly in Microsoft Windows environments. In this in-depth exploration of Kerberos, we'll delve into its technical intricacies, vulnerabilities, and the countermeasures that can help organizations safeguard their systems.

Understanding Kerberos: The Fundamentals

At its core, Kerberos is designed to provide secure authentication for users and services over an untrusted network, such as the internet. It relies on a trusted third party, the Key Distribution Center (KDC), and on symmetric-key cryptography: a user proves their identity once and then receives time-limited tickets, so passwords never have to cross the network. To grasp its inner workings, let's break down Kerberos into its key components:

1. Authentication Server (AS)

The AS is the initial point of contact for authentication. When a user signs in, the AS verifies their identity and, if authentication succeeds, issues a Ticket Granting Ticket (TGT).

2. Ticket Granting Server (TGS)

Once a user has a TGT, they can request access to various services without re-entering their credentials. The TGS validates the TGT and issues a service ticket for the requested resource.

3. Realm

A realm in Kerberos represents a security domain. It defines a specific set of users, services, and authentication servers that share a common Kerberos database.

4. Service Principal

A service principal represents a network service (e.g., a file server or email server) within the realm. Each service principal has its own long-term secret key, which is shared only with the KDC.
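Principals are conventionally written as name components separated by "/", followed by "@" and the realm, for example HTTP/web01.example.com@EXAMPLE.COM for a web service or alice@EXAMPLE.COM for a user. The minimal Python sketch below, using the hypothetical names `Principal` and `parse_principal`, splits such a name into its parts. It ignores the escaping of "/" and "@" that real implementations support, and its notion of a "service" (more than one name component) is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    components: tuple  # e.g. ("HTTP", "web01.example.com")
    realm: str         # e.g. "EXAMPLE.COM"

    def is_service(self) -> bool:
        # Simplification: treat any multi-component name (service/host) as a service.
        return len(self.components) > 1


def parse_principal(text: str) -> Principal:
    """Split 'comp1/comp2@REALM' into components and realm (no escape handling)."""
    name, sep, realm = text.rpartition("@")
    if not sep or not name or not realm:
        raise ValueError(f"missing name or realm in {text!r}")
    return Principal(tuple(name.split("/")), realm)


p = parse_principal("HTTP/web01.example.com@EXAMPLE.COM")
print(p.components, p.realm, p.is_service())  # ('HTTP', 'web01.example.com') EXAMPLE.COM True
```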

Vulnerabilities in Kerberos

While Kerberos is a robust authentication protocol, it is not immune to vulnerabilities and attacks. Understanding these vulnerabilities is crucial for securing a network environment that relies on Kerberos for authentication.

1. AS-REP Roasting

AS-REP Roasting is a common attack that exploits weak account settings. When Kerberos pre-authentication is disabled for an account, an attacker can request a TGT for that account without proving knowledge of its password. Part of the KDC's reply (the AS-REP) is encrypted with a key derived from the account's password, so the attacker can brute-force that part offline to recover the plaintext password.
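Defenders can look for accounts exposed to AS-REP Roasting before an attacker does. In Active Directory, disabling pre-authentication sets the DONT_REQUIRE_PREAUTH bit (0x400000) in an account's userAccountControl attribute. The sketch below assumes those values have already been exported into a dictionary (the sample data and the `roastable_accounts` helper are hypothetical) and simply tests the bit.

```python
# userAccountControl bit that disables Kerberos pre-authentication
# (UF_DONT_REQUIRE_PREAUTH in Active Directory).
DONT_REQUIRE_PREAUTH = 0x400000


def roastable_accounts(accounts: dict) -> list:
    """Return account names whose userAccountControl disables pre-authentication."""
    return sorted(name for name, uac in accounts.items() if uac & DONT_REQUIRE_PREAUTH)


# Hypothetical export of account names and their userAccountControl values.
sample = {
    "alice": 0x200,                  # NORMAL_ACCOUNT
    "legacy-svc": 0x200 | 0x400000,  # pre-authentication disabled
    "bob": 0x200 | 0x10000,          # DONT_EXPIRE_PASSWORD, not relevant here
}
print(roastable_accounts(sample))  # ['legacy-svc']
```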

2. Pass-the-Ticket Attacks

In a Pass-the-Ticket attack, an attacker steals a TGT or service ticket and uses it to impersonate a legitimate user or service. This attack can lead to unauthorized access and privilege escalation.

3. Golden Ticket Attacks

A Golden Ticket attack allows an attacker to forge TGTs, granting them unrestricted access to the domain. To execute this attack, the attacker needs the Key Distribution Center's (KDC) long-term secret key, which in Active Directory is the key of the krbtgt account.

4. Silver Ticket Attacks

Silver Ticket attacks target specific services or resources. Attackers forge service tickets to access a particular resource without knowing the user's password; to do so, they need the target service's long-term key, because service tickets are encrypted with it.

Technical Aspects and Formulas

To gain a deeper understanding of Kerberos and its related attacks, let's delve into some of the technical aspects and cryptographic formulas that underpin the protocol:

1. Kerberos Authentication Flow

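The Kerberos authentication process consists of three exchanges: the AS exchange, in which the client obtains a TGT; the TGS exchange, in which it trades the TGT for a service ticket; and the client/server (AP) exchange, in which it presents the service ticket to the service. Each step relies on cryptographic algorithms such as DES, AES, and HMAC for encryption and integrity protection.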
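To make the three exchanges concrete, here is a toy, stdlib-only Python simulation. It is a sketch under loud assumptions: the `seal`/`unseal` cipher (a SHA-256 keystream plus an HMAC tag) stands in for real Kerberos encryption types and is not secure, long-term keys are random rather than password-derived, message fields are heavily simplified, and every name (`KDC_DB`, `as_exchange`, `tgs_exchange`, `service_accept`) is hypothetical.

```python
import hashlib
import hmac
import json
import os
import time

# TOY cipher for illustration only: SHA-256 keystream XOR plus an HMAC-SHA256 tag.
# Real Kerberos uses standardized encryption types such as AES with HMAC-SHA1.


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    out, counter = b"", 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def seal(key: bytes, obj: dict) -> bytes:
    nonce = os.urandom(16)
    plain = json.dumps(obj).encode()
    cipher = bytes(a ^ b for a, b in zip(plain, _keystream(key, nonce, len(plain))))
    return nonce + cipher + hmac.new(key, nonce + cipher, hashlib.sha256).digest()


def unseal(key: bytes, blob: bytes) -> dict:
    nonce, cipher, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, hmac.new(key, nonce + cipher, hashlib.sha256).digest()):
        raise ValueError("integrity check failed: wrong key or tampered data")
    plain = bytes(a ^ b for a, b in zip(cipher, _keystream(key, nonce, len(cipher))))
    return json.loads(plain)


# Hypothetical KDC database of long-term keys (random here, not password-derived).
TGS = "krbtgt/EXAMPLE.COM@EXAMPLE.COM"
KDC_DB = {
    "alice@EXAMPLE.COM": os.urandom(32),
    TGS: os.urandom(32),
    "HTTP/web01.example.com@EXAMPLE.COM": os.urandom(32),
}


def as_exchange(client: str):
    """AS: TGT sealed with the TGS key, plus the client/TGS session key sealed with the client key."""
    sk = os.urandom(32).hex()
    tgt = seal(KDC_DB[TGS], {"client": client, "key": sk, "expires": time.time() + 36000})
    return tgt, seal(KDC_DB[client], {"key": sk})


def tgs_exchange(tgt: bytes, authenticator: bytes, service: str):
    """TGS: open the TGT, check the authenticator, issue a service ticket."""
    tkt = unseal(KDC_DB[TGS], tgt)
    sk = bytes.fromhex(tkt["key"])
    if unseal(sk, authenticator)["client"] != tkt["client"] or time.time() > tkt["expires"]:
        raise PermissionError("bad authenticator or expired TGT")
    svc_sk = os.urandom(32).hex()
    st = seal(KDC_DB[service], {"client": tkt["client"], "key": svc_sk, "expires": tkt["expires"]})
    return st, seal(sk, {"key": svc_sk})


def service_accept(service: str, st: bytes, authenticator: bytes) -> str:
    """AP exchange: the service opens the ticket with its own key and checks the authenticator."""
    tkt = unseal(KDC_DB[service], st)
    if unseal(bytes.fromhex(tkt["key"]), authenticator)["client"] != tkt["client"]:
        raise PermissionError("authenticator does not match ticket")
    return tkt["client"]


# Client side of the three exchanges.
alice, web = "alice@EXAMPLE.COM", "HTTP/web01.example.com@EXAMPLE.COM"
tgt, as_part = as_exchange(alice)
tgs_key = bytes.fromhex(unseal(KDC_DB[alice], as_part)["key"])  # client uses its long-term key
st, tgs_part = tgs_exchange(tgt, seal(tgs_key, {"client": alice, "time": time.time()}), web)
svc_key = bytes.fromhex(unseal(tgs_key, tgs_part)["key"])
who = service_accept(web, st, seal(svc_key, {"client": alice, "time": time.time()}))
print(who)  # alice@EXAMPLE.COM
```

Note that `service_accept` trusts any ticket that opens with the service's own key. An attacker who holds that key can seal a ticket naming any client, which is the Silver Ticket attack described above; holding the TGS (krbtgt) key would likewise allow forging TGTs, the Golden Ticket attack.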

2. Ticket Granting Ticket (TGT) Structure

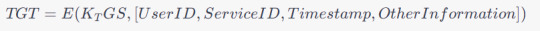

A TGT typically contains the user's identity, the name of the TGS it is issued for, timestamps that bound its validity, and a session key for the client and the TGS, all encrypted with the TGS's secret key (in Active Directory, the krbtgt account's key).
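In the textbook notation often used to describe Kerberos, where E_K(...) means encryption under key K, the TGT and the AS reply that delivers it can be written as below. Here ID_c is the client's identity, ID_tgs the TGS name, TS a timestamp, K_{c,tgs} the session key, K_tgs the TGS's secret key, and K_c the client's long-term key. This is a simplified model rather than the RFC 4120 wire format, which also carries flags, realm names, and authorization data.

```latex
\begin{aligned}
\mathrm{TGT} &= E_{K_{tgs}}\big(ID_c,\ ID_{tgs},\ TS,\ \mathit{Lifetime},\ K_{c,tgs}\big) \\
\text{AS} \to C &:\quad \mathrm{TGT},\ E_{K_c}\big(K_{c,tgs},\ ID_{tgs},\ TS,\ \mathit{Lifetime}\big)
\end{aligned}
```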

3. Encryption Keys

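Kerberos relies on two kinds of keys. Each principal has a long-term key; for users it is derived from the password by a string-to-key function (for the AES encryption types, PBKDF2 applied to the password with a salt built from the realm and principal name). Session keys, by contrast, are generated randomly by the KDC for each exchange.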
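As an illustration of the first step of that derivation, the sketch below follows RFC 3962 (AES encryption for Kerberos 5): PBKDF2-HMAC-SHA1 over the password, using the realm concatenated with the principal name as the default salt and the default of 4096 iterations. It deliberately stops before the final derivation step RFC 3962 applies to produce the actual key, so its output is not a usable Kerberos key; the helper name `aes256_tkey` and the sample password are hypothetical.

```python
import hashlib


def aes256_tkey(password: str, realm: str, principal: str) -> bytes:
    """PBKDF2 stage of the RFC 3962 AES-256 string-to-key (final DK step omitted).

    `principal` is the principal's name components concatenated without separators,
    which together with the realm forms the default salt.
    """
    salt = (realm + principal).encode("utf-8")
    return hashlib.pbkdf2_hmac("sha1", password.encode("utf-8"), salt, 4096, dklen=32)


k1 = aes256_tkey("Summer2024!", "EXAMPLE.COM", "alice")
k2 = aes256_tkey("Summer2024!", "EXAMPLE.COM", "bob")
print(len(k1), k1 != k2)  # 32 True
```

Because the salt includes the principal name, two accounts that share a password still get different keys. The iterated PBKDF2 also raises the cost of each guess in an offline attack, but it does not rescue a weak password.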

Mitigating Kerberos Vulnerabilities

To protect against Kerberos-related vulnerabilities and attacks, organizations can implement several strategies and countermeasures:

1. Enforce Strong Password Policies

Strong password policies can mitigate attacks like AS-REP Roasting, which depend on cracking passwords offline. Ensure that users create complex, difficult-to-guess passwords, and require Kerberos pre-authentication for every account.

2. Implement Multi-Factor Authentication (MFA)

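MFA adds an extra layer of security by requiring users to provide multiple forms of authentication. This makes attacks that depend on a guessed or cracked password much harder, although it does not protect tickets that have already been issued.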

3. Regularly Rotate Encryption Keys

Frequent rotation of long-term keys, such as service account passwords and the krbtgt account's key, limits how long stolen keys and forged tickets remain usable. Implement a key rotation policy and ensure it aligns with best practices.

4. Monitor and Audit Kerberos Traffic

Continuous monitoring and auditing of Kerberos traffic can help detect and respond to suspicious activities. Utilize security information and event management (SIEM) tools for this purpose.

5. Segment and Isolate Critical Systems

Isolating sensitive systems from less-trusted parts of the network can reduce the risk of lateral movement by attackers who compromise one system.

6. Patch and Update

Regularly update and patch your Kerberos implementation to mitigate known vulnerabilities and stay ahead of emerging threats.

4. Kerberos Encryption Algorithms

Kerberos employs various encryption algorithms to protect data during authentication and ticket issuance. Common cryptographic algorithms include:

DES (Data Encryption Standard): Historically used, but now considered weak because its 56-bit key is small enough to brute-force.

3DES (Triple DES): An improvement over DES, it applies the DES encryption algorithm three times to enhance security.

AES (Advanced Encryption Standard): A strong symmetric encryption algorithm, widely used in modern Kerberos implementations for better security.

HMAC (Hash-based Message Authentication Code): Used for message integrity, HMAC ensures that messages have not been tampered with during transmission.
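In Active Directory, the encryption types an account supports are recorded as bit flags in its msDS-SupportedEncryptionTypes attribute. The sketch below decodes that value and lists the types treated as weak. It assumes the flag values documented in Microsoft's MS-KILE specification, counts RC4-HMAC (a common Windows encryption type not listed above) among the weak ones as a judgment call, and does not model an unset attribute, where domain controller defaults apply. The helper names are hypothetical.

```python
# Bit flags of the msDS-SupportedEncryptionTypes attribute (per MS-KILE).
ETYPE_FLAGS = {
    0x01: "DES-CBC-CRC",
    0x02: "DES-CBC-MD5",
    0x04: "RC4-HMAC",
    0x08: "AES128-CTS-HMAC-SHA1-96",
    0x10: "AES256-CTS-HMAC-SHA1-96",
}
# Treated as weak in this sketch (an assessment, not a protocol rule).
WEAK = {"DES-CBC-CRC", "DES-CBC-MD5", "RC4-HMAC"}


def decode_etypes(value: int) -> list:
    """List the encryption types whose bits are set, in flag order."""
    return [name for bit, name in ETYPE_FLAGS.items() if value & bit]


def weak_etypes(value: int) -> list:
    return [name for name in decode_etypes(value) if name in WEAK]


print(decode_etypes(0x1C))  # ['RC4-HMAC', 'AES128-CTS-HMAC-SHA1-96', 'AES256-CTS-HMAC-SHA1-96']
print(weak_etypes(0x18))    # []
```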

5. Key Distribution Center (KDC)

The KDC is the heart of the Kerberos authentication system. It consists of two components: the Authentication Server (AS) and the Ticket Granting Server (TGS). The AS handles initial authentication requests and issues TGTs, while the TGS validates these TGTs and issues service tickets. This split means the user's password-derived key is needed only once, at the initial AS exchange; later requests rely on the TGT and its session key, which limits how often the long-term key is exposed.

6. Salting and Nonces

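Kerberos uses salts and nonces for different purposes. A salt, which by default is derived from the realm and principal name rather than chosen at random, is combined with the password during key derivation so that the same password yields different keys for different principals, which defeats precomputed dictionary tables. Nonces are random values included in each request to the KDC and echoed back in the encrypted reply, so the client can confirm that a reply belongs to its own request. Replay of requests to services is countered separately by timestamped authenticators and a replay cache.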
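The replay-cache side of this can be sketched as follows: a service accepts an authenticator only if its timestamp lies within the allowed clock skew and the same authenticator has not been seen before. This `ReplayCache` class is hypothetical and simplified; real implementations key on more fields (such as the microsecond part of the timestamp and the server name), and the 5-minute skew is an assumed default.

```python
import time
from typing import Optional

MAX_SKEW = 300.0  # seconds; 5 minutes is a common default clock-skew tolerance


class ReplayCache:
    """Hypothetical, simplified service-side replay check for authenticators."""

    def __init__(self, max_skew: float = MAX_SKEW):
        self.max_skew = max_skew
        self.seen = {}  # (client, ctime) -> time after which the entry may be dropped

    def accept(self, client: str, ctime: float, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        # Entries past their expiry would fail the skew check anyway, so drop them.
        self.seen = {k: exp for k, exp in self.seen.items() if exp >= now}
        if abs(now - ctime) > self.max_skew:
            return False  # timestamp outside the allowed clock skew
        if (client, ctime) in self.seen:
            return False  # identical authenticator already seen: treat as a replay
        self.seen[(client, ctime)] = ctime + self.max_skew
        return True


cache = ReplayCache()
t = 1_000_000.0
print(cache.accept("alice@EXAMPLE.COM", t, now=t + 1))      # True
print(cache.accept("alice@EXAMPLE.COM", t, now=t + 2))      # False: replay
print(cache.accept("bob@EXAMPLE.COM", t - 600, now=t + 2))  # False: too old
```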

Now, let's delve into further Kerberos vulnerabilities and their technical aspects:

7. Ticket-Granting Ticket (TGT) Expiry Time

By default, TGTs have a relatively long lifetime (10 hours in a default Active Directory domain), which attackers can exploit if they steal and reuse them. Administrators should consider reducing TGT lifetimes to mitigate this risk.

8. Ticket Granting Ticket Renewal

Kerberos allows a renewable TGT to be renewed without re-entering the password. While convenient, this feature can be abused by attackers who capture a TGT, since they can keep renewing it. Shortening the maximum renewal lifetime (the ticket's renew-till time, 7 days by default in Active Directory) limits how long a stolen TGT stays usable.
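The interaction between lifetime and renewal can be sketched in a few lines: each renewal moves the expiry forward, but never past the renew-till limit, and an expired ticket cannot be renewed. The `Ticket`, `issue`, and `renew` names are hypothetical, the 10-hour and 7-day values are assumed Active Directory defaults, and the model uses a fixed lifetime per renewal while ignoring other ticket fields and flags.

```python
from dataclasses import dataclass, replace

HOUR = 3600.0
DAY = 24 * HOUR


@dataclass(frozen=True)
class Ticket:
    start: float
    end: float         # the ticket stops being valid here unless it is renewed
    renew_till: float  # absolute limit: renewals never push `end` past this


def issue(now: float, lifetime: float = 10 * HOUR, renewable_for: float = 7 * DAY) -> Ticket:
    return Ticket(start=now, end=now + lifetime, renew_till=now + renewable_for)


def renew(t: Ticket, now: float, lifetime: float = 10 * HOUR) -> Ticket:
    if now > t.end:
        raise ValueError("ticket has expired and can no longer be renewed")
    # The new expiry is capped at renew_till, however often the ticket is renewed.
    return replace(t, start=now, end=min(now + lifetime, t.renew_till))


t = issue(0.0)
t = renew(t, now=9 * HOUR)
print(t.end / HOUR)  # 19.0
```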

9. Service Principal Name (SPN) Abuse

Attackers may exploit misconfigured SPNs to impersonate legitimate services. Regularly review and audit SPNs to ensure they are correctly associated with the intended services.
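One concrete audit is to look for the same SPN registered on more than one account, which can break authentication or cause tickets to be issued for the wrong account. The sketch below assumes a hypothetical export mapping account names to their SPNs, and comparing SPNs case-insensitively is an assumption of this sketch; `duplicate_spns` is an illustrative helper. On Windows, `setspn -X` performs a similar duplicate check.

```python
from collections import defaultdict


def duplicate_spns(spns_by_account: dict) -> dict:
    """Map each SPN registered on more than one account to the accounts holding it."""
    owners = defaultdict(set)  # normalized SPN -> accounts that register it
    for account, spns in spns_by_account.items():
        for spn in spns:
            owners[spn.lower()].add(account)  # case-insensitive comparison (assumption)
    return {spn: sorted(accts) for spn, accts in owners.items() if len(accts) > 1}


# Hypothetical export of accounts and their SPNs.
accounts = {
    "svc-web": ["HTTP/web01.example.com", "HTTP/web01"],
    "svc-old": ["http/WEB01.example.com"],
    "svc-sql": ["MSSQLSvc/db01.example.com:1433"],
}
print(duplicate_spns(accounts))  # {'http/web01.example.com': ['svc-old', 'svc-web']}
```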

10. Kerberoasting

Kerberoasting is an attack in which an attacker, using any authenticated domain account, requests service tickets for accounts that have SPNs registered and then brute-forces them offline, because each ticket is encrypted with a key derived from the service account's password. Robust password policies and regular rotation of service account passwords can help mitigate this risk.
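On the detection side, a common heuristic is to watch Windows Security event 4769 (a Kerberos service ticket was requested) for tickets issued with the RC4-HMAC encryption type (0x17), since attackers often request RC4 tickets because they are much faster to crack than AES ones. The sketch assumes events have already been parsed into dictionaries whose field names mirror the event schema (`TargetUserName`, `ServiceName`, `TicketEncryptionType`, with the type as an integer); the field handling, the threshold, and the `suspicious_rc4_requests` helper are hypothetical and should be checked against your own log pipeline.

```python
from collections import Counter

RC4_HMAC = 0x17  # Kerberos encryption type 23


def suspicious_rc4_requests(events: list, threshold: int = 3) -> dict:
    """Count RC4 service-ticket requests per requesting account; keep those >= threshold."""
    counts = Counter()
    for e in events:
        if e.get("EventID") != 4769 or e.get("TicketEncryptionType") != RC4_HMAC:
            continue
        svc = e.get("ServiceName", "")
        if svc.lower() == "krbtgt" or svc.endswith("$"):
            continue  # skip TGT-related requests and computer accounts, which are noisy
        counts[e.get("TargetUserName", "?")] += 1
    return {user: n for user, n in counts.items() if n >= threshold}


# Hypothetical parsed events: one account requesting RC4 tickets for many services.
events = [
    {"EventID": 4769, "TargetUserName": "mallory", "ServiceName": f"svc-{i}", "TicketEncryptionType": 0x17}
    for i in range(5)
]
events.append({"EventID": 4769, "TargetUserName": "alice", "ServiceName": "svc-web", "TicketEncryptionType": 0x12})
print(suspicious_rc4_requests(events))  # {'mallory': 5}
```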

11. Silver Ticket and Golden Ticket Attacks

To defend against Silver and Golden Ticket attacks, it's essential to implement strong password policies, limit privileges of service accounts, and monitor for suspicious behavior, such as unusual access patterns.

12. Kerberos Constrained Delegation

Kerberos Constrained Delegation allows a service to impersonate a user to access other services. Misconfigurations can lead to security vulnerabilities, so careful planning and configuration are essential.

Mitigation strategies to counter these vulnerabilities include:

13. Shorter Ticket Lifetimes

Reducing the lifespan of TGTs and service tickets limits the window of opportunity for attackers to misuse captured tickets.

14. Regular Password Changes

Frequent password changes for service accounts and users can thwart offline attacks and reduce the impact of credential compromise.

15. Least Privilege Principle

Implement the principle of least privilege for service accounts, limiting their access only to the resources they need, and monitor for unusual access patterns.

16. Logging and Monitoring

Comprehensive logging and real-time monitoring of Kerberos traffic can help identify and respond to suspicious activities, including repeated failed authentication attempts.
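As an example of such monitoring, the sketch below flags accounts with a burst of Windows Security event 4771 (Kerberos pre-authentication failed) inside a sliding time window. The event dictionaries, the 10-minute window, the threshold of five failures, and the `burst_failures` helper are all assumptions for illustration; in practice a SIEM rule would play this role.

```python
from collections import defaultdict, deque


def burst_failures(events: list, window: float = 600.0, threshold: int = 5) -> list:
    """Accounts with at least `threshold` 4771 events within any `window` seconds."""
    recent = defaultdict(deque)  # account -> timestamps of recent failures
    flagged = set()
    for e in sorted(events, key=lambda ev: ev["time"]):
        if e["EventID"] != 4771:
            continue
        q = recent[e["TargetUserName"]]
        q.append(e["time"])
        # Drop failures that fell out of the sliding window (the newest entry always stays).
        while q[0] <= e["time"] - window:
            q.popleft()
        if len(q) >= threshold:
            flagged.add(e["TargetUserName"])
    return sorted(flagged)


events = [{"EventID": 4771, "TargetUserName": "bob", "time": 30.0 * i} for i in range(6)]
events += [{"EventID": 4771, "TargetUserName": "alice", "time": t} for t in (0.0, 5000.0)]
print(burst_failures(events))  # ['bob']
```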

Kerberos Delegation: A Technical Deep Dive

1. Understanding Delegation in Kerberos

Kerberos delegation allows a service to act on behalf of a user to access other services without requiring the user to reauthenticate for each service. This capability enhances the efficiency and usability of networked applications, particularly in complex environments where multiple services need to interact on behalf of a user.

2. Types of Kerberos Delegation

Kerberos delegation can be categorized into two main types:

Constrained Delegation: This type of delegation restricts which services a service can access on behalf of a user. Administrators specify the exact target services to which a given service may delegate the user's identity.

Unconstrained Delegation: In contrast, unconstrained delegation grants the service full delegation rights, enabling it to access any service on behalf of the user without restrictions. Unconstrained delegation poses higher security risks and is generally discouraged.
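The difference between the two types can be reduced to a policy check made when a service asks for a ticket on a user's behalf. In the hypothetical sketch below, `allowed_targets` plays the role of the Active Directory msDS-AllowedToDelegateTo attribute used for constrained delegation; the `ServiceAccount` and `may_delegate` names are illustrative only, and real KDC logic has more cases than this.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceAccount:
    name: str
    unconstrained: bool = False  # trusted to delegate to any service
    allowed_targets: set = field(default_factory=set)  # constrained: explicit target SPNs


def may_delegate(svc: ServiceAccount, target_spn: str) -> bool:
    """Simplified decision: may `svc` obtain a ticket to `target_spn` for a user?"""
    if svc.unconstrained:
        return True  # any target at all, which is why unconstrained delegation is risky
    return target_spn in svc.allowed_targets  # constrained: only the listed targets


web = ServiceAccount("svc-web", allowed_targets={"MSSQLSvc/db01.example.com:1433"})
legacy = ServiceAccount("svc-legacy", unconstrained=True)
print(may_delegate(web, "MSSQLSvc/db01.example.com:1433"))  # True
print(may_delegate(web, "cifs/fs01.example.com"))           # False
print(may_delegate(legacy, "cifs/fs01.example.com"))        # True
```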

3. How Delegation Works

Here's a step-by-step breakdown of how delegation occurs within the Kerberos authentication process:

Initial Authentication: The user logs in and obtains a Ticket Granting Ticket (TGT) from the Authentication Server (AS).

Request to Access a Delegated Service: The user requests access to a service that supports delegation.

Service Ticket Request: The user's client requests a service ticket from the Ticket Granting Server (TGS) to access the delegated service. When that service is trusted for unconstrained delegation, the client also obtains a forwarded copy of its TGT.

Service Access: The client presents the service ticket to the delegated service along with the forwarded TGT, which is protected by the session key the client shares with that service. The service decrypts the service ticket using its secret key, recovers that session key, and obtains the user's TGT.

Secondary Authentication: The delegated service can then use the user's TGT to authenticate to other services on behalf of the user without the user's direct involvement. This secondary authentication occurs transparently to the user.

4. Delegation and Impersonation

Kerberos delegation can be seen as a form of impersonation: the delegated service effectively impersonates the user to access other services. This impersonation is controlled because the service can act only with credentials it legitimately received, either a TGT forwarded by the user's client (unconstrained delegation) or tickets the KDC is configured to issue to it on the user's behalf (constrained delegation).

5. Delegation in Multi-Tier Applications

Kerberos delegation is particularly useful in multi-tier applications, where multiple services are involved in processing a user's request. It allows a front-end service to securely delegate authentication to a back-end service on behalf of the user.

6. Protocol Extensions for Delegation

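Microsoft's Service-for-User (S4U) extensions enable a service to obtain service tickets on behalf of a user without holding the user's TGT. S4U2Self lets the service get a ticket to itself for the user, and S4U2Proxy lets it exchange that ticket for a ticket to another service, within the limits set by constrained delegation. These extensions are valuable when the delegated service cannot obtain the user's TGT directly.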

7. Benefits of Kerberos Delegation

Efficiency: Delegation eliminates the need for the user to repeatedly authenticate to access multiple services, improving the user experience.

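Security: Delegation builds on Kerberos authentication, so users never hand their passwords to intermediate services. Its safety still depends on careful configuration, which is why constrained delegation is preferred over unconstrained delegation.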