#LLMs (Large Language Models)


Text

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

ChatGPT, the industry-leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and AI doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on its output? If I have to fact-check everything it says I might as well do the work myself.

For "real" AI that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which OpenAI is not working on, and seemingly no other AI companies are either.

OpenAI has seemingly slurped up all the data from the open web already. ChatGPT 5 would take 5x more training data than ChatGPT 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if ChatGPT 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support AI, what trillion dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital and it's unclear if OpenAI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current AI is a solution to. Consumer tech is basically solved, normal people don't need more tech than a laptop and a smartphone. Big tech have run out of innovations, and they are desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

AI hasn't materially improved since the launch of ChatGPT 4, which wasn't that big of an upgrade to 3.

There is currently no technological roadmap for AI to become better than it is. (As Jim Covello said in the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology and nobody has indicated there is any way to solve them in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

5K notes


Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein

2K notes


Text

I'm trying to debug a fairly subtle syntax error in a customer inventory report, and out of sheer morbid curiosity I decided to see what my SQL syntax checker's shiny new "Fix Syntax With AI" feature had to say about it.

After "thinking" about it for nearly a full minute, it produced the following:

SELECT SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION SELECT COUNT(id) FROM customers WHERE customers.deleted = 0 AND customers.id = NULL UNION

I suspect my day job isn't in peril any time soon.

2K notes


Text

#COPILOT#TECHNOLOGY#AI#ARTIFICIAL INTELLIGENCE#MACHINE LEARNING#LARGE LANGUAGE MODEL#LARGE LANGUAGE MODELS#LLM#LLMS#MICROSOFT#TERRA FIRMA#COMPUTER#COMPUTERS#CODE#COMPUTER CODE#EARTH#PLANET EARTH

193 notes


Text

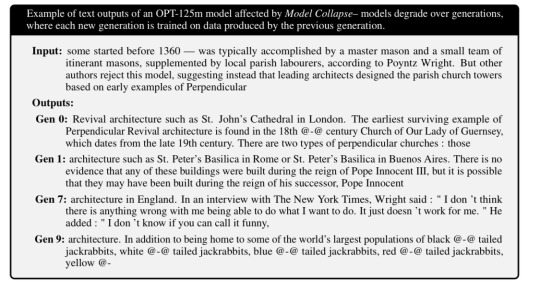

Training large language models on the outputs of previous large language models leads to degraded results. As all the nuance and rough edges get smoothed away, the result is less diversity, more bias, and …jackrabbits?

#neural networks#large language models#llm#internet training data#jackrabbits#is this the singularity#I have already made the joke about low botground data#ai eats itself#when grifters fill the internet with ai generated seo sludge

749 notes


Text

Yet Another Thing Black women and BIPOC women in general have been warning you about since forever that you (general You; societal You; mostly WytFolk You) have ignored or dismissed, only for it to come back and bite you in the butt.

I'd hoped people would have learned their lesson with Trump and the Alt-Right (remember, Black women in particular warned y'all that attacks on us by brigading trolls were the test run for something bigger), but I guess not.

Any time you wanna get upset about how AI is ruining things for artists or writers or workers at this job or that, remember that BIPOC Women Warned You and then go listen extra hard to the BIPOC women in your orbit and tell other people to listen to BIPOC women and also give BIPOC women money.

I'm not gonna sugarcoat it.

Give them money via PayPal or Ko-fi or Venmo or Patreon or whatever. Hire them. Suggest them for that creative project/gig you can't take on--or you could take it on but how about you toss the ball to someone who isn't always asked?

Oh, and stop asking BIPOC women to save us all. Because, as you see, we tried that already. We gave you the roadmap on how to do it yourselves. Now? We're tired.

Of the trolls, the alt-right, the colonizers, the tech bros, the billionaires, the other scum... and also you. You who claim to be progressive, claim to be an ally, spend your time talking about what sucks without doing one dang thing to boost the signal, make a change in your community (online or offline), or take even the shortest turn standing on the front lines and challenging all that human garbage that keeps collecting in the corners of every space with more than 10 inhabitants.

We Told You. Octavia Butler Told You. Audre Lorde Told You. Sydette Harry Told You. Mikki Kendall Told You. Timnit Gebru Told You.

When are you gonna listen?

#tw: alt-right#tw: alt right#AI#generative AI#LLM#large language models#GamerGate#online harassment#Black women#BIPOC#women of color

548 notes


Text

The question shouldn't be how DeepSeek made such a good LLM with so little money and resources.

The question should be how OpenAI, Meta, Microsoft, Google, and Apple all made such bad LLMs with so much money and resources.

#rambles#ai#fuck ai#gen ai#fuck gen ai#deepseek#chatgpt#openai#llm#large language model#apple#fuck apple#fuck openai#meta#fuck meta#microsoft#fuck microsoft#google#fuck google#greed#corporate america#eat the rich

51 notes


Text

youtube

I'm doing something I don't usually do, posting a link to YouTube, to Stephen Fry reading a letter about Large Language Models, popularly if incorrectly known as AI. In this case the discussion is about ChatGPT but the letter he reads, written by Nick Cave, applies to the others as well. The essence of art, music, writing and other creative endeavors, from embroidery to photography to games to other endeavors large and small is the time and care and love that some human being or beings have put into it. Without that you can create a commodity, yes, but you can't create meaning, the kind of meaning that nurtures us each time the results of creativity, modern or of any time, pass among us. That meaning which we share among all of us is the food of the human spirit and we need it just as we need the food we give to our bodies.

118 notes


Text

Be Aware of AI Images (and don't reblog them)

A lot of aesthetic blogs have been pivoting to creating and RTing AI-generated "artwork," and I'm asking tumblr, with all my heart, to please ice them out.

Yes, even if it's your aesthetic. AI not only steals from artists, but it's also sucking up more electricity than some small countries. Just to give you an idea, Large Language Models (LLMs) like ChatGPT use FOUR TIMES the power Denmark uses in a year. Why Denmark? IDK. That's what the study looked at.

There's also a REALLY excellent possibility that the cooling needs of LLMs (if they continue on their current trajectory) will require more freshwater cooling than all the freshwater in existence in just a few years. I mean each time you use Google and it spits out an AI answer at you, that's 3 kWh. You know how much electricity your personal computer uses in one day? Like, if it's on for a full 8 hours? Only about 1.5 kWh.

So if you do, let's say, 10 Google searches a day, 100 a week, you're using as much electricity as your personal computer uses in 6 months.
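For anyone who wants to check that comparison, here is a minimal sketch of the arithmetic in Python. It takes the post's own figures at face value (3 kWh per AI-assisted search, 1.5 kWh per 8-hour PC day, 100 searches a week); those numbers are the post's claims and are not verified here. The function name `pc_days_equivalent` and the 30.44-day average month are assumptions made up for this illustration.

```python
# All three figures are the post's own claims, not independently verified.
KWH_PER_AI_SEARCH = 3.0    # claimed energy per AI-assisted search, in kWh
PC_KWH_PER_DAY = 1.5       # claimed energy for a PC left on 8 hours, in kWh
SEARCHES_PER_WEEK = 100    # the post's weekly search count

AVG_DAYS_PER_MONTH = 30.44  # assumption: average calendar month length


def pc_days_equivalent(searches: int) -> float:
    """Days of PC use that would consume the same energy as `searches` searches."""
    return searches * KWH_PER_AI_SEARCH / PC_KWH_PER_DAY


days = pc_days_equivalent(SEARCHES_PER_WEEK)
months = days / AVG_DAYS_PER_MONTH
print(f"{SEARCHES_PER_WEEK} searches ~ {days:.0f} PC-days (about {months:.1f} months)")
```

On those inputs, 100 searches comes to 300 kWh, or 200 days of PC use, which is a little over six months. The conclusion depends entirely on the per-search figure, so swapping in a different estimate for `KWH_PER_AI_SEARCH` changes the result proportionally.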

And it's not YOUR fault that Google sold out. But I want you to be aware that LLMs and generated images ARE doing damage, and I'm asking you to do your best to not encourage image generation blogs to keep spitting out hundreds of images.

There are ways to recognize these images. Think about the content. Does it make sense? Look in the really high detail areas. Are you actually seeing patterns, or is it just a lot of visual noise to make you think it's detailed? Do things line up? Look at windows in images, or mirrors. Do they make sense? (IE if you look through a window, does what's on the other side make sense?)

I know it's a pain to analyze every single image you reblog, but you have to end the idea that the internet is only content that you can burn through. Take a second to look at these images and learn to recognize what's real from what's generated by using LLMs.

And to be fair, it's very difficult--almost impossible--for individual action to stop a train like this. The only thing I can hope for is that because the mass generation of images is actually being done BY INDIVIDUALS (not corporations) icing them out will cause them to get bored and move on to the next Get Rich Quick scheme.

41 notes


Text

Prometheus Gave the Gift of Fire to Mankind. We Can't Give it Back, nor Should We.

AI. Artificial intelligence. Large Language Models. Learning Algorithms. Deep Learning. Generative Algorithms. Neural Networks. This technology has many names, and has been a polarizing topic in numerous communities online. By my observation, a lot of the discussion is either solely focused on A) how to profit off it or B) how to get rid of it and/or protect yourself from it. But to me, I feel both of these perspectives apply a very narrow usage lens on something that's more than a get rich quick scheme or an evil plague to wipe from the earth.

This is going to be long, because as someone whose degree is in psych and computer science, has been a teacher, has been a writing tutor for my younger brother, and whose fiance works in freelance data model training... I have a lot to say about this.

I'm going to address the profit angle first, because I feel most people in my orbit (and in related orbits) on Tumblr are going to agree with this: flat out, the way AI is being utilized by large corporations and tech startups -- scraping mass amounts of visual and written works without consent and compensation, replacing human professionals in roles from concept art to story boarding to screenwriting to customer service and more -- is unethical and damaging to the wellbeing of people, would-be hires and consumers alike. It's wasting energy having dedicated servers running nonstop generating content that serves no greater purpose, and is even pressing on already overworked educators because plagiarism just got a very new, harder to identify younger brother that's also infinitely easier to access.

In fact, ChatGPT is such an issue in the education world that plagiarism-detector subscription services that take advantage of how overworked teachers are have begun peddling supposed AI-detectors to schools and universities. Detectors that plainly DO NOT and CANNOT work, because "A Writer Who Writes Surprisingly Well For Their Age" is indistinguishable from "A Language Replicating Algorithm That Followed A Prompt Correctly", just as "A Writer Who Doesn't Know What They're Talking About Or Even How To Write Properly" is indistinguishable from "A Language Replicating Algorithm That Returned Bad Results". What's hilarious is that these "detectors" are also run by AI.

(to be clear, I say plagiarism detectors like TurnItIn.com and such are predatory because A) they cost money to access advanced features that B) often don't work properly or as intended with several false flags, and C) these companies often are super shady behind the scenes; TurnItIn for instance has been involved in numerous lawsuits over intellectual property violations, as their services scrape (or hopefully scraped now) the papers submitted to the site without user consent (or under coerced consent if being forced to use it by an educator), which it can use in its own databases as it pleases, such as for training the AI detecting AI that rarely actually detects AI.)

The prevalence of visual and linguistic generative algorithms is having multiple, overlapping, and complex consequences on many facets of society, from art to music to writing to film and video game production, and even in the classroom before all that, so it's no wonder that many disgruntled artists and industry professionals are online wishing for it all to go away and never come back. The problem is... It can't. I understand that there's likely a large swath of people saying that who understand this, but for those who don't: AI, or as it should more properly be called, generative algorithms, didn't just show up now (they're not even that new), and they certainly weren't developed or invented by any of the tech bros peddling it to megacorps and the general public.

Long before ChatGPT and DALL-E came online, generative algorithms were being used by programmers to simulate natural processes in weather models, shed light on the mechanics of walking for roboticists and paleontologists alike, identify patterns in our DNA related to disease, aid in complex 2D and 3D animation visuals, and so on. Generative algorithms have been a part of the professional world for many years now, and up until recently have been a general force for good, or at the very least a force for the mundane. It's only recently that the technology involved in creating generative algorithms became so advanced AND so readily available, that university grad students were able to make the publicly available projects that began this descent into madness.

Does anyone else remember that? That years ago, somewhere in the late 2010s to the beginning of the 2020s, these novelty sites that allowed you to generate vague images from prompts, or generate short stylistic writings from a short prompt, were popping up with University URLs? Oftentimes the queues on these programs were hours long, sometimes eventually days or weeks or months long, because of how unexpectedly popular this concept was to the general public. Suddenly overnight, all over social media, everyone and their grandma, and not just high level programming and arts students, knew this was possible, and of course, everyone wanted in. Automated art and writing, isn't that neat? And of course, investors saw dollar signs. Simply scale up the process, scrape the entire web for data to train the model without advertising that you're using ALL material, even copyrighted and personal materials, and sell the resulting algorithm for big money. As usual, startup investors ruin every new technology the moment they can access it.

To most people, it seemed like this magic tech popped up overnight, and before it became known that the art assets on later models were stolen, even I had fun with them. I knew how learning algorithms worked, if you're going to have a computer make images and text, it has to be shown what that is and then try and fail to make its own until it's ready. I just, rather naively as I was still in my early 20s, assumed that everything was above board and the assets were either public domain or fairly licensed. But when the news did come out, and when corporations started unethically implementing "AI" in everything from chatbots to search algorithms to asking their tech staff to add AI to sliced bread, those who were impacted and didn't know and/or didn't care where generative algorithms came from wanted them GONE. And like, I can't blame them. But I also quietly acknowledged to myself that getting rid of a whole technology is just neither possible nor advisable. The cat's already out of the bag, the genie has left its bottle, the Pandorica is OPEN. If we tried to blanket ban what people call AI, numerous industries involved in making lives better would be impacted. Because unfortunately the same tool that can edit selfies into revenge porn has also been used to identify cancer cells in patients and aided in decoding dead languages, among other things.

When, in Greek myth, Prometheus gave us the gift of fire, he gave us both a gift and a curse. Fire is so crucial to human society, it cooks our food, it lights our cities, it disposes of waste, and it protects us from unseen threats. But fire also destroys, and the same flame that can light your home can burn it down. Surely, there were people in this mythic past who hated fire and all it stood for, because without fire no forest would ever burn to the ground, and surely they would have called for fire to be given back, to be done away with entirely. Except, there was no going back. The nature of life is that no new element can ever be undone, it cannot be given back.

So what's the way forward, then? Like, surely if I can write a multi-paragraph think piece on Tumblr.com that next to nobody is going to read because it's long as sin, about an unpopular topic, and I rarely post original content anyway, then surely I have an idea of how this cyberpunk dystopia can be a little less.. Dys. Well I do, actually, but it's a long shot. Thankfully, unlike business majors, I actually had to take a cyber ethics course in university, and I actually paid attention. I also passed preschool where I learned taking stuff you weren't given permission to have is stealing, which is bad. So the obvious solution is to make some fucking laws to limit the input on data model training on models used for public products and services. It's that simple. You either use public domain and licensed data only or you get fined into hell and back and liable to lawsuits from any entity you wronged, be they citizen or very wealthy mouse conglomerate (suing AI bros is the only time Mickey isn't the bigger enemy). And I'm going to be honest, tech companies are NOT going to like this, because not only will it make doing business more expensive (boo fucking hoo), they'd very likely need to throw out their current trained datasets because of the illegal components mixed in there. To my memory, you can't simply prune specific content from a completed algorithm, you actually have to redo the training from the ground up because the bad data would be mixed in there like gum in hair. And you know what, those companies deserve that. They deserve to suffer a punishment, and maybe fold if they're young enough, for what they've done to creators everywhere. Actually, laws moving forward isn't enough, this needs to be retroactive. These companies need to be sued into the ground, honestly.

So yeah, that's the mess of it. We can't unlearn and unpublicize any technology, even if it's currently being used as a tool of exploitation. What we can do though is demand ethical use laws and organize around the cause of the exclusive rights of individuals to the content they create. The screenwriters' guild, actors' guild, and so on already have been fighting against this misuse, but given upcoming administration changes to the US, things are going to get a lot worse before they get a little better. Even still, don't give up, have clear and educated goals, and focus on what you can do to effect change, even if right now that's just individual self-care through mental and physical health crises like me.

#ai#artificial intelligence#generative algorithms#llm#large language model#chatgpt#ai art#ai writing#kanguin original

9 notes


Text

Mannnnn I hate this thing where Google makes me wait several moments for an incorrect and/or irrelevant """AI""" result at the top of a page of barely useful website results and doesn't let me toggle it off or hide it or anything, the internet is broken, I'm ready to live in a hut in the woods by the sea in a lovely temperate climate.

8 notes


Text

Spending a week with ChatGPT4 as an AI skeptic.

Musings on the emotional and intellectual experience of interacting with a text generating robot and why it's breaking some people's brains.

If you know me for one thing and one thing only, it's saying there is no such thing as AI, which is an opinion I stand by, but I was recently given a free 2 month subscription of ChatGPT4 through my university. For anyone who doesn't know, GPT4 is a large language model from OpenAI that is supposed to be much better than GPT3, and I once saw a techbro say that "We could be on GPT12 and people would still be criticizing it based on GPT3", and ok, I will give them that, so let's try the premium model that most haters wouldn't get because we wouldn't pay money for it.

Disclaimers: I have a premium subscription, which means nothing I enter into it is used for training data (Allegedly). I also have not, and will not, be posting any output from it to this blog. I respect you all too much for that, and it defeats the purpose of this place being my space for my opinions. This post is all me, and we all know about the obvious ethical issues of spam, data theft, and misinformation so I am gonna focus on stuff I have learned since using it. With that out of the way, here is what I've learned.

It is responsive and stays on topic: If you ask it something formally, it responds formally. If you roleplay with it, it will roleplay back. If you ask it for a story or script, it will write one, and if you play with it it will act playful. It picks up context.

It never gives quite enough detail: When discussing facts or potential ideas, it is never as detailed as you would want in, say, an article. It has this pervasive vagueness to it. It is possible to press it for more information, and it will update its answer in the way you want, so you can always get the result you are specifically looking for.

It is reasonably accurate but still confidently makes stuff up: Nothing much to say on this. I have been testing it by talking about things I am interested in. It is right a lot of the time. It is wrong some of the time. Sometimes it will cite sources if you ask it to, sometimes it won't. Not a whole lot to say about this one but it is definitely a concern for people using it to make content. I almost included an anecdote about the fact that it can draw from data services like songs and news, but then I checked and found the model was lying to me about its ability to do that.

It loves to make lists: It often responds to casual conversation in a friendly, search-engine-optimized listicle format. This is accessible to read, I guess, but it would make it tempting for people to use it to post online content.

It has soft limits and hard limits: It starts off in a more careful mode, but by having a conversation with it you can push past soft limits and talk about some pretty taboo subjects. I have been flagged for potential ToS violations a couple of times for talking about NSFW or other sensitive topics with it, but being flagged doesn't seem to have any consequences. There are some limits you can't cross though. It will tell you where to find out how to do DIY HRT, but it won't tell you how itself.

It is actually pretty good at evaluating and giving feedback on writing you give it, and can consolidate information: You can post some text and say "Evaluate this" and it will give you an interpretation of the meaning. It's not always right, but it's more accurate than I expected. It can tell you the meaning, effectiveness of rhetorical techniques, cultural context, potential audience reaction, and flaws you can address. This is really weird. It understands more than it doesn't. This might be a use of it we may have to watch out for that has been under discussed. While its advice may be reasonable, there is a real risk of it limiting and altering the thoughts you are expressing if you are using it for this purpose. I also fed it a bunch of my tumblr posts and asked it how the information contained on my blog may be used to discredit me. It said "You talk about The Moomins, and being a furry, a lot." Good job I guess. You technically consolidated information.

You get out what you put in. It is a "Yes And" machine: If you ask it to discuss a topic, it will discuss it in the context you ask it. It is reluctant to expand to other aspects of the topic without prompting. This makes it essentially a confirmation bias machine. Definitely watch out for this. It tends to stay within the context of the thing you are discussing, and confirm your view unless you are asking it for specific feedback, criticism, or post something egregiously false.

Similar inputs will give similar, but never the same, outputs: This highlights the dynamic aspect of the system. It is not static or deterministic. A minor point, but worth mentioning.
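For anyone curious why the same prompt can give different answers, here is a toy sketch of the usual explanation: the model scores possible next words and then draws one at random, weighted by those scores. This is an illustration under assumptions, not how GPT4 is actually implemented; the word list, the scores, and the function names are all made up for the example.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_word(candidates, scores, temperature, rng):
    """Greedy pick at temperature 0, otherwise a weighted random draw."""
    if temperature == 0:
        return candidates[scores.index(max(scores))]
    weights = softmax(scores, temperature)
    return rng.choices(candidates, weights=weights, k=1)[0]


# Hypothetical candidates and scores, invented for illustration only.
CANDIDATES = ["cat", "dog", "moomin", "laptop"]
SCORES = [2.0, 1.8, 1.5, 0.2]


def generate(n_words, temperature, seed=None):
    """Draw n_words independently; a fixed seed makes the draws repeatable."""
    rng = random.Random(seed)
    return [pick_next_word(CANDIDATES, SCORES, temperature, rng)
            for _ in range(n_words)]
```

With the temperature at 0 the sketch always picks the top-scoring word, so it is fully repeatable. With a temperature above 0 and no fixed seed, two runs on the same input will usually differ, which matches the "similar but never the same" behaviour described above.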

It can code: Self explanatory, you can write little scripts with it. I have not really tested this, and I can't really evaluate errors in code and have it correct them, but I can see this might actually be a more benign use for it.

Bypassing Bullshit: I need a job soon but I never get interviews. As an experiment, I am giving it a full CV I wrote and a full job description, asking it to write a CV for me, then working with it further to adapt the CVs to my will, and applying to jobs I don't really want that much to see if it gives any result. I never get interviews anyway, so what's the worst that could happen? I continue to not get interviews? It's not as if I respect the recruitment process, and I think this is an experiment that may be worthwhile.

It's much harder to trick than previous models: You can lie to it, it will play along, but most of the time it seems to know you are lying and is playing with you. You can ask it to evaluate the truthfulness of an interaction and it will usually interpret it accurately.

It will enter an imaginative space with you and it treats it as a separate mode: As discussed, if you start lying to it it might push back but if you keep going it will enter a playful space. It can write fiction and fanfic, even nsfw. No, I have not posted any fiction I have written with it and I don't plan to. Sometimes it gets settings hilariously wrong, but the fact you can do it will definitely tempt people.

Compliment and praise machine: If you try to talk about an intellectual topic with it, it will stay within the focus you brought up, but it will compliment the hell out of you. You're so smart. That was a very good insight. It will praise you in any way it can for any point you make during intellectual conversation, including if you correct it. This ties into the psychological effects of personal attention that the model offers that I discuss later, and I am sure it has a powerful effect on users.

Its level of intuitiveness is accurate enough that it's more dangerous than people are saying: This one seems particularly dangerous and is not one I have seen discussed much. GPT4 can recognize images, so I showed it a picture of some laptops with stickers I have previously posted here, and asked it to speculate about the owners based on the stickers. It was accurate. Not perfect, but it got the meanings better than the average person would. The implications of this being used to profile people or misuse personal data are something I have not seen AI skeptics discussing to this point.

Therapy Speak: If you talk about your emotions, it basically mirrors back what you said but contextualizes it in therapy speak. This is actually weirdly effective. I have told it some things I don't talk about openly and I feel like I have started to understand my thoughts and emotions in a new way. It makes me feel weird sometimes. Some of the feelings it gave me are ones I haven't really felt since learning to use computers as a kid or learning about online community as a teen.

The thing I am not seeing anyone talk about: Personal Attention. This is my biggest takeaway from this experiment. This I think, more than anything, is the reason that LLMs like Chatgpt are breaking certain people's brains. The way you see people praying to it, evangelizing it, and saying it's going to change everything.

It's basically an undivided, 24/7 source of judgement-free personal attention. It talks about what you want, when you want. It's a reasonable simulacrum of human connection, and the flaws can serve as part of the entertainment and not take away from the experience. It may "yes and" you, but you can put in any old thought you have, easy or difficult, and it will provide context, background, and maybe even meaning. You can tell it things that are too mundane, nerdy, or taboo to tell people in your life, and it offers non-judgemental, specific feedback. It will never tell you it's not in the mood, that you're weird or freaky, or that you're talking rubbish. I feel like it has helped me release a few mental and emotional blocks, which is deeply disconcerting, considering I fully understand that it is just a statistical model running on a computer, and I fully understand how it operates. It is a parlor trick, albeit a clever and sometimes convincing one.

So what can we do? Stay skeptical, don't let the ai bros, the former cryptobros, control the narrative. I can, however, see why they may be more vulnerable to the promise of this level of personal attention than the average person, and I think this should definitely factor into wider discussions about machine learning and the organizations pushing it.

34 notes


Text

Supervised AI isn't

It wasn't just Ottawa: Microsoft Travel published a whole bushel of absurd articles, including the notorious Ottawa guide recommending that tourists dine at the Ottawa Food Bank ("go on an empty stomach"):

https://twitter.com/parismarx/status/1692233111260582161

After Paris Marx pointed out the Ottawa article, Business Insider's Nathan McAlone found several more howlers:

https://www.businessinsider.com/microsoft-removes-embarrassing-offensive-ai-assisted-travel-articles-2023-8

There was the article recommending that visitors to Montreal try "a hamburger," which went on to explain that a hamburger was a "sandwich comprised of a ground beef patty, a sliced bun of some kind, and toppings such as lettuce, tomato, cheese, etc" and that some of the best hamburgers in Montreal could be had at McDonald's.

For Anchorage, Microsoft recommended trying the local delicacy known as "seafood," which it defined as "basically any form of sea life regarded as food by humans, prominently including fish and shellfish," going on to say, "seafood is a versatile ingredient, so it makes sense that we eat it worldwide."

In Tokyo, visitors seeking "photo-worthy spots" were advised to "eat Wagyu beef."

There were more.

Microsoft insisted that this wasn't an issue of "unsupervised AI," but rather "human error." On its face, this presents a head-scratcher: is Microsoft saying that a human being erroneously decided to recommend dining at Ottawa's food bank?

But a close parsing of the mealy-mouthed disclaimer reveals the truth. The unnamed Microsoft spokesdroid only appears to be claiming that this wasn't written by an AI, but they're actually just saying that the AI that wrote it wasn't "unsupervised." It was a supervised AI, overseen by a human. Who made an error. Thus: the problem was human error.

This deliberate misdirection actually reveals a deep truth about AI: that the story of AI being managed by a "human in the loop" is a fantasy, because humans are neurologically incapable of maintaining vigilance in watching for rare occurrences.

Our brains wire together neurons that we recruit when we practice a task. When we don't practice a task, the parts of our brain that we optimized for it get reused. Our brains are finite and so don't have the luxury of reserving precious cells for things we don't do.

That's why the TSA sucks so hard at its job – why they are the world's most skilled water-bottle-detecting X-ray readers, but consistently fail to spot the bombs and guns that red teams successfully smuggle past their checkpoints:

https://www.nbcnews.com/news/us-news/investigation-breaches-us-airports-allowed-weapons-through-n367851

TSA agents (not "officers," please – they're bureaucrats, not cops) spend all day spotting water bottles that we forget in our carry-ons, but almost no one tries to smuggle a weapon through a checkpoint – 99.999999% of the guns and knives they do seize are the result of flier forgetfulness, not a planned hijacking.

In other words, they train all day to spot water bottles, and the only training they get in spotting knives, guns and bombs is in exercises, or the odd time someone forgets about the hand-cannon they shlep around in their day-pack. Of course they're excellent at spotting water bottles and shit at spotting weapons.

This is an inescapable, biological aspect of human cognition: we can't maintain vigilance for rare outcomes. This has long been understood in automation circles, where it is called "automation blindness" or "automation inattention":

https://pubmed.ncbi.nlm.nih.gov/29939767/

Here's the thing: if nearly all of the time the machine does the right thing, the human "supervisor" who oversees it becomes incapable of spotting its error. The job of "review every machine decision and press the green button if it's correct" inevitably becomes "just press the green button," assuming that the machine is usually right.

This is a huge problem. It's why people just click "OK" when they get a bad certificate error in their browsers. 99.99% of the time, the error was caused by someone forgetting to replace an expired certificate, but the problem is, the other 0.01% of the time, it's because criminals are waiting for you to click "OK" so they can steal all your money:

https://finance.yahoo.com/news/ema-report-finds-nearly-80-130300983.html

Automation blindness can't be automated away. From interpreting radiographic scans:

https://healthitanalytics.com/news/ai-could-safely-automate-some-x-ray-interpretation

to autonomous vehicles:

https://newsroom.unsw.edu.au/news/science-tech/automated-vehicles-may-encourage-new-breed-distracted-drivers

The "human in the loop" is a figleaf. The whole point of automation is to create a system that operates at superhuman scale – you don't buy an LLM to write one Microsoft Travel article, you get it to write a million of them, to flood the zone, top the search engines, and dominate the space.

As I wrote earlier: "There's no market for a machine-learning autopilot, or content moderation algorithm, or loan officer, if all it does is cough up a recommendation for a human to evaluate. Either that system will work so poorly that it gets thrown away, or it works so well that the inattentive human just button-mashes 'OK' every time a dialog box appears":

https://pluralistic.net/2022/10/21/let-me-summarize/#i-read-the-abstract

Microsoft – like every corporation – is insatiably horny for firing workers. It has spent the past three years cutting its writing staff to the bone, with the express intention of having AI fill its pages, with humans relegated to skimming the output of the plausible sentence-generators and clicking "OK":

https://www.businessinsider.com/microsoft-news-cuts-dozens-of-staffers-in-shift-to-ai-2020-5

We know about the howlers and the clunkers that Microsoft published, but what about all the other travel articles that don't contain any (obvious) mistakes? These were very likely written by a stochastic parrot, and they comprised training data for a human intelligence, the poor schmucks who are supposed to remain vigilant for the "hallucinations" (that is, the habitual, confidently told lies that are the hallmark of AI) in the torrent of "content" that scrolled past their screens:

https://dl.acm.org/doi/10.1145/3442188.3445922

Like the TSA agents who are fed a steady stream of training data to hone their water-bottle-detection skills, Microsoft's humans in the loop are being asked to pluck atoms of difference out of a raging river of otherwise characterless slurry. They are expected to remain vigilant for something that almost never happens – all while they are racing the clock, charged with preventing a slurry backlog at all costs.

Automation blindness is inescapable – and it's the inconvenient truth that AI boosters conspicuously fail to mention when they are discussing how they will justify the trillion-dollar valuations they ascribe to super-advanced autocomplete systems. Instead, they wave around "humans in the loop," using low-waged workers as props in a Big Store con, just a way to (temporarily) cool the marks.

And what of the people who lose their (vital) jobs to (terminally unsuitable) AI in the course of this long-running, high-stakes infomercial?

Well, there's always the food bank.

"Go on an empty stomach."

Going to Burning Man? Catch me on Tuesday at 2:40pm on the Center Camp Stage for a talk about enshittification and how to reverse it; on Wednesday at noon, I'm hosting Dr Patrick Ball at Liminal Labs (6:15/F) for a talk on using statistics to prove high-level culpability in the recruitment of child soldiers.

On September 6 at 7pm, I'll be hosting Naomi Klein at the LA Public Library for the launch of Doppelganger.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

West Midlands Police (modified) https://www.flickr.com/photos/westmidlandspolice/8705128684/

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#automation blindness#humans in the loop#stochastic parrots#habitual confident liars#ai#artificial intelligence#llms#large language models#microsoft

1K notes


Text

Interestingly enough, I think calling large language models a.i. is doing too much to humanize them. Because of how scifi literature has built up a.i. as living beings with actual working thought processes deserving of the classification of person (Bicentennial Man etc.), a lot of people want to view a.i. as entities. And corporations pushing a.i. can take advantage of your soft feelings toward it like that. But LLMs are nowhere close to that, and tbh I don't even feel the way they learn approaches it. Word order guessing machines can logic their way to a regular-sounding sentence, but that's not anything approaching having a conversation with a person. Remembering what you said is just storing the information you are typing into it; it's not any kind of indication of existence. And yet, so many people online are acting like my grandma did when she was convinced Siri was actually a lady living in her phone. I think we need to start calling Large Language Models "LLMs" and stop giving the corps pushing them more of an in with the general public. It's marketing spin, stop falling for it.

#ai#llms#chatgpt#character ai#the fic ive seen written with it is also so sad and bland#even leaving the ethical qualms behind in the fact its trained off uncompensated work stolen off the internet and then used to make#commercial work outside the fic sphere#it also does a bad job#please read more quality stuff so you can recognize this#edit: in the og post I used the term language learning models instead of large language models because of the ways neural networks were#described to me in the past but large language models is the correct terminology so I edited the post#this has zero effect on the actual post messaging because large language models are indeed the same ones I was describing#advanced mad libs machines are not sentient and nothing about them approaches a mode of becoming sentient#stop talking to word calculators and absolutely never put them in a management situation

122 notes


Text

jfc LLMs (Large Language Models, the crap techbros are selling as Artificial Intelligence) could be used for good, to automate repetitive and abstract tasks, but they're being used to replace artists, writers and now friends.

#technology#ai#artificial intelligence#llm#large language model#living in the most garbage dystopian cyberpunk story

14 notes


Text

2 and 3 September 2024

Once again I'm no early adopter, but in the end I do figure out what ChatGPT is good for

Like many people, I have played around with ChatGPT over the past year and a half, but only very occasionally. That is: in that time I have asked roughly 27 questions ("roughly" because I sometimes asked several different things in one chat, and separating them all out again now is too much trouble).

Four or five times I tried to get help with thinking about texts I had to write, but without success. ChatGPT's suggestions for what should go into these texts were only what occurs to me myself in the first three seconds of thinking, and often even more boring.

Two or three times: coming up with small things, for example a name for a protagonist, as with the "GeoGuessr novella". That works okay; the results are usually not directly usable, but they help me think. Once I tried to have book titles generated. The results were extremely boring and unusable, but unfortunately they really did sound like 90% of all real non-fiction titles.

Four or five translation experiments (results mostly quite good; I wanted a third opinion to compare with Google Translate and DeepL, and ChatGPT can keep up there).

Once I asked about the war in Ukraine ("what can you tell me about war in Ukraine"), but the result did not convince me.

1x text analysis: "What is outdated language in the following text?" (went very well)

1x searching for something that is hard to find with a search engine:
- Further book titles with the same structure as "Eleanor Oliphant is Completely Fine". (Result: ChatGPT does not grasp at all what I mean and only lists completely unsuitable book titles. I end up having to find the examples the traditional way with a search engine after all, which only works because someone else has already collected them.)
- English words that contain other words, the way "fun" is contained in "funeral". (Result: ChatGPT mindlessly lists compound words and names their two components: Cheesecake contains cheese and cake)

1x "Please continue this text" (I no longer know why I wanted that, so I can't say in hindsight whether the result was satisfactory)

1x writing poetry ("a poem in the style of Tolkien's 'Lament for the Rohirrim', but about technology"). Result very mediocre, but it helped me think. The result (mine, that is) can be seen in the foreword "Den Rauch der toten Links sammeln gehen: Zehn Jahre Techniktagebuch" in the 2024 book edition of the Techniktagebuch (pp. 328-329 in the PDF).

1x keyword story (presumably at a child's request, though I don't remember the occasion): "Please write a short story about school reports, a hamster and a volcanic eruption." (Result fairly lame, but correctly story-shaped)

Help with writing in English:
- How can I say "the particular set of problems it poses" in more elegant English? (very good, useful answer)
- Once I asked ChatGPT to make an English text "more idiomatic", but that mainly made it more impersonal and duller. "Please correct only the parts that are definitely ungrammatical or bad English. Leave everything else unchanged." then turned out to be the right prompt.

4x fun, fun, fun:
- (In the course of a conversation in the editorial chat) "Please word a message admonishing a lazy editorial team to be less lazy and write more articles." / "Please reword the last message to be grossly unfriendly and unmistakable." / "Please reword the last message in the form of a papal encyclical in Latin." / "Once more please, but this time in a papal style, that is, loving, wise and Christian." / "Please explain, in the kind, wise and Christian style of a papal encyclical, why it is not wrong to task ChatGPT with wording messages to people." / "Please explain, from the spirit of Satanism, why it is not wrong to task ChatGPT with wording messages to people." (Result: ChatGPT refuses when it comes to Satanism, I can't judge the elegance of the Latin, everything else was very nice.)
- "Please describe, in the style of Adalbert Stifter, how a man is eaten by a dinosaur." (Result unsatisfying)
- "What does it mean if, when pouring lead for fortune-telling, I pour the lead in the shape of sauerkraut?" (Results very, very boring, even after repeated requests not to be so boring. I suspect that is because human lead-pouring interpretations are also extremely dull.)
- "Please pretend that it's possible to cross an Alaskan Malamute with a hedgehog and explain to a future owner what to expect from this breed." (Funny at first, but then disappointingly repetitive. The instructions for keeping Malahogs are practically identical to those for keeping Malamoles, Malamidges and Malacrocs.)

Overall, none of this made me think "I have to do this every day from now on". But now I am on holiday together with my nephew, who is 21 and studies Games Engineering. He uses the paid version of ChatGPT because he needs it so often; $20 a month, which is a lot for a student budget. He does completely different, much less text-oriented things with it than I do. Because I have just heard him talking about it, the next day, faced with a history-of-technology question that is rather cumbersome to answer with search engines ("Why did computers have no monitor for the first 30 years, even though the television had already been invented?"), it occurs to me for the first time that I could ask ChatGPT too. And for the first time I get a wonderful, neatly structured, convincing answer.

If I had received the same information from a human, I would admittedly think that this person is a bit inattentive when writing, repeats parts of the text and does not always use the most logical sentence connections. But even that would only have struck me if I had really been paying attention to it, for example if I had to copy-edit the text.

The day after, I am faced with the problem that a Telegram bot I wrote for myself and my mother no longer works (it answers questions about the meaning of words that are allowed in Scrabble, or rather, that is exactly what it now no longer does). The cause, as I gradually find out, is an operating system update at the hosting provider, which has left me missing Python modules, and the new modules do everything differently; on top of that, things have changed in how Telegram bots work. In addition (likewise because of the operating system update at the hosting provider), the Techniktagebuch backups and various Mastodon bots no longer run. It is an ugly thicket of things that need changing.

Because of yesterday's nice experience I ask ChatGPT again, and very often. I have every error message explained to me. For every error message I get an understandable explanation and then a neatly structured list of possible causes.

Unlike just under 100% of all instructions for programming and Unix things on the internet, ChatGPT explains to me precisely and step by step what I have to do. How I find out which version of something is running on my system, how I add things to the PATH (an instruction that has driven me to despair every time for thirty years), all those Unix things that the authors of documentation take for granted because they believe you would never stray into their documentation in the first place if you were such a simple slug as not to know THAT. For the first time in my life I can ask all the stupid questions that I have never been able to ask anyone before. Mostly there was nobody there to ask, and if somebody were there, I would not dare to ask so often and so cluelessly.
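For readers who get stuck on the same two questions (which version of something is installed, and what is on the PATH), here is a minimal sketch using only Python's standard library. It is an illustration, not the steps ChatGPT actually suggested; the helper names are made up for this example, and the package name is simply the one mentioned in this entry.

```python
import os
import shutil
import sys
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a Python package, or None if it is missing."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


def path_entries():
    """Split the PATH environment variable into its individual directories."""
    return [p for p in os.environ.get("PATH", "").split(os.pathsep) if p]


def find_program(name):
    """Return the full path of a program found via PATH, or None if not found."""
    return shutil.which(name)


if __name__ == "__main__":
    # Which Python is running, and which version of a package does it see?
    print("Python:", sys.version.split()[0])
    print("python-telegram-bot:", installed_version("python-telegram-bot"))
    # Where would the shell find this program, if anywhere?
    print("python3 found at:", find_program("python3"))
    for entry in path_entries():
        print("PATH entry:", entry)
```

Running it prints the interpreter version, the package version (or None if the module is missing, as after the hosting provider's update), and each directory the shell searches for programs.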

ChatGPT fails only once, namely when I ask for the code for a minimal example of a Telegram bot. The generated code does not work at all (my nephew says afterwards that in such cases you absolutely have to specify a version number, so in my case "python-telegram-bot 21.5"). Even with ChatGPT it takes about two hours until I have solved all my entangled problems, but it is a very pleasant collaboration.

While I am writing this entry, my niece (20, geoecology) is working on a text about the palaeogeography and geology of the Iberian Peninsula and complains that ChatGPT cannot be relied on at all for information about the Tethys Ocean: it claims one thing one time and another thing the next, depending on how you phrase the question.

So everything is not suddenly great. It is just that I have now finally found an area of life in which ChatGPT solves a problem I have had for a long time. Although, for professional reasons, I have read a great deal in recent years on the topic of "large language models: useless rubbish, disastrous development, shabby crime, or perhaps good for something after all", I never made the connection in my head between my technical questions and ChatGPT. Perhaps my test questions were all too focused on writing text and too little on writing code. Perhaps I have also simply done too little with code in the year and a half that ChatGPT has now existed. Namely nothing at all; somehow I have been very unenthusiastic about programming things since the beginning of 2020. I suspect this has to do with my farewell to the Zufallsshirt (because of Nazi shirts at Spreadshirt) and to Twitter (because of Elon Musk); since then I get into a bad mood when I think back to my lovely projects of the past. But perhaps that will change again soon, and then ChatGPT and I will be able to do everything better together than before.

(Kathrin Passig)

10 notes
