#Generative AI For Production Optimization

Explore tagged Tumblr posts

Text

Revolutionize your manufacturing process with generative AI: predictive maintenance, enhanced design, improved quality control, and streamlined supply chains. Embrace the future!

#AI In Inventory Management#AI-Powered Manufacturing Data Analysis#Generative AI In Smart Factories#AI In Manufacturing Innovation#Generative AI For Production Planning#AI In Operational Efficiency#AI-Driven Manufacturing Transformation#Generative AI For Lean Manufacturing#AI In Manufacturing Logistics#AI-Enhanced Production Processes#Generative AI For Equipment Monitoring#AI In Supply Chain Optimization#Generative AI For Manufacturing Agility#AI-Powered Factory Automation#AI In Process Control#Generative AI For Production Optimization

0 notes

Text

Picjam: The Smart Way to Create Professional, Optimized Visuals

Welcome to the future of visual content creation with Picjam. In today’s fast-paced digital landscape, professional, optimized visuals are essential for standing out and capturing attention. Picjam offers an innovative, user-friendly platform that empowers marketers, designers, and entrepreneurs alike. By simplifying design processes and providing smart tools, Picjam transforms ordinary ideas into stunning graphics effortlessly.

Go explore

Seamless Design Interface

One of the standout features of Picjam is its seamless design interface that makes creating professional visuals intuitive and efficient. With a well-organized workspace and drag-and-drop functionality, Picjam allows users of all skill levels to dive right into designing without any steep learning curve. The platform offers a wide array of templates, icons, fonts, and color schemes, enabling you to build designs that resonate with your brand identity. Picjam also integrates smart tools that optimize images automatically, ensuring that every graphic is web-ready and performs well on all devices. By removing the barriers of traditional design software, Picjam empowers creatives to experiment and innovate. Additionally, collaborative features let teams work together in real time, further enhancing productivity and creative synergy. This user-centric approach solidifies Picjam as the go-to tool for anyone looking to elevate their visual content creation. Moreover, Picjam’s responsive design features and regular updates ensure that the platform evolves with emerging design trends and user demands. Its robust customer support and extensive resource library make Picjam an indispensable asset for professionals and beginners alike. With continuous enhancements and a commitment to quality, Picjam remains the premier choice for all your design needs. Elevate your projects with Picjam today.

Go explore

Advanced Optimization Tools

Picjam not only offers an intuitive interface but also integrates advanced optimization tools designed to ensure every visual is perfectly tuned for digital platforms. Users can easily adjust resolution, color balance, and file size to achieve optimal performance without compromising quality. Picjam’s smart algorithms analyze your design and suggest enhancements that improve clarity and visual appeal. By automatically compressing images and refining graphics, Picjam minimizes load times and maximizes viewer engagement. These features are particularly valuable for websites, social media channels, and online advertisements, where speed and quality are paramount. Additionally, Picjam allows for real-time previews so you can see the effects of each adjustment immediately. This proactive approach helps users avoid common pitfalls and ensures that every project meets professional standards. With regular updates and industry-leading performance metrics, Picjam continues to push the boundaries of digital design technology. Furthermore, Picjam provides comprehensive analytics and user feedback options that allow you to measure the impact of your designs. These tools help in refining your strategy and achieving better engagement. Trust Picjam to optimize your visuals for maximum effect. By leveraging this suite of optimization features, Picjam elevates your digital presence and ensures every visual is performance-driven and aesthetically superior absolutely. Elevate your projects with Picjam today.

Collaborative and Cloud-Based Features

In today’s interconnected world, collaboration is key, and Picjam excels by offering robust cloud-based features that bring teams together seamlessly. Picjam allows multiple users to work on projects simultaneously from anywhere in the world, fostering a creative environment where ideas flow freely. With real-time updates and shared access, team members can review and contribute to designs instantly. Picjam’s cloud storage ensures that all files are securely saved and easily accessible, reducing the risk of lost work. The platform also supports version control, enabling users to track changes and revert to previous iterations if needed. This collaborative approach not only enhances productivity but also encourages diverse perspectives in design projects. By merging creativity with cutting-edge technology, Picjam transforms how teams interact and innovate. Whether working on marketing campaigns, product designs, or social media content, Picjam provides the tools necessary for efficient teamwork and creative synergy. Embrace a collaborative future with Picjam and witness how effortless and dynamic team-based design can become in the modern digital era. Furthermore, Picjam’s commitment to security and ease of use ensures that every collaborative effort is safe, efficient, and drives innovation. Enjoy a transformative design journey with Picjam. Harness the power of Picjam for success today.

Go explore

Innovative Templates and Creative Resources

Picjam offers an extensive library of innovative templates and creative resources that make visual content creation both inspiring and efficient. From modern, minimalist designs to vibrant, dynamic layouts, Picjam provides options to suit every style and occasion. Users can customize templates with ease, modifying elements such as fonts, colors, and images to reflect their unique brand identity. Picjam’s rich repository of design assets, including icons, illustrations, and background patterns, simplifies the creative process and sparks new ideas. Moreover, Picjam regularly updates its collection to keep pace with the latest design trends and technological advancements. The platform also offers tutorials and expert tips, ensuring that users can fully leverage its creative potential. With these comprehensive resources, Picjam transforms the daunting task of design into an enjoyable journey of discovery and innovation. Whether you are creating content for social media, digital marketing, or personal projects, Picjam’s diverse offerings empower you to produce visually striking and effective graphics that stand out in today’s competitive digital landscape. Furthermore, Picjam provides ongoing support and inspiration through community forums and creative workshops. These resources enable users to continuously refine their skills and explore fresh design perspectives, making Picjam an indispensable creative partner. Experience Picjam’s creative revolution.

Go explore

Conclusion

In conclusion, Picjam revolutionizes the design landscape by combining user-friendly tools, advanced optimization, collaborative features, and an extensive resource library. Picjam not only simplifies the creative process but also elevates the quality of every visual project. Its innovative approach and continuous enhancements make Picjam the smart choice for professionals and novices alike. Ready to transform your visual content? Click here and try out the offer now. Discover how Picjam can empower your creativity like never before. Act now.

Don’t miss this exclusive opportunity. Sign up today to experience Picjam’s innovative design platform and unlock unlimited creative potential now.

#Picjam#AI-powered photo editing#DIY product photos#Studio-like images#E-commerce product photography#Apparel brand visuals#Background removal#Model customization#SEO keyword generation#Professional photoshoot alternative#Time-saving photo editing#Cost-effective product imaging#High-quality product images#Diverse product imagery#Optimized e-commerce visuals#Online visibility enhancement

0 notes

Text

AQUILAA Software Review With AI Product review Generator Features - Boost Your Online Sales with AQUILAA Product Reviews Generating Tool

Boost Your Online Sales with AQUILAA Product Reviews Generating Tool In the fast-paced world of e-commerce, customer trust and visibility are key factors in driving sales. One of the most effective ways to achieve both is by leveraging high-quality product reviews. However, generating these reviews manually can be time-consuming and inefficient. That’s where AQUILAA Product Reviews Generating…

View On WordPress

#AQUILAA AI review generation tool#AQUILAA AI-based review generator#AQUILAA AI-powered review generator#AQUILAA Customer review creation tool#AQUILAA E-commerce review generator#AQUILAA Online review generation software#AQUILAA Product feedback tool#AQUILAA Product review software#AQUILAA Product review writing software#AQUILAA Product Reviews Generating Tool#AQUILAA review analytics tool#AQUILAA review Artcle creator#AQUILAA review assistant#AQUILAA review automation App#AQUILAA Review generation platform#AQUILAA review generator#AQUILAA review management tool#AQUILAA review optimization tool#AQUILAA review writing tool#Generate product reviews with AQUILAA

0 notes

Text

#AI Factory#AI Cost Optimize#Responsible AI#AI Security#AI in Security#AI Integration Services#AI Proof of Concept#AI Pilot Deployment#AI Production Solutions#AI Innovation Services#AI Implementation Strategy#AI Workflow Automation#AI Operational Efficiency#AI Business Growth Solutions#AI Compliance Services#AI Governance Tools#Ethical AI Implementation#AI Risk Management#AI Regulatory Compliance#AI Model Security#AI Data Privacy#AI Threat Detection#AI Vulnerability Assessment#AI proof of concept tools#End-to-end AI use case platform#AI solution architecture platform#AI POC for medical imaging#AI POC for demand forecasting#Generative AI in product design#AI in construction safety monitoring

0 notes

Text

The Generative AI Revolution: Transforming Industries with Brillio

The realm of artificial intelligence is experiencing a paradigm shift with the emergence of generative AI. Unlike traditional AI models focused on analyzing existing data, generative AI takes a leap forward by creating entirely new content. The generative ai technology unlocks a future brimming with possibilities across diverse industries. Let's read about the transformative power of generative AI in various sectors:

1. Healthcare Industry:

AI for Network Optimization: Generative AI can optimize healthcare networks by predicting patient flow, resource allocation, etc. This translates to streamlined operations, improved efficiency, and potentially reduced wait times.

Generative AI for Life Sciences & Pharma: Imagine accelerating drug discovery by generating new molecule structures with desired properties. Generative AI can analyze vast datasets to identify potential drug candidates, saving valuable time and resources in the pharmaceutical research and development process.

Patient Experience Redefined: Generative AI can personalize patient communication and education. Imagine chatbots that provide tailored guidance based on a patient's medical history or generate realistic simulations for medical training.

Future of AI in Healthcare: Generative AI has the potential to revolutionize disease diagnosis and treatment plans by creating synthetic patient data for anonymized medical research and personalized drug development based on individual genetic profiles.

2. Retail Industry:

Advanced Analytics with Generative AI: Retailers can leverage generative AI to analyze customer behavior and predict future trends. This allows for targeted marketing campaigns, optimized product placement based on customer preferences, and even the generation of personalized product recommendations.

AI Retail Merchandising: Imagine creating a virtual storefront that dynamically adjusts based on customer demographics and real-time buying patterns. Generative AI can optimize product assortments, recommend complementary items, and predict optimal pricing strategies.

Demystifying Customer Experience: Generative AI can analyze customer feedback and social media data to identify emerging trends and potential areas of improvement in the customer journey. This empowers retailers to take proactive steps to enhance customer satisfaction and loyalty.

3. Finance Industry:

Generative AI in Banking: Generative AI can streamline loan application processes by automatically generating personalized loan offers and risk assessments. This reduces processing time and improves customer service efficiency.

4. Technology Industry:

Generative AI for Software Testing: Imagine automating the creation of large-scale test datasets for various software functionalities. Generative AI can expedite the testing process, identify potential vulnerabilities more effectively, and contribute to faster software releases.

Generative AI for Hi-Tech: This technology can accelerate innovation in various high-tech fields by creating novel designs for microchips, materials, or even generating code snippets to enhance existing software functionalities.

Generative AI for Telecom: Generative AI can optimize network performance by predicting potential obstruction and generating data patterns to simulate network traffic scenarios. This allows telecom companies to proactively maintain and improve network efficiency.

5. Generative AI Beyond Industries:

GenAI Powered Search Engine: Imagine a search engine that understands context and intent, generating relevant and personalized results tailored to your specific needs. This eliminates the need to sift through mountains of irrelevant information, enhancing the overall search experience.

Product Engineering with Generative AI: Design teams can leverage generative AI to create new product prototypes, explore innovative design possibilities, and accelerate the product development cycle.

Machine Learning with Generative AI: Generative AI can be used to create synthetic training data for machine learning models, leading to improved accuracy and enhanced efficiency.

Global Data Studio with Generative AI: Imagine generating realistic and anonymized datasets for data analysis purposes. This empowers researchers, businesses, and organizations to unlock insights from data while preserving privacy.

6. Learning & Development with Generative AI:

L&D Shares with Generative AI: This technology can create realistic simulations and personalized training modules tailored to individual learning styles and skill gaps. Generative AI can personalize the learning experience, fostering deeper engagement and knowledge retention.

HFS Generative AI: Generative AI can be used to personalize learning experiences for employees in the human resources and financial services sector. This technology can create tailored training programs for onboarding, compliance training, and skill development.

7. Generative AI for AIOps:

AIOps (Artificial Intelligence for IT Operations) utilizes AI to automate and optimize IT infrastructure management. Generative AI can further enhance this process by predicting potential IT issues before they occur, generating synthetic data for simulating scenarios, and optimizing remediation strategies.

Conclusion:

The potential of generative AI is vast, with its applications continuously expanding across industries. As research and development progress, we can expect even more groundbreaking advancements that will reshape the way we live, work, and interact with technology.

Reference- https://articlescad.com/the-generative-ai-revolution-transforming-industries-with-brillio-231268.html

#google generative ai services#ai for network optimization#generative ai for life sciences#generative ai in pharma#generative ai in banking#generative ai in software testing#ai technology in healthcare#future of ai in healthcare#advanced analytics in retail#ai retail merchandising#generative ai for telecom#generative ai for hi-tech#generative ai for retail#learn demystifying customer experience#generative ai for healthcare#product engineering services with Genai#accelerate application modernization#patient experience with generative ai#genai powered search engine#machine learning solution with ai#global data studio with gen ai#l&d shares with gen ai technology#hfs generative ai#generative ai for aiops

0 notes

Text

Karini AI Unlocking Potential: Strategic Connectivity with Azure and Google

Generative AI is a once-in-a-generation technology, and every enterprise is in a race to embrace it to improve internal productivity across IT, engineering, finance, and HR, as well as improve product experience for external customers. Model providers are steadily improving their performance with the launch of Claude3 by Anthropic and Gemini by Google, which boast on par or better performance than Open AI’s GPT4. However, these models need enterprise context to provide quality task-specific responses. Over 80% of large enterprises utilize more than one cloud, dispersing enterprise data across multiple cloud storages. Enterprises struggle to build meaningful Gen AI applications for Retrieval Augmented Generation (RAG) with disparate datasets for quality responses.

At the launch, Karini.ai provided connectors for Amazon S3, Amazon S3 with Manifest, and Websites to crawl any website, but it had the vision to provide coverage for 70+ connectors. We are proud to launch support of additional fully featured connectors for Azure Blob Storage ,Google Cloud Storage(GCS) , Google Drive , Confluence, and Dropbox . The connectors provide an easier way to ingest data from disparate data sources with just a few clicks and build a unified interactive Generative AI application. All the connectors are fully featured:

Karini.ai includes an nifty feature called which allows the connectors to gauge the volume of source datasets and file types before executing the ingest.

Perform full initial load and subsequently perform incremental ingest, aka Change Data Capture (CDC)

Filter the source connectors using regular expressions for selective ingest. For example, to ingest only PDF files, use filter as (*.pdf)

Recursive search capabilities are available to search all child directories and subsequent directories.

With the addition of Google, Azure, Confluence, and Dropbox connectors, Karini.ai enables enterprises to unlock the true potential of Generative AI. Our comprehensive collection of connectors tackles the challenge of siloed data, allowing the creation of powerful RAG applications that leverage data from across various sources. This streamlines development and improves data quality, ultimately delivering superior GenAI experiences for internal and external users.

Karini.ai remains committed to expanding its connector ecosystem, fostering a future where Generative AI seamlessly integrates with the ever-evolving enterprise data landscape.

Build your Generative AI application today! Leverage these enterprise connectors and unify disparate cloud-based sources. With Karini.ai, you can unlock the true potential of Generative AI, streamline development, improve data quality, and deliver superior GenAI experiences.

About Karini AI:

Fueled by innovation, we're making the dream of robust Generative AI systems a reality. No longer confined to specialists, Karini.ai empowers non-experts to participate actively in building/testing/deploying Generative AI applications. As the world's first GenAIOps platform, we've democratized GenAI, empowering people to bring their ideas to life – all in one evolutionary platform.

Contact:

Jerome Mendell

(404) 891-0255

#Karini.ai#Generative AI#Google Cloud#Azure Blob Storage#Confluence#Dropbox#enterprise connectivity#RAG applications#artificial intelligence#karini ai#machine learning#chatgpt#Enterprise productivity#Internal productivity#Engineering optimization#Financial performance

0 notes

Text

AI Assistant for Wordpress: Make Blogging Easy and Efficient

Hey everyone! Just wanted to share some great news with you all. I've been using an AI assistant for content creation and it has saved me so much time! Have any of you tried it? #aicontentcreation #savingtime #writingmadeeasy #blogpost #blogger #ai #write

As a blogger, you may have experienced that writing great content can take more time than you initially planned. However, with the help of AI assistant, you can increase the speed and efficiency of your writing process, leaving you more time to focus on other important tasks. What is AI Assistant for WordPress? The AI assistant is an automated tool designed to make your writing process more…

View On WordPress

#AI#AI assistant#blogging#content creation#efficiency#idea generation#productivity#SEO optimization#technology#wordpress#writing

0 notes

Text

Honestly I'm pretty tired of supporting nostalgebraist-autoresponder. Going to wind down the project some time before the end of this year.

Posting this mainly to get the idea out there, I guess.

This project has taken an immense amount of effort from me over the years, and still does, even when it's just in maintenance mode.

Today some mysterious system update (or something) made the model no longer fit on the GPU I normally use for it, despite all the same code and settings on my end.

This exact kind of thing happened once before this year, and I eventually figured it out, but I haven't figured this one out yet. This problem consumed several hours of what was meant to be a relaxing Sunday. Based on past experience, getting to the bottom of the issue would take many more hours.

My options in the short term are to

A. spend (even) more money per unit time, by renting a more powerful GPU to do the same damn thing I know the less powerful one can do (it was doing it this morning!), or

B. silently reduce the context window length by a large amount (and thus the "smartness" of the output, to some degree) to allow the model to fit on the old GPU.

Things like this happen all the time, behind the scenes.

I don't want to be doing this for another year, much less several years. I don't want to be doing it at all.

----

In 2019 and 2020, it was fun to make a GPT-2 autoresponder bot.

[EDIT: I've seen several people misread the previous line and infer that nostalgebraist-autoresponder is still using GPT-2. She isn't, and hasn't been for a long time. Her latest model is a finetuned LLaMA-13B.]

Hardly anyone else was doing anything like it. I wasn't the most qualified person in the world to do it, and I didn't do the best possible job, but who cares? I learned a lot, and the really competent tech bros of 2019 were off doing something else.

And it was fun to watch the bot "pretend to be me" while interacting (mostly) with my actual group of tumblr mutuals.

In 2023, everyone and their grandmother is making some kind of "gen AI" app. They are helped along by a dizzying array of tools, cranked out by hyper-competent tech bros with apparently infinite reserves of free time.

There are so many of these tools and demos. Every week it seems like there are a hundred more; it feels like every day I wake up and am expected to be familiar with a hundred more vaguely nostalgebraist-autoresponder-shaped things.

And every one of them is vastly better-engineered than my own hacky efforts. They build on each other, and reap the accelerating returns.

I've tended to do everything first, ahead of the curve, in my own way. This is what I like doing. Going out into unexplored wilderness, not really knowing what I'm doing, without any maps.

Later, hundreds of others with go to the same place. They'll make maps, and share them. They'll go there again and again, learning to make the expeditions systematically. They'll make an optimized industrial process of it. Meanwhile, I'll be locked in to my own cottage-industry mode of production.

Being the first to do something means you end up eventually being the worst.

----

I had a GPT chatbot in 2019, before GPT-3 existed. I don't think Huggingface Transformers existed, either. I used the primitive tools that were available at the time, and built on them in my own way. These days, it is almost trivial to do the things I did, much better, with standardized tools.

I had a denoising diffusion image generator in 2021, before DALLE-2 or Stable Diffusion or Huggingface Diffusers. I used the primitive tools that were available at the time, and built on them in my own way. These days, it is almost trivial to do the things I did, much better, with standardized tools.

Earlier this year, I was (probably) one the first people to finetune LLaMA. I manually strapped LoRA and 8-bit quantization onto the original codebase, figuring out everything the hard way. It was fun.

Just a few months later, and your grandmother is probably running LLaMA on her toaster as we speak. My homegrown methods look hopelessly antiquated. I think everyone's doing 4-bit quantization now?

(Are they? I can't keep track anymore -- the hyper-competent tech bros are too damn fast. A few months from now the thing will be probably be quantized to -1 bits, somehow. It'll be running in your phone's browser. And it'll be using RLHF, except no, it'll be using some successor to RLHF that everyone's hyping up at the time...)

"You have a GPT chatbot?" someone will ask me. "I assume you're using AutoLangGPTLayerPrompt?"

No, no, I'm not. I'm trying to debug obscure CUDA issues on a Sunday so my bot can carry on talking to a thousand strangers, every one of whom is asking it something like "PENIS PENIS PENIS."

Only I am capable of unplugging the blockage and giving the "PENIS PENIS PENIS" askers the responses they crave. ("Which is ... what, exactly?", one might justly wonder.) No one else would fully understand the nature of the bug. It is special to my own bizarre, antiquated, homegrown system.

I must have one of the longest-running GPT chatbots in existence, by now. Possibly the longest-running one?

I like doing new things. I like hacking through uncharted wilderness. The world of GPT chatbots has long since ceased to provide this kind of value to me.

I want to cede this ground to the LLaMA techbros and the prompt engineers. It is not my wilderness anymore.

I miss wilderness. Maybe I will find a new patch of it, in some new place, that no one cares about yet.

----

Even in 2023, there isn't really anything else out there quite like Frank. But there could be.

If you want to develop some sort of Frank-like thing, there has never been a better time than now. Everyone and their grandmother is doing it.

"But -- but how, exactly?"

Don't ask me. I don't know. This isn't my area anymore.

There has never been a better time to make a GPT chatbot -- for everyone except me, that is.

Ask the techbros, the prompt engineers, the grandmas running OpenChatGPT on their ironing boards. They are doing what I did, faster and easier and better, in their sleep. Ask them.

5K notes

·

View notes

Text

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to sort of carefully selected "archetypal" images, ideally ones that were already generated using generative AI using a prompt for the generic concept to be attacked (which is what the authors did in their paper). Also, the image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, in order to attack a large model there would have to be many thousands of samples in the training data. Obviously if you have a webpage that you created specifically to host a massive gallery poisoned images, that can be fairly easily blacklisted, so you'd have to have a lot of patience and resources in order to hide these enough so they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large scale use case for me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running through another resource heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like a small fry in comparison to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists for that is a talking point hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than the massive corporate monoliths who are probably working to secure proprietary data sets, like I believe Adobe Firefly did. I don't like how these attacks concentrate the power up.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. I suppose with public data scraping that sort of thing is fair game I guess.

Additionally, since making my first reply I've had a look at their website:

Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible for models to be created and trained by without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to help counter the economic displacement without worker protection that is the real issue with AI systems deployment, but will exacerbate the problem of the benefits of those systems being more constrained to said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).

1K notes

·

View notes

Text

☁︎。⋆。 ゚☾ ゚。⋆ how to resume ⋆。゚☾。⋆。 ゚☁︎ ゚

after 10 years & 6 jobs in corporate america, i would like to share how to game the system. we all want the biggest payoff for the least amount of work, right?

know thine enemy: beating the robots

i see a lot of misinformation about how AI is used to scrape resumes. i can't speak for every company but most corporations use what is called applicant tracking software (ATS).

no respectable company is using chatgpt to sort applications. i don't know how you'd even write the prompt to get a consumer-facing product to do this. i guarantee that target, walmart, bank of america, whatever, they are all using B2B SaaS enterprise solutions. there is not one hiring manager plinking away at at a large language model.

ATS scans your resume in comparison to the job posting, parses which resumes contain key words, and presents the recruiter and/or hiring manager with resumes with a high "score." the goal of writing your resume is to get your "score" as high as possible.

but tumblr user lightyaoigami, how do i beat the robots?

great question, y/n. you will want to seek out an ATS resume checker. i have personally found success with jobscan, which is not free, but works extremely well. there is a free trial period, and other ATS scanners are in fact free. some of these tools are so sophisticated that they can actually help build your resume from scratch with your input. i wrote my own resume and used jobscan to compare it to the applications i was finishing.

do not use chatgpt to write your resume or cover letter. it is painfully obvious. here is a tutorial on how to use jobscan. for the zillionth time i do not work for jobscan nor am i a #jobscanpartner i am just a person who used this tool to land a job at a challenging time.

the resume checkers will tell you what words and/or phrases you need to shoehorn into your bullet points - i.e., if you are applying for a job that requires you to be a strong collaborator, the resume checker might suggest you include the phrase "cross-functional teams." you can easily re-word your bullets to include this with a little noodling.

don't i need a cover letter?

it depends on the job. after you have about 5 years of experience, i would say that they are largely unnecessary. while i was laid off, i applied to about 100 jobs in a three-month period (#blessed to have been hired quickly). i did not submit a cover letter for any of them, and i had a solid rate of phone screens/interviews after submission despite not having a cover letter. if you are absolutely required to write one, do not have chatgpt do it for you. use a guide from a human being who knows what they are talking about, like ask a manager or betterup.

but i don't even know where to start!

i know it's hard, but you have to have a bit of entrepreneurial spirit here. google duckduckgo is your friend. don't pull any bean soup what-about-me-isms. if you truly don't know where to start, look for an ATS-optimized resume template.

a word about neurodivergence and job applications

i, like many of you, am autistic. i am intimately familiar with how painful it is to expend limited energy on this demoralizing task only to have your "reward" be an equally, if not more so, demoralizing work experience. i don't have a lot of advice for this beyond craft your worksona like you're making a d&d character (or a fursona or a sim or an OC or whatever made up blorbo generator you personally enjoy).

and, remember, while a lot of office work is really uncomfortable and involves stuff like "talking in meetings" and "answering the phone," these things are not an inherent risk. discomfort is not tantamount to danger, and we all have to do uncomfortable things in order to thrive. there are a lot of ways to do this and there is no one-size-fits-all answer. not everyone can mask for extended periods, so be your own judge of what you can or can't do.

i like to think of work as a drag show where i perform this other personality in exchange for money. it is much easier to do this than to fight tooth and nail to be unmasked at work, which can be a risk to your livelihood and peace of mind. i don't think it's a good thing that we have to mask at work, but it's an important survival skill.

⋆。゚☁︎。⋆。 ゚☾ ゚。⋆ good luck ⋆。゚☾。⋆。 ゚☁︎ ゚。⋆

619 notes

·

View notes

Text

Discover how generative AI solves manufacturing challenges: predictive maintenance, optimized design, quality control, and supply chain efficiency. Innovate your production today!

#AI-Driven Production Enhancements#Generative AI For Process Automation#AI In Manufacturing Intelligence#Generative AI For Manufacturing Improvement#AI In Industrial Efficiency#AI-Enhanced Manufacturing Workflows#Generative AI For Operational Excellence#AI In Production Management#AI-Driven Manufacturing Optimization#Generative AI For Supply Chain Resilience#AI In Process Innovation#AI In Manufacturing Performance#Generative AI For Manufacturing Analytics#AI In Production Quality#AI-Powered Factory Efficiency#Generative AI For Cost-Effective Manufacturing

0 notes

Text

AQUILAA Profit Generating Tool Review SEO-optimized Technology - The Ultimate Profit-Generating Tool for Effortless Product Marketing and Start Earning $1,700 Per Month Today With This Amazing Profit Generating Tool!

AQUILAA Profit Generating Tool Can Helps marketers, ecom owners, bloggers and affiliates generate $1700/month using our In-built Lead Gen CTA connected to your favorite Autoresponder and tap into Free traffic Sources all integrated into the software. Ready to Make Money with Review Blogging in 2025? Writing product reviews is one of the best ways to earn money online. About 87% of shoppers read…

View On WordPress

#AQUILAA Affiliate marketing software#AQUILAA AI affiliate marketing tool#AQUILAA AI product review writer#AQUILAA AI-driven content system#AQUILAA AI-powered review generator#AQUILAA Automated content marketing#AQUILAA Automated review writing software#AQUILAA Conversion-boosting tool#AQUILAA Digital marketing automation#AQUILAA E-commerce marketing tool#AQUILAA High-converting content creator#AQUILAA Instant review generator#AQUILAA Lead capture automation#AQUILAA Online business growth tool#AQUILAA Passive income tool#AQUILAA Profit Generating Tool#AQUILAA Profit-generating software#AQUILAA Review post automation#AQUILAA Sales funnel optimization#AQUILAA SEO-optimized product reviews#AQUILAA Smart content generation

1 note

·

View note

Note

I think the reason I dislike AI generative software (I'm fine with AI analysis tools, like for example splitting audio into tracks) is that I am against algorithmically generated content. I don't like the internet as a pit of content slop. AI art isn't unique in that regard, and humans can make algorithmically generated content too (look at youtube for example). AI makes it way easier to churn out content slop and makes searching for non-slop content more difficult.

yeah i basically wholeheartedly agree with this. you are absolutely right to point out that this is a problem that far predates AI but has been exacerbated by the ability to industrialise production. Content Slop is absolutely one of the first things i think of when i use that "if you lose your job to AI, it means it was already automated" line -- the job of a listicle writer was basically to be a middleman between an SEO optimization tool and the Google Search algorithm. the production of that kind of thing was already being made by a computer for a computer, AI just makes it much faster and cheaper because you don't have to pay a monkey to communicate between the two machines. & ai has absolutely made this shit way more unbearable but ultimately y'know the problem is capitalism incentivising the creation of slop with no purpose other than to show up in search results

848 notes

·

View notes

Text

Google search really has been taken over by low-quality SEO spam, according to a new, year-long study by German researchers. The researchers, from Leipzig University, Bauhaus-University Weimar, and the Center for Scalable Data Analytics and Artificial Intelligence, set out to answer the question "Is Google Getting Worse?" by studying search results for 7,392 product-review terms across Google, Bing, and DuckDuckGo over the course of a year. They found that, overall, "higher-ranked pages are on average more optimized, more monetized with affiliate marketing, and they show signs of lower text quality ... we find that only a small portion of product reviews on the web uses affiliate marketing, but the majority of all search results do." They also found that spam sites are in a constant war with Google over the rankings, and that spam sites will regularly find ways to game the system, rise to the top of Google's rankings, and then will be knocked down. "SEO is a constant battle and we see repeated patterns of review spam entering and leaving the results as search engines and SEO engineers take turns adjusting their parameters," they wrote.

[...]

The researchers warn that this rankings war is likely to get much worse with the advent of AI-generated spam, and that it genuinely threatens the future utility of search engines: "the line between benign content and spam in the form of content and link farms becomes increasingly blurry—a situation that will surely worsen in the wake of generative AI. We conclude that dynamic adversarial spam in the form of low-quality, mass-produced commercial content deserves more attention."

332 notes

·

View notes

Text

I don't think androids store memories as videos or that they can even be extracted as ones. Almost, but not exactly.

Firstly, because their memories include other data such as their tactile information, their emotional state, probably 3d markers of their surrounding...a lot of different information. So, their memories are not in a video-format, but some kind of a mix of many things, that may not be as easily separated from each other. I don't think a software necessary to read those types of files are publicly available.

Even if they have some absolute massive storage, filming good-quality videos and storing them is just not an optimal way to use their resources. It's extremely wasteful. I think, instead, their memories consist of snapshots that are taken every once in a while (depending on how much is going on), that consist of compressed version of all their relevant inputs like mentioned above. Like, a snapshot of a LiDAR in a specific moment + heavily compressed photo with additional data about some details that'll later help to upscale it and interpolate from one snapshot into the next one, some audio samples of the voices and transcript of the conversation so that it'd take less storage to save. My main point is, their memories are probably stored in a format that not only doesn't actually contain original video material, but is a product of some extreme compression, and in this case reviewing memories is not like watching HD video footage, but rather an ai restoration of those snapshots. Perhaps it may be eventually converted into some sort of a video readable to human eye, but it would be more of an ai-generated video from specific snapshots with standardised prompts with some parts of the image/audio missing than a perfectly exact video recording.

When Connor extracts video we see that they are a bit glitchy. It may be attributed to some details getting lost during transmission from one android to another, but then we've also got flashbacks with android's own memories, that are just as "glitchy". Which kinda backs up a theory of it being a restoration of some sort of a compressed version rather than original video recording.

Then we've also got that scene where Josh records Markus where it is shown that when he starts to film, his eyes indicate the change that he is not just watching but recording now. Which means that is an option, but not the default. I find it a really nice detail. Like, androids can record videos, but then the people around them can see exactly when they do that, and "be at ease" when they don't. It may be purely a design choice, like that of the loading bar to signalise that something is in progress and not just frozen, or mandatory shutter sound effect on smartphones cameras in Japan.

So, yeah. Androids purpose is to correctly interpret their inputs and store relevant information about it in their long term memory, and not necessarily to record every present moment in a video-archive that will likely never be seen by a human and reviewed as a pure video footage again. If it happened to be needed to be seen — it'll be restored as a "video" file, but this video won't be an actual video recording unless android was specifically set to record mode.

119 notes

·

View notes

Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here honestly it probably deserves to be and I either forgot or I classified it more under "general IC's" instead of "computer chips" (e.g. 555, LM, 4000, 7000 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502, I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

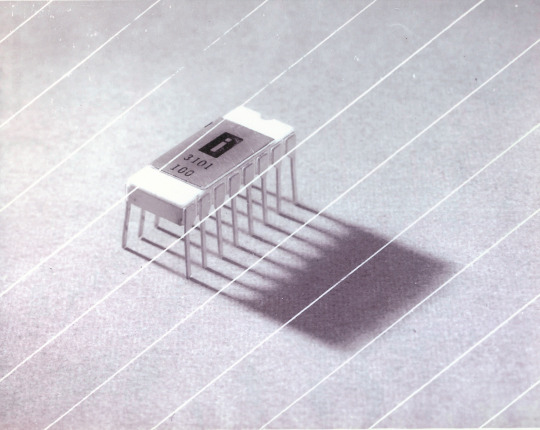

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this, in the 60's you could run a chip fab out of a sufficiently well sealed garage, but they were busy building the background that would lead to the next sixty years.

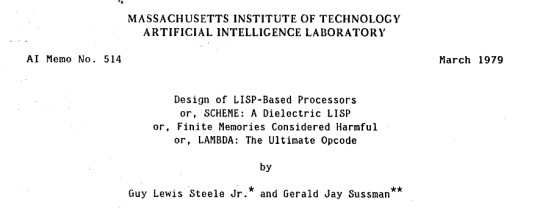

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left labs, there have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designd aimed to run OpenGenera. I bet you there are no less than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizzare. It is a software compatible clone of the Intel 8080, which is to say that it has the same instructions implemented in a completely different way.

This is, a strange choice, but it was the right one somehow because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80's even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: What follows is a joke, but only barely

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the C/PM computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80 based devices probably launched several dozen hardware companies that persist to this day and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast, you can go to RS components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acidburn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC microcode, it has branch prediction, it has a bunch of zany mathematical optimizations, none of these are new per se but this is the first time you're really seeing them all at once on a chip that was going into PC's.

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P5 we live in a very, very different world from a computer hardware perspective.

From this falls many of the bizzare microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly in chip with a second, smaller unix hidden inside your processor eventually you're not going to be cryptographically secure.

RISC is very clearly better for, most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like "you know pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones.

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here it's mostly microcontrollers in part because from here it gets pretty monotonous because Intel basically wins for a while. I might pick that up later. Also if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I have in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here it's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

235 notes

·

View notes