#Fabric SQL Database


Text

🚀 Struggling to balance transactional (OLTP) & analytical (OLAP) workloads? Microsoft Fabric SQL Database is the game-changer! In this blog, I’ll share best practices, pitfalls to avoid, and optimization tips to help you master Fabric SQL DB. Let’s dive in! 💡💬 #MicrosoftFabric #SQL

#Data management#Database Benefits#Database Optimization#Database Tips#Developer-Friendly#Fabric SQL Database#Microsoft Fabric#SQL database#SQL Performance#Transactional Workloads#Unlock SQL Potential


Text

#DataAnalytics#Analytics#Conference#Training#DataWarehousing#Azure#Database#BusinessIntelligence#Realtime#Microsoft#Fabric#DataPlatform#DataEngineering#SQL#Reporting#Insights#Visualization#DAX#PowerQuery#Administration#DBA#DataScience#MachineLearning#AI#MicrosoftAI#Architecture#BestPractices


Text

Master SQL in Microsoft Fabric – The Smart Way!

Setting up and optimizing SQL databases in Microsoft Fabric is a game-changer for businesses handling large-scale data. With built-in scalability, real-time analytics, and seamless integration with Power BI, Microsoft Fabric is redefining data management.

Want to streamline your database workflows and enhance performance? Dive into our expert guide and get started today!


Text

Accelerating Digital Transformation with Acuvate’s MVP Solutions

A Minimum Viable Product (MVP) is a basic version of a product designed to test its concept with early adopters, gather feedback, and validate market demand before full-scale development. Implementing an MVP is vital for startups, as statistics indicate that 90% of startups fail because they do not understand how to use an MVP. An MVP helps mitigate risks, achieve a faster time to market, and save costs by focusing on essential features and testing the product idea before fully committing to its development.

• Verifying Product Concepts: Validates product ideas and confirms market demand before full development.

• Gathering User Feedback: Collects insights from real users to improve future iterations.

• Establishing Product-Market Fit: Determines if the product resonates with the target market.

• Faster Time-to-Market: Enables quicker product launch with fewer features.

• Risk Mitigation: Limits risk by testing the product with real users before large investments.

• Gathering User Feedback: Provides insights that help prioritize valuable features for future development.

Here are Acuvate’s tailored MVP models for diverse business needs:

Data HealthCheck MVP (Minimum Viable Product)

Many organizations face challenges with fragmented data, outdated governance, and inefficient pipelines, leading to delays and missed opportunities. Acuvate’s expert assessment offers:

Detailed analysis of your current data architecture and interfaces.

A clear, actionable roadmap for a future-state ecosystem.

A comprehensive end-to-end data strategy for collection, manipulation, storage, and visualization.

Advanced data governance with contextualized insights.

Identification of AI/ML/MV/Gen-AI integration opportunities and cloud cost optimization.

Tailored MVP proposals for immediate impact.

Quick wins and a solid foundation for long-term success with Acuvate’s Data HealthCheck.


Microsoft Fabric Deployment MVP

Is your organization facing challenges with data silos and slow decision-making? Don’t let outdated infrastructure hinder your digital progress.

Acuvate’s Microsoft Fabric Deployment MVP offers rapid transformation with:

Expert implementation of Microsoft Fabric Data and AI Platform, tailored to your scale and security needs using our AcuWeave data migration tool.

Full Microsoft Fabric setup, including Azure sizing, datacenter configuration, and security.

Smooth data migration from existing databases (MS Synapse, SQL Server, Oracle) to Fabric OneLake via AcuWeave.

Strong data governance (based on Microsoft Purview) with role-based access and robust security.

Two custom Power BI dashboards to turn your data into actionable insights.


Tableau to Power BI Migration MVP

Are rising Tableau costs and limited integration holding back your business intelligence? Don’t let legacy tools limit your data potential.

With Acuvate’s Tableau to Power BI Migration MVP, you’ll get:

Smooth migration of up to three Tableau dashboards to Power BI, preserving key business insights using our AcuWeave tool.

Full Microsoft Fabric setup with optimized Azure configuration and datacenter placement for maximum performance.

Optional data migration to Fabric OneLake for seamless, unified data management.


Digital Twin Implementation MVP

Acuvate’s Digital Twin service, integrating AcuPrism and KDI Kognitwin, creates a unified, real-time digital representation of your facility for smarter decisions and operational excellence. Here’s what we offer:

Implement KDI Kognitwin SaaS Integrated Digital Twin MVP.

Overcome disconnected systems, outdated workflows, and siloed data with tailored integration.

Set up AcuPrism (Databricks or MS Fabric) in your preferred cloud environment.

Seamlessly integrate SAP ERP and Aveva PI data sources.

Establish strong data governance frameworks.

Incorporate 3D laser-scanned models of your facility into KDI Kognitwin (assuming you provide the scan).

Enable real-time data exchange and visibility by linking AcuPrism and KDI Kognitwin.

Visualize SAP ERP and Aveva PI data in an interactive digital twin environment.


MVP for Oil & Gas Production Optimization

Acuvate’s MVP offering integrates AcuPrism and AI-driven dashboards to optimize production in the Oil & Gas industry by improving visibility and streamlining operations. Key features include:

Deploy AcuPrism Enterprise Data Platform on Databricks or MS Fabric in your preferred cloud (Azure, AWS, GCP).

Integrate two key data sources for real-time or preloaded insights.

Apply Acuvate’s proven data governance framework.

Create two AI-powered MS Power BI dashboards focused on production optimization.


Manufacturing OEE Optimization MVP

Acuvate’s OEE Optimization MVP leverages AcuPrism and AI-powered dashboards to boost manufacturing efficiency, reduce downtime, and optimize asset performance. Key features include:

Deploy AcuPrism on Databricks or MS Fabric in your chosen cloud (Azure, AWS, GCP).

Integrate and analyze two key data sources (real-time or preloaded).

Implement data governance to ensure accuracy.

Gain actionable insights through two AI-driven MS Power BI dashboards for OEE monitoring.


Achieve Transformative Results with Acuvate’s MVP Solutions for Business Optimization

Acuvate’s MVP solutions provide businesses with rapid, scalable prototypes that test key concepts, reduce risks, and deliver quick results. By leveraging AI, data governance, and cloud platforms, we help optimize operations and streamline digital transformation. Our approach ensures you gain valuable insights and set the foundation for long-term success.

Conclusion

Scaling your MVP into a fully deployed solution is easy with Acuvate’s expertise and customer-focused approach. We help you optimize data governance, integrate AI, and enhance operational efficiencies, turning your digital transformation vision into reality.

Accelerate Growth with Acuvate’s Ready-to-Deploy MVPs

Get in Touch with Acuvate Today!

Are you ready to transform your MVP into a powerful, scalable solution? Contact Acuvate to discover how we can support your journey from MVP to full-scale implementation. Let’s work together to drive innovation, optimize performance, and accelerate your success.

#MVP#MinimumViableProduct#BusinessOptimization#DigitalTransformation#AI#CloudSolutions#DataGovernance#MicrosoftFabric#DataStrategy#PowerBI#DigitalTwin#AIIntegration#DataMigration#StartupGrowth#TechSolutions#ManufacturingOptimization#OilAndGasTech#BusinessIntelligence#AgileDevelopment#TechInnovation


Text

Microsoft Fabric data warehouse


What Is Microsoft Fabric and Why You Should Care?

A unified Software as a Service (SaaS) offering that provides an end-to-end analytics platform

Brings a set of tools together in one place; Microsoft Fabric OneLake supports seamless integration and enables collaboration on this unified data analytics platform

Scalable Analytics

Accessibility from anywhere with an internet connection

Streamlines collaboration among data professionals

Supports a low-code to no-code approach

Components of Microsoft Fabric

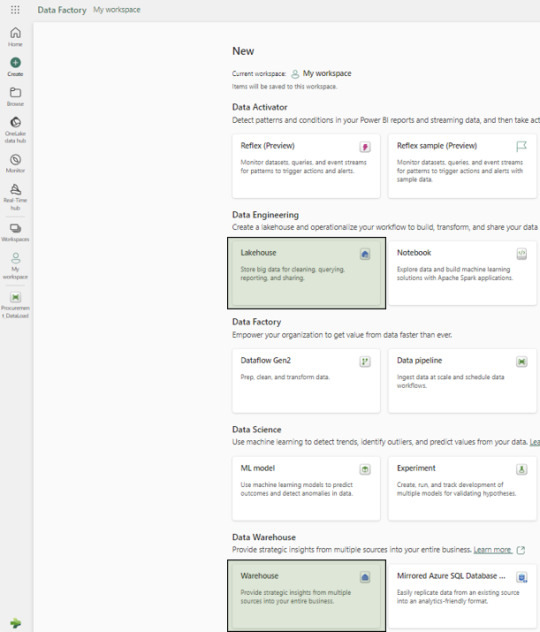

Fabric provides comprehensive data analytics solutions, encompassing services for data movement and transformation, analysis and actions, and deriving insights and patterns through machine learning. Although Microsoft Fabric includes several components, this article will use three primary experiences: Data Factory, Data Warehouse, and Power BI.

Lakehouse vs. Warehouse: Which Data Storage Solution is Right for You?

In simple terms, the underlying storage format in both Lakehouses and Warehouses is the Delta format, an enhanced version of the Parquet format.

Usage and Format Support

A Lakehouse combines the capabilities of a data lake and a data warehouse, supporting unstructured, semi-structured, and structured formats. In contrast, a Warehouse supports only structured formats.

When your organization needs to process big data characterized by high volume, velocity, and variety, and when you require data loading and transformation using Spark engines via notebooks, a Lakehouse is recommended. A Lakehouse can process both structured tables and unstructured/semi-structured files, offering managed and external table options. Microsoft Fabric OneLake serves as the foundational layer for storing structured and unstructured data. Notebooks can be used for READ and WRITE operations in a Lakehouse. However, you cannot connect to a Lakehouse directly with a SQL client; you must go through its SQL endpoint.

On the other hand, a Warehouse excels in processing and storing structured formats, utilizing stored procedures, tables, and views. Processing data in a Warehouse requires only T-SQL knowledge. It functions similarly to a typical RDBMS database but with a different internal storage architecture, as each table’s data is stored in the Delta format within OneLake. Users can access Warehouse data directly using any SQL client or the in-built graphical SQL editor, performing READ and WRITE operations with T-SQL and its elements like stored procedures and views. Notebooks can also connect to the Warehouse, but only for READ operations.

A SQL endpoint is a connection point that lets other programs talk to a database or storage system using SQL. Through the endpoint you can run queries to retrieve information, such as searching for specific data, and, where the endpoint permits it, change that data. It’s a bit like using a search engine to find things on the internet, but for your data stored in Fabric.

Choosing Between Lakehouse and Warehouse

The decision to use a Lakehouse or Warehouse depends on several factors:

Migrating from a Traditional Data Warehouse: If your organization does not have big data processing requirements, a Warehouse is suitable.

Migrating from a Mixed Big Data and Traditional RDBMS System: If your existing solution includes both a big data platform and traditional RDBMS systems with structured data, using both a Lakehouse and a Warehouse is ideal. Perform big data operations with notebooks connected to the Lakehouse and RDBMS operations with T-SQL connected to the Warehouse.

Note: In both scenarios, once the data resides in either a Lakehouse or a Warehouse, Power BI can connect to both using SQL endpoints.

A Glimpse into the Data Factory Experience in Microsoft Fabric

In the Data Factory experience, we focus primarily on two items: Data Pipeline and Data Flow.

Data Pipelines

Used to orchestrate different activities for extracting, loading, and transforming data.

Ideal for building reusable code that can be utilized across other modules.

Enables activity-level monitoring.

To what can we compare Data Pipelines?

Data Pipelines are similar to, but not the same as:

Informatica -> Workflows

ODI -> Packages

Dataflows

Utilized when a GUI tool with Power Query UI experience is required for building Extract, Transform, and Load (ETL) logic.

Employed when individual selection of source and destination components is necessary, along with the inclusion of various transformation logic for each table.

To what can we compare Dataflows?

Dataflows are similar to, but not the same as:

Informatica -> Mappings

ODI -> Mappings / Interfaces

Are You Ready to Migrate Your Data Warehouse to Microsoft Fabric?

Here is our solution for implementing the Medallion Architecture with Fabric Data Warehouse:

Creation of New Workspace

We recommend creating separate workspaces for Semantic Models, Reports, and Data Pipelines as a best practice.

Creation of Warehouse and Lakehouse

Follow the on-screen instructions to set up a new Lakehouse and a Warehouse.

Configuration Setups

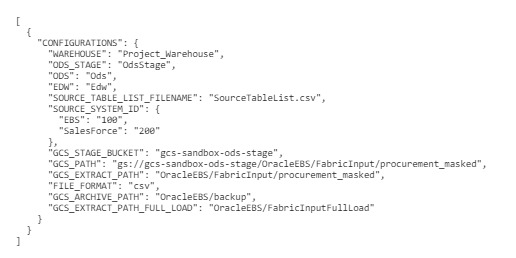

Create a configurations.json file containing parameters for data pipeline activities:

Source schema, buckets, and path

Destination warehouse name

Names of the warehouse layers (bronze, silver, and gold): OdsStage, Ods, and Edw

List of source tables/files in a specific format

Source system IDs for the different sources

Below is a screenshot of the configuration file (config_variables.json):

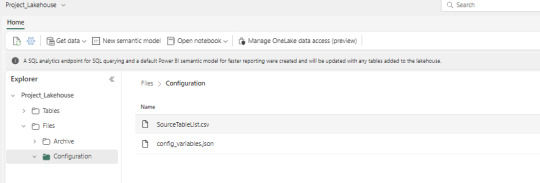
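As a rough illustration of the kind of file described above, the sketch below parses a small configuration and checks that the expected sections are present. Every key name and value in it is an assumption made for the example; only the layer names OdsStage, Ods, and Edw and the SourceTableList.csv file name come from the article.

```python
import json

# Hypothetical configuration: all key names and values are illustrative
# assumptions, except the layer names and the CSV file name from the article.
EXAMPLE_CONFIG = """
{
  "source": {"schema": "sales", "bucket": "example-bucket", "path": "exports/daily"},
  "destination_warehouse": "ExampleWarehouse",
  "layers": {"bronze": "OdsStage", "silver": "Ods", "gold": "Edw"},
  "source_table_list": "SourceTableList.csv",
  "source_system_ids": {"ERP": 1, "CRM": 2}
}
"""

REQUIRED_KEYS = {
    "source",
    "destination_warehouse",
    "layers",
    "source_table_list",
    "source_system_ids",
}


def load_config(text: str) -> dict:
    """Parse the JSON text and fail early if a required section is missing."""
    config = json.loads(text)
    missing = REQUIRED_KEYS - set(config)
    if missing:
        raise ValueError(f"missing configuration keys: {sorted(missing)}")
    return config


config = load_config(EXAMPLE_CONFIG)
print(config["layers"]["bronze"])  # prints "OdsStage"
```

Validating the file once, before any pipeline activity reads from it, keeps a misspelled or missing parameter from surfacing halfway through a load.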

File Placement

Place the configurations.json and SourceTableList.csv files in the Fabric Lakehouse.

SourceTableList.csv contains columns such as SourceSystem, SourceDatasetId, TableName, PrimaryKey, UpdateKey, CDCColumnName, SoftDeleteColumn, ArchiveDate, and ArchiveKey.

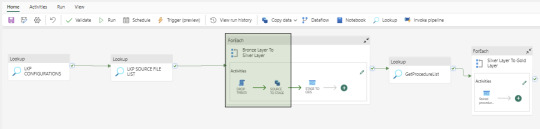

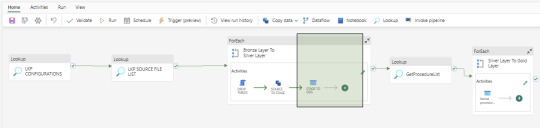

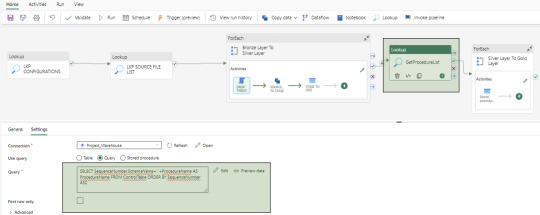
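To make the shape of SourceTableList.csv concrete, here is a minimal sketch that reads a file with the columns listed above into typed records. The two sample rows and the `SourceTable` class are invented for illustration and do not come from the article.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class SourceTable:
    """One row of SourceTableList.csv (hypothetical helper type)."""
    source_system: str
    source_dataset_id: str
    table_name: str
    primary_key: str
    update_key: str
    cdc_column_name: str
    soft_delete_column: str
    archive_date: str
    archive_key: str


# Header matches the columns named in the article; the rows are made up.
SAMPLE_CSV = """SourceSystem,SourceDatasetId,TableName,PrimaryKey,UpdateKey,CDCColumnName,SoftDeleteColumn,ArchiveDate,ArchiveKey
ERP,1,Customer,CustomerId,CustomerId,ModifiedDate,IsDeleted,,
ERP,1,SalesOrder,OrderId,OrderId,ModifiedDate,IsDeleted,,
"""


def read_source_tables(text: str) -> list[SourceTable]:
    """Turn CSV text into a list of SourceTable records."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        SourceTable(
            source_system=row["SourceSystem"],
            source_dataset_id=row["SourceDatasetId"],
            table_name=row["TableName"],
            primary_key=row["PrimaryKey"],
            update_key=row["UpdateKey"],
            cdc_column_name=row["CDCColumnName"],
            soft_delete_column=row["SoftDeleteColumn"],
            archive_date=row["ArchiveDate"],
            archive_key=row["ArchiveKey"],
        )
        for row in reader
    ]


tables = read_source_tables(SAMPLE_CSV)
print([t.table_name for t in tables])
```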

Data Pipeline Creation

Create a data pipeline to orchestrate the activities for data extraction, loading, and transformation. The pipeline uses activities such as Lookup, ForEach, Script, Copy Data, and Stored Procedure.

Bronze Layer Loading

Develop a dynamic activity to load data into the Bronze Layer (OdsStage schema in Warehouse). This layer truncates and reloads data each time.

We utilize two activities in this layer: Script Activity and Copy Data Activity. Both activities receive parameterized inputs from the Configuration file and SourceTableList file. The Script activity drops the staging table, and the Copy Data activity creates and loads data into the OdsStage table. These activities are reusable across modules and feature powerful capabilities for fast data loading.
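The truncate-and-reload pattern described above can be sketched as a small planning function that produces, for each source table, the drop statement a Script activity would run and the staging table a Copy Data activity would then create and fill. The function names and dictionary keys are hypothetical, and the sketch assumes table names come from a trusted list such as SourceTableList.csv.

```python
def quote_name(name: str) -> str:
    """Wrap an identifier in square brackets, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def bronze_drop_statement(table_name: str, stage_schema: str = "OdsStage") -> str:
    """T-SQL that removes the staging table so the copy step can recreate it."""
    return f"DROP TABLE IF EXISTS {quote_name(stage_schema)}.{quote_name(table_name)};"


def bronze_plan(table_names: list[str], stage_schema: str = "OdsStage") -> list[dict]:
    """One entry per table: the script to run first, then the copy target."""
    return [
        {
            "table": name,
            "pre_copy_script": bronze_drop_statement(name, stage_schema),
            "copy_target": f"{stage_schema}.{name}",
        }
        for name in table_names
    ]


for step in bronze_plan(["Customer", "SalesOrder"]):
    print(step["pre_copy_script"], "->", step["copy_target"])
```

Dropping and recreating the table on every run keeps the staging layer free of leftovers from earlier loads, which is what makes the same pair of activities reusable for any table in the list.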

Silver Layer Loading

Establish a dynamic activity to UPSERT data into the Silver layer (Ods schema in Warehouse) using a stored procedure activity. This procedure takes parameterized inputs from the Configuration file and SourceTableList file and handles both UPDATE and INSERT operations, so it is reusable across tables. At the time of writing, MERGE statements were not supported by Fabric Warehouse, although the feature may be added in the future.
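Without MERGE, an upsert is typically split into an UPDATE of rows that already exist followed by an INSERT of rows that do not. The sketch below generates such a pair of statements for one table; it is not the article's stored procedure. The function name and parameters are hypothetical, the generated T-SQL follows general SQL Server syntax and has not been validated against Fabric Warehouse, and it assumes a single-column key and trusted identifier names.

```python
def build_upsert_sql(
    table: str,
    key: str,
    columns: list[str],
    stage_schema: str = "OdsStage",
    target_schema: str = "Ods",
) -> list[str]:
    """Return [update_sql, insert_sql] emulating an upsert without MERGE."""
    non_key = [c for c in columns if c != key]
    if key not in columns or not non_key:
        raise ValueError("columns must include the key and at least one other column")

    target = f"[{target_schema}].[{table}]"
    stage = f"[{stage_schema}].[{table}]"
    set_clause = ", ".join(f"t.[{c}] = s.[{c}]" for c in non_key)
    column_list = ", ".join(f"[{c}]" for c in columns)
    select_list = ", ".join(f"s.[{c}]" for c in columns)

    # Step 1: overwrite rows whose key already exists in the target.
    update_sql = (
        f"UPDATE t SET {set_clause} "
        f"FROM {target} AS t "
        f"INNER JOIN {stage} AS s ON t.[{key}] = s.[{key}];"
    )
    # Step 2: add rows whose key is not yet present in the target.
    insert_sql = (
        f"INSERT INTO {target} ({column_list}) "
        f"SELECT {select_list} FROM {stage} AS s "
        f"WHERE NOT EXISTS (SELECT 1 FROM {target} AS t WHERE t.[{key}] = s.[{key}]);"
    )
    return [update_sql, insert_sql]


for statement in build_upsert_sql("Customer", "CustomerId", ["CustomerId", "Name", "City"]):
    print(statement)
```

Running the UPDATE before the INSERT means freshly inserted rows are not touched a second time in the same run.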

Control Table Creation

Create a control table in the Warehouse with columns containing Sequence Numbers and Procedure Names to manage dependencies between Dimension, Fact, and Aggregate tables. Then fetch the values using a Lookup activity.
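One way to picture how such a control table drives the load order: rows are sorted by sequence number, and procedures that share a number form a batch whose members do not depend on each other. The rows and procedure names below are invented for the example; only the two column names follow the article.

```python
from itertools import groupby

# Hypothetical rows, as a Lookup activity might return them (in any order).
control_rows = [
    {"SequenceNumber": 2, "ProcedureName": "Edw.LoadFactSales"},
    {"SequenceNumber": 1, "ProcedureName": "Edw.LoadDimCustomer"},
    {"SequenceNumber": 1, "ProcedureName": "Edw.LoadDimProduct"},
    {"SequenceNumber": 3, "ProcedureName": "Edw.LoadAggMonthlySales"},
]


def execution_batches(rows: list[dict]) -> list[list[str]]:
    """Group procedure names by sequence number, lowest sequence first."""
    ordered = sorted(rows, key=lambda r: (r["SequenceNumber"], r["ProcedureName"]))
    return [
        [row["ProcedureName"] for row in group]
        for _, group in groupby(ordered, key=lambda r: r["SequenceNumber"])
    ]


batches = execution_batches(control_rows)
for number, batch in enumerate(batches, start=1):
    print(number, batch)
```

Here dimensions load first, facts second, and aggregates last, which mirrors the dependency order the control table is meant to enforce.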

Gold Layer Loading

To load data into the Gold Layer (Edw schema in the Warehouse), we develop individual stored procedures to UPSERT (UPDATE and INSERT) data for each dimension, fact, and aggregate table. While Dataflows can also be used for this task, we prefer stored procedures because they are better suited to complex business logic.

Dashboards and Reporting

Fabric includes the Power BI application, which can connect to the SQL endpoints of both the Lakehouse and Warehouse. These SQL endpoints allow for the creation of semantic models, which are then used to develop reports and applications. In our use case, the semantic models are built from the Gold layer (Edw schema in Warehouse) tables.

Upcoming Topics Preview

In the upcoming articles, we will cover topics such as notebooks, dataflows, lakehouse, security and other related subjects.

Conclusion

Microsoft Fabric Data Warehouse is a powerful and user-friendly data platform, offering an extensive array of tools for data ingestion, storage, transformation, and analysis. Whether you’re a novice or a seasoned data analyst, Fabric empowers you to optimize your workflow and harness the full potential of your data.

We specialize in aiding organizations in meticulously planning and flawlessly executing data projects, ensuring utmost precision and efficiency.

Curious and would like to hear more about this article?

Contact us at [email protected] or Book time with me to organize a 100%-free, no-obligation call

Follow us on LinkedIn for more interesting updates!!

DataPlatr Inc. specializes in data engineering & analytics with pre-built data models for Enterprise Applications like SAP, Oracle EBS, Workday, Salesforce to empower businesses to unlock the full potential of their data. Our pre-built enterprise data engineering models are designed to expedite the development of data pipelines, data transformation, and integration, saving you time and resources.

Our team of experienced data engineers, scientists and analysts uses cutting-edge data infrastructure to turn data into valuable insights and helps enterprise clients optimize their Sales, Marketing, Operations, Financials, Supply chain, Human capital and Customer experiences.


Text

Karthik Ranganathan, Co-Founder and Co-CEO of Yugabyte – Interview Series

New Post has been published on https://thedigitalinsider.com/karthik-ranganathan-co-founder-and-co-ceo-of-yugabyte-interview-series/


Karthik Ranganathan is co-founder and co-CEO of Yugabyte, the company behind YugabyteDB, the open-source, high-performance distributed PostgreSQL database. Karthik is a seasoned data expert and former Facebook engineer who founded Yugabyte alongside two of his Facebook colleagues to revolutionize distributed databases.

What inspired you to co-found Yugabyte, and what gaps in the market did you see that led you to create YugabyteDB?

My co-founders, Kannan Muthukkaruppan, Mikhail Bautin, and I, founded Yugabyte in 2016. As former engineers at Meta (then called Facebook), we helped build popular databases including Apache Cassandra, HBase, and RocksDB – as well as running some of these databases as managed services for internal workloads.

We created YugabyteDB because we saw a gap in the market for cloud-native transactional databases for business-critical applications. We built YugabyteDB to cater to the needs of organizations transitioning from on-premises to cloud-native operations and combined the strengths of non-relational databases with the scalability and resilience of cloud-native architectures. While building Cassandra and HBase at Facebook (which was instrumental in addressing Facebook’s significant scaling needs), we saw the rise of microservices, containerization, high availability, geographic distribution, and Application Programming Interfaces (API). We also recognized the impact that open-source technologies have in advancing the industry.

People often think of the transactional database market as crowded. While this has traditionally been true, today Postgres has become the default API for cloud-native transactional databases. Increasingly, cloud-native databases are choosing to support the Postgres protocol, which has been ingrained into the fabric of YugabyteDB, making it the most Postgres-compatible database on the market. YugabyteDB retains the power and familiarity of PostgreSQL while evolving it to an enterprise-grade distributed database suitable for modern cloud-native applications. YugabyteDB allows enterprises to efficiently build and scale systems using familiar SQL models.

How did your experiences at Facebook influence your vision for the company?

In 2007, I was considering whether to join a small but growing company–Facebook. At the time, the site had about 30 to 40 million users. I thought it might double in size, but I couldn’t have been more wrong! During my more than five years at Facebook, the user base grew to 2 billion. What attracted me to the company was its culture of innovation and boldness, encouraging people to “fail fast” to catalyze innovation.

Facebook grew so large that the technical and intellectual challenges I craved were no longer present. For many years I had aspired to start my own company and tackle problems facing the common user–this led me to co-create Yugabyte.

Our mission is to simplify cloud-native applications, focusing on three essential features crucial for modern development:

First, applications must be continuously available, ensuring uptime regardless of backups or failures, especially when running on commodity hardware in the cloud.

Second, the ability to scale on demand is crucial, allowing developers to build and release quickly without the delay of ordering hardware.

Third, with numerous data centers now easily accessible, replicating data across regions becomes vital for reliability and performance.

These three elements empower developers by providing the agility and freedom they need to innovate, without being constrained by infrastructure limitations.

Could you share the journey from Yugabyte’s inception in 2016 to its current status as a leader in distributed SQL databases? What were some key milestones?

At Facebook, I often talked with developers who needed specific features, like secondary indexes on SQL databases or occasional multi-node transactions. Unfortunately, the answer was usually “no,” because existing systems weren’t designed for those requirements.

Today, we are experiencing a shift towards cloud-native transactional applications that need to address scale and availability. Traditional databases simply can’t meet these needs. Modern businesses require relational databases that operate in the cloud and offer the three essential features: high availability, scalability, and geographic distribution, while still supporting SQL capabilities. These are the pillars on which we built YugabyteDB and the database challenges we’re focused on solving.

In February 2016, the founders began developing YugabyteDB, a global-scale distributed SQL database designed for cloud-native transactional applications. In July 2019, we made an unprecedented announcement and released our previously commercial features as open source. This reaffirmed our commitment to open-source principles and officially launched YugabyteDB as a fully open-source relational database management system (RDBMS) under an Apache 2.0 license.

The latest version of YugabyteDB (unveiled in September) features enhanced Postgres compatibility. It includes an Adaptive Cost-Based Optimizer (CBO) that optimizes query plans for large-scale, multi-region applications, and Smart Data Distribution that automatically determines whether to store tables together for lower latency, or to shard and distribute data for greater scalability. These enhancements allow developers to run their PostgreSQL applications on YugabyteDB efficiently and scale without the need for trade-offs or complex migrations.

YugabyteDB is known for its compatibility with PostgreSQL and its Cassandra-inspired API. How does this multi-API approach benefit developers and enterprises?

YugabyteDB’s multi-API approach benefits developers and enterprises by combining the strengths of a high-performance SQL database with the flexibility needed for global, internet-scale applications.

It supports scale-out RDBMS and high-volume Online Transaction Processing (OLTP) workloads, while maintaining low query latency and exceptional resilience. Compatibility with PostgreSQL allows for seamless lift-and-shift modernization of existing Postgres applications, requiring minimal changes.

In the latest version of the distributed database platform, released in September 2024, features like the Adaptive CBO and Smart Data Distribution enhance performance by optimizing query plans and automatically managing data placement. This allows developers to achieve low latency and high scalability without compromise, making YugabyteDB ideal for rapidly growing, cloud-native applications that require reliable data management.

AI is increasingly being integrated into database systems. How is Yugabyte leveraging AI to enhance the performance, scalability, and security of its SQL systems?

We are leveraging AI to enhance our distributed SQL database by addressing performance and migration challenges. Our upcoming Performance Copilot, an enhancement to our Performance Advisor, will simplify troubleshooting by analyzing query patterns, detecting anomalies, and providing real-time recommendations to troubleshoot database performance issues.

We are also integrating AI into YugabyteDB Voyager, our database migration tool that simplifies migrations from PostgreSQL, MySQL, Oracle, and other cloud databases to YugabyteDB. We aim to streamline transitions from legacy systems by automating schema conversion, SQL translation, and data transformation, with proactive compatibility checks. These innovations focus on making YugabyteDB smarter, more efficient, and easier for modern, distributed applications to use.

What are the key advantages of using an open-source SQL system like YugabyteDB in cloud-native applications compared to traditional proprietary databases?

Transparency, flexibility, and robust community support are key advantages when using an open-source SQL system like YugabyteDB in cloud-native applications. When we launched YugabyteDB, we recognized the skepticism surrounding open-source models. We engaged with users, who expressed a strong preference for a fully open database to trust with their critical data.

We initially ran on an open-core model, but rapidly realized it needed to be a completely open solution. Developers increasingly turn to PostgreSQL as a logical Oracle alternative, but PostgreSQL was not built for dynamic cloud platforms. YugabyteDB fills this gap by supporting PostgreSQL’s feature depth for modern cloud infrastructures. By being 100% open source, we remove roadblocks to adoption.

This makes us very attractive to developers building business-critical applications and to operations engineers running them on cloud-native platforms. Our focus is on creating a database that is not only open, but also easy to use and compatible with PostgreSQL, which remains a developer favorite due to its mature feature set and powerful extensions.

The demand for scalable and adaptable SQL solutions is growing. What trends are you observing in the enterprise database market, and how is Yugabyte positioned to meet these demands?

Larger scale in enterprise databases often leads to increased failure rates, especially as organizations deal with expanded footprints and higher data volumes. Key trends shaping the database landscape include the adoption of DBaaS, and a shift back from public cloud to private cloud environments. Additionally, the integration of generative AI brings opportunities and challenges, requiring automation and performance optimization to manage the growing data load.

Organizations are increasingly turning to DBaaS to streamline operations, despite initial concerns about control and security. This approach improves efficiency across various infrastructures, while the focus on private cloud solutions helps businesses reduce costs and enhance scalability for their workloads.

YugabyteDB addresses these evolving demands by combining the strengths of relational databases with the scalability of cloud-native architectures. Features like Smart Data Distribution and an Adaptive CBO enhance performance and support a large number of database objects. This makes it a competitive choice for running a wide range of applications.

Furthermore, YugabyteDB allows enterprises to migrate their PostgreSQL applications while maintaining similar performance levels, crucial for modern workloads. Our commitment to open-source development encourages community involvement and provides flexibility for customers who want to avoid vendor lock-in.

With the rise of edge computing and IoT, how does YugabyteDB address the challenges posed by these technologies, particularly regarding data distribution and latency?

YugabyteDB’s distributed SQL architecture is designed to meet the challenges posed by the rise of edge computing and IoT by providing a scalable and resilient data layer that can operate seamlessly in both cloud and edge contexts. Its ability to automatically shard and replicate data ensures efficient distribution, enabling quick access and real-time processing. This minimizes latency, allowing applications to respond swiftly to user interactions and data changes.

By offering the flexibility to adapt configurations based on specific application requirements, YugabyteDB ensures that enterprises can effectively manage their data needs as they evolve in an increasingly decentralized landscape.

As Co-CEO, how do you balance the dual roles of leading technological innovation and managing company growth?

Our company aims to simplify cloud-native applications, compelling me to stay on top of technology trends, such as generative AI and context switches. Following innovation demands curiosity, a desire to make an impact, and a commitment to continuous learning.

Balancing technological innovation and company growth is fundamentally about scaling–whether it’s scaling systems or scaling impact. In distributed databases, we focus on building technologies that scale performance, handle massive workloads, and ensure high availability across a global infrastructure. Similarly, scaling Yugabyte means growing our customer base, enhancing community engagement, and expanding our ecosystem–while maintaining operational excellence.

All this requires a disciplined approach to performance and efficiency.

Technically, we optimize query execution, reduce latency, and improve system throughput; organizationally, we streamline processes, scale teams, and enhance cross-functional collaboration. In both cases, success comes from empowering teams with the right tools, insights, and processes to make smart, data-driven decisions.

How do you see the role of distributed SQL databases evolving in the next 5-10 years, particularly in the context of AI and machine learning?

In the next few years, distributed SQL databases will evolve to handle complex data analysis, enabling users to make predictions and detect anomalies with minimal technical expertise. There is an immense amount of database specialization in the context of AI and machine learning, but that is not sustainable. Databases will need to evolve to meet the demands of AI. This is why we’re iterating and enhancing capabilities on top of pgvector, ensuring developers can use Yugabyte for their AI database needs.

Additionally, we can expect an ongoing commitment to open source in AI development. Five years ago, we made YugabyteDB fully open source under the Apache 2.0 license, reinforcing our dedication to an open-source framework and proactively building our open-source community.

Thank you for all of your detailed responses. Readers who wish to learn more should visit YugabyteDB.

#2024#adoption#ai#AI development#Analysis#anomalies#Apache#Apache 2.0 license#API#applications#approach#architecture#automation#backups#billion#Building#Business#CEO#Cloud#cloud solutions#Cloud-Native#Collaboration#Community#compromise#computing#containerization#continuous#curiosity#data#data analysis

0 notes

Text

Best One Year Courses with Fees, Syllabus, and Benefits

One-Year Course List, Fee, and Syllabus: Unlock Your Career Potential

In the fast-paced world we live in, acquiring relevant skills quickly has become a top priority for students and professionals alike. A one-year course is a perfect solution for those who want to upgrade their skills, switch careers, or dive into a new field without investing years in traditional education.

In this article, we’ll explore a curated list of one-year courses, their approximate fees, and their syllabi, to help you make an informed decision.

Benefits of a One-Year Course

Before diving into the list, let’s understand why one-year courses are so popular:

Time-Saving: They provide targeted knowledge in just 12 months.

Cost-Effective: These courses are significantly more affordable than multi-year degree programs.

Practical Learning: Focused on real-world applications, these courses equip learners with job-ready skills.

Career Growth: They open doors to better job opportunities, promotions, or new career paths.

One-Year Course List

Here’s a list of popular one-year courses across different domains, along with their approximate fees and an overview of their syllabi.

1. Digital Marketing

Fee: ₹20,000 to ₹1,00,000

Syllabus:

Basics of Digital Marketing

Search Engine Optimization (SEO)

Pay-Per-Click (PPC) Advertising

Social Media Marketing

Email Marketing

Analytics and Reporting

This course is ideal for those looking to build a career in the online marketing domain or expand their business digitally.

2. Graphic Design

Fee: ₹25,000 to ₹1,50,000

Syllabus:

Fundamentals of Design

Adobe Photoshop, Illustrator, and InDesign

Typography and Color Theory

Branding and Logo Design

User Interface (UI) Design

A graphic design course is perfect for creative minds looking to excel in digital and print media design.

3. Data Science and Analytics

Fee: ₹50,000 to ₹2,00,000

Syllabus:

Introduction to Data Science

Python and R Programming

Data Visualization with Tableau

Machine Learning Basics

Big Data Analytics

This course is highly sought-after due to the increasing demand for data professionals across industries.

4. Web Development

Fee: ₹30,000 to ₹1,50,000

Syllabus:

HTML, CSS, and JavaScript Basics

Front-End Frameworks (React, Angular)

Back-End Development (Node.js, PHP)

Database Management (SQL, MongoDB)

Deployment and Hosting

A web development course is ideal for individuals looking to create websites or web applications.

5. Medical Coding

Fee: ₹40,000 to ₹1,20,000

Syllabus:

Basics of Medical Terminology

Anatomy and Physiology

Coding Systems (ICD, CPT, HCPCS)

Medical Billing Process

HIPAA Compliance

This course is an excellent option for those interested in healthcare administration and documentation.

6. Culinary Arts

Fee: ₹50,000 to ₹2,50,000

Syllabus:

Basics of Culinary Techniques

International and Regional Cuisine

Baking and Pastry Arts

Food Presentation and Plating

Kitchen Management

If you’re passionate about cooking, a culinary arts course can turn your hobby into a career.

7. Accounting and Taxation

Fee: ₹30,000 to ₹1,00,000

Syllabus:

Basics of Accounting

Financial Statements and Bookkeeping

Tax Laws and GST

Tally ERP and QuickBooks

Payroll Management

An accounting and taxation course is ideal for individuals aiming to work in finance or manage their own business accounts.

8. Fashion Designing

Fee: ₹50,000 to ₹3,00,000

Syllabus:

Elements of Fashion Design

Fabric Studies and Textile Design

Pattern Making and Draping

Fashion Illustration

Portfolio Development

This course is perfect for aspiring fashion designers or those interested in the apparel industry.

9. Photography

Fee: ₹20,000 to ₹1,50,000

Syllabus:

Basics of Photography

Lighting Techniques

Portrait and Landscape Photography

Post-Processing with Adobe Lightroom

Portfolio Development

A photography course can help enthusiasts turn their passion into a professional career.

How to Choose the Right One-Year Course

1. Identify Your Goals

Determine why you want to take up a course. Is it for career advancement, skill enhancement, or exploring a new field?

2. Research the Institute

Choose a course from a reputed institute or platform to ensure that your certification holds value.

3. Compare Fees and ROI

While affordability is essential, also consider the return on investment in terms of career prospects.

4. Check Flexibility

If you’re a working professional, opt for courses with flexible schedules or online options.

5. Read Reviews

Look for testimonials and alumni success stories to assess the course’s credibility.

Conclusion

A one-year course is a practical and efficient way to gain industry-relevant skills, enhance your career prospects, and achieve your goals. With numerous options available across various domains, you can find a course tailored to your interests and aspirations.

By investing just 12 months, you can open doors to a promising future in your chosen field. Choose wisely, and take the first step toward a brighter career today!

IPA offers:

Accounting Course, Diploma in Taxation, Courses after 12th Commerce, Courses after BCom

Diploma in Financial Accounting, SAP FICO Course, Accounting and Taxation Course, GST Course, Basic Computer Course, Payroll Course, Tally Course, Advanced Excel Course, One Year Course, Computer ADCA Course

0 notes

Text

Internship in BCA Course: An Introduction to the Power

With competition in today's job market as intense as it is, internships have become a vital part of a college course, particularly for students of Computer Applications. In a BCA course, an internship is both a powerful tool for deeper learning and real-world skill building and a stepping stone to becoming a better-trained graduate. MSB College, one of the Best BCA Colleges in Bhubaneswar and the Best BCA College in Odisha, places heavy emphasis on internships and collaborates with leading tech companies to give students hands-on experience. This blog post discusses the role internships play in a BCA course and how they prepare students to take up computer applications jobs after graduation.

Bridging the Gap Between Classroom Learning and Real-World Applications

Although classroom teaching is essential for learning theoretical concepts, practical application is a different matter. An internship bridges academic knowledge and practice by giving students the opportunity to apply what they have learned in a working environment. During an internship, students see how theories in computer science and programming translate into real-world solutions.

Examples:

Programming Languages and Tools: Exposure to languages like Python, Java, or SQL gives students hands-on practice. The work involves not only coding but also debugging and optimizing programs in real-world situations.

Software Development Life Cycle (SDLC): While students may have learned SDLC theory in class, internships provide firsthand experience of every stage, from requirements gathering to deployment, complete with deadlines and teamwork.

At MSB College, a Top BCA College in Bhubaneswar, the curriculum is designed to promote internships that give students the opportunity to apply concepts learned in class to real-world projects.

Building Technical Skills

In the field of technology, skills are just as vital as knowledge. Internships help students hone technical skills needed for different roles, such as software development, database management, and so much more.

Crucial Technical Skills Acquired During Internships

Coding and Debugging: Live projects let students practice coding while learning the nuances of debugging complex code structures.

Database Management: Students also work with databases such as MySQL, MongoDB, or Oracle, learning how to manage, query, and maintain data securely.

Networking and Cybersecurity: Students are also exposed to basic networking concepts and cybersecurity measures, an increasingly important aspect of today's digital world.

These hands-on technical experiences make students from MSB College industry-ready, thereby making it the Best BCA College in Odisha for aspiring tech professionals.

Building Soft Skills and Professionalism

Internships provide not only technical skills but also an ideal opportunity to develop essential soft skills such as communication, time management, and teamwork. In a corporate setting, interns learn how to relate to and work with colleagues on projects and how to operate within organizational structures, skills that are indispensable for most jobs in tech.

Primary Soft Skills Obtained Through Internships

Communication: Interns learn how to communicate technical information to non-technical stakeholders, explain ideas clearly, and participate in team discussions.

Time Management: Working on real projects teaches interns how to manage deadlines, prioritize tasks, and work efficiently.

Teamwork: Interns usually work in diverse teams, where they develop collaboration skills, learn to accept constructive feedback, and contribute toward collective goals.

The skills gained through internships help shape MSB College students into well-rounded professionals. This focus on holistic development makes it a Top BCA College in Odisha.

Improving Post-Graduation Job Opportunities

Employers value candidates with practical experience, and an internship adds real weight to any resume. Recruiters often prefer people who have already worked in relevant settings because they need little training and become productive quickly.

Competitive Advantage: An internship gives BCA students an edge, as they can present real experience on their resumes.

Industry Connections: Students also get to connect with industry professionals who may be able to refer them to other openings or provide a reference.

Job Opportunities Post Graduation: Many companies use internships as a recruitment channel and retain their best-performing interns after graduation.

Thanks to this enhanced employability and better career prospects, students of MSB College, one of the Best BCA Colleges in Bhubaneswar, see stronger placement outcomes after completing the course.

Unveiling the Scope of Career Options and Specializations

BCA opens up career paths ranging from software development to network administration. An internship lets students explore these paths and settle on the goals they want to pursue. Through practical training, students discover which specializations best suit their skills and interests.

Discovering Career Paths via Internship:

Software Development: Internships in software development teach students coding, testing, and software lifecycle management. They are an excellent opportunity to learn programming and other aspects of development.

Data Science: Data science internships give students hands-on experience in data analysis, machine learning, and visualization. Given the growth of data science in recent years, many students find this path exciting.

Network Administration and Cybersecurity: Internships in IT departments expose students to the configuration and monitoring of networks as well as cybersecurity basics.

Such exposure helps MSB College students make informed career choices, which is why it is regarded as the Top BCA College in Bhubaneswar for students seeking a range of career opportunities.

Gaining Mentorship from Experienced Industry Professionals

BCA internships put students in touch with industry practitioners. Mentorship from industry veterans gives students insight into industry trends and best practices, along with advice that proves valuable throughout a BCA student's career.

Advantages of Mentorship in Internships

Best Practices: Interns learn best practices in coding, software design, and project management that help them work efficiently.

Career Guidance: Mentors often offer career guidance, helping students understand their strengths and weaknesses and advising them on their careers.

Networking: A mentor can introduce interns to a network of professionals, which can be very helpful in job placement and career advancement.

Through its network of alumni and industry contacts, MSB College works to ensure that its students intern under skilled mentors. This makes MSB College the Best BCA College in Odisha for students looking for a mentor-supported internship experience.

Developing Problem-Solving and Critical Thinking Skills

The fast-moving tech industry requires people who can think critically, debug issues, and solve problems effectively. Real-world internships push BCA students to devise solutions and make decisions on their own.

Critical Thinking: Interns must critically analyze complex issues, whether debugging code or optimizing algorithms, which sharpens their critical thinking skills.

Immediate Problem Resolution: By working on real projects with tight deadlines, students learn to troubleshoot problems quickly and effectively.

Adaptability: Internships also teach students to adapt to changing requirements and unexpected problems in the tech world.

This problem-solving experience strengthens the abilities of MSB College students, making it the Top BCA College in Odisha for developing strong critical thinkers and capable problem solvers.

Portfolio of Projects

Experience on actual projects lets students showcase what they have learned in their portfolios. A strong portfolio of project work and internship assignments demonstrates a student's skills and experience when applying for jobs.

Project Diversity: Interns work on a variety of projects, from app development to data analysis and web design.

Practical Application: An internship project portfolio is proof that a student can apply knowledge in real-world settings, and such proof carries weight with employers.

Skills Competence: The projects in a portfolio show a student's competence in programming languages, frameworks, and tools.

Students of MSB College are encouraged to compile a portfolio during their internship, which gives them a clear advantage in the job market and makes MSB stand out as the Best BCA College in Bhubaneswar.

Developing Confidence and Professional Identity

An internship is not only about acquiring skills but also about building confidence and a professional identity. Facing and overcoming real challenges in the field gives students the self-assurance that they can become proficient members of the tech industry.

Professional Confidence: Working on actual projects during an internship builds confidence in both technical and soft skills.

Understanding Workplace Dynamics: Spending time in a professional environment helps students understand corporate culture, hierarchy, and workplace dynamics.

Gaining Professional Identity: Internships make students feel like professionals and motivate them to set better goals and keep striving for improvement.

As one of the top BCA colleges in Odisha, MSB College trains professionals who are not only knowledgeable but also confident. Students are encouraged to grow in both their personal and professional lives.

Conclusion: The Value of Internships for BCA Students at MSB College

Internships form an integral part of the academic and professional journey of a BCA student. They offer many advantages, such as developing technical skills, enhancing employability, and building a professional network. None of this can be achieved in the classroom alone; it requires exposure to the real world. That is why MSB College, the best BCA college in Bhubaneswar and the Best BCA College in Odisha, makes sure to provide students with useful internship experiences that help them excel in the ever-evolving tech industry.

An internship is an investment in the future for BCA students. As they gain real-world experience, develop their hard and soft skills, and explore different career opportunities, they become prepared to enter the workforce with both the confidence and the competence to perform well in it. MSB College provides the support, advice, and opportunities students need to shine in internships, ensuring that they are all set for a successful career in computer applications.

0 notes

Text

Microsoft SQL Server 2025: A New Era Of Data Management

Microsoft SQL Server 2025: An enterprise database prepared for artificial intelligence from the ground up

The data estate and applications of Azure clients are facing new difficulties as a result of the growing use of AI technology. With privacy and security being more crucial than ever, the majority of enterprises anticipate deploying AI workloads across a hybrid mix of cloud, edge, and dedicated infrastructure.

To address these issues, Microsoft SQL Server 2025, which is now in preview, is an enterprise AI-ready database from ground to cloud that brings AI to customers' data. With the addition of new AI capabilities, this version builds on SQL Server's thirty years of speed and security innovation. Customers can integrate their data with Microsoft Fabric to get the next generation of data analytics. The release leverages Microsoft Azure innovation for customers' databases and supports hybrid setups across cloud, on-premises datacenters, and edge.

SQL Server is now much more than just a conventional relational database. With the most recent release of SQL Server, customers can create AI applications that are tightly integrated with the SQL engine. With its built-in filtering and vector search features, SQL Server 2025 is evolving into a vector database in its own right. It performs exceptionally well and is simple for T-SQL developers to use.

Image credit: Microsoft Azure

AI built-in

This new version uses familiar T-SQL syntax and has AI built in, making it easier to build AI applications and retrieval-augmented generation (RAG) patterns with secure, efficient, and easy-to-use vector support. This new capability lets you run hybrid AI vector searches by combining vectors with your SQL data.
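To make the idea of a hybrid vector search concrete, here is a minimal Python sketch. It is an illustration of the general technique only, not SQL Server's implementation or T-SQL syntax: the rows, the `category` column, and the function names are all hypothetical, and the ranking is a brute-force cosine distance rather than an index such as DiskANN.

```python
import math

# Hypothetical in-memory rows standing in for a SQL table with a vector column.
ROWS = [
    {"id": 1, "category": "manual", "embedding": [1.0, 0.0, 0.0]},
    {"id": 2, "category": "manual", "embedding": [0.6, 0.8, 0.0]},
    {"id": 3, "category": "faq", "embedding": [0.9, 0.1, 0.0]},
    {"id": 4, "category": "manual", "embedding": [0.0, 0.0, 1.0]},
]


def cosine_distance(a, b):
    """Return 1 minus the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def hybrid_search(rows, query_vector, category, top_k):
    """Filter rows on a relational predicate, then rank survivors by distance."""
    candidates = [r for r in rows if r["category"] == category]
    ranked = sorted(
        candidates,
        key=lambda r: cosine_distance(r["embedding"], query_vector),
    )
    return [r["id"] for r in ranked[:top_k]]


# Nearest two "manual" rows to the query vector; row 3 is excluded by the filter.
result = hybrid_search(ROWS, [1.0, 0.0, 0.0], "manual", 2)
```

The relational filter runs first so that the similarity ranking only touches rows that already satisfy the ordinary SQL-style condition, which is the sense in which the search is "hybrid".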

Utilize your company database to create AI apps

Bringing enterprise AI to your data, SQL Server 2025 is an enterprise-ready vector database with built-in security and compliance. Its native vector store and index are powered by DiskANN, a vector search technology that uses disk storage to efficiently find similar data points in massive datasets. The database supports efficient chunking and accurate data retrieval through semantic search. With the latest version of SQL Server, you can use AI models from ground to cloud, thanks to flexible AI model management within the engine through Representational State Transfer (REST) interfaces.

Furthermore, extensible, low-code tools provide versatile model interfaces within the SQL engine, supported by T-SQL and external REST APIs, whether customers are working on data preprocessing, model training, or RAG patterns. By integrating seamlessly with popular AI frameworks like LangChain, Semantic Kernel, and Entity Framework Core, these tools improve developers' ability to build a variety of AI applications.

Increase the productivity of developers

When building data-intensive applications such as AI apps, extensibility, frameworks, and data enrichment are crucial to developer productivity. Features such as REST API support, GraphQL integration via Data API Builder, and regular expression support help ensure that SQL gives developers the best possible experience. Furthermore, native JSON support makes it easier for developers to handle hierarchical data and frequently changing schemas, allowing for the development of more dynamic apps. SQL development in general is being improved to make it more user-friendly, performant, and extensible. The SQL Server engine's security underpins all of its features, making it an AI platform that is genuinely enterprise-ready.

Top-notch performance and security

In terms of database security and performance, SQL Server 2025 leads the industry. Support for Microsoft Entra managed identities enhances credential management, reduces potential vulnerabilities, and provides compliance and auditing capabilities. SQL Server 2025 introduces outbound authentication support for MSI (Managed Service Identity) for SQL Server enabled by Azure Arc.

Additionally, it brings to SQL Server performance and availability improvements that have been thoroughly tested on Microsoft Azure SQL. With improved query optimization and query execution in the latest version, you can increase workload performance and reduce troubleshooting. Optional Parameter Plan Optimization (OPPO) allows SQL Server to choose the best execution plan based on the runtime parameter values supplied by the customer, greatly reducing the problematic parameter sniffing issues that can arise in workloads.

Persisted statistics on secondary replicas prevent statistics from being lost during a restart or failover, avoiding potential performance degradation. Enhancements to batch mode processing and columnstore indexing further establish SQL Server as a mission-critical database for analytical workloads.

Through Transaction ID (TID) Locking and Lock After Qualification (LAQ), optimized locking minimizes blocking for concurrent transactions and lowers lock memory consumption. Customers can improve concurrency, scalability, and uptime for SQL Server applications with this functionality.

Change event streaming for SQL Server brings command query responsibility segregation, real-time intelligence, and real-time application integration to SQL Server through event-driven architectures. New database engine capabilities will be added, enabling near real-time capture and publication of incremental changes to data and schema to a specified destination, such as Azure Event Hubs or Kafka.
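As a rough illustration of what "publishing incremental changes as events" means, the Python sketch below derives insert, update, and delete events from two snapshots of a table and serializes them as JSON. This is an assumption-laden toy: the real feature captures changes inside the database engine rather than by diffing snapshots, and the event fields (`op`, `key`, `row`) and the list used as a destination are hypothetical stand-ins for a real message format and a service such as Azure Event Hubs or Kafka.

```python
import json


def diff_snapshots(before, after):
    """Compare two {primary_key: row} snapshots and return change events."""
    events = []
    for key in sorted(after.keys() - before.keys()):
        events.append({"op": "insert", "key": key, "row": after[key]})
    for key in sorted(before.keys() & after.keys()):
        if before[key] != after[key]:
            events.append({"op": "update", "key": key, "row": after[key]})
    for key in sorted(before.keys() - after.keys()):
        events.append({"op": "delete", "key": key, "row": None})
    return events


def publish(events, sink):
    """Serialize each event as JSON and append it to a sink (here, a list)."""
    for event in events:
        sink.append(json.dumps(event, sort_keys=True))


# Hypothetical order table before and after a batch of changes.
before = {1: {"status": "new"}, 2: {"status": "paid"}}
after = {1: {"status": "shipped"}, 3: {"status": "new"}}

outbox = []
publish(diff_snapshots(before, after), outbox)
```

Downstream consumers subscribe to the destination and react to each event, which is what allows read models and analytics to stay current without polling the operational database.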

Azure Arc and Microsoft Fabric are linked

Designing, overseeing, and administering intricate ETL (Extract, Transform, Load) procedures to move operational data from SQL Server is necessary for integrating all of your data in conventional data warehouse and data lake scenarios. The inability of these conventional techniques to provide real-time data transfer leads to latency, which hinders the development of real-time analytics. In order to satisfy the demands of contemporary analytical workloads, Microsoft Fabric provides comprehensive, integrated, and AI-enhanced data analytics services.

Mirrored SQL Server Database in Fabric is a fully managed, resilient process that makes it easy to replicate SQL Server data to Microsoft OneLake in near real-time. To enable analytics and insights on the unified Fabric data platform, mirroring will let customers continuously replicate data from SQL Server databases running on Azure virtual machines or outside of Azure, serving online transaction processing (OLTP) or operational store workloads, directly into OneLake.

Azure is still an essential part of SQL Server. To help clients better manage, safeguard, and control their SQL estate at scale across on-premises and cloud, SQL Server 2025 will continue to offer cloud capabilities with Azure Arc. Customers can further improve their business continuity and expedite everyday activities with features like monitoring, automatic patching, automatic backups, and Best Practices Assessment. Additionally, Azure Arc makes SQL Server licensing easier by providing a pay-as-you-go option, giving its clients flexibility and license insight.

SQL Server 2025 release date

Microsoft hasn't set a SQL Server 2025 release date. Based on current information, some reasonable guesses are possible:

Private Preview: SQL Server 2025 is in private preview, so a small set of users can test it and provide feedback.

Public Preview: Microsoft may offer a public preview in 2025 to let more people try the new features.

General Availability: SQL Server 2025's final release date is unknown, but it is expected in 2025.

Read more on govindhtech.com

#MicrosoftSQLServer2025#DataManagement#SQLServer#retrievalaugmentedgeneration#RAG#vectorsearch#GraphQL#Azurearc#MicrosoftAzure#MicrosoftFabric#OneLake#azure#microsoft#technology#technews#news#govindhtech

0 notes

Text

Microsoft Fabric - Unlocking Data’s True Potential

As the world faces an explosion of data, businesses are under increasing pressure to find intelligent, unified solutions to harness its value. Microsoft Fabric is one such solution, promising to address complex data challenges in industries like healthcare, manufacturing, and logistics. But what does this platform truly offer? Let’s explore how Microsoft Fabric can transform the way businesses approach their data strategy.

Tackling Industry-Specific Challenges with Fabric

Every industry grapples with its own unique data challenges. For manufacturers, data silos across departments like production, quality control, and logistics prevent companies from leveraging data for comprehensive decision-making. Healthcare faces its own hurdle: fragmented patient records that make it harder for providers to deliver timely, personalized care.

Microsoft Fabric stands out by unifying data, providing one cohesive platform where businesses can centralize and analyze data from across departments. This integrated approach makes data actionable, offering insights that directly tackle the specific challenges of each industry. Companies leveraging Microsoft Fabric consulting can better customize these solutions to meet their unique needs, accelerating the path to optimized data strategies.

Real-World Use Cases & Measurable Benefits

Predictive Maintenance in Manufacturing

Manufacturers can collect real-time data from IoT sensors across their equipment to monitor the health of machinery. With Microsoft Fabric services, companies can employ predictive analytics to foresee maintenance needs, reducing unplanned downtime by 30% and cutting maintenance costs by 25%. This data-driven approach not only saves money but ensures that production lines stay operational with minimal interruptions.

Enhanced Patient Care in Healthcare

In healthcare, unifying patient data is a game-changer. With Microsoft Fabric, healthcare providers can consolidate patient records from various departments and external sources, enabling them to offer more personalized and timely care. As a result, providers have seen a 20% increase in patient satisfaction scores, thanks to faster response times and more informed decision-making.
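For the predictive maintenance use case above, the core idea of monitoring sensor data for early warning signs can be sketched in a few lines of Python. This is a deliberately simple stand-in for real predictive analytics, not anything specific to Microsoft Fabric: the vibration readings, window size, and threshold are made-up values, and a rolling average is only one of many possible signals.

```python
from collections import deque


def maintenance_alerts(readings, window, threshold):
    """Return indices where the rolling mean of the last `window` readings
    exceeds `threshold`."""
    recent = deque(maxlen=window)
    alerts = []
    for index, value in enumerate(readings):
        recent.append(value)
        # Only evaluate once a full window of readings is available.
        if len(recent) == window and sum(recent) / window > threshold:
            alerts.append(index)
    return alerts


# Hypothetical vibration readings from one machine, trending upward.
vibration = [1.0, 1.0, 1.0, 2.0, 3.0, 4.0]
alerts = maintenance_alerts(vibration, window=3, threshold=2.0)
```

Averaging over a window rather than reacting to single readings reduces false alarms from one-off spikes, at the cost of flagging a genuine trend slightly later.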

Bridging Data Gaps with Integration

Microsoft Fabric service integrates with critical tools like Power BI, SQL databases, and machine learning models, making it easier to unify data sources. This allows businesses to draw insights across platforms without needing to overhaul existing systems.

For instance, a manufacturing company can leverage Fabric to combine IoT data from production equipment with inventory data, enabling real-time insights into stock levels and machinery health. This integration supports proactive restocking and maintenance, helping maintain consistent production schedules. In healthcare, Fabric can unify patient data from wearables and electronic medical records, offering a comprehensive patient view that improves care coordination and response times. By bridging data gaps, Fabric empowers these industries with a holistic view of operations, enhancing both efficiency and outcomes.

Prioritizing Data Governance and Compliance

For industries like healthcare, finance, and government, data governance isn’t optional—it's essential. These industries operate under strict regulations, and mishandling data can lead to severe penalties and reputational damage. Microsoft Fabric’s built-in governance features, such as role-based access, data lineage tracking, and audit trails, help businesses comply with these regulations while maintaining control over their data. By giving organizations a clear view of data access and usage, Fabric allows teams to focus on innovation rather than constantly worrying about compliance.

Future-Proofing Data Strategy with Fabric

As businesses grow, their data analysis needs become more sophisticated. Microsoft Fabric is built to scale with those needs, allowing businesses to expand data operations without starting from scratch. Whether you're adding new data sources, increasing storage, or expanding your analytics capabilities, Fabric adapts as your business grows.

Fabric's adaptability also positions it as an ideal platform for businesses looking to leverage artificial intelligence (AI) and machine learning (ML) in the future. The platform’s flexibility supports AI-driven innovations, ensuring businesses can stay competitive in a rapidly changing data landscape. Engaging with Microsoft Fabric consultants allows organizations to strategically plan for the future and ensure their data strategies evolve as their needs grow.

Conclusion

Microsoft Fabric is a powerful data platform that promises to unify and empower organizations through actionable insights, integrated data, and top-notch governance features. With its industry-specific solutions and scalable framework, Fabric equips businesses to confidently address data challenges and advance their data strategy. It’s an ideal choice for organizations ready to transform their data into a strategic asset, paving the way for growth and innovation across sectors.

Ready to transform how your organization handles data? Contact PreludeSys to explore how Microsoft Fabric can be tailored to your needs and drive measurable impact in your industry.

#microsoft fabric service#microsoft fabric services#microsoft fabric consulting#microsoft fabric consulting service#microsoft fabric consulting company

0 notes

Text

Microsoft Fabric Training | Microsoft Fabric Course

Using Data Flow in Azure Data Factory in Microsoft Fabric Course

Azure Data Factory (ADF), covered in the Microsoft Fabric Course, is a powerful cloud-based data integration service that allows businesses to build scalable data pipelines. One of the key features of ADF is the Data Flow capability, which allows users to visually design and manage complex data transformation processes. With the rise of modern data integration platforms such as Microsoft Fabric, organizations are increasingly leveraging Azure Data Factory to handle big data transformations efficiently. If you're considering expanding your knowledge in this area, enrolling in Microsoft Fabric Training is an excellent way to learn how to integrate tools like ADF effectively.

What is Data Flow in Azure Data Factory?

Data Flow in Azure Data Factory enables users to transform and manipulate data at scale. Unlike traditional data pipelines, which focus on moving data from one point to another, Data Flows allow businesses to design transformation logic that modifies the data as it passes through the pipeline. You can apply various transformation activities such as filtering, aggregating, joining, and more. These activities are performed visually, which makes it easier to design complex workflows without writing any code.

Microsoft Fabric Training helps you understand how to streamline this process further by making it work with other Microsoft solutions. By connecting your Azure Data Factory Data Flows to Microsoft Fabric, you can take advantage of its analytical and data management capabilities.

Key Components of Data Flow

There are several important components in Azure Data Factory’s Data Flow feature, which you will also encounter in Microsoft Fabric Training in Hyderabad:

Source and Sink: These are the starting and ending points of the data transformation pipeline. The source is where the data originates, and the sink is where the transformed data is stored, whether in a data lake, a database, or any other storage service.

Transformation: Azure Data Factory offers a variety of transformations such as sorting, filtering, aggregating, and conditional splitting. These transformations can be chained together to create a custom flow that meets your specific business requirements.

Mapping Data Flows: Mapping Data Flows are visual representations of how the data will move and transform across various stages in the pipeline. This simplifies the design and maintenance of complex pipelines, making it easier to understand and modify the workflow.

Integration with Azure Services: One of the key benefits of using Azure Data Factory’s Data Flows is the tight integration with other Azure services such as Azure SQL Database, Data Lakes, and Blob Storage. Microsoft Fabric Training covers these integrations and helps you understand how to work seamlessly with these services.
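The components above can be pictured as a small chain of steps. The following is a minimal conceptual sketch in plain Python, not Azure Data Factory code: the sample rows, the function names (`filter_rows`, `aggregate_by_region`, `sort_totals`, `run_flow`), and the list standing in for a sink are all assumptions made for illustration. It only shows how a source, a few chained transformations, and a sink relate to each other.

```python
from collections import defaultdict

# Hypothetical in-memory "source": rows as they might arrive from a file or table.
SOURCE_ROWS = [
    {"region": "east", "amount": 120.0},
    {"region": "west", "amount": 80.0},
    {"region": "east", "amount": 30.0},
    {"region": "west", "amount": 5.0},
]


def filter_rows(rows, min_amount):
    """Filter transformation: keep rows at or above a threshold."""
    return [r for r in rows if r["amount"] >= min_amount]


def aggregate_by_region(rows):
    """Aggregate transformation: total amount per region."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["region"]] += r["amount"]
    return dict(totals)


def sort_totals(totals):
    """Sort transformation: (region, total) pairs, largest total first."""
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


def run_flow(rows, sink):
    """Chain the transformations and write the result to the sink."""
    filtered = filter_rows(rows, min_amount=10.0)
    totals = aggregate_by_region(filtered)
    sink.extend(sort_totals(totals))
    return sink


# Hypothetical "sink": a list standing in for a table, file, or data lake path.
sink = run_flow(SOURCE_ROWS, [])
print(sink)
```

In a real Mapping Data Flow the same shape is drawn on a canvas instead of written as functions, but the idea of small transformations chained between a source and a sink is the same.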

Benefits of Using Data Flow in Azure Data Factory

Data Flow in Azure Data Factory provides several advantages over traditional ETL tools, particularly when combined with the skills taught in a Microsoft Fabric Course:

Scalability: Azure Data Factory scales automatically according to your data needs, making it ideal for organizations of all sizes, from startups to enterprises. The underlying infrastructure is managed by Azure, ensuring that you have access to the resources you need when you need them.

Cost-Effectiveness: You only pay for what you use. With Data Flow, you can easily track resource consumption and optimize your processes to reduce costs. When combined with Microsoft Fabric Training, you’ll learn how to make cost-effective decisions for data integration and transformation.

Real-Time Analytics: By connecting ADF Data Flows to Microsoft Fabric, businesses can enable real-time data analytics, providing actionable insights from massive datasets in a matter of minutes or even seconds. This is particularly valuable for industries such as finance, healthcare, and retail, where timely decisions are critical.

Best Practices for Implementing Data Flow

Implementing Data Flow in Azure Data Factory requires planning and strategy, particularly when scaling for large data sets. Here are some best practices, which are often highlighted in Microsoft Fabric Training in Hyderabad:

Optimize Source Queries: Use filters and pre-aggregations to limit the amount of data being transferred. This helps reduce processing time and costs.

Monitor Performance: Utilize Azure’s monitoring tools to keep an eye on the performance of your Data Flows. Azure Data Factory provides built-in diagnostics and monitoring that allow you to quickly identify bottlenecks or inefficiencies.

Use Data Caching: Caching intermediate steps of your data flow can significantly improve performance, especially when working with large datasets.

Modularize Pipelines: Break down complex transformations into smaller, more manageable modules. This approach makes it easier to debug and maintain your data flows.

Integration with Microsoft Fabric: Use Microsoft Fabric to further enhance your data flow capabilities. Microsoft Fabric Training will guide you through this integration, teaching you how to make the most of both platforms.
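The first practice, optimizing source queries, can be illustrated with a small sketch. It uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a real source; the `orders` table, its columns, and the sample values are hypothetical and are not taken from Azure Data Factory. The point is only the contrast between pulling every row and pushing the filter and aggregation into the source query.

```python
import sqlite3

# In-memory database standing in for a real source system (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, year INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("east", 2023, 100.0),
        ("east", 2024, 40.0),
        ("east", 2024, 60.0),
        ("west", 2024, 25.0),
        ("west", 2022, 999.0),
    ],
)

# Unoptimized: transfer every row and leave filtering to later steps.
all_rows = conn.execute("SELECT region, year, amount FROM orders").fetchall()

# Optimized: filter and pre-aggregate at the source so fewer rows move.
summary = conn.execute(
    "SELECT region, SUM(amount) FROM orders "
    "WHERE year = ? GROUP BY region ORDER BY region",
    (2024,),
).fetchall()

print(len(all_rows), "rows transferred without a source filter")
print(len(summary), "rows transferred with filter and pre-aggregation")
conn.close()
```

With realistic volumes the gap between the two row counts is what drives the savings in processing time and cost.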

Conclusion

Using Data Flow in Azure Data Factory offers businesses a robust and scalable way to transform and manipulate data across various stages. When integrated with other Microsoft tools, particularly Microsoft Fabric, it provides powerful analytics and real-time insights that are critical in today’s fast-paced business environment. Through hands-on experience with a Microsoft Fabric Course, individuals and teams can gain the skills needed to optimize these data transformation processes, making them indispensable in today’s data-driven world.

Whether you are a data engineer or a business analyst, mastering Azure Data Factory and its Data Flow features through Microsoft Fabric Training will provide you with a solid foundation in data integration and transformation. Consider enrolling in Microsoft Fabric Training in Hyderabad to advance your skills and take full advantage of this powerful toolset.

Visualpath is a leading online software training institute in Hyderabad, offering complete Microsoft Fabric Training worldwide at an affordable cost.

Attend Free Demo

Call on — +91–9989971070.

Visit https://www.visualpath.in/online-microsoft-fabric-training.html

#Microsoft Fabric Training#Microsoft Fabric Course#Microsoft Fabric Training In Hyderabad#Microsoft Fabric Certification Course#Microsoft Fabric Course in Hyderabad#Microsoft Fabric Online Training Course#Microsoft Fabric Online Training Institute

1 note

Text

Style rooms with this SQL Programmer Wall Art Minimalist Design! Order it and hang it straight away with built-in wall mounts and rubber pads on the back ready to protect against damage.

• 0.75″ (19.05 mm) thick canvas
• Canvas fabric weight: 10.15 +/- 0.74 oz/yd² (344 g/m² +/- 25g/m²)
• Wall mounts attached
• Rubber pads on the back to avoid damage
• Slimmer than regular canvases

Important: This product is available in the US, Canada, Europe, and the UK only. If your shipping address is outside these regions, please choose a different product.

This product is made especially for you as soon as you place an order, which is why it takes us a bit longer to deliver it to you. Making products on demand instead of in bulk helps reduce overproduction, so thank you for making thoughtful purchasing decisions!

Discover the beauty in simplicity with our SQL Programmer Wall Art. This minimalist design is ideal for programmers who want to add a touch of sophistication to their space.

0 notes

Text

Oracle and SQL Server Training in Mohali: Unlock Your Database Opportunities

In the digital age, data rules, driving innovation, efficiency, and competitive advantage across industries. As organizations harness the power of data to make informed decisions and streamline operations, the demand for skilled professionals proficient in database management technologies such as Oracle and SQL Server continues to soar. In the vibrant city of Mohali, aspiring individuals and seasoned professionals alike are seizing the opportunity to enhance their expertise through specialized training programs tailored to the evolving needs of the industry.

Oracle/SQL Server training in Mohali offers a comprehensive curriculum designed to equip participants with the knowledge, skills, and hands-on experience needed to excel in database administration, development, and optimization. With a focus on practical, real-world applications, these training programs bridge the gap between theory and practice, enabling learners to navigate complex databases with confidence and precision.

One of the key advantages of Oracle/SQL Server training in Mohali is access to expert instructors who bring a wealth of industry experience and insight to the classroom. These seasoned professionals draw on their expertise to guide participants through the intricacies of database design, implementation, and management, offering valuable insights and best practices gathered from years of hands-on experience in the field.

Moreover, Oracle/SQL Server training in Mohali is distinguished by its modern facilities and up-to-date technology infrastructure. Participants have access to robust software platforms and tools commonly used in database management, giving them a realistic learning environment where they can apply theoretical concepts to practical scenarios. From data modeling and querying to performance tuning and disaster recovery, these training programs cover a wide range of topics essential for success in the dynamic world of data management.

Furthermore, Oracle/SQL Server training in Mohali caters to learners of all levels, from beginners looking to build a strong foundation in database fundamentals to experienced professionals seeking to expand their skill set and keep up with the latest industry trends. With flexible scheduling options and customized training modules, participants can tailor their learning experience to suit their individual goals, preferences, and availability.

The comprehensive nature of Oracle/SQL Server training in Mohali extends beyond technical proficiency to include essential soft skills such as communication, collaboration, and problem-solving. Through interactive lectures, hands-on exercises, and group projects, participants develop the critical thinking and teamwork skills essential for success in today's fast-paced, collaborative workplaces.

Oracle/SQL Server training in Mohali also offers valuable networking opportunities, allowing participants to connect with peers, industry experts, and potential employers. These networking events, workshops, and seminars provide a platform for knowledge sharing, professional development, and career advancement, enabling participants to build meaningful relationships and grow their professional network within the local and global database community.

Oracle/SQL Server training in Mohali serves as a catalyst for career growth and advancement in the field of database management. By providing comprehensive, hands-on training, expert instruction, and valuable networking opportunities, these programs empower participants to master essential skills, deepen their expertise, and unlock new opportunities in the dynamic world of data. Whether you're a seasoned professional looking to stay ahead of the curve or an aspiring individual eager to break into the field, Oracle/SQL Server training in Mohali offers the knowledge, resources, and support you need to succeed in today's data-driven landscape.

For More Info:-

Animation & Multimedia training mohali

0 notes

Text

Leveraging the Capabilities of Web Development

An outline

Developing a comprehensive and resilient online presence is critical for any organisation seeking to thrive and differentiate itself from rivals in the current era of digitalization.

The creation of websites is an essential component in the development of interactive and efficient online platforms. A solid understanding of the fundamentals of web development can substantially enhance your digital strategies, irrespective of your organisational status (small business owner, freelancer, or member of a large corporation). The phrase "web development" refers to the tasks involved in creating and managing websites and web applications. User interface design, database administration, and the development of software components are a few of the responsibilities involved.

The domain of web development encompasses all tasks associated with the creation of websites that are visible via the internet or an intranet. Web development entails the construction of applications that operate on a web browser. The complexity of these applications can vary widely, spanning from basic static pages of text to intricate web-based enterprises and social networking platforms. The importance of web development is derived from the crucial function it performs in the establishment and upkeep of websites. Websites are critical platforms that afford individuals, organisations, and businesses the ability to exhibit their products or services, establish an online presence, and interact with their target audience in a dynamic and interactive fashion.

In the current landscape of fierce market competition, the domain of web development encompasses a broader scope than mere website creation. The aims of this undertaking are to enhance functionality, guarantee universal website accessibility across all devices, and deliver an engaging user experience.