#Extract Google Maps Data

Explore tagged Tumblr posts

Text

A complete guide on extracting restaurant data from Google Maps includes using web scraping tools like BeautifulSoup or Scrapy in Python, leveraging the Google Places API for structured data access, and ensuring compliance with Google's terms of service. It covers steps from setup to data extraction and storage.

0 notes

Text

Discover the techniques of extracting location data from Google Maps. Learn to harness the power of this resource to gather accurate geographic information.

Read More: https://www.locationscloud.com/location-data-extraction-from-google-maps/

#Extract Google Maps Data#LocationsCloud#Store Location Data#Location Intelligence#Location Data Provider

0 notes

Text

Dragon Age: The Veilguard

▶ Extracted Asset Drive Folder

Recently finished my first DAV playthrough and wanted to get my hands into the files 🤚 so just like I did with CP77, I put up a little Google Drive folder with extracted assets! Made possible thanks to the Frostbite Modding Tool ◀

OBVIOUS Spoiler warning - I don't recommend looking at the files until you're done with the game's main story!

I wasn't able to grab everything just yet as the majority of assets aren't fully accessible yet (corrupted/missing data). Expect some extracted assets to have some artifacts as well!

But you can already find:

HUD elements

Codex entries' full art

CC, Map, Journal Icons

...and more!

Every element has been sorted into folders for (hopefully) easy browsing - I'll try to keep this drive updated :3

▶ THIS IS FOR PERSONAL USE ONLY!

You can use the assets for your videos, thumbnails, character templates, art, mods... but do NOT use these assets for any commercial purposes! All assets and files are the property of BioWare and their artists

This is from a fan for fans, let's keep it fair and fun! 🙏

If you appreciate my work consider supporting me on Ko-Fi 💜

1K notes


Text

How Web Scraping is Used to Extract Google Maps Data for Non-Techs?

Google Maps launched almost 15 years ago and has since grown from a straightforward navigation app into a platform that offers entirely new advertising opportunities for all types of businesses.

Uses Of Google Maps Data

Gathering data on B2B leads is a tedious, repetitive task that neither stimulates innovation nor drives productivity, which makes it a good fit for automated solutions. Scraped Google Maps data can be used for:

Establishing a potential clientele.

Locating where a specific product is sold and selecting the top result from the list of options.

Analyzing geospatial data for engineering or scientific purposes, for instance, calculating distances across the globe to the location of cyclones, or doing climate research using geolocation and satellite data.

How To Scrape Google Maps?

1. Specify the business or location in which you are interested.

You can search by location (state, city, or country) if you have a particular area in mind, or find organizations by keyword or industry. Then copy the URL from the results page.

2. Choose the data fields that you need to scrape.

Next, specify the information you want to include in the finished file. These could be information about the company, reviews, etc. We can retrieve all necessary information, including rating, name, location, category, hours, website, and phone, for industry leads from the information page.

3. Apply Here To Receive A Free Extract.

Completing an online process for RetailGators requires you to input your contact email address, the URL you previously copied, and the details you need to scrape. If you have any unique needs, please be as detailed as possible.

4. Take a look at the output test file.

We will send the sample of the collected data to your email address. You must look through every file to ensure the necessary information is present.

Conclusion

One minor drawback is that Google Maps scraping is faster and more effective with proxies, so if you start extracting Maps data regularly, it will eventually cost you money. In the meantime, you get a free month of RetailGators Proxy as soon as you sign up at RetailGators. So act quickly and use our free Google Maps Scraper on the RetailGators Store to start your first month.

As per your needs, we can offer eCommerce data scraping services. Every time you require information from Google Maps, use RetailGators. Save time and obtain information without difficulty.

#Data extraction#Extract data from google map#Data scraping services#Google map scraper#eCommerce data scraping services

1 note


Text

How To Extract Food Data From Google Maps With Google Colab & Python?

Do you want a comprehensive list of restaurants with reviews and locations every time you visit a new place or go on vacation? Sure you do, because it makes your life so much easier. Data scraping is the most convenient method.

Web scraping, also known as data scraping, is the process of extracting information from a website and saving it locally, typically in the form of a spreadsheet. So you can get a whole list of restaurants in your area with addresses and ratings in one simple spreadsheet! In this blog, you will learn how to use Python and Google Colab to extract food data from Google Maps.

We scrape restaurant and food data using Python 3 scripts. Since installing Python locally can be a hassle, we use Google Colab to run the scraping script, as it allows us to run Python scripts on a hosted server.

As our objective is to get a detailed listing of locations, extracting Google Maps data is an ideal solution. Using Google Maps data scraping, you can scrape data like name, area, location, place types, ratings, phone numbers, and other applicable information. To start, we can use a places data scraping API, which makes it very easy to scrape location data.

Step 1: What information would you need?

For example, here we are searching for "restaurants near me" in Sanur, Bali, within 1 kilometer. So the criteria could be "restaurants," "Sanur Beach," and "1 kilometer." Let us convert this into Python:
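The original code for this step is not reproduced here, so the following is only a minimal sketch of how the criteria might be written down. The coordinates are an approximate, assumed position for Sanur Beach, and all variable and function names are illustrative. The radius is expressed in meters, which is the unit the Google Places API expects.

```python
# Search criteria from Step 1. All names and values here are illustrative.
# Approximate latitude/longitude for Sanur Beach, Bali (an assumed value).
coordinates = ["-8.6880,115.2630"]
keywords = ["restaurant"]
radius_m = 1000  # 1 kilometer, expressed in meters


def build_queries(coordinates, keywords, radius_m):
    """Return one parameter dict per (coordinate, keyword) pair."""
    return [
        {"location": coord, "keyword": kw, "radius": radius_m}
        for coord in coordinates
        for kw in keywords
    ]


queries = build_queries(coordinates, keywords, radius_m)
```

Keeping the coordinates and keywords in lists makes it easy to add more search centers or terms later without changing the rest of the script.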

These "keywords" help us find places categorized as restaurants OR results that contain the term "restaurant." Searching by keyword gives a more comprehensive list than using only the "type" or the "name" of places, because it matches sites whose names or types include the word "restaurant."

For example, a keyword search can return both Se'i Sapi and Sushi Tei at the same time. If we use "name," we will only see places whose names contain the word "restaurant." If we use "type," we only get places whose type is "restaurant." However, using "keywords" has the disadvantage that data cleaning takes longer.

Step 2: Import the necessary libraries, such as:

Did you notice "files" being imported from google.colab? Yes, to open or save data in Google Colab, we need to use the google.colab library.
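The import list from the original post is not shown, so this is an assumed set that sticks to the Python standard library, plus the google.colab import mentioned above. The try/except guard and the IN_COLAB flag are additions for illustration, so the same script can also run outside Colab.

```python
import csv    # write the results to a CSV file
import json   # decode API responses
import time   # pause between paginated requests
import urllib.parse    # build query strings
import urllib.request  # send HTTP requests

try:
    # Only available inside Google Colab; used to upload/download files.
    from google.colab import files
    IN_COLAB = True
except ImportError:
    files = None
    IN_COLAB = False
```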

Step 3: Create a piece of code that generates data based on the first Step's variables.

With this code, we get the location's name, longitude, latitude, IDs, ratings, and area for each keyword and coordinate. Suppose there are 40 locales near Sanur; Google will output the results on two pages. If there are 55 results, there are three pages. Since Google only shows 20 entries per page, we need to specify the 'next page token' to retrieve the following page data.
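The paging logic described above can be sketched as follows. This is not the original code: fetch_page is a hypothetical callable that sends one request and returns the decoded JSON as a dict, and the field names follow the Google Places Nearby Search response format, which this walkthrough appears to rely on, so treat them as assumptions.

```python
import time


def collect_places(fetch_page, params, delay_s=2.0, max_pages=3):
    """Gather results across pages by following 'next_page_token'.

    fetch_page is a hypothetical callable that takes a parameter dict and
    returns the decoded JSON response as a dict.
    """
    rows = []
    page_params = dict(params)
    for _ in range(max_pages):
        data = fetch_page(page_params)
        for place in data.get("results", []):
            location = place.get("geometry", {}).get("location", {})
            rows.append({
                "name": place.get("name"),
                "place_id": place.get("place_id"),
                "lat": location.get("lat"),
                "lng": location.get("lng"),
                "rating": place.get("rating"),
                "vicinity": place.get("vicinity"),
            })
        token = data.get("next_page_token")
        if not token:
            break  # no further pages
        # A new token can take a moment to become valid, so wait briefly.
        time.sleep(delay_s)
        page_params = {**params, "pagetoken": token}
    return rows
```

Passing the request function in as an argument keeps the paging logic separate from the HTTP call, so it can be tried out with canned responses before spending any API quota.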

The maximum number of data points we can retrieve per search is 60, a limit set by Google. For example, if there are 140 restaurants within one kilometer of our starting point, only 60 of the 140 restaurants will be returned.
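The page and result counts mentioned above can be checked with a few lines of arithmetic. This is just a sketch; the constant and function names are illustrative.

```python
import math

PAGE_SIZE = 20    # entries per page, as described above
MAX_RESULTS = 60  # upper limit per search, as described above


def retrievable(total_places):
    """Return (results returned, pages needed) for one search."""
    returned = min(total_places, MAX_RESULTS)
    pages = math.ceil(returned / PAGE_SIZE)
    return returned, pages


print(retrievable(40))   # (40, 2)
print(retrievable(55))   # (55, 3)
print(retrievable(140))  # (60, 3)
```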

So, to avoid gaps in the data, we need to get both the radius and the coordinates right. Ensure that the radius is not too large, or "only 60 points are returned, although there are many more of them." Also ensure that the radius is not too small, as this would require a long list of coordinates to cover the area. Neither extreme is efficient, so we need to understand the context of a location beforehand.
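One way to see the trade-off is to generate the list of search centers for a given radius. The sketch below is an illustration rather than part of the original walkthrough: it covers a bounding box with a square grid of circle centers, uses a rough meters-per-degree conversion, and all names are hypothetical.

```python
import math

METERS_PER_DEGREE_LAT = 111_320  # rough average; an approximation


def search_grid(south, west, north, east, radius_m):
    """Return "lat,lng" strings for circle centers covering a bounding box.

    Centers sit on a square grid spaced radius * sqrt(2) apart, so circles
    of the given radius overlap enough to leave no gaps.
    """
    step_m = radius_m * math.sqrt(2)
    lat_step = step_m / METERS_PER_DEGREE_LAT
    mid_lat = math.radians((south + north) / 2)
    lng_step = step_m / (METERS_PER_DEGREE_LAT * math.cos(mid_lat))

    centers = []
    lat = south + lat_step / 2
    while lat - lat_step / 2 < north:
        lng = west + lng_step / 2
        while lng - lng_step / 2 < east:
            centers.append(f"{lat:.6f},{lng:.6f}")
            lng += lng_step
        lat += lat_step
    return centers
```

Halving the radius roughly quadruples the number of centers, and every center means at least one more request, which is why a very small radius quickly becomes expensive.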

Continue reading the blog to learn more about how to extract data from Google Maps using Python.

Step 4: Store information on the user's computer
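The original code for this step is not shown. A minimal sketch, assuming each place is a dict with the hypothetical keys used below, could write the results to a CSV file like this:

```python
import csv


def save_places_csv(rows, path):
    """Write a list of place dicts to a CSV file and return the path."""
    fieldnames = ["name", "place_id", "lat", "lng", "rating", "vicinity"]
    with open(path, "w", newline="", encoding="utf-8") as handle:
        # Keys outside fieldnames are skipped; missing keys become empty cells.
        writer = csv.DictWriter(handle, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
    return path
```

Inside Google Colab, calling files.download() from the google.colab library on the saved path should then offer the file as a browser download.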

Final Step: Integrate all these procedures into one complete script:

You can now quickly download data from various Google Colab files. To download data, select "Files" after clicking the arrow button in the left pane!

Your data will be scraped and exported in CSV format, ready for visualization with all the tools you know! This can be Tableau, Python, R, etc. Here we used Kepler.gl for visualization, a powerful WebGL-enabled web tool for geospatial data visualization.

The data is displayed in the spreadsheet as follows:

In the Kepler.gl map, it is shown as follows:

From our location, lounging on Sanur beach, there are 59 nearby eateries. Now we can explore our neighborhood cuisine by adding names and reviews to a map!

Conclusion:

Food data extraction using Google Maps, Python, and Google Colab can be an efficient and cost-effective way to obtain necessary information for studies, analysis, or business purposes. However, it is important to follow Google Maps' terms of service and use the data ethically and legally. You should also be aware of limitations and issues, such as managing web-based applications, dealing with CAPTCHAs, and avoiding being blocked by Google.

Are you looking for an expert Food Data Scraping service provider? Contact us today! Visit the Food Data Scrape website and get more information about Food Data Scraping and Mobile Grocery App Scraping. Know more : https://www.fooddatascrape.com/how-to-extract-food-data-from-google-maps-with-google-colab-python.php

#Extract Food Data From Google Maps#extracting Google Maps data#Google Maps data scraping#Food data extraction using Google Maps

0 notes

Text

Top 5 Selling Odoo Modules.

In the dynamic world of business, having the right tools can make all the difference. For Odoo users, certain modules stand out for their ability to enhance data management and operations, helping you optimize your Odoo implementation and leverage its full potential.

That's where Odoo ERP can be a lifesaver for your business. This comprehensive solution integrates various functions into one centralized platform, tailor-made for the digital economy.

Let’s dive into the 5 top-selling modules that can revolutionize your Odoo experience:

Dashboard Ninja with AI, Odoo Power BI connector, Looker studio connector, Google sheets connector, and Odoo data model.

1. Dashboard Ninja with AI:

Using this module, you can create amazing reports with the powerful and smart Dashboard Ninja app for Odoo. See your business from a 360-degree angle with an interactive and beautiful dashboard.

Some Key Features:

Real-time streaming Dashboard

Advanced data filter

Create charts from Excel and CSV files

Fluid and flexible layout

Download dashboard items

This module gives you AI suggestions for improving your operational efficiencies.

2. Odoo Power BI Connector:

This module provides a direct connection between Odoo and Power BI Desktop, a powerful data visualization tool.

Some Key features:

Secure token-based connection.

Proper schema and data type handling.

Fetch custom tables from Odoo.

Real-time data updates.

With Power BI, you can make informed decisions based on real-time data analysis and visualization.

3. Odoo Data Model:

The Odoo Data Model is the backbone of the entire system. It defines how your data is stored, structured, and related within the application.

Key Features:

Relations & fields: Developers can easily find relations (one-to-many, many-to-many, and many-to-one) and define fields (columns) between data tables.

Object Relational mapping: Odoo ORM allows developers to define models (classes) that map to database tables.

The module allows you to use SQL query extensions and download data in Excel Sheets.

4. Google Sheet Connector:

This connector bridges the gap between Odoo and Google Sheets.

Some Key features:

Real-time data synchronization and transfer between Odoo and Spreadsheet.

One-time setup, no need to wrestle with APIs.

Transfer multiple tables swiftly.

Helps your team’s workflow by making Odoo data accessible in a sheet format.

5. Odoo Looker Studio Connector:

The Looker Studio connector by Techfinna easily integrates Odoo data with Looker Studio, a powerful data analytics and visualization platform.

Some Key Features:

Directly integrate Odoo data to Looker Studio with just a few clicks.

The connector automatically retrieves and maps Odoo table schemas in their native data types.

Manual and scheduled data refresh.

Execute custom SQL queries for selective data fetching.

The module helps you build detailed reports and provides deeper business intelligence.

These Modules will improve analytics, customization, and reporting. Module setup can significantly enhance your operational efficiency. Let’s embrace these modules and take your Odoo experience to the next level.

Need Help?

I hope you find the blog helpful. Please share your feedback and suggestions.

For flawless Odoo Connectors, implementation, and services contact us at

[email protected] Or www.techneith.com

#odoo#powerbi#connector#looker#studio#google#microsoft#techfinna#ksolves#odooerp#developer#web developers#integration#odooimplementation#crm#odoointegration#odooconnector

4 notes


Text

Tutorial - Extracting the assets from Shining Nikki for conversion for Sims games (or anything, really)

Finally! In advance I'm sorry for any errors since english isn't my first language (and even writing in my actual language is difficult for me so)

And first, a shoutout to The VG Resource forums, where I initially found info about this topic 😊 I'm just compiling all the knowledge I found there + the stuff I figured out in a single text, because boy I really wanted to find a guide like that when I first thought about converting SN stuff lol (and because there's a lot of creators more seasoned than me that could do a really good job with these assets 👀)

What this tutorial will teach you:

How to find and extract meshes and textures (when there are any) for later use, and some tips about how stuff is mapped etc on Shining Nikki.

What this tutorial will not teach you:

How to fully convert these assets into something usable for any Sims game (because honestly I don't know how to do that stuff properly either lol). It is assumed that you already know how to do that. If you don't know but have an interest in learning about CC making (especially for TS3), I'd suggest you take a look at the TS3 Tutorial Hub, the MTS tutorials and This Post by Plumdrops if you're interested in hair conversion. Also take a look at my TS3 tutorials tag, that's where I reblog tutorials that I think might be useful :)

What you'll need:

An Android emulator (I recommend Nox)

A HEX editor (I recommend HxD)

Python and This Script for mass editing

AssetStudio

A 3D Modeling Software for later use. I use Blender 2.93 for major editing, and (begrudgingly) Milkshape for hair (mostly because of the extra data tool).

Download everything you don't have and install it before starting this tutorial.

Now, before we continue, a little advice:

I wrote this tutorial assuming that people who would benefit from it will not put the finished work derivative from these assets behind a paywall or in any sort of monetization. These assets belong to Paper Games. So please don't be an ass and put your Shining Nikki conversions/edits/whatever behind a paywall.

The tutorial starts after the cut (and it's a long one).

Step 1:

Launch Nox, then open Play Store and log in with a Google account (if you don't have one, create it). Now download Shining Nikki from there.

After downloading the game, launch it. It will download a part of the game files. After that, log in on the game, or create a new account in any server (the server is only important if you want to actually play the game. For extracting it doesn't really matter since the game already has the assets for the upcoming events and chapters. It also doesn't matter if you actually own an item in game, you can extract the meshes and textures even if you don't have it in game). If you're creating a new account, the game will lead you through the presentation of it etc (unfortunately there's no way to skip it).

After that, click on that little arrow button on the main screen. There, you can download the actual clothing assets. Wait for the download to finish (at the date I'm writing this tutorial, it is around 13GB). When finished, close the game (not the emulator).

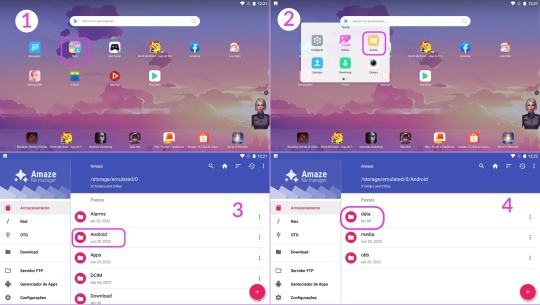

Step 2:

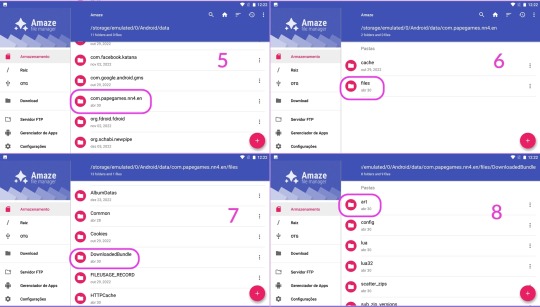

Now we're going to copy the assets to our computer. Click on Tools, then on Amaze File Manager. Navigate to Android > data > com.papergames.nn4.en > files > DownloadedBundle > art > character. This is the folder where (I believe) most of the assets are stored.

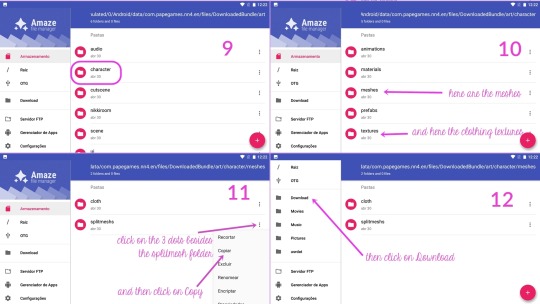

Now, where the stuff is located respectively:

Meshes are on the meshes > splitmeshs folder

Textures are on the textures > cloth folder

Tip: Want to really data dump everything? Just select the folders you want and copy to your PC! 😉

Click on the three dots on the side of the desired folder, then on Copy. Then click on the three lines in the upper left corner to open the menu, and then click on Download. Now just pull the header of the app to show the Paste option and click on it. It might take a while to copy completely (the cloth folder might take longer since it's bigger, so be patient).

If you're confused, just follow the guide below:

The copied folder will be located at C:\Users\{your username}\Nox_share\Download

Step 3:

Now that we got the files, we need to make them readable by AssetStudio.

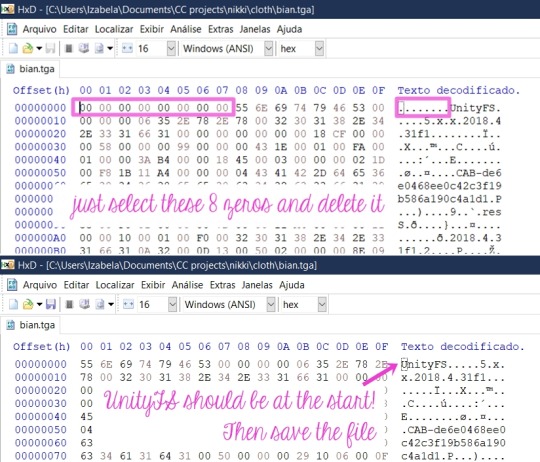

For this, we need to open the desired .asset file in a hex editor, delete the first 8 bytes of the file, and then save.

You can see it is a pain to do that manually to a lot of files right? This is why I asked my boyfriend to create a script to mass edit them. (I only manually edit when I'm grabbing the textures I want, because afaik the script won't work with .tga and the .png files, more about that later in this tutorial)
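For anyone curious what such a mass edit involves, here is a minimal sketch of the idea. This is not the nikki-fix-headers.py script mentioned in this tutorial, whose contents aren't shown here; the function names and the "-fixed" folder suffix are assumptions for illustration, and the originals are left untouched.

```python
from pathlib import Path

HEADER_BYTES = 8  # number of leading bytes to drop, per the step above


def strip_header(src, dst, count=HEADER_BYTES):
    """Copy src to dst without its first `count` bytes."""
    data = Path(src).read_bytes()
    Path(dst).write_bytes(data[count:])


def fix_folder(folder):
    """Write trimmed copies of every file in `folder` to '<folder>-fixed'."""
    source = Path(folder)
    target = source.with_name(source.name + "-fixed")
    for path in source.rglob("*"):
        if path.is_file():
            out = target / path.relative_to(source)
            out.parent.mkdir(parents=True, exist_ok=True)
            strip_header(path, out)
    return target
```

Writing to a separate folder instead of editing in place means a wrong byte count can be fixed by simply running it again on the untouched copies.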

How to use the script:

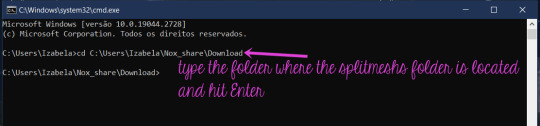

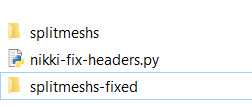

Make sure Python is already installed, grab the nikki-fix-headers.py file and place it in the folder where you copied the folder from the game (mine is still the Nox_Share Download folder).

It should look like this, the meshs folder and the script.

Let's open the Command Prompt. Hit Windows + R to open the Run dialog box, then type in cmd and hit Enter.

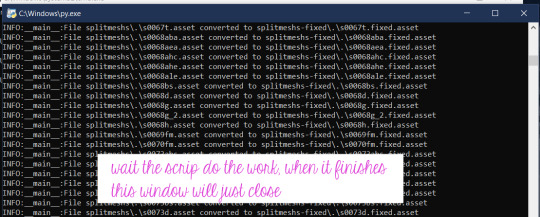

Now follow the instructions pictured below:

The folder with the edited files will be at the same location:

Now, we finally can open it all on AssetStudio and see what's inside 👀

Step 4:

Open AssetStudio. Now click on File > Load Folder and select the folder where your edited meshes are (mine is "splitmeshs-fixed"). Wait for the program to load everything. Click on Filter Type > Mesh, and then on the Asset List tab, click twice on the Name to sort everything in the right order, and now we can see the meshes!

To extract any asset, just select and right-click the desired groups, click on Export selected assets and select a folder where you wish to save it.

Stuff you need to know about the meshes:

Step 4-A: Everything is separated by groups.

Of course you'll have to export everything to have a complete piece. Only a few pieces have a single group. When exporting, you have to select every group with the same name (read below), and the result will be .obj files of each group that you have to put together in a 3D application.

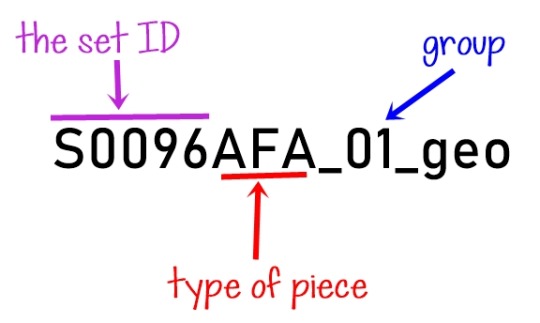

Step 4-B: The names are weird.

They're a code that indicates the set, the piece, the group.

Items that don't belong to a set won't have the "S...something", instead they'll have another letter with numbers, but the part/piece type and group logic is the same.

As for the parts, here are the ones I figured out so far:

D = Dress

H = Hair

AEA = Earrings

ANE = Necklace

BS = Shoes

ABA = Handheld accessory

AHE and AHC = Headpieces/hats/hairpins

AFA = Face accessory (such as glasses, eyepatches, masks)

(maybe I'll update here in the future with the ones I remember)
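If you're sorting a lot of exported groups, the codes above can go into a small lookup table. This is just a sketch: it only knows the codes listed here, it doesn't try to parse full asset names (the exact name layout isn't spelled out in text), and the names PART_CODES and describe_part are made up for illustration.

```python
# Part codes listed above; this table is only as complete as that list.
PART_CODES = {
    "D": "Dress",
    "H": "Hair",
    "AEA": "Earrings",
    "ANE": "Necklace",
    "BS": "Shoes",
    "ABA": "Handheld accessory",
    "AHE": "Headpieces/hats/hairpins",
    "AHC": "Headpieces/hats/hairpins",
    "AFA": "Face accessory",
}


def describe_part(code):
    """Return the part type for a code, or 'Unknown' if it isn't listed."""
    return PART_CODES.get(code.upper(), "Unknown")
```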

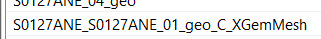

Step 4-C: The "missing pearls" issue.

Often you'll find a group that seems empty, and it has a weird name like this:

I figured out that it refers to pearls that a piece might contain (as in a pearl necklace, or a little pearl in an earring, pearls decorating a dress, etc). The group seems empty, but when you import it to Blender, you can see that it actually has some vertices, and they're located where the aforementioned pearls would be. I think that Unity (SN's engine) uses this to generate/place the pearls from a master mesh, but I honestly have no idea how the game does that. So you'll probably have to model a sphere to place where the pearls were located, I don't know 🤷♀️ (And if you know how to turn the vertices into spheres (???) please let me know!)

Step 5:

Now that you already extracted a mesh, we're gonna extract the textures (when any). Copy the textures > cloth folder to your PC like you did with the splitmeshs folder.

Open it, and in the search box, type the name of the desired item like this. If the item has textures, it will show in the results.

Grab all the files and open them in HxD (I usually just open HxD and drag the files I want to edit there), and edit them like I taught above. Then you can open them (or load the cloth folder) on AssetStudio, and export them like you did with the meshes.

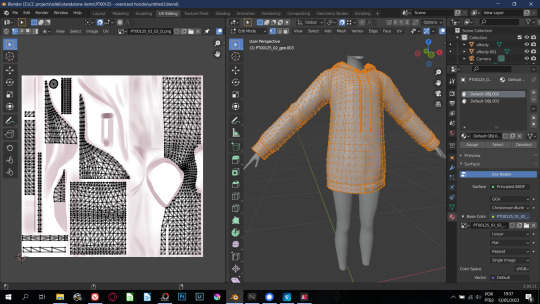

Stuff you need to know about the textures, UV map, etc:

Step 5-A: The UV mapping is a hot mess (at least for us used to how things work in Sims games).

See this half edited hoodie and the UV map for an idea:

So for any Sims game, you'll have to remap everything 🙃 Also, stencil-like textures all have their own separated file.

As for hair, they all use the same texture and mapping! BUT sometimes they are arranged like this...

Here's the example of a very messed one (it even has some WTF poly). Most of them aren't that messy, but be prepared to find stuff like this.

Shining Nikki just repeats the texture so it ends up covering everything. For Sims you'll need to remap, and the easiest way is by selecting "blocks" of hair strands and ticking the magnet button to make your selection snap to what is already placed (if you're familiar with Blender, you know what I'm saying). Oh, some clothes are also mapped with the same logic.

Regarding the hair texture, I couldn't locate where they are, but here is a pack with all of them ripped and ready to use. You can also grab the textures from any SN hair I already converted :)

The only items with a fine UV map are the accessories, at least for TS3, where the accessory has a UV map independent from the body.

"But I typed the ID for the set and piece and couldn't find anything!"

A good thing to do is to search with only the set ID and edit all the files with it, because some items (especially accessories) share the same texture file. But if even then you can't find anything, it means that there's no texture for this particular item/group because Shining Nikki uses material shaders* to render different materials like metal, crystal, some fancy fabrics, etc. So you'll have to bake or paint a texture for it.

*I believe that those shaders are located on the other cloth folder in the game files. This one is way bigger than the other one and once I copied it to see what it was, AssetStudio took ages to load everything, almost used all my 16GB of RAM, and then there was only code that the illiterate me didn't know what it was 🤷♀️

So that was it! I hope I explained everything, although it is a little confusing.

If you have any questions, you can comment on this post or send me a PM!

#sims 3 tutorial#converting stuff for sims#honestly idk what else to tag#reblog so your fave cc creator sees this!#sims 3 how to#sims 3 cas tutorial#sims 3 clothing tutorial#sims 3 hair tutorial

26 notes


Text

Submitted via Google Form:

I made a rough map but then after assigning how much time it takes people to get around with various transports and prices and alternatives as well as trying to make it make sense and not just have transport because. However I've found lots of plot holes and started editing things but one edit makes the rest of the map go awry. So I made a more detailed map so sometimes I don't need to edit the entire thing. But now things are spiralling and I have a massive map with hundreds of points and extreme details that get even more screwed up as I make changes. Uhh. What do I do? Timing and optimising ideal times/budgets to get to places, etc is an important part of the character's actions and plot points.

Tex: The fantastic thing about maps is that you can have more than one of them, each suited to a different subject regarding the same area. A master map with all of the data points that you want is very useful, and will allow you to extract specific information for maps about more focused subjects (i.e. geographical features vs settlements vs train lines vs climate zones vs botanical). This is helpful in that on individual, subject-specific maps, you can coordinate colour keys in order to generate an overall scheme, such as which colours you prefer for greater vs lower densities, or background vs foreground information.

The Wikipedia page on maps contains a lot of useful information in this regard, and I would also recommend making a written outline of the information that you have on your map, in order to organize the information displayed there, keep track of changes, and plan how to group your information.

Licorice: It sounds as if you’re having difficulty deciding whether your map should dictate your plot, or vice versa. If your story were set on Earth, your map would be fixed, and the means of transport would be, to some extent, dictated to you; you’d only have to decide where your characters should go next - Beijing, or New York?

I’m getting the impression that with this story, you’re creating the map and devising the plot simultaneously, and what you’ve ended up with is a map that’s so detailed it has become inconsistent and difficult even for its own creator to make sense of. It sounds as if the map is becoming an obstacle to writing the story rather than an aid to it. At the same time, though, it sounds like you have a much clearer idea of your plot points and where this story is going than you did when you started.

Maybe it’s time to put the map aside and focus on writing the story? Then when you’re done, update the map so it’s fully in line with the story you’ve written.

Alternatively, put the story aside for a moment, draft a new simplified map using everything you’ve learnt about your story so far, and then treat it like something as fixed as a map of Earth - something to which additional details can be added, but nothing can be changed.

I found on tumblr this chart of the daily distances a person can travel using different modes of transport, which may be useful to you:

Realistic Travel Chart

Good luck! Your project sounds fascinating.

Mod Note: I’ll toss in our two previous map masterposts here for reader reference as well

Mapmaking Part 1

Mapmaking Part 2 Mapping Cities and Towns

18 notes


Text

How Google Maps, Spotify, Shazam and More Work

"How does Google Maps use satellites, GPS and more to get you from point A to point B? What is the tech that powers Spotify’s recommendation algorithm?

From the unique tech that works in seconds to power tap-to-pay to how Shazam identifies 23,000 songs each minute, WSJ explores the engineering and science of technology that catches our eye.

Chapters:

0:00 Google Maps

9:07 LED wristbands

14:30 Spotify’s algorithm

21:30 Tap-to-Pay

28:18 Noise-canceling headphones

34:33 MSG Sphere

41:30 Shazam "

Source: The Wall Street Journal

#Tech#Algorithm#WSJ

Additional information:

" How Does Google Map Works?

Google Maps is a unique web-based mapping service brought to you by the tech giant, Google. It offers satellite imagery, aerial photography, street maps, 360° panoramic views of streets, real-time traffic conditions, and route planning for traveling by foot, car, bicycle, or public transportation.

A short history of Google maps:

Google Maps was first launched in February 2005, as a desktop web mapping service. It was developed by a team at Google led by Lars and Jens Rasmussen, with the goal of creating a more user-friendly and accurate alternative to existing mapping services. In 2007, Google released the first version of Google Maps for mobile, which was available for the Apple iPhone. This version of the app was a huge success and quickly became the most popular mapping app on the market. As time has passed, Google Maps has consistently developed and enhanced its capabilities, including the addition of new forms of map data like satellite and aerial imagery and integration with other Google platforms like Google Earth and Google Street View.

In 2013, Google released a new version of Google Maps for the web, which included a redesigned interface and new features like enhanced search and integration with Google+ for sharing and reviewing places.

Today, Google Maps is available on desktop computers and as a mobile app for Android and iOS devices. It is used by millions of people around the world to get directions, find places, and explore new areas.

How does google maps work?

Google Maps works by using satellite and aerial imagery to create detailed maps of the world. These maps are then made available to users through a web-based interface or a mobile app.

When you open Google Maps, you can search for a specific location or browse the map to explore an area. You can also use the app to get directions to a specific place or find points of interest, such as businesses, landmarks, and other points of interest. Google Maps uses a combination of GPS data, user input, and real-time traffic data to provide accurate and up-to-date information about locations and directions. The app also integrates with other Google services, such as Google Earth and Google Street View, to provide additional information and features.

Overall, Google Maps is a powerful tool that makes it easy to find and explore locations around the world. It’s available on desktop computers and as a mobile app for Android and iOS devices.

Google uses a variety of algorithms in the backend of Google Maps to provide accurate and up-to-date information about locations and directions. Some of the main algorithms used by Google Maps include:

Image recognition: Google Maps uses image recognition algorithms to extract useful information from the satellite and street view images used to create the map. These algorithms can recognize specific objects and features in the images, such as roads, buildings, and landmarks, and use this information to create a detailed map of the area.

Machine learning: Google Maps uses machine learning algorithms to analyze and interpret data from a variety of sources, including satellite imagery, street view images, and user data. These algorithms can identify patterns and trends in the data, allowing Google Maps to provide more accurate and up-to-date information about locations and directions.

Geospatial data analysis: Google Maps uses geospatial data analysis algorithms to analyze and interpret data about the earth’s surface and features. This includes techniques like geographic information systems (GIS) and geospatial data mining, which are used to extract useful information from large datasets of geospatial data.

Overall, these algorithms are an essential part of the backend of Google Maps, helping the service to provide accurate and up-to-date information to users around the world.

Google Maps uses a variety of algorithms to determine the shortest path between two points:

Here are some of the algorithms that may be used:

Dijkstra’s algorithm: This is a classic algorithm for finding the shortest path between two nodes in a graph. It works by starting at the source node and progressively exploring the graph, adding nodes to the shortest path as it goes.

A* search algorithm: This is another popular algorithm for finding the shortest path between two points. It works by combining the benefits of Dijkstra’s algorithm with a heuristic function that helps guide the search toward the destination node.

It’s worth noting that Google Maps may use a combination of these algorithms, as well as other specialized algorithms, to determine the shortest path between two points. The specific algorithms used may vary depending on the specifics of the route, such as the distance, the number of turns, and the type of terrain. "

Source: geeksforgeeks.org. You can read the full article at geeksforgeeks.org.
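To make the two shortest-path algorithms described above more concrete, here is a minimal Python sketch. It is an illustration only, not Google's implementation: the `roads` graph, the `estimates` table, and the function name `shortest_path` are all made up for this example. With no heuristic the function behaves as Dijkstra's algorithm; with an admissible heuristic (one that never overestimates the remaining cost) it behaves as A*.

```python
import heapq


def shortest_path(graph, start, goal, heuristic=None):
    """Return (cost, path) from start to goal.

    heuristic=None gives Dijkstra's algorithm. Passing an admissible
    heuristic(node) -> estimated remaining cost gives A* search.
    """
    h = heuristic or (lambda node: 0)
    # Queue entries: (estimated total cost, cost so far, node, path taken).
    frontier = [(h(start), 0, start, [start])]
    best = {start: 0}
    while frontier:
        _, cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # a cheaper route to this node was already processed
        for neighbor, weight in graph.get(node, []):
            new_cost = cost + weight
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(
                    frontier,
                    (new_cost + h(neighbor), new_cost, neighbor, path + [neighbor]),
                )
    return float("inf"), []


# Hypothetical road network: node -> list of (neighbor, travel cost).
roads = {
    "A": [("B", 4), ("C", 2)],
    "B": [("D", 5)],
    "C": [("B", 1), ("D", 8)],
    "D": [],
}
# Hypothetical estimates of remaining cost to "D" (never overestimates).
estimates = {"A": 7, "B": 5, "C": 6, "D": 0}

print(shortest_path(roads, "A", "D"))                 # Dijkstra
print(shortest_path(roads, "A", "D", estimates.get))  # A*
```

Both calls find the same route here. The difference is that the heuristic lets A* prioritize nodes that look closer to the destination, which usually means exploring fewer nodes on a large map.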

#mktmarketing4you#corporatestrategy#marketing#M4Y#lovemarketing#IPAM#ipammarketingschool#ContingencyPlanning#virtual#volunteering#project#Management#Economy#ConsumptionBehavior#BrandManagement#ProductManagement#Logistics#Lifecycle

#Brand#Neuromarketing#McKinseyMatrix#Viralmarketing#Facebook#Marketingmetrics#icebergmodel#EdgarScheinsCultureModel#GuerrillaMarketing #STARMethod #7SFramework #gapanalysis #AIDAModel #SixLeadershipStyles #MintoPyramidPrinciple #StrategyDiamond #InternalRateofReturn #irr #BrandManagement #dripmodel #HoshinPlanning #XMatrix #backtobasics #BalancedScorecard #Product #ProductManagement #Logistics #Branding #freemium #businessmodel #business #4P #3C #BCG #SWOT #TOWS #EisenhowerMatrix #Study #marketingresearch #marketer #marketing manager #Painpoints #Pestel #ValueChain # VRIO #marketingmix

Thank you for following All about Marketing 4 You


Text

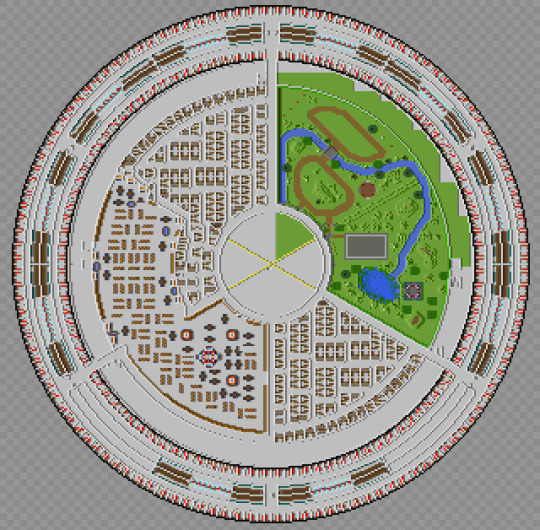

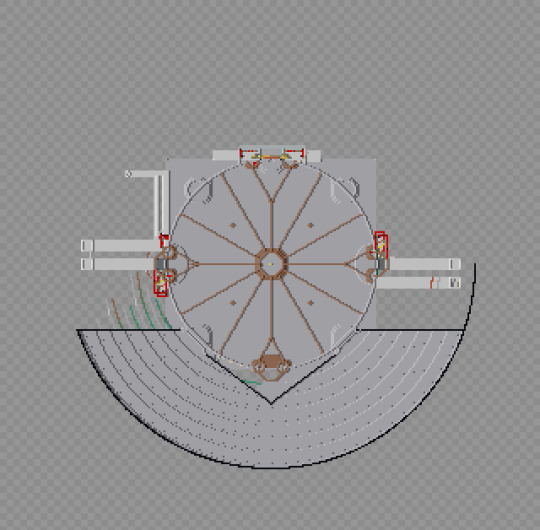

The Scholomance in Minecraft: Final Tour

The build is, for all intents and purposes, done. Now for the final tour.

Okay, let me be blunt, I can't post this here. That tour is 147 images long, not counting the one I'm reusing from the book. So I really insist if you want to see the whole thing, go visit the Imgur post. That said, I did figure out how to do something quite interesting, and I will be sharing it only here, along with some random thoughts and notes. So, who wants to see a map of the Scholomance in Minecraft?

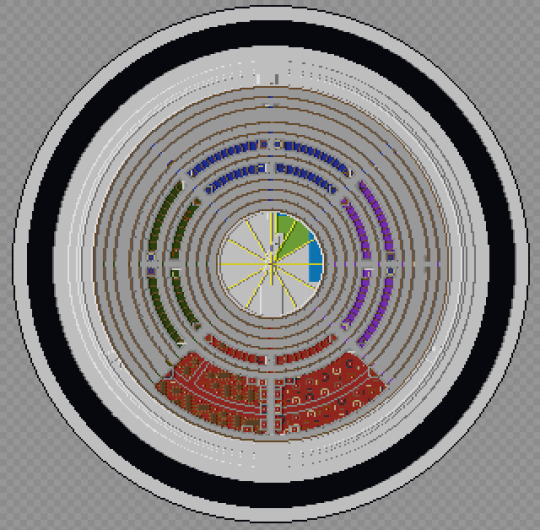

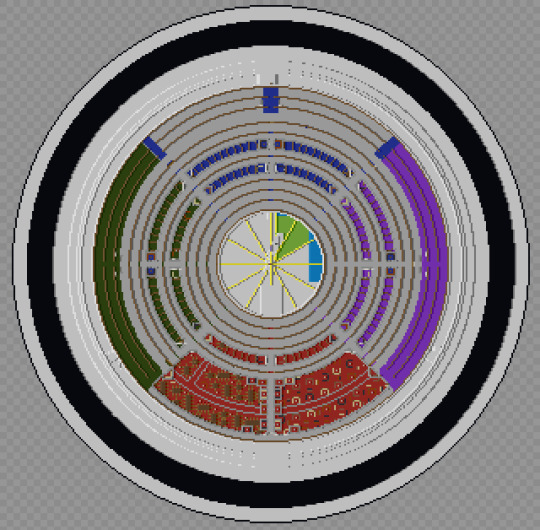

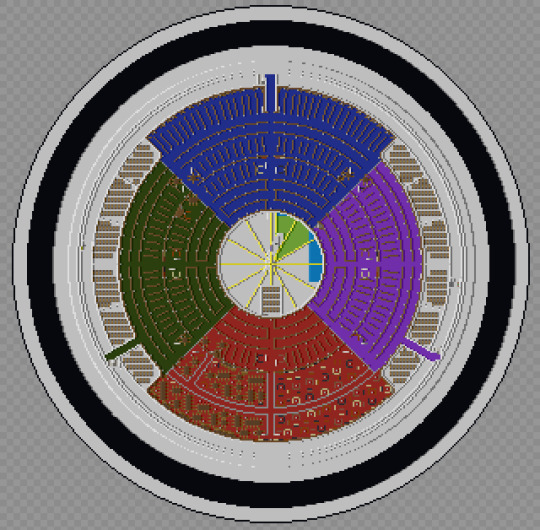

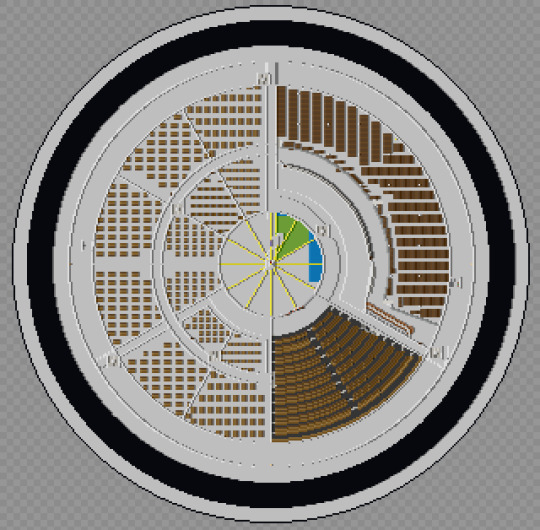

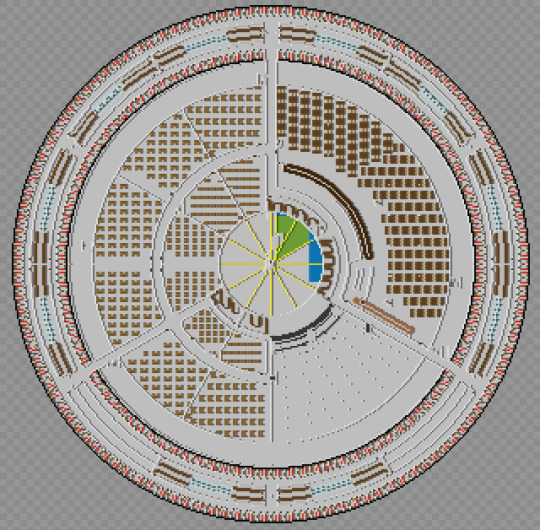

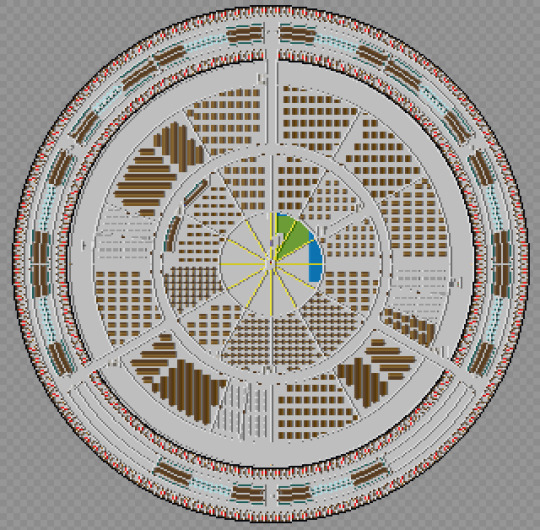

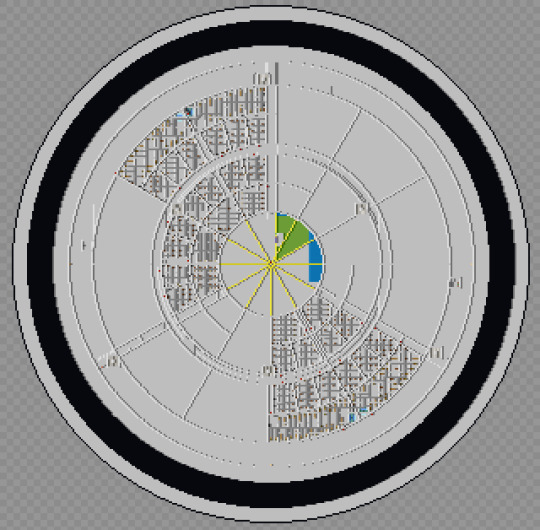

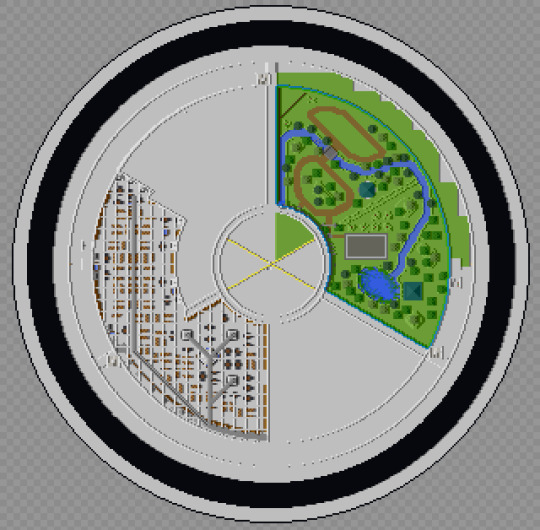

The program is called Mineways, and it lets you extract the data from any Minecraft world and do all sorts of things with it, like import it into 3D modeling software, 3D print it, or just generate some maps. We'll start at the top and work our way down.

This view was almost standard for much of the build and still how I think of the school as a whole. I never removed much of the access stuff I used for building, so you'll see things like that inside the core throughout.

So the school is roughly 120 blocks tall, which puts it at about 40 stories. Minus the library and graduation hall, that means climbing from the senior dorms to the library is the equivalent of climbing about 27 stories. The seniors had to get one hell of a workout just to get lunch, let alone the gym runs. The only thing holding them back from being full-on Olympic athletes is the fact that they were malnourished!

I did some math at one point trying to figure out how many books the library could contain, and surprisingly there are published figures for how many books fit in a cubic meter. My math came up with 116 million. Google claims there are only 156 million books total. I may have messed up my math, but it's still crazy how big the library actually is. I still can't get over it, honestly.

Maleficaria Studies got me wondering something: Who made the mural? The only ones who would ever see the Graduation Hall and its inhabitants would be graduating seniors, and I doubt many took the time to sketch the scene. And even then, how did it get back into the school? And then up on the walls in a way that could be torn down and turned into wire? More than that, what was the original purpose? When they planned for it to be for 800 male enclavers, did they need it to be what it became? Or was it just an auditorium/theater and at one point they had bands or were performing Shakespeare or something?

Speaking of those early days, I think it's pretty clear there had to be some communication from outside the school to inside, if only to the artifice itself. After all, they had to have some way to tell the school to halve the room sizes so it could support more students.

BTW, while writing this I noticed something: see how there are a couple of blank spaces for the bathrooms along the bottom? Yeah, I missed two sets of bathrooms. I did go back and fill those in, I just didn't redo these maps.

Speaking of bathrooms, I decided to make the interior restrooms unisex while the dorm ones were gendered. Why? My theory is that because the dorms were designed to be redone on a regular basis, they weren't hard to fix, but the interior ones couldn't be so easily changed, so instead of separating them by gender, they were just left unisex. So why no urinals? After all, it was originally an all-boys school. Mostly it was easier to build, but also I really couldn't find a ratio between urinals and toilets, and I only got annoyed when the one reference I found said that all-male locations should have more toilets/urinals in total than all-female or even mixed ones. That might explain a lot if that's considered the standard in the building industry.

This image actually shows the grid and vent work in the alchemy labs as it's difficult to see when you're in the school. I really just wanted to point it out. I used this floor to figure out the numbering system for the rooms in the school. As shown here:

I did make an effort to stick to this map, but I'm sure I messed it up somewhere.

I have a theory on the construction of the school. We're told that the doors were meant to be the thing to get people to invest in the larger project, but was it all in one go? I think maybe not. Sure, the grad hall and gates came first, then someone suggested maybe they could build another floor, and then another, and then why not just build the whole thing, and before the enclaves knew what was happening they were building a world wonder. Feature creep at its finest.

The vent work in the Workshop is almost impossible to see from inside the school. I managed to find one spot, right over the power hammer for the final tour post and it's only barely visible. Here though, you can see the whole thing. It's the grey line that runs through it.

The labyrinth isn't quite as wild as the images in the books displayed, in fact it's quite regular from this angle, but when you're inside, getting lost is probably the stupidly easiest thing that can happen. It was mostly intentional, of course, but I'm surprised how well it worked and how easily I got turned around.

I will also say I'm so happy with how the gym ultimately turned out. Oh it could be better, but the stark difference between it and the rest of the school is something I really wanted to make clear. Also if you look close to the edges of the gym and workshop, you can see the current and old shafts, I never did remove the old ones.

The Grad Hall has the most leftover bits in the school. The multiple extra shafts, the remains of the lines for the levels of the landing, and square base of the whole thing. I would love to say I left it as an easter egg or something, but really it was one part laziness and one part really freaking dark down there. If I couldn't see it and know it's there, no one else can see it at all.

Of all the parts of the school, we know both a lot about the Graduation Hall, and almost nothing. Its shape is only hinted at, the general arrangement (entry doors on one side, exit on the other) is vague, and we really don't know where the shafts come in at all. My original build actually had the shafts going up toward the middle of the school, making the whole thing quite narrow indeed, but this felt much more likely to be true. This version feels more right, even if it still remains a bit too small. But only a bit.

And now a gif from the top to bottom. No it doesn't have every layer, but it has a lot of them.

Well that's the end. Link to final file below. Please feel free to download and explore it, modify or just play inside. And if someone gets a server of this running let me know. Hell let me know whatever you do with it, I'm straight up curious.

And since there's a chance she's reading this, thank you for the wonderful series, and I hope my build came at least pretty close to what you imagined, even if it was made in Minecraft.

#the scholomance#the golden enclaves#a deadly education#naomi novik#scholomance#the last graduate#minecraft


Text

What is LeadExtractorPro?

LeadExtractorPro is a software designed to help users extract targeted leads from various online sources quickly and efficiently. Whether you’re looking for email addresses, phone numbers, or social media profiles, this tool simplifies the process and delivers results in minutes. It’s perfect for affiliate marketers, local businesses, e-commerce stores, and anyone who needs a steady stream of high-quality leads.

Key Features:

Extract Leads from Multiple Sources:

The software allows you to scrape leads from platforms like Google Maps, Facebook, Instagram, LinkedIn, and more. This versatility ensures you can find leads relevant to your niche.

Targeted Lead Generation:

You can filter leads based on location, industry, keywords, and other criteria. This ensures you’re only getting high-quality, targeted leads that are more likely to convert.

Email and Phone Number Extraction:

LeadExtractorPro can extract email addresses and phone numbers directly from websites and social media profiles, saving you hours of manual work.

User-Friendly Interface:

The software is incredibly intuitive, even for beginners. The step-by-step process makes it easy to set up and start extracting leads within minutes.

Export Options:

Once you’ve extracted your leads, you can export them in various formats (CSV, Excel, etc.) for use in your email marketing tools, CRMs, or other platforms.

Time-Saving Automation:

The tool automates the entire lead generation process, allowing you to focus on other aspects of your business. No more wasting time on manual searches!

My Experience:

I used LeadExtractorPro to generate leads for my local real estate business, and the results were incredible. Within 30 minutes, I had a list of over 500 targeted leads, complete with email addresses and phone numbers. The best part? The leads were highly relevant to my niche, which meant my outreach efforts were much more effective.

I also tested it for an affiliate marketing campaign, and it worked like a charm. I was able to extract leads from Facebook groups related to my niche, and the engagement on my campaign skyrocketed. The software is fast, accurate, and incredibly reliable.

Who is it For?

LeadExtractorPro is perfect for:

Affiliate marketers looking for targeted leads.

Local businesses (real estate, restaurants, salons, etc.) wanting to reach local customers.

E-commerce store owners needing customer data.

Digital marketers running email or SMS campaigns.

Entrepreneurs who want to grow their email list quickly.

Final Thoughts:

LeadExtractorPro has completely transformed the way I approach lead generation. It’s fast, efficient, and delivers high-quality results every time. If you’re tired of wasting time and money on ineffective lead generation methods, this tool is worth every penny. It’s a no-brainer for anyone serious about growing their business.


Text

How to Use Google Map Data Extractor Software Online - R2Media


Unlock Targeted Leads with Google Map Data Extractor: A Game-Changer for Businesses

In today’s competitive digital landscape, having access to accurate and high-quality business leads is essential for success. Whether you’re a digital marketer, sales professional, or business owner, finding the right prospects can make all the difference. That’s where Google Map Data Extractor from R2Media comes in — a powerful tool designed to automate lead generation by extracting valuable business information directly from Google Maps.

What is Google Map Data Extractor?

Google Map Data Extractor is an advanced software that collects crucial business and contact details from Google Maps. It helps users extract data such as:

🏢 Business names

📍 Addresses

📞 Phone numbers

📧 Email IDs (if available)

🌐 Website URLs

⭐ Ratings and reviews

🏷️ Business categories

🌍 Geographical coordinates (latitude & longitude)

This tool is widely used by businesses looking to expand their reach, generate leads, and optimize marketing campaigns efficiently.

How Does Google Map Data Extractor Work?

The software simplifies the process of data collection with automation, making it easy to extract business details in just a few steps:

🔎 Keyword Input — Enter search queries such as “restaurants in New York” or “real estate agents in Mumbai” into the software.

🤖 Automated Scraping — The tool scans Google Maps and extracts all relevant business information.

🛠️ Data Processing — It filters and organizes the extracted data, removing duplicates and irrelevant entries.

📂 Exporting Data — The data can be exported in CSV or Excel format for seamless integration into marketing or sales tools.

🚀 Utilization — Use the extracted data for lead generation, email marketing, cold calling, competitor analysis, and market research.

By automating this process, businesses can save time and effort while accessing up-to-date and accurate business information. 🚀
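The data processing and export steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it does not scrape anything, the `sample` records and field names are invented, and it says nothing about how R2Media's tool works internally. It simply shows what "removing duplicates and irrelevant entries" and "exporting to CSV" can look like once you already have a list of business records.

```python
import csv
import io


def clean_records(records):
    """Drop entries without a name and de-duplicate on (name, phone)."""
    seen = set()
    cleaned = []
    for rec in records:
        name = (rec.get("name") or "").strip()
        if not name:
            continue  # unusable entry: no business name
        key = (name.lower(), (rec.get("phone") or "").strip())
        if key in seen:
            continue  # same business already kept
        seen.add(key)
        cleaned.append({**rec, "name": name})
    return cleaned


def to_csv(records, fields=("name", "address", "phone")):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical records, as they might look after the extraction step.
sample = [
    {"name": "Cafe Uno", "address": "1 Main St", "phone": "555-0100"},
    {"name": "cafe uno ", "address": "1 Main St", "phone": "555-0100"},
    {"name": "", "address": "Nowhere", "phone": ""},
    {"name": "Bistro Due", "address": "2 Side St", "phone": "555-0101"},
]

print(to_csv(clean_records(sample)))
```

Matching on a lower-cased name plus phone number is just one possible rule for spotting duplicates. Real listings may need fuzzier matching, for example on address.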

How Many Leads Can You Extract in One Search?

The number of leads collected depends on multiple factors:

🔢 Google Maps Search Limit — Google usually displays up to 300 results per search.

💻 Software Capabilities — R2Media’s extractor can run multiple searches to collect thousands of leads.

🎯 Search Filters — Broad searches (e.g., “restaurants in India”) yield more results, while niche searches (e.g., “vegan restaurants in Mumbai”) provide highly targeted leads.

🛡️ Google Restrictions — Excessive scraping in a short period may trigger Google’s security measures. Using proxies or rotating IPs can help avoid limitations.

On average, users can extract hundreds to thousands of leads per session, maximizing their lead generation efforts.

Is It Legal to Use Google Map Data Extractor?

Using data extraction tools falls into a legal gray area. While collecting publicly available business information for personal use, research, and marketing is generally acceptable, users must ensure:

⚖️ Compliance with Google’s Terms of Service — Excessive scraping may violate their policies.

✉️ Ethical Use of Data — Avoid spamming or misusing extracted contact details.

🔐 Data Protection Laws — Adhere to GDPR, CCPA, or local data privacy laws when handling personal data.

To stay compliant, it is recommended to use the tool responsibly and reach out to businesses ethically.

Why Choose R2Media’s Google Map Data Extractor?

⚡ High-speed data extraction with accurate results.

🖥️ User-friendly interface with simple search functionalities.

📊 Ability to export data in multiple formats.

🔍 Advanced filtering for better lead segmentation.

💰 Affordable and scalable solution for businesses of all sizes.

Final Thoughts

If you’re looking for an efficient way to gather targeted business leads, R2Media’s Google Map Data Extractor is a must-have tool. Whether you’re in sales, marketing, or market research, this software helps automate the lead generation process, saving time and effort.

Ready to supercharge your outreach? Try Google Map Data Extractor today and take your business to the next level!

🔗 Learn more here 📺 Watch the tool in action: YouTube Video


Text

Road Map for Data Science: A Complete Guide to Becoming a Data Scientist

In today's digital-first world, data science is one of the most in-demand career paths. Every industry, from healthcare to finance and e-commerce, relies on data-driven decision-making, which makes data science a rewarding and future-proof career.

But how does someone begin? Which tools do you need to know? What kinds of careers exist? This guide acts as a roadmap, showing aspiring data science professionals the path toward a sustainable and thriving career.

Step 1: Knowing What Data Science Is

Data science is essentially the art of extracting insights from data. It combines statistics, programming, and domain expertise to analyze large volumes of information and drive business decisions.

Key Areas in Data Science

To become a good data scientist, one has to be skilled in:

✔ Data Collection & Cleaning: Gathering raw data and making it usable.

✔ Exploratory Data Analysis (EDA): Understanding patterns and trends.

✔ Machine Learning & AI: Building predictive models for automation.

✔ Big Data Technologies: Handling large-scale datasets efficiently.

✔ Data Visualization: Communicating insights effectively using charts and graphs.

As the use of data-driven decision-making becomes more prevalent, the need for individuals to be proficient in these areas increases across all industries.

Step 2: Tools and Technologies to Master

One needs to master tools to execute real-world analytics. Some of the most popular tools applied in the industry are:

Programming Languages: Python (most in-demand), R, SQL

Data Manipulation: Pandas, NumPy

Machine Learning Frameworks: Scikit-learn, TensorFlow, PyTorch

Big Data & Cloud Platforms: Apache Spark, AWS, Google Cloud, Azure

Data Visualization: Matplotlib, Seaborn, Tableau, Power BI

Databases: SQL, MongoDB

A data scientist doesn't need to learn all of these at once. Python, SQL, and a machine learning framework make a good starting point.

Step 3: Establishing a Mathematical and Statistical Backbone

Mathematics and statistics are the backbone of data science. Focus on mastering the following topics:

✅ Linear Algebra: Matrices and vectors are essential in machine learning models.

✅ Probability & Statistics: Used for prediction and for understanding data distributions.

✅ Calculus: Used in the optimization techniques behind machine learning algorithms.

While coding is important, mathematical intuition does help a data scientist build better models and make quality decisions.
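As a small illustration of how calculus shows up in machine learning, here is a minimal sketch of gradient descent in plain Python. It fits a single slope `w` so that `w * x` approximates `y`, using the derivative of the mean squared error to decide which way to adjust `w`. The function name, data, and learning rate are made up for the example; real projects would normally rely on a library such as Scikit-learn rather than hand-written loops.

```python
def fit_slope(xs, ys, learning_rate=0.01, steps=1000):
    """Fit y ~ w * x by gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Derivative of mean((w*x - y)^2) with respect to w
        # is mean(2 * x * (w*x - y)).
        gradient = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * gradient
    return w


# Toy data generated from y = 2 * x, so the fitted slope should approach 2.
xs = [1, 2, 3, 4]
ys = [2, 4, 6, 8]
print(fit_slope(xs, ys))
```

The learning rate controls the step size. Too large and the updates overshoot, too small and convergence is slow, which is the kind of intuition the math background helps with.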

Step 4: Acquiring Practical Experience via Projects

Theoretical knowledge is not sufficient; real-world projects are needed to close the gap between learning and application.

Here's how one can acquire practical experience:

Work on datasets from Kaggle, the UCI Machine Learning Repository, or real-world business cases.

Build machine learning models to predict trends (such as stock prices or customer churn).

Build dashboards using Tableau or Power BI.

Take part in hackathons and competitions, which will really challenge your skills.

A robust portfolio of projects with real-world applications is one of the greatest ways to communicate expertise to prospective employers.

Step 5: Career Opportunities in Data Science

Data scientists are in high demand because businesses see the value of data-driven strategy. Some career paths include the following:

1. Data Scientist

The most common role: builds machine learning models and draws insights from data.

2. Data Analyst

Cleans, visualizes, and reports on data using SQL, Excel, and BI tools.

3. Machine Learning Engineer

Develops and deploys AI-based applications. The role requires deep knowledge of deep learning and cloud platforms.

4. Business Intelligence Analyst

Interprets company data and develops dashboards that guide executives' decisions.

5. AI Research Scientist

Works on emerging AI innovations and model development.

More and more companies are now using AI for automation. With that kind of promise, a career in data science has never looked better.

Data analytics courses, for beginners and for professionals looking to upskill, can help by providing structured learning and industry-specific training.

India and the Global Boom in Data Science

India is on the threshold of a massive shift in data science and AI. With initiatives such as Digital India, AI for All, and Smart Cities, the nation is rapidly adopting big data analytics and AI across industries.

Several key factors are shaping the data science revolution in India:

✔ Growing IT Ecosystem: TCS, Infosys, Wipro, and Accenture are already investing capital in AI solutions.

✔ Booming Startups: India has become a hub for AI startups in fintech, e-commerce, and healthcare.

✔ Government Support: The Indian government is actively investing in AI research and innovation.

✔ Skilled Workforce: India produces thousands of engineers and data scientists every year.

With AI-driven solutions on the rise, the most in-demand skills today are Python, SQL, Pandas, and machine learning.

Step 6: Keeping Up and Networking

Data science is a constantly evolving field. To keep up, professionals must:

✅ Follow AI research papers and case studies.

✅ Join data science communities and forums like Kaggle, GitHub, and Stack Overflow.

✅ Attend conferences and webinars.

✅ Network with industry professionals through LinkedIn and local meetups.

Building connections and staying up-to-date with new tools and trends ensures long-term success in the field.

Conclusion

The road to becoming a data scientist requires dedication, continuous learning, and hands-on experience. By mastering programming, machine learning, statistics, and business intelligence tools, one can build a rewarding career in this high-growth field.

As India continues its AI-driven transformation, the need for skilled professionals will only grow. Whether you are a fresher or an experienced professional, the scope of data science is vast, offering plenty of avenues to innovate, grow, and make a difference.

Start now and be a part of the future of AI and analytics!


Text

Best Informatica Cloud Training in India | Informatica IICS

Cloud Data Integration (CDI) in Informatica IICS

Introduction

In today’s data-driven world, businesses need seamless integration between various data sources, applications, and cloud platforms. Cloud Data Integration (CDI) in Informatica Intelligent Cloud Services (IICS) is a powerful solution that helps organizations efficiently manage, process, and transform data across hybrid and multi-cloud environments. CDI plays a crucial role in modern ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) operations, enabling businesses to achieve high-performance data processing with minimal complexity.

What is Cloud Data Integration (CDI)?

Cloud Data Integration (CDI) is a Software-as-a-Service (SaaS) solution within Informatica IICS that allows users to integrate, transform, and move data across cloud and on-premises systems. CDI provides a low-code/no-code interface, making it accessible for both technical and non-technical users to build complex data pipelines without extensive programming knowledge.

Key Features of CDI in Informatica IICS

Cloud-Native Architecture

CDI is designed to run natively on the cloud, offering scalability, flexibility, and reliability across various cloud platforms like AWS, Azure, and Google Cloud.

Prebuilt Connectors

It provides out-of-the-box connectors for SaaS applications, databases, data warehouses, and enterprise applications such as Salesforce, SAP, Snowflake, and Microsoft Azure.

ETL and ELT Capabilities

Supports ETL for structured data transformation before loading and ELT for transforming data after loading into cloud storage or data warehouses.

Data Quality and Governance

Ensures high data accuracy and compliance with built-in data cleansing, validation, and profiling features.

High Performance and Scalability

CDI optimizes data processing with parallel execution, pushdown optimization, and serverless computing to enhance performance.

AI-Powered Automation

Informatica CLAIRE, an integrated AI-driven metadata intelligence engine, automates data mapping, lineage tracking, and error detection.

Benefits of Using CDI in Informatica IICS

1. Faster Time to Insights

CDI enables businesses to integrate and analyze data quickly, helping data analysts and business teams make informed decisions in real-time.

2. Cost-Effective Data Integration

With its serverless architecture, businesses can eliminate on-premise infrastructure costs, reducing Total Cost of Ownership (TCO) while ensuring high availability and security.

3. Seamless Hybrid and Multi-Cloud Integration

CDI supports hybrid and multi-cloud environments, ensuring smooth data flow between on-premises systems and various cloud providers without performance issues.

4. No-Code/Low-Code Development

Organizations can build and deploy data pipelines using a drag-and-drop interface, reducing dependency on specialized developers and improving productivity.

5. Enhanced Security and Compliance

Informatica ensures data encryption, role-based access control (RBAC), and compliance with GDPR, CCPA, and HIPAA standards, ensuring data integrity and security.

Use Cases of CDI in Informatica IICS

1. Cloud Data Warehousing

Companies migrating to cloud-based data warehouses like Snowflake, Amazon Redshift, or Google BigQuery can use CDI for seamless data movement and transformation.

2. Real-Time Data Integration

CDI supports real-time data streaming, enabling enterprises to process data from IoT devices, social media, and APIs in real-time.

3. SaaS Application Integration

Businesses using applications like Salesforce, Workday, and SAP can integrate and synchronize data across platforms to maintain data consistency.

4. Big Data and AI/ML Workloads

CDI helps enterprises prepare clean and structured datasets for AI/ML model training by automating data ingestion and transformation.

Conclusion

Cloud Data Integration (CDI) in Informatica IICS is a game-changer for enterprises looking to modernize their data integration strategies. CDI empowers businesses to achieve seamless data connectivity across multiple platforms with its cloud-native architecture, advanced automation, AI-powered data transformation, and high scalability. Whether you’re migrating data to the cloud, integrating SaaS applications, or building real-time analytics pipelines, Informatica CDI offers a robust and efficient solution to streamline your data workflows.

For organizations seeking to accelerate digital transformation, adopting Informatica’s Cloud Data Integration (CDI) solution is a strategic step toward achieving agility, cost efficiency, and data-driven innovation.

For More Information about Informatica Cloud Online Training

Contact Call/WhatsApp: +91 7032290546

Visit: https://www.visualpath.in/informatica-cloud-training-in-hyderabad.html

#Informatica Training in Hyderabad#IICS Training in Hyderabad#IICS Online Training#Informatica Cloud Training#Informatica Cloud Online Training#Informatica IICS Training#Informatica Training Online#Informatica Cloud Training in Chennai#Informatica Cloud Training In Bangalore#Best Informatica Cloud Training in India#Informatica Cloud Training Institute#Informatica Cloud Training in Ameerpet


Text

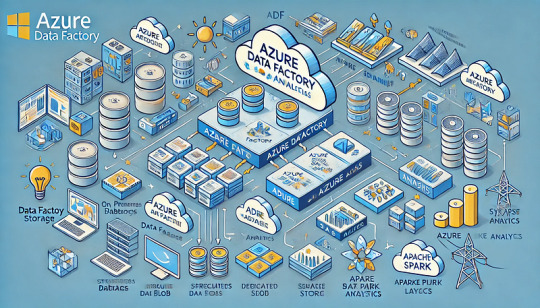

Explore how ADF integrates with Azure Synapse for big data processing.

How Azure Data Factory (ADF) Integrates with Azure Synapse for Big Data Processing

Azure Data Factory (ADF) and Azure Synapse Analytics form a powerful combination for handling big data workloads in the cloud.

ADF enables data ingestion, transformation, and orchestration, while Azure Synapse provides high-performance analytics and data warehousing. Their integration supports massive-scale data processing, making them ideal for big data applications like ETL pipelines, machine learning, and real-time analytics.

Key Aspects of ADF and Azure Synapse Integration for Big Data Processing

1. Data Ingestion at Scale

ADF acts as the ingestion layer, allowing seamless data movement into Azure Synapse from multiple structured and unstructured sources, including:

Cloud Storage: Azure Blob Storage, Amazon S3, Google Cloud Storage

On-Premises Databases: SQL Server, Oracle, MySQL, PostgreSQL

Streaming Data Sources: Azure Event Hubs, IoT Hub, Kafka

SaaS Applications: Salesforce, SAP, Google Analytics

🚀 ADF’s parallel processing capabilities and built-in connectors make ingestion highly scalable and efficient.

2. Transforming Big Data with ETL/ELT

ADF enables large-scale transformations using two primary approaches:

ETL (Extract, Transform, Load): Data is transformed in ADF’s Mapping Data Flows before loading into Synapse.

ELT (Extract, Load, Transform): Raw data is loaded into Synapse, where transformation occurs using SQL scripts or Apache Spark pools within Synapse.

🔹 Use Case: Cleaning and aggregating billions of rows from multiple sources before running machine learning models.
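The difference between the ETL and ELT approaches described above can be sketched with Python's built-in `sqlite3` module. This is a toy illustration only: SQLite stands in for the Synapse warehouse, plain Python stands in for a transformation step such as a Mapping Data Flow, and the table names and `RAW_ROWS` data are invented. It does not use any ADF or Synapse API.

```python
import sqlite3

# Hypothetical raw source rows: messy names, amounts stored as text.
RAW_ROWS = [("  alice ", "10"), ("BOB", "20"), ("alice", "5")]


def run_etl(conn):
    """ETL: clean the rows in application code, then load the cleaned result."""
    cleaned = [(name.strip().lower(), int(amount)) for name, amount in RAW_ROWS]
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", cleaned)


def run_elt(conn):
    """ELT: load the raw rows as-is, then transform inside the database."""
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        "CREATE TABLE sales_elt AS "
        "SELECT lower(trim(customer)) AS customer, "
        "CAST(amount AS INTEGER) AS amount FROM sales_raw"
    )


def totals(conn, table):
    """Aggregate amount per customer from the given table."""
    query = (
        f"SELECT customer, SUM(amount) FROM {table} "
        "GROUP BY customer ORDER BY customer"
    )
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
print(totals(conn, "sales_etl"))
print(totals(conn, "sales_elt"))
```

Both routes end with the same totals. What changes is where the transformation work runs: before the load in ETL, or inside the warehouse's own engine in ELT, which is why ELT tends to suit very large raw datasets.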

3. Scalable Data Processing with Azure Synapse

Azure Synapse provides powerful data processing features:

Dedicated SQL Pools: Optimized for high-performance queries on structured big data.

Serverless SQL Pools: Enables ad-hoc queries without provisioning resources.

Apache Spark Pools: Runs distributed big data workloads using Spark.

💡 ADF pipelines can orchestrate Spark-based processing in Synapse for large-scale transformations.

4. Automating and Orchestrating Data Pipelines

ADF provides pipeline orchestration for complex workflows by:

Automating data movement between storage and Synapse.

Scheduling incremental or full data loads for efficiency.

Integrating with Azure Functions, Databricks, and Logic Apps for extended capabilities.

⚙️ Example: ADF can trigger data processing in Synapse when new files arrive in Azure Data Lake.

5. Real-Time Big Data Processing

ADF enables near real-time processing by:

Capturing streaming data from sources like IoT devices and event hubs.

Running incremental loads to process only new data.

Using Change Data Capture (CDC) to track updates in large datasets.

📊 Use Case: Ingesting IoT sensor data into Synapse for real-time analytics dashboards.
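The idea of an incremental load mentioned above is often implemented with a "high-watermark": remember the latest modification time already copied, and on the next run pick up only rows newer than that. The sketch below shows the pattern in plain Python. It is a simplified assumption-laden example, not ADF's or Synapse's actual Change Data Capture mechanism; the row layout, the integer timestamps, and the `incremental_load` name are all hypothetical.

```python
def incremental_load(source_rows, target, watermark):
    """Copy only rows modified after `watermark`; return the new watermark."""
    new_rows = [row for row in source_rows if row["modified"] > watermark]
    for row in new_rows:
        target[row["id"]] = row  # insert or overwrite by primary key
    # If nothing changed, the watermark stays where it was.
    return max((row["modified"] for row in new_rows), default=watermark)


# Hypothetical sensor readings; integers stand in for timestamps.
source = [
    {"id": 1, "modified": 1, "value": 20.5},
    {"id": 2, "modified": 2, "value": 21.0},
]
target = {}

watermark = incremental_load(source, target, watermark=0)

# Later, sensor 1 reports a new value; only that row is picked up.
source = [
    {"id": 1, "modified": 3, "value": 22.4},
    {"id": 2, "modified": 2, "value": 21.0},
]
watermark = incremental_load(source, target, watermark)
print(watermark, target[1]["value"])
```

In a real pipeline the watermark would be stored somewhere durable between runs, such as a control table, so that a restart does not reprocess old data.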

6. Security & Compliance in Big Data Pipelines

Data Encryption: Protects data at rest and in transit.

Private Link & VNet Integration: Restricts data movement to private networks.

Role-Based Access Control (RBAC): Manages permissions for users and applications.

🔐 Example: ADF can use managed identity to securely connect to Synapse without storing credentials.

Conclusion

The integration of Azure Data Factory with Azure Synapse Analytics provides a scalable, secure, and automated approach to big data processing.

By leveraging ADF for data ingestion and orchestration and Synapse for high-performance analytics, businesses can unlock real-time insights, streamline ETL workflows, and handle massive data volumes with ease.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/
