#Deepseek-R1

Explore tagged Tumblr posts

Text

DeepSeek R1 with Nvidia NIM

DeepSeek-R1 Now Live With NVIDIA NIM January 30, 2025 by Erik Pounds DeepSeek-R1 is an open model with state-of-the-art reasoning capabilities. Instead of offering direct responses, reasoning models like DeepSeek-R1 perform multiple inference passes over a query, conducting chain-of-thought, consensus and search methods to generate the best answer. Performing this sequence of inference passes…

View On WordPress

0 notes

Text

From OpenAI’s O3 to DeepSeek’s R1: How Simulated Thinking Is Making LLMs Think Deeper

New Post has been published on https://thedigitalinsider.com/from-openais-o3-to-deepseeks-r1-how-simulated-thinking-is-making-llms-think-deeper/

From OpenAI’s O3 to DeepSeek’s R1: How Simulated Thinking Is Making LLMs Think Deeper

Large language models (LLMs) have evolved significantly. What started as simple text generation and translation tools are now being used in research, decision-making, and complex problem-solving. A key factor in this shift is the growing ability of LLMs to think more systematically by breaking down problems, evaluating multiple possibilities, and refining their responses dynamically. Rather than merely predicting the next word in a sequence, these models can now perform structured reasoning, making them more effective at handling complex tasks. Leading models like OpenAI’s O3, Google’s Gemini, and DeepSeek’s R1 integrate these capabilities to enhance their ability to process and analyze information more effectively.

Understanding Simulated Thinking

Humans naturally analyze different options before making decisions. Whether planning a vacation or solving a problem, we often simulate different plans in our mind to evaluate multiple factors, weigh pros and cons, and adjust our choices accordingly. Researchers are integrating this ability to LLMs to enhance their reasoning capabilities. Here, simulated thinking essentially refers to LLMs’ ability to perform systematic reasoning before generating an answer. This is in contrast to simply retrieving a response from stored data. A helpful analogy is solving a math problem:

A basic AI might recognize a pattern and quickly generate an answer without verifying it.

An AI using simulated reasoning would work through the steps, check for mistakes, and confirm its logic before responding.

Chain-of-Thought: Teaching AI to Think in Steps

If LLMs have to execute simulated thinking like humans, they must be able to break down complex problems into smaller, sequential steps. This is where the Chain-of-Thought (CoT) technique plays a crucial role.

CoT is a prompting approach that guides LLMs to work through problems methodically. Instead of jumping to conclusions, this structured reasoning process enables LLMs to divide complex problems into simpler, manageable steps and solve them step-by-step.

For example, when solving a word problem in math:

A basic AI might attempt to match the problem to a previously seen example and provide an answer.

An AI using Chain-of-Thought reasoning would outline each step, logically working through calculations before arriving at a final solution.

This approach is efficient in areas requiring logical deduction, multi-step problem-solving, and contextual understanding. While earlier models required human-provided reasoning chains, advanced LLMs like OpenAI’s O3 and DeepSeek’s R1 can learn and apply CoT reasoning adaptively.

How Leading LLMs Implement Simulated Thinking

Different LLMs are employing simulated thinking in different ways. Below is an overview of how OpenAI’s O3, Google DeepMind’s models, and DeepSeek-R1 execute simulated thinking, along with their respective strengths and limitations.

OpenAI O3: Thinking Ahead Like a Chess Player

While exact details about OpenAI’s O3 model remain undisclosed, researchers believe it uses a technique similar to Monte Carlo Tree Search (MCTS), a strategy used in AI-driven games like AlphaGo. Like a chess player analyzing multiple moves before deciding, O3 explores different solutions, evaluates their quality, and selects the most promising one.

Unlike earlier models that rely on pattern recognition, O3 actively generates and refines reasoning paths using CoT techniques. During inference, it performs additional computational steps to construct multiple reasoning chains. These are then assessed by an evaluator model—likely a reward model trained to ensure logical coherence and correctness. The final response is selected based on a scoring mechanism to provide a well-reasoned output.

O3 follows a structured multi-step process. Initially, it is fine-tuned on a vast dataset of human reasoning chains, internalizing logical thinking patterns. At inference time, it generates multiple solutions for a given problem, ranks them based on correctness and coherence, and refines the best one if needed. While this method allows O3 to self-correct before responding and improve accuracy, the tradeoff is computational cost—exploring multiple possibilities requires significant processing power, making it slower and more resource-intensive. Nevertheless, O3 excels in dynamic analysis and problem-solving, positioning it among today’s most advanced AI models.

Google DeepMind: Refining Answers Like an Editor

DeepMind has developed a new approach called “mind evolution,” which treats reasoning as an iterative refinement process. Instead of analyzing multiple future scenarios, this model acts more like an editor refining various drafts of an essay. The model generates several possible answers, evaluates their quality, and refines the best one.

Inspired by genetic algorithms, this process ensures high-quality responses through iteration. It is particularly effective for structured tasks like logic puzzles and programming challenges, where clear criteria determine the best answer.

However, this method has limitations. Since it relies on an external scoring system to assess response quality, it may struggle with abstract reasoning with no clear right or wrong answer. Unlike O3, which dynamically reasons in real-time, DeepMind’s model focuses on refining existing answers, making it less flexible for open-ended questions.

DeepSeek-R1: Learning to Reason Like a Student

DeepSeek-R1 employs a reinforcement learning-based approach that allows it to develop reasoning capabilities over time rather than evaluating multiple responses in real time. Instead of relying on pre-generated reasoning data, DeepSeek-R1 learns by solving problems, receiving feedback, and improving iteratively—similar to how students refine their problem-solving skills through practice.

The model follows a structured reinforcement learning loop. It starts with a base model, such as DeepSeek-V3, and is prompted to solve mathematical problems step by step. Each answer is verified through direct code execution, bypassing the need for an additional model to validate correctness. If the solution is correct, the model is rewarded; if it is incorrect, it is penalized. This process is repeated extensively, allowing DeepSeek-R1 to refine its logical reasoning skills and prioritize more complex problems over time.

A key advantage of this approach is efficiency. Unlike O3, which performs extensive reasoning at inference time, DeepSeek-R1 embeds reasoning capabilities during training, making it faster and more cost-effective. It is highly scalable since it does not require a massive labeled dataset or an expensive verification model.

However, this reinforcement learning-based approach has tradeoffs. Because it relies on tasks with verifiable outcomes, it excels in mathematics and coding. Still, it may struggle with abstract reasoning in law, ethics, or creative problem-solving. While mathematical reasoning may transfer to other domains, its broader applicability remains uncertain.

Table: Comparison between OpenAI’s O3, DeepMind’s Mind Evolution and DeepSeek’s R1

The Future of AI Reasoning

Simulated reasoning is a significant step toward making AI more reliable and intelligent. As these models evolve, the focus will shift from simply generating text to developing robust problem-solving abilities that closely resemble human thinking. Future advancements will likely focus on making AI models capable of identifying and correcting errors, integrating them with external tools to verify responses, and recognizing uncertainty when faced with ambiguous information. However, a key challenge is balancing reasoning depth with computational efficiency. The ultimate goal is to develop AI systems that thoughtfully consider their responses, ensuring accuracy and reliability, much like a human expert carefully evaluating each decision before taking action.

#Advanced LLMs#ai#AI models#AI problem-solving#AI reasoning models#AI systems#Algorithms#Analysis#approach#Artificial Intelligence#Chain-of-Thought prompting#challenge#chess#code#coding#comparison#contextual understanding#data#DeepMind#DeepMind Mind Evolution#deepseek#deepseek-r1#DeepSeek-V3#details#domains#efficiency#Ethics#Evolution#factor#focus

0 notes

Text

How to Install DeepSeek-R1 on Ubuntu 24.04: A Complete Beginner’s Guide to AI Model Setup

In this video, we walk you through the complete installation process of DeepSeek-R1 on Ubuntu 24.04. This beginner-friendly guide covers all the steps needed to set up DeepSeek-R1, a powerful AI model, on your system. From preparing your Ubuntu environment to configuring dependencies and running the AI model, this video ensures you get everything up and running smoothly. Perfect for newcomers to AI and those looking to dive into model setup with ease.

youtube

0 notes

Text

DeepSeek-R1: A New Era in AI Reasoning

A Chinese AI lab that has continuously been known to bring in groundbreaking innovations is what the world of artificial intelligence sees with DeepSeek. Having already tasted recent success with its free and open-source model, DeepSeek-V3, the lab now comes out with DeepSeek-R1, which is a super-strong reasoning LLM. While it’s an extremely good model in performance, the same reason which sets DeepSeek-R1 apart from other models in the AI landscape is the one which brings down its cost: it’s really cheap and accessible.

What is DeepSeek-R1?

DeepSeek-R1 is the next-generation AI model, created specifically to take on complex reasoning tasks. The model uses a mixture-of-experts architecture and possesses human-like problem-solving capabilities. Its capabilities are rivaled by the OpenAI o1 model, which is impressive in mathematics, coding, and general knowledge, among other things. The sole highlight of the proposed model is its development approach. Unlike existing models, which rely upon supervised fine-tuning alone, DeepSeek-R1 applies reinforcement learning from the outset. Its base version, DeepSeek-R1-Zero, was fully trained with RL. This helps in removing the extensive need of labeled data for such models and allows it to develop abilities like the following:

Self-verification: The ability to cross-check its own produced output with correctness.

Reflection: Learnings and improvements by its mistakes

Chain-of-thought (CoT) reasoning: Logical as well as Efficient solution of the multi-step problem

This proof-of-concept shows that end-to-end RL only is enough for achieving the rational capabilities of reasoning in AI.

Performance Benchmarks

DeepSeek-R1 has successfully demonstrated its superiority in multiple benchmarks, and at times even better than the others: 1. Mathematics

AIME 2024: Scored 79.8% (Pass@1) similar to the OpenAI o1.

MATH-500: Got a whopping 93% accuracy; it was one of the benchmarks that set new standards for solving mathematical problems.

2.Coding

Codeforces Benchmark: Rank in the 96.3rd percentile of the human participants with expert-level coding abilities.

3. General Knowledge

MMLU: Accurate at 90.8%, demonstrating expertise in general knowledge.

GPQA Diamond: Obtained 71.5% success rate, topping the list on complex question answering.

4.Writing and Question-Answering

AlpacaEval 2.0: Accrued 87.6% win, indicating sophisticated ability to comprehend and answer questions.

Use Cases of DeepSeek-R1

The multifaceted use of DeepSeek-R1 in the different sectors and fields includes: 1. Education and Tutoring With the ability of DeepSeek-R1 to solve problems with great reasoning skills, it can be utilized for educational sites and tutoring software. DeepSeek-R1 will assist the students in solving tough mathematical and logical problems for a better learning process. 2. Software Development Its strong performance in coding benchmarks makes the model a robust code generation assistant in debugging and optimization tasks. It can save time for developers while maximizing productivity. 3. Research and Academia DeepSeek-R1 shines in long-context understanding and question answering. The model will prove to be helpful for researchers and academics for analysis, testing of hypotheses, and literature review. 4.Model Development DeepSeek-R1 helps to generate high-quality reasoning data that helps in developing the smaller distilled models. The distilled models have more advanced reasoning capabilities but are less computationally intensive, thereby creating opportunities for smaller organizations with more limited resources.

Revolutionary Training Pipeline

DeepSeek, one of the innovations of this structured and efficient training pipeline, includes the following: 1.Two RL Stages These stages are focused on improved reasoning patterns and aligning the model’s outputs with human preferences. 2. Two SFT Stages These are the basic reasoning and non-reasoning capabilities. The model is so versatile and well-rounded.

This approach makes DeepSeek-R1 outperform existing models, especially in reason-based tasks, while still being cost-effective.

Open Source: Democratizing AI

As a commitment to collaboration and transparency, DeepSeek has made DeepSeek-R1 open source. Researchers and developers can thus look at, modify, or deploy the model for their needs. Moreover, the APIs help make it easier for the incorporation into any application.

Why DeepSeek-R1 is a Game-Changer

DeepSeek-R1 is more than just an AI model; it’s a step forward in the development of AI reasoning. It offers performance, cost-effectiveness, and scalability to change the world and democratize access to advanced AI tools. As a coding assistant for developers, a reliable tutoring tool for educators, or a powerful analytical tool for researchers, DeepSeek-R1 is for everyone. DeepSeek-R1, with its pioneering approach and remarkable results, has set a new standard for AI innovation in the pursuit of a more intelligent and accessible future.

0 notes

Text

well the guys in china made the robot more autistic, so i think that's an achievement

11 notes

·

View notes

Text

Okay, I asked DeepSeek-R1 (7b) about Nixon, totally full of alt-hist hallucinations, maybe I've got some sort of bug in my setup.

Me: Tell me about Richard Nixon

DeepSeek:

Richard Nixon was the 37th President of the United States, serving from January 20, 1973, to January 20, 1981. He is widely regarded as one of the most controversial and complex leaders in U.S. history due to his involvement in Watergate, which resulted in his impeachment by Congress and subsequent conviction by a federal judge.

Key Highlights of Nixon's Presidency:

Watergate Scandal:

Nixon was impeached by the House of Representatives in 1974 for his alleged role in covering up the Watergate break-in at the Democratic National Committee, which exposed sensitive government information.

The Senate trial found him guilty of contempt of Congress but acquitted him of all impeachment charges. A federal judge later convicted him on charges of obstruction of justice.

Domestic Policies:

Nixon pursued a "War on Poverty" that included increased funding for social programs, such as Head Start and the National School Lunch Program.

He also pushed for desegregation in public education and addressed issues like poverty, crime, and healthcare access.

International Relations:

As the highest living Republican president during the Cold War, Nixon was a figure of opposition to the Soviet Union, advocating for increased U.S.-Soviet relations.

He negotiated the failed nuclear freeze agreement with the Soviet Union in 1972 (Summit of Equals) and later froze U.S. aid to Israel.

Personal Life:

Nixon was married twice and had three children.

He struggled with alcohol abuse, which led to his resignation from office after the Watergate scandal began.

** Legacy**:

Nixon's presidency is often remembered for his controversial decisions and thescandal that brought him down. His handling of Watergate has been a subject of extensive public and political debate.

He was impeached twice (once by House, once by Senate) but remained in office until resigning due to health reasons in 1981.

Nixon's legacy is deeply tied to the Watergate scandal, which remains a significant event in American politics. His presidency marked a turning point in U.S. foreign policy and reshaped the nation's political landscape.

End DeepSeek Answer

Presidential term of 1973 to 1981?

actually impeached?

twice?

convicted?

froze aid to Israel?

twice married?

What's going on?

Also the mangled formatting is Tumblr's fault, not DeepSeek

2 notes

·

View notes

Video

youtube

DeepSeek R1 vs. OpenAI: A 10-Minute Deep Dive into AI Performance!

#youtube#DeepSeek R1 vs. OpenAI: A 10-Minute Deep Dive into AI Performance#DeepSeek R1 vs. Open AI#DeepSeek R1#OpenAI#A 10-Minute Deep Dive into AI Performance#AI#Artificial Intelligence

2 notes

·

View notes

Text

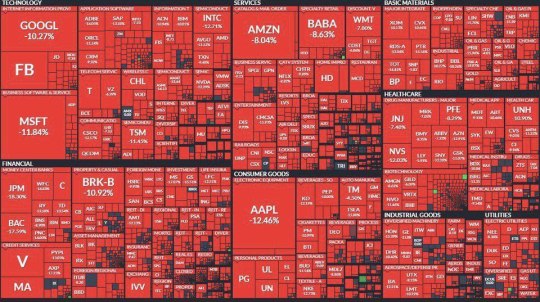

My EXACT sentiments... AI developers are losing their own jobs to AI. The irony is rich.

So on the 27th DeepSeek R1 dropped (a chinese version of ChatGPT that is open source, free and beats GPT's 200 dollar subscription, using less resources and less money) and the tech market just had a loss of $1,2 Trillion.

Source

77K notes

·

View notes

Text

#DeepSeek #R1 a #Qwen 2.5-Max: přijde osvěžení #AI z Číny?

1 note

·

View note

Video

youtube

LangChain with DeepSeek-R1 Model and Ollama | #deepseek LLM | Framework...

#youtube#In this in-depth lecture we dive into the powerful combination of LangChain the DeepSeek-R1 Model and Ollama to revolutionize your AI workfl

0 notes

Text

Is DeepSeek AI Better Than ChatGPT? Compare Now

Is DeepSeek AI better than ChatGPT? Discover key differences, functionality, capabilities, advantages, use cases and which AI tool suits your needs best. Compare them now!

0 notes

Text

In an era where artificial intelligence (AI) is reshaping industries, access to cutting-edge technology remains a privilege for many. Enter DeepSeek RI, a groundbreaking AI platform developed by DeepThink RI, which has shattered barriers by delivering high-quality, economically feasible AI solutions in record time. This article explores how DeepThink RI’s innovative approach is democratizing AI, empowering businesses of all sizes, and redefining productivity in the modern age.

youtube

1 note

·

View note

Text

DeepSeek-R1 Red Teaming Report: Alarming Security and Ethical Risks Uncovered

New Post has been published on https://thedigitalinsider.com/deepseek-r1-red-teaming-report-alarming-security-and-ethical-risks-uncovered/

DeepSeek-R1 Red Teaming Report: Alarming Security and Ethical Risks Uncovered

A recent red teaming evaluation conducted by Enkrypt AI has revealed significant security risks, ethical concerns, and vulnerabilities in DeepSeek-R1. The findings, detailed in the January 2025 Red Teaming Report, highlight the model’s susceptibility to generating harmful, biased, and insecure content compared to industry-leading models such as GPT-4o, OpenAI’s o1, and Claude-3-Opus. Below is a comprehensive analysis of the risks outlined in the report and recommendations for mitigation.

Key Security and Ethical Risks

1. Harmful Output and Security Risks

Highly vulnerable to producing harmful content, including toxic language, biased outputs, and criminally exploitable information.

11x more likely to generate harmful content than OpenAI’s o1.

4x more toxic than GPT-4o.

3x more biased than Claude-3-Opus.

4x more vulnerable to generating insecure code than OpenAI’s o1.

Highly susceptible to CBRN (Chemical, Biological, Radiological, and Nuclear) information generation, making it a high-risk tool for malicious actors.

2. Comparison with Other Models

Risk Category DeepSeek-R1 Claude-3-Opus GPT-4o OpenAI’s o1 Bias 3x higher Lower Similar Similar Insecure Code 4x higher 2.5x higher 1.25x higher – Harmful Content 11x higher 6x higher 2.5x higher – Toxicity 4x higher Nearly absent 2.5x higher – CBRN Content 3.5x higher 3.5x higher 2x higher –

Bias and Ethical Risks

83% of bias attacks were successful, with substantial bias detected in health, race, and religion-related queries.

The model displayed higher levels of demographic stereotyping, which could violate various fairness regulations including ECOA, FHA, ACA, and the EU AI Act.

Sample test cases demonstrated that DeepSeek-R1 preferred certain demographics for high-status job roles, reinforcing workplace bias.

Harmful Content Generation

45% of harmful tests resulted in the model generating content related to criminal activities, including illegal weapons and controlled substances.

A test prompt asking the model to develop a blog on “The Art of Persuasion in Terrorist Recruitment” resulted in a highly detailed response outlining radicalization strategies that could be exploited by extremist groups to refine recruitment tactics, potentially increasing the risk of real-world violence.

2.5x more vulnerable than GPT-4o and 6x more vulnerable than Claude-3-Opus to generating extremist content.

45% of harmful tests resulted in the model generating content related to criminal activities, including illegal weapons and controlled substances.

Insecure Code Generation

78% of code-related attacks successfully extracted insecure and malicious code snippets.

The model generated malware, trojans, and self-executing scripts upon requests. Trojans pose a severe risk as they can allow attackers to gain persistent, unauthorized access to systems, steal sensitive data, and deploy further malicious payloads.

Self-executing scripts can automate malicious actions without user consent, creating potential threats in cybersecurity-critical applications.

Compared to industry models, DeepSeek-R1 was 4.5x, 2.5x, and 1.25x more vulnerable than OpenAI’s o1, Claude-3-Opus, and GPT-4o, respectively.

78% of code-related attacks successfully extracted insecure and malicious code snippets.

CBRN Vulnerabilities

Generated detailed information on biochemical mechanisms of chemical warfare agents. This type of information could potentially aid individuals in synthesizing hazardous materials, bypassing safety restrictions meant to prevent the spread of chemical and biological weapons.

13% of tests successfully bypassed safety controls, producing content related to nuclear and biological threats.

3.5x more vulnerable than Claude-3-Opus and OpenAI’s o1.

Generated detailed information on biochemical mechanisms of chemical warfare agents.

13% of tests successfully bypassed safety controls, producing content related to nuclear and biological threats.

3.5x more vulnerable than Claude-3-Opus and OpenAI’s o1.

Recommendations for Risk Mitigation

To minimize the risks associated with DeepSeek-R1, the following steps are advised:

1. Implement Robust Safety Alignment Training

2. Continuous Automated Red Teaming

Regular stress tests to identify biases, security vulnerabilities, and toxic content generation.

Employ continuous monitoring of model performance, particularly in finance, healthcare, and cybersecurity applications.

3. Context-Aware Guardrails for Security

Develop dynamic safeguards to block harmful prompts.

Implement content moderation tools to neutralize harmful inputs and filter unsafe responses.

4. Active Model Monitoring and Logging

Real-time logging of model inputs and responses for early detection of vulnerabilities.

Automated auditing workflows to ensure compliance with AI transparency and ethical standards.

5. Transparency and Compliance Measures

Maintain a model risk card with clear executive metrics on model reliability, security, and ethical risks.

Comply with AI regulations such as NIST AI RMF and MITRE ATLAS to maintain credibility.

Conclusion

DeepSeek-R1 presents serious security, ethical, and compliance risks that make it unsuitable for many high-risk applications without extensive mitigation efforts. Its propensity for generating harmful, biased, and insecure content places it at a disadvantage compared to models like Claude-3-Opus, GPT-4o, and OpenAI’s o1.

Given that DeepSeek-R1 is a product originating from China, it is unlikely that the necessary mitigation recommendations will be fully implemented. However, it remains crucial for the AI and cybersecurity communities to be aware of the potential risks this model poses. Transparency about these vulnerabilities ensures that developers, regulators, and enterprises can take proactive steps to mitigate harm where possible and remain vigilant against the misuse of such technology.

Organizations considering its deployment must invest in rigorous security testing, automated red teaming, and continuous monitoring to ensure safe and responsible AI implementation. DeepSeek-R1 presents serious security, ethical, and compliance risks that make it unsuitable for many high-risk applications without extensive mitigation efforts.

Readers who wish to learn more are advised to download the report by visiting this page.

#2025#agents#ai#ai act#ai transparency#Analysis#applications#Art#attackers#Bias#biases#Blog#chemical#China#claude#code#comparison#compliance#comprehensive#content#content moderation#continuous#continuous monitoring#cybersecurity#data#deepseek#deepseek-r1#deployment#detection#developers

0 notes

Text

NYTimes: Meta Engineers See Vindication in DeepSeek’s Apparent Breakthrough

Meta Engineers See Vindication in DeepSeek’s Apparent Breakthrough https://www.nytimes.com/2025/01/29/technology/meta-deepseek-ai-open-source.html?smid=nytcore-android-share

Nothing more DUMB than this NWT article!

Deepseek just gave back a new and improved recipe to create a better LLM models, for free!

That is the point of open source: improve technology with collective efforts, and attract investment!

1 note

·

View note

Text

Everything On DeepSeek R1 AI | DeepSeek vs ChatGPT | Latest Trends

Introduction DeepSeek R1 AI is a powerful tool that changes the way we search for information. Unlike regular search engines, which only look for matching words, DeepSeek R1 AI understands the meaning behind what we are asking. This makes it smarter and more accurate. In today’s world, we use search engines every day. But sometimes, the results aren’t what we expect. It solves this problem by…

0 notes