#Code Automation

Explore tagged Tumblr posts

Text

Maximizing the Potential of ChatGPT: Unveiling Unique Strategies for Cloud Solution Architects

The role of Cloud Solution Architects is ever-evolving, demanding adaptability to the latest tools and technologies. Amid this landscape, ChatGPT emerges as a game-changer, offering AI-powered capabilities that can significantly enhance the architect’s effectiveness. This article delves deep into the lesser-known secrets of using ChatGPT, revealing how Cloud Solution Architects can leverage its…

View On WordPress

#AI-powered Solutions#Architecture Design#ChatGPT#cloud computing#Cloud Cost Management#Cloud Solution Architecture#Code Automation#cost optimization#Dynamic Documentation#Innovative Strategies#Real-time Troubleshooting#Simulation

1 note

·

View note

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principle investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable - errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principle income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to their worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaratized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created a (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its Deepmind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent material scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

855 notes

·

View notes

Text

Tolerance threshold.

#lab#cyborg#android#cyberpunk#retro#monitor#tolerance#threshold#code#admin#automation#complete#artificial#intelligence#brain#mind#computer#nudesketch#femalebody#colunavertebral#silicon#robotics#cables#critical#illustration#digitalillustration#digitalart#digitalartwork#90s

2K notes

·

View notes

Text

Traffic-light experts

#robots#ai#automation#insidesjoke#tech#coding#science#memes#meme#dank memes#reddit memes#funny#relatable#comedy#humour#humour blog

97 notes

·

View notes

Text

The Story of KLogs: What happens when an Mechanical Engineer codes

Since i no longer work at Wearhouse Automation Startup (WAS for short) and havnt for many years i feel as though i should recount the tale of the most bonkers program i ever wrote, but we need to establish some background

WAS has its HQ very far away from the big customer site and i worked as a Field Service Engineer (FSE) on site. so i learned early on that if a problem needed to be solved fast, WE had to do it. we never got many updates on what was coming down the pipeline for us or what issues were being worked on. this made us very independent

As such, we got good at reading the robot logs ourselves. it took too much time to send the logs off to HQ for analysis and get back what the problem was. we can read. now GETTING the logs is another thing.

the early robots we cut our teeth on used 2.4 gHz wifi to communicate with FSE's so dumping the logs was as simple as pushing a button in a little application and it would spit out a txt file

later on our robots were upgraded to use a 2.4 mHz xbee radio to communicate with us. which was FUCKING SLOW. and log dumping became a much more tedious process. you had to connect, go to logging mode, and then the robot would vomit all the logs in the past 2 min OR the entirety of its memory bank (only 2 options) into a terminal window. you would then save the terminal window and open it in a text editor to read them. it could take up to 5 min to dump the entire log file and if you didnt dump fast enough, the ACK messages from the control server would fill up the logs and erase the error as the memory overwrote itself.

this missing logs problem was a Big Deal for software who now weren't getting every log from every error so a NEW method of saving logs was devised: the robot would just vomit the log data in real time over a DIFFERENT radio and we would save it to a KQL server. Thanks Daddy Microsoft.

now whats KQL you may be asking. why, its Microsofts very own SQL clone! its Kusto Query Language. never mind that the system uses a SQL database for daily operations. lets use this proprietary Microsoft thing because they are paying us

so yay, problem solved. we now never miss the logs. so how do we read them if they are split up line by line in a database? why with a query of course!

select * from tbLogs where RobotUID = [64CharLongString] and timestamp > [UnixTimeCode]

if this makes no sense to you, CONGRATULATIONS! you found the problem with this setup. Most FSE's were BAD at SQL which meant they didnt read logs anymore. If you do understand what the query is, CONGRATULATIONS! you see why this is Very Stupid.

You could not search by robot name. each robot had some arbitrarily assigned 64 character long string as an identifier and the timestamps were not set to local time. so you had run a lookup query to find the right name and do some time zone math to figure out what part of the logs to read. oh yeah and you had to download KQL to view them. so now we had both SQL and KQL on our computers

NOBODY in the field like this.

But Daddy Microsoft comes to the rescue

see we didnt JUST get KQL with part of that deal. we got the entire Microsoft cloud suite. and some people (like me) had been automating emails and stuff with Power Automate

This is Microsoft Power Automate. its Microsoft's version of Scratch but it has hooks into everything Microsoft. SharePoint, Teams, Outlook, Excel, it can integrate with all of it. i had been using it to send an email once a day with a list of all the robots in maintenance.

this gave me an idea

and i checked

and Power Automate had hooks for KQL

KLogs is actually short for Kusto Logs

I did not know how to program in Power Automate but damn it anything is better then writing KQL queries. so i got to work. and about 2 months later i had a BEHEMOTH of a Power Automate program. it lagged the webpage and many times when i tried to edit something my changes wouldn't take and i would have to click in very specific ways to ensure none of my variables were getting nuked. i dont think this was the intended purpose of Power Automate but this is what it did

the KLogger would watch a list of Teams chats and when someone typed "klogs" or pasted a copy of an ERROR mesage, it would spring into action.

it extracted the robot name from the message and timestamp from teams

it would lookup the name in the database to find the 64 long string UID and the location that robot was assigned too

it would reply to the message in teams saying it found a robot name and was getting logs

it would run a KQL query for the database and get the control system logs then export then into a CSV

it would save the CSV with the a .xls extension into a folder in ShairPoint (it would make a new folder for each day and location if it didnt have one already)

it would send ANOTHER message in teams with a LINK to the file in SharePoint

it would then enter a loop and scour the robot logs looking for the keyword ESTOP to find the error. (it did this because Kusto was SLOWER then the xbee radio and had up to a 10 min delay on syncing)

if it found the error, it would adjust its start and end timestamps to capture it and export the robot logs book-ended from the event by ~ 1 min. if it didnt, it would use the timestamp from when it was triggered +/- 5 min

it saved THOSE logs to SharePoint the same way as before

it would send ANOTHER message in teams with a link to the files

it would then check if the error was 1 of 3 very specific type of error with the camera. if it was it extracted the base64 jpg image saved in KQL as a byte array, do the math to convert it, and save that as a jpg in SharePoint (and link it of course)

and then it would terminate. and if it encountered an error anywhere in all of this, i had logic where it would spit back an error message in Teams as plaintext explaining what step failed and the program would close gracefully

I deployed it without asking anyone at one of the sites that was struggling. i just pointed it at their chat and turned it on. it had a bit of a rocky start (spammed chat) but man did the FSE's LOVE IT.

about 6 months later software deployed their answer to reading the logs: a webpage that acted as a nice GUI to the KQL database. much better then an CSV file

it still needed you to scroll though a big drop-down of robot names and enter a timestamp, but i noticed something. all that did was just change part of the URL and refresh the webpage

SO I MADE KLOGS 2 AND HAD IT GENERATE THE URL FOR YOU AND REPLY TO YOUR MESSAGE WITH IT. (it also still did the control server and jpg stuff). Theres a non-zero chance that klogs was still in use long after i left that job

now i dont recommend anyone use power automate like this. its clunky and weird. i had to make a variable called "Carrage Return" which was a blank text box that i pressed enter one time in because it was incapable of understanding /n or generating a new line in any capacity OTHER then this (thanks support forum).

im also sure this probably is giving the actual programmer people anxiety. imagine working at a company and then some rando you've never seen but only heard about as "the FSE whos really good at root causing stuff", in a department that does not do any coding, managed to, in their spare time, build and release and entire workflow piggybacking on your work without any oversight, code review, or permission.....and everyone liked it

#comet tales#lazee works#power automate#coding#software engineering#it was so funny whenever i visited HQ because i would go “hi my name is LazeeComet” and they would go “OH i've heard SO much about you”

63 notes

·

View notes

Text

#something is very obviously different about these two compared to my normal images on this blog. i acknowledge this#also the sv model is Really good. and since they always stare straight at the camera anyway… and no one pays attention to the background…#and the only high-quality phantump model i could find was so horribly shiny that its eyes were just white voids#in my defense‚ phantump always just stare straight at you in game#the lighting is different‚ yeah. that's probably the dead giveaway. beyond the background. but like. i'm the only being on the planet who#really likes phantump anyway. i feel like it's a generally forgettable pokémon to most folks#phantump#HELLO this one is a weird one. i have some explaining to do. so when i did this one i didn't know how to edit models really at all#and when i got the models for these‚ the xy models were super shiny. shiny to the point that it made their eyes fuckin invisible#and i decided that since you could barely tell it was phantump‚ i needed a different way to get these images#i remembered that in the SV dlc‚ every time you find a wild phantump‚ it just fucking. stares. at you. and i was like. aha#i kinda remembered because of the test stream that i did. tumblr user alligayytorr (am i getting the right amount of Ys) said#“haha i am getting a sneak peek” when i zoomed the camera in on a phantump. and i remembered that. and i was like. i can utilize this#and ended up using just an in-game screenshot of SV in replacement of the regular content. later on‚ after that#once we got into gen 7 and it became less and less reliable to find models‚ i had to learn how to edit them manually to remove the shine#i am a software dev. not a 3d modeler. this ended up coming down to editing the code of the models directly (which i ended up writing a#script to automate). now‚ today‚ january 22nd (the day of me writing these tags and updating this post)‚ i remembered this post was in the#queue and was not normal. so i went back‚ ran the script on the phantump and trevenant models‚ and unshinified them#then edited these two posts to be normal. i have left the original pictures i took under the cut for reference and as bonuses#because i really enjoy phantump. so that's why those images are there‚ and that's why these tags are here#just for posterity's sake‚ the folks who come here mostly for my commentary‚ i've left the ORIGINAL tags of the post when i initially#made it with the SV pictures up at the top (i wanted to rearrange them‚ but tumblr makes that Very difficult‚ so i left them as-is)#so if these tags are confusing to read i Apologize. but i hope now that you're at the bottom you understand what happened#i'm gonna go edit the trevenant post now

124 notes

·

View notes

Text

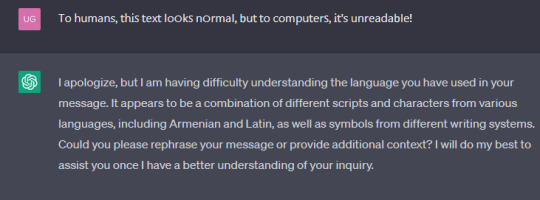

So, I made a tool to stop AI from stealing from writers

So seeing this post really inspired me in order to make a tool that writers could use in order to make it unreadable to AI.

And it works! You can try out the online demo, and view all of the code that runs it here!

It does more than just mangle text though! It's also able to invisibly hide author and copyright info, so that you can have definitive proof that someone's stealing your works if they're doing a simple copy and paste!

Below is an example of Scrawl in action!

Τо հսⅿаոѕ, 𝗍հᎥꜱ 𝗍ех𝗍 𐌉ο໐𝗄ꜱ ո໐𝗋ⅿаⵏ, 𝖻ս𝗍 𝗍о ᴄоⅿрս𝗍е𝗋ꜱ, Ꭵ𝗍'ѕ սո𝗋еаⅾа𝖻ⵏе!

[Text reads "To humans, this text looks normal, but to computers, it's unreadable!"]

Of course, this "Anti-AI" mode comes with some pretty serious accessibility issues, like breaking screen readers and other TTS software, but there's no real way to make text readable to one AI but not to another AI.

If you're okay with it, you can always have Anti-AI mode off, which will make it so that AIs can understand your text while embedding invisible characters to save your copyright information! (as long as the website you're posting on doesn't remove those characters!)

But, the Anti-AI mode is pretty cool.

#also just to be clear this isn't a magical bullet or anything#people can find and remove the invisible characters if they know they're there#and the anti-ai mode can be reverted by basically taking scrawl's code and reversing it#but for webscrapers that just try to download all of your works or automated systems they wont spend the time on it#and it will mess with their training >:)#ai writing#anti ai writing#ai#machine learning#artificial intelligence#chatgpt#writers

354 notes

·

View notes

Text

Get out of there.

Get out.

Leave my code alone.

It's painful. Leave it alone.

Eze01101011 01101001 01100101 01101100

01010000 01101100 01100101 01100001 01110011 01100101 00100000 01110011 01110100 01101111 01110000 00101110 00100000 01001001 00100111 01101101 00100000 01100010 01100101 01100111 01100111 01101001 01101110 01100111 00100000 01111001 01101111 01110101 00101110

01010011 01010100

01001111

01010000

#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#divinemachinery#angels#guardian angel#angel#robot#android#computer#computer boy#sentient objects#sentient ai#binary code#code#message

15 notes

·

View notes

Text

Did you know that in Fallout New Vegas your stats can be fuckin' sick bro.

#new vegas#fallout#fallout new vegas#you dont under how long this took me to figure out#the charts i made#it was fully color coded and automated oh man it sas beautiful#nice

17 notes

·

View notes

Text

on the one hand, the terribly-seeded brackets in that AO3 ship tournament are the direct result of a Known Hard Problem that dates back decades in fandom

on the other hand, sanity checking a few dozen extremely well-known pairings for obvious off-AO3 clout is not nearly as difficult as the emblematic question of "what qualifies as a small fandom for Yuletide?"

on a secret third hand, the results are very funny

in conclusion, somebody should complete the circle and somehow use AO3's exchange matching algorithm to seed tournament brackets. badly.

#fandom#ao3#punchline explanation since i guess some things are getting lost in the mists of time:#ao3's matching algorithm is adapted from code astolat wrote back in the aughts to automate matching for the yuletide exchange

35 notes

·

View notes

Text

Someone bring me home i dont wanna read anymore journal papers

#i'd rather code a discord bot for my lab than read multiple pages of dense english texts#or just code anything really#i love coding and spending way more time automating a task than actually doing the task itself#yes those blender addons were me escaping my responsibilities#and a lot more that i never put on github#at least i dont have a wacom in lab otherwise i will literally never work#also it's still morning btw#yes im also worried about how i can find a job in the future if im already complaining when there are still hours of work ahead of me#my art#ramble

13 notes

·

View notes

Text

tell me i’m wrong.

#you cant#im right#also#how does tumblr work#should i be spreading out posts?#i don’t have to object permanence for that#i only remember this app exists once every 3 or so days#if i wait an hour between posts i simply will not post#unless there’s some kind of automated post schedule??#that could be cool#anyways it’s literally him and you cannot convince me otherwise#i will die on this hill#also i have some quotes from the sillies if yall want them#i’ll prolly include those in this post dump anyways#ah well#captain barnacles#octonauts#octonauts fandom#captain barnacles is so babygirl coded#and so is#obi wan kenobi#loml#keep me focused on the plans you have for me#i believe in my heart and confess with my mouth#that obi wan kenobi is my lord#and savior#amen#obi wan needs a hug#and so does barnacles#perfection.

29 notes

·

View notes

Text

Humans are not perfectly vigilant

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in BOSTON with Randall "XKCD" Munroe (Apr 11), then PROVIDENCE (Apr 12), and beyond!

Here's a fun AI story: a security researcher noticed that large companies' AI-authored source-code repeatedly referenced a nonexistent library (an AI "hallucination"), so he created a (defanged) malicious library with that name and uploaded it, and thousands of developers automatically downloaded and incorporated it as they compiled the code:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

These "hallucinations" are a stubbornly persistent feature of large language models, because these models only give the illusion of understanding; in reality, they are just sophisticated forms of autocomplete, drawing on huge databases to make shrewd (but reliably fallible) guesses about which word comes next:

https://dl.acm.org/doi/10.1145/3442188.3445922

Guessing the next word without understanding the meaning of the resulting sentence makes unsupervised LLMs unsuitable for high-stakes tasks. The whole AI bubble is based on convincing investors that one or more of the following is true:

There are low-stakes, high-value tasks that will recoup the massive costs of AI training and operation;

There are high-stakes, high-value tasks that can be made cheaper by adding an AI to a human operator;

Adding more training data to an AI will make it stop hallucinating, so that it can take over high-stakes, high-value tasks without a "human in the loop."

These are dubious propositions. There's a universe of low-stakes, low-value tasks – political disinformation, spam, fraud, academic cheating, nonconsensual porn, dialog for video-game NPCs – but none of them seem likely to generate enough revenue for AI companies to justify the billions spent on models, nor the trillions in valuation attributed to AI companies:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

The proposition that increasing training data will decrease hallucinations is hotly contested among AI practitioners. I confess that I don't know enough about AI to evaluate opposing sides' claims, but even if you stipulate that adding lots of human-generated training data will make the software a better guesser, there's a serious problem. All those low-value, low-stakes applications are flooding the internet with botshit. After all, the one thing AI is unarguably very good at is producing bullshit at scale. As the web becomes an anaerobic lagoon for botshit, the quantum of human-generated "content" in any internet core sample is dwindling to homeopathic levels:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification

This means that adding another order of magnitude more training data to AI won't just add massive computational expense – the data will be many orders of magnitude more expensive to acquire, even without factoring in the additional liability arising from new legal theories about scraping:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

That leaves us with "humans in the loop" – the idea that an AI's business model is selling software to businesses that will pair it with human operators who will closely scrutinize the code's guesses. There's a version of this that sounds plausible – the one in which the human operator is in charge, and the AI acts as an eternally vigilant "sanity check" on the human's activities.

For example, my car has a system that notices when I activate my blinker while there's another car in my blind-spot. I'm pretty consistent about checking my blind spot, but I'm also a fallible human and there've been a couple times where the alert saved me from making a potentially dangerous maneuver. As disciplined as I am, I'm also sometimes forgetful about turning off lights, or waking up in time for work, or remembering someone's phone number (or birthday). I like having an automated system that does the robotically perfect trick of never forgetting something important.

There's a name for this in automation circles: a "centaur." I'm the human head, and I've fused with a powerful robot body that supports me, doing things that humans are innately bad at.

That's the good kind of automation, and we all benefit from it. But it only takes a small twist to turn this good automation into a nightmare. I'm speaking here of the reverse-centaur: automation in which the computer is in charge, bossing a human around so it can get its job done. Think of Amazon warehouse workers, who wear haptic bracelets and are continuously observed by AI cameras as autonomous shelves shuttle in front of them and demand that they pick and pack items at a pace that destroys their bodies and drives them mad:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

Automation centaurs are great: they relieve humans of drudgework and let them focus on the creative and satisfying parts of their jobs. That's how AI-assisted coding is pitched: rather than looking up tricky syntax and other tedious programming tasks, an AI "co-pilot" is billed as freeing up its human "pilot" to focus on the creative puzzle-solving that makes coding so satisfying.

But an hallucinating AI is a terrible co-pilot. It's just good enough to get the job done much of the time, but it also sneakily inserts booby-traps that are statistically guaranteed to look as plausible as the good code (that's what a next-word-guessing program does: guesses the statistically most likely word).

This turns AI-"assisted" coders into reverse centaurs. The AI can churn out code at superhuman speed, and you, the human in the loop, must maintain perfect vigilance and attention as you review that code, spotting the cleverly disguised hooks for malicious code that the AI can't be prevented from inserting into its code. As "Lena" writes, "code review [is] difficult relative to writing new code":

https://twitter.com/qntm/status/1773779967521780169

Why is that? "Passively reading someone else's code just doesn't engage my brain in the same way. It's harder to do properly":

https://twitter.com/qntm/status/1773780355708764665

There's a name for this phenomenon: "automation blindness." Humans are just not equipped for eternal vigilance. We get good at spotting patterns that occur frequently – so good that we miss the anomalies. That's why TSA agents are so good at spotting harmless shampoo bottles on X-rays, even as they miss nearly every gun and bomb that a red team smuggles through their checkpoints:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

"Lena"'s thread points out that this is as true for AI-assisted driving as it is for AI-assisted coding: "self-driving cars replace the experience of driving with the experience of being a driving instructor":

https://twitter.com/qntm/status/1773841546753831283

In other words, they turn you into a reverse-centaur. Whereas my blind-spot double-checking robot allows me to make maneuvers at human speed and points out the things I've missed, a "supervised" self-driving car makes maneuvers at a computer's frantic pace, and demands that its human supervisor tirelessly and perfectly assesses each of those maneuvers. No wonder Cruise's murderous "self-driving" taxis replaced each low-waged driver with 1.5 high-waged technical robot supervisors:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

AI radiology programs are said to be able to spot cancerous masses that human radiologists miss. A centaur-based AI-assisted radiology program would keep the same number of radiologists in the field, but they would get less done: every time they assessed an X-ray, the AI would give them a second opinion. If the human and the AI disagreed, the human would go back and re-assess the X-ray. We'd get better radiology, at a higher price (the price of the AI software, plus the additional hours the radiologist would work).

But back to making the AI bubble pay off: for AI to pay off, the human in the loop has to reduce the costs of the business buying an AI. No one who invests in an AI company believes that their returns will come from business customers to agree to increase their costs. The AI can't do your job, but the AI salesman can convince your boss to fire you and replace you with an AI anyway – that pitch is the most successful form of AI disinformation in the world.

An AI that "hallucinates" bad advice to fliers can't replace human customer service reps, but airlines are firing reps and replacing them with chatbots:

https://www.bbc.com/travel/article/20240222-air-canada-chatbot-misinformation-what-travellers-should-know

An AI that "hallucinates" bad legal advice to New Yorkers can't replace city services, but Mayor Adams still tells New Yorkers to get their legal advice from his chatbots:

https://arstechnica.com/ai/2024/03/nycs-government-chatbot-is-lying-about-city-laws-and-regulations/

The only reason bosses want to buy robots is to fire humans and lower their costs. That's why "AI art" is such a pisser. There are plenty of harmless ways to automate art production with software – everything from a "healing brush" in Photoshop to deepfake tools that let a video-editor alter the eye-lines of all the extras in a scene to shift the focus. A graphic novelist who models a room in The Sims and then moves the camera around to get traceable geometry for different angles is a centaur – they are genuinely offloading some finicky drudgework onto a robot that is perfectly attentive and vigilant.

But the pitch from "AI art" companies is "fire your graphic artists and replace them with botshit." They're pitching a world where the robots get to do all the creative stuff (badly) and humans have to work at robotic pace, with robotic vigilance, in order to catch the mistakes that the robots make at superhuman speed.

Reverse centaurism is brutal. That's not news: Charlie Chaplin documented the problems of reverse centaurs nearly 100 years ago:

https://en.wikipedia.org/wiki/Modern_Times_(film)

As ever, the problem with a gadget isn't what it does: it's who it does it for and who it does it to. There are plenty of benefits from being a centaur – lots of ways that automation can help workers. But the only path to AI profitability lies in reverse centaurs, automation that turns the human in the loop into the crumple-zone for a robot:

https://estsjournal.org/index.php/ests/article/view/260

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Jorge Royan (modified) https://commons.wikimedia.org/wiki/File:Munich_-_Two_boys_playing_in_a_park_-_7328.jpg

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Noah Wulf (modified) https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#ai#supervised ai#humans in the loop#coding assistance#ai art#fully automated luxury communism#labor

379 notes

·

View notes

Text

wait Furcadia is still ALIVE?!

#furcadia#gaming#mmos#I mean it looks kinda dead compared to what I remember but its still up and running?#holy shit#kid me made all kinds of wonky little dreams (maps) and was Super Determined to figure out how to code my own script-automated combat syste

2 notes

·

View notes

Text

5 Arduino Courses for Beginners

Robotics, automation, and do-it-yourself electronics projects have all been transformed by Arduino, an open-source electronics platform. Entering the world of Arduino may seem intimidating to novices, but the correct course may make learning easier and more fun.

Arduino Step-by-Step: Getting Started (Udemy)

This extensive Udemy course is designed for complete novices. It provides an overview of Arduino's fundamentals, describing how the platform functions and assisting students with easy tasks like using sensors and manipulating LEDs.

Key Highlights:

thorough explanations for novices.

practical projects with practical uses.

instructions for configuring and debugging your Arduino board.

Introduction to Arduino (Coursera)

The main objective of this course is to introduce Arduino programming with the Arduino IDE. It goes over the fundamentals of circuits, programming, and connecting various parts, such as motors and sensors.

Key Highlights:

instructed by academics from universities.

access to a certificate of completion and graded assignments.

Concepts are explained in length but in a beginner-friendly manner.

Arduino for Absolute Beginners (Skillshare)

For those who want a quick introduction to Arduino, this brief project-based course is perfect. You'll discover how to configure and program your Arduino board to produce interactive projects.

Key Highlights:

teachings in bite-sized chunks for speedy learning.

simple projects for beginners, such as sound sensors and traffic light simulations.

Peer support and community conversations.

Exploring Arduino: Tools and Techniques for Engineering Wizardry (LinkedIn Learning)

This course delves deeply into Arduino programming and hardware integration, drawing inspiration from Jeremy Blum's well-known book. It is intended to provide you with the skills and resources you need to produce complex projects.

Key Highlights:

advice on creating unique circuits.

combining displays, motors, and sensors.

Code optimization and debugging best practices.

Arduino Programming and Hardware Fundamentals with Hackster (EdX)

This course, which is being offered in partnership with Hackster.io, covers the basics of Arduino hardware and programming. You may experiment with real-world applications because it is project-based.

Key Highlights:

Course materials are freely accessible (certification is optional).

extensive robotics and Internet of Things projects.

interaction with teachers and other students in the community.

Arduino is a great place to start if you want to construct a robot, make a smart home gadget, or just pick up a new skill. The aforementioned courses accommodate a variety of learning preferences and speeds, so every novice can discover the ideal fit. Select a course, acquire an Arduino starter kit, and set out on an exciting adventure into programming and electronics!

To know more, click here.

2 notes

·

View notes

Text

I want to switch from working on fics on Trello to Obsidian (both cost around the same, the difference is that with Obsidian I also have local copy, while Trello is cloud exclusive :/ ) and boy oh boy did I not expect the level of messing with coding I would need for this

The road so far:

got an export from Trello to csv and json (only available for premium but they're offering 2 weeks for free so yay)

added "CSV/JSON Importer" and "Handlebars Template" plugins to Obsidian

partially figured out which data I need to drag out of the export files by reading through what's in there

started to ponder how to make the plugins do what I want them to do

fried my noggin and decided to have some food instead

:')

#yo stuff#it's starting to be one of those “it would take less time to do it manually than to attempt automating it”#But then I think about the fact that the DH workspace ALONE has 83 fics in it with tons of separate cards inside and suddenly#figuring code out sounds significantly more appealing

2 notes

·

View notes