#Amazon EC2


Text

What is Amazon EC2? What are the Benefits, Types, and Different Pricing Models of AWS EC2?

Did you know that Amazon EC2 (Elastic Compute Cloud) serves more than a million active customers across 245 countries and territories, making it one of the most popular cloud services in the world?

This comprehensive guide is for advanced users, DevOps professionals, beginners, and engineers who are looking to harness the power of AWS EC2 to enhance their cloud infrastructure.


Text

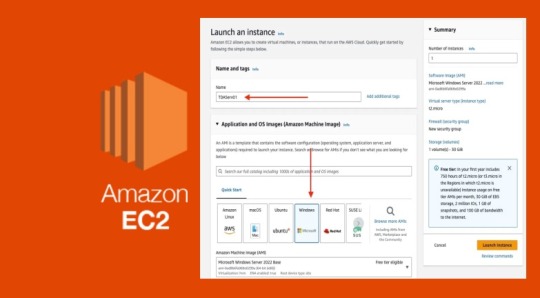

How to create an EC2 Instance

Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides secure, resizable computing capacity in the cloud. Amazon EC2 offers many options that help you build and run virtually any application. With these possibilities, getting started with EC2 is quick and easy to do. In this article, we shall discuss how to create an EC2 Instance. Please see the Various ways to restart an AWS…


#Amazon EC2#Amazon Elastic Compute Cloud#Amazon Web Services#AWS#EC2#EC2 instance#EC2 Instances#EC2 Launch#EC2Launch


Text

Amazon EC2 M7i Instances Hypercharge Cloud AI

Amazon EC2 M7i Instances

In this second post of a three-part series, Intel demonstrates how the latest Amazon EC2 M7i and M7i-flex instances with 4th Generation Intel Xeon Scalable processors can support your AI, ML, and DL workloads. The first post introduced these new instances and their broad benefits. This post looks at how AI, ML, and DL workloads perform on them and how Intel CPUs can help.

One study values the AI industry at $136.55 billion and predicts 37.3% annual growth through 2030. While you could credit the increase to visible AI applications such as Google’s and Tesla’s self-driving cars, the advertising and media sector dominates the worldwide AI market. AI and ML/DL workloads are everywhere and growing. Cloud service providers (CSPs) like Amazon Web Services (AWS) are investing in AI/ML/DL services and infrastructure to help organizations adopt these workloads more readily. Hosting instances with 4th Generation Intel Xeon Scalable CPUs and AI accelerators is one such investment.

This article explains why Intel CPUs and these AWS instances are well suited to AI workloads, using two typical ML/DL model types to show how the instances performed.

Amazon EC2 M7i and M7i-flex with 4th Gen Intel Xeon Scalable Processors

As mentioned in the previous post, Amazon EC2 offers M7i and M7i-flex instances with the newest Intel Xeon CPUs. The primary difference is that M7i-flex offers variable performance at a reduced price. This post concentrates on regular Amazon EC2 M7i instances, which suit sustained, compute-intensive applications such as training or running machine learning models. M7i instances range from 2 to 192 vCPUs to cover different requirements. Each instance can attach up to 128 EBS volumes, providing ample storage for your dataset. The newest Intel Xeon processors include several built-in accelerators to boost task performance.

For better deep learning performance, all Amazon EC2 M7i instances include the Intel Advanced Matrix Extensions (AMX) accelerator. Intel AMX lets customers run AI tasks on the AMX instruction set while keeping non-AI workloads on the standard CPU ISA. Intel has optimized its oneAPI Deep Neural Network Library (oneDNN) to make AMX easier for developers to use, and open-source AI frameworks such as PyTorch, TensorFlow, and ONNX support this API. In Intel's testing, 4th Gen Intel Xeon Scalable processors with AMX delivered 10 times the inference performance of earlier CPUs.
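Before tuning for AMX, it can help to confirm that the instance actually exposes it. The sketch below is one way to check on Linux, assuming the kernel reports the `amx_tile`, `amx_bf16`, and `amx_int8` feature flags in `/proc/cpuinfo` (recent kernels do on AMX-capable CPUs). The function name is illustrative and not part of any Intel or AWS tool.

```python
from pathlib import Path

# Linux CPU feature flags that indicate Intel AMX support.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags_in(cpuinfo_text: str) -> set[str]:
    """Return the AMX-related flags found in /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        # On x86 Linux the feature list sits on lines starting with "flags".
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            return AMX_FLAGS & set(value.split())
    return set()


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # Linux only; absent on other systems
    found = amx_flags_in(path.read_text()) if path.exists() else set()
    print("AMX flags:", sorted(found) or "none detected")
```

If no flags are detected, frameworks built on oneDNN would be expected to fall back to other instruction sets, so it is worth checking before comparing benchmark numbers.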

Engineers and developers should tune their AI, ML, and DL workloads on the newest Amazon EC2 M7i instances with Intel AMX to maximize performance. Intel offers an AI tuning guide to help take advantage of Intel processor features across numerous popular models and frameworks. The guide covers OS-level optimizations as well as optimizations for PyTorch, TensorFlow, OpenVINO, and others. The Intel Model Zoo GitHub site contains pre-trained AI, ML, and DL models pre-validated for Intel hardware, AI workload optimization guidance, best practices, and more.

Having seen how Intel and the newest Intel Xeon processors can improve AI, ML, and DL workloads, let’s look at how these instances perform on object detection and natural language processing.

Models for detecting objects

Object detection models drive image-classification applications. This category includes models for 3D medical scans, self-driving car cameras, face recognition, and more. This post discusses ResNet-50 and RetinaNet.

A 50-layer CNN powers ResNet-50, an image recognition deep learning model. Once trained, the model identifies and categorizes objects in images. The ResNet-50 models in the Intel Model Zoo and elsewhere are trained on the large ImageNet image dataset. Most object detection models have one or two stages, with two-stage models being more accurate but slower. ResNet-50 and RetinaNet are single-stage models, although RetinaNet’s Focal Loss function improves accuracy without sacrificing speed.

How quickly these models need to process images depends on the use case. End users don’t want to wait long for a device to recognize them and unlock. Farmers must discover plant diseases and insect incursions immediately, before they damage crops. Intel’s testing with the MLPerf RetinaNet model shows that Amazon EC2 M7i instances process 4.11 times more samples per second than M6i instances.

As the vCPU count rises, ResNet-50 performance scales well, so you can maintain high performance regardless of dataset and instance size. An Amazon EC2 M7i instance with 192 vCPUs has eight times the ResNet-50 throughput of a 16-vCPU instance. Higher-performing instances also provide better value: in RetinaNet testing, Amazon EC2 M7i instances processed 4.49 times more samples per dollar than M6i instances. These findings indicate that Amazon EC2 M7i instances with 4th Gen Intel Xeon Scalable CPUs are a strong fit for object detection deep learning tasks.
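To put the ResNet-50 scaling figure in perspective, the short sketch below compares the quoted speedup with ideal linear scaling. The function name is illustrative; the only inputs are the vCPU counts and the 8x throughput figure quoted above.

```python
def scaling_efficiency(small_vcpus: int, large_vcpus: int, speedup: float) -> float:
    """Fraction of ideal linear scaling achieved when moving to the larger size."""
    ideal_speedup = large_vcpus / small_vcpus
    return speedup / ideal_speedup


# Figures quoted above: 192 vCPUs deliver 8x the throughput of 16 vCPUs.
resnet50 = scaling_efficiency(small_vcpus=16, large_vcpus=192, speedup=8.0)
print(f"ResNet-50 scaling efficiency: {resnet50:.0%}")  # prints 67%
```

Twelve times the vCPUs for eight times the throughput is about two thirds of linear scaling, which is worth keeping in mind when choosing between one large instance and several smaller ones.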

Natural Language Models

You’re probably using natural language processing engines when you ask a search engine or chatbot a question. NLP models learn real speech patterns to comprehend and interact with language. BERT machine learning models can interpret and contextualize text in addition to storing and presenting it. Word processors and phone messaging apps now predict text based on what users have typed. Small firms that don’t run Google Search still benefit from chatbots for first customer contacts, and they need a chatbot that is clear, fast, and accurate.

Chatbots and other NLP applications demand real-time execution, so speed is crucial. With Amazon EC2 M7i instances and 4th Generation Intel Xeon processors, NLP models like BERT and RoBERTa (an optimized variant of BERT) perform better. One benchmark found that Amazon EC2 M7i instances running RoBERTa processed 10.65 times more sentences per second than Graviton-based M7g instances with the same vCPU count. BERT testing with the MLPerf suite showed that throughput scaled well as the vCPU count of Amazon EC2 M7i instances increased, with the 192-vCPU instance attaining almost 4 times the throughput of the 32-vCPU instance.

The Intel AMX accelerator in the 4th Gen Intel Xeon Scalable CPUs helps the Amazon EC2 M7i instances perform well. Intel gives customers what they need to improve NLP workloads through publicly accessible models pre-optimized for Intel processors and tuning instructions for particular models like BERT. As with RetinaNet, the performance advantage carried over to value: Amazon EC2 M7i instances delivered 8.62 times the performance per dollar of M7g instances.

Conclusion

For AI, ML, and DL, cloud decision-makers should consider Amazon EC2 M7i instances with 4th Generation Intel Xeon Scalable CPUs. These instances offer Intel AMX acceleration and are backed by tuning guides and optimized models for many typical ML applications, delivering up to 10 times the throughput of Graviton-based M7g instances. Watch for further articles on how the newest Amazon EC2 M7i and M7i-flex instances can serve different workloads.

Read more on Govindhtech.com


Video


Lambda Lab | Start and Stop EC2 Instances using Lambda Function | Tech A...


Text

code

#codeonedigest#cloud#aws#docker container#java#nodejs#javascript#docker image#dockerfile#docker file#ec2#ecs#elastic container service#elastic cloud computing#amazon ec2#amazon ecs#microservice#solid principle#python#kubernetes#salesforce#shopify#microservice design pattern#solid principles#java design pattern


Text

Which AWS Services Are Available for Cloud Computing?: "Cloud Computing with AWS: An Overview of the Different Services"

#CloudComputing #AWS #AmazonEC2 #AmazonS3 #AmazonRDS #AWSElasticBeanstalk #AWSLambda #AmazonRedshift #AmazonKinesis #AmazonECS #AmazonLightsail #AWSFargate Discover the different AWS services for cloud computing and get familiar with their advantages and disadvantages!

Cloud computing is a revolutionary technology in many respects. It gives companies the opportunity to turn their data center into a cost-effective, reliable, and flexible infrastructure. With cloud computing, companies can access their data center resources on any platform, at any time, and from anywhere. Amazon Web Services (AWS) is one of the leading…


#Amazon EC2#Amazon ECS#Amazon Kinesis#Amazon Lightsail#Amazon RDS#Amazon Redshift#Amazon S3#AWS Elastic Beanstalk#AWS Fargate.#AWS Lambda


Video


Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
   - What are IAM roles?
   - Why should you use IAM roles instead of hardcoding access keys?
   - How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
   - Launching an EC2 instance and attaching the IAM role.
   - Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
   - Step-by-step commands to upload files to an S3 bucket.
   - Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
   - Retrieving objects stored in your S3 bucket using AWS CLI.
   - How to test and verify successful downloads.

5. Deleting Files in S3:
   - Securely deleting files from an S3 bucket.
   - Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
   - Using least privilege policies in IAM roles.
   - Encrypting files in transit and at rest.
   - Monitoring and logging using AWS CloudTrail and S3 access logs.
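As an illustration of the least-privilege point, here is a minimal sketch of a policy document that limits the role to listing, reading, writing, and deleting objects in a single bucket. The bucket name `example-bucket` and the helper function are placeholders, not taken from the video; the policy a real role needs may differ.

```python
import json


def s3_object_policy(bucket: str) -> dict:
    """Build a policy allowing list/get/put/delete on one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # Object operations apply to keys inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


# "example-bucket" is a placeholder name.
print(json.dumps(s3_object_policy("example-bucket"), indent=2))
```

With a policy like this, `aws s3 ls s3://example-bucket` should work, while a bare `aws s3 ls` (listing every bucket in the account) would additionally need `s3:ListAllMyBuckets`.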

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

1. Create an S3 Bucket
   - Navigate to the S3 console and create a new bucket with a unique name.
   - Configure bucket permissions for private or public access as needed.

2. Configure IAM Role
   - Create an IAM role with an S3 access policy.
   - Attach the role to your EC2 instance to avoid hardcoding credentials.

3. Launch and Connect to an EC2 Instance
   - Launch an EC2 instance with the IAM role attached.
   - Connect to the instance using SSH.

4. Install AWS CLI and Configure
   - Install AWS CLI on the EC2 instance if not pre-installed.
   - Verify access by running `aws s3 ls` to list available buckets.

5. Perform File Operations
   - Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
   - Download files: Use `aws s3 cp` to download files from S3 to EC2.
   - Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

6. Cleanup
   - Delete test files and terminate resources to avoid unnecessary charges.
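The file operations in step 5 can be sketched as a small wrapper around the AWS CLI. This is only an illustration: the bucket, key, and file names are placeholders, and the helper functions are hypothetical. It assumes the AWS CLI is installed and that the instance's IAM role supplies credentials, so no access keys appear anywhere.

```python
import subprocess


def upload_cmd(local_path: str, bucket: str, key: str) -> list[str]:
    """Arguments for copying a local file to S3."""
    return ["aws", "s3", "cp", local_path, f"s3://{bucket}/{key}"]


def download_cmd(bucket: str, key: str, local_path: str) -> list[str]:
    """Arguments for copying an S3 object to a local file."""
    return ["aws", "s3", "cp", f"s3://{bucket}/{key}", local_path]


def delete_cmd(bucket: str, key: str) -> list[str]:
    """Arguments for deleting an S3 object."""
    return ["aws", "s3", "rm", f"s3://{bucket}/{key}"]


def run(cmd: list[str]) -> None:
    """Execute a command; raises CalledProcessError on a non-zero exit."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in (
        upload_cmd("app.log", "example-bucket", "logs/app.log"),
        download_cmd("example-bucket", "logs/app.log", "app-copy.log"),
        delete_cmd("example-bucket", "logs/app.log"),
    ):
        print(" ".join(cmd))
```

On an instance with the role attached, replacing `print(" ".join(cmd))` with `run(cmd)` would perform the operations for real.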

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube#aws iam#iam role aws#aws#aws permission#aws iam roles#aws cloud#aws s3#identity & access management#aws iam policy#Download#and Delete Files in Amazon#IAMrole#AWS#cloudolus#S3#EC2


Text

Amazon Elastic Block Store, commonly known as EBS, is a significant component of Amazon Web Services (AWS) storage services, offering a versatile and reliable solution for managing data storage in the cloud. EBS allows users to create and attach block storage volumes to Amazon Elastic Compute Cloud (EC2) instances, making it an integral part of building scalable and high-performance applications on the AWS platform.

#amazon elastic block store#ebs#amazon web services#aws#ec2#amazon elastic compute cloud#cybersecurity#security#infosectrain#learntorise


Text

Amazon Web Services & Adobe Experience Manager: A Journey Together (Part 6)

In the previous parts (1, 2, 3, 4, and 5) we discussed how a digital marketing leader met a friend, AWS, in the cloud, and how the two became a very popular pair that brought many gifts to digital marketers. We then toured the basement and structure of the digital marketing leader's house, mainly the CRX repository and the way its MK is organized. We looked at how the two can live together and which smaller modules they use for architectural benefits, and we saw how they are structured together to deliver more.

In this part, we will look at the eCommerce side of this combination.

Before entering this section of the house, let's look at the chemistry between eCommerce and content.

One picture in the house presents the AEM capabilities that supply the content eCommerce requires.

Enhanced shopping experience with Adobe Commerce

With Adobe Commerce, companies can integrate digital and physical shopping experiences.

It offers best-in-class, cloud-based omnichannel solutions, including in-store, retail associate, and order management technologies.

Adobe Commerce speeds up provisioning so your e-commerce can scale globally. It also offers flexibility for B2C and B2B experiences built on a headless, extensible architecture.

Adobe Commerce’s extensibility platform

Adobe Commerce’s extensibility platform and AWS services—such as Amazon Personalize, Amazon Connect, and Analytics on AWS — deliver omnichannel fulfillment and self-service.

Campaign Management Across Multichannel

Amazon AppFlow works with Marketo Engage to securely transfer data between AWS services and cloud applications.

The fully managed integration enables organizations to expand the features of Marketo Engage. Organizations select sales Leads and Activities objects in Marketo and move them into AWS services such as Amazon S3, Amazon Redshift, or Amazon Lookout for Metrics.

Organizations can also sync objects with CRM, Snowflake, and Upsolver and create new Leads objects in Marketo based on this data.

Adobe Experience Platform can generate value from data stored in AWS Analytics solutions. Data can be ingested from various sources, such as Adobe applications, cloud-based storage, and databases.

Adobe Workfront: Marketing Process Optimizer

Adobe Workfront and AWS can serve as the blueprint for a marketing project, from campaign completion to process optimization.

Adobe Workfront delivers a 285% return on investment (ROI) for enterprise organizations. Adobe Workfront and AWS provide an enterprise-grade, cloud-based work management platform that enables marketers to plan, predict, collaborate, evolve, and deliver their best work.

All necessary metadata is retained for real-time cataloging in Adobe Workfront, so campaign teams can streamline processes while maintaining visibility across all stages of the project development process.

Workfront Fusion: The Workflow Automation Way

Adobe Workfront Fusion is a powerful integration platform that quickly connects an organization's business-critical applications, whether or not they are built on AWS Cloud, by automating workflows and processes. This lets teams focus on core business solutions without tying up developers in building integrations or providing ongoing maintenance and engineering support.

With Adobe Workfront Fusion, data and assets can flow freely across systems and teams in either direction to boost productivity.

Summary of the steps above:

Adobe Photoshop: When the content editing process is complete, the project status is updated in Adobe Workfront.

Workfront Fusion: Triggers a workflow to route the finished assets to relevant business applications, such as applications built on the AWS cloud with Amazon S3 and Lambda.

Amazon S3 events: Trigger an AWS Lambda function to resize the image.

The workflow then updates the finished content on the web, mobile, tablet, and so on.
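The S3-to-Lambda step can be sketched as a Python handler that reads the bucket and object key from each record of an S3 event notification. This is a rough outline, not the pipeline described in the post: the `resized/` prefix and the `resized_key` helper are hypothetical, and the actual image resizing is left as a comment because it depends on the image library you choose.

```python
from urllib.parse import unquote_plus


def resized_key(key: str, prefix: str = "resized/") -> str:
    """Hypothetical naming scheme for the resized copy."""
    return prefix + key


def handler(event: dict, context: object) -> list[dict]:
    """Collect the bucket and key of each object named in an S3 event."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        jobs.append({"bucket": bucket, "source": key, "target": resized_key(key)})
        # The actual resize (download, scale, upload) would go here.
    return jobs
```

If the resized image is written back to the same bucket, the trigger would need a prefix or suffix filter so that the function's own output does not invoke it again.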

In this journey we walked through how AEM and AWS support Adobe eCommerce in providing solutions to transform your business digitally, so that an eCommerce solution can deliver holistic, personalized experiences at scale, tailoring each moment of the eCommerce journey.

For more details on this interesting journey, you can browse back through the earlier parts, 1 to 5.

#aem#adobe#aws#wcm#cloud#programing#aws lambda#ec2#elb#security#adobe photoshop#adobephotoshop#Adobe eCommerce#AWS Lambda#amazon s3#workflow management#workflow automation#workflow optimization#marketing#content#professional#online#Adobe Workfront Fusion#business-critical#Adobe Workfront#campaign management#campaign optimization#Adobe Experience Platform#AWS Analytics#Adobe Analytics


Text

How to set up a scalable web server on AWS EC2 with auto-scaling

Did you know that by 2025, the global cloud computing market is projected to reach a staggering $623.3 billion? With the increasing demand for cloud services, it's crucial for businesses to optimize their infrastructure for scalability and reliability. One of the fundamental aspects of achieving this is setting up a scalable web server. In this comprehensive guide, we'll dive deep into the process of setting up a scalable web server on AWS EC2 with auto-scaling.

👉 Scalable Web Server Setup on AWS EC2 with Auto-Scaling: Required Resources, Benefits, Tips & Strategies


Text

Deep Dive into Protecting AWS EC2, RDS Instances and VPC

Veeam Backup for Amazon Web Services (Veeam Backup for AWS or VBAWS) protects and facilitates disaster recovery for Amazon Elastic Compute Cloud (EC2), Amazon Relational Database Service (RDS), Amazon DynamoDB, and Amazon Elastic File System (EFS) environments. In this article, we shall deep dive into Protecting AWS EC2 and RDS Instances and VPC (Amazon Virtual Private Cloud) configurations as…


#Amazon S3 Object Storage#AWS RDS Backups with Veeam#Database Backups#Protecting AWS EC2 and RDS#RDS Automated Backup#Veeam#Veeam Backup and Replication#Veeam Backup for AWS


Text

Qualcomm Cloud AI 100 Lifts AWS’s Latest EC2!

Qualcomm Cloud AI 100 in AWS EC2

The general availability of the new Amazon Elastic Compute Cloud (Amazon EC2) DL2q instances brings the Qualcomm Cloud AI 100 to AWS and marks the first significant milestone in Qualcomm’s technology collaboration with AWS. The Amazon EC2 DL2q instances are the first to deploy the Qualcomm artificial intelligence (AI) solution in the cloud.

The Qualcomm Cloud AI 100 accelerator’s multi-core architecture is both scalable and flexible, making it suitable for a broad variety of use-cases, including:

Large Language Models (LLMs) and Generative AI: Supporting models with up to 16B parameters on a single card and 8x that in a single DL2q instance, LLMs address use cases related to creativity and productivity.

Classic AI: This includes computer vision and natural language processing.

Qualcomm recently showcased a variety of applications using AWS EC2 DL2q instances powered by Qualcomm Cloud AI 100 at AWS re:Invent 2023:

A conversational AI that uses the 7B-parameter Llama 2 LLM.

Text-to-image generation using the Stable Diffusion model.

Simultaneous transcription of multiple audio streams using the Whisper Lite model.

Translation between several languages using the transformer-based Opus model.

Nakul Duggal, SVP & GM, Automotive & Cloud Computing at Qualcomm Technologies, Inc., stated, “Working with AWS is empowering us to build on our established industry leadership in high-performance, low-power deep learning inference acceleration technology.” The work done so far shows how well cloud technologies can be integrated into software development and deployment cycles.

An affordable revolution in AI

EC2 customers can run inference on a variety of models with best-in-class performance-per-total cost of ownership (TCO) thanks to the Amazon EC2 DL2q instance. As an illustration:

For DL inference models, a price-performance advantage of up to 50% compared with the latest generation of GPU-based Amazon EC2 instances.

For CV-based security, a reduction of more than three times in the number of inference cards, resulting in a significantly more affordable system solution.

Optimization for models that are 2.5 times smaller, such as Deci.ai models, on Qualcomm Cloud AI 100.

The Qualcomm AI Stack, which offers a consistent developer experience across Qualcomm AI in the cloud and other Qualcomm products, is a feature of the DL2q instance.

The DL2q instances and Qualcomm edge devices are powered by the same Qualcomm AI Stack and base AI technology, giving users a consistent developer experience with a single application programming interface (API) across their:

Cloud,

Automotive,

Personal computing,

Extended reality, and

Smartphone development environments.

Customers can use the AWS Deep Learning AMI (DLAMI), which includes popular machine learning frameworks like PyTorch and TensorFlow along with Qualcomm’s SDK prepackaged.

Read more on Govindhtech.com


Text

Dawn of the Dark Knight

"The Bat signal has been up for the last 15 minutes. Why didn't we get the alert?" Bruce was not happy about the delay. This signal is lit up only in the worst scenarios, and in such cases, every second counts.

"I am not sure, Master Wayne. I'll investigate that while you're away meeting Commissioner Gordon," Alfred said as he pulled up the logs for the Bat signal notification system.

"Make sure we are not hacked. That's the last thing I want." Batman left the Batcave in his Batmobile.

"You're late. I hope everything is alright?" Commissioner Gordon expressed concern since Batman was running late.

"Nothing for you to be concerned about," Batman said in his heavy voice. "Tell me why you summoned me."

"It's Joker again. He's kidnapped the mayor. He wants us to release Harley Quinn from Arkham Asylum," Commissioner Gordon explained, his expression reflecting concern.

Batman checked his Batwatch for the live feed on Harley Quinn, but to his dismay, the data did not load. "Strange," he murmured.

"Everything okay?" Gordon was getting anxious from Batman's expression.

"You needn't worry about me. Tell me what information we have thus far," Batman replied, refocusing the conversation.

"We have limited leads on Joker's location. He expects us to bring Quinn to Gotham Port. And..." Gordon paused, awaiting Batman's response.

"And what?" Batman inquired, still grappling with the malfunctioning Batwatch feed.

"He expects you to bring her there. He said he will release the mayor once he sees both of you at the Northern block of Gotham Port."

"No issues. I'll handle that. I'll meet you at the Asylum. Just tell me Harley's room." Batman assured Gordon.

Gordon went through the papers he held in his folder and read out, "Cell number 234, 8th floor, 3rd Block." As he lifted his face up after reading Harley's records, Batman was nowhere to be seen. Despite having seen the act a thousand times already, Gordon was still impressed.

Breaking Quinn out of the Asylum was nothing new to Batman. Within an hour, he was en route to Gotham Port with an unconscious Harley in the Batmobile.

"Alfred, what's going on with the Batwatch? The feed isn't updating properly, and our BatCall app is malfunctioning,” Bruce asked Alfred over Facetime, as the BatCall wasn't functioning properly.

"I'm uncertain, sir. I've thoroughly examined the logs, and it doesn't appear that we are being hacked. However, I'm investigating other possible issues," Alfred responded diligently.

"Damn it, Alfred."

"Sir, if I may, where are you headed? I haven’t been able to track the Batmobile’s location since you left."

"Perfect. So, the whole Bat Software system is down now? And we are clueless about the cause. We'll address this once I get back. Tell Damian that I will be in the Northern block. I just picked up Harley Quinn from the Asylum and I am off to Gotham Port."

"Harley Quinn? May I inquire why you have her with you at this moment, Master Wayne?"

"Long story. The mayor has been kidnapped by the Joker and I need to confront him. Tell Damian to wait for my arrival before taking any action."

"Will do, sir."

Bruce ended the call. He was a lot more concerned about how the Bat Software System was not working properly. Had the Joker hacked his way into his system?

"You're late, Batman... Wahahahahah!" The Joker's laughter echoed through the Northern block of Gotham port, laced with annoyance.

"I have Quinn with me. Now where's the mayor?" Batman dismissed Joker's comment, focusing on the task at hand.

"He's at the DA's office. I don’t care about him or her. The only person who matters to me is you. Now let's dance. Wahahahaha." Joker laughed menacingly as he threw a couple of grenades at Batman and the unconscious Harley.

"I forgot that you haven't been to the Asylum in a while. Let's fix that today," said Batman as he swiftly used the BatHook to manoeuvre. Placing Harley in a secure location, he returned to face Joker.

"Not bad, Batman. Though, you are slacking off lately. Now, why don't we spice it up a little?" said Joker as he released a mysterious gas from cylinders hidden around the Northern block.

"Damn it. The Batwatch would've picked this up right when we arrived. Had it been working, that is..." Batman thought, realizing the extent of the system malfunction.

Batman felt suffocation and anxiety as he trembled, inhaling the gas. The memory of his parents getting shot came right in front of him.

"Now, Batman, let's settle this once and for all." Joker took the knife he had crafted from the Batarang and held it to Batman's neck. Batman felt too paralyzed to do anything.

"Bang!" A shot was heard before Joker collapsed to the ground.

"Take that, you psycho…" Damian, the Robin, glided down from one of the empty ships surrounding them after he shot down Joker using the tranquilizer.

"Aah, the boy wonder. You got the moves alright… Guess you won this time, Batman... Wahah..." Joker fainted before he could finish his laughter.

"Here, an antidote for Scarecrow's gas." Damian injected the antidote into Batman.

"You're late. A couple more seconds, and you would’ve become the next Batman, filling my vacancy," Batman said as he got up, regaining his composure.

"Yeah, couldn't locate you since our entire software system is down," Damian replied, acknowledging the complications they faced.

"Understandable. Let's sort this mess out first, before digging into that."

Soon, Joker and Quinn were transferred back to the Asylum, and the day was safe again in Gotham, thanks to the efforts of Batman and the team.

"Alfred, have you figured out the issue with our entire software system going down?" Bruce asked furiously as the setback on the last case still haunted him.

"I'm afraid, yes, Master Wayne," Alfred said as he showed Bruce an email.

"It turns out that Damian accidentally left an EC2 instance running on our AWS account for a very long time. All our Bat Software systems deployed in AWS will no longer function until we pay the bills."

"Well then, pay and close it, Alfred! Don't tell me there's something else."

"I'm afraid there's more. The bill exceeds your bank balance, unfortunately, and they have debited whatever was present in your account."

"Wtf?"

"I'm afraid you are broke, sir."

"F*ck Jeff Bezos!!!" Bruce yelled as he realized this is the end of his story as Batman, and probably Bruce Wayne's as well. Jeff Bezos had accomplished what the Joker never could.

Nb: This short fan fiction was written based on the following prompts:

Part 1

Pick your favorite fairytale/ superhero character & write a short piece. Make sure that the piece is suited to be read to & understood by a 10-year-old child.

Part 2

From where you have stopped, twist the tale into an adult one.

Pointer - The original fairytales were quite grisly, gory and twisted. The Grimms took those and significantly toned them down to suit the young ones.

#batman#fanfic#gotham#alfred#joker#jeff besos#damian wayne#bruce wayne#amazon web services#aws#aws ec2


Text

Deploy Docker Image to AWS Cloud ECS Service | Docker AWS ECS Tutorial

Full Video Link: https://youtu.be/ZlR5onuwZzw Hi, a new #video on #AWS #ECS tutorial is published on @codeonedigest #youtube channel. Learn how to deploy #docker image in AWS ECS fargate service. #deploydockerimageinaws #deploydockerimageinamazoncloud

Step by step guide for beginners to deploy docker image to cloud in AWS ECS service i.e. Elastic Container Service. Learn how to deploy docker container image to AWS ECS fargate. What is cluster and task definition in ECS service? How to create container in ECS service? How to run Task Definition to deploy Docker Image from Docker Hub repository? How to check the health of cluster and container?…
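To make the task definition idea concrete, here is a minimal sketch that builds one for a single container pulled from Docker Hub and prints it as JSON. The family name `web-demo`, the `nginx:latest` image, and the CPU and memory sizes are placeholder choices, not values from the video.

```python
import json


def fargate_task_definition(family: str, image: str, port: int) -> dict:
    """Build a minimal Fargate task definition with one container."""
    return {
        "family": family,
        "networkMode": "awsvpc",              # required for Fargate tasks
        "requiresCompatibilities": ["FARGATE"],
        "cpu": "256",                         # 0.25 vCPU
        "memory": "512",                      # MiB
        "containerDefinitions": [
            {
                "name": family,
                "image": image,               # public Docker Hub image
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


print(json.dumps(fargate_task_definition("web-demo", "nginx:latest", 80), indent=2))
```

Saved to a file, JSON like this can be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`; a real deployment would likely also need an execution role for logging or private images.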


#aws ecs demo#aws ecs docker compose tutorial#aws ecs fargate#aws ecs fargate tutorial#aws ecs service#aws ecs task definition#aws ecs tutorial#deploy docker container to aws#deploy docker image in amazon cloud#deploy docker image in aws#deploy docker image to aws#deploy docker image to aws ec2#deploy image to aws fargate#how to deploy docker image in ecs service#how to run docker image in ecs service#run docker image in cloud ecs service#what is ecs service


Text

What Are the Advantages and Disadvantages of Amazon EC2?: "Advantages and Disadvantages of Amazon EC2: A DevOps Engineer Guide"

#AmazonEC2 offers #scalability, #flexibility, #cost efficiency, and #security. With the cloud platform you increase your #availability and can make use of service level agreements. Advantages such as #automation and the Elastic Compute Cloud make the whole thing a cost-efficient solution. #CloudComputing

Amazon Elastic Compute Cloud (EC2) is a cloud computing service that enables companies to provision and manage resources so they can adapt to their requirements and scale in the cloud. With Amazon EC2, companies can quickly and easily add web and application resources that need computing power or storage capacity. Amazon EC2 offers many…


#Automatisierung#Cloud-Plattform#Elastic Compute Cloud.#Flexibilität.#Keywörter: Amazon EC2#Kosteneffizienz#Servicelevel-Agreement#Sicherheit#Skalierbarkeit#Verfügbarkeit.
