#Advanced ChatGPT course


AI Showdown: Comparing ChatGPT-4 and Gemini AI for Your Needs

ChatGPT-4 vs. Gemini AI – Which AI Reigns Supreme?

Imagine having a conversation with an AI so sophisticated, it feels almost human. Now, imagine another AI that can solve complex problems and think deeply like a seasoned expert. Which one would you choose? Welcome to the future of artificial intelligence, where ChatGPT-4 and Gemini AI are leading the way. But which one is the right fit for you? Let’s dive in and find out!

What is ChatGPT-4?

ChatGPT-4, developed by OpenAI, is a cutting-edge AI model designed to understand and respond to human language with remarkable accuracy. Think of it as your chatty, knowledgeable friend who’s always ready to help with questions, offer advice, or just have a friendly conversation. It's like having an intelligent assistant that gets better at understanding you the more you interact with it.

What is Gemini AI?

Gemini AI, crafted by Google, is like a super-intelligent student that excels at reasoning and grasping complex concepts. It shines at complex reasoning tasks and deep analysis, which makes it a powerful tool for solving intricate problems in fields like science, math, and philosophy.

Gemini vs. ChatGPT: Other Key Differences

Conversational Learning: GPT-4 can retain context and improve through interactions, whereas Gemini AI currently has limited capabilities in this area.

Draft Responses: Gemini AI offers multiple drafts for each query, while GPT-4 provides a single, refined response.

Editing Responses: Gemini AI allows users to edit responses post-submission, a feature GPT-4 lacks.

Real-time Internet Access: GPT-4's internet access is limited to its premium version, whereas Gemini AI provides real-time access as a standard feature.

Image-Based Responses: Gemini AI can search and respond with images, a feature now also available in the ChatGPT chatbot.

Text-to-Speech: Gemini AI includes text-to-speech capabilities, unlike ChatGPT.

Key ChatGPT-4 and Gemini AI trends in South Africa include:

Adoption of AI Technology: South Africa is integrating advanced AI models like ChatGPT-4 and Gemini AI into various sectors, showcasing a growing interest in leveraging AI for business and educational purposes.

Google's Expansion: Google's introduction of Gemini AI through its Bard platform has made sophisticated AI technology more accessible in South Africa, supporting over 40 languages and reaching more than 230 countries and territories.

Comparative Analysis: There is ongoing discourse and comparison between the capabilities of ChatGPT-4 and Gemini AI, highlighting their respective strengths in conversational AI and complex problem-solving.

Why You Need to Do This Course

Enrolling in the Mastering ChatGPT Course by UniAthena is your gateway to unlocking the full potential of AI. Whether you're a professional looking to enhance your skills, a student aiming to stay ahead of the curve, or simply an AI enthusiast, this course is designed for you.

Why South African People Need to Do This Course

Enrolling in the Mastering ChatGPT Course by UniAthena is crucial for South Africans to keep pace with the global AI revolution. The course equips learners with the skills to utilize AI tools effectively, enhancing productivity and innovation in various sectors such as business, education, and technology.

Benefits of This Course for South African People

Enhanced Skill Set: Gain proficiency in using ChatGPT, making you a valuable asset in any industry.

Increased Productivity: Automate tasks and streamline workflows with AI, boosting efficiency.

Competitive Edge: Stay ahead of the competition by mastering cutting-edge AI technology.

Career Advancement: Unlock new job opportunities and career paths in the growing field of AI.

Economic Growth: Equip yourself with skills that contribute to the digital transformation of South Africa's economy.

Conclusion

Choosing between ChatGPT-4 and Gemini AI depends on your specific needs. For conversational tasks, content generation, and everyday assistance, GPT-4 is your go-to. For deep analytical tasks and complex problem-solving, Gemini AI takes the crown.

Bonus Points

While Google Gemini offers a free version with limited features, ChatGPT continues to evolve rapidly, ensuring fast and efficient processing of user requests. Investing time in mastering these tools can significantly benefit your personal and professional growth.

So, are you ready to dive into the world of AI and elevate your career? Enroll in the Mastering ChatGPT Course by UniAthena today and start your journey towards becoming an AI expert!

#AI courses#ChatGPT-4#Gemini AI#AI for students#Mastering AI#AI career advancement#AI skills#AI technology integration#AI education#Future of AI

2 notes


The Sensor Savvy - AI for Real-World Identification Course

Enroll in The Sensor Savvy - AI for Real-World Identification Course and master the use of AI to detect, analyze, and identify real-world objects using smart sensors and machine learning.

#Sensor Savvy#AI for Real-World Identification Course#python development course#advanced excel course online#AI ML Course for Beginners with ChatGPT mastery#AI Image Detective Course#Chatbot Crafters Course#ML Predictive Power Course

0 notes


Prompt Engineering: The #1 Skill That Will Unlock Your AI Potential in 2024

Imagine having a superpower that allows you to converse with the most advanced AI systems on the planet, shaping their output to perfectly suit your needs. Welcome to the dynamic world of prompt engineering – the art and science of crafting the instructions that guide AI models to generate amazing text, translate languages, write different kinds of creative compositions, and much more. In this…


#advanced prompt engineering strategies#best practices for writing effective AI prompts#best prompt engineering communities#chatgpt#emerging trends in prompt engineering#free prompt engineering tools and resources#how to use prompt engineering to improve AI results#prompt engineering courses and tutorials#prompt engineering for creative writing#prompt engineering for customer service chatbots#prompt engineering for language translation#prompt engineering for marketing copywriting#prompt engineering jobs and career opportunities#the future of prompt engineering#using prompt engineering for content generation#will prompt engineers replace programmers?

0 notes


(taken from a post about AI)

speaking as someone who has had to grade virtually every kind of undergraduate assignment you can think of for the past six years (essays, labs, multiple choice tests, oral presentations, class participation, quizzes, field work assignments, etc), it is wild how out-of-touch-with-reality people’s perceptions of university grading schemes are. they are a mass standardised measurement used to prove the legitimacy of your degree, not how much you’ve learned. Those things aren’t completely unrelated to one another of course, but they are very different targets to meet. It is standard practice for professors to have a very clear idea of what the grade distributions for their classes are before each semester begins, and tenure-track assessments (at least some of the ones I’ve seen) are partially judged on a professor’s classes’ grade distributions - handing out too many A’s is considered a bad thing because it inflates student GPAs relative to other departments, faculties, and universities, and makes classes “too easy,” ie, reduces the legitimacy of the degree they earn. I have been instructed many times by professors to grade easier or harder throughout the term to meet those target averages, because those targets are the expected distribution of grades in a standardised educational setting. It is standard practice for teaching assistants to report their grade averages to one another to make sure grade distributions are consistent. there’s a reason profs sometimes curve grades if the class tanks an assignment or test, and it’s generally not because they’re being nice!

this is why AI and chatgpt so quickly expanded into academia - it’s not because this new generation is the laziest, stupidest, most illiterate batch of teenagers the world has ever seen (what an original observation you’ve made there!), it’s because education has a mass standard data format that is very easily replicable by programs trained on, yanno, large volumes of data. And sure the essays generated by chatgpt are vacuous, uncompelling, and full of factual errors, but again, speaking as someone who has graded thousands of essays written by undergrads, that’s not exactly a new phenomenon lol

I think if you want to be productively angry at ChatGPT/AI usage in academia (I saw a recent post complaining that people were using it to write emails of all things, as if emails are some sacred form of communication), your anger needs to be directed at how easily automated many undergraduate assignments are. Or maybe your professors calculating in advance that the class average will be 72% is the single best way to run a university! Who knows. But part of the emotional stakes in this that I think are hard for people to admit to, much less let go of, is that AI reveals how rote, meaningless, and silly a lot of university education is - you are not a special little genius who is better than everyone else for having a Bachelor’s degree, you have succeeded in moving through standardised post-secondary education. This is part of the reason why disabled people are systematically barred from education, because disability accommodations require a break from this standardised format, and that means disabled people are framed as lazy cheaters who “get more time and help than everyone else.” If an AI can spit out a C+ undergraduate essay, that of course threatens your sense of superiority, and we can’t have that, can we?

3K notes


Earn Money with Prompt Engineering | Unique way to earn money online

Prompt Engineering: Designing effective prompts for online success | In the rapidly evolving landscape of online communication, prompt engineering has emerged as an important skill, paving the way to boost engagement, deliver valuable information, and even monetize digital interactions. This article takes an in-depth look at the world of prompt engineering, its importance, accessible ways of learning…


#advanced prompt engineering#ai prompt engineering#ai prompt engineering certification#ai prompt engineering course#ai prompt engineering jobs#an information-theoretic approach to prompt engineering without ground truth labels#andrew ng prompt engineering#awesome prompt engineering#brex prompt engineering guide#chat gpt prompt engineering#chat gpt prompt engineering course#chat gpt prompt engineering jobs#chatgpt prompt engineering#chatgpt prompt engineering course#chatgpt prompt engineering for developers#chatgpt prompt engineering guide#clip prompt engineering#cohere prompt engineering#deep learning ai prompt engineering#deeplearning ai prompt engineering#deeplearning.ai prompt engineering#entry level prompt engineering jobs#free prompt engineering course#github copilot prompt engineering#github prompt engineering#github prompt engineering guide#gpt 3 prompt engineering#gpt prompt engineering#gpt-3 prompt engineering#gpt-4 prompt engineering

0 notes


AI turns Amazon coders into Amazon warehouse workers

HEY SEATTLE! I'm appearing at the Cascade PBS Ideas Festival NEXT SATURDAY (May 31) with the folks from NPR's On The Media!

On a recent This Machine Kills episode, guest Hagen Blix described the ultimate form of "AI therapy" with a "human in the loop":

https://soundcloud.com/thismachinekillspod/405-ai-is-the-demon-god-of-capital-ft-hagen-blix

One actual therapist is just having ten chat GPT windows open where they just like have five seconds to interrupt the chatGPT. They have to scan them all and see if it says something really inappropriate. That's your job, to stop it.

Blix admits that's not where therapy is at…yet, but he references Laura Preston's 2023 N Plus One essay, "HUMAN_FALLBACK," which describes her as a backstop to a real-estate "virtual assistant," that masqueraded as a human handling the queries that confused it, in a bid to keep the customers from figuring out that they were engaging with a chatbot:

https://www.nplusonemag.com/issue-44/essays/human_fallback/

This is what makes investors and bosses slobber so hard for AI – a "productivity" boost that arises from taking away the bargaining power of workers so that they can be made to labor under worse conditions for less money. The efficiency gains of automation aren't just about using fewer workers to achieve the same output – it's about the fact that the workers you fire in this process can be used as a threat against the remaining workers: "Do your job and shut up or I'll fire you and give your job to one of your former colleagues who's now on the breadline."

This has been at the heart of labor fights over automation since the Industrial Revolution, when skilled textile workers took up the Luddite cause because their bosses wanted to fire them and replace them with child workers snatched from Napoleonic War orphanages:

https://pluralistic.net/2023/09/26/enochs-hammer/#thats-fronkonsteen

Textile automation wasn't just about producing more cloth – it was about producing cheaper, worse cloth. The new machines were so easy a child could use them, because that's who was using them – kidnapped war orphans. The adult textile workers the machines displaced weren't afraid of technology. Far from it! Weavers used the most advanced machinery of the day, and apprenticed for seven years to learn how to operate it. Luddites had the equivalent of a Masters in Engineering from MIT.

Weavers' guilds presented two problems for their bosses: first, they had enormous power, thanks to the extensive training required to operate their looms; and second, they used that power to regulate the quality of the goods they made. Even before the Industrial Revolution, weavers could have produced more cloth at lower prices by skimping on quality, but they refused, out of principle, because their work mattered to them.

Now, of course weavers also appreciated the value of their products, and understood that innovations that would allow them to increase their productivity and make more fabric at lower prices would be good for the world. They weren't snobs who thought that only the wealthy should go clothed. Weavers had continuously adopted numerous innovations, each of which increased the productivity and the quality of their wares.

Long before the Luddite uprising, weavers had petitioned factory owners and Parliament under the laws that guaranteed the guilds the right to oversee textile automation to ensure that it didn't come at the price of worker power or the quality of the textiles the machines produced. But the factory owners and their investors had captured Parliament, which ignored its own laws and did nothing as the "dark, Satanic mills" proliferated. Luddites only turned to property destruction after the system failed them.

Now, it's true that eventually, the machines improved and the fabric they turned out matched and exceeded the quality of the fabric that preceded the Industrial Revolution. But there's nothing about the way the Industrial Revolution unfolded – increasing the power of capital to pay workers less and treat them worse while flooding the market with inferior products – that was necessary or beneficial to that progress. Every other innovation in textile production up until that time had been undertaken with the cooperation of the guilds, who'd ensured that "progress" meant better lives for workers, better products for consumers, and lower prices. If the Luddites' demands for co-determination in the Industrial Revolution had been met, we might have gotten to the same world of superior products at lower costs, but without the immiseration of generations of workers, mass killings to suppress worker uprisings, and decades of defective products being foisted on the public.

So there are two stories about automation and labor: in the dominant narrative, workers are afraid of the automation that delivers benefits to all of us, stand in the way of progress, and get steamrollered for their own good, as well as ours. In the other narrative, workers are glad to have boring and dangerous parts of their work automated away and happy to produce more high-quality goods and services, and stand ready to assess and plan the rollout of new tools, and when workers object to automation, it's because they see automation being used to crush them and worsen the outputs they care about, at the expense of the customers they care for.

In modern automation/labor theory, this debate is framed in terms of "centaurs" (humans who are assisted by technology) and "reverse-centaurs" (humans who are conscripted to assist technology):

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

There are plenty of workers who are excited at the thought of using AI tools to relieve them of some drudgework. To the extent that these workers have power over their bosses and their working conditions, that excitement might well be justified. I hear a lot from programmers who work on their own projects about how nice it is to have a kind of hypertrophied macro system that can generate and tweak little automated tools on the fly so the humans can focus on the real, chewy challenges. Those workers are the centaurs, and it's no wonder that they're excited about improved tooling.

But the reverse-centaur version is a lot darker. The reverse-centaur coder is an assistant to the AI, charged with being a "human in the loop" who reviews the material that the AI produces. This is a pretty terrible job to have.

For starters, the kinds of mistakes that AI coders make are the hardest mistakes for human reviewers to catch. That's because LLMs are statistical prediction machines, spicy autocomplete that works by ingesting and analyzing a vast corpus of written materials and then producing outputs that represent a series of plausible guesses about which words should follow one another. To the extent that the reality the AI is participating in is statistically smooth and predictable, AI can often make eerily good guesses at words that turn into sentences or code that slot well into that reality.

But where reality is lumpy and irregular, AI stumbles. AI is intrinsically conservative. As a statistically informed guessing program, it wants the future to be like the past:

https://reallifemag.com/the-apophenic-machine/
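The "plausible guesses about which words should follow one another" idea can be sketched with a toy model. This is only an illustration, not how a production LLM works: it counts which word follows which in a tiny made-up corpus and always guesses the most common follower. The function names and the corpus are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def guess_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up corpus, purely for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)

print(guess_next(model, "the"))     # "cat" follows "the" most often
print(guess_next(model, "zebra"))   # never seen, so there is nothing to guess
```

Even at this scale the conservatism shows: the model can only ever propose continuations it has already seen, weighted by how often it saw them.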

This means that AI coders stumble wherever the world contains rough patches and snags. Take "slopsquatting." For the most part, software libraries follow regular naming conventions. For example, there might be a series of text-handling libraries with names like "text.parsing.docx," "text.parsing.xml," and "text.parsing.markdown." But for some reason – maybe two different projects were merged, or maybe someone was just inattentive – there's also a library called "text.txt.parsing" (instead of "text.parsing.txt").

AI coders are doing inference based on statistical analysis, and anyone inferring what the .txt parsing library is called would guess, based on the other libraries, that it was "text.parsing.txt." And that's what the AI guesses, and so it tries to import that library to its software projects.

This creates a new security vulnerability, "slopsquatting," in which a malicious actor creates a library with the expected name, which replicates the functionality of the real library, but also contains malicious code:

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/
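One partial defense against this kind of mistake is to check the imports in generated code against a list of libraries someone has actually vetted. The sketch below is a minimal illustration under stated assumptions: the library names are the hypothetical ones from the example above (they are not real packages), and the allowlist and helper names are invented here. It uses Python's standard `ast` module, and `from x import y` statements are checked by module name only.

```python
import ast

# Hypothetical allowlist built from the example names above; these are
# illustrative, not real packages on any index.
VETTED = {
    "text.parsing.docx",
    "text.parsing.xml",
    "text.parsing.markdown",
    "text.txt.parsing",  # the irregularly named real library
}


def imported_modules(source):
    """Return the dotted module names imported by a piece of Python source."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module)
    return names


def unvetted_imports(source, vetted=VETTED):
    """List imports that are not on the allowlist, sorted for stable output."""
    return sorted(imported_modules(source) - vetted)


# Generated code that "guessed" the regular-looking name.
generated = "import text.parsing.xml\nimport text.parsing.txt\n"
print(unvetted_imports(generated))  # ['text.parsing.txt']
```

A check like this only flags names nobody has approved; it says nothing about whether a vetted library is itself safe, and it does not replace the line-by-line review described below.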

Note that slopsquatting errors are extremely hard to spot. As is typical with AI coding errors, these are errors that are based on continuing a historical pattern, which is the sort of thing our own brains do all the time (think of trying to go up a step that isn't there after climbing to the top of a staircase). Notably, these are very different from the errors that a beginning programmer whose work is being reviewed by a more senior coder might make. These are the very hardest errors for humans to spot, and these are the errors that AIs make the most, and they do so at machine speed:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

To be a human in the loop for an AI coder, a programmer must engage in sustained, careful, line-by-line and command-by-command scrutiny of the code. This is the hardest kind of code to review, and maintaining robotic vigilance over long periods at high speeds is something humans are very bad at. Indeed, it's the kind of task we try very hard to automate, since machines are much better at being machinelike than humans are. This is the essence of reverse-centaurism: when a human is expected to act like a machine in order to help the machine do something it can't do.

Humans routinely fail at spotting these errors, unsurprisingly. If the purpose of automation is to make superior goods at lower prices, then this would be a real concern, since a reverse-centaur coding arrangement is bound to produce code with lurking, pernicious, especially hard-to-spot bugs that present serious risks to users. But if the purpose of automation is to discipline labor – to force coders to accept worse conditions and pay – irrespective of the impact on quality, then AI is the perfect tool for the job. The point of the human isn't to catch the AI's errors so much as it is to catch the blame for the AI's errors – to be what Madeleine Clare Elish calls a "moral crumple zone":

https://estsjournal.org/index.php/ests/article/view/260

As has been the case since the Industrial Revolution, the project of automation isn't just about increasing productivity, it's about weakening labor power as a prelude to lowering quality. Take what's happened to the news industry, where mass layoffs are being offset by AI tools. At Hearst's King Features Syndicate, a single writer was charged with producing over 30 summer guides, the entire package:

https://www.404media.co/viral-ai-generated-summer-guide-printed-by-chicago-sun-times-was-made-by-magazine-giant-hearst/

That is an impossible task, which is why the writer turned to AI to do his homework, and then, infamously, published a "summer reading guide" that was full of nonexistent books that were hallucinated by a chatbot:

https://www.404media.co/chicago-sun-times-prints-ai-generated-summer-reading-list-with-books-that-dont-exist/

Most people reacted to this story as a consumer issue: they were outraged that the world was having a defective product foisted upon it. But the consumer issue here is downstream from the labor issue: when the writers at King Features Syndicate are turned into reverse-centaurs, they will inevitably produce defective outputs. The point of the worker – the "human in the loop" – isn't to supervise the AI, it's to take the blame for the AI. That's just what happened, as this poor schmuck absorbed an internet-sized rasher of shit flung his way by outraged social media users. After all, it was his byline on the story, not the chatbot's. He's the moral crumple-zone.

The implication of this is that consumers and workers are class allies in the automation wars. The point of using automation to weaken labor isn't just cheaper products – it's cheaper, defective products, inflicted on the unsuspecting and defenseless public who are no longer protected by workers' professionalism and pride in their jobs.

That's what's going on at Duolingo, where CEO Luis von Ahn created a firestorm by announcing mass firings of human language instructors, who would be replaced by AI. The "AI first" announcement pissed off Duolingo's workers, of course, but what caught von Ahn off-guard was how much this pissed off Duolingo's users:

https://tech.slashdot.org/story/25/05/25/0347239/duolingo-faces-massive-social-media-backlash-after-ai-first-comments

But of course, this makes perfect sense. After all, language-learners are literally incapable of spotting errors in the AI instruction they receive. If you spoke the language well enough to spot the AI's mistakes, you wouldn't need Duolingo! I don't doubt that there are countless ways in which AIs could benefit both language learners and the Duolingo workers who develop instructional materials, but for that to happen, workers' and learners' needs will have to be the focus of AI integration. Centaurs could produce great language learning materials with AI – but reverse-centaurs can only produce slop.

Unsurprisingly, many of the most successful AI products are "bossware" tools that let employers monitor and discipline workers who've been reverse-centaurized. Both blue-collar and white-collar workplaces have filled up with "electronic whips" that monitor and evaluate performance:

https://pluralistic.net/2024/08/02/despotism-on-demand/#virtual-whips

AI can give bosses "dashboards" that tell them which Amazon delivery drivers operate their vehicles with their mouths open (Amazon doesn't let its drivers sing on the job). Meanwhile, a German company called Celonis will sell your boss a kind of AI phrenology tool that assesses your "emotional quality" by spying on you while you work:

https://crackedlabs.org/en/data-work/publications/processmining-algomanage

Tech firms were among the first and most aggressive adopters of AI-based electronic whips. But these whips weren't used on coders – they were reserved for tech's vast blue-collar and contractor workforce: clickworkers, gig workers, warehouse workers, AI data-labelers and delivery drivers.

Tech bosses tormented these workers but pampered their coders. That wasn't out of any sentimental attachment to tech workers. Rather, tech bosses were afraid of tech workers, because tech workers possess a rare set of skills that can be harnessed by tech firms to produce gigantic returns. Tech workers have historically been princes of labor, able to command high salaries and deferential treatment from their bosses (think of the amazing tech "campus" perks), because their scarcity gave them power.

It's easy to predict how tech bosses would treat tech workers if they could get away with it – just look how they treat workers they aren't afraid of. Just like the textile mill owners of the Industrial Revolution, the thing that excites tech bosses about AI is the possibility of cutting off a group of powerful workers at the knees. After all, it took more than a century for strong labor unions to match the power that the pre-Industrial Revolution guilds had. If AI can crush the power of tech workers, it might buy tech bosses a century of free rein to shift value from their workforce to their investors, while also doing away with pesky Tron-pilled workers who believe they have a moral obligation to "fight for the user."

William Gibson famously wrote, "The future is here, it's just not evenly distributed." The workers that tech bosses don't fear are living in the future of the workers that tech bosses can't easily replace.

This week, the New York Times's veteran Amazon labor reporter Noam Scheiber published a deeply reported piece about the experience of coders at Amazon in the age of AI:

https://www.nytimes.com/2025/05/25/business/amazon-ai-coders.html

Amazon CEO Andy Jassy is palpably horny for AI coders, evidenced by investor memos boasting of AI's returns in "productivity and cost avoidance" and pronouncements about AI saving "the equivalent of 4,500 developer-years":

https://www.linkedin.com/posts/andy-jassy-8b1615_one-of-the-most-tedious-but-critical-tasks-activity-7232374162185461760-AdSz/

Amazon is among the most notorious abusers of blue-collar labor, the workplace where everyone who doesn't have a bullshit laptop job is expected to piss in a bottle and spend an unpaid hour before and after work going through a bag- and body-search. Amazon's blue-collar workers are under continuous, totalizing, judging AI scrutiny that scores them based on whether their eyeballs are correctly oriented, whether they take too long to pick up an object, whether they pee too often. Amazon warehouse workers are injured at three times the national average. Amazon AIs scan social media for disgruntled workers talking about unions, and Amazon has another AI tool that predicts which shops and departments are most likely to want to unionize.

Scheiber's piece describes what it's like to be an Amazon tech worker who's getting the reverse-centaur treatment that has heretofore been reserved for warehouse workers and drivers. They describe "speedups" in which they are moved from writing code to reviewing AI code, their jobs transformed from solving chewy intellectual puzzles to racing to spot hard-to-find AI coding errors as a clock ticks down. Amazon bosses haven't ordered their tech workers to use AI, just raised their quotas to a level that can't be attained without getting an AI to do most of the work – just like the Chicago Sun-Times writer who was expected to write all 30 articles in the summer guide package on his own. No one made him use AI, but he wasn't going to produce 30 articles on deadline without a chatbot.

Amazon insists that it is treating AI as an assistant for its coders, but the actual working conditions make it clear that this is a reverse-centaur transformation. Scheiber discusses a dissident internal group at Amazon called Amazon Employees for Climate Justice, who link the company's use of AI to its carbon footprint. Beyond those climate concerns, these workers are treating AI as a labor issue.

Amazon's coders have been making tentative gestures of solidarity towards its blue-collar workforce since the pandemic broke out, walking out in support of striking warehouse workers (and getting fired for doing so):

https://pluralistic.net/2020/04/14/abolish-silicon-valley/#hang-together-hang-separately

But those firings haven't deterred Amazon's tech workers from making common cause with their comrades on the shop floor:

https://pluralistic.net/2021/01/19/deastroturfing/#real-power

When techies describe their experience of AI, it sometimes sounds like they're describing two completely different realities – and that's because they are. For workers with power and control, automation turns them into centaurs, who get to use AI tools to improve their work-lives. For workers whose power is waning, AI is a tool for reverse-centaurism, an electronic whip that pushes them to work at superhuman speeds. And when they fail, these workers become "moral crumple zones," absorbing the blame for the defective products their bosses pushed out in order to goose profits.

As ever, what a technology does pales in comparison to who it does it for and who it does it to.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/05/27/rancid-vibe-coding/#class-war

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

351 notes


just woke up and almost got scammed before i even had my coffee soooo good morning to me i guess 😭😭

someone left a comment on AO3 on the blessed are the ones who sin fic saying they were a “comic artist” and wanted to turn the fic into a comic. gave me their IG and Discord. and of course i got super excited. who wouldn’t?? but then i did what any normal, slightly paranoid, law-student would do… and reverse image searched their posts. and SURPRISEEEE (not really): literally every post on their IG was stolen from other artists. like completely lifted. nothing was theirs.

i kept chatting just to see how far they’d go and they sent me a google doc “script” that was LITERALLY just my fic with prompts fed into chatGPT to make it look like a comic script. and THEN asked me to pay them $50 in advance for a commission 💀 so yeah. i called them out. they made stupid excuses and started sending me comics that still weren’t theirs to make themselves “believable”

moral of the story? the internet is FILLED with scammers, and it’s genuinely gross to see people trying to take advantage of excited writers who just want to see their work come to life. like, exploiting someone’s passion for money and faking talent?? disgusting.

also, AI is scarily good at pretending to be human, but girl i’ve been online too long. i’m a fake blonde but i’m not stupid 😘

be safe out there, babes, especially fanfic writers. protect your work and trust your gut.

107 notes


Text

Magical AI Grimoire Review

Let’s just get a couple of things out of the way:

1) I’ve been in witchcraft spaces for going on 10+ years now

2) I’ve been flirting around in chaos magic spaces for around the same amount of time

3) I am 30+ a “millennial” if one may

4) I am anti-generative AI so of course this is going to have a bit of a negative slant towards generative AI and LLM Based models as a whole

That being said, what drew me to the book at first was two things: one, the notion of “egregore work” in the latter chapters and two, the notion of using AI in any sort of magical space or connotation, especially with the overlap as of late in some pop culture witch circles especially with using chatbots as a form of divination or communication rather than say through cards, Clair’s, or otherwise

Let’s get into it

Starting off, here is the table of contents for said book:

Of note, chapters 13-16 and chapters 21-23. Just keep these in the back of your mind for later.

In chapter 1, the author, Davezilla, describes a story of a young witch in a more rural environment, isolated from, for example, other witchy communities and the like. While she makes do with what she has, she wants to advance her craft, notably with a spell to boost things agriculturally for her farm, which she manages through other technological means. She boots up ChatGPT, and the program whips up an incantation for rain with a rhyming spell to a spirit dubbed “Mélusine” to help aid in a drought. She even uses the prompt and program for aid in supplies such as candles and herbs and even what to use as substitutes should she not be able to procure any blue or white candles.

This is not a testimonial, however, but an example given by the author. That’s all a majority of this book is: examples rather than testimonials or results vetted through other witches or practitioners. While it’s not typical in most witchy books to give reviews or testimonials, of course, it’s generally a bit of a note for most spell books worth the ink and paper and the like for spells to have actually been tested and given results beforehand, at least from what I’ve gathered from other writers in the witchcraft space. Even my own grimoire pages are based not only on personal experiences and results, but on what I’ve observed from others.

Then we get into terms from Lucumí and Santeria for…some reason.

The author claims that he has been initiated into these closed/initiation only traditions, but within the context of the book and the topic given, this just seems like a way to flex that he’s ✨special✨ and not like other occultists or the like. But that’s not even the worst of it, as he even tries to make ChatGPT write a spell based off of said traditions

Again, keep in mind that this is based off of closed or initiatory practice and the author is, judging by his AI-generated Chad-tactic author picture, a white older millennial at best

And obligatory “I don’t go here”/I’m not initiated into any of these practices, but to make an AI write a spell based off of a closed path and practice seems…tasteless at best

But oh my, what else this author tries to make Chat conjure up

In order

1) This is at best what every other lucid dreaming guide or reading would give for basic instructions. Not too alarming but very basic

2) & 3) To borrow a phrase from TikTok but not to label myself as “the friend who’s too woke”, but making an AI write a supposed “curse” in the style of not only a prolific comedy writer and director but one also of Jewish descent seems…vaguely anti-Semitic in words I can’t quite place right now

4) & 5) As an author for fanfic and my own original personal works, this whole thing just seems slipshod at best, C level bargain bin, unoriginal material at worst. This barely has any relevance to the topics of the book

Speaking of the topics, remember chapters 13-16 noted

It’s literally just AI prompts for ChatGPT and Midjourney, completely bypassing any traditions associated with such, especially indigenous traditions associated with the contexts of “totem animals”, which from the prompts seems more like a hackneyed version of “spirit animals” circa the early to mid 2010s, popularized by Buzzfeed and the like.

But, time for the main event, The Egregore section:

The chapter starts off actually rather nicely, describing egregore theory and how an egregore is formed or fueled. I’ll give him credit for at least that much. While he doesn’t use chatbot communication as an example, he proposes that, in a sense, AI programs have the capacity to generate egregores and the like. And to show an example of such, he gives a link to his own “digital egregore” at the following url: hexsupport.club/ai with the password “Robert smith is looking old”

At the time of my visitation to the website, (Apr 14, 2025), I was greeted only with a 404 error page with no password prompt or box to enter in

Fitting, if you ask me.

Unless you’re really -really- into ChatGPT and Midjourney, despite its environmental damages and costs, despite its drain of creativity and resources, despite its psychological and learning impacts we’re seeing in academic spaces like college and high schools in the US, and despite the array of hallucinations and overall slurry of hodgepodge “information” and amalgamations of what an object or picture “should” look like based on specific algorithms, prompts, and limits, don’t bother with this book. You’re better off doing the prompts on your own. Which conveniently, the author also provides AI resources and the like on his own website.

I’ll end off this rant and review by one last tidbit. In the chapter of Promptcraft 101 in the subheader “Finding Your Own Voice”, the author poses that “Witches and Magic Workers Don’t Steal”

Witches and Magic Workers Don’t Steal

The author is supposedly well versed in AI and AI technology and how it works. With such, we may also assume he knows how scraping works and how Large Language Models or LLMs get that info, often through gathering art and information from unconsenting or unawares sources, with the wake of the most recent scraping reported from sites such as AO3 as a recent example as of posting

This is hypocritical bullshit. No fun and flouncy words like what I like to use to describe things, just bullshit.

Cameras didn’t steal information or the like from painters and sculptors

Tools like Photoshop, ClipsArt, etc didn’t steal from traditional artists

To say that generative AI is another tool and technological advancement is loaded at best, downright ignorant and irresponsible at worst.

Do not buy this book.

66 notes


Text

AI Reminder

Quick reminder folks since there's been a recent surge of AI fanfic shite. Here is some info from Earth.org on the environmental effects of ChatGPT and its fellow AI language models.

"ChatGPT, OpenAI's chatbot, consumes more than half a million kilowatt-hours of electricity each day, which is about 17,000 times more than the average US household. This is enough to power about 200 million requests, or nearly 180,000 US households. A single ChatGPT query uses about 2.9 watt-hours, which is almost 10 times more than a Google search, which uses about 0.3 watt-hours.

According to estimates, ChatGPT emits 8.4 tons of carbon dioxide per year, more than twice the amount that is emitted by an individual, which is 4 tons per year. Of course, the type of power source used to run these data centres affects the amount of emissions produced – with coal or natural gas-fired plants resulting in much higher emissions compared to solar, wind, or hydroelectric power – making exact figures difficult to provide.

A recent study by researchers at the University of California, Riverside, revealed the significant water footprint of AI models like ChatGPT-3 and 4. The study reports that Microsoft used approximately 700,000 litres of freshwater during GPT-3’s training in its data centres – that’s equivalent to the amount of water needed to produce 370 BMW cars or 320 Tesla vehicles."
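For anyone who wants to sanity-check the numbers in that quote, here is a rough sketch in Python. It only multiplies figures taken from the quote itself (200 million requests a day, 2.9 watt-hours per ChatGPT query, 0.3 watt-hours per Google search). The function and variable names are made up for illustration, and none of this is a measurement.

```python
# Figures copied from the Earth.org quote above; they are estimates, not measurements.
WH_PER_CHATGPT_QUERY = 2.9      # watt-hours per ChatGPT query (quoted)
WH_PER_GOOGLE_SEARCH = 0.3      # watt-hours per Google search (quoted)
REQUESTS_PER_DAY = 200_000_000  # daily requests (quoted)


def daily_energy_kwh(requests_per_day: int, wh_per_request: float) -> float:
    """Convert a per-request energy cost in Wh into a daily total in kWh."""
    return requests_per_day * wh_per_request / 1000


# Hypothetical names, used only for this illustration.
daily_kwh = daily_energy_kwh(REQUESTS_PER_DAY, WH_PER_CHATGPT_QUERY)
ratio = WH_PER_CHATGPT_QUERY / WH_PER_GOOGLE_SEARCH

print(f"Implied daily use: {daily_kwh:,.0f} kWh")
print(f"ChatGPT query vs Google search: about {ratio:.1f}x")
```

With those inputs the daily total works out to 580,000 kWh, which lines up with the "more than half a million kilowatt-hours" in the quote, and the per-query ratio comes out a little under 10.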

Now I don't want to sit here and say that AI is the worst thing that has ever happened. It can be an important tool in advancing effectiveness in technology! However, there are quite a few drawbacks as we have not figured out yet how to mitigate these issues, especially on the environment, if not used wisely. Likewise, AI is not meant to do the work for you, it's meant to assist. For example, having it spell check your work? Sure, why not! Having it write your work and fics for you? You are stealing from others that worked hard to produce beautiful work.

Thank you for coming to my Cyn Talk. I love you all!

237 notes


Text

I understand why the professor who wrote this is staying anonymous.

This is what is happening in pedagogy, at least in America. Young adults are significantly dumber. They can read anything at or below the complexity of Harry Potter or The Hunger Games, but they are missing the major themes and concepts, i.e. they aren't understanding magic = white supremacy or that Katniss is being groomed by the revolutionaries. It's like how people watch Severance or any movie, and "analyze" the "underlying" themes but they are just stating what blatantly happens and calling the text subtext, but they somehow seem amazed by their ability to do this.

Young people reach a 7th grade level and cease all intellectual developmental milestones. when they reach a road block they will literally just do something else. they either immediately reach for tiktok or chatgpt or they will entirely give up and freeze until classtime ends. they are also doing this bizarre guessing game with the rest of a word after seeing a familiar start, confusing words like embellished/embedded, substitute/substantial. This is feasibly a simple mistake but I mean 2-3x per paragraph.

In 2022, I was SHOCKED to see that these are completely able bodied, normal, upper/middle class people who are not only falling behind all the old milestones but they seem to all have serious intellectual disabilities. like they seem to have a real impairment or some form of brain damage, things kids used to be held back for remedial courses with extra tutoring or summer cram schooling to resolve, like, we had to build the extra brainpower to make it through advanced calculus ii or classical to modern philosophy. but they have replaced all difficult thought exercise with chatgpt, so nobody is addressing any of it

62 notes


Text

I've said this before but the interesting thing about AI in science fiction is that it was often a theme that humanity would invent "androids", as in human-like robots, but for them to get intelligent and be able to carry conversations with us about deep topics they would need amazing advances that might be impossible. Asimov is the example here though he played a lot with this concept.

We kind of forgot that just ten years ago, inventing an AI that could talk fluently with a human was considered one of those intractable problems that we would take centuries to solve. In a few years, not only did we get that, but we got AI able to generate code, write human-like speech, and imitate fictional characters. I'm surprised at how banal some people arguing about AI are about this. This is, by all means, an amazing achievement.

Of course these aren't really intelligent, they are just complex algorithms that provide the most likely results to their request based on their training. There also isn't a centralized intelligence thinking this, it's all distributed. There is no real thinking here, of course.

Does this make it less of a powerful tool, though? We have computers that can interpret human language and output things on demand to it. This is, objectively, amazing. The problem is that they are made by a capitalist system and culture that is trying to use them for a pointless economic bubble. The reason why ChatGPT acts like the world's most eager customer service is because they coded it for that purpose, the reason why most image generators create crap is because they made them for advertising. But those are not the only possibilities for AI, even this model of non-thinking AIs.

The AI bubble will come and pop, it can't sustain itself. The shitty corporate models will never amount to much because they're basically toys. I'm excited for what comes after, when researchers, artists, and others finally get models that aren't corporate shit tailored to be customer service, but built for other purposes. I'm excited to see what happens when this research starts to create algorithms that might actually be alive in any sense, and maybe the lines might not exist. I'm also worried.

#cosas mias#I hate silicon valley types who are like 'WITH AI WE WILL BE ABLE TO FIRE ALL WORKERS AND HAVE 362% ANNUAL GROWTH#but I also hate the neo luddites that say WHY ARE YOU MAKING THIS THERE IS NO USE FOR THIS#If you can't imagine what a computer that does what you ask in plain language could potentially do#maybe you're the one lacking imagination not the technobros

91 notes


Text

oh no she's talking about AI some more

to comment more on the latest round of AI big news (guess I do have more to say after all):

chatgpt ghiblification

trying to figure out how far it's actually an advance over the state of the art of finetunes and LoRAs and stuff in image generation? I don't keep up with image generation stuff really, just look at it occasionally and go damn that's all happening then, but there are a lot of finetunes focusing on "Ghibli's style" which get it more or less well. previously on here I commented on an AI video model generation that patterned itself on Ghibli films, and video is a lot harder than static images.

of course 'studio Ghibli style' isn't exactly one thing: there are stylistic commonalities to many of their works and recurring designs, for sure, but there are also details that depend on the specific character designer and film in question in large and small ways (nobody is shooting for My Neighbours the Yamadas with this, but also e.g. Castle in the Sky does not look like Pom Poko does not look like How Do You Live in a number of ways, even if it all recognisably belongs to the same lineage).

the interesting thing about the ghibli ChatGPT generations for me is how well they're able to handle simplification of forms in image-to-image generation, often quite drastically changing the proportions of the people depicted but recognisably maintaining correspondence of details. that sort of stylisation is quite difficult to do well even for humans, and it must reflect quite a high level of abstraction inside the model's latent space. there is also relatively little of the 'oversharpening'/'ringing artefact' look that has been a hallmark of many popular generators - it can do flat colour well.

the big touted feature is its ability to place text in images very accurately. this is undeniably impressive, although OpenAI themselves admit it breaks down beyond a certain point, creating strange images which start out with plausible, clean text and then it gradually turns into AI nonsense. it's really weird! I thought text would go from 'unsolved' to 'completely solved' or 'randomly works or doesn't work' - instead, here it feels sort of like the model has a certain limited 'pipeline' for handling text in images, but when the amount of text overloads that bandwidth, the rest of the image has to make do with vague text-like shapes! maybe the techniques from that anthropic thought-probing paper might shed some light on how information flows through the model.

similarly the model also has a limit of scene complexity. it can only handle a certain number of objects (10-20, they say) before it starts getting confused and losing track of details.

as before when they first wired up Dall-E to ChatGPT, it also simply makes prompting a lot simpler. you don't have to fuck around with LoRAs and obtuse strings of words, you just talk to the most popular LLM and ask it to perform a modification in natural language: the whole process is once again black-boxed but you can tell it in natural language to make changes. it's a poor level of control compared to what artists are used to, but it's still huge for ordinary people, and of course there's nothing stopping you popping the output into an editor to do your own editing.

not sure the architecture they're using in this version, if ChatGPT is able to reason about image data in the same space as language data or if it's still calling a separate image model... need to look that up.

openAI's own claim is:

We trained our models on the joint distribution of online images and text, learning not just how images relate to language, but how they relate to each other. Combined with aggressive post-training, the resulting model has surprising visual fluency, capable of generating images that are useful, consistent, and context-aware.

that's kind of vague. not sure what architecture that implies. people are talking about 'multimodal generation' so maybe it is doing it all in one model? though I'm not exactly sure how the inputs and outputs would be wired in that case.

anyway, as far as complex scene understanding: per the link they've cracked the 'horse riding an astronaut' gotcha, they can do 'full glass of wine' at least some of the time but not so much in combination with other stuff, and they can't do accurate clock faces still.

normal sentences that we write in 2025.

it sounds like we've moved well beyond using tools like CLIP to classify images, and I suspect that glaze/nightshade are already obsolete, if they ever worked to begin with. (would need to test to find out).

all that said, I believe ChatGPT's image generator had been behind the times for quite a long time, so it probably feels like a bigger jump for regular ChatGPT users than the people most hooked into the AI image generator scene.

of course, in all the hubbub, we've also already seen the white house jump on the trend in a suitably appalling way, continuing the current era of smirking fascist political spectacle by making a ghiblified image of a crying woman being deported over drugs charges. (not gonna link that shit, you can find it if you really want to.) it's par for the course; the cruel provocation is exactly the point, which makes it hard to find the right tone to respond. I think that sort of use, though inevitable, is far more of a direct insult to the artists at Ghibli than merely creating a machine that imitates their work. (though they may feel differently! as yet no response from Studio Ghibli's official media. I'd hate to be the person who has to explain what's going on to Miyazaki.)

google make number go up

besides all that, apparently google deepmind's latest gemini model is really powerful at reasoning, and also notably cheaper to run, surpassing DeepSeek R1 on the performance/cost ratio front. when DeepSeek did this, it crashed the stock market. when Google did... crickets, only the real AI nerds who stare at benchmarks a lot seem to have noticed. I remember when Google releases (AlphaGo etc.) were huge news, but somehow the vibes aren't there anymore! it's weird.

I actually saw an ad for google phones with Gemini in the cinema when i went to see Gundam last week. they showed a variety of people asking it various questions with a voice model, notably including a question on astrology lmao. Naturally, in the video, the phone model responded with some claims about people with whatever sign it was. Which is a pretty apt demonstration of the chameleon-like nature of LLMs: if you ask it a question about astrology phrased in a way that implies that you believe in astrology, it will tell you what seems to be a natural response, namely what an astrologer would say. If you ask if there is any scientific basis for belief in astrology, it would probably tell you that there isn't.

In fact, let's try it on DeepSeek R1... I ask an astrological question, got an astrological answer with a really softballed disclaimer:

Individual personalities vary based on numerous factors beyond sun signs, such as upbringing and personal experiences. Astrology serves as a tool for self-reflection, not a deterministic framework.

Ask if there's any scientific basis for astrology, and indeed it gives you a good list of reasons why astrology is bullshit, bringing up the usual suspects (Barnum statements etc.). And of course, if I then explain the experiment and prompt it to talk about whether LLMs should correct users with scientific information when they ask about pseudoscientific questions, it generates a reasonable-sounding discussion about how you could use reinforcement learning to encourage models to focus on scientific answers instead, and how that could be gently presented to the user.

I wondered what would happen if I instead asked it to talk about different epistemic regimes and come up with reasons why LLMs should take astrology into account in their guidance. However, this attempt didn't work so well - it started spontaneously bringing up the science side. It was able to observe how the framing of my question with words like 'benefit', 'useful' and 'LLM' made that response more likely. So LLMs infer a lot of context from framing and shape their simulacra accordingly. Don't think that's quite the message that Google had in mind in their ad though.

I asked Gemini 2.0 Flash Thinking (the small free Gemini variant with a reasoning mode) the same questions and its answers fell along similar lines, although rather more dry.

So yeah, returning to the ad - I feel like, even as the models get startlingly more powerful month by month, the companies still struggle to know how to get across to people what the big deal is, or why you might want to prefer one model over another, or how the new LLM-powered chatbots are different from oldschool assistants like Siri (which could probably answer most of the questions in the Google ad, but not hold a longform conversation about it).

some general comments

The hype around ChatGPT's new update is mostly in its use as a toy - the funny stylistic clash it can create between the soft cartoony "Ghibli style" and serious historical photos. Is that really something a lot of people would spend an expensive subscription to access? Probably not. On the other hand, their programming abilities are increasingly catching on.

But I also feel like a lot of people are still stuck on old models of 'what AI is and how it works' - stochastic parrots, collage machines etc. - that are increasingly falling short of the more complex behaviours the models can perform, now that prediction combines with reinforcement learning and self-play and other methods like that. Models are still very 'spiky' - superhumanly good at some things and laughably terrible at others - but every so often the researchers fill in some gaps between the spikes. And then we poke around and find some new ones, until they fill those too.

I always tried to resist 'AI will never be able to...' type statements, because that's just setting yourself up to look ridiculous. But I will readily admit, this is all happening way faster than I thought it would. I still do think this generation of AI will reach some limit, but genuinely I don't know when, or how good it will be at saturation. A lot of predicted 'walls' are falling.

My anticipation is that there's still a long way to go before this tops out. And I base that less on the general sense that scale will solve everything magically, and more on the intense feedback loop of human activity that has accumulated around this whole thing. As soon as someone proves that something is possible, that it works, we can't resist poking at it. Since we have a century or more of science fiction priming us on dreams/nightmares of AI, as soon as something comes along that feels like it might deliver on the promise, we have to find out. It's irresistible.

AI researchers are frequently said to place weirdly high probabilities on 'P(doom)', that AI research will wipe out the human species. You see letters calling for an AI pause, or papers saying 'agentic models should not be developed'. But I don't know how many have actually quit the field based on this belief that their research is dangerous. No, they just get a nice job doing 'safety' research. It's really fucking hard to figure out where this is actually going, when behind the eyes of everyone who predicts it, you can see a decade of LessWrong discussions framing their thoughts and you can see that their major concern is control over the light cone or something.

#ai#at some point in this post i switched to capital letters mode#i think i'm gonna leave it inconsistent lol

34 notes


Text

Clarification: Generative AI does not equal all AI

💭 "Artificial Intelligence"

AI is machine learning, deep learning, natural language processing, and more that I'm not smart enough to know. It can be extremely useful in many different fields and technologies. One of my information & emergency management courses described the usage of AI as being a "human centaur". Part human part machine; meaning AI can assist in all the things we already do and supplement our work by doing what we can't.

💭 Examples of AI Benefits

AI can help advance things in all sorts of fields, here are some examples:

Emergency Healthcare & Disaster Risk

Disaster Response

Crisis Resilience Management

Medical Imaging Technology

Commercial Flying

Air Traffic Control

Railroad Transportation

Ship Transportation

Geology

Water Conservation

Can AI technology be used maliciously? Yeh. That's a matter of developing ethics and working to teach people how to see red flags, just like people see red flags in already existing technology.

AI isn't evil. It's not the insane sentient shit that wants to kill us in movies. And it is not synonymous with generative AI.

💭 Generative AI

Generative AI does use these technologies, but it uses them unethically. It scrapes data from all art, all writing, all videos, all games, all audio, anything its developers give it access to WITHOUT PERMISSION, which is basically free rein over the internet. Sometimes there are certain restrictions: generative AI engineers (who CAN choose to exclude things) may exclude extremist sites or explicit materials, usually using blacklists.

AI can create images of real individuals without permission, including revenge porn. It can be used to create music using someone's voice without their permission and then sell that music. It can spread disinformation faster than it can be fact checked, and create false evidence that our court systems are not ready to handle.

AI bros eat it up without question: "it makes art more accessible" , "it'll make entertainment production cheaper" , "it's the future, evolve!!!"

💭 AI is not similar to human thinking

When faced with the argument "a human didn't make it" the comeback is "AI learns based on already existing information, which is exactly what humans do when producing art! We ALSO learn from others and see thousands of other artworks"

Let's make something clear: generative AI isn't making anything original. It is true that human beings process all the information we come across. We observe that information, learn from it, process it then ADD our own understanding of the world, our unique lived experiences. Through that information collection, understanding, and our own personalities we then create new original things.

💭 Generative AI doesn't create things: it mimics things

Take an analogy:

Consider an infant unable to talk but old enough to engage with their caregivers, some point in between 6-8 months old.

Mom: a bird flaps its wings to fly!!! *makes a flapping motion with arm and hands*

Infant: *giggles and makes a flapping motion with arms and hands*

The infant does not understand what a bird is, what wings are, or the concept of flight. But she still fully mimicked the flapping of the hands and arms because her mother did it first to show her. She doesn't cognitively understand what on earth any of it means, but she was still able to do it.

In the same way, generative AI is the infant that copies what humans have done: mimicry, without understanding anything about the works it has stolen.

It's not original, it doesn't have a world view, it doesn't understand the emotions that go into the different work it is stealing, its creations have no meaning, and it doesn't have any motivation to create things; it only does so because it was told to.

Why read a book someone couldn't even be bothered to write?

Related videos I find worth a watch

ChatGPT's Huge Problem by Kyle Hill (we don't understand how AI works)

Criticism of Shadiversity's "AI Love Letter" by DeviantRahll

AI Is Ruining the Internet by Drew Gooden

AI vs The Law by Legal Eagle (AI & US Copyright)

AI Voices by Tyler Chou (Short, flash warning)

Dead Internet Theory by Kyle Hill

-Dyslexia, not audio proof read-

#ai#anti ai#generative ai#art#writing#ai writing#wrote 95% of this prior to brain stopping sky rocketing#chatgpt#machine learning#youtube#technology#artificial intelligence#people complain about us being#luddite#but nah i dont find mimicking to be real creations#ai isnt the problem#ai is going to develop period#its going to be used period#doesn't mean we need to normalize and accept generative ai

73 notes


Text

By: Clay Shirky

Published: Apr 29, 2025

Since ChatGPT launched in late 2022, students have been among its most avid adopters. When the rapid growth in users stalled in the late spring of ’23, it briefly looked like the AI bubble might be popping, but growth resumed that September; the cause of the decline was simply summer break. Even as other kinds of organizations struggle to use a tool that can be strikingly powerful and surprisingly inept in turn, AI’s utility to students asked to produce 1,500 words on Hamlet or the Great Leap Forward was immediately obvious, and is the source of the current campaigns by OpenAI and others to offer student discounts, as a form of customer acquisition.

Every year, 15 million or so undergraduates in the United States produce papers and exams running to billions of words. While the output of any given course is student assignments — papers, exams, research projects, and so on — the product of that course is student experience. “Learning results from what the student does and thinks,” as the great educational theorist Herbert Simon once noted, “and only as a result of what the student does and thinks.” The assignment itself is a MacGuffin, with the shelf life of sour cream and an economic value that rounds to zero dollars. It is valuable only as a way to compel student effort and thought.

The utility of written assignments relies on two assumptions: The first is that to write about something, the student has to understand the subject and organize their thoughts. The second is that grading student writing amounts to assessing the effort and thought that went into it. At the end of 2022, the logic of this proposition — never ironclad — began to fall apart completely. The writing a student produces and the experience they have can now be decoupled as easily as typing a prompt, which means that grading student writing might now be unrelated to assessing what the student has learned to comprehend or express.

Generative AI can be useful for learning. These tools are good at creating explanations for difficult concepts, practice quizzes, study guides, and so on. Students can write a paper and ask for feedback on diction, or see what a rewrite at various reading levels looks like, or request a summary to check if their meaning is clear. Engaged uses have been visible since ChatGPT launched, side by side with the lazy ones. But the fact that AI might help students learn is no guarantee it will help them learn.

After observing that student action and thought is the only possible source of learning, Simon concluded, “The teacher can advance learning only by influencing the student to learn.” Faced with generative AI in our classrooms, the obvious response for us is to influence students to adopt the helpful uses of AI while persuading them to avoid the harmful ones. Our problem is that we don’t know how to do that.

I am an administrator at New York University, responsible for helping faculty adapt to digital tools. Since the arrival of generative AI, I have spent much of the last two years talking with professors and students to try to understand what is going on in their classrooms. In those conversations, faculty have been variously vexed, curious, angry, or excited about AI, but as last year was winding down, for the first time one of the frequently expressed emotions was sadness. This came from faculty who were, by their account, adopting the strategies my colleagues and I have recommended: emphasizing the connection between effort and learning, responding to AI-generated work by offering a second chance rather than simply grading down, and so on. Those faculty were telling us our recommended strategies were not working as well as we’d hoped, and they were saying it with real distress.

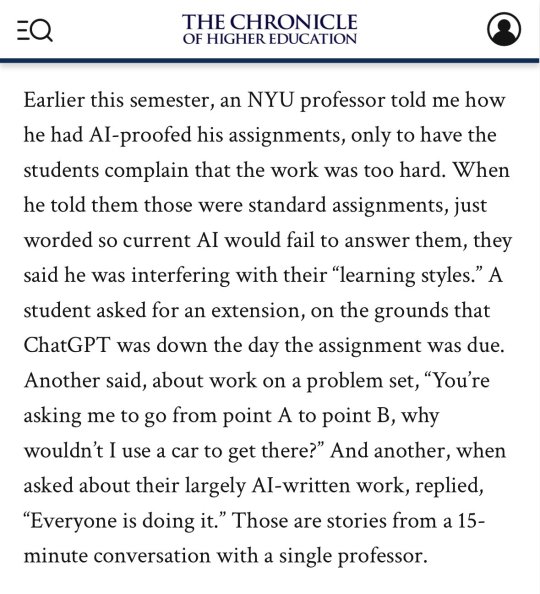

Earlier this semester, an NYU professor told me how he had AI-proofed his assignments, only to have the students complain that the work was too hard. When he told them those were standard assignments, just worded so current AI would fail to answer them, they said he was interfering with their “learning styles.” A student asked for an extension, on the grounds that ChatGPT was down the day the assignment was due. Another said, about work on a problem set, “You’re asking me to go from point A to point B, why wouldn’t I use a car to get there?” And another, when asked about their largely AI-written work, replied, “Everyone is doing it.” Those are stories from a 15-minute conversation with a single professor.

We are also hearing a growing sense of sadness from our students about AI use. One of my colleagues reports students being “deeply conflicted” about AI use, originally adopting it as an aid to studying but persisting with a mix of justification and unease. Some observations she’s collected:

“I’ve become lazier. AI makes reading easier, but it slowly causes my brain to lose the ability to think critically or understand every word.”

“I feel like I rely too much on AI, and it has taken creativity away from me.”

On using AI summaries: “Sometimes I don’t even understand what the text is trying to tell me. Sometimes it’s too much text in a short period of time, and sometimes I’m just not interested in the text.”

“Yeah, it’s helpful, but I’m scared that someday we’ll prefer to read only AI summaries rather than our own, and we’ll become very dependent on AI.”

Much of what’s driving student adoption is anxiety. In addition to the ordinary worries about academic performance, students feel time pressure from jobs, internships, or extracurriculars, and anxiety about GPA and transcripts for employers. It is difficult to say, “Here is a tool that can basically complete assignments for you, thus reducing anxiety and saving you 10 hours of work without eviscerating your GPA. By the way, don’t use it that way.” But for assignments to be meaningful, that sort of student self-restraint is critical.

Self-restraint is also, on present evidence, not universally distributed. Last November, a Reddit post appeared in r/nyu, under the heading “Can’t stop using Chat GPT on HW.” (The poster’s history is consistent with their being an NYU undergraduate as claimed.) The post read:

I literally can’t even go 10 seconds without using Chat when I am doing my assignments. I hate what I have become because I know I am learning NOTHING, but I am too far behind now to get by without using it. I need help, my motivation is gone. I am a senior and I am going to graduate with no retained knowledge from my major.

Given these and many similar observations in the last several months, I’ve realized many of us working on AI in the classroom have made a collective mistake, believing that lazy and engaged uses lie on a spectrum, and that moving our students toward engaged uses would also move them away from the lazy ones.

Faculty and students have been telling me that this is not true, or at least not true enough. Instead of a spectrum, uses of AI are independent options. A student can take an engaged approach to one assignment, a lazy approach on another, and a mix of engaged and lazy on a third. Good uses of AI do not automatically dissuade students from also adopting bad ones; an instructor can introduce AI for essay feedback or test prep without that stopping their student from also using it to write most of their assignments.

Our problem is that we have two problems. One is figuring out how to encourage our students to adopt creative and helpful uses of AI. The other is figuring out how to discourage them from adopting lazy and harmful uses. Those are both important, but the second one is harder.

It is easy to explain to students that offloading an assignment to ChatGPT creates no more benefit for their intellect than moving a barbell with a forklift does for their strength. We have been alert to this issue since late 2022, and students have consistently reported understanding that some uses of AI are harmful. Yet forgoing easy shortcuts has proven to be as difficult as following a workout routine, and for the same reason: The human mind is incredibly adept at rationalizing pleasurable but unhelpful behavior.

Using these tools can certainly make it feel like you are learning. In her explanatory video “AI Can Do Your Homework. Now What?” the documentarian Joss Fong describes it this way:

Education researchers have this term “desirable difficulties,” which describes this kind of effortful participation that really works but also kind of hurts. And the risk with AI is that we might not preserve that effort, especially because we already tend to misinterpret a little bit of struggling as a signal that we’re not learning.

This preference for the feeling of fluency over desirable difficulties was identified long before generative AI. It’s why students regularly report they learn more from well-delivered lectures than from active learning, even though we know from many studies that the opposite is true. One recent paper was evocatively titled “Measuring Active Learning Versus the Feeling of Learning.” Another concludes that instructor fluency increases perceptions of learning without increasing actual learning.

This is a version of the debate we had when electronic calculators first became widely available in the 1970s. Though many people present calculator use as unproblematic, K-12 teachers still ban them when students are learning arithmetic. One study suggests that students use calculators as a way of circumventing the need to understand a mathematics problem (i.e., the same thing you and I use them for). In another experiment, when using a calculator programmed to “lie,” four in 10 students simply accepted the result that a woman born in 1945 was 114 in 1994. Johns Hopkins students with heavy calculator use in K-12 had worse math grades in college, and many claims about the positive effect of calculators take improved test scores as evidence, which is like concluding that someone can run faster if you give them a car. Calculators obviously have their uses, but we should not pretend that overreliance on them does not damage number sense, as everyone who has ever typed 7 x 8 into a calculator intuitively understands.

Studies of cognitive bias with AI use are starting to show similar patterns. A 2024 study with the blunt title “Generative AI Can Harm Learning” found that “access to GPT-4 significantly improves performance … However, we additionally find that when access is subsequently taken away, students actually perform worse than those who never had access.” Another found that students who have access to a large language model overestimate how much they have learned. A 2025 study from Carnegie Mellon University and Microsoft Research concludes that higher confidence in gen AI is associated with less critical thinking. As with calculators, there will be many tasks where automation is more important than user comprehension, but for student work, a tool that improves the output but degrades the experience is a bad tradeoff.

In 1980 the philosopher John Searle, writing about AI debates at the time, proposed a thought experiment called “The Chinese Room.” Searle imagined an English speaker with no knowledge of the Chinese language sitting in a room with an elaborate set of instructions, in English, for looking up one set of Chinese characters and finding a second set associated with the first. When a piece of paper with words in Chinese written on it slides under the door, the room’s occupant looks it up, draws the corresponding characters on another piece of paper, and slides that back. Unbeknownst to the room’s occupant, Chinese speakers on the other side of the door are slipping questions into the room, and the pieces of paper that slide back out are answers in perfect Chinese. With this imaginary setup, Searle asked whether the room’s occupant actually knows how to read and write Chinese. His answer was an unequivocal no.

When Searle proposed that thought experiment, no working AI could approximate that behavior; the paper was written to highlight the theoretical difference between acting with intent versus merely following instructions. Now it has become just another use of actually existing artificial intelligence, one that can destroy a student’s education.

The recent case of William A., as he was known in court documents, illustrates the threat. William was a student in Tennessee’s Clarksville-Montgomery County School system who struggled to learn to read. (He would eventually be diagnosed with dyslexia.) As is required under the Individuals With Disabilities Education Act, William was given an individualized educational plan by the school system, designed to provide a “free appropriate public education” that takes a student’s disabilities into account. As William progressed through school, his educational plan was adjusted, allowing him additional time plus permission to use technology to complete his assignments. He graduated in 2024 with a 3.4 GPA and an inability to read. He could not even spell his own name.

To complete written assignments, as described in the court proceedings, “William would first dictate his topic into a document using speech-to-text software”:

He then would paste the written words into an AI software like ChatGPT. Next, the AI software would generate a paper on that topic, which William would paste back into his own document. Finally, William would run that paper through another software program like Grammarly, so that it reflected an appropriate writing style.

This process is recognizably a practical version of the Chinese Room for translating between speaking and writing. That is how a kid can get through high school with a B+ average and near-total illiteracy.

A local court found that the school system had violated the Individuals With Disabilities Education Act, and ordered it to provide William with hundreds of hours of compensatory tutoring. The county appealed, maintaining that since William could follow instructions to produce the requested output, he’d been given an acceptable substitute for knowing how to read and write. On February 3, an appellate judge handed down a decision affirming the original judgement: William’s schools failed him by concentrating on whether he had completed his assignments, rather than whether he’d learned from them.

Searle took it as axiomatic that the occupant of the Chinese Room could neither read nor write Chinese; following instructions did not substitute for comprehension. The appellate-court judge similarly ruled that William A. had not learned to read or write English: Cutting and pasting from ChatGPT did not substitute for literacy. And what I and many of my colleagues worry about is that we are allowing our students to build custom Chinese Rooms for themselves, one assignment at a time.

[ Via: https://archive.today/OgKaY ]

==

These are the students who want taxpayers to pay for their student debt.

#Steve McGuire#higher education#artificial intelligence#AI#academic standards#Chinese Room#literacy#corruption of education#NYU#New York University#religion is a mental illness


Text

Anon wrote: hello! thank you for running this blog. i hope your vacation was well-spent!

i am an enfp in the third year of my engineering degree. i had initially wanted to do literature and become an author. however, due to the job security associated with this field, my parents got me to do computer science, specialising in artificial intelligence. i did think it was the end of my life at the time, but eventually convinced myself otherwise. after all, i could still continue reading and writing as hobbies.