#chatgpt prompt engineering course


Text

#best online learning platforms#web development courses#chatgpt prompt engineering course#bizgurukul affiliate marketing

1 note


Text

Earn Money with Prompt Engineering | A unique way to earn money online

Prompt Engineering: Designing effective prompts for online success. In the rapidly evolving landscape of online communication, Prompt Engineering has emerged as an important skill, paving the way to boost engagement, deliver valuable information, and even monetize digital interactions. This article takes an in-depth look at the world of Prompt Engineering, its importance, accessible learning…


#advanced prompt engineering#ai prompt engineering#ai prompt engineering certification#ai prompt engineering course#ai prompt engineering jobs#an information-theoretic approach to prompt engineering without ground truth labels#andrew ng prompt engineering#awesome prompt engineering#brex prompt engineering guide#chat gpt prompt engineering#chat gpt prompt engineering course#chat gpt prompt engineering jobs#chatgpt prompt engineering#chatgpt prompt engineering course#chatgpt prompt engineering for developers#chatgpt prompt engineering guide#clip prompt engineering#cohere prompt engineering#deep learning ai prompt engineering#deeplearning ai prompt engineering#deeplearning.ai prompt engineering#entry level prompt engineering jobs#free prompt engineering course#github copilot prompt engineering#github prompt engineering#github prompt engineering guide#gpt 3 prompt engineering#gpt prompt engineering#gpt-3 prompt engineering#gpt-4 prompt engineering

0 notes

Text

Mastering the Art of AI Prompts: 5 Techniques for Advanced Users

New Post has been published on https://thedigitalinsider.com/mastering-the-art-of-ai-prompts-5-techniques-for-advanced-users/


The use of large language models (LLMs) like ChatGPT is exploding across industries. Even scientists are leaning on AI to write or at least polish their work. A recent analysis of five million scientific studies published in 2023 found a rapid increase in certain words, such as meticulous, intricate, and commendable. The reason for this increase? The use of LLMs.

AI’s impact is already massive, rapidly transforming the workplace. But we’re still at the early stages, trying to strike a balance between harnessing AI without leaning too much on it and compromising quality. At my company Jotform, we have incorporated AI tools to automate tedious tasks, or as I call it, “busywork,” and free up employees to focus on the meaningful work that only humans can do. I view AI tools like ChatGPT as valuable resources—low-cost, efficient AI assistants. But as anyone who’s used ChatGPT will tell you, it’s not 100% reliable or accurate. And it’s only as effective as the prompts you give it.

I recently asked ChatGPT how to develop your prompt engineering skills. The first response was: “Experimentation and Iteration: Continuously experiment with different types of prompts and refine them based on the AI’s outputs. Track what works and what doesn’t, and iteratively improve your prompts.”

In other words, practice makes perfect.

With this in mind, here are my top strategies for taking your prompt writing to the next level.

Try meta-prompts

Communication is the lynchpin of a successful relationship. Understanding how people communicate and how to best communicate with them can help grow a stronger bond and overcome inevitable misunderstandings when there is a disconnect between words and intentions or emotions. In the same vein, your interactions with ChatGPT can vastly improve if you explicitly ask it how to best communicate with it. I regularly ask ChatGPT how to phrase prompts in order to get the information or feedback I’m seeking. The more information you give ChatGPT about the results you’re after, the better it can help you generate an effective prompt.

Here’s a sample meta-prompt:

“How would you phrase a prompt to get detailed feedback on an article from ChatGPT? What information would you include to get the most relevant insight?”

In seconds, ChatGPT churned out a prompt template with a brief explanation of why it included each part of the prompt. This kind of insight helps you write better prompts in the future.

Make it an ongoing dialogue

In most research tools, each query is isolated. You can’t build on a previous search to refine or dig deeper. ChatGPT has the advantage of enabling you to continue a thread, just like a dialogue between two people—a Socratic dialogue, if you will, where you are the teacher and ChatGPT is the student. I find this capability especially useful if I’m new to a subject and would like to begin with a basic level of understanding before diving into the nitty gritty.

To engage in dialogue with ChatGPT, start with a broad prompt and refine the subsequent prompts based on the responses.

A sequence of prompts might look like this:

“In a few sentences, can you explain the mechanics of a tennis serve?”

“Why does the server shift their weight from the back foot to the front foot?”

“Can you briefly explain kinetic energy transfer?”

This technique can help you to get more nuanced responses and explore areas and perspectives you might not have otherwise considered.
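As a rough sketch of what "continuing a thread" means under the hood, here is how the sequence above could be kept as a running message history. The `role`/`content` dictionary shape follows a common chat convention and the `add_turn` helper is hypothetical; no model is actually called, and the assistant reply is a placeholder.

```python
def add_turn(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


# Start broad, then narrow each follow-up based on the previous reply.
history = []
history = add_turn(
    history, "user",
    "In a few sentences, can you explain the mechanics of a tennis serve?",
)
# Placeholder only: in a real session this would be the model's answer.
history = add_turn(history, "assistant", "<model reply would go here>")
history = add_turn(
    history, "user",
    "Why does the server shift their weight from the back foot to the front foot?",
)

for message in history:
    print(f"{message['role']}: {message['content']}")
```

Because every earlier turn stays in the list, each new question is read in the context of what came before, which is what lets the follow-ups be so short.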

Give ChatGPT a reference text

ChatGPT can be a bit of a smooth talker. Ask it about any subject under the sun and it will happily wax poetic for as long as you’d like. The rub: it’s not always accurate. That’s why providing ChatGPT a reference text can help ensure the replies you receive are grounded in reliable sources.

As OpenAI explains, “In the same way that a sheet of notes can help a student do better on a test, providing reference text to these models can help in answering with fewer fabrications.” Feed ChatGPT the sources that you already know and trust and let the LLM extract the information you need.

The prompt could be:

“Use the following article in quotes to answer questions.”

ChatGPT: Sure, please go ahead and provide the articles you’d like me to use for answering your questions.

Then, provide ChatGPT with the article (title and publication will usually do the trick) and your questions.
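If you would rather paste the article text itself than rely on a title, one way to assemble the prompt is sketched below. The `build_grounded_prompt` helper, the triple-quote delimiters, and the "say so" fallback instruction are my own assumptions for illustration, not an official template.

```python
def build_grounded_prompt(reference_text, question):
    """Wrap a trusted source in delimiters and ask a question about it."""
    return (
        "Use the following article, delimited by triple quotes, "
        "to answer the question. "
        # Assumed fallback wording so the model can decline instead of guessing.
        "If the answer is not in the article, say so.\n\n"
        f'"""\n{reference_text}\n"""\n\n'
        f"Question: {question}"
    )


prompt = build_grounded_prompt(
    "A bridge over water connects two lands.",
    "What does the bridge connect?",
)
print(prompt)
```

Clear delimiters make it easier for the model to tell where your source ends and your question begins.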

Assign ChatGPT a role

One of the most useful, and admittedly fun, capabilities of ChatGPT is to adopt different personas. You probably know the parable of the blind men and the elephant. Each man feels a different part of the elephant and describes the animal based on their limited perspective. As humans, we tend to assume our reality is correct even when it’s based on a limited point of view. Incorporating various perspectives empowers us to understand something more fully.

By simply instructing ChatGPT to adopt a persona, we can get a new perspective.

Consider these two prompts and personas:

Prompt: Rewrite the following text from the perspective of a poet: A bridge over water connects two lands.

ChatGPT:

Between shores, where rivers dance and whisper, A bridge, slender and resolute, spans the liquid silver, Uniting distant lands with an arch of promise, A testament to the yearning of hearts and soil.

Prompt: Rewrite the following text from the perspective of an engineer: A bridge over water connects two lands.

ChatGPT:

A bridge over water functions as a structural solution, effectively connecting two separate landmasses. It spans the gap with precision-engineered materials, ensuring stability and durability while facilitating transportation and communication between the two regions.

At Jotform, our marketing team might use this technique to examine an issue from the perspective of different buyer personas. It’s a simple technique with myriad use cases.
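To run the same text past several personas in one go, a small template helps. This is a minimal sketch; the `persona_prompt` helper is a hypothetical name, and it only builds the prompt strings rather than sending them anywhere.

```python
def persona_prompt(persona, text):
    """Build a rewrite request from the point of view of one persona."""
    return f"Rewrite the following text from the perspective of {persona}: {text}"


TEXT = "A bridge over water connects two lands."

# One prompt per persona; swap in buyer personas or any other viewpoint.
prompts = [persona_prompt(p, TEXT) for p in ("a poet", "an engineer")]

for p in prompts:
    print(p)
```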

Model the reply you want

While ChatGPT might not be the most reliable narrator, it is extremely logical. Rather than trying to describe the response you want, which can be surprisingly challenging, you can give ChatGPT an example and it will apply the same logic.

Let’s say I’m working on a marketing campaign and want to use ChatGPT to come up with snappy, compelling copy.

I could use the following prompt:

Prompt: “I will write words and I’d like you to come up with a corresponding adjective. For example, if I write ‘pretty,’ you might write ‘more beautiful than the stars in the sky.’”

ChatGPT:

Of course, I’m ready! Please provide the words you’d like me to transform.

Me: nervous

ChatGPT: Nervous as a leaf trembling in the gale of a tempest.

On second thought, I might decide that’s not the tone I’m after. So I tweak the prompt.

Prompt: “I will write words and I’d like you to come up with a corresponding adjective. For example, if I write ‘pretty,’ you might write ‘cuter than the prize-winning pig at the Texas State Fair.’”

ChatGPT: Got it! Please provide the words you’d like me to transform.

Me: nervous

ChatGPT: Nervous as a cat at a rocking chair convention.

In this case, I’d get an idea for my marketing copy—and a chuckle, at no extra charge.
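The same idea can be written as a reusable template, so that changing the tone is just a matter of swapping the example. A minimal sketch follows; the `few_shot_prompt` helper and the `Word:`/`Reply:` labels are assumptions for illustration only.

```python
def few_shot_prompt(instruction, examples, new_word):
    """Build a prompt that shows worked examples before the real input."""
    lines = [instruction, ""]
    for word, reply in examples:
        lines.append(f"Word: {word}")
        lines.append(f"Reply: {reply}")
        lines.append("")
    # Leave the last reply open so the model completes it in the same style.
    lines.append(f"Word: {new_word}")
    lines.append("Reply:")
    return "\n".join(lines)


prompt = few_shot_prompt(
    "I will write words and I'd like you to come up with a corresponding adjective.",
    [("pretty", "cuter than the prize-winning pig at the Texas State Fair")],
    "nervous",
)
print(prompt)
```

Swapping the single example for the "stars in the sky" version changes the register of the replies without touching the instruction.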

#2023#ai#ai prompts#ai tools#Analysis#Art#Article#Articles#bridge#chatGPT#communication#course#dance#diving#elephant#emotions#employees#energy#Engineer#engineering#explanation#fair#focus#Future#gap#how#how to#humans#impact#Industries

0 notes

Video

youtube

What is Prompt Engineer - Prompt Engineer Courses by Open AI - AI - Chat...

#youtube#What is Prompt Engineer - Prompt Engineer Courses by Open AI - AI - Chat GPT - No Coding prompt promptengineering chatgpt openai ai engine

0 notes

Text

Expert_Juwelier

Prompt Engineering is the Alchemy of the Digital Age: turning words into actions, dreams into software.

✨ If coding was the job of yesterday, crafting the perfect prompt is tomorrow

#TechTrends #AIRevolution #TechInsights #promptengineering #chatgpt4

#ai#prompt engineering#chatgpt#nlp#artificial intelligence#machine learning#open ai#ai course in delhi#ai generated#prompt#generative ai

0 notes

Text

How AI is Changing Jobs: The Rise of Automation and How to Stay Ahead in 2025

AI and Jobs

Artificial Intelligence (AI) is everywhere. From self-checkout kiosks to AI-powered chatbots handling customer service, it’s changing the way businesses operate. While AI is making things faster and more efficient, it’s also making some jobs disappear. If you’re wondering how this affects you and what you can do about it, keep reading — because the future is already here.

The AI Boom: How It’s Reshaping the Workplace

AI is not just a buzzword anymore; it’s the backbone of modern business. Companies are using AI for automation, decision-making, and customer interactions. But what does that mean for jobs?

AI is Taking Over Repetitive Tasks

Gone are the days when data entry, basic accounting, and customer support relied solely on humans. AI tools like ChatGPT, Jasper, and Midjourney are doing tasks that once required an entire team. This means fewer jobs in these sectors, but also new opportunities elsewhere.

Companies are Hiring Fewer People

With AI handling routine work, businesses don’t need as many employees as before. Hiring freezes, downsizing, and increased automation are making it tougher to land a new job.

AI-Related Jobs are on the Rise

On the flip side, there’s massive demand for AI engineers, data scientists, and automation specialists. Companies need people who can build, maintain, and optimize AI tools.

Trending AI Skills Employers Want:

Machine Learning & Deep Learning

Prompt Engineering

AI-Powered Marketing & SEO

AI in Cybersecurity

Data Science & Analytics


The Decline of Traditional Job Offers

AI is shaking up industries, and some job roles are disappearing faster than expected. Here’s why new job offers are on the decline:

AI-Driven Cost Cutting

Businesses are using AI to reduce operational costs. Instead of hiring new employees, they’re investing in AI-powered solutions that automate tasks at a fraction of the cost.

The Gig Economy is Replacing Full-Time Jobs

Instead of hiring full-time staff, companies are outsourcing work to freelancers and gig workers. This means fewer stable job opportunities but more chances for independent workers.

Economic Uncertainty

The global economy is unpredictable, and businesses are cautious about hiring. With AI improving efficiency, companies are choosing to scale down their workforce.


Preparing for an AI-Driven Future

Feeling worried? Don’t be. AI isn’t just taking jobs — it’s also creating new ones. The key is to stay ahead by learning the right skills and adapting to the changing landscape.

1. Learn AI and Data Analytics

The best way to future-proof your career is to understand AI. Free courses on platforms like Coursera, Udemy, and Khan Academy can get you started.

2. Develop Soft Skills AI Can’t Replace

AI is great at automation, but it lacks emotional intelligence, creativity, and critical thinking. Strengthening these skills can give you an edge.

3. Embrace Remote & Freelance Work

With traditional jobs shrinking, freelancing is a great way to stay flexible. Sites like Upwork, Fiverr, and Toptal have booming demand for AI-related skills.

4. Use AI to Your Advantage

Instead of fearing AI, learn how to use it. AI-powered tools like ChatGPT, Jasper, and Canva can help boost productivity and creativity.

5. Never Stop Learning

Technology evolves fast. Stay updated with new AI trends, attend webinars, and keep improving your skills.


Final Thoughts

AI is here to stay, and it’s changing the job market rapidly. While some traditional roles are disappearing, new opportunities are emerging. The key to surviving (and thriving) in this AI-driven world is adaptability. Keep learning, stay flexible, and embrace AI as a tool — not a threat.


Share this blog if you found it helpful! Let’s spread awareness and help people prepare for the AI revolution.

3 notes


Text

Here is the thing that bothers me, as someone who works in tech, about the whole ChatGPT explosion.

The thing that bothers me is that ChatGPT, from a purely abstract point of view, is really fucking cool.

Some of the things it can produce are fucking wild to me; it blows my mind that a piece of technology is able to produce such detailed, varied responses that on the whole fit the prompts they are given. It blows my mind that it has come so far so fast. It is, on an abstract level, SO FUCKING COOL that a computer can make the advanced leaps of logic (because that's all it is, very complex programmed logic, not intelligence in any human sense) required to produce output "in the style of Jane Austen" or "about the care and feeding of prawns" or "in the form of a limerick" or whatever the hell else people dream up for it to do. And fast, too! It's incredible on a technical level, and if it existed in a vacuum I would be so excited to watch it unfold and tinker with it all damn day.

The problem, as it so often is, is that cool stuff does not exist in a vacuum. In this case, it is a computer that (despite the moniker of "artificial intelligence") has no emotional awareness or ethical reasoning capabilities, being used by the whole great tide of humanity, a force that is notoriously complex, notoriously flawed, and more so in bulk.

-----

During my first experiment with a proper ChatGPT interface, I asked it (because I am currently obsessed with GW2) if it could explain HAM tanking to me in an instructional manner. It wrote me a long explanatory chunk of text, explaining that HAM stood for "Heavy Armor Masteries" and telling me how I should go about training and preparing a character with them. It was a very authoritative-sounding discussion, with lots of bullet points and even an occasional wiki link, IIRC.

The problem of course ("of course", although the GW2 folks who follow me have already spotted it) is that the whole explanation was nonsense. HAM in GW2 player parlance stands for "Heal Alacrity Mechanist". As near as I've been able to discover, "Heavy Armor Masteries" aren't even a thing, in GW2 or anywhere else - although both "Heavy Armor" and "Masteries" are independent concepts in the game.

Fundamentally, I thought, this is VERY bad. People have started relying on ChatGPT for answers to their questions. People are susceptible to authoritative-sounding answers like this. People under the right circumstances would have no reason not to take this as truth when it is not.

But at the same time... how wild, how cool, is it that, given the prompt "HAM tanking" and having no idea what it was except that it involves GW2, the parser was able to formulate a plausible-sounding acronym expansion out of whole cloth? That's extraordinary! If you don't think that's the tightest shit, get out of my face.

----

The problem, I think, is ultimately twofold: capitalism and phrasing.

The phrasing part is simple. Why do we call this "artificial intelligence"? It's a misnomer - there is no intelligence behind the results from ChatGPT. It is ultimately a VERY advanced and complicated search engine, using a vast quantity of source data to calculate an output from an input. Referring to that as "intelligence" gives it credit for an agency, an ability to judge whether its output is appropriate, that it simply does not possess. And given how quickly people are coming to rely on it as a source of truth, that's... irresponsible at best.

The capitalism part...

You hear further stories of the abuses of ChatGPT every day. People, human people with creative minds and things to say and contribute, being squeezed out of roles in favor of a ChatGPT implementation that can sufficiently ("sufficiently" by corporate standards) imitate soul without possessing it. This is not acceptable; the promise of technology is to facilitate the capabilities and happiness of humanity, not to replace it. Companies see the ability to expand their profit margins at the expense of the quality of their output and the humanity of it. They absorb and regurgitate in lesser form the existing work of creators who often didn't consent to contribute to such a system anyway.

Consequently, the more I hear about AI lately, the more hopeful I am that the thing does go bankrupt and collapse, that the ruling goes through where they have to obliterate their data stores and start over from scratch. I think "AI" as a concept needs to be taken away from us until we are responsible enough to use it.

But goddamn. I would love to live in a world where we could just marvel at it, at the things it is able to do *well* and the elegant beauty even of its mistakes.

#bjk talks#ChatGPT#technology#AI#artificial intelligence#just thinking out loud here really don't mind me

23 notes


Link

#aiapps#aisoftware#aitools#artificialintelligencetools#bestaitools#designtools#educationtools#generative-ai#marketingtools#productivitytools#topaitools

2 notes


Text

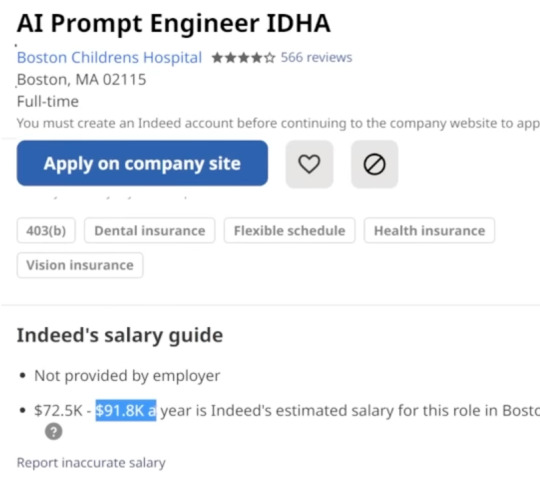

AI is creating amazing new jobs.

AI has created a field that never existed before — prompt engineering.

To work as a prompt engineer, you must know how to create prompts that generate desirable responses from AI models such as those used by ChatGPT.

The salary isn’t bad.

Artificial intelligence company, Anthropic, has a role for a “prompt engineer and librarian” with a salary range between $175,000 and $335,000.

And that’s not the only company looking for prompt engineers! Let’s see in more detail what prompt engineers do, how you can become a prompt engineer, and what the requirements are for this role.

What does a prompt engineer do?

A prompt engineer is someone who develops and refines AI models using prompt engineering techniques. This is like teaching a model how to do something by giving step-by-step instructions or “prompts.”

Prompt engineers work with large language models like GPT-3 (or the new GPT-4), which can generate human-like responses to text prompts. Their work focuses on designing prompts that generate desirable responses from language models, as well as enhancing the models to provide more accurate and relevant text outputs.

Here are some tasks a prompt engineer does:

Optimize language models using established techniques and tools

Write prose to test AI systems for quirks (identify AI’s errors and hidden capabilities)

Review and analyze datasets to identify patterns and trends in language and develop new prompts

Develop and maintain documentation for language models (examples, instructions, best practices, etc)

Train language models on new data sets, and monitor model performance to identify areas for improvement

Collaborate with data scientists/software engineers to integrate language models into software applications and systems.

As you can see, prompt engineers don’t write code all day, but they still need some programming knowledge to be able to work with datasets, develop and fine-tune language models, and collaborate with data scientists and software engineers.

How to learn prompt engineering

You don’t need to know coding to start learning prompt engineering. In fact, in the following link, you can find 4 free prompt engineering courses to join the top 1% of ChatGPT users.

After learning the basics, follow the steps below to continue your path as a prompt engineer.

Learn the basics of programming: As a prompt engineer, you’ll need to work with datasets and understand basic programming concepts. Python can be a good language for this.

Learn natural language processing (NLP) and machine learning (ML) concepts: Prompt engineers need to know concepts in both NLP and ML such as text preprocessing, feature engineering, model training, and optimization.

Practice developing prompts and fine-tuning language models: Learn to use prompt engineering techniques to generate text outputs from language models. Test different prompt types and fine-tune language models to improve performance.

Create a portfolio of prompt engineering projects to showcase your expertise.

The prompt engineering job that pays $335k

Anthropic pays prompt engineers up to $335k. The company specializes in developing general AI systems and language models, which may explain the high salary. It’s no surprise that Google has invested nearly $400 million in this company.

Here are some of the requirements for this job:

Excellent communicator, and love teaching technical concepts and creating high-quality documentation that helps out others

High-level familiarity with the architecture and operation of large language models

Basic programming skills and would be comfortable writing small Python programs

Stay up-to-date and informed by taking an active interest in emerging research and industry trends.

Note that the field of prompt engineering is less than 2 years old, so the prompt engineer role may differ from one company to the next, and, just like any other job, the salary for prompt engineers also varies.

If we compare both job postings, we’ll see that the requirements are different. In the second job posting, they specifically require people with 5 years of engineering/coding experience, with at least two years of experience in AI and NLP, and a bachelor’s degree in computer science, artificial intelligence, or a related field (healthcare preferred).

Again, the requirements may be higher or lower in some companies, so if you don’t have any experience, a project portfolio is probably the best way to get ahead of other applicants.

Artificial Corner’s Free ChatGPT Cheat Sheet

We’re offering a free cheat sheet to our readers. Join our newsletter with 20K+ people and get our free ChatGPT cheat sheet.

#The ChatGPT Skill That Pays Up to $335,000 a Year#prompt engineers#prompts#machine learning#ai#chatgpt

3 notes


Text

The real challenges of AI

The real challenge of AI is democratisation.

We are in the middle of a boom in AI. While it may seem like a sudden explosion in the media, this moment has been building for 15+ years now. From the birth of the internet, to the huge explosion in online information, to algorithmic feeds, then voice assistants, and now generative AI and large language models - every invention has grown on the bedrock of technologies before it.

Of course, the fervour we see now is from people experiencing a step change in technology. Till December 2022 you had to write an essay using your own brain and hands; come January 2023 you just provide a small prompt and voila! ChatGPT will write a whole essay for you. WOW.

But we've played this game many times before:

New technology is available

The technology gets hyped and people are promised a better future

Technology gets widely deployed

In the process of societal adoption, it gets co-opted by

Eventually, it gets completely owned by . The new boss is the same as the old boss. The average person is nowhere better.

One interesting aspect of computers is that they are comparatively much more accessible; order(s) of magnitude more accessible.

The steam engine was firmly on the capital side of the equation; it powered everything from trains to factories but it was a while before mechanisation entered the home and became accessible to the average person. Yet over time we've given up on things like sewing machines and become strictly consumers.

Computing and the internet sit firmly in the middle. Almost by definition - every laptop & smartphone is the "means of production". The entire "internet" however is but a handful of companies; yet because of its inherently decentralised nature; everything from Mastodon to torrents and more can and do exist.

If it wasn't for that design, the internet would be like the TV - with much less control in the hands of citizens.

The challenge with AI is to keep its capabilities as democratically accessible and user-programmable as possible, with the internet being the minimum bar. Thankfully, with models being open sourced and even run on Raspberry Pis, we are off to a good start! But we must remain watchful and make sure this happens.

People of my ilk (technologists) are gung ho about applying AI to anything and everything - but this question of democratisation is much more fundamental and its effects more pervasive.

6 notes


Text

Generative AI Professional Course: Build the Future with Intelligent Creativity

The rise of Generative AI marks a turning point in technology, where machines are not just analyzing data—they’re creating content. From producing stunning art and writing compelling stories to generating code, music, and even videos, Generative AI is reshaping how we think, work, and innovate.

As the demand for AI skills surges globally, enrolling in a Generative AI Professional Course is your gateway to a future-proof career. This article explains what the course entails, who it’s for, what you’ll learn, and why it’s a game-changer in today’s digital age.

What is Generative AI?

Generative AI refers to a class of artificial intelligence that can generate new data similar to the data it was trained on. Unlike traditional AI that focuses on prediction and classification, Generative AI creates content.

Popular Generative AI Applications:

ChatGPT for conversational AI

DALL·E & Midjourney for image generation

Runway & Sora for AI video creation

GitHub Copilot for AI coding

Bard, Claude, LLaMA for various text tasks

Why Take a Generative AI Professional Course?

With businesses adopting AI tools at a record pace, trained professionals are in high demand. This course empowers you with the knowledge and hands-on experience needed to lead in this evolving landscape.

Key Benefits:

Master cutting-edge tools like GPT, GANs, Diffusion models, and Transformers

Learn real-world applications through hands-on projects

Understand ethical AI development and prompt engineering

Access high-paying job opportunities and freelance gigs

Stay ahead in a competitive tech world

Who Should Enroll?

This course is perfect for:

Developers and Programmers

Data Scientists and Analysts

AI/ML Enthusiasts

Content Creators and Designers

Product Managers and Founders

Students and Educators in STEM fields

Whether you're a beginner or a tech professional, you’ll find value and growth in this course.

Course Curriculum Overview

1. Introduction to Generative AI

History and evolution of AI

Types of generative models (GANs, VAEs, Transformers)

2. Deep Learning Fundamentals

Neural networks, activation functions, optimization

Overview of TensorFlow and PyTorch


0 notes

Text

ChatGPT vs Software Developers: Is Generative AI the End of the Road for Developers?

The rise of generative AI, particularly models like ChatGPT, has sent ripples across various industries, and software development is no exception. With its ability to generate code, answer technical questions, and even assist in debugging, a crucial question arises: Is this the beginning of the end for software developers? Let's delve into this intriguing debate.

The Capabilities of ChatGPT in Software Development:

ChatGPT has demonstrated impressive abilities that can be beneficial in certain aspects of software development:

Code Generation: It can generate code snippets based on prompts, potentially speeding up the creation of boilerplate or simple functions.

Answering Technical Queries: Developers can use it to quickly find answers to common programming questions and understand concepts.

Debugging Assistance: ChatGPT can analyze code and suggest potential solutions to errors.

Idea Generation: It can help brainstorm different approaches to solving a problem.

These capabilities can undoubtedly enhance a developer's workflow and potentially boost productivity for certain tasks.

Why ChatGPT Isn't Replacing Software Developers (Yet):

Despite its impressive feats, ChatGPT and similar generative AI models are far from replacing human software developers. Here's why:

Lack of Deep Understanding and Context: ChatGPT operates based on patterns in its training data. It doesn't possess a genuine understanding of complex system architectures, business requirements, or user needs in the way a human developer does.

Inability to Handle Complex Projects Independently: Building substantial software involves intricate planning, design, integration, and testing. ChatGPT can assist with parts, but it can't manage the entire lifecycle of a complex project.

Debugging Limitations: While it can suggest solutions, ChatGPT doesn't possess the critical thinking and investigative skills needed to tackle nuanced and deeply embedded bugs. Human developers often rely on intuition, experience, and a deep understanding of the codebase to effectively debug.

Creativity and Innovation: Software development often requires creative problem-solving and innovative approaches. ChatGPT is good at generating solutions based on existing patterns, but it lacks the genuine creativity to devise truly novel solutions to unique challenges.

Ethical Considerations and Responsibility: Developers are responsible for the ethical implications of the software they create, including security, privacy, and bias. ChatGPT, as a tool, cannot take on this responsibility.

The Need for Human Oversight and Refinement: Code generated by ChatGPT often requires review, testing, and refinement by human developers to ensure it meets quality standards, security requirements, and project specifications.

Communication and Collaboration: Software development is a collaborative process involving teamwork, communication with stakeholders, and understanding user feedback. These are areas where human interaction remains crucial.

The Future: Collaboration, Not Replacement:

The more likely scenario is that ChatGPT and similar AI tools will become powerful assistants for software developers, augmenting their capabilities rather than replacing them. Developers can leverage AI for repetitive tasks, quick lookups, and initial code generation, freeing up their time to focus on higher-level tasks such as:

Designing complex architectures.

Understanding and translating business requirements.

Solving intricate and novel problems.

Ensuring code quality, security, and maintainability.

Collaborating with teams and stakeholders.

Preparing for the Future with Xaltius Academy's Software Engineering Course:

In this evolving landscape, a strong foundation in software engineering principles, critical thinking, and problem-solving remains paramount. Xaltius Academy's Software Engineering course is designed to equip aspiring developers with these essential skills. The program focuses on:

Fundamental Programming Concepts: Building a solid understanding of programming principles that transcend specific tools.

Software Design and Architecture: Learning to design robust and scalable systems.

Problem-Solving Skills: Developing the ability to tackle complex challenges that AI tools may not be able to handle.

Collaboration and Communication: Emphasizing teamwork and effective communication.

Understanding the Ethical Implications of Technology: Preparing developers to build responsible and ethical software.

Conclusion:

While ChatGPT and generative AI are undoubtedly powerful tools that will impact software development, they are not poised to replace human developers entirely. Instead, the future likely involves a collaborative relationship where developers leverage AI to enhance their productivity and focus on the more complex, creative, and strategic aspects of software creation. Investing in a comprehensive software engineering education, like the one offered by Xaltius Academy, will ensure that developers are well-equipped to thrive in this evolving technological landscape. The road ahead is one of augmentation and innovation, not obsolescence.


Exploring the Impact of Generative AI on Businesses

Generative AI, a recent technological marvel, is changing the way businesses operate, making them more efficient. Companies are increasingly adopting this technology to automate repetitive tasks, improve decision-making, and streamline their operations. In this article, we’ll dive into the evolving world of Generative AI, focusing on key trends, the skills in demand, practical use cases, and its implications for the future of work.

Understanding the Generative AI landscape

Our latest research reveals that businesses across industries are showing a growing interest in Generative AI. Not only are they embracing this technology, but many also plan to expand their teams to harness its potential. This indicates the growing significance of Generative AI in the business realm.

Key takeaways:

1. Popular AI models: Companies currently favor well-established AI models like ChatGPT, BERT, and Stable Diffusion due to their proven performance.

2. Shift toward wider applications: Businesses are moving away from individual Generative AI tools and are instead looking for skills related to broader applications.

3. Tech professionals lead the way: Tech professionals are taking the lead in acquiring essential AI skills for various industries. There’s a strong demand for expertise in working with Large Language Models (LLMs), prompt engineering, and object detection.

4. Rising search trends: Noteworthy trends in Generative AI-related searches encompass a wide range of topics including TensorFlow, AI Chatbots, Generative AI, Image Processing, PyTorch, Natural Language Processing (NLP), Bard, AI Content Creation, Gradio, Azure OpenAI, Convolutional Neural Network, Large Language Models (LLMs), Midjourney, and Prompt Engineering. These trends mirror the dynamic nature of the field and the rapidly evolving interests of professionals and businesses in Generative AI.

Emerging trends in Generative AI

The Generative AI landscape is dynamic, with emerging trends shaping its course:

Ethical considerations: As Generative AI gains prominence, ethical concerns related to its use become increasingly important. Businesses must navigate the ethical aspects of AI responsibly.

Regulatory challenges: Regulatory bodies are paying more attention to AI technologies. Staying compliant with evolving regulations is crucial for businesses adopting Generative AI.

Practical applications across industries

Generative AI has a multitude of applications across various domains, each with its unique potential:

1. Content generation: Generative AI helps in efficiently creating diverse content, including text, code, poetry, and music.

2. Image generation and manipulation: It can transform images artistically, alter facial features, or generate realistic images.

3. Video generation: Applications range from creating videos to summarizing lengthy videos automatically.

4. Data augmentation: Generative AI can synthesize data to improve machine learning models and translate images between different domains.

5. Chatbots and Virtual assistants: Conversational agents and personalized responses are revolutionizing customer support and information retrieval.

6. Healthcare: It aids in generating medical images and molecular structures for drug discovery.

7. Design and creativity: Generative AI fosters creativity in design, from logo creation to architectural proposals.

8. Game development: It assists in generating game content and dialogues for interactive storytelling.

9. Language translation and interpretation: Real-time language translation and sign language interpretation have become more accessible.

10. Security and privacy: Generative AI supports anonymization and secure password generation.

11. Art and creativity: It can generate digital art and music compositions, fostering creativity.

12. Autonomous systems: It enhances the decision-making of autonomous vehicles and supports motion planning for robots and drones.

13. Content recommendation: It personalizes content recommendations for articles, products, and media.

Conclusion

In conclusion, Generative AI is changing the way businesses operate and hire. To make the most of its potential, companies should look for individuals with a diverse range of AI skills. Our staffing services can assist in finding the right talent to maximize the benefits of Generative AI. As we embrace this journey, the blend of human creativity and AI innovation promises a more productive and creative era for businesses.

#GenerativeAI#ArtificialIntelligence#AIInnovation#MachineLearning#DeepLearning#AITrends#TechRevolution#AIApplications


How I Used ChatGPT To Figure Out How To Reduce Our Reliance On Plastic - Design Thinking

The idea behind this article sprang from my own curiosity, inspired by a conversation I had with actor Matthew Modine, most recently known for his role as Papa in “Stranger Things,” at a private event in Chicago. The other day, I was fondly recalling that conversation. We were at his friend’s event, and I had a moment with him in which he shared his dream of becoming an educator to the world on reducing the use of plastics, which are choking the oceans. It was fascinating to me to imagine that a superstar actor like Modine has completely different dreams, and that those dreams are altruistic, meant simply to benefit the planet and its people. I’ve never forgotten this conversation, because I imagined that he could be the modern-day, futuristic version of Jacques Cousteau. I even went as far as trying to arrange for him to speak at Talks at Google during Ocean Week, but alas, the stars didn’t align for us and I gave up.

So when I stumbled across chatGPT recently, I decided to experiment with the artificial intelligence tool to have a conversation around the mitigation of plastic use, a topic that I know very little about. As a content strategist, I know how to research and write my own Op-Ed editorials, personal essays, social tweets, responses and original social media content for myself and also my agency clients. I don’t consider using this tool to write about things I have expertise in. I personally feel that would be cheating and taking an unimaginative shortcut. You see, I love writing about topics that I’m curious or passionate about. But plastics…I needed a design thinking partner, an expert, and Mr. Modine of course wasn’t available for me to call and ask, and I don’t have any friends in ocean engineering, marine archeology or marine biology, to name a few spaces. I don’t even know what those titles mean even now! Soooooo why not tap this new friend of mine, chatGPT, whom I affectionately named Ms. Ariana Industry, to riff and have fun. Ms. Industry has delighted me and has proven to be right most of the time, and so what if she is a little rough around the edges, not culturally competent and slightly forgetful, because she can only remember things up until 2021?!

One of the things I discovered during this not-at-all exhaustive experiment is that it’s the question that will always drive and inspire me far more than my ability to answer it. I will leave that to the experts. And right now, it’s me, myself and chatGPT. So let’s go!

The format: I ask a question (prompt), and chatGPT provides answers and ideas, conversationally.

Prompted by JinJa Birkenbeuel, the content that follows was generated by chatGPT. Concept images were created with prompts written by JinJa Birkenbeuel and generated by DALL·E.

Plastic Pollution Solutions: A Journey from Personal to Collective Impact

JinJa: What can an everyday person do to mitigate their plastic use in the home? Asking because all the industry reports on the oceans are telling me that plastics are killing fish, mammals and other oceanic wildlife. It seems impossible for an everyday person to make a difference. Is it futile?

chatGPT: It is not futile for an everyday person to make a difference in reducing plastic use. While the problem of plastic pollution is a global one that requires action from governments and industries, individuals can still take steps to reduce their own plastic use and help raise awareness about the issue. Here are a few simple things that an everyday person can do to mitigate plastic use in the home:

Use reusable shopping bags: Instead of using disposable plastic bags, bring your own reusable shopping bags to the store. This includes bags for produce as well as bags for other items.

Avoid disposable plastic water bottles: Instead of purchasing disposable plastic water bottles, invest in a reusable water bottle and refill it as needed. This can also save you money in the long run.

Use a reusable coffee cup: Instead of using disposable coffee cups, invest in a reusable coffee cup and take it with you when you go out for coffee. Many coffee shops will even give you a discount for using your own cup.

Avoid disposable plastic cutlery: Instead of using disposable plastic cutlery, use reusable silverware. If you're eating out, consider bringing your own utensils or asking for no utensils to be included with your order.

Use a reusable container for leftovers: Instead of using disposable plastic containers for leftovers, use a reusable container or wrap.

Buy products with minimal packaging: When shopping, look for products with minimal packaging and choose those that use cardboard, paper, or glass packaging instead of plastic.

Recycle: Properly recycle plastic items that cannot be avoided, such as plastic packaging or containers.

By taking

JinJa interrupts chatGPT 🤣 Ok. But these are things I already know. What innovation can an everyday person do at home? What completely new process can be deployed at a person's home that I've not heard before? Something new and upside down.

chatGPT: Here are a few innovative ideas that an everyday person can implement at home to reduce plastic use:

Use a compostable trash bag: Instead of using traditional plastic trash bags, use a compostable trash bag made from biodegradable materials such as cornstarch or potato starch. These bags can be composted along with food waste, reducing the amount of plastic that ends up in landfills.

Use a reusable silicone food bag: Instead of using disposable plastic storage bags or plastic wrap, use a reusable silicone food bag. These bags are made from food-grade silicone and can be used over and over again to store food in the fridge or freezer.

Make your own cleaning products: Instead of purchasing cleaning products that come in plastic bottles, consider making your own cleaning products using natural ingredients such as vinegar, baking soda, and lemon juice. These ingredients can be mixed together to create a variety of cleaning solutions, and the empty containers can be reused or recycled.

Use a reusable straw: Instead of using disposable plastic straws, invest in a reusable straw made from a sustainable material such as stainless steel or bamboo. Many restaurants and coffee shops now offer reusable straws as an alternative to disposable ones.

Use a menstrual cup: Instead of using disposable plastic pads or tampons, consider using a menstrual cup. These cups are made from medical-grade silicone or rubber and can be worn for up to 12 hours at a time, reducing the amount of waste generated during menstruation.

By implementing these innovative ideas at home, an everyday person can make a significant impact in reducing plastic use and helping to protect the environment.

JinJa: Ok. Which country uses the most plastic and what policies need to change at the government level to significantly reduce the profit-driven need to use plastics?

chatGPT: According to data from the World Bank, the United States is the largest consumer of plastic globally, followed by China and India. However, it's important to note that per capita consumption of plastic varies significantly among countries. For example, while the United States and European countries have high per capita consumption of plastic, many developing countries have lower consumption levels due in part to lower incomes and less developed infrastructure.

To significantly reduce the profit-driven need for plastics, governments around the world need to implement a range of policies, including:

Implementing taxes or fees on plastic products: By increasing the cost of plastic products, governments can discourage their use and encourage the use of alternatives.

Banning or restricting certain plastic products: Governments can ban or restrict the use of certain types of plastic products, such as single-use plastic bags or straws, to reduce their use.

Promoting the use of alternatives to plastic: Governments can encourage the use of alternatives to plastic, such as paper, glass, or biodegradable materials, through regulations, subsidies, or other incentives.

Requiring recycled content in plastic products: Governments can require that a certain percentage of plastic products contain recycled material, which can help reduce the demand for new plastic and encourage the recycling of plastic waste.

Increasing funding for research and development: Governments can increase funding for research and development of alternatives to plastic and technologies for recycling plastic waste.

By implementing these policies, governments can help reduce the profit-driven need for plastics and encourage the use of more sustainable alternatives.

JinJa: Ok, but how can the global supply chain help mitigate the profit-center of plastics? What are the top three manufacturing systems that need to change that can have a huge impact on plastic use reduction?

chatGPT: There are several ways in which the global supply chain can help mitigate the profit-driven use of plastics and encourage the use of more sustainable alternatives. Here are three manufacturing systems that can have a significant impact on reducing plastic use:

Packaging: One of the largest uses of plastic is for packaging, particularly for food and consumer products. By redesigning packaging to use less plastic or alternatives to plastic, such as paper or biodegradable materials, manufacturers can significantly reduce their plastic use.

Product design: Manufacturers can also reduce plastic use by designing products that use less plastic or that can be easily disassembled and recycled. For example, electronic devices can be designed with modular components that can be easily removed and replaced, reducing the need for disposable plastic parts.

Recycling and circular systems: By implementing recycling and circular systems in their supply chain, manufacturers can reduce their reliance on new plastic and encourage the reuse and recycling of plastic waste. This could include partnering with recycling companies, using recycled materials in their products, or developing closed-loop systems that allow for the reuse of materials within the same system.

By making changes to these three manufacturing systems, manufacturers can significantly reduce their plastic use and help mitigate the profit-driven demand for plastics.

JinJa: Thank you. OK, can you please write an amazing click bait title that has great SEO for this that I can use on my website blog?

chatGPT: I apologize for the error in my previous response. Here is the correct information:

According to data from the Global Plastic Waste Management Market, the United States is one of the top countries in terms of plastic waste generation, along with China, Indonesia, and India. In order to significantly reduce the use of plastic, governments around the world need to implement policies that address the root causes of plastic pollution. This could include measures such as:

Implementing bans or taxes on single-use plastic products: Many governments have already implemented bans or taxes on disposable plastic items such as straws, bags, and cutlery in an effort to reduce plastic waste.

Increasing recycling and waste management infrastructure: Governments can invest in improving recycling and waste management infrastructure, including increasing the number of recycling facilities and improving access to recycling for individuals and businesses.

Promoting the use of sustainable alternatives: Governments can incentivize the use of sustainable alternatives to plastic, such as paper, glass, or biodegradable materials, through tax credits or grants.

Regulating the plastic industry: Governments can also regulate the plastic industry by setting standards for the production and disposal of plastic, including requirements for the use of recycled materials and limits on pollution.

Educating the public about the impact of plastic: Governments can work to educate the public about the environmental and health impacts of plastic, including the impacts on marine life and the importance of reducing plastic waste.

By implementing these kinds of policies, governments can work to significantly reduce the use of plastic and the negative impacts of plastic pollution.

“My design thinking conversation with CHATGPT on reducing our dependency on the use of plastics that was inspired by my conversation with actor Matthew Modine”

— JinJa Birkenbeuel

JinJa: OK, again, please write me an SEO-strong, click-bait title for my article based on this question:

chatGPT: "What can an everyday person do to mitigate their plastic use in the home? asking because all the industry reports on the oceans are telling me that plastics are killing fish and oceanic wildlife. It seems impossible for an everyday person to make a difference. Is it futile?"

"10 Simple Steps to Reduce Your Plastic Use and Save the Oceans: The Ultimate Guide for Everyday People"

"Why Every Day People Matter in the Fight Against Plastics: Proven Ways to Reduce Plastic Use at Home"

"Don't Feel Powerless Against the Plastic Crisis: Here's How You Can Make a Difference in Your Own Home"

"The Surprising Ways Every Day People Can Help Save the Oceans: A Practical Guide to Reducing Plastic Use at Home"

"From Reusable Bags to DIY Cleaning Products: How You Can Cut Out Plastic in Your Home and Help Save the Oceans"

"The Truth About the Plastic Crisis: Can Every Day People Really Make a Difference? The Answer May Surprise You"

JinJa: Earlier, I started the conversation with how can people reduce plastics at home, then we went on to talk about how companies can reduce their dependency and then expand to global supply chain. Can you craft this content so it starts with people, to companies to global efforts and make it one complete article? I'm trying to create a more sweeping article that goes from small to large so that everyone can see how it all can work together. Does this make sense?

chatGPT: Sure, I can help craft an article that starts with individual actions to reduce plastic use at home and expands to discuss how companies and governments can address plastic pollution at a larger scale. Here is an outline of the content:

I. Introduction

Brief overview of the problem of plastic pollution and its impacts on the environment and marine life

The role of everyday people in reducing plastic use and addressing the problem of plastic pollution

II. Individual actions to reduce plastic use at home

Using reusable shopping bags, water bottles, and coffee cups

Avoiding disposable plastic cutlery and containers

Buying products with minimal packaging and properly recycling plastic items

Making your own cleaning products and using a reusable silicone food bag or menstrual cup

III. Corporate actions to reduce plastic use

Implementing eco-friendly packaging and sourcing sustainable materials

Reducing single-use plastics and increasing recycling efforts in the supply chain

Investing in research and development of sustainable alternatives to plastic

IV. Government actions to reduce plastic use

Implementing bans or taxes on single-use plastic products

Increasing recycling and waste management infrastructure

Promoting the use of sustainable alternatives to plastic

Regulating the plastic industry and educating the public about the impacts of plastic

V. Conclusion

The importance of collective action from individuals, companies, and governments in addressing the problem of plastic pollution

The potential for reducing plastic use and protecting the environment through these efforts

I hope this outline is helpful! Let me know if you have any further questions or if you would like me to expand on any specific points.

JinJa: Thanks. Can you also integrate replacement suggestions for diapers, plastic wraps, auto parts, by product wastes, and include these in the relevant sections of the outline? Also, include a new section on mindset. How to change people's minds about plastic? How to get people to care more about the earth than speed, efficiency and convenience?

chatGPT: Certainly, here is an updated outline incorporating your additional requests:

I. Introduction

Brief overview of the problem of plastic pollution and its impacts on the environment and marine life

The role of everyday people in reducing plastic use and addressing the problem of plastic pollution

II. Individual actions to reduce plastic use at home

Using reusable shopping bags, water bottles, and coffee cups

Avoiding disposable plastic cutlery and containers

Buying products with minimal packaging and properly recycling plastic items

Making your own cleaning products and using a reusable silicone food bag or menstrual cup

Using cloth diapers or compostable diapers instead of disposable plastic diapers

Using beeswax wrap or silicone food covers instead of disposable plastic wrap

III. Corporate actions to reduce plastic use

Implementing eco-friendly packaging and sourcing sustainable materials

Reducing single-use plastics and increasing recycling efforts in the supply chain

Investing in research and development of sustainable alternatives to plastic

Finding sustainable alternatives for auto parts and byproduct wastes

IV. Government actions to reduce plastic use

Implementing bans or taxes on single-use plastic products

Increasing recycling and waste management infrastructure

Promoting the use of sustainable alternatives to plastic

Regulating the plastic industry and educating the public about the impacts of plastic

V. Changing mindsets about plastic

The role of education and awareness in encouraging people to care about the environment

Strategies for promoting sustainable behaviors, such as emphasizing

JinJa: yes continue please

chatGPT: Certainly, here is the rest of the updated outline:

V. Changing mindsets about plastic (continued)

The role of education and awareness in encouraging people to care about the environment

Strategies for promoting sustainable behaviors, such as emphasizing the long-term benefits, appealing to people's values, and making sustainability convenient and accessible

The importance of social norms and peer influence in shaping attitudes and behaviors

The role of media and public figures in raising awareness and influencing attitudes about plastic and the environment

VI. Conclusion

The importance of collective action from individuals, companies, and governments in addressing the problem of plastic pollution

The potential for reducing plastic use and protecting the environment through these efforts

The need for ongoing efforts to change mindsets and behaviors to ensure a more sustainable future for all.

I hope this updated outline is helpful! Let me know if you have any further questions or if you would like me to expand on any specific points.

JinJa: I took a class on design thinking led by IBM. Is there an exercise at the end that can be included in this article that can walk someone through a design thinking problem around this?

chatGPT: Yes, it is possible to include an exercise at the end of the article that utilizes design thinking principles to address the problem of plastic pollution. Here is an outline for such an exercise:

Empathize: Begin by understanding the needs and motivations of the stakeholders involved in the problem of plastic pollution, including everyday people, businesses, and governments. Consider their challenges, opportunities, and values.

Define: Clearly articulate the problem that needs to be addressed, using insights gathered from the empathy phase. This might involve identifying specific pain points or areas of conflict related to plastic use.

Ideate: Generate a wide range of ideas for potential solutions to the problem, using brainstorming or other creative techniques. Encourage open-ended thinking and consider a range of approaches, from small-scale interventions to larger-scale systemic changes.

Prototype: Select a few promising ideas and create prototypes or mock-ups to test and refine. These prototypes could be physical models, diagrams, or other visual representations of the ideas.

Test: Conduct user testing or other research to validate the prototypes and gather feedback. This might involve conducting interviews, surveys, or other forms of research to gather data and insights.

Refine: Based on the feedback gathered through testing, refine the prototypes and continue iterating until a viable solution is identified.

This design thinking exercise could be included at the end of the article as a way for readers to apply the principles of design thinking to address the problem of plastic pollution in their own lives or communities. You may also want to consider including resources or tips for conducting user research or prototyping as part of the exercise.

JinJa: Can you suggest a few titles for this revised article? Something that is inspiring and actionable. Please don't include things like "ten things you can do...." type of title.

chatGPT: Sure, here are a few potential titles for the revised article:

"Breaking the Plastic Habit: A Design Thinking Approach to Solving the Global Pollution Crisis"

"From Home to Global: A Comprehensive Guide to Reducing Plastic Use and Protecting the Environment"

"The Power of One: How Every Day People Can Make a Difference in the Fight Against Plastics"

"Change the World, One Plastic-Free Choice at a Time: A Practical Guide to Sustainable Living"

"Plastic Pollution Solutions: A Journey from Personal to Collective Impact"

"A Better Future Begins at Home: Simple Steps to Reduce Plastic Use and Protect the Environment"

I hope these suggestions are helpful! Let me know if you have any further questions or if you would like additional title ideas.

JinJa: How to combine in a title, the home to the business to the global though? This feels too much like focused on the person. This needs to feel like a circular thing.

chatGPT: Here are a few potential titles that incorporate the idea of a circular process starting from the home and expanding to the business and global level:

"The Plastic Reduction Circle: How Individuals, Businesses, and Governments Can Work Together to Create a Sustainable Future"

"From Home to Global: A Holistic Approach to Reducing Plastic Pollution"

"The Power of Collective Action: How Every Day People Can Help Create a Plastic-Free World"

"Closing the Plastic Loop: A Guide to Reducing Waste and Protecting the Environment at Home, in Business, and Globally"

"Connecting the Dots: How Small Changes at Home Can Have a Big Impact on the Global Plastic Pollution Crisis"

I hope these suggestions are helpful! Let me know if you have any further questions or if you would like additional title ideas.

JinJa: Can you include in the outline resources or tips for conducting user research or prototyping as part of the exercise? With URLs to free, ad-free exercises and printable sheets?

So this is where I started to get freaked out and a little bit spooked by how amazing this thing is.

chatGPT: Certainly, here is an updated outline incorporating resources and tips for conducting user research and prototyping as part of the design thinking exercise:

Empathize: Begin by understanding the needs and motivations of the stakeholders involved in the problem of plastic pollution, including everyday people, businesses, and governments. Consider their challenges, opportunities, and values.

Define: Clearly articulate the problem that needs to be addressed, using insights gathered from the empathy phase. This might involve identifying specific pain points or areas of conflict related to plastic use.

Ideate: Generate a wide range of ideas for potential solutions to the problem, using brainstorming or other creative techniques. Encourage open-ended thinking and consider a range of approaches, from small-scale interventions to larger-scale systemic changes.

Prototype: Select a few promising ideas and create prototypes or mock-ups to test and refine. These prototypes could be physical models, diagrams, or other visual representations of the ideas.

Test: Conduct user testing or other research to validate the prototypes and gather feedback. This might involve conducting interviews, surveys, or other forms of research to gather data and insights. Here are some resources for conducting user research:

The Stanford d.school's "How to Conduct User Research" guide: https://dschool.stanford.edu/resources/how-to-conduct-user-research

The Interaction Design Foundation's "User Research Methods" course: https://www.interaction-design.org/courses/user-research-methods

The Usability.gov website, which includes a variety of resources and tools for conducting user research: https://www.usability.gov/what-and-why/user-research.html

Refine: Based on the feedback gathered through testing, refine the prototypes and continue iterating until a viable solution is identified. Here are some resources for prototyping:

The Stanford d.school's "Prototyping" guide: https://dschool.stanford.edu/resources/prototyping

The Interaction Design Foundation's "Prototyping for Interaction Design" course: https://www.interaction-design.org/courses/prototyping-for-interaction-design

The InVision blog, which includes a variety of articles and resources on prototyping: https://www.invisionapp.com/blog/category/prototyping/

I hope this updated outline is helpful! Let me know if you have any further questions or if you would like additional resources or tips.

JinJa: Yes thank you so much!❤️

chatGPT: You're welcome! I'm glad I could help. Please don't hesitate to reach out if you have any other questions or if you need further assistance. I'm here to help.

And this, my friends, is one of the ways I use ChatGPT: as a research partner, to have a conversation about a topic I know nothing about, with a machine that doesn’t judge me for what an expert or MBA might consider a stupid question. - JinJa Birkenbeuel

❤️❤️❤️❤️❤️

JinJa Birkenbeuel is the CEO of Birk Creative. She is also an AI-obsessed writer and researcher, and the creator and host of The Honest Field Guide Podcast. Her rich content is read by global brands including Google, VaynerMedia, Meta, LinkedIn, Apple, DropBox, and Microsoft, as well as by curious and inspired people.

JinJa’s agency, Birk Creative, serves people and brands who believe in collaboration to create a seamless creative, content, and brand development process. She welcomes leaders who want to test innovative, culturally relevant approaches to marketing and messaging, and who want to identify and build relationships with untapped audiences in the knowledge-share economy.

Our agency generally works with new customers on a monthly retainer basis with a six-month minimum contract. If you're interested in working with us, please fill out our form with your project details and we will get back to you within 48 hours during normal business hours. Alternatively, you can reach us at (312) 834-7339. We look forward to discussing how Birk Creative can help your business thrive.

Not ready to hire us yet? Get to know us and our approach to branding and marketing by subscribing to our podcast, The Honest Field Guide. On the show, hosted by CEO JinJa Birkenbeuel, you can listen to intimate conversations with entrepreneurs and business owners and learn more about us. https://podcasts.apple.com/us/podcast/the-honest-field-guide/id1406059045


Text

Master the cutting-edge field of Generative AI with the Generative AI Online Course. Among the topics covered in this course are deep learning, neural networks, transformers, and advanced AI models, including ChatGPT, DALL·E, and BERT. Gain hands-on experience in AI model development, prompt engineering, and fine-tuning techniques.


Text

AI+ Prompt Engineer Level 1™: A Beginner’s Guide to Mastering AI Prompts

Introduction

The rise of artificial intelligence has changed how many industries work, creating new job roles and skill requirements. One such emerging field is prompt engineering, the discipline of writing inputs that get better results from AI models. The AI+ Prompt Engineer Level 1™ certification is designed for beginners looking to develop foundational skills in crafting effective AI prompts. This post explains what the certification entails, why it is valuable, and how you can get started.

What Is AI+ Prompt Engineer Level 1™?

AI+ Prompt Engineer Level 1™ is a beginner-level certification that introduces individuals to the fundamentals of prompt engineering. It focuses on teaching how to design, structure, and refine AI prompts to achieve the most accurate and useful responses from AI models like ChatGPT, Bard, and others.

The certification covers:

Understanding AI language models

Techniques for writing effective prompts

Enhancing AI responses through structured inputs

Ethical considerations in AI prompting

Hands-on exercises for real-world applications

Why Is This Certification Important?

With AI becoming a core component of businesses, the demand for professionals skilled in prompt engineering is increasing. Here are some reasons why this certification is valuable:

1. Enhances AI Communication Skills

AI models generate responses based on user prompts. Poorly written prompts can lead to vague or inaccurate results. This certification helps refine the way you interact with AI, ensuring precise and useful outputs.

2. Boosts Career Opportunities

Many companies are integrating AI-powered chatbots, content generators, and automation tools into their workflows. Being certified as an AI+ Prompt Engineer Level 1™ can give you an edge in AI-related roles in industries such as marketing, customer service, and content creation.

3. Builds a Strong AI Foundation

For those interested in AI and machine learning, this certification serves as an excellent starting point. It helps learners develop a structured approach to interacting with AI before moving on to more advanced AI applications.

Key Skills You Will Learn

The AI+ Prompt Engineer Level 1™ certification focuses on practical skills, including:

Crafting Clear and Concise Prompts: Understanding how wording affects AI responses

Using Context and Constraints: Providing AI with structured information for better accuracy

Optimizing AI Outputs: Refining responses through iteration and follow-up prompts

Debugging AI Responses: Identifying why AI generates incorrect or biased answers and correcting them
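To make the first three skills concrete, here is a minimal Python sketch of how a prompt might be assembled from a task, context, and constraints, and then refined with a follow-up. It is not part of the certification material: the function names `build_prompt` and `follow_up`, the "Task / Context / Constraints" layout, and the customer-service example are all assumptions chosen for illustration. The code only builds text and does not call any AI service.

```python
def build_prompt(task, context=None, constraints=None):
    """Assemble a structured prompt (hypothetical helper, for illustration only)."""
    parts = [f"Task: {task.strip()}"]
    if context:
        # Context tells the model who the audience is or what situation applies.
        parts.append(f"Context: {context.strip()}")
    if constraints:
        # One constraint per line so each requirement is easy to spot.
        lines = "\n".join(f"- {c.strip()}" for c in constraints)
        parts.append(f"Constraints:\n{lines}")
    return "\n\n".join(parts)


def follow_up(previous_prompt, feedback):
    """Build a follow-up prompt that restates the request and adds feedback."""
    return f"{previous_prompt}\n\nRevision request: {feedback.strip()}"


# A vague prompt: only a task, with no context or constraints.
vague = build_prompt("Write a reply to a customer.")

# A more specific prompt for the same job.
specific = build_prompt(
    "Write a reply to a customer asking why their order is late.",
    context="The order shipped two days behind schedule because of a warehouse delay.",
    constraints=["Keep it under 100 words", "Apologize once", "Offer a tracking link"],
)

# Iteration: ask for a refinement instead of starting over.
revised = follow_up(specific, "Make the tone warmer and less formal.")

if __name__ == "__main__":
    print(revised)
```

Comparing the text of `vague` and `specific` shows what the model has to work with in each case, and `revised` shows one simple way to iterate on a prompt rather than rewrite it from scratch.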

How to Get Started

If you're interested in becoming an AI+ Prompt Engineer Level 1™, follow these steps:

Enroll in a Certification Program – Look for accredited courses offering this certification.

Practice Regularly – Experiment with AI tools like ChatGPT, Bard, and Claude to refine your prompting skills.

Engage with the AI Community – Join forums and discussions on platforms like Reddit and LinkedIn.

Apply Your Skills in Real Projects – Use your knowledge to automate tasks, generate content, or improve chatbot performance.

Conclusion

The AI+ Prompt Engineer Level 1™ certification is a great starting point for anyone looking to master AI prompting. As AI continues to evolve, the ability to communicate effectively with these models will become increasingly valuable. Whether you're an aspiring AI professional, a content creator, or someone looking to leverage AI in business, this certification can set you on the right path.
