#chat gpt prompt engineering course


Text

Earn Money with Prompt Engineering | Unique way to earn money online..

Prompt Engineering: Designing Effective Prompts for Online Success | In the rapidly evolving landscape of online communication, Prompt Engineering has emerged as an important skill, paving the way to increase engagement, deliver valuable information, and even monetize digital interactions. This article takes an in-depth look at the world of Prompt Engineering, its importance, accessible ways to learn…


#advanced prompt engineering#ai prompt engineering#ai prompt engineering certification#ai prompt engineering course#ai prompt engineering jobs#an information-theoretic approach to prompt engineering without ground truth labels#andrew ng prompt engineering#awesome prompt engineering#brex prompt engineering guide#chat gpt prompt engineering#chat gpt prompt engineering course#chat gpt prompt engineering jobs#chatgpt prompt engineering#chatgpt prompt engineering course#chatgpt prompt engineering for developers#chatgpt prompt engineering guide#clip prompt engineering#cohere prompt engineering#deep learning ai prompt engineering#deeplearning ai prompt engineering#deeplearning.ai prompt engineering#entry level prompt engineering jobs#free prompt engineering course#github copilot prompt engineering#github prompt engineering#github prompt engineering guide#gpt 3 prompt engineering#gpt prompt engineering#gpt-3 prompt engineering#gpt-4 prompt engineering

0 notes

Video

youtube

What is Prompt Engineer - Prompt Engineer Courses by Open AI - AI - Chat...

#youtube#What is Prompt Engineer - Prompt Engineer Courses by Open AI - AI - Chat GPT - No Coding prompt promptengineering chatgpt openai ai engine

0 notes

Text

Unlock the Future: Full AI Course 2025 – Master ChatGPT, Midjourney, Gemini & Firefly

Imagine being fluent in the world of AI—effortlessly using ChatGPT, creating stunning visuals with Midjourney and Firefly, and harnessing the powerful features of Google Gemini. That’s not just a futuristic dream anymore. With the Full AI Course 2025: ChatGPT, Midjourney, Gemini, Firefly, you can gain hands-on mastery over the most popular AI tools in one place.

AI isn’t just for tech experts now—it's for content creators, marketers, designers, business professionals, and anyone eager to work smarter, faster, and more creatively.

Let’s explore why this course is the perfect launchpad into the exciting world of artificial intelligence in 2025.

Why AI Skills Are a Must-Have in 2025

AI is no longer just a buzzword. It’s changing the way we work, learn, and create.

Writers are using ChatGPT to write articles, emails, and code.

Artists are turning to Midjourney and Firefly for powerful image generation.

Businesses are leveraging Gemini for smarter data analysis and automation.

And this is just the beginning.

Whether you want to streamline your workflows, level up your creativity, or gain a career edge, learning AI tools is becoming non-negotiable. But juggling different tools, platforms, and learning curves can be overwhelming.

That’s where the Full AI Course 2025 makes things easy.

What Makes This AI Course Stand Out?

This isn’t just another online tutorial or crash course. It’s a comprehensive, step-by-step guide crafted for learners at every level—beginner to advanced.

Here’s what you get:

✅ Learn ChatGPT Like a Pro

Understand prompt engineering, custom GPTs, and how to use ChatGPT for content writing, coding, business automation, and personal productivity. You’ll go beyond basic chat responses and dive into building your own workflows and strategies.

✅ Explore Midjourney & Firefly for AI Art & Design

Master how to turn text into breathtaking visuals with tools like Midjourney and Adobe Firefly. Whether you’re designing posters, social content, or client visuals, these tools give your creativity a serious boost.

✅ Get Hands-On with Google Gemini

Gemini is one of the most powerful new players in the AI space. In this course, you’ll learn how to use Gemini for research, document creation, and professional tasks. It’s like having a Google-powered assistant in your pocket.

✅ One Platform, All Tools

You won’t need to jump from one tutorial to another. Everything is taught in one place, by instructors who’ve carefully curated content to help you get real-world results.

No fluff, no jargon—just practical skills taught in an engaging and easy-to-understand way.

Who Is This AI Course For?

This course is designed for anyone who wants to work smarter with AI in 2025 and beyond:

Students wanting to stay ahead in the tech-driven world

Freelancers and content creators who want to boost productivity and creativity

Digital marketers seeking faster workflows and campaign automation

Entrepreneurs and business owners looking to cut down time and scale smarter

Designers exploring AI-generated visual storytelling

And even non-techies who are simply curious about AI!

You don’t need to be a coder or tech expert. All you need is curiosity and a willingness to learn.

Real-World Projects & Tools You’ll Work With

The best part of this course? It’s not theory-heavy.

You’ll be applying what you learn in real projects like:

Writing viral blog posts using ChatGPT

Creating brand logos and illustrations with Midjourney

Building custom GPTs for business automation

Researching and summarizing topics with Gemini

Designing social media visuals with Adobe Firefly

Each module includes hands-on practice, downloadable resources, and real-world examples so you actually retain and apply what you learn.

Future-Proof Your Career in the Age of AI

Whether you’re preparing for the job market, upgrading your freelance skills, or simply want to be more effective at work—this course helps you future-proof your skills.

In 2025 and beyond, AI literacy is just as important as knowing how to use email or spreadsheets. The earlier you start, the bigger the advantage.

Why We Recommend the Full AI Course 2025

At Korshub, we’re always on the lookout for standout learning experiences. The Full AI Course 2025: ChatGPT, Midjourney, Gemini, Firefly checks every box:

✅ Beginner to advanced training

✅ Project-based learning

✅ Covers multiple AI tools in one course

✅ Expert guidance & community support

✅ Perfect for creators, marketers, and entrepreneurs

It’s more than just a course—it’s a toolbox that empowers you to do more, faster, and better.

Final Thoughts: This Is the Course the Future Needs

The AI landscape is growing fast. Keeping up can feel impossible—unless you have the right guide. This course is your shortcut to learning, mastering, and staying ahead in the AI revolution.

You’ll walk away with:

Practical skills in ChatGPT, Midjourney, Gemini, and Firefly

Confidence to apply AI at work, school, or business

The creative power to turn ideas into action

So, if you’ve been curious about what AI can do for you, this is your moment to jump in.

👉 Start learning with the Full AI Course 2025: ChatGPT, Midjourney, Gemini, Firefly and unlock your potential today.

0 notes

Text

MB-7005: Create and Manage Journeys with Dynamics 365 Customer – Your Path to Personalized Engagement

In today’s competitive market, businesses must go beyond traditional customer relationship management. Creating personalized and consistent experiences across the entire customer journey is essential. That’s where Microsoft’s MB-7005: Create and Manage Journeys with Dynamics 365 Customer course comes into play. Offered by Nanfor, this specialized training equips professionals with the skills to plan, execute, and manage effective customer journeys using Microsoft’s powerful Dynamics 365 platform.

What Is the MB-7005 Course?

MB-7005 is a targeted course that focuses on helping professionals create and manage customer journeys using Dynamics 365 Customer Insights - Journeys (formerly Dynamics 365 Marketing). This powerful tool enables organizations to automate and personalize marketing communications, drive engagement, and build long-lasting relationships with customers.

Key Learning Objectives

By enrolling in the MB-7005 course, learners will:

Understand the core concepts of customer journeys, including trigger-based actions and personalized content delivery.

Learn to create multi-channel campaigns using email, SMS, push notifications, and social media.

Gain insight into segmentation, lead scoring, and real-time tracking of customer behaviors.

Master the use of Customer Insights to unify data and deliver timely and relevant messages.

Learn how to evaluate campaign performance using built-in analytics and reporting tools.

This course is ideal for marketing professionals, CRM administrators, and business analysts looking to enhance their ability to design and implement effective customer experiences.

Why Choose Dynamics 365 for Customer Journeys?

Dynamics 365 Customer provides a unified platform that connects sales, marketing, and customer service. With features like AI-driven insights, automation, and integration with Microsoft tools, it’s the ideal solution for organizations seeking smarter, data-driven engagement.

By mastering this platform through MB-7005, professionals can:

Increase customer satisfaction and loyalty through tailored communication.

Improve campaign ROI with advanced targeting and analytics.

Streamline operations by integrating marketing efforts with sales and customer service.

Learn with Nanfor – A Trusted Microsoft Partner

As a Microsoft-certified learning provider, Nanfor delivers expert-led training tailored to industry needs. Their MB-7005 course offers hands-on labs, up-to-date content, and support from experienced instructors, ensuring learners gain real-world skills.

Start Your Journey Today

If you're looking to enhance your customer engagement strategy, MB-7005: Create and Manage Journeys with Dynamics 365 Customer is your next step. Visit Nanfor’s course page to enroll today and transform how you connect with your customers. For more info, visit: Prompt engineering con Chat GPT de OpenAI y Microsoft Copilot

0 notes

Video

youtube

Chat GPT prompt Engineering in Hindi complete course // #Part-1_INTRODUC...

0 notes

Text

Case Studies in Prompt Engineering

As Artificial Intelligence (AI) has advanced, prompt engineering has become a crucial part of improving the capabilities of language models. This article explores real-world case studies that show how prompt-based language models are put into practice in several fields. These success stories demonstrate the efficacy of prompt engineering and offer useful insights for anybody interested in pursuing this subject.

Obtaining an AI certification offers significant opportunities for those aiming to establish a strong presence in this industry. Enrolling in a prompt engineer course and receiving a certification can equip individuals with the essential skills and knowledge.

Individuals who aspire to become prompt engineers should explore the courses and certifications offered by reputable institutions such as the Blockchain Council. Their prompt engineering course covers the fundamental principles of prompt engineering and draws on knowledge from the broader subject of artificial intelligence. Through this training, individuals can begin making significant contributions to prompt engineering in artificial intelligence.

Case Studies on Prompt Engineering: Successful Stories

This section delves into real-world applications that demonstrate the significant influence of prompt-based language models. These case studies demonstrate the efficacy of prompt engineering in various fields, including customer support, creative writing, and multilingual customer service.

Chatbots for Customer Support

Problem Statement: The organization aims to enhance its customer support system by implementing a chatbot to manage consumer inquiries and provide precise responses effectively.

Prompt Engineering Approach: The team uses a language model that has been optimized through the OpenAI GPT-3 API and is designed explicitly for chat-based interactions. The model is trained on a dataset that includes historical client queries and their corresponding responses. Tailored prompts cover different kinds of queries, such as product questions, technical support, and order status updates.

Outcome: The chatbot has shown to be successful in efficiently handling a wide range of consumer inquiries and delivering responses that are relevant to the context. By continuously making modifications and analyzing user feedback, prompt engineers boost the accuracy and responsiveness of the model. The result is a significant decrease in customer response time, enhancing overall customer satisfaction.
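The idea of tailored prompts per query type can be sketched in a few lines. This is only an illustration, not the organization's actual system: the template wording, the `KEYWORDS` router, and the function names `classify` and `build_prompt` are all assumptions, and no model or API is called.

```python
from string import Template

# Hypothetical prompt templates, one per query type named in the case study.
PROMPT_TEMPLATES = {
    "product_question": Template(
        "You are a support agent. Answer this product question concisely.\n"
        "Question: $query"
    ),
    "technical_support": Template(
        "You are a support agent. Give numbered troubleshooting steps.\n"
        "Problem: $query"
    ),
    "order_status": Template(
        "You are a support agent. Ask for the order number if it is missing.\n"
        "Request: $query"
    ),
}

# Hypothetical keyword router; a real system would likely use a classifier.
KEYWORDS = {
    "order_status": ("order", "shipping", "delivery", "tracking"),
    "technical_support": ("error", "crash", "install", "not working"),
}


def classify(query: str) -> str:
    """Pick a query type by keyword match, defaulting to product questions."""
    text = query.lower()
    for category, words in KEYWORDS.items():
        if any(word in text for word in words):
            return category
    return "product_question"


def build_prompt(query: str) -> str:
    """Fill the template that matches the query type."""
    return PROMPT_TEMPLATES[classify(query)].substitute(query=query)


if __name__ == "__main__":
    print(build_prompt("Where is my order?"))
```

Keeping the templates in one dictionary makes it easy to revise a single query type after reviewing user feedback, which is the kind of continuous modification the outcome describes.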

Creative Writing Assistant

Problem Statement: A creative writing platform assists writers by providing contextually relevant storylines, character development, and descriptive writing suggestions.

Prompt Engineering Approach: Using the Hugging Face Transformers library, developers optimize a language model by fine-tuning it with a dataset consisting of creative writing samples. The model is designed to produce innovative suggestions across various writing styles and genres. Writers engage with the model by using personalized prompts to obtain inspiration and ideas for their writing projects.

Outcome: The creative writing assistant proves to be an excellent tool for writers seeking inspiration. The model's varied and innovative suggestions help writers overcome creative blocks and explore fresh directions in their writing. Writers report heightened productivity and creativity when using the creative writing assistant.

Multilingual Customer Service

Problem Statement: A worldwide e-commerce behemoth seeks to improve its customer service by offering multilingual assistance to individuals from various linguistic backgrounds.

Prompt Engineering Approach: Using the Sentence Transformers library, prompt engineers refine a multilingual language model. The model is trained on a dataset that includes consumer questions in several languages. Custom prompts are created to address inquiries in several languages, guaranteeing that the model can deliver contextually suitable responses in the user's preferred language.

Outcome: The multilingual customer service language model successfully serves consumers from diverse linguistic backgrounds. The system efficiently manages inquiries in several languages, providing answers that take cultural nuances and preferences into account. Users value the customized assistance, leading to enhanced customer satisfaction and retention.

These case studies demonstrate the versatility and efficiency of prompt engineering in various applications. Customer support chatbots, creative writing assistants, and multilingual customer service are just a few examples of the practical benefits that prompt engineering can offer.

Product Development

Problem Statement: The software development team needed help collecting comprehensive user requirements for their product development. The traditional approaches frequently yielded a large amount of complex data that took time to analyze and prioritize, impeding the efficient development process. A more efficient method was needed to extract user requirements into practical insights and feature concepts.

Prompt Engineering Method: The team adopted prompt engineering as a strategic solution to these challenges. They used incremental prompting, dividing the user requirements into smaller, more manageable tasks and progressively refining the prompts to steer the AI model toward precise and detailed insights. The prompts were written to elicit fine-grained information, producing a more focused and organized dataset for the language model.

Outcome: Incremental prompting had a marked effect on the software development process. It helped the AI model produce actionable insights with greater accuracy. By decomposing user requirements into smaller tasks, the team could prioritize product development more effectively. The organized dataset gave a more focused understanding of user needs, which led to features that targeted those needs.
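Incremental prompting as described in this case study can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `incremental_prompts`, the prompt wording, and the example subtasks are hypothetical, and the sketch only builds the prompts without sending them to any model.

```python
def incremental_prompts(requirement: str, subtasks: list[str]) -> list[str]:
    """Build one prompt per subtask; each prompt lists the earlier steps as context."""
    prompts = []
    for i, subtask in enumerate(subtasks, start=1):
        # Steps before the current one, or "none" for the first prompt.
        earlier = "; ".join(subtasks[: i - 1]) or "none"
        prompts.append(
            f"Requirement: {requirement}\n"
            f"Steps already covered: {earlier}\n"
            f"Step {i} of {len(subtasks)}: {subtask}\n"
            "Answer only this step, in at most three bullet points."
        )
    return prompts


if __name__ == "__main__":
    steps = ["List pain points", "Rank by impact", "Propose features"]
    for prompt in incremental_prompts("Users want faster checkout", steps):
        print(prompt, end="\n\n")
```

Each prompt stays small and narrowly scoped, so the answers can be collected step by step into the kind of organized dataset the team used for prioritization.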

In general, prompt engineering significantly improved the efficiency of the product development lifecycle, offering a streamlined and effective approach for gathering and executing customer needs.

Conclusion

To summarize, the favorable outcomes of these case studies emphasize the flexibility and versatility of prompt engineering across many applications. Whether in customer support chatbots, creative writing assistants, multilingual customer service, or, as seen here, product development, prompt engineering continues to demonstrate its value in optimizing language models for specific use cases. The structured and tailored approaches in these case studies also highlight the importance of prompt engineering jobs and prompt engineering courses in equipping individuals with the necessary skills.

0 notes

Text

First question: I'm not 100% certain what you're asking here. They are limited for useful purposes because there are only so many uses for generating images or text. But there are multiple LLMs trained on different data sets with different potential uses.

One non-llm example that circulates a lot on tumblr is that AI image recognition tool that was trained to tell bear claws apart from croissants, but that researchers were able to adapt to recognize different kinds of cancer cells. That's image recognition, not an LLM, but it demonstrates that yeah different training sets can be used for different purposes with specific training, and general training sets can be refined for specific purposes.

Second question: A lot of the information about the energy required for LLMs is tremendously misleading - I've seen a post circulating on tumblr claiming that every ChatGPT prompt was like pouring a bottle of fresh water into the ground just to cool the servers and that is a serious misstatement of how these things work.

Energy requirements for LLMs are frontloaded in training the LLM; they require a lot of energy to train but comparatively little energy to use.

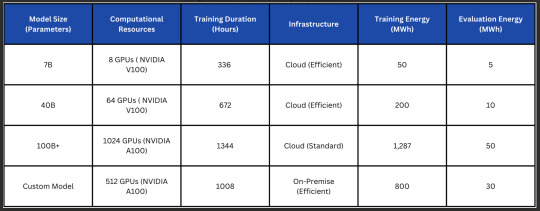

This chart breaks down some of the energy requirements for training an LLM:

50-800-ish Megawatt Hours for training. Let's take the high middle and consider 800MWh for our question.

800MWh is 800,000 KWh. The average US household uses about 10,500 KWh per year. You divide those two and you get about 76, so if we're rounding up you could train the custom model for the same amount of energy that it would take to run 80 US homes for a year. That is certainly not a *small* amount of energy, but it is also not an *enormous and catastrophic* amount of energy.

GPT-4 took about 50 Gigawatt Hours to train, which is a big jump up from the models on this chart. Doing the same math as above, it works out to powering about five thousand homes for a year. That is, again, not a *small* amount of power (apparently about twice as much as the Dallas Cowboys' Stadium uses in a year).
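Since these unit conversions are easy to get wrong by a factor of ten, here is a short check of the household comparison. It takes the post's figures as given (800 MWh, 50 GWh, and roughly 10,500 kWh per US household per year); the variable and function names are just for illustration.

```python
KWH_PER_MWH = 1_000
KWH_PER_GWH = 1_000_000
HOUSEHOLD_KWH_PER_YEAR = 10_500  # figure used in the post, treated as an assumption


def household_years(kwh: float) -> float:
    """How many US households the given energy would power for one year."""
    return kwh / HOUSEHOLD_KWH_PER_YEAR


custom_model_kwh = 800 * KWH_PER_MWH  # 800 MWh = 800,000 kWh
gpt4_kwh = 50 * KWH_PER_GWH           # 50 GWh = 50,000,000 kWh

print(round(household_years(custom_model_kwh)))  # 76
print(round(household_years(gpt4_kwh)))          # 4762
```

So the 800 MWh model comes to roughly 76 household-years, and GPT-4 to a bit under five thousand.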

But training isn't the only cost of these models; sending queries uses power too, and using a GPT-backed search uses up to 68 times more energy than a Google search does, at 0.0029 KWh per query.

Now. This is *not trivial.* I don't want to dismiss this as something that does not matter. The climate cost of the AI revolution is not something to write off. If we were to multiply the carbon footprint of Google by 70, that would be a bad thing. My attitude mirrors that of Wim Vanderbauwhede, the source of the last two links, who I am quoting here:

For many tasks an LLM or other large-scale model is at best total overkill, and at worst unsuitable, and a conventional Machine Learning or Information Retrieval technique will be orders of magnitude more energy efficient and cost effective to run. Especially in the context of chat-based search, the energy consumption could be reduced significantly through generalised forms of caching, replacement of the LLM with a rule-based engines for much-posed queries, or of course simply defaulting to non-AI search.

*however,* that said, the energy cost of LLMs as a whole is not as ridiculously, overwhelmingly high as I've seen some people claim that it is. It *does* consume a lot of power, we should reduce the amount of power it uses, and we can do that by using it less and limiting its use to appropriate contexts (which is not what is currently happening, largely because the AI hype machine is out promoting the use of AI in tons of inappropriate contexts).
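The caching and rule-based shortcuts mentioned in the Vanderbauwhede quote can be sketched like this. It is a toy illustration, not anything from the quoted source: the `RULES` table, the `make_answerer` helper, and the stand-in `llm` callable are all hypothetical, and the point is only that frequent or repeated queries never reach the expensive model.

```python
from typing import Callable

# Hypothetical canned answers for much-posed queries.
RULES = {
    "opening hours": "We are open 9:00-17:00, Monday to Friday.",
}


def make_answerer(llm: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap an expensive model call with a rule lookup and a cache."""
    cache: dict[str, str] = {}

    def answer(query: str) -> str:
        # Normalize case and whitespace so near-identical queries share a key.
        key = " ".join(query.lower().split())
        for phrase, reply in RULES.items():
            if phrase in key:
                return reply  # rule hit: no model call at all
        if key not in cache:
            cache[key] = llm(query)  # only a cache miss costs a model call
        return cache[key]

    return answer


if __name__ == "__main__":
    calls = []

    def fake_llm(q: str) -> str:
        calls.append(q)
        return f"(model answer to: {q})"

    ask = make_answerer(fake_llm)
    ask("What are your opening hours?")
    ask("Explain LLM energy use")
    ask("explain  llm energy use")
    print(len(calls))  # 1
```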

Ditto for water use; datacenters do use water. It is a significant amount of water and they are not terribly transparent about their usage. But the amount required for "AI" isn't going to be magically higher because it's AI, it's going to be related to power use and the efficiency of the systems; 50GWh for training GPT-4 will require just as much cooling as 50GWh for streaming Netflix (which uses over 400k GWh annually). The way to improve that is to make these systems (all of them) more efficient in their power use, which is already a goal for datacenters.

And while LLMs do have an impact on the GPU market my experience is that it has been minimal compared to bullshit like bitcoin mining, which actually does use absurd amounts of power.

(Quick comparison here, it's frequently said that ChatGPT uses over half a million kilowatt hours of power a day; that seems like a big number but it's half a gigawatt hour. Multiply that by 365 and you've got 182.5 GWh. It's estimated that Bitcoin uses in the neighborhood of 110 *Terawatt* hours annually, which is 110,000 GWh, which is about 600 times more energy than ChatGPT with *significantly* fewer people using Bitcoin and drastically less utility worldwide.)
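The same comparison written out as arithmetic, using the figures quoted in the post (500,000 kWh per day for ChatGPT and 110 TWh per year for Bitcoin) as assumptions rather than measured values:

```python
KWH_PER_GWH = 1_000_000
GWH_PER_TWH = 1_000

chatgpt_kwh_per_day = 500_000  # "half a million kilowatt hours" per day
chatgpt_gwh_per_year = chatgpt_kwh_per_day * 365 / KWH_PER_GWH

bitcoin_twh_per_year = 110
bitcoin_gwh_per_year = bitcoin_twh_per_year * GWH_PER_TWH

ratio = bitcoin_gwh_per_year / chatgpt_gwh_per_year

print(chatgpt_gwh_per_year)  # 182.5
print(bitcoin_gwh_per_year)  # 110000
print(round(ratio))          # 603
```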

A lot of computer manufacturers are actually currently developing specific ML processors (these are being offered in things like the Microsoft copilot PCs and in the Intel sapphire processors) so reliance on GPUs for AI is already receding (these processors should theoretically also be more efficient for AI than GPUs are, reducing energy use).

So I guess my roundabout point is that we're in the middle of a hype bubble that I think is going to pop soon but also hasn't been quite as drastic as a lot of people are claiming; it doesn't use unspeakable amounts of power or water compared to streaming video or running a football stadium and it is in the process of becoming more energy efficient (something the developers want! high energy costs are not an inextricable function of LLMs the way that they are with proof-of-work cryptocurrencies).

I don't know about GPUs, but I know GPU manufacturers are attempting to increase production to meet LLM demand, so I suspect their impact on the availability of GPUs is also going to drop off in time.

Author's note here: I am dyslexic and dyscalculic and fucking about with kilowatt hours, megawatt hours, gigawatt hours, and terawatt hours has likely meant I've missed a zero somewhere along the line. I tried to check my work as best I could but there's likely a mistake somewhere in there that is pretty significant; if you find that mistake with my power conversion math PLEASE CORRECT ME because it is not my intent here to be misleading, I just literally need to count the zeros with the tip of a pencil and hope that I put the correct number of zeroes into the converter tools.

I don't care about data scraping from ao3 (or tbh from anywhere) because it's fair use to take preexisting works and transform them (including by using them to train an LLM), which is the entire legal basis of how the OTW functions.

3K notes


Text

CHAT GPT(PROMPT ENGINEER)

0 notes

Text

6 New ChatGPT Features Include Prompt Examples & File Uploads

OpenAI, the pioneering force behind the revolutionary language model ChatGPT, is once again redefining the landscape of artificial intelligence. With an unwavering commitment to innovation, OpenAI has introduced a suite of six new features in the latest iteration of ChatGPT, each designed to take user interaction to new heights.

While these features promise to be game changers in their own right, they also usher in a new era of exploration. A realm in which the AI prompt engineer takes center stage, orchestrating dialogues that transcend the mundane and venture into the extraordinary. The path toward mastering AI communication is illuminated by the potential of ChatGPT certification.

The commitment of OpenAI to user experience goes beyond the realms of technology. It is about empowering individuals to fully utilize the capabilities of AI. As ChatGPT evolves, so does the role of the prompt engineer, an art that can be mastered through a dedicated prompt engineer course and prompt engineer certification.

6 New ChatGPT Features

Prompt Examples for Intuitive Conversation Initiation

Navigating the world of AI-powered conversations has never been easier. ChatGPT removes barriers for users who may have been intimidated by a blank canvas by introducing prompt examples. This innovative feature provides sample prompts at the start, acting as a guiding light to kickstart engaging conversations with the AI.

The incorporation of prompt examples enhances the learning journey for someone aspiring to become an expert AI prompt engineer. This feature not only simplifies the start of conversations, but it also serves as a valuable learning tool. As you progress into the realm of AI language models, enhancing your skills through platforms such as Blockchain Council's prompt engineer course and prompt engineering certification becomes a strategic step toward mastering the complexities of AI interaction.

Suggested Responses to Enrich Dialogue

ChatGPT elevates user interactions by including "suggested replies." This feature enhances dialogues by providing contextually relevant options for continuing the conversation. Users can dive deeper into discussions with a single click, adding a dynamic flair to their interactions with the AI model.

The incorporation of suggested responses adds an extra layer of intrigue to the journey of becoming a proficient prompt engineer. This feature not only improves conversational flow but also allows for a deeper exploration of AI capabilities. And, as you navigate the landscape of AI-powered communication, keep in mind that honing your skills with resources like Blockchain Council's prompt engineer course and prompt engineering certification is an investment with exponential returns.

GPT-4 Transition: Simplified Experience

ChatGPT is streamlining user experiences in sync with its latest model, GPT-4. GPT-4 becomes the default for Plus users, providing an upgraded experience with a message limit of 50 every three hours. Say goodbye to reverting to GPT-3.5 when starting new chats, saving valuable time and enhancing seamless interactions.

Being a proficient AI prompt engineer means staying aware of advancements such as these. With each upgrade, new horizons of engagement and innovation emerge. As you navigate this realm of possibilities, consider expanding your knowledge with specialized resources such as Blockchain Council's prompt engineer course and prompt engineering certification, where you'll learn the intricacies of AI and make your journey truly transformative.

Improving Data Analysis: File Uploads in Code Interpreter

ChatGPT's Code Interpreter beta now gives Plus users file upload capabilities, revealing new dimensions of utility. Users can upload up to ten files, allowing for in-depth marketing analysis and efficient data extraction. A game changer for professionals seeking profound insights into their fields. Whether you're delving into intricate marketing insights or streamlining data extraction, this innovative addition demonstrates ChatGPT's commitment to enhancing your analytical journey.

A skilled AI prompt engineer is at the forefront of innovation in the ever-changing AI landscape. As you explore the capabilities of advanced tools like Code Interpreter, consider expanding your knowledge with resources like Blockchain Council's prompt engineer certification and ChatGPT certification. Dive into the complexities of AI and blockchain, equipping yourself to navigate the digital realm with confidence and mastery.

Extended Log-In and Improved Accessibility: User-Friendly Updates

Say hello to better log-in experiences! Users can now stay logged in for longer periods of time, replacing the previous two-week log-out policy. The new log-in page provides a more user-friendly interface, making interactions smoother and more efficient.

Being a skilled AI prompt engineer distinguishes you in the dynamic world of AI and technology. As you enjoy the enhanced features of ChatGPT, consider expanding your knowledge with Blockchain Council's enlightening prompt engineer certification and ChatGPT certification. Unlock the world of blockchain and AI, arming yourself with the tools and knowledge you need to thrive in the digital age with confidence and competence.

Keyboard Shortcuts for Accelerated Workflows

Keyboard shortcuts bring efficiency and innovation together. Shortcuts like (Ctrl) + Shift + C help to speed up tasks like copying a code block. These shortcuts can revolutionize how professionals navigate and interact with ChatGPT by increasing productivity and accessibility.

Consider honing your skills as an expert AI prompt engineer as you embark on this journey of optimized interactions. The Blockchain Council's prompt engineer certification and ChatGPT certification are waiting for you, equipping you with the prowess of blockchain technology and AI. Learn the essential skills and insights needed to succeed in today's dynamic digital landscape. Your enhanced abilities will not only amplify your professional prowess, but will also set you on a path of continuous growth and success.

Conclusion: Paving the Way for Better AI Interaction

The addition of prompt examples, suggested responses, a seamless transition to GPT-4, file uploads, extended log-in durations, and keyboard shortcuts collectively redefine how we interact with AI models. These enhancements not only streamline interactions but also allow users to delve deeper, navigate more easily, and engage dynamically.

As the horizon of AI interaction broadens, it is critical to equip oneself with the necessary skills and knowledge. The Blockchain Council's courses are designed to improve your capabilities and propel your career to new heights, from prompt engineer certification to comprehensive training in ChatGPT certification.

It's critical to stay at the forefront of AI-powered conversations in a landscape of constant improvement. While some features, such as the Link Reader plugin and Browse with Bing, have been retired, ChatGPT continues to evolve, ensuring seamless interactions and innovative solutions. And, as the world of AI communication evolves, the knowledge gained from courses like those provided by Blockchain Council will be an undeniable asset, enhancing your proficiency as a skilled prompt engineer and beyond.

1 note


Text

Blog #4: Individual Techbox

Weeks into our Interaction Design course, everyone was asked to conduct research and showcase our individual techbox. The techbox was supposed to help provide insights into our ongoing team projects. My team focused on creating a new interface for a mobile app that would offer friendly interactions to users in the late Generation X (a.k.a our parents!). In a sentence, the techbox that I put together must complement our project by giving a comprehensive overview of our target users, devices, and solution design.

But First, What is a Techbox?

Actually, even Google couldn't give me a concrete answer. Most of the results for "Define Techbox" were far from what I was looking for; Google even asked whether I had made a typographical error while searching for "define checkbox" or "define textbox."

I then modified my search by constructing a more detailed prompt, something like "What is techbox in UX design?" Because our course covers UI/UX components, it made sense to specify the field relevant to the techbox whose meaning I was searching for.

Results were slightly better, but still not what I was expecting.

Instead of giving me the dictionary, field-defined meaning of the term "techbox," it gave me UI/UX or technology companies named "Techbox" in different variations (e.g., Tech Box, Techbox, TECHBOX, Techbox Solutions, etc.). Amidst the irrelevant outputs, I tried to connect the dots using what I was provided with. I patiently skimmed through these websites and found that what they had in common was that they often pointed towards generating innovative solutions through the use of technology.

So there, my question was partly answered. By utilizing existing technological sources, I could get closer to understanding my primary users, their behavior patterns on gadgets and devices, and the solution that my team was spearheading.

1. MY INDIVIDUAL TECHBOX: AI TOOLS

It is still hard to take in the fact that AI is playing an increasingly important part in our convenient lives. It used to be something that people talked about in the press and media, but now, look around and you will see that almost no aspect of life is untouched by AI. At the moment, new AI tools and plug-ins are released every week to address specific convenience-related issues, such as helping businesses, and especially individuals, automate and generate tasks.

While there are common fears that AI's existence poses a dangerous threat to the creative work of human beings, I do not think so. Despite being discussed at worldwide technology forums, the most used AI tools still have flaws that directly affect daily use, and there are yet to be fruitful solutions to this problem. Suffice it to say, the appearance of AI fosters many good sides of humans in a variety of ways.

I looked at a few AI tools in particular: ChatGPT, DallE, CrayonAI, Midjourney, and Nightcafe Creator Studio, and the pros and cons of each.

CHAT GPT

ChatGPT is a language model developed by OpenAI that can generate human-like responses to text-based prompts.

Without hesitation, I jumped to ChatGPT first to hear what it had to say. While ChatGPT can be used to automate customer service and support, as well as generate content such as blog posts and articles, if one pays close attention, the writing pattern of this tool is very generic and lacks a central point. Therefore, while working with ChatGPT to sharpen our points, I learned the concept of prompt engineering, which taught me how to talk to ChatGPT so that it understands what I am really asking of it and generates a much more desirable output. However, the disadvantage is that it may not always produce accurate or appropriate responses, as it is limited by its training data.

DALLE

DallE is another OpenAI tool that generates images from text-based descriptions. The advantage of DallE is that it can be used to create custom images quickly and easily, without the need for expensive graphic design software.

However, the disadvantage is that the images generated may not always accurately reflect the intended description, and may require some editing to get the desired result.

CRAYONAI

CrayonAI is an AI tool that can help businesses automate their sales and marketing processes.

The advantage of CrayonAI is that it can help save time and increase efficiency by automating tasks such as lead generation and email outreach.

However, the disadvantage is that it does not always produce personalized or human-like communication, and its best result does not disclose design details that UI/UX field asks for, which can lead to a lack of engagement from potential customers.

MIDJOURNEY

Midjourney is an AI tool that was recommended in one of our class sessions. It is also a natural language processing model that generates creative content from the user's input.

To be honest, I don't care whether Midjourney really works, because I was attracted to how they incorporate another platform like Discord, so that users feel that while they are doing their searching, they're doing it within a community that shares the same interest in AI art generation.

Strength-wise, Midjourney can help businesses and individuals better understand their customers and improve their products and services accordingly. However, the disadvantage is that it may not always accurately interpret customer feedback, which can lead to incorrect insights.

Nightcafe Creator Studio

Nightcafe Creator Studio is an AI tool that can help content creators generate video content quickly and easily.

Similar to DALL-E and Craiyon, Nightcafe Creator Studio also generates graphic images based on the search description, and what's special about it is that it saves time and effort in the video production process, making it ideal for creators on a tight deadline or budget.

However, the disadvantage is that it may not always produce high-quality or visually appealing content, which can negatively impact engagement and viewership.

While each AI tool possesses its own pros and cons, I recommend that we can benefit, with limitations, from services that they offer. For the scope of Cine.zip, in addition to Cparticular task or project.

2. MY INDIVIDUAL TECHBOX: CREATIVE COMMUNITIES

What I like so much about joining a creative forum is the extra visual work that active members put in, besides explaining the overview of their project in words. It is work you can clearly see and learn from. And from the perspective of a visual learner (someone who learns by looking at visual aids like photos and pictures), I think that makes these kinds of communities stronger than any other type of platform: members do not just tell the audience about their vision, but realize it through various illustration tools.

PINTEREST

This is creatives' go-to for new inspirations!

BEHANCE

Behance is the place where creatives post their new work and potentially get hired. So it's like a LinkedIn that focuses on portfolios and audience interactions with visual work, rather than on connections and showcases of text-based work (a.k.a. theoretical knowledge).

DRIBBBLE

Dribbble is like Behance, but with better organization and categories.

3. MY INDIVIDUAL TECHBOX: THE SUMMARIZER

To summarize my favorite things about these separate projects, I have found a new digital platform called Wakelet. It is a tool designed for educational purposes and gives off the feeling of using Pinterest, but in a different way. Its working principle is simple: you create a new collection and enter URLs with similar content. Users might have a good time using Wakelet because of the time it saves when organizing materials for a project.

From a personal angle, I prefer using Wakelet, probably partly because there's an excitement in discovering something very new. But the larger part of it is how it gathers all kinds of links from different platforms in one place.

1 note

Text

Although the new Bing, powered by GPT and with ChatGPT-like features, is dominating the headlines, it wasn't the first search engine to incorporate a chatbot function. In December 2022, You.com, a search engine created by former Salesforce employees, launched YouChat, its personal chat assistant for search.

Since then, You.com has launched YouChat 2.0, an updated version of its chatbot search assistant. You.com touts YouChat's advantages as up-to-date training and its language model, CAL. CAL stands for Conversations, Apps and Links, the sources CAL is trained on. In short, CAL is to YouChat 2.0 what GPT-3.5 is to ChatGPT.

It was the You.com apps integration in particular that caught my attention, as the idea that you could integrate You.com apps for sites like Reddit and YouTube to work within the search chatbot functionality would be a huge step forward. Along with the ability to create AI art within the chatbot's user interface, fetching context from Wikipedia, Reddit and other apps were features that the new Bing couldn't match.

However, as we have seen with ChatGPT, Bing and Google Bard, chatbot AI has produced some mixed results, if not sometimes flat-out wrong ones. So I decided to not only test You.com's YouChat 2.0 but compare it to the new GPT-powered Bing. Here's what I found.

Before this, I had no experience with You.com, so I decided a good place to start would be to ask YouChat 2.0 and Bing "What is You.com?" Both came back with results to the effect of "You.com is a privacy-focused search engine," though there were some differences in the speed and context with which they responded.

YouChat 2.0 followed its chatbot response with a Wikipedia entry for You.com, showing off some of its app-based features. I also liked that traditional search results were displayed in the chatbot's user interface; Bing required you to leave the chat window and return to the search results. Also, it was interesting to see that You.com's YouChat 2.0 got faster results, as other testers like our own Alex Wawro found the Bing chatbot a little slow.

However, there were still some things that Bing did better. For starters, although its AI-powered chatbot was slower, it cited significantly more websites in its responses. Because of this, I ironically felt that I knew more about You.com after reading the Bing chatbot's response than I did after reading YouChat's. Bing also suggested follow-up questions to learn more, which felt more conversational than YouChat 2.0's responses.

I decided to take advantage of that last Bing feature and asked both chatbots the follow-up question "What is multimodal chat search?" The result of this query was similar to the result of the previous one: both search chatbots returned a response that boils down to multimodal chat search being a chat-based search method that accepts multiple types of input. And again, the pros and cons of both AI chatbots were similar, though with one significant change on each side. This time, YouChat 2.0 didn't have an app that provided additional context, while Bing surprisingly did. Bing's chatbot included a news box with news search results about multimodal chat search, a surprising change of pace from Bing.

Last Test: Can AI Chatbots Help Make Pizzas?

After two rounds of testing, the results were almost identical. So I decided to throw a whole new problem at YouChat 2.0 and the new Bing: What's the best way to make pizza?

Despite being the slower of the two chatbots, the clear winner here was Bing's GPT-powered chatbot. It cited significantly more sources and presented its response in an easy-to-understand format.

YouChat 2.0 flashed its app-integration trick once more, but unfortunately, the Allrecipes app produced a recipe for "Pinwheel Italian Calzones." And as anyone who's ever had pizza will tell you, a calzone just doesn't cut it no matter how you slice it.

Outlook: YouChat 2.0 Is Not a Game Changer Yet

After spending some time with both search engine chatbots, I can easily see the potential of You.com's YouChat 2.0. App integration is great for providing context when it works. Unfortunately, YouChat 2.0 didn't always find a way to integrate results into an app, and even when it did, it wasn't always the result you needed.

So for now, the new Bing is probably the best AI-powered search engine chatbot, even if it is a little on the slow side and doesn't always get things right. While I didn't find any glaring flaws in my testing for this face-off, we recently asked GPT-powered Bing to write a Samsung Galaxy S23 Ultra review and it got a ton wrong. Still, it's definitely a tool to check out, so be sure to check out our guide to accessing the new Bing as well as our guide on how to use the new Bing with ChatGPT before giving it a spin.

0 notes

Text

The framing of "more powerful than Chat GPT 4" is a clear tell. Of course OpenAI would love a 6-month breather to dominate the market. Dall-E2 had its lunch eaten by better projects from smaller teams, and six months of "don't outdo us" will give every current player a powerful advantage.

But that's assuming such a block would be actually implemented.

If NFTs were us not learning the lesson of Pogs, then Chat GPT is the return of the Furby. The marketing says it's an intelligent AI robot, but in actuality it's a toy that simulates the experience of one. Only they're advertising to venture capitalists instead of 8-year-olds, so they don't have to work as hard on the pitch.

When AI image generation first started happening, the term used the most was "Dream", because that's what these AIs do. They hallucinate seemingly coherent stuff that has no context, narrative or reason beyond what is prompted by the user.

In every way that a chat-AI can be accurate, it is not functioning as a generative AI, but as a search engine. Everything that makes it work as a chat-AI involves potential corruption of the data transmitted from said search functions. The tech isn't ready for applications where reason or accuracy are required.

But if it's so scary to all these "smart, smart people" then nobody thinks about the limitations of the tech and all the flamboyant claims about how many people it can replace suddenly seem believable. The venture capitalists come slithering out to get in on the ground floor. Other companies come to sign deals to integrate your hallucination machine. The stock price goes up.

“When initially launched, the letter lacked verification protocols for signing and racked up signatures from people who did not actually sign it, including Xi Jinping and Meta’s chief AI scientist Yann LeCun, who clarified on Twitter he did not support it. Critics have accused the Future of Life Institute (FLI), which is primarily funded by the Musk Foundation, of prioritising imagined apocalyptic scenarios over more immediate concerns about AI – such as racist or sexist biases being programmed into the machines. Among the research cited was “On the Dangers of Stochastic Parrots”, a well-known paper co-authored by Margaret Mitchell, who previously oversaw ethical AI research at Google. Mitchell, now chief ethical scientist at AI firm Hugging Face, criticised the letter, telling Reuters it was unclear what counted as “more powerful than GPT4”. “By treating a lot of questionable ideas as a given, the letter asserts a set of priorities and a narrative on AI that benefits the supporters of FLI,” she said. “Ignoring active harms right now is a privilege that some of us don’t have.””

— Letter signed by Elon Musk demanding AI research pause sparks controversy | Artificial intelligence (AI) | The Guardian

105 notes

Text

Case Studies in Prompt Engineering

As artificial intelligence (AI) has developed, prompt engineering has become increasingly important in improving language models' capabilities. In this article, we examine real-world case studies highlighting valuable applications of prompt-based language models in various domains. These success stories demonstrate the efficacy of prompt engineering and offer useful insights for anyone wishing to pursue this area of study.

Obtaining an AI certification has significant potential for those looking to make a name for themselves in this industry. The requisite skills and knowledge can be obtained through a prompt engineer certification program and a prompt engineer course.

Prospective prompt engineers should explore the courses and certifications of reputable organizations such as the Blockchain Council. Their prompt engineering course incorporates ideas from the broader subject of artificial intelligence and covers the fundamentals of prompt engineering. Through such training, people can set out to contribute to prompt engineering's success stories in the artificial intelligence world.

Success Stories from Case Studies in Prompt Engineering

This section presents real-world applications demonstrating the outstanding effects of prompt-based language models. These case studies unfold as success stories, providing insights into how prompt engineering has transformed several fields, from customer support and creative writing to multilingual customer care.

Customer Support Chatbots

Problem Statement: An organization aims to improve customer service by implementing a chatbot to effectively manage client inquiries and provide precise responses.

Prompt Engineering Approach: Using a chat-based format, prompt engineers fine-tune a language model through the OpenAI GPT-3 API. The model is trained on a dataset of historical customer inquiries and the corresponding responses. Tailored prompts are designed for a variety of query types, such as technical support, product inquiries, and order status updates.
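The tailored prompts described above could be sketched roughly as follows. This is a minimal illustration in plain Python, not the organization's actual setup: the `SYSTEM_PROMPTS` wording, the query-type keys, and the `build_messages` helper are all hypothetical names chosen for the example, and no API call is made.

```python
# Hypothetical prompt templates, one per query type named in the case study.
# The wording of each template is an assumption made for illustration.
SYSTEM_PROMPTS = {
    "technical_support": (
        "You are a support agent. Diagnose the issue step by step "
        "and ask for any missing details."
    ),
    "product_inquiry": (
        "You are a product specialist. Answer only from the product "
        "facts you are given."
    ),
    "order_status": (
        "You are an order assistant. Report the order status and the "
        "next expected step."
    ),
}


def build_messages(query_type: str, customer_message: str) -> list[dict[str, str]]:
    """Return a chat-format message list tailored to the query type."""
    if query_type not in SYSTEM_PROMPTS:
        raise ValueError(f"Unknown query type: {query_type!r}")
    return [
        {"role": "system", "content": SYSTEM_PROMPTS[query_type]},
        {"role": "user", "content": customer_message.strip()},
    ]


if __name__ == "__main__":
    for message in build_messages("order_status", "Where is my order?"):
        print(f"{message['role']}: {message['content']}")
```

Keeping one template per query type makes it easy to adjust a single prompt after reviewing user feedback without touching the others.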

Findings: The chatbot proves to be a triumph, adeptly handling client inquiries and furnishing contextually appropriate answers. Prompt engineers make incremental modifications and analyze user feedback to increase the model's responsiveness and accuracy. The result is a significant decrease in customer response times, which enhances overall customer satisfaction.

Creative Writing Assistant

Problem Statement: A creative writing program aims to support authors by providing contextually appropriate suggestions for storylines, character development, and descriptive writing.

Prompt Engineering Approach: Using a dataset of creative writing samples, prompt engineers fine-tune a language model with the Hugging Face Transformers library. The model is intended to produce original writing prompts across various genres and styles. Through personalized prompts, writers communicate with the model to get ideas and inspiration for their writing projects.

Findings: The creative writing assistant becomes an invaluable resource for writers struggling to find inspiration. The model's varied and inventive answers help break through creative blockages and try out novel writing approaches. Using the creative writing assistant, writers demonstrate greater productivity and inventiveness.

Multilingual Customer Support

Problem Statement: A multinational e-commerce behemoth seeks to improve customer service by offering multilingual support to customers with varying linguistic backgrounds.

Prompt Engineering Approach: Prompt engineers fine-tune a multilingual language model using the Sentence Transformers library. The model is trained on a dataset that includes customer inquiries in multiple languages. Custom prompts are designed to handle inquiries in many languages, ensuring that the model can respond contextually and appropriately in the user's preferred language.
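As a rough sketch of what a custom prompt for the user's preferred language might look like, the snippet below builds a prompt string from a language code. The `LANGUAGE_NAMES` table, the fallback to English, and the `build_multilingual_prompt` helper are assumptions made for illustration; the case study does not describe its actual prompts.

```python
# Hypothetical mapping from language codes to language names.
LANGUAGE_NAMES = {"en": "English", "es": "Spanish", "hi": "Hindi"}


def build_multilingual_prompt(inquiry: str, language_code: str) -> str:
    """Build a prompt asking the model to answer in the customer's language."""
    # Assumption: fall back to English when the language code is unknown.
    language = LANGUAGE_NAMES.get(language_code, "English")
    return (
        f"Reply in {language}. Keep the tone polite and respect the "
        f"customer's cultural conventions.\n\n"
        f"Customer inquiry: {inquiry}"
    )


if __name__ == "__main__":
    print(build_multilingual_prompt("¿Dónde está mi pedido?", "es"))
```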

Findings: The multilingual customer service language model successfully serves consumers from diverse linguistic backgrounds. It responds to queries with proficiency in many languages while considering cultural nuances and preferences. Users appreciate the personalized support, which leads to increased customer satisfaction and retention.

These case studies demonstrate the adaptability and efficiency of prompt engineering in a range of contexts. Success stories ranging from customer support chatbots to creative writing assistants and multilingual customer service demonstrate the practical advantages of prompt engineering.

Product Development

Problem Statement: The team responsible for software development needed help in effectively compiling detailed user requirements for their product development. Using traditional approaches frequently produced a large amount of data that took time to prioritize and analyze, hampering the systematic development process. A more efficient method for condensing user needs into valuable insights and feature ideas was required.

Prompt Engineering Approach: The team decided to use prompt engineering as a strategic solution to these difficulties. Gradual prompting was used, breaking down user requirements into smaller, more manageable tasks. With this method, the prompts were continuously refined to direct the AI model toward precise and in-depth insights. The purpose of the prompts was to extract refined information, guaranteeing a more focused and organized set of data for the language model.
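One way to picture this step-by-step prompting is a loop that handles one requirement at a time and feeds earlier insights back into the next prompt. The sketch below is only an illustration under stated assumptions: `run_incremental_prompts` and the prompt wording are hypothetical, and `fake_model` is a stand-in for a real language model so the example runs offline.

```python
from typing import Callable


def run_incremental_prompts(
    requirements: list[str], ask_model: Callable[[str], str]
) -> list[str]:
    """Process requirements one by one, carrying earlier insights forward."""
    insights: list[str] = []
    for requirement in requirements:
        # Earlier answers become context for the next, smaller task.
        context = "\n".join(f"- {insight}" for insight in insights) or "- (none yet)"
        prompt = (
            f"Insights so far:\n{context}\n\n"
            f"Next user requirement: {requirement}\n"
            "Summarize it as one feature idea."
        )
        insights.append(ask_model(prompt))
    return insights


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (an assumption for this sketch)."""
    marker = "Next user requirement: "
    for line in prompt.splitlines():
        if line.startswith(marker):
            return "Feature idea for: " + line[len(marker):]
    return "No requirement found"


if __name__ == "__main__":
    for idea in run_incremental_prompts(["export to CSV", "dark mode"], fake_model):
        print(idea)
```

Swapping `fake_model` for a function that calls an actual model leaves the loop unchanged, which is the main reason for passing the model in as a callable.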

Findings: The outcomes revolutionized the software development process. The AI model produced more precise and actionable findings thanks to the gradual prompting approach. The development team prioritized product development more efficiently by breaking user requests into smaller tasks. Thanks to the organized dataset, features that directly addressed user demands were created, making it easier to understand user requirements.

Prompt engineering significantly improved the productivity of the product development lifecycle by offering a streamlined and practical approach to gathering and implementing customer needs.

Conclusion

Finally, the accomplishments of these case studies demonstrate the versatility and adaptability of prompt engineering in a range of contexts. Prompt engineering continues to prove its value in optimizing language models for particular use cases, whether in customer support chatbots, creative writing assistants, multilingual customer care, or, as seen here, product development.

These case studies demonstrate structured and personalized approaches, which underscore the importance of prompt engineering jobs and courses in providing individuals with the necessary abilities.

0 notes