#API bridges


Text

How API Bridges Work in Algo Trading

API bridges are a crucial part of algorithmic trading: they let trading platforms, brokers, and custom trading algorithms work together. They provide real-time data transfer and order execution, making a trading strategy faster, more efficient, and more accurate. This article explains how API bridges work in algo trading and why they matter to traders and developers, especially in India.

What is algorithmic trading?

Algorithmic trading is the use of computer algorithms to automatically execute trades based on pre-defined criteria such as market conditions, technical indicators, or price movements. Unlike manual trading, algorithmic trading allows traders to make faster decisions and execute multiple orders simultaneously, minimizing human error and maximizing potential profits.

Understanding API Bridges in Algo Trading

API bridges are the connector layer through which different software platforms communicate with each other. In algo trading, an API bridge connects a trading algorithm running on a platform such as Amibroker, MetaTrader 4/5, or TradingView to the broker's trading system so that orders are executed automatically.

Important Functions of API Bridges in Algorithmic Trading

Data Feed Integration: API bridges give the algo trader direct access to live market data from the broker's system, such as current stock prices, volumes, and order books. This data is the input the algorithm interprets to make its decisions.

Order Execution: Once the algorithm identifies a suitable trading opportunity, the API bridge sends the buy or sell order directly to the broker's trading system. This process is automated, ensuring timely execution without manual intervention.
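As a rough illustration of the order-execution step, the sketch below turns a strategy signal into a broker-neutral order payload and rejects anything malformed before it would be sent. The `Signal` class, the `build_order` function, the payload field names, and the `max_order_value` limit are all assumptions made for this example; a real bridge would map the payload onto whatever format the chosen broker's API actually requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """A trade signal as it might arrive from a charting platform (hypothetical shape)."""
    symbol: str
    action: str    # "BUY" or "SELL", case-insensitive
    quantity: int


def build_order(signal: Signal, last_price: float, max_order_value: float) -> dict:
    """Validate a signal and translate it into a broker-neutral order payload."""
    action = signal.action.upper()
    if action not in ("BUY", "SELL"):
        raise ValueError(f"unknown action: {signal.action!r}")
    if signal.quantity <= 0:
        raise ValueError("quantity must be positive")
    # Basic sanity limit so a faulty signal cannot place an oversized order.
    if signal.quantity * last_price > max_order_value:
        raise ValueError("order value exceeds the configured limit")
    return {
        "symbol": signal.symbol,
        "side": action,
        "quantity": signal.quantity,
        "order_type": "MARKET",
    }


order = build_order(Signal("INFY", "buy", 10), last_price=1500.0, max_order_value=50_000.0)
print(order)
```

Keeping validation in the bridge rather than in the strategy means every signal source passes through the same checks before anything reaches the broker.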

Backtesting: API bridges enable traders to backtest their algorithms using historical data to evaluate performance before executing real trades. This feature is particularly useful for optimizing strategies and reducing risks.
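To make the idea of backtesting concrete, here is a minimal sketch that replays a simple moving-average crossover rule over a list of historical closing prices and reports the resulting profit or loss. The strategy, the function names, and the sample prices are illustrative assumptions, not something taken from any particular platform; it also ignores costs, slippage, and position sizing.

```python
def sma(prices, window, i):
    """Simple moving average of the `window` prices ending at index i."""
    return sum(prices[i - window + 1 : i + 1]) / window


def backtest_sma_cross(prices, fast=2, slow=3):
    """Long-only backtest: hold one unit while the fast SMA is above the slow SMA.

    Returns the total profit or loss in price units.
    """
    position = 0      # 0 = flat, 1 = long one unit
    entry = 0.0
    pnl = 0.0
    for i in range(slow - 1, len(prices)):
        fast_avg = sma(prices, fast, i)
        slow_avg = sma(prices, slow, i)
        if position == 0 and fast_avg > slow_avg:
            position, entry = 1, prices[i]      # enter at the current close
        elif position == 1 and fast_avg < slow_avg:
            pnl += prices[i] - entry            # exit at the current close
            position = 0
    if position == 1:
        pnl += prices[-1] - entry               # close any open position at the end
    return pnl


history = [10, 10, 10, 11, 12, 13, 14, 15, 14, 13]
print(backtest_sma_cross(history))
```

On this sample series the rule buys at 11 as the trend starts and sells at 13 after it turns, so the result depends heavily on how quickly the averages react.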

Risk Management: An effective API bridge helps trading algorithms enforce risk management rules such as stop-loss and take-profit orders. When the specified conditions are met, these orders are entered automatically, which removes emotional decision-making and limits losses.

Trade Monitoring: The API bridge continuously monitors trade execution and provides real-time updates on orders, positions, and account balances, so traders stay informed and can adjust their algorithms.
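The stop-loss and take-profit rules described above amount to a small check that runs on every price update. The sketch below assumes a long position and percentage thresholds; the function name `exit_reason` and its parameters are hypothetical.

```python
def exit_reason(entry_price, last_price, stop_loss_pct, take_profit_pct):
    """Return "STOP_LOSS", "TAKE_PROFIT", or None for a long position.

    Thresholds are percentages of the entry price, e.g. 2 means 2%.
    """
    change_pct = (last_price - entry_price) / entry_price * 100
    if change_pct <= -stop_loss_pct:
        return "STOP_LOSS"
    if change_pct >= take_profit_pct:
        return "TAKE_PROFIT"
    return None


# A position bought at 100 with a 2% stop-loss and a 5% take-profit.
for price in (101, 97, 106):
    print(price, exit_reason(100, price, stop_loss_pct=2, take_profit_pct=5))
```

In practice many brokers can hold stop orders on their side, which is generally safer than relying on a client-side check that stops working if the bridge disconnects.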


Why Algo Trading Needs API Bridges

Speed and Efficiency: API bridges support high-frequency trading (HFT), in which traders execute thousands of trades per second with minimal delay. This speed matters most in fast-moving markets, where timing largely determines profitability.

Customization: Because custom-built algorithms can reach many brokers through the API bridge, traders can tailor their strategies and implement advanced approaches that would be impractical to execute by hand.

Smooth Integration: API bridges enable traders to connect their favorite platforms, such as Amibroker or TradingView, with brokers like Angel One, Alice Blue, or Zerodha. In other words, traders can keep using the software they know while relying on the broker's platform for execution.

Cost-Effective: Compared with hiring a dedicated team of traders or using expensive proprietary systems, API bridges are more cost-effective for algo traders. They give traders the benefits of automation without high overhead costs.

Improved Risk Management: By automating risk controls, such as loss and profit limits, the algorithmic system keeps trades within predefined risk bounds, helping traders in India and worldwide manage their risk exposure.

API Bridges Working with Popular Trading Platforms

Amibroker: Amibroker is popular software that algo traders use for technical analysis and backtesting. Integrating Amibroker with an API bridge lets traders execute a strategy in real time through their preferred broker's interface, which improves the trading experience.

MetaTrader MT4/MT5: MetaTrader is also a widely used platform for algorithmic trading. Through an API bridge, traders can link their trading robots (Expert Advisors) to brokers supporting the MT4 or MT5 platforms to automatically execute trades based on their algorithms.

TradingView: TradingView is a well-known charting platform, popular for its user-friendly interface and its powerful scripting language, Pine Script. With an API bridge, users can send real-time trading signals to their brokers for execution.

The Best API Bridges for Algo Trading in India

Combiz Services Pvt. Ltd.: Combiz Services Pvt. Ltd. provides customized API solutions that ensure seamless integration between brokers and trading platforms. Their API bridges support a wide range of trading platforms such as Amibroker, MetaTrader, and TradingView, which makes them a good option for Indian traders seeking flexibility and speed in algorithmic trading.

AlgoTrader: AlgoTrader provides an advanced algorithmic trading platform that supports integration with various brokers through API bridges. It is known for its scalability and high-speed trading capabilities, making it a favorite among professional traders.

Interactive Brokers API: Interactive Brokers offers a robust API that allows traders to link their algorithms directly to its trading platform. With a rich set of features such as market data feeds and execution capabilities, the Interactive Brokers API is highly regarded by algo traders.

How to Set Up an API Bridge for Algo Trading

Select a Trading Platform and Broker: Choose a trading platform such as Amibroker or MetaTrader, then choose a broker that offers API access, such as Zerodha or Alice Blue.

Connect the API: Once you have chosen a platform and a broker, connect the API bridge so that your algorithm can reach the broker's system. This step generally involves configuration settings and API keys.

Create or Select an Algorithm: If you are new to algo trading, you can use pre-built strategies or write your own in a programming language such as Python or AFL (AmiBroker Formula Language).

Backtest the Algorithm: Before you deploy the algorithm, backtest it on historical data to confirm that it performs as expected.

Monitor and Adjust: After you have deployed the algorithm, monitor its performance and make adjustments according to the changing market conditions.
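For the connection step, one common pattern is to keep API keys out of the strategy code and read them from environment variables when the bridge starts. The sketch below shows that pattern only; the variable names `BRIDGE_API_KEY`, `BRIDGE_API_SECRET`, and `BRIDGE_PAPER_TRADING` and the function `load_bridge_config` are made up for this example and do not correspond to any specific broker or bridge product.

```python
import os


def load_bridge_config(env=os.environ):
    """Read bridge credentials from environment variables and fail fast if any are missing.

    The variable names are illustrative; use whatever your broker and bridge require.
    """
    required = ("BRIDGE_API_KEY", "BRIDGE_API_SECRET")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing configuration: " + ", ".join(missing))
    return {
        "api_key": env["BRIDGE_API_KEY"],
        "api_secret": env["BRIDGE_API_SECRET"],
        # Default to paper trading so a fresh setup cannot place live orders by accident.
        "paper_trading": env.get("BRIDGE_PAPER_TRADING", "1") == "1",
    }


config = load_bridge_config({"BRIDGE_API_KEY": "demo-key", "BRIDGE_API_SECRET": "demo-secret"})
print(config["paper_trading"])
```

Defaulting to paper trading pairs naturally with the backtest and monitor steps: the algorithm can run against live data for a while before real orders are switched on.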

Conclusion

API bridges are a must-have tool in the world of algorithmic trading, providing smooth integration, faster execution, and improved risk management. Using Amibroker, MetaTrader, or TradingView platforms, API bridges make sure that your trading strategy is executed efficiently and effectively. The power of API bridges enables traders to stay ahead in the competitive world of algo trading and maximize opportunities in the Indian stock market.

For someone seeking a robust and highly customizable solution for algo trading needs, Combiz Services Pvt. Ltd. has the best API bridge services that guarantee seamless integration and faster trade execution.

0 notes

Text

rage hatred suffering

i chose a text to translate for the computer science translation class and the teacher said there should be no translation available so i checked and there was none and she approved my text. i sent my translation yesterday and today she replied and said theres a translation. that fucking page was translated since the last time i checked it like. 2 weeks ago ???

so now i found another text (probably harder to translate too) and im looking everywhere before sending it to her, like if i find anyone translated oracle's vm virtualbox user manual somewhere i will obliterate them

#nourann.txt#translation is my passion !!!!!!!!!#i mean it is but aughhhh i thought i was done with this shit i have to work on my thesis not this#going to implode frfr#at least the teacher was nice and said i can send her something else to translate and shell give me enough time#also she said that there are similarities between my translation and the website's translation (by google's cloud translation api)#like ok idk man this isnt literary translation. not many ways to translate a text explaining how android debug bridge works#sometimes automatic translation doesnt completely suck ass. still hurt my feelings a bit tho#interestingly enough it kept daemon in french. i think both daemon and démon can be used ?#maybe i was influenced by termium. a canadian moment if you will#personal

2 notes


Text

Best MetaTrader API Bridge Solutions for Copy Trading

Copy trading has revolutionized the financial markets, making it possible for traders to mimic the trades of experienced professionals. One of the most effective ways to run copy trading is through a MetaTrader API Bridge, which connects trading platforms with external systems for smooth trade execution. This article explores the best MetaTrader API Bridge solutions for copy trading, their benefits, and how they improve trading efficiency.

A MetaTrader API Bridge is software that connects MetaTrader 4 or MetaTrader 5 with external trading systems, liquidity providers, or multiple accounts. This makes it possible to automate trade execution, replicate strategies between accounts, and improve overall trading performance. Copy trading needs an API bridge because the bridge provides real-time trade synchronization, low-latency execution, and efficient trade distribution across multiple accounts.

Advantages of MetaTrader API Bridge for Copy Trading

1. Instant Execution of Traded Orders: With a MetaTrader API Bridge, trades are executed in real time across connected accounts, minimizing delays that could affect profits.

2. Multi-Account Trading: The bridge enables the management of multiple accounts at the same time, making it suitable for professional traders and portfolio managers.

3. Automated Copy Trading: API bridges enable automated trade replication, eliminating the need for manual intervention.

4. Reduced Latency: Latency is a significant issue in copy trading. High-speed API bridges minimize execution delays, so that copied trades are placed as close as possible to the original price.

5. Customizable Trade Allocation: Traders can set trade allocation according to account size, risk preferences, or predefined strategies.

6. Enhanced Risk Management: Advanced risk management settings on the MT5 API Bridge can apply stop-loss and take-profit protection to copied trades.

7. Easy Integration with Algo Trading: Traders who use algorithmic trading strategies can integrate their MT5 API Bridge with pre-configured bots that carry out trades automatically.

Best MetaTrader API Bridge for Copy Trading

1. Combiz Services API Bridge: Combiz Services API Bridge is one of the most reliable solutions, providing fast trade execution, real-time synchronization, and advanced copy trading features. It suits traders looking for a robust and scalable solution.

2. Trade Copier for MT4 & MT5: This solution enables instant trade replication between multiple MetaTrader accounts, making it ideal for traders who manage several clients.

3. One-Click Copy Trading API: This bridge provides a friendly user interface and one-click trade copying for both beginner and professional traders.

4. FX Blue Personal Trade Copier: A powerful free tool that copies trades between accounts with very low latency and offers customizable trade copy parameters.

5. Social Trading Platforms with API Bridges: Many social trading networks provide built-in API bridge functionality, enabling users to copy trades from expert traders directly to their MetaTrader accounts.

How to Choose the Right MetaTrader API Bridge for Copy Trading

When selecting an API bridge for copy trading, consider the following factors:

Latency and Execution Speed: Choose a bridge that offers fast trade execution with minimal slippage.

Multi-Account Compatibility: Ensure that the solution supports multiple trading accounts.

Risk Management Features: A good bridge provides stop-loss, take-profit, and risk allocation settings.

Customization and Automation: The bridge should support custom trading strategies and algo trading integrations.

Reliability and Support: A bridge must have a good track record with reliable customer support.
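Allocation by account size, mentioned among the advantages above, can be sketched as scaling the master account's lot size by each follower's equity and rounding down to the broker's lot step. This is only one possible allocation rule; the function `allocate_lots`, the account labels, and the 0.01 lot step are assumptions for illustration.

```python
from decimal import Decimal, ROUND_DOWN


def allocate_lots(master_lots, master_equity, follower_equities, lot_step="0.01"):
    """Scale a master trade to each follower in proportion to account equity.

    Sizes are rounded down to a multiple of lot_step so a follower never
    takes proportionally more risk than the master account.
    """
    step = Decimal(lot_step)
    allocations = {}
    for account, equity in follower_equities.items():
        raw = Decimal(str(master_lots)) * Decimal(str(equity)) / Decimal(str(master_equity))
        steps = (raw / step).to_integral_value(rounding=ROUND_DOWN)
        allocations[account] = steps * step
    return allocations


followers = {"account_a": 5000, "account_b": 2500, "account_c": 120}
sizes = allocate_lots(master_lots=1.0, master_equity=10000, follower_equities=followers)
for account, lots in sizes.items():
    print(account, lots)
```

`Decimal` is used instead of floats so that rounding to the lot step is exact. A follower whose scaled size rounds down to zero would simply skip the trade, which a real copier would need to handle explicitly.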

0 notes

Text

TrueFoundry Secures $19 Million Series A Funding to Revolutionize AI Deployment

New Post has been published on https://thedigitalinsider.com/truefoundry-secures-19-million-series-a-funding-to-revolutionize-ai-deployment/


TrueFoundry, a pioneering AI deployment and scaling platform, has successfully raised $19 million in Series A funding. Led by Intel Capital, the round saw participation from Eniac Ventures, Peak XV’s Surge (formerly Sequoia Capital India & SEA), Jump Capital, and angel investors including Gokul Rajaram, Mohit Aron, and executives from Fortune 1000 companies. With this investment, Avi Bharadwaj, Investment Director at Intel Capital, will join TrueFoundry’s board of directors.

The exponential rise of generative AI has brought new challenges for enterprises looking to deploy machine learning models at scale. Governance, data privacy, and infrastructure costs have become critical roadblocks, often delaying AI implementation. TrueFoundry’s platform addresses these challenges, enabling companies to deploy, manage, and scale AI applications seamlessly, efficiently, and securely.

TrueFoundry was founded by Abhishek Choudhary, Anuraag Gupta, and Nikunj Bajaj, a team of industry veterans from Meta and IIT Kharagpur. Their extensive experience in deep learning models and large-scale infrastructure management led to the development of a state-of-the-art platform as a service (PaaS), built to eliminate AI deployment bottlenecks and streamline machine learning workflows.

“Enterprises using TrueFoundry have built and launched their internal AI platforms in as little as two months, achieving ROI within four months–a stark contrast to the industry average of 14 months,” said Nikunj Bajaj, CEO & Co-founder of TrueFoundry. “Our platform integrates seamlessly across clouds, models, and frameworks, ensuring no vendor lock-in while future-proofing deployments for evolving AI patterns like RAGs and Agents.”

TrueFoundry offers a unified Platform as a Service (PaaS) that empowers enterprise AI/ML teams to build, deploy, and manage large language model (LLM) applications across cloud and on-prem infrastructure. Designed with a developer-first interface, the platform simplifies AI deployment, allowing full-stack data scientists to independently create, test, and scale applications. Key features include model cataloging, fine-tuning, API deployment, and advanced governance tools that bridge the gap between DevOps and MLOps.

A Year of Growth and Industry Adoption

TrueFoundry’s funding announcement follows a year of remarkable milestones:

4X year-over-year growth in customer base

1,000+ AI/ML clusters deployed across enterprise clients

Partnerships with Siemens Healthineers, ResMed, Automation Anywhere, Games24x7, and NVIDIA

The company has delivered 10X faster business value for customers while significantly reducing infrastructure costs. For instance, NVIDIA leveraged TrueFoundry to optimize GPU usage for LLM workloads, cutting costs and improving efficiency through automated resource allocation and job scheduling.

“TrueFoundry is uniquely positioned to address the growing complexities of AI deployment. Their platform simplifies the process for AI teams, enabling them to build, deploy, and scale applications with speed and efficiency,” said Avi Bharadwaj, Investment Director at Intel Capital. “With a focus on cost-efficiency, governance, and security, TrueFoundry is solving critical challenges for businesses, and we believe they are poised to lead in the rapidly expanding AI infrastructure market.”

AI Managing AI – The Future of Scalable AI Infrastructure

With a total of $21 million in funding, TrueFoundry is accelerating its mission to eliminate infrastructure bottlenecks for enterprises deploying AI at scale. The investment will be used to expand its team and strengthen go-to-market strategies to drive broader adoption.

“At TrueFoundry, we’re building a future where AI manages AI—removing the bottlenecks of human intervention and unlocking unparalleled speed and scale,” said Abhishek Choudhary, CTO & Co-founder of TrueFoundry. “Our platform streamlines infrastructure with auto-scaling, intelligent maintenance, and proactive issue detection, ensuring smooth AI operations while maintaining top-tier security and compliance.”

Delivering Speed, Cost-Efficiency, and Security

TrueFoundry’s platform has redefined AI deployment with features that prioritize speed, performance, and cost-efficiency:

5-15X Faster Iteration Cycles – Optimized Docker builds, seamless VSCode integration, and rapid resource switching.

Enterprise-Grade Performance – Dynamic batching for ML models, 3-5X faster cold starts, and autoscaling with image streaming and model caching.

30-80% Cost Savings – Smart resource orchestration between spot and on-demand instances, inference optimizations, and granular cost tracking.

Security and Compliance – SOC 2, HIPAA, and GDPR-certified platform with role-based access control, audit trails, and SSO integration.

Looking ahead, TrueFoundry is set to introduce its AI Agent, a self-sustaining system that anticipates and adapts to AI workflows. By automating resource management, workflow optimization, and proactive issue resolution, enterprises will be able to scale AI faster and with greater efficiency than ever before.

#000#access control#adoption#agent#agents#ai#ai agent#AI Infrastructure#AI platforms#AI/ML#amp#API#applications#Art#as a service#audit#automation#board#bridge#Building#Business#CEO#Cloud#clouds#clusters#Companies#compliance#cost savings#CTO#cutting

0 notes

Text

"Hive with Care"

#beeingapis#bees#comic#honeybee#humor#beekeeping#apis#beeing#bee#beecomic#bridge#brace#wonky#comb#frame#hive#care#baby#board#babyonboard#sign

0 notes

Text

using LLMs to control a game character's dialogue seems an obvious use for the technology. and indeed people have tried, for example nVidia made a demo where the player interacts with AI-voiced NPCs:

[YouTube video embed]

this looks bad, right? like idk about you but I am not raring to play a game with LLM bots instead of human-scripted characters. they don't seem to have anything interesting to say that a normal NPC wouldn't, and the acting is super wooden.

so, the attempts to do this so far that I've seen have some pretty obvious faults:

1. relying on external API calls to process the data (expensive!)

2. presumably relying on generic 'you are xyz' prompt engineering to try to get a model to respond 'in character', resulting in bland, flavourless output

3. limited connection between game state and model state (you would need to translate the relevant game state into a text prompt)

4. responding to freeform input, models may not be very good at staying 'in character', with the default 'chatbot' persona emerging unexpectedly. or they might just make uncreative choices in general.

5. AI voice generation, while it's moved very fast in the last couple years, is still very poor at 'acting', producing very flat, emotionless performances, or uncanny mismatches of tone, inflection, etc.

6. although the model may generate contextually appropriate dialogue, it is difficult to link that back to the behaviour of characters in game

so how could we do better?

1. the first one could be solved by running LLMs locally on the user's hardware. that has some obvious drawbacks: running on the user's GPU means the LLM is competing with the game's graphics, meaning both must be more limited. ideally you would spread the LLM processing over multiple frames, but you still are limited by available VRAM, which is contested by the game's texture data and so on, and LLMs are very thirsty for VRAM. still, imo this is way more promising than having to talk to the internet and pay for compute time to get your NPC's dialogue lmao

2. second one might be improved by using a tool like control vectors to more granularly and consistently shape the tone of the output. I heard about this technique today (thanks @cherrvak)

3. third one is an interesting challenge - but perhaps a control-vector approach could also be relevant here? if you could figure out how a description of some relevant piece of game state affects the processing of the model, you could then apply that as a control vector when generating output. so the bridge between the game state and the LLM would be a set of weights for control vectors that are applied during generation.

4. this one is probably something where finetuning the model, and using control vectors to maintain a consistent 'pressure' to act a certain way even as the context window gets longer, could help a lot.

5. probably the vocal performance problem will improve in the next generation of voice generators, I'm certainly not solving it. a purely text-based game would avoid the problem entirely of course.

6. this one is tricky. perhaps the model could be taught to generate a description of a plan or intention, but linking that back to commands to perform by traditional agentic game 'AI' is not trivial. ideally, if there are various high-level commands that a game character might want to perform (like 'navigate to a specific location' or 'target an enemy') that are usually selected using some other kind of algorithm like weighted utilities, you could train the model to generate tokens that correspond to those actions and then feed them back in to the 'bot' side? I'm sure people have tried this kind of thing in robotics. you could just have the LLM stuff go 'one way', and rely on traditional game AI for everything besides dialogue, but it would be interesting to complete that feedback loop.
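a very rough sketch of what the 'feed action tokens back to the bot side' idea could look like, assuming the model has been trained or prompted to emit tags like `<navigate_to:tavern>` inline with its dialogue. the tag format, the action names, and the function are all made up for illustration; a real setup might use dedicated tokens rather than text tags.

```python
import re

# Hypothetical inline tag format: <action_name> or <action_name:argument>
ACTION_PATTERN = re.compile(r"<(\w+)(?::([^>]*))?>")

# Only commands the traditional game AI actually implements are passed through.
ALLOWED_ACTIONS = {"navigate_to", "target_enemy"}


def split_dialogue_and_actions(model_output):
    """Separate spoken dialogue from action tags in a model's raw output.

    Returns (dialogue_text, [(action_name, argument), ...]).
    Unknown tags are stripped from the dialogue and otherwise ignored.
    """
    actions = [
        (name, arg)
        for name, arg in ACTION_PATTERN.findall(model_output)
        if name in ALLOWED_ACTIONS
    ]
    dialogue = ACTION_PATTERN.sub("", model_output)
    dialogue = " ".join(dialogue.split())   # tidy whitespace left behind by removed tags
    return dialogue, actions


text, commands = split_dialogue_and_actions(
    "Follow me. <navigate_to:tavern> Stay close! <dance>"
)
print(text)
print(commands)
```

the allow-list is the important bit: the model can suggest whatever it likes, but only commands the existing game AI knows how to carry out ever reach the 'bot' side.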

I doubt I'll be using this anytime soon (models are just too demanding to run on anything but a high-end PC, which is too niche, and I'll need to spend time playing with these models to determine if these ideas are even feasible), but maybe something to come back to in the future. first step is to figure out how to drive the control-vector thing locally.

46 notes


Text

Interior Chinatown: A Sharp Satire That Challenges Stereotypes and Forces Self-Reflection

Interior Chinatown is a brilliant yet understated reflection of the world—a mirror that exposes how society often judges people by their covers. The show captures this poignantly with the scene where Willis Wu can’t get into the police precinct until he proves his worth by delivering food. It’s a powerful metaphor: sometimes, if you don’t fit the mold, you have to prove your value in the most degrading or unexpected ways just to get a foot in the door. The locked precinct doors represent barriers faced by those who don’t match the “majority’s” idea of what’s acceptable or valuable.

While the series centers on the Asian and Pacific Islander (API) community and the stereotypical roles Hollywood has long relegated them to—background extras, kung fu fighters—it forces viewers to confront bigger questions. It makes you ask: Am I complicit in perpetuating these stereotypes? Am I limiting others—or even myself—by what I assume is their worth? It’s not just about API representation; it’s about how society as a whole undervalues anyone who doesn’t fit neatly into its preferred narrative.

The show can feel confusing if you don’t grasp its satirical lens upfront. But for me, knowing the context of Charles Yu’s original book helped it click. The production team does an incredible job balancing satire with sincerity, blurring the line between real life and the exaggerated Hollywood “procedural” format. They cleverly use contrasting visuals and distinct camera work to draw you into different headspaces—Hollywood’s glossy expectations versus the grittier reality of life.

Chloe Bennet’s involvement (real name Chloe Wang) ties into the show’s themes on a deeply personal level. She famously changed her last name to navigate Hollywood, caught in the impossible middle ground of not being “Asian enough” or “white enough” for casting directors. It’s a decision that sparks debate: was it an act of survival, assimilation, or betrayal? But for Bennet, it was about carving a space for herself to pursue her dreams.

This theme echoes in one of the show’s most poignant scenes, where Lana is told, “You will never completely understand. You’re mixed.” It’s a crushing acknowledgment of the barriers that persist, even when you’re trying to bridge divides. Lana’s story highlights how identity can be both a strength and an obstacle, and the line serves as a painful reminder of the walls society creates—externally and internally.

Interior Chinatown doesn’t just ask us to look at the system; it forces us to examine ourselves. Whether it’s Willis Wu at the precinct door or Lana trying to connect in a world that sees her as neither this nor that, the show unflinchingly portrays the struggle to belong. And as viewers, it challenges us to question our role in those struggles: Are we helping to dismantle the barriers, or are we quietly reinforcing them?

#interior chinatown#chloe bennet#taika waititi#hulu#tv series review#tv show review#tv reviews#series review#review#jimmy o. yang#ronny chieng#sullivan jones#lisa gilroy#tzi ma#Hulu interior Chinatown#charles yu#int. Chinatown#int Chinatown#writerblr#writeblr

49 notes


Note

is there a public mod list for terrimortis? purely curious whats all in there

I started to answer this on mobile and then it was bothering me so its laptop time. theres a couple notes for things but i can answer any questions about it. this is roughly it, excluding any libs and apis

Main Mods:

Amendments

Armourer's Workshop - lets us build cool cosmetics (Ezra's wheelchair, all the antennae, leopolds legs, etc)

Beautify Refabricated

Chipped

Clumps

Collective

Incendium

Indium

Structory

Supplementaries

Another Furniture

Better Furniture

Cluttered

Comforts

Convenient Name Tags

Cosmetic Name Tags - How we change our names!

Croptopia

Joy of Painting - pai n t in g mo d

Dark Paintings

Ferritecore

Lithium

Mighty Mail - mailboxes!!

Origins

World Edit

Spark

Trinkets

Stellarity

Twigs

Villager Names - note, makes it so wandering traders dont despawn

Building Wands

Macaws:

Bridges

Doors

Fences

Trapdoors

Lights and Lamps

Paths and Pavings

Windows

Clientside:

Build Guide

Entity Model Features - Lets us use custom models like with optifine

Entity Model Textures

CIT Resewn - allows us to use optifine packs

Continuity - connected textures

Iris Shaders

Jade - lil pop up when you hover over blocks

Jade Addons

JEI

Skin Layers 3D

Sodium

Xaero's Minimap

Xaero's World Map

Zoomify

Chiseled bookshelf visualizer

Cull Leaves

Custom Stars

JER

LambDynamicLights

More Mcmeta

More Mcmeta Emissive Textures

Sodium Extras

Bobby

40 notes


Text

So trying to recreate the qsmp mod pack (Personal use ig, just saw a bunch of mods I've never seen before and went Oh Shit thats exciting, then just decided "Fuck it gonna gather as many of the mods they're using")

I Do Not have any knowledge of any of the dungeons that they have so I ask if you have any insight let me know.

Here's the full list I have of confirmed mods and possible mods;

server runs on 1.20.1

qsmp 2024 mods:

1. Regions Unexplored
2. Croptopia
3. Biomes o plenty
4. born in chaos
5. exotic birds
6. enmey expansion
7. chocobo mod
8. farmers delight + some other food mods (Possibly multiple) with delight in the name.
9. Candlelight(?)
10. Handcrafted
11. Alex's mobs
12. Alex's caves (I can confirm because of a TRAP DOOR in the egg bakery. I'm in the trenches)
13. supplementaries
14. Beachparty
15. create
16. journey maps (Idk some map mod)
17. aquaculture 2
18. cluttered
19. chimes
20. fairy lights
21. FramedBlocks
22. Chipped
23. paraglider
24. Another furniture mod
25. waystones
26. connected glass
27. Create deco
28. Candlelight dinner
29. MOA DECOR
30. Tanuki decor
31. Orcz
32. Modern life
33. Bakery
34. Friends&Foes
35. Meadow
36. Abyssal decor
37. Twigs
38. lootr
39. when dungeons arise(to be confirmed)
40. nether's delight
41. rats
42. Additional lanterns
43. Alex's delight
44. Additional Lights
45. AstikorCarts Redux
46. Athena
47. Awesome dungeon net..(work?)
48. BOZOID
49. Apothic Attributes
50. AppleSkin
51. Balm
52. Better Archeology
53. Better ping Display
54. BetterF3
55. Aquaculture Delight
56. Bookshelf
57. Bygone Nether
58. CC: Tweaked
59. Artifacts
60. Camera Mod
61. Cataclysm Mod
62. Catalogue
63. Citadel
64. Cloth config v10 API
65. Clumps
66. Comforts
67. Configured
68. Controlling
69. CorgiLib
70. CoroUtil
71. Corpse
72. CosmeticArmorReworked
73. Create : Encased
74. Create Confectionery
75. Create Slice & Dice
76. Create: Interiors
77. Create: Steam 'n' Rails
78. Create: Structures
79. CreativeCore
80. Creeper Overhaul
81. Cristel Lib
82. Cupboard Utilities
83. Curios API
84. Customizable Player M(???)
85. Delightful
86. Distant Horizons
87. Domestication Innovations
88. Duckling
89. Dynamic Lights
90. Elevator Mod
91. Embeddium
92. Emotecraft
93. Enderman Overhaul
94. EntityCulling
95. Nether's exoticism
96. YUNG's (x) Mods (bridges, better dessert temples,mineshafts only ones i can confrim, might be all but idk for sure)
97. Securitycraft
98. Vinery (Confirmed because of Tubbo's drinking binge at spawn yesterday)
99. Mr.Crayfish (Furniture confirmed, possibly more)
100. Naturalist
101. Tom's simple storage

If you know or noticed mods that haven't been listed, reply/reblog with them please.

Things are numbered for my archival reasons, as some mods come in multiple separate mods (such as YUNG's) the numbering will not show the true number of the mods on the server.

I also have not checked the needed mods that any of these mods may need so.

(Please note that there may be spelling/grammar mistakes in the names of these mods!)

#qsmp#THanks to everyone providing mods!#everytime I update this thing I live in fear I'll accidentally copy paste the purgatory theory draft that lives in the same notepad

56 notes


Text

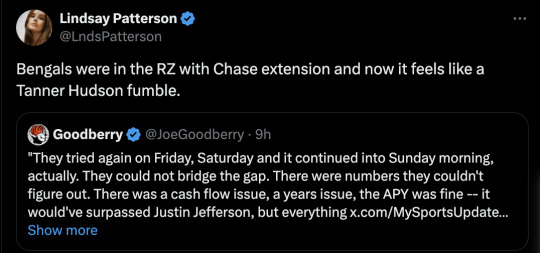

an unfortunately hilarious way to describe how the bengals fumbled this deal. listen here

transcription (and some commentary) below

they tried friday night, they even tried on saturday into saturday night, and it continued into sunday morning actually. they could not bridge the gap, it just was not happening. there were numbers that they couldn’t figure out. there was a cash flow issue, there was a years issue. the APY was fine. it would have surpassed justin jefferson but everything else was not there. and basically, this is exactly what justin jefferson went through last year. justin jefferson also wanted to get extended after his third year, the vikings could not bridge the gap. they made him play out his fourth year and eventually it all worked out and he just became the highest paid receiver after playing four years. ja’marr’s gonna go through the same thing. he’s gonna play through his fourth year and eventually at this time next year he’s gonna try to get extended and he was looking for 36 million a year right now. if he has a ja’marr chase type of year right now, ja’marr will be able to ask for 40 million dollars a year next year and he’s going to get it from the bengals. thats where it is.

again, i don’t know why some of these organizations decide to wait when you have the star players, just pay them because if you don’t it gets more expensive…i’ll tell you this much, ja’marr’s side? not happy about it. ja’marr himself? not happy about it as well. he thought the deal would get done, this has been four, maybe even five, months of negotiations and they thought they were on the doorstep a couple of times only for it to collapse, and we’ve said it a million times - the bengals do negotiations way different than every other team. they're way more annoying about it and this player experienced it for the first time and he’s not happy about it. but there’s nothing he can do about it, he’s gonna play through the year. he handled it with class and he’s onto playing week 2 where he should be fully healthy.

Okay so! pretty revealing stuff!

the cash flow thing. upon further research, this isn't the classic "oh boohoo the brown family is too poor!" but actually more about guaranteed money. it's cash flow for the player throughout the deal. the bengals tend to only guarantee a certain amount in the first (and very rarely the second) year of the deal. ja'marr's camp wanted more guaranteed money throughout the contract (which basically ensures security throughout the whole thing rather than risk being cut at the end, and could also prevent him from wanting to hold out/in because he wouldn't be making much per game towards the end). it seems like the bengals had a limit on the money they'd guarantee throughout six years, not because they couldn't, but because they wouldn't. (they are billionaires and are more than capable of drumming up more liquid cash if they really wanted to, but they don't want to.)

one thing i DO find comforting though is that we are not the only team to fuck this up lol. justin tried for an extension last year, and they also couldn't bridge the gap, making him play his fourth year first. and it all ended happily, he is the highest paid receiver now. and next year, ja'marr will be.

but! ja'marr is NOT happy right now. which sucks! he's a pro and i know he can put it behind him and focus on the game. but it's just such a bummer. hopefully he can use this as motivation and play pissed off/with a chip on his shoulder/whatever, but still, annoying it got to this point!

the confirmation about how annoying the bengals are as an organization to deal with from every single agent. it really shouldn't be a secret after jessie, tee, etc. and it's such a poor reflection on us that we supposedly didn't pay those guys to pay ja'marr, and we couldn't even get that right (we will eventually, but they've already used up any good will they had from ja'marr's side, and are going to have to pay way more now!)

#SIGH.#sorry just needed to get my thoughts out on this via rant.#i do recommend listening to the podcast for yourself! very informative!#like as depressing as i find all of this i also find it super interesting from a business persepective#but also like god fuck us so hard this is not a winning strategy and i genuinely don't know how much longer they can go on like this#ja'marr chase


Text

Two ideas:

1. I wonder if anyone has tried to build a Tumblr-a-like on ActivityPub?

2. I wonder if you could build a Tumblr/ActivityPub bridge out of the existing Tumblr API? Like I know Tumblr has said they plan to eventually make Tumblr part of ActivityPub, but you could always go "fuck you" and do it for them.


Text

EPISODE 1

preview arc

Spoiler alert as always.

.

.

.

.

.

A significant contrast of ambiance I noticed when watching Gentar arc was the TV version used a lighter tone than the comic counterpart. And ... did the area feel bigger and brighter here?

I like that this version started in space rather than on earth. If they wanted to convince the audience the season 2 events happened within two months, they really needed to show how tight it was.

Unlike Sori and Windara's arc, this time I have to read the comic AND watch the show back to back.

This show has only 4 episodes but I felt like I'm missing something in the first ep.

For instance...

How the heck did the final goal change from utilizing Daun's 3rd tier, "Rimba/Jungle", to Api's 3rd tier, "Nova"?

Even the comic didn't bring the name in Baraju's early arc. It was a suggestion from Hang Kasa, not an order by the other person(in this case: the Commander).

And the show didn't explain the reason behind the commander's brief about this and you expected us to easily grasp it??

The show's barely started and I'm like:

"Am I missing something from Windara???"

They may want to cut long expositions of who those were and immediately shortened into the main issue. Mixed some lines from Baraju's arc so they could quickly jump into action for the future tv show. That's also why the gang was in this cut to bridge the story between Gentar and Baraju.

Except, Papa Zola resigned from the main cast (along with Adu Du and Probe). This arc is officially his last appearance in the Boboiboy series. He will be focused more on his adventure with his family in the upcoming movie later this year.

Also, I think it's funny, but when Gopal told Oboi to use Fusion elementals other than Frostfire because of how frequent it was, I laughed at the irony when Supra was in the mix yet he didn't even bring it up. The comic version made more sense in context since it was the only fusion he always used before Gentar by the way😅

Anywho, the TV ver started the story where the gang had to split up due to an unexpected distress signal during their journey to the planet Volcania. After that, it thematically followed the same structure as the comic ver, issue 15.

I said this in my preview post, we got to see Gentar's introduction right from the get go, especially in the opening vid. Aside from the main gang, the elementals are replaced with Gentar(but still got some tiny bits of Gempa & Hali for the sake of fusion part).

Oh, not to mention this is the first transformation where Oboi's front bangs cut sideways. Which IMO, that cut was cool as heck! Man, I love his suit on the tv ver! So good ... 😘

And I love how exaggerated his entrance was. The spotlight, the speeches, the ambiance, and every surrounding supported him as if he were the self-proclaimed celebrity in the block. And I love he purposely dragged his power-ups longer than the comic ver. It. Was. That. Necessary.

(If you guys told me that this is not Boboiboy and totally a new character, I would 100% believe you)

Another prop to the tv ver is his weapon. They want to show how big and important it is, not just have it randomly pop out. Thus, enter his magnetic powers to assemble all the scraps into his iconic hammer.

To match the whole "lively" vibe of the theme, the animation took heavy cues from Japanese anime style and ingeniously incorporated them into their 3D renders.

At first I thought they would go spiderverse-esque but using this method is also perfect for this arc. Kinda reminds me of Snoopy's art style actually.

(and also ashamed of why Sonic Prime didn't do this method even though their flat animations had some potential wtf!!!! Yes I'm still salty over the 2nd and their final season other than the animation period)

Ehem.

Okay. Continue to the main antagonists themselves: the Probe x Jo robot hybrid and Adu Du introduction.

I appreciate the animators that still manage to make it as creepy as ... I'd say the FNAF / Poppy Playtime horror standard. Although kinda a bit downed with the lighter background setting.

(now, will my country censor the horror part? Gonna be a nuisance I'd tell ya🙂↕️)

Whooo man when Adu Du entered the frame, my eyes instantly widened. I still remembered my reaction to how much his character development changes in the comic. But God I'm still not prepared in tv ver! Especially in this shot!

Like, sir who are you and why are you so cool?!?!! 😳😳

Aaaaaand that's all for now, onto the 2nd episode!


Text

I want to make this piece of software. I want this piece of software to be a good piece of software. As part of making it a good piece of software, i want it to be fast. As part of making it fast, i want to be able to parallelize what i can. As part of that parallelization, i want to use compute shaders. To use compute shaders, i need some interface to graphics processors. After determining that Vulkan is not an API that is meant to be used by anybody, i decided to use OpenGL instead. In order for using OpenGL to be useful, i need some way to show the results to the user and get input from the user. I can do this by means of the Wayland API. In order to bridge the gap between Wayland and OpenGL, i need to be able to create an OpenGL context where the default framebuffer is the same as the Wayland surface that i've set to be a window. I can do this by means of EGL. In order to use EGL to create an OpenGL context, i need to select a config for the context.

Unfortunately, it just so happens that on my Linux partition, the implementation of EGL does not support the config that i would need for this piece of software.

Therefore, i am going to write this piece of software for 9front instead, using my 9front partition.

#Update#Programming#Technology#Wayland#OpenGL#Computers#Operating systems#EGL (API)#Windowing systems#3D graphics#Wayland (protocol)#Computer standards#Code#Computer graphics#Standards#Graphics#Computing standards#3D computer graphics#OpenGL API#EGL#Computer programming#Computation#Coding#OpenGL graphics API#Wayland protocol#Implementation of standards#Computational technology#Computing#OpenGL (API)#Process of implementation of standards


Text

10 Best AI Accessibility Tools for Websites (January 2025)

New Post has been published on https://thedigitalinsider.com/10-best-ai-accessibility-tools-for-websites-january-2025/


Web accessibility has become essential for businesses as digital inclusion moves from optional to mandatory. With over 1 billion people worldwide living with disabilities and increasing legal requirements around digital accessibility, organizations need effective tools to make their online presence accessible to everyone.

Here are some of the leading AI-powered accessibility solutions that help businesses create inclusive digital experiences while maintaining compliance with accessibility standards.

UserWay brings AI-powered accessibility to websites through an intelligent widget that automatically enhances the online experience for users with disabilities. This smart solution transforms website code in real-time, ensuring compliance with major accessibility standards like WCAG 2.1 & 2.2 and ADA.

The system’s intelligence comes through its automatic detection and correction capabilities. It scans website code and makes precise adjustments without requiring technical expertise from site owners. Each visitor can access tailored accessibility profiles designed for specific needs – from motor impairments to visual challenges to cognitive differences. The widget acts as an intelligent interpreter, translating website content into formats that work for each user’s unique requirements.

Major brands like Payoneer, Fujitsu, and Kodak have seen concrete results from implementing UserWay. A case study by Natural Intelligence showed notable improvements in key metrics – higher click-through rates, lower bounce rates, and better conversion numbers. Beyond performance gains, UserWay also offers peace of mind through a $10,000 monetary pledge for annual Pro Widget customers, supporting them against potential accessibility challenges.

Key features:

Automated code analysis and correction system that maintains WCAG and ADA compliance

Specialized accessibility profiles addressing different disability types and user needs

Multi-language support framework serving over 50 languages worldwide

Privacy-focused architecture with ISO 27001 certification

Built-in legal protection system including documentation and support

Visit UserWay →

accessiBe brings together AI, machine learning, and computer vision to make websites naturally accessible to everyone. Their system works continuously in the background, intelligently adapting web content to meet diverse accessibility needs while keeping websites compliant with WCAG 2.1 and ADA standards.

The platform operates through two interconnected systems. The accessibility interface acts as a smart control center, giving users precise control over their browsing experience – from adjusting fonts and colors to optimizing navigation patterns. Meanwhile, the AI engine works invisibly, analyzing webpage elements to understand their purpose and function. This dual approach creates a seamless experience where websites naturally adapt to each user’s needs, whether they are using screen readers, keyboard navigation, or other assistive technologies.

With over 100,000 websites worldwide putting their trust in accessiBe, the platform has become a cornerstone of digital inclusion. The system’s development involved direct collaboration with users who have disabilities, ensuring it addresses real-world challenges effectively. For businesses, this translates to expanded reach and reduced legal risk, all through a solution that works continuously to maintain accessibility as websites evolve.

Key features:

AI-powered contextual analysis system for automatic content adaptation

Image recognition engine providing detailed alternative text descriptions

Daily monitoring framework ensuring continuous compliance

Customizable interface supporting various disability profiles

Built-in litigation support package with comprehensive documentation

Visit accessiBe →

Stark offers teams a unified platform that brings inclusivity into every stage of product development. This intelligent suite works seamlessly across popular design and development environments like Figma, Sketch, and GitHub.

The platform takes a proactive approach to accessibility. Rather than waiting until the end of development to address issues, Stark’s AI-powered system catches potential barriers as early as the first design draft. This early detection proves transformative – teams using Stark report fixing accessibility issues up to 10 times faster than with traditional methods, while reducing 56% of issues that typically slip into code where fixes become significantly more costly.

The world’s leading companies, from ambitious startups to Fortune 100 enterprises, have made Stark their foundation for digital accessibility compliance. The system’s GDPR and SOC2-certified platform provides real-time insights and continuous monitoring across design files, code repositories, and live products. For teams of all sizes, this means having a central hub where designers, developers, and product managers can collaborate effectively on creating inclusive digital experiences.

Key features:

Integrated accessibility testing system working across major design and development tools

AI-powered suggestion engine for rapid issue detection and resolution

Real-time monitoring framework providing continuous compliance insights

Role-specific toolsets supporting different team members’ needs

Enterprise-grade security architecture with zero-trust policy

Visit Stark →

AudioEye brings a mix of intelligent automation and human expertise to web accessibility, creating digital spaces where every visitor can fully engage with content. This platform goes beyond simple fixes, combining AI-driven solutions with insights from certified experts and members of the disability community.

The system operates through a three-layered approach to digital inclusion. First, active monitoring continuously scans web pages as visitors interact with them, gathering real-time insights about accessibility challenges. Then, a powerful automation engine instantly addresses common barriers, while certified experts craft custom solutions for more nuanced issues. This layered approach creates a dynamic shield against accessibility gaps, with the platform’s proven ability to reduce valid legal claims by 67% compared to other solutions.

Over 126,000 leading brands have chosen AudioEye as their path to digital inclusion. The platform’s comprehensive toolkit – from developer testing resources to custom legal responses – makes it the top-rated solution for ease of setup according to G2’s 2024 rankings. For businesses aiming to welcome all visitors, including the one in six people globally living with disabilities, AudioEye transforms accessibility from a technical requirement into a seamless part of the digital experience.

Key features:

Real-time monitoring system that adapts to user interactions

Hybrid automation engine combining AI fixes with expert solutions

Custom code development framework for complex accessibility challenges

Comprehensive legal support package with custom response services

Developer toolkit enabling proactive accessibility testing

Visit AudioEye →

Allyable takes digital accessibility beyond checkbox compliance by offering enterprises an AI-powered system. Their integrated platform combines automation with personalized expert guidance, creating digital spaces where technology and human insight work together.

The platform uses a modular ecosystem of solutions. The Ally360™ hub serves as a central command center, with real-time accessibility insights. But what sets Allyable apart is their approach to guiding teams through a thoughtful transition, starting with hands-on management and gradually building client teams’ expertise through A11yAcademy™ training and ongoing support.

With over 1.2 billion people worldwide living with disabilities, Allyable’s impact extends beyond technical compliance. Their system integrates seamlessly into existing workflows through a single line of code, connecting with popular tools like Jira and GitHub. For enterprises looking to improve digital inclusion while protecting against rising compliance challenges, Allyable’s mix of AI and human expertise creates a pathway to lasting accessibility.

Key features:

All-in-one accessibility hub providing real-time monitoring

Expert-guided transition system building team independence

Specialized training academy developing internal accessibility expertise

Single-line integration framework connecting with major development tools

Comprehensive security architecture protecting sensitive data

Visit Allyable →

Read Easy.ai transforms complex content into clear, digestible information for individuals with reading challenges. The system converts intricate language patterns into more approachable forms while preserving the essential meaning.

The platform offers versatile deployment across digital spaces. Through a Chrome extension, it functions as an invisible reading companion, instantly adapting website text on demand. For content creators, it embeds seamlessly into Microsoft Office as a thoughtful guide, offering real-time insights about readability and suggesting ways to make text more inclusive. Meanwhile, developers can tap into these capabilities through an API to implement it directly into their applications.

The numbers tell a compelling story about Read Easy.ai’s potential impact. In the US alone, 54% of adults read below a sixth-grade level, while across Europe, 20-25% of the population faces functional literacy challenges. By supporting multiple languages including English, Spanish, German, Dutch, and Portuguese, Read Easy.ai opens digital doors for millions who might otherwise struggle with online content, turning complex text into clear communication.

Key features:

AI-powered text analysis engine that maintains meaning while reducing complexity

Multi-platform integration system working across browsers and applications

Real-time feedback framework for content creators

Developer toolkit enabling custom accessibility solutions

Multi-language support system serving diverse global audiences

Visit Read Easy.ai →

Siteimprove offers organizations tools to proactively find and fix accessibility issues. The platform combines accessibility checking with AI-powered recommendations to improve website accessibility.

The platform includes an automated tool that checks web pages and PDF documents against WCAG 2.2 standards. For teams focused on digital accessibility, Siteimprove provides insights about accessibility issues and guidance for improvements. Their Frontier platform offers training resources to build accessibility knowledge across organizations.

Through built-in dashboards and reporting tools, organizations can monitor their accessibility efforts. The platform supplements automated checks with AI suggestions, helping teams address identified issues.

Key features:

Web page and PDF accessibility checking tools

AI-powered recommendations

Accessibility progress tracking tools

Educational resources for teams

Organization-wide training platform

Visit Siteimprove →

Evinced uses AI and machine learning to find website accessibility problems through visual analysis rather than just code review. This visual approach helps catch issues that traditional accessibility tools miss, including problems typically found only through manual testing.

The system works differently from standard accessibility scanners. When examining a website, it processes screens like a sighted person would, then groups related accessibility issues together. This means instead of producing hundreds of individual bug reports, Evinced identifies common patterns that developers can fix systematically. The platform integrates with existing development tools including Selenium and Cypress for web testing, plus XCUITest and Espresso for mobile apps.

Major development teams rely on Evinced’s suite of tools to catch accessibility issues early in their process. The platform tracks each problem from discovery through resolution, preventing duplicate reports while measuring fix times. This systematic approach helps teams identify and resolve accessibility barriers more efficiently than traditional testing methods.

Key features:

Visual AI analysis that finds issues beyond code scanning

Smart clustering system for related accessibility problems

Issue tracking framework across development cycles

Integration tools for major testing platforms

Complete toolkit spanning design through deployment

Visit Evinced →

RAMP offers web teams a systematic approach to finding and fixing accessibility issues across websites. The platform focuses on achieving compliance with ADA and WCAG standards through automated scanning and guided remediation.

The system works through a four-part process: discovery, understanding, fixing, and compliance monitoring. RAMP’s automated scanner identifies WCAG violations site-wide, while their browser extension shows issues directly on the page. For each problem found, RAMP provides detailed instructions, code snippets, and a task management system that integrates with Jira. Their A11y Center helps organizations track progress and manage accessibility statements.

Key features:

Site-wide scanning system for WCAG violations

Browser extension showing real-time accessibility issues

Task management system with expert remediation guides

Jira integration for streamlined workflow

Compliance tracking center with accessibility statement tools

Visit RAMP →

Equally AI offers an AI engine that works continuously to identify and resolve accessibility barriers while maintaining human oversight for accuracy.

The system’s core strength lies in its 24-hour monitoring cycle. Every day, it scans websites for new content and potential accessibility issues, automatically addressing concerns across WCAG, ADA, and EAA standards. For users, this means having access to customizable accessibility profiles that address specific needs – from vision impairments to cognitive disabilities, ADHD to dyslexia. Website owners can seamlessly integrate these features through popular platforms like WordPress, Wix, Shopify, and Webflow.

Major companies rely on Equally AI to streamline their accessibility efforts. By tackling over 50 common accessibility barriers, Equally AI helps organizations build websites that welcome all users while protecting against compliance risks.

Key features:

AI-powered accessibility correction system

Daily content monitoring and issue detection

Customizable profiles for different accessibility needs

Multi-platform integration framework

Automated compliance documentation tools

Visit Equally AI →

Building a More Accessible Digital Future

Recent data reveals a significant gap in digital accessibility: 89% of users with disabilities still face daily barriers online, despite the growing sophistication of AI-powered solutions. This disconnect between available tools and user experience highlights an essential truth – while AI offers powerful automation and monitoring capabilities, technology alone cannot bridge the accessibility divide.

The business implications are compelling and immediate. With 62% of users ready to switch to more accessible competitors and 51% actively seeking accessible alternatives, organizations can no longer treat accessibility as optional. These AI tools represent different approaches to solving the accessibility challenge – from automated remediation and real-time monitoring to development integration and compliance tracking. Yet their effectiveness ultimately depends on meaningful implementation and ongoing commitment.

As we move through 2025, organizations must shift from viewing accessibility as a compliance checkbox to recognizing it as a fundamental aspect of user experience and business success. Companies that combine these AI capabilities with genuine dedication to inclusion will not just meet legal requirements – they will gain a significant competitive advantage.

#000#2024#2025#accessiBe#Accessibility#ai#ai tools#AI-powered#amp#Analysis#API#applications#approach#apps#architecture#automation#background#Best Of#billion#brands#bridge#browser#browser extension#bug#Building#Business#Case Study#challenge#chrome#code


Text

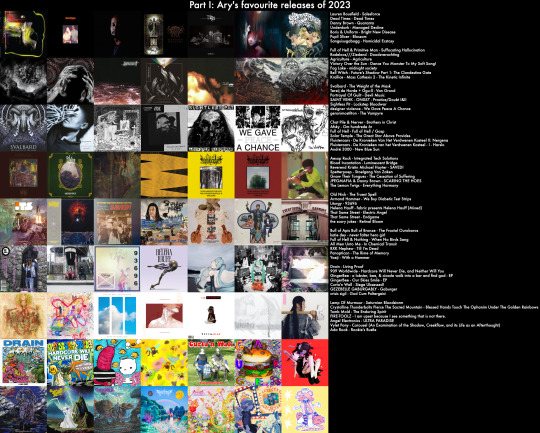

Part 1: Ary's favourite releases of 2023

Before you ask, yes I know that Mitski and Sufjan Stevens released albums this year! I'm gonna go ahead and assume they're already on a lot of other people's lists! However, if you think I'm missing out on YOUR favourite album of 2023, let me know. If you're thinking: "63 albums isn't that many, I wish there were more" - you're in luck because there's a Part 2. Part 2 has a different (more pop? upbeat? accessible?) vibe. Don't think too hard about it...

The chart isn't ranked, just arranged in a way that looked nice to me. Metal, hardcore, rap, emo, skramz, bedroom pop and more!!!

Here are Bandcamp links to all of the albums (for those not on BC there's a YouTube or Spotify link). Honestly I'm never quite sure what genre something is, but there's a lot of metal in any case.

Row 1

Lauren Bousfield - Salesforce [digital hardcore]

Dead Times - Dead Times [harsh noise extreme metal]

Danny Brown - Quaranta [rap/hiphop]

Underdark - Managed Decline [post black metal]

Boris & Uniform - Bright New Disease [psychedelic heavy metal]

PUPIL SLICER - BLOSSOM [blackened mathcore]

Sanguisugabogg - Homicidal Ecstasy [death metal]

Row 2

Full of Hell & Primitive Man - Suffocating Hallucination [death metal/grindcore]

Radeloos//Ziedend - Doodsverachting [blackened crust]

Agriculture - Agriculture [ecstatic black metal]

Victory Over the Sun - Dance You Monster To My Soft Song! [progressive black metal]

fog lake - midnight society [bedroom pop]

Bell Witch - Future's Shadow Part 1: The Clandestine Gate [funeral doom]

Krallice - Mass Cathexis 2 - The Kinetic Infinite [progressive black metal]

Row 3

Svalbard - The Weight Of The Mask [postmetal]

Terzij de Horde & Ggu:ll - Van Grond [vitalistic black metal]

portrayal of guilt - Devil Music [blackened post-hardcore]

SAINT VEHK - Practice/Doubt I&II [occult death industrial]

Sightless Pit - Lockstep Bloodwar [dub/power electronics]

Designer Violence - We Gave Peace A Chance [electropunk]

geronimostilton - The Vampyre [skramz]

Row 4

Chat Pile & Nerver - Brothers in Christ [sludgey death metal]

Afsky - Om hundrede år [depressive black metal]

Full of Hell & Gasp - FOH/Gasp (Split) [death metal/grindcore]

Solar Temple - The Great Star Above Provides [blackgaze]

Fluisteraars - De Kronieken Van Het Verdwenen Kasteel - II - Nergena [atmospheric black metal]

Fluisteraars - De Kronieken van het Verdwenen Kasteel - I - Harslo [atmospheric black metal]

Andre 3000 - New Blue Sun [spiritual flute jazz]

Row 5

Aesop Rock - Integrated Tech Solutions [rap/hiphop]

Blood Incantation - Luminescent Bridge [cosmic death metal]

Reverend Kristin Michael Hayter (fka LINGUA IGNOTA) - SAVED! [experimental gospel metal]

Spetterpoep - Stoelgang Van Zaken [coprogrind/grindcore]

Gnaw Their Tongues - The Cessation Of Suffering [blackened drone metal]

JPEGMAFIA & Danny Brown - SCARING THE HOES [rap/hiphop]

The Lemon Twigs - Everything Harmony [70s inspired rock]

Row 6

Old Nick - "The Truest Spell" [dungeon synth/raw black metal]

Armand Hammer - We Buy Diabetic Test Strips [rap/hiphop]

Liturgy - 93696 [transcendental black metal]

Helena Hauff - fabric presents Helena Hauff [hardcore techno]

That Same Street - Electric Angel [skramz]

That Same Street - Endgame [skramz]

the scary jokes - Retinal Bloom [dream pop]

Row 7

Bull of Apis Bull of Bronze - The Fractal Ouroboros [occult black metal]

Katie Dey - never falter hero girl [hyperpop]

Full of Hell & Nothing - When No Birds Sang [grindcore/shoegaze]

All Men Unto Me - Chemical Transit [classical/doom metal]

RXK Nephew - Till I'm Dead [rap/hiphop]

Panopticon - The Rime of Memory [rabm/black metal]

Yaeji - With A Hammer [electronic]

Row 8

DRAIN - LIVING PROOF [punk/hardcore]

909 Worldwide - Hardcore Will Never Die, and Neither Will You [happy hardcore/rave]

lobsterfight, gingerbee, Cicadahead, godfuck - a lobster, bee, & cicada walk into a bar and find god [skramz]

GingerBee - Our Skies Smile [skramz/5th wave emo]

Curta'n Wall - Siege Ubsessed! [dungeon synth/raw black metal]

GEZEBELLE GABURGABLY - Gaburger [alt pop]

crisis sigil - God Cum Poltergeist [cybergrind]

Row 9

Lamp Of Murmuur - Saturnian Bloodstorm [black metal]

Crystalline Thunderbolts - Blessed Hands Touch The Ophanim Under The Golden Rainbows [experimental black metal]

Tomb Mold - The Enduring Spirit [black/death metal]

FIRE TOOLZ - I am upset because I see something that is not there. [electro-industrial/experimental]

Angel Electronics - ULTRA PARADISE [happy post-hardcore]

Vylet Pony - Carousel (An Examination of the Shadow, Creekflow, and its Life as an Afterthought) [electronic]

Ada Rook - Rookie's Bustle [electronic]

This post took forever to make. Again if you have any thoughts on it please tell me!!!! And share widely with your friends :)

Love, Ary

#bandcamp#black metal#death metal#extreme metal#skramz#grindcore#bedroom pop#emo#power electronics#rap#hiphop#doom metal#hxc#dutch metal#full of hell#black dresses#liturgy#lingua ignota#industrial music#experimental music#rabm#postmetal#mathcore#prog metal#boris#sanguisugabogg#katie dey#ada rook#gnaw their tongues#gezebelle gaburgably


Text

Crypto Exchange API Integration: Simplifying and Enhancing Trading Efficiency

The cryptocurrency trading landscape is fast-paced, requiring seamless processes and real-time data access to ensure traders stay ahead of market movements. To meet these demands, Crypto Exchange APIs (Application Programming Interfaces) have emerged as indispensable tools for developers and businesses, streamlining trading processes and improving user experience.

APIs bridge the gap between users, trading platforms, and blockchain networks, enabling efficient operations like order execution, wallet integration, and market data retrieval. This blog dives into the importance of crypto exchange API integration, its benefits, and how businesses can leverage it to create feature-rich trading platforms.

What is a Crypto Exchange API?

A Crypto Exchange API is a software interface that enables seamless communication between cryptocurrency trading platforms and external applications. It provides developers with access to various functionalities, such as real-time price tracking, trade execution, and account management, allowing them to integrate these features into their platforms.

Types of Crypto Exchange APIs:

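REST APIs: Request/response calls over HTTP, suited to on-demand operations such as fetching a market snapshot or placing a single order.

WebSocket APIs: A persistent connection over which the exchange pushes updates as they happen, suited to live prices, order book streams, and high-frequency trading.

FIX APIs (Financial Information Exchange): A messaging protocol long used in traditional finance for order routing, offered by some exchanges for institutional-grade trading that needs high-speed connectivity.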
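To make the FIX entry a little more concrete, here is a minimal sketch of how a FIX tag=value message is framed: fields separated by the SOH byte, a BodyLength field (tag 9), and a three-digit CheckSum field (tag 10). The helper name build_fix_message and the order values are assumptions for illustration, and the sketch leaves out the session-level header fields (sender and target IDs, sequence number, sending time) that a real counterparty would require.

```python
SOH = "\x01"  # FIX field delimiter (ASCII 0x01)


def build_fix_message(begin_string, msg_type, fields):
    """Frame a FIX tag=value message with BodyLength (9) and CheckSum (10).

    Hypothetical helper for illustration only, not a full FIX engine.
    """
    # The body starts at MsgType (35) and runs through the last field's delimiter.
    body = f"35={msg_type}{SOH}" + "".join(
        f"{tag}={value}{SOH}" for tag, value in fields
    )
    header = f"8={begin_string}{SOH}9={len(body.encode('ascii'))}{SOH}"
    # CheckSum is the byte sum of everything before the 10= field, modulo 256,
    # written as exactly three digits.
    checksum = sum((header + body).encode("ascii")) % 256
    return f"{header}{body}10={checksum:03d}{SOH}"


# Illustrative limit order: buy 0.5 BTC/USD at 30000 (all values are made up).
message = build_fix_message(
    "FIX.4.4",
    "D",  # MsgType D = NewOrderSingle
    [(11, "order-1"), (55, "BTC/USD"), (54, 1), (38, "0.5"), (40, 2), (44, "30000")],
)

# Show the message with a visible separator in place of the SOH byte.
print(message.replace(SOH, "|"))
```

In practice a maintained FIX engine would handle sessions, sequence numbers, and heartbeats; the point here is only what the wire format looks like.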

Key Benefits of Crypto Exchange API Integration

1. Real-Time Market Data Access

APIs provide up-to-the-second updates on cryptocurrency prices, trading volumes, and order book depth, empowering traders to make informed decisions.

Use Case:

Developers can build dashboards that display live market trends and price movements.

2. Automated Trading

APIs enable algorithmic trading by allowing users to execute buy and sell orders based on predefined conditions.

Use Case:

A trading bot can automatically place orders when specific market criteria are met, eliminating the need for manual intervention.
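As a rough sketch of that use case, the bot below places an order whenever the price crosses a predefined threshold. The names threshold_bot and place_order, the symbol, and the price levels are all assumptions for illustration; a real bot would pass a function that calls the exchange's order endpoint where this example simply appends to a list.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Order:
    side: str        # "buy" or "sell"
    symbol: str
    quantity: float
    price: float


def threshold_bot(
    symbol: str,
    buy_below: float,
    sell_above: float,
    quantity: float,
    place_order: Callable[[Order], None],
) -> Callable[[float], Optional[Order]]:
    """Return a price handler that places an order when a threshold is crossed."""

    def on_price(price: float) -> Optional[Order]:
        if price <= buy_below:
            order = Order("buy", symbol, quantity, price)
        elif price >= sell_above:
            order = Order("sell", symbol, quantity, price)
        else:
            return None  # criteria not met, so nothing is placed
        place_order(order)  # hypothetical hook: a real bot would call the exchange here
        return order

    return on_price


placed: List[Order] = []
bot = threshold_bot("BTC-USD", 29000.0, 31000.0, 0.1, placed.append)

# Feed a few illustrative price ticks through the bot.
for tick in [30000.0, 28950.0, 30500.0, 31200.0]:
    bot(tick)

print([(o.side, o.price) for o in placed])
# -> [('buy', 28950.0), ('sell', 31200.0)]
```

Keeping the decision rule separate from the order-placing function makes it easy to test the rule against recorded prices before connecting it to a live account.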

3. Multi-Exchange Connectivity

Crypto APIs allow platforms to connect with multiple exchanges, aggregating liquidity so that users can compare prices across venues and trade at the best available one.

Use Case:

Traders can access a broader range of cryptocurrencies and trading pairs without switching between platforms.
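One simple way to picture multi-exchange aggregation is to compare top-of-book quotes across venues and pick the cheapest place to buy and the best place to sell. The exchange names and prices below are made up, and the sketch ignores fees, order book depth, and transfer times, all of which matter in practice.

```python
from typing import Dict, NamedTuple, Tuple


class Quote(NamedTuple):
    bid: float  # highest price a buyer is currently offering
    ask: float  # lowest price a seller is currently asking


def best_venues(quotes: Dict[str, Quote]) -> Tuple[str, str]:
    """Return (venue with the lowest ask, venue with the highest bid)."""
    best_buy = min(quotes, key=lambda name: quotes[name].ask)
    best_sell = max(quotes, key=lambda name: quotes[name].bid)
    return best_buy, best_sell


# Illustrative quotes for one trading pair on three hypothetical exchanges.
quotes = {
    "exchange_a": Quote(bid=29990.0, ask=30010.0),
    "exchange_b": Quote(bid=29995.0, ask=30005.0),
    "exchange_c": Quote(bid=29980.0, ask=30002.0),
}

print(best_venues(quotes))
# -> ('exchange_c', 'exchange_b')
```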

4. Enhanced User Experience

By integrating APIs, businesses can offer features like secure wallet connections, fast transaction processing, and detailed analytics, improving the overall user experience.

Use Case:

Users can track their portfolio performance in real-time and manage assets directly through the platform.

5. Increased Scalability

API integration allows trading platforms to handle a higher volume of users and transactions efficiently, ensuring smooth operations during peak trading hours.

Use Case:

Exchanges can scale seamlessly to accommodate growth in user demand.

Essential Features of Crypto Exchange API Integration

1. Trading Functionality

APIs must support core trading actions, such as placing market and limit orders, canceling trades, and retrieving order statuses.

2. Wallet Integration

Securely connect wallets for seamless deposits, withdrawals, and balance tracking.

3. Market Data Access

Provide real-time updates on cryptocurrency prices, trading volumes, and historical data for analysis.

4. Account Management

Allow users to manage their accounts, view transaction history, and set preferences through the API.

5. Security Features

Use encrypted transport (TLS), two-factor authentication (2FA), and API keys with restricted permissions to safeguard user data and funds.
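Many exchange APIs pair an API key with a secret that is used to sign each request, so the server can detect tampering. The sketch below shows the general HMAC-SHA256 pattern using Python's standard library. The parameter names, the sorted ordering, and the signature field are assumptions for illustration; each exchange documents its own exact signing scheme.

```python
import hashlib
import hmac
from urllib.parse import urlencode


def sign_request(secret: str, params: dict) -> str:
    """Return the query string with an HMAC-SHA256 signature appended."""
    # Sorting gives a stable ordering for this sketch; real APIs define their own.
    query = urlencode(sorted(params.items()))
    digest = hmac.new(secret.encode(), query.encode(), hashlib.sha256).hexdigest()
    return f"{query}&signature={digest}"


def verify_request(secret: str, signed_query: str) -> bool:
    """Recompute the signature the way a server would and compare safely."""
    query, _, signature = signed_query.rpartition("&signature=")
    expected = hmac.new(secret.encode(), query.encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, signature)


# Illustrative values only; never hard-code a real secret.
signed = sign_request(
    "demo-secret",
    {"symbol": "BTCUSD", "side": "buy", "quantity": "0.1", "timestamp": 1700000000000},
)

print(verify_request("demo-secret", signed))
print(verify_request("demo-secret", signed.replace("quantity=0.1", "quantity=9")))
```

The second check fails because changing any signed parameter changes the expected digest, which is what makes the signature useful.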

Steps to Integrate Crypto Exchange APIs

1. Define Your Requirements

Determine the functionalities you need, such as trading, wallet integration, or market data retrieval.

2. Choose the Right API Provider

Select a provider that aligns with your platform’s requirements. Popular providers include:

Binance API: Known for real-time data and extensive trading options.

Coinbase API: Ideal for wallet integration and payment processing.

Kraken API: Offers advanced trading tools for institutional users.

3. Implement API Integration

Use REST APIs for basic functionalities like fetching market data.

Implement WebSocket APIs for real-time updates and faster trading processes.
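The difference in shape is easiest to see in code. With REST the client asks each time it wants data; with a WebSocket feed the client opens one connection and reacts to whatever the exchange pushes. The sketch below simulates the push side with an in-memory async generator so it runs without a network connection. The name fake_ticker_stream and the message fields are assumptions; a real integration would replace it with a WebSocket client connected to the exchange's documented stream.

```python
import asyncio


async def fake_ticker_stream(prices, delay=0.0):
    """Stand-in for a WebSocket feed: yields each update as it 'arrives'."""
    for price in prices:
        await asyncio.sleep(delay)  # simulates waiting for the next pushed message
        yield {"symbol": "BTC-USD", "price": price}


async def consume(stream, on_tick):
    """React to every pushed update instead of polling for it."""
    async for tick in stream:
        on_tick(tick)


received = []
asyncio.run(consume(fake_ticker_stream([30000.0, 30010.0, 29995.0]), received.append))

print([tick["price"] for tick in received])
# -> [30000.0, 30010.0, 29995.0]
```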

4. Test and Optimize

Conduct thorough testing to ensure the API integration performs seamlessly under different scenarios, including high traffic.

5. Launch and Monitor

Deploy the integrated platform and monitor its performance to address any issues promptly.

Challenges in Crypto Exchange API Integration

1. Security Risks

APIs are vulnerable to breaches if not properly secured. Implement robust encryption, authentication, and monitoring tools to mitigate risks.

2. Latency Issues

High latency can disrupt real-time trading. Opt for APIs with low latency to ensure a smooth user experience.
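Before choosing an API on latency grounds, it helps to measure it. The sketch below times repeated calls and reports the median, 95th percentile, and maximum in milliseconds. The function fake_api_call is a placeholder that just sleeps for about a millisecond; in a real test it would be replaced by an actual request to the API under evaluation.

```python
import math
import statistics
import time


def measure_latency_ms(call, samples=50):
    """Time repeated invocations of `call` and return durations in milliseconds."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        call()
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize(timings):
    """Report median, 95th percentile (nearest rank), and worst case."""
    ordered = sorted(timings)
    p95_index = min(len(ordered), math.ceil(0.95 * len(ordered))) - 1
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[p95_index],
        "max_ms": ordered[-1],
    }


def fake_api_call():
    # Placeholder for a real request; sleeps roughly one millisecond.
    time.sleep(0.001)


timings = measure_latency_ms(fake_api_call, samples=20)
stats = summarize(timings)
print(stats)
```

Looking at the 95th percentile and the maximum, and not only the average, shows the slow outliers that tend to hurt most during fast markets.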

3. Regulatory Compliance

Ensure the integration adheres to KYC (Know Your Customer) and AML (Anti-Money Laundering) regulations.

The Role of Crypto Exchange Platform Development Services

Partnering with a professional crypto exchange platform development service ensures your platform leverages the full potential of API integration.

What Development Services Offer:

Custom API Solutions: Tailored to your platform’s specific needs.

Enhanced Security: Implementing advanced security measures like API key management and encryption.

Real-Time Capabilities: Optimizing APIs for high-speed data transfers and trading.

Regulatory Compliance: Ensuring the platform meets global legal standards.

Scalability: Building infrastructure that grows with your user base and transaction volume.

Real-World Examples of Successful API Integration

1. Binance

Features: Offers REST and WebSocket APIs for real-time market data and trading.

Impact: Enables developers to build high-performance trading bots and analytics tools.

2. Coinbase

Features: Provides secure wallet management APIs and payment processing tools.

Impact: Streamlines crypto payments and wallet integration for businesses.

3. Kraken

Features: Advanced trading APIs for institutional and professional traders.

Impact: Supports multi-currency trading with low-latency data feeds.

Conclusion

Crypto exchange API integration is a game-changer for businesses looking to streamline trading processes and enhance user experience. From enabling real-time data access to automating trades and managing wallets, APIs unlock endless possibilities for innovation in cryptocurrency trading platforms.

By partnering with expert crypto exchange platform development services, you can ensure secure, scalable, and efficient API integration tailored to your platform’s needs. In the ever-evolving world of cryptocurrency, seamless API integration is not just an advantage; it is a necessity for staying ahead of the competition.

Are you ready to take your crypto exchange platform to the next level?

#cryptocurrencyexchange#crypto exchange platform development company#crypto exchange development company#white label crypto exchange development#cryptocurrency exchange development service#cryptoexchange
