#AI/ML models


Text

What's Next in 5G Advanced? Dive into the Future Now!

A first look at 3GPP Release 19

The decade-long 5G technology evolution reached a milestone this month. The scope of 5G Advanced Release 19 was decided at the final 3GPP RAN Plenary meeting of 2023 in Edinburgh after months of intense discussions. Let's examine the major projects expected to start soon after the new year.

Defining 5G Advanced Release 19

Building on 3GPP Release 18, Release 19 will introduce new 5G features. It drives system improvements, expands into new use cases, adds capabilities, and lays the groundwork for 6G. Let's examine each focus area in detail.

5G system improvements

As the ecosystem learns from deployed networks and devices, Release 19 will prioritize improvements relevant to 5G commercialization. These projects strengthen the 5G technology foundation.

A brief overview:

Uplink and downlink MIMO evolution: 5G massive multiple-input, multiple-output (MIMO) capabilities continue to improve, with extra focus on uplink performance and on beam management to reduce overhead and latency. Work is also underway to support up to 128 CSI-RS ports to match the antenna configurations of modern massive MIMO deployments.

Multi-TRP operation also evolves to target scenarios with single-TRP operation in the downlink and multi-TRP operation in the uplink, and feedback is enhanced to improve Coherent Joint Transmission (CJT) support under non-ideal synchronization and backhaul assumptions. Finally, a non-coherent uplink codebook for 3 antenna ports will be specified.

Mobility enhancements: The main goals are supporting inter-CU Layer 2 mobility and related measurement enhancements. Conditional Layer 2 mobility and a new Study Item on AI/ML-based device mobility are also included.

Advanced network topology: This release begins by studying Wireless Access and Backhaul (WAB) and 5G femto-cells before specifying them. WAB is a gNB with a Mobile Terminal (MT) function that provides PDU session backhaul for all 5G deployments. The supporting architecture and protocol stack will be examined. Femto-cells were specified for 3G and 4G but not for 5G, so the existing specifications will be examined for the functionality missing to support 5G femto-cells.

Other system improvements: A project to enhance self-organizing networks (SON) and minimization of drive tests (MDT) was also approved, covering mobility enhancements, Rel-18 RACH optimizations, and carrier aggregation support. A large number of smaller projects to improve performance and efficiency in coverage, multi-carrier operation, multi-SIM, sidelink, positioning, quality of experience, broadcast, device capabilities, and more will be considered for approval in September ’24 based on commercial needs and Release 19 progress.

Additional use case variations

Release 19 will support new and enhanced use cases beyond mobile broadband (smartphones, PCs, fixed wireless access) and vertical services (IoT, automotive) to help 5G reach its full potential. Some key areas getting new features in Release 19:

Ambient IoT: Release 19 will first study architecture and design options, under a harmonized specification, for extremely low-complexity 5G devices with little or no energy storage (i.e., battery-less), with or without signal generation or amplification. Both a topology in which the gNB communicates directly with Ambient IoT tags and one in which the UE acts as the reader are considered.

Enhanced boundless XR: Release 19 will improve traffic scheduling, device power savings, and latency to improve user experiences. Release 18 focused on capacity and power consumption optimizations.

Non-terrestrial networks (NTN): Release 19 will increase uplink capacity and support regenerative payloads for IoT-NTN and NR-NTN. NR-NTN will also improve downlink coverage and add MBS and RedCap support.

New advanced abilities

5G Advanced introduces a second wave of innovations that will transform the 5G system, and Release 19 continues the multi-release study and work on many of these projects. Some examples:

Wireless AI: Release 19 begins the Work Item that follows the extensive Release 18 study of AI/ML for the air interface and for next-generation RAN. The goal is to provide specification support for a general AI/ML framework for the air interface, along with the specific beam management and positioning use cases studied in Release 18. Release 19 will also study channel state information (CSI) enhancement use cases to find larger gains and better understand two-sided AI/ML models.

Network energy savings: Release 19 specifies additional 5G network energy-saving methods. These include studying on-demand SIB1 transmission for UEs in Idle/Inactive mode, specifying on-demand SSB for Connected-mode UEs configured with carrier aggregation, and adapting common signal/channel transmissions.

Low-power wakeup receiver (LP-WUR): The Release 18 Study Item is moving into the Work Item phase, supporting the most efficient signaling (e.g., waveform, measurement, sync) for very low-power IoT use cases (e.g., sensors) with enhanced RedCap features.

6G tech foundation

5G Advanced aims to set 6G technology directions early. While Release 19 is the last “5G only” release (Release 20 is expected to begin 6G studies), several projects are already exploring the technical possibilities of these 6G enablers:

Full duplex: Full duplex is the holy grail of wireless communications because transmitting and receiving in the same band is both rewarding and difficult. Release 19 will standardize subband full duplex with self-interference and cross-link interference mitigation.

Enable new spectrum bands: To meet insatiable capacity demand, the wireless ecosystem is studying the channel characteristics of the 7–24 GHz upper midband spectrum, which can deliver 500 MHz or more of contiguous bandwidth. This could become 6G's wide-area band.

Combining wireless communications with RF sensing is, in the industry's view, a key differentiator for 6G. This synergy can enable sensing-assisted communications, public safety, and other new use cases, and Release 19 will study channel characteristics for sensing various objects. 5G is changing our world, and Qualcomm is excited to lead 5G Advanced and see it transform our world in unprecedented ways.

Read more on Govindhtech.com


Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

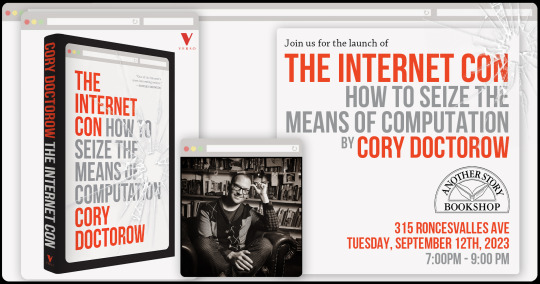

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein


Text

forgot adrien miraculous ladybug was made into an ai and just remembered today. i know this is going to haunt him forever... he is siri. he is the equal to amazon’s alexa. i know hes going to get people running on 0 hours of sleep hearing his voice and giving him voice commands because it doesnt register this is the real life celebrity adrien agreste and not their friend adrien from their phone. a person in a coffee shop line like, “hey adrien, can you set an alarm for 7 am” into their phone and they hear the guy from their phone behind them go “i mean... sure if you want me to i guess i could do that.”

#miraculous ladybug#ml#adrien agreste#cal.ibrations#was talking abt this with vi#like hes not escaping this . level of fame that i dont think people will forget.#like i think if he stopped modelling he'd eventually fall out of relevance#but the ai shit???? no thats staying forever.


Link

Centuries of scientific invention have been driven by the search for new drugs to fight disease. From medicinal plants to synthetic chemistry, we have steadily improved our capacity to design molecules with therapeutic potential. However, the traditional drug discovery process is time-consuming, costly, and fraught with challenges. This is where DRAGONFLY (Drug-target interActome-based GeneratiON oF noveL biologicallY active molecules) comes in: a novel de novo drug design method developed by chemists at ETH Zurich that promises to make creating new therapeutics easier.

The conventional drug discovery pipeline is a slow process involving target identification, hit discovery, lead optimization, and pre-clinical and clinical development. It has produced numerous drugs that have saved millions of lives, but there are limitations.



Text

AI PROMPTS FOR BEGINNERS

Tired of feeling lost in this increasingly AI-filled world? It seems like every day there's new news about AI, promising to change everything around us, right? Are you ready for this change?

Don't worry! With the eBook "Prompts for Beginners," you can enter the world of AI in a simple, fun way, without needing to be a programming expert! Imagine being able to create your own AI projects, and generate amazing responses for anything in your life, from difficult math problems to emotional song lyrics!

Just like fire, the wheel, and electricity changed history, AI is transforming our present and shaping the future. And you, are you going to be left out of this? Learning to use prompts is the key to opening up a world of possibilities with AI.

Don't wait! With this easy-to-follow guide, you won't just learn the basic concepts of AI, but you'll also be able to work with others who are interested, share your creations, and get important feedback. Take advantage of this opportunity to improve your career and open paths to a promising future, with the many possibilities that AI offers. The future is coming! Get your copy of "Prompts for Beginners" and start your journey towards success in the AI era!

AI PROMPTS FOR BEGINNERS

A - Attention

Tired of feeling lost in this increasingly AI-filled world? It seems like every day there's new news about AI, promising to change everything around us, right? Are you ready for this change?

I - Interest

Don't worry! With the eBook "Prompts for Beginners," you can enter the world of AI in a simple, fun way, without needing to be a programming expert! Imagine being able to create your own AI projects and generate amazing responses for anything in your life, from difficult math problems to emotional song lyrics!

D - Desire

Just like fire, the wheel, and electricity changed history, AI is transforming our present and shaping the future. And you, are you going to be left out of this? Learning to use prompts is the key to opening up a world of possibilities with AI.

A - Action

Don't wait! With this easy-to-follow guide, you won't just learn the basic concepts of AI, but you'll also be able to work with others who are interested, share your creations, and get important feedback. Take advantage of this opportunity to improve your career and open paths to a promising future, with the many possibilities that AI offers. The future is coming! Get your copy of "Prompts for Beginners" and start your journey towards success in the AI era!

Don't waste any more time!

Product details

ASIN: B0DK8213XH

Number of pages: 103

https://www.amazon.com.br/AI-PROMPTS-BEGINNERS-Entering-Programming-ebook/dp/B0DK8213XH/ref=mp_s_a_1_3?crid=UYI4FZOX4S2Z&dib=eyJ2IjoiMSJ9.dRjcH1MUAwimVCX7oqoOSd4eXMQxG7QLd-1DUE6AUI4MuSjWWWdhV1211mtNG4NcxUxVysgoouxA1sABKUcUOMuouMh06GRbg3QXqf1vcE4qs5wPz7UffHOYdHxlF_k_2UhDaj_zvq4FifMvxL1i-QrjZQ_LTNvdjMfGtguWbi4.H3FpWEBP3JjVCphGzt2jJRsssUqct9wTs668IBCw0CY&dib_tag=se&keywords=rubem+didini+filho&qid=1729354843&sprefix=rubem+didini+filho%2Caps%2C233&sr=8-3


Text

Statistical Tools

Daily writing prompt: What was the last thing you searched for online? Why were you looking for it?

Checking my most recent Google search, I found that I had asked for papers, published in the last 5 years, that used a Monte Carlo method to check the reliability of a mathematical method for calculating a team's efficacy.

Photo by Andrea Piacquadio on Pexels.com

I was…
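The post breaks off before describing the method, so the following is only a minimal sketch of the general idea behind a Monte Carlo reliability check, not the author's approach. It assumes a hypothetical efficacy metric (the share of games a team wins): simulate many seasons from a known true win probability, apply the metric to each simulated season, and measure how far its estimates land from the truth.

```python
import random
import statistics


def win_rate(results):
    """Hypothetical efficacy metric: fraction of games won (1 = win, 0 = loss)."""
    return sum(results) / len(results)


def monte_carlo_check(true_p, games_per_season, n_trials=10_000, seed=42):
    """Simulate seasons with a known win probability and report how well
    win_rate recovers it. Returns (bias, rmse)."""
    rng = random.Random(seed)  # seeded so the check is reproducible
    estimates = []
    for _ in range(n_trials):
        season = [1 if rng.random() < true_p else 0 for _ in range(games_per_season)]
        estimates.append(win_rate(season))
    bias = statistics.fmean(estimates) - true_p
    rmse = statistics.fmean((e - true_p) ** 2 for e in estimates) ** 0.5
    return bias, rmse


# How reliable is the metric for short vs. long seasons?
for games in (10, 40, 160):
    bias, rmse = monte_carlo_check(true_p=0.6, games_per_season=games)
    print(f"{games:>4} games: bias={bias:+.4f}, rmse={rmse:.4f}")
```

For this simple metric the bias should hover near zero and the RMSE should shrink roughly like sqrt(p(1-p)/n) as the season gets longer; a less well-behaved efficacy formula would be plugged in place of `win_rate` and checked the same way.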


#Adjusted R-Squared#Agile#AI#AIC#Akaike Information Criterion#Akaike Information Criterion (AIC)#Algorithm#algorithm design#Analysis#Artificial Intelligence#Bayesian Information Criterion#Bayesian Information Criterion (BIC)#BIC#Business#Coaching#consulting#Cross-Validation#dailyprompt#dailyprompt-2043#Goodness of Fit#Hypothesis Testing#inputs#Machine Learning#Mathematical Algorithm#Mathematics#Mean Squared Error#ML#Model Selection#Monte Carlo#Monte Carlo Methods


Text

I'm pretty sure all those tools people recognize as ai are actually machine learning because isn't ai supposed to be predictive rather than generative

#<- guy who didn't pay attention and got a D in ai class#like most of the work we did in that class was pathfinding for robots#I'm almost sure all that generative stuff being touted as AI is actually ML#they use the same math but they got different meanings#idk I guess it's something like the name ai has already been recognized by non-tech people as something else#so what's the point in trying to correct the distinction#I guess something like autocorrect could be touted as NLP or AI or ML#idek what I'm saying anymore#I'm watching baseball and theres this thing Google calls ai to overanalyze the game lol#I'm almost sure it's just a regular degular data collection model of every play#if you want VCs to give you money just slap the letters AI into the title#if you want to sell just make sure it's got AI in the name#stupid


Text

python generate questions for a given context

```python
# For a given context, generate questions using a seq2seq AI model.
# https://pythonprogrammingsnippets.tumblr.com
import torch
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

device = torch.device("cpu")

tokenizer = AutoTokenizer.from_pretrained("voidful/context-only-question-generator")
model = AutoModelForSeq2SeqLM.from_pretrained("voidful/context-only-question-generator").to(device)


def get_questions_for_context(context, model, tokenizer, num_count=5):
    # Beam search with num_count beams; every beam is returned as a candidate question.
    inputs = tokenizer(context, return_tensors="pt").to(model.device)
    with torch.no_grad():
        outputs = model.generate(**inputs, num_beams=num_count, num_return_sequences=num_count)
    return [tokenizer.decode(output, skip_special_tokens=True) for output in outputs]


def get_question_for_context(context, model, tokenizer):
    # Return only the top-ranked question.
    return get_questions_for_context(context, model, tokenizer)[0]


# Split a context string on "." and return one question per sentence.
def context_sentences_to_questions(context, model, tokenizer):
    questions = []
    for sentence in context.split("."):
        if not sentence.strip():
            continue  # skip blank or whitespace-only pieces
        question = get_question_for_context(sentence, model, tokenizer)
        questions.append(question)
    return questions
```

example 1 (split a string by "." and process):

```python
context = "The capital of France is Paris."
context += "The capital of Germany is Berlin."
context += "The capital of Spain is Madrid."
context += "He is a dog named Robert."

if len(context.split(".")) > 2:
    # Several sentences: generate one question per sentence.
    questions = context_sentences_to_questions(context, model, tokenizer)
    print(questions)
else:
    question = get_question_for_context(context, model, tokenizer)
    print(question)
```

output:

['What is the capital of France?', 'What is the capital of Germany?', 'What is the capital of Spain?', 'Who is Robert?']

example 2 (generate multiple questions for a given context):

```python
print("\r\n\r\n")
context = "She walked to the store to buy a jug of milk."
print("Context:\r\n", context)
print("")
questions = get_questions_for_context(context, model, tokenizer, num_count=15)
# pretty print all the questions
print("Generated Questions:")
for question in questions:
    print(question)
print("\r\n\r\n")
```

output:

```text
Generated Questions:
Where did she go to buy milk?
What did she walk to the store to buy?
Why did she walk to the store to buy milk?
Why did she go to the store?
Why did she go to the grocery store?
What did she go to the store to buy?
Where did the woman go to buy milk?
Why did she go to the store to buy milk?
What did she buy at the grocery store?
Why did she walk to the store?
What kind of milk did she buy at the store?
Where did she walk to buy milk?
What kind of milk did she buy?
Where did she go to get milk?
What did she buy at the store?
```

and if we wanted to answer those questions (ez pz):

```python
# Now generate an answer for a given question (extractive question answering).
import torch
from transformers import AutoTokenizer, AutoModelForQuestionAnswering

# Separate names so the question-generation model/tokenizer above are not overwritten.
qa_tokenizer = AutoTokenizer.from_pretrained("deepset/tinyroberta-squad2")
qa_model = AutoModelForQuestionAnswering.from_pretrained("deepset/tinyroberta-squad2")


def get_answer_for_question(question, context, model, tokenizer):
    inputs = tokenizer(question, context, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)
    # The answer span runs from the most likely start token to the most likely end token.
    answer_start_index = int(outputs.start_logits.argmax())
    answer_end_index = int(outputs.end_logits.argmax())
    if answer_end_index < answer_start_index:
        return ""  # no valid span found
    predict_answer_tokens = inputs.input_ids[0, answer_start_index : answer_end_index + 1]
    return tokenizer.decode(predict_answer_tokens, skip_special_tokens=True)


print("Context:\r\n", context, "\r\n")
for question in questions:
    # right-pad the question to 50 characters so the answers line up
    question_text = question.ljust(50)
    answer = get_answer_for_question(question, context, qa_model, qa_tokenizer)
    print("Question: ", question_text, "Answer: ", answer)
```

#python#ai#ml#generate questions#questions#question answer#qa#text generation#text#generation#generate text#ai generation#llm#large language models#large language model#artificial intelligence#context#AutoModelForSeq2SeqLM#tokenizer#tokens#question answer model#model#models#voidful/context-only-question-generator#data processing#datascience#data science#science#compsci#language


Text

ChatGPT, LLMs, Plagiarism, & You

This is the first in a series of posts about ChatGPT, LLMs, and plagiarism that I will be making. This is a side blog, so please ask questions in reblogs and my ask box.

Why do I know what I'm talking about?

I am a machine learning engineer who specializes in natural language processing (NLP). I write code that uses LLMs every day at work and am intimately familiar with OpenAI. I have read dozens of scientific papers on the subject and understand how they work in extreme detail. I have 6 years of experience in the industry, plus a graduate degree in the subject. I got into NLP because I knew it was going to pop off, and now here we are.

Yeah, but why should I trust you?

I've been a Tumblr user for 8 years. I've posted my own art and fanart on the site. I've published writing, both original and fanfiction, on Tumblr and AO3. I've been a Reddit user for over a decade. I'm a citizen of the internet as much as I am an engineer.

What is an LLM?

LLM stands for Large Language Model. The most famous example of an LLM is ChatGPT, which was created by OpenAI.

What is a model?

A model is an algorithm or piece of math that lets you predict or mimic how something behaves. For example:

The National Weather Service runs weather models that predict how much it's going to rain based on data they collect about the atmosphere

Netflix has recommendation models that predict whether you'd like a movie or not based on your demographics, what you've watched in the past, and what other people have liked

The Federal Reserve has economic models that predict how inflation will change if they increase or lower interest rates

Instagram has spam models that look at DMs and automatically decide whether they're spam or not

Models are useful because they can often make decisions or describe situations better than a human could. The weather and economic models are good examples of this. The science of rain is so complicated that it's practically impossible for a human to make sense of all the numbers involved, but models are able to do so.

Models are also useful because they can make thousands or millions of decisions much faster than a human could. The recommendations and spam models are good examples of this. Imagine how expensive it would be to run Instagram if a human had to review every single DM and decide whether it was spam.
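To make "a piece of math that lets you predict" concrete, here is a minimal sketch of about the simplest model there is: a straight line fit to data by least squares. The humidity and rainfall numbers are made up purely for illustration; real weather models are vastly more complex.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: humidity (%) -> rainfall (mm). Illustrative only.
humidity = [40, 55, 70, 85, 95]
rainfall = [0.0, 1.0, 3.0, 5.0, 6.5]

slope, intercept = fit_line(humidity, rainfall)


def predict(h):
    # The whole "model" is these two learned numbers (its weights).
    return slope * h + intercept


print(round(predict(80), 2))
```

An LLM follows the same pattern of learned numbers turning an input into a prediction, just with billions of weights instead of two.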

What is a language model?

A language model is a model that can look at a piece of text and tell you how likely it is. For example, a language model can tell you that the phrase "the sky is blue" is more likely to have been written than "the sky is peanuts."
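A real LLM is enormously more complex, but the core idea of "how likely is this text?" can be sketched with a toy bigram model: count which word follows which in a tiny corpus, then multiply the conditional probabilities. The corpus and the add-one smoothing here are assumptions for illustration; no production LLM works from raw counts like this.

```python
from collections import Counter, defaultdict

# Tiny made-up training corpus (illustrative only).
corpus = [
    "the sky is blue",
    "the sea is blue",
    "the sky is clear",
    "i ate peanuts",
]

# Count how often each word follows each previous word ("<s>" marks the start).
bigrams = defaultdict(Counter)
vocab = set()
for sentence in corpus:
    words = ["<s>"] + sentence.split()
    vocab.update(words)
    for prev, word in zip(words, words[1:]):
        bigrams[prev][word] += 1


def probability(text):
    """P(text) as a product of P(word | previous word), with add-one smoothing
    so unseen word pairs get a small but nonzero probability."""
    words = ["<s>"] + text.lower().split()
    p = 1.0
    for prev, word in zip(words, words[1:]):
        counts = bigrams[prev]
        p *= (counts[word] + 1) / (sum(counts.values()) + len(vocab))
    return p


print(probability("the sky is blue") > probability("the sky is peanuts"))  # True
```

"the sky is peanuts" still gets a nonzero score thanks to smoothing; it is just far less likely than "the sky is blue", because "peanuts" never followed "is" in the training text.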

Why is this useful? You can use language models to generate text by picking the letters and words that the model scores highly. Say you have the phrase "I ate a" and you're picking what comes next. You can run through every option, see how likely the language model thinks it is, and pick the best one. For example:

I ate a sandwich: score = .7

I ate a $(iwnJ98: score = .1

I ate a me: score = .2

So we pick "sandwich" and now have the phrase "I ate a sandwich." We can keep doing this process over and over to get more and more text. "I ate a sandwich for lunch today. It was delicious."
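The pick-the-best-option loop described above is usually called greedy decoding. Here is a minimal sketch of it, assuming a hypothetical hard-coded score table standing in for the language model (`SCORES` and `next_word_scores` are invented for illustration; a real model computes scores for every word in its vocabulary from its weights).

```python
# Hypothetical stand-in for a language model: for the text so far,
# candidate next words and their scores. Made up for illustration.
SCORES = {
    "I ate a": {"sandwich": 0.7, "$(iwnJ98": 0.1, "me": 0.2},
    "I ate a sandwich": {"for": 0.6, ".": 0.3, "banana": 0.1},
    "I ate a sandwich for": {"lunch": 0.8, "the": 0.2},
    "I ate a sandwich for lunch": {"today.": 0.9, "yesterday.": 0.1},
}


def next_word_scores(text):
    # Unknown context: no candidates, which ends generation.
    return SCORES.get(text, {})


def generate_greedy(prompt, max_words=10):
    """Repeatedly append the highest-scoring next word (greedy decoding)."""
    text = prompt
    for _ in range(max_words):
        scores = next_word_scores(text)
        if not scores:
            break
        best = max(scores, key=scores.get)
        text = f"{text} {best}"
    return text


print(generate_greedy("I ate a"))  # I ate a sandwich for lunch today.
```

Real systems often sample from the scores instead of always taking the top one, which makes the output more varied.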

What makes a large language model large?

Large language models are large in a few different ways:

Under the hood, they are made of a bunch of numbers called "weights" that describe a monstrously complicated mathematical equation. Large language models have a ton of weights: tens or even hundreds of billions of them.

Large language models are trained on large amounts of text. This text comes mostly from the internet but also includes books that are out of copyright. This is the source of controversy about them and plagiarism, and I will cover it in greater detail in a future post.

Large language models are a large undertaking: they're expensive and difficult to create and run. This is why you basically only see them coming out of large or well-funded companies like OpenAI, Google, and Facebook. They require an incredible amount of technical expertise and computational resources (computers) to create.

Why are LLMs powerful?

"Generating likely text" is neat and all, but why do we care? Consider this:

An LLM can tell you that:

the text "Hello" is more likely to have been written than "$(iwnJ98"

the text "I ran to the store" is more likely to have been written than "I runned to the store"

the text "the sky is blue" is more likely to have been written than "the sky is green"

Each of them gets us something:

LLMs understand spelling

LLMs understand grammar

LLMs know things about the world

So we now have an infinitely patient robot that we can interact with using natural language and get it to do stuff for us.

Detecting spam: "Is this spam, yes or no? Check out rxpharmcy.ca now for cheap drugs now."

Personal language tutoring: "What is wrong with this sentence? Me gusto gatos."

Copy editing: "I'm not a native English speaker. Can you help me rewrite this email to make sure it sounds professional? 'Hi Akash, I hope...'"

Help learning new subjects: "Why is the sky blue? I'm only in middle school, so please don't make the explanation too complicated."

And countless other things.

#codeblr#software engineer#software#swe#chatgpt#chat gpt#gpt4#nlp#natural language processing#machine learning#ml#ai#artificial intelligence#agi#llm#llms#language models#large language models#computer science#generative#generative ai#generative models#python#tensorflow#torch#pytorch#neurips#openai#google#facebook


Text

I get mad whenever I see anyone talking about ML/AI on the internet, because it makes me so incandescently mad that it's being used wrong when there are genuinely really good applications!! And all people want to do is fucking replace people's jobs but do it worse, or shove it into random shit!!! And it also makes me mad that it maligns all the existing cool and good projects, which get painted with the same tainted brush, so anything ML ever gets slapped with the "AI bad" label automatically out of reflex because of all the bullshit!!! Aughhhhh

#jasper.txt#im sorry i have not slept for 24 hours i am traveling and very tired so sorry for the incoherency#i wish people would realize that 1) its not a machine god or evil incarnate its just a technological tool#its being used for bad because its being used to explicitly benefit capitalism not because of an inherent property of the thing itself#2) computers are very very very stupid#and ai will just overfit and lie so it can get more correctness points#the inherent contradiction of 'general ai' is that machine learning is inherently hyper specialized#like chat gpt isnt a search engine its a language machine#it makes language#there is no such thing as verification or correctness of data#ive cared a lot about how to ethically create ml models for a long time and it makes me really really mad the way things turn out ok


Text

i’ve talked about this before but to fight back against this (intentional) marketing obfuscation you need to figure out and use the real names for these things

what makes NPCs in video games do things: NPC behaviour (this is a hard one since it’s been called AI the longest, so this might not be best)

complex scientific models: machine learning

non-generative tools that automate tedious processes: scripts / programs / apps

generative tools: large language models (LLMs - things like chatgpt) or image generation (DALL-E or Midjourney)

using the right terminology for this stuff helps fight back against this and also helps understand what it actually is.

chatgpt isn’t an ‘AI’ it’s a large language model, which is just something that’s been trained on a huge amount of text and can fairly accurately predict what the next word of a given sentence is.

Midjourney isn’t an ‘AI’ it’s an image generator, in that you give it some textual prompt and it will generate an image that, based on its training, should closely match that text

i will fight to the ends of the earth to try and get this naming more widely used because the current atmosphere of calling everything mildly interesting ‘AI’ has completely ruined the term to the point of unusability. AI no longer means anything because it can mean everything that a computer can do but we still have other terms with more specific meanings that accurately describe what these things are doing and we should use them

#this is my area of specialty so it frustrates me a lot#my degree has a specialisation in AI which mostly means i studied ML models#i will reblog this many times over the next few weeks so prep for that#lizabeth talkabeth


Text

SEMANTIC TREE AND AI TECHNOLOGIES

Semantic Tree learning and AI technologies can be combined to solve problems by leveraging the power of natural language processing and machine learning.

Semantic trees are a knowledge representation technique that organizes information in a hierarchical, tree-like structure.

Each node in the tree represents a concept or entity, and the connections between nodes represent the relationships between those concepts.

This structure allows for the representation of complex, interconnected knowledge in a way that can be easily navigated and reasoned about.
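As a minimal sketch, assuming a simple is-a hierarchy in which every concept has exactly one parent, such a tree can be represented and navigated in plain Python. The `Concept` class and the example concepts are hypothetical:

```python
class Concept:
    """A node in a semantic tree; children are more specific concepts (hyponyms)."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []

    def add_child(self, name):
        """Create a more specific concept under this one and return it."""
        child = Concept(name, parent=self)
        self.children.append(child)
        return child

    def ancestors(self):
        """List increasingly general concepts (hypernyms) up to the root."""
        chain, node = [], self.parent
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return chain

    def is_a(self, concept):
        """True if this concept is, or falls under, the named concept."""
        return self.name == concept or concept in self.ancestors()


# Build a tiny hypothetical hierarchy: entity -> animal -> {mammal -> dog, bird}
entity = Concept("entity")
animal = entity.add_child("animal")
dog = animal.add_child("mammal").add_child("dog")
bird = animal.add_child("bird")

print(dog.ancestors())      # ['mammal', 'animal', 'entity']
print(dog.is_a("animal"))   # True
print(bird.is_a("mammal"))  # False
```

Walking upward like this is the simplest kind of reasoning the structure supports: knowing that a dog is a mammal and a mammal is an animal lets the program conclude that a dog is an animal without storing that fact directly.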

CONCEPTS

Semantic Tree: A structured representation where nodes correspond to concepts and edges denote relationships (e.g., hypernyms, hyponyms, synonyms).

Meaning: Understanding the context, nuances, and associations related to words or concepts.

Natural Language Understanding (NLU): AI techniques for comprehending and interpreting human language.

First Principles: Fundamental building blocks or core concepts in a domain.

AI (Artificial Intelligence): AI refers to the development of computer systems that can perform tasks that typically require human intelligence. AI technologies include machine learning, natural language processing, computer vision, and more. These technologies enable computers to understand, reason, learn, and make decisions.

Natural Language Processing (NLP): NLP is a branch of AI that focuses on the interaction between computers and human language. It involves the analysis and understanding of natural language text or speech by computers. NLP techniques are used to process, interpret, and generate human language.

Machine Learning (ML): Machine Learning is a subset of AI that enables computers to learn and improve from experience without being explicitly programmed. ML algorithms can analyze data, identify patterns, and make predictions or decisions based on the learned patterns.

Deep Learning: A subset of machine learning that uses neural networks with multiple layers to learn complex patterns.

EXAMPLES OF APPLYING SEMANTIC TREE LEARNING WITH AI

1. Text Classification: Semantic Tree learning can be combined with AI to solve text classification problems. By training a machine learning model on labeled data, the model can learn to classify text into different categories or labels. For example, a customer support system can use semantic tree learning to automatically categorize customer queries into different topics, such as billing, technical issues, or product inquiries.

2. Sentiment Analysis: Semantic Tree learning can be used with AI to perform sentiment analysis on text data. Sentiment analysis aims to determine the sentiment or emotion expressed in a piece of text, such as positive, negative, or neutral. By analyzing the semantic structure of the text using Semantic Tree learning techniques, machine learning models can classify the sentiment of customer reviews, social media posts, or feedback.

3. Question Answering: Semantic Tree learning combined with AI can be used for question answering systems. By understanding the semantic structure of questions and the context of the information being asked, machine learning models can provide accurate and relevant answers. For example, a chatbot can use Semantic Tree learning to understand user queries and provide appropriate responses based on the analyzed semantic structure.

4. Information Extraction: Semantic Tree learning can be applied with AI to extract structured information from unstructured text data. By analyzing the semantic relationships between entities and concepts in the text, machine learning models can identify and extract specific information. For example, an AI system can extract key information like names, dates, locations, or events from news articles or research papers.
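The customer-support example in item 1 can be sketched without any ML library by descending a topic tree. In this minimal sketch, keyword overlap is a deliberately simple stand-in for a trained classifier, and the topics and keywords in `TOPIC_TREE` are hypothetical:

```python
import re

# Hypothetical topic tree: topic -> (keywords that signal it, subtopics)
TOPIC_TREE = {
    "billing": ({"invoice", "charge", "charged", "refund", "payment"}, {
        "refunds": ({"refund"}, {}),
    }),
    "technical issues": ({"error", "crash", "login", "bug"}, {}),
    "product inquiries": ({"price", "feature", "available"}, {}),
}


def route(query, tree=TOPIC_TREE):
    """Follow the best-matching topic at each level; return the path from general to specific."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    path, level = [], tree
    while level:
        overlaps = {topic: len(words & keywords) for topic, (keywords, _) in level.items()}
        best = max(overlaps, key=overlaps.get)
        if overlaps[best] == 0:
            break  # no subtopic matches, so stop at the more general topic
        path.append(best)
        level = level[best][1]
    return path or ["general"]


print(route("I was charged twice, can I get a refund?"))  # ['billing', 'refunds']
print(route("The app shows an error at login"))           # ['technical issues']
print(route("My invoice looks wrong"))                    # ['billing']
```

Because the result is a path rather than a single label, it also records why a query ended up where it did.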

Python Code Snippets for Semantic Tree Learning with AI

Here are four small Python code snippets that demonstrate the four tasks above using popular libraries:

1. Text Classification with scikit-learn:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Training data
texts = ['This is a positive review', 'This is a negative review', 'This is a neutral review']
labels = ['positive', 'negative', 'neutral']

# Vectorize the text data
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(texts)

# Train a logistic regression classifier
classifier = LogisticRegression()
classifier.fit(X, labels)

# Predict the label for a new text
new_text = 'This is a positive sentiment'
new_text_vectorized = vectorizer.transform([new_text])
predicted_label = classifier.predict(new_text_vectorized)
print(predicted_label)
```

2. Sentiment Analysis with TextBlob:

```python
from textblob import TextBlob

# Analyze sentiment of a text
text = 'This is a positive sentence'
blob = TextBlob(text)
sentiment = blob.sentiment.polarity

# Classify sentiment based on polarity (ranges from -1.0 to 1.0)
if sentiment > 0:
    sentiment_label = 'positive'
elif sentiment < 0:
    sentiment_label = 'negative'
else:
    sentiment_label = 'neutral'

print(sentiment_label)
```

3. Question Answering with Transformers:

```python
from transformers import pipeline

# Load the question answering model
qa_model = pipeline('question-answering')

# Provide context and ask a question
context = 'The Semantic Web is an extension of the World Wide Web.'
question = 'What is the Semantic Web?'

# Get the answer
answer = qa_model(question=question, context=context)
print(answer['answer'])
```

4. Information Extraction with spaCy:

```python
import spacy

# Load the English language model
nlp = spacy.load('en_core_web_sm')

# Process text and extract named entities
text = 'Apple Inc. is planning to open a new store in New York City.'
doc = nlp(text)

# Extract named entities
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

APPLICATIONS OF SEMANTIC TREE LEARNING WITH AI

Semantic Tree learning combined with AI can be used in various domains and industries to solve problems. Here are some examples of where it can be applied:

1. Customer Support: Semantic Tree learning can be used to automatically categorize and route customer queries to the appropriate support teams, improving response times and customer satisfaction.

2. Social Media Analysis: Semantic Tree learning with AI can be applied to analyze social media posts, comments, and reviews to understand public sentiment, identify trends, and monitor brand reputation.

3. Information Retrieval: Semantic Tree learning can enhance search engines by understanding the meaning and context of user queries, providing more accurate and relevant search results.

4. Content Recommendation: By analyzing the semantic structure of user preferences and content metadata, Semantic Tree learning with AI can be used to personalize content recommendations in platforms like streaming services, news aggregators, or e-commerce websites.
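For the content-recommendation case, one common way to measure how related two concepts in a tree are is Wu-Palmer similarity: twice the depth of their deepest shared ancestor, divided by the sum of their depths. Here is a minimal sketch, assuming each catalog item is represented by a single genre concept; the taxonomy in `PARENT` is hypothetical:

```python
# Hypothetical content taxonomy, stored as child -> parent
PARENT = {
    "movies": "content", "news": "content",
    "sci-fi": "movies", "comedy": "movies", "politics": "news",
    "space opera": "sci-fi", "cyberpunk": "sci-fi",
}


def path_to_root(concept):
    """Return [concept, parent, grandparent, ..., root]."""
    path = [concept]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def depth(concept):
    """Depth counted from the root, which has depth 1."""
    return len(path_to_root(concept))


def wu_palmer(a, b):
    """2 * depth(deepest shared ancestor) / (depth(a) + depth(b)); 1.0 means identical."""
    ancestors_of_b = set(path_to_root(b))
    shared = next(c for c in path_to_root(a) if c in ancestors_of_b)
    return 2 * depth(shared) / (depth(a) + depth(b))


def recommend(liked, catalog, k=2):
    """Rank catalog concepts by similarity to something the user liked."""
    return sorted(catalog, key=lambda item: wu_palmer(liked, item), reverse=True)[:k]


print(wu_palmer("cyberpunk", "space opera"))                          # 0.75
print(recommend("cyberpunk", ["politics", "comedy", "space opera"]))  # ['space opera', 'comedy']
```

Items that share a deep, specific ancestor (two kinds of sci-fi) score higher than items that only meet near the root (a movie genre and a news topic).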

Semantic Tree learning combined with AI technologies enables the understanding and analysis of text data, leading to improved problem-solving capabilities in various domains.

COMBINING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Semantic Reasoning: By integrating semantic trees with AI, systems can engage in more sophisticated reasoning and decision-making. The semantic tree provides a structured representation of knowledge, while AI techniques like natural language processing and knowledge representation can be used to navigate and reason about the information in the tree.

2. Explainable AI: Semantic trees can make AI systems more interpretable and explainable. The hierarchical structure of the tree can be used to trace the reasoning process and understand how the system arrived at a particular conclusion, which is important for building trust in AI-powered applications.

3. Knowledge Extraction and Representation: AI techniques like machine learning can be used to automatically construct semantic trees from unstructured data, such as text or images. This allows for the efficient extraction and representation of knowledge, which can then be used to power various problem-solving applications.

4. Hybrid Approaches: Combining semantic trees and AI can lead to hybrid approaches that leverage the strengths of both. For example, a system could use a semantic tree to represent domain knowledge and then apply AI techniques like reinforcement learning to optimize decision-making within that knowledge structure.
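Item 3 mentions building semantic trees automatically from unstructured text. As a deliberately crude sketch of that idea, the code below uses a regular expression for "X is a Y" sentences in place of a trained extraction model; the pattern and the sample text are assumptions for illustration:

```python
import re

# Matches simple definitional sentences such as "A dog is a mammal."
IS_A_PATTERN = re.compile(r"\b(?:an?\s+)?([a-z]+) is an? ([a-z]+)", re.IGNORECASE)


def extract_is_a(text):
    """Build a child -> parent map from 'X is a Y' sentences (a crude pattern, not a parser)."""
    return {child.lower(): parent.lower() for child, parent in IS_A_PATTERN.findall(text)}


def ancestors(concept, tree):
    """Follow parent links upward, stopping if a cycle is detected."""
    chain = []
    while concept in tree and tree[concept] not in chain:
        concept = tree[concept]
        chain.append(concept)
    return chain


text = "A dog is a mammal. A mammal is an animal. A sparrow is a bird. A bird is an animal."
tree = extract_is_a(text)
print(tree)  # {'dog': 'mammal', 'mammal': 'animal', 'sparrow': 'bird', 'bird': 'animal'}
print(ancestors("dog", tree))  # ['mammal', 'animal']
```

Real text is far messier than these sentences, which is why the item points to machine learning for this step; the output format (a child-to-parent map) is the part that carries over.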

EXAMPLES OF APPLYING SEMANTIC TREE AND AI FOR PROBLEM SOLVING

1. Medical Diagnosis: A semantic tree could represent the relationships between symptoms, diseases, and treatments. AI techniques like natural language processing and machine learning could be used to analyze patient data, navigate the semantic tree, and provide personalized diagnosis and treatment recommendations.

2. Robotics and Autonomous Systems: Semantic trees could be used to represent the knowledge and decision-making processes of autonomous systems, such as self-driving cars or drones. AI techniques like computer vision and reinforcement learning could be used to navigate the semantic tree and make real-time decisions in dynamic environments.

3. Financial Analysis: Semantic trees could be used to model complex financial relationships and market dynamics. AI techniques like predictive analytics and natural language processing could be applied to the semantic tree to identify patterns, make forecasts, and support investment decisions.

4. Personalized Recommendation Systems: Semantic trees could be used to represent user preferences, interests, and behaviors. AI techniques like collaborative filtering and content-based recommendation could be used to navigate the semantic tree and provide personalized recommendations for products, content, or services.

PYTHON CODE SNIPPETS

1. Semantic Tree Construction using NetworkX:

```python
import networkx as nx
import matplotlib.pyplot as plt

# Create a semantic tree as a directed graph (edges point from general to specific concepts)
G = nx.DiGraph()
G.add_node("root", label="Root")
G.add_node("concept1", label="Concept 1")
G.add_node("concept2", label="Concept 2")
G.add_node("concept3", label="Concept 3")
G.add_edge("root", "concept1")
G.add_edge("root", "concept2")
G.add_edge("concept2", "concept3")

# Visualize the semantic tree, drawing each node's "label" attribute instead of its ID
pos = nx.spring_layout(G)
nx.draw(G, pos, labels=nx.get_node_attributes(G, "label"), with_labels=True)
plt.show()
```

2. Semantic Reasoning using PyKEEN:

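```python
import torch
from pykeen.pipeline import pipeline
from pykeen.triples import TriplesFactory

# Load a knowledge graph from a tab-separated file of (head, relation, tail) triples
# ("./dataset/triples.tsv" is a placeholder path)
tf = TriplesFactory.from_path("./dataset/triples.tsv")
training, testing = tf.split([0.8, 0.2])

# Train a TransE model on the knowledge graph
result = pipeline(
    training=training,
    testing=testing,
    model="TransE",
    training_kwargs=dict(num_epochs=100),
)
model = result.model

# Perform semantic reasoning: score a (head, relation, tail) triple.
# The labels must exist in the dataset; higher scores mean more plausible triples.
head, relation, tail = "concept1", "isRelatedTo", "concept3"
hrt = torch.tensor(
    [[tf.entity_to_id[head], tf.relation_to_id[relation], tf.entity_to_id[tail]]],
    device=model.device,
)
model.eval()
with torch.no_grad():
    score = model.score_hrt(hrt).item()
print(f"The score for the triple ({head}, {relation}, {tail}) is: {score}")
```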

3. Knowledge Extraction using spaCy:

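```python
import spacy
from spacy import displacy

# Load the spaCy model
nlp = spacy.load("en_core_web_sm")

# Parse the text
text = "The quick brown fox jumps over the lazy dog."
doc = nlp(text)

# Extract named entities (this sentence has none, so the list is empty)
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)

# Extract simple (head, relation, dependent) relations from the dependency parse
relations = [(tok.head.text, tok.dep_, tok.text) for tok in doc if tok.dep_ != "ROOT"]
print(relations)

# Visualize the dependency structure; outside Jupyter, render() returns an HTML string
html = displacy.render(doc, style="dep", jupyter=False)
```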

4. Hybrid Approach using Ray:

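```python
import ray
# Older RLlib API (Ray 1.x); newer releases moved PPO to ray.rllib.algorithms.ppo
from ray.rllib.agents.ppo import PPOTrainer
from ray.rllib.env.multi_agent_env import MultiAgentEnv
from ray.rllib.models import ModelCatalog
from ray.rllib.models.tf.tf_modelv2 import TFModelV2


# Skeleton of a custom model that integrates a semantic tree
class SemanticTreeModel(TFModelV2):
    def __init__(self, obs_space, action_space, num_outputs, model_config, name):
        super().__init__(obs_space, action_space, num_outputs, model_config, name)
        # TODO: build the network layers and combine them with semantic-tree features

    def forward(self, input_dict, state, seq_lens):
        raise NotImplementedError("Combine observations with semantic-tree features here")

    def value_function(self):
        raise NotImplementedError("Return the value estimate for the last forward pass")


# Skeleton of a multi-agent environment whose dynamics use the semantic tree
class SemanticTreeEnv(MultiAgentEnv):
    def __init__(self, env_config=None):
        super().__init__()
        self.semantic_tree = None  # placeholder: initialize the semantic tree here
        self.agents = []  # placeholder: define the agents here

    def reset(self):
        raise NotImplementedError("Return the initial observation for each agent")

    def step(self, actions):
        raise NotImplementedError("Implement the environment dynamics using the semantic tree")


if __name__ == "__main__":
    # Train the hybrid model with PPO once the placeholders above are implemented
    ray.init()
    ModelCatalog.register_custom_model("semantic_tree_model", SemanticTreeModel)
    config = {
        "env": SemanticTreeEnv,
        "framework": "tf",
        "model": {"custom_model": "semantic_tree_model"},
    }
    trainer = PPOTrainer(config=config)
    trainer.train()
```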

APPLICATIONS

The combination of semantic trees and AI can be applied to a wide range of problem domains, including:

- Healthcare: Improving medical diagnosis, treatment planning, and drug discovery.

- Finance: Enhancing investment strategies, risk management, and fraud detection.

- Robotics and Autonomous Systems: Enabling more intelligent and adaptable decision-making in complex environments.

- Education: Personalizing learning experiences and providing intelligent tutoring systems.

- Smart Cities: Optimizing urban planning, transportation, and resource management.

- Environmental Conservation: Modeling and predicting environmental changes, and supporting sustainable decision-making.

- Chatbots and Virtual Assistants:
  - Use semantic trees to understand user queries and provide context-aware responses.
  - Apply NLU models to extract meaning from user input.

- Information Retrieval:
  - Build semantic search engines that understand user intent beyond keyword matching.
  - Combine semantic trees with vector embeddings (e.g., BERT) for better search results.

- Medical Diagnosis:
  - Create semantic trees for medical conditions, symptoms, and treatments.
  - Use AI to match patient symptoms to relevant diagnoses.

- Automated Content Generation:
  - Construct semantic trees for topics (e.g., climate change, finance).
  - Generate articles, summaries, or reports based on semantic understanding.

RDIDINI PROMPT ENGINEER

#semantic tree#ai solutions#ai-driven#ai trends#ai system#ai model#ai prompt#ml#ai predictions#llm#dl#nlp


Text

Leading AI Software Development Solutions in Malaysia

Empowering businesses in Malaysia with cutting-edge AI software development, tailored software solutions, and innovative automation tools to drive growth and efficiency.

#NLP Services in Malaysia#ML Development Company in Malaysia#AI Software Development in Malaysia#AI Model Training in Malaysia#AI Development Service in Malaysia


Text

To continue beating the horse...

Spoiler: almost certainly.

"chatGPT will confidently spit out information cobbled together from various sources in its dataset that sounds correct even when it blatantly isn't"

correct! that's why it's important to remember that chatbots don't have any sort of inherent fact-checking

"this means it's LYING to you! why, i work at a library, and just the other day, i had three college students submit lists of entirely nonexistent articles that chatGPT had cited as sources!"

well i think "lying" is anthropomorphizing it a little bit too m- oh my god what the fuck graduate students are using chatGPT as a resource? for writing PAPERS???? and not even googling the articles they asked you for first??? and you think the issue here is fucking CHATGPT???????
