#AI research 2024

Text

Latest Modern Advances in Prompt Engineering: A Comprehensive Guide

New Post has been published on https://thedigitalinsider.com/latest-modern-advances-in-prompt-engineering-a-comprehensive-guide/

Prompt engineering, the art and science of crafting prompts that elicit desired responses from large language models (LLMs), has become a crucial area of research and development.

From enhancing reasoning capabilities to enabling seamless integration with external tools and programs, the latest advances in prompt engineering are unlocking new frontiers in artificial intelligence. In this technical post, we’ll look at the cutting-edge techniques and strategies shaping the future of prompt engineering.

Advanced Prompting Strategies for Complex Problem-Solving

While chain-of-thought (CoT) prompting has proven effective for many reasoning tasks, researchers have explored more advanced prompting strategies to tackle even harder problems. One such approach is Least-to-Most Prompting, which breaks a complex problem into smaller, more manageable sub-problems and solves them in sequence, feeding each answer into the next sub-problem until the final solution is reached.

Another innovative technique is Tree of Thoughts (ToT) prompting, which lets the LLM generate multiple lines of reasoning, or “thoughts,” in parallel, evaluate its own progress toward the solution, and backtrack or explore alternative paths as needed. It combines these thoughts with search algorithms such as breadth-first or depth-first search, so the LLM can look ahead and backtrack during problem solving.
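As a rough illustration of the breadth-first variant, the sketch below keeps only the most promising partial solutions at each depth. The `propose` and `score` functions are hypothetical stand-ins for the LLM calls that generate and evaluate thoughts; here they are plain Python on a toy problem (pick numbers from {1, 2, 5} that sum to 8), so no model is involved. A depth-first variant with explicit backtracking would be structured differently.

```python
from typing import Callable, List, Optional, TypeVar

State = TypeVar("State")

def tot_bfs(
    root: State,
    propose: Callable[[State], List[State]],  # stand-in for "LLM proposes next thoughts"
    score: Callable[[State], float],          # stand-in for "LLM evaluates progress"
    is_goal: Callable[[State], bool],
    beam_width: int = 2,
    max_depth: int = 5,
) -> Optional[State]:
    """Breadth-first search over thoughts, keeping the best `beam_width` states per level."""
    frontier = [root]
    for _ in range(max_depth):
        # Expand every state on the frontier into candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        for candidate in candidates:
            if is_goal(candidate):
                return candidate
        if not candidates:
            return None  # every branch is a dead end
        # Keep only the most promising thoughts; weaker branches are pruned.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return None

# Hypothetical toy problem: choose numbers from {1, 2, 5} that sum exactly to 8.
TARGET = 8

def propose(state):
    return [state + (n,) for n in (1, 2, 5) if sum(state) + n <= TARGET]

def score(state):
    return -abs(TARGET - sum(state))

def is_goal(state):
    return sum(state) == TARGET

print(tot_bfs((), propose, score, is_goal))  # (5, 2, 1)
```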

Integrating LLMs with External Tools and Programs

While LLMs are incredibly powerful, they have inherent limitations, such as no access to up-to-date information and unreliable precise arithmetic. To address these drawbacks, researchers have developed techniques that let LLMs integrate with external tools and programs.

One notable example is Toolformer, which teaches an LLM to recognize when an external tool is needed, which tool to call, what input to pass, and how to incorporate the tool’s output into its response. Training is self-supervised: the model annotates text with candidate calls to various text-to-text APIs, and only the calls whose results help it predict the following text are kept as training data.
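A minimal sketch of the execution side only, assuming the model has already emitted inline calls in a bracketed `[Calculator(expr)]` form loosely modeled on the Toolformer paper; the exact marker syntax and the `execute_tool_calls` helper are assumptions. Toolformer itself inserts the result during decoding, while this sketch post-processes a finished string for simplicity.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator accepts.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def safe_eval(expr: str) -> float:
    """Evaluate +, -, *, / arithmetic by walking the AST instead of calling eval()."""
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval"))

# Hypothetical marker syntax, loosely modeled on Toolformer's "[Calculator(...)]" calls.
CALL = re.compile(r"\[Calculator\(([^()\]]*)\)\]")

def execute_tool_calls(text: str) -> str:
    """Replace each [Calculator(expr)] marker with the call plus its rounded result."""
    def run(match):
        expr = match.group(1)
        return f"[Calculator({expr}) -> {round(safe_eval(expr), 2)}]"
    return CALL.sub(run, text)

print(execute_tool_calls("Out of 1400 participants, 400 [Calculator(400 / 1400)] passed."))
# Out of 1400 participants, 400 [Calculator(400 / 1400) -> 0.29] passed.
```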

Another innovative framework, Chameleon, takes a “plug-and-play” approach, allowing a central LLM-based controller to generate natural language programs that compose and execute a wide range of tools, including LLMs, vision models, web search engines, and Python functions. This modular approach enables Chameleon to tackle complex, multimodal reasoning tasks by leveraging the strengths of different tools and models.

Fundamental Prompting Strategies

Zero-Shot Prompting

Zero-shot prompting involves describing the task in the prompt and asking the model to solve it without any examples. For instance, to translate “cheese” to French, a zero-shot prompt might be:

Translate the following English word to French: cheese.

This approach is straightforward but can be limited by the ambiguity of task descriptions.

Few-Shot Prompting

Few-shot prompting improves upon zero-shot by including several examples of the task. For example:

Translate the following English words to French:

1. apple => pomme

2. house => maison

3. cheese =>

This method reduces ambiguity and provides a clearer guide for the model, leveraging the in-context learning abilities of LLMs.
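This prompt format is easy to build programmatically. The hypothetical helper below numbers the examples and leaves the final query without an answer, so the model's completion is the translation.

```python
from typing import List, Tuple

def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build a numbered few-shot prompt and leave the final answer open for the model."""
    lines = [instruction]
    for i, (source, target) in enumerate(examples, start=1):
        lines.append(f"{i}. {source} => {target}")
    # The query reuses the same "=>" pattern with no answer, so the model fills it in.
    lines.append(f"{len(examples) + 1}. {query} =>")
    return "\n".join(lines)

print(few_shot_prompt(
    "Translate the following English words to French:",
    [("apple", "pomme"), ("house", "maison")],
    "cheese",
))
```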

Instruction Prompting

Instruction prompting explicitly describes the desired output, which is particularly effective with models trained to follow instructions. For example:

Translate the word "cheese" to French. Respond with only the French word.

Instruction-tuned models such as GPT-4 have been specifically fine-tuned to follow such instructions accurately.

Enhancing Reasoning Capabilities with Chain-of-Thought Prompting

One of the most significant breakthroughs in prompt engineering has been the development of Chain-of-Thought (CoT) prompting. This technique aims to elicit and improve the reasoning capabilities of LLMs by encouraging them to generate a step-by-step thought process or rationale before providing the final answer.

Figure: Left: Traditional methods of prompting LLMs rely on generic instructions (zero-shot CoT) or require labeled examples (few-shot CoT). Right: A newer approach prompts LLMs to self-generate relevant examples before solving the problem, removing the need for labeling while tailoring the examples to each problem.

CoT prompting works by presenting the LLM with examples that include not only the input and output but also the intermediate reasoning steps or “chain of thought” that leads to the solution. By learning from these exemplars, the LLM can then generate its own chain of thought when presented with new problems, improving its ability to tackle complex reasoning tasks.

Example:

Input: Natalie has 3 apples, and she wants to share them equally with her 2 friends. How many apples will each person get?

Chain of Thought: Okay, let’s break this down step by step:

1. Natalie has 3 apples.

2. She wants to share them equally with 2 friends.

3. So there are 3 people in total (Natalie and her 2 friends).

4. To share the apples equally, we divide the total number of apples (3) by the number of people (3).

5. 3 divided by 3 is 1.

6. Therefore, each person will get 1 apple.

Output: Each person will get 1 apple.

By explicitly generating the reasoning process, LLMs like GPT-3 and Codex have demonstrated significant improvements in arithmetic, commonsense, and symbolic reasoning tasks.

Self-Consistency

Self-consistency improves the reliability of CoT prompting by sampling multiple diverse chains of thought for the same question and taking a majority vote on their final answers. This mitigates the impact of any single incorrect reasoning path.
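A minimal sketch of the voting step, assuming each sampled chain ends with a phrase like "The answer is N." The chains are hard-coded here; in practice they would come from sampling the same CoT prompt several times at a nonzero temperature. One flawed path is outvoted by two correct ones.

```python
import re
from collections import Counter
from typing import Iterable, Optional

# Assumes each sampled chain ends with a phrase like "The answer is 42."
ANSWER = re.compile(r"answer is\s*(-?\d+(?:\.\d+)?)", re.IGNORECASE)

def extract_answer(chain: str) -> Optional[str]:
    match = ANSWER.search(chain)
    return match.group(1) if match else None

def self_consistency(chains: Iterable[str]) -> Optional[str]:
    """Majority vote over the final answers of independently sampled chains."""
    answers = [a for a in map(extract_answer, chains) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]

chains = [
    "Natalie and 2 friends make 3 people. 3 / 3 = 1. The answer is 1.",
    "There are 3 people sharing 3 apples, so the answer is 1.",
    "3 apples shared by 2 friends gives 1.5. The answer is 1.5.",  # flawed path
]
print(self_consistency(chains))  # 1
```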

Least-to-Most Prompting

Least-to-most prompting breaks down complex problems into simpler sub-problems, solving each one sequentially and using the context of previous solutions to inform subsequent steps. This approach is beneficial for multi-step reasoning tasks.
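A minimal sketch of the solving stage, assuming the decomposition into sub-questions is already available (in least-to-most prompting the decomposition is itself produced by a prompt). `toy_model` is a hypothetical stand-in for an LLM, hard-coded for the apple-sharing example so that the second answer depends on the first being present in the prompt.

```python
import re
from typing import Callable, List, Tuple

def least_to_most(problem: str, subquestions: List[str],
                  model: Callable[[str], str]) -> Tuple[str, List[Tuple[str, str]]]:
    """Solve sub-questions in order, feeding every earlier Q/A pair into the next prompt."""
    solved: List[Tuple[str, str]] = []
    for question in subquestions:
        history = [f"Q: {q}\nA: {a}" for q, a in solved]
        prompt = "\n".join([problem, *history, f"Q: {question}\nA:"])
        solved.append((question, model(prompt).strip()))
    return solved[-1][1], solved

def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM, hard-coded for the apple-sharing problem."""
    current = prompt.rsplit("Q: ", 1)[-1]
    if current.startswith("How many people"):
        return "3"
    if current.startswith("How many apples"):
        # Only answerable because the earlier answer (the head count) is in the prompt.
        people = int(re.search(r"How many people.*?\nA: (\d+)", prompt, re.S).group(1))
        return str(3 // people)
    return "unknown"

problem = "Natalie has 3 apples and shares them equally with her 2 friends."
subquestions = ["How many people share the apples?", "How many apples does each person get?"]
answer, trace = least_to_most(problem, subquestions, toy_model)
print(answer)  # 1
```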

Recent Advances in Prompt Engineering

Prompt engineering is evolving rapidly, and several innovative techniques have emerged to improve the performance of large language models (LLMs). Let’s explore some of these cutting-edge methods in detail:

Auto-CoT (Automatic Chain-of-Thought Prompting)

What It Is: Auto-CoT is a method that automates the generation of reasoning chains for LLMs, eliminating the need for manually crafted examples. This technique uses zero-shot Chain-of-Thought (CoT) prompting, where the model is guided to think step-by-step to generate its reasoning chains.

How It Works:

Zero-Shot CoT Prompting: The model is given a simple prompt like “Let’s think step by step” to encourage detailed reasoning.

Diversity in Demonstrations: Auto-CoT clusters the questions, picks a representative question from each cluster, and generates a reasoning chain for each one, so the demonstrations cover a variety of problem types and reasoning patterns.

Advantages:

Automation: Reduces the manual effort required to create reasoning demonstrations.

Performance: On various benchmark reasoning tasks, Auto-CoT has matched or exceeded the performance of manual CoT prompting.
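The Auto-CoT paper builds diversity by clustering question embeddings (Sentence-BERT with k-means) and taking a representative from each cluster. To stay dependency-free, the sketch below swaps in a cruder technique, greedy farthest-point selection over word-overlap (Jaccard) distance, and then attaches a zero-shot CoT rationale to each chosen question. `model` is any hypothetical callable from prompt to text.

```python
from typing import Callable, List

def jaccard_distance(a: str, b: str) -> float:
    """1 - (intersection / union) of lower-cased word sets: a crude lexical dissimilarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return 1.0 - len(sa & sb) / len(union) if union else 0.0

def select_diverse(questions: List[str], k: int) -> List[str]:
    """Greedy farthest-point selection: each pick is least similar to those already chosen."""
    chosen = questions[:1] if k > 0 else []
    while len(chosen) < min(k, len(questions)):
        remaining = [q for q in questions if q not in chosen]
        if not remaining:
            break  # only duplicates are left
        chosen.append(max(remaining, key=lambda q: min(jaccard_distance(q, c) for c in chosen)))
    return chosen

def build_auto_cot_demos(questions: List[str], k: int, model: Callable[[str], str]) -> str:
    """Generate one zero-shot-CoT rationale per selected question and join them as demos."""
    demos = []
    for q in select_diverse(questions, k):
        trigger = f"Q: {q}\nA: Let's think step by step."
        demos.append(f"{trigger} {model(trigger).strip()}")
    return "\n\n".join(demos)

qs = [
    "How many apples does Tom have if he buys 3 more apples?",
    "How many apples does Ann have if she buys 2 more apples?",
    "What color is the sky on a clear day?",
]
print(select_diverse(qs, 2))  # the Tom question and the sky question
```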

Complexity-Based Prompting

What It Is: This technique selects examples with the highest complexity (i.e., the most reasoning steps) to include in the prompt. It aims to improve the model’s performance on tasks requiring multiple steps of reasoning.

How It Works:

Example Selection: Exemplars with the most reasoning steps are chosen for the prompt.

Complexity-Based Consistency: During decoding, multiple reasoning chains are sampled, and the majority vote is taken only over the answers of the most complex chains.

Advantages:

Improved Performance: Substantially better accuracy on multi-step reasoning tasks.

Robustness: Effective even under different prompt distributions and noisy data.
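A sketch of both halves of complexity-based prompting, assuming step count is approximated by the number of non-empty lines in a chain (a common proxy; treat it as an assumption). With the hypothetical samples below, a plain majority vote would pick "2", while voting over the two most complex chains picks "1".

```python
from collections import Counter
from typing import List, Tuple

def count_steps(chain: str) -> int:
    """Approximate reasoning complexity as the number of non-empty lines in a chain."""
    return sum(1 for line in chain.splitlines() if line.strip())

def select_complex_exemplars(pool: List[str], n: int) -> List[str]:
    """Prompt construction: keep the n annotated chains with the most steps."""
    return sorted(pool, key=count_steps, reverse=True)[:n]

def complexity_vote(samples: List[Tuple[str, str]], top_k: int) -> str:
    """Decoding: majority vote over the answers of the top_k most complex sampled chains."""
    ranked = sorted(samples, key=lambda s: count_steps(s[0]), reverse=True)
    return Counter(answer for _, answer in ranked[:top_k]).most_common(1)[0][0]

samples = [
    ("step 1\nstep 2\nstep 3", "1"),
    ("step 1\nstep 2\nstep 3\nstep 4", "1"),
    ("guess", "2"), ("guess", "2"), ("guess", "2"),
]
print(complexity_vote(samples, top_k=2))  # 1
```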

Progressive-Hint Prompting (PHP)

What It Is: PHP iteratively refines the model’s answers by using previously generated answers as hints. This method leverages the model’s previous responses to guide it toward the correct answer through multiple iterations.

How It Works:

Initial Answer: The model generates a base answer using a standard prompt.

Hints and Refinements: This base answer is then used as a hint in subsequent prompts to refine the answer.

Iterative Process: This process continues until two consecutive iterations produce the same answer.

Advantages:

Accuracy: Significant improvements in reasoning accuracy.

Efficiency: When combined with self-consistency, it reduces the number of sampled reasoning paths needed, improving computational efficiency.
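A minimal sketch of the PHP loop, with a hint phrase modeled loosely on the paper's ("The answer is near to ..."); treat the exact wording as an assumption. `model` is a hypothetical callable, and the scripted replies in the usage lines stand in for real completions.

```python
from typing import Callable, List

def progressive_hint(question: str, model: Callable[[str], str], max_rounds: int = 5) -> str:
    """Re-ask with earlier answers as hints until two consecutive answers agree."""
    answer = model(question)            # base answer from a standard prompt
    hints: List[str] = [answer]
    for _ in range(max_rounds):
        prompt = f"{question} (Hint: The answer is near to {', '.join(hints)}.)"
        new_answer = model(prompt)
        if new_answer == answer:        # answer stabilized: stop iterating
            return new_answer
        answer = new_answer
        hints.append(answer)
    return answer                       # give up after max_rounds and return the latest answer

replies = iter(["12", "10", "10"])      # scripted stand-in for model outputs
question = "Pens cost 2 dollars each. How much do 5 pens cost?"
print(progressive_hint(question, lambda prompt: next(replies)))  # 10
```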

Decomposed Prompting (DecomP)

What It Is: DecomP breaks down complex tasks into simpler sub-tasks, each handled by a specific prompt or model. This modular approach allows for more effective handling of intricate problems.

How It Works:

Task Decomposition: The main problem is divided into simpler sub-tasks.

Sub-Task Handlers: Each sub-task is managed by a dedicated model or prompt.

Modular Integration: These handlers can be optimized, replaced, or combined as needed to solve the complex task.

Advantages:

Flexibility: Easy to debug and improve specific sub-tasks.

Scalability: Handles tasks with long contexts and complex sub-tasks effectively.
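A minimal sketch of DecomP-style orchestration. The plan is hard-coded here, whereas in DecomP a decomposer prompt would generate it, and each handler is a plain Python function standing in for a sub-task prompt or model. Because handlers are looked up by name, any one of them can be swapped or debugged in isolation.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[str], str]

def run_decomposed(plan: List[Tuple[str, str]], handlers: Dict[str, Handler]) -> str:
    """Execute (handler_name, argument) steps in order; "#prev" is replaced by the previous result."""
    result = ""
    for name, arg in plan:
        result = handlers[name](arg.replace("#prev", result))
    return result

# Hypothetical sub-task handlers for a toy task: concatenate the first letter of each word.
handlers: Dict[str, Handler] = {
    "split": lambda text: "|".join(text.split()),
    "first_letters": lambda words: "|".join(w[0] for w in words.split("|")),
    "merge": lambda letters: letters.replace("|", ""),
}
plan = [("split", "Prompt Engineering Rocks"), ("first_letters", "#prev"), ("merge", "#prev")]
print(run_decomposed(plan, handlers))  # PER
```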

Hypotheses-to-Theories (HtT) Prompting

What It Is: HtT mimics the scientific discovery process: the model generates and verifies hypotheses to solve complex problems. Verified hypotheses are collected into a rule library, which the model then uses for reasoning.

How It Works:

Induction Stage: The model generates potential rules and verifies them against training examples.

Rule Library Creation: Verified rules are collected to form a rule library.

Deduction Stage: The model applies these rules to new problems, using the rule library to guide its reasoning.

Advantages:

Accuracy: Reduces the likelihood of errors by relying on a verified set of rules.

Transferability: The learned rules can be transferred across different models and problem forms.
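A much-simplified sketch of the two stages on a toy kinship domain. The proposed rules are hard-coded stand-ins for LLM-generated hypotheses, and the thresholds `min_occurrence` and `min_accuracy` are illustrative assumptions: a rule enters the library only if it applies to enough training examples and is usually correct on them.

```python
from typing import Dict, List, Tuple

Premise = Tuple[str, str]        # e.g. ("father", "father") means "father's father"
Example = Tuple[Premise, str]    # a premise paired with the correct relation

def induce_rules(proposals: List[Tuple[Premise, str]], examples: List[Example],
                 min_occurrence: int = 2, min_accuracy: float = 0.8) -> Dict[Premise, str]:
    """Induction: keep a proposed rule only if it applies often enough and is usually right."""
    library: Dict[Premise, str] = {}
    for premise, conclusion in proposals:
        answers = [answer for p, answer in examples if p == premise]
        if len(answers) < min_occurrence:
            continue  # too little evidence to trust this rule
        accuracy = sum(a == conclusion for a in answers) / len(answers)
        if accuracy >= min_accuracy:
            library[premise] = conclusion
    return library

def deduce(library: Dict[Premise, str], premise: Premise) -> str:
    """Deduction: answer only from verified rules."""
    return library.get(premise, "unknown")

proposals = [
    (("father", "father"), "grandfather"),
    (("mother", "brother"), "cousin"),   # wrong hypothesis
    (("mother", "sister"), "aunt"),      # correct, but too little evidence below
]
examples = [
    (("father", "father"), "grandfather"),
    (("father", "father"), "grandfather"),
    (("mother", "brother"), "uncle"),
    (("mother", "brother"), "uncle"),
    (("mother", "sister"), "aunt"),
]
library = induce_rules(proposals, examples)
print(library)                                  # {('father', 'father'): 'grandfather'}
print(deduce(library, ("mother", "brother")))   # unknown
```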

Tool-Enhanced Prompting Techniques

Toolformer

Toolformer integrates LLMs with external tools via text-to-text APIs, allowing the model to use these tools to solve problems it otherwise couldn’t. For example, an LLM could call a calculator API to perform arithmetic operations.

Chameleon

Chameleon uses a central LLM-based controller to generate a program that composes several tools to solve complex reasoning tasks. This approach leverages a broad set of tools, including vision models and web search engines, to enhance problem-solving capabilities.

GPT4Tools

GPT4Tools finetunes open-source LLMs to use multimodal tools via a self-instruct approach, demonstrating that even non-proprietary models can effectively leverage external tools for improved performance.

Gorilla and HuggingGPT

Both Gorilla and HuggingGPT integrate LLMs with specialized deep learning models available online. These systems use a retrieval-aware finetuning process and a planning and coordination approach, respectively, to solve complex tasks involving multiple models.

Program-Aided Language Models (PAL) and Program of Thoughts (PoT)

In addition to integrating with external tools, researchers have explored ways to enhance LLMs’ problem-solving capabilities by combining natural language with programming constructs. Program-Aided Language Models (PAL) and Program of Thoughts (PoT) are two such approaches that use code to augment the LLM’s reasoning process.

PAL prompts the LLM to generate a rationale that interleaves natural language with code (e.g., Python), which an interpreter then executes to produce the final solution. This addresses a common failure case in which LLMs reason correctly but make a calculation error and produce an incorrect final answer.

Similarly, PoT can employ a symbolic math library such as SymPy, letting the LLM define mathematical symbols and expressions that are combined and evaluated with SymPy’s solve function. By delegating complex computations to a code interpreter, these techniques decouple reasoning from computation, enabling LLMs to tackle more intricate problems effectively.
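A minimal sketch of the PAL execution step, assuming the model has been prompted to write a Python function named `solution()` (a convention assumed here). The `GENERATED` string stands in for model output on the apple-sharing example. Stripping builtins as below is not a real sandbox; untrusted model code should run in an isolated process.

```python
# Hypothetical model output: reasoning lives in comments, computation in code.
GENERATED = '''
def solution():
    """Natalie has 3 apples and shares them equally with her 2 friends."""
    apples = 3
    people = 1 + 2  # Natalie plus her 2 friends
    return apples // people
'''

def run_pal_program(code: str):
    """Execute model-written code that defines solution() and return its result.

    Removing builtins blocks casual misuse only; it is NOT a security boundary.
    """
    namespace = {"__builtins__": {}}
    exec(code, namespace)
    return namespace["solution"]()

print(run_pal_program(GENERATED))  # 1
```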

Understanding and Leveraging Context Windows

LLMs’ performance heavily relies on their ability to process and leverage the context provided in the prompt. Researchers have investigated how LLMs handle long contexts and the impact of irrelevant or distracting information on their outputs.

The “Lost in the Middle” phenomenon highlights how LLMs tend to pay more attention to information at the beginning and end of their context, while information in the middle is often overlooked or “lost.” This insight has implications for prompt engineering, as carefully positioning relevant information within the context can significantly impact performance.
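One practical consequence, offered here as a heuristic rather than a method from the research itself: when documents are already ranked by relevance, interleave them so the strongest ones sit at the start and end of the context and the weakest land in the middle. Document names are placeholders.

```python
from typing import List, TypeVar

T = TypeVar("T")

def order_for_long_context(ranked: List[T]) -> List[T]:
    """Place items ranked most-relevant-first at the edges of the context.

    Even ranks go to the front in order; odd ranks go to the back in reverse,
    so the least relevant items end up in the middle.
    """
    front = ranked[0::2]
    back = ranked[1::2]
    return front + back[::-1]

print(order_for_long_context(["d1", "d2", "d3", "d4", "d5"]))
# ['d1', 'd3', 'd5', 'd4', 'd2']
```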

Another line of research focuses on mitigating the detrimental effects of irrelevant context, which can severely degrade LLM performance. Techniques like self-consistency, explicit instructions to ignore irrelevant information, and including exemplars that demonstrate solving problems with irrelevant context can help LLMs learn to focus on the most pertinent information.

Improving Writing Capabilities with Prompting Strategies

While LLMs excel at generating human-like text, their writing capabilities can be further enhanced through specialized prompting strategies. One such technique is Skeleton-of-Thought (SoT) prompting, which aims to reduce the latency of sequential decoding by mimicking the human writing process.

SoT prompting involves prompting the LLM to generate a skeleton or outline of its answer first, followed by parallel API calls to fill in the details of each outline element. This approach not only improves inference latency but can also enhance writing quality by encouraging the LLM to plan and structure its output more effectively.
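A minimal sketch of the two SoT stages. `model` is a hypothetical callable from prompt to text, and the outline-parsing convention (lines starting with a number and a period) is an assumption. The point-expansion calls are independent of each other, so a thread pool can issue them concurrently.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

def parse_outline(skeleton: str) -> List[str]:
    """Keep lines like '1. Point' and return the text after the number."""
    points = []
    for line in skeleton.splitlines():
        line = line.strip()
        if line[:1].isdigit() and "." in line:
            points.append(line.split(".", 1)[1].strip())
    return points

def skeleton_of_thought(question: str, model: Callable[[str], str], max_workers: int = 4) -> str:
    # Stage 1: a single sequential call produces the skeleton.
    skeleton = model(f"Write a short numbered outline (3-5 points) answering: {question}")
    points = parse_outline(skeleton)

    # Stage 2: each point is expanded independently, so the calls can run concurrently.
    def expand(point: str) -> str:
        return model(f"Question: {question}\nOutline:\n{skeleton}\n"
                     f"Expand only this point in 1-2 sentences: {point}")

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        expansions = list(pool.map(expand, points))  # map() preserves outline order
    return "\n".join(f"{i}. {p}: {e}" for i, (p, e) in enumerate(zip(points, expansions), start=1))

def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM."""
    if prompt.startswith("Write a short numbered outline"):
        return "1. Definition\n2. Example"
    return "details about " + prompt.rsplit(": ", 1)[-1]

print(skeleton_of_thought("What is CoT?", stub_model))
```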

Another prompting strategy, Chain of Density (CoD) prompting, focuses on improving the information density of LLM-generated summaries. By iteratively adding entities into the summary while keeping the length fixed, CoD prompting allows users to explore the trade-off between conciseness and completeness, ultimately producing more informative and readable summaries.
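A minimal sketch of the CoD loop. The prompt paraphrases the idea (add 1 to 3 missing entities without lengthening the summary), and the word-count check with a 10% `tolerance` is an added safeguard, not part of the original technique. `model` is hypothetical; the usage lines script its replies.

```python
from typing import Callable, List

def chain_of_density(article: str, model: Callable[[str], str],
                     rounds: int = 5, tolerance: float = 0.1) -> List[str]:
    """Return successively denser summaries; rewrites that grow past the word budget are rejected."""
    summary = model(f"Write a short summary of this article:\n{article}")
    chain = [summary]
    budget = len(summary.split())       # the first summary fixes the length budget
    for _ in range(rounds - 1):
        candidate = model(
            f"Article:\n{article}\n\nPrevious summary:\n{summary}\n\n"
            "Identify 1-3 informative entities from the article that are missing from the "
            f"previous summary, then rewrite the summary to include them in about {budget} words."
        )
        if len(candidate.split()) > budget * (1 + tolerance):
            break                       # it got longer rather than denser: stop here
        summary = candidate
        chain.append(summary)
    return chain

replies = iter([
    "Researchers proposed a new prompting technique.",
    "Researchers proposed Chain of Density prompting.",
    "Researchers proposed Chain of Density prompting for denser GPT-4 summaries.",
])
chain = chain_of_density("(article text)", lambda prompt: next(replies))
print(len(chain))  # 2: the third rewrite exceeded the word budget
```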

Emerging Directions and Future Outlook

The field of prompt engineering is rapidly evolving, with researchers continuously exploring new frontiers and pushing the boundaries of what’s possible with LLMs. Some emerging directions include:

Active Prompting: Techniques that leverage uncertainty-based active learning principles to identify and annotate the most helpful exemplars for solving specific reasoning problems.

Multimodal Prompting: Extending prompting strategies to handle multimodal inputs that combine text, images, and other data modalities.

Automatic Prompt Generation: Developing optimization techniques to automatically generate effective prompts tailored to specific tasks or domains.

Interpretability and Explainability: Exploring prompting methods that improve the interpretability and explainability of LLM outputs, enabling better transparency and trust in their decision-making processes.
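For active prompting, one simple uncertainty measure is disagreement: sample k answers per question and compute the fraction that are distinct. The sketch below ranks questions by that score and returns the most uncertain ones for human annotation. `sample` is a hypothetical callable wrapping k model calls; the usage lines use canned answers.

```python
from typing import Callable, Dict, List

def disagreement(answers: List[str]) -> float:
    """Uncertainty = number of distinct answers / number of samples."""
    return len(set(answers)) / len(answers)

def select_for_annotation(
    questions: List[str],
    sample: Callable[[str, int], List[str]],
    k: int = 5,
    n: int = 2,
) -> List[str]:
    """Return the n questions whose k sampled answers disagree the most."""
    scores: Dict[str, float] = {q: disagreement(sample(q, k)) for q in questions}
    return sorted(questions, key=lambda q: scores[q], reverse=True)[:n]

canned = {
    "q1": ["3", "3", "3", "3", "3"],
    "q2": ["1", "2", "3", "1", "4"],
    "q3": ["7", "7", "8", "7", "7"],
}
print(select_for_annotation(list(canned), lambda q, k: canned[q][:k]))  # ['q2', 'q3']
```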

As LLMs continue to advance and find applications in various domains, prompt engineering will play a crucial role in unlocking their full potential. By leveraging the latest prompting techniques and strategies, researchers and practitioners can develop more powerful, reliable, and task-specific AI solutions that push the boundaries of what’s possible with natural language processing.

Conclusion

The field of prompt engineering for large language models is rapidly evolving, with researchers continually pushing the boundaries of what’s possible. From enhancing reasoning capabilities with techniques like Chain-of-Thought prompting to integrating LLMs with external tools and programs, the latest advances in prompt engineering are unlocking new frontiers in artificial intelligence.

#advanced LLM techniques#ai#AI research 2024#Algorithms#API#APIs#apple#applications#approach#Art#artificial#Artificial Intelligence#attention#automation#Backtracking#benchmark#Blog#calculator#Chain-of-Thought prompting#code#codex#complexity#comprehensive#computation#cutting#data#Deep Learning#details#development#diversity

Text

Toby Regbo & James Northcote on nowallslive on Twitch.TV – July 11, 2024

#12 Toby's AI Lasagna (x)

#10 AI as a tool for research

#11 Toby On Talking To AI Advisor

#13 Claire on AI

#Toby Regbo#James Northcote#Video#nowallslive#Twitch#July 2024#Friends#Aethelred & Aldhelm#The Last Kingdom#AI#Help#Research#Advisor#Lasagna#LOL Ridiculous#Toby Is So Adorable And Funny#Claire Lim#Complex#Social Media#Interesting#Listening#Love Their Interactions#Twitch.TV

Text

Supercharge Your SEO with Smart AI Integration

Discover how AI can revolutionize your SEO strategy.

In today’s fast-paced digital world, keeping your website ahead of the pack takes more than the usual SEO tricks. Artificial intelligence (AI) is now a game-changer in search engine optimization, transforming how businesses approach their online strategies. This guide dives into smart AI integration secrets to give your SEO a powerful boost and help your site shine.

Why AI is a Big Deal in…

#AI content creation#AI in SEO#AI personalization#AI SEO benefits#AI SEO integration#AI tools for SEO#AI-driven SEO tools#AI-enhanced SEO#AI-powered UX#artificial intelligence in SEO#boost your SEO#Content Optimization#keyword research with AI#rank higher with AI#SEO automation#SEO ranking tools#SEO strategy 2024#SEO trends 2024#smart AI for SEO#technical SEO

Text

#AI chatbot#AI ethics specialist#AI jobs#Ai Jobsbuster#AI landscape 2024#AI product manager#AI research scientist#AI software products#AI tools#Artificial Intelligence (AI)#BERT#Clarifai#computational graph#Computer Vision API#Content creation#Cortana#creativity#CRM platform#cybersecurity analyst#data scientist#deep learning#deep-learning framework#DeepMind#designing#distributed computing#efficiency#emotional analysis#Facebook AI research lab#game-playing#Google Duplex

Text

I miss the times when repeated video game banter lines didn’t bother me. It’s too late for the old 2004 Spider-Man 2 game to dazzle me the way my TMNT games did. I loved Black Cat in the game, and learned VERY late that Spidey could swing to Roosevelt Island, where I went to high school from sophomore to senior year.

#layouts#screenshots#collages#ai art cutouts#background remove#ai art exploring#cutout#hobby#habit#spider man phase#spidey kun#comic book pages#marvel comics#speech bubbles#valentines day 2024#researching#spider man game#bright colors#red hearts#spider man 2002#video gameplay#mtv spiderman#spiderman the new animated series#heart shaped#hero in distress#deliciously vulnerable#mary jane watson#jewelry#pastry#gumoko

Text

How Artificial Intelligence Will Change The Future of Marketing In 2024

Introduction

In an era where technology evolves at an unprecedented pace, artificial intelligence (AI) stands out as a transformative force reshaping industries across the globe. One such sector undergoing a significant metamorphosis is marketing. As we delve into the intricacies of this evolving landscape, it becomes apparent that AI is not just a technological advancement but a game-changer for marketers seeking innovative ways to connect with their audience.

How AI is Changing Marketing and Advertising

Artificial intelligence has infiltrated marketing and advertising, altering traditional approaches and injecting them with unprecedented efficiency. Machine learning algorithms analyze vast datasets at lightning speed, enabling marketers to glean valuable insights into consumer behaviour, preferences, and trends. This data-driven approach allows for highly targeted and personalized campaigns, ensuring that businesses reach the right audience at the right time.

Chatbots, powered by AI, have become an integral part of customer service, providing instant responses and enhancing the user experience. Natural language processing (NLP) algorithms enable these bots to understand and respond to user queries, creating a seamless and interactive engagement platform.

AI-driven content creation tools, like automated writing and design platforms, are streamlining the creative process. These tools analyze data to generate compelling content that resonates with specific target audiences, freeing up time for marketers to focus on strategy and innovation.

Benefits of Using AI in Marketing and Advertising

1. Precision Targeting:

AI empowers marketers to target specific demographics with unparalleled precision, ensuring that promotional efforts are directed toward the most receptive audience. This not only maximizes the impact of marketing campaigns but also optimizes advertising budgets.

2. Personalization:

The ability to analyze vast amounts of data enables AI to create highly personalized customer experiences. From product recommendations to tailored content, AI ensures that every interaction is relevant and resonant, fostering customer loyalty and satisfaction.

3. Automation and Efficiency:

Mundane and time-consuming tasks are automated through AI, allowing marketing teams to focus on strategic initiatives. This boosts efficiency, reduces human error, and accelerates the pace of campaign execution.

Potential Drawbacks of AI in Marketing and Advertising:

1. Ethical Concerns:

The collection and utilization of vast amounts of user data raise ethical concerns surrounding privacy. Marketers must navigate the fine line between personalized advertising and invasive practices, respecting consumer privacy to maintain trust.

2. Overreliance on Data:

While data-driven decision-making is a strength, an overreliance on AI algorithms may lead to a lack of human intuition in marketing strategy. It's essential to strike a balance between data-driven insights and creative human input.

3. Initial Implementation Costs:

Adopting AI technologies may involve significant upfront costs for businesses. Smaller enterprises may find it challenging to invest in AI, potentially creating a divide in marketing capabilities.

The Future of AI in Marketing and Advertising:

Looking ahead, the future of AI in marketing promises even more groundbreaking developments. Predictive analytics will become more sophisticated, allowing marketers to anticipate consumer needs and trends. Augmented reality (AR) and virtual reality (VR) experiences, driven by AI, will offer immersive and highly engaging marketing campaigns.

AI will continue to evolve content creation, producing not just personalized text but also dynamic multimedia content. Voice-activated devices and smart assistants will further integrate into marketing strategies, presenting new avenues for brand interaction.

Conclusion:

Artificial intelligence is not just a tool for marketers; it's a catalyst for innovation and progress. As we navigate the dynamic landscape of marketing and advertising, it's imperative to embrace AI responsibly, mindful of the ethical considerations and potential pitfalls. The benefits of precision targeting, personalization, and efficiency far outweigh the drawbacks, positioning AI as the driving force behind the future of marketing. By harnessing the power of AI, businesses can not only stay relevant in the digital age but also thrive in an era of unprecedented connectivity and opportunity.

#artificial intelligence future for business#artificial intelligence#artificial intelligence future#artificial intelligence tools 2024#artificial intelligence in marketing#ai in marketing 2024#marketing with ai in 2024#marketing & sales with ai in 2024#marketing research with ai in 2024

Text

5 things about AI you may have missed today: AI sparks fears in finance, AI-linked misinformation, more

AI sparks fears in finance, business, and law; Chinese military trains AI to predict enemy actions on battlefield with ChatGPT-like models; OpenAI’s GPT store faces challenge as users exploit platform for ‘AI Girlfriends’; Anthropic study reveals alarming deceptive abilities in AI models. This and more in our daily roundup. Let us take a look.

1. AI sparks fears in finance, business, and law

AI’s…

#ai#AI chatbot moderation challenges#AI chatbots#AI enemy behavior prediction#AI fueled misinformation#AI generated misinformation#AI girlfriend#AI risks in finance#Anthropic AI research#chatgpt#China military AI development#deceptive AI models#finance#FINRA AI emerging risk#HT tech#military AI#OpenAI GPT store#tech news#total solar eclipse 2024#world economic forum davos survey

Text

taylorswift: Like many of you, I watched the debate tonight. If you haven’t already, now is a great time to do your research on the issues at hand and the stances these candidates take on the topics that matter to you the most. As a voter, I make sure to watch and read everything I can about their proposed policies and plans for this country.

Recently I was made aware that AI of ‘me’ falsely endorsing Donald Trump’s presidential run was posted to his site. It really conjured up my fears around AI, and the dangers of spreading misinformation. It brought me to the conclusion that I need to be very transparent about my actual plans for this election as a voter. The simplest way to combat misinformation is with the truth.

I will be casting my vote for Kamala Harris and Tim Walz in the 2024 Presidential Election. I’m voting for @kamalaharris because she fights for the rights and causes I believe need a warrior to champion them. I think she is a steady-handed, gifted leader and I believe we can accomplish so much more in this country if we are led by calm and not chaos. I was so heartened and impressed by her selection of running mate @timwalz, who has been standing up for LGBTQ+ rights, IVF, and a woman’s right to her own body for decades.

I’ve done my research, and I’ve made my choice. Your research is all yours to do, and the choice is yours to make. I also want to say, especially to first time voters: Remember that in order to vote, you have to be registered! I also find it’s much easier to vote early. I’ll link where to register and find early voting dates and info in my story.

With love and hope,

Taylor Swift

Childless Cat Lady

Text

"Doctors have begun trialling the world’s first mRNA lung cancer vaccine in patients, as experts hailed its “groundbreaking” potential to save thousands of lives.

Lung cancer is the world’s leading cause of cancer death, accounting for about 1.8m deaths every year. Survival rates in those with advanced forms of the disease, where tumours have spread, are particularly poor.

Now experts are testing a new jab that instructs the body to hunt down and kill cancer cells – then prevents them ever coming back. Known as BNT116 and made by BioNTech, the vaccine is designed to treat non-small cell lung cancer (NSCLC), the most common form of the disease.

The phase 1 clinical trial, the first human study of BNT116, has launched across 34 research sites in seven countries: the UK, US, Germany, Hungary, Poland, Spain and Turkey.

The UK has six sites, located in England and Wales, with the first UK patient to receive the vaccine having their initial dose on Tuesday [August 20, 2024].

Overall, about 130 patients – from early-stage before surgery or radiotherapy, to late-stage disease or recurrent cancer – will be enrolled to have the jab alongside immunotherapy. About 20 will be from the UK.

The jab uses messenger RNA (mRNA), similar to Covid-19 vaccines, and works by presenting the immune system with tumour markers from NSCLC to prime the body to fight cancer cells expressing these markers.

The aim is to strengthen a person’s immune response to cancer while leaving healthy cells untouched, unlike chemotherapy.

“We are now entering this very exciting new era of mRNA-based immunotherapy clinical trials to investigate the treatment of lung cancer,” said Prof Siow Ming Lee, a consultant medical oncologist at University College London hospitals NHS foundation trust (UCLH), which is leading the trial in the UK.

“It’s simple to deliver, and you can select specific antigens in the cancer cell, and then you target them. This technology is the next big phase of cancer treatment.”

Janusz Racz, 67, from London, was the first person to have the vaccine in the UK. He was diagnosed in May and soon after started chemotherapy and radiotherapy.

The scientist, who specialises in AI, said his profession inspired him to take part in the trial. “I am a scientist too, and I understand that the progress of science – especially in medicine – lies in people agreeing to be involved in such investigations,” he said...

“And also, I can be a part of the team that can provide proof of concept for this new methodology, and the faster it would be implemented across the world, more people will be saved.”

Racz received six consecutive injections five minutes apart over 30 minutes at the National Institute for Health Research UCLH Clinical Research Facility on Tuesday.

Each jab contained different RNA strands. He will get the vaccine every week for six consecutive weeks, and then every three weeks for 54 weeks.

Lee said: “We hope adding this additional treatment will stop the cancer coming back because a lot of time for lung cancer patients, even after surgery and radiation, it does come back.” ...

“We hope to go on to phase 2, phase 3, and then hope it becomes standard of care worldwide and saves lots of lung cancer patients.”

The Guardian revealed in May that thousands of patients in England were to be fast-tracked into groundbreaking trials of cancer vaccines in a revolutionary world-first NHS “matchmaking” scheme to save lives.

Under the scheme, patients who meet the eligibility criteria will gain access to clinical trials for the vaccines that experts say represent a new dawn in cancer treatment."

-via The Guardian, May 30, 2024

#cw cancer#cancer research#cancer#lung cancer#nhs#england#vaccine#cancer vaccines#public health#medical news#good news#hope

Text

Generative AI Policy (February 9, 2024)

As of February 9, 2024, we are updating our Terms of Service to prohibit the following content:

Images created through the use of generative AI programs such as Stable Diffusion, Midjourney, and Dall-E.

This post explains what that means for you. We know it’s impossible to remove all images created by Generative AI on Pillowfort. The goal of this new policy, however, is to send a clear message that we are against the normalization of commercializing and distributing images created by Generative AI. Pillowfort stands in full support of all creatives who make Pillowfort their home.

Disclaimer: The following policy was shaped in collaboration with Pillowfort Staff and international university researchers. We are aware that Artificial Intelligence is a rapidly evolving environment. This policy may require revisions in the future to adapt to the changing landscape of Generative AI.

-

Why is Generative AI Banned on Pillowfort?

Our Terms of Service already prohibits copyright violations, which includes reposting other people’s artwork to Pillowfort without the artist’s permission; and because of how Generative AI draws on a database of images and text that were taken without consent from artists or writers, all Generative AI content can be considered in violation of this rule. We also had an overwhelming response from our user base urging us to take action on prohibiting Generative AI on our platform.

-

How does Pillowfort define Generative AI?

As of February 9, 2024, we define Generative AI as online tools for producing material based on large data collection that is often gathered without consent or notification from the original creators.

Generative AI tools do not require skill on the part of the user and effectively replace them in the creative process (i.e., the user provides little direction or decision-making). Tools that assist creativity don't replace the user, which means the user can still improve their skills and refine their work over time.

For example: If you ask a Generative AI tool to add a lighthouse to an image, the lighthouse appears in a completed state. By contrast, if you use an assistive drawing tool to add a lighthouse to an image, you decide which tools contribute to the creation process and how to apply them.

Examples of Tools Not Allowed on Pillowfort:

Adobe Firefly*

Dall-E

GPT-4

Jasper Chat

Lensa

Midjourney

Stable Diffusion

Synthesia

Examples of Tools Still Allowed on Pillowfort:

AI Assistant Tools (e.g., Google Translate, Grammarly)

VTuber Tools (e.g., Live3D, Restream, VRChat)

Digital Audio Editors (e.g., Audacity, GarageBand)

Poser & Reference Tools (e.g., Poser, Blender)

Graphic & Image Editors (e.g., Canva, Adobe Photoshop*, Procreate, Medibang, automatic filters from phone cameras)

*While Adobe software such as Adobe Photoshop is not considered Generative AI, Adobe Firefly is fully integrated in various Adobe software and falls under our definition of Generative AI. The use of Adobe Photoshop is allowed on Pillowfort. The creation of an image in Adobe Photoshop using Adobe Firefly would be prohibited on Pillowfort.

-

Can I use ethical generators?

No. Due to the evolving nature of Generative AI, ethical generators are not an exception to this policy.

-

Can I still talk about AI?

Yes! Posts, Comments, and User Communities discussing AI are still allowed on Pillowfort.

-

Can I link to or embed websites, articles, or social media posts containing Generative AI?

Yes. We do ask that you properly tag your post as “AI” and “Artificial Intelligence.”

-

Can I advertise the sale of digital or virtual goods containing Generative AI?

No. Advertising offsite sales of goods (digital and physical) containing Generative AI is prohibited on Pillowfort.

-

How can I tell if software I use contains Generative AI?

As a first step, a general rule of thumb is to turn off internet access and see whether the tool still works. If the software says it needs to be online, there’s a chance it’s using Generative AI and needs to be explored further.

You are also always welcome to contact us at [email protected] if you’re still unsure.

-

How will this policy be enforced/detected?

Our Team has decided NOT to use AI-based automated detection tools because they often produce false positives and have other issues. Instead, we are applying a suite of methods, sourced from international universities, for moderating material that may have been produced with Generative AI.

-

How do I report content containing Generative AI Material?

If you are concerned about post(s) featuring Generative AI material, please flag the post for our Site Moderation Team to conduct a thorough investigation. As a reminder, Pillowfort’s existing policy regarding callout posts applies here, and harassment, brigading, etc. will not be tolerated.

Any questions or clarifications regarding our Generative AI Policy can be sent to [email protected].

2K notes

Text

ive made this post before but a good chunk of the dumb takes on this site (and irl, but especially on here) stem from laypeople assuming they are smarter than an entire field of research. it goes beyond the Dunning-Kruger Effect and starts veering into outright contempt for intellectualism. like, to use a specific example: there are people still talking about "poisoning AI training datasets" with Nightshade and its ilk three months into 2024 (as if the training datasets still need to be scraped and AI research companies hadn't immediately figured out how to defeat it by applying a 1% Gaussian blur within the first week). hearing someone parrot this talking point instantly lowers my opinion of them for two reasons: first, they just don't know what they're talking about and refuse to learn, but secondly... you really think that little of the developers behind these AI art models? you really think they would just throw up their hands and go "ah shucks guys, they started poisoning the datasets, time to pack it up". like at this point my brain automatically interprets 99% of AI art criticism as "i am so monumentally self-centered and egotistical i think i know more than every expert in this field despite refusing to do any research"

3K notes

Text

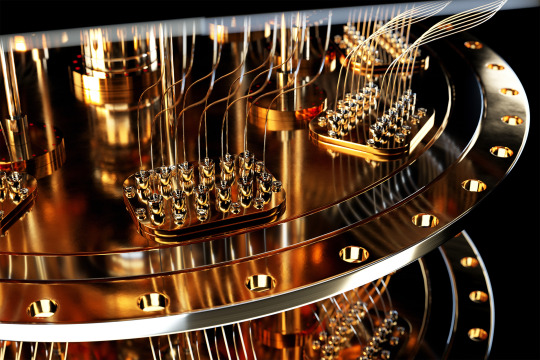

Toward a code-breaking quantum computer

New Post has been published on https://thedigitalinsider.com/toward-a-code-breaking-quantum-computer/

Toward a code-breaking quantum computer

The most recent email you sent was likely encrypted using a tried-and-true method that relies on the idea that even the fastest computer would be unable to efficiently break a gigantic number into factors.

Quantum computers, on the other hand, promise to rapidly crack complex cryptographic systems that a classical computer might never be able to unravel. This promise is based on a quantum factoring algorithm proposed in 1994 by Peter Shor, who is now a professor at MIT.

But while researchers have taken great strides in the last 30 years, scientists have yet to build a quantum computer powerful enough to run Shor’s algorithm.

As some researchers work to build larger quantum computers, others have been trying to improve Shor’s algorithm so it could run on a smaller quantum circuit. About a year ago, New York University computer scientist Oded Regev proposed a major theoretical improvement. His algorithm could run faster, but the circuit would require more memory.

Building off those results, MIT researchers have proposed a best-of-both-worlds approach that combines the speed of Regev’s algorithm with the memory-efficiency of Shor’s. This new algorithm is as fast as Regev’s, requires fewer quantum building blocks known as qubits, and has a higher tolerance to quantum noise, which could make it more feasible to implement in practice.

In the long run, this new algorithm could inform the development of novel encryption methods that can withstand the code-breaking power of quantum computers.

“If large-scale quantum computers ever get built, then factoring is toast and we have to find something else to use for cryptography. But how real is this threat? Can we make quantum factoring practical? Our work could potentially bring us one step closer to a practical implementation,” says Vinod Vaikuntanathan, the Ford Foundation Professor of Engineering, a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL), and senior author of a paper describing the algorithm.

The paper’s lead author is Seyoon Ragavan, a graduate student in the MIT Department of Electrical Engineering and Computer Science. The research will be presented at the 2024 International Cryptology Conference.

Cracking cryptography

To securely transmit messages over the internet, service providers like email clients and messaging apps typically rely on RSA, an encryption scheme invented by MIT researchers Ron Rivest, Adi Shamir, and Leonard Adleman in the 1970s (hence the name “RSA”). The system is based on the idea that factoring a 2,048-bit integer (a number with 617 digits) is too hard for a computer to do in a reasonable amount of time.

That idea was flipped on its head in 1994 when Shor, then working at Bell Labs, introduced an algorithm showing that a sufficiently large quantum computer could factor quickly enough to break RSA cryptography.

“That was a turning point. But in 1994, nobody knew how to build a large enough quantum computer. And we’re still pretty far from there. Some people wonder if they will ever be built,” says Vaikuntanathan.
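The Python sketch below shows why fast factoring breaks RSA, under explicit simplifying assumptions. It uses textbook toy primes (61 and 53) instead of a real 2,048-bit key, and it applies no padding. A brute-force `find_order` helper (a hypothetical name) stands in for the quantum period-finding step, which is the part Shor's algorithm actually speeds up. The rest is the standard classical reduction from order finding to factoring. It requires Python 3.8+ for `pow(x, -1, m)`.

```python
from math import gcd


def make_toy_rsa(p, q, e=17):
    """Textbook RSA key generation with tiny primes (insecure; illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "e must be coprime to phi(n)"
    d = pow(e, -1, phi)  # private exponent: modular inverse of e mod phi
    return n, e, d


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (assumes gcd(a, n) == 1).

    Stand-in for quantum period finding: this brute-force loop is
    exponentially slow in the bit length of n.
    """
    r, x = 1, a % n
    while x != 1:
        x = x * a % n
        r += 1
    return r


def shor_reduction(n):
    """Factor n with the classical part of Shor's algorithm."""
    for a in range(2, n):
        g = gcd(a, n)
        if g != 1:
            return g, n // g  # lucky guess: a already shares a factor with n
        r = find_order(a, n)
        if r % 2:
            continue  # odd order: try another base
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # trivial square root of 1: try another base
        p = gcd(y - 1, n)  # y*y == 1 mod n with y != +-1 gives a real factor
        return p, n // p
    raise ValueError("no factor found; n may be prime")


n, e, d = make_toy_rsa(61, 53)
message = 65
ciphertext = pow(message, e, n)

# An attacker who can factor n can rebuild the private key and decrypt.
p, q = shor_reduction(n)
d_recovered = pow(e, -1, (p - 1) * (q - 1))
print(p * q == n, pow(ciphertext, d_recovered, n))  # True 65

# Size of a real RSA modulus, for comparison with the article's figure.
print(len(str(2**2048)))  # 617
```

Only `find_order` would change on a quantum computer. Everything after it is cheap classical arithmetic, which is why the quantum circuit for period finding is the focus of the research described here.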

It is estimated that a quantum computer would need about 20 million qubits to run Shor’s algorithm. Right now, the largest quantum computers have around 1,100 qubits.

A quantum computer performs computations using quantum circuits, just like a classical computer uses classical circuits. Each quantum circuit is composed of a series of operations known as quantum gates. These quantum gates utilize qubits, which are the smallest building blocks of a quantum computer, to perform calculations.
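The pure-Python sketch below illustrates what "gates acting on qubits" means. It simulates a two-qubit circuit by tracking its state vector, which holds one amplitude per basis state. The helper names `apply_h` and `apply_cnot` are hypothetical. Real quantum hardware is not programmed this way, and a classical simulation like this one needs memory that doubles with every added qubit.

```python
import math


def apply_h(state, target):
    """Apply a Hadamard gate to qubit `target` (bit `target` of each index)."""
    s = 1 / math.sqrt(2)
    out = list(state)
    for i in range(len(state)):
        if not (i >> target) & 1:  # visit each (bit=0, bit=1) pair once
            j = i | (1 << target)
            out[i] = s * (state[i] + state[j])
            out[j] = s * (state[i] - state[j])
    return out


def apply_cnot(state, control, target):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    out = list(state)
    for i in range(len(state)):
        if (i >> control) & 1:
            out[i ^ (1 << target)] = state[i]
    return out


# Two qubits start in |00>; H then CNOT produces an entangled Bell state.
state = [1.0, 0.0, 0.0, 0.0]
state = apply_h(state, target=0)
state = apply_cnot(state, control=0, target=1)
print([round(abs(a) ** 2, 3) for a in state])  # [0.5, 0.0, 0.0, 0.5]
```

Each gate here is a reversible (unitary) transformation of the amplitudes. On real hardware, every such gate also adds a little noise.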

But quantum gates introduce noise, so having fewer gates would improve a machine’s performance. Researchers have been striving to enhance Shor’s algorithm so it could be run on a smaller circuit with fewer quantum gates.

That is precisely what Regev did with the circuit he proposed a year ago.

“That was big news because it was the first real improvement to Shor’s circuit from 1994,” Vaikuntanathan says.

The quantum circuit Shor proposed has a size roughly proportional to the square of the number of bits in the integer being factored. That means if one were to factor a 2,048-bit integer, the circuit would need millions of gates.
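The "millions of gates" figure follows from quadratic scaling in the bit length. The small Python sketch below does that arithmetic. It deliberately drops all constant factors and lower-order terms, so it shows only an order of magnitude, not a real resource estimate for any actual circuit.

```python
def shor_gate_scale(bits):
    """Order-of-magnitude gate count for Shor's circuit, growing like bits**2.

    Constant factors and lower-order terms are ignored (an illustrative
    assumption, not a real resource estimate).
    """
    return bits ** 2


for bits in (1024, 2048):
    print(bits, shor_gate_scale(bits))
# 1024 1048576
# 2048 4194304
```

Under this scaling, doubling the key size quadruples the circuit size.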

Regev’s circuit requires significantly fewer quantum gates, but it needs many more qubits to provide enough memory. This presents a new problem.

“In a sense, some types of qubits are like apples or oranges. If you keep them around, they decay over time. You want to minimize the number of qubits you need to keep around,” explains Vaikuntanathan.

He heard Regev speak about his results at a workshop last August. At the end of his talk, Regev posed a question: Could someone improve his circuit so it needs fewer qubits? Vaikuntanathan and Ragavan took up that question.

Quantum ping-pong

To factor a very large number, a quantum circuit would need to run many times, performing operations that involve computing powers, like 2 to the power of 100.

But computing such large powers is costly and difficult to perform on a quantum computer, since quantum computers can only perform reversible operations. Squaring a number is not a reversible operation, so each time a number is squared, more quantum memory must be added to compute the next square.
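Squaring causes trouble because, modulo n, it is not one-to-one: x and n - x have the same square. A reversible circuit therefore cannot overwrite x with x² in place and must keep the old value. The classical Python sketch below mimics that constraint in ordinary square-and-multiply exponentiation. It stores every intermediate square in its own "register" and reports how many registers it used. That count is an illustrative proxy, not an actual qubit count, and the function name is hypothetical.

```python
def pow_keeping_squares(a, e, n):
    """Compute a**e mod n by square-and-multiply, never overwriting a square.

    Each repeated square a**(2**i) mod n gets its own slot, imitating a
    reversible machine that must keep the old value when it squares.
    Returns (result, number_of_square_registers_used).
    """
    squares = [a % n]
    for _ in range(e.bit_length() - 1):
        squares.append(squares[-1] * squares[-1] % n)
    result = 1 % n
    for i, sq in enumerate(squares):
        if (e >> i) & 1:  # bit i of the exponent selects a**(2**i)
            result = result * sq % n
    return result, len(squares)


N = 1_000_003
value, registers = pow_keeping_squares(2, 100, N)
print(value == pow(2, 100, N), registers)  # True 7
```

The number of stored squares grows with the bit length of the exponent. This is the extra memory the article refers to.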

The MIT researchers found a clever way to compute exponents using a series of Fibonacci numbers that requires simple multiplication, which is reversible, rather than squaring. Their method needs just two quantum memory units to compute any exponent.

“It is kind of like a ping-pong game, where we start with a number and then bounce back and forth, multiplying between two quantum memory registers,” Vaikuntanathan adds.
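The "ping-pong" idea can be illustrated classically. Suppose one register holds a^F(k-1) and the other holds a^F(k), where F(k) is the k-th Fibonacci number. Replacing the pair with (a^F(k), a^F(k-1) · a^F(k)) advances both values by one Fibonacci step using only a multiplication. The step can be undone as long as the values are invertible modulo n.

The minimal Python sketch below makes a few assumptions:

- gcd(a, n) = 1, so every power of a is invertible modulo n.
- It reaches only Fibonacci-number exponents. How the MIT authors turn this into arbitrary exponents inside a quantum circuit is not reproduced here.
- The function names are hypothetical.

```python
from math import gcd


def fib_step(x, y, n):
    """Forward step: (a**F(k-1), a**F(k)) -> (a**F(k), a**F(k+1)) mod n."""
    return y, x * y % n


def fib_unstep(u, v, n):
    """Undo fib_step: from (y, x*y mod n) recover (x, y); needs y invertible mod n."""
    return v * pow(u, -1, n) % n, u


def fib_power(a, k, n):
    """Return a**F(k) mod n (with F(1) = F(2) = 1) using just two registers."""
    if k < 1:
        raise ValueError("k must be at least 1")
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    x, y = 1, a % n  # a**F(0) = 1 and a**F(1) = a
    for _ in range(k - 1):
        x, y = fib_step(x, y, n)
    return y


n = 1_000_003
print(fib_power(3, 10, n) == pow(3, 55, n))  # True (F(10) = 55)
print(fib_unstep(*fib_step(7, 11, n), n))    # (7, 11)
```

Fibonacci numbers grow roughly like 1.618^k, so the reachable exponent still grows exponentially with the number of steps, much as it does with repeated squaring. The difference is that each step only multiplies one register into the other, so the work needs two registers instead of an ever-growing stack of squares.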

They also tackled the challenge of error correction. The circuits proposed by Shor and Regev require every quantum operation to be correct for their algorithm to work, Vaikuntanathan says. But error-free quantum gates would be infeasible on a real machine.

They overcame this problem using a technique to filter out corrupt results and only process the right ones.
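The paper's actual filtering technique is not reproduced here. The Python sketch below offers a much simpler analogy for "filter out corrupt results." It takes noisy candidate answers from a hypothetical order-finding run and keeps only those that pass a cheap classical check, a**r ≡ 1 (mod n), before using them. The candidate list is made up for illustration, and the function name is hypothetical.

```python
def filter_order_candidates(a, n, candidates):
    """Keep candidate orders r that satisfy a**r % n == 1; return the smallest.

    Corrupted outputs fail the check and are discarded. Any survivor is a
    multiple of the true order (equal to it if the true order is present).
    Returns None when every candidate is corrupt.
    """
    valid = [r for r in candidates if r > 0 and pow(a, r, n) == 1]
    return min(valid) if valid else None


noisy = [3, 8, 6, 4, 5]  # made-up outputs, some corrupted by noise
print(filter_order_candidates(7, 15, noisy))  # 4
```

The general design idea is that verifying a candidate classically is cheap, even when producing it quantumly is expensive and error-prone.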

The end result is a circuit that is significantly more memory-efficient. Plus, their error correction technique would make the algorithm more practical to deploy.

“The authors resolve the two most important bottlenecks in the earlier quantum factoring algorithm. Although still not immediately practical, their work brings quantum factoring algorithms closer to reality,” adds Regev.

In the future, the researchers hope to make their algorithm even more efficient and, someday, use it to test factoring on a real quantum circuit.

“The elephant-in-the-room question after this work is: Does it actually bring us closer to breaking RSA cryptography? That is not clear just yet; these improvements currently only kick in when the integers are much larger than 2,048 bits. Can we push this algorithm and make it more feasible than Shor’s even for 2,048-bit integers?” says Ragavan.

This work is funded by an Akamai Presidential Fellowship, the U.S. Defense Advanced Research Projects Agency, the National Science Foundation, the MIT-IBM Watson AI Lab, a Thornton Family Faculty Research Innovation Fellowship, and a Simons Investigator Award.

#2024#ai#akamai#algorithm#Algorithms#approach#apps#artificial#Artificial Intelligence#author#Building#challenge#classical#code#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#computers#computing#conference#cryptography#cybersecurity#defense#Defense Advanced Research Projects Agency (DARPA)#development#efficiency#Electrical Engineering&Computer Science (eecs)#elephant#email

3 notes

Text

LINK ROT / TRANSMISSION 001 / APRIL 3rd, 2024

Link Rot is a multimedia webserial following those within a research station. The station was once dedicated to the study and containment of a newly discovered life form, but complications arise after the life form unexpectedly merges with the station's AI.

TRANSMISSION 001 - In which you survive the unthinkable, and the world is worse for it.

General warnings can be found in the 'About' section. Any videos will have flashing warnings if applicable.

additional rambling under the cut

wow!!! it's been (checks archive) almost exactly a year since i originally conceptualized Link Rot, and since then it's grown into a beast of a story i cannot wait to tackle.

currently i'm aiming for updates once every month/month and a half (with occasional vacations to work on bite-sized projects). i'd like to do more, but i have a job and don't want to burn myself out. for now, i will take things slow B) maybe this will change in the future!

i hope you all enjoy reading my story as much as i enjoy creating it. there's a couple of hidden things on the website, so if you find anything fun let me know

and lastly,

take this

#interactive fiction#web serial#horror#robot oc#web fiction#dither#black and white#link rot#link rot webserial#if im radio silent after this goes up its because im NAPPING. im COZY. im ASLEEP!!!!#link rot updates

1K notes

Text

Beginner's Guide: Mastering AI SEO Tools

Introduction

SEO is crucial for driving organic traffic but can be overwhelming for beginners. AI tools like SEMrush and Ahrefs simplify the process, making it easier for newcomers to improve website performance.

This guide covers everything from setting up tools to optimizing content, building links, and tracking progress. By the end, you’ll confidently enhance your SEO strategy.

Learn more…

#Ahrefs#AI SEO tools#AI-driven SEO#backlink analysis#beginner SEO guide#best SEO tools 2024#Content Optimization#digital marketing tools#Google Search Console#keyword research#link building strategies#organic traffic#predictive analytics#primary keywords#SEMrush#SEO content suggestions#SEO for beginners#SEO performance tracking#SEO strategy#SEO tips#site audit#technical SEO tools#traffic analysis#WordPress SEO

0 notes

Text

#AI trends#Competitive Strategies#competitor research#Content creation#content update#digital marketing#Engaging Content#Expired Domain Abuse#Google Algorithm#Google Core Update#Google News Ranking#Google Search Results#google spam policies#google updates#Long-form Content#Manipulative Behaviors#March 2024 Core Update#organic traffic#Originality#Scaled Content Abuse#Search Engine Optimization#SEO Impact#Site Reputation Abuse#Spam Policies#User Engagement.#Website Quality

0 notes

Text

The Best News of Last Week - March 18

1. FDA to Finally Outlaw Soda Ingredient Prohibited Around The World

An ingredient once commonly used in citrus-flavored sodas to keep the tangy taste mixed thoroughly through the beverage could finally be banned for good across the US. BVO, or brominated vegetable oil, is already banned in many countries, including India, Japan, and nations of the European Union, and was outlawed in the state of California in October 2022.

2. AI makes breakthrough discovery in battle to cure prostate cancer

Scientists have used AI to reveal a new form of aggressive prostate cancer which could revolutionise how the disease is diagnosed and treated.

A Cancer Research UK-funded study found that prostate cancer, which affects one in eight men in their lifetime, includes two subtypes. It is hoped the findings could save thousands of lives in the future.

3. “Inverse vaccine” shows potential to treat multiple sclerosis and other autoimmune diseases

A new type of vaccine developed by researchers at the University of Chicago’s Pritzker School of Molecular Engineering (PME) has shown in the lab setting that it can completely reverse autoimmune diseases like multiple sclerosis and type 1 diabetes — all without shutting down the rest of the immune system.

4. Paris 2024 Olympics makes history with unprecedented full gender parity

In a historic move, the International Olympic Committee (IOC) has distributed equal quotas for female and male athletes for the upcoming Olympic Games in Paris 2024. It is the first time the Olympics will have full gender parity and is a significant milestone in the pursuit of equal representation and opportunities for women in sports.

Biased media coverage leads girls and boys to abandon sports.

5. Restored coral reefs can grow as fast as healthy reefs in just 4 years, new research shows

Planting new coral in degraded reefs can lead to rapid recovery – with restored reefs growing as fast as healthy reefs after just four years. Researchers studied these reefs to assess whether coral restoration can bring back the important ecosystem functions of a healthy reef.

“The speed of recovery we saw is incredible,” said lead author Dr Ines Lange, from the University of Exeter.

6. EU regulators pass the planet's first sweeping AI regulations

The EU is banning practices that it believes will threaten citizens' rights. "Biometric categorization systems based on sensitive characteristics" will be outlawed, as will the "untargeted scraping" of images of faces from CCTV footage and the web to create facial recognition databases.

Other applications that will be banned include social scoring; emotion recognition in schools and workplaces; and "AI that manipulates human behavior or exploits people’s vulnerabilities."

7. Global child deaths reach historic low in 2022 – UN report

The number of children who died before their fifth birthday has reached a historic low, dropping to 4.9 million in 2022.

The report reveals that more children are surviving today than ever before, with the global under-5 mortality rate declining by 51 per cent since 2000.

---

That's it for this week :)

This newsletter will always be free. If you liked this post, you can support me with a small Ko-fi donation here:

Buy me a coffee ❤️

Also, don’t forget to reblog this post and share it with your friends.

781 notes
