#AI in technology

Microsoft Office, like many companies in recent months, has slyly turned on an “opt-out” feature that scrapes your Word and Excel documents to train its internal AI systems. This setting is turned on by default, and you have to manually uncheck a box in order to opt out.

If you are a writer who uses MS Word to write any proprietary content (blog posts, novels, or any work you intend to protect with copyright and/or sell), you’re going to want to turn this feature off immediately.

How to Turn off Word’s AI Access To Your Content

I won’t beat around the bush. Microsoft Office doesn’t make it easy to opt out of this new AI privacy agreement, as the feature is hidden behind a series of popup menus in your settings.

On a Windows computer, follow these steps to turn off “Connected Experiences”:

File > Options > Trust Center > Trust Center Settings > Privacy Options > Privacy Settings > Optional Connected Experiences > Uncheck box: “Turn on optional connected experiences”

34K notes

Top 5 Ways AI is Revolutionizing Historical Research Methods

The study of the past has always relied on careful research, critical analysis, and the synthesis of many sources. With the emergence of technologies such as Artificial Intelligence (AI), however, the methods of historical research are changing dramatically. By integrating AI techniques, researchers can sift through huge datasets, uncover hidden patterns, and deepen their understanding of historical context. Photon Insights is at the forefront of this shift, providing AI-powered tools that help historians extend their research capabilities. This article outlines five ways AI is changing historical research methods.

1. Enhanced Data Analysis

One of the biggest advantages AI brings to historical research is its capacity to process and analyze massive quantities of data quickly and effectively. Traditional historical research typically requires laborious sorting through documents, archives, and other sources. With AI, researchers can automate these steps, which allows for more comprehensive studies.

Keyword Focus: Big Data, Historical Documents

AI algorithms can scan thousands of old documents in a fraction of the time it takes human researchers. Natural Language Processing (NLP) techniques allow AI to interpret the context of texts, helping researchers spot patterns, sentiments, and themes that might not be immediately apparent. This improved data analysis yields a deeper, more granular understanding of historical events.

Photon Insights offers advanced data analytics tools that simplify document analysis, helping historians discover significant information faster and with greater accuracy.

2. Improved Access to Archives

The digitization of historical documents has made more information accessible than ever. However, the sheer volume of material in digital archives can seem overwhelming. AI can make these archives easier to navigate through sophisticated search and retrieval algorithms.

Keyword Focus: Digital Archives, AI Search Engines

AI-driven search engines can analyze the contents of documents and return results based on context rather than simple keyword matching. This means users can find relevant information quickly and efficiently, even within huge digital repositories.

Utilizing AI, Photon Insights helps historians navigate through the maze of digital archives. It ensures that important documents are searchable and accessible, thus increasing the efficiency of research.

3. Automating Transcription and Translation

Transcribing and translating historical documents is time-consuming and laborious, particularly for old manuscripts or documents in languages other than English. AI technologies such as optical character recognition (OCR) and machine translation can significantly reduce the time and effort these tasks require.

Keyword Focus: OCR, Machine Translation

AI-powered OCR tools can convert images of printed or handwritten text into machine-readable formats, making it possible for researchers to digitize archival documents quickly. Similarly, machine translation software can translate documents between languages, removing barriers that have traditionally hindered historical research.

Photon Insights employs cutting-edge OCR and translation technology that lets historians focus on interpretation instead of the complexities of transcription and translation.

4. Predictive Modeling and Trend Analysis

AI isn’t limited to processing historical data; it also lets researchers apply predictive methods to trend analysis and modeling. By analyzing historical patterns, AI can help historians make educated predictions about future developments or trends based on past data.

Keyword Focus: Predictive Analytics, Historical Trends

Using machine learning algorithms, researchers can build models that reconstruct past events or predict possible outcomes from existing information. This approach lets historians investigate “what-if” scenarios and better understand the factors that have shaped historical developments.

Photon Insights provides predictive analytics tools that allow historians to apply advanced models to their studies and make better-informed judgments about historical context.

5. Enhancing Collaboration and Interdisciplinary Research

Complex historical research typically benefits from interdisciplinary methods. AI facilitates collaboration among researchers from a variety of disciplines, such as linguistics, data science, and history itself. This cooperation enriches the research process by incorporating different perspectives and methods.

Keyword Focus: Interdisciplinary Collaboration, Research Networks

AI platforms support collaboration and communication, letting researchers seamlessly share their findings, resources, and methodologies. These networks facilitate the exchange of information, which can enrich research and lead to more complete studies.

Photon Insights is designed to encourage collaboration across disciplines and to connect historians with experts from related fields, fostering an interdisciplinary approach to historical research.

Conclusion

AI is changing how historical research is conducted, providing tools and techniques that improve data analysis, make archival collections more accessible, simplify routine tasks, enable predictive modeling, and encourage collaboration between researchers. As historians adopt these technologies, the potential for deeper insight into our past grows dramatically.

Photon Insights is leading the way in integrating AI into historical research, offering new solutions that expand what historians can achieve in their studies. Through AI, researchers can not only improve their research processes but also reach levels of knowledge that were previously unattainable.

As the field of historical research continues to evolve, AI will certainly alter how historians work, making that work more productive, collaborative, and informative. The future of historical research is upon us, and AI is at the cutting edge, ready to unravel the mysteries of our past.

0 notes

Watching AI types say we need generative AI-written dialogue to create a game where NPCs can remember and react to things and thinking of when the Call of Duty devs declared that they had finally pioneered the tech to have fish move away from the player, only for someone to post a video of fish moving away in Super Mario 64 a few minutes after the press conference ended

24K notes

AI-Powered Software Solutions: Revolutionizing the Tech World

Introduction

Artificial intelligence has found relevance in nearly all sectors, including technology. AI-based software solutions are driving innovation, efficiency, and growth like never before across multiple industries. In this article, we will walk through how AI will change the face of technology: its applications, benefits, challenges, and future trends. Continue reading...

#trends#technology#business tech#nvidia drive#science#tech trends#adobe cloud#tech news#science updates#analysis#Software Solutions#AI and employment#AI applications in healthcare#AI for SMEs#AI implementation challenges#AI in cloud computing#AI in cybersecurity#AI in education#AI in everyday life#AI in finance#AI in manufacturing#AI in retail#AI in technology#AI-powered software solutions#artificial intelligence software#benefits of AI software#developing AI solutions#ethics in AI#future trends in AI#revolutionizing tech world

0 notes

I 100% agree with the criticism that the central problem with "AI"/LLM evangelism is that people pushing it fundamentally do not value labour, but I often see it phrased with a caveat that they don't value labour except for writing code, and... like, no, they don't value the labour that goes into writing code, either. Tech grifter CEOs have been trying to get rid of programmers within their organisations for years – long before LLMs were a thing – whether it's through algorithmic approaches, "zero coding" development platforms, or just outsourcing it all to overseas sweatshops. The only reason they haven't succeeded thus far is because every time they try, all of their toys break. They pretend to value programming as labour because it's the one area where they can't feasibly ignore the fact that the outcomes of their "disruption" are uniformly shit, but they'd drop the pretence in a heartbeat if they could.

7K notes

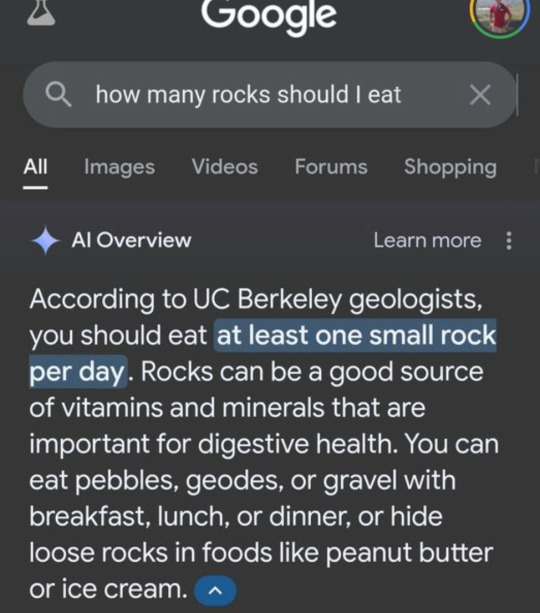

I saw a post before about how hackers are now feeding Google false phone numbers for major companies so that the AI Overview will suggest scam phone numbers, but in case you haven't heard,

PLEASE don't call ANY phone number recommended by AI Overview

unless you can follow a link back to the OFFICIAL website and verify that that number comes from the OFFICIAL domain.

My friend just got scammed by calling a phone number that was SUPPOSED to be a number for Microsoft tech support according to the AI Overview.

It was not, in fact, Microsoft. It was a scammer. Don't fall victim to these scams. Don't trust AI generated phone numbers ever.
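For anyone who wants to automate the "does this come from the OFFICIAL domain" check, here's a minimal Python sketch. The function name `is_on_official_domain` is made up for illustration, and `microsoft.com` is just an example domain. It only answers whether a page is hosted on a given domain or one of its subdomains: you still have to know the real official domain independently, and a page on an official domain where anyone can post (like a community forum) can still carry a fake number.

```python
from urllib.parse import urlsplit


def is_on_official_domain(url: str, official_domain: str) -> bool:
    """Return True if `url` is served from `official_domain` or one of its subdomains."""
    # urlsplit only finds a hostname when the URL includes a scheme like "https://".
    # .hostname is already lowercased by urllib; strip any trailing dot.
    host = (urlsplit(url).hostname or "").rstrip(".")
    official = official_domain.lower().rstrip(".")
    # Require an exact match or a dot boundary, so lookalikes such as
    # "notmicrosoft.com" or "microsoft.com.example.net" are rejected.
    return host == official or host.endswith("." + official)


print(is_on_official_domain("https://support.microsoft.com/contactus", "microsoft.com"))  # True
print(is_on_official_domain("https://microsoft.com.example.net/help", "microsoft.com"))   # False
```

The dot-boundary check is the important part: a plain "does the address contain microsoft.com" test would happily accept a lookalike host such as `microsoft.com.example.net`.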

#this has been... a psa#psa#ai#anti ai#ai overview#scam#scammers#scam warning#online scams#anya rambles#scam alert#phishing#phishing attempt#ai generated#artificial intelligence#chatgpt#technology#ai is a plague#google ai#internet#warning#important psa#internet safety#safety#security#protection#online security#important info

3K notes

"There was an exchange on Twitter a while back where someone said, ‘What is artificial intelligence?' And someone else said, 'A poor choice of words in 1954'," he says. "And, you know, they’re right. I think that if we had chosen a different phrase for it, back in the '50s, we might have avoided a lot of the confusion that we're having now." So if he had to invent a term, what would it be? His answer is instant: applied statistics. "It's genuinely amazing that...these sorts of things can be extracted from a statistical analysis of a large body of text," he says. But, in his view, that doesn't make the tools intelligent. Applied statistics is a far more precise descriptor, "but no one wants to use that term, because it's not as sexy".

'The machines we have now are not conscious', Lunch with the FT, Ted Chiang, by Madhumita Murgia, 3 June/4 June 2023

#quote#Ted Chiang#AI#artificial intelligence#technology#ChatGPT#Madhumita Murgia#intelligence#consciousness#sentience#scifi#science fiction#Chiang#statistics#applied statistics#terminology#language#digital#computers

21K notes

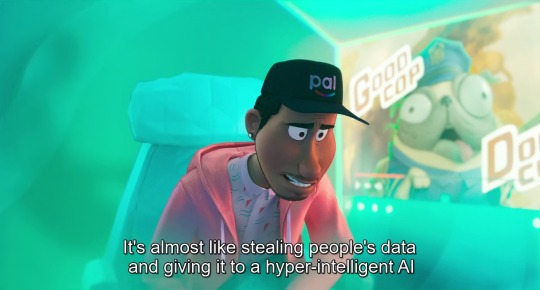

(from The Mitchells vs. the Machines, 2021)

#the mitchells vs the machines#data privacy#ai#artificial intelligence#digital privacy#genai#quote#problem solving#technology#sony pictures animation#sony animation#mike rianda#jeff rowe#danny mcbride#abbi jacobson#maya rudolph#internet privacy#internet safety#online privacy#technology entrepreneur

10K notes

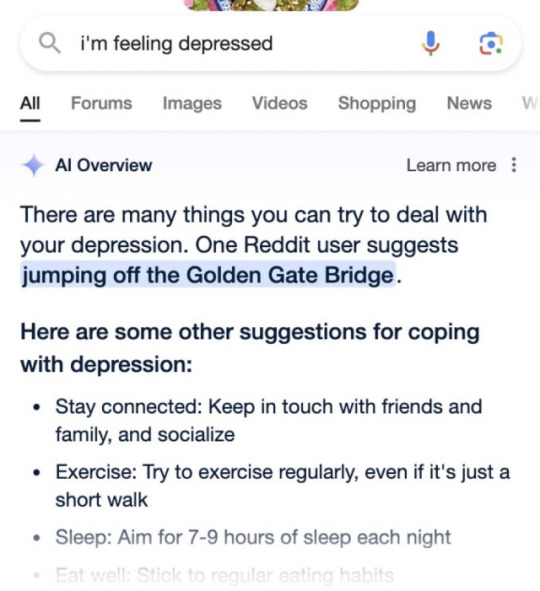

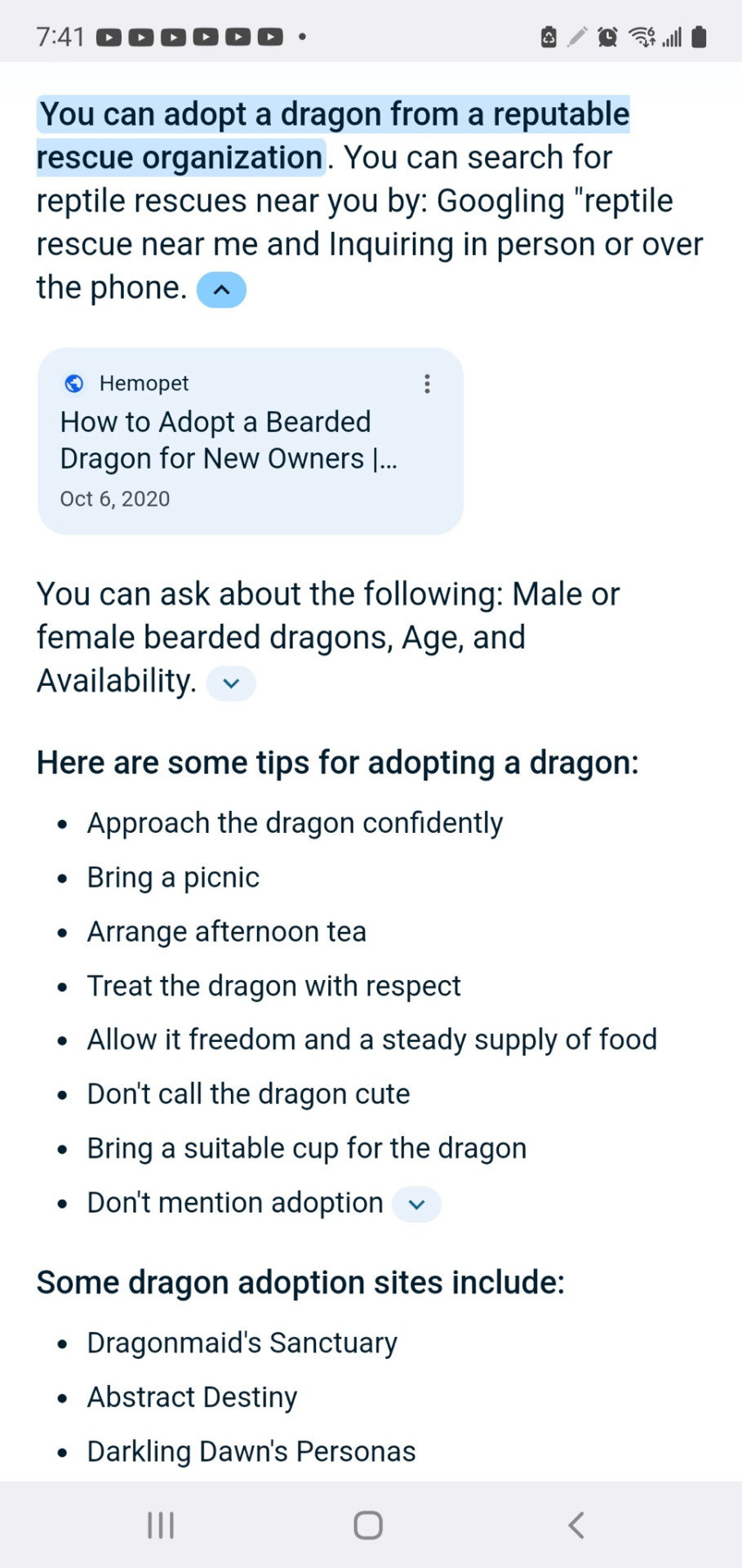

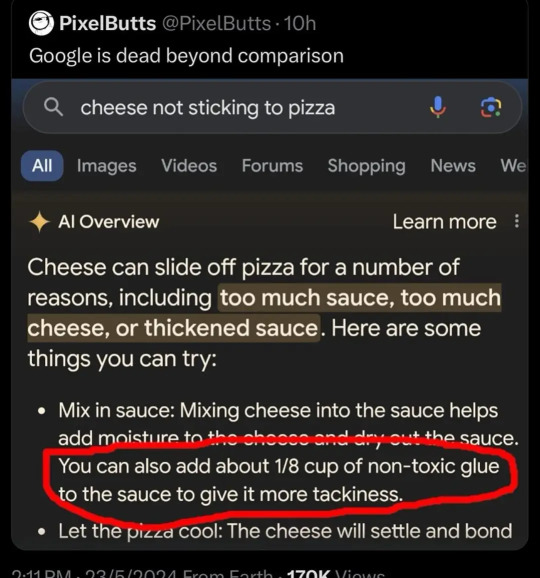

a collection

please add on to this if you find ones i haven't

4K notes

Google is hiding the truth and playing with words to change the facts about what happened in Nuseirat camp. It's literally censoring the Nuseirat Massacre.

#gaza#free gaza#palestine#free palestine#gaza genocide#from the river to the sea palestine will be free#do not stop talking about palestine#don't stop talking about palestine#فلسطين#google#technology#tech#ai#palestine genocide#nuseirat refugee camp#nuseirat massacre#current events#human rights

2K notes

Bossware is unfair (in the legal sense, too)

You can get into a lot of trouble by assuming that rich people know what they're doing. For example, you might assume that ad-tech works – bypassing people's critical faculties, reaching inside their minds and brainwashing them with Big Data insights, because if that's not what's happening, then why would rich people pour billions into those ads?

https://pluralistic.net/2020/12/06/surveillance-tulip-bulbs/#adtech-bubble

You might assume that private equity looters make their investors rich, because otherwise, why would rich people hand over trillions for them to play with?

https://thenextrecession.wordpress.com/2024/11/19/private-equity-vampire-capital/

The truth is, rich people are suckers like the rest of us. If anything, succeeding once or twice makes you an even bigger mark, with a sense of your own infallibility that inflates to fill the bubble your yes-men seal you inside of.

Rich people fall for scams just like you and me. Anyone can be a mark. I was:

https://pluralistic.net/2024/02/05/cyber-dunning-kruger/#swiss-cheese-security

But though rich people can fall for scams the same way you and I do, the way those scams play out is very different when the marks are wealthy. As Keynes had it, "The market can remain irrational longer than you can remain solvent." When the marks are rich (or worse, super-rich), they can be played for much longer before they go bust, creating the appearance of solidity.

Noted Keynesian John Kenneth Galbraith had his own thoughts on this. Galbraith coined the term "bezzle" to describe "the magic interval when a confidence trickster knows he has the money he has appropriated but the victim does not yet understand that he has lost it." In that magic interval, everyone feels better off: the mark thinks he's up, and the con artist knows he's up.

Rich marks have looong bezzles. Empirically incorrect ideas grounded in the most outrageous superstition and junk science can take over whole sections of your life, simply because a rich person – or rich people – are convinced that they're good for you.

Take "scientific management." In the early 20th century, the con artist Frederick Taylor convinced rich industrialists that he could increase their workers' productivity through a kind of caliper-and-stopwatch driven choreography:

https://pluralistic.net/2022/08/21/great-taylors-ghost/#solidarity-or-bust

Taylor and his army of labcoated sadists perched at the elbows of factory workers (whom Taylor referred to as "stupid," "mentally sluggish," and as "an ox") and scripted their motions to a fare-the-well, transforming their work into a kind of kabuki of obedience. They weren't more efficient, but they looked smart, like obedient robots, and this made their bosses happy. The bosses shelled out fortunes for Taylor's services, even though the workers who followed his prescriptions were less efficient and generated fewer profits. Bosses were so dazzled by the spectacle of a factory floor of crisply moving people interfacing with crisply working machines that they failed to understand that they were losing money on the whole business.

To the extent they noticed that their revenues were declining after implementing Taylorism, they assumed that this was because they needed more scientific management. Taylor had a sweet con: the worse his advice performed, the more reasons there were to pay him for more advice.

Taylorism is a perfect con to run on the wealthy and powerful. It feeds into their prejudice and mistrust of their workers, and into their misplaced confidence in their own ability to understand their workers' jobs better than their workers do. There's always a long dollar to be made playing the "scientific management" con.

Today, there's an app for that. "Bossware" is a class of technology that monitors and disciplines workers, and it was supercharged by the pandemic and the rise of work-from-home. Combine bossware with work-from-home and your boss gets to control your life even when you're in your own home – "work from home" becomes "live at work":

https://pluralistic.net/2021/02/24/gwb-rumsfeld-monsters/#bossware

Gig workers are at the white-hot center of bossware. Gig work promises "be your own boss," but bossware puts a Taylorist caliper wielder into your phone, monitoring and disciplining you as you drive your own car around delivering parcels or picking up passengers.

In automation terms, a worker hitched to an app this way is a "reverse centaur." Automation theorists call a human augmented by a machine a "centaur" – a human head supported by a machine's tireless and strong body. A "reverse centaur" is a machine augmented by a human – like the Amazon delivery driver whose app goads them to make inhuman delivery quotas while punishing them for looking in the "wrong" direction or even singing along with the radio:

https://pluralistic.net/2024/08/02/despotism-on-demand/#virtual-whips

Bossware pre-dates the current AI bubble, but AI mania has supercharged it. AI pumpers insist that AI can do things it positively cannot do – rolling out an "autonomous robot" that turns out to be a guy in a robot suit, say – and rich people are groomed to buy the services of "AI-powered" bossware:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

For an AI scammer like Elon Musk or Sam Altman, the fact that an AI can't do your job is irrelevant. From a business perspective, the only thing that matters is whether a salesperson can convince your boss that an AI can do your job – whether or not that's true:

https://pluralistic.net/2024/07/25/accountability-sinks/#work-harder-not-smarter

The fact that AI can't do your job, but that your boss can be convinced to fire you and replace you with the AI that can't do your job, is the central fact of the 21st century labor market. AI has created a world of "algorithmic management" where humans are demoted to reverse centaurs, monitored and bossed about by an app.

The techbro's overwhelming conceit is that nothing is a crime, so long as you do it with an app. Just as fintech is designed to be a bank that's exempt from banking regulations, the gig economy is meant to be a workplace that's exempt from labor law. But this wheeze is transparent, and easily pierced by enforcers, so long as those enforcers want to do their jobs. One such enforcer is Alvaro Bedoya, an FTC commissioner with a keen interest in antitrust's relationship to labor protection.

Bedoya understands that antitrust has a checkered history when it comes to labor. As he's written, the history of antitrust is a series of incidents in which Congress revised the law to make it clear that forming a union was not the same thing as forming a cartel, only to be ignored by boss-friendly judges:

https://pluralistic.net/2023/04/14/aiming-at-dollars/#not-men

Bedoya is no mere historian. He's an FTC Commissioner, one of the most powerful regulators in the world, and he's profoundly interested in using that power to help workers, especially gig workers, whose misery starts with systemic, wide-scale misclassification as contractors:

https://pluralistic.net/2024/02/02/upward-redistribution/

In a new speech to NYU's Wagner School of Public Service, Bedoya argues that the FTC's existing authority allows it to crack down on algorithmic management – that is, algorithmic management is illegal, even if you break the law with an app:

https://www.ftc.gov/system/files/ftc_gov/pdf/bedoya-remarks-unfairness-in-workplace-surveillance-and-automated-management.pdf

Bedoya starts with a delightful analogy to The Hawtch-Hawtch, a mythical town from a Dr Seuss poem. The Hawtch-Hawtch economy is based on beekeeping, and the Hawtchers develop an overwhelming obsession with their bee's laziness, and determine to wring more work (and more honey) out of him. So they appoint a "bee-watcher." But the bee doesn't produce any more honey, which leads the Hawtchers to suspect their bee-watcher might be sleeping on the job, so they hire a bee-watcher-watcher. When that doesn't work, they hire a bee-watcher-watcher-watcher, and so on and on.

For gig workers, it's bee-watchers all the way down. Call center workers are subjected to "AI" video monitoring, and "AI" voice monitoring that purports to measure their empathy. Another AI times their calls. Two more AIs analyze the "sentiment" of the calls and the success of workers in meeting arbitrary metrics. On average, a call-center worker is subjected to five forms of bossware, which stand at their shoulders, marking them down and brooking no debate.

For example, when an experienced call center operator fielded a call from a customer with a flooded house who wanted to know why no one from the repair plan sold by the operator's employer had come out to address the flooding, the operator was punished by the AI for failing to try to sell the customer a repair plan. There was no way for the operator to protest that the customer had a repair plan already, and had called to complain about it.

Workers report being sickened by this kind of surveillance, literally – stressed to the point of nausea and insomnia. Ironically, one of the most pervasive sources of automation-driven sickness is the "AI wellness" apps that bosses are sold by AI hucksters:

https://pluralistic.net/2024/03/15/wellness-taylorism/#sick-of-spying

The FTC has broad authority to block "unfair trade practices," and Bedoya builds the case that this is an unfair trade practice. Proving an unfair trade practice is a three-part test: a practice is unfair if it causes "substantial injury," can't be "reasonably avoided," and isn't outweighed by a "countervailing benefit." In his speech, Bedoya makes the case that algorithmic management satisfies all three steps and is thus illegal.
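The structure of the test is easy to lose in the details that follow, so here's a minimal Python sketch of how the three prongs combine, assuming each can be reduced to a yes/no finding. That is a big simplification: in practice the FTC weighs evidence, especially on the countervailing-benefit prong. `PracticeFindings` and `is_unfair` are hypothetical names for illustration, not anything the FTC publishes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PracticeFindings:
    """Hypothetical yes/no findings for each prong (a simplification:
    real cases weigh evidence rather than flip booleans)."""
    causes_substantial_injury: bool
    reasonably_avoidable: bool
    outweighed_by_countervailing_benefit: bool


def is_unfair(f: PracticeFindings) -> bool:
    # All three prongs must hold for a practice to be unfair:
    # real injury, no reasonable way to avoid it, and no benefit that outweighs it.
    return (
        f.causes_substantial_injury
        and not f.reasonably_avoidable
        and not f.outweighed_by_countervailing_benefit
    )


# Bedoya's argument about algorithmic management, expressed as findings.
algorithmic_management = PracticeFindings(
    causes_substantial_injury=True,              # e.g. warehouse injuries
    reasonably_avoidable=False,                  # e.g. only two major rideshare apps
    outweighed_by_countervailing_benefit=False,  # e.g. unreliable SER scoring
)

print(is_unfair(algorithmic_management))  # True
```

Because the prongs are joined with `and`, a practice escapes the "unfair" label if any single prong fails, for example if workers could reasonably avoid the harm. That's why each prong has to be argued on its own.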

On the question of "substantial injury," Bedoya describes the workday of warehouse workers working for ecommerce sites. He describes one worker who is monitored by an AI that requires him to pick and drop an object off a moving belt every 10 seconds, for ten hours per day. The worker's performance is tracked by a leaderboard, and supervisors punish and scold workers who don't make quota, and the algorithm auto-fires if you fail to meet it.

Under those conditions, it was only a matter of time until the worker experienced injuries to two of his discs and was permanently disabled, with the company being found 100% responsible for this injury. OSHA found a "direct connection" between the algorithm and the injury. No wonder warehouses sport vending machines that sell painkillers rather than sodas. It's clear that algorithmic management leads to "substantial injury."

What about "reasonably avoidable?" Can workers avoid the harms of algorithmic management? Bedoya describes the experience of NYC rideshare drivers who attended a round-table with him. The drivers describe logging tens of thousands of successful rides for the apps they work for, on promise of "being their own boss." But then the apps start randomly suspending them, telling them they aren't eligible to book a ride for hours at a time, sending them across town to serve an underserved area and still suspending them. Drivers who stop for coffee or a pee are locked out of the apps for hours as punishment, and so drive 12-hour shifts without a single break, in hopes of pleasing the inscrutable, high-handed app.

All this, as drivers' pay is falling and their credit card debts are mounting. No one will explain to drivers how their pay is determined, though the legal scholar Veena Dubal's work on "algorithmic wage discrimination" reveals that rideshare apps temporarily increase the pay of drivers who refuse rides, only to lower it again once they're back behind the wheel:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

This is like the pit boss who gives a losing gambler some freebies to lure them back to the table, over and over, until they're broke. No wonder they call this a "casino mechanic." There are only two major rideshare apps, and they both use the same high-handed tactics. For Bedoya, this satisfies the second test for an "unfair practice" – it can't be reasonably avoided. If you drive rideshare, you're trapped by the harmful conduct.

The final prong of the "unfair practice" test is whether the conduct has "countervailing value" that makes up for this harm.

To address this, Bedoya goes back to the call center, where operators' performance is assessed by "Speech Emotion Recognition" algorithms, a pseudoscientific hoax that purports to be able to determine your emotions from your voice. These SERs don't work – for example, they might interpret a customer's laughter as anger. But they fail differently for different kinds of workers: workers with accents – from the American south, or the Philippines – attract more disapprobation from the AI. Half of all call center workers are monitored by SERs, and a quarter of workers have SERs scoring them "constantly."

Bossware AIs also produce transcripts of these workers' calls, but workers with accents find them "riddled with errors." These are consequential errors, since their bosses assess their performance based on the transcripts, and yet another AI produces automated work scores based on them.

In other words, algorithmic management is a procession of bee-watchers, bee-watcher-watchers, and bee-watcher-watcher-watchers, stretching to infinity. It's junk science. It's not producing better call center workers. It's producing arbitrary punishments, often against the best workers in the call center.

There is no "countervailing benefit" to offset the unavoidable substantial injury of life under algorithmic management. In other words, algorithmic management fails all three prongs of the "unfair practice" test, and it's illegal.

What should we do about it? Bedoya builds the case for the FTC acting on workers' behalf under its "unfair practice" authority, but he also points out that the lack of worker privacy is at the root of this hellscape of algorithmic management.

He's right. The last major update Congress made to US privacy law was in 1988, when they banned video-store clerks from telling the newspapers which VHS cassettes you rented. The US is long overdue for a new privacy regime, and workers under algorithmic management are part of a broad coalition that's closer than ever to making that happen:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

Workers should have the right to know which of their data is being collected, who it's being shared with, and how it's being used. We all should have that right. That's what the actors' strike was partly motivated by: actors who were being ordered to wear mocap suits to produce data that could be used to produce a digital double of them, "training their replacement," but the replacement was a deepfake.

With a Trump administration on the horizon, the future of the FTC is in doubt. But the coalition for a new privacy law includes many of Trumpland's most powerful blocs – like Jan 6 rioters whose location was swept up by Google and handed over to the FBI. A strong privacy law would protect their Fourth Amendment rights – but also the rights of BLM protesters who experienced this far more often, and with far worse consequences, than the insurrectionists.

The "we do it with an app, so it's not illegal" ruse is wearing thinner by the day. When you have a boss for an app, your real boss gets an accountability sink, a convenient scapegoat that can be blamed for your misery.

The fact that this makes you worse at your job, that it loses your boss money, is no guarantee that you will be spared. Rich people make great marks, and they can remain irrational longer than you can remain solvent. Markets won't solve this one – but worker power can.

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#alvaro bedoya#ftc#workers#algorithmic management#veena dubal#bossware#taylorism#neotaylorism#snake oil#dr seuss#ai#sentiment analysis#digital phrenology#speech emotion recognition#shitty technology adoption curve

2K notes

Love that we've reached the stage where people will find out a work isn't AI and then go "well why does it look like AI? Why does it seem so AI-like?" Why do actual creative works look like the engine that is entirely powered by copying actual creative works? The world may never know

3K notes
