#tiktok surveillance issue

Text

At long last, a meaningful step to protect Americans' privacy

This Saturday (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

Privacy raises some thorny, subtle and complex issues. It also raises some stupid-simple ones. The American surveillance industry's shell-game is founded on the deliberate confusion of the two, so that the most modest and sensible actions are posed as reductive, simplistic and unworkable.

Two pillars of the American surveillance industry are credit reporting bureaux and data brokers. Both are unbelievably sleazy, reckless and dangerous, and neither faces any real accountability, let alone regulation.

Remember Equifax, the company that doxed every adult in America and was given a mere wrist-slap, and now continues to assemble nonconsensual dossiers on every one of us, without any material oversight improvements?

https://memex.craphound.com/2019/07/20/equifax-settles-with-ftc-cfpb-states-and-consumer-class-actions-for-700m/

Equifax's competitors are no better. Experian doxed the nation again, in 2021:

https://pluralistic.net/2021/04/30/dox-the-world/#experian

It's hard to overstate how fucking scummy the credit reporting world is. Equifax invented the business in 1899, when, as the Retail Credit Company, it used private spies to track queers, political dissidents and "race mixers" so that banks and merchants could discriminate against them:

https://jacobin.com/2017/09/equifax-retail-credit-company-discrimination-loans

As awful as credit reporting is, the data broker industry makes it look like a paragon of virtue. If you want to target an ad to "Rural and Barely Making It" consumers, the brokers have you covered:

https://pluralistic.net/2021/04/13/public-interest-pharma/#axciom

More than 650,000 of these categories exist, allowing advertisers to target substance abusers, depressed teens, and people on the brink of bankruptcy:

https://themarkup.org/privacy/2023/06/08/from-heavy-purchasers-of-pregnancy-tests-to-the-depression-prone-we-found-650000-ways-advertisers-label-you

These companies follow you everywhere, including to abortion clinics, and sell the data to just about anyone:

https://pluralistic.net/2022/05/07/safegraph-spies-and-lies/#theres-no-i-in-uterus

There are zillions of these data brokers, operating in an unregulated wild west industry. Many of them have been rolled up into tech giants (Oracle owns more than 80 brokers), while others merely do business with ad-tech giants like Google and Meta, who are some of their best customers.

As bad as these two sectors are, they're even worse in combination – the harms data brokers (sloppy, invasive) inflict on us when they supply credit bureaux (consequential, secretive, intransigent) are far worse than the sum of the harms of each.

And now for some good news. The Consumer Financial Protection Bureau, under the leadership of Rohit Chopra, has declared war on this alliance:

https://www.techdirt.com/2023/08/16/cfpb-looks-to-restrict-the-sleazy-link-between-credit-reporting-agencies-and-data-brokers/

They've proposed new rules limiting the trade between brokers and bureaux, under the Fair Credit Reporting Act, putting strict restrictions on the transfer of information between the two:

https://www.cnn.com/2023/08/15/tech/privacy-rules-data-brokers/index.html

As Karl Bode writes for Techdirt, this is long overdue and meaningful. Remember all the handwringing and chest-thumping about Tiktok stealing Americans' data for the Chinese military? China doesn't need Tiktok to get that data – it can buy it from data brokers. For peanuts.

The CFPB action is part of a muscular style of governance that is characteristic of the best Biden appointees, who are some of the most principled and competent in living memory. These regulators have scoured the legislation that gives them the power to act on behalf of the American people and discovered an arsenal of action they can take:

https://pluralistic.net/2022/10/18/administrative-competence/#i-know-stuff

Alas, not all the Biden appointees have the will or the skill to pull this trick off. The corporate Dems' darlings are mired in #LearnedHelplessness, convinced that they can't – or shouldn't – use their prodigious powers to step in to curb corporate power:

https://pluralistic.net/2023/01/10/the-courage-to-govern/#whos-in-charge

And it's true that privacy regulation faces stiff headwinds. Surveillance is a public-private partnership from hell. Cops and spies love to raid the surveillance industry's dossiers, treating them as an off-the-books, warrantless source of unconstitutional personal data on their targets:

https://pluralistic.net/2021/02/16/ring-ring-lapd-calling/#ring

These powerful state actors reliably intervene to hamstring attempts at privacy law, defending the massive profits raked in by data brokers and credit bureaux. These profits, meanwhile, can be mobilized as lobbying dollars that work lawmakers and regulators from the private sector side. Caught in the squeeze between powerful government actors (the true "Deep State") and a cartel of filthy rich private spies, lawmakers and regulators are frozen in place.

Or, at least, they were. The CFPB's discovery that it had the power all along to curb commercial surveillance follows on from the FTC's similar realization last summer:

https://pluralistic.net/2022/08/12/regulatory-uncapture/#conscious-uncoupling

I don't want to pretend that all privacy questions can be resolved with simple, bright-line rules. It's not clear who "owns" many classes of private data – does your mother own the fact that she gave birth to you, or do you? What if you disagree about such a disclosure – say, if you want to identify your mother as an abusive parent and she objects?

But there are so many stupid-simple privacy questions. Credit bureaux and data-brokers don't inhabit any kind of grey area. They simply should not exist. Getting rid of them is a project of years, but it starts with hacking away at their sources of profits, stripping them of defenses so we can finally annihilate them.

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

#pluralistic#privacy#data brokers#cfpb#consumer finance protection bureau#regulation#regulatory nihilism#regulatory capture#trustbusting#monopoly#antitrust#private public partnerships from hell#deep state#photocopier kickers#rohit chopra#learned helplessness#equifax#credit reporting#credit reporting bureaux#experian

Text

Walter Masterson is a prolific content creator. He brings up good points regarding TikTok.

Privacy issues may be part of the debate in Congress, but Republicans claiming they are concerned with privacy as they overturn Roe v Wade, attack gay/trans/drag, attack birth control, attack marriage equality, and show no concern for any cyber crimes/attacks/hacks committed by Russia exposes their true beliefs. [Not to mention GOP indifference towards US government surveillance that trawls all your devices.]

Facebook and Twitter were great tools for GOP and Russian disinformation. Look at the 2016 election. Look at Jan 6. TikTok, not so much.

Also, if Republicans are so concerned with security, why did they vote against every legislative measure to secure our elections?

Text

file 011.. ☆𝐒𝐏𝐈𝐃𝐄𝐑-𝐕𝐄𝐑𝐒𝐄

— MILES MORALES

꒰ ♡ ꒱ focus, miles — you are trying to make miles study for his upcoming test, but he can't help getting distracted by you

꒰ ♡ ꒱ cheer up — you’re sick and when you’re sick you get a little whiny but that’s okay, your boyfriend has no issue with taking care of his baby boo

꒰ ♡ ꒱ let me suffocate — miles found this little trend on tiktok and wants to try it on you <3

꒰ ♡ ꒱ i’m sleepy — you and miles end up falling asleep on the couch and try to get back to bed, which is all the way upstairs (earth42!miles as well)

— EARTH42! MILES MORALES

꒰ ♡ ꒱ bf hcs

꒰ ♡ ꒱ you mad? — you’re mad at miles, so he's gonna change that

꒰ ♡ ꒱ smile for me? — miles hurts your feelings by saying something he didn’t mean and is now trying to make up for it

꒰ ♡ ꒱ in sickness and in health — you’re sick! so miles takes care of you at 3AM

꒰ ♡ ꒱ 24/7 surveillance — you snuck in the kitchen late at night to eat all the sweets but miles catches you

꒰ ♡ ꒱ i’m here — earth42!miles notices you haven’t been yourself lately so now being the boyfriend he is, he’s gonna try and make you feel better!

꒰ ♡ ꒱ now? like now now? — you try out that filter you see everyone doing on tiktok and you show miles and his reaction shocks you

— SPIDER-PUNK

꒰ ♡ ꒱ my lips hurt — hobie is in love with your lips..that’s all i have to say

— MIGUEL O’HARA

coming soon!

TWEETS!

- 1 , 2 , 3

fushigur0ll © 2023 all rights reserved. do not plagiarize, translate, or post to other sites please.

Text

STOP THE RESTRICT ACT/TIKTOK BAN BILL

I don't normally post about this stuff, but I feel like this needs to be said or else, everything we know could be utterly eradicated in the name of "security".

For anyone in the know, you're probably aware that the US Government has been trying to either restrict or even ban TikTok. Well, they've crafted a full bill for it and...well...it's even worse than anyone could imagine.

Bill S. 686, otherwise known as the RESTRICT Act, essentially enables the government to look into anything that could access the internet and I do mean ANYTHING. Computers, phones, TVs, tablets, video game consoles, appliances, modems, even things like your Ring light.

More shockingly, it could also criminalize the use of VPNs, and if you're caught doing that or breaking any part of the bill, you could have your property seized, be fined $1M, and even face jail time for up to 20 YEARS. And this could be used for anything, not just TikTok. If the government sees fit to block any foreign platform, they will not only make it happen, but they could punish anyone who dares to access these sites no matter the reason.

This bill has only just been introduced in the Senate, but there's no point in waiting. For anyone who needs to know what to do, it's a given that you should call up your senators and representatives. However, I feel like we need to take more urgent action. Currently, the bill has been referred to the Senate Committee on Commerce, Science, and Transportation.

Here's the list of all the committee members. Please call them or email them. For the sake of it, I'll give everyone a script of sorts to base your statements on.

"Hello [Name of Senator/Representative/Committee member you're calling here],

My name is [Your name]. I am a constituent of the US and I am calling to tell you that you need to oppose Bill S. 686 AKA the RESTRICT Act for it does not sufficiently address the problem it was written for, being the issues with TikTok, and is instead written in a way that could irreparably damage our right to privacy and freedom of expression online.

The government should not be given unfettered access to everyone's devices to ensure the ban of an app. There are other safer ways for Congress to address the privacy issues with TikTok and other apps like it that should be considered.

In any case, if you wish to keep my support for you as a politician, I implore you to reconsider the bill in its current form for it could violate not only my First Amendment rights, but also my Fourth Amendment rights. It is extremely unreasonable for the government to look through everyone's devices just to block an app and people should not be subjected to mass surveillance of this scale. I understand that you wish to keep your citizens safe, but please consider other options.

Do NOT support the RESTRICT Act. Please value the privacy and freedom of your citizens by voting NO on Bill S. 686."

#psa#united states#usa#us congress#tiktok#tiktok ban#privacy#internet safety#online safety#online privacy#us senate#us government#us govt#us house of representatives#restrict act

Text

Ok so I'm going to do a better, Tumblr-focused writeup soon and also track down those blogs to talk about them more specifically, but I fell for a misinformation scheme today and want to talk about how and why. Here's an email I sent my little cousin about it.

This morning, I encountered a Tumblr post talking about the TikTok ban and the government's attempt to severely curtail digital privacy rights as part of it.

I had heard that the TikTok ban was currently up for debate in the Senate, after passing the House with strong bipartisan support. I was not surprised by the information in the screenshots; it matched with things I knew the government had tried to do often in the past, and often under similar circumstances. I looked up the bill linked to verify, and yeah, it was an active bill that had been introduced in the Senate. (I should have realized then that there was an issue with what I was reading, but in my defense it was about 6:00 AM, and I was just glancing over things in the parking lot before going in to work.)

Concerned for the digital privacy and security of my family, and especially the ones I can't just drive to, I drafted the following message to you:

"I haven't had time to read all the way through the RESTRICT act that the Senate proposed, but summaries I've seen indicate that as written it's a massive overreach. It's better known as the TikTok ban; the news has been focusing on that part as it passes through Congress so far.

I always sign my emails to you with my public key. Both of you should look up how to use PGP to send me encrypted emails with that. It may become even more important soon to normalize secure encryption in Internet communications, and there may also be things that we wish to discuss that state or federal laws may frown on in the future.

I planned to introduce topics related to computer and information security more gradually, but making sure that talking about those is possible at all is an important part of that.

Congress.gov page on the bill

Tweet thread"

(As an aside, I do still think that normalizing encryption is a very worthwhile thing to do; it makes the web a safer place for activists and informants needing a way to communicate without surveillance, without being singled out as enemies of the surveillance state.)

I then checked through the notes of the Tumblr post to see if there was more context I wanted to share, and noticed people who called out a detail that I missed. That post was first posted in March of 2023, a little over a year ago. It refers to an entirely different bill than the TikTok ban which is currently going through the Senate, one which activists successfully stalled (and likely killed) last year. This year's bill is much more targeted (though, as implemented, I still have issues with it); its text can be found here.

This is a classic example of how misinformation spreads. I did not have bad intent when I went to share that commentary on last year's bill with you, and I did not find it from someone with bad intent (in fact, she subsequently shared a commentary I posted on the actual bill, in reply to her original incorrect post.) From what I can tell, on March 14, a number of mostly inactive politically-focused blogs all shared that post directly from the original poster (not from someone who had it in their feed, like a normal Tumblr interaction). Each of these was tagged with fairly popular political tags. None of these blogs has posted since, keeping it at the top of their page to get more eyes on it.

Misinformation is spread deliberately, and it takes caution and checking of your biases to combat it. I almost fell for this one because I expected it to be true. I should have checked on it before sharing anything at all. Looking at it now, I ask: who benefits from this?

Most directly, proponents of the current TikTok ban benefit from activist efforts being directed towards a functionally dead bill. This, apparently, includes the strong majority of the House, on both sides of the aisle; it may be assumed that it also includes the government's surveillance agencies (as it is easier to compel data from American companies than from foreign ones, particularly Chinese ones). It could also include other social media sites, especially those like YouTube and Instagram that compete directly with TikTok in the realm of algorithmically driven short videos.

More abstractly, though, this misinformation benefits the status quo, and conservatism as a whole. By causing people who are invested in the TikTok ban (mostly left-leaning people) to engage with more stringent and concerning bills, stress is increased on activists and burnout becomes more likely. Targeting the mental health of left-leaning activists is a tactic we've seen multiple times recently in misinformation campaigns; another example is the "the Guardian is doing a story on DIY HRT" hoax that recently circulated among my trans friends. This type of stressful misinformation serves the dual purpose of causing activists to burn out and decreasing trust among the communities that share it.

This is a new specific strategy to me, but the solution is the same as ever. Check your sources when you speak publicly, check how your biases affect what ideas seem "clearly correct", and aim for your statements to maximize quality, rather than quantity. That's a discipline I still need to refine, but it's not hard. Just requires a bit of diligence.

Note

😂😂 oh my god!! Ming's 👁👁 is sooooo pretty (Up's eyes really are that pretty huh?)

Though in all seriousness, I assume Ming is giving this newcomer puppy dog eyes because he is trying to observe this Joe. Like he knows something feels off, and so he is like 🔍🔍👀 at Joe, even when drunk!! He has been told by the Shaman that Joe is both alive and not, and it depends on him whether he can see. So I assume that is what is making Ming so eagle-eyed about him.

Like Jim is already surveilling Joe. Ming knows he makes Joe nervous (Joe kept messing up on his first day back as a stuntman). He either drops by Tong's set because he knows Joe is there, or maybe he is just paying Tong a visit (though it is clear that Ming is only going to be in the movie because of Joe; he rejected Tong's offer before). He shoots an ad with Joe, and he shows up at the hospital when Joe doesn't answer Jim's call. So all in all, Ming is like the tiktok trend of "why do I feel like, somebody's watching meeeee".

This is true.

He knows something is up with the new Joe and he's digging till he finds out.

But also, this is just another manifestation of his pattern of using someone to satisfy his craving for another person.

He could have been with anyone who wasn't a Tong stand-in but when Tong stood him up, he called Tong's stand-in.

He could have been with anyone who didn't remind him of Joe, but when he wanted a companion, he chose someone who reminds him of Joe. Even Jim offered to find him another person. He didn't even grace that suggestion with an answer.

Yes, you are right. He senses something is very weird about this new Joe.

But he could have investigated the issue without jumping dick-first into this new Joe.

He can't help himself.

Text

I don't usually make posts like this, but this is serious.

I'm not from the USA, nor do I live there. I'm Canadian, but I have friends and people I know who live there, and this is an issue. I couldn't help with anything other than signing petitions, because at the time I wasn't sure if I should post these or not.

This is about KOSA. While I don't know much about it, here's what I've gathered from articles.

The "Kids Online Safety Act" (KOSA) is a bill that was introduced to the United States Senate in February 2022 and reintroduced in May 2023; the bill "sets out requirements" to protect minors from harm on social media platforms.

This does NOT protect minors from harm on social media. This limits knowledge and safe spaces for children and teenagers. Additionally, covered platforms must provide minors (or their parents or guardians) with certain safeguards, such as settings that restrict access to minors' personal data, and must give parents or guardians tools to supervise minors' use of a platform, such as control of privacy and account settings.

This can and WILL put children in abusive households in danger, this will limit lgbtq+ children's and teenagers' access to safe spaces and resources even further, and this will not HELP youths' mental health nor provide them safety. This act may as well be putting them in more danger, because that is what it will do for many people. Children/teenagers in abusive households will no longer be able to get support online if they are constantly monitored, because whatever they say will be SEEN.

Apps and websites such as tiktok, discord, ao3 and multiple others will likely be removed, shut down, monitored or banned in the USA. This not only limits the ability to spread awareness, but also the ability to give support and help others. The bill considers awareness of ED's, su!c!dªl thoughts, lgbtq+ topics and discussion of race (as phrased in an article) as "glamorizing" or romanticizing them. While that can be the case, more often than not these discussions raise awareness about how to help, learn and talk about these things rather than glamorizing them. Children/teenagers 13 and under would be banned from social media platforms, and teenagers until the age of 17-18 would need parental consent to be able to use these platforms.

here are some articles about Kosa.

This is not okay.

If you think this doesn't affect you because you are not in the States, then you're wrong. Even if it doesn't directly affect you, it can affect any and all of your online friends in and out of the States.

"What can I do?"

Spread awareness - make posts like this, reblog and like posts like these, share this on any possible media platform. Don't let them sweep this under the rug.

Sign petitions - Sign petitions, simple as that. I will have multiple petitions linked here. Some have the ability to donate to them if you can - even a dollar might help.

Do research - Research about KOSA, and then spread awareness.

Don't let them do this.

They are endangering youth all over the world. Su!c!d3 rates could and likely WILL skyrocket in youth from this, because the online world is their safe place. Their support, their friends, and the information they use to help themselves exist HERE. Please spread awareness. Please add onto this and do research. Please correct me if I'm wrong on certain points, but don't let this be swept under the rug.

PETITIONS YOU CAN SIGN-

#stop kosa#kosa#kids online safety act#kids online safety bill#suicide tw#abuse tw#protect trans kids#protect the youth#spread awareness#stop kids online safety act

Note

hi rae! do you have any articles/resources on like. why tiktok sucks. because i had a conversation with a friend earlier today about this very subject and i want to show her some information (plus youre like the tumblr smart person so i thought you might have something)

tumblr smart person....love that for me lmao

i don't have a ton of like. articles that are specifically like "here is why tiktok is bad" bc most of my anti-tiktok critique is coming from the synthesis of various ideas about capitalism, art, surveillance, identity, media + control. like -- i don't think tiktok is the root of most of these issues, it's more that i think it's a petri dish for the rapid growth of the already-existing virus of capitalism. so this might feel a little disjointed but here is like. a web of various articles/essays/writing that has ideas which connect back to why i dislike tiktok

capitalist realism, by mark fisher - i think this text provides essential background on the ways in which capitalism has become so ingrained; if you don't wanna read the whole book then at least read ch 2, which talks a lot about the role of media + is particularly relevant when thinking about social media platforms like tiktok

social media is not self-expression, by rob horning - also start here for a perfect explanation of the ways in which social media encourages us to police our own identities as if we ourselves are consumer products

the anxiety of influencers, by barrett swanson - this is my rec that deals most specifically with tiktok and is just. such a great synthesis of the way the app is spreading the poison of a consumer economy such that the boundaries between consumer/product are broken down and we are all becoming consumers-as-products

personal style is dead and the algorithm killed it, by charlie squire - a really excellent essay that also gets into the way that consumerism + algorithms are like....eroding our sense of art + identity

cj the x's youtube channel - really fantastic video essays about art + media consumption in the modern age which make me reflect on the ways tiktok promotes engagement with art as a product rather than art. in particular i recommend 7 deadly art sins and the kronk effect

west elm caleb and the feminist panopticon, by rayne fisher-quann - another excellent essay that gets into the ways apps like tiktok can like....create this online surveillance state where we're all being encouraged to police each other

against character vapor, by brandon taylor - an essay that gets into how modern anxiety over embodiment + the sense that everything is now a performance thanks to social media influences our literature/art

keep it in the group chat: fanfiction and influencer culture, from into the fic of it - podcast episode where they discuss a lot of these issues with art + consumer culture specifically in the context of fanfic

objects of despair: mirrors, by meghan o'gieblyn - another article that touches on social media + identity formation

every "chronically online" conversation is the same, by rebecca jennings - discusses an issue that is not unique to tiktok but is exacerbated by tiktok

and if u wanna read some of my own more organized thoughts about tiktok, i have 2 essays on substack. one is you are what you eat: identity as consumption and what went wrong with jegulus, which ignoring all the jegulus stuff just contains like. a synthesis of how i think tiktok/algorithmic social media promotes a fucked-up method of identity-formation. and then i also have why does tiktok hate masculine lesbians? which is less about tiktok itself and more just an example of one issue that has festered on the site thanks to lack of critical thought + discussion -- both of which are literally impeded by the way tiktok is set up.

here is like. TikTok Thesis from my jegulus essay:

right now, in our current historical moment, almost all social media is being slowly (or not-so-slowly) swallowed by the vast and yawning chasm of the Algorithm. that’s because the Algorithm is good for capitalism. the Algorithm breaks down the boundaries between content and consumer, streamlining the system so that everyone is consuming each other all at once. we, the social media users, are consumers consuming the content of social media. at the same time, social media is consuming the content of our data. that data is then consumed by advertisers, who use it to sell us more content. we are thus increasingly stuck in a never-ending cycle of consumption, held captive by algorithms that soothe our anxieties by offering us tiny, consistent dopamine hits while working to break down our attention spans and our capacities for critical thought so that they can keep us trapped in echo chambers of endless consumptive entertainment.

and then there are of course all the rantings and ravings i post here on my blog lol!

anyway, a lot of what i've collected here is a little more philosophical and focused around identity + consumerism + art, but i'm sure there are also plenty of people out there making additional points about the way tiktok is horrible for privacy, how it's harvesting all our data, and how it's designed to be addictive + keep you scrolling + is in the process deteriorating your attention span. these are all issues that, again, are not unique to tiktok, but are grossly exacerbated by tiktok. especially bc the rapid growth of tiktok as a successful social media during the pandemic has made it like....this epicenter of pop culture such that

a) other social media apps are now trying to copy tiktok's model and become more like it

b) almost all commercial art is now influenced by tiktok to some extent. books, music, etc -- if u want people to invest in something, it needs to go viral on tiktok!

the consumerism of capitalism is the cancer and tiktok is the malignant tumor growing at an unprecedented + life-threatening rate <3

#ask#ranting and raving#book recs#kinda....really only one book in this post but tagging it anyway lol

Text

GRV.T-SSG SYSTEMS VER 11/22/23

Hey, guys, just wanna remind y'all, that Bad Internet Bills has a collection of information on the United States Government's intentions to surveil and control the Internet Infrastructure to the point of violating privacy and civil liberties of the people.

The RESTRICT ACT (aka "TikTok Ban Bill"), along with a host of other bad bills, basically intends to criminalize dissent and penalize individuals using or interacting with digital infrastructure they deem 'foreign adversaries' with million dollar fines and up to 20 years of prison time.

It's a bad scene, and I would urge you all to look into these bills, get in contact with your representatives and lawmakers, and make some noise about this. We've already been doing this, but for anyone who isn't 100% sure about the issue, now is your time. Research.

Check out TikTok user, diedmadaboutit (Diggity) and their summary of the RESTRICT bill, spread those summary videos around. They urge you to read the bill on your own, however. The spread of information beyond mine or their perspectives is vital to preventing it from becoming a reality.

(The recent FCC report from Brendan Carr might have something to do with this bill, or not. I'm not sure.)

Don't just reblog this or like this and call it a day. Create your own post(s) about it, and talk to the people you know offline about it. Get people engaged in what will have far-reaching consequences for everyone in the States.

Keep your heads, keep your cool. Use your dread and fears to create informed organization around the prevention of our government from stripping us of our rights.

Because if they can do it here, they will do it elsewhere.

Happy hunting.

(gif by freminet)

Note

In the On the Run AU, how careful is Vash about showing up in the background of people's photos? I feel like his chaos energy wouldn't allow him to skip photobombing, but in a "where's Wally" style. Poor police staring at a busy photo of some tourist, trying to spot him because dammit he's got to be there somewhere! He was in all the others by this person! (spoiler, he wasn't)

. . . I feel you are on to something here . . .

.

An anonymous tip informs the police that Vash the Stampede was seen in Queens going down to the subway earlier that day. The subway security footage is immediately pulled and they rush to track his movements.

The footage shows him not only in Queens but in Brooklyn and Manhattan as well; in each instance he is seen standing still in a rush of people, holding up a different sign. He is easy to spot due to his height and signature red coat.

The signs are white cardboard with thick block letters written in black marker, held above his head as he smiles and waves. There are assumed to be four signs in total, as each one is numbered. The three found so far, in the order he indicated, read as follows:

1/4 Hello Big Apple! (note: he is holding this sign in his right hand while he holds a red apple in his left hand)

2/4 My Evil Plan Is As Follows (note: he is wearing a false black goatee)

3/4 (note: still unknown)

4/4 On This Tuesday (note: he blows a kiss at the camera before letting himself be drawn away by the flow of the crowd)

The timestamps on the footage match his locations and the order of the signs, and an unaccounted-for gap between the second and fourth signs is long enough for him to have gone elsewhere for the third. But even after extensive combing through the footage, the third sign, possibly the most vital, has still not been found.

Consequently, on the announced date, a large police presence manned the subway stations where Vash the Stampede had been seen, and security was drastically heightened. There was worry that he might strike at the unknown third location, so the footage continued to be examined up until the end of Tuesday, and examination resumed Wednesday morning.

Vash the Stampede did not appear.

However, due to the increased surveillance of the stations, explosives were discovered in multiple subway cars and successfully dismantled with no injuries or property damage. Evidence so far points to a known eco-terrorist group, with which Vash the Stampede is suspected of having ties, as being behind the attempted attack. The group issued no statement, leaving the situation ambiguous. At present, all signs indicate that the incident concluded with the discovery and removal of the explosives.

The third sign was never located, and investigators considered the possibility that there had never been a third sign.

.

. . . hehehe yeah so. I imagine that Vash does indeed love photobombing (but not subway bombing), and one version of his wanted poster is taken from a tourist's picture: the one where he's smiling and has his fingers on his chin. However, Vash has a knack for accidentally ending up in the background of photos and TikToks just when he really needs to be lying low. Chaos ensues.

#trigun#a dozen sporks speaks#ask#trigun modern au#trigun on the run au#somewhere knives is fuming about being foiled with such juvenile tactics#thanks for the ask! love and peace!

At 19, he was the oldest of the group of teens accused of hurling Molotov cocktails at the police station of their suburban hometown.

“Why?” the judge asked Riad, who was taken into custody after he was identified in video surveillance images of the group from June 29, the second night of nationwide unrest following the police shooting of another suburban teenager outside Paris.

“For justice for Nahel," Riad said. Slumped and slightly disheveled after five nights in jail, he said he didn't know about the peaceful march organized by Nahel Merzouk's family. He explained that the cellphone photo of him holding a Molotov cocktail was “for social media. To give an image.”

In all, more than 3,600 people, with an average age of 17, have been detained in the unrest across France since the June 27 death of Nahel, who was also 17, according to the Interior Ministry. The violence, which left more than 800 law enforcement officers injured, has largely subsided in recent days.

French courts are working overtime to process the arrests, including opening their doors through the weekend, with fast-track hearings around an hour long and same-day sentencing.

The prosecutor noted that Riad had learned where to acquire incendiary devices on Snapchat, the social network that the French government has singled out, along with TikTok, as fueling the unrest. Riad's lawyer noted that his record was clean and that the young man was not blamed for any significant damage or injuries.

By the end of Tuesday, Riad's sentence was fixed: three years in prison, with a minimum of 18 months behind bars, and a ban from his hometown of Alfortville for the duration of the term.

He collapsed on the stand. “I'm not ready to go to prison. I'm really not ready,” he said, blowing a furtive kiss to his mother as he was led away.

Outside the packed courtroom, a pair of girls asked someone exiting what sentence he'd received. “Three years? That's insane!” one exclaimed.

But the mood in France is stern after unrest that officials estimate caused more than $1 billion in damage. Nahel's fatal shooting by police came during a traffic stop, was captured on video and immediately stirred up long-simmering tensions between police and young people — nearly all ethnic minorities and overwhelmingly French-born — in housing projects and disadvantaged suburbs.

Justice Minister Eric Dupond-Moretti issued an order Friday that demanded a “strong, firm and systematic” judicial response. Hearings began the following day, as unrest continued into the night.

“This is not hasty justice. The message I want to send is that justice is functioning normally in the face of an exceptional situation," said Peimane Ghaleh-Marzban, the president of the tribunal in Bobigny.

By Tuesday night, a total of 990 people had gone before a tribunal, and about one-third received jail terms, according to government spokesman Olivier Veran. A third of those detained were minors, he said.

“You have many first-time offenders — people who are not deep in delinquency, many minors in school who don’t [engage in] habitual criminal activity,” Ghaleh-Marzban said.

Despite that, the inclination to convict with jail time appeared to prevail.

In Lyon, France's second-most populous metropolitan area, the prosecutor said Thursday that of 26 adults who have appeared before the fast-track courts so far, 22 were convicted and sentenced to jail, three requested more time to prepare a defense and only one was acquitted. According to BFM television Thursday, 76% of people in the fast-track trials were placed in detention.

The United Nations' human rights office said the unrest showed that it was time for France to reckon with its history of racism in policing, rather than just lash out in punishment. The office said the French government needed to ensure that use of force “always respects the principles of legality, necessity, proportionality, non-discrimination, precaution and accountability.”

But many French lawmakers are demanding maximum punishment — and fast.

Olivier Marleix, a lawmaker from the conservative Republicans party, called for all the cases involving the unrest to be handled within 100 days.

“Not to punish this would be an injury to all our law enforcement. Not to punish this would be a failure to understand the gravity of the threat to France," he said Tuesday in the National Assembly.

By contrast, the officer accused in Nahel's death has been charged with voluntary homicide but has yet to appear in a courtroom or even have a court date set.

Rayan, an 18-year-old detained with a group of about 30 young people, was accused of filming a 14-second video of incendiaries being hurled at his local police station in Kremlin-Bicetre. In the video, he cries out: “Light them up!”

It was the first time he had ever been arrested. He was taken to Fleury-Merogis prison, the European Union’s largest, and wept on the stand Tuesday. Prosecutors, who accused him of tripping a police officer while fleeing, asked for a 30-month sentence and for him to be barred from his hometown.

“I’m a good person. I’ve never had a problem with police. I have a family, I work,” he said, burying his face in his hands. “I don’t even know what I’m doing here.”

His brief hearing ended with a 10-month suspended sentence. His parents picked him up the same night from prison to take him home.

Editor's note: A previous version of this article included, in reference to the Kids Online Safety Act (KOSA), the following sentence: “The bill also bans youth under 13 from using social media and seeks parental consent for use among children under 17 years old.” The draft of KOSA that was approved by the Senate Commerce Committee on July 27, 2023 provides minors (defined as individuals under the age of 17) the ability to limit the ability of other individuals to communicate with the minor and to limit features that increase, sustain, or extend use of the covered platform by the minor, but it does not prohibit individuals under the age of 13 from accessing social media. KOSA seeks parental consent for use among children, defined as an individual under the age of 13, not 17.

With 95% of teens reporting use of a social media platform, legislators have taken steps to curb excessive use. With increasing digitalization and reliance on existing and emerging technologies, youth are quick to adopt modern technologies and trends that can lead to excessive use, especially with the proliferation of mobile phones. The increasing use of social media and other related platforms (most recently, generative AI) among minors has even caught the attention of the U.S. surgeon general, who released a health advisory about its effects on their mental health. Alongside increasing calls to action, big tech companies have introduced a multitude of parental supervision tools. While these tools are considered proactive, many of the same critics of social media use have raised doubts about their efficacy, and a range of federal and state policies have emerged, which may or may not align with the goals of protecting minors when using social media.

Currently, regulation of social media companies is being pursued by individual states and the federal government, leading to an uneven patchwork of directives. For example, some states have already enacted new legislation to curb social media use among minors, including Arkansas, Utah, Texas, California, and Louisiana. However, individual state efforts have not gone unchallenged. Big tech companies, such as Amazon, Google, Meta, Yahoo, and TikTok, have fought recent state legislation, and NetChoice, a lobbying organization that represents large tech firms, recently launched a lawsuit contesting Arkansas’s new law, and also challenged California’s legislation last year.

At the federal level, the Senate Commerce Committee unanimously voted out two bipartisan bills to protect children’s internet use in late July 2023: the Kids Online Safety Act (KOSA) and an updated Children’s Online Privacy Protection Act (COPPA 2.0). KOSA is intended to create new guidance for the Federal Trade Commission (FTC) and state attorneys general (AGs) to penalize companies that expose children to harmful content on their platforms, including content that glamorizes eating disorders, suicide, and substance abuse, among other such behaviors. The other bill, COPPA 2.0, proposes to raise the age covered under the existing law from 13 to 16 years old and to ban targeted advertising to kids. NetChoice quickly responded to the movement of these bills, suggesting that companies, instead of bad actors, were being scrutinized as the primary violators. Others, including civil liberties groups, have also opposed the Senate’s legislative proposals, pointing to the use of parental tools to increase surveillance of children, content censorship, and the potential for age-verification methods to collect more, not less, information.

This succession of domestic activity comes around the same time that the European Union (EU) and China are proposing regulations and standards that govern minors’ use of social media. This month, for example, China’s Cyberspace Administration published draft guidelines that bar minors from using social media from 10 p.m. to 6 a.m. and limit use to two hours per day for youth ages 16 to 18, one hour for those 8 to 15, and 40 minutes for those under 8. The U.K. has become even more stringent in its policing of social media platforms, with recent proposals to pressure companies into changing their behavior through fines and jail time for breaking the law. Age definitions of “minor” also differ: most proposals refer to individuals under the age of 18, but KOSA codifies minors as those under 17 years of age.

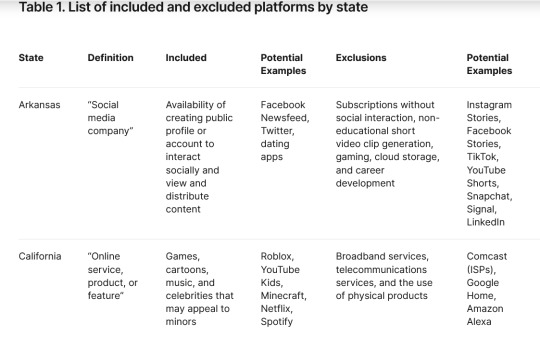

How states specify guidelines, and which types of companies are subject to such scrutiny, varies across state laws. In this blog, we examine five states’ laws with recent online privacy protections for minors and analyze their similarities and differences regarding platform accountability (including applications), the role of parents, and age-verification methods, as well as minors’ opinions about the proposals to curb their social media use.

Platform Accountability

The states of Arkansas, Texas, Utah, California, and Louisiana have varying definitions for what qualifies as a platform as part of their legislation, which creates some incongruencies when it comes to enforcement. Below are some comparisons of these states’ definitions of what constitutes a digital platform.

Arkansas’s law offers the most explicit and exclusionary criteria for defining platforms. The definition excludes companies focused on subscription content rather than social interaction, as well as those focused on non-educational short video clip generation, gaming, cloud storage, and career development. It also excludes platforms providing email, direct messaging, streaming, news, sports, entertainment, online shopping, document collaboration, or commenting functions on news websites. These narrow criteria may result in most top social media platforms not meeting the definition. In particular, the exclusion for short-form video clip generation could excise most major social media platforms (including WhatsApp and Signal, which both have a Stories function) from the definition.

Texas’s recently passed social media law defines such companies as “digital service providers” that facilitate social interaction through profiles and content distribution via messages, pages, videos, and feeds. Like Arkansas, it excludes services primarily focused on email, direct messaging, access to news, sports, or commerce content, search engines, and cloud storage. Under these criteria, Instagram would count as a “digital service provider,” but Facebook Marketplace would not.

Utah’s definition of a “social media platform” similarly excludes platforms where email, private messaging, streaming (licensed and gaming), non-user-generated news and sports content, chat or commenting related to such content, e-commerce, gaming, photo editing, artistic content showcasing (portfolios), career development, teleconferencing or video conferencing, cloud storage, or educational software are the predominant functions. Overall, these exclusions narrow the definition to platforms primarily enabling social interaction and content distribution.

In contrast to the other states, California employs the term “online service, product, or feature,” covering services with content that interests minors, such as “games, cartoons, music, and celebrities,” and excluding broadband services, telecommunications services, and the use of physical products, criteria that align with those outlined by KOSA.

Louisiana has the broadest definition of platforms by using terms such as “account,” which encompasses a wide range of services including sharing information, messages, images/videos, gaming, and “interactive computer service.” The latter refers to any provider that enables access to the internet, including educational services. This approach may indicate an intent to encompass various platforms and services enabling interaction and content sharing without explicitly specifying exclusions.

Exempted Applications

Each of the five states also has varied guidance on which apps are subject to the law. Some apps, like Discord, Slack, Microsoft Teams, GroupMe, Loop, Telegram, and Signal, allow text, photo, and video sharing through direct messaging. However, even those primarily used for direct messaging, like Signal, may raise questions due to new features like Stories, where a user can narrate, provide short, time-limited responses, or post status updates. Meta’s WhatsApp, as part of a larger social media company, may also be affected by ownership and integration, despite being primarily a messaging app. Streaming platforms like Netflix and Hulu, whose curation systems operate without direct social interaction, may fall inside or outside a regulatory scope focused on social interaction and content distribution as they expand their features.

Table 1 provides a preliminary classification of what online content may or may not be permitted under each state law, in accordance with their definitions of what constitutes an eligible platform.

Source: The authors compiled these results based on an analysis of major social media apps’ main functions in July 2023.

Inconsistencies in Age-Verification Methods

The five states differ in their approaches to age verification, particularly in the areas of platform and content access. In terms of access to platforms’ services, four of the five states (all except Louisiana) require some form of age verification, but the laws are inconsistent about how it may be done. Arkansas mandates the use of a third-party vendor for age verification, offering three specified approaches considered ‘reasonable age verification.’ In Utah, the process is still undefined: the law requires age verification but does not establish the exact methods. The remaining states do not address in detail how a minor may be identified, beyond the expectation that they will be. The ambiguities among the states around age verification, a key component for defining social media use, present both challenges and opportunities. On one hand, they create uncertainty for social media platforms regarding compliance; on the other, they leave some room for practical and effective solutions.

Future efforts to address age-verification concerns will require a more holistic strategy toward approving age-assurance methods. Some states have called for applying existing identification requirements that validate a user’s age, such as requiring government-issued IDs when minors create accounts, or offering the option to be “verified” through parental account confirmation to protect the privacy of minors. Other ideas include deploying machine learning algorithms that estimate age by analyzing user behavior and content interaction.

All five states have some language on parental or guardian consent for minors accessing social media platforms, setting aside the question of how to verify that legal relationship. As with age-verification methods, validation and enforcement may prove challenging. The current methods approved by the FTC include, but are not limited to, video conferencing, credit card validation, government-issued ID such as a driver’s license or Social Security number, and knowledge-based questionnaires. Recently, some groups have sought to expand parental consent methods to include biometric age estimation, which presents a range of other challenges, including direct privacy violations and civil rights concerns. Generally, the process of confirming a parental relationship between a minor and an adult can be intrusive, difficult to scale on large social media platforms, or easily bypassed. Furthermore, even when parental consent is confirmed, it is important to consider whether the parent or guardian has the child’s best interest at heart and a good grasp of the minor’s choice and use of applications defined as social media by the respective states.

Concerns around age verification alone may hint at the limitations of state-specific laws in fully addressing social media use among minors. With fragmented definitions that carve out which platforms are subject to the laws, there are workarounds that could allow some platforms to evade public oversight. For example, once the account holder’s age is verified and consent is given for platform access (except in Utah), there are no restrictions on the content a minor may access on the platform. Arkansas and Louisiana are the only two states that do not require platforms to provide parental control or oversight tools for minor accounts. Consequently, these laws are insufficient because they emphasize access restrictions while offering limited measures for monitoring and managing the content minors access.

Other potential drawbacks

How legal changes affect minors themselves is perhaps one of the largest concerns regarding the recent bills. Employing any of the various methods of age-verification requires some level of collection and storage of sensitive information by companies that could potentially infringe upon the user’s privacy and personal security.

While more solid safeguards could keep more harmful content from being shown to and used by minors, restricting access to certain sites could not only over-censor youth but also create more backdoors to such content. Further, overly stringent, homogeneous restrictions would inadvertently deny access to some diverse populations whose lack of safety online mirrors their experiences offline, including LGBTQ+ youth, who would be outed to their parents if they were forced to seek consent for supportive services or other identifying online content.

Because social media can serve as a lifeline to information, resources, and communities that may not otherwise be accessible, some of the requirements of the state laws for parental control and greater supervision by age may endanger other vulnerable youth, including those in abusive households. Clearly, in the presence of a patchwork of disparate state laws, a more nuanced and informed approach is necessary in situations where the legislative outcomes may end up with far-reaching consequences.

Definition of harm and the adjacent responsibilities

This is where the definition of harm in online child safety laws becomes significant; it varies across the five states, with some language unclear or even left undefined. States that define harm focus on different types and scopes of harm. Texas law defines “harmful material” according to the state’s public indecency law, Section 43.24 of the Texas Penal Code, which defines it as content that appeals to minors’ prurient interest in sex, nudity, or excretion, is offensive to prevailing adult standards, and lacks any redeeming social value for minors. HB 18 also outlines other areas of harm, such as suicide and self-harm, substance abuse, harassment, and child sexual exploitation, under criteria consistent with major social media companies’ community guidelines, such as Meta’s Community Standards.

Other states do not specify the meaning of harm as thoroughly as Texas. For example, Utah’s bills (SB 152 and HB 311) do not provide a specific definition of harm but mention it in the context of algorithmically suggested content (“content that is preselected by the provider and not user generated”) (SB 152). The ban on targeted content poses a challenge to social media companies that rely on curation based on algorithmic suggestions. The Utah bills also discuss harm in terms of features that cause addiction to the platform. Utah’s HB 311 defines addiction as a user’s “substantial preoccupation or obsession with” the social media platform, leading to “physical, mental, emotional, developmental, or material harms.” Measures to prevent addiction are also provided through a curfew on the hours a minor can access social media platforms (no access between 10:30 p.m. and 6:30 a.m.). In Arkansas and Louisiana, there is also a lack of specific definitions of harm, with more of a focus on restricting minors’ access to the platform. Arkansas’s law similarly suggests that addiction can be mitigated by limiting minors’ platform access hours, and it prohibits targeted and suggested content, including ads and accounts. Additionally, Arkansas’s law holds social media companies accountable for damages resulting from unauthorized access by minors.

California’s law, by contrast, centers on safeguarding minors’ personal data and treats the infringement of privacy rights as a form of harm, which sets it apart from the other four states.

The patchwork of legislation from states leads minors to have access to certain content in some states, but not others. For example, states outside of Utah do not stipulate restrictions on addictive features, so hooking design patterns such as infinite scrolling remain unregulated. Louisiana does not stipulate any limitation to data processing and retention. None of the state laws provide restrictions on non-textual and non-visual content that comprises most social interactions in extended reality (XR) platforms such as virtual reality (VR) apps. Moreover, the focus on access does not address how already age-restricted content will be made available to minors.

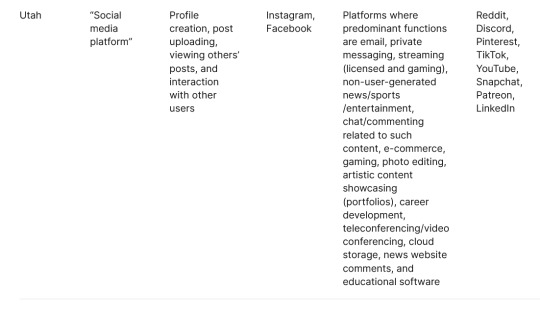

Comparison between state laws and federal bills

As states enact their own laws, the Kids Online Safety Act (KOSA) introduced in Congress takes different emphases and approaches to protecting children online. Table 2 reveals the differences between the states and the proposed federal legislation, starting with the federal bill’s aim of proposing a baseline framework across a wide range of areas of interest to states, including age verification, parental consent, limits on advertising, and enforcement authority. However, the state bills provide stricter and more granular protections than KOSA in certain areas, such as age verification (Arkansas, Texas, and Utah) and privacy protections for minors (California). This lack of unified standards across states may produce varying levels of protection when crossing state lines, creating legal gray areas and opportunities for circumvention.

Although the provisions on harm and duty of care outlined in KOSA are also different from the state bills, it is worth noting that KOSA does have provisions on reporting mechanisms and independent research. Further, KOSA mandates that reporting mechanisms be readily accessible for minors, parents, and schools, with specified timelines for platform responses. Such provisions demonstrate how the bill considers harm mitigation efforts, as well as preventive measures like those in the state legislation.

In Section 7, KOSA also defines “eligible researchers” as non-commercial affiliates of higher-education institutions or nonprofit organizations, suggesting that the federal bill may acknowledge the evolving nature of online harm to minors and the importance of providing open data sets and safe harbors for researchers to explore issues such as age verification and the processing of minor users’ data. Research and creative experimentation are not mentioned in any of the state bills. In Table 2, we share how KOSA compares to each respective state bill across a consistent set of variables.

Sources: Federal: S 1409 – Kids Online Safety Act; Arkansas: SB 396 - The Social Media Safety Act; California: AB 2273 – The California Age-Appropriate Design Code Act; Louisiana: HB 61 – Provides for consent of a legal representative of a minor who contracts with certain parties; Texas: HB 18 – Securing Children Online through Parental Empowerment (SCOPE) Act; Utah: SB 152 – Social Media Regulation Amendments

Urgency of Action

The recent wave of online child safety legislation at the state and federal levels demonstrates the urgency of concerns around the protection of minors online, many of them prompted by rising concerns over youth mental health, digital addiction, and exposure to inappropriate or harmful content. However, as highlighted in this analysis, the varying approaches adopted across different states have resulted in an inconsistent patchwork of regulations, some of which may conflict with the bipartisan proposals of KOSA and COPPA 2.0.

In the end, legislative fragmentation may create compliance challenges for platforms and potentially leave children and their parents or guardians even more confused about social media use. State-specific legislation can either strengthen or weaken children’s online safety as they cross state lines, or penalize parents who may be unfamiliar with social media generally and with how to use it appropriately. Overall, our analysis revealed several problematic areas worth summarizing, including:

The impact on the law’s legitimacy when there are inconsistent definitions of platforms and services, with states like Arkansas, Texas and Utah using narrower criteria, or when Arkansas excludes most major social media platforms, and Louisiana includes “any information service, system or access software.”

Varying standards for age verification and parental consent requirements will weaken the effectiveness of state (and federal) laws, as some states mandate third-party verification while others leave the methods largely undefined.

Limited provisions for monitoring and managing children’s exposure to content once access restrictions are met will further galvanize the need for state laws.

Differing definitions of harm at the state level, ranging from privacy infringements (California) and addiction (Utah) to various categories of inappropriate content (Texas and Utah), will also undermine the laws’ intended effects.

As Congress has departed Washington, D.C. for summer recess, the committee bills are likely to advance to the full Senate with some changes. Overall, industry and civil society organizations should welcome efforts toward more comprehensive federal legislation that establishes a duty of care, baseline standards, and safeguards, rather than a patchwork of state laws. The passage of some type of federal law may also help establish consistent and more certain definitions around age and verification methods, parental consent rules, privacy protections through limits on data collection, and enforcement powers across the FTC and state AG offices. From there, states may build on this foundation with their own additional protections narrowly tailored to local contexts, including California, where some harmonization with its privacy law should occur.

But any attempt to unanimously pass highly restrictive children’s online safety laws will come with First Amendment challenges, especially any restrictions on platform and content access that potentially limit minors’ freedom of expression and right to information. To be legally sound, variations or exceptions amongst age groups may have to be made, which would require another round of privacy protections. Moving forward, Congress might consider the convening of a commission of experts and other stakeholders, including technology companies, child advocates, law enforcement, and state, federal, and international regulators, to propose harmonized regulatory standards and nationally accepted definitions.

Additionally, the FTC could be empowered with rulemaking authority over online child safety and establish safe harbors to incentivize industry toward greater self-regulation. These and other activities could end up being complementary to the legislative goals and provide sensible liability protections for companies that proactively engage in more socially responsible conduct.

Keeping children’s rights in mind

Finally, beyond the realm of any policy intervention lie the interests of minors themselves, who may consider policymakers’ efforts paternalistic and patronizing. Moreover, actions like those being undertaken by China, and even by states like Texas, risk violating children’s digital rights as outlined in UN treaties, including access to information, freedom of expression and association, and privacy. Studies have already warned of the dangers such measures could pose, such as increased censorship and compounded disadvantages among already marginalized youth.

While regulation and legislation can be well-meaning, parental oversight tools can be equally intrusive: because full account access enables surveillance, they have the potential to promote an authoritarian model at odds with children’s evolving autonomy and media literacy. More collaborative governance models that involve minors might be a necessary next step to address such concerns while respecting children’s participation rights. In June 2023, the Brookings TechTank podcast published an episode with teenage guests who spoke to increasing calls for greater surveillance and enforcement over social media use. One teen suggested that teens be taught media literacy, including appropriate ways to engage with social media, in the same manner and with the same time allotment as driver’s education or reproductive health education. Scaling digital literacy programs to raise minors’ awareness of online risks and safe practices could be highly effective, especially if those programs include strategies for identifying and mitigating harmful practices and behaviors.

KOSA also mentions the inclusion of youth voices in Section 12 and proposes a Kids Online Safety Council, but the language does not specify how youth will directly recommend best practices. The idea of involving youth in community moderation is forward-thinking. A recent study that experimented with community moderation models based on youth-centered norms in Minecraft found that youth-centered approaches to content moderation were more effective than deterrence-based moderation. By empowering problem-solving and self-governance, these approaches allowed minors to agree collectively on community rules through dialogue. As a result, younger users were able to internalize positive norms and reflect on their behavior on the platform. Ultimately, finding ways to involve youth in their technology adoption and use, rather than overly penalizing them for it, may offer another way forward in this debate.

Privacy also sits at the center of how and why social media is optimized for younger users, but the issue extends beyond social media. The lack of regulation of pervasive data collection in schools potentially undermines child privacy. Emerging learning analytics that rely on algorithmic sorting have also been cited as potentially circumscribing students’ futures based on biased data. Minor-focused laws must address such non-consensual practices that affect children’s welfare.

The U.K.’s Age-Appropriate Design Code, based on UNCRC principles, supports a child’s freedom of expression, association, and play, all while ensuring GDPR compliance when processing children’s personal information. The code emphasizes that a child’s best interests should be “a primary consideration” where a conflict between a child’s interests and commercial interests arises. However, it also recognizes that prioritizing a child’s best interests does not necessarily conflict with commercial interests. In this way, the code aims to harmonize the interests of the different actors affected by online child safety legislation. Moreover, the code classifies age ranges according to minors’ developmental stages, allowing services to develop more granular standards.

Current minor safety regulations embody assumptions about children requiring protection but lack nuance around the spectrum of children’s rights. Moving forward requires seeing children as more than objects of protection. Instead, minors should be treated as rights-bearing individuals, and solutions to online harms should center their voices and champion their digital rights. As these discussions evolve, efforts to support children’s well-being must also respect their personal capacities and the diverse nature of their lived experiences in the digital age.


Watching Resolution: Missing (2023)

12. A film released in 2023: Missing (2023)

List Progress: 3/12

So many thriller or horror movies have to find ways to neutralize modern technology: the remote area with no cell service, the phone that gets smashed in the first fight, the threat of being tracked that forces everyone to abandon their devices. But films like the new thriller Missing go in the opposite direction and revel in the tech of the modern world. Perhaps this means it will seem dated in five years, but for the exact moment it exists in, it is a fun bit of action about a teenager trying to find her missing mother with every bit of technology at her fingertips.

Missing is a “screenlife” film, meaning the action takes place entirely on the main character’s computer screen. The audience follows 18-year-old June’s internet searches and text chats, and sees everything her video camera sees. This obviously requires a bit of stretching to make sure all major events can be seen through this format, but the movie finds some creative workarounds, some of which work better than others. June’s widowed mother takes a week-long vacation to Colombia with her new boyfriend, but neither of them shows up for their return flight. June suddenly has to dig into every aspect of her mother’s life to find out where they disappeared to and why, and learns far more about her mother as a person than she expected.

The film takes it for granted that every aspect of modern life is in some way surveilled, and doesn’t seem to have much issue with that. (Though considering how many brands had to give their permission to this movie, it makes sense that it is not too critical of the modern panopticon.) A character turning off their location services while traveling abroad is seen as a sign that they must be up to something nefarious, never mind international roaming charges. It smacks of “why do you want privacy if you’ve got nothing to hide?”, and while the conclusion does push back against that idea with a good reason for someone to want to hide, the baseline assumption is still baked into the premise. What the film is more directly critical of is the fandom approach to true crime, and how social media allows everyone to have their own distasteful hot takes about traumatic things happening to real people. June scrolls through hordes of TikTok-ers speculating about whether her mother is alive or dead, and she knows her life is one more bit of entertainment and mystery for them to chew over, just as she has consumed other people’s stories for entertainment herself.

There is a lot to analyze about Missing, but that doesn’t detract from the fact that it is a thriller with plenty of twists and turns. Missing is a piece of cheap, consumable media told in an interesting way, and perhaps saying more about the modern world than it ever intended to. Audiences in 2028 will probably roll their eyes, but for 2023, it rings true.

Would I Recommend It: Yes.


ik i keep ranting and rambling about this but i hate how the internet has diluted fame and how that dilution goes hand in hand with constant self-surveillance like damn. if you want to make any kind of mark you have to have like five different kinds of socials, and then most of the people with the five different socials don’t end up making any sort of mark except to self-document to the point that it’s detrimental.

(also don’t get me started on the whole self-censorship thing happening on tiktok and stuff- that’s a whole different issue though)

#spider talks a lot #fuck the internet #i am aware that this seems like a pessimistic viewpoint and yeah it probably is but what am i if not a pessimist


Social Media Platforms as Ideal Public Spheres

Three traits form the cornerstone of Habermas’ notion of an ideal public sphere: “unlimited access to information, equal and protected participation, and the absence of institutional influence” (Flinchum, Kruse & Norris 2018). There is debate about whether social media platforms meet these requirements.

Arguments For:

Social media is an interactive space which empowers communication amongst active participants. Social media has democratised media content production, enabling equal participation by providing users with the tools necessary to broadcast their voices independently. This redistribution of power has established a media space where communication is peer-to-peer rather than one-to-many (Flinchum, Kruse & Norris 2018). This has empowered individuals to engage in discourse publicly.

Social media platforms have removed the reliance on traditional media gatekeepers. The equal contribution of the public to the media landscape has decreased the influence of institutions (Flinchum, Kruse & Norris 2018). Unlike traditional media, social media platforms have no gatekeepers who permit or deny the posting of content. This fulfils the requirement of a space free from institutional influence.

Users also have unlimited access to all information available on social media platforms (Flinchum, Kruse & Norris 2018).

Whilst social media fundamentally meets the requirements of a public sphere, its ideality is inhibited by multiple factors.

Arguments Against:

Unlimited access to information

Users are segregated into niche online communities and are not exposed to all the information available on social media. Algorithms analyse user data to predict and present content which aligns with an individual’s interests. Users are repeatedly exposed to media which affirms their pre-existing viewpoints, forming a filter bubble (Belavadi et al. 2020). The online community is separated into smaller, ideologically homophilic circles, constructing echo chambers of opinion (Belavadi et al. 2020). Automated content personalisation ultimately limits access to information by sheltering users from opposing viewpoints.

Equal and protected participation

The exclusion of individuals from social media often arises as a consequence of the digital divide, which refers to the discrepancies that prevent certain groups from using online spaces equally. Primary factors are a lack of access to the required technology, such as a stable internet connection and personal devices, and one’s degree of competence with these technologies (James 2021). Because individuals’ access and competence vary and limit them in different ways, social media is not a space in which all individuals can participate equally.

Users are not protected when participating in discourse on social media. As the Flinchum, Kruse & Norris reading notes, surveillance on social media can cause online activity to affect users’ offline lives. This is reflected in the offline consequences for NBA player Kyrie Irving’s career after he posted a link to an antisemitic film on Twitter in 2022 (Ganguli & Sopan 2022). Irving received an eight-game suspension from the Brooklyn Nets, and Nike terminated his contract 11 months before its official expiration (Doston & Vera 2022; Ganguli & Sopan 2022). As Irving’s financial and reputational damage shows, users are held accountable offline for content shared on social media. Users may therefore alter or limit their online presence so that it does not reflect their views, reducing the risk of offline consequences.

The following video discusses the incident further and highlights the damage to Irving's reputation.


Source: Good Morning America 2022

Government surveillance is also an issue for protected participation on social media. This is exemplified by Douyin, TikTok’s counterpart for the Chinese market, which launched before TikTok. The Chinese Communist Party (CCP) monitors online activity and enforces strict internet censorship (Gamso 2021). Government surveillance prevents protected participation, as Douyin users must limit their online expression, particularly on political issues, to avoid legal prosecution.

The following video briefly explains the CCP's internet censorship practices.


Source: South China Morning Post 2020

Absence of institutional influence

The commodification of user information breaches the requirement of a space free from institutional influence. Platform owners collect user data and use it to offer targeted marketing to external organisations. Meta Inc., parent company of Facebook and Instagram, generated $116.6b USD in revenue in 2022, primarily from advertising (Meta Inc. 2022). The platforms themselves benefit economically, profiting from the data generated by users’ self-expression. Thus, social media is not free from economic institutional influence.

Attainability of the Ideal Public Sphere

The reading acknowledges doubts about the attainability of the public sphere outside of theory (Flinchum, Kruse & Norris 2018). Equal participation of all individuals requires the removal of all barriers, which often stem from prominent sociocultural and economic issues in society (Flinchum, Kruse & Norris 2018). Thus, the ideal public sphere theorised by Habermas may be unattainable in both digital and physical spaces.

Reference List