#kubernetes as a service

Explore tagged Tumblr posts

Text

youtube

AWS EKS | Episode 10 | ReplicaSets, Deployments and Services in Kubernetes | Lets discuss.

1 note

Text

Kubernetes Consulting in the UK: Empowering Businesses with Containerization

In today’s fast-paced digital landscape, businesses need agile, scalable, and efficient solutions to stay competitive. Kubernetes, an open-source platform for automating deployment, scaling, and managing containerized applications, has emerged as a cornerstone of modern IT infrastructure. For companies in the UK, embracing containerization with the right Kubernetes consulting service can be the key to unlocking operational efficiency and innovation.

The Rise of Kubernetes in Modern IT

Kubernetes simplifies the management of containerized applications, making it easier to scale resources, ensure high availability, and optimize performance. Organizations across various sectors—from finance to e-commerce—are turning to Kubernetes to modernize their IT frameworks. However, implementing Kubernetes effectively requires expertise and a clear strategy. This is where Kubernetes consulting services in the UK come into play.

Why Kubernetes Matters for UK Businesses

Scalability and Flexibility: Kubernetes allows businesses to scale their applications seamlessly, ensuring they can handle increased workloads during peak times without compromising performance.

Cost Optimization: By optimizing resource allocation, Kubernetes reduces operational costs while improving application efficiency.

Faster Time-to-Market: With Kubernetes, development teams can accelerate deployment cycles, enabling businesses to deliver products and updates faster.

Resilience: Kubernetes ensures high availability of applications through self-healing capabilities, reducing downtime and maintaining business continuity.

The Role of Kubernetes Consulting Services in the UK

While Kubernetes offers transformative benefits, its complexity can be daunting for organizations without the necessary expertise. Kubernetes consulting services bridge this gap by providing tailored solutions that align with a company’s unique needs and goals.

Key Benefits of Hiring a Kubernetes Consulting Service

Expert Guidance: Consultants offer in-depth knowledge and best practices, helping businesses design and implement effective Kubernetes strategies.

Customized Solutions: Kubernetes consulting services in the UK deliver tailored solutions based on industry-specific requirements, ensuring optimal performance and alignment with business objectives.

Training and Support: Beyond implementation, consulting services provide training to in-house teams, empowering them to manage Kubernetes effectively.

Security and Compliance: Consultants ensure that Kubernetes deployments adhere to UK regulatory standards and industry best practices, safeguarding sensitive data.

Kubernetes Consulting Services in Action: Real-World Use Cases

E-Commerce Platform Optimization: An e-commerce company in the UK leveraged Kubernetes consulting services to scale its platform during high-traffic sales events. By optimizing container orchestration, they reduced latency and improved user experience.

Financial Sector Modernization: A UK-based financial institution implemented Kubernetes to modernize its legacy systems. The consulting service ensured seamless integration with existing infrastructure, enhancing security and compliance.

Startup Acceleration: A tech startup utilized Kubernetes consulting to deploy applications rapidly and cost-effectively, allowing them to focus on innovation and market growth.

Choosing the Right Kubernetes Consulting Service in the UK

Selecting the right consulting partner is critical to achieving success with Kubernetes. Here are key factors to consider:

Experience and Expertise: Look for a consulting service with a proven track record in Kubernetes implementations.

Industry Knowledge: Choose a provider familiar with your industry’s unique challenges and requirements.

Comprehensive Support: Ensure the service includes end-to-end support, from planning and deployment to ongoing maintenance and optimization.

Client Testimonials: Review case studies and client feedback to assess the consulting service’s effectiveness and reliability.

Empowering Your Business with Kubernetes

Kubernetes is more than a technology; it’s a catalyst for business transformation. By partnering with a reliable Kubernetes consulting service in the UK, businesses can harness the full potential of containerization, achieving scalability, resilience, and efficiency.

Whether you’re a large enterprise looking to modernize your IT infrastructure or a startup aiming to innovate rapidly, Kubernetes offers the tools to succeed in today’s competitive market. With expert consulting services, the journey to containerization becomes seamless and impactful, paving the way for sustainable growth.

Conclusion

In the UK’s dynamic business environment, Kubernetes consulting services are empowering organizations to navigate the complexities of containerization with confidence. By leveraging these services, businesses can optimize their IT operations, reduce costs, and deliver exceptional value to their customers. Embrace the future of technology today with a trusted Kubernetes consulting service in the UK—your partner in driving innovation and success.

0 notes

Text

Oracle Database@AWS is now available in limited preview

Selected customers can now start using Oracle Exadata Database Service on Oracle Database@AWS to simplify migration and deploy enterprise workloads in the cloud.

#Amazon Bedroc#Amazon EC2#Amazon Elastic Kubernetes Service#Amazon Web Services#AWS#Inteligencia Artificial#ML#Oracle#Oracle Cloud Infrastructure#Oracle Database@AWS#Oracle Exadata#Oracle Exadata Database Service#Oracle Real Application Cluster

0 notes

Text

Unlock the Power of Elastic Kubernetes Services (EKS) with Expert Training

With Web Age Solutions’ EKS Training, fast-track your journey to becoming a Kubernetes pro on AWS! Elevate your skills and drive efficiency in cloud-native environments.

For more details, visit: https://www.webagesolutions.com/courses/WA3108-automation-with-terraform-and-aws-elastic-kubernetes-service

1 note

Text

Developing a Scalable IT Strategy: Best Practices

In today’s fast-moving business world, a flexible and scalable IT strategy is essential for companies that want to stay competitive. The need for such a strategy is especially pressing as companies increasingly rely on digital transformation and cloud solutions. A well-thought-out IT strategy enables companies to respond efficiently to market changes…

#Automatisierte Prozesse#Best Practice#Best Practices#Cloud-Services#Containerisierung#DevOps#Digitale Transformation#Führung#IT-Infrastruktur#IT-Ressourcen#IT-Strategie#IT-Strategien#Kubernetes#Microservices#Virtualisierung

0 notes

Text

Breaking Down Monoliths: Power of Microservices Architecture

Discover how Microservices Architecture is transforming software development with increased scalability, flexibility, and faster deployment in our latest blog. Learn more now!

#Application Development#developing a microservices app#it application development#kubernetes development#microservices architecture#microservices architecture development#microservices development services#modern app development#modern software development#monolithic app development

0 notes

Text

Top 5 Container Management Software Of 2024

Container Management Software is essential for businesses aiming to efficiently manage their applications across various environments. As the market for this technology is projected to grow significantly, here’s a look at the top five Container Management Software solutions for 2024:

Portainer: Established in 2017, Portainer is known for its easy-to-use interface supporting Docker, Kubernetes, and Swarm. It offers features like real-time monitoring and role-based access control, making it suitable for both cloud and on-premises environments.

Amazon Elastic Container Service (ECS): This AWS service simplifies deploying and managing containerized applications, integrating seamlessly with other AWS tools. It supports features like automatic load balancing and serverless management through AWS Fargate.

Docker: Since its initial release in 2013, Docker has been a pioneer in containerization. It provides tools for building, shipping, and running applications within containers, including Docker Engine and Docker Compose. Docker Swarm enables cluster management and scaling.

DigitalOcean Kubernetes: Known for its user-friendly approach, DigitalOcean’s Kubernetes offering helps manage containerized applications with automated updates and monitoring. It integrates well with other DigitalOcean services.

Kubernetes: Originally developed by Google and now maintained under the Cloud Native Computing Foundation (CNCF), Kubernetes is a leading tool for managing containerized applications with features like automatic scaling and load balancing. It supports customizations and various networking plugins.

Conclusion: Selecting the right Container Management Software is crucial for optimizing your deployment processes and scaling applications efficiently. Choose a solution that meets your business’s specific needs and enhances your digital capabilities.

0 notes

Text

Prioritizing Pods in Kubernetes with PriorityClasses

In Kubernetes, you can define the importance of Pods relative to others using PriorityClasses. This ensures critical services are scheduled and running even during resource constraints. Key Points: Scheduling Priority: When enabled, the scheduler prioritizes pending Pods based on their assigned PriorityClass. Higher priority Pods are scheduled before lower priority ones if their resource…
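As a minimal sketch of the idea above, the manifest below defines a PriorityClass and a Pod that refers to it. The names `critical-service` and `payments-api`, the priority value, and the container image are illustrative assumptions, not taken from the post.

```yaml
# A PriorityClass is cluster-scoped; a higher value means higher priority.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: critical-service          # hypothetical name
value: 1000000                    # illustrative value
globalDefault: false              # do not apply to Pods that omit priorityClassName
description: "For Pods that should be scheduled ahead of ordinary workloads."
---
# A Pod opts in by naming the PriorityClass.
apiVersion: v1
kind: Pod
metadata:
  name: payments-api              # hypothetical name
spec:
  priorityClassName: critical-service
  containers:
  - name: app
    image: nginx:1.25             # placeholder image
```

Pods that do not set `priorityClassName` fall back to the cluster's global default class if one exists, or to priority zero otherwise.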

0 notes

Text

Skyrocket Your Efficiency: Dive into Azure Cloud-Native solutions

Join our blog series on Azure Container Apps and unlock unstoppable innovation! Discover foundational concepts, advanced deployment strategies, microservices, serverless computing, best practices, and real-world examples. Transform your operations!!

#Azure App Service#Azure cloud#Azure Container Apps#Azure Functions#CI/CD#cloud infrastructure#cloud-native applications#containerization#deployment strategies#DevOps#Kubernetes#microservices architecture#serverless computing

0 notes

Text

Deploying Large Language Models on Kubernetes: A Comprehensive Guide

New Post has been published on https://thedigitalinsider.com/deploying-large-language-models-on-kubernetes-a-comprehensive-guide/

Large Language Models (LLMs) are capable of understanding and generating human-like text, making them invaluable for a wide range of applications, such as chatbots, content generation, and language translation.

However, deploying LLMs can be a challenging task due to their immense size and computational requirements. Kubernetes, an open-source container orchestration system, provides a powerful solution for deploying and managing LLMs at scale. In this technical blog, we’ll explore the process of deploying LLMs on Kubernetes, covering various aspects such as containerization, resource allocation, and scalability.

Understanding Large Language Models

Before diving into the deployment process, let’s briefly understand what Large Language Models are and why they are gaining so much attention.

Large Language Models (LLMs) are a type of neural network model trained on vast amounts of text data. These models learn to understand and generate human-like language by analyzing patterns and relationships within the training data. Some popular examples of LLMs include GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), and XLNet.

LLMs have achieved remarkable performance in various NLP tasks, such as text generation, language translation, and question answering. However, their massive size and computational requirements pose significant challenges for deployment and inference.

Why Kubernetes for LLM Deployment?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides several benefits for deploying LLMs, including:

Scalability: Kubernetes allows you to scale your LLM deployment horizontally by adding or removing compute resources as needed, ensuring optimal resource utilization and performance.

Resource Management: Kubernetes enables efficient resource allocation and isolation, ensuring that your LLM deployment has access to the required compute, memory, and GPU resources.

High Availability: Kubernetes provides built-in mechanisms for self-healing, automatic rollouts, and rollbacks, ensuring that your LLM deployment remains highly available and resilient to failures.

Portability: Containerized LLM deployments can be easily moved between different environments, such as on-premises data centers or cloud platforms, without the need for extensive reconfiguration.

Ecosystem and Community Support: Kubernetes has a large and active community, providing a wealth of tools, libraries, and resources for deploying and managing complex applications like LLMs.

Preparing for LLM Deployment on Kubernetes

Before deploying an LLM on Kubernetes, there are several prerequisites to consider:

Kubernetes Cluster: You’ll need a Kubernetes cluster set up and running, either on-premises or on a cloud platform like Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS).

GPU Support: LLMs are computationally intensive and often require GPU acceleration for efficient inference. Ensure that your Kubernetes cluster has access to GPU resources, either through physical GPUs or cloud-based GPU instances.

Container Registry: You’ll need a container registry to store your LLM Docker images. Popular options include Docker Hub, Amazon Elastic Container Registry (ECR), Google Container Registry (GCR), or Azure Container Registry (ACR).

LLM Model Files: Obtain the pre-trained LLM model files (weights, configuration, and tokenizer) from the respective source or train your own model.

Containerization: Containerize your LLM application using Docker or a similar container runtime. This involves creating a Dockerfile that packages your LLM code, dependencies, and model files into a Docker image.

Deploying an LLM on Kubernetes

Once you have the prerequisites in place, you can proceed with deploying your LLM on Kubernetes. The deployment process typically involves the following steps:

Building the Docker Image

Build the Docker image for your LLM application using the provided Dockerfile and push it to your container registry.

Creating Kubernetes Resources

Define the Kubernetes resources required for your LLM deployment, such as Deployments, Services, ConfigMaps, and Secrets. These resources are typically defined using YAML or JSON manifests.
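To make the ConfigMap and Secret part of this step concrete, here is a hedged sketch. The object names `llm-config` and `llm-secrets`, the `API_TOKEN` key, and its value are hypothetical; only `MODEL_ID` and `PORT` mirror settings used in the example later in this post.

```yaml
# Non-sensitive settings go in a ConfigMap.
apiVersion: v1
kind: ConfigMap
metadata:
  name: llm-config                # hypothetical name
data:
  MODEL_ID: gpt2
  PORT: "8080"
---
# Sensitive values go in a Secret; stringData accepts plain text
# and the API server stores it base64-encoded.
apiVersion: v1
kind: Secret
metadata:
  name: llm-secrets               # hypothetical name
type: Opaque
stringData:
  API_TOKEN: replace-me           # hypothetical key and placeholder value
# A container can then load both as environment variables with:
#   envFrom:
#   - configMapRef:
#       name: llm-config
#   - secretRef:
#       name: llm-secrets
```

Keeping configuration in these objects lets you change a model ID or rotate a token without rebuilding the image.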

Configuring Resource Requirements

Specify the resource requirements for your LLM deployment, including CPU, memory, and GPU resources. This ensures that your deployment has access to the necessary compute resources for efficient inference.
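A sketch of what such a specification might look like inside a Pod template follows. The CPU and memory figures and the image name are illustrative assumptions; the right values depend on the model and hardware. The `nvidia.com/gpu` resource name assumes the NVIDIA device plugin is installed on the cluster.

```yaml
# Fragment of a Pod template's container list, not a complete manifest.
containers:
- name: llm
  image: registry.example.com/llm-server:latest   # hypothetical image
  resources:
    requests:
      cpu: "4"              # illustrative: reserve four CPU cores
      memory: 16Gi          # illustrative: reserve 16 GiB of RAM
    limits:
      memory: 16Gi          # cap memory at the requested amount
      nvidia.com/gpu: 1     # GPUs are set under limits; the request defaults to the same value
```

Requests drive scheduling decisions, while limits cap what the container may consume at run time.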

Deploying to Kubernetes

Use the kubectl command-line tool or a Kubernetes management tool (e.g., Kubernetes Dashboard, Rancher, or Lens) to apply the Kubernetes manifests and deploy your LLM application.

Monitoring and Scaling

Monitor the performance and resource utilization of your LLM deployment using Kubernetes monitoring tools like Prometheus and Grafana. Adjust the resource allocation or scale your deployment as needed to meet the demand.

Example Deployment

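Let’s consider an example of deploying a GPT-style language model on Kubernetes using the pre-built text-generation-inference Docker image from Hugging Face. The resources below are named gpt3, but the manifest sets MODEL_ID to gpt2, so the model actually served is the openly available GPT-2; GPT-3 weights are not publicly distributed. We’ll assume that you have a Kubernetes cluster set up and configured with GPU support.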

Pull the Docker Image:

    docker pull huggingface/text-generation-inference:1.1.0

Create a Kubernetes Deployment:

Create a file named gpt3-deployment.yaml with the following content:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: gpt3-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: gpt3
      template:
        metadata:
          labels:
            app: gpt3
        spec:
          containers:
          - name: gpt3
            image: huggingface/text-generation-inference:1.1.0
            resources:
              limits:
                nvidia.com/gpu: 1
            env:
            - name: MODEL_ID
              value: gpt2
            - name: NUM_SHARD
              value: "1"
            - name: PORT
              value: "8080"
            - name: QUANTIZE
              value: bitsandbytes-nf4

This deployment runs one replica of the gpt3 container using the huggingface/text-generation-inference:1.1.0 Docker image and requests one NVIDIA GPU. The environment variables tell the inference server which model to load (MODEL_ID, here gpt2), how many shards to split it into (NUM_SHARD), which port to listen on (PORT), and which quantization scheme to apply (QUANTIZE).

Create a Kubernetes Service:

Create a file named gpt3-service.yaml with the following content:

    apiVersion: v1
    kind: Service
    metadata:
      name: gpt3-service
    spec:
      selector:
        app: gpt3
      ports:
      - port: 80
        targetPort: 8080
      type: LoadBalancer

This service exposes the gpt3 deployment on port 80 and creates a LoadBalancer type service to make the inference server accessible from outside the Kubernetes cluster.

Deploy to Kubernetes:

Apply the Kubernetes manifests using the kubectl command:

    kubectl apply -f gpt3-deployment.yaml
    kubectl apply -f gpt3-service.yaml

Monitor the Deployment:

Monitor the deployment progress using the following commands:

    kubectl get pods
    kubectl logs <pod_name>

Once the pod is running and the logs indicate that the model is loaded and ready, you can obtain the external IP address of the LoadBalancer service:

kubectl get service gpt3-service

Test the Deployment:

You can now send requests to the inference server using the external IP address and port obtained from the previous step. For example, using curl:

    curl -X POST http://<external_ip>:80/generate \
      -H 'Content-Type: application/json' \
      -d '{"inputs": "The quick brown fox", "parameters": {"max_new_tokens": 50}}'

This command sends a text generation request to the inference server, asking it to continue the prompt “The quick brown fox” with up to 50 new tokens.

Advanced topics you should be aware of

While the example above demonstrates a basic deployment of an LLM on Kubernetes, there are several advanced topics and considerations to explore:

1. Autoscaling

Kubernetes supports horizontal and vertical autoscaling, which can be beneficial for LLM deployments due to their variable computational demands. Horizontal autoscaling allows you to automatically scale the number of replicas (pods) based on metrics like CPU or memory utilization. Vertical autoscaling, on the other hand, allows you to dynamically adjust the resource requests and limits for your containers.

To enable autoscaling, you can use the Kubernetes Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA). These components monitor your deployment and automatically scale resources based on predefined rules and thresholds.
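As a hedged illustration of horizontal autoscaling, the manifest below targets the gpt3-deployment from the example above. The name `gpt3-hpa`, the replica bounds, and the 70% CPU target are assumptions chosen for illustration. CPU-utilization scaling also assumes that a metrics source such as metrics-server is running and that the container declares a CPU request, which the example Deployment does not yet do.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: gpt3-hpa                  # hypothetical name
spec:
  scaleTargetRef:                 # the workload to scale
    apiVersion: apps/v1
    kind: Deployment
    name: gpt3-deployment
  minReplicas: 1
  maxReplicas: 4                  # illustrative upper bound
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70    # illustrative: add replicas above 70% of requested CPU
```

Because each replica in the example requests one GPU, the cluster needs enough free GPUs for `maxReplicas`, otherwise the extra Pods stay pending.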

2. GPU Scheduling and Sharing

In scenarios where multiple LLM deployments or other GPU-intensive workloads are running on the same Kubernetes cluster, efficient GPU scheduling and sharing become crucial. Kubernetes provides several mechanisms to ensure fair and efficient GPU utilization, such as GPU device plugins, node selectors, and resource limits.

You can also leverage GPU partitioning and sharing techniques, such as NVIDIA Multi-Instance GPU (MIG), which splits a supported GPU into isolated instances, or time-slicing through the NVIDIA device plugin, to share GPUs among multiple workloads.
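The node selectors and resource limits mentioned above can be sketched as a Pod template fragment. The node label `accelerator: nvidia-a100`, the taint key, and the image name are assumptions for illustration; real label and taint names depend on how your cluster's GPU nodes are set up.

```yaml
# Fragment of a Pod template spec, not a complete manifest.
spec:
  nodeSelector:
    accelerator: nvidia-a100      # hypothetical label applied to GPU nodes
  tolerations:
  - key: nvidia.com/gpu           # assumed taint key on GPU nodes
    operator: Exists
    effect: NoSchedule
  containers:
  - name: llm
    image: registry.example.com/llm-server:latest   # hypothetical image
    resources:
      limits:
        nvidia.com/gpu: 1         # at most one whole GPU for this container
```

Tainting GPU nodes and adding a matching toleration only to GPU workloads is one common way to keep CPU-only Pods from occupying expensive GPU machines.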

3. Model Parallelism and Sharding

Some LLMs, particularly those with billions or trillions of parameters, may not fit entirely into the memory of a single GPU or even a single node. In such cases, you can employ model parallelism and sharding techniques to distribute the model across multiple GPUs or nodes.

Model parallelism involves splitting the model architecture into different components (e.g., encoder, decoder) and distributing them across multiple devices. Sharding, on the other hand, involves partitioning the model parameters and distributing them across multiple devices or nodes.

Kubernetes provides mechanisms like StatefulSets and Custom Resource Definitions (CRDs) to manage and orchestrate distributed LLM deployments with model parallelism and sharding.
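For the simpler single-node case, a sketch based on the example Deployment is shown below. It assumes, from the NUM_SHARD variable already used in that example, that the inference server splits the model across that many GPUs inside one Pod. Whether a given model and server version support this is something to verify, and spreading a model across several nodes needs additional orchestration that this fragment does not cover.

```yaml
# Fragment of the example Deployment's container list, adjusted for two GPUs.
containers:
- name: gpt3
  image: huggingface/text-generation-inference:1.1.0
  resources:
    limits:
      nvidia.com/gpu: 2           # give the Pod two GPUs on the same node
  env:
  - name: MODEL_ID
    value: gpt2
  - name: NUM_SHARD
    value: "2"                    # assumed to match the number of GPUs requested
  - name: PORT
    value: "8080"
```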

4. Fine-tuning and Continuous Learning

In many cases, pre-trained LLMs may need to be fine-tuned or continuously trained on domain-specific data to improve their performance for specific tasks or domains. Kubernetes can facilitate this process by providing a scalable and resilient platform for running fine-tuning or continuous learning workloads.

You can use frameworks that run on Kubernetes, such as Apache Spark or Kubeflow, to run distributed fine-tuning or training jobs for your LLMs. Additionally, you can roll fine-tuned or continuously trained models out to your inference deployments using Kubernetes mechanisms like rolling updates or blue/green deployments.
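Without any extra framework, a one-off fine-tuning run can also be expressed as a plain Kubernetes Job. The sketch below is an assumption-laden illustration: the Job name, the image, and its arguments are hypothetical and stand in for whatever training container you build.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: llm-finetune              # hypothetical name
spec:
  backoffLimit: 2                 # retry a failed run at most twice
  template:
    spec:
      restartPolicy: Never        # let the Job controller handle retries
      containers:
      - name: trainer
        image: registry.example.com/llm-finetune:latest   # hypothetical training image
        args: ["--epochs", "1"]   # hypothetical arguments understood by that image
        resources:
          limits:
            nvidia.com/gpu: 1
```

A Job runs to completion and then stops, which suits training better than a Deployment, whose Pods are restarted indefinitely.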

5. Monitoring and Observability

Monitoring and observability are crucial aspects of any production deployment, including LLM deployments on Kubernetes. Kubernetes itself exposes cluster and workload metrics, and it is commonly paired with monitoring tools like Prometheus and observability platforms like Grafana, Elasticsearch, and Jaeger.

You can monitor various metrics related to your LLM deployments, such as CPU and memory utilization, GPU usage, inference latency, and throughput. Additionally, you can collect and analyze application-level logs and traces to gain insights into the behavior and performance of your LLM models.
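One widely used convention for collecting such metrics is to annotate Pods so that Prometheus discovers them. The fragment below is a sketch under two assumptions: that your Prometheus scrape configuration honors the `prometheus.io/*` annotations (they are a convention, not a Kubernetes feature), and that the inference server exposes metrics at `/metrics` on port 8080.

```yaml
# Fragment of the Deployment's Pod template metadata.
template:
  metadata:
    labels:
      app: gpt3
    annotations:
      prometheus.io/scrape: "true"    # opt this Pod in to scraping
      prometheus.io/port: "8080"      # assumed metrics port
      prometheus.io/path: "/metrics"  # assumed metrics path
```

If your cluster uses the Prometheus Operator instead, the equivalent is usually a ServiceMonitor resource rather than annotations.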

6. Security and Compliance

Depending on your use case and the sensitivity of the data involved, you may need to consider security and compliance aspects when deploying LLMs on Kubernetes. Kubernetes provides several features and integrations to enhance security, such as network policies, role-based access control (RBAC), secrets management, and integration with external security solutions like HashiCorp Vault or AWS Secrets Manager.
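As one small example of the network policies mentioned above, the manifest below limits which Pods may reach the inference server. The policy name and the `role: frontend` label are hypothetical, and enforcement assumes a network plugin that implements NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: gpt3-allow-frontend       # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: gpt3                   # applies to the inference Pods
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: frontend          # hypothetical label on permitted client Pods
    ports:
    - protocol: TCP
      port: 8080                  # the container port used in the example
```

Once a policy selects a Pod, ingress that is not explicitly allowed is dropped, so review it alongside any externally exposed Service before applying.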

Additionally, if you’re deploying LLMs in regulated industries or handling sensitive data, you may need to ensure compliance with relevant standards and regulations, such as GDPR, HIPAA, or PCI-DSS.

7. Multi-Cloud and Hybrid Deployments

While this blog post focuses on deploying LLMs on a single Kubernetes cluster, you may need to consider multi-cloud or hybrid deployments in some scenarios. Kubernetes provides a consistent platform for deploying and managing applications across different cloud providers and on-premises data centers.

You can leverage Kubernetes federation or multi-cluster management tools like KubeFed or GKE Hub to manage and orchestrate LLM deployments across multiple Kubernetes clusters spanning different cloud providers or hybrid environments.

These advanced topics highlight the flexibility and scalability of Kubernetes for deploying and managing LLMs.

Conclusion

Deploying Large Language Models (LLMs) on Kubernetes offers numerous benefits, including scalability, resource management, high availability, and portability. By following the steps outlined in this technical blog, you can containerize your LLM application, define the necessary Kubernetes resources, and deploy it to a Kubernetes cluster.

However, deploying LLMs on Kubernetes is just the first step. As your application grows and your requirements evolve, you may need to explore advanced topics such as autoscaling, GPU scheduling, model parallelism, fine-tuning, monitoring, security, and multi-cloud deployments.

Kubernetes provides a robust and extensible platform for deploying and managing LLMs, enabling you to build reliable, scalable, and secure applications.

#access control#Amazon#Amazon Elastic Kubernetes Service#amd#Apache#Apache Spark#app#applications#apps#architecture#Artificial Intelligence#attention#AWS#azure#Behavior#BERT#Blog#Blue#Building#chatbots#Cloud#cloud platform#cloud providers#cluster#clusters#code#command#Community#compliance#comprehensive

0 notes

Text

Dive into the debate: Terraform vs Kubernetes – unlocking the future of infrastructure management. Read our comprehensive analysis and discover who holds the key to the future.

1 note

Text

youtube

AWS EKS | Episode 9 | Control Plane and Namespaces in Kubernetes

1 note

Text

cloud kubernetes service

Cyfuture Cloud with its Kubernetes service provides a powerful and flexible platform for deploying and managing applications in the cloud.

0 notes

Text

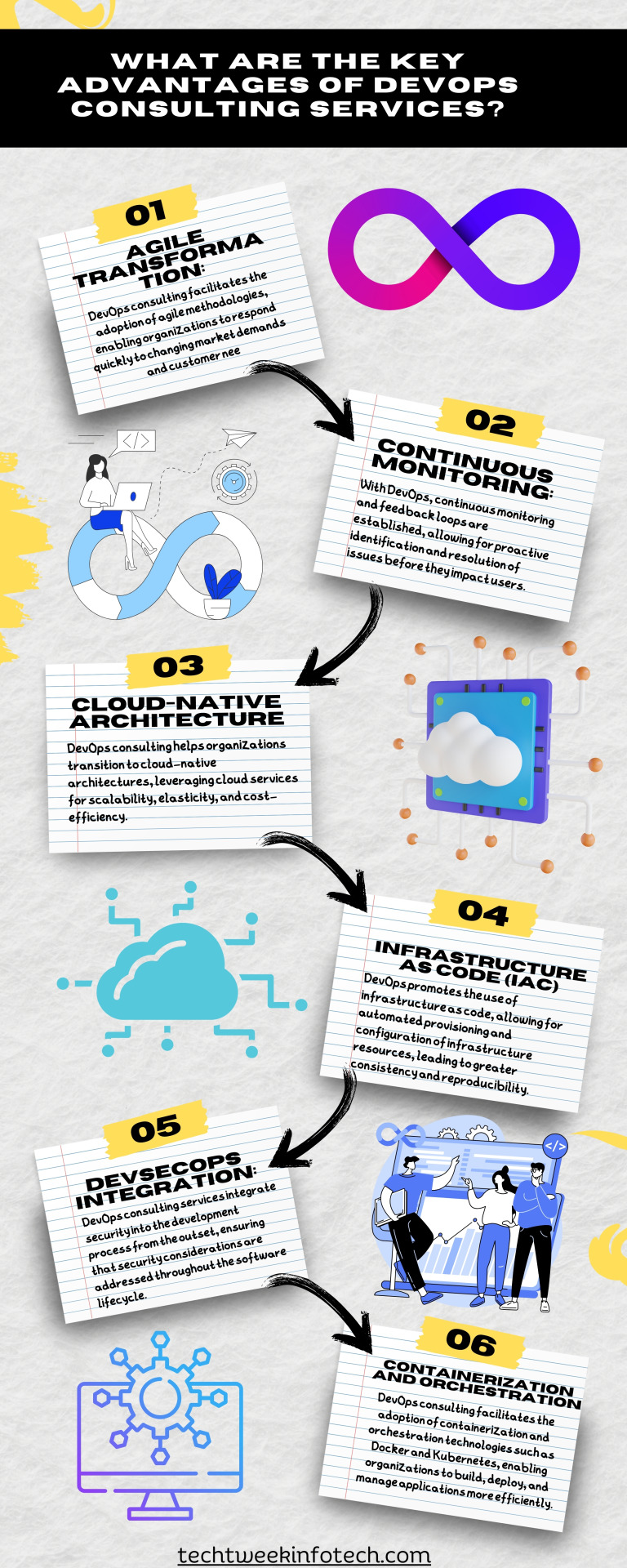

What are the key advantages of DevOps consulting services?

Agile Transformation: DevOps consulting facilitates the adoption of agile methodologies, enabling organizations to respond quickly to changing market demands and customer needs.

Continuous Monitoring: With DevOps, continuous monitoring and feedback loops are established, allowing for proactive identification and resolution of issues before they impact users.

Cloud-Native Architecture: DevOps consulting helps organizations transition to cloud-native architectures, leveraging cloud services for scalability, elasticity, and cost-efficiency.

Infrastructure as Code (IaC): DevOps promotes the use of infrastructure as code, allowing for automated provisioning and configuration of infrastructure resources, leading to greater consistency and reproducibility.

DevSecOps Integration: DevOps consulting services integrate security into the development process from the outset, ensuring that security considerations are addressed throughout the software lifecycle.

Containerization and Orchestration: DevOps consulting facilitates the adoption of containerization and orchestration technologies such as Docker and Kubernetes, enabling organizations to build, deploy, and manage applications more efficiently.

Microservices Architecture: DevOps encourages the adoption of microservices architecture, breaking down monolithic applications into smaller, independently deployable services for improved agility and scalability.

Culture of Innovation: DevOps consulting fosters a culture of innovation and experimentation, empowering teams to take risks, learn from failures, and continuously improve.

Together, these points show the broad benefits of DevOps consulting services for businesses seeking to optimize their software delivery pipelines and drive digital transformation initiatives.

#devops#devops consulting#aws devops#devopsservices#cloud services#cybersecurity#azure devops#ci/cd#kubernetes#cloud#devops course#software#cloud computing

0 notes

Text

Transforming Infrastructure with Automation: The Power of Terraform and AWS Elastic Kubernetes Service Training

In the digital age, organizations are modernizing their infrastructure and shifting to cloud-native solutions. Terraform automates infrastructure provisioning across multiple cloud providers, while AWS Elastic Kubernetes Service (EKS) orchestrates containers, enabling businesses to manage scalable, high-availability applications. Together, these technologies form a foundation for managing dynamic systems at scale. To fully leverage them, professionals need practical, hands-on skills. This is where Elastic Kubernetes Services training becomes essential, offering expertise to automate and manage containerized applications efficiently, ensuring smooth operations across complex cloud infrastructures.

Why Automation Matters in Cloud Infrastructure

As businesses scale, manual infrastructure management becomes inefficient and prone to errors, especially in large, multi-cloud environments. Terraform, as an infrastructure-as-code (IaC) tool, automates provisioning, networking, and deployments, eliminating repetitive manual tasks and saving time. When paired with AWS Elastic Kubernetes Service (EKS), automation improves reliability and scalability, optimizes resource use, minimizes downtime, and significantly enhances deployment velocity for businesses operating in a cloud-native ecosystem.

The Role of Terraform in Automating AWS

Terraform simplifies cloud infrastructure by codifying resources into reusable, version-controlled configuration files, ensuring consistency and reducing manual effort across environments. In AWS, Terraform automates critical services such as EC2 instances, VPCs, and RDS databases. Integrated with Elastic Kubernetes Services (EKS), Terraform automates the lifecycle of Kubernetes clusters—from creating clusters to scaling applications across availability zones—allowing seamless cloud deployment and enhancing automation efficiency across diverse environments.

How AWS Elastic Kubernetes Service Elevates Cloud Operations

AWS Elastic Kubernetes Service (EKS) simplifies deploying, managing, and scaling Kubernetes applications by offering a fully managed control plane that takes the complexity out of Kubernetes management. When combined with Terraform, this automation extends even further, allowing infrastructure to be defined, deployed, and updated with minimal manual intervention. Elastic Kubernetes services training equips professionals to master this level of automation, from scaling clusters dynamically to managing workloads and applying security best practices in a cloud environment.

Benefits of Elastic Kubernetes Services Training for Professionals

Investing in Elastic Kubernetes Services training goes beyond managing Kubernetes clusters; it’s about gaining the expertise to automate and streamline cloud infrastructure efficiently. This training enables professionals to:

Increase Operational Efficiency: Automating repetitive tasks allows teams to focus on innovation rather than managing infrastructure manually, improving productivity across the board.

Scale Applications Seamlessly: Understanding how to leverage EKS ensures that applications can scale with demand, handling traffic spikes without sacrificing performance or reliability.

Stay Competitive: With cloud technologies evolving rapidly, staying up-to-date on tools like Terraform and EKS gives professionals a significant edge, allowing them to meet modern business demands effectively.

Driving Innovation with Automation

Automation is essential for businesses seeking to scale and remain agile in a competitive digital landscape. Terraform and AWS Elastic Kubernetes Service (EKS) enable organizations to automate infrastructure management and deploy scalable, resilient applications. Investing in Elastic Kubernetes Services training with Web Age Solutions equips professionals with technical proficiency and practical skills, positioning them as key innovators in their organizations while building scalable cloud environments that support long-term growth and future technological advancements.

For more information visit: https://www.webagesolutions.com/courses/WA3108-automation-with-terraform-and-aws-elastic-kubernetes-service

0 notes

Text

Tips to Boost Release Confidence in Kubernetes

Software development takes a lot of focus and practice, and many newcomers find the thought of releasing a product into the world a bit daunting. All kinds of worries and fears can crop up before release, and even when unfounded, doubt can make it difficult to pull the trigger.

If you’re using a solution like Kubernetes to deploy and release your next project, below are some tips to boost your confidence and get your product released for the world to enjoy:

Work With a Mentor

Having a mentor on your side can be a big confidence booster when it comes to Kubernetes software. Mentors provide not only guidance and advice, but they can also boost your confidence by sharing stories of their own trials. Finding a mentor who specializes in Kubernetes is ideal if this is the container orchestration system you’re working with, but a mentor with experience in any type of software development product release can be beneficial.

Take a Moment Away From Your Project

In any type of intensive development project, it can be easy to lose sight of the bigger picture. Many developers find themselves working longer hours as the release of a product grows near, and this can contribute to stress, worry and doubt.

When possible, take some time to step away from your work for a bit. If you can put your project down for a few days to get your mind off of things, this will provide you with some time to relax and come back to your project with a fresh set of eyes and a clear mind.

Ask for a Review

You can also ask trusted friends and colleagues to review your work before release. This may not be a full-on bug hunt, but it can help you have confidence that the main parameters are working fine and that no glaring issues exist. You can also ask for general feedback, but be careful not to let the opinions of others sway you from your overall mission of developing a stellar product that fulfills your vision.

Read a similar article about Kubernetes dev environments here at this page.

0 notes