#hardware programming


Text

Normally I just post about movies but I'm a software engineer by trade so I've got opinions on programming too.

Apparently it's a month of code or something because my dash is filled with people trying to learn Python. And that's great, because Python is a good language with a lot of support and job opportunities. I've just got some scattered thoughts that I thought I'd write down.

Python abstracts away a number of useful concepts. That makes it easier to use, but it also means that if you don't understand those concepts, things might go wrong in ways you didn't expect. Memory management and pointer logic are so damn annoying, but you need to understand them. I learned these concepts by learning C++; hopefully there's an easier way these days.

Data structures and algorithms are the bread and butter of any real work (and they're pretty much all that come up in interviews) and they're language agnostic. If you don't know how to traverse a linked list, how to use recursion, what a hash map is for, etc. then you don't really know how to program. You'll pretty much never need to implement any of them from scratch, but you should know when to use them; think of them like building blocks in a Lego set.
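To make those three building blocks concrete, here's a minimal sketch in Python. The names (`Node`, `traverse`, `total`, `count_words`) are made up for illustration, and in real code you'd normally reach for the built-in `list` and `dict` rather than rolling your own linked list.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One link in a singly linked list (hypothetical name)."""
    value: int
    next: Optional["Node"] = None


def traverse(head: Optional[Node]) -> list[int]:
    """Walk the list iteratively, collecting each value in order."""
    values = []
    node = head
    while node is not None:
        values.append(node.value)
        node = node.next
    return values


def total(head: Optional[Node]) -> int:
    """Sum the same list recursively: this node's value plus the rest."""
    if head is None:
        return 0
    return head.value + total(head.next)


def count_words(words: list[str]) -> dict[str, int]:
    """Use a hash map (a Python dict) to count occurrences of each word."""
    counts: dict[str, int] = {}
    for word in words:
        counts[word] = counts.get(word, 0) + 1
    return counts


numbers = Node(1, Node(2, Node(3)))
print(traverse(numbers))
print(total(numbers))
print(count_words(["a", "b", "a"]))
```

The point isn't the code itself so much as recognizing the shapes: a chain you walk link by link, a problem defined in terms of a smaller version of itself, and a lookup table keyed by value.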

Learning a new language is a hell of a lot easier after your first one. Going from Python to Java is mostly just syntax differences. Even "harder" languages like C++ mostly just mean more boilerplate while doing the same things. Learning a new spoken language is hard, but learning a new programming language is generally closer to learning some new slang or a new accent. Lists in Python are called vectors in C++, just like how french fries are called chips in London. If you know all the underlying concepts that are common to most programming languages then it's not a huge jump to a new one, at least if you're only doing the most common stuff. (You will get tripped up by some of the minor differences though. Popping an item off of a stack in Python returns the element, but in C++ it returns nothing; you have to read it with top() first. Definitely had a program fail due to that issue.)
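Here's a small sketch of that kind of stack gotcha, written in Python. Python's `list.pop()` removes the top element and hands it back; some other languages' stack types (C++'s `std::stack`, for instance) have a `pop` that returns nothing. The helper `cpp_style_pop` is a hypothetical stand-in that mimics the second convention.

```python
# A plain Python list works as a stack: append() pushes, and pop()
# removes the top element AND returns it.
stack = []
stack.append("first")
stack.append("second")

top = stack.pop()
print(top)    # the element that was removed
print(stack)  # what is left on the stack


def cpp_style_pop(items: list) -> None:
    """Hypothetical helper mimicking a pop() that removes but returns nothing."""
    items.pop()


# Under that convention you have to read the top element before removing it.
stack.append("third")
peeked = stack[-1]             # read the top without removing it
result = cpp_style_pop(stack)  # removes "third"; result is None
print(peeked, result)
```

If you port code between the two conventions without noticing, you end up either discarding the value you wanted or assigning "nothing" to a variable, which is exactly the kind of bug that slips through.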

The above is not true for new paradigms. Python, C++ and Java are all imperative languages. You move to something functional like Haskell and you need a completely different way of thinking. JavaScript (not in any way related to Java) has callbacks and I still don't quite have a good handle on them. Hardware languages like VHDL are all concurrent; every line of code in a program runs at the same time! That's a new way of thinking.
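Python can only give a rough taste of the other paradigms, but a sketch still helps show the shift in thinking. Below, the same calculation is written as a step-by-step loop and then as a chain of function applications, followed by a bare-bones callback. `fetch_then` is a hypothetical stand-in for an operation that finishes later; nothing here is asynchronous for real.

```python
from functools import reduce
from typing import Callable


def sum_of_squares_loop(numbers: list[int]) -> int:
    """Step-by-step style: mutate a running total inside a loop."""
    total = 0
    for n in numbers:
        total += n * n
    return total


def sum_of_squares_functional(numbers: list[int]) -> int:
    """Functional style: no mutation, just functions applied to functions."""
    squares = map(lambda n: n * n, numbers)
    return reduce(lambda acc, sq: acc + sq, squares, 0)


def fetch_then(on_done: Callable[[str], None]) -> None:
    """Hypothetical operation that reports its result by calling on_done."""
    on_done("payload")


received: list[str] = []
fetch_then(received.append)  # "call me back with the result when you have it"

print(sum_of_squares_loop([1, 2, 3]))
print(sum_of_squares_functional([1, 2, 3]))
print(received)
```

In a language like Haskell the second style is the only one on offer, which is why it feels like starting over rather than picking up a new accent.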

Python is stereotyped as a scripting language good only for glue programming or prototypes. It's excellent at those, but I've worked at a number of (successful) startups that all were Python on the backend. Python is robust enough and fast enough to be used for basically anything at this point, except maybe for embedded programming. If you do need the fastest speed possible then you can still drop in some raw C++ for the places you need it (one place I worked at had one very important piece of code in C++ because even milliseconds mattered there, but everything else was Python). The speed differences between Python and C++ are so much smaller these days that you only need C++ at the scale of the really big companies. It makes sense for Google to use C++ (and they use their own version of it to boot), but any company with fewer than 100 engineers is probably better off with Python in almost all cases. Honestly though, the best programming language is the one you like, and the one that you're good at.

Design patterns mostly don't matter. They really were only created to make up for language failures of C++; by Peter Norvig's count, 16 of the 23 patterns in the original design patterns book are either invisible or much simpler in other contemporary languages like Lisp. C++ was just really popular while also being kinda bad, so they were necessary. I don't think I've ever once thought about consciously using a design pattern since even before I graduated. Object-oriented design is mostly in the same place. You'll use classes because it's a useful way to structure things, but multiple inheritance and polymorphism and all the other terms you've learned really don't come into play too often, and when they do you use the simplest possible form of them. Code should be simple and easy to understand, so make it as simple as possible. As far as inheritance goes, the most I'm willing to do is to have a class with abstract functions (i.e. classes where some functions are empty but are expected to be filled out by the child class), but even then there are usually good alternatives to this.
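For anyone who hasn't seen one, here's a minimal sketch of a class with an abstract function, plus one of the simpler alternatives: just passing a function in. `Exporter`, `CsvExporter`, and `export_with` are invented names for illustration; only `abc.ABC` and `abstractmethod` come from Python's standard library.

```python
from abc import ABC, abstractmethod
from typing import Callable


class Exporter(ABC):
    """Base class: knows how to join rows, not how to format one."""

    def export(self, rows: list[dict]) -> str:
        return "\n".join(self.format_row(row) for row in rows)

    @abstractmethod
    def format_row(self, row: dict) -> str:
        """Left empty here; each child class must fill it in."""


class CsvExporter(Exporter):
    def format_row(self, row: dict) -> str:
        return ",".join(str(value) for value in row.values())


def export_with(rows: list[dict], format_row: Callable[[dict], str]) -> str:
    """Alternative without inheritance: take the formatting step as an argument."""
    return "\n".join(format_row(row) for row in rows)


rows = [{"id": 1, "name": "ann"}, {"id": 2, "name": "bob"}]
print(CsvExporter().export(rows))
print(export_with(rows, lambda row: ",".join(str(v) for v in row.values())))
```

Both versions produce the same output. The second has no class hierarchy to keep in your head, which is often reason enough to prefer it.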

Related to the above: simple is best. Simple is elegant. If you solve a problem with 4000 lines of code using a bunch of esoteric data structures and language quirks, but someone else did it in 10, then I'll pick the 10. On the other hand, a one-liner function that requires a lot of unpacking, like a Python function with a bunch of nested lambdas, might be easier to read if you split it up a bit more. Time to read and understand the code is the most important metric, more important than runtime or memory use. You can optimize for the other two later if you have to, but simple has to prevail for the first pass, otherwise it's going to be hard for other people to understand. In fact, it'll be hard for you to understand too when you come back to it 3 months later without any context.
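As a made-up illustration of the nested-lambda problem, here are two versions of the same function: names of everyone who scored at least 50, best score first. The data and function names are hypothetical.

```python
# Dense one-liner: correct, but you have to unpack it from the inside out.
top_scores_dense = lambda rows: list(map(lambda r: r["name"], sorted(filter(lambda r: r["score"] >= 50, rows), key=lambda r: r["score"], reverse=True)))


def top_scores(rows: list[dict]) -> list[str]:
    """Same logic, one named step per line."""
    passing = [row for row in rows if row["score"] >= 50]
    ranked = sorted(passing, key=lambda row: row["score"], reverse=True)
    return [row["name"] for row in ranked]


scores = [
    {"name": "ann", "score": 72},
    {"name": "bob", "score": 41},
    {"name": "cy", "score": 90},
]
print(top_scores_dense(scores))
print(top_scores(scores))
```

The second version is longer, but each line can be read, tested, and debugged on its own, and that's the trade I'd make every time.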

Note that I've cut a few things for simplicity. For example: VHDL doesn't quite require every line to run at the same time, but it's still a major paradigm of the language that isn't present in most other languages.

Ok that was a lot to read. I guess I have more to say about programming than I thought. But the core ideas are: Python is pretty good, other languages don't need to be scary, learn your data structures and algorithms and above all keep your code simple and clean.

#programming#python#software engineering#java#java programming#c++#javascript#haskell#VHDL#hardware programming#embedded programming#month of code#design patterns#common lisp#google#data structures#algorithms#hash table#recursion#array#lists#vectors#vector#list#arrays#object oriented programming#functional programming#iterative programming#callbacks

20 notes


Text

Cable chaos

#digitalismmm#digitalism#digital#tech#aesthetic#photography#machines#art#techcore#vintage#computer#computing#hardware#software#wires#cables#retro#internet#online#coding#programming

1K notes


Text

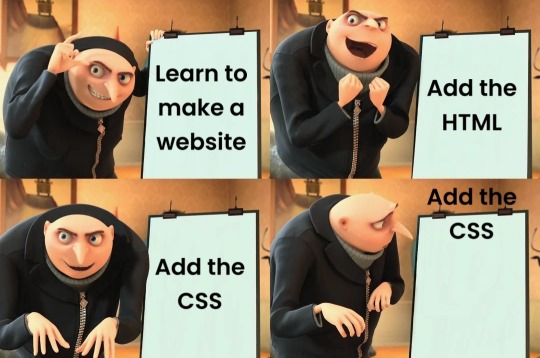

#programmer humor#programming#geek#nerd#programmer#technology#computer#phone#mac#windows#os#operating system#website#web development#dev#developer#development#full stack developer#frontend#backend#software#hardware#html#css#meme#despicable me#gru#joke#software engineer#apple

491 notes


Text

Steve Jobs, Apple's co-founder, didn’t contribute to programming or hardware design. Steve Wozniak, the technical mind behind Apple’s early products, and other early employees have described Wozniak as the inventor and Jobs as the marketing strategist.

#Steve Jobs#Steve Wozniak#Apple#programming#hardware design#marketing#invention#technology history#computer science#entrepreneurship#Brands

112 notes


Text

OPT4048 - a "tri-stimulus" light sensor 🔴🟢🔵

We were chatting in the forums with someone when the OPT4048 (https://www.digikey.com/en/products/detail/texas-instruments/OPT4048DTSR/21298553) came up. It's an interesting light sensor that does color sensing, but with photodiodes matched to the CIE XYZ color space. That should make it particularly good for color-light tuning. We made a cute breakout board for this chip. Fun fact: it's 3.3V power but 5V logic friendly.
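One reason XYZ-matched readings are handy for light tuning: tristimulus values convert to CIE xy chromaticity with simple division, x = X / (X + Y + Z) and y = Y / (X + Y + Z). The sketch below assumes you already have X, Y, Z numbers from somewhere; it does not talk to the sensor, and `xyz_to_xy` is a hypothetical helper, not part of any driver.

```python
def xyz_to_xy(x_tri: float, y_tri: float, z_tri: float) -> tuple[float, float]:
    """Convert CIE XYZ tristimulus values to CIE 1931 xy chromaticity."""
    total = x_tri + y_tri + z_tri
    if total == 0:
        raise ValueError("X + Y + Z is zero; chromaticity is undefined")
    return x_tri / total, y_tri / total


# Example input: the standard D65 white point, not a real sensor reading.
x, y = xyz_to_xy(95.047, 100.0, 108.883)
print(round(x, 4), round(y, 4))
```

Chromaticity drops the brightness and keeps only the color, so two readings of the same light at different intensities land on the same (x, y) point.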

#opt4048#lightsensor#colortracking#tristimulus#ciexyz#colorsensing#texasinstruments#electronics#sensor#tech#hardware#maker#diy#engineering#embedded#iot#innovation#breakoutboard#3dprinting#automation#led#rgb#technology#smartlighting#devboard#optoelectronics#programming#hardwarehacking#electronicsprojects#5vlogic

85 notes


Text

Paranoia 1.0 AKA One Point O (2004)

#paranoia#tech noir#hacking#electronics#hackers#computers#cyberpunk#cyberpunk movies#cybersecurity#cyberpunk aesthetic#glitch#00s#00s aesthetic#2000s#00s movies#neo noir#computer bug#microchips#hardware#programming

121 notes


Text

straight up. not even gonna bother fucking with windows anymore once support for 10 ends. as soon as i have a good few days free to install and get used to everything, i'm switching to some flavor of linux.

#jude speaks#probably gonna do some hardware stuff that i've been putting off around the same time#get some more ram; reapply my thermal paste#all that jazz#in the meantime imma look into the different distros to see which one would work best for me#big changes are scary but tbh i am soooooooooooo looking forward to no more ads in my taskbar#no more switching my preferred programs back to defaults every time i restart#no more baked-in bloatware. i'll finally be free........

48 notes


Text

I love re-doing like 99% of my codebase because damn was I really that inefficient?

Can't wait to post this stuff when I'm done, then y'all can get some good working examples of DRN

Edit: Anyone that wants to be notified just lmk and I'll tag ya when it's ready

#drn#drone restraint notation#programming#robot kin#robot gender#techkin#tech kin#i am trapped in biological hardware#unhinged android posting

26 notes


Text

i have no computer goals. i dont have any computer wants or needs. im like a computer buddhist. this is my main barrier to coding

#i dont need to automate anything.#i dont really care about networks or graphics programming.#i dont really want to do anything hardware related.#and i DONT want to make websites.

127 notes


Text

HELP WANTED: LITTLEBIGPLANET FAN MOVEMENT

For the past year or so I’ve been working on a fan movement to revitalize interest in LittleBigPlanet on Sony’s terms. Think of it as comparable to Operation Moonfall, a fan movement for revitalizing interest in The Legend of Zelda: Majora’s Mask, which eventually led to its 3D remake.

While I can’t promise the plan I’ve set out is guaranteed to result in a new LBP game, I do believe that it is also the best opportunity for one to be made. However, as I’ve worked behind the scenes I’ve found it impossible to maintain on my own. Therefore I must ask for help.

Operation Mushroom Tree, as it is tentatively titled, involves the creation of a professional pitch for a new LittleBigPlanet entry for modern consoles. This pitch would provide a basic outline of what a new entry would entail, and prove why the franchise would still be popular.

The goal is not to make a wishlist nor a whole new installment on our own, but a feasible design concept for a new game that the community can rally behind. While this exact pitch may not be directly used by Sony Interactive Entertainment, Operation Mushroom Tree would be a template to guide development of a new game. The design document at the forefront would show the best way to make a new installment in a way that would be enticing to shareholders and employees of Sony Interactive Entertainment, the current rights holders.

So far the document has been written mainly by me. However, I am just beginning my journey into game development, and this project would be unsustainable on my own. I need concept artists and render artists to create believable mockups and illustrations of the game Operation Mushroom Tree proposes. I’d also sincerely love the second opinions of other game designers who are passionate about the LBP franchise. Finally, I have only a very rudimentary idea of how LBP works on a programming and hardware level, and thus this project would benefit from an expert on such.

To be precise, I am looking for:

- 3D Modeling or Photoshop Artists to create renders and mock-ups of gameplay mechanics.

- Individuals who have a history in Game Design and a fondness for the LittleBigPlanet Franchise, mainly to help write and guide the main design document.

- Individuals who have intimate knowledge of the LittleBigPlanet franchise on a hardware and software level, and can evaluate the feasibility of certain features on modern hardware.

If you or anyone you know falls into these categories, please contact me through Tumblr. If you are not in these categories, but are interested and believe you are able to help with this campaign, please contact me as well, and hopefully we can find a way to work together still. Do please provide some evidence of your past work and knowledge with your message.

I know that many out there currently are disappointed with the treatment of the LBP franchise after 2024, as am I. However, I do believe that LittleBigPlanet could thrive in today’s landscape, and would be beneficial to the games industry as a whole. Therefore, I am willing to embark on this journey, not only to entertain fans, but to help support up-and-coming developers get their first steps, as LBP did for me.

#little big planet#playstation#sony#lbp#game design#programming#3d modeling#sackboy#lbp2#lbp3#fan movement#help wanted#hardware#software#game development#dualshock#sony playstation#ps5#ps4#ps3#retro gaming#2000s nostalgia#sackgirl#british#media molecule#sony interactive entertainment

18 notes


Text

I know this take has been done a million times, but like…computing and electronics are really, truly, unquestionably, real-life magic.

Electricity itself is an energy field that we manipulate to suit our needs, provided by universal forces that until relatively recently were far beyond our understanding. In many ways it still is.

The fact that this universal force can be translated into heat or motion, and that we've found ways to manipulate these things, is already astonishing. But it gets more arcane.

LEDs work by creating a differential in electron energy levels between—checks notes—ah, yes, SUPER SPECIFIC CRYSTALS. Different types of crystals put off different wavelengths and amounts of light. Hell, blue LEDs weren't even commercially viable until the 90's because of how specific and finicky the methods and materials required were to use. So to summarize: LEDs are a contained Light spell that works by running this universal energy through crystals in a specific way.

Then we get to computers, which are miraculous for a number of reasons. But I'd like to draw your attention specifically to what the silicon die of a microprocessor looks like:

Are you seeing what I'm seeing? Let me share some things I feel are kinda similar looking:

We're putting magic inscriptions in stone to provide very specific channels for this world energy to flow through. We then communicate into these stones using arcane "programming" languages as a means of making them think, communicate, and store information for us.

We have robots, automatons, using this energy as a means of rudimentarily understanding the world and interacting with it. We're moving earth and creating automatons, having them perform everything from manufacturing (often of other magic items) to warfare.

And we've found ways to manipulate this "electrical" energy field to transmit power through the "photonic" field. I already mentioned LEDs, but now I'm talking radio waves, long-distance communication warping and generating invisible light to send messages to each other. This is just straight-up telepathy, only using magic items instead of our brains.

And lasers. Fucking lasers. We know how to harness these same two energies to create directed energy beams powerful enough to slice through materials without so much as touching them.

We're using crystals, magic inscriptions, and languages only understood by a select few, all interfacing with a universal field of energy that we harness through alchemical means.

Electricity is magic. Computation is wizardry. Come delve into the arcane with me.

#computer programming#computer science#computing#technology#tech#hardware#software#ham radio#robots#robotics#microcontrollers

37 notes


Text

03/12/21

#digitalismmm#digitalism#digital#tech#techcore#computer#machine#hardware#software#wires#compsci#programming#aesthetic#retro#throwback#photography#machines#art

119 notes


Text

#programmer humor#programming#geek#nerd#programmer#technology#meme#programming meme#developer#dev#full stack developer#frontend#backend#software#hardware#code#coding#software engineer#stem#software development#software developer#full stack#web#app#development#pc#laptop#windows#mac

11 notes


Text

Text mode HSTX DVI output on the Fruit Jam 🔡🖥️🍓

Jepler has been working round the clock to get HSTX DVI output support for the Fruit Jam working. This mode is neat because it's 'text only': you get 3-bit color text, and it does that by creating a 'resolution' of 91 x 30 characters where you can only set the color and glyph. However, the monitor output is a non-buffered 1280x720, with each scanline generated 'on the fly', so you don't need any SRAM. This could be great for something like a Z-machine emulator or other text-mode-only computation.
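To illustrate the general idea of generating scanlines on the fly from a character grid, here is a toy sketch in Python. It is not the Fruit Jam firmware and uses no HSTX API: the 4x4 font, the `render_scanline` function, and the cell layout are all assumptions made up for the example. Only the 91 x 30 and 1280x720 figures come from the post.

```python
# Toy 4x4 font (hypothetical data): each glyph is four rows of 4-bit masks.
GLYPH_W, GLYPH_H = 4, 4
FONT = {
    " ": [0b0000, 0b0000, 0b0000, 0b0000],
    "H": [0b1001, 0b1111, 0b1001, 0b1001],
    "I": [0b1110, 0b0100, 0b0100, 0b1110],
}


def render_scanline(cells: list[list[tuple[str, int]]], y: int) -> list[int]:
    """Build one row of pixels straight from the text grid, no framebuffer.

    cells: text rows, each a list of (glyph, color) pairs; color is 0-7 (3-bit).
    y: scanline number in pixels, counted from the top.
    Returns one color value per pixel, with 0 as the background.
    """
    text_row = cells[y // GLYPH_H]   # which row of characters this line crosses
    glyph_line = y % GLYPH_H         # which row of pixels inside each glyph
    pixels = []
    for glyph, color in text_row:
        bits = FONT[glyph][glyph_line]
        for x in range(GLYPH_W):
            lit = (bits >> (GLYPH_W - 1 - x)) & 1  # leftmost pixel is the top bit
            pixels.append(color if lit else 0)
    return pixels


screen = [[("H", 2), ("I", 5)]]
for y in range(GLYPH_H):
    print("".join(str(p) for p in render_scanline(screen, y)))

# Rough size comparison using the figures from the post.
CELLS = 91 * 30        # character cells to store
PIXELS = 1280 * 720    # pixels a full framebuffer would need
print(CELLS, PIXELS)
```

The only state kept is the grid of (glyph, color) cells plus the font table; every pixel is recomputed each time its scanline is needed, which is the trade that makes a full-resolution framebuffer unnecessary.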

#hstx#dvi#textmode#adafruit#fruitjam#embedded#electronics#opensource#retrotech#makers#zmachine#coding#hardware#tech#microcontroller#hacker#diy#innovation#creativecoding#vintagecomputing#electronicsprojects#techenthusiast#programming#raspberrypi#digitalart#hardwarehacking#opensourcehardware#retrocomputing#geek

30 notes


Text

Paranoia 1.0 AKA One Point O (2004)

#paranoia#tech noir#electronics#hackers#computers#cyberpunk#cyberpunk movies#cybersecurity#cyberpunk aesthetic#glitch#00s#00s aesthetic#2000s#00s movies#neo noir#microchips#hardware#programming#glitch art#deborah kara unger

41 notes


Text

N64 architecture

#nintendo 64#nintendo#hardware#programming#tech#CPU#GPU#RAM#computation#MIPS#SGI#silicon graphics#NEC#90s#vintage computing

33 notes
