#enter your username in the ‘Username’ empty field. By default

Explore tagged Tumblr posts

Text

Step By Step Guide For Linksys Extender Login

Connect your device to the Linksys Extender's Wi-Fi network in order to log in. Enter "http //extender.linksys.com" or "192.168.1.1" in the address bar of an open web browser. Enter your Linksys extender's login information. "admin" is used by default as both the username and the password. You can also leave both fields empty. To get the best results, change the parameters as necessary. To find out more about Linksys Extender login, visit our website. Our professionals can help you further.

0 notes

Text

How to Login Linksys Velop

If you looking for login solution, do the following steps and try to Login Linksys velop as soon as

possible.

Initially, you need to connect your PC into the network, which has to be the same network the

Linksys router is on.

Furthermore, turn on your device, open the web browser and enter IP address ‘192.168.1.1’ into

the address bar. After this, a box will appear on the screen and provoking you to enter your

“username” and “password” in order to do Linksys Velop login.

Moreover, enter your username in the ‘Username’ empty field. By default, the username field

needs to be left blank, but in case, you have to change your username, then you have to enter

that username.

After that, enter your password in ‘Password’ field, which by default is ‘Password’ only, but if

you have changed it, then enter that you have changed.

In last, click ‘Login’ and you will be able to efficiently do Velop login.

When you are positively login after that you are able to do Linksys Velop Setup process. For more

information Contact us on 877-372-5666.

#How to Login Linksys Velop#If you looking for login solution#do the following steps and try to Login Linksys velop as soon as#possible.# Initially#you need to connect your PC into the network#which has to be the same network the#Linksys router is on.# Furthermore#turn on your device#open the web browser and enter IP address ‘192.168.1.1’ into#the address bar. After this#a box will appear on the screen and provoking you to enter your#“username” and “password” in order to do Linksys Velop login.# Moreover#enter your username in the ‘Username’ empty field. By default#the username field#needs to be left blank#but in case#you have to change your username#then you have to enter#that username.# After that#enter your password in ‘Password’ field#which by default is ‘Password’ only#but if#you have changed it#then enter that you have changed.# In last#click ‘Login’ and you will be able to efficiently do Velop login.

0 notes

Text

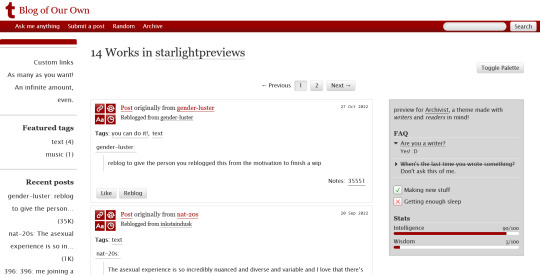

THEME PACK: THE ARCHIVE

Styled after AO3, this pack includes a theme and matching pages, and was designed for writers and readers alike.

🖋️ Theme 11: Archive Of Your Own

Live preview | Static previews: index page, permalink | Code

Full-width posts in an AO3 work index page format

Sidebars with optional sections such as featured tags, updates, rules, progress bars, and more

Unlimited custom links (display on sidebar or in top navbar), plus several social links in the footer

Add a custom logo beside/replacing your avatar

Inbuilt tag filtering plugin by glenthemes

All fields editable directly in the Customize menu, no HTML required. See below the cut for a full guide

🖋️ Page 3: Archive Records

Preview | Code

A WIP page designed to resemble an AO3 work page

Add tags for ratings, warnings, fandoms, characters, and more, as well as statistics like start dates, word counts etc.

Spaces for summary, start and end notes, and the 'work' itself

🖋️ Page 4: Archivist

Preview | Code

A combined about/navigation page based on the AO3 profile page

Include user statistics or any data you'd like, plus a longer bio

Sidebar navigation with link sections - unlimited links and link groups

The theme and pages all include options for multiple color palettes (initially set to Default and Reversi), text styling (choice of Tumblr/Google fonts and casing options), and more. -

Each page includes instructions on how to edit it, and color/image variables have been gathered together to make customization easier. While not necessary, basic knowledge of HTML is helpful.

For help, check my codes guide, or feel free to send me an ask. Theme 11 customization guide and credits are under the cut.

Theme 11 customization

Regarding the simpler fields:

"Secondary title" refers to the title just above the posts, under the header and navigation. This defaults to "[Total posts] Works in [Username]" when the field is left empty.

"Filtered tags" takes a comma-separated list of tags, entered exactly as they'd be written in the Tumblr post editor but without the hashtag. E.g. the tags #politics, #red and blue, and #green would be entered as "politics, red and blue, green" (make sure there's spaces, and no comma after the last item!). The filtering plugin will then put a warning message over any posts with those tags, along with a button letting you show the post.

"[Section] title" act as the headers for the corresponding section, if provided. "Custom links title" defaults to "Pages" if nothing is entered, and is used when the custom links are displayed on the navbar.

"Featured tags" takes a comma-separated list of tags, in the same format as Filtered tags. This field will display links to those tags, along with the number of posts in that tag on your blog, in the left sidebar.

The Recent posts section displays the 5 most recently posted/reblogged posts on your entire blog, displaying in the left sidebar. If you enter a tag under "Recent posts tag", it'll instead display the 5 most recent posts in that tag on your blog. Only 1 tag is allowed.

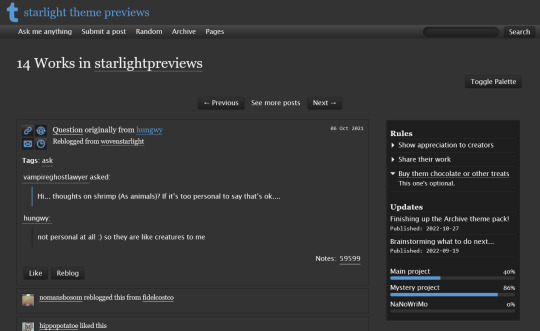

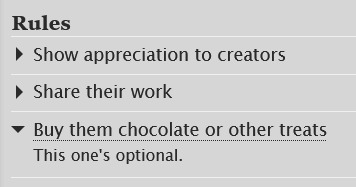

Rules and FAQ

Both these sections work the same way. Each new item, a rule or a question, consists either of one statement, or a statement and some more text, usually as an answer or additional note. E.g. the screenshot below shows three rules, where the last one has more text in the dropdown.

To create an item, prefix it with <li> . To add more text, create a <li> item and add a [more] label underneath, then write your extra text after that. To illustrate, here's the Customize page code for the above:

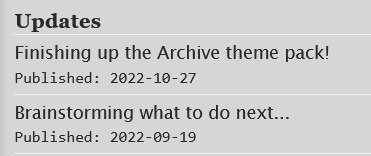

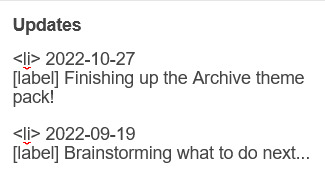

Updates

This section is similar to Rules/FAQ, though it flips the order around. After each <li>, first list the date, then add the [label] marker, then add your actual update. Again, here's an example:

And here's the Customize page code that created that:

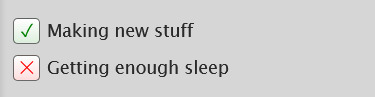

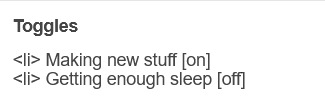

Toggles

This section displays checked/crossed-out items, and uses a simpler version of the formatting for the above sections. Use <li> for each new item, then add [on] or [off] at the end, depending on whether you want it checked or crossed off. Here's an example:

And here's the corresponding Customize code:

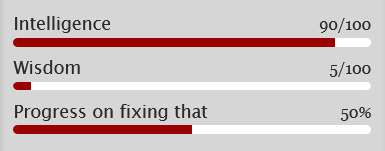

Progress bars

This section also uses <li> items, where each item has two parts: the text label, and the number(s) for the progress percentages, put inside square brackets like with the other sections. Here's an example (note how the top two use fraction values while the bottom one uses a percentage):

And here's the code that made these. In short, the format is <li> Text here [##/##], or <li> Text here [##%], where ## refers to any number.

Social links in footer

The footer links, aside from the email and personal website fields, take usernames or user IDs for various websites. Be sure to check you're not entering a username in a user ID field!

The Email address field takes a standard email in the format [email protected] and adds a link to let people mail that address.

The Personal website fields will generate a link in the footer's Follow section. Personal website name is the human-readable text label for the generated link, and Personal website URL is the URL that will open when the generated link is clicked. Make sure to add https:// to the start of the personal website URL so the generated link doesn't just redirect you to a different part of your blog.

Credits

Layout and design by Archive of Our Own

Style My Tooltips by malihu

Phosphor Icons

Expanded Tumblr localization and NPF photosets plugin by codematurgy

Custom audio posts by annasthms

Tag filtering by glenthemes

Palette toggle by eggdesign

Scroll to top by Fabian Lins

#code#theme#theme 11#page#page 3#page 4#coding cabin#theme hunter#free#full width#sidebar#topbar#nav text#pagination#unlimited links#header img#custom font size#tfont#gfont#unnested#responsive#npf#color mode#search#rblk buttons#timestamps#tags#group

1K notes

·

View notes

Text

The Living Joke, Ch. 2

Harley has discovered a cure for the Joker’s broken mind, and now a mostly sane Jack Napier must come to grips with all of the harm he’s done over the years, and decide whether he’s damned for the actions of a madman wearing his face.

***

One hundred feet below Wayne Manor, elevator doors open into a vast cavern and a dead man steps out. Jason Todd, the Red Hood, the black sheep of the Bat Family, Titan and Outlaw, enters the Batcave carrying his cycling helmet under his arm and wondering why Bruce has invited him here. Especially in the middle of the day.

"Chemical analysis looks hopeful," he hears Bruce say. "The formula seems to be doing exactly what Harley predicted it would."

"Seems to?" Dick replies. "Didn't you say yourself he was acting like a normal person in the café?"

"He's very good at that," Bruce responds as Jason rounds the corner and sees Bruce in chinos and a button-down sitting at the Batcomputer, looking over analysis of what Jason recognizes vaguely as a variety of antipsychotic, though not one he's ever seen before. Dick, meanwhile, is perched on one of the railings and dressed like a well-groomed hobo. "He fooled me for months as Eric Border," Bruce continues. "I'm not exactly objective here." He takes a sip of his coffee from a #1 Mom mug that Tim and Cass had found at a flea market and had rapidly become his favorite. "Good morning, Jason," he says, without turning around.

"Hey, B," Jason says, unsurprised that Batman has noticed he's here. He places his helmet down on an empty stainless steel table. "Doesn't sound like I'm in trouble for anything?"

"You're not," Bruce says, typing a long string of nonsense symbols, or rather, a code designed by Clark to be easily readable at super-speed. In the corner, the computer states that the file is being shared with SciencePizza—Barry's username on the Justice League groupchat. "I need your perspective on something." He glances at Dick. "Both of yours."

"Sure, whatcha got?" Jason says, dropping into one of the spare chairs and spinning a few times.

Bruce gestures to the screen. "What do you think of this?"

Jason tilts his head. "Looks like a new kind of anti-psychotic," he says. "Organic ingredients, some novel stuff..." He looks at Bruce. "This for Harvey?"

Dick shudders. "God, I hope not."

"Be nice, Dick," Barbara says, coming up the stairs with Cassandra trailing quietly behind her. Barbara is wearing jeans and a leather jacket, while Cass is dressed in jeans, a messy white blouse, and a black skinny tie. "Harvey's an old friend."

"He shot me in the face," Dick pouts back.

"Poor baby," Barbara responds, caressing her boyfriend's cheek. "Always a shame when something happens to a face that pretty."

Cass walks over to her father's side, leaning onto the back of the chair with crossed arms. "Your call seemed urgent."

"Somewhat." Bruce looks up at her, naked fondness in his eyes. "Cassandra, darling, can you move? I need to turn around."

Cassandra moves over slightly, turns, and leans against the keyboard, and Jason is somewhat jealous to note that she's perfected the "bisexual slouch" that Jason has been trying to practice on Roy and Artemis for months. And given that it's Cass, she probably knows exactly what she's communicating, though given that Tim and Stephanie are in California Jason isn't exactly sure who she's communicating to.

Bruce turns the chair around, so Cass is behind him and the rest of his present children in his field of vision. "I've called the four of you here because each of you has a unique perspective on a particular issue," he says. "We have reason to believe that Harley Quinn has managed to create an effective treatment for the Joker's mental condition."

There's a moment of absolute stunned silence that echoes through the entire cave, then Barbara breaks it with a hearty "Holy shit."

Dick whistles.

Jason crosses his arms behind his head. "So what's the plan to get him to take it?" he says. "Force it down his throat?"

Bruce shakes his head. "I already had Aaron Cash deliver him the first dose," he says. "And from what Barry, Harley, and I can all tell, it seems to be working. But."

"But," Cassandra echoes knowingly. She nods. "You're not sure."

Bruce purses his lips. "I want it to work," he says. "But at the same time, the pessimist in me is hoping that it won't. Punching him is simpler. So it's..." He tilts his head. "...difficult." He looks at his three oldest partners. "You three have the most experience with him out of anyone in the family, and Cassandra—" he acknowledges her with a nod of his head, "—is the best equipped to notice smaller signs. I'd like to ask you four to watch him, to gauge whether he actually is getting better or whether it's some kind of ruse."

Jason grimaces, leaning forward. "You know I'm going to default to no."

Bruce sucks in his lips and nods. "That's why I asked your advice specifically," he says. "You and Barbara are the least likely people in the family to trust him." He does not say why, because everyone in the room knows and he of all people knows what it's like to have to relive significant trauma. "If he is faking, I'm counting on you two to find out why."

It's an odd experience, being needed by Bruce for possibly the first time since he died. Jason decides he likes it.

"I kinda feel like the odd man out, here," Dick says.

Bruce turns to him. "You're the most likely to believe him," he says, and it's not an accusation—there's pride in his voice as he says it. "If it is, then Jack is going to need—"

"Jack?" Barbara interrupts.

"Jack Napier," Bruce says. "It's his name."

Cassandra raises an eyebrow. "First-name basis?"

Bruce remains completely impassive, which for him means he’s basically rolling his eyes. "If this is real, then Napier is going to need someone in his corner to make sure he doesn't backslide."

"I have a condition," Barbara announces. Everyone turns to her, and she clutches at her elbow, nervously shifting her weight to one hip. "I'm not going near him," she says. "I'll do it, but only as Oracle. No Batgirl involved."

Jason's eyes unconsciously flick to her stomach, where the bullet scar is hiding among a small number of larger knife wounds, then he catches himself and looks away. He feels a twinge on his back, the one he feels whenever he gets cold, where the Lazarus pit never quite erased the scarring from the crowbar. He can sympathize with not wanting to share space with that monster. It's a big part of why he started carrying guns, and why he decided to wear the red helmet—his own twisted form of exposure therapy.

"Agreed," Bruce says, turning back to his computer. "Now, if you'll excuse me, Croc's been robbing bodegas again." He glances at Jason. "You've got a fairly good relationship with him. Care to come with?"

In case anyone is wondering why Bruce seems out of character, I'm using @unpretty's Bruce from the Sorrowful and Immaculate Hearts series—specifically taking inspiration from the fic "Christmas in Kansas"—who I think is the best Batman/Bruce Wayne ever written.

#batman#the living joke#batman white knight#bruce wayne#the joker#jack napier#jason todd#the red hood#red hood#dick grayson#nightwing#barbara gordon#oracle#batgirl#cassandra cain#cassandra wayne#black bat#orphan#batdad#fic#my fic#fanfic#original content

61 notes

·

View notes

Link

Thanks for the question! I've created an answer in three parts, each one meant to address a specific question you've asked. PART ONE: How to Create Second-Generation Extension Packages PART TWO: How to Get the LMA to "see" a Second-Generation Managed Package PART THREE: Technical Enablement for Second-Generation Extension Packages Not gonna lie, this is a long read. For those who something shorter, here's the TLDR. TL;DR: Second-generation extension packages are created by adding dependencies on metadata found in other packages to a second-generation package, then creating a new package version using force:package:version:create. The LMA has no problem seeing 2GP extension packages so long as your Dev Hub is linked to your packaging org and you've created and promoted at least one second-generation package from that Dev Hub. There are several enablement materials that are relevant to this topic, but they're spread out over a large number of sites and locations. If you only have 20 minute to learn more, the one resource you should check out is this 17-minute video from DreamTX '20: Build Apps Using 2nd-Generation Managed Packages. For those with time to kill (and an itch to learn!) let's dive in! PART ONE: How to Create Second-Generation Extension Packages Fundamentally, an "extension package" is any package that has one or more hard dependencies on another package. For example, Package A and Package B start life out as unrelated entities. The moment that Package B adds a custom field, Apex reference, or other hard dependencies to metadata components that are defined in Package A...that moment is when Package B becomes an "extension" of Package A. How Package Dependency Works During Development Package dependency is at the heart of what makes one package an "extension" of another, so it's important to understand the rules that govern this interaction, especially those that impact you during development. Package Dependency Rule #1: Package dependencies (i.e. "base packages") must be present in the org where development of the dependent (i.e. "extension") package takes place in order to satisfy design-time metadata dependencies. For 1GP, this means that the package dependency must be installed in the packaging org For 2GP, this means that one of two things must happen If the package dependency has a different namespace, it must be installed in any namespaced scratch orgs where development takes place If the package dependency has the same namespace, it could either... Be installed in any namespaced scratch orgs where development takes place Be present in its own package directory within the same SFDX Project as the extension package source is, allowing the source of both packages to be deployed to the scratch org using force:source:push Package Dependency Rule #2: 2GPs that depend on other packages must explicitly define such dependencies inside of the sfdx-project.json file at the root of the SFDX project directory where the 2GP is being developed. Package Dependency Rule #3: Second-Generation Packages (2GPs) can depend on both First-Generation Packages (1GPs) and other 2GPs. On the other hand, 1GPs can only depend on other 1GPs because extending a 2GP with a 1GP is not supported by Salesforce. In other words... OK OK NOT OK OK Package Dependency Rule #4: Circular package dependencies are prohibited. This means that if Package B depends on Package A then it's not OK for Package A to also depend on Package B. Packages built in this way would never be installable because no matter which package you install first the subscriber org would always be missing a package dependency. Package Dependency in Action With all the rules of package dependency in mind, let's look at an example of 2GP extension package development in action. Consider this excerpt of a Salesforce DX project definition file, sfdx-project.json, used during the development of an expense calculator app. // Note: This is an excerpt of an sfdx-project.json file, used for demonstration // purposes only. A complete file would have additional keys defined. { "namespace": "exp_calc_demo", "packageDirectories": [ { "path": "accounting-logic", "default": false, "package": "Accounting Logic", "versionName": "Summer ‘21", "versionNumber": "4.5.0.NEXT", "dependencies": [ { "package": "Apex [email protected]" } ] }, { "path": "expense-calculator", "default": true, "package": "Expense Calculator", "versionName": "Summer ‘21", "versionNumber": "1.2.0.NEXT", "dependencies": [ { "package": "Apex [email protected]" }, { "package": "Accounting Logic", "versionNumber": "4.5.0.LATEST" } ] } ], "packageAliases": { "Expense Calculator": "0HoB00000002JAiWAP", "Accounting Logic": "0HoB00000004CFpKAM", "Apex [email protected]": "04tB0000000IB1EIYR" } } Observation #1: The "Expense Calculator" app is actually an MPSN (multi-package, same-namespace) solution because both the Expense Calculator and Accounting Logic packages are 2GPs that share the same namespace. We know this because each package is defined inside of the packageDirectories object array and each one has a package alias that points to a 0Ho Package2 ID. Observation #2: The definition of the Accounting Logic package directory appears before the definition of the Expense Calculator package directory. When running the force:source:push command, this causes the Salesforce CLI to push the project source one package directory at a time in the same order as those package directories are defined inside sfdx-project.json This is very important because the Expense Calculator package has metadata components that depend on the metadata found in the Accounting Logic package. When getting an empty scratch org ready for development, if the source from the Accounting Logic package was not pushed to the scratch org first the force:source:push command would end up failing due to invalid metadata references. Observation #3: The dependency between Expense Calculator and Accounting Logic is an internal dependency because both packages share the same namespace and were created against the same Dev Hub. This allows the Expense Calculator package directory definition to use the specialized syntax of "versionNumber": "4.5.0.LATEST" to specify which version of the Accounting Logic package it depends on. Observation #4: The dependency on Apex [email protected] is an external dependency because that package is neither part of the same namespace nor owned by the same Dev Hub as the Expense Calculator and Accounting Logic packages. The fact that it's an external dependency is also why Apex [email protected] is aliased to a 04t package version ID. Note that both Expense Calculator and Accounting Logic are aliased to 0Ho Package2 IDs, something that's only possible when the packages referenced are owned by the same Dev Hub that's being used to run any force:package commands in this project. Observation #5: It's not clear from looking at sfdx-project.json so I'll have to state it here: The Expense Calculator package in our example doesn't actually have any direct dependencies on the Apex [email protected] package. If that's the case, why do we need to explicitly list Apex [email protected] as a dependency of Expense Calculator? The reason is that Second-generation packages must declare all package dependencies, even indirect ones. When you run force:package:version:create the 2GP Build System needs to know the complete list of packages to install in the behind-the-scenes Build Org where your package is validated. When the build org is created, each package dependency defined by the package being built will be installed in the same order in which they are defined. That's why Apex [email protected] is the first dependency listed for the Expense Calculator package. It must be installed first because the package that Expense Calculator actually depends on, Accounting Library, would fail on installation in the Build Org if Apex [email protected] wasn't already there. Final Observations: There is a lot for a developer to keep track of when it comes to package dependencies in 2GP. Knowing the responsibilities up front can help avoid problems. Developers must identify the specific package versions that each 2GP depends on, whether those dependencies are direct or indirect External package dependencies must be installed in scratch orgs before the source of 2GPs that depend on those packages can be pushed/deployed to the scratch org Very little is automated (yet), so developers must be ready to manually determine indirect dependencies and install package dependencies into scratch orgs during development PART TWO: How to Get the LMA to "see" a Second-Generation Managed Package Connecting a second-generation managed package to an LMA is a relatively straightforward, two-step process...once you know how to do it. To make the first time easier, I'll walk through the process visually. STEP ONE: Connect the Publishing Console to Your Dev Hub This step assumes that your company has already joined the partner community and the Salesforce AppExchange Partner Program. If you haven't done this yet, please see the Connect with the Salesforce Partner Community unit on Trailhead for details on how to proceed.* Log in to the Partner Community with a user who's part of your partner organization and has been granted the Listings permission Click on the Publishing tab to open the Publishing Console Click on the Organizations sub-tab Click the Connect Org button to start the Connection Wizard Enter the username of an admin user from the org where your Developer Hub lives Enter this user's password with the security token appended If you don't have or don't know the security token for this user, you'll have to reset it Click the Connect button to complete the process STEP TWO: Register Your Second-Generation Package with Your LMA Please note that it may take several minutes for the packages owned by your linked Dev Hub to show up in the Publishing Console. Also note that ONLY released (i.e., non-beta) packages will show up here and that it may take several minutes for newly promoted 2GPs to appear. Log in to the Partner Community with a user who's part of your partner organization and has been granted the Listings permission Click on the Publishing tab to open the Publishing Console Click on the Packages sub-tab. This will show you... All released (i.e. non-beta) first-generation managed packages owned by the 1GP packaging orgs that you've linked to your Publishing Console All first-generation unmanaged packages owned by orgs (packaging or otherwise) that you've linked to your Publishing Console All released (i.e. non-beta) second-generation managed packages owned by each Dev Hub that you've linked to your Publishing Console Observe how different packages, VMC 2GP Lab (core-ux) and VMC 2GP Lab (SR-Test), each with the same namespace, vmc2gplab, are both listed here. This is what happens when you link a Dev Hub to the Publishing Console All second-generation packages owned by the linked Dev Hub which have at least one released (i.e. non-beta) package version will show up here. Connecting a managed package to your LMA requires you to click the Register Package link next to the package version that you want to wire up to your LMA. Clicking this link will kick off the package registration wizard. Click the Log In button to go to the next stage of the package registration wizard. Enter the username of an admin user from your License Management Org (LMO) "License Management Org" or "LMO" is a term used to describe an org where the License Management App (LMA) is currently installed. Enter this user's password with the security token appended If you don't have or don't know the security token for this user, you'll have to reset it. Click the Log In button to move to the next step in the package registration wizard. Choose the default license behavior. Trial licenses give subscribers access to your solution for up to 90 days. Active licenses provide subscribers with continuous access. Set the length of trial licenses in number of days. The maximum value that can be set is 90. When the default license is *active instead of trial, the option to specify the length of trial disappears. Choose whether the default license should be site-wide (available for all users) or per-seat (must be granted to users individually). If selecting per-seat as your default license, you must also specify the number of seats that will be granted to the subscriber by default. Click the Save button when done to complete the package registration wizard. Key Considerations When Using the LMA with Second-Generation Packages Please keep these things in mind when using the LMA with first and second-generation packages Packages will only appear in your publishing console once you've... Connected a packaging org (1GP) or Dev Hub (2GP) to your Publishing Console Uploaded a managed/released package version (1GP) or promoted a beta package version to the released state (2GP) Packages do not have to pass security review before being registered with your LMA Partners are welcome to register packages with their LMA early This allows partners to get comfortable with being "hands on" with the LMA Registering your package with the LMA also makes it possible see how the Feature Management App (FMA) works end-to-end IMPORTANT! Being able to register a package with your LMA before passing Security Review is not an invitation to skipping Security Review or the AppExchange Listing/Contracting process and distributing your package directly to customers. Doing so is a viloation of the Salesforce Developer Services MSA and the Salesforce Partner Program Agreement (SPAA). Installing in sandbox and trial orgs is fine. Installing in production orgs is not. Please don't do it! To go through Security Review, packages must be associated with a Listing. This is a core part of how the AppExchange publishing system works and can not be bypassed. Having a listing does not mean your app has to be publicly listed (i.e. visible) on the AppExchange. Creating a listing, going through Security Review, and keeping your listing private so customers must install via URL or 04t package version ID is perfectly acceptable. PART THREE: Technical Enablement for Second-Generation Extension Packages Documentation and enablement material for packaging in general can be found in many places. The Salesforce ISV Platform Expert Team aggregates 2GP specific enablement content in this Trailmix: Tech Enablement: Second-Generation Packaging (2GP) As for enablement resources that are relevant to the topic of 2GP extension packages, Phil W shared some great links in his answer. I'll share them again here along with some other ones I know of. (Thanks, Phil!) Finally, there's this super long article-disguised-as-StackExchange-answer which is (hopefully) a nice enablement resource all by itself. :-)

0 notes

Text

www.dlinkrouter.local : Dlink Router Setup Wizard

What are the steps if using 192.168.1.1 does not load the D'link router login page for D-Link DIR-615 Wireless-N300 Router?

In case the router’s configuration and login page fail to load, verify that the computer is connected to the router only and not to any other network.

If the problem remains, it means that the D-Link router must have been used by someone else and that user might have modified the default login address. In this situation, you may have to try to reset your D- Link router to its factory default settings. To do so, press and hold the Reset button positioned at the back of the router for about 10 seconds. Now, you have reset the router to its factory default settings by which you will also be able to access the configuration page by visiting the default IP Address 192.168.1.1.

What are the steps for D'link router login?

Open any web browser of your choice on a computer or laptop and enter the default IP address of the router into the address bar, which is 192.168.1.1. If this IP address does not work, you can still, however, log in to your router by entering the URL dlinkrouter.local. After visiting the above IP address or URL dlinkrouter.local, you will automatically get navigated to a D-Link router login page. Enter the default login credentials. The default username is “admin”, and the password domain should be left empty in case of logging into the router’s admin page. Close the D'Link setup wizard only after you have saved the settings.

How to log into the D-Link DIR-825 AC 1200 Wi-Fi Dual-Band Gigabit (LAN/WAN) Router through the domain dlinkrouter.local?

Before you start your login process, keep in mind that you must connect to the router either by a wired or wireless connection. Also, there are two addresses via which you can log into the Admin setup page of your D-Link router that is www.dlinkrouter.local and using the default IP address 192.168.1.1.

How to access the D’link router login page for D-Link DSL-2730U Wireless-N 150 ADSL2+ 4-Port Router?

To access the D-Link router login page, you can go to the web domain http://dlinkrouter.local, or you can also use the default IP address for your router, which is 192.168.1.1. This will direct you to the D'Link setup wizard and you can continue with the on-screen steps.

How to change the D-Link DWR-920V Wireless N300 4G LTE Router’s password?

Follow these steps to change the D‑Link Router login password:

● Launch any internet browser and enter the default web domain dlinkrouter.local or default IP address 192.168.1.1 into the address bar.

● Type the password for your Admin account in the password field given. If you have not modified this password from the default, then leave the field blank and click Log In.

● Most of the D-Link routers have a default user name as admin and password as admin/blank.

● Determine the Wireless Settings from the drop-down menu list. In the Password section, set the new wireless password for the desired radio band.

● Your wireless gadgets will demand this password to get access to your wireless network. It may ask you to update your wireless device’s configuration. Tap on the Save button to save your settings.

How do I set up the D-Link DIR-841 – AC1200 MU-MIMO Wi-Fi for the first time using the Dlink setup wizard?

To set up your D-Link router unplug and remove all the connections with your modem and router and follow the instructions:

● Join the Ethernet cable from any of the LAN ports of the router with the desktop PC that you desire to utilize to set up your router for the first time.

● Now, attach the modem into the WAN port of the router. Then, connect the router with a wall power outlet and turn it on.

● Now, power on the modem, the switch, and the PC. Launch any web browser of your preference on your desktop machine and type www.dlinkrouter.local in the address bar and hit enter.

● It will automatically take you to the Setup Wizard page in the web browser. If by any chance, the Setup Wizard page doesn’t automatically appear on the browser after typing http://dlinkrouter.local in the address bar, then you can also try using the default IP address of your router in the address bar. And the default IP address of the D-Link routers is 192.168.1.1.

● Meanwhile, the setup wizard page displays, it will supervise you through a step by step method to configure and set up your D-Link router and support you to connect with the internet.

● Click on Next to proceed. If you have already configured the router previously, then you will need to reset your router to go through the setup wizard process again.

● Now, the router will examine the type of internet connection that you own. It will successfully scan and skip the setup itself, but in some circumstances, if it doesn’t, you will be urged to choose the type of internet connection that you have. From the displayed choices, pick the DHCP connection (Dynamic IP).

● For all the wired associations, the connection type is always Dynamic IP. After choosing the DHCP connection type, click on Next.

● Now, you will notice a Wireless Settings, and you will get a prompt to set in a new wireless network name and password. It is essential as it will further benefit you in securing your network. Set and enter the desired Network name and password in particular fields. Click on Next.

● Record the username and passphrase for your wireless network so that the passphrase doesn’t get lost or forgotten. In case, if it gets lost or forgotten, a factory reset will be needed. Now, the Setup Wizard will again request you for a password. It is the password for the router configuration page, not your passphrase. Click on Next.

● Presently, the setup wizard page will demand you to set your time zone. After setting the time zone, click on Next.

● A report summary of all your router settings will get revealed. Click on the Save button to apply and save the changes in the settings.

● Don’t try to interrupt the reboot process anytime, as it may damage the router and even make it worthless. And you can now start utilizing your wireless internet connection.

0 notes

Text

Login To Linksys Extender Using Extender.linksys.com

To log in, connect your device to the Linksys Extender's Wi-Fi network. Open a web browser and enter "http //extender.linksys.com" or "192.168.1.1" in the address bar. Enter the login credentials for your Linksys extender. "Admin" is the password and the username by default. You can alternatively leave both fields empty. Adjust any required settings for optimal performance. Visit our website to learn more. You can ask our experts for more assistance.

0 notes

Text

How to Login Linksys Velop

If you looking for login solution, do the following steps and try to Login Linksys velop as soon as

possible.

Initially, you need to connect your PC into the network, which has to be the same network the

Linksys router is on.

Furthermore, turn on your device, open the web browser and enter IP address ‘192.168.1.1’ into

the address bar. After this, a box will appear on the screen and provoking you to enter your

“username” and “password” in order to do Linksys Velop login.

Moreover, enter your username in the ‘Username’ empty field. By default, the username field

needs to be left blank, but in case, you have to change your username, then you have to enter

that username.

After that, enter your password in ‘Password’ field, which by default is ‘Password’ only, but if

you have changed it, then enter that you have changed.

In last, click ‘Login’ and you will be able to efficiently do Velop login.

When you are positively login after that you are able to do Linksys Velop Setup process. For more

information Contact us on 877-372-5666.

-----------------------------------------------------------------------------------------------------------------------------------------

Fix Linksys Velop Login Issue

Having an issue on Linksys velop or you can also say that Linksys velop login problem. Reset your Linksys

velop at once and resolve your issue:

Reset your Linksys velop and try to fix Linksys velop Login:

Primly, find out the Reset button on the back of your Linksys velop

Second, when the velop powered on, use the pointed end of a paperclip or similar object to

press and hold the Reset button till 15 seconds

Wait till Linksys velop router to fully reset and power back on.

After reset your routers try Linksys Velop Login again. For more information Contact us on this number

877-372-5666 and get the solution.

How to resolve Linksys velop not working issue

If you are facing issue with Linksys velop means your Linksys velop not working then, there are lots of

reason that are mentioned below:

Understand the reason and try to resolve your issue.

Ensure that your router and modem are connected to each other properly

May be your velop is overheated

Might be Linksys velop firmware is not updated

Outdated Linksys velop drivers is the reason of error

After understand the reason after that, try to resolution Linksys velop issue. You need to Restart your

Linksys Velop and try to resolve your issue in one step. To restart Linksys velop just plug out your Linksys

velop from outsource, wait for a while after that plugin your Linksys velop, try Linksys velop Login again

and see the issue has been resolved or not.

Linksys Velop not showing up- steps

Are you looking solution for Linksys velop not showing up then follow the below step and resolve the

issue.

Check the Ethernet Cable

One of the main reasons behind the Linksys Velop router not showing up- because of faulty Ethernet

cable. If there is some damage within the Ethernet cable or something is wrong with this cable then your

Linksys velop router may not work.

Arrange Another Ethernet cable

Pull out Enternet cable from your computer and router

Now, insert Another Ethernet Cable.

Next, See Whether The Lights Lit Up On The Router Or Not.

Last, check that if the Router Starts to Work.

If the issue with Ethernet cable then you should be able to use the router with another cable or you

have to change your Ethernet cable and try to Login Linksys velop. If you are facing another issue then,

contact us on 877-372-5666.

#Linksys Velop Signin#Linksys Velop Login#Linksys Velop Setup#Linksys Velop Router#Linksys Velop App

0 notes

Text

Lenovo ThinkServer RD650 with new Xeon E5-2600 v3 processors

In Septemeber of 2014 we saw the primary press anouncements for Lenovo’s new Grantly platforms with two new rack established servers, the RD550 and RD650. Those have been the first new structures from Lenovo that might use the modern-day Intel Xeon E5-2600 v3 series processors and DDR4 reminiscence. In the lab today we will take a look at the Lenovo ThinkServer RD650.E3-1270 v6

Lenovo ThinkServer RD650 Base Server SpecificationsLenovo makes a number of?Exclusive variations of the ThinkServer RD650 however there are a fixed of common specs:

Processor: Up to two 18-center Intel Xeon E5-2600 v3 SeriesMemory: up to 768 GB DDR4 – 2133 MT/s thru 24 slots (RDIMM/LRDIMM)growth Slots: All chassis up to – 3 x PCIe Gen3: LP x8, 4 x PCIe Gen3: FLFH x8, 1 x PCIe Gen3: HLFH x8Systems control: ThinkServer gadget supervisor. Non-compulsory ThinkServer gadget manager PremiumDimensions: 19.0″ x 3.4″ x 30.1″Weight: starting at 35.Three lbsFrom aspect: 2U Rack MountThe ThinkServer RD650 is available in three simple models that permit for special garage configurations. The first is the 12x 3.5″ power server:

Lenovo ThinkServer RD650 12 x three.Five Inch DrivesLenovo makes a mixed 8x 2.5″ and 9x three.5″ server (are you salivating but vSAN, backup appliance and virtualized ZFS equipment lovers?)

Lenovo ThinkServer RD650 – eight x 2.5 and nine x 3.5 inch DrivesFinally there's a model with 24x 2.5″ drives that's every other commonplace shape thing:

Lenovo ThinkServer RD650 – 24 x 2.5 inch DrivesThe pattern we obtained for review is the ThinkServer RD650 with 12 x three.Five Inch Drives.

Unpacking the Lenovo ThinkServer RD650Let’s take a look at how the RD650 is packed for delivery.

Lenovo ThinkServer RD650 transport boxWe continually like to show how servers are boxed up for transport to look how nicely the server is included from the same old bumps, drops and bins smashed up by using difficult managing. We can see that the RD650 is well included with foam inserts and in this case it's miles further included by being encased in an additional card board field. Down within the bottom underneath the server there's an accent box that is placed in foam inserts and additional knock outs are furnished for extra accessory bins. Along the bottom is the rail package.

This became no longer double boxed like we see in different servers, but there may be empty space around the server itself to protect from punctures.

Lenovo ThinkServer RD650 FrontHere we see the the front of the RD650 with the top lid eliminated. Over all it's miles very much like the earlier Lenovo servers excluding progressed method cooling systems.

Lenovo ThinkServer RD650 BackThe lower back of the RD650 suggests the progressed cooling shroud and the massive variety of growth bays that this server includes.

Lenovo ThinkServer RD650 CPU-RAM AreaThe RD650 CPU region uses passive warmness sinks for cooling. These use everyday socket 2011 R3 rectangular mounting holes and?We found in our trying out they may be?Superb at eliminating heat from the CPU’s. See our rectangular v. Slim ILM manual for the distinction in LGA2011 mounting alternatives. The fan bar includes six redundant warm-swap fanatics with 2 processors load outs. If best one processor is used there will be four enthusiasts mounted that can assist keep electricity.

We have examined a fair number of Lenovo servers in the beyond and located the lovers which can be used offer excessive air float and do no longer make too much noise. The provided air shroud channels air thru the warmth sinks and reminiscence region very successfully and we had no warmth troubles with the server even underneath very heavy hundreds.

Each of those fanatics are warm swappable and the complete cooling bar can be removed with easy locking levers on either side of the bar.

Lenovo ThinkServer RD650 growth BayTwo growth bays just like the one proven above may be geared up into the RD650, each bay can handle up to a few growth cards. These bays can deal with full length expansion playing cards which permit for larger cards to fit right into a 2U chassis.

Lenovo ThinkServer RD650 iKVM and TPM ModuleIn among the two expansion bays are iKVM and TPM modules. These are non-compulsory modules.

Lenovo ThinkServer RD650 Raid ControllerAt the the front of the server the RAID controller card is placed right at the back of the power bays. Right here we've got eliminated the main cooling bar to get a higher observe this. The principle cooling lovers for the server are positioned proper next to the controller card which permits suitable air glide to assist keep this cool.

Putting in place the Lenovo ThinkServer RD650The usual method to install an operating machine onto the RD650 is to use the Lenovo ThinkServer Deployment supervisor. This could stroll you thru installing an OS and drivers for the server.

Lenovo ThinkServer RD650 TDM BIOS SettingTo get admission to the TDM honestly boot the system into the BIOS and head over to the Boot supervisor and select launch TDM.

Lenovo ThinkServer RD650 Deployment ManagerAfter deciding on release TDM you will see the above display. From right here you could regulate just about some thing you can do inside the BIOS and do platform updates. Storage management permits you to setup any Raids which you need for the server earlier than you start installing an working device.

The Deployment alternative will get you began installing your OS of choice.

These options are:

Quantity choice – available garage volumes may be displayedOS choice – pick the OS to be deployed (Linux, home windows, VMware)installation Settings – Time quarter, Language, License Key, laptop call, Admin/root passwordPartition options – two options are to be had:Use present partition – preceding OS set up exists at the driveRepartition the force at some point of installation – sets size of OS partitionAfter you have got selected the essential alternatives the system will start to deploy the working gadget. Our gadget did now not have an DVD drive so we used a USB DVD force to mount the running machine DVD. After the installation turned into completed the gadget completed with us on the login display screen of windows Server 2012 R2 which we used for some of our exams. We may also boot directly from an Ubuntu run DVD and pass the TDM completely the usage of the USB DVD power.

Lenovo ThinkServer RD650 gadget ManagerFor faraway control the RD650 consists of the machine manager. Surely enter the IP address for the server into your browser and login.

The default username/ password login data for the Lenovo ThinkServer RD650 is:

Username: lenovoPassword: len0vOIf you have got an iKVM Module established?You could choose the “release” button to go into far flung control and operate the device that manner.

Lenovo ThinkServer RD650 gadget manager manage ScreenThe next display screen indicates the alternatives that you may monitor and alternate thru the far flung control interface.

Test ConfigurationOur take a look at setup includes the pinnacle end Intel Xeon E5’s which we use in all of our checks. These CPU’s include 18 cores every and gives our system the maximum processor load out. We also crammed all memory slots to present the most load out of memory that we could, the usage of 16GB sticks in each slot, this dropped our memory velocity to 1600MHz.

Processors: 2x Intel Xeon E5-2699 v3Memory: 24x 16GB crucial DDR4 (384GB overall)garage: 1x SanDisk X210 512GB SSDOperating systems: Ubuntu 14.04 LTS and windows Server 2012 R2AIDA64 MemoryWith a complete 24 DIMM’s of DDR4 reminiscence installed the memory pace has dropped right down to 1600MHz. But we are seeing very good outcomes which can be just beneath what a system the use of much less memory at higher speeds.

Memory Latency ranged at ~99ns and our common structures the use of 16x 16GB DIMM’s ranged approximately ~78ns.

RD650 flow ResultsOur circulate test outcomes showed similar outcomes as compared to a gadget strolling 16x 16GB DIMM’s. Using a complete load out and lower reminiscence speeds confirmed ~20 MB/s bandwidth loss.

The memory tests results are as predicted with this big load out of 24x DIMM slots completely populated.

ThinkServer RD650 Linux-Bench ResultsThe complete check outcomes for a pattern?Linux-Bench run may be found right here. RD650 with 2x E5-2699 v3 Linux-Bench

Our pattern server got here with one Intel Xeon E5-2690 v3 and 16GB of DDR4 installed. We also ran our exams with this configuration as it might show a typical setup for an entry stage RD650. RD650 with 1x E5-2690 v3 Linux-Bench

Lenovo ThinkServer RD650 power?ConsumptionOur test configuration did function two very excessive-give up processors which can be at the very pinnacle of Intel’s Xeon E5-2600 V3 product line-up.

RD650 electricity ConsumptionThe common idle strength use of the RD650 turned into ~95watts which is fairly excellent for a server of this type. Whilst we max out the gadget underneath heavy AIDA64 strain test, we noticed ~575watts pulled for the whole system which is a touch excessive for systems like this. Of course adding a complete praise of drives and expansions gadgets will impact those numbers as would using lower energy processors.

ConclusionThe first element that sticks out with the Lenovo ThinkServer RD650 is the sheer number of growth alternatives that this server line up has to offer. 3 distinctive models that provide big abilties in garage options that also include options for two additional company-magnificence M.2 SSD’s for booting in addition to SD card alternatives for hypervisor booting. These servers are designed from the ground up for bendy boot alternatives.?Our pattern server can max out at 96TB’s of spindle storage the use of it’s 12 three.5″ force bays with excessive capability 8TB disks.

With a total of 8 PCIe there may be plenty of expansion space that permits you to scale up I/O if wished.The RD650 additionally offers you the choice of Raid adapters that in shape in the bay between the difficult pressure area and the primary cooling bar. That is a pleasant characteristic as they do no longer absorb and extra PCIe slots within the again and are in a great area for cooling.

In keeping with Lenovo the cooling machine is rated at forty five tiers Celsius / 113 F continuous operation and we discover the cooling gadget is up to the task in our tests. We are always impressed for the cooling setups on Lenovo servers, the fanatics do an awesome job at moving lots of air thru the case and they do not make a variety of noise that is a huge plus for us inside the lab.

We also just like the ThinkServer Deployment manager for its ease in getting a machine up and running. Its rather simple to use and installs all wished drivers throughout the setup and OS installation. We also ran Ubuntu proper off a USB DVD force on our RD650 and had no troubles with drivers on our test setup.

We also noticed Lenovo make a shift from DVI video output which without a doubt is a general to DisplayPort. Within the lab we use a KVM switch with all DVI connections to run our test setups, we can without problems connect up several machines and have all of them walking, however in this situation we could not get a DisplayPort to DVI adapter to work. We ended up simply using far flung management and iKVM to run the server. This worked satisfactory and the majority would use this in a production environment, but for crash carts and other setups used at vicinity a DisplayPort screen might be required.

0 notes

Text

Introducing MongoDB 4.0 compatibility and Transactions in Amazon DocumentDB

Amazon DocumentDB (with MongoDB compatibility) is a fast, scalable, highly available, and fully managed document database service that supports MongoDB workloads. Today we’re announcing compatibility with MongoDB 4.0 for Amazon DocumentDB. With this launch, you can now use atomic, consistent, isolated, and durable (ACID) transactions, open a change stream cursor for a database or cluster, and much more. For the full release notes for Amazon DocumentDB 4.0, see MongoDB 4.0 Compatibility. In this post, I summarize what’s new in Amazon DocumentDB 4.0 and show you how to get started with Amazon DocumentDB 4.0 and transactions using an AWS Cloud9 environment. What’s new in Amazon DocumentDB 4.0? The following are some of the major features and capabilities that were introduced in Amazon DocumentDB 4.0. To see a full list of the new capabilities, see MongoDB 4.0 Compatibility. ACID Transactions – Amazon DocumentDB now supports the ability to perform transactions across multiple documents, statements, collections, and databases. Transactions simplify application development by enabling you to perform ACID operations across one or more documents within an Amazon DocumentDB cluster. For more information, see Transactions. Change streams – You can now open a change stream at the cluster level (client.watch() or mongo.watch()) and the database level (db.watch()). You can also specify a startAtOperationTime to open a change stream cursor, and extend your change stream retention period to 7 days (previously, the limit was 24 hours). For more information, see Using Change Streams with Amazon DocumentDB. AWS DMS – You can now use AWS Database Migration Service (AWS DMS) to migrate your MongoDB 4.0 workloads to Amazon DocumentDB. AWS DMS now supports a MongoDB 4.0 source, Amazon DocumentDB 4.0 target, and an Amazon DocumentDB 3.6 source for performing upgrades between Amazon DocumentDB 3.6 and 4.0. For more information, see Using Amazon DocumentDB as a target for AWS Database Migration Service. Monitoring – With the addition of transactions, you can now monitor your transaction usage with five new Amazon CloudWatch metrics: TransactionsOpen, TransactionsOpenMax, TransactionsAborted, TransactionsStarted, and TransactionsCommitted, in addition to new fields in currentOp, ServerStatus, and profiler. For more information, see Monitoring Amazon DocumentDB with CloudWatch. Performance and indexing – Included in this release are multiple performance and indexing improvements: the ability to use an index with the $lookup aggregation stage, find() queries with projections can be served directly from an index (covered query), the ability to use hint() with the findAndModify API, performance optimizations for $addToSet operator, and improvements to reduce overall index sizes. For more information, see Release Notes. Operators – We have added support for new aggregation operators: $ifNull, $replaceRoot, $setIsSubset, $setInstersection, $setUnion, and $setEquals. For more information, see Supported MongoDB APIs, Operations, and Data Types. Role based access control (RBAC) – With the ListCollection and ListDatabase commands, you can now optionally use the authorizedCollections and authorizedDatabases parameters to allow users to list the collections and databases that they have permission to access without requiring the listCollections and listDatabase roles, respectively. Users can also end their own cursors without requiring the KillCursor role. For more information, see Restricting Database Access Using Role-Based Access Control (Built-In Roles). Getting started with Amazon DocumentDB 4.0 and transactions The first step is to create an AWS Cloud9 environment and an Amazon DocumentDB cluster in your default Amazon Virtual Private Cloud (Amazon VPC). For instructions on creating a default VPC, see Getting Started with Amazon VPC. This post demonstrates how to connect to your Amazon DocumentDB cluster from your AWS Cloud9 environment with a mongo shell and run a transaction. When creating AWS resources, we recommend that you follow the best practices for AWS Identity and Access Management (IAM). The following diagram shows the final architecture of this walkthrough. For this walkthrough, use the default VPC in a given Region. For more information, see Creating a Virtual Private Cloud (VPC). Creating an AWS Cloud9 environment To create your AWS Cloud9 environment, complete the following steps: On the AWS Cloud9 console, choose Create environment. Under Environment name and description, for Name, enter a name for the environment. This post enters the name DocumentDBCloud9. Choose Next step. In the Configure settings section, accept all defaults. Choose Next step. In the Review section, choose Create environment. The provisioning of the AWS Cloud9 environment can take up to 3 minutes. When it’s complete, you see a command prompt. You’re redirected to the command prompt to install the mongo shell and connect to your Amazon DocumentDB cluster. Creating a security group In this step, you use Amazon Elastic Compute Cloud (Amazon EC2) to create a new security group that enables you to connect to your Amazon DocumentDB cluster on port 27017 (the default port for Amazon DocumentDB) from your AWS Cloud9 environment. On the Amazon EC2 console, under Network & Security, choose Security groups. Choose Create security group. For Security group name, enter demoDocDB. For VPC, accept the usage of your default VPC. For Description, enter a description. In the Inbound rules section, choose Add rule. For Type, choose Custom TCP Rule. For Port Range, enter 27017.The source security group is the security group for the AWS Cloud9 environment you just created. To see a list of available security groups, enter cloud9 in the destination field. Choose the security group with the name aws-cloud9-. Accept all other defaults and choose Create security group. The following screenshot shows you the security groups that were created in this step and the AWS Cloud9 security group that was created when you created an AWS Cloud9 environment. Creating an Amazon DocumentDB 4.0 cluster To create your Amazon DocumentDB 4.0 cluster, complete the following steps: On the Amazon DocumentDB console, on the Clusters page, choose Create. For Engine version, choose the default (4.0.0). On the Create Amazon DocumentDB cluster page, for Instance class, choose t3.medium. For Number of instances, choose 1. This helps minimize costs. Leave other settings at their default. In the Authentication section, enter a username and password. Turn on Show advanced settings. In the Network settings section, for VPC security groups, choose demoDocDB. Choose Create cluster. Amazon DocumentDB is now provisioning your cluster, which can take up to a few minutes to finish. You can connect to your cluster when both the cluster and instance status show as Available. While Amazon DocumentDB provisions the cluster, complete the remaining steps to connect to your Amazon DocumentDB cluster. Installing the 4.0 mongo shell You can now install the mongo shell, which is a command-line utility that you use to connect to and query your Amazon DocumentDB cluster. On the AWS Cloud9 console, under Your environments, choose DocumentDBCloud9. Choose Open IDE. To install the 4.0 mongo shell, at the command prompt, create the repository file with the following code: echo -e "[mongodb-org-4.0] nname=MongoDB Repositorynbaseurl=https://repo.mongodb.org/yum/amazon/2013.03/mongodb-org/4.0/x86_64/ngpgcheck=1 nenabled=1 ngpgkey=https://www.mongodb.org/static/pgp/server-4.0.asc" | sudo tee /etc/yum.repos.d/mongodb-org-4.0.repo When it’s complete, install the mongo shell with the following code: sudo yum install -y mongodb-org-shell Transport Layer Security (TLS) is enabled by default for any new Amazon DocumentDB clusters. For more information, see Managing Amazon DocumentDB Cluster TLS Settings. To encrypt data in transit, download the CA certificate for Amazon DocumentDB. See the following code: wget https://s3.amazonaws.com/rds-downloads/rds-combined-ca-bundle.pem Connecting to your Amazon DocumentDB cluster You’re now ready to connect to your Amazon DocumentDB cluster. On the Amazon DocumentDB console, on the Clusters page, locate your cluster. This post uses the cluster docdb-2020-10-09-21-45-11. Choose the cluster you created. Copy the connection string provided. Omit so that you’re prompted for the password by the mongo shell when you connect. This way, you don’t have to type your password in cleartext.Your connection string should look like the following screenshot. When you enter your password and can see the rs0:PRIMARY> prompt, you’re successfully connected to your Amazon DocumentDB cluster. For information about troubleshooting, see Troubleshooting Amazon DocumentDB. When you have connected with the mongo shell, you can discover the version (4.0.0) with the following command: db.version() You get the following output: 4.0.0 Using transactions Now that you’re connected to your cluster with the mongo shell, you can explore using transactions. One of the classic use cases for transactions is debiting money from one person’s account and crediting that money in another person’s account. Because the use case deals with two separate operations in the database, it’s desirable that the two operations run within a transaction and follow the ACID properties. For this post, we transfer $400 from Bob’s bank account to Alice’s bank account. Both accounts begin with $500. To start from with an empty collection, first drop the account collection: db.account.drop() You get the following output: {true, false} Insert data into the collection to represent Bob’s account: db.account.insert({"_id": 1, "name": "Bob", "balance": 500.00}); You get the following output: WriteResult({ "nInserted" : 1 }) Insert data into the collection to represent Alice’s account: db.account.insert({"_id": 2, "name": "Alice", "balance": 500.00}); You get the following output: WriteResult({ "nInserted" : 1 }) To start a transaction, create a session and a session object for the account: var mySession = db.getMongo().startSession(); var mySessionObject = mySession.getDatabase('test').getCollection('account'); mySession.startTransaction({readConcern: {level: 'snapshot'}, writeConcern: {w: 'majority'}}); Within the transaction, debit $400 from Bob’s account: mySessionObject.updateOne({"_id": 2}, {"$inc": {"balance": 400}}); You get the following output: { "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 } Similarly, credit Alice’s account with $400: mySessionObject.updateOne({"_id": 1}, {"$inc": {"balance": -400}}); You get the following output: { "acknowledged" : true, "matchedCount" : 1, "modifiedCount" : 1 } Within the transaction, you can see both updates with the following code: mySessionObject.find() You get the following output: { "_id" : 2, "name" : "Alice", "balance" : 900 } { "_id" : 1, "name" : "Bob", "balance" : 100 } If you view outside of the transaction, the updates aren’t yet visible: db.account.find() You get the following output: { "_id" : 1, "name" : "Alice", "balance" : 500 } { "_id" : 2, "name" : "Bob", "balance" : 500 } Commit the transaction and end the session: mySession.commitTransaction() mySession.endSession() To see the updates, enter the following code: db.account.find() You get the following output: { "_id" : 2, "name" : "Alice", "balance" : 900 } { "_id" : 1, "name" : "Bob", "balance" : 100 } Cleaning up When you complete the walkthrough, you can either stop your Amazon DocumentDB cluster to reduce costs or delete the cluster. By default, after 30 minutes of inactivity, your AWS Cloud9 environment stops the underlying EC2 instance to help save costs. Summary This post introduced you to MongoDB 4.0 compatibility in Amazon DocumentDB and showed you how to get started with Amazon DocumentDB 4.0 and transactions by creating an AWS Cloud9 environment, installing the mongo 4.0 shell, creating an Amazon DocumentDB cluster, connecting to your cluster, and walking through a common use case for transactions. For more information, see MongoDB 4.0 Compatibility and Transactions. For more information about recent launches and blog posts, see Amazon DocumentDB (with MongoDB compatibility) resources. About the author Joseph Idziorek is a Principal Product Manager at Amazon Web Services. https://aws.amazon.com/blogs/database/introducing-amazon-documentdb-with-mongodb-compatibility-4-0/

0 notes

Text

300+ TOP DRUPAL Interview Questions and Answers

Drupal Interview Questions for freshers experienced

1. What Is Drupal? Drupal (pronounced Dru-Pull) is an open source content management system offering a toolset that rivals those of most commercial alternatives. With integrated social media and e-commerce functionality, it provides unique value as part of your social media strategy. 2. How to create a folder and a module file in Drupal? Given that our choice of short name is "onthisdate", start the module by creating a folder in your Drupal installation at the path: sites/all/modules/onthisdate. You may need to create the sites/all/modules directory first. Create a PHP file and save it as onthisdate.module in the directory sites/all/modules/onthisdate. As of Drupal 6.x, sites/all/modules is the preferred place for non-core modules (and sites/all/themes for non-core themes), since this places all site-specific files in the sites directory. This allows you to more easily update the core files and modules without erasing your customizations. Alternatively, if you have a multi-site Drupal installation and this module is for only one specific site, you can put it in sites/your-site-folder/modules. The module is not operational yet: it hasn't been activated. We'll activate the module later in the tutorial. 3. How to name your module? The first step in creating a module is to choose a "short name" for it. This short name will be used in all file and function names in your module, so it must start with a letter and by Drupal convention it must contain only lower-case letters and underscores. For this example, we'll choose "onthisdate" as the short name. Important note: It is not just a convention that the short name is used for both the module's file name and as a function prefix. When you implement Drupal "hooks" (see later portions of tutorial), Drupal will only recognize your hook implementation functions if they have the same function name prefix as the name of the module file. It's also important to make sure your module does not have the same short name as any theme you will be using on the site. 4. Explain the menu system in Drupal? Define the navigation menus, and route page requests to code based on URLs. The Drupal menu system drives both the navigation system from a user perspective and the callback system that Drupal uses to respond to URLs passed from the browser. For this reason, a good understanding of the menu system is fundamental to the creation of complex modules. Drupal's menu system follows a simple hierarchy defined by paths. Implementations of hook_menu () define menu items and assign them to paths (which should be unique). The menu system aggregates these items and determines the menu hierarchy from the paths. For example, if the paths defined were a, a/b, e, a/b/c/d, f/g, and a/b/h, the menu system would form the structure: a a/b a/b/c/d a/b/h e f/g 5. How to interact with Drupal search system? There are three ways to interact with the search system: Specifically for searching nodes, you can implement nodeapi ('update index') and nodeapi ('search result'). However, note that the search system already indexes all visible output of a node, i.e. everything displayed normally by hook_view () and hook_nodeapi ('view'). This is usually sufficient. You should only use this mechanism if you want additional, non-visible data to be indexed. Implement hook_search (). This will create a search tab for your module on the /search page with a simple keyword search form. You may optionally implement hook_search_item () to customize the display of your results. Implement hook_update_index (). This allows your module to use Drupal's HTML indexing mechanism for searching full text efficiently. If your module needs to provide a more complicated search form, then you need to implement it yourself without hook_search (). In that case, you should define it as a local task (tab) under the /search page (e.g. /search/mymodule) so that users can easily find it. 6. How to Customize a Drupal Syndicate Feed Icon? For a recent project I needed to customize the feed icon in the Drupal theme I was creating. This wasn't as straight forward as I thought it would be. Being the drupal newbie that I am I went looking for it in the core templates and suggestions page only to come empty handed. Previously I found the solution to theming a search form by using the search-block-form.tpl.php template file and thought there would be one for the feed icon too. I found the solution to this in the function reference in the form of a theme hook. theme_feed_icon($url, $title) This function is internally called by drupal to generate the feed icon in the Syndicate block. Our Job is to override this function. 7. How to backup a Drupal site? Backing up your Drupal site is now very easy, you just need to download and install a module called Backup & Migrate. To install the module click on the Administer Modules check the Backup and Migrate module and enable it and save the settings. Then navigate to the Administer Content Management Backup and Migrate then do the following settings. Exclude the following tables altogether: select the table which you dont want to take backup. Give the backup file name. There are also options to compress the file before download, or add a datestamp. And then click Backup Database. Alternately you can take backups using PhpMyAdmin. 8. How to move a Drupal Site from One host/server to another on your NEW host? Upload your folder with the complete drupal installation to your home-directory. Once done, go to phpadmin on the new host, create a new mysql database, example "name_drpl1" and create a new mysql user. Create a password for this new mysql user, click "assign all privileges" to this user and assign the user to the new database. You now should have a new mysql database on the new host with a mysql user, eg. "name_drpl1" as database name and "name_username" as database user name. Import (upload) the database (which you exported from the old host earlier) with phpadmin to the new database. This might take a minute. If needed edit the file /sites/default/settings.php and edit at the section where you enter the database, location, username and password. You CAN enter the password either encrypted or not encrypted there. Chmod your "files" folder so it is writeable using your ftp client (filezilla), chmod to 777 Double check your .htaccess and /sites/default/settings.php and make changes in case they are needed. Change nameserves on your domain host and let them point to your new host's nameservers. Enter the new nameservers in your control panel where your domain names are hosted, overwriting the old ones. After some time (sometimes a day or two) your domain should point to the new host and drupal should be up and running on the new host. 9. How to move a Drupal Site from One host/server to another? Migrating Drupal On your OLD host: Backup your whole home directory from your ftp access using an ftp client like filezilla. Make a folder on your local harddisk and download the complete directory to that local folder. Backup your mysql database on your old host using phpadmin, select your mysql database, usually something like "name_drpl1". Select all fields, click "export" and save the database to your local harddisk. Leave default options enabled. You will receive a file similar to "name_drpl1.sql". This is your mysql database 10. How to install Drupal on a local WAMP server? Preparing your computer with a local installation of Drupal with WampServer is comparatively a trouble-free process to follow. Since WampServer will install an Apache-server, SQL, PHP and phpMySQL on your computer, with those tools you can install and run Drupal locally even without an internet connection.