#docker for development

Explore tagged Tumblr posts

Text

LOVE working in ops and having to troubleshoot dev's issues that they dont know how to describe and is also their fault

#i get it not everybody knows docker#but i went through a web dev bootcamp that didnt even cover docker and somehow i know enough about docker soooooooooo#i feel like if youre developing on certain platforms. you have a responsibility to know what youre setting up. before pointing fingers#and learn how to ask proper questions!!!! 2 hours of digging couldve been 10 mins if you point at the thing thats broken#dont assume you know whats wrong (something we did). just. describe the issue first#this is what doctors who have hypochondriac patients like myself probably feel like#at least thats not blaming the doctor as much as it is just panicking tho#hate it when devs are like. WELL OBVIOUSLY YOU CHANGED SOMETHING. no we didnt. you fucked up#somebody shut me up#WORK RANTS WITH BIANX#thats a tag now

3 notes

Text

How to Install and Use Docker on Ubuntu 22.04

Docker is an open-source containerization platform that allows developers to easily package and deploy applications in a portable and lightweight manner. In this tutorial, we will provide a step-by-step guide on how to install and use Docker on Ubuntu 22.04. Prerequisites Before you proceed, make sure you have Ubuntu 22.04 installed on your system and have a user account with sudo…

#automation#cloud computing#command line#containerization#devops#Docker#linux#software development#Ubuntu#Ubuntu 22.04#virtualization

11 notes

Video

youtube

(via Develop & Deploy Nodejs Application in Docker | Nodejs App in Docker Container Explained) Full Video Link https://youtu.be/Bwly_YJvHtQ Hello friends, new #video on #deploying #running #nodejs #application in #docker #container #tutorial for #api #developer #programmers with #examples is published on #codeonedigest #youtube channel. @java #java #aws #awscloud @awscloud @AWSCloudIndia #salesforce #Cloud #CloudComputing @YouTube #youtube #azure #msazure #docker #dockertutorial #nodejs #learndocker #whatisdocker #nodejsandexpressjstutorial #nodejstutorial #nodejsandexpressjsproject #nodejsprojects #nodejstutorialforbeginners #nodejsappdockerfile #dockerizenodejsexpressapp #nodejsappdocker #nodejsapplicationdockerfile #dockertutorialforbeginners #dockerimage #dockerimagecreationtutorial #dockerimagevscontainer #dockerimagenodejs #dockerimagenodeexpress #dockerimagenode_modules

#video#deploying#running#nodejs#application#docker#container#tutorial#api#developer#programmers#examples#codeonedigest

3 notes

Text

#TechKnowledge Have you heard of Containerization?

Swipe to discover what it is and how it can impact your digital security! 🚀

👉 Stay tuned for more simple and insightful tech tips by following us.

🌐 Learn more: https://simplelogic-it.com/

💻 Explore the latest in #technology on our Blog Page: https://simplelogic-it.com/blogs/

✨ Looking for your next career opportunity? Check out our #Careers page for exciting roles: https://simplelogic-it.com/careers/

#techterms#technologyterms#techcommunity#simplelogicit#makingitsimple#techinsight#techtalk#containerization#application#development#testing#deployment#devops#docker#kubernets#openshift#scalability#security#knowledgeIispower#makeitsimple#simplelogic#didyouknow

0 notes

Text

What Is Docker? and Other Details

¿Qué Es Docker? y Otros Detalles

👉 https://blog.nubecolectiva.com/que-es-docker-y-otros-detalles/

#software development#web development#100daysofcode#devs#developerlife#developers#web developers#worldcode#developers & startups#backenddevelopment#frontendev#frontenddevelopment#frontend developer#female fronted#docker

0 notes

Text

Top Tools for Web Development in 2025

Web development is an ever-evolving field, requiring developers to stay updated with the latest tools, frameworks, and software. These tools not only enhance productivity but also simplify complex development processes. Whether you’re building a small business website or a complex web application, having the right tools in your toolkit can make all the difference. Here’s a rundown of the top…

#Angular Framework#API Development Tools#Back-End Development Tools#Best Tools for Web Development 2024#Bootstrap for Responsive Design#Django Python Framework#Docker for Deployment#Front-End Development Tools#GitHub for Developers#Laravel PHP Framework#Modern Web Development Tools#Node.js Back-End Framework#Popular Web Development Software#React Development#Tailwind CSS#Testing and Debugging Tools#Vue.js for Web Development#Web Development Frameworks

0 notes

Text

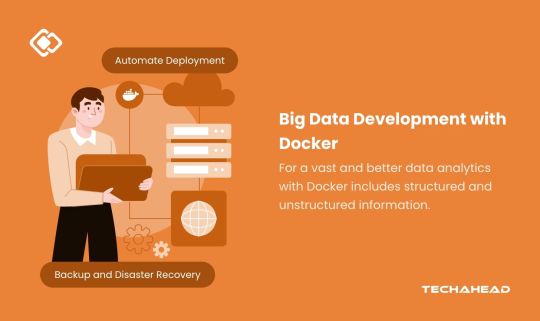

Cloud-Based Big Data Development Simplified with Docker

As businesses embrace digital transformation, many tasks have shifted from desktop software to cloud-based applications. Despite this trend, software development IDEs have largely remained desktop-bound. Efforts to create robust online IDEs have been made but lack parity with traditional tools. This limitation highlights a significant gap in the adoption of cloud-based development solutions.

The big data analytics market has experienced explosive growth, with its global size valued at $307.51 billion in 2023. Projections indicate a rise to $348.21 billion in 2024, eventually reaching $924.39 billion by 2032. This growth reflects a remarkable compound annual growth rate (CAGR) of 13.0%. The U.S. market is a key contributor, predicted to achieve $248.89 billion by 2032. Industries increasingly rely on advanced databases, fueling this robust expansion.

The big data and analytics services market continues its rapid ascent, growing from $137.23 billion in 2023 to $154.79 billion in 2024. This represents a CAGR of 12.8%, driven by the proliferation of data and the need for regulatory compliance. Organizations are leveraging big data to gain competitive advantages and ensure smarter decision-making.

Forecasts predict an even faster CAGR of 16.0%, with the market reaching $280.43 billion by 2028. This acceleration is attributed to advancements in AI-driven analytics, real-time data processing, and enhanced cloud-based platforms. Big data privacy and security also play pivotal roles, reflecting the heightened demand for compliance-focused solutions.
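The growth rates quoted above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is only an arithmetic check: the `cagr` helper name is hypothetical, and the year counts (8, 1, and 4) are assumptions inferred from the date ranges in the text.

```shell
# Hypothetical helper: print the compound annual growth rate as a percentage.
# Arguments: first value, last value, number of years between them.
cagr() {
  awk -v first="$1" -v last="$2" -v years="$3" \
    'BEGIN { printf "%.1f\n", ((last / first) ^ (1 / years) - 1) * 100 }'
}

cagr 348.21 924.39 8   # analytics market, 2024 to 2032 (assumed 8 years)
cagr 137.23 154.79 1   # services market, 2023 to 2024
cagr 154.79 280.43 4   # services market, 2024 to 2028 (assumed 4 years)
```

Under those assumptions the three calls print 13.0, 12.8, and 16.0, which matches the percentages given in the text.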

Emerging trends in big data highlight the integration of AI and machine learning, which enable predictive and prescriptive analytics. Cloud app development and edge analytics are becoming indispensable as businesses seek agile and scalable solutions. Enhanced data privacy protocols and stringent compliance measures are reshaping the way big data is stored, processed, and utilized.

Organizations leveraging big data are unlocking unparalleled opportunities for growth, innovation, and operational efficiency. With transformative technologies at their fingertips, businesses are better positioned to navigate the data-driven future.

Key Takeaways:

Big data encompasses vast, diverse datasets requiring advanced tools for storage, processing, and analysis.

Docker is a transformative technology that simplifies big data workflows through portability, scalability, and efficiency.

The integration of AI and machine learning in big data enhances predictive and prescriptive analytics for actionable insights.

Cloud environments provide unparalleled flexibility, scalability, and resource allocation, making them ideal for big data development.

Leveraging Docker and the cloud together ensures businesses can manage and analyze massive datasets efficiently in a dynamic environment.

What is Big Data?

Big Data encompasses vast, diverse datasets that grow exponentially, including structured, unstructured, and semi-structured information. These datasets, due to their sheer volume, velocity, and variety, surpass the capabilities of traditional data management tools. They require advanced systems to store, process, and analyze them efficiently.

The rapid growth of big data is fueled by innovations like connectivity, Internet of Things (IoT), mobility, and artificial intelligence technologies. These advancements have significantly increased data availability and generation, enabling businesses to harness unprecedented amounts of information. However, managing such massive datasets demands specialized tools that process data at high speeds to unlock actionable insights.

Big data plays a pivotal role in advanced analytics, including predictive modeling and machine learning. Businesses leverage these technologies to address complex challenges, uncover trends, and make data-driven decisions. The strategic use of big data allows companies to stay competitive, anticipate market demands, and enhance operational efficiency.

With digital transformation, the importance of big data continues to rise. Organizations now adopt cutting-edge solutions to collect, analyze, and visualize data effectively. These tools empower businesses to extract meaningful patterns and drive innovation, transforming raw data into strategic assets.

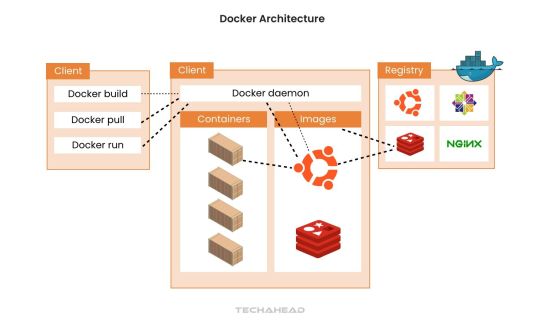

How Does Docker Work With AWS?

Docker has revolutionized how applications are developed, deployed, and managed in the dynamic landscape of big data. This guide explores how Docker simplifies big data workflows, providing scalability, flexibility, and efficiency.

Docker uses multiple different environments while building online services:

Amazon Web Services for the servers

Microsoft Azure for the code

Google Compute Engine

GitHub for SDK

Dropbox to save files

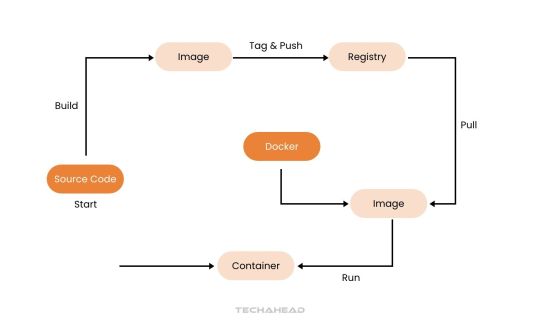

Step 1: Build Your Big Data Application With a Dockerfile

Begin by developing your big data application using your preferred language and tools. A Dockerfile is essential for packaging your application.

It’s a blueprint that outlines the base image, dependencies, and commands to run your application. For big data applications, the Dockerfile might include libraries for distributed computing like Hadoop and Spark. This ensures seamless functionality across various environments.
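As a rough illustration of such a blueprint, the shell snippet below writes a minimal Dockerfile for a PySpark batch job. The base image tag, the Debian package name, and the `job.py` script are assumptions chosen for the example, not part of the original walkthrough, and the file has not been tuned for production use.

```shell
# Write a hypothetical Dockerfile into the current directory.
# Assumptions: python:3.11-slim as the base image, a Java runtime from the
# Debian package default-jre-headless (Spark needs a JVM), and a job.py
# script that sits next to the Dockerfile.
cat > Dockerfile <<'EOF'
FROM python:3.11-slim

# Spark runs on the JVM, so install a headless Java runtime.
RUN apt-get update \
    && apt-get install -y --no-install-recommends default-jre-headless \
    && rm -rf /var/lib/apt/lists/*

# Install PySpark for distributed processing.
RUN pip install --no-cache-dir pyspark

WORKDIR /app
COPY job.py .

CMD ["python", "job.py"]
EOF
```

Cleaning the apt cache and passing `--no-cache-dir` to pip keeps the resulting image smaller. The snippet only writes the file; building it still requires Docker and a real `job.py`.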

Step 2: Build a Big Data Docker Image

The Dockerfile helps create a Docker image, which is a self-sufficient unit containing your application, environment, and dependencies.

For big data, the image can bundle analytics tools such as Jupyter Notebook, PySpark, or Presto, so every environment runs the same versions. Use the following command to create the image:

$ docker build -t bigdata-app:latest .

This command builds an image, tags it as ‘bigdata-app:latest’, and prepares it for deployment.

Step 3: Run Containers for Big Data Processing

A Docker container is an isolated instance of your image, ideal for running big data tasks without interference.

$ docker container run -d -p 8080:80 bigdata-app:latest

This command runs the container in detached mode and maps port 8080 on the host to port 80 in the container.

For big data, containers allow parallel processing, enabling distributed systems to run seamlessly across multiple nodes.

Step 4: Manage Big Data Containers

Docker simplifies the management of containers for complex big data workflows.

Use ‘docker ps’ to view running containers, essential for tracking active data processes.

Use ‘docker ps -a’ to check all containers, including completed tasks.

Use ‘docker stop <container>’ and ‘docker start <container>’ to manage container lifecycles.

Use ‘docker rm <container>’ to remove unused containers and free resources.

Run ‘docker container --help’ to explore advanced options for managing big data processing pipelines.

Step 5: Orchestrate Big Data Workflows with Docker Compose

For complex big data architecture, Docker Compose defines and runs multi-container setups.

Compose files, written in YAML, specify services such as Hadoop clusters, Spark workers, or Kafka brokers. This simplifies deployment and ensures services interact seamlessly.

```yaml
version: '3'
services:
  hadoop-master:
    image: hadoop-master:latest
    ports:
      - "50070:50070"
  spark-worker:
    image: spark-worker:latest
    depends_on:
      - hadoop-master
```

One command can spin up your entire big data ecosystem:

$ docker-compose up

Step 6: Publish and Share Big Data Docker Images

Publishing Docker images ensures your big data solutions are accessible across teams or environments. Tag the image with your registry’s name, then push it:

$ docker tag bigdata-app:latest myregistry/bigdata-app:latest

$ docker push myregistry/bigdata-app:latest

This step enables distributed teams to collaborate effectively and deploy applications in diverse environments like Kubernetes clusters or cloud platforms.

Step 7: Continuous Iteration for Big Data Efficiency

Big data applications require constant updates to incorporate new features or optimize workflows.

Update your Dockerfile to include new dependencies or scripts for analytics, then rebuild the image:

$ docker build -t bigdata-app:v2 .

This iterative approach ensures that your big data solutions evolve while maintaining efficiency and reliability.

The Five ‘V’s of Big Data

Not all large datasets qualify as big data. To be classified as such, the data must exhibit five characteristics. Let’s look deeper into these pillars.

Volume: The Scale of Data

Volume stands as the hallmark of big data. Managing vast amounts of data—ranging from terabytes to petabytes—requires advanced tools and techniques. Traditional systems fall short, while AI-powered analytics handle this scale with ease. Secure storage and efficient organization form the foundation for utilizing this data effectively, enabling large companies to unlock insights from their massive reserves.

Velocity: The Speed of Data Flow

In traditional systems, data entry was manual and time-intensive, delaying insights. Big data redefines this by enabling real-time processing as data is generated, often within milliseconds. This rapid flow empowers businesses to act swiftly—capturing opportunities, addressing customer needs, detecting fraud, and ensuring agility in fast-paced environments.

Veracity: Ensuring Data Quality

Data’s worth lies in its accuracy, relevance, and timeliness. While structured data errors like typos are manageable, unstructured data introduces challenges like bias, misinformation, and unclear origins. Big data technologies address these issues, ensuring high-quality datasets that fuel precise and meaningful insights.

Value: Transforming Data into Insights

Ultimately, big data’s true strength lies in its ability to generate actionable insights. The analytics derived must go beyond intrigue to deliver measurable outcomes, such as enhanced competitiveness, improved customer experiences, and operational efficiency. The right big data strategies translate complex datasets into tangible business value, ensuring a stronger bottom line and resilience.

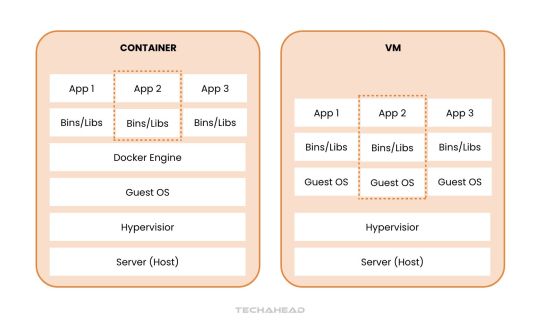

Understanding Docker Containers: Essential for Big Data Use Cases

Docker containers are revolutionizing how applications are developed, deployed, and managed, particularly in big data environments. Here’s an exploration of their fundamentals and why they are transformative.

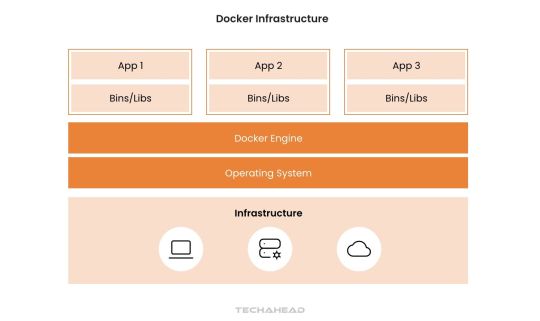

What Are Docker Containers?

Docker containers act as an abstraction layer, bundling everything an application needs into a single portable package. This bundle includes libraries, resources, and code, enabling seamless deployment on any system without requiring additional configurations. For big data applications, this eliminates compatibility issues, accelerating development and deployment.

Efficiency in Development and Migration

Docker drastically reduces development time and costs, especially during architectural evolution or cloud migration. It simplifies transitions by packaging all necessary components, ensuring smooth operation in new environments. For big data workflows, Docker’s efficiency helps scale analytics, adapt to infrastructure changes, and support evolving business needs.

Why the Hype Around Docker?

Docker’s approach to OS-level virtualization and its Platform-as-a-Service (PaaS) nature make it indispensable. It encapsulates applications into lightweight, executable components that are easy to manage. For big data, this enables rapid scaling, streamlined workflows, and reduced resource usage.

Cross-Platform Compatibility

As an open-source solution, Docker runs on major operating systems like Linux, Windows, and macOS. This cross-platform capability ensures big data applications remain accessible and functional across diverse computing environments. Organizations can process and analyze data without being limited by their operating system.

Docker in Big Data Architecture

Docker’s architecture supports modular, scalable, and efficient big data solutions. By isolating applications within containers, Docker ensures better resource utilization and consistent performance, even under heavy workloads. Its ability to integrate seamlessly into big data pipelines makes it a critical tool for modern analytics.

Docker containers are transforming big data operations by simplifying deployment, enhancing scalability, and ensuring compatibility across platforms. This powerful technology allows businesses to unlock the full potential of their data with unmatched efficiency and adaptability.

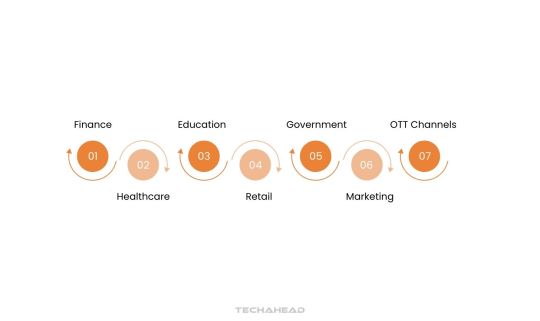

Applications of Big Data Across Industries

Big data is transforming industries by enabling businesses to harness data-driven insights for innovation, efficiency, and improved decision-making. Here’s how different sectors are leveraging big data to revolutionize their operations.

Finance

Big data is a cornerstone of the finance and insurance sectors, enhancing fraud detection and enabling more accurate risk assessments. Predictive analytics help refine credit rankings and brokerage services, ensuring better financial decision-making. Blockchain technology also benefits from big data by streamlining secure transactions and tracking digital assets. Financial institutions use big data to fortify cybersecurity measures and deliver personalized financial recommendations to customers, improving user trust and satisfaction.

Healthcare

Big data is reshaping healthcare app development by equipping hospitals, researchers, and pharmaceutical companies with critical insights. Patient and population data allow for the optimization of treatments, accelerating research on diseases like cancer and Alzheimer’s. Advanced analytics support the development of innovative drugs and help identify trends in population health. By leveraging big data, healthcare providers can predict disease outbreaks and improve preventive care strategies.

Education

In education app development, big data empowers institutions to analyze student behavior and develop tailored learning experiences. This data enables educators to design personalized lesson plans, predict student performance, and enhance engagement. Schools also use big data to monitor resources, optimize budgets, and reduce operational costs, fostering a more efficient educational environment.

Retail

Retailers rely on big data to analyze customer purchase histories and transaction patterns. This data predicts future buying behaviors, allowing for personalized marketing strategies and improved customer experiences. Retail app development uses big data to optimize inventory, pricing, and promotions, staying competitive in a dynamic market landscape.

Government

Governments leverage big data to analyze public financial, health, and demographic data for better policymaking. Insights derived from big data help create responsive legislation, optimize financial operations, and enhance crisis management plans. By understanding citizen needs through data, governments can improve public services and strengthen community engagement.

Marketing

Big data transforms marketing by offering an in-depth understanding of consumer behavior and preferences. Businesses use this data to identify emerging market trends and refine buyer personas. Marketers optimize campaigns and strategies based on big data insights, ensuring more targeted outreach and higher conversion rates.

OTT Channels

Media platforms like Netflix and Hulu exemplify big data’s influence in crafting personalized user experiences. These companies analyze viewing, reading, and listening habits to recommend content that aligns with individual preferences. Big data even informs choices about graphics, titles, and colors, tailoring the content presentation to boost engagement and customer satisfaction.

Big data is not just a technological trend—it’s a transformative force across industries. Organizations that effectively utilize big data gain a competitive edge, offering smarter solutions and creating lasting value for their customers.

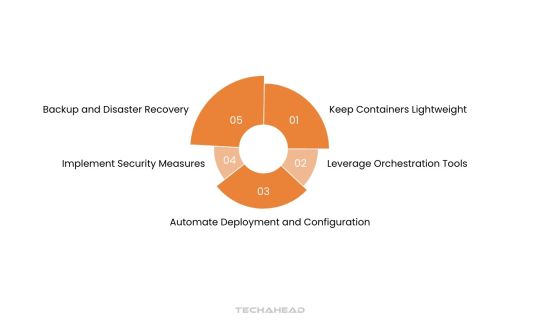

Best Practices for Using Docker in Big Data Development

To maximize the potential of Docker for big data development, implementing key strategies can optimize performance, security, and scalability. Below are essential practices for effectively using Docker in big data environments.

Keep Containers Lightweight

Design Docker containers with minimalistic and efficient images to optimize resource consumption. Lightweight containers reduce processing overhead, enabling faster execution of big data workloads. By stripping unnecessary dependencies, you can improve container performance and ensure smoother operations across diverse environments.

Leverage Orchestration Tools

Utilize orchestration platforms like Docker Swarm or Kubernetes to streamline the management of big data workloads. These tools automate deployment, scaling, and load balancing, ensuring that big data applications remain responsive during high-demand periods. Orchestration also simplifies monitoring and enhances fault tolerance.

Automate Deployment and Configuration

Automate the provisioning and setup of Docker containers using tools like Docker Compose or infrastructure-as-code frameworks. Automation reduces manual errors and accelerates deployment, ensuring consistent configurations across environments. This approach enhances the efficiency of big data processing pipelines, especially in dynamic, large-scale systems.

Implement Security Measures

Adopt robust security protocols to protect Docker containers and the big data they process. Use trusted base images, keep Docker components updated, and enforce strict access controls to minimize vulnerabilities. Restrict container privileges to the least necessary level, ensuring a secure environment for sensitive data processing tasks.
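One way to restrict container privileges is through flags on the run command. The sketch below defines a hypothetical `run_hardened` helper around `docker container run`; the UID/GID `1000:1000` and the `/tmp` tmpfs mount are assumptions for illustration, and whether a given big data image still works under these restrictions has to be tested case by case.

```shell
# Hypothetical helper: start an image with reduced privileges.
# Argument: the image to run, for example bigdata-app:latest.
run_hardened() {
  image="$1"
  # --user runs the process as an unprivileged UID:GID (assumed 1000:1000),
  # --read-only makes the root filesystem read-only,
  # --tmpfs gives the process a writable scratch directory,
  # --cap-drop ALL removes every Linux capability,
  # --security-opt blocks privilege escalation through setuid binaries.
  docker container run -d \
    --user 1000:1000 \
    --read-only \
    --tmpfs /tmp \
    --cap-drop ALL \
    --security-opt no-new-privileges:true \
    "$image"
}

# Usage (requires a running Docker daemon):
#   run_hardened bigdata-app:latest
```

Defining the helper does not start anything; the container is only launched when `run_hardened` is called with an image name.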

Backup and Disaster Recovery

Establish comprehensive backup and disaster recovery plans for data managed within Docker environments. Regularly back up critical big data outputs to safeguard against unexpected failures or data loss. A reliable disaster recovery strategy ensures continuity in big data operations, preserving valuable insights even during unforeseen disruptions.

By adhering to these practices, organizations can fully leverage Docker’s capabilities in big data processing. These strategies enhance operational efficiency, ensure data security, and enable scalability, empowering businesses to drive data-driven innovation with confidence.

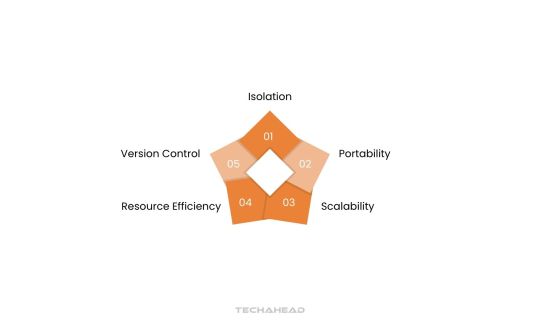

Advantages of Using Docker for Big Data Processing

Docker offers a range of benefits that enhance the efficiency and scalability of big data processing environments. By optimizing resource utilization and enabling seamless application deployment, Docker ensures businesses can handle large-scale data operations effectively. Here’s a closer look:

Isolation

Docker provides robust application-level isolation, ensuring each big data development workload operates independently. This isolation prevents conflicts between applications, improving reliability and enabling seamless parallel execution of multiple data-intensive tasks. Businesses can confidently run diverse big data applications without compatibility concerns or interference.

Portability

Docker containers deliver unmatched portability, allowing big data workloads to be deployed across various environments. Whether running on local machines, cloud platforms, or on-premises servers, Docker ensures consistent performance. This portability simplifies migrating big data development workflows between infrastructures, minimizing downtime and operational challenges.

Scalability

With Docker, scaling big data applications becomes effortless through horizontal scaling capabilities. Businesses can quickly deploy multiple containers to distribute workloads, enhancing processing power and efficiency. This scalability ensures organizations can manage fluctuating data volumes, maintaining optimal performance during peak demands.

Resource Efficiency

Docker’s lightweight design optimizes resource utilization, reducing hardware strain while processing large datasets. This efficiency ensures big data workloads can run smoothly without requiring excessive infrastructure investments. Organizations can achieve high-performance data analysis while controlling operational costs.

Version Control

Docker’s versioning features simplify managing containerized big data applications, ensuring reproducibility and traceability. Teams can easily roll back to previous versions if needed, enhancing system reliability and reducing downtime. This capability supports consistent and accurate data processing workflows.

By leveraging Docker, businesses can streamline big data processing operations. The above-mentioned advantages empower businesses to process large datasets effectively, extract actionable insights, and stay competitive in a data-driven world.

Conclusion

This article explores how modern cloud technologies can establish an efficient and scalable development environment. While cloud-based machines may not fully replace traditional computers or laptops, they excel for development tasks requiring access to integrated development environments (IDEs). With today’s high-speed internet, cloud-based development offers seamless and responsive performance for most projects.

Cloud environments provide unparalleled flexibility, making server access and management significantly faster than local setups. Developers can effortlessly scale memory, deploy additional environments, or generate system images with minimal effort. This agility is especially crucial when handling big data projects, which demand vast resources and scalable infrastructures.

The cloud effectively places an entire data center at your fingertips, empowering developers to manage complex tasks efficiently. For big data workflows, this translates into the ability to process and store massive datasets without compromising speed or functionality. Businesses benefit from this scalability, as it aligns with the increasing demand for high-performance analytics and storage.

By leveraging the cloud, developers gain access to state-of-the-art infrastructures that optimize workflow efficiency. The ability to allocate resources, process data, and scale operations dynamically is essential for thriving in today’s data-driven economy.

Source URL: https://www.techaheadcorp.com/blog/developing-for-the-cloud-in-the-cloud-big-data-development-with-docker/

0 notes

Text

Select the best Laravel development tools from PhpStorm, Debugbar, Forge, Dusk, Vapor, Tinker, and Socialite, to build dynamic and scalable web apps.

#Laravel#Laravel Framework#Laravel Development#PHP#Laravel Tool#Php Developers#Hire Laravel Developer#Docker#Laradock

0 notes

Text

LocalStack Secures $25M Series A Funding to Give Developers Control Over Cloud Development

New Post has been published on https://thedigitalinsider.com/localstack-secures-25m-series-a-funding-to-give-developers-control-over-cloud-development/

LocalStack, a leader in local cloud development environments, has announced the successful completion of a $25 million Series A funding round led by Notable Capital, with support from CRV and Heavybit. This investment is set to drive forward LocalStack’s mission to revolutionize cloud development by putting control back into the hands of developers, eliminating the costly and time-consuming reliance on cloud-based testing.

LocalStack’s platform enables developers to run a full AWS environment directly on their laptops. This unique approach accelerates development cycles, cuts down on cloud costs, and is quickly becoming essential in the developer community. LocalStack currently powers over 8 million weekly sessions and has amassed more than 280 million Docker pulls. With a growing customer base of over 900 companies, including industry leaders like SiriusXM and Chime, the platform is proving itself as the go-to choice for local cloud development.

“The centralized nature of cloud computing has created unprecedented complexity and costs for developers, who spend countless hours waiting for cloud-based tests to complete,” said Gerta Sheganaku, co-founder and Co-CEO of LocalStack. “Our platform fundamentally transforms the developer experience by enabling teams to test locally, cutting deployment times from 28 minutes to 24 seconds while significantly reducing AWS spend. We’re putting control back in developers’ hands, giving them the flexibility and speed they need to innovate,” adds Waldemar Hummer, co-founder and Co-CEO of LocalStack.

The funding arrives as organizations increasingly confront rising cloud costs, with annual expenditures already surpassing $79 billion, and grapple with the inefficiencies of cloud-based development. By providing a localized AWS testing environment, LocalStack eliminates the need to spin up cloud resources, replacing them with an isolated sandbox that enables rapid iteration and seamless collaboration. Supporting over 100 AWS services with near-exact emulation, LocalStack gives developers a reliable and cost-effective alternative to cloud environments.

“LocalStack stands out for its rare combination of bottom-up developer love and clear enterprise value,” said Glenn Solomon, Managing Partner at Notable Capital. “With over 56,000 GitHub stars, 25,000 Slack users, and 500+ contributors, LocalStack has built a vibrant community alongside its rapidly growing enterprise customer base. While they’ve established themselves as the de facto standard for AWS local development, their recent preview release for Snowflake showcases their broader vision to revolutionize cloud development across all major platforms. We’re thrilled to partner with Gerta, Waldemar, and the entire LocalStack team as they transform how developers build for the modern multi-cloud world.”

LocalStack’s growth trajectory reflects its dedication to transforming cloud development processes. The company, founded in 2017 as an open-source project, has expanded into a comprehensive platform, boasting over 52,000 GitHub stars and an extensive community of 25,000 Slack users. Rooted in open source, LocalStack’s mission is to give developers complete control over their environments, allowing them to run, test, and debug cloud services locally. This control reduces the complexity, cost, and environmental impact of cloud development, empowering developers to focus on innovation and efficient testing.

With the new funding, LocalStack aims to accelerate its U.S. market presence and invest further in developing new features such as chaos engineering and application resiliency testing. These innovations will continue to enhance developer productivity, freeing up valuable time previously lost to cloud setup and permissions management. By emulating cloud services locally, LocalStack also opens doors to advanced workflows, including seamless collaboration through Cloud Pods, a feature that saves and restores infrastructure states for enhanced team productivity.

LocalStack’s platform has fundamentally altered the development landscape by decoupling AWS development from cloud dependency. By enabling developers to run complete stacks locally, the platform removes the need for sandbox accounts, automates IAM policies, and allows for rapid, risk-free testing. This is especially valuable for engineering teams like that of Xiatech, where Rick Timmis, Head of Engineering, notes, “Our engineering team utilizes LocalStack to provide a complete, localized AWS environment where developers can build, test, profile, and debug infrastructure and code ahead of deployment to the cloud.”

As LocalStack continues to enhance cloud development by focusing on developer experience and efficiency, the company’s commitment to putting developers first and promoting a collaborative, innovative workplace remains central. LocalStack’s technology is not just an emulator; it’s a comprehensive toolset designed to streamline cloud development, enabling faster deployments, reduced AWS costs, and a smoother workflow for global dev teams.

This latest funding round positions LocalStack to further expand its offerings, solidifying its role as a critical asset for teams aiming to innovate without the friction of traditional cloud development constraints. As they look toward a future where cloud development is streamlined and simplified, LocalStack is setting a new standard in the developer-first approach to cloud services.

#000#Accounts#approach#AWS#billion#CEO#chaos#Cloud#cloud computing#cloud services#code#Collaboration#collaborative#Community#Companies#complexity#comprehensive#computing#cutting#deployment#Developer#developers#development#Docker#efficiency#emulation#engineering#enterprise#Environment#Environmental

0 notes

Text

AWS Training in Pune, Bangalore, and Kerala at Radical Technologies

Start your cloud career with Radical Technologies’ expert-led AWS Training! Our AWS Certification Course provides:

Comprehensive training on scalable deployments and security management
Real-time projects, including VMware and Azure migrations to AWS
Flexible weekday and weekend batches, online and offline
Corporate training options and hands-on sessions for practical skills
Locations: Pune, Bangalore, Kerala, and online
Live demos, interview prep, and complete placement support

Join Pune’s best AWS course and fast-track your cloud career with industry-ready skills!

#linux#microsoft azure#terraform#coding#cybersecurity#information technology#innovation#classroom#software#software development#docker#kubernetes

0 notes

Text

Harnessing Containerization in Web Development: A Path to Scalability

Explore the transformative impact of containerization in web development. This article delves into the benefits of containerization, microservices architecture, and how Docker for web apps facilitates scalable and efficient applications in today’s cloud-native environment.

#Containerization in Web Development#Microservices architecture#Benefits of containerization#Docker for web apps#Scalable web applications#DevOps practices#Cloud-native development

0 notes

Text

🔴🚀 Coming soon! Multi-Tenant Course with Django 5 and Docker 🏢✨

youtube

I’m excited to announce that I’m preparing a new course on multi-tenancy with Django 5. In this course, you’ll learn to build robust, scalable multi-tenant applications using Django 5 and the latest versions of all the tools.

🔍 What will you learn?

❏ Setting up multi-tenant environments, both isolated and shared (branches)
❏ Using Docker for containerization and deployment.
❏ Managing databases and schemas.
❏ Implementing security and authentication.
❏ Optimization and scalability.
❏ And much more…

This course is designed for developers of all levels who want to take their Django skills to the next level. Don’t miss it!

🔔 Subscribe and turn on notifications so you don’t miss any updates.

See you in the course! 🚀

👉 Click here to access the discounted courses or enroll 👈 https://bit.ly/cursos-mejor-precio-daniel-bojorge

#django#python#web#tenant#multi tenant#multitenant#saas#saas technology#teaching#code#developers & startups#education#programming#postgresql#software#Docker#Youtube

0 notes

Text

Benefits of Docker with Lucee for Development

#Benefits of Docker with Lucee for Development#Docker with Lucee for Development#Docker with Lucee Development#Docker with Lucee#Benefits of Docker with Lucee Development#Benefits of Docker with Lucee

0 notes

Text

What is Docker and how it works

#docker#container#data transfer#application#website#web development#technology#software#information technology

0 notes

Text

this just in on Things That People Say on TikTok That Make Me Mad: tools made for developers should be accessible to non developers I guess???

#some video said that the way in which the docker docs / website is written is “tech bro gatekeeping” cause its written for developers#maybe you shouldn't be messing with docker if you don't know what a command line is?#like sure theres probably some argument for improving the accessibility and quality of documentation#but as far as I can tell docker is pretty fucking good on that front

1 note

Text

Django and Docker: Containerizing Applications

Containerizing Django Applications with Docker: A Comprehensive Guide

Introduction Docker is a powerful tool that allows developers to create, deploy, and run applications in containers. Containers are lightweight, portable, and consistent environments that include everything needed to run an application, from the operating system to the code, runtime, libraries, and dependencies. This article will guide you through the process of containerizing a Django…

#containerizing Django#DevOps practices#Django Docker integration#Docker Compose#Docker Django setup#Python web development

0 notes