#digital racism

Text

Goodreads

The master algorithm seems to give its name an inglorious connotation.

WEIRD comes to mind: Western, Educated, Industrialized, Rich, and Democratic. This problem, common to many of the humanities, has also made its way into the coding of algorithms. Historically, much foundational research drew on too small and homogeneous a pool of people, and most of the studies were conducted by white, male, wealthy researchers, so their results were not representative of the whole population.

This happened in two ways. On the one hand, prejudices flowed directly into pseudo-research back when they were not yet considered politically incorrect. As emancipation and equality spread, only indirect, unvoiced personal opinions remained. Still, research leaders, professors, and chief executives incorporated their conscious and unconscious worldviews into the design of questions, research subjects, experimental methods, and so on.

Second, this research was presented to an equally biased audience and relied on equally unrepresentative test subjects. For a long time, much research rested on these two foundations, and indirectly it still does today, because new research builds on older results. It is like trying to remove a bug from source code that has evolved over the years with the bug as a central element of the whole system. The bug itself is not the only severe problem; so are the thousands of ramifications it has left behind in every later update and version. Like cancer, it has spread everywhere. This makes removing all these mistakes very expensive, if not impossible. A revision would be laborious and would meet resistance from many academics who fear for their reputations or even their life's work. They would see their fields and specialties as under attack; nobody likes criticism, and this would be a hard one to swallow.

What does this have to do with algorithms? Software is programmed by the same kinds of people as in the example above. The effect is certainly not as large, but unconscious, inadvertent opinions may flow into the code, and the way search engines generate results is even more problematic. Especially in the last few years, deep learning, AI, big data, and GANs (generative adversarial networks) have been integrated much more deeply into development, so old prejudices can begin evolving inside the machines themselves without further human influence.

This means that, in principle, no one can say with certainty anymore how these AIs reach their conclusions. The complexity is so high that even groups of specialists can only make tentative attempts at reverse engineering them. Exactly how an AI became racist, sexist, or homophobic can no longer be determined, and worse, it cannot be quickly repaired after the fact, because its responses to a given search input cannot be chosen in advance.

There is a sad explanation: people are often driven by base instincts and false, destructive mentalities. When millions of people have spent decades directing their internet activity toward aggressive hostility, the algorithm learns to recognize their desires. There is a lot of money to be made, and the AI is meant to give users what they want. Market forces determine the actions of the Internet giants, and they give the public what it craves. Ethics and individualized advertising can hardly pursue the same goals. This is even more true of news outlets, media, and publishers, which suffer from the same problems as the algorithms, with the difference that they play irrelevant rhetorical games to distract from the dysfunctions inherent in the system.

The same problem exists with the automatic recommendation features of online stores, which can inadvertently promote dangerous or aggressive behavior. It also exists with video platforms that automatically recommend new and similar, often more extreme, videos, allowing people to radicalize faster. The AI does its job, no matter what is searched for.

On a small scale, the dilemma has already appeared with language assistants and AI systems that degenerated in free interaction with humans. One example is Microsoft's self-learning chatbot Tay, which trolls turned into a hate-filled misanthrope within a day. It is not hard to imagine how large the problem becomes as new technologies spread.

One way to repair these malfunctions is time: people could gradually reset the AIs by making neutral, ordinary searches. That is probably too optimistic, so it is more likely that we will have to find a technical solution before people become more rational. Either way, both the search queries and the results would have to change significantly before any positive development could begin.

The academic search results should not be underestimated either: they reflect the often false, unscientific foundations on which many established sciences stand. Even if users became reasonable, there would still be millions of nonsensical results and publications. These false, antiquated edifices of thought, on which many foundations of modern society rest, must be the primary target. The results are the symptoms; the dangerous, mistaken thinking is the disease. The long-unresolved history behind it, with all its injustices, has to be reappraised, because it is the reason for widespread poverty and ignorance. That ignorance is rooted in flawed social models, and through people's search requests it leads innocent AIs astray.

Search input and search results reinforce each other. The mirror they hold up to society merely reveals hidden prejudices. Those prejudices, however, feel safer in their secret corners because they supposedly go unrecognized, and populists subtly stoke them for their own benefit. When wrong thinking has buried itself so deeply in a society, it becomes part of every product of that culture.

So you read So You Want to Talk About Race and now you have more questions. Specifically, you’re wondering how privilege affects your life online. Surely the Internet is the libertarian cyber-utopia we were all promised, right? It’s totally free of bias and discrimina—sorry, I can’t even write that with a straight face.

Of course the Internet is a flaming cesspool of racism and misogyny. We can’t have good things.

What Safiya Umoja Noble sets out to do in Algorithms of Oppression: How Search Engines Reinforce Racism is explore exactly what it is that Google and related companies are doing that does or does not reinforce discriminatory attitudes and perspectives in our society. Thanks to NetGalley and New York UP for the eARC (although the formatting was a bit messed up, argh). Noble eloquently lays out the argument for why technology, and in this case, the algorithms that determine what websites show up in your search results, is not a neutral force.

This is a topic that has interested me for quite some time. I took a Philosophy of the Internet course in university even—because I liked philosophy and I liked the Internet, so it seemed like a no-brainer. We are encouraged, especially those of us with white and/or male privilege, to view the Internet as this neutral, free, public space. But it’s not, really. It’s carved up by corporations. Think about how often you’re accessing the Internet mediated through a company: you read your email courtesy of Microsoft or Google or maybe Apple, and ditto for your device; your connection is controlled by an ISP, which is not a neutral player; the website you visit is perhaps owned by a corporation or serves ads from corporations trying to make money … this is a dirty, mucky pond we are playing around in, folks. The least we can do as a start is to recognize this.

Noble points out that the truly insidious perspective, however, is how we’ve normalized Google as this public search tool. It is a generic search term—just google it—and, yes, Google is my default search engine. I use it in Firefox, in Chrome, on my Android phone … I am really hooked into Google’s ecosystem—or should I say, it’s hooked into me. But Google’s search algorithms did not spring forth fully coded from the head of Zeus. They were designed (mostly by men), moderated (again by men), tweaked, on occasion, for the interests of the companies and shareholders who pay Google’s way. They can have biases. And that is the problem.

Noble, as a Black feminist and scholar, writes with a particular interest in how this affects Black women and girls. Her paradigm case is the search results she turned up, in 2010 and 2011, for “black girls”—mostly pornography or other sex-related hits, on the first page, for what should have been an innocuous term. Noble’s point is that the algorithms were influenced by society’s perceptions of black girls, but that in turn, our perceptions will be influenced by the results we see in search engines. It is a vicious cycle of racism, and it is no one person’s fault—there is no Chief Racist Officer at Google, cackling with glee as they rig the search results (James Damore got fired, remember). It’s a systemic problem and must therefore be addressed systemically, first by acknowledging it (see above) and now by acting on it.

It’s this last part that really makes Algorithms of Oppression a good read. I found parts of this book dry and somewhat repetitive. For example, Noble keeps returning to the “black girls” search example. Returning to it is not a problem, mind you, but she keeps re-explaining it, as if we hadn’t already read the first chapter of the book. Aside from these stylistic quibbles, though, I love the message that she lays out here. She is not just trying to educate us about the perils of algorithms of oppression: she is advocating that we actively design algorithms with restorative and social justice frameworks in mind.

Let me say it louder for those in the back: there is no such thing as a neutral algorithm. If you read this book and walk away from it persuaded that we need to do better at designing so-called “objective” search algorithms, then you’ve read it wrong. Algorithms are products of human engineering, as much as science or medicine, and therefore they will always be biased. Hence, the question is not if the algorithm will be biased, but how can we bias it for the better? How can we put pressure on companies like Google to take responsibility for what their algorithms produce and ensure that they reflect the society we want, not the society we currently have? That’s what I took away from this book.

I’m having trouble critiquing or discussing more specific, salient parts of this book, simply because a lot of what Noble says is stuff I’ve already read, in slightly different ways, elsewhere—just because I’ve been reading and learning about this for a while. For a newcomer to this topic, I think this book is going to be an eye-opening boon. In particular, Noble just writes about it so well, and so clearly, and she has grounded her work in research and work of other feminists (and in particular, Black feminists). This book is so clearly a labour of academic love and research, built upon the work of other Black women, and that is something worth pointing out and celebrating. We shouldn’t point to books by Black women as if they are these rare unicorns, because Black women have always been here, writing science fiction and non-fiction, science and culture and prose and poetry, and it’s worthwhile considering why we aren’t constantly aware of this fact.

[…] transformed, rebranded for the 21st century. They are no longer monsters under the bed or slave-owners on the plantation or schoolteachers; they are the assumptions we build into the algorithms and services and products that power every part of our digital lives. Just as we have for centuries before this, we continue to encode racism into the very structures of our society. Online is no different from offline in this respect. Noble demonstrates this emphatically, beyond the shadow of a doubt, and I encourage you to check out her work to understand how deep this goes and what we need to do to change it.

0 notes

Text

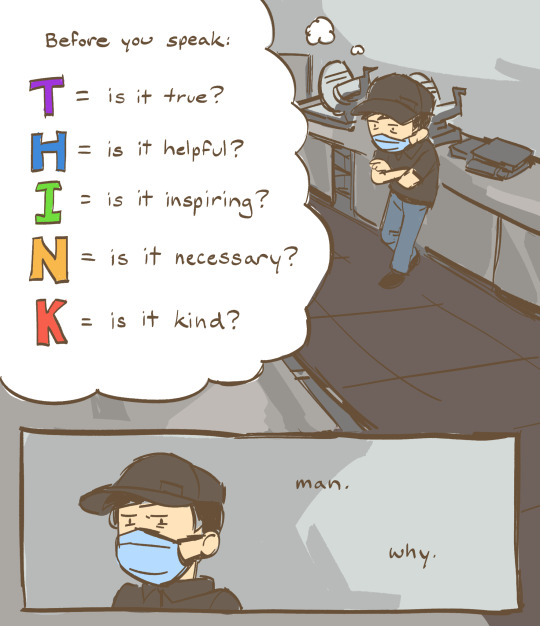

a moment I remember from working at the deli

2K notes

Text

Do you wanna make people like this unseasonably pressed about fictional characters having a bit of melanin this holiday season?🫵🫵🫵

Well look no further my dear friends bcus i'm the artist for the job😎👍

I, Phoenix, am an expert at being Black and as such, highly qualified to turn your fave characters Black with ease!

So! For the low low price of $10 you can get a fully coloured bust drawing of any character from any fandom of your choice!

[Interested? Hop on over to my kofi and place an order if you want!]

#art commission#commission sale#kofi commission#art comms open#any fandom#fanart#digital drawing#digital art#fandom racism#fairy tail#will i get money? who knows!#even if i dont its a fantastic way to commit to the bit!

334 notes

Text

Behold, a Valkyrie Cain AND a Necronaut Suit design! In one go! :D

#those muscles could kill#and they have#woo#lets keep the racism to zero? this time? perhaps??#skulduggery pleasant#valkyrie cain#necronaut suit#necromancy#magic#skeleton#skulduggery pleasant art#art#digital art#nic stylus

86 notes

Text

Ik he was probably the whitest man to ever live but let me pretend EAsian Vox is real for a sec

(Btw he’s supposed to be Korean American here but I was too lazy to draw the American flag LMAO)

#hes super whitewashed though LKJSDHFGK hashtag 50s racism/assimilation#not 50s accurate but neither is the show#hazbin hotel#vox#hazbin hotel vox#vox hazbin hotel#art#fanart#digital art#my art

280 notes

Text

messy but idc because its orter so hes still fine

#orter madl#step aside innocent zero hes the real villian of mashle - magical racism 🗣🗣#mashle: magic and muscles#mashle#orter mádl#mashle orter#mashle fanart#art#digital illustration#fanart#mashleverse

72 notes

Text

It's a slow process, but it gets harder and harder to deny.

919 notes

Text

Silicon Valley has its own variety of racism. And you'll never guess who is the leading figure in spreading this poisonous ideology. [CLUE: He left South Africa at age 18 when the country had just begun the process of eliminating apartheid and moving to majority black rule.]

Racist pseudo-science is making a comeback thanks to Elon Musk. Recently, the tech billionaire has been retweeting prominent race scientist adherents on his platform X (formerly known as Twitter), spreading misinformation about racial minorities’ intelligence and physiology to his audience of 176.3 million followers, a dynamic my colleague Garrison Hayes analyzes in his latest video for Mother Jones. X, and before it Twitter, has a long-held reputation for being a breeding ground for white supremacy. [ ... ] Musk is amplifying users who will incorporate cherry-picked data and misleading graphs into their argument as to why people of European descent are biologically superior, showing how fringe accounts, like user @eyeslasho, experience a drastic jump in followers after Musk shares their tweets. The @eyeslasho account has even thanked Musk for raising “awareness” in a thread last year. (Neither @eyeslasho nor Musk, via X, responded to Garrison’s request for an interview.) “People are almost more susceptible to simpler charts with race and IQ than they are to the really complicated stuff,” Will Stancil, a lawyer and research fellow at the Institute on Metropolitan Opportunity, told Garrison in a video interview. He added: “This is the most basic statistical error in the book: Correlation does not equal causation.”

Racist pseudo-science simply sprays cologne on the smelly bullshit of plain old irrational bigotry. Warped theology, which was used to justify slavery, passed the baton of officially sanctioned race prejudice to pseudo-science in the late 19th and early 20th century.

DNA and other real science not only undermine the pseudo-science of racism but have revealed that "race" is not even a valid scientific concept among humans. What is widely regarded as race is defined by rather generalized phenotypes.

There has always been petty bigotry. But racial pseudo-science has been used to justify exploitation, colonialism, and territorial expansion by the powerful and ignorant. Elon Musk certainly qualifies as both powerful and ignorant.

In 2022, just one week after Musk purchased Twitter, the Center for Countering Digital Hate, an online civil rights group, found that racial slurs against Black people had risen to three times the year’s average, with homophobic and transphobic epithets also seeing a significant uptick, according to the Associated Press. More than a year later, Musk made headlines once again for tweeting racist dog whistles in a potential attempt to “woo” a recently fired Tucker Carlson. But his new shift into sharing tech-bro-friendly bigotry carries its own unique set of consequences.

If you are still on Twitter/X then you are indirectly supporting the propagation of pseudo-scientific racism – as well as just plain hate. Like quitting alcohol and tobacco, ditching Twitter/X can be difficult. But after doing so, you'll eventually notice how much better you feel.

#racism#white supremacy#pseudo-science#silicon valley#twitter#x#elon musk#hate speech#center for countering digital hate#leave twitter#quit twitter#delete twitter

84 notes

Text

'til the day I die

#my art#digital art#Americans I'm begging you to study#history repeats itself#visit your local library#A People's History of the United States by Howard Zinn#On Tyranny by Timothy Snyder#Surviving Autocracy by Masha Gessen#Strongmen: Mussolini to the Present by Ruth Ben-Ghiat#White Fragility: Why It's So Hard for White People to Talk about Racism by Robin Diangelo#How to be an Antiracist by Ibram X. Kendi#to name a few#but there are plenty more

25 notes

Text

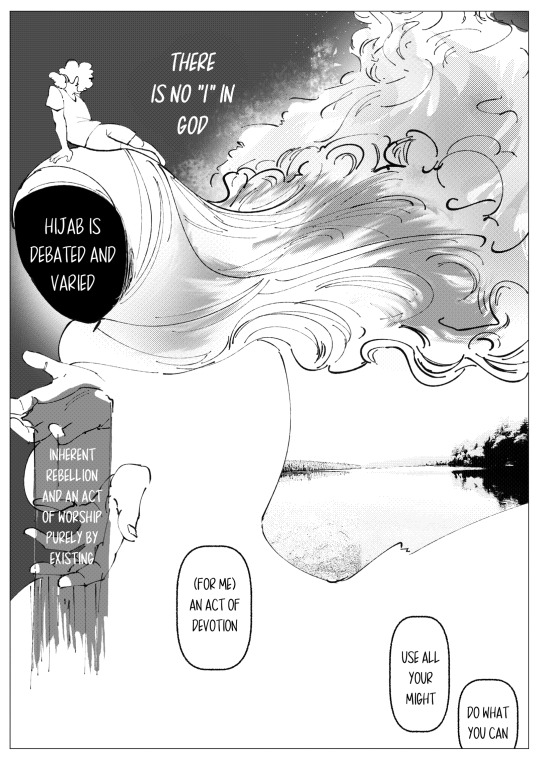

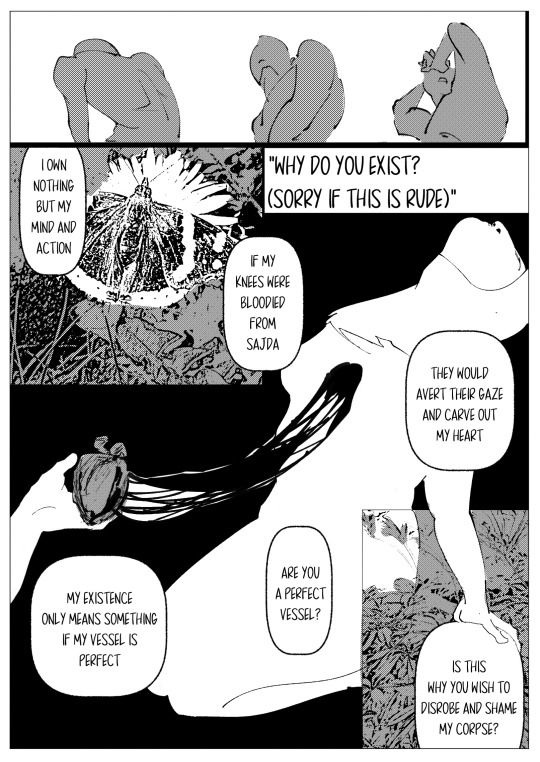

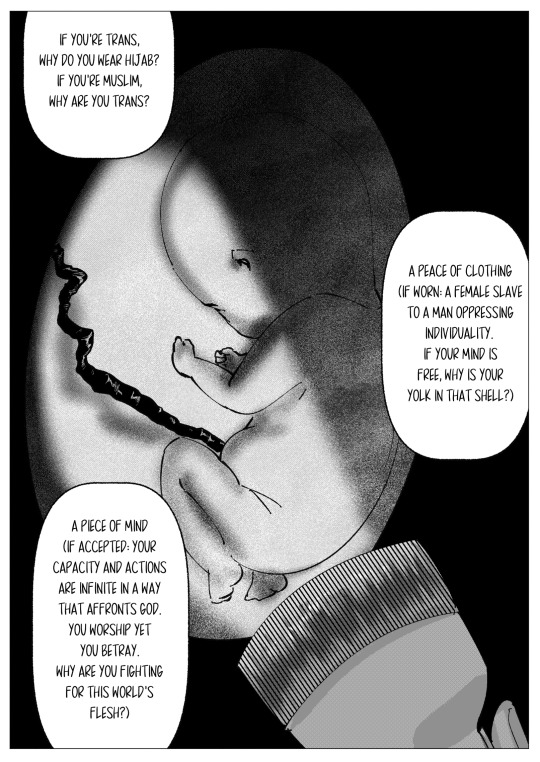

HAPPY AAPI MONTH TO EVERYONE WHO QUESTIONS MY EXISTENCE

NOW ASK ME AGAIN (WHY I WEAR HIJAB)

#maysia#aapi#aapihm#aapi heritage month#asian american pacific islander heritage month#asian american#digital art#digital comics#comic art#comic artist#comic artwork#racism#trans artist#black artists#asian artist#renegaedz#desi#south asia#south asian#muslim artist#myart#original comic#clip studio paint#clip studio#my art#artists on tumblr#my comics#comic#comics

53 notes

Text

Outsiders Owen! I drew this off a reference of myself!

!gory!

#outsiders smp#minecraft#owengejuicetv#c!owen#fan art#fannart#art#artwork#digital drawing#drawing#racism

22 notes

Text

Done. I hope my bro will like it :3 I at least do.

#medieval#digital illustration#art#horse#knabstrupper#warhorse#elven#fae#against racism#against monarchy#against religious extremists#and against idiocy

49 notes

Text

DISCLAIMER: this is abt my actual experiences w being cyberbullied on this website 4 2 of my recent posts both abt jax and gangle in ep 4 of the show, this is not abt ppl having different opinions from me, u can hav ur own hcs or interpretations of the show or the episode, characters ect, that's not the issue here the issue is i've been being harassed on my posts, made fun of 4 using txt speak, accused of being sexist 4 not liking gangle and 4 liking jax as a character, accused of ableism 4 saying that i hc jax as having aspd even tho i hc him that way bc of my own aspd and having symptoms that line up w his and made that clear in the posts i was making, and every time it's some1 deliberately misinterpreting my post and saying that im like morally a bad person 4 not liking gangle as a character and im just so fucking tired of it happening all fucking day 2day, so now that that's cleared up, i wrote this disclaimer last and after i had calmed down and the next part of this post is my very tired and angry ramblings so if u don't wanna c that then this post is not 4 u, this isn't abt disagreement this is abt consistent cyberbulling and im allowed 2 b angry abt it

u guys srsly need 2 stop cyberbulling me just bc i don't like ur "uwu smol bean mask girl"

pointing out a female character has flaws is not the same as me being sexist

pointing out that a male character is not only flaws is not me being sexist

but god forbid i wanna just c these characters as actual interesting and complex characters and not just 1 dimensional tropes

like im sorry u guys think that the writing sucks and that the writers would do their audience the disservice of writing their characters 2 b that bland but it's rly not my problem that u hate creativity so much

idk mayb learn 2 actually hav fun and enjoy the media u consume instead of getting mad at ppl 4 making character analysis posts and actually having media literacy that goes beyond the most baby surface level stuff of "this character cry so they did nothing wrong" and "this character angry which is bad"

also it's so fucking rich seeing u guys accuse me of sexism when lets b fucking 4 real here if gangle was a man and jax was a woman u all would hav hated gangle just as much as i did episode 4 and u all would think that jax being a lil shitheel bully was them being a ""girlboss""

also 4 the ppl saying im "blindly defending jax" i know jax is an asshole that's part of y i like him as a character! i just also think that it's likely not out of fucking nowhere since that's generally not how ppl work and i hav noticed similarities w his behaviour and some of my aspd symptoms leading me 2 believe that this might b partly the cause 4 some of his behaviour, like sorry 4 liking good writing and complicated characters ig!? damn!

but also just bc jax is an asshole doesn't make gangle this innocent uwu bean who did nothing wrong, like im sorry u guys think a woman crying means she doesn't need 2 take responsibility 4 being an abusive manager and attempting 2 brainwash her employees but that's rly not my fucking problem and it's not my job 2 comfort u or hold ur hand on that

it's not my fucking problem that u guys want every character on the show 2 b a one dimensional parody of themselves omfg

i should b allowed 2 fukin black gay boy post abt jax being a black gay boy being abused by his white girl manager without being dogpiled by fucking toxic gangle stans and ppl who r supposedly jax stans but clearly only like him as a 1 dimensional asshole they don't hav 2 treat like a person, imma b fr i think that's honestly partly y u guys love that "jax is an npc" hc so much u just don't want jax 2 b a person

#tadc ep 4#tadc episode 4#tadc#tadc gangle#tadc jax#the amazing digital circus ep 4#the amazing digital circus episode 4#the amazing digital circus#fandom bs#angry rant#im getting cyberbullied#toxic fandom#toxic fanbase#tw fandom sexism#cw fandom sexism#tw fandom racism#cw fandom racism#tw fandom homophobia#cw fandom homophobia

16 notes

Text

A federal judge on Monday threw out a lawsuit by Elon Musk’s X that had targeted a watchdog group for its critical reports about hate speech on the social media platform. In a blistering 52-page order, the judge blasted X’s case as plainly punitive rather than about protecting the platform’s security and legal rights. “Sometimes it is unclear what is driving a litigation,” wrote District Judge Charles Breyer, of the US District Court for the Northern District of California, in the order’s opening lines. “Other times, a complaint is so unabashedly and vociferously about one thing that there can be no mistaking that purpose.” “This case represents the latter circumstance,” Breyer continued. “This case is about punishing the Defendants for their speech.” X’s lawsuit had accused the Center for Countering Digital Hate (CCDH) of violating the company’s terms of service when it studied, and then wrote about, hate speech on the platform following Musk’s takeover of Twitter in October 2022. X has blamed CCDH’s reports, which showcase the prevalence of hate speech on the platform, for amplifying brand safety concerns and driving advertisers away from the site. In the suit, X claimed that it had suffered tens of millions of dollars in damages from CCDH’s publications. CCDH is an international non-profit with offices in the UK and US. Because of its potential to destroy the watchdog group, the case has been widely viewed as a bellwether for research and accountability on X as Musk has welcomed back prominent white supremacists and others to the platform who had previously been suspended when the platform was still a publicly-traded company called Twitter.

#elon musk#lawsuit#x (social media platform)#watchdog group#hate speech#social media#district judge charles breyer#us district court#northern district of california#center for countering digital hate#ccdh#terms of service#twitter#brand safety concerns#advertisers#damages#non-profit#research#accountability#white supremacists#publicly-traded company#white supremacy#racism#social justice#equality#end hate#anti-racism#racial equality#stop racism#no to hate

57 notes

Text

The Walking Honored Dead

Tribute to my favorite character from "Casablanca" (1942), Signor Ugarte, played by Peter Lorre.

While Ugarte is not a noble guy, I find it ludicrous that he's so frequently written off as a "villain," when he was helping the heroes from the start (if for selfish reasons) and his only confirmed kills are both Nazis.

The scenario here is based on Michonne from "The Walking Dead," who apparently keeps two zombies on leashes.

Having cheated death in more ways than one, Ugarte has found that dead Nazis have many uses. Naming his new friends Hans and Franz, he parades them through the criminal underworld and uses them to contribute to the fight against Hitler (for a price, no doubt). Rick, of course, is completely unimpressed as usual.

Update: I fixed Ugarte's head and nose, which looked slanted before, and toned down the color contrast.

#peter lorre#ugarte#casablanca#“german couriers”#lizzy chrome#zombie#nazi#the walking dead#michonne#parody#spoof#humor#halloween#spooky#the honored dead#cutrate parasite#dead nazis#noir#digital art#classic films#don't fuck with the wombat#fanart#spooky season#ghouls#antisemitism#racism#chains#wwii#black comedy#gallows humor

17 notes

Text

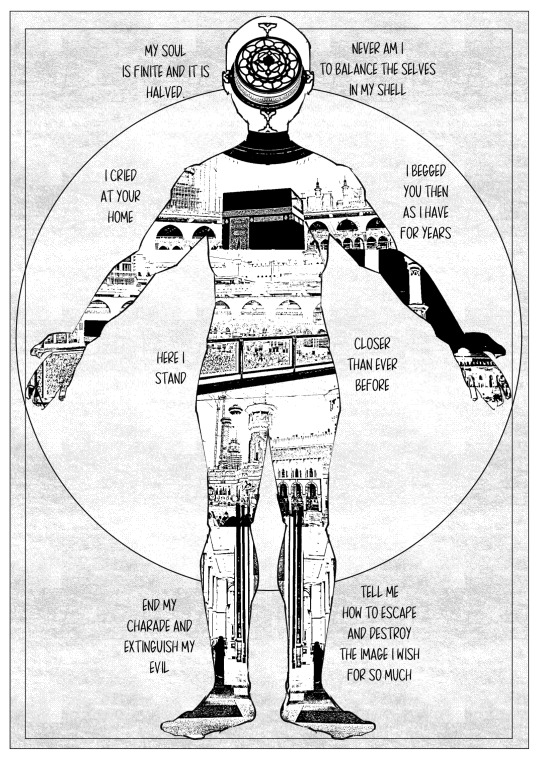

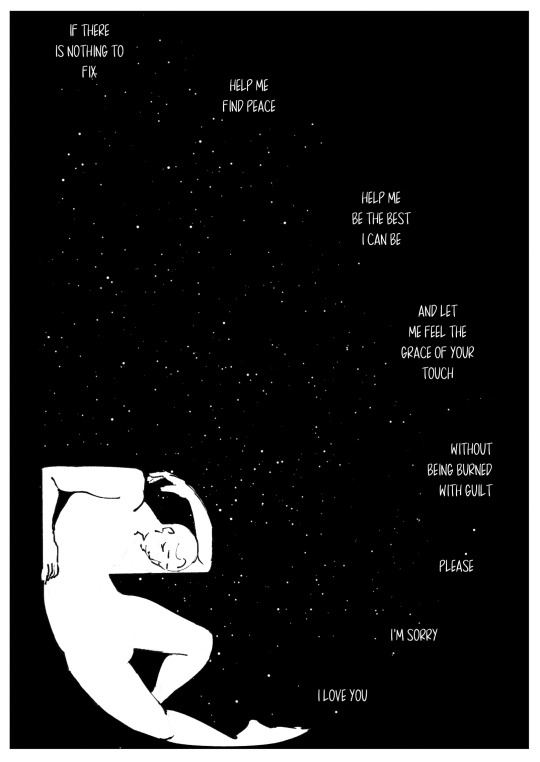

Touching~

(these feelings are ofc doubled since I figured out I was trans lol)

#trans artist#racism#minority#diaspora#trans#life as a minority#growing up#insecurity#made with krita#digital art#digital illustration#krita

9 notes
