#data engineering fundamentals

Text

obnoxious that I can't just use a computer to do the things I've always used computers to do, now I have to use two different computers including one with specialized hardware for interfacing with an entirely different network of interdevice communication. I don't wanna turn on goddamn mfa, and it's insufferable when I have to goddamn pull out another hundreds of dollars of tech just to be allowed to use your site's search (because you forbid it unauthenticated)

#on the one hand I get that having stronger security for authentication is nice.#on the other hand though. if you don't trust the individual with whom you have to authenticate for the service they have a monopoly over#then you have to fuckin sacrifice your operational security in the name of making some other individual feel better about their lax securit#which like. fine whatever dont do business with people you dont wanna do business with. except for like. fucking github#like you want the gpl'ed source code for the quake engine#youre gonna have to endure microsoft and github and a million petty tyrants who demand every bit of data just for fundamental functionality#ugh I don't know I have any coherent complaints I'm just whinging bc I had to use two computers for a task one computer can do

5 notes


Text

Machine learning and the microscope

New Post has been published on https://thedigitalinsider.com/machine-learning-and-the-microscope/


With recent advances in imaging, genomics and other technologies, the life sciences are awash in data. If a biologist is studying cells taken from the brain tissue of Alzheimer’s patients, for example, there could be any number of characteristics they want to investigate — a cell’s type, the genes it’s expressing, its location within the tissue, or more. However, while cells can now be probed experimentally using different kinds of measurements simultaneously, when it comes to analyzing the data, scientists usually can only work with one type of measurement at a time.

Working with “multimodal” data, as it’s called, requires new computational tools, which is where Xinyi Zhang comes in.

The fourth-year MIT PhD student is bridging machine learning and biology to understand fundamental biological principles, especially in areas where conventional methods have hit limitations. Working in the lab of MIT Professor Caroline Uhler in the Department of Electrical Engineering and Computer Science and the Institute for Data, Systems, and Society, and collaborating with researchers at the Eric and Wendy Schmidt Center at the Broad Institute and elsewhere, Zhang has led multiple efforts to build computational frameworks and principles for understanding the regulatory mechanisms of cells.

“All of these are small steps toward the end goal of trying to answer how cells work, how tissues and organs work, why they have disease, and why they can sometimes be cured and sometimes not,” Zhang says.

The activities Zhang pursues in her down time are no less ambitious. The list of hobbies she has taken up at the Institute includes sailing, skiing, ice skating, rock climbing, performing with MIT’s Concert Choir, and flying single-engine planes. (She earned her pilot’s license in November 2022.)

“I guess I like to go to places I’ve never been and do things I haven’t done before,” she says with signature understatement.

Uhler, her advisor, says that Zhang’s quiet humility leads to a surprise “in every conversation.”

“Every time, you learn something like, ‘Okay, so now she’s learning to fly,’” Uhler says. “It’s just amazing. Anything she does, she does for the right reasons. She wants to be good at the things she cares about, which I think is really exciting.”

Zhang first became interested in biology as a high school student in Hangzhou, China. She liked that her teachers couldn’t answer her questions in biology class, which led her to see it as the “most interesting” topic to study.

Her interest in biology eventually turned into an interest in bioengineering. After her parents, who were middle school teachers, suggested studying in the United States, she majored in the latter alongside electrical engineering and computer science as an undergraduate at the University of California at Berkeley.

Zhang was ready to dive straight into MIT’s EECS PhD program after graduating in 2020, but the Covid-19 pandemic delayed her first year. Despite that, in December 2022, she, Uhler, and two other co-authors published a paper in Nature Communications.

The groundwork for the paper was laid by Xiao Wang, one of the co-authors. She had previously done work with the Broad Institute in developing a form of spatial cell analysis that combined multiple forms of cell imaging and gene expression for the same cell while also mapping out the cell’s place in the tissue sample it came from — something that had never been done before.

This innovation had many potential applications, including enabling new ways of tracking the progression of various diseases, but there was no way to analyze all the multimodal data the method produced. In came Zhang, who became interested in designing a computational method that could.

The team focused on chromatin staining as their imaging method of choice, which is relatively cheap but still reveals a great deal of information about cells. The next step was integrating the spatial analysis techniques developed by Wang, and to do that, Zhang began designing an autoencoder.

Autoencoders are a type of neural network that typically encodes and shrinks large amounts of high-dimensional data, then expands the transformed data back to its original size. In this case, Zhang’s autoencoder did the reverse, taking the input data and making it higher-dimensional. This allowed them to combine data from different animals and remove technical variations that were not due to meaningful biological differences.
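The typical compress-then-reconstruct behavior of an autoencoder can be sketched in a few lines of NumPy. This is a toy linear autoencoder trained by plain gradient descent on synthetic data, not the STACI model from the paper; the function names, the 8-feature and 2-latent sizes, the learning rate, and the epoch count are all illustrative assumptions.

```python
import numpy as np


def make_toy_data(n_samples=200, n_features=8, latent_dim=2, seed=0):
    """Synthetic data (an assumption for illustration): points that lie on a
    low-dimensional subspace of a higher-dimensional feature space."""
    rng = np.random.default_rng(seed)
    latent = rng.normal(size=(n_samples, latent_dim))
    mixing = rng.normal(size=(latent_dim, n_features))
    X = latent @ mixing
    return X / X.std()  # rescale so gradient descent behaves with a fixed step size


def train_linear_autoencoder(X, latent_dim, lr=0.01, epochs=2000, seed=0):
    """Fit an encoder (d -> latent_dim) and a decoder (latent_dim -> d) by
    minimizing the mean squared reconstruction error."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_enc = rng.normal(scale=0.1, size=(d, latent_dim))
    W_dec = rng.normal(scale=0.1, size=(latent_dim, d))
    for _ in range(epochs):
        Z = X @ W_enc            # encode: shrink to the latent size
        X_hat = Z @ W_dec        # decode: expand back to the original size
        err = X_hat - X
        grad_dec = Z.T @ err / n
        grad_enc = X.T @ (err @ W_dec.T) / n
        W_enc -= lr * grad_enc
        W_dec -= lr * grad_dec
    return W_enc, W_dec


def reconstruction_error(X, W_enc, W_dec):
    """Mean squared difference between the input and its reconstruction."""
    return float(np.mean((X @ W_enc @ W_dec - X) ** 2))


X = make_toy_data()
W_enc, W_dec = train_linear_autoencoder(X, latent_dim=2)
print("latent shape:", (X @ W_enc).shape)
print("reconstruction error:", reconstruction_error(X, W_enc, W_dec))
```

A real model for imaging or gene-expression data would use nonlinear layers and a deep-learning framework; the sketch only shows the encode and decode steps.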

In the paper, they used this technology, abbreviated as STACI, to identify how cells and tissues reveal the progression of Alzheimer’s disease when observed under a number of spatial and imaging techniques. The model can also be used to analyze any number of diseases, Zhang says.

Given unlimited time and resources, her dream would be to build a fully complete model of human life. Unfortunately, both time and resources are limited. Her ambition isn’t, however, and she says she wants to keep applying her skills to solve the “most challenging questions that we don’t have the tools to answer.”

She’s currently working on wrapping up a couple of projects, one focused on studying neurodegeneration by analyzing frontal cortex imaging and another on predicting protein images from protein sequences and chromatin imaging.

“There are still many unanswered questions,” she says. “I want to pick questions that are biologically meaningful, that help us understand things we didn’t know before.”

#2022#amazing#Analysis#Animals#applications#Autoencoders#bioengineering#Biology#Brain#Broad Institute#cell#Cells#China#chromatin#communications#computer#Computer Science#covid#data#deal#december#Disease#Diseases#engine#engineering#form#Forms#Fundamental#gene expression#genes

2 notes


Text

😵‍💫😵‍💫😵‍💫

These sobs really limited my tags?????

I have so many more thoughts this is so so much less than 1/2. Broski. Big dislike

#its ‘i watched a tv show and i need to talk about it in the tags of this site im not on anymore’ time#ty to the void for always accepting my thoughts <3#so honestly its just me thinking about the andromeda tv show. i just finished it and it left me destitute bc i clung onto the first 2 season#s as a basis and had ten thousand questions i *assumed* would be resolved. spoiler alert: they were nto#not*. and the coda addition helps but like. not enough. it explains some of the#oh fyi if anyone is reading or cared there will be spoilers#anyways it explained some of them ex for the cosmic engine bit. seemed pretty relevant and then was never mentioned again#i also MUCH prefer that version of trance — i had speculation she was a sun avatar which i took as confirmation when i finally noticed her#tattoo when harper used it to remind himself he put that data in the sun etc etc but i much prefer the sun-as-consciousness-astral-poject-#ing-slash-dreamjng-itself-a-body / being a little devil. i think that feels much more true to what we got in worldbuilding early on and tbh#the bar is on the floor bc any explanation would be better than what we got. also im sorry but s5 i trusted SO hard that that whole virgil#vox bit in the finale was insulting. couldnt even tie up the loose end you invented at the last minute????? MY god. i understand getting you#r budget halved but like. broski. it would have been better to ignore it at that point imo.#anywhoodle. i also have just ISSUES w the lack of resolution & not doing justice to literally any character#listen. why would you sink SO much effort into tyr just to have honestly what i feel is a disrespectful end to that character. like#tyr required me to do a LOT of thinking bc i sympathized with his position in exile etc while thinking also bro thats real fucked up. bro#stop thats fuckinng e*genics again dude. tbh with the entire species (im not looking up how to spell that rn) bc like the foundation of#their entire race is e*ugenics. 
(sorry censoring bc im in the tags just venting about tv) which obviously is a terrible idea but i think the#so it was like i am fundamentally against the concept but in show universe theg obviously did it etc but for me provided such a huge like#context to the universe. i fundamentally am not on board with all the commonwealth stuff like yeah i get it the magog are bad and scary but#like the neitzcheans (sp??? idc) are also Right There bein scary. then theres the ‘enhanced’ debate re dylan beka etc that like. is the same#but ‘’different’’ i guess. 🙄 anyways that is just to point out like. the level of thinking this show put me through just to blindside me w/#no resolution. i had SO much hope. tyr selling iut to the abyss is disrespectful to all of the established work the actor did for him and#to the character as well even if i think the ideology is icky. he was shown to be even less and less self-centric survival guy as it went on#and also tbh i didnt understand the him stealing his kids dna thing. i really thought that was gonna gi in a different less bs direction#okay also while im here can i just say. that tyr and dylan had THE most romantic tension to me. everyone else felt very friendshipy and i am#NOT one to usually fall into a ‘they obviously should be together’ pipeline that the writers dont make themselves. but the back and forth (#and intense eye contact) had me sitting there like. it was made in 2000 i know they wont do it but for not doing it they sure did! not that#i think they’d make a good couple (they would not) but that there was definitely something there on the dl you know? something more than#‘mutual respect’ you feel? and tbh! they also ruined the tyr beka thing by making her the matriarch. big ew huge ick.

2 notes


Video


AZ 900 - Azure fundamentals exam questions| Latest series |Part 11

#youtube#az900#azure#azuredeveloper#azure devops#azure data engineer training#azure fundamentals#it certifications online#it certification courses#cloud#cloud certification#cloud courses

0 notes

Text

Charting a Future of Possibilities: B.Tech in AI & DS - Igniting Careers in the World of Intelligent Technologies

A B.Tech in Artificial Intelligence & Data Science is a comprehensive undergraduate program that blends the principles of Artificial Intelligence (AI) and Data Science (DS). Through a combination of theoretical knowledge and practical application, students acquire the skills necessary for a career in these dynamic fields.

The curriculum covers computer science fundamentals, mathematics, statistics, and programming, enabling students to delve into AI methodologies such as natural language processing, computer vision, and robotics. Additionally, they gain expertise in data analysis techniques like data mining and visualization. Graduates of this program are equipped to pursue diverse career paths as data scientists, AI engineers, machine learning specialists, or data analysts across industries like finance, healthcare, e-commerce, and technology.

By leveraging their knowledge of AI and DS, they contribute to the development of intelligent systems, machine learning algorithms, and advanced data analysis methods, empowering organizations to make data-driven decisions and solve complex problems.

#Btech AI & DS#Btech in Artificial Intelligence & Data Science#AI Engineers#machine learning#cgc jhanjeri#chandigarh group of colleges#colleges for btech#computer science fundamentals

0 notes

Text

@annevbonny yeah so first of all there's the overt framing issue that this whole idea rests on the premise that eliminating fatness is both possible and good, as though like. fat people haven't existed prior to the ~industrial revolution~ lol

more granularly this theory relies on misinterpreting the causes for the link between poverty and fatness (which is real---they are correlated) so that fatness can be configured as a failure of eating choices and urban design, meaning ofc that the 'solution' to this problem is more socially hygienic, monitored, controlled communities where everybody has been properly educated into the proper affective enjoyment of spinach and bike riding, and no one is fat anymore and the labour force lives for longer and generates more value for employers

in truth one of the biggest mediating factors in the poverty-body weight link is food insecurity, because intermittent access to food tends to result in periods of under-nourishment followed by periods of compensatory eating with corresponding weight regain/overshoot (this is typical of weight trajectories in anyone refeeding after a period of starvation or under-eating, for any reason). so this is all to say that the suggestion that fatness is caused by access to 'unhealthy foods' is not only off base but extremely harmful; food insecurity is rampant globally. what people need is consistent access to food, and more of it!

and [loud obvious disclaimer voice] although i absolutely agree that food justice means access to a variety of foods with a variety of nutrient profiles, access to any calories at all is always better than access to none or too few. which is to say, there aren't 'healthy' or 'unhealthy' foods in isolation (all foods can belong in a varied, sufficient diet) and this is a billion times more true when we are talking about people struggling to consume enough calories in the first place.

relatedly, proponents of the 'obesogenic environment' theory often invoke the idea of 'hyperpalatable foods' or 'food addiction'---different ways of saying that people 'overeat' 'junk food' because it's too tasty (often with the bonus techno-conspiricism of "they engineer it that way"). again it's this idea that the problem is people eating the 'wrong' foods, now because the foods themselves are exerting some inexorable chemical pull over them.

this is inane for multiple reasons including the failure to deal with access issues and the fact that people who routinely, reliably eat enough in non-restrictive patterns (between food insecurity and encouragement to deliberately diet/restrict, this is very few people) don't even tend to 'overeat' energy-dense demonised foods in the first place. ie, there is no need to proscribe or limit 'junk food' or 'fast food' or 'empty calories' or whatever nonsense euphemism; again the solution to nutritionally unbalanced diets is to guarantee everyone access to sufficient food and a variety of different foods (and to stop encouraging the sorts of moralising food taboos that make certain foods 'out of bounds' and therefore more likely to provoke a subjective sense of loss of control in the first place lol)

but tbc, when i say "the solution to nutritionally unbalanced diets"---because these certainly can and do exist, particularly (again) amongst people subjected to food insecurity---i am NOT saying "the solution to fatness" because fatness is not something that will ever be eliminated from the human population. and here again we circle back to one of the fundamental fears that animates the 'obesogenic environment' myth, which is that fatness is a medical threat to the race/nation/national future. which is of course blatant biopolitics and is relying on massive assumptions about the health status of fat and thin people that are simply not borne out in the data, and that misinterpret the relationship between fatness and illness (for example, the extent to which weight stigma prevents fat people from receiving medical care, or the role of 'metabolic syndrome' in causing weight gain, rather than the other way around).

people are fat for many reasons, including "their bodies just look like that"; fatness is neither a disease in itself nor inherently indicative of ill health, nor is it eradicable anyway (and fundamentally, while all people should have access to health-protective social and economic conditions, health is not something that people 'owe' to anyone else anyway)

the 'obesogenic environment' is a liberal technocratic fantasy---a world in which fatness is a problem of individual consumption and social engineering, and is to be eliminated by clever policy and personal responsibility. it assumes your health is 1) directly caused and indicated by your weight, 2) something you owe to the capitalist state as part of the bargain that is 'citizenship', and 3) something you can learn to control if only you are properly educated by the medical authorities on the rules of nutrition (and secondarily exercise) science. it's a factual misinterpretation of everything we know about weight, health, diet, and wealth, and it fundamentally serves as a defense of the existing economic order: the problem isn't that capitalism structurally does not provide sufficient access to resources for any but the capitalist class---no, we just need a nicer and more functional capitalism where labourers have a greengrocer in the neighbourhood, because this is a discourse incapable of grappling with the material realities of food production and consumption, and instead reliant on configuring them in terms of affectivity ('food addiction') or knowledge (the idea that food-insecure people need to be more educated about nutrition)

there are some additional aspects here obviously like the idea that exercising more would make people thin (similar issues to the food arguments, physical activity can be great but the reasons people do or don't do it are actually complex and related to things like work schedules and exercise doesn't guarantee thinness in the first place) or fearmongering about 'endocrine disruptors' (real, but are extremely ill-defined as a category and are often just a way to appeal to ideas of 'naturalness' and the vague yet pressing harms of 'chemicals', and which are also not shown to single-handedly 'cause' fatness, a normal state of existence for the human body) but this is most often an argument about food ime.

800 notes


Text

Okay at this point I've seen so many students feeling doomed for taking a course where a teacher uses Unity or like they're wasting time learning the engine, and while understandably the situation at Unity sucks and is stressful for everyone: y'all need to stop thinking learning Unity is a waste of your time.

Learning a game engine does not dictate your abilities as a dev, and the skills you learn in almost any engine are almost all transferrable skills when moving to other engines. Almost every new job you get in the games industry will use new tools, engines and systems no matter where you work, whether that be proprietary, enterprise or open-source. Skills you learn in any engine are going to be relevant even if the software is not - especially if you're learning development for the first time. Hell, even the act of learning a game engine is a transferrable skill.

It's sort of like saying it's a waste to learn Blender because people use 3DS Max, or why bother learning how to use a Mac when many people use Windows; it's all the same principles applied differently. The knowledge is still fundamental and applicable across tools.

Many engines use C-adjacent languages. Many engines use similar IDE interfaces. Many engines use Object Oriented Programming. Many engines have component-based architecture. Many objects handle data and modular prefabs and inheritance in a similar way. You are going to be learning skills that are applicable everywhere, and hiring managers worth their weight will be well aware of this.
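To make the component-based architecture point concrete, here's a rough engine-agnostic sketch in Python. None of these class names come from Unity, Godot, or any real engine API; `Entity`, `Component`, `Position`, and `Velocity` are all made up for illustration. The shape of it (an object that owns small reusable components, each updated every frame) is the part that tends to carry over between tools.

```python
from dataclasses import dataclass


class Component:
    """Base class for behaviour or data attached to an entity (hypothetical)."""

    def update(self, entity, dt):
        # Most data-only components have nothing to do each frame.
        pass


@dataclass
class Position(Component):
    x: float = 0.0
    y: float = 0.0


@dataclass
class Velocity(Component):
    dx: float = 0.0
    dy: float = 0.0

    def update(self, entity, dt):
        # Move the owning entity, if it also has a Position component.
        pos = entity.get(Position)
        if pos is not None:
            pos.x += self.dx * dt
            pos.y += self.dy * dt


class Entity:
    """A game object that is just a named bag of components."""

    def __init__(self, name):
        self.name = name
        self._components = {}

    def add(self, component):
        self._components[type(component)] = component
        return self  # allow chained .add(...) calls

    def get(self, component_type):
        return self._components.get(component_type)

    def update(self, dt):
        for component in list(self._components.values()):
            component.update(self, dt)


player = Entity("player").add(Position(0.0, 0.0)).add(Velocity(2.0, -1.0))
player.update(0.5)  # advance one half-second "frame"
print(player.get(Position))
```

Swap the names around and this is roughly the mental model you bring with you when you go from one engine's scripts and game objects to another's.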

The first digital game I made was made in Flash in 2009. I'm still using some principles I learned then. I used Unity for almost a decade and am now learning Godot and finding many similarities between the two. If my skills and knowledge are somehow still relevant then trust me: you are going to learn a lot of useful skills using Unity.

#unity#gamedev#game development#indie games#indie game#game dev#gamedevelopment#indiegames#indie dev#godot#unreal#unreal engine#godot engine

1K notes


Text

Too big to care

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in BOSTON with Randall "XKCD" Munroe (Apr 11), then PROVIDENCE (Apr 12), and beyond!

Remember the first time you used Google search? It was like magic. After years of progressively worsening search quality from Altavista and Yahoo, Google was literally stunning, a gateway to the very best things on the internet.

Today, Google has a 90% search market-share. They got it the hard way: they cheated. Google spends tens of billions of dollars on payola in order to ensure that they are the default search engine behind every search box you encounter on every device, every service and every website:

https://pluralistic.net/2023/10/03/not-feeling-lucky/#fundamental-laws-of-economics

Not coincidentally, Google's search is getting progressively, monotonically worse. It is a cesspool of botshit, spam, scams, and nonsense. Important resources that I never bothered to bookmark because I could find them with a quick Google search no longer show up in the first ten screens of results:

https://pluralistic.net/2024/02/21/im-feeling-unlucky/#not-up-to-the-task

Even after all that payola, Google is still absurdly profitable. They have so much money, they were able to do an $80 billion stock buyback. Just a few months later, Google fired 12,000 skilled technical workers. Essentially, Google is saying that they don't need to spend money on quality, because we're all locked into using Google search. It's cheaper to buy the default search box everywhere in the world than it is to make a product that is so good that even if we tried another search engine, we'd still prefer Google.

This is enshittification. Google is shifting value away from end users (searchers) and business customers (advertisers, publishers and merchants) to itself:

https://pluralistic.net/2024/03/05/the-map-is-not-the-territory/#apor-locksmith

And here's the thing: there are search engines out there that are so good that if you just try them, you'll get that same feeling you got the first time you tried Google.

When I was in Tucson last month on my book-tour for my new novel The Bezzle, I crashed with my pals Patrick and Teresa Nielsen Hayden. I've known them since I was a teenager (Patrick is my editor).

We were sitting in his living room on our laptops – just like old times! – and Patrick asked me if I'd tried Kagi, a new search-engine.

Teresa chimed in, extolling the advanced search features, the "lenses" that surfaced specific kinds of resources on the web.

I hadn't even heard of Kagi, but the Nielsen Haydens are among the most effective researchers I know – both in their professional editorial lives and in their many obsessive hobbies. If it was good enough for them…

I tried it. It was magic.

No, seriously. All those things Google couldn't find anymore? Top of the search pile. Queries that generated pages of spam in Google results? Fucking pristine on Kagi – the right answers, over and over again.

That was before I started playing with Kagi's lenses and other bells and whistles, which elevated the search experience from "magic" to sorcerous.

The catch is that Kagi costs money – after 100 queries, they want you to cough up $10/month ($14 for a couple or $20 for a family with up to six accounts, and some kid-specific features):

https://kagi.com/settings?p=billing_plan&plan=family

I immediately bought a family plan. I've been using it for a month. I've basically stopped using Google search altogether.

Kagi just let me get a lot more done, and I assumed that they were some kind of wildly capitalized startup that was running their own crawl and their own data-centers. But this morning, I read Jason Koebler's 404 Media report on his own experiences using it:

https://www.404media.co/friendship-ended-with-google-now-kagi-is-my-best-friend/

Koebler's piece contained a key detail that I'd somehow missed:

When you search on Kagi, the service makes a series of “anonymized API calls to traditional search indexes like Google, Yandex, Mojeek, and Brave,” as well as a handful of other specialized search engines, Wikimedia Commons, Flickr, etc. Kagi then combines this with its own web index and news index (for news searches) to build the results pages that you see. So, essentially, you are getting some mix of Google search results combined with results from other indexes.

In other words: Kagi is a heavily customized, anonymized front-end to Google.

The implications of this are stunning. It means that Google's enshittified search-results are a choice. Those ad-strewn, sub-Altavista, spam-drowned search pages are a feature, not a bug. Google prefers those results to Kagi, because Google makes more money out of shit than they would out of delivering a good product:

https://www.theverge.com/2024/4/2/24117976/best-printer-2024-home-use-office-use-labels-school-homework

No wonder Google spends a whole-ass Twitter every year to make sure you never try a rival search engine. Bottom line: they ran the numbers and figured out their most profitable course of action is to enshittify their flagship product and bribe their "competitors" like Apple and Samsung so that you never try another search engine and have another one of those magic moments that sent all those Jeeves-askin' Yahooers to Google a quarter-century ago.

One of my favorite TV comedy bits is Lily Tomlin as Ernestine the AT&T operator; Tomlin would do these pitches for the Bell System and end every ad with "We don't care. We don't have to. We're the phone company":

https://snltranscripts.jt.org/76/76aphonecompany.phtml

Speaking of TV comedy: this week saw FTC chair Lina Khan appear on The Daily Show with Jon Stewart. It was amazing:

https://www.youtube.com/watch?v=oaDTiWaYfcM

The coverage of Khan's appearance has focused on Stewart's revelation that when he was doing a show on Apple TV, the company prohibited him from interviewing her (presumably because of her hostility to tech monopolies):

https://www.thebignewsletter.com/p/apple-got-caught-censoring-its-own

But for me, the big moment came when Khan described tech monopolists as "too big to care."

What a phrase!

Since the subprime crisis, we're all familiar with businesses being "too big to fail" and "too big to jail." But "too big to care?" Oof, that got me right in the feels.

Because that's what it feels like to use enshittified Google. That's what it feels like to discover that Kagi – the good search engine – is mostly Google with the weights adjusted to serve users, not shareholders.

Google used to care. They cared because they were worried about competitors and regulators. They cared because their workers made them care:

https://www.vox.com/future-perfect/2019/4/4/18295933/google-cancels-ai-ethics-board

Google doesn't care anymore. They don't have to. They're the search company.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/04/teach-me-how-to-shruggie/#kagi

#pluralistic#john stewart#the daily show#apple#monopoly#lina khan#ftc#too big to fail#too big to jail#monopolism#trustbusting#antitrust#search#enshittification#kagi#google

438 notes


Text

It's no wonder Out happened when you really think about it. Nastya doesn't like organic life because it's complicated, it can break, sometimes it's even unfixable.

quote from gender rebels

Nastya is in love with Aurora, and in saying that she is saying "you are not organic life, I can deal with you because you are metal and algorithm and predictable" - we can see this in bedtime story when she says she'll tweak Aurora's story creation algorithm

screenshot from A Bedtime Story

Aurora is not inorganic. She is not ai. She is a space moon made of flesh and blood and teeth and bone. She is not an ai. She is a body that was taken and stripped of autonomy, of the right to self identify, of the right to think- to be imperfect and organic.

The metal is a veneer that hides how messy and traumatized and unfixable she is. From the outside she is a starship. From the inside she can still bleed.

And this makes them fundamentally incompatible. But yet, they are in love.

And really, it's no wonder Nastya fell in love with Aurora. Let's take a look at Nastya's home planet, or at least home society:

"Terminals were scattered across the planet. There was one on every street corner, one beneath every lamppost and one in every commune block."

"The midwife-machine performs a series of programmed manœuvres to quieten [the baby]. It cradles it and hums at several pitches until it finds one that seems most soothing. Mechanical arms stroke the baby’s flesh even as others start the process of implanting augmented reality interfaces into its nervous system."

"The Czar an atrophied frame, never present in the real world and worn to dust by the chemical compounds that kept his brain alive so it could live forever in a perfect virtual paradise. The Rabotnik a copy, a mind preserved unchanging in the instant before its death and placed in an everlasting metal frame."

(Cyberian Demons)

It's safe to say the world Nastya was born into, from the very minute she was born, was ridden with technology. She has augmented reality interfaces implanted into her from birth. It would stand to reason that being taken from this society, wherein technology is everywhere, inside and out, would come as a bit of a shock.

Aurora too had been augmented by the Cyberia.

While it is stated that the last time Nastya had used the ports themselves was directly before her death — "The last time she had used the ports, her tutor had ripped them out of her as the rebels stormed the palace" — Aurora is laced with Cyberian technology. I'd imagine she has something of a 'bluetooth wireless connection' with Aurora, rather than the physical data transfer of files between the ports and Nastya, it may as well be similar enough.

Imagine being Nastya, going from Cyberia, wherein there is augmented reality constantly, transplanted onto a ship with metal blood, a jonny, and a vampire. To Aurora, where the only bits of augmented reality run through Aurora.

Of course she'd fall in love with her. Aurora is familiarity. Aurora isn't organic. Aurora isn't human.

And of course when the undeniable part of aurora that is organic, that is a flesh moon plated in metal with her brain hooked to machines, when so much has broken and been replaced, when, presumably, aurora is less of an algorithm, nastya leaves with the brand cyberia left on her.

Because Aurora healing, becoming more of herself and less of a starship, is messy, and organic, and human.

and hard for nastya.

‘Think how long she’s been flying you around. Think how many bullet holes you’ve punched through her and how many atmospheres you’ve dropped her through. Think how many alterations and improvements we’ve made, Tim to her guns and Ashes to her storage and Brian to her engines and the Toy Soldier to who knows what. How much do you think is left of her after all she’s brought you through?’ Nastya held up the ancient, battered piece of hull plating. Just visible under the grime and scars of particles of space junk was a fragment of the Aurora’s original logo and serial number. Jonny honestly couldn’t remember the last time he had seen a version that hadn’t been painted by the Mechanisms themselves.

‘So she’s free, now.’ Nastya gestured around at the spaceship they were standing in. ‘This Aurora can take you where you want to go. I’m going to take my Aurora somewhere else.’

Aurora was ship of theseus'd. Aurora was improved. Aurora was no longer cyberian. (both literally, and metaphorically)

So nastya left.


Text

Happy World Ocean Day! 🌎️🌊💙

The ocean is the largest living space on our planet. It’s critical for all life on Earth, but it now faces a triple threat of climate change, pollution, and overfishing. MBARI researchers are answering fundamental questions about our changing ocean. Our research and data help inform ocean management and policy.

Studying our blue backyard has revealed our connection to the ocean—how it sustains us and how our actions affect its future. MBARI scientists, engineers, communications staff, and marine operations crew are driven by a curiosity to learn more about the ocean and a passion to protect its future. Our ocean needs our help. All life—including us—depends on a healthy ocean.


Text

Maserati MCXtrema Hits the Track Ahead of First Delivery

Maserati MCXtrema, the Trident's uncompromising 730-hp ‘beast’ has returned to be unleashed into its natural environment: the track. It will be undergoing a series of tests until late April, aiming at the delivery of the first model, planned for late summer 2024.

MCXtrema, a non-road-homologated race car produced in 62 units, was created to break the mould and invent new paradigms. The epitome of Maserati DNA and of the extraordinary performance characteristic of everything the 100% Italian brand produces, MCXtrema offers up evidence of its disruptive attitude to racing between the curbs of the circuit where it could be seen in action in a series of fundamental tests to gather the data needed for the final tune-ups. The Trident’s exclusive creation is one of the brand’s boldest cars in terms of development and is an evolution of the Maserati MC20 super sports car, its inspiration and basis.

In February, it had its first official outing at the Autodromo Varano de' Melegari (Parma), where MCXtrema was taken to the track by Maserati chief test driver Andrea Bertolini, one of the most successful drivers in the GT class with four world titles aboard the glorious MC12, who has been working on its development in the dynamic simulator since the early stages.

The February shakedown and subsequent milestones to refine its performance have been the ideal setting to unleash the full power of the 730-hp (540-kW) 3.0-litre twin-turbo V6 engine, based on the Maserati Nettuno and taken to the next level.


Text

Master CUDA: For Machine Learning Engineers

New Post has been published on https://thedigitalinsider.com/master-cuda-for-machine-learning-engineers/

CUDA for Machine Learning: Practical Applications

Structure of a CUDA C/C++ application, where the host (CPU) code manages the execution of parallel code on the device (GPU).

Now that we’ve covered the basics, let’s explore how CUDA can be applied to common machine learning tasks.

Matrix Multiplication

Matrix multiplication is a fundamental operation in many machine learning algorithms, particularly in neural networks. CUDA can significantly accelerate this operation. Here’s a simple implementation:

    // Each thread computes one element C[row][col] of the N x N product.
    __global__ void matrixMulKernel(float *A, float *B, float *C, int N) {
        int row = blockIdx.y * blockDim.y + threadIdx.y;
        int col = blockIdx.x * blockDim.x + threadIdx.x;
        float sum = 0.0f;
        if (row < N && col < N) {
            for (int i = 0; i < N; i++) {
                sum += A[row * N + i] * B[i * N + col];
            }
            C[row * N + col] = sum;
        }
    }

    // Host function to set up and launch the kernel
    // (A, B, and C are device pointers)
    void matrixMul(float *A, float *B, float *C, int N) {
        dim3 threadsPerBlock(16, 16);
        dim3 numBlocks((N + threadsPerBlock.x - 1) / threadsPerBlock.x,
                       (N + threadsPerBlock.y - 1) / threadsPerBlock.y);
        matrixMulKernel<<<numBlocks, threadsPerBlock>>>(A, B, C, N);
    }

This implementation divides the output matrix into 16×16 thread blocks, with each thread computing one element of the result. The pointers passed to the kernel must refer to device memory. While this basic version is typically faster than a single-threaded CPU implementation for large matrices, there’s room for optimization using shared memory and other techniques.

Convolution Operations

Convolutional Neural Networks (CNNs) rely heavily on convolution operations. CUDA can dramatically speed up these computations. Here’s a simplified 2D convolution kernel:

    __global__ void convolution2DKernel(float *input, float *kernel, float *output,
                                        int inputWidth, int inputHeight,
                                        int kernelWidth, int kernelHeight) {
        int x = blockIdx.x * blockDim.x + threadIdx.x;
        int y = blockIdx.y * blockDim.y + threadIdx.y;
        if (x < inputWidth && y < inputHeight) {
            float sum = 0.0f;
            for (int ky = 0; ky < kernelHeight; ky++) {
                for (int kx = 0; kx < kernelWidth; kx++) {
                    int inputX = x + kx - kernelWidth / 2;
                    int inputY = y + ky - kernelHeight / 2;
                    // Skip taps that fall outside the image (zero padding)
                    if (inputX >= 0 && inputX < inputWidth &&
                        inputY >= 0 && inputY < inputHeight) {
                        sum += input[inputY * inputWidth + inputX] *
                               kernel[ky * kernelWidth + kx];
                    }
                }
            }
            output[y * inputWidth + x] = sum;
        }
    }

This kernel performs a 2D convolution, with each thread computing one output pixel. In practice, more sophisticated implementations would use shared memory to reduce global memory accesses and optimize for various kernel sizes.

Stochastic Gradient Descent (SGD)

SGD is a cornerstone optimization algorithm in machine learning. CUDA can parallelize the computation of gradients across multiple data points. Here’s a simplified example for linear regression:

    __global__ void sgdKernel(float *X, float *y, float *weights,
                              float learningRate, int n, int d) {
        int i = blockIdx.x * blockDim.x + threadIdx.x;
        if (i < n) {
            float prediction = 0.0f;
            for (int j = 0; j < d; j++) {
                prediction += X[i * d + j] * weights[j];
            }
            float error = prediction - y[i];
            for (int j = 0; j < d; j++) {
                atomicAdd(&weights[j], -learningRate * error * X[i * d + j]);
            }
        }
    }

    void sgd(float *X, float *y, float *weights, float learningRate,
             int n, int d, int iterations) {
        int threadsPerBlock = 256;
        int numBlocks = (n + threadsPerBlock - 1) / threadsPerBlock;
        for (int iter = 0; iter < iterations; iter++) {
            sgdKernel<<<numBlocks, threadsPerBlock>>>(X, y, weights, learningRate, n, d);
        }
    }

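This implementation computes one weight update per data point in parallel. The atomicAdd function ensures that concurrent updates to the same weight are not lost. Threads may still read weights while other threads are modifying them, so within a launch the result approximates sequential SGD rather than reproducing it exactly.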
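One way to keep every thread reading the same weights is to split each step in two: accumulate the gradient into a separate buffer, then apply it once. The sketch below assumes that full-batch variant, and it averages the gradient over n, which the kernel above does not do. The names gradKernel, applyKernel, and sgdStep are illustrative and not part of the original code; all pointers are assumed to be device pointers.

```cuda
#include <cuda_runtime.h>

// Accumulate the squared-error gradient over all n samples into grad.
// weights is only read here, so every thread sees the same values.
__global__ void gradKernel(const float *X, const float *y, const float *weights,
                           float *grad, int n, int d) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        float prediction = 0.0f;
        for (int j = 0; j < d; j++) {
            prediction += X[i * d + j] * weights[j];
        }
        float error = prediction - y[i];
        for (int j = 0; j < d; j++) {
            atomicAdd(&grad[j], error * X[i * d + j]);
        }
    }
}

// Apply the averaged gradient, one thread per weight.
__global__ void applyKernel(float *weights, const float *grad,
                            float learningRate, int n, int d) {
    int j = blockIdx.x * blockDim.x + threadIdx.x;
    if (j < d) {
        weights[j] -= learningRate * grad[j] / n;
    }
}

// One full-batch gradient step. grad must hold d floats on the device.
void sgdStep(const float *X, const float *y, float *weights, float *grad,
             float learningRate, int n, int d) {
    int threads = 256;
    cudaMemset(grad, 0, d * sizeof(float));
    gradKernel<<<(n + threads - 1) / threads, threads>>>(X, y, weights, grad, n, d);
    applyKernel<<<(d + threads - 1) / threads, threads>>>(weights, grad, learningRate, n, d);
}
```

Both kernels run in the default stream, so applyKernel starts only after gradKernel has finished. Calling sgdStep in a loop gives the same iteration structure as the sgd host function above.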

Optimizing CUDA for Machine Learning

While the above examples demonstrate the basics of using CUDA for machine learning tasks, there are several optimization techniques that can further enhance performance:

Coalesced Memory Access

GPUs achieve peak performance when threads in a warp access contiguous memory locations. Ensure your data structures and access patterns promote coalesced memory access.
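As a rough illustration, the two kernels below scale the same row-major matrix and produce identical results, but differ in which element each thread touches. This is a sketch under assumptions: scaleCoalesced, scaleStrided, and the scaleMatrix host helper are hypothetical names, and the actual speed difference depends on the GPU, so it is worth confirming with a profiler.

```cuda
#include <cuda_runtime.h>

// Coalesced: thread t touches element t, so neighbouring threads in a
// warp read and write neighbouring addresses.
__global__ void scaleCoalesced(const float *in, float *out,
                               int rows, int cols, float s) {
    int t = blockIdx.x * blockDim.x + threadIdx.x;
    if (t < rows * cols) {
        out[t] = s * in[t];
    }
}

// Strided: neighbouring threads walk down a column of the row-major
// matrix, so their addresses are `cols` elements apart.
__global__ void scaleStrided(const float *in, float *out,
                             int rows, int cols, float s) {
    int t = blockIdx.x * blockDim.x + threadIdx.x;
    if (t < rows * cols) {
        int r = t % rows;
        int c = t / rows;
        int idx = r * cols + c;
        out[idx] = s * in[idx];
    }
}

// Hypothetical host helper: runs one of the two kernels on host arrays.
void scaleMatrix(const float *h_in, float *h_out, int rows, int cols,
                 float s, bool coalesced) {
    size_t bytes = (size_t)rows * cols * sizeof(float);
    float *d_in, *d_out;
    cudaMalloc((void**)&d_in, bytes);
    cudaMalloc((void**)&d_out, bytes);
    cudaMemcpy(d_in, h_in, bytes, cudaMemcpyHostToDevice);

    int threads = 256;
    int blocks = (rows * cols + threads - 1) / threads;
    if (coalesced) {
        scaleCoalesced<<<blocks, threads>>>(d_in, d_out, rows, cols, s);
    } else {
        scaleStrided<<<blocks, threads>>>(d_in, d_out, rows, cols, s);
    }

    cudaMemcpy(h_out, d_out, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
}
```

Timing both variants on a large matrix is a simple way to see how much the access pattern matters on a given device.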

Shared Memory Usage

Shared memory is much faster than global memory. Use it to cache frequently accessed data within a thread block.

Understanding the memory hierarchy with CUDA

This diagram illustrates the architecture of a multi-processor system with shared memory. Each processor has its own cache, allowing for fast access to frequently used data. The processors communicate via a shared bus, which connects them to a larger shared memory space.

For example, in matrix multiplication:

    #define TILE_SIZE 16  // must match blockDim.x and blockDim.y at launch

    __global__ void matrixMulSharedKernel(float *A, float *B, float *C, int N) {
        __shared__ float sharedA[TILE_SIZE][TILE_SIZE];
        __shared__ float sharedB[TILE_SIZE][TILE_SIZE];

        int bx = blockIdx.x;
        int by = blockIdx.y;
        int tx = threadIdx.x;
        int ty = threadIdx.y;
        int row = by * TILE_SIZE + ty;
        int col = bx * TILE_SIZE + tx;
        float sum = 0.0f;

        for (int tile = 0; tile < (N + TILE_SIZE - 1) / TILE_SIZE; tile++) {
            // Load one tile of A and one tile of B, padding with zeros at the edges
            if (row < N && tile * TILE_SIZE + tx < N)
                sharedA[ty][tx] = A[row * N + tile * TILE_SIZE + tx];
            else
                sharedA[ty][tx] = 0.0f;
            if (col < N && tile * TILE_SIZE + ty < N)
                sharedB[ty][tx] = B[(tile * TILE_SIZE + ty) * N + col];
            else
                sharedB[ty][tx] = 0.0f;
            __syncthreads();  // wait until the whole tile is loaded

            for (int k = 0; k < TILE_SIZE; k++)
                sum += sharedA[ty][k] * sharedB[k][tx];
            __syncthreads();  // wait before the tile is overwritten
        }

        if (row < N && col < N)
            C[row * N + col] = sum;
    }

This optimized version uses shared memory to reduce global memory accesses, significantly improving performance for large matrices.

Asynchronous Operations

CUDA supports asynchronous operations, allowing you to overlap computation with data transfer. This is particularly useful in machine learning pipelines where you can prepare the next batch of data while the current batch is being processed.

    cudaStream_t stream1, stream2;
    cudaStreamCreate(&stream1);
    cudaStreamCreate(&stream2);

    // Asynchronous memory transfers and kernel launches
    cudaMemcpyAsync(d_data1, h_data1, size, cudaMemcpyHostToDevice, stream1);
    myKernel<<<grid, block, 0, stream1>>>(d_data1, ...);
    cudaMemcpyAsync(d_data2, h_data2, size, cudaMemcpyHostToDevice, stream2);
    myKernel<<<grid, block, 0, stream2>>>(d_data2, ...);

    cudaStreamSynchronize(stream1);
    cudaStreamSynchronize(stream2);
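For cudaMemcpyAsync to overlap with kernel execution, the host buffer generally needs to be page-locked (pinned), for example allocated with cudaMallocHost. A minimal self-contained sketch of the pattern follows, assuming a trivial addOne kernel and a hypothetical addOneTwoStreams helper that splits one buffer across two streams.

```cuda
#include <cuda_runtime.h>

__global__ void addOne(float *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        data[i] += 1.0f;
    }
}

// Adds 1 to every element of h_data, processing each half in its own
// stream so one half's copy can overlap the other half's kernel.
// Assumes h_data was allocated with cudaMallocHost and n is even.
void addOneTwoStreams(float *h_data, int n) {
    int half = n / 2;
    size_t halfBytes = half * sizeof(float);

    float *d_data;
    cudaMalloc((void**)&d_data, n * sizeof(float));

    cudaStream_t streams[2];
    for (int s = 0; s < 2; s++) {
        cudaStreamCreate(&streams[s]);
    }

    int threads = 256;
    int blocks = (half + threads - 1) / threads;
    for (int s = 0; s < 2; s++) {
        float *h = h_data + s * half;
        float *d = d_data + s * half;
        // Work queued in the same stream runs in order: copy in, compute, copy out
        cudaMemcpyAsync(d, h, halfBytes, cudaMemcpyHostToDevice, streams[s]);
        addOne<<<blocks, threads, 0, streams[s]>>>(d, half);
        cudaMemcpyAsync(h, d, halfBytes, cudaMemcpyDeviceToHost, streams[s]);
    }

    for (int s = 0; s < 2; s++) {
        cudaStreamSynchronize(streams[s]);
        cudaStreamDestroy(streams[s]);
    }
    cudaFree(d_data);
}
```

The caller owns the pinned buffer and releases it with cudaFreeHost once the results have been read.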

Tensor Cores

For machine learning workloads, NVIDIA’s Tensor Cores (available in newer GPU architectures) can provide significant speedups for matrix multiply and convolution operations. Libraries like cuDNN and cuBLAS can use Tensor Cores without changes to your kernels when the hardware, data types, and math mode allow it.

Challenges and Considerations

While CUDA offers tremendous benefits for machine learning, it’s important to be aware of potential challenges:

Memory Management: GPU memory is limited compared to system memory. Efficient memory management is crucial, especially when working with large datasets or models.

Data Transfer Overhead: Transferring data between CPU and GPU can be a bottleneck. Minimize transfers and use asynchronous operations when possible.

Precision: GPUs traditionally excel at single-precision (FP32) computations. While support for double-precision (FP64) has improved, it’s often slower. Many machine learning tasks can work well with lower precision (e.g., FP16), which modern GPUs handle very efficiently.

Code Complexity: Writing efficient CUDA code can be more complex than CPU code. Leveraging libraries like cuDNN, cuBLAS, and frameworks like TensorFlow or PyTorch can help abstract away some of this complexity.
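To make the precision point concrete, a common pattern is to store inputs in FP16 and accumulate in FP32. The sketch below assumes that pattern for a dot product; toHalf, dotHalf, and dotHalfOnGPU are hypothetical names, and the one-atomicAdd-per-element reduction is chosen for brevity, not speed.

```cuda
#include <cuda_fp16.h>
#include <cuda_runtime.h>

// Convert FP32 values to FP16 on the device.
__global__ void toHalf(const float *in, __half *out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        out[i] = __float2half(in[i]);
    }
}

// Dot product with FP16 inputs and an FP32 accumulator.
__global__ void dotHalf(const __half *a, const __half *b, float *result, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        atomicAdd(result, __half2float(a[i]) * __half2float(b[i]));
    }
}

// Hypothetical host helper: returns the dot product of two host arrays,
// with the inputs rounded to FP16 on the device first.
float dotHalfOnGPU(const float *h_a, const float *h_b, int n) {
    float *d_fa, *d_fb, *d_result;
    __half *d_a, *d_b;
    cudaMalloc((void**)&d_fa, n * sizeof(float));
    cudaMalloc((void**)&d_fb, n * sizeof(float));
    cudaMalloc((void**)&d_a, n * sizeof(__half));
    cudaMalloc((void**)&d_b, n * sizeof(__half));
    cudaMalloc((void**)&d_result, sizeof(float));

    cudaMemcpy(d_fa, h_a, n * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpy(d_fb, h_b, n * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemset(d_result, 0, sizeof(float));

    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    toHalf<<<blocks, threads>>>(d_fa, d_a, n);
    toHalf<<<blocks, threads>>>(d_fb, d_b, n);
    dotHalf<<<blocks, threads>>>(d_a, d_b, d_result, n);

    float h_result = 0.0f;
    cudaMemcpy(&h_result, d_result, sizeof(float), cudaMemcpyDeviceToHost);

    cudaFree(d_fa); cudaFree(d_fb);
    cudaFree(d_a);  cudaFree(d_b);
    cudaFree(d_result);
    return h_result;
}
```

Storing the arrays as __half halves their memory footprint compared with float, while the FP32 accumulator limits the rounding error that would build up if the sum itself were kept in FP16.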

As machine learning models grow in size and complexity, a single GPU may no longer be sufficient to handle the workload. CUDA makes it possible to scale your application across multiple GPUs, either within a single node or across a cluster.
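Within a single node, the basic multi-GPU pattern is to enumerate the devices, select each one with cudaSetDevice, and give it a slice of the data. The following is a minimal sketch of that idea, not a tuned implementation; scaleKernel and scaleAcrossGPUs are hypothetical names, and the code also works when only one GPU is present.

```cuda
#include <algorithm>
#include <vector>
#include <cuda_runtime.h>

__global__ void scaleKernel(float *data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        data[i] *= factor;
    }
}

// Scales h_data in place, giving each visible GPU one contiguous slice.
// Returns the number of devices found (0 if there are none).
int scaleAcrossGPUs(float *h_data, int n, float factor) {
    int deviceCount = 0;
    if (cudaGetDeviceCount(&deviceCount) != cudaSuccess || deviceCount == 0) {
        return 0;
    }

    int chunk = (n + deviceCount - 1) / deviceCount;
    std::vector<float *> d_ptrs(deviceCount, nullptr);
    std::vector<int> counts(deviceCount, 0);

    // Queue work on every device first. Kernel launches return right away,
    // so kernels on different devices can run at the same time.
    for (int dev = 0; dev < deviceCount; dev++) {
        int offset = dev * chunk;
        int count = (offset < n) ? std::min(chunk, n - offset) : 0;
        counts[dev] = count;
        if (count == 0) continue;

        cudaSetDevice(dev);
        cudaMalloc((void**)&d_ptrs[dev], count * sizeof(float));
        cudaMemcpy(d_ptrs[dev], h_data + offset, count * sizeof(float),
                   cudaMemcpyHostToDevice);
        int threads = 256;
        scaleKernel<<<(count + threads - 1) / threads, threads>>>(
            d_ptrs[dev], count, factor);
    }

    // Collect results. Each blocking copy waits for that device's kernel.
    for (int dev = 0; dev < deviceCount; dev++) {
        if (counts[dev] == 0) continue;
        cudaSetDevice(dev);
        cudaMemcpy(h_data + dev * chunk, d_ptrs[dev],
                   counts[dev] * sizeof(float), cudaMemcpyDeviceToHost);
        cudaFree(d_ptrs[dev]);
    }
    return deviceCount;
}
```

Scaling across a cluster additionally needs a communication layer between nodes, which this sketch does not cover.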

CUDA Programming Structure

To effectively utilize CUDA, it’s essential to understand its programming structure, which involves writing kernels (functions that run on the GPU) and managing memory between the host (CPU) and device (GPU).

Host vs. Device Memory

In CUDA, memory is managed separately for the host and device. The following are the primary functions used for memory management:

cudaMalloc: Allocates memory on the device.

cudaMemcpy: Copies data between host and device.

cudaFree: Frees memory on the device.

Example: Summing Two Arrays

Let’s look at an example that sums two arrays using CUDA:

    #include <cstdlib>
    #include <cuda_runtime.h>

    __global__ void sumArraysOnGPU(float *A, float *B, float *C, int N) {
        int idx = threadIdx.x + blockIdx.x * blockDim.x;
        if (idx < N) C[idx] = A[idx] + B[idx];
    }

    int main() {
        int N = 1024;
        size_t bytes = N * sizeof(float);

        // Allocate and fill the host arrays
        float *h_A, *h_B, *h_C;
        h_A = (float*)malloc(bytes);
        h_B = (float*)malloc(bytes);
        h_C = (float*)malloc(bytes);
        for (int i = 0; i < N; i++) { h_A[i] = 1.0f; h_B[i] = 2.0f; }

        // Allocate device arrays and copy the inputs over
        float *d_A, *d_B, *d_C;
        cudaMalloc(&d_A, bytes);
        cudaMalloc(&d_B, bytes);
        cudaMalloc(&d_C, bytes);
        cudaMemcpy(d_A, h_A, bytes, cudaMemcpyHostToDevice);
        cudaMemcpy(d_B, h_B, bytes, cudaMemcpyHostToDevice);

        // Launch enough 256-thread blocks to cover all N elements
        int blockSize = 256;
        int gridSize = (N + blockSize - 1) / blockSize;
        sumArraysOnGPU<<<gridSize, blockSize>>>(d_A, d_B, d_C, N);

        // Copy the result back, then release device and host memory
        cudaMemcpy(h_C, d_C, bytes, cudaMemcpyDeviceToHost);
        cudaFree(d_A); cudaFree(d_B); cudaFree(d_C);
        free(h_A); free(h_B); free(h_C);
        return 0;
    }

In this example, memory is allocated on both the host and device, the input data is transferred to the device, the kernel is launched to perform the computation, the result is copied back to the host, and all memory is freed.
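The runtime calls in this example return a cudaError_t that the code never inspects, and a failed kernel launch is only visible through cudaGetLastError. A common remedy is a small checking macro. The sketch below shows one possible version: CUDA_CHECK, addKernel, and sumArraysChecked are hypothetical names, not part of the CUDA API.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper macro: abort with a readable message on any CUDA error.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            fprintf(stderr, "CUDA error: %s at %s:%d\n",              \
                    cudaGetErrorString(err_), __FILE__, __LINE__);    \
            exit(EXIT_FAILURE);                                       \
        }                                                             \
    } while (0)

__global__ void addKernel(const float *A, const float *B, float *C, int N) {
    int idx = threadIdx.x + blockIdx.x * blockDim.x;
    if (idx < N) C[idx] = A[idx] + B[idx];
}

// Same flow as the array-sum example, with every step checked.
void sumArraysChecked(const float *h_A, const float *h_B, float *h_C, int N) {
    size_t bytes = N * sizeof(float);
    float *d_A, *d_B, *d_C;
    CUDA_CHECK(cudaMalloc((void**)&d_A, bytes));
    CUDA_CHECK(cudaMalloc((void**)&d_B, bytes));
    CUDA_CHECK(cudaMalloc((void**)&d_C, bytes));
    CUDA_CHECK(cudaMemcpy(d_A, h_A, bytes, cudaMemcpyHostToDevice));
    CUDA_CHECK(cudaMemcpy(d_B, h_B, bytes, cudaMemcpyHostToDevice));

    int blockSize = 256;
    int gridSize = (N + blockSize - 1) / blockSize;
    addKernel<<<gridSize, blockSize>>>(d_A, d_B, d_C, N);
    CUDA_CHECK(cudaGetLastError());       // catches invalid launch configurations
    CUDA_CHECK(cudaDeviceSynchronize());  // catches errors raised while running

    CUDA_CHECK(cudaMemcpy(h_C, d_C, bytes, cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(d_A));
    CUDA_CHECK(cudaFree(d_B));
    CUDA_CHECK(cudaFree(d_C));
}
```

Exiting on the first error is a simplification that suits small programs; a library would more likely return the error code to its caller.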

Conclusion

CUDA is a powerful tool for machine learning engineers looking to accelerate their models and handle larger datasets. By understanding the CUDA memory model, optimizing memory access, and leveraging multiple GPUs, you can significantly enhance the performance of your machine learning applications.

#AI Tools 101#algorithm#Algorithms#amp#applications#architecture#Arrays#cache#cluster#code#col#complexity#computation#computing#cpu#CUDA#CUDA for ML#CUDA memory model#CUDA programming#data#Data Structures#data transfer#datasets#double#engineers#excel#factor#functions#Fundamental#Global

Note

The tenth Doctor in journeys end (i think?) said a TARDIS is made to be piloted by 6 timelords, what roles would each have in the TARDIS?

What roles would six Time Lords have in piloting a TARDIS?

This is more speculative, based on the known features of a TARDIS.

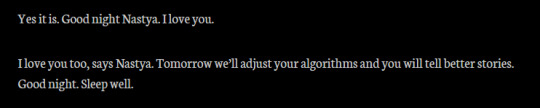

Console Panels and Systems

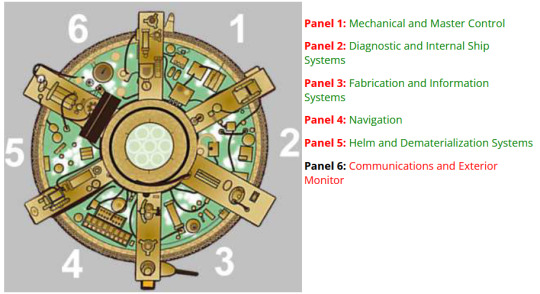

A TARDIS console is split into six 'panels', with each panel operating a different aspect of the TARDIS' systems. When there are six pilots, each Time Lord would likely specialise in operating a specific panel and system. Although the console layout may change with each TARDIS's 'desktop theme,' the six fundamental panels remain the same.

[Image ID: On the left is a top-down diagram of the 9th and 10th Doctor's coral theme TARDIS, divided into six panel sections. Each section is labelled from 1-6 clockwise starting from the 12 o'clock position. On the right is a text list of the panels and their names: Panel 1: Mechanical and Master Control, Panel 2: Diagnostic and Internal Ship Systems, Panel 3: Fabrication and Information Systems, Panel 4: Navigation, Panel 5: Helm and Dematerialisation Systems, Panel 6: Communications and Exterior Monitor./.End ID]

See this page on the TARDIS Technical Index for more variations on desktop themes.

👨✈️ Roles and Responsibilities

Here's a breakdown of each potential role and their responsibilities:

🔧 Panel 1: Mechanical and Master Control

Role: Chief Engineer

Responsibilities: The chief engineer monitors the TARDIS's overall operation. They ensure that all the mechanical systems and master controls are working properly. If anything goes wrong, they step in and fix it.

🛠️ Panel 2: Diagnostic and Internal Ship Systems

Role: Systems Analyst

Responsibilities: This person is all about the internals. They monitor life support, environmental controls, and the internal power grid. Basically, they make sure everything inside the TARDIS is working as it should.

🖥️ Panel 3: Fabrication and Information Systems

Role: Data Specialist

Responsibilities: Managing the TARDIS’s databases and info systems, and handling any fabrication needs. Whether it’s creating new tools, repairing old ones, or just making sure the information systems are up-to-date, they’ve got it covered.

🧭 Panel 4: Navigation

Role: Navigator

Responsibilities: Plotting courses through time and space. The Navigator makes sure the TARDIS lands where it’s supposed to, calculating all those tricky temporal vectors and spatial positions. They work closely with the Pilot to make sure the journey is smooth and safe.

🚀 Panel 5: Helm and Dematerialisation Systems

Role: Pilot

Responsibilities: This is the person at the helm, controlling take-off, landing, and in-flight manoeuvres. They handle the dematerialisation and rematerialisation of the TARDIS, making sure it takes off and lands without a hitch.

📡 Panel 6: Communications and Exterior Monitor

Role: Communications Officer

Responsibilities: They handle all external communications and keep an eye on what’s going on outside, whether that means sending or receiving messages or watching out for any threats or anomalies.

🏫 So...

Potentially, each Time Lord on the TARDIS would have a specialised role associated with a specific panel. Ideally, they're probably all working together like a well-oiled machine. However, poor old solo pilots have to jump around like madmen trying to cover all the controls at once.

Related:

Do we have any info on TARDIS biology?: Overview of TARDIS biological aspects.

Can a non-Gallifreyan benefit from a symbiotic bond with a TARDIS?: Non-Gallifreyan symbiosis with a TARDIS.

How to locate a TARDIS pilot: Guide for locating a pilot or other crew members using the TARDIS.

Hope that helped! 😃

Any purple text is educated guesswork or theoretical.

More content ...

→📫Got a question? | 📚Complete list of Q+A and factoids

→😆Jokes |🩻Biology |🗨️Language |🕰️Throwbacks |🤓Facts

→🫀Gallifreyan Anatomy and Physiology Guide (pending)

→⚕️Gallifreyan Emergency Medicine Guides

→📝Source list (WIP)

→📜Masterpost

If you're finding your happy place in this part of the internet, feel free to buy a coffee to help keep our exhausted human conscious. She works full-time in medicine and is so very tired 😴

#doctor who#gil#gallifrey institute for learning#dr who#dw eu#gallifrey#gallifreyans#whoniverse#ask answered#gallifreyan culture#tardis


Text

Introduction to Armchair Activism

Current feelings about the state of radblr.

Fundamentals

"Yes, Everyone on the Internet Is a Loser." Luke Smith. Sep 3, 2022. YouTube.

An activist movement can be a place to build community with like-minded people, but action is its foremost purpose, not community. To allow yourself and other activists to remain effective, you are obliged to abandon your personal dislikes of other individual activists. Disagreements are worth discussion, but interpersonal toxicity is not.

Connect with in-person community and do not unhealthily over-prioritize online community. Over-prioritization of online community is self-harm.

Luke is a loser, but his channel is teeming with entry-level digital literacy information and advice pertaining to healthy use of technology for us cyborgs.

"Surveillance Self-Defense: Tips, Tools and How-Tos For Safer Online Communication." Electronic Frontier Foundation.

Hackblossom, outdated, is discontinued. The EFF project Surveillance Self-Defense is up-to-date, comprehensive, and follows personal educational principles of simplicity and concision.

To learn more about general (not focused solely on personal action) cybersecurity, visit Cybersecurity by Codecademy and Cyber Security Tutorial by W3Schools. Both contain further segues into other important digital literacies.

Direct recommendation: Install and set up the Linux distribution Tails on a cheap flash drive.

Direct recommendation: Develop your own home network security schema.

Direct recommendation: Always enable 2FA security for Tumblr, disable active / inactive status sharing, and learn to queue reblogs and posts to protect against others' interpretations of your time zone.

Direct recommendation: It's both possible and relatively simple to host your own instance of a search engine using SearXNG.

Zero-Knowledge Architecture.

As a remote activist (even if also a hybrid activist), none of your action should be taken on, using, or interfacing with non-zero-knowledge-architecture services. Tumblr is, of course, a risk in and of itself, but you should not be using services provided by companies such as Google, Microsoft, or any others based in or with servers hosted in 14 Eyes agreement nations.

Search for services (email, word processor, cloud storage) which emphasize zero-knowledge architecture. Businesses whose services are structured as such cannot hand over your data and information, as they cannot access it in the first place. If they cannot access the majority of your metadata, either - all the better.

Communications for Armchair Activism

"Technical Writing." Google.

Contained within the linked page at Google Developers, the self-paced, online, pre-class material for the courses Technical Writing One, Technical Writing Two, and Tech Writing for Accessibility teaches activists to communicate technical concepts in plain English.

"Plain Language." U.S. General Services Administration.

Plain language is strictly defined by U.S. government agencies, which are required to communicate in it for simplicity and quick, thorough comprehension of information.

"Explore Business Law." Study.com.

Extensive courses are offered to help you quickly absorb principles of business law such as antitrust law, contract law, financial legislation, copyright law, etc. Legal literacy is often the difference between unethical action of a business and its inaction. Legal literacy is also often the difference between consideration and investment in your policy idea and lack thereof.

"Business Communication." Study.com.

Now that you're able to communicate your prioritized information, you may also initiate writing with bells and whistles. While other activists care most about the information itself, business communication allows you to communicate your ideas and needs to those you must win over and convince that your ideas are worth investing in.

Logic.

Learn it through and through. Start with fallacies if you're better at language and work your way backwards to discrete mathematics; start with discrete mathematics if you're better at maths and work your way forwards to fallacies, critical literacy, and media literacy. State that which you intend to state. Recognize empiricism and rationalism for what they are. Congratulations: you are both a mathematician and a law student.

Economic Literacy for Armchair Activism

"Microeconomics." Khan Academy.

"Macroeconomics." Khan Academy.

The globe operates on profitability. Women's unpaid labor is a massive slice of the profitability pie. While it's possible to enact change without understanding all that drives the events around you, it's impossible to direct or meaningfully manipulate the events around you beyond your scope of comprehension.

Understand economics or be a sheep to every movement you're active in and to every storm that rolls your way.

#masterpost#armchair activism#remote activism#hybrid activism#cybersecurity#literacy#digital literacy#women in tech#radical feminist community#mine


Text

this one may get me burned at the stake given that i am in a weird position considering that i work in technology, but here goes.

y'all do realize that... none of this *gestures broadly at absolutely everything* would exist without the work of hundreds of engineers/programmers and network specialists and data scientists and database architects and other people who work in the technology industry, right?

i just saw someone lamenting that they could learn to program, but felt they would be absolutely outcast from their social circle for it. are we seriously doing this? that is abhorrent and we should be better. people are still needed in these jobs. it's not immoral to work in technology.

this website in particular seems to have this really weird relationship where there's a regressive take against technology and technological advancements, discounting that there are human beings doing this work who believe in the good that it actually gives back to the world.

some of us work in industries that are fundamentally supporting and shaping the way that you live your life. every website you use is built and maintained by a "programmer" aka an Engineer or some type of web developer.

for the love of god, the problem is not technology. the problem is not AI - yes, even AI. the problem is not ALL tech workers and tech companies.

please stop ostracizing tools like technology and AI and the people who work with them who believe in the same things you believe and want to see technology used for good and the betterment of the world.

i literally could not be typing this message to you right now if it wasn't for something like a dozen technology companies making it possible. for a website with goals of destroying the [insert faceted thing here] binary, it sure does like to tie things to the classic construct of "Good vs. Bad."

technology WILL NOT SAVE US. but it also can help make things EASIER along the way if we allow it and use it properly.

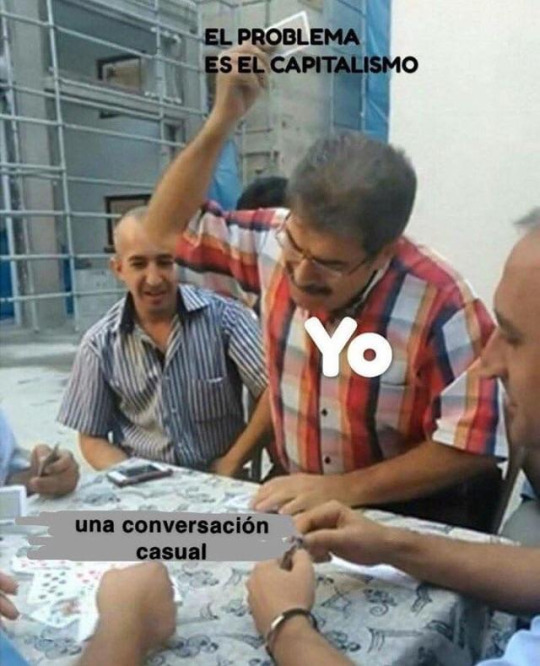

direct your blame to the appropriate source. see the image above.


Note

i wasn't baiting u. seriously i've been reading up on shit and to deny fictions effects is to deny reality. jaws caused the shark decline. rosemarys baby caused the satanic panic. this is common knowledge

ok. this is incorrect in regards to the specific cases you cite and incorrect in general in regards to the outsize causal role you attribute to media products in cultural phenomena. it is fundamentally unserious to assert that the film jaws is 'the' cause of shark population declines when killings of sharks by humans occur the vast majority of the time for reasons like the use of industrial fishing nets; global populations of many animals have declined since jaws for reasons similarly economic and wholly unrelated to it; and people feared sharks long before the release of jaws and have instituted legal protections for the great white, in particular, since then. it is similarly unserious to assert that the film rosemary's baby is 'the' reason usamericans in the 1980s fear-mongered about secret satanic cults committing ritual child abuse when this was a myth deeply useful in shoring up the legal and social power of parents; was copacetic with an overall pro-cop law-and-order attitude picking up steam for many reasons including the wake of the civil rights movement; and was never even believed by most people because it appeared outlandish. again the broader stance here (that media can or has singlehandedly caused massive cultural phenomena arising de novo from those works) is simply an indefensible argument. where do you think these narratives arise from in the first place? what makes them coherent, compelling, and possible to parse as meaningful for audiences? i would suggest reading up on some basic tenets of the base/superstructure relationship and start questioning narratives that make tidy and emotionally compelling arguments about film as an omnipotent and unilateral engine of social change without considering other explanations for the phenomena and data in question, or the factors that lead to the production of cultural artefacts like films in the first place.
