#data drift

Explore tagged Tumblr posts

Text

Fluent Bit and AI: Unlocking Machine Learning Potential

These days, everywhere you look, there are references to Generative AI, to the point that what have Fluent Bit and GenAI got to do with each other? GenAI has the potential to help with observability, but it also needs observation to measure its performance, whether it is being abused, etc. You may recall a few years back that Microsoft was trailing new AI features for Bing, and after only having…

View On WordPress

#AI#Cloud#Data Drift#development#Fluent Bit#GenAI#Machine Learning#ML#observability#Security#Tensor Lite#TensorFlow

0 notes

Text

Data Drift: How Does it Affect Your Machine Learning Model?

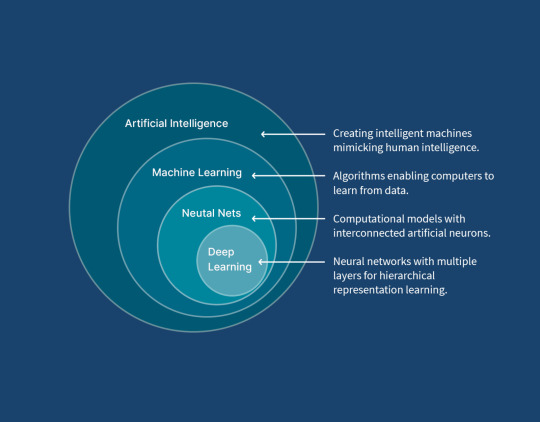

Across the globe, organizations are tapping into the potential of data to drive informed decision-making, streamline operations, and gain a competitive edge through artificial intelligence and machine-learning. However, amidst this data-driven revolution, a formidable challenge known as “Data Drift” looms, capable of exerting a profound impact on the performance of machine learning models. In…

View On WordPress

0 notes

Text

Okay I'm curious: I've seen a lot of Christians use/refer to the phrase "hosanna in the highest!" which is used in the New Testament and I've frequently heard it pronounced "hoh-ZAHN-ah". However, it's a much older liturgical phrase in Hebrew and definitely not pronounced like that. I want to know: (1) were you taught the actual meaning of this word by your community/do you know what it actually means without googling it, (2) what variety of Christian are you, and (3) if, after googling it, were you correct?

Sorry fellow yidden and other non-Christians; this poll is specific to people who identify as Christian and/or who were raised as such. (Edit: gerim who were raised Christian can vote, but you have to base it off of what you were taught as a Christian, not what you know now.)

Christians who answer: if you googled this after voting yes and were taught wrong about it, please let me know in the notes.

(If you're wondering if you "count" as Christian or having been raised as such, for these purposes I would say interpret it broadly to include anyone who views Jesus as the messiah and grew up reading the New Testament as part of your bible.)

#christian#non-Christians please boost but don't vote#I have... a theory about this but am curious to have at least informal data about this first#yes I realize linguistic drift from other languages is probably how ''hozanna'' happened#but the Hebrew is different#this post brought to you by: Sukkot#every hour is theology hour around here apparently

727 notes

·

View notes

Text

...

#hm. im in limbo. but at least i can draw again at last. ive never spent so long not wanting to draw. it was terrible#my job search lasted 4 days before i secured a position at target but i dont start until the 26th so im drifting until then#it feels so weird. like i dunno. i keep thinking abt jobs in a weird way now bc i just sorta drifted into what i do#weird academic stuff but i think most jobs arent like being a grad student and that never really occured to me#i dunno why. i could have done so many things but here i am. an ecologist mostly. i dunno. well see what the summer brings#maybe ill grow some social skills. its sorta weird but like the medication has made my head less terrible with intrusive thoughts. like i#can actually drive my car without hyperventilating which is fucking wild. so Maybe ill grow some confidence abt interacting with the world#going back in the fall still seems impossible rn but so does starting a job somewhere else. but i dunno#not where i expected to be in my life. im just lucky i dont have to worry much abt money#especially bc i got an ultrasound done so they cold make sure something wasnt wrong with my uterus#and its fine. guess it just hates me but that means i spent like 350 dollars for a 10min scan that showed nothing#ay. the us medical system#anyway. i guess ill continue drifting until the 26th#probably i should find something to do. or work on my old unpublished data#unrelated

15 notes

·

View notes

Text

The man on the radio keeps playing Enya ... not my cup of tea, but then I have coffee. Today's quiz was all about The Boss. The answer was very obvious, even to me, but I never text in. The traffic lady talked about traffic, before going on to say two friends had stayed over and were extreme fans of the man on the radio and would he give a shout out to them. The chef on the radio is in and as it's Friday it's all about fish, prawns and breadcrumbs.

The three minute misery came and went with reports that the data centre people need more of everything to build even more data centres. They need more land ... and electricity ... and funding ... and ... and ... and ... so could the Emeraldians please get off the Emerald Isle. I don't think the chef on the radio has a recipe for that.

The peony has finally flowered. The plant has quite some age on it. I've been here 10 years and it was here before I came. Have been given a Tayberry plant and a blueberry plant. The Tayberry is a cross between a raspberry and a blackberry. I already don't get any blackberries or raspberries from the garden ... so I'm pretty sure if these fruits appear the birds will be the only ones holding up taste and texture score cards.

Pineapple, raspberries and blueberries are on the cake menu today, plus flapjacks, plus the coffee pot. Friday, Friday, Friday and the man on the radio is playing The Drifters 'Up On The Roof' ...

#man on the radio#traffic lady#chef on the radio#friday#berries#raspberry#blueberry#tayberry#peony#fish#friday is fish day#bloody data centres#flowercore#naturecore#treecore#sycamore#writers of tumblr#wry humour#humour#original writing#writers on tumblr#good morning#photographers on tumblr#original photography on tumblr#naturephotography#three minutes#three minute misery#ivycore#drifting

11 notes

·

View notes

Text

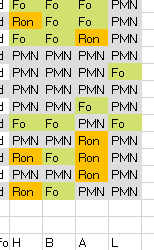

Oddly enough, I haven't encountered any Purple Mountain legs in Gen 5 yet despite them being a good chunk of the leg genes from Gen 4. I'm guessing that the first 5-8 norns in Gen 4 just got real busy with each other, making the percent chance of inheriting PMN legs pretty low. That should change once we get deeper into Gen 5, although I'm guessing that Forest legs are going to start dominating the population. Just a guess though. Genetic drift is one hell of a thing...

#data#family tree#creatures 1#genetic drift#oh also i'm missing MOST of the lineage for these guys#however i can trace some of the norns with horse genes#if they have a horse head or body then they HAVE to be related to one norn#same with arms#it will also tell me who's related to Conifer (4-7)???#it makes no sense#i love it#i eat this nonsense UP

2 notes

·

View notes

Text

Data could pilot a Jaeger by himself but he and Geordi are drift compatible so he would NEVER.

#that is all#daforge#star trek tng#data and geordi#data tng#geordi la forge#drift compatible#pacific rim#commander data

24 notes

·

View notes

Text

what if i backed up my pc chao garden and started it over and played it like a normal person and not like a dog breeder. as a treat

#soda offers you a can#imagine if there had been a 3ds chao garden game so i could have it portably and enjoy all the features#a ds chao garden even im not picky i just want it portably on two screens#“switch is portable tho” switches are massive in comparison and have joycon drift#and i'd much rather shove a ds/3ds into a bag than a goddamn 500€+ home console thank you very much#anyway time to investigate save data locations

3 notes

·

View notes

Text

A messy animation of the hand break hero

#I drew everything and scanned it so it could be warped and animated in Procreate#which unfortunately means there’s too much data for tumble to handle so it’ll have to settle with the potato web ready version#my drawing#artists on tumblr#my sketchbook#artist alley#my painting#drawing#animation#2d animation#animated gif#2d animated video#animated#oil pastels#fiat#fiat 500#hand break turn#hand break hero#tokyo drift#drift#skid

5 notes

·

View notes

Text

Inteligencia Artificial en Marketing y Publicidad: Herramientas Clave que Definen el Futuro

¿Qué es la Inteligencia Artificial (IA) en Marketing y Publicidad y Para Qué Sirve? La Inteligencia Artificial (IA) en Marketing y Publicidad se refiere a la aplicación de tecnologías y algoritmos de IA (como el aprendizaje automático, el procesamiento del lenguaje natural y el análisis predictivo) para optimizar y automatizar diversas tareas y estrategias de marketing. No se trata de robots…

#Adext AI#Adobe Sensei#AdTech#Albert AI#Amplify.ai#análisis predictivo#Aprendizaje Automático#Automatización de Marketing#Big Data Marketing#Brandwatch#chatbots#Conversica#Cortex#Crimson Hexagon#Drift#Experiencia del Cliente#Generación de Contenido AI#HubSpot Marketing Hub#IA en Marketing#inteligencia artificial#Marketing Conversacional#marketing digital#Marketo Engage#MarTech#Optimización de Campañas#Optimove#Persado#personalización#Phrasee#Publicidad Online

0 notes

Photo

9:50 AM EST December 28, 2024:

The Mercury Program - "Slightly Drifting" From the album A Data Learn the Language (September 10, 2002)

Last song scrobbled from iTunes at Last.fm

0 notes

Text

oh. i understand the hype about next gen now.

Data,

#like it is the whole crew obv him himself would be good but not the same thing#but its like how when i get people into (my beloved) tos#i can see the change when they're like. oh. i get it. Spock#like its the whole crew! BUT#u find the- the--#yeah#i get it now!#Data#or ds9- Dax#i wonder if this is part of what contributes to voyager's status#because it's captain janeway (o captain my captain) who is that#but since she's the captain it's not as expected so if you don't go in knowing that it's totally hard to get your head around?#but also-- and this isn't a detriment-- Spock is so strong with that in TOS and i wonder if that drifts and changes th/o#like how much presence that is as a unit#hm. hm hm hm hm. much to think about#hm. you know that recent power rangers movie?#im not 18 it was more than two years ago but--#one of things important/ i respect about it was that they cast a black character as the spock#now did they do a good job? eh#but they set it up like that and that's a good thing#and i may be misinterpreting but i feel like power rangers is for the billys of the world yk?#as a billy myself

0 notes

Text

It’s time for the long awaited

✨Generation 5 Overview✨

It’s a looooong post, so if you care to read, it’s under this handy dandy read more.

The generation was made up of 47 norns and lasted approximately 58 hours, including a period of time where I left the game on overnight. The average lifespan was 6:32, with the highest natural lifespan being 9:11 and the lowest being 5:35.

Phenotypes

There were 188 body-type genes total.

45.21% were Purple Mountain, 39.89% were Forest, 6.38% were Horse, 6.38% were Ron, and 2.13% were Banana (For funsies at the end of the run. There were no males to breed with.) I did not see any body-type genes disappear.

I’m now seeing Horse genes and Ron genes intersect in the gene pool timeline, but no norn had both Horse and Ron phenotypes.

The female:male ratio was 2.13:1. Lucky guys.

Genotypes

Two norns had extra pigment genes.

One norn had an extra chemical emitter gene.

One norn produced alcohol in some amount in all stages of life. The stumbling was the giveaway. It’s possible that norns are producing odd chemicals right under my nose, but I haven’t caught them because the effects aren’t obvious.

One norn seemed unable to give birth (Gonadotrophin maxes out but progesterone stays at a low level). I used the Advanced Breeder’s Kit to help her out a few times. Sometimes I can’t bear to see them suffer. Plus she still needed to eat like a pregnant norn and was taking a lot of time to care for.

No norns inherited the 100% life force gene, sadly.

Deaths

37/47 were exported due to old age (Only 78.7%, yikes)

5/47 died from Antigen 1 (10.6%)

3/47 died upon being imported (6.4%)

2/47 died from “Failure to thrive”, which includes Child of the Mind syndrome and the lack of interest in eating (4.3%)

Poor norns kind of had a rough time in this generation.

Important Discoveries

The game tries to “correct” your gender ratio when hatching eggs, leading to long stretches of males or females. This is why the next generation is going to be mostly males, sometimes in stretches of 12-13. I am fixing this in the next generation by occasionally exporting all but one female (To “cancel out” my grendel) to hatch eggs when I have nowhere to put them in order to get a ratio closer to 1:1 for Gen 7.

I now have a Disease tab in my Excel doc keeping track of what diseases do what and here is what I’ve found:

Antigen 1 is killer. High frequency of sneezes/coughs, leading to difficulty feeding norns to keep their life force up, and slow antibody response time. Absolutely deadly if your norn is not a reliable eater.

Antigen 3 is easy to get over. I had one note about it in my journal (From before I had my Diseases tab) saying that antibody production is so fast that it almost kills the antigen before it hits “critical mass” in the Biochemistry tab of the Science Kit.

Antigen 5 isn’t horrible. The antibody response time is very good, although life force declines rapidly. I had two norns on opposite sides of the world contract it very close together, so I can’t really say anything about infectiousness, but I’d guess it was a coincidence.

New Tools

The community patch, which does a lot of things. (May provide link to list eventually)

The Grendel Friendly COB, since now I can keep an eye on my little dude.

The Advanced Breeder’s Kit, which should help any norns experiencing pregnancy issues, although I am not yet sure if that’s a good idea. You will definitely hear about it in the next report if it was a bad idea

I also found the Genetics Kit and got it working, which should solve any issues if I accidentally leave the game on. That seems to happen at least once a generation, but now I close the game if I’m going to step away from my computer. That should stop the problem before it even starts.

—

Whew! That was a longer summary than I meant to put together, but it’s good for me to get it all down and in one place, even if nobody but me finds it interesting.

On to Generation 6! 😁

#summary#overview#genetic data#genetic drift#statistics#this taught me how to make ratios to 1 btw#i must have learned that in high school at some point but that was 11-15 years ago#god this was so much longer than i originally intended#and it took an hour to put together#also you can tell that i’m Super Serious bc i put a period at the end of the ending sentence in a paragraph#just a small quirk of mine

1 note

·

View note

Text

SBD engine, 2l, built in great britain, around 350hp to the rear wheels with a weight of 480kg, this westfield is the perfect machine for race days, a perfect image of the classic Lotus Eleven

#driftnoob3 obsesion quicks in#car spotting#drift carspotting#I had to ask the owner for the data of the car#cool guy#I love car guys :]

0 notes

Text

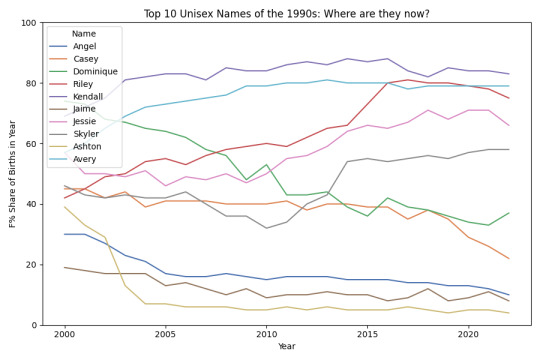

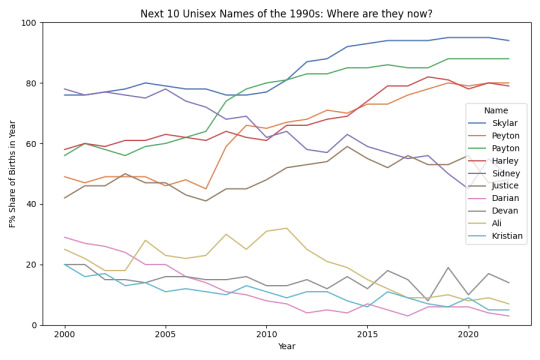

Top unisex names of the 1990s: where are they now?

The below two plots show the top 20 unisex/gender-neutral names of the 1990s, based on total usages from 1990-9. You can see the gender trend (F% share) post-1999, split into two plots for better visibility.

#gender-neutral#unisex#drift of unisex names#unisex names#gender-neutral names#neutral names#social security administration#social security administration name data#social security administration data#ssa name data#ssa data#name data#names data#naming data#names#naming#naming trends#name trends

0 notes

Text

The Lake House

Part 1: All of Us Strangers

Sana x Miyeon x Male Reader

word count 22K

You pull up to the lake house in your beat-up SUV, tires crunching on the gravel driveway, and the second you step out, you’re hit with it—this place is way more stunning than the pics online. The air smells like pine and damp earth, and the lake stretches out in front of you, its surface flat and gray under a thick blanket of clouds. The house itself is this cozy, modern thing—wood and glass, with a big deck overlooking the lake. It’s got this vibe, like it’s begging you to chill out and forget the world for a while. You’re already thinking, Shit, if this week goes as good as it looks, maybe I’ll buy this place. Peace, quiet, and nature all around—perfect for your photography, which is the whole damn reason you’re here. And you’d bet your camera nobody’s around for miles—pure solitude, just how you like it—until you catch a faint wisp of smoke curling up from the chimney of that dark house across the lake, and now your solo trip’s got some unexpected company popping off.

You pop the trunk and grab your gear—camera bag slung over your shoulder, a duffel with clothes, and a cooler stuffed with groceries you snagged earlier. Your day job’s nothing special, just some remote gig doing data entry for a logistics company. It’s boring as hell—punching numbers into spreadsheets, tracking shipments, answering emails from people who can’t figure out their own schedules. Pays the bills, though, and it’s flexible enough to let you fuck off to places like this whenever you want. Photography’s where your heart’s at. You’ve been at it for years, lugging your Canon everywhere, chasing the perfect shot. Landscapes mostly—sunsets, forests, water, anything that moves you. You’re no pro, but you’re good, and you’ve got a decent following on Insta for it. This trip? It’s all about that—getting out, breathing, and nailing some killer shots.

The lake house sits on this little peninsula, surrounded by trees so thick you can barely see the dirt road you came in on. It’s isolated, yeah, but not too far out. There’s a small city—more like a big town, really—about twenty minutes back. You stopped there on the way in, hit up a grocery store for the basics: beer, burgers, some frozen pizzas, and a bag of apples ‘cause you’re trying to be healthy or whatever. They’ve got a coffee shop and a gas station too, so you’re not totally cut off. Still, out here, it’s just you, the water, and the woods. No traffic, no neighbors blasting music—pure silence, except for the occasional bird or ripple on the lake.

You haul your stuff inside, drop it on the hardwood floor, and take a sec to check the place out. Big windows everywhere, letting in that soft, cloudy light. The living room’s got a plush couch and a stone fireplace you’re already itching to use. Kitchen’s sleek, all stainless steel and granite, and the bedroom upstairs has a view that makes you wanna cry—straight across the lake. Speaking of which, you step out onto the deck, hands in your pockets, and squint through the gloom. On the far shore, maybe half a mile away, there's that other house. Two stories, painted some dark color—navy or black, hard to tell with the weather. It’s got these big windows too, glowing faintly, and there’s a car parked out front. A white sedan, nothing fancy. There's definitely someone there, you think, and it weirds you out a little. You weren’t expecting company out here, not this close. The mystery of it nags at you—who the hell are they? Vacationers? Locals? You shake it off for now, but your eyes keep drifting back to that house as you unpack.

The clouds hang low, heavy with the promise of rain, and the air’s got that cool, damp bite to it. You grab your camera—couldn’t resist—and step back outside, adjusting the lens. The lake’s like a mirror, reflecting the sky, and the trees are all moody greens and browns. You snap a few shots, playing with the exposure, already imagining how they’ll look edited. This spot’s a goldmine; you can feel it. But that house across the water—it’s still there in the corner of your frame, pulling your focus. You zoom in, just curious, but it’s too far to make out much. Still, you’ve got this itch now, this tiny spark of intrigue. Whoever’s over there, they’ve got no idea you’re watching.

You’re fiddling with your camera, trying to frame up a shot of some birds skimming the lake, when movement catches your eye. Two figures step out of that dark house across the water. Girls, both of them, and even from this distance, they stand out. One’s got silky brown hair that catches the dull light, flowing down her back like she just stepped out of a shampoo ad. The other’s got jet-black hair, shorter, framing her face. They’re dressed casual—leggings and hoodies, nothing fancy, just comfy vibes. The black-haired one’s got a phone pressed to her ear, pacing a little, while the brown-haired one hovers close, hands in her pockets. You freeze for a sec, then casually swing your camera away, pretending to focus on the lake, the trees, anything but them. Don’t be that guy, you tell yourself, heart picking up a bit. Last thing you need is them thinking some random dude’s creeping on them with a lens.

But your curiosity’s a bitch. After a minute, you sneak the camera back their way, zooming in just enough to see them better. And then—shit—they’re looking right at you. Like, right at you. Your stomach drops, and you yank the camera down, turning your head so fast you almost tweak your neck. Fuck, fuck, fuck. You can already hear the headlines: “Outsider Caught Stalking Innocent Girls With Telephoto Lens.” You’re not that guy, but try explaining that across a lake. Hoping they didn’t get a good look, you ditch the deck and hustle to your car, popping the trunk like you’ve got urgent business. You grab the cooler and a bag of groceries, hauling them inside, your pulse still thudding in your ears.

You’re not out there five minutes before you’ve gotta go back for the rest. Stepping onto the deck again, you freeze—they’re coming your way. Like, actually walking around the lake toward your side. Your brain scrambles. Bolting inside might look shady as hell, but standing here like a deer in headlights? Not much better. You opt to stay, fiddling with something in the trunk—your spare tire, maybe?—pretending you’re too busy to notice them closing in. Your palms are sweaty, and you’re half-braced for them to start yelling or waving a phone with 911 already dialed.

“Hey!” a voice calls out, bright and chill, not pissed. You glance up, and the black-haired girl’s waving at you, a little grin on her face. You wave back, tentative, still expecting the vibe to shift. “Didn’t know anyone was over here,” she says as they get closer, her tone all friendly-like. “This place was a total dump last year—falling apart, windows smashed, the works. Looks dope now, though. They fix it up?”

You nod, relaxing a bit. “Yeah, rented it for the week. Guess it got a glow-up since then.” Up close, she’s got this energy—outgoing, loud in a good way. She sticks out her hand. “I’m Miyeon. This is Sana.” She jerks her thumb at the brown-haired girl, who gives you a small smile and a nod, quieter, maybe shyer.

“Sana, hey,” you say your name as you shake Miyeon's hand, then glancing at Sana. “Yeah, I’m just crashing here for a bit. You guys local?”

“Nah,” Miyeon says, leaning against your car like she owns it. “This house over there? My parents’. Been coming here forever, usually with a crew of friends. It’s our spot.” She gestures across the lake, where that dark two-story looms.

“Friends?” you ask, glancing between them. “Where’s the rest of the squad?”

Miyeon’s face falls a little, and Sana looks down at her shoes. “Yeah, that’s the shitty part,” Miyeon says, voice dipping. “They just called me—like, right before we came over. There’s a fuckin’ landslide or something on the main road in. Rain’s been nuts, and it’s blocked off. They were driving up from a couple hours away, so they just turned back. Not worth the hassle.”

“Damn,” you say, genuinely feeling for them. “That sucks. So what’s the plan now?”

Miyeon shrugs, kicking a pebble. “Hang out, I guess. Wait for the road to clear, then head home. Not much else to do.”

Sana pipes up then, her voice softer but curious. “That camera,” she says, nodding at it slung over your shoulder. “You a photographer or something?”

“Nah, just a hobby,” you say, brushing it off. “I work some boring-ass data job—spreadsheets and shit. This is what keeps me sane. Love shooting nature, landscapes, whatever catches my eye.”

Miyeon perks up. “You got an Insta for it? Let’s see.” You hesitate, then rattle off your handle. She pulls out her phone, taps away, and Sana leans over her shoulder as they scroll. “Yo, these are good,” Miyeon says, legit impressed. “Like, really good. You’re underselling yourself, dude.”

“Yeah,” Sana adds, her shy edge melting a bit. “The lighting in this one? Wow.” She points at her screen, and you feel a dumb little rush of pride.

“Thanks,” you say, scratching the back of your neck. “I’m here to chill and snap some shots of the lake, the woods, you know. Recharge.”

“Smart move,” Miyeon says. “We were gonna swim out there—” she nods at the pier stretching into the lake—“but it’s freezing. Usually it’s warm enough this time of year, but not today.”

“Global warming’s fucking with everything,” you toss out, and they both nod like, yep, that tracks.

Then Miyeon tilts her head, grinning. “Hey, since you’re Mr. Camera Guy, how about you take a pic of us out on the pier? Something to remember this weird-ass trip by?”

You blink, caught off guard, but they’re both looking at you expectantly. “Uh, yeah, sure,” you say, slinging the camera off your shoulder. “Let’s do it.”

They lead the way to the pier, Miyeon strutting ahead like she’s on a mission, Sana trailing a step behind, sneaking little glances at you. You’re still buzzing from the fact they’re cool with you—more than cool, actually friendly. You follow the girls down to the pier, boots thudding against the weathered wooden planks. The lake stretches out around you, still as glass under the heavy, gray sky, and the air’s got that sharp, pre-rain chill. Miyeon’s practically bouncing as she strides to the end, her black hair swinging, while Sana trails a little slower, her silky brown locks catching the faint breeze. They stop at the edge, the water lapping gently below, and turn to face you. “Alright, camera guy,” Miyeon says with a grin, planting her hands on her hips. “Work your magic.”

You lift the Canon, squinting through the viewfinder, and—damn—they’re gorgeous. Like, unfairly photogenic. Miyeon’s all confidence, popping a playful pose, one leg bent, head tilted, flashing a smirk that’s equal parts goofy and charming. Sana’s quieter about it, crossing her arms and giving a shy smile, but there’s something striking in the way she stands, the way her hair frames her face. You snap a few shots—wide angles with the lake behind them, then some tighter ones, playing with the depth of field so the cloudy horizon blurs out. Miyeon keeps it lively, throwing out dumb poses—peace signs, a fake pout—while Sana giggles and follows her lead, loosening up bit by bit.

“Yo, let’s see!” Miyeon calls after a dozen clicks, jogging over with Sana in tow. You flip the camera around, scrolling through the shots on the screen, and their faces light up. “Holy shit, these are fire,” Miyeon says, leaning in so close her shoulder brushes yours. “You sure you’re not a pro?”

“They’re so good,” Sana adds, her voice softer but just as impressed. “Like, we actually look cool.” The pics are sharp, the girls popping against the moody backdrop, their colors—black hoodie, brown hair—standing out in the gloom. You nailed the focus, the composition, everything.

“Yeah, well, you guys make it easy,” you say, shrugging, though you’re secretly stoked they like them. “Wish the weather wasn’t so shitty, though. This light’s all flat and gray—makes it look like you’re in some creepy thriller flick or something.”

Miyeon’s grin falters for a sec, and she nudges you with her elbow. “Dude, don’t even joke about that. We’re already kinda freaked out being alone over there.”

You laugh, raising an eyebrow. “What, you think some axe murderer’s hiding in the woods? Any crimes around here I should know about?”

She shakes her head, smirking but with a little edge. “Not that I’ve heard of, thank God. Just… it’s quiet, you know? Too quiet sometimes.”

“Fair,” you say, glancing out at the lake, the stillness of it almost eerie now that she’s put the thought in your head. “Well, if you guys need anything—someone to fend off the boogeyman or whatever—just hit me up. I’m right across the water.”

Miyeon’s eyes spark up, and she pulls out her phone. “Bet. What’s your Insta again? I’ll follow you, and you can DM me those pics.” You give her the handle, and she taps it in, tossing you hers in return—@miyeonnotmignon, which makes you snort ‘cause it’s so her. “Send ‘em whenever,” she says. “I need these for the grid.”

Sana glances at the sky, tugging her hoodie tighter. “We should head back. Looks like rain’s coming soon.”

“Yeah, true,” Miyeon agrees, squinting up at the clouds, which are starting to clump thicker, darker. “Don’t wanna get stuck out here when it dumps.” She turns to you, flashing that big, easy grin. “Enjoy the place, dude. Don’t let the thriller vibes get to you.”

You smirk. “I’ll try. You guys stay safe over there. Don’t go summoning ghosts or anything.”

Sana giggles at that, and Miyeon just rolls her eyes, waving as they start back down the pier. “See ya, camera guy!” she calls over her shoulder. You wave back, watching them go—Miyeon’s loud laugh echoing faintly, Sana’s quieter figure beside her—until they hit the shore and start the trek around the lake. You linger a minute, camera still in hand, the pier creaking under your weight. The air’s heavier now, the first hint of rain prickling your skin. You glance at their house across the water, its dark shape fuzzing out in the haze, and that little spark of mystery flares up again. They’re cool, way cooler than you expected. And something about them—maybe Miyeon’s loud charm, maybe Sana’s shy warmth—sticks with you as you head back to your own place, the promise of rain rumbling in the distance.

—

It’s been a few hours since you got back from the pier, and the world outside’s turned into a damn monsoon. Rain’s hammering the windows like it’s pissed off, streaking down the glass in relentless sheets, and the wind’s howling through the trees, making the whole lake house groan. Inside, though, it’s cozy—borderline toasty, thanks to the heater humming away in the corner and the fireplace lit downstairs. You’re sprawled on the bed upstairs, legs kicked out, a half-empty beer sweating on the nightstand from dinner—frozen pizza and some chips, nothing fancy. The generator’s chugging along out back, but you’re keeping an eye on the lights, half-worried it’s gonna crap out from all the juice the heater’s pulling. Last thing you need is to freeze your ass off out here.

You’ve got your laptop propped on your thighs, scrolling through the shots you took earlier—the pier pics of Miyeon and Sana, plus some moody lake stuff before the sky opened up. The girls’ photos are gold, even with the flat light. Miyeon’s got this wild, carefree energy in every frame, while Sana’s softer, her shy smile sneaking through. You tweak a couple in Lightroom, bumping the contrast, and damn, they’re Instagram-worthy for sure.

Eventually, you shut the laptop and roll off the bed, stretching. You can’t help it—your eyes drift to the window. It’s pitch-black out there, the rain turning everything into a blurry void. You press your forehead to the cold glass, squinting across the lake. Their house is just a smudge in the dark, but the lights are on—warm little squares glowing through the storm. You wonder what they’re up to. Probably curled up on a couch, watching some cheesy rom-com or maybe a horror flick, given Miyeon’s half-joking about being spooked. Popcorn, blankets, the whole vibe. You picture it for a sec—Miyeon yapping over the movie, Sana giggling at her—and it’s kinda cute.

Then—blink—the lights across the lake go out. All of them, at once. You blink too, like maybe your eyes are screwing with you, but nope, it’s dark over there now. Weird as hell. Your first thought is they hit the sack, but it’s too sudden, too synchronized. No way they flipped every switch at the exact same second. A power outage? Maybe the storm fried something. You stare into the blackness, chewing your lip. Okay, maybe you’re overthinking it. You’ve been out here alone too long, and those two are the only blips of life in this wilderness. It’s not like you’re obsessed or anything—they’re just… there. Still, it bugs you. You shake it off, muttering “whatever” to yourself, and decide to crash. Bed’s calling, and the rain’s drumming hard enough to knock you out.

You’re halfway to brushing your teeth when—thump thump—a sound cuts through the storm. You freeze, toothbrush dangling, listening. Imagination, right? This place creaks all the time. But then it comes again, louder—THUMP THUMP THUMP—straight from the front door downstairs. Your heart kicks up, and you spit into the sink, wiping your mouth with the back of your hand. Could be a branch or some shit blowing around in the wind, but it sounded too deliberate. You grab your phone, thumb hovering over the flashlight app, and creep to the stairs, ears straining. The rain’s deafening, but there’s something else—a muffled voice maybe?

You pad down to the first floor, barefoot on the cold wood, nerves buzzing. The knocking’s real, no doubt now, and it’s insistent. “Who the fuck—” you mutter, snagging a jacket from the couch and shrugging it on. You’re half-expecting a drenched hiker or some rando stranded in the storm, but part of you—okay, a big part—wonders if it’s them. You flip on the porch light, yank the door open, and—bam—a flashlight beam hits you square in the face, blinding you for a sec.

“Shit, sorry!” a familiar voice says, and the light drops. It’s Miyeon, soaked to the bone, her black hair plastered to her face, hoodie clinging like a second skin. Sana’s right behind her, brown hair dripping, looking like a drowned kitten in her oversized sweater. They’re both shivering, rain streaming off them, pooling on your doorstep.

“Jesus, you guys okay?” you say, stepping back to let them in. “What the hell happened?”

Miyeon’s teeth are chattering, but she’s still got that spark. “Our generator fucking died, dude. No lights, no heat, nothing. We’ve got no clue what’s wrong, and it’s creepy as shit over there. Can you—please—come take a look?”

“Yeah, of course,” you say, already zipping up your jacket. You grab your boots from the mat, shoving them on while they hover by the door, dripping and miserable. “You sure you don’t wanna dry off first? You’re gonna catch pneumonia or something.”

Sana shakes her head, hugging herself. “We just wanna get it fixed. It’s freezing, and I swear I heard something moving in the dark.”

“Probably just the wind,” Miyeon says, but she doesn’t sound convinced. “Still, let’s go. I’m not sleeping in a blackout.”

You snag a flashlight from the kitchen drawer—bigger than theirs, one of those heavy-duty ones—and flick it on. “Alright, lead the way. Let’s see if we can save your night.”

They nod, grateful, and you step out into the storm with them. The rain hits like needles, cold and relentless, soaking through your jeans in seconds. Miyeon’s ahead, power-walking around the lake, while Sana sticks closer, her flashlight beam jittering across the muddy path. You’re all hunched against the wind, shouting over the roar of the downpour—Miyeon bitching about how her parents need to upgrade their shit, Sana muttering about hating storms. It’s a slog, wet and miserable, but you can’t help feeling a little badass, trekking out here to play hero. The house looms ahead, a dark silhouette against the storm, and the second you step inside, the vibe hits you—cold, damp, and way too quiet without the hum of electronics. Miyeon flicks her flashlight around, leading the way through the living room—furniture shadowy lumps in the gloom—down a narrow hall to a back door. “Generator’s out here,” she says, shoving it open. The wind blasts in, spraying rain across your face, and you grimace as you follow them into a little shed attached to the house.

The generator sits there like a grumpy old beast, silent and useless. Sana holds her flashlight steady, the beam jittering a little from her shaky hands, while Miyeon aims hers at the control panel. “It just… stopped,” she says, kicking the base lightly. “No warning, no nothing.” You crouch down, popping the side panel open with a grunt, and peer inside. The smell of wet metal and fuel hits you, and you sweep your flashlight over the guts—wires, gauges, a fuel tank that’s still half-full. You’re no expert, but you’ve fucked around with enough random shit to spot trouble. And there it is: a busted fuel line, cracked clean through, leaking diesel into the housing. Probably shook loose from the storm’s vibration or just shitty luck. Either way, it’s toast—no quick fix tonight, not without a replacement part and better light to work in.

“Bad news,” you say, straightening up and wiping your wet hands on your jeans. “Fuel line’s fucked. It’s leaking everywhere, and I can’t patch it with what’s here. You’re outta power ‘til we get a new one.”

Miyeon’s face drops, and she lets out a loud, “Are you kidding me?!” She paces a little, flashlight beam swinging wildly. “This is some horror movie bullshit. What the hell are we supposed to do now?”

Sana’s quieter, but you can tell she’s freaked too—her arms are wrapped tight around herself, and her voice comes out small. “It’s so cold already. And dark. I don’t like this. I swear I keep hearing noises.”

You glance around the shed, the rain drumming on the tin roof like it’s trying to break in. The house beyond it looks like a black hole, swallowing every bit of light. “Yeah, no kidding,” you say, scratching your jaw. “Look, I’m not gonna leave you guys stranded out here. My place has power, heat, and light. Unfortunately there is only one room with a mattress because, well, I wasn't expecting guests. But you can crash there tonight if you don't mind sharing a bed. No point in freezing your asses off in this.”

They both freeze, turning to look at each other. Sana’s the first to speak, hesitant. “Are you sure? We don’t wanna, like, invade your space or anything.”

“Nah, it’s cool,” you say, waving it off. “I’ve got a nice couch. Beats sitting here waiting for the boogeyman to show up, right?”

Miyeon snorts, but there’s relief in it. “Okay, yeah, that sounds way better than this shitshow. Give us a sec to grab some stuff.” They dart back inside, flashlights bobbing, and you wait by the door, leaning against the frame, listening to the storm rage. You hear them rummaging around—drawers slamming, muffled chatter—before they reappear, each with a small duffel bag slung over their shoulder. Miyeon’s got a hoodie pulled tight over her head, and Sana’s clutching a blanket like it’s a lifeline, her wet hair still dripping.

“Ready,” Miyeon says, zipping her bag. “Let’s get the fuck outta here before something else breaks.”

The trek back is brutal—rain in your face, wind shoving you sideways, the girls huddled close like you’re some kinda human shield. By the time you stumble through your front door, you’re all drenched again, leaving a trail of puddles across the hardwood. You kick off your boots, shaking water out of your hair, and point down the hall. “Bathroom’s that way. Go change or whatever—I’ll grab some towels.”

“Thanks, dude,” Miyeon says, already peeling off her soaked hoodie right there in the living room, revealing a damp tee underneath. Sana scurries off, blanket dragging, and you head to the linen closet, snagging a couple of big fluffy towels. When you come back, Miyeon’s in dry sweatpants and a loose tank top, toweling her hair, while Sana emerges in an oversized hoodie and leggings, looking less like a drowned rat now.

“God, you’re a lifesaver,” Miyeon says, flopping onto your couch like she owns it. Sana nods, settling next to her, tucking her legs under. “Seriously, thank you. I was about to lose it over there.”

“No worries,” you say, tossing them the towels. “You guys warm enough? I can put more wood in the fireplace if you want.”

“It’s good,” Sana says, pulling the blanket over her lap. “This is already a million times better.”

You nod, feeling weirdly proud of your little rescue mission, and head to the kitchen. “I’ll make some tea or something. You guys just chill.” The kettle’s already half-full from earlier, so you flick it on, rummaging for some random herbal shit you bought ages ago—chamomile, maybe? Close enough. While it heats, you lean against the counter, listening to them talk on the couch. Miyeon’s voice carries, loud and animated—“I swear, if my parents don’t fix that generator, I’m never coming back”—while Sana’s softer, giggling at her rant.

When the kettle whistles, you pour three mugs, balancing them as you shuffle back. “Here,” you say, handing them over. Miyeon takes hers with a grin, Sana with a quiet “thanks,” and you plop into the armchair across from them, cradling your own. The steam curls up, warm against your face, and for a minute, it’s just the sound of rain on the roof and the three of you sipping.

Miyeon stretches out, kicking her feet up on the coffee table. “So, what’s your deal, camera guy? Are you planning to buy this house or something?”

You laugh. “Nah, just a rental for the week. Needed a break from my boring-ass data job. From the city too. Figured I’d mess around with my camera, get some shots of the lake and stay close to nature.”

“Well, you’re stuck with us now,” she says, smirking. “Hope you don’t mind the company.”

Sana glances at you, a little smile tugging at her lips. “Yeah, you’re kinda our hero tonight.”

You shrug, playing it off, but your chest puffs up a bit anyway. “Hey, beats being alone in this storm. You guys can crash as long as you need.” They nod, settling deeper into the couch, and the vibe shifts—warm, easy, like you’ve known them longer than a day. The rain keeps pounding, but in here, it’s just you, them, and the crackling of the fireplace making everything feel alright.

“So, what’s your story?” you ask, blowing on your tea to cool it. “You guys come up here a lot, huh?”

Miyeon smirks, setting her mug on the coffee table with a little clink. “Yeah, like I said, it’s my parents’ place. Been dragging people up here since I was a kid. Used to be all family trips, but now it’s more for me and my crew to fuck around—swim, drink, whatever. This time it was supposed to be a big thing, but, well, landslide screwed that.”

“That sucks,” you say, leaning back. “You two stuck it out, though. Pretty badass.”

Sana giggles, peeking over her mug. “Barely. We were freaking out before you showed up. I’m not good with storms—or, like, anything going wrong.”

“She’s a spoiled city girl,” Miyeon teases, nudging Sana with her foot. “Needs her Wi-Fi and hot showers or she starts crying.”

“Shut up,” Sana fires back, but she’s laughing, swatting Miyeon’s leg. “You’re the one who screamed when the power went out.”

Miyeon shrugs, unbothered. “Yeah, ‘cause it was creepy as fuck. Point is, we’re here now, thanks to Mr. Hero over there.” She jerks her chin at you, grinning.

You snort. “Just doing my part. So, what’s the deal with you two? You’ve known each other forever or what?” You figure they’re tight—besties or something, the way they bounce off each other.

They exchange a look, quick but loaded, and Miyeon’s grin turns a little sly. “Not forever,” she says, stretching her arms over her head, tank top riding up a bit. “We’ve been together, what, two years now?”

“Two and a half,” Sana corrects, softer, her eyes flicking to Miyeon like she’s double-checking.

“Together?” you echo, tilting your head. “Like… roommates?”

Miyeon laughs, loud and sharp, while Sana hides a smile behind her mug. “Nah, dude,” Miyeon says, sitting up a little. “Like, together together. Girlfriends. Dating. You know?”

“Oh,” you say, blinking, then catch yourself quick. “Oh, shit, that’s cool. I just assumed—uh, never mind. Awesome.”

Sana’s cheeks go pink, but she’s giggling at your stumble. “It’s fine. People assume we’re just friends all the time. We’re used to it.”

“Yeah, we don’t exactly scream ‘couple,’” Miyeon adds, smirking. “I’m too loud, she’s too sweet. Throws people off.”

You laugh, easing up. “Nah, I get it now. You balance each other out. That’s dope.” You mean it—they’ve got this vibe, like they click without even trying. Miyeon’s all fire and Sana’s the calm, but together it works.

“What about you?” Sana asks, shifting the spotlight. “You got anyone back home?”

“Me? Nah,” you say, shaking your head. “Solo mission right now. Work’s too boring to drag someone else into it, and I spend most of my free time with my camera anyway. Not exactly boyfriend material.”

“Bullshit,” Miyeon says, pointing at you with her mug. “You’re chill, you’ve got a cool hobby, and you’re not a total asshole. You’d do fine.”

“High praise,” you deadpan, grinning. “I’ll put that on my dating profile: ‘Not a total asshole, says random lake girl.’”

They both crack up, and the room feels lighter, like the storm’s just background noise now. You keep chatting—little stuff at first. You tell them about your data gig, how it’s mind-numbing but pays the bills, and how you’ve been shooting photos since you were a teenager, chasing sunsets and storms like this one. Miyeon spills about her graphic design side hustle, how she’s always doodling on her iPad, while Sana admits she’s a barista at some trendy coffee shop, secretly loving the chaos of the morning rush.

“Hold up,” you say, setting your empty mug down. “You’re telling me you’re out here pulling espresso shots all day, and you’re still this chill? Respect.”

Sana shrugs, blushing a little. “It’s not that hard. I just smile and people tip me.”

“She’s lying,” Miyeon cuts in. “She’s a pro. Makes latte art and everything. I can barely pour cereal without fucking it up.”

“Stop it,” Sana mumbles, shoving her playfully, and you can’t help but laugh at how easy they are together. It’s cute—real, not forced.

The convo drifts, and you’re all a little looser, the tea warming you up from the inside. Miyeon yawns, stretching so hard her tank top rides up again, showing a sliver of stomach. “Man, this storm’s not letting up. What’s the plan tomorrow if it’s still like this?”

You glance out the window—still a wall of rain and dark. “Dunno. If it clears, I was gonna hike around, take some shots. If not, I’ve got a deck of cards and some beer. We could kill time.”

“Beer?” Miyeon perks up, eyes glinting. “Why didn’t you say that earlier? Let’s do drinks tomorrow night, storm or not. We’ll make it a thing.”

“Deal,” you say, nodding. “I’ve got some whiskey too, if we’re feeling fancy. You guys in?”

Sana hesitates, then smiles. “Yeah, okay. Sounds fun.”

“Sweet,” Miyeon says, clapping her hands once, like it’s settled. “Something to look forward to after this shitty day.”

You all sit there a minute longer, the mugs empty now, the fire crackling mixing with the rain. Sana yawns next, covering her mouth with the blanket edge. “I’m so tired,” she mumbles. “This whole thing wiped me out.”

“Yeah, same,” Miyeon agrees, rubbing her eyes. “We should crash. You really good with us stealing your bedroom?”

“Take it,” you say, standing up to stretch. “Bed’s made, pillows and shit are in the closet if you need extra. I’ll grab the couch.”

“Are you sure we're not—” Sana starts, but you wave her off.

“Nah, it’s fine. Couch is comfy enough. You guys get the room, no biggie.” You grab the mugs, stacking them to carry to the sink, and they shuffle off the couch, gathering their bags.

“Thanks again, dude,” Miyeon says, dragging her duffel over her shoulder. “You’re, like, our storm savior.”

“Anytime,” you say, smirking. “Night, you two.”

“Night,” Sana echoes, giving you a little wave as they head down the hall. You hear the spare room door click shut, some muffled giggles and whispers filtering through before it quiets down. You rinse the mugs in the kitchen, flick off the lights, and flop onto the couch, dragging a throw blanket over yourself. The rain’s still going hard outside, but inside it’s warm and peaceful. Tomorrow’s got drinks on deck, and with Miyeon and Sana around, it’s shaping up to be a hell of a night. You close your eyes, the storm lulling you off, and crash out with a dumb little smile tugging at your lips.

—

You blink awake on the couch, the blanket tangled around your legs, sunlight sneaking through the blinds in thin, golden stripes. The house is quiet—no rain, no wind, just the soft hum of the heater ticking down, the fireplace already out. You sit up, rubbing your face, and that’s when you smell it: coffee, faint but fresh, and something sweet lingering in the air. Stumbling to your feet, you shuffle to the kitchen and spot a little spread on the counter—toast stacked on a plate, a jar of jam open next to it, and a couple strips of bacon still warm under a paper towel. There’s a note scribbled in messy handwriting: “Thanks for last night! Enjoy – M & S.” You smirk, figuring it’s the girls’ doing. They’re not around, though—place feels empty without their chatter.

You scarf down the breakfast—crisp toast slathered with strawberry jam, bacon salty and perfect—then hit the shower, letting the hot water blast away the last of the sleep haze. By the time you’re dressed—jeans, a hoodie, sneakers—it’s pushing 9 a.m. You grab your camera bag, sling it over your shoulder, and step outside. Holy shit, it’s a different world. After yesterday’s apocalyptic downpour, the sun’s out, blazing in a sky so blue it looks photoshopped. The lake sparkles, all glassy and calm, and the air’s crisp but not freezing, a perfect late-morning vibe. You’re still marveling at it when a loud whoop cuts through the silence, followed by a splash.

Your head snaps toward the pier, and there’s Miyeon, mid-air, cannonballing into the water with a scream that’s half-laugh, half-battle cry. She’s in a red swimsuit, bright against the lake, and as she surfaces, shaking wet hair out of her face, you spot Sana on the pier, waving at you in a pink bikini that hugs her curves just right. They’re both stupidly gorgeous, and for a second, you’re just standing there, camera dangling, brain short-circuiting. Miyeon’s got a little more thickness to her—medium, perky breasts filling out that swimsuit top, a round ass that’s damn near hypnotizing as she climbs back onto the pier. Sana’s slimmer, all sleek lines and subtle curves, the bikini showing off her tiny waist and long legs. You snap out of it when they call you over, Miyeon’s voice carrying: “Yo, camera guy! Get your ass down here!”

You jog over, grinning as you hit the pier’s edge. “Morning, ladies,” you say, shielding your eyes from the sun. “You two look way too chipper after last night.”

“Slept like babies,” Miyeon says, wringing water out of her hair, droplets splattering the wood. “Your place is cozy as hell. How’d you hold up on that couch?”

“Good enough,” you say, shrugging. “Woke up to breakfast, though—that was clutch. Thanks for that.”

Sana beams, sitting cross-legged on the pier, her pink bikini practically glowing in the sunlight. “I made it. Miyeon can’t cook for shit, so I took over.”

“Facts,” Miyeon says, not even arguing. “She’s a wizard in the kitchen. That bacon? Her doing. I’d burn the house down trying.”

“Shit, well, it was awesome,” you say, nodding at Sana. “Seriously, thank you. Didn’t expect the VIP treatment.”

Sana blushes a little, tucking a strand of hair behind her ear. “No biggie. Least we could do.”

Miyeon flops onto her back, stretching out like a cat in the sun. “Weather’s fuckin’ perfect today. Checked the forecast—sunny all day, but there’s another cold front rolling in tomorrow. Gotta soak this up while we can.” She props up on her elbows, eyeing you. “Come swim with us, dude. Water’s not even that cold.”

“Yeah, join us!” Sana chimes in, standing up and tugging at your arm. They’re both at it now, pulling you toward the edge, their wet hands slippery on your hoodie. Miyeon’s got that mischievous grin, and Sana’s giggling like she’s in on the plot.

You laugh, but it’s nervous, your feet planted. “Nah, I’ve got plans—gonna hike around, shoot some nature stuff. You know, trees, birds, all that shit.”

Miyeon sits up, crossing her arms under her chest, which—fuck, that swimsuit’s doing work. “Bro, we’re nature. Take pics of us instead. Way prettier than some random-ass tree.”

You smirk, caught off guard but not mad about it. “Can’t argue that. Alright, fine—photo shoot it is.”

Sana claps, bouncing a little. “Yes! These swimsuits are new, too. Gotta show ‘em off. Right, Miyeon?”

“Hell yeah,” Miyeon says, hopping to her feet. “Red’s my color, and pink’s hers. Perfect combo.”

You sling your camera out, adjusting the settings quick—bright sun, sharp focus. They start posing, and it’s like they were born for this. Miyeon’s all bold energy, leaning forward with a flirty smirk, then turning to show off that ass, one hand on her hip. Sana’s softer, tilting her head, letting her hair spill over her shoulder, giving you these quiet, sultry looks that hit harder than they should. Then they get together—arms around each other, laughing, pressing close like the girlfriends they are. Miyeon pulls Sana in for a playful kiss on the cheek, and Sana squeals, shoving her off, but they’re both cracking up. You’re snapping away, the shutter clicking like crazy, and every shot’s a banger—sunlight glinting off their skin, the lake shimmering behind them.

“Check these out,” you say, flipping the camera around. They crowd in, still dripping, Miyeon’s arm brushing yours as they ooh and ahh over the screen. “Holy shit, we look hot,” Miyeon says, zooming in on one where she’s tossing her hair back mid-laugh. Sana nods, pointing at another. “That one’s my favorite. The light’s perfect.”

“Glad you like ‘em,” you say, pocketing the camera. “I’ll send ‘em later with yesterday's photos.”

“Sweet,” Miyeon says, then glances at the lake. “You sure you won’t swim? Last chance before it’s all cold and shitty again.”

“Nah, I’m good,” you say, stepping back. “Gonna roam around, get some shots of the woods. Plus, I’ll swing by the city later—grab that fuel line part for your generator and fix it up.”

Sana’s eyes widen. “Wait, for real? You don’t have to do that.”

“It’s nothing,” you say, waving it off. “Hardware store’s not far, and I’ve got the tools. Beats you guys sitting in the dark again.”

Miyeon grins, big and genuine. “Dude, you’re too nice. Like, suspiciously nice. What’s your angle?”

You laugh. “No angle. Just don’t wanna see you stuck. Plus, I’m bored out here—gives me something to do.”

“Well, we owe you big time,” Sana says, hugging herself as a breeze kicks up. “Oh—can we charge our phones at your place? They’re basically dead, and we’ve got no juice over there.”

“Yeah, no problem,” you say, nodding toward your house. “Plenty of outlets. Leave ‘em as long as you need.”

“Sweet, thanks,” Miyeon says, already heading back to the pier’s edge. “We’ll catch you later then—drinks tonight, right?”

“Bet,” you say, giving them a mock salute. “Enjoy the sun, ladies.”

They wave as you head off, Miyeon shouting, “Don’t get lost in the woods, camera guy!” before cannonballing back into the water with another splash. You shake your head, smirking, and start down the path toward the trees, camera in hand. The day’s wide open, the girls are vibing, and you’ve got a solid plan—photos now, hero shit later, drinks to cap it off.

Not a bad way to spend a Saturday.

—

The sun’s dipping low now, painting the sky in lazy streaks of orange and pink as you roll back up to the lake house in your SUV. The gravel crunches under the tires, and you kill the engine, grabbing the plastic bag from the passenger seat—inside’s the new fuel line you snagged from the hardware store in town, plus a couple bags of chips, some salsa, and a pack of those sour gummy worms Miyeon seemed like she’d vibe with. You step out, the air cooler now that the afternoon’s winding down, and spot the girls on your porch, sprawled out like they’ve claimed the place.

Miyeon’s lounging in one of the wooden chairs, legs kicked up on the railing, scrolling her phone with one hand while the other toys with a strand of her damp hair—she’s still in that red swimsuit, a towel draped over her lap. Sana’s cross-legged on the floor next to her, phone plugged into an extension cord snaking through the open window, her pink bikini swapped for a loose tee and shorts. They look up as you approach, Miyeon tossing you a lazy wave while Sana gives a little smile, like they’ve been waiting for you to roll in.

“Yo, I’m back,” you say, holding up the bag. “Got the fuel line. And some snacks for later—figured we’d need something to munch on with the drinks.”

Miyeon drops her feet from the railing, sitting up with a grin. “You’re a fucking legend, dude. I’ll Venmo you later for the part—how much was it?”

“Like, twenty bucks,” you say, shrugging. “No rush.”

Sana tilts her head, brushing her hair behind her ear. “You sure you don’t need help with the generator? I’m useless with that stuff, but I can, like, hold a flashlight or something.”

“Nah, I got it,” you say, slinging your camera bag off your shoulder and setting it by the door. “Watched a couple YouTube vids earlier—think I can handle it solo. You guys just chill here.”

Miyeon laughs, leaning back in her chair. “Yeah, good call. We’d probably just fuck it up worse. I don’t even know what a fuel line is.”

“Same,” Sana adds, giggling. “You’re on your own, hero.”

“Cool,” you say, grabbing the bag with the part and heading off. “I’ll trek over there and sort it out. Be back in a bit.”

You make the short walk around the lake, the last of the sunlight glinting off the water, your boots sinking slightly into the still-damp ground. Their house looks less ominous now, just a quiet two-story sitting there in the evening glow. You head to the shed out back, popping it open with a creak, and there’s the generator—same sad, silent hunk of metal from last night. You drop to your knees, fishing the new fuel line out of the bag, and get to work.

The YouTube tutorials you skimmed earlier play back in your head—some dude with a thick accent walking through the steps like it’s no big deal. First, you kill the fuel switch, making sure no gas is leaking out, then unhook the old line—cracked and crusty, just like you thought. A little diesel dribbles onto your hands, stinking like hell, but you wipe it on your jeans and keep going. The new line’s a perfect fit, sliding into place with a satisfying click. You tighten the clamps with a screwdriver from their toolbox, double-checking everything’s snug. Then it’s just a matter of priming the fuel pump—couple quick pumps like the guy said—and flipping the switch. The generator sputters once, twice, then roars to life, a steady hum kicking in. You stand back, grinning like an idiot. Fixed. Lights flicker on in the house behind you, and you give yourself a mental high-five—DIY king shit.

You trudge back to your place, wiping your greasy hands on a rag you snagged from their shed. The girls spot you coming and perk up—Miyeon’s on her feet, Miyeon swapped her swimsuit for shorts and a tank top. Sana’s leaning forward, both of them looking hopeful. “Well?” Miyeon calls out, arms crossed.

“Done,” you say, tossing the rag onto the porch steps. “Generator’s purring like a kitten. You’ve got power again.”

Sana lets out this big, relieved sigh, clutching her phone to her chest. “Oh my God, thank you. I was legit stressed about that.”

Miyeon whoops, bounding over and throwing her arms around you in a quick, tight hug. “Dude, you’re the best! I owe you more than twenty bucks for this.”

You laugh, patting her back before she pulls away. “Nah, just keep the drinks flowing tonight, and we’re square.”

“Deal,” Sana says, standing up now, her whole vibe brighter. “Speaking of, let’s crack those beers. I’m way happier now that we’re not, like, pioneer women anymore.”

“Bet,” you say, heading inside to drop the snacks on the kitchen counter. The girls follow, Miyeon raiding your fridge for the beers while Sana digs into the chip bag already. You grab a deck of cards from a drawer, flipping it in your hand. “You guys play cards?”

Miyeon pops a beer open, foam hissing as she takes a sip. “I do. Poker, blackjack, whatever. I’m decent.”

Sana shrugs, munching a chip. “I’ve never played. Like, ever. I don’t even know the rules.”

“No shit?” you say, pulling out a chair at the table and motioning them over. “Alright, I’ll teach you. Easy stuff—let’s start with blackjack. You’ll pick it up quick.”

They settle in, Miyeon plopping down across from you with her beer, Sana sliding into the seat next to her, still clutching the chip bag like it’s a security blanket. You shuffle the deck, the cards snapping under your fingers, and deal out the first hand—two cards each. “Goal’s simple,” you say, tossing yourself a jack and a five. “Get as close to twenty-one as you can without going over. Face cards are ten, aces are one or eleven, whatever you need. You want another card, you say ‘hit.’ You’re good, you ‘stay.’ Bust, you lose.”

Sana stares at her cards—a seven and a three—furrowing her brow like it’s a math test. “Okay… hit?”

You flick her a nine, and she gasps. “Shit, that’s nineteen! I stay, right?”

“Yeah, smart call,” you say, grinning. “Miyeon?”

She’s got a queen and a four, smirking like she’s already won. “Hit.” You deal her a six—twenty. “Stay,” she says, leaning back with a cocky tilt to her head.

You flip your second card—a nine. “Dealer’s got nineteen,” you say, checking the deck. “Sana, you’re good. Miyeon wins, though—twenty’s closer.”

“Fuck yeah,” Miyeon says, fist-pumping. “Told you I’m good.”

Sana pouts, but she’s laughing. “Beginner’s luck doesn’t count, right?”

“Nope,” you say, gathering the cards. “Let’s go again. You’ll get the hang of it.”

The hours slip by like nothing, the table a mess of empty beer cans, crumpled chip bags, and a half-eaten pile of gummy worms stuck to the salsa lid. The cards are long forgotten, scattered across the table from your last sloppy round of blackjack—Sana kept busting and blaming the “stupid rules,” while Miyeon was raking in wins like she’d been hustling casinos her whole life. The drinks keep flowing, whiskey now in the mix, poured into mismatched mugs because you ran out of clean glasses. The room’s warm, a little hazy, the heater still chugging along as the night deepens outside, but there are no more stars in the sky, and you already know what's coming.

You’re slouched in your chair, one leg kicked up on the empty seat next to you, feeling the buzz settle into your bones. Across the table, Sana’s climbed into Miyeon’s lap at some point—nobody batted an eye, least of all you. They’re comfy like that, Sana’s head tucked against Miyeon’s shoulder, her fingers tracing lazy patterns on Miyeon’s arm while Miyeon’s got one hand draped around Sana’s waist, the other nursing her whiskey mug. They’re drunk, giggling messes, and you’re not far behind, the room spinning just enough to make everything funnier than it should be.

“Alright, camera guy,” Miyeon says, her voice a little slurred but still sharp, cutting through the haze. “Spill it. When’s the last time you had a girlfriend? You’re too chill to be single forever.”

You laugh, rubbing the back of your neck, the whiskey loosening your tongue. “Uh, shit, like two years ago? She was cool, but it didn’t stick. Been flying solo since then—works better that way, you know? Just me and my camera, no drama.”

Sana tilts her head, her lips curling into a teasing little smile. “Two years? Damn, you’re basically a monk.”

“Monk with a lens,” Miyeon adds, smirking. “Bet you’ve got girls tripping over you and you just don’t notice.”

“Nah,” you say, waving it off, though the compliment lands nice. “I’m good on my own. Relationships are… a lot.”

They exchange a look then—quick, sneaky, like they’re in on some secret. Sana whispers something in Miyeon’s ear, her breath tickling Miyeon’s neck, and Miyeon snickers, her eyes flicking to you. They both start giggling, sloppy and loud, and you lean forward, squinting. “What? What’s so funny?”

Miyeon shakes her head, still laughing. “Nothing, nothing. Just—we’ve got this friend, Shuhua. She’s super chill, loves hiking, nature vibes, all that shit you’re into. You’d hit it off.”

“Oh, yeah,” Sana pipes up, sitting up a little straighter on Miyeon’s lap, her cheeks flushed from the booze. “And Tzuyu too! She’s, like, gorgeous and artsy. Total your type.”

Miyeon nods like it’s settled. “Yeah, Tzuyu’s got that quiet, mysterious thing going. You’d be obsessed.”

You snort, taking a sip of your whiskey, the burn sliding down easy. “What, you two playing matchmaker now? I said I’m good.”

Miyeon’s grin turns mischievous, her eyes glinting under the dim kitchen light. “Okay, fine, but let’s be real for a sec. Between me and Sana—” she tightens her grip on Sana’s waist, making her squirm and giggle—“who’d you pick? Like, if you had to. Be honest.”

Sana’s head snaps up, her face going red. “Miyeon! Don’t ask that, oh my God!” She swats at Miyeon’s hand, but she’s laughing too, hiding her face in Miyeon’s shoulder for a sec before peeking out at you, all shy and curious.

You freeze, the mug halfway to your lips, caught off guard. “Uh… what?” Your voice comes out higher than you mean it to, and you clear your throat, trying to play it cool. “I don’t—I mean, I can’t just… pick. I don’t know.”

Miyeon’s eyebrows shoot up, and she leans forward, dragging Sana with her. “Oh, come on! You’re dodging. You totally know, you’re just too chicken to say it.”

“Am not,” you shoot back, but your face is heating up, and the whiskey’s not helping. You glance between them—Miyeon’s got that bold, flirty edge, all confidence and heat, her lips quirked like she’s daring you to say something stupid. Sana’s softer, her blush spreading, but there’s this spark in her eyes now, playful and warm, like she’s testing you too. They’re both ridiculous, and it’s doing shit to your head.

“So what I’m hearing,” Miyeon says, dragging the words out, “is you’d take both of us. Greedy bastard.”

“What—no!” you sputter, nearly choking on your drink. “That’s not what I said! You’re twisting it!”

Sana bursts out laughing, her whole body shaking against Miyeon. “Oh my God, you’re so greedy! Wanting us both, huh?”

“Fuck off, I didn’t say that,” you protest, but you’re laughing too, the absurdity of it hitting you all at once. “You two are wasted. I’m not even dignifying this.”

Miyeon grins wider, leaning closer across the table, her voice dropping low and teasing. “Oh, please. You couldn’t handle us anyway. We’re a lot, you know. High maintenance.”

Sana nods, mock-serious. “So much work. You’d be crying in a week.”

“Yeah, right,” you fire back, the whiskey buzzing through you now, making you bold. “I’d keep up. You’d be the ones begging for a break.”

Miyeon’s eyes widen, and she lets out a loud, “Ooooh!” Sana gasps, covering her mouth, but she’s smiling like crazy behind her hand. “He’s got some fight in him,” Miyeon says, leaning back and fanning herself dramatically. “Sana, you hear that? He thinks he’s tough enough for us.”

“I’m just saying,” you mutter, sinking into your chair, “you’re the ones who’d tap out first.”

Sana giggles, sliding off Miyeon’s lap to grab another beer from the fridge, her shorts riding up as she bends over. She spins back around, popping the cap with a lighter she snagged off the table. “You’re funny,” she says, pointing at you. “And shy as hell right now. Look at you.”

“Shut up,” you say, but you’re grinning, your face burning under their stares. “You’re both too drunk. This convo’s going off the rails—I’m scared of where it’s headed.”

Miyeon laughs, loud and unfiltered, tipping her mug back for the last of her whiskey. “Scared? Good. You should be. We’re trouble, camera guy. Double trouble.”

“Triple, with the drinks,” Sana adds, sliding back onto Miyeon’s lap, beer in hand. She takes a sip, then offers it to Miyeon, who leans in close, their lips brushing for a second as she drinks. It’s casual, natural for them, but it hits you like a punch—subtle, hot, and gone too fast to process.

You shake your head, trying to clear the fog. “Yeah, I’m calling it. You two are a menace. I’m having way too much fun, though.”

“Same,” Sana says, her voice softer now, her head resting on Miyeon’s shoulder again. “You’re cool, you know that?”

“Very cool,” Miyeon agrees, her hand sliding up Sana’s back, casual but possessive. “We’ll let you off the hook for now. But don’t think we’re done messing with you.”

You laugh, raising your mug in a mock toast. “Wouldn’t dream of it. Night’s still young, right?”

They clink their drinks against yours, the three of you grinning like idiots, the flirtation simmering under the surface—light, playful, but with an edge that keeps you on your toes. You take a sip of your whiskey, the burn familiar now, and figure it’s your turn to flip the script. “Alright,” you say, setting the mug down with a little thud to get their attention. “You’ve been grilling me about my love life—or lack of it. What about you two? How’d you even end up together?”

Miyeon’s head tilts back as she laughs, her black hair spilling over her shoulders. “Oh, dude, it’s a story. We met at some shitty college party—like, the kind with warm beer and a playlist that’s just Top 40 on repeat. I was trashed, trying to shotgun a can, and Sana was there, all cute and quiet, holding a red cup she wasn’t even drinking from.”

Sana nods, her cheeks already pink from the booze. “She spilled beer all over me trying to show off. I was pissed, but then she started apologizing like a maniac, and… I don’t know, she was funny about it. We just clicked.”

“Clicked, huh?” you say, smirking. “That’s cute. So, what’s the secret? Two and a half years is solid—most people can’t keep a houseplant alive that long.”

Miyeon shrugs, her hand sliding idly up Sana’s back, fingers tracing the hem of her tee. “Dunno. We just vibe. She keeps me from doing dumb shit—like, most of the time—and I make sure she doesn’t stay in her shell forever. Balance, you know?”

“Yeah,” Sana adds, leaning into Miyeon’s touch, her voice soft. “She’s loud and I’m not. Works out.”

You nod, letting the moment settle, then push a little further, keeping it chill. “Ever have any big fights? Like, the kind where you’re slamming doors or sleeping on the couch?”

Sana giggles, shaking her head. “Not really. We argue sometimes—stupid stuff, like who forgot to buy milk—but Miyeon’s too lazy to storm out, and I hate sleeping alone.”

“Facts,” Miyeon says, grinning. “I’d rather just bitch for five minutes and then make out. Way easier.”

You laugh, the image of them bickering-then-kissing too good to not picture. “Smart move. Alright, let’s level up—any exes still lurking around? Old flames trying to slide back in?”

Miyeon’s eyes narrow playfully, like she’s onto your game, but she answers anyway. “Couple of mine tried. Dudes mostly—had a few boyfriends before Sana. They’d hit me up like, ‘Oh, you’re with a girl now? That’s hot.’ Blocked them so fast. Sana’s exes are too scared of me to try anything.”

Sana snorts, nudging Miyeon’s shoulder. “You’re not that scary. They’re just… I don’t know, they’re all girls anyway. Nobody’s dumb enough to mess with us now.”

“Fair,” you say, leaning forward, resting your elbows on the table. The whiskey’s got your tongue loose, and the vibe’s right, so you nudge the questions up a notch—still smooth, but with a little heat. “So, Miyeon, you’ve dated guys before, right? Sana—you ever been with one? Like, ever?”

They glance at each other quick, a flicker of something passing between them—Sana’s blush deepens, and Miyeon’s grin turns sly. “Me? Yeah,” Miyeon says, casual as hell. “I’m bi—guys, girls, whatever. If they’re hot and fun, I’m down. Dated a couple dudes before I figured out I liked girls just as much. No big deal.”

Sana shifts on Miyeon’s lap, her fingers tightening around her beer bottle. “I… no. Never been with a guy. Always just girls for me.” Her voice is quieter, a little shy, but she doesn’t look away.

Miyeon tilts her head, resting her chin on Sana’s shoulder, her eyes locked on you now. “She’s curious, though,” she says, dropping it like a bomb, her tone teasing but deliberate. “Always has been. Right, babe?”

Sana’s face flares red, and she swats at Miyeon’s arm, flustered. “Miyeon! Shut up, oh my God!” She buries her face in her hands for a sec, then peeks out, still giggling despite herself. “I mean… yeah, okay, I’ve thought about it. Like, wondered what it’d be like. But that’s it. Closest I’ve gotten is—” She stops, biting her lip, and Miyeon finishes for her.

“The strap,” Miyeon says, smirking like she’s proud of it. “I’ve got this one that’s, uh, pretty realistic. She loves it, but it’s still not the real deal, you know?”

Sana groans, dropping her forehead onto Miyeon’s shoulder. “You’re the worst. Why do you say shit like that?”

You laugh, holding up your hands. “Hey, no judgment here. We’re all adults—shit gets spicy sometimes. Sounds like you’ve got it figured out anyway.”

Miyeon’s still watching you, her smirk softening into something sharper, more curious. Sana lifts her head, her embarrassment fading into a playful little pout as she takes a swig of her beer. “Okay, but why’re you asking?” she says, her tone turning provocative, her eyes narrowing just a bit. “You digging for details, huh? What’s your deal?”

You freeze for a sec, caught off guard, the whiskey making your brain a little slow to catch up. “What? Nah, I’m just—curious, I guess. Making conversation. That’s all.”

Miyeon’s not buying it, her head tilting like she’s sizing you up. “Bullshit. You’re interested. I can see it. All these questions—you’re fishing for something, aren’t you?”

“Fishing?” you say, leaning back, trying to play it cool but feeling the heat creeping up your neck. “Come on, I’m just chilling. Anyone stuck out here with you two would be asking the same shit. You’re the only entertainment I’ve got.”

Sana giggles, her pout turning into a grin as she leans forward, elbows on the table now, her chin in her hands. “Oh, so we’re entertainment? That’s your excuse?”

“Yeah, exactly,” you say, grinning back, the tension easing but still simmering under the surface. “Two hot girls, drunk and spilling secrets? Who wouldn’t be into that?”

Miyeon laughs, loud and bright, tipping her head back. “Fair. You’ve got a point. We are hot.” She nudges Sana, who’s still blushing but clearly loving the vibe. “He’s not wrong, babe.”

“Still,” Sana says, her voice softer but with a teasing edge, “you’re digging pretty deep. What’s next, you gonna ask our favorite positions or something?”

You choke on your whiskey, coughing into your fist as Miyeon cackles. “Jesus, no,” you manage, wiping your mouth. “I’m not that drunk. Yet.”

“Yet,” Miyeon echoes, her eyes glinting with mischief. “Give it an hour. We’ll get you there.”

The room’s buzzing now, the flirtation weaving through the air like a quiet current—nothing overt, but it’s there, subtle and growing. You take another sip, letting it burn, and lean back in your chair, meeting Miyeon’s gaze for a second longer than you should. Sana’s watching too, her smile small but knowing, like she’s in on the game.

The conversation’s still humming along, the whiskey keeping the edges soft and the laughter loud. You’re mid-sentence, riffing on some dumb story about a camping trip gone wrong years ago, when a faint patter hits the deck outside. At first, you think it’s just the wind kicking up, but then it gets louder, steadier—rain, drumming hard against the wood. The temperature drops fast, a chill sneaking through the open window, cutting through the cozy haze of the kitchen. Miyeon shivers, rubbing her bare arms, and Sana pulls her tee tighter around herself, her beer bottle clinking against the table as she sets it down.

“Shit, there it goes again,” you say, standing up to slide the window shut. The cold’s biting now, the kind that makes your breath fog indoors if you’re not careful. “The couch is calling us.”

They nod, grabbing their drinks and stumbling after you, a little wobbly from the booze. You flick on the living room lamp, its warm glow spilling over the plush couch and the throw blankets piled on the armrest. The fireplace is out, but the heater’s still doing its thing, and the room feels like a bubble against the storm outside. You flop into the corner of the couch, one leg tucked under you, the whiskey mug warm in your hands. Miyeon and Sana collapse together on the other end, a tangle of limbs and giggles—Sana’s half-draped over Miyeon, her head lolling against Miyeon’s chest as Miyeon wraps an arm around her.

“Fuck, your place is so warm,” Miyeon sighs, kicking off her flip-flops and pulling her feet up onto the cushions. “Ours would be an icebox right now with that busted generator.”

“Perks of not slacking on maintenance,” you say, smirking as you take a sip. “You’re welcome to crash anytime it shits the bed.”

Sana hums, her eyes half-closed, nestled into Miyeon like she’s ready to doze off. “Good to know. You’re spoiling us.”