#data bias impact

Link

Data bias in ML projects is also called machine learning bias or algorithmic bias. Bias arises when the datasets used to train ML or AI models are incomplete or do not truly represent the underlying facts and figures. Find out here about 5 types of data bias impacting your ML projects and how to fix them.

0 notes

Text

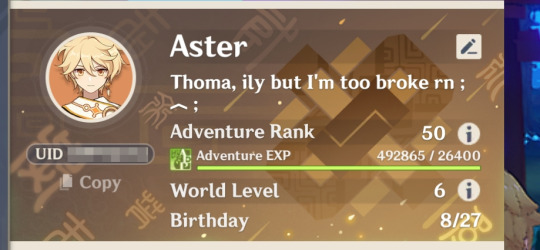

Thoma's the only 4 star to ever double... twice

#thoma really said 'i gotchu'#i was just lamenting over the fact that i had like 13 wishes/gacha pulls and then changed my namecard#to be fair he's really the only 4 star I'm working to c6 (aside from faruzan) so like. its a bias in data-#but c5 thoma lets goooooo#genshin impact#thoma genshin#tarp tarp

3 notes


Text

Just found out I got accepted to my top choice masters program with a full scholarship!

I'm going to be studying data science + AI.

#they asked me to write about problems that i thought needed to be addressed in the field for my application#and i wrote about the ecological impact of big data and gender bias in popular models#they also have labs related to these topics that are hiring research assistants#im so psyched

2 notes


Text

The Implications of Algorithmic Bias and How To Mitigate It

AI has the potential to transform our world in ways we can't even imagine. From self-driving cars to personalized medicine, it's making our lives easier and more efficient. However, with this power comes the responsibility to consider the ethical implications and challenges that come with the use of AI. One of the most significant ethical concerns with AI is algorithmic bias.

Algorithmic bias occurs when a machine learning model is trained on data drawn disproportionately from one demographic group. The model may then make inaccurate predictions for other groups, leading to discrimination. This can be a major problem when AI systems are used in decision-making contexts, such as healthcare or criminal justice, where fairness is crucial.

But there are ways engineers can mitigate algorithmic bias in their models to help promote equality. One important step is to ensure that the data used to train the model is representative of the population it will be used on. Additionally, engineers should test their models on a diverse set of data to identify any potential biases and correct them.
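One way to carry out that kind of testing is to break accuracy down by group instead of reporting a single overall number. The sketch below is a minimal illustration in plain Python. The function name `accuracy_by_group`, the group labels, and the toy data are all hypothetical and not taken from any particular library or dataset.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical toy data: group "A" is well represented, group "B" is not.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)

# A large gap between the best and worst group is a signal worth investigating.
gap = max(per_group.values()) - min(per_group.values())
print(per_group, round(gap, 2))
```

A single overall accuracy (6 of 8 correct here) would hide the fact that every error falls on group "B", which is why the per-group view is useful.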

Another key step is to be transparent about the decisions made by the model, and to provide an interpretable explanation of how it reaches its decisions. This can help to ensure that the model is held accountable for any discriminatory decisions it makes.

Finally, it's important to engage with stakeholders, including individuals and communities who may be affected by the model's decisions, to understand their concerns and incorporate them into the development process.

As engineers, we have a responsibility to ensure that our AI models are fair, transparent and accountable. By taking these steps, we can help to promote equality and ensure that the benefits of AI are enjoyed by everyone.

#AI#Machine learning#Algorithmic bias#Ethics#Fairness#Transparency#Interpretability#Bias#Discrimination#Data science#Data representation#Data bias#Stakeholder engagement#Social impact#AI for good#AI for social good#AI ethics#AI and society#AI and diversity#Responsible AI

2 notes


Text

AI is skewed against you, and it’s by design

As Artificial Intelligence (AI) influences many aspects of life, concern about its neutrality is paramount.

As a professional dedicated to innovation and transparency, I delve into the complexities of AI development and its susceptibility to political biases.

Data Source Selection and Bias: The foundation of AI’s learning mechanism lies in its training data. If this data predominantly reflects…


#AI#AI ethics#AI Experts#AI governance#algorithmic transparency#Artificial Intelligence#ChatGPT#data neutrality#decision-making#innovation responsibility#OpenAI#political bias#societal impact#stakeholder influence#technology oversight

0 notes

Text

The Ethical Considerations of Robotics and Artificial Intelligence

The rapid advancements in robotics and artificial intelligence (AI) technologies have brought forth a multitude of ethical considerations that need to be carefully examined. As robots and AI systems become more integrated into our daily lives, it is essential to reflect upon the potential impact they can have on society, individuals, and the ethical values we hold. In this article, we…


#Accountability and Liability#AI algorithms#Bias and Discrimination#Ethical Considerations of Robotics#Ethical Design and Development#Human Autonomy and Control#Impact on Employment and Society#Privacy and Data Protection#robotics and artificial intelligence#robots and AI systems#Transparency and Explainability

0 notes

Text

Gelatopod - Ice/Fairy

(Vanilla-Caramel Flavor is normal, Mint-Choco is shiny)

Artist - I adopted this wonderful fakemon from xeeble! So I decided to make up a full list of game data, moves, lore, etc. for it. Enjoy! :D

Abilities - Sticky Hold/Ice Body/Weak Armor (Hidden)

Pokedex Entries

Scarlet: Gelatopod leaves behind a sticky trail when it moves. A rich, creamy ice cream can be made from the collected slime.

Violet: At night, it uses the spike on its shell to dig into the ground, anchoring itself into place. Then it withdraws into its shell to sleep in safety.

Stats & Moves

BST - 485

HP - 73

Attack - 56

Defense - 100

Special Attack - 90

Special Defense - 126

Speed - 40

Learnset

Lvl 1: Sweet Scent, Sweet Kiss, Aromatherapy, Disarming Voice

Lvl 4: Defense Curl

Lvl 8: Baby Doll Eyes

Lvl 12: Draining Kiss

Lvl 16: Ice Ball

Lvl 21: Covet

Lvl 24: Icy Wind

Lvl 28: Sticky Web

Lvl 32: Dazzling Gleam

Lvl 36: Snowscape

Lvl 40: Ice Beam

Lvl 44: Misty Terrain

Lvl 48: Moonblast

Lvl 52: Shell Smash

Friendship Level Raised to 160: Love Dart (Signature Move)

Egg Moves

Mirror Coat, Acid Armor, Fake Tears, Aurora Veil

Signature Move - Love Dart

Learned when Gelatopod's friendship level reaches 160 and then the player completes a battle with it

Type - Fairy, Physical, Non-Contact

Damage Power - 20 PP - 10 (max 16) Accuracy - 75%

Secondary Effect - Causes Infatuation in both male and female pokemon. Infatuation ends in 1-4 turns.

Flavor Text - The user fires a dart made of hardened slime at the target. Foes of both the opposite and same gender will become infatuated with the user.

TM Moves

Take Down, Protect, Facade, Endure, Sleep Talk, Rest, Substitute, Giga Impact, Hyper Beam, Helping Hand, Icy Wind, Avalanche, Snowscape, Ice Beam, Blizzard, Charm, Dazzling Gleam, Disarming Voice, Draining Kiss, Misty Terrain, Play Rough, Struggle Bug, U-Turn, Mud Shot, Mud-Slap, Dig, Weather Ball, Bullet Seed, Giga Drain, Power Gem, Tera Blast

Other Game Data

Gender Ratio - 50/50

Catch Rate - 75

Egg Groups - Fairy & Amorphous

Hatch Time - 20 Cycles

Height/Weight - 1'0''/1.3 lbs

Base Experience Yield - 170

Leveling Rate - Medium Fast

EV Yield - 2 (Defense & Special Defense)

Body Shape - Serpentine

Pokedex Color - White

Base Friendship - 70

Game Locations - Glaseado Mountain, plus a 3% chance of encountering Gelatopod when the player buys Ice Cream from any of the Ice Cream stands

Notes

I'm not a competitive player, but I did my best to balance this fakemon fairly and not make it too broken. Feel free to give feedback if you have any thoughts!

I have a huge bias for Bug Pokemon since they're my favorite type, and at first I wanted to make it Bug/Ice, since any invertebrate could be tossed into the 'Bug' typing. But ultimately I decided to keep xeeble's original idea of Ice/Fairy. There's precedent of food-themed pokemon being Fairy type, and Ice/Fairy would be very interesting due to its rarity (only Alolan Ninetales has it). Its type weaknesses are also slightly easier to handle than Bug/Ice imo

The signature move is indeed based on real love darts, I could not resist something that fascinating being made into a Pokemon move, even if the real games may possibly shy away from the idea. (Honestly it could be argued "Love Dart" is based on Cupid's arrow so Gamefreak might actually get away with making a move like this though.) Its effectiveness on both males and females is a nod to snails/slugs being biological hermaphrodites. I can see this move also being learned by Gastrodon and Magcargo in Scarlet/Violet

#pokemon#fakemon#honorary bug pokemon#pokemon scarlet/violet#pokémon#ice pokemon#fairy pokemon#gen 9#molluscs#snails#mycontent

473 notes


Note

To be fair I may be wrong considering how biases in med science lead to correlative data, but from what I have seen, being overweight leads to health issues? I remember being taught back in high school that having more fat equated to heart strain, since more blood needed to be moved throughout the body within the same time frame than for a person of expected weight

And more recently when I went looking for research papers on being overweight and the associated heart issues (https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6179812/#:~:text=The%20increased%20cardiac%20output%20in,an%20increase%20in%20stroke%20volume.)

From your post, yea definitely fat phobia in medical spaces is a horrifying thing, and probably led to many of these ‘get fat and you’ll have this health condition’ ideas circulating

Though I was curious if you think being fat within a certain ‘range’ has no negative health impacts? (Genuinely confused and curious)

Seriously, I'm not going to get into this discourse or provide you with an exhaustive explanation of how medical bias affects research and the interpretation of results when so many people out there have already done the work, and I linked to books you can read and pointed towards a podcast you can listen to already.

But, since you ignored those things and came into my inbox 'just asking questions,' you can start here:

Or maybe you can look at @fatphobiabusters or @fatliberation, or @fatsexybitch, who have already done a lot of work on this.

I am genuinely not interested in this conversation.

217 notes


Text

This is why accurate information about sex and race is important. A study just gave a name to another way businesses pass over women for promotions and how women of color are impacted at greater rates.

Forget The Glass Ceiling, 'The Broken Rung' Is Why Women Are Denied Promotions

A new study finds Black women and Latinas in particular are the least likely to get that first promotion — and it’s not because they’re not asking for it.

by Monica Torres

Getting your first promotion into management is a huge achievement in your career. But a new study from consulting firm McKinsey & Co. and nonprofit Lean In shows it’s an opportunity that is not equally afforded to everyone.

According to the study, which used pipeline data from 276 companies in the private, public and social sectors, women ― and women of color, in particular ― are the least likely demographic to get promoted from entry-level to first-time manager.

For every 100 men promoted from entry-level contributor to manager in the survey, only 87 women got promoted. And this gap gets wider for women of color: This year, while 91 white women were promoted to manager for every 100 men, only 89 Asian women, 76 Latinas and 54 Black women would get that same opportunity.

“As a result of this broken rung, women fall behind and can’t catch up,” the study states.

It’s not because those women were not asking for it ― the study found that the women were asking for promotions at the same rate as their male peers. And it’s not because these women did not stick around long enough to be considered for the job ― the study found that they were no more likely to leave their company than their male peers.

The main culprit behind this “broken rung” in the career ladder? It’s what’s known as a “performance bias.”

Why women deal with the “broken rung” phenomenon.

Under a performance bias, men get promoted more because of their future potential, while women get judged on their past accomplishments and have their leadership potential doubted.

“Because women early in their careers have shorter track records and similar work experiences relative to their men peers, performance bias can especially disadvantage them at the first promotion to manager,” according to the study.

This research aligns with the “prove-it-again bias” studies have found women face throughout their career: where they do more work in order to be seen as equally competent to their male peers.

As for why it’s hardest for women of color to make that first leap into management? Workplace consultant Minda Harts, author of “The Memo: What Women of Color Need to Know to Secure a Seat at the Table,” said it’s because systemic biases and stereotypes cause women of color to be less trusted for the job.

“This lack of trust can manifest in several ways, such as doubts about competence, commitment or ‘fit’ within a leadership role,” Harts told HuffPost. “When senior leadership is predominantly male and white, an unconscious bias might lead them to trust individuals who mirror their own experiences or backgrounds ... As a result, women of color may be disproportionately overlooked for promotions.”

The McKinsey study found that women of color surveyed this year were even less likely to become first-time managers in 2023 than they were in 2022.

Feminist career coach Cynthia Pong told HuffPost it’s because in tough financial times, companies often operate under a scarcity mindset and might see women of color as a bigger “risk” to promote when they are underrepresented in leadership.

“We just had to go through layoffs, and we only have three [manager roles]. You can easily see how in times like that, it would just end up replicating these systems where we only trust and only give the benefit of the doubt to certain folks,” Pong said. “And it’s not going to be women of color.”

That sends a dispiriting message to people who watch their peers advance while they get told they are still not ready.

“It’s even more frustrating and infuriating ... when you see that there is a pathway for others, but not for you. Because the injustice of it makes your blood boil,” Pong said.

This should not be on women and women of color to fix. Employers should proactively take steps to make a clear promotion path for all.

There is a lot of talk about the “glass ceiling” and the barrier women face that prevents them from becoming executives at the top. But this study illustrates that there is a more fundamental problem happening to women early in their career: the systemic bias that prevents women from being seen as a leader who can manage other people.

“Our success must be something other than a solo sport,” Harts said. “We can’t promote and advance ourselves.”

For companies to be part of the solution, employers should be more transparent about how managerial promotions happen.

“Trust is enhanced when employees understand what is expected of them and what they can expect from their leaders,” Harts said. “This transparency can help mitigate unconscious biases or misconceptions about capabilities or trustworthiness.”

To break down stereotypes and build trust between employees of color and leadership, Harts also recommended that companies implement programs where women of color are paired with sponsors in senior roles.

What you can do about this as an employee.

If you keep being told vague “no’s” after every promotion request, start asking more questions about what your peers are doing that you are not.

“They’re not going to admit to having a systemic problem. They’re going to say, ‘We just don’t have it in the budget,’” said Elaine Lou Cartas, a business and career coach for women of color.

“I’ve seen people that got promoted to this where they are also doing the same amount as I was, but I was doing A, B and C. Help me understand,” is the kind of assertive framing you can use to ask more questions, Cartas said.

And if you find the goalpost of promotion metrics keeps moving after your conversation with your manager, that might be the time to start job hunting.

“Once you already have that conversation, and nothing’s being done, or at least there’s no steps or actions for it to be done in the future, that’s when [you] could start looking,” Cartas said.

Ultimately, one missed promotion may not seem like a huge setback, but it adds up over time with lost wages and earning potential, Pong said.

“And then that also ripples out generationally to all the families and family units that each woman of color is supporting, and then those to come,” she said. “So it seems like it might be like no big deal to have this person promoted one or two years later. But ... these things really snowball.”

#Glass ceiling#Broken rung#Women in the workplace#Women of color in the workplace#For every 100 men promoted only 89 Asian women 76 Latinas and 54 Black women would get that same opportunity#Prove it again bias#The Memo: What Women of Color Need to Know to Secure a Seat at the Table#Minda Harts

94 notes


Text

Since the 1970s, crash test dummies - mechanical surrogates of the human body - have been used to determine car safety.

The technology is used to estimate the effectiveness of seatbelts and safety features in new vehicle designs.

Until now the most commonly used dummy has been based on the average male build and weight.

However, women represent about half of all drivers and are more prone to injury in like-for-like accidents.

The dummy that is sometimes used as a proxy for women is a scaled-down version of the male one, roughly the size of a 12-year-old girl.

At 149cm tall (4ft 8ins) and weighing 48kg (7st 5lb), it represents the smallest 5% of women by the standards of the mid-1970s.

However, a team of Swedish engineers has finally developed the first dummy, or to use the more technical term - seat evaluation tool - designed on the body of the average woman.

Their dummy is 162cm (5ft 3ins) tall and weighs 62kg (9st 7lbs), more representative of the female population.

So why have safety regulators not asked for it before now?

"You can see that this is a bias," said Tjark Kreuzinger, who specialises in the field for Toyota in Europe. "When all the men in the meetings decide, they tend to look to their feet and say 'this is it'.

"I would never say that anybody does it intentionally but it's just the mere fact that it's typically a male decision - and that's why we do not have [average] female dummies."

Several times a day in a lab in the Swedish city of Linköping, road accidents are simulated and the consequences are analysed. The sensors and transducers within the dummy provide potentially lifesaving data, measuring the precise physical forces exerted on each body part in a crash event.

The team record data including velocity of impact, crushing force, bending, torque of the body and braking rates.

They are focused on seeing what happens to the biomechanics of the dummy during low-impact rear collisions.

When a woman is in a car crash she is up to three times more likely to suffer whiplash injuries in rear impacts in comparison with a man, according to US government data. Although whiplash is not usually fatal, it can lead to physical disabilities - some of which can be permanent.

It is these statistics that drive Astrid Linder, the director of traffic safety at the Swedish National Road and Transport Research Institute, who is leading the research in Linköping.

"We know from injury statistics that if we look at low severity impacts females are at higher risk.

"So, in order to ensure that you identify the seats that have the best protection for both parts of the population, we definitely need to have the part of the population at highest risk represented," she told the BBC.

Dr Linder believes her research can help shape the way cars are specified in the future and she stresses the key differences between men and women. Females are shorter and lighter than males, on average, and they have different muscle strengths.

Because of this they physically respond differently in a car crash.

"We have differences in the shape of the torso and the centre of gravity and the outline of our hips and pelvis," she explained.

But Dr Linder will still need regulators to enforce the use of the average female she has developed.

Currently there is no legal requirement for car safety tests for rear impact collisions to be carried out on anything other than the average man.

Although some car companies are already using them in their own safety tests they are not yet used in EU or US regulatory tests.

Engineers are starting to create more diverse dummies, including dummies that represent babies, elderly and overweight people.

The average female dummy in Linköping has a fully flexible spine, which means the team can look at what happens to the whole spine, from the head to the lower back, when a woman is injured.

US company Humanetics is the largest manufacturer of crash test dummies worldwide and is seen as the leading voice when it comes to the precision of the technology.

CEO Christopher O'Connor told the BBC he believes that safety has "advanced significantly over the last 20, 30, 40 years" but it "really hasn't taken into account the differences between a male and a female".

"You can't have the same device to test a man and a woman. We're not going to crack the injuries we are seeing today unless we put sensors there to measure those injuries.

"By measuring those injuries we can then have safer cars with safer airbags, with safer seatbelts, with safer occupant compartments that allow for different sizes."

The UN is examining its regulations on crash testing and will determine whether they need to be changed to better protect all drivers.

If changes are made to involve a crash test dummy representing the average female, there is an expectation that women will one day be safer behind the wheel.

"My hope for the future is that the safety of vehicles will be assessed for both parts of the population," Dr Linder said.

527 notes


Text

assuaging my anxieties about machine learning over the last week, I learn that despite there being about ten years of doom-saying about the full automation of radiomics, there's actually a shortage of radiologists now (and, also, the machine learning algorithms that are supposed to be able to detect cancers better than human doctors are very often giving overconfident predictions). truck driving was supposed to be completely automated by now, but my grampa is still truckin' and will probably get to retire as a trucker. companies like GM are now throwing decreasing amounts of money at autonomous vehicle research after throwing billions at cars that can just barely ferry people around san francisco (and sometimes still fail), the most mapped and trained upon set of roads in the world. (imagine the cost to train these things for a city with dilapidated infrastructure, where the lines in the road have faded away, like, say, Shreveport, LA).

we now have transformer-based models that are able to provide contextually relevant responses, but the responses are often wrong, and often in subtle ways that require expertise to needle out. the possibility of giving a wrong response is always there - it's a stochastic next-word prediction algorithm based on statistical inferences gleaned from the training data, with no innate understanding of the symbols it's producing. image generators are questionably legal (at least the way they were trained and how that affects the output of essentially copyrighted material). graphic designers, rather than being replaced by them, are already using them as a tool, and I've already seen local designers do this (which I find cheap and ugly - one taco place hired a local designer to make a graphic for them - the tacos looked like taco bell's, not the actual restaurant's, and you could see artefacts from the generation process everywhere). for the most part, what they produce is visually ugly and requires extensive touchups - if the model even gives you an output you can edit. the role of the designer as designer is still there - they are still the arbiter of good taste, and the value of a graphic designer is still based on whether or not they have a well developed aesthetic taste themself.

for the most part, everything is in tech demo phase, and this is after getting trained on nearly the sum total of available human produced data, which is already a problem for generalized performance. while a lot of these systems perform well on older, flawed, benchmarks, newer benchmarks show that these systems (including GPT-4 with plugins) consistently fail to compete with humans equipped with everyday knowledge.

there is also a huge problem with the benchmarks typically used to measure progress in machine learning that impact their real world use (and tell us we should probably be more cautious because the human use of these tools is bound to be reckless given the hype they've received). back to radiomics, some machine learning models barely generalize at all, and only perform slightly better than chance at identifying pneumonia in pediatric cases when they're exposed to external datasets (external to the hospital where the data they were trained on came from). other issues, like data leakage, make popular benchmarks often an overoptimistic measure of success.

very few researchers in machine learning are recognizing these limits. that probably has to do with the academic and commercial incentives towards publishing overconfident results. many papers are not even in principle reproducible, because the code, training data, etc., is simply not provided. "publish or perish", the bias journals have towards positive results, and the desire of tech companies to get continued funding while "AI" is the hot buzzword, all combined this year for the perfect storm of techno-hype.

which is not to say that machine learning is useless. their use as glorified statistical methods has been a boon for scientists, when those scientists understand what's going on under the hood. in a medical context, tempered use of machine learning has definitely saved lives already. some programmers swear that copilot has made them marginally more productive, by autocompleting sometimes tedious boilerplate code (although, hey, we've had code generators doing this for several decades). it's probably marginally faster to ask a service "how do I reverse a string" than to look through the docs (although, if you had read the docs to begin with would you even need to take the risk of the service getting it wrong?) people have a lot of fun with the image generators, because one-off memes don't require high quality aesthetics to get a chuckle before the user scrolls away (only psychopaths like me look at these images for artefacts). doctors will continue to use statistical tools in the wider machine learning tool set to augment their provision of care, if these were designed and implemented carefully, with a mind to their limitations.

anyway, i hope posting this will assuage my anxieties for another quarter at least.

35 notes


Note

https://link.springer.com/article/10.1007/s10508-023-02717-0

In an online survey of 1124 heterosexual British men using a modified CDC National Intimate Partner and Sexual Violence Survey, 71% of men experienced some form of sexual victimization by a woman at least once during their lifetime.

Hello!

So, I'm guessing this question is in reference to the discussion from a few days ago?

In short, the linked study did not collect data in a way that can be generalized to a larger population. Therefore, the lifetime prevalence that "71% of men experienced some form of sexual victimization by a woman" cannot be viewed as accurate and is likely a significant over-estimate. I'll go into more detail about this below:

Question: What sort of data collection methods allow for generalization?

Answer:

In short, data from a sample can only be generalized to a population if the sample is representative of that population. In "pure" statistics, this would mean a random sample (also referred to as "probability sampling").

In reality, we often cannot take a truly random sample (due to constraints involving time, money, ethics, etc.). So, we attempt to create a "representative" sample instead.

In some cases we can justify generalizing results from a convenience (e.g., volunteer) sample when we don't expect volunteers to differ from non-volunteers. In most cases, however, there is reason to believe that those who would volunteer for a study are different than those who would not. (Think of something like Yelp! reviews: people who had a very bad or very good experience are much more likely to post on Yelp! than someone with an average experience. Therefore, it's not likely that the sample of experiences represented on Yelp! is representative of all customer experiences).

In many cases we can use statistical techniques (such as post-stratification weighting) to make a non-representative sample more representative. (This is a common strategy for reputable polling firms.)

In addition, online surveys are particularly unreliable.
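To make the post-stratification idea mentioned above concrete, here is a minimal sketch in Python. It assumes you know each stratum's share of the real population (for example, from a census). The function name `post_stratified_mean`, the strata, and all of the numbers are hypothetical and chosen only for illustration.

```python
def post_stratified_mean(sample, population_shares):
    """Weight each stratum's sample mean by that stratum's share of the population.

    sample: list of (stratum, value) pairs.
    population_shares: {stratum: share}, with shares summing to 1.
    Raises KeyError if a population stratum has no respondents at all.
    """
    sums, counts = {}, {}
    for stratum, value in sample:
        sums[stratum] = sums.get(stratum, 0.0) + value
        counts[stratum] = counts.get(stratum, 0) + 1
    return sum(
        share * sums[stratum] / counts[stratum]
        for stratum, share in population_shares.items()
    )


# Hypothetical survey: "young" respondents are over-represented (8 of 10),
# even though the two strata are assumed to be equal in the population.
sample = [("young", 1)] * 4 + [("young", 0)] * 4 + [("old", 0)] * 2
population_shares = {"young": 0.5, "old": 0.5}

raw_mean = sum(value for _, value in sample) / len(sample)
weighted_mean = post_stratified_mean(sample, population_shares)
print(raw_mean, weighted_mean)
```

Note that this only corrects for imbalance on the variables you actually collected. It cannot fix self-selection within a stratum, and it cannot be applied at all if the relevant demographics were never recorded.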

Question: How is this relevant to the linked study?

Answer:

The study's methods indicate: "A total of 1190 adults from the United Kingdom participated in the online study in exchange for payment of £1 through Prolific Academic. ... The description forewarned that questions would be asked about non-consensual sexual experiences. ... Age, gender, and sexuality were the only demographic information collected."

If you aren't familiar with Prolific it may not be obvious to you, but the end result is that this recruitment method introduces several problems. (1) Only individuals who are likely to participate in online surveys -- a demographic that likely does not match the whole population -- could possibly be recruited. (2) Of this already limited recruitment sample, individuals self-selected into the survey. If you recall from above, people are much more likely to self-select into a survey when they "have something to say" (i.e., had a very bad experience at a restaurant, have been sexually assaulted). (3) No post-stratification weighting or other statistical techniques for reducing the impact of response bias could be used, because they did not collect any other demographic factors.

The end result here is that this estimate of lifetime victimization is completely unreliable and likely much higher than the true prevalence rate (see point (2) above).
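To illustrate why self-selection pushes an estimate upward, here is a rough sketch in Python. Every number in it is made up for the sake of the example; none of them are estimates from the linked study or from any other report. The function name `observed_prevalence` is likewise hypothetical.

```python
def observed_prevalence(true_prevalence, p_respond_if_victim, p_respond_if_not):
    """Prevalence seen among respondents when response rates differ by experience."""
    responders_victim = true_prevalence * p_respond_if_victim
    responders_other = (1 - true_prevalence) * p_respond_if_not
    return responders_victim / (responders_victim + responders_other)


# Hypothetical inputs: a true prevalence of 20%, where people with the
# experience are three times as likely to opt in to the survey.
observed = observed_prevalence(
    true_prevalence=0.20,
    p_respond_if_victim=0.60,
    p_respond_if_not=0.20,
)
print(round(observed, 2))  # about 0.43, more than double the assumed true rate
```

The size of the inflation depends entirely on the assumed response rates, which is exactly the information a self-selected sample does not give you.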

Question: Okay, so why do you trust the rates given in other reports (e.g., reports by the CDC, UN, etc.)?

Answer:

The key lies in the methodology of the study. The bigger (CDC, UN, etc.) reports I've linked to in the past have made efforts to gather representative samples. They usually also use post-stratification methods. Perhaps most importantly, they clearly outline what results may be unreliable based on their data collection and how.

In contrast, the report you've linked fails to acknowledge the deficit in their data collection and instead seems to be suggesting that this new (much higher) rate for male victimization is the accurate one. (They also suggest this high rate is backed by past research by using a single statistic from a review of studies, ignoring the dozens of other much lower statistics from the same review. This is ... misleading at best, actively disingenuous at worst.)

To add on to the above, generally when you're doing research and find an unexpected result, the proper response is to investigate reasons why this might have happened and include this in your discussion. Sometimes, this means you are explaining an improvement on past methodology and why it should generate a more accurate result (and arguing for future replication). Oftentimes, you are instead explaining a deficit in your methodology and why it resulted in such a severe deviance from past results.

Question: Does this mean the linked study is useless?

Answer:

Nope! Even though the population prevalence estimate is arbitrary, it still gives us some interesting and important information. I'll list some below!

(1) Of the men who have been sexually victimized by a woman, more than half of them have been victimized more than once.

(2) Physical force was used less than 5% of the time, suggesting that the methods for victimization employed by female offenders are different from those employed by male offenders.

(3) Sexual victimization among men is statistically associated with anxiety, depression, and PTSD.

(4) Conformity to masculine norms did not alter the psychological impact of sexual victimization.

Clearly the study gives some interesting information about male victims of female sexual offenders, even if it can't give an accurate population prevalence estimate.

Question: So ... what is an accurate prevalence rate for male victimization by women?

Answer:

First, data on this is shockingly difficult to pin down! There are good reasons for this (which I won't get into right now), but here are some better estimates.

The CDC reports lifetime estimates for sexual victimization for the USA (bonus: check out the methodology and data assessment reports!). Their most recent lifetime estimates for any "contact sexual violence" (completed or attempted rape, made to penetrate, sexual coercion, unwanted sexual contact) are: 54% for women and 31% for men.

However, these prevalence rates do not take the perpetrator sex into consideration. This is important if we're looking for a prevalence rate for male victimization by women, since not all of the 31% prevalence rate for men was by female perpetrators.

For women, depending on the offense subcategory, victims reported only male perpetrators 90-95% of the time.

For men, depending on the offense subcategory, victims reported only female perpetrators 10-72% of the time (notably this means that 28-90% of male victims were victimized by other men compared to the approximate 5-10% of women who were victimized by other women).

One study attempted to reconcile prevalence differences between various studies for the UK, and ended up with the following prevalence ranges: 16-18.5% "less serious" sexual violence for women and 2.5-3.5% "less serious" sexual violence for men; 5-6% attempted/completed rape for women and ~0.5% attempted/completed rape for men.

Finally, this study highlights the variability in prevalence estimates from research articles. The range was very broad and depended on: methods of data collection/sampling, data collection instruments, specific population (e.g., age, country) sampled, specific time range inquired about (e.g., lifetime, since 18), specific assault types (rape, forced kissing, etc.). (I don't have time to go into each of the articles they analyzed, but this is evident from the overviews they presented!)

I hope this helps you understand this paper (and its limitations!). Hopefully, you can apply this same sort of analysis to other studies.


“Men are more likely than women to be involved in a car crash, which means they dominate the numbers of those seriously injured in car accidents. But when a woman is involved in a car crash, she is 47% more likely to be seriously injured than a man, and 71% more likely to be moderately injured, even when researchers control for factors such as height, weight, seat-belt usage, and crash intensity…She is also 17% more likely to die and it all has to do with how the car is designed - and for whom…When men and women are in a car together, the man is most likely to be driving. So not collecting data on passengers more or less translates as not collecting data on women. The infuriating irony of all this is that the gendered passenger/driver norm is so prevalent that…the passenger seat is the only seat that is commonly tested with a female crash-test dummy, with the male crash-test dummy still being the standard dummy for the driver's seat. So stats that include only driver fatalities tell us precisely zero about the impact of introducing the female crash-test dummy.” - Caroline Criado Perez (Invisible Women: Data Bias in a World Designed for Men)

#design bias#male as default#gender data gap#Caroline Criado Perez#womanhood#invisible women#Invisible Women: Data Bias in a World Designed for Men#dibs#car accidents#driving#women’s safety#vehicle safety


A snapshot of bias, the human mind, and AI

New Post has been published on https://thedigitalinsider.com/a-snapshot-of-bias-the-human-mind-and-ai/

Introducing bias & the human mind

The human mind is a landscape filled with curiosity and, at times, irrationality, motivation, confusion, and bias. The last of these adds layers of complexity to how both the human slant and, more recently, the artificial slant affect artificial intelligence systems from concept to scale.

Bias is something that in many cases unintentionally appears – whether it be in human decision-making or the dataset – but its impact on output can be sizeable. With several cases over the years highlighting the social, political, technological, and environmental impact of bias, this piece will explore this important topic and some thoughts on how such a phenomenon can be managed.

Whilst there are many variations and interpretations of bias (which in some cases could themselves be biased), instead of referring to a definition, let’s explore how the human mind might work in certain scenarios.

Imagine two friends (friend A and friend B) at school who’ve had a falling out and made up again after apologies were exchanged. With friend A’s birthday coming up, they’re going through their invite list and land on friend B (who they fell out with).

Do they want to invite them back and risk the awkwardness if another falling out occurs, or should they take the view that they should only invite those they’ve always got along with? The twist, though, is that friend A may have had minor falling outs with the other attendees in the past, but interprets those through the lens that they were insignificant enough to be overlooked.

The follow-up from the above example turns to whether friend A’s decision is fair. Now, fairness adds to the difficulty as there’s no scientific definition of what fairness really is.

However, some might align fairness with making a balanced judgment after considering the facts or doing what is right (even if that’s biased!). These are just a couple of ways in which the mind can distort and mould the completion of tasks, whether they’re strategic or technical.

Before going into the underlying ways in which bias can be managed in AI systems, let’s start from the top: leadership.

Leadership, bias, and Human In the Loop Systems

The combination of leadership and bias introduces important discussions about how such a trait can be managed. “The fish rots from the head down” is a common phrase used to describe leadership styles and their impact across both the wider company and their teams, but this phrase can also be extended to how bias weaves down the chain of command.

For example, if a leader within the C-suite doesn’t get along with the CEO or has had several previous tense exchanges, they may ultimately, subconsciously have a blurred view of the company vision that then spills down, with distorted conviction, to the teams.

Leadership and bias will always remain an important conversation in the boardroom, and there have been some fascinating studies exploring this in more depth, for example, Shaan Madhavji’s piece on the identification and management of leadership bias [1]. It’s an incredibly eye-opening subject, and one that in my view will become increasingly topical as time moves on.

As we shift from leadership styles and bias to addressing bias in artificial intelligence-based systems, an area that’ll come under further spotlight will be the effectiveness of Human In the Loop Systems (HITL).

Whilst their usefulness varies across industries, in summary, HITL systems fuse both the art of human intuition and the efficiency of machines: an incredibly valuable partnership where complex decision-making at speed is concerned.

Additionally, when linked to bias, the human link in the chain can be key in identifying bias early on to ensure adverse effects aren’t felt later on. On the other hand, HITL won’t always be a Spring cleaning companion: complexities around getting a sizeable batch of training data combined with practitioners who can effectively integrate into a HITL environment can blur the productivity vs efficiency drive the company is aiming to achieve.

Conclusions & the future of bias

In my view, irrespective of how much better HITL systems might (or might not) become, I don’t believe bias can be eliminated, and I don’t believe in the future – no matter how advanced and intelligent AI becomes – we’ll be able to get rid of it.

It’s very much something that’s so deeply woven in that it’s not always possible to see or even discern it. Furthermore, sometimes bias traits are only revealed when an individual points them out to someone else, and even then there can be bias on top of bias!

As we look to the future of Generative AI, its increasingly challenging ethical considerations, and the wide-ranging debate on how far its usefulness will extend at scale, one thought will always remain at heart: on occasion, we won’t be able to mitigate the impacts of bias until we’re in the moment and the impact is being felt there and then.

Bibliography

[1] shaan-madhavji.medium.com. (n.d.). Leadership Bias: 12 cognitive biases to become a decisive leader. [online] Available at: https://hospitalityinsights.ehl.edu/leadership-bias.


Over the past two years, more than 20 states have expanded access to state jobs through a simple move: assessing or removing bachelor’s degree requirements. With state, local, and federal governments employing 15% of the U.S. workforce, these actions are of enormous consequence, especially for “STARs,” or workers who are skilled through alternative routes. STARs—who have gained their skills through community college, the military, partial college, certification programs, and, most commonly, on-the-job training—represent over half of the nation’s workforce, and currently occupy approximately 2 million state jobs.

Government leaders see removing bachelor’s degree requirements as critical to meeting their hiring needs and public service delivery obligations. And at a time when states are struggling to fill a high number of open roles, removing these requirements can attract a larger pool of talent.

Many states already have laws or policies that forbid discrimination based on educational attainment. But in practice, hiring patterns have favored degrees, and the composition of the state workforce reflects this. While they comprise half of the workforce, STARs fill only 36% of state jobs, representing a gap of 1 million good state jobs for STARs nationwide. The explicit commitment to removing degree requirements is a signal to STARs that they are welcome to apply.

Further, these actions are meant to build a state workforce that reflects the community it serves. Historically, government employment has been used to improve economic equity, providing increased economic opportunities for members of historically disadvantaged groups (notably women and Black workers). In recent decades, however, the bias toward credentialing has resulted in the inadvertent exclusion of STARs, with disproportionate consequences. When a bachelor’s degree is required for a position, employers automatically screen out almost 80% of Latino or Hispanic workers and nearly 70% of Black, veteran, and rural workers. Increased STAR hiring will help correct this inequity.

It is still too early to measure the impact of these changes on hiring behavior, as it will take time while hiring numbers slowly accumulate through job turnover and new positions. Yet we can already see signs that the effort is bearing fruit. In the first quarter of this year, more than 20 states made a yearlong commitment to focus on skills-based hiring through the National Governors Association’s Skills in the States Community of Practice. As one of the lead partners, our organization—Opportunity@Work—supports states through peer learning to prepare and make action plans for the organizational changes needed to implement skills-based practices, which will ultimately improve hiring and advancement outcomes for STARs.

We also see changes in state job postings. We analyzed two years of data on jobs that paid over the national median wage and were posted by all the states that took action to remove degree requirements by April 2023. Our findings show that in the 12 months prior to these state actions, 51.1% of roles explicitly listed a bachelor’s degree as a requirement. In the 12 months following, that percentage fell to 41.8%, a nearly 10-point shift. The largest shifts occurred in job postings for roles in management, IT, administration, and human resources, all occupations in which STARs have been underrepresented in the public sector compared to the private sector. For example, in state governments, 69% of general and operations managers hold a bachelor’s degree, while only 45% do in the private sector.
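As a quick check on the job-posting figures above, the short sketch below separates the percentage-point change from the relative change. The function name `degree_requirement_shift` is a hypothetical helper introduced only for this illustration; the two input percentages are the ones quoted in the paragraph.

```python
def degree_requirement_shift(before_pct, after_pct):
    """Return (percentage-point drop, relative drop as a fraction)."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive")
    point_drop = before_pct - after_pct
    relative_drop = point_drop / before_pct
    return point_drop, relative_drop


# Shares of postings listing a bachelor's degree requirement, as quoted above.
points, relative = degree_requirement_shift(51.1, 41.8)
print(f"{points:.1f} percentage points")  # prints 9.3 percentage points
print(f"{relative:.1%} relative drop")    # prints 18.2% relative drop
```

The 9.3-point drop corresponds to roughly an 18% relative reduction in postings that list the requirement.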

State leaders view these actions as a critical first step. “We are creating opportunities for everyone, not just those with higher education,” said Melissa Walker of the Colorado Department of Personnel and Administration. “We want to draw on all kinds of experience.” Colorado has pragmatically focused on policy implementation and behavior change; in addition to updates to agency rules and regulations, its executive order focused on a transition to skills-based hiring as the norm for Colorado human resources, including funding for the training of hiring managers and development of a skills-based hiring toolkit. The state’s Department of Personnel and Administration is providing training and resources for human resources teams across state agencies, enabling each to make the necessary changes in their processes and procedures. Resources include a new job description template designed to identify skills—a simple tool that promotes skills-first thinking and behavior change at the hiring manager level.

Culture and systems change both take time. Adjusting common processes and procedures—as well as attitudes and behavior—is challenging, especially in a large, decentralized state government. Yet more than 20 states have begun this hard work. This month, bolstered by these early successes, Opportunity@Work is proud to launch the STARs Public Sector Hub to support these states and others on their skills-based journeys and build the public workforce to meet this moment.


Invisible Women: Exposing Data Bias in a World Designed for Men (Caroline Criado-Perez, 2019)

"The upshot of failing to capture all this data is that women’s unpaid work tends to be seen as ‘a costless resource to exploit’, writes economics professor Sue Himmelweit.

And so when countries try to rein in their spending it is often women who end up paying the price.

Following the 2008 financial crash, the UK has seen a mass cutting exercise in public services.

Between 2011 and 2014 children’s centre budgets were cut by £82 million and between 2010 and 2014, 285 children’s centres either merged or closed.

Between 2010 and 2015 local-authority social-care budgets fell by £5 billion, social security has been frozen below inflation and restricted to a household maximum, and eligibility for a carers’ allowance depends on an earnings threshold that has not kept up with increases in the national minimum wage.

Lots of lovely money-saving.

The problem is, these cuts are not so much savings as a shifting of costs from the public sector onto women, because the work still needs to be done.

By 2017 the Women’s Budget Group estimated that approximately one in ten people over the age of fifty in England (1.86 million) had unmet care needs as a result of public spending cuts.

These needs have become, on the whole, the responsibility of women. (…)

In 2017 the House of Commons library published an analysis of the cumulative impact of the government’s ‘fiscal consolidation’ between 2010 and 2020.

They found that 86% of cuts fell on women.

Analysis by the Women’s Budget Group (WBG) found that tax and benefit changes since 2010 will have hit women’s incomes twice as hard as men’s by 2020.

To add insult to injury, the latest changes are not only disproportionately penalising poor women (with single mothers and Asian women being the worst affected), they are benefiting already rich men.

According to WBG analysis, men in the richest 50% of households actually gained from tax and benefit changes since July 2015."
