#computer generation

Explore tagged Tumblr posts

Text

Do you remember when people did this?:

I made an ai read 130 000 words of Nancy Drew & this is what it gave me!

[really weird media]

I think we need to bring that back. In those days people KNEW that computer-generated media based on machine learning was just right enough that you could see it fit the context, but still so not correct & not close to what it should be.

2 notes


Text

"I asked ChatGPT" OK well I ran it through the Bat Computer and it looks like Mr. Freeze is hiding out in the abandoned ice cream factory.

4K notes


Text

chipset and database learn about internet scams

#been having fun w these guys#i mentioned on bsky that i view these guys as hosts of an educational series where they teach about general computer literacy#chipset is very bad at internet and software stuff but is proficient w hardware. database is the opposite and has no idea what a CPU is#fern's sketchbook#original art#csdb

4K notes


Photo

Kenneth Snelson Chain Bridge Bodies (1990)

#art#kenneth snelson#graphic design#3d#render#computer generated#cgi#artwork#artist#90s#1990s#1990#u

3K notes


Text

[YouTube video]

#youtube video#computer technology#computer generation#computer science#computer basics#learn computer in Hindi#computer graphics#computer education#Hindi tutorial#technology explained#computer fundamentals#computing history#computer hardware#software tutorial#computer engineering#Hindi tech education#Youtube

0 notes

Text

They just like me fr

#my art#fanart#maccadam#transformers#cliffjumper#bumblebee#tf bumblebee#transformers g1#tf generation one#basing them off my childhood to feel something#the autobots share one computer for non mission stuff as a treat#transformers generation one#cliffjumper refuses to give up the computer so they share it instead

1K notes


Text

jayvik dune au?

#this has been on my computer for d a y s#might as well#ive been talking a lot about this au with a friend actually#the general gist is#messiah viktor soldier? jayce bene gesserit mel#of course at some point jayce realizes he needs to kill vik to prevent war#and you know how that goes#could go more in depth tomorrow maybe?#anyway the tags#art#digital art#fanart#my art#artists on tumblr#illustration#arcane#arcane league of legends#jayvik#jayce talis#viktor arcane#lol its always so funny how viktor arcane seems like his full government name

1K notes


Text

I suppose the thin silver lining to the drive to replace human customer service workers with generative AI is that the predatory robocall scammers no longer seem to have enough human operators to close the deal, so even if you play along, the robot just claims it's "transferring" you and then hangs up.

720 notes


Text

The indescribable tension between an overworked and underpaid smut writer, and his biggest fan hater.

(for @frummpets)

#SVSSS#Shen yuan#shang qinghua#cumplane#Normally I don't tag with ship names but this one is a special case.#Confession time: When I first had SVSSS described to me I 100% thought the main pairing was between these two.#The dynamic is impeccable! Even if it's 'just a fan ship' I stand by it.#Sorry to the people who like to think of them as handsome pretty boys. I don't.#These guys sit in their rooms and use the computer for 90% of waking hours. They are not looking after themselves well enough for good skin#They can be cute in their own way. People with acne and missed shaving spots deserve to have their romances too.#And sloppy hate makeouts <3#Hi Sol! I truly did whoop and holler when drawing your name for the raffle!#You've been so kind and generous towards me and I'm happy to finally have the opportunity to give back some of that joy!#Thank you so much for all your support and the incredible fanart <3 You've made my day so many times!#I hope this silly mini comic is to your liking!#Playing around with colours for this one was a blast!

7K notes


Text

The problem with ai art isn't that you're "lazily typing in prompts" because it is actually incredibly difficult to get a result you want & there is a lot of work & thought that gets put in.

The problem is that the machine learning program is fed with stolen art.

Side note: can we please stop calling things AI? They are computer programs, software, machine learning, computer generation. Call it a program.

2 notes


Text

*GUNDAMs your clones*

#Star Wars#TCW#Commander Cody#Captain Rex#GUNDAM#I started this in a GUNDAM-happy fervor last summer because I'd been rewatching Witch from Mercury with a couple friends#And had a long commute good for crossover brainstorming#The art got put on the shelf for other things but it's finally done!#For some bonus info:#(more of which is in my first reblog haha)#A bunch of CC-2224's main body bits are also detachable GUND-Bits#I haven't decided if ARC-7567 has any GUND-Bits but maybe the kama detaches to do... something. Shielding maybe.#But they deffo also both have beam swords (CCs get 1 ARCs get 2) hidden away somewhere#They require pilots but each GUNDAM (General Utility Neural-Directed Assault Machine) has an unusually advanced computer intelligence#Nobody's quite sure how Kamino produces them...#If I was cool I would've gotten creative with the blasters/bits and made the text more mechanical but it was not happening chief#Anyways enjoy!#ID in alt text#Edit: flipped one of the flight packs for better visual symmetry

416 notes


Text

We need a slur for people who use AI

#ai#artificial intelligence#chatgpt#tech#technology#science#grok ai#grok#r/196#196#r/196archive#/r/196#rule#meme#memes#shitpost#shitposting#slur#chatbot#computers#computing#generative ai#generative art

300 notes


Text

BSOD v2 Ocean Waves

#vaporwave#bsod#technology#netart#cyberpunk#blue screen of death#ocean#windows#microsoft#computer#v a p o r w a v e#dalle2#openai#ai generated#waves#cyber#aesthetic#digital art#glitch retro

253 notes


Text

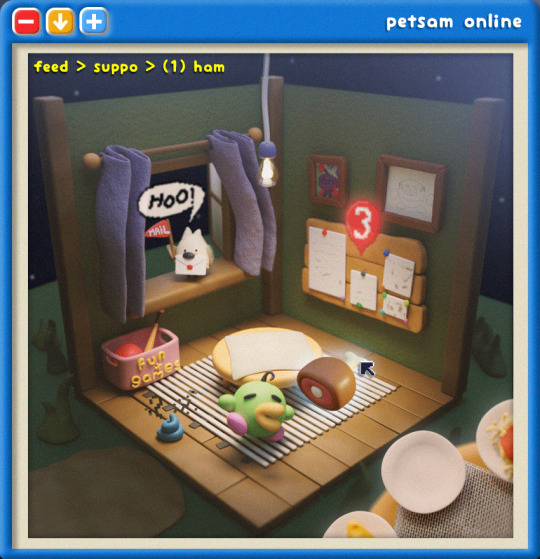

my dream pet sim

#3dart#art#artists on tumblr#blender#mockup#postpet inspired#postpet#i want to make a cozy online pet sim#with randomly generated little creatures#for the computer#where you and your friends creatures can make an egg together#and send letters :s#game idea#clay#pet sim#tamagotchi#pc games

316 notes


Text

should not have let movie companies get away with calling something mostly or entirely 3D animated "live action" just because it's realistically textured. they don't even have sets.

#'live action remake of an animated movie-' no they are both animated#honestly w modern cgi i don't even think having human actors makes it live action if like#>50% of the cast and a substantial number of sets lighting changes etc etc are computer generated#it made more sense to be called live action when cgi sucked. times have changed. if you are creating the whole scene with animation#i think that should be different. like live actor or something. we have to make a distinction#patch me through to palaven command

233 notes
