#business use cases for generative AI

Text

How Can Generative AI Enhance Your Business's Organisational and Operational Processes

Experience the future of business transformation with Generative AI. From customer support to content creation, sales optimization, and HR process automation, this cutting-edge technology is your key to enhanced efficiency and innovation. Learn how Generative AI can automate and elevate your operations while delivering personalized experiences and data-driven insights.

At Webclues Infotech, we're your partners in harnessing the true potential of Generative AI. Join us in this technological revolution and take your business to new heights in a fast-paced world. Read the blog for more insights.

#generative AI for customer support#Generative AI for sales#generative AI for marketing#Generating AI for HR#generative AI services#Generative AI chatbots#generative ai in business#business use cases for generative AI

0 notes

Text

there are many, many legitimate problems with generative ai, but if your main grievance with it is that it's making people "lazy" and/or ruining the sacred art of the corporate email, i'm going to have a very hard time taking anything you have to say seriously

#like if i had just woken up from a decade-long coma#and the only thing you told me about generative ai#was that people forced it to write cover letters for them#i would be saying yay yippee and other such things#i am ALWAYS in favor of people putting less effort into stupid office jobs that contribute nothing of value to society#not to mention if a business is using ai to filter job applications#(which i suspect is the case for the vast majority of the companies on indeed nowadays)#it kind of /deserves/ employees who outsource their work to chatgpt

4 notes

Text

Modern AI reshapes leadership. Replace ladders with loops, fluff with clarity, and learn how to lead with outcomes, not options. Trust level: recalibrated.

#AI leadership#AI strategy#AI use cases#blog fiction#business value#clarity over complexity#digital transformation#executive mindset#Generative AI#Humor#Joseph Kravis#outcome-based leadership#technology value#zero trust

0 notes

Text

#AI Factory#AI Cost Optimize#Responsible AI#AI Security#AI in Security#AI Integration Services#AI Proof of Concept#AI Pilot Deployment#AI Production Solutions#AI Innovation Services#AI Implementation Strategy#AI Workflow Automation#AI Operational Efficiency#AI Business Growth Solutions#AI Compliance Services#AI Governance Tools#Ethical AI Implementation#AI Risk Management#AI Regulatory Compliance#AI Model Security#AI Data Privacy#AI Threat Detection#AI Vulnerability Assessment#AI proof of concept tools#End-to-end AI use case platform#AI solution architecture platform#AI POC for medical imaging#AI POC for demand forecasting#Generative AI in product design#AI in construction safety monitoring

0 notes

Text

I have been on a Willy Wonkified journey today and I need y'all to come with me

It started so innocently. Scrolling Google News I come across this article on Ars Technica:

At first glance I thought what happened was parents saw AI-generated images of an event their kids were at and became concerned, then realized it was fake. The reality? Oh so much better.

On Saturday, event organizers shut down a Glasgow-based "Willy's Chocolate Experience" after customers complained that the unofficial Wonka-inspired event, which took place in a sparsely decorated venue, did not match the lush AI-generated images listed on its official website.... According to Sky News, police were called to the event, and "advice was given."

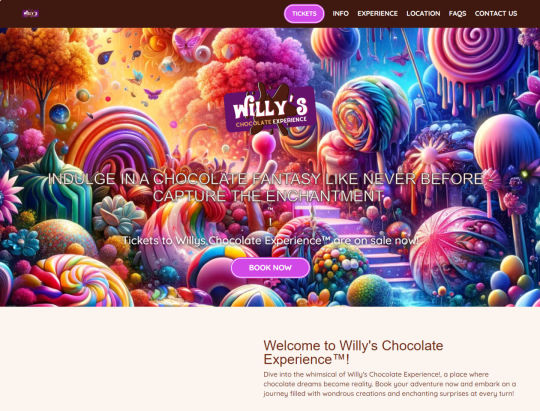

Thing is, the people who paid to go were obviously not expecting exactly this:

But I can see how they'd be a bit pissed upon arriving to this:

It gets worse.

"Tempest, how could it possibly--"

source of this video that also includes this charming description:

Made up a villain called The Unknown — 'an evil chocolate maker who lives in the walls'

There is already a meme.

Oh yes, the Wish.com Oompa Loompa:

Who has already done an interview!

As bad (and hilarious) as this all is, I got curious about the company that put on this event. Did they somehow overreach? Did the actors they hired back out at the last minute? (Or after they saw the script...) Oddly enough, it doesn't seem so!

Given what I found when poking around I'm legit surprised there was an event at all. Cuz this outfit seems to be 100% a scam.

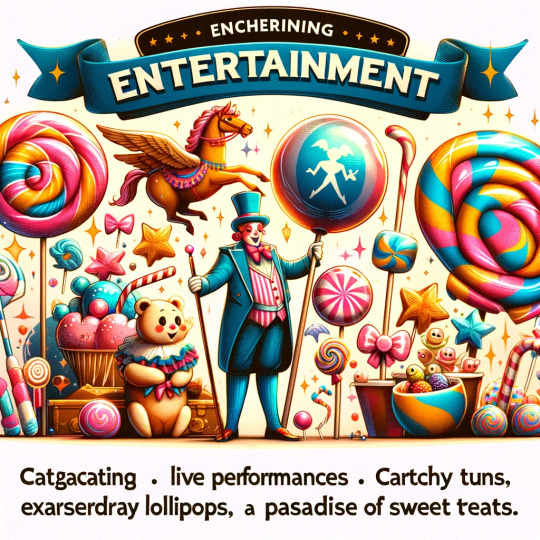

The website for this specific event is here and it has many AI generated images on it, as stated. I don't think anyone who bought tickets looked very closely at these images, otherwise they might have been concerned about how much Catgacating their children would be exposed to.

Yes, Catgacating. You know, CATgacating!

I personally don't think anyone should serve exarserdray flavored lollipops in public spaces given how many people are allergic to it. And the sweet teats might not have been age appropriate.

Though the Twilight Tunnel looks pretty cool:

I'm not sure that Dim Tight Twdrding is safe. I've also been warned that Vivue Sounds are in that weird frequency range that makes you poop your pants upon hearing them.

Yes, Virginia, these folks used an AI image generator for everything on the website and used Chat GPT for some of the text! From the FAQ:

Q: I cannot go on the available days. Will you have more dates in the future? A: Should there be capacity when you arrive, then you will be able to enter without any problems. In the event that this is not the case, we may ask you to wait a bit.

Fear not, for this question is asked again a few lines down and the answer makes more sense.

Curious about the events company behind this disaster, I took myself over to the homepage of House of Illuminati and I was not disappointed.

I would 100% trust these people to plan my wedding.

This abomination of a website is a badly edited WordPress blog filled with AI art and just enough blog posts to make the casual viewer think that it's a legit business for about 0.0004 seconds.

Their attention to detail is stunning, from how they left up the default first post every WP blog gets to how they didn't bother changing the name on several images, thus revealing where they came from. Like this one:

With the lovely and compact filename "DALL·E-2024-01-30-09.50.54-Imagine-a-scene-where-fantasy-and-reality-merge-seamlessly.-In-the-foreground-a-grand-interactive-gala-is-taking-place-filled-with-elegant-guests-i.png"

"Concept.png" came from the same AI generator that gets text almost, but not quiiiiiite right:

There are a suspicious number of .webp images in the uploads, which makes me think they either stole them from other sites where AI "art" was uploaded or they didn't want to pay for the hi-res versions of some and just grabbed the preview image.

The real fun came when I noticed this filename: Before-and-After-Eventologists-Transformation-Edgbaston-Cricket-Ground-1024x1024-1.jpg and decided to do a Google image search. Friends, you will be shocked to hear that the image in question, found on this post touting how they can transform a boring warehouse into a fun event space, was stolen from this actual event planner.

Even better, this weirdly grainy image?

From a post that claims to be about the preparations for a "Willy Wonka" experience (we'll get to this in a minute), is not only NOT an actual image of anyone preparing anything for Illuminati's event, it is stolen from a YouTube thumbnail that's been chopped to remove the name of the company that actually made this. Here's the video.

If you actually read the blog posts they're all copypasta or some AI generated crap. To the point where this seems like not a real business at all. There's very specific business information at the bottom, but nothing else seems real.

As I said, I'm kinda surprised they put on an event at all. This has, "And then they ran off with all our money!" written all over it. I'm perplexed.

And also wondering when the copyright lawyers are gonna start calling, because...

This post explicitly says they're putting together a "Willy Wonka’s Chocolate Factory Experience" complete with golden tickets.

Somewhere along the line someone must have wised up, because the actual event was called "Willys Chocolate Experience" (note the lack of apostrophe) and the script they handed to the actors about 10 minutes before they were supposed to "perform" was about a "Willy McDuff" and his chocolate factory.

As I was going through this madness with friends in a chat, one pointed out that it took very little prompting to get the free Chat GPT to spit out an event description and such very similar to all this while avoiding copyrighted phrases. But he couldn't figure out where the McDuff came from since it wasn't the type of thing GPT would usually spit out...

Until he altered the prompt to say that it would be happening in Glasgow, Scotland.

You cannot make this stuff up.

But truly, honestly, I do not even understand why they didn't take the money and run. Clearly this was all set up to be a scam. A lazy, AI generated scam.

Everything from the website to the event images to the copy to the "script" to the names of things was either stolen or AI generated (aka stolen). Hell, I'd be looking for some poor Japanese visitor wandering the streets of Glasgow, confused, after being jacked for his mascot costume.

HE LIVES IN THE WALLS, Y'ALL.

#long post#Willy Wonka#Wonka#Willy Wonka Experience#Willy Wonka Experience disaster#Willy's Chocolate Experience#Willys Chocolate Experience#THE UNKNOWN#Wish.com Oompa Loompa#House of Illuminati#AI#ai generated

8K notes

Text

"Balaji’s death comes three months after he publicly accused OpenAI of violating U.S. copyright law while developing ChatGPT, a generative artificial intelligence program that has become a moneymaking sensation used by hundreds of millions of people across the world.

Its public release in late 2022 spurred a torrent of lawsuits against OpenAI from authors, computer programmers and journalists, who say the company illegally stole their copyrighted material to train its program and elevate its value past $150 billion.

The Mercury News and seven sister news outlets are among several newspapers, including the New York Times, to sue OpenAI in the past year.

In an interview with the New York Times published Oct. 23, Balaji argued OpenAI was harming businesses and entrepreneurs whose data were used to train ChatGPT.

“If you believe what I believe, you have to just leave the company,” he told the outlet, adding that “this is not a sustainable model for the internet ecosystem as a whole.”

Balaji grew up in Cupertino before attending UC Berkeley to study computer science. It was then he became a believer in the potential benefits that artificial intelligence could offer society, including its ability to cure diseases and stop aging, the Times reported. “I thought we could invent some kind of scientist that could help solve them,” he told the newspaper.

But his outlook began to sour in 2022, two years after joining OpenAI as a researcher. He grew particularly concerned about his assignment of gathering data from the internet for the company’s GPT-4 program, which analyzed text from nearly the entire internet to train its artificial intelligence program, the news outlet reported.

The practice, he told the Times, ran afoul of the country’s “fair use” laws governing how people can use previously published work. In late October, he posted an analysis on his personal website arguing that point.

No known factors “seem to weigh in favor of ChatGPT being a fair use of its training data,” Balaji wrote. “That being said, none of the arguments here are fundamentally specific to ChatGPT either, and similar arguments could be made for many generative AI products in a wide variety of domains.”

Reached by this news agency, Balaji’s mother requested privacy while grieving the death of her son.

In a Nov. 18 letter filed in federal court, attorneys for The New York Times named Balaji as someone who had “unique and relevant documents” that would support their case against OpenAI. He was among at least 12 people — many of them past or present OpenAI employees — the newspaper had named in court filings as having material helpful to their case, ahead of depositions."

3K notes

Text

With Agoge AI, users can expect a tailored approach to their communication needs. From perfecting negotiation techniques to enhancing presentation skills, this AI tool provides training to excel in business communication. 🗣️👥

#ai business#ai update#ai#ai community#ai developers#ai tools#ai discussion#ai development#ai generated#ai marketing#ai engineer#ai expert#ai revolution#ai technology#ai use cases#ai in digital marketing#ai integration#ai in ecommerce

0 notes

Note

genuinely curious but I don't know how to phrase this in a way that sounds less accusatory so please know I'm asking in good faith and am just bad at words

what are your thoughts on the environmental impact of generative ai? do you think the cost of all the cooling systems is worth the tasks generative ai performs? I've been wrangling with this because while I feel like I can justify it at smaller scales, that would mean it isn't a publicly available tool, which I also feel uncomfortable with

the environmental impacts of genAI are almost always one of three things, both by their detractors and their boosters:

vastly overstated

stated correctly, but with a deceptive lack of context (ie, giving numbers in watt-hours, or amount of water 'used' for cooling, without necessary context like what comparable services use or what actually happens to that water)

assumed to be on track to grow constantly as genAI sees universal adoption across every industry

like, when water is used to cool a datacenter, that datacenter isn't just "a big building running chatgpt" -- datacenters are the backbone of the modern internet. now, i mean, all that said, the basic question here: no, i don't think it's a good tradeoff to be burning fossil fuels to power the magic 8ball. but asking that question in a vacuum (imo) elides a lot of the realities of power consumption in the global north by exceptionalizing genAI as opposed to, for example, video streaming, or online games. or, for that matter, for any number of other things.

so to me a lot of this stuff seems like very selective outrage in most cases, people working backwards from all the twitter artists on their dashboard hating midjourney to find an ethical reason why it is irredeemably evil.

& in the best, good-faith cases, it's taking at face value the claims of genAI companies and datacenter owners that the power usage will continue spiralling as the technology is integrated into every aspect of our lives. but to be blunt, i think it's a little naive to take these estimates seriously: these companies rely on their stock prices remaining high and attractive to investors, so they have enormous financial incentives not only to lie but to make financial decisions as if the universal adoption boom is just around the corner at all times. but there's no actual business plan! these companies are burning gigantic piles of money every day, because this is a bubble

so tldr: i don't think most things fossil fuels are burned for are 'worth it', but the response to that is a comprehensive climate politics and not an individualistic 'carbon footprint' approach, certainly not one that chooses chatgpt as its battleground. genAI uses a lot of power but at a rate currently comparable to other massively popular digital leisure products like fortnite or netflix -- forecasts of it massively increasing by several orders of magnitude are in my opinion unfounded and can mostly be traced back to people who have a direct financial stake in this being the case because their business model is an obvious boondoggle otherwise.

704 notes

Text

I implore all of you to please respect the artists around you. The Generative AI debate has always been good at one thing and that's revealing who doesn't respect artists and wants to use them as a means to an end. This comes down to just basic consent and respect for boundaries. Even when artists just bring up how they would like to have the power to opt out of data training (have legal protection if their work is scraped and transparency in data processes to ensure it hasn't) or be fairly compensated for their work being scraped by a multi-million or billion dollar company, they're called entitled gatekeepers. You're welcome to give your art, photos or writing to AI companies for free if you want, but others should be able to opt out or be paid.

All of these artists (none of whom are by any means rich and may even be actively struggling to afford necessities) are being treated with contempt, as if they're the elite 1% and access to art is impossible (there are hundreds if not thousands of small local artists that can be hired by small businesses for instance). It is disheartening to see so many people telling artists, many of whom have spent decades honing their crafts, that they should be happy they're going un-credited and uncompensated. In any other case of copyright infringement or plagiarism, an artist's ability to protect their work has been the norm for over a century; it shouldn't be any different now.

Artists should have a say over what happens with their work, just as everyday people should have a say over what happens with their own personal photos and likeness. If someone specifically took photographs of you and your family without permission and mixed them together to make "new" people for their advertisements, you probably wouldn't like that very much (and yes, that is also happening. Gen AI doesn't just scrape art, it scrapes photos and doesn't discern between what's an ethical photo and what's not ((i.e., photos that would break the law to be in possession of, let's say))).

If your company model solely relies on unlimited access to everyone's art, writing, photos and likeness for free without permission and while deliberately violating personal boundaries, maybe your model shouldn't exist.

405 notes

Text

Why I don’t like AI art

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in CHICAGO with PETER SAGAL on Apr 2, and in BLOOMINGTON at MORGENSTERN BOOKS on Apr 4. More tour dates here.

A law professor friend tells me that LLMs have completely transformed the way she relates to grad students and post-docs – for the worse. And no, it's not that they're cheating on their homework or using LLMs to write briefs full of hallucinated cases.

The thing that LLMs have changed in my friend's law school is letters of reference. Historically, students would only ask a prof for a letter of reference if they knew the prof really rated them. Writing a good reference is a ton of work, and that's rather the point: the mere fact that a law prof was willing to write one for you represents a signal about how highly they value you. It's a form of proof of work.

But then came the chatbots and with them, the knowledge that a reference letter could be generated by feeding three bullet points to a chatbot and having it generate five paragraphs of florid nonsense based on those three short sentences. Suddenly, profs were expected to write letters for many, many students – not just the top performers.

Of course, this was also happening at other universities, meaning that when my friend's school opened up for postdocs, they were inundated with letters of reference from profs elsewhere. Naturally, they handled this flood by feeding each letter back into an LLM and asking it to boil it down to three bullet points. No one thinks that these are identical to the three bullet points that were used to generate the letters, but it's close enough, right?

Obviously, this is terrible. At this point, letters of reference might as well consist solely of three bullet-points on letterhead. After all, the entire communicative intent in a chatbot-generated letter is just those three bullets. Everything else is padding, and all it does is dilute the communicative intent of the work. No matter how grammatically correct or even stylistically interesting the AI generated sentences are, they have less communicative freight than the three original bullet points. After all, the AI doesn't know anything about the grad student, so anything it adds to those three bullet points is, by definition, irrelevant to the question of whether they're well suited for a postdoc.

Which brings me to art. As a working artist in his third decade of professional life, I've concluded that the point of art is to take a big, numinous, irreducible feeling that fills the artist's mind, and attempt to infuse that feeling into some artistic vessel – a book, a painting, a song, a dance, a sculpture, etc – in the hopes that this work will cause a loose facsimile of that numinous, irreducible feeling to manifest in someone else's mind.

Art, in other words, is an act of communication – and there you have the problem with AI art. As a writer, when I write a novel, I make tens – if not hundreds – of thousands of tiny decisions that are in service to this business of causing my big, irreducible, numinous feeling to materialize in your mind. Most of those decisions aren't even conscious, but they are definitely decisions, and I don't make them solely on the basis of probabilistic autocomplete. One of my novels may be good and it may be bad, but one thing it definitely is is rich in communicative intent. Every one of those microdecisions is an expression of artistic intent.

Now, I'm not much of a visual artist. I can't draw, though I really enjoy creating collages, which you can see here:

https://www.flickr.com/photos/doctorow/albums/72177720316719208

I can tell you that every time I move a layer, change the color balance, or use the lasso tool to nip a few pixels out of a 19th century editorial cartoon that I'm matting into a modern backdrop, I'm making a communicative decision. The goal isn't "perfection" or "photorealism." I'm not trying to spin around really quick in order to get a look at the stuff behind me in Plato's cave. I am making communicative choices.

What's more: working with that lasso tool on a 10,000 pixel-wide Library of Congress scan of a painting from the cover of Puck magazine or a 15,000 pixel wide scan of Hieronymus Bosch's Garden of Earthly Delights means that I'm touching the smallest individual contours of each brushstroke. This is quite a meditative experience – but it's also quite a communicative one. Tracing the smallest irregularities in a brushstroke definitely materializes a theory of mind for me, in which I can feel the artist reaching out across time to convey something to me via the tiny microdecisions I'm going over with my cursor.

Herein lies the problem with AI art. Just like with a law school letter of reference generated from three bullet points, the prompt given to an AI to produce creative writing or an image is the sum total of the communicative intent infused into the work. The prompter has a big, numinous, irreducible feeling and they want to infuse it into a work in order to materialize versions of that feeling in your mind and mine. When they deliver a single line's worth of description into the prompt box, then – by definition – that's the only part that carries any communicative freight. The AI has taken one sentence's worth of actual communication intended to convey the big, numinous, irreducible feeling and diluted it amongst a thousand brushstrokes or 10,000 words. I think this is what we mean when we say AI art is soul-less and sterile. Like the five paragraphs of nonsense generated from three bullet points from a law prof, the AI is padding out the part that makes this art – the microdecisions intended to convey the big, numinous, irreducible feeling – with a bunch of stuff that has no communicative intent and therefore can't be art.

If my thesis is right, then the more you work with the AI, the more art-like its output becomes. If the AI generates 50 variations from your prompt and you choose one, that's one more microdecision infused into the work. If you re-prompt and re-re-prompt the AI to generate refinements, then each of those prompts is a new payload of microdecisions that the AI can spread out across all the words or pixels, increasing the amount of communicative intent in each one.

Finally: not all art is verbose. Marcel Duchamp's "Fountain" – a urinal signed "R. Mutt" – has very few communicative choices. Duchamp chose the urinal, chose the paint, painted the signature, came up with a title (probably some other choices went into it, too). It's a significant work of art. I know because when I look at it I feel a big, numinous irreducible feeling that Duchamp infused in the work so that I could experience a facsimile of Duchamp's artistic impulse.

There are individual sentences, brushstrokes, single dance-steps that initiate the upload of the creator's numinous, irreducible feeling directly into my brain. It's possible that a single very good prompt could produce text or an image that had artistic meaning. But it's not likely, in just the same way that scribbling three words on a sheet of paper or painting a single brushstroke is unlikely to produce a meaningful work of art. Most art is somewhat verbose (but not all of it).

So there you have it: the reason I don't like AI art. It's not that AI artists lack for the big, numinous irreducible feelings. I firmly believe we all have those. The problem is that an AI prompt has very little communicative intent and nearly every (but not every) good piece of art has more communicative intent than fits into an AI prompt.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/25/communicative-intent/#diluted

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#art#uncanniness#eerieness#communicative intent#gen ai#generative ai#image generators#artificial intelligence#generative artificial intelligence#gen artificial intelligence#l

542 notes

Text

The reason I took interest in AI as an art medium is that I've always been interested in experimenting with novel and unconventional art media - I started incorporating power tools into a lot of my physical processes younger than most people were even allowed to breathe near them, and I took to digital art like a duck to water when it was the big, relatively new, controversial thing too, so really this just seems like the logical next step. More than that, it's exciting - it's not every day that we just invent an entirely new never-before-seen art medium! I have always been one to go fucking wild for that shit.

Which is, ironically, a huge part of why I almost reflexively recoil at how it's used in the corporate world: because the world of business, particularly the entertainment industry, has what often seems like less than zero interest in appreciating it as a novel medium.

And I often wonder how much less that would be the case - and, by extension, how much less vitriolic the discussion around it would be, and how many fewer well-meaning people would be falling for reactionary mythologies about where exactly the problems lie - if it hadn't reached the point of...at least an illusion of commercial viability, at exactly the moment it did.

See, the groundwork was laid in 2020, back during covid lockdowns, when we saw a massive spike in people relying on TV, games, books, movies, etc. to compensate for the lack of outdoor, physical, social entertainment. This was, seemingly, wonderful for the whole industry - but under late-stage capitalism, it was as much of a curse as it was a gift. When industries are run by people whose sole brain process is "line-go-up", tiny factors like "we're not going to be in lockdown forever" don't matter. CEOs got dollar signs in their eyes. Shareholders demanded not only perpetual growth, but perpetual growth at this rate or better. Even though everyone with an ounce of common sense was screaming "this is an aberration, this is not sustainable" - it didn't matter. The business bros refused to believe it. This was their new normal, they were determined to prove -

And they, predictably, failed to prove it.

So now the business bros are in a pickle. They're beholden to the shareholders to do everything within their power to maintain the infinite growth they promised, in a world with finite resources. In fact, by precedent, they're beholden to this by law. Fiduciary duty has been interpreted in court to mean that, given the choice between offering a better product and ensuring maximum returns for shareholders, the latter MUST be a higher priority; reinvesting too much in the business instead of trying to make the share value increase as much as possible, as fast as possible, can result in a lawsuit - that a board member or CEO can lose, and have lost before - because it's not acting in the best interest of shareholders. If that unsustainable explosive growth was promised forever, all the more so.

And now, 2-3-4 years on, that impossibility hangs like a sword of Damocles over the heads of these media company CEOs. The market is fully saturated; the number of new potential customers left to onboard is negligible. Some companies began trying to "solve" this "problem" by violating consumer privacy and charging per household member, which (also predictably) backfired because those of us who live in reality and not statsland were not exactly thrilled about the concept of being told we couldn't watch TV with our own families. Shareholders are getting antsy, because their (however predictably impossible) infinite lockdown-level profits...aren't coming, and someone's gotta make up for that, right? So they had already started enshittifying, making excuses for layoffs, for cutting employee pay, for duty creep, for increasing crunch, for lean-staffing, for tightening turnarounds-

And that was when we got the first iterations of AI image generation that were actually somewhat useful for things like rapid first drafts, moodboards, and conceptualizing.

Lo! A savior! It might as well have been the digital messiah to the business bros, and their eyes turned back into dollar signs. More than that, they were being promised that this...both was, and wasn't art at the same time. It was good enough for their final product, or if not it would be within a year or two, but it required no skill whatsoever to make! Soon, you could fire ALL your creatives and just have Susan from accounting write your scripts and make your concept art with all the effort that it takes to get lunch from a Star Trek replicator!

This is every bit as much bullshit as the promise of infinite lockdown-level growth, of course, but with shareholders clamoring for the money they were recklessly promised, executives are looking for anything, even the slightest glimmer of a new possibility, that just might work as a life raft from this sinking ship.

So where are we now? Well, we're exiting the "fucking around" phase and entering "finding out". According to anecdotes I've read, companies are, allegedly, already hiring prompt engineers (or "prompters" - can't give them a job title that implies there's skill or thought involved, now can we, that just might imply they deserve enough money to survive!)...and most of them not only lack the skill to manually post-process their works, but don't even know how (or perhaps aren't given access) to fully use the software they specialize in, being blissfully unaware of (or perhaps not able/allowed to use) features such as inpainting or img2img. It has been observed many times that LLMs are being used to flood once-reputable information outlets with hallucinated garbage. I can verify - as can nearly everyone who was online in the aftermath of the Glasgow Willy Wonka Dashcon Experience - that the results are often outright comically bad.

To anyone who was paying attention to anything other than please-line-go-up-faster-please-line-go-please (or buying so heavily into reactionary mythologies about why AI can be dangerous in industry that they bought the tech companies' false promises too and just thought it was a bad thing), this was entirely predictable. Unfortunately for everyone in the blast radius, common sense has never been an executive's strong suit when so much money is on the line.

Much like CGI before it, what we have here is a whole new medium that is seldom being treated as a new medium with its own unique strengths, but more often being used as a replacement for more expensive labor, no matter how bad the result may be - nor, for that matter, how unjust it may be that the labor is so much cheaper.

And it's all because of timing. It's all because it came about in the perfect moment to look like a life raft in a moment of late-stage capitalist panic. Any port in a storm, after all - even if that port is a non-Euclidean labyrinth of soggy, rotten botshit garbage.

Any port in a storm, right? ...right?

All images generated using Simple Stable, under the Code of Ethics of Are We Art Yet?

#ai art#generated art#generated artwork#essays#about ai#worth a whole 'nother essay is how the tech side exists in a state that is both thriving and floundering at the same time#because the money theyre operating with is in schrodinger's box#at the same time it exists and it doesnt#theyre highly valued but usually operating at a loss#that is another MASSIVE can of worms and deserves its own deep dive

449 notes

Text

Fascinated by what the business case could be for Coca-Cola using generative AI.

400 notes

Text

It feels like no one should have to say this, and yet we are in a situation where it needs to be said, very loudly and clearly, before it’s too late to do anything about it: The United States is not a startup. If you run it like one, it will break.

The onslaught of news about Elon Musk’s takeover of the federal government’s core institutions is altogether too much—in volume, in magnitude, in the sheer chaotic absurdity of a 19-year-old who goes by “Big Balls” helping the world’s richest man consolidate power. There’s an easy way to process it, though.

Donald Trump may be the president of the United States, but Musk has made himself its CEO.

This is bad on its face. Musk was not elected to any office, has billions of dollars of government contracts, and has radicalized others and himself by elevating conspiratorial X accounts with handles like @redpillsigma420. His allies control the US government’s human resources and information technology departments, and he has deployed a strike force of eager former interns to poke and prod at the data and code bases that are effectively the gears of democracy. None of this should be happening.

It is, though. And while this takeover is unprecedented for the government, it’s standard operating procedure for Musk. It maps almost too neatly to his acquisition of Twitter in 2022: Get rid of most of the workforce. Install loyalists. Rip up safeguards. Remake in your own image.

This is the way of the startup. You’re scrappy, you’re unconventional, you’re iterating. This is the world that Musk’s lieutenants come from, and the one they are imposing on the Office of Personnel Management and the General Services Administration.

What do they want? A lot.

There’s AI, of course. They all want AI. They want it especially at the GSA, where a Tesla engineer runs a key government IT department and thinks AI coding agents are just what bureaucracy needs. Never mind that large language models can be effective but are inherently, definitionally unreliable, or that AI agents—essentially chatbots that can perform certain tasks for you—are especially unproven. Never mind that AI works not just by outputting information but by ingesting it, turning whatever enters its maw into training data for the next frontier model. Never mind that, wouldn’t you know it, Elon Musk happens to own an AI company himself. Go figure.

Speaking of data: They want that, too. DOGE agents are installed at or have visited the Treasury Department, the National Oceanic and Atmospheric Administration, the Small Business Administration, the Centers for Disease Control and Prevention, the Centers for Medicare and Medicaid Services, the Department of Education, the Department of Health and Human Services, the Department of Labor. Probably more. They’ve demanded data, sensitive data, payments data, and in many cases they’ve gotten it—the pursuit of data as an end unto itself but also data that could easily be used as a competitive edge, as a weapon, if you care to wield it.

And savings. They want savings. Specifically they want to subject the federal government to zero-based budgeting, a popular financial planning method in Silicon Valley in which every expenditure needs to be justified from scratch. One way to do that is to offer legally dubious buyouts to almost all federal employees, who collectively make up a low-single-digit percentage of the budget. Another, apparently, is to dismantle USAID just because you can. (If you’re wondering how that’s legal, many, many experts will tell you that it’s not.) The fact that the spending to support these people and programs has been both justified and mandated by Congress is treated as an inconvenience, or maybe not even that.

Those are just the goals we know about. They have, by now, so many tentacles in so many agencies that anything is possible. The only certainty is that it’s happening in secret.

Musk’s fans, and many of Trump’s, have cheered all of this. Surely billionaires must know what they’re doing; they’re billionaires, after all. Fresh-faced engineer whiz kids are just what this country needs, not the stodgy, analog thinking of the past. It’s time to nextify the Constitution. Sure, why not, give Big Balls a memecoin while you’re at it.

The thing about most software startups, though, is that they fail. They take big risks and they don’t pay off and they leave the carcass of that failure behind and start cranking out a new pitch deck. This is the process that DOGE is imposing on the United States.

No one would argue that federal bureaucracy is perfect, or especially efficient. Of course it can be improved. Of course it should be. But there is a reason that change comes slowly, methodically, through processes that involve elected officials and civil servants and care and consideration. The stakes are too high, and the cost of failure is total and irrevocable.

Musk will reinvent the US government in the way that the hyperloop reinvented trains, that the Boring Company reinvented subways, that Juicero reinvented squeezing. Which is to say he will reinvent nothing at all, fix no problems, offer no solutions beyond those that further consolidate his own power and wealth. He will strip democracy down to the studs and rebuild it in the fractious image of his own companies. He will move fast. He will break things.

103 notes

Text

MOTORSPORTS ZINE JAM!!

Calling all motorsport enthusiasts! Do you want to express and share your love for Formula 1? Or maybe you'd like to work with other creatives passionate about MotoGP? Or perhaps you want to promote a racing series like Super Formula or IMSA? This is the zine jam for you!

We (a few of us on F1twt) are hosting a digital motorsports zine jam to foster creativity in our community and would like to extend the invitation to motorsport lovers on Tumblr. All types of art for any motorsports series are welcome in this jam!

This zine jam will likely be taking place in either June or August depending on participant responses in the interest check. There are basic rules and an FAQ under the break below to learn more! We hope you join us!

Complete the interest check, whether as a participant, moderator, or just a reader! This form will close in a week!

Rules:

We don't have many rules for this jam as we want to encourage creativity and collaboration! That being said...

Hate speech of any kind (racism, homophobia, transphobia, etc.) will not be tolerated.

This event is SFW, and there are minors involved. Shipping content is allowed as long as it remains SFW.

Do not use Generative AI to create your works. If you are found to have used GenAI, you will be barred from participating now or in the future.

FAQ:

Q: What is a zine/zine jam?

A: A fanzine is a non-professional and non-official publication produced by enthusiasts of a particular cultural phenomenon. A zine jam is a period of time (in our case, one month) in which participants will be tasked with planning, executing, formatting, and posting a zine.

Q: Do I need zine experience to participate?

A: No! We aim to make this experience as accessible as possible and will be posting guides and resources to help with zine creation. You also do not need any experience in art, design, or writing to join this jam. We just want to encourage creating cool motorsports stuff!

Q: Is there a size/length limit to the zine I can make?

A: There are no limits! We have resources and examples that can be shared if you would like some guidance or inspiration.

Q: What if I want to make a physical zine?

A: You are absolutely allowed to make a physical zine if that would be preferable. However, as this is a digital event, we would ask that you create a zine that can be easily transferred online, whether that be taking photos or scanning and uploading your physical zine.

Q: How will communication be done for this jam?

A: Communication will be through a Discord server we are currently setting up. We will also be posting updates for Tumblr users on this sideblog. You can also keep up with general happenings on the organiser Eames' Twitter which can be found here.

Q: Why is July not an option for this zine jam?

A: Eames is really busy and hosts events for other fandoms in July. If this first round of this event goes well, we may consider moving the jam to a more accessible month.

Here is a Twitter thread with more questions that have been asked.

If you have more questions/concerns and have already filled out the form, please direct them to Eames @/porgerussell on Twitter or to their Strawpage here! As Eames is the organiser we would prefer you direct your questions to them as they would have the most complete answers. However, we are also able to respond to asks and questions on this sideblog if you really don't want to go on Twitter.

#f1#formula 1#max verstappen#yuki tsunoda#lando norris#oscar piastri#george russell#kimi antonelli#lewis hamilton#charles leclerc#isack hadjar#liam lawson#alex albon#carlos sainz#ollie bearman#esteban ocon#pierre gasly#jack doohan#gabriel bortoleto#nico hulkenberg#fernando alonso#lance stroll#sebastian vettel#michael schumacher#kimi raikkonen#nico rosberg#jenson button#mark webber#fandom zine#zine promo

67 notes

Text

Tokyo Debunker WickChat Icons

as of posting this no chat with the Mortkranken ghouls has been released, so their icons are not here. If I forget to update when they come out, send me an ask!

Jin's is black, but not the default icon. An icon choice that says "do not perceive me."

Tohma's is the Frostheim crest. Very official, he probably sends out a lot of official Frostheim business group texts.

Kaito's is a doodle astronaut! He has the same astronaut on his phone case! He canonically likes stars, but I wonder if this is a doodle he made himself and put it on his phone case or something?

Luca's is maybe a family crest?

Alan's is the default icon. He doesn't know how to set one up, if I were to take an educated guess. . . .

Leo's is himself, looking cute and innocent. Pretty sure this is an altered version of the 'DATA DELETED' panel from Episode 2 Chapter 2.

Sho's is Bonnie!!! Fun fact, in Episode 2 Chapter 2 you can see that Bonnie has her name spraypainted/on a decal on her side!

Haru's is Peekaboo! Such a mommy blogger choice.

Towa's is some sort of flowers! I don't know flowers well enough to guess what kind though.

Ren's is the "NAW" poster! "NAW" is the in-world version of Jaws that Ren likes, and you can see the same poster over his bed.

Taiga's is a somewhat simplified, greyscale version of the Sinostra crest with a knife stabbing through it and a chain looping behind it. There are also roses growing behind it. Basically says "I Am The Boss Of Sinostra."

Romeo's is likely a brand logo. It looks like it's loosely inspired by the Gucci logo? I don't follow things like this, this honestly could be his family's business logo now that I think about it.

Ritsu's is just himself. Very professional.

Subaru's is hydrangeas I think! Hydrangeas in Japan represent a lot of things apparently, like fidelity, sincerity, remorse, and forgiveness, which all fit Subaru pretty well I think lol. . . .

Haku's is a riverside? I wonder if this is near where his family is from? It looks familiar, but a quick search isn't bringing anything up that would tell me where it is. . .

Zenji's is his professional logo I guess? The kanji used is 善 "Zen" from his first name! It means "good" or "right" or "virtue"!

Edward's appears to be a night sky full of stars. Not sure if the big glowing one is the moon or what. . . .

Rui's is a mixed drink! Assuming this is an actual cocktail of some sort, somebody else can probably figure out what it is. Given the AI generated nature of several images in the game, it's probably not real lol.

Lyca's is his blankie! Do not wash it. Or touch it. It's all he's got.

Yuri's is his signature! Simple and professional, but a little unique.

Jiro's is a winged asklepian/Rod of Asclepius in front of a blue cross? ⚕ Not sure what's at the top of the rod. A fancy syringe plunger maybe? It's very much a symbol of medicine and healing, so his is also very professional. Considering he sends you texts regarding your appointments, it might be the symbol of Mortkranken's medical office?

These are from the NPCs the PC was in the "Concert Buds" group chat with! The icons are pretty generic: a cat silhouette staring at a starry sky (SickleMoon), a pink, blue, and yellow gradient swirl (Pickles), a cute panda (Corby), and spider lilies (Mina). Red spider lilies in Japan are a symbol of death--and Mina of course cursed the PC, allegedly cursing them to death in a year.

#tokyo debunker#danie yells at tokyo debunker#not sure if anon meant these or not but here they are anyway lol#tdb ref

217 notes

Text

yesterday a colleague asked me to review a document for him that would appear in an internal team newsletter. it was about a project he worked on and its positive benefits to a bigger project I am working on. So towards the end of the requested review period I sit down to read it and... the first paragraph was just grossly wrong, emphatically stating that his project was "critical in the value and long-term stability" of my project when actually it was only relevant to 1-5% of use cases. Beneficial yes, but far away from being the bread and butter. This isn't even a matter of opinion - it's bald, inarguable fact. His project is mechanically not relevant to most of the use cases in my project. He also knows this and isn't a nefarious credit stealer, so I was confused, especially since I thought I was arriving in the document after others had taken a pass. I made some polite but clear comments/edits pointing out how the relevance and influence of his work on mine was overstated, and mentioned it in the next meeting we had to make sure there was closure.

He says: oh yeah, we wrote it with AI. that's the AI.

folks, he did not edit it at all before having me do that work. he had the knowledge and awareness to make the exact same edits I did, and did not. 1) I am furious to have been relegated to the fact-picker for AI slurry, and 2) if I had not done so this might have become the document they published. like this is what is going to keep happening when people use generative AI to make work documents. even when they are well-intentioned (and my colleague genuinely is! he means well!) they will plan to edit past the AI slurry, get too busy, and then won't. and the bad documents will be called final, in all their garbage glory.

#this is not an april fools day post but the joke IS on me#turning off rbs because I don't want to hear the broader webbed site's opinions on AI#but folks when I say the murderous urge was strong in me... hoo boy#op

55 notes
