#because apple is not a functioning computer designer

Text

not gonna go into details but my mum chooses the most ridiculous way to store and sort all of our photos it makes no sense and is genuinely impossible to use but she’s sunk enough time and money into this system that it doesn’t seem worth changing. and this is now my problem because she’s asked me to upload and sort my entire five person family’s photos going back three fucking years

#as in. she wants to keep the original date on the photos fine#but there is as far as i can see no way to convert a heic file to a jpg while maintaining that metadata#because apple is not a functioning computer designer#i hate macs soooo much and my whole family uses them and this is what has caused this insane problem#for context theres more to this stupid story and it justifies my anger but holy fuck is this frustrating#and tbh if you keep the file in a correctly labelled folder like yeah it is annoying not to have the correct metadata but#its not the end of the world#ais.log
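For what it's worth, here is a minimal sketch of one way to at least keep the file dates through a conversion. It assumes a Mac (so the built-in `sips` tool is around) and Python 3.9+; the function names are made up for illustration. It only guarantees the filesystem timestamps get carried over. Whether the EXIF data embedded inside the photo survives the conversion is a separate question and should be checked on a sample file first.

```python
import os
import subprocess
from pathlib import Path


def sips_command(src: Path, dst: Path) -> list[str]:
    """Build the macOS `sips` call that re-encodes src as a JPEG at dst."""
    return ["sips", "-s", "format", "jpeg", str(src), "--out", str(dst)]


def copy_file_dates(src: Path, dst: Path) -> None:
    """Copy access/modification times from src onto dst (filesystem dates only)."""
    stats = os.stat(src)
    os.utime(dst, ns=(stats.st_atime_ns, stats.st_mtime_ns))


def convert_keep_dates(src: Path, dst: Path) -> None:
    """Convert one HEIC file, then restore the original file dates (hypothetical helper)."""
    subprocess.run(sips_command(src, dst), check=True)  # needs macOS
    copy_file_dates(src, dst)
```

Looping `convert_keep_dates` over a folder would cover a whole backlog; the converted files then sort by the original date in Finder even if nothing else survives.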

0 notes

Text

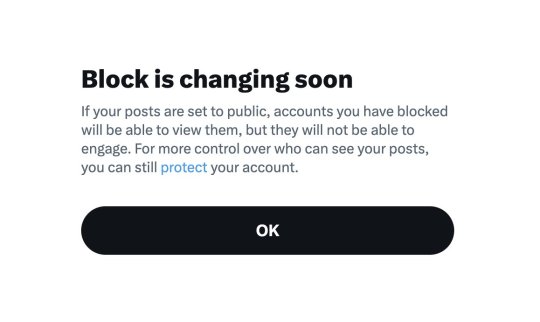

OK since I haven't seen too many people talk about this since twitter news usually strikes pretty fast over here whenever e'usk does anything ever, let me give y'all the rundown on two things that will go live on NOVEMBER 15TH and why people are mass migrating to Blue Sky once more; and provide resources to help protect your art and make the transition to Blue Sky easier if you so choose:

1. The Block function no longer blocks people as intended. It now basically acts as a glorified Mute button. Even when you block someone, they can still see your posts, but they can't engage in them. If your account is a Public one and not a Private one, people you blocked will see your posts.

They say because people can easily "share and hide harmful or private information about those they've blocked," they changed it this way for "greater transparency." When in reality, this is an extremely dangerous change, as the whole point of blocking is to cease interaction with people entirely for a plethora of reasons, e.g. stalking, harassment, spam, endangerment, or just plainly annoying and not wanting to see said tweets/accounts. Or, you know, for 18+ accounts who do not want minors interacting with them or their material at all. (There is speculation saying these changes are specifically for Elon himself so he can do his own kind of stalking, and honestly, with the private likes change, it lowkey checks out in my opinion)

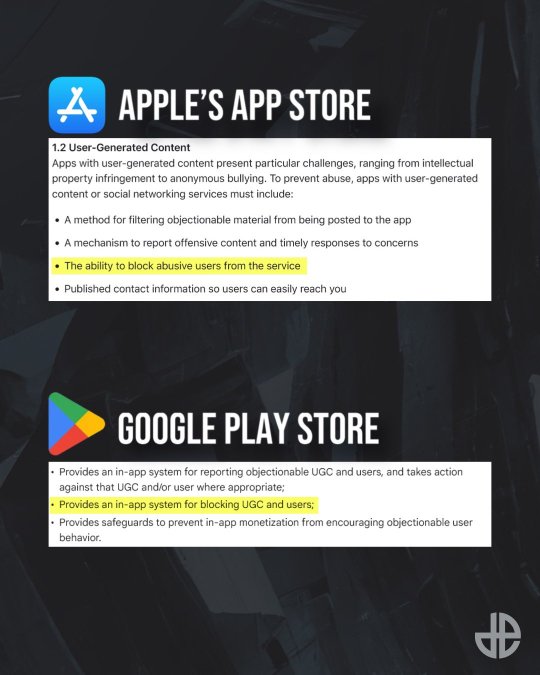

Also, this straight up goes against and may violate Apple and Google's app store policies and also is straight up illegal in Canada and probably other countries as well.

If this ACTUALLY goes through, twitter will only be available in select countries, probably exclusively in the US, which would collapse the site with the loss of users and stock, and probably be the last push it needs to kill the site. And if not, it will be a very sad and exclusive platform made for specific kinds of people who line up with musk's line of thinking.

2. New policies regarding Grok AI and basically removing the option to opt out of Grok's information gathering to improve their software.

And anything you upload/post on the site is considered "fair game" with "royalty-free licenses" and they can do whatever they please with it. Primarily using any and all posts on twitter to train their Grok AI. A few months ago, there was a setting you can opt out of so they couldn't take anything you post to "improve" Grok, but I guess because so many people were opting out, they decided to make it mandatory as part of the policy change (This is mainly speculation from what I hear).

So this is considered the final straw for a LOT of people, especially artists who have been gripping on to twitter for as long as they can, but the AI nonsense is too much for people now, including myself. Lots of people are moving to Blue Sky for good reason, and from personal experience, it is literally 10x better than twitter ever was, even before elon took over. There is no algorithm on there, and you can save "feeds" to your timeline to have catered timelines to hop between if you're looking for something specific like furry art or game dev stuff. It's taken them a bit to get off the ground and add much needed features, but it's genuinely so much better now.

RESOURCES

Project Glaze & Cara

If you're an artist who's still on twitter or trying to ride it out for as long as you can for whatever reason you have, do yourself a favor and Glaze and/or Nightshade your work. Project Glaze is a free program designed to protect your artwork from getting scraped by AI machines. Glazing basically makes it harder to adapt and copy artwork that AI programs try to scan, while Nightshade basically "poisons" works to make AI libraries much more unstable and generate images completely off the mark. (These are layman's terms I'm using here, but follow the link to get more information)

The only problem with these programs is that they can be resource intensive for computers, and not every pc can run glaze. It's basically like rendering a frame/animation, you gotta let your pc sit there to get it glazed/nightshaded, and depending on the power of your pc and how much you wanna protect your work, this may take minutes to hours.

HOWEVER, there are two alternatives, WebGlaze and Cara

WebGlaze is an in browser version of the program, so your pc doesn't have to do the heavy lifting. You do need to have an account with Glaze and be invited to use the program (I have not done so personally so I don't know much about the process.)

Cara is an artist focused site that doubles as both a portfolio site and a general social media platform. They've partnered with Glaze and have their own browser glazing called "Cara Glaze," and highly encourage users to post their work Glazed and are extremely anti-ai. You do get limited uses per day to glaze your work, so if you plan on doing a huge backlog uploading of your art, it may take a while if you're using just Cara Glaze.

Some twitter users have suggested glazing your art, cropping it, and overlaying it with a frame telling people to follow them elsewhere like on Bluesky. Here's a template someone provided if you wanna use this one or make your own.

Blue Sky Resources and Tips

So if you're a twitter user and you're about to realize the hellish task of refollowing a massive chunk of people you follow, have no fear, there's an extension called Sky Follower Bridge (Firefox & Chrome links). This is a very basic extension that makes it really easy to find people on Bluesky.

It sorts them out by trying to find matching usernames, usernames in descriptions, or by screen name. It's not 100% perfect, there's a couple people I already follow on Blue Sky but the extension could not find them on twitter correctly, but I still found a huge chunk of people. Also if you're worried that this extension is "iffy," they do have a github open with the source publicly available and the Blue Sky Team themselves have promoted the extension in their recent posts while welcoming new users to the platform.
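To give a rough idea of what that kind of matching looks like, here's a minimal sketch of the three checks described above (same username, Bluesky handle mentioned in the twitter bio, same screen name). This is NOT the extension's actual code; the class names, fields, and the `match_reason` function are all made up for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class TwitterProfile:
    handle: str        # e.g. "FoxArtist"
    display_name: str  # the "screen name" shown on the profile
    bio: str


@dataclass
class BlueskyProfile:
    handle: str        # e.g. "foxartist.bsky.social"
    display_name: str


def normalize(text: str) -> str:
    """Lowercase and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", text.lower())


def match_reason(tw: TwitterProfile, bs: BlueskyProfile):
    """Return why two profiles look like the same person, or None."""
    bs_user = normalize(bs.handle.split(".")[0])
    if bs_user and bs_user == normalize(tw.handle):
        return "handle"
    if bs.handle.lower() in tw.bio.lower():
        return "handle in bio"
    name = normalize(bs.display_name)
    if name and name == normalize(tw.display_name):
        return "display name"
    return None
```

Checks like these are also why it misses people: anyone who picked a different handle and never put their Bluesky link in their bio simply has nothing to match on.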

FEEDS and LABELS

OK SO THE COOLEST PART ABOUT BLUESKY IS THE FEEDS SYSTEM. Basically if you've made a twitter list before, it's like that, but way more customizable and catered to specific types of posts/topics. Feeds consolidate posts into a timeline that's exclusively filled with those particular topics, or just people in general. There's thousands to pick and choose from!

Here's a couple of mine that I have saved and ready (down below). Some feeds I have saved so I can jump to seeing what my friends and mutuals are up to, and see their posts specifically so it doesn't get lost in reposts or other accounts, and also specialized feeds for browsing artists within the furry community.

The Furry Community feeds I have here were created by people who've built an algorithm to pull in any post tagged #furry or #furryart or other special tags like #Furrystreamer or #furrydev. They even have one for commissions, and yes you can say commissions on a post and not have it destroyed or shadow banned. You are safe.

If you want, and I highly recommend it to get visibility and check out a neat community, follow furryli.st to get added to their list and feeds. Once you're on the list, even without a hashtag, you'll still pop up in their specialized feeds as just a member of the community there. There are plenty of other feeds out there besides this one, but I feel like a lot of people could use one like this. They even have OC-specific ones too, I remember seeing somewhere.
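In layman's terms, the rule those feeds follow (as described above) is "show the post if it has one of the tags, OR if the author is on the list." Here's a tiny sketch of that idea. It is not how furryli.st or Bluesky actually implement feeds; the `Post` class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    author: str
    text: str
    tags: set = field(default_factory=set)


# Tags mentioned in the post above, lowercased without the "#".
FEED_TAGS = {"furry", "furryart", "furrystreamer", "furrydev"}


def in_feed(post: Post, members: set, feed_tags: set = FEED_TAGS) -> bool:
    """A post qualifies if it carries a feed tag or its author is a list member."""
    tagged = any(tag.lower().lstrip("#") in feed_tags for tag in post.tags)
    return tagged or post.author in members


def build_feed(posts: list, members: set) -> list:
    """Keep only qualifying posts, preserving their original order."""
    return [post for post in posts if in_feed(post, members)]
```

That second condition is the part that makes getting on the list worth it: a member's untagged post still lands in the feed.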

And in terms of labels, they can be either ways to help label yourself with specific things or have user created accessibility settings to help better control your experience on Blue Sky.

And my personal favorite: Ai Imagery Labeler. Removes any AI stuff or hides it to the best of its abilities, and it does a pretty good job, I have not seen anything AI related since subscribing to it.

Finally, HASHTAGS WORK & No need to censor yourself!

This is NOT like twitter or any other big-name social media site AT ALL, so you don't have to work around words to get your stuff out there and be seen. There are literally feeds built around getting commissions and art seen! Some people worry about bots and that has been a recent issue since a lot of people are migrating to Blue Sky, but it comes with any social media territory.

ALSO COOL PART,

you can search a hashtag on someone's profile and search exclusively on that profile as well! You can even put the hashtag in bio for easy access if you have a specialized tag like here on tumblr. OR EVEN BUILD YOUR OWN ART FEED FOR YOUR STUFF SPECIFICALLY!

So yeah, there's your quick run down about twitter's current burning building, how to protect your art, and what to do when you move to Blue Sky! Have fun!

#Twitter#Blue Sky#BlueSky#Cara#Project Glaze#Glazed Art#NightShade#Twitter Update#cara artists#art resource#resource#Online resource

671 notes

Note

Hello! First, I wanted to say thank you for your post about updating software and such. I really appreciated your perspective as someone with ADHD. The way you described your experiences with software frustration was IDENTICAL to my experience, so your post made a lot of sense to me.

Second, (and I hope my question isn't bothering you lol) would you mind explaining why it's important to update/adopt the new software? Like, why isn't there an option that doesn't involve constantly adopting new things? I understand why they'd need to fix stuff like functional bugs/make it compatible with new tech, but is it really necessary to change the user side of things as well?

Sorry if those are stupid questions or they're A Lot for a tumblr rando to ask, I'd just really like to understand because I think it would make it easier to get myself to adopt new stuff if I understand why it's necessary, and the other folks I know that know about computers don't really seem to understand the experience.

Thank you so much again for sharing your wisdom!!

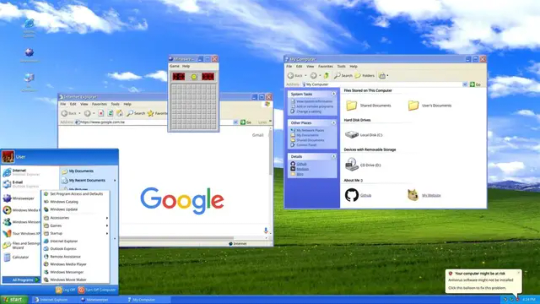

A huge part of it is changing technologies and changing norms; I brought up Windows 8 in that other post and Win8 is a *great* example of user experience changing to match hardware, just in a situation that was an enormous mismatch with the market.

Win8's much-beloathed tiles came about because Microsoft seemed to be anticipating a massive pivot to tablet PCs in nearly all applications. The welcome screen was designed to be friendly to people who were using handheld touchscreens who could tap through various options, and it was meant to require more scrolling and less use of a keyboard.

But most people who the operating system went out to *didn't* have touchscreen tablets or laptops, they had a desktop computer with a mouse and a keyboard.

When that was released, it was Microsoft attempting to keep up with (or anticipate) market trends - they wanted something that was like "the iPad for Microsoft" so Windows 8 was meant to go with Microsoft Surface tablets.

We spent the first month of Win8's launch making it look like Windows 7 for our customers.

You can see the same thing with the centered taskbar on Windows 11; that's very clearly supposed to mimic the dock on apple computers (only you can't pin it anywhere but the bottom of the screen, which sucks).

Some of the visual changes are just trends and various companies trying to keep up with one another.

With software like Adobe I think it's probably based on customer data. The tool layout and the menu dropdowns are likely based on what people are actually looking for, and change based on what other tools people are using. That's likely true for most programs you use - the menu bar at the top of the screen in Word is populated with the options that people use the most; if a function you used to click on all the time is now buried, there's a possibility that people use it less these days for any number of reasons. (I'm currently being driven mildly insane by Teams moving the "attach file" button under a "more" menu instead of as an icon next to the "send message" button, and what this tells me is either that more users are putting emojis in their messages than attachments, or microsoft WANTS people to put more emojis than attachments in their messages).

But focusing on the operating system, since that's the big one:

The thing about OSs is that you interact with them so frequently that any little change seems massive and you get REALLY frustrated when you have to deal with that, but version-to-version most OSs don't change all that much visually and they also don't get released all that frequently. I've been working with windows machines for twelve years and in that time the only OSs that Microsoft has released were 8, 10, and 11. That's only about one OS every four years, which just is not that many. There was a big visual change in the interface between 7 and 8 (and 8 and 8.1, which is more of a 'panicked backing away' than a full release), but otherwise, realistically, Windows 11 still looks a lot like XP.

The second one is a screenshot of my actual computer. The only change I've made to the display is to pin the taskbar to the left side instead of keeping it centered and to fuck around a bit with the colors in the display customization. I haven't added any plugins or tools to get it to look different.

This is actually a pretty good demonstration of things changing based on user behavior too - XP didn't come with a search field in the task bar or the start menu, but later versions of Windows OSs did, because users had gotten used to searching things more in their phones and browsers, so then they learned to search things on their computers.

There are definitely nefarious reasons that software manufacturers change their interfaces. Microsoft has included ads in home versions of their OS and pushed searches through the Microsoft store since Windows 10, as one example. That's shitty and I think it's worthwhile to find the time to shut that down (and to kill various assistants and background tools and stop a lot of stuff that runs at startup).

But if you didn't have any changes, you wouldn't have any changes. I think it's handy to have a search field in the taskbar. I find "settings" (which is newer than control panel) easier to navigate than "control panel." Some of the stuff that got added over time is *good* from a user perspective - you can see that there's a little stopwatch pinned at the bottom of my screen; that's a tool I use daily that wasn't included in previous versions of the OS. I'm glad it got added, even if I'm kind of bummed that my Windows OS doesn't come with Spider Solitaire anymore.

One thing that's helpful to think about when considering software is that nobody *wants* to make clunky, unusable software. People want their software to run well, with few problems, and they want users to like it so that they don't call corporate and kick up a fuss.

When you see these kinds of changes to the user experience, it often reflects something that *you* may not want, but that is desirable to a *LOT* of other people. The primary example I can think of here is trackpad scrolling direction; at some point it became common for trackpads to scroll in the opposite direction that they used to; now the default direction is the one that feels wrong to me, because I grew up scrolling with a mouse, not a screen. People who grew up scrolling on a screen seem to feel that the new direction is a lot more intuitive, so it's the default. Thankfully, that's a setting that's easy to change, so it's a change that I make every time I come across it, but the change was made for a sensible reason, even if that reason was opaque to me at the time I stumbled across it and continues to irritate me to this day.

I don't know. I don't want to defend Windows all that much here because I fucking hate Microsoft and definitely prefer using Linux when I'm not at work or using programs that I don't have on Linux. But the thing is that you'll see changes with Linux releases as well.

I wouldn't mind finding a tool that made my desktop look 100% like Windows 95, that would be fun. But we'd probably all be really frustrated if there hadn't been any interface changes since MS-DOS (and people have DEFINITELY been complaining about UX changes at least since then).

Like, I talk about this in terms of backward compatibility sometimes. A lot of people are frustrated that their old computers can't run new software well, and that new computers use so many resources. But the flipside of that is that pretty much nobody wants mobile internet to work the way that it did in 2004 or computers to act the way they did in 1984.

Like. People don't think about it much these days but the "windows" of the Windows Operating system represented a massive change to how people interacted with their computers that plenty of people hated and found unintuitive.

(also take some time to think about the little changes that have happened that you've appreciated or maybe didn't even notice. I used to hate the squiggly line under misspelled words but now I see the utility. Predictive text seems like new technology to me but it's really handy for a lot of people. Right clicking is a UX innovation. Sometimes you have to take the centered task bar in exchange for the built-in timer deck; sometimes you have to lose color-coded files in exchange for a right click.)

294 notes

Note

What is the appeal of vintage computers to you? Is it the vintage video games or is it the programs? If so, what kind of programs do you like to run on them?

Fair warning, we're talking about a subject I've been passionate about for most of my life, so this will take a minute. The answer ties into how I discovered the hobby, so we'll start with a few highlights:

I played old video games starting when I was 9 or 10.

I became fascinated with older icons buried within Windows.

Tried to play my first video game (War Eagles) again at age 11, learned about the hardware and software requirements being way different than anything I had available (a Pentium III-era Celeron running Windows ME).

I was given a Commodore 1541 by a family friend at age ~12.

Watched a documentary about the history of computers that filled in the gaps between vague mentions of ENIAC and punch cards, and DOS/Windows machines (age 13).

Read through OLD-COMPUTERS.COM for the entire summer immediately after that.

Got my first Commodore 64 at age 14.

I mostly fell into the hobby because I wanted to play old video games, but ended up not finding a ton of stuff that I really wanted to play. Instead, the process of using the machines, trying the operating system, appreciating the aesthetic, the functional design choices of the user experience became the greater experience. Oh, and fixing them.

Then I started installing operating systems on some DOS machines, or playing with odd peripherals, and customizing hardware to my needs. Oh, and programming! Mostly in BASIC on 8-bit hardware, but tinkering with what each computer could do is just so fascinating to me. I'm in control, and there isn't much of anything between what I write and the hardware carrying it out (especially on pre-Windows machines)! No obfuscation layers, run-times, .dlls, etc. Regardless of the system, BASIC is always a first choice for me. Nova, Ohio Scientific, Commodore, etc. I usually try to see what I can do with the available BASIC dialect and hardware. I also tend to find a game or two to try, especially modern homebrew Commodore games because that community is always creating something new. PC stuff I focus more on pre-made software of the era.

Just to name a few examples from a variety of systems: Tetris, terminal emulators, Command & Conquer titles, screen savers, War Eagles, Continuum, video capture software, Atomic Bomberman, demos, LEGO Island, Bejeweled clones, Commander Keen 1-3, lunar lander, Galaxian, sinewave displays, 2048, Pacman, mandelbrot sets, war dialers, paint -- I could keep going.

Changing gears, I find it funny how often elders outside of the vintage computing community would talk about the era I'm interested in (60s-early 90s). [spoken with Mr. Regular's old man voice]: "Well, computers used to be big as a room! And we used punch cards, and COBOL!" I didn't know what any of that meant, and when pressed for technical detail they couldn't tell you anything substantial. Nobody conveyed any specifics beyond "that's what we used!"

I noticed that gaps remained in how that history was presented to me, even when university-level computer science and history professors were engaged on the subject. I had to go find it on my own. History is written by the victors, yeah? When was the last time a mainstream documentary or period piece focused on someone other than an Apple or Microsoft employee? Well, in this case, you can sidestep all that and see it for yourself if you know where to look.

Experiencing the history first hand to really convey how computers got from point A to B all the way down to Z is enlightening. What's cool is that unlike so many other fields of history, it's near enough in time that we can engage with people who were there, or better yet, made it happen! Why do you think I like going to vintage computer festivals?

We can see the missteps, the dead-ends, the clunkiness, the forgotten gems and lost paradigms, hopefully with context of why it happened. For the things we can't find more information on, when documentation and perspectives are limited, sometimes we have to resort to digital archeology and reverse engineering practices to save data, fix machines, and learn how they work. The greater arc of computer history fascinates me, and I intend to learn about it by fixing and using the computers that exemplify it best, and sharing that passion with others who might enjoy it.

168 notes

Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here honestly it probably deserves to be and I either forgot or I classified it more under "general IC's" instead of "computer chips" (e.g. 555, LM, 4000, 7400 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502, I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

Intel 3101

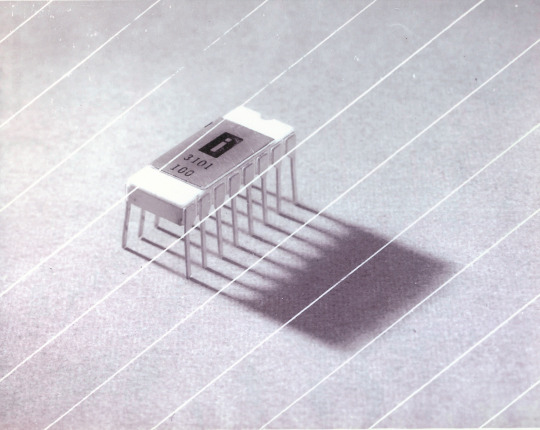

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this, in the 60's you could run a chip fab out of a sufficiently well sealed garage, but they were busy building the background that would lead to the next sixty years.

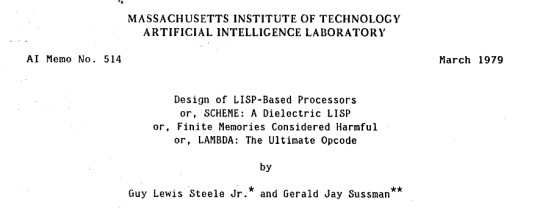

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left labs, there have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed to run OpenGenera. I bet you there are no less than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software compatible clone of the Intel 8080, which is to say that it has the same instructions implemented in a completely different way.

This is a strange choice, but it was the right one somehow because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80's even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: What follows is a joke, but only barely

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80 based devices probably launched several dozen hardware companies that persist to this day and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast, you can go to RS components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acidburn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC microcode, it has branch prediction, it has a bunch of zany mathematical optimizations, none of these are new per se but this is the first time you're really seeing them all at once on a chip that was going into PC's.

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.
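If "implementing it in a sensible instruction set in the background" sounds abstract, here's a toy sketch of the idea: one x86-style instruction that touches memory gets split into simple load / operate / store steps that a RISC-like core could pipeline. This is purely illustrative. The real P6 micro-ops are not public in this form, and the `decode` function and the `tmp0`/`tmp1` names are made up.

```python
def is_memory(operand: str) -> bool:
    """Treat anything written like [ebx] as a memory operand."""
    return operand.startswith("[") and operand.endswith("]")


def decode(instruction: str) -> list:
    """Split 'OP dst, src' into simpler register-only micro-ops (toy model)."""
    opcode, operands = instruction.split(maxsplit=1)
    dst, src = (part.strip() for part in operands.split(","))
    micro_ops = []
    if is_memory(src):
        # Pull the source out of memory into a temporary first.
        micro_ops.append(f"LOAD tmp0, {src}")
        src = "tmp0"
    if is_memory(dst):
        # Read-modify-write becomes three separate simple steps.
        micro_ops.append(f"LOAD tmp1, {dst}")
        micro_ops.append(f"{opcode} tmp1, {src}")
        micro_ops.append(f"STORE {dst}, tmp1")
    else:
        micro_ops.append(f"{opcode} {dst}, {src}")
    return micro_ops
```

So `decode("ADD [ebx], eax")` comes back as a load, an add, and a store, while `decode("ADD eax, ebx")` stays a single step. The program still thinks it ran one x86 instruction, which is the "lying to the computer" part.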

From this falls many of the bizarre microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly in chip with a second, smaller unix hidden inside your processor eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like "you know pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here it's mostly microcontrollers, in part because things get pretty monotonous once Intel basically wins for a while. I might pick that up later. Also if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I have had in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here. It's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.


Text

I'm Unpeeling Myself from Big Tech!

"Unpeeling" being any act you take that limits the amount of data a large tech corporation can gather from you, decreases your reliance on products of those corporations, or increases autonomy over your technology. I'm ripping the term from a line in this review by Joanna Nelius, where she writes, "People are looking for ways to peel their eyes from their smartphones like a layer of Elmer’s glue from their hand — to remove a part of themselves that really isn’t a part of themselves." It's different than "unplugging" because the goal isn't to go off the grid, or even to limit one's technology usage. The goal, instead, is to extract from the invasive, addictive, destructive capitalist vision a set of tools that are useful to YOU.

It started when I realized I don't need a smartphone. I've deleted most social media from my phone, and the stuff I still have I prefer to check on my laptop. Not all "dumb phones" (I hate this term) offer the same features, though, so I began to think on a granular level about what I need from a cell phone. E.g., not all "dumb phones" provide MMS, but my family lives 3k miles away. I wanna still talk in the groupchat.

On the more complex end, I write on my phone. I've been using Google Docs to move seamlessly from scribbled writing drafts on my phone to formatted, finished works on my computer since I was fourteen.

Except, Google Docs is useless now. I've been unable to use it since they lowered the storage capacity. The only other cloud storage writing thingy with similar functionality is Office 365, which sucks.

Could a dumb phone with a basic "notes" feature work? Maybe, but I'd have to re-type everything to get it into a formatted document. Ideally, I'd have like, a mini-laptop just for writing - something I could fit in my pocket or in a small bag, so I could bring it to work without looking like a dick - and then, in addition, a basic phone for calls/texts/GPS stuff. But does a device this specific to my use case even exist?

Yes. Yes it does.

This is a GPD Micro PC. GPD mainly sells handheld gaming machines, though this product is designed for mobile IT professionals. It's probably too chonky for a pocket, but mark my words, I will figure out how to make it work for me.

It's stupid, but this gave me a rush. I've been struggling along, tied to the bloated corpses of three gmail accounts, for years, because I needed Google Docs for my writing workflow. But now I don't. I have the power to actually tailor my tech for my life.

By this point, I was like, alright, I don't need Google Docs anymore, I don't need a smartphone, what else? Do I need Windows? No, probably not, right? I can use Linux Mint on this new guy, especially since he'll mostly be a basic writing machine. LibreOffice is less intrusive and bloated than MS Word - a better experience for free than I'd have from the paid program. If I go all the way and install Linux, I also won't have to deal with ads in my start menu, or pre-installed spyware screenshotting my activities.

In fact, if I back everything up on an external drive, I can delete my old Google Drives and switch my main computer to Linux, too! So, I finally bit the bullet and invested in an external hard drive.

This is the problem with "product ecosystems," by the way. When one part of that ecosystem - Google Docs - fails, the whole thing collapses. All the bloat and corruption you dealt with just stops being worth it, and it's easier to make a radical change to a new system. I witnessed something similar happen with comedy tech youtuber Dankpods earlier this year, except with Apple's ecosystem: he was a lifetime Apple guy - seemingly not in a worship way, but he liked their products, and was certainly in Apple's ecosystem. Then a couple things went sour for him, and now he runs Linux.

I'm doing this for personal and ideological reasons. I'm personally sick of Clippy - I mean, Copilot - peeping in to tell me how to write what I'm writing on Office 365. I abhor the idea of paying Google for a service they offered for free until recently, knowing they can flip the script at any point. And while we're talking ideology, I'm a communist, and even though this is far from a shift everyone can make, I believe that taking any available steps towards shutting Big Tech out of our lives is a net good. If all you can do is delete Instagram, or use a screentime tracker, or switch to Firefox, do it. I'm finally in a position to make this more drastic change, and I'm excited.

Get in the weeds about how you use technology. Do you need everything at your fingertips, all the time? If not, what, specifically, do you need? Is there a way that you, now or in the future, can trim out the parts you dislike? And what can you change now?


Note

begging on my hands and knees for an objectum character 🙏🙏🙏

I based her colors more on the Queerplatonic Objectum Flag because I preferred the palette, but here she is! This Magical Girl first appeared in this sketchbook post, with orthosis braces having been added to her design to account for cataplexy brought on by her narcolepsy!

This Flag was requested by @satellites-halo, who requested for the design to be themed around pillows, buildings, or computers! Objectum is a term describing the attraction to inanimate objects!

This Magical Girl/Kid (either or) has a Cyberpop theme! She uses They/She pronouns!

Their name is Welchia, after a computer virus! She can pull people and objects out of technology - for instance, if they look up ‘Apple’ they can pull a real Apple from the photo. If she pulls out a real person, they aren’t cloned, they’re just teleported - this functionally turns photos into portals! Fictional objects and people only last for about 20 minutes before they destabilize. Glass closely monitors what they pull, and will get rid of it if it’s unnecessary or destructive to civilian life, so Welchia can’t pull out money or like, nukes.

Her Magical Kid weapon is her best friend/queerplatonic partner, Buddy, her computer given sentience by Glass! Welchia can hurl them around by their indestructible cord and use their heavy, impervious shape as a yo-yo essentially!

A useless fact about them is that she uses a motorized wheelchair as a civilian because her life is a lot quieter and she’s a lot more likely to pass out, but as a Magical Girl they have enough going on that they don’t crash as often, so their orthosis braces work well enough!

#the magical kid project#artists on tumblr#pride flag#digital art#lgbt pride#original art#lgbt#lgbtq#magical girl#lgbtqia#objectum#character#original character#character design#Welchia TMKP#Glass TMKP#Buddy TMKP


Text

So I made an app for PROTO. Written in Kotlin and runs on Android.

Next, I want to upgrade it with a controller mode. It should work so that I simply plug a wired Xbox controller into my phone with a USB OTG adaptor… and bam, the phone does all the complex wireless communication and doubles as the battery. Meaning that besides the controller, you only need the app and… any phone. Which anyone is rather likely to have. Done.

Now THAT is convenient!

(Warning, the rest of the post turned into... a few rants.) Why Android? Well, I dislike Android less than iOS.

So is it better to be crawling in front of the altar of "We are making the apocalypse happen" Google than "5 Chinese child workers died while you read this" Apple?

Not much…

I really should switch over to a better open source Linux distribution… But I do not have the willpower to research which one... So on Android I stay.

Kotlin is meant to be "Java, but better/more modern/more functional programming style" (Everyone realized a few years back that the 100% object-oriented programming paradigm is stupid as hell. And we already knew that about the functional programming paradigm. The best is a mix of everything, each used when it is the best option.) And for the most part, it succeeds. Java/Kotlin compiles its code down to "bytecode", which is essentially assembler but for the Java virtual machine. The virtual machine then runs the program. Like how JavaScript has the browser run it instead of compiling it to the specific machine you want it to run on… It makes them easy to port…

Except in the case of Kotlin on Android... there is not a snowflake's chance in hell that you can take your entire codebase and just run it on another Linux distribution, Windows or iOS…

So... you do it for the performance right? The upside of compiling directly to the machine is that it does not waste power on middle management layers… This is why C and C++ are so fast!

Except… Android is… Clunky… It relies on design ideas that require EVERY SINGLE PROGRAM AND APP ON YOUR PHONE to behave nicely (Lots of "This system only works if every single app uses it sparingly and does not screw the others over" paradigms.). And many distributions, like mine from Motorola for example, come with software YOU ARE NOT ALLOWED TO UNINSTALL... meaning that software on your phone is ALWAYS behaving badly. Because not a single person actually owns an Android phone. You own a brick of electronics that is worthless without its OS, and Google does not sell that to you or even gift it to you. You are renting it for free, forever. Same with Motorola, which added a few extra modifications onto Google's Android and then gave it to me.

That way, Google does not have to give any rights to its customers. So I cannot completely control what my phone does. Because it is not my phone. It is Google's phone.

That I am allowed to use. By the good graces of our corporate god emperors

"Moose stares blankly into space trying to stop being permanently angry at how everyone is choosing to run the world"

… Ok that turned dark… Anywho. TLDR: There is a better option for 95% of apps (which are "A GUI that interfaces with a database"): "Just write a single HTML document with internal CSS and JavaScript". Usually simpler, MUCH easier and smaller… And now your app works on any computer with a browser. Meaning all of them…

I made a GUI for my parents recently that works exactly like that. Soo this post:

It was frankly a mistake of me to learn Kotlin… Even more so since it is an… awful language… Clearly good ideas, then ruined by marketing department people yelling "SUPPORT EVERYTHING! AND USE ALL THE BUZZWORD TECHNOLOGY!" Like… If your language FORCES you to use exceptions for normal runtime behavior "Stares at CancellationException"... dear god that is horrible...

Made EVEN WORSE by being a really complicated way to re-invent the GOTO expression… You know... The thing every programmer is taught will eat your feet if you ever think about using it because it is SO dangerous, and SO bad form to use it? Yeah. It is that, hidden in a COMPLETELY WRONG WAY to use exceptions…

goodie… I swear to Christ, every page or two of my Kotlin notes has me ranting about how I learned how something works, and that it is terrible... Blaaa. But anyway, now that I know it, I try to keep it fresh in my mind and use it from time to time. Might as well. It IS possible to run certain things more efficiently than a web page, and you can work much more directly with the file system. It is... hard-ish to get a webpage to "load" a file automatically... But believe me, it is good that this is the case.

Anywho. How does the app work and what is the next version going to do?

PROTO is meant to be a platform I test OTHER systems on, so he is optimized for simplicity. So you control him by sending an HTTP/1.1 message of type text/plain… (This is a VERY fancy sounding way of saying "A string" in network speak). The string is 6 comma-separated numbers: linear movement XYZ and angular movement XYZ.

The app is simply 5 buttons that each send an HTTP PUT request with fixed values. Specifically:

0.5/-0.5 meter/second linear (Drive back or forward)

0.2/-0.2 radians/second angular (Turn right or turn left)

Or all 0 for stop
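As a rough illustration of that message format, here is a minimal Python sketch (not the actual Kotlin app). It assumes forward/back is linear X and turning is angular Z, since the post only says "linear XYZ and angular XYZ"; which sign means left or right is also a guess. The address `PROTO_URL` and the helper names are made up for the example.

```python
from urllib.request import Request

# Hypothetical address: the post does not say where PROTO listens.
PROTO_URL = "http://192.0.2.10/"

# Six numbers per command: linear X, Y, Z then angular X, Y, Z.
# ASSUMPTION: driving uses linear X and turning uses angular Z;
# the sign chosen for "left" versus "right" is a guess as well.
COMMANDS = {
    "forward": (0.5, 0.0, 0.0, 0.0, 0.0, 0.0),
    "back": (-0.5, 0.0, 0.0, 0.0, 0.0, 0.0),
    "left": (0.0, 0.0, 0.0, 0.0, 0.0, 0.2),
    "right": (0.0, 0.0, 0.0, 0.0, 0.0, -0.2),
    "stop": (0.0, 0.0, 0.0, 0.0, 0.0, 0.0),
}


def encode_command(values):
    """Turn six numbers into the comma-separated string PROTO expects."""
    if len(values) != 6:
        raise ValueError("expected 3 linear and 3 angular values")
    return ",".join(str(v) for v in values)


def build_request(name, url=PROTO_URL):
    """Build (but do not send) the HTTP PUT request for one button."""
    body = encode_command(COMMANDS[name]).encode("ascii")
    return Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "text/plain"},
    )
```

Sending a command would then be something like `urllib.request.urlopen(build_request("forward"), timeout=2)`, with the real address swapped in.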

(Yes, I just formatted normal text as code to make it more readable... I think I might be more infected by programming so much than I thought...)

Aaaaaanywho. That must be enough ranting. Time to make the app


Text

I know that the average person’s opinion of AI is in a very tumultuous spot right now - partly due to misinformation and misrepresentation of how AI systems actually function, and partly because of the genuine risk of abuse that comes with powerful new technologies being thrust into the public sector before we’ve had a chance to understand the effects; and I’m not necessarily talking about generative AI and data-scraping, although I think that conversation is also important to have right now. Additionally, the blanket term of “AI” is really very insufficient and only vaguely serves to ballpark a topic which includes many diverse areas of research - many of these developments are quite beneficial for human life, such as potentially designing new antibodies or determining where cancer cells originated within a patient that presents complications. When you hear about artificial intelligence, don’t let your mind instantly gravitate towards a specific application or interpretation of the tech - you’ll miss the most important and impactful developments.

Notably, NVIDIA is holding a keynote presentation from March 18-21st to talk about their recent developments in the field of AI - a 16 minute video summarizing the “everything-so-far” detailed in that keynote can be found here - or in the full 2 hour format here. It’s very, very jargon-y, but includes information spanning a wide range of topics: healthcare, human-like robotics, “digital-twin” simulations that mirror real-world physics and allow robots to virtually train to interact and navigate particular environments — these simulated environments are built on a system called the Omniverse, and can also be displayed to Apple Vision Pro, allowing designers to interact and navigate the virtual environments as though standing within them. Notably, they’ve also created a digital sim of our entire planet for the purpose of advanced weather forecasting. It almost feels like the plot of a science-fiction novel, and seems like a great way to get more data pertinent to the effects of global warming.

It was only a few years ago that NVIDIA pivoted from being a “GPU company” to putting a focus on developing AI-forward features and technology. A few very short years; showing accelerating rates of progress. This is when we began seeing things like DLSS and ray-tracing/path-tracing make their way onto NVIDIA GPUs; which all use AI-driven features in some form or another. DLSS, or Deep Learning Super Sampling, is used to generate and interpolate between frames in a game to boost framerate, performance, visual detail, etc - basically, your system only has to actually render a handful of frames and AI generates everything between those traditionally-rendered frames, freeing up resources in your system. Many game developers are making use of DLSS to essentially bypass optimization to an increasing degree; see Remnant II as a great example of this - it runs beautifully on a range of machines with DLSS on, but it runs like shit on even the beefiest machines with DLSS off; though there are some wonky cloth physics, clipping issues, and objects or textures “ghosting” whenever you’re not in motion; all seem to be a side effect of AI-generation as the effect is visible in other games which make use of DLSS or the AMD-equivalent, FSR.

Now, NVIDIA wants to redefine what the average data center consists of internally, showing how Blackwell GPUs can be combined into racks that process information at exascale speeds - which is very, very fucking fast - speeds like that have only ever actually been achieved on some 4 or 5 machines on the planet, I think, and those have been conventional room-sized supercomputers rather than quantum machines. The first exascale computer, Frontier, came into existence in 2022 and was ranked the fastest supercomputer in existence in June 2023, operating at some 1.19 exaFLOPS. Notably, this computer is around 7,300 sq ft in size; reminding me of the space-race era supercomputers which were entire rooms. NVIDIA’s Blackwell DGX SuperPOD consists of around 576 GPUs and operates at 11.5 exaFLOPS, and is about the size of a standard row of server racks - much smaller than an entire room, but still quite large. NVIDIA is also working with AWS to produce Project Ceiba, another supercomputer consisting of some 20,000 GPUs, promising 400 exaFLOPS of AI-driven computation - it doesn’t exist yet.
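To put those figures side by side, here is a quick back-of-the-envelope sketch in Python using only the numbers quoted above. One caveat I'm fairly sure of but haven't checked against the keynote itself: NVIDIA's "AI" exaFLOPS are quoted at much lower numeric precision than the benchmark behind Frontier's figure, so the ratio is a rough sense of scale and not a like-for-like comparison.

```python
EXA = 10**18
PETA = 10**15

# Figures as quoted in the paragraph above.
frontier_flops = 1.19 * EXA   # Frontier, June 2023
superpod_flops = 11.5 * EXA   # Blackwell DGX SuperPOD
superpod_gpus = 576
ceiba_flops = 400 * EXA       # Project Ceiba (announced, not built yet)
ceiba_gpus = 20_000

# Throughput each GPU would have to contribute to reach the quoted totals.
superpod_per_gpu = superpod_flops / superpod_gpus
ceiba_per_gpu = ceiba_flops / ceiba_gpus

# How many "Frontiers" the SuperPOD figure amounts to on paper.
superpod_vs_frontier = superpod_flops / frontier_flops

print(f"SuperPOD per GPU: {superpod_per_gpu / PETA:.1f} petaFLOPS")
print(f"Ceiba per GPU:    {ceiba_per_gpu / PETA:.1f} petaFLOPS")
print(f"SuperPOD vs Frontier: {superpod_vs_frontier:.1f}x")
```

Both NVIDIA figures work out to roughly 20 petaFLOPS per GPU, so the two announcements are at least consistent with each other.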

To make my point, things are probably only going to get weirder from here. It may feel somewhat like living in the midst of the Industrial Revolution, only with fewer years in between each new step. Advances in generative-AI are only a very, very small part of that - and many people have already begun to bury their heads in the sand as a response to this emerging technology - citing the death of authenticity and skill among artists who choose to engage with new and emerging means of creation. Interestingly, the Industrial Revolution is what gave birth to modernism, and modern art, as well as photography, and many of the concerns around the quality of art in this coming age-of-AI and in the post-industrial 1800s largely consist of the same talking points - history is a fucking circle, etc - but historians largely agree that the outcome of the Industrial Revolution was remarkably positive for art and culture; even though it took 100 years and a world war for the changes to become really accepted among the artists of that era. The Industrial Revolution allowed art to become detached from the aristocratic class and indirectly made art accessible for people who weren’t filthy rich or affluent - new technologies and industrialization widened the horizons for new artistic movements and cultural exchanges to occur. It also allowed capitalist exploitation to ingratiate itself into the western model of society and paved the way for destructive levels of globalization, so: win some, lose some.

It isn’t a stretch to think that AI is going to touch upon nearly every existing industry and change it in some significant way, and the events that are happening right now are the basis of those sweeping changes, and it’s all clearly moving very fast - the next level of individual creative freedom is probably only a few years away. I tend to like the idea that it may soon be possible for an individual or small team to create compelling artistic works and experiences without being at the mercy of an idiot investor or a studio or a clump of illiterate shareholders who have no real interest in the development of compelling and engaging art outside of the perceived financial value that it has once it exists.

If you’re of voting age and not paying very much attention to the climate of technology, I really recommend you start keeping an eye on the news for how these advancements are altering existing industries and systems. It’s probably going to affect everyone, and we have the ability to remain uniquely informed about the world through our existing connection with technology; something the last Industrial Revolution did not have the benefit of. If anything, you should be worried about KOSA, a proposed bill you may have heard about which would limit what you can access on the internet under the guise of making the internet more “kid-friendly and safe”, but will more than likely be used to limit what information can be accessed to only pre-approved sources - limiting access to resources for LGBTQ+ and trans youth. It will be hard to stay reliably informed in a world where any system of authority or government gets to spoon-feed you their version of world events.

#I may have to rewrite/reword stuff later - rough line of thinking on display#or add more context idk#misc#long post#technology#AI


Text

BEST BRANDS WE ARE DEALING WITH

At Ov Mobiles, we specialise in a comprehensive range of mobile services to meet the needs of our customers. Based in Thoothukudi, we deal in the most popular mobile phones, and our offering includes chip-level repairs encompassing both hardware and software solutions, such as PIN and FRP unlocks. We ensure precision and versatility in customizing mobile accessories and components.

POPULAR PHONES IN INDIA IN 2024

iPhone 16 Pro Max

Samsung Galaxy S24 Ultra

iPhone 16

Google Pixel 9

OnePlus Open

Samsung Galaxy Z Flip 6

Galaxy S24

Google Pixel 9 Pro

iPhone 14

iPhone 16 Pro

Galaxy A25 5G

Asus ROG Phone 8 Pro

OnePlus

Redmi Note 13

We specially deal in

Galaxy S24

iPhone 16

Google Pixel 9 Pro

TOP BRAND PHONE IN THOOTHUKUDI

Galaxy S24

The Galaxy S24 series features a "Dynamic AMOLED 2X" display with HDR10+ support, 2600 nits of peak brightness, LTPO and "dynamic tone mapping" technology. All models, which we at Ov Mobiles offer, use an ultrasonic in-screen fingerprint sensor. The S24 series uses a variable refresh rate display with a range of 1 Hz or 24 Hz to 120 Hz. The Galaxy S24 series introduces advanced intelligence settings, giving you control over AI processing for enhanced functionality. Rest easy with mobile protection fortified by Knox Vault, as well as Knox Matrix, Samsung's vision for multi-device security. The Galaxy S24 series is also water and dust resistant, with all three phones featuring an IP68 rating, so you can enjoy a phone that is able to withstand the demands of your everyday life! This phone will definitely meet the needs of people in and around Thoothukudi.

THE MOST FAVOURITE MOBILE PHONE IN INDIA

iPhone 16

The new A18 chip delivers a huge leap in performance and efficiency, enabling demanding AAA games, as well as a big boost in battery life. Available in 6.1-inch and 6.7-inch display sizes, iPhone 16 and iPhone 16 Plus feature a gorgeous, durable design and offer a big boost in battery life. Apple has confirmed that the new iPhone 16 and iPhone 16 Plus models are equipped with 8GB RAM, an upgrade from the 6GB RAM in last year's base models. Johny Srouji, Apple's senior vice president of hardware technologies,

How long does the iPhone 16 battery last?

| Model | Battery size | Battery life (Hrs:Mins) |
| --- | --- | --- |
| iPhone 16 | 3,561 mAh | 12:43 |
| iPhone 16 Plus | 4,674 mAh | 16:29 |
| iPhone 16 Pro | 3,582 mAh | 14:07 |
| iPhone 16 Pro Max | 4,685 mAh | 17:35 |

The iPhone is a smartphone made by Apple that combines a computer, iPod, digital camera and cellular phone into one device with a touchscreen interface. iPhones are super popular because they're easy to use, work well with other Apple gadgets, and keep your stuff safe. They also take great pictures, have cool features, and hold their value over time. iOS devices benefit from regular and timely software updates, ensuring that users have access to the latest features and security enhancements. This is in contrast to Android, where the availability of updates varies among the manufacturers and models stocked at Ov Mobiles.

FUTURE ULTIMATE PHONE PEOPLE THINK

Google Pixel 9 Pro

The Google Pixel 9 Pro is the new kid on the block in this year's lineup. The Pixel 8 Pro was succeeded by the Google Pixel 9 Pro XL, and the 9 Pro is a new addition to the portfolio - it is a compact, full-featured flagship with all of the bells and whistles of its bigger XL sibling.

A compact Pixel is not a new concept in itself, of course, but this is the first time Google is bringing the entirety of its A-game to this form factor. The Pixel 9 Pro packs a 48MP, 5x optical periscope telephoto camera - the same as the Pixel 9 Pro XL. There is also UWB onboard the Pixel 9 Pro. Frankly, it's kind of amazing that Google managed to fit so much extra hardware inside what is essentially the same footprint as the non-Pro Pixel 9.

Google Pixel 9 Pro specs at a glance:

Body: 152.8x72.0x8.5mm, 199g; Glass front (Gorilla Glass Victus 2), glass back (Gorilla Glass Victus 2), aluminum frame; IP68 dust/water resistant (up to 1.5m for 30 min).

Display: 6.30" LTPO OLED, 120Hz, HDR10+, 2000 nits (HBM), 3000 nits (peak), 1280x2856px resolution, 20.08:9 aspect ratio, 495ppi; Always-on display.

Chipset: Google Tensor G4 (4 nm): Octa-core (1x3.1 GHz Cortex-X4 & 3x2.6 GHz Cortex-A720 & 4x1.92 GHz Cortex-A520); Mali-G715 MC7.

Memory: 128GB 16GB RAM, 256GB 16GB RAM, 512GB 16GB RAM, 1TB 16GB RAM; UFS 3.1.

OS/Software: Android 14, up to 7 major Android upgrades.

Rear camera: Wide (main): 50 MP, f/1.7, 25mm, 1/1.31", 1.2µm, dual pixel PDAF, OIS; Telephoto: 48 MP, f/2.8, 113mm, 1/2.55", dual pixel PDAF, OIS, 5x optical zoom; Ultra wide angle: 48 MP, f/1.7, 123-degree, 1/2.55", dual pixel PDAF.

Front camera: 42 MP, f/2.2, 17mm (ultrawide), PDAF.

Video capture: Rear camera: 8K@30fps, 4K@24/30/60fps, 1080p@24/30/60/120/240fps; gyro-EIS, OIS, 10-bit HDR; Front camera: 4K@30/60fps, 1080p@30/60fps.

Battery: 4700mAh; 27W wired, PD3.0, PPS, 55% in 30 min (advertised), 21W wireless (w/ Pixel Stand), 12W wireless (w/ Qi-compatible charger), Reverse wireless.

Connectivity: 5G; eSIM; Wi-Fi 7; BT 5.3, aptX HD; NFC.

Misc: Fingerprint reader (under display, ultrasonic); stereo speakers; Ultra Wideband (UWB) support, Satellite SOS service, Circle to Search.

Google also paid some extra attention to the display of the Pro. It is a bit bigger than the Pixel 8's and better than that inside the regular Pixel 9. The resolution has been upgraded to 1280 x 2856 pixels, the maximum brightness has been improved, and there is LTPO tech for dynamic refresh rate adjustment.

WHY YOU SHOULD CHOOSE OV MOBILES?

You should choose us because of the great deals and offers we provide for our customers. At festival time especially, our offers are sure to attract you. Another great opportunity at Ov Mobiles: when you purchase any product from our shop, we offer you a generous discount on your next purchase of any model.


Text

Sticky mental models and old habits die hard in product diffusion

Successfully launching a new product requires either influencing or breaking the mental models behind consumers' behavior. Humane’s Ai Pin was harshly critiqued as a failed product on a podcast I was listening to today. The Ai Pin is an AI assistant that attaches to one’s lapel and operates by voice or through a digital interface projected on one’s palm. It is riddled with functionality weaknesses and has an “uncool” look and feel. We are not short of new wearables: some have been very successful, such as the Apple Watch and Fitbit, while some flopped quickly, never to be mentioned again, such as Google Glass and now the Ai Pin. Because wearables, if successful, are so intertwined with our daily lives, successful wearables work with, rather than break, the existing mental models and lifestyle habits of their target consumers. The Apple Watch and Fitbit succeeded because they simply replaced what was previously the watch on one’s wrist. Consumers do not need to unlearn old habits and adopt new ones for product diffusion to happen. The hurdle to adoption is lower.

The same could not be said about Google Glass. It worked with existing lifestyle habits associated with spectacles or sunglasses, but its high price point narrowed the accessible market. Within that smaller market, its use breached the socially acceptable level of privacy. This perhaps suggests that product diffusion is not just personal; it also requires community engagement.

However, when new products are truly novel and require consumers to redesign their lives, the company needs to put in more resources and time to mold its target consumers. Apple started in the 1970s and spent the next 30 years in computers, hard disks and printers. It only branched into speakers in 2000 and phones in 2007. Those decades of brand building created the association of coolness and high quality with its products. Therefore, when Apple launched its 1st Gen iPod in 2001, it relied on its existing customer base as the first adopters: customers who were so sticky and loyal to Apple that they took Apple’s lead in the design of their lives.


Note

can you share about your shibusawa kids :D

yes!!!

(sorry this took so long to get out, my computer broke so its slow typing big text posts on mobile so please forgive any typos)

they're still in development at the moment as i try to build my nextgen but heres something of an overview of them so far to give you a bit of a general idea :]

some background: i like to ship shibusawa in a monstercule (monster polycule) of him, bram, lovecraft, adam, and sigma so the four kids i talk about here have additional siblings from those as well

they also each have some reptile i equate them with in my mind that i use to characterize them

Tatsumi Shibusawa-Lovecraft

Tatsumi is the oldest of the family, and has the least conventional origin in comparison to not only his siblings but all my fankids (yes, even chimeraverse, even if those aren't actually technically shipkids).

He's technically closer to a singularity than anything else, first appearing as an egg shaped bundle of excess energy in the aftermath of Dead Apple. He wasn't really conscious or anything during this, just a bunch of barely contained highly unstable energy swirling around. The egg was initially gathered by the ADA but was turned over to the Special Division for Unusual Powers so it could be more safely stored and observed. I don't want to spoil the entire story since I plan on writing/drawing it, so to cut to the end, the energy stored in the "egg" wasn't stable enough to actually form a living body and Shibusawa couldn't provide any more than what he already had. It required contact with another extreme source of energy, which ended up being the cosmic power Lovecraft is constantly using to maintain a human form himself. (I will say there were a couple other circumstances that could've provided Tatsumi with his stabilized human form, Lovecraft was just the one who actually made physical contact with the "egg" to actually trigger it.)

His ability, Revolt of the Body, doesn't actually do much, primarily because it's not much of an ability and functions more like Great Old One. Its main point of use is just shapeshifting and letting Tatsumi be able to do that without becoming unstable again. But... perhaps it does have other capabilities yet to be seen...

Tatsumi is fairly laid back but is also frequently bored and gets sleepy if his interest isn't held. He seeks out a lot of novelty to keep himself entertained (and yes as a result he is easily taken in by video games for those dopamine hits. Please don't let him near a casino.) He's rather sluggish in most aspects but when excited can cause a lot of accidental damage by forgetting how strong he is. He has a penchant for theatre and art and can be found skulking around galleries or performance halls people watching or waiting for plays or dances to begin.

He's designed to evoke a python or anaconda 🐍

References: Tatsumi Hijikata and his solo work Hijikata Tatsumi and Japanese People: Revolt of the Body

Mina Shibusawa-Stoker

Mina is the middle child of the Stoker triplets (including her older brother Jonathan and younger sister Lucy aka Lulu).

Her ability, White Wyrm Lair lets her hypnotize others. The ability takes the form of a crystalline looking serpent that inflicts a setting bite, its venom making the victim extremely susceptible to suggestion. Mina can choose to activate the latent venom at whatever point she chooses, provided the ability hasn't been deactivated in the time passed.

She's got a lot of confidence and can be a bit of a trickster, using her charms for her own amusement. She's also very into luxury and likes to lounge around. She's honestly got knife cat energy, which I love for her.

Her reptile is a komodo dragon 🐉

References: Mina Harker (character in Bram Stoker’s Dracula), Bram Stoker novel The Lair of the White Worm

Yukio Shibusawa

Yukio's one of the two "middle children" of the group (along with Slava, who isn't discussed here since they aren't a Shibusawa kid).

He's fairly laid back, or at least appears that way because of his introspective nature. He gets on well with most people and tries to be accommodating, but he can end up as a pushover when his attempts to people-please conflict with his own wants.

While he doesn't have an actual ability, he does eventually gain access to The Book. That plays a lot into his character and arc so I don't actually want to say too much about it here to avoid spoilers, sorry about that.

While it wasn't initially intended, for some reason he reminds me most of a snapping turtle 🐢

References: Yukio Mishima, assorted work by Mishima (Confessions of a Mask, The Frolic of the Beasts, The Sea of Fertility tetralogy (Spring Snow, Runaway Horses, The Temple of Dawn, and The Decay of the Angel))

Epsilon Shibusawa

Epsilon is the baby of the family!

Because they're so young I don't have too much to say unfortunately.

They don't have an ability of their own but did end up inheriting access to Draconia's fog. They're very clingy to their family and don't like to be far from them or in new places around new people. They're generally very anxious and withdrawn, but they enjoy listening to stories and solving puzzles, which helps feed their curiosity.

While designed with the inspiration of a hognose snake, they also take some aspects from crocodilians 🐊

References: the Greek letter Epsilon, Tatsuhiko Shibusawa’s The Song of the Eradication and The Rib of Epicurus

Bonus "Fun" Fact!:

The reason all of them have draconic hybrid physiology is that they were fused with energy from Draconia. Tatsumi is of course his own special case in that regard, being a literal singularity, with Revolt of the Body making it possible for him to shift just how human vs monster he appears.

The others are permanent, with their horns growing from the spot in their skulls where Shibusawa embedded shards of Draconia gems into them, thus making them hybrids just like him.

#monstercule#bungo stray dogs#bsd#prologue epilogue dialogue#stray cats verse#oc: tatsumi shibusawa lovecraft#oc: mina shibusawa stoker#oc: yukio shibusawa#oc: epsilon shibusawa#bungou stray dogs#bsd oc#bsd ocs#bsd fankid#bsd shipkid#bungo stray dogs oc#bungou stray dogs oc


Note

how beginner-friendly would you say Linux is? is it worth getting into?

Hey! Didn't mean to keep you waiting - I thought I answered this (I guess I must have drafted it and then forgot to publish or save. Sorry!)

So my opinion is unequivocally yes. For starters most Linux distros are open source and predicated on community-based development (maybe there are some that are more closed/proprietary, but I don't know about those). That gets you away from the microsoft/apple functional duopoly in the individual computing space, which is a really appealing motivation (at least to me!)

Also, I feel like Linux has a lingering reputation of being very command line-y and antagonistic toward new or casual users. Perhaps that was true in the past, but most Linux distros around today are pretty similar to the familiar Windows or Mac OSes, to the point where it will likely be pretty intuitive for you to figure out how to navigate them! And if you do get stuck along the way, the OS itself probably comes loaded with tutorials or walkthrus to help get you started or on your way. That said, if you do want the command line experience, it's usually really easy to access the terminal/console, and there's lots of documentation and community resources about how to do stuff!

So if you're thinking about getting started with Linux, I recommend doing some research to see if there's a distro that seems to best suit your personal computing needs and preferences. But if you were to ask me directly I'd suggest Fedora or Ubuntu as pretty good general-purpose Linux systems that are fairly accessible/intuitive, work well (even on older computers), and have pretty easy learning curves. In fact, if you happen to have an older computer or laptop on hand that you can just play around with, that's a great way to dip your toe in the water to see how you like it before deciding to commit or not!

One word of 'warning' I'll offer though is that if you do a lot of gaming, not all games are designed for compatibility with Linux architecture. If that's important to you then please look up what the internet says about your fave games + Linux!

If you'd like to get a taste of open source without fully committing though, there are open source alternatives to most major software applications. I especially like using LibreOffice as an alternative to Microsoft Office or whatever the hell Google is doing with Docs these days.

And as always, if you're not already doing so, I strongly suggest changing your web browser to Firefox!

(this line intentionally left blank because I'm sure people smarter about this than I am will have plenty more they can offer:

)


Note

iirc a while ago you mentioned disliking microsoft - just wondering if you have any recommendations for another os to use and guides to read on it? ive been considering switching from microsoft for a while now but because ive had my computers for a while it feels like a big change

i get it! i've been split pretty much 50/50 windows and mac for my whole life (with some experience with linux) and i'd say they arent all that different in the most important ways. the barrier for mac, which is quite a big one, is that you need apple hardware like a macbook. however, if you acquire one, you'd probably find the major things like the file system, using applications, etc are very intuitive regardless of what specific OS you are most used to. On mac, most things are very streamlined and self contained, applications do not use a central registry and while they store data in shared folders (for example, "Application Support" which is roughly equivalent to something like "APPDATA" on windows), applications are mostly contained within themselves. for an illustrative example: firefox has a .app package in the applications folder, and stores its cache and history in application support, and that's it. as a result, uninstalling apps is almost always a matter of deleting the app file and not using a central uninstaller in settings (though some more complex apps have their own uninstaller). shortcuts and aliases are very rare, and in my opinion most things are easier to find than on windows. its difficult for me to explain exactly what i mean, but there is only one "layer" to macOS. windows is built on principles of backwards compatibility, so there can be a lot of junk where a more central cohesive system would be easier to use and less likely to break. one of my strongest frustrations with windows is that it has two entirely separate (3 if you count the registry) applications for changing settings, and they look and behave completely differently. apple's cohesion is a trade off, as it requires axing functionality that isn't common or popularly necessary anymore (RIP 32 bit programs), but i find it's absolutely worth it.
in all, the way macOS functions is a lot like how a phone does, in terms of the way its designed, though i'd argue its more like an android than an iphone. it has a reputation for being locked down and less customizable, but i'd say that isn't accurate for most useful or desirable tinkering. it requires using the terminal and learning some unix commands but it's quite straightforward once you do, and less likely to break or frustrate you than windows is (in my opinion). linux of course is leaps and bounds above both proprietary systems but that's not really my area of expertise. at the end of the day, windows is accessible, that's its biggest advantage and the source of a lot of its problems. it has to run on old architecture, cling to obsolete and archaic systems because that's what people know and expect, and appeal to microsoft execs by jamming in new useless features to impress clueless shareholders. the main issue with microsoft is that it wants to be apple, and its management pushing cortana and all the things people hate about windows 8, 10, and 11 is completely at odds with its fundamental design principles. that's why its so terrible and so ugly to me. macOS is like if those features were thought out, intuitive, and part of a well designed whole, instead of being wasteful, bloated, and a constant annoyance to the people who actually desire the unique things windows has to bring to the table.

oh and to answer your actual question since this just turned into one of my rambles: i would recommend trying out a linux distribution (like ubuntu or debian) by booting it on a flash drive and playing around with it to get a feel for the file system and other core aspects. its designed somewhat similarly to mac since they are both unix-like but its free and open source. if you hate it then its probably best to not spend a lot on a macbook. if you live near an apple store (or another dept. store that has electronics display models) you can go in and play around on one. there's lots of guides online, though i find its best to just fuck around and if things go wrong, seek answers online. also one last thing is that if you do decide to go with a mac, its no problem to just keep using windows for what you need it to. i have an old PC laptop i use to play video games that only run on windows after all.


Note

What kind of work can be done on a commodore 64 or those other old computers? The tech back then was extremely limited but I keep seeing portable IBMs and such for office guys.

I asked a handful of friends for good examples, and while this isn't an exhaustive list, it should give you a taste.

I'll lean into the Commodore 64 as a baseline for which era to home in on: let's take a look at 1982 +/- 5 years.

A C64 can do home finances, spreadsheets, word processing, some math programming, and all sorts of other basic productivity work. Games were the big thing you bought a C64 for, but we're not talking about games here -- we're talking about work. I bought one that someone used to write and maintain a local user group newsletter on both a C64C and C128D for years, printing labels and letters with their own home equipment, mailing floppies full of software around, that sorta thing.

IBM PCs eventually became capable of handling computer aided design (CAD) work, along with a bunch of other standard productivity software. The famous AutoCAD was mostly used on this platform, but it began life on S-100 based systems from the 1970s.

Spreadsheets were a really big deal for some platforms. VisiCalc was the killer app that the Apple II can credit its initial success to. Many other platforms had clones of VisiCalc (and eventually ports) because it was groundbreaking to do that sort of list-based mathematical work so quickly, and so error-free. I can't forget to mention Lotus 1-2-3 on the IBM PC compatibles, a staple of offices for a long time before Microsoft Office dominance.

CP/M machines like Kaypro luggables were an inexpensive way of making a "portable" productivity box, handling some of the lighter tasks mentioned above (as they had no graphics functionality).

The TRS-80 Model 100 was able to do a lot of computing (mostly word processing) on nothing but a few AA batteries. They were a staple of field correspondence for newspaper journalists because they had an integrated modem. They're little slabs of computer, but they're awesomely portable, and great for writing on the go. Everyone you hear going nuts over cyberdecks gets that because of the Model 100.

Centurion minicomputers were mostly doing finances and general ledger work for oil companies out of Texas, but were used for all sorts of other comparable work. They were multi-user systems, running several terminals and at least one printer on one central database. These were not high-performance machines, but entire offices were built around them.

Tandy, Panasonic, Sharp, and other brands of pocket computers were used for things like portable math, credit, loan, etc. calculation for car dealerships. Aircraft calculations, replacing slide rules, were another application available on cassette. These went beyond what a standard pocket calculator could do without a whole lot of extra work.

Even something like the IBM 5340, with an incredibly limited amount of RAM, could handle tracking a general ledger, accounts receivable, inventory management, and storing service orders for your company. Small bank branches used them because they had peripherals that could handle automatic reading of the magnetic ink used on checks. Boring stuff, but important stuff.

I haven't even mentioned Digital Equipment Corporation, Data General, or a dozen other manufacturers.

I'm curious which portable IBM you were referring to initially.

All of these examples are limited by today's standards, but these were considered standard or even top of the line machines at the time. If you write software to take advantage of the hardware you have, however limited, you can do a surprising amount of work on a computer of that era.


Text

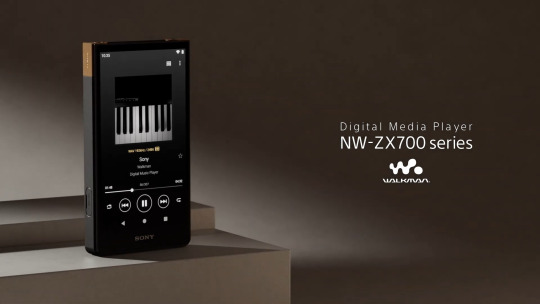

Music Player in 2023... Why Sony?

The convenience of Bluetooth streaming is great but it is constrained by how much data it can pump through its signal. Though portable music players are preferably used with wired headphones for maximal performance, wired cans have seemingly enjoyed a recent renaissance too.