#azuredatabricks

Explore tagged Tumblr posts

Text

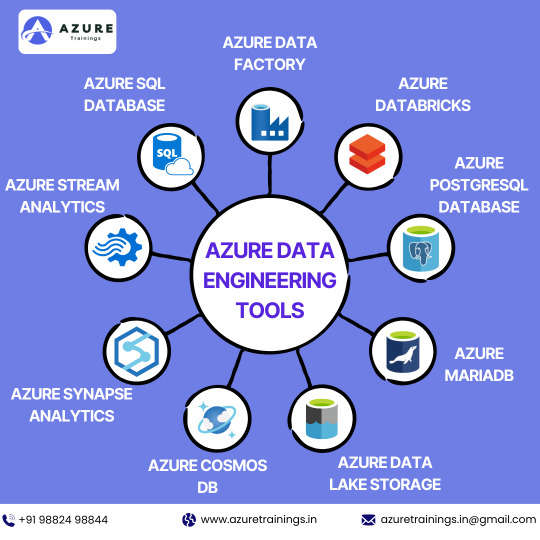

Top Azure Data Engineering Tools You Should Know! Are you ready to master data engineering with Azure tools? These powerful tools will help you process, store, and analyze massive datasets with ease! Want to become an Azure Data Engineer? Join Azure Trainings and take your skills to the next level!

Call: +91 98824 98844 Learn More: www.azuretrainings.in

#AzureDataEngineering#AzureTraining#BigData#CloudComputing#DataAnalytics#AzureSQL#DataScience#AzureSynapse#AzureDatabricks#AzureCosmosDB#LearnAzure#TechSkills#CloudEngineer#DataEngineer#ITTraining#AzureExperts


Text

Creole Studios Launches Comprehensive Data Engineering Services with Azure Databricks Partnership

Creole Studios introduces its cutting-edge Data Engineering Services, aimed at empowering organizations to extract actionable insights from their data. Backed by certified experts and a strategic partnership with Azure Databricks, we offer comprehensive solutions spanning data strategy, advanced analytics, and secure data warehousing. Unlock the full potential of your data with Creole Studios' tailored data engineering services, designed to drive innovation and efficiency across industries.

#DataEngineering#BigDataAnalytics#DataStrategy#DataOps#DataQuality#AzureDatabricks#DataWarehousing#MachineLearning#DataAnalytics#BusinessIntelligence


Text

How Azure Databricks & Data Factory Aid Modern Data Strategy

For all analytics and AI use cases, maximize data value with Azure Databricks.

What is Azure Databricks?

Azure Databricks is a fully managed first-party service that enables an open data lakehouse in Azure. Build a lakehouse on top of an open data lake to quickly light up analytical workloads and enable governance across the data estate. It supports data science, data engineering, machine learning, AI, and SQL-based analytics.

First-party Azure service coupled with additional Azure services and support.

Analytics on your most recent and most complete data for actionable insights.

A data lakehouse foundation on an open data lake unifies and governs data.

Trustworthy data engineering and large-scale batch and streaming processing.

Get one seamless experience

Microsoft sells and supports Azure Databricks, a fully managed first-party service. Azure Databricks is natively connected with Azure services and starts with a single click in the Azure portal. With no integration work required, a full range of analytics and AI use cases can be enabled quickly.

Eliminate data silos and responsibly democratise data to enable scientists, data engineers, and data analysts to collaborate on well-governed datasets.

Use an open and flexible framework

Use an optimised lakehouse architecture on an open data lake to process all data types and quickly light up Azure analytics and AI workloads.

Use Apache Spark on Azure Databricks, Azure Synapse Analytics, Azure Machine Learning, and Power BI depending on the workload.

Choose from languages such as Python, Scala, R, Java, and SQL, and from data science frameworks and libraries such as TensorFlow, PyTorch, and scikit-learn.

Build effective Azure analytics

From the Azure portal, create Apache Spark clusters in minutes.

Photon provides rapid query speed, serverless compute simplifies maintenance, and Delta Live Tables delivers high-quality data with reliable pipelines.

Azure Databricks Architecture

Companies have long collected data from multiple sources, creating data lakes for scale. However, data lakes lacked data quality. The Lakehouse design arose to overcome the restrictions of both data warehouses and data lakes. The Lakehouse, a comprehensive enterprise data infrastructure platform, uses Delta Lake, a popular storage layer. Databricks, a pioneer of the Data Lakehouse, offers Azure Databricks, a fully managed first-party Data and AI solution on Microsoft Azure, making Azure the best cloud for Databricks workloads. This blog article details its benefits:

Seamless Azure integration.

Regional performance and availability.

Compliance, security.

Unique Microsoft-Databricks relationship.

1. Seamless Azure integration

Azure Databricks, a first-party service on Microsoft Azure, integrates natively with valuable Azure Services and workloads, enabling speedy onboarding with a few clicks.

Native integration as a first-party service

Microsoft Entra ID (previously Azure Active Directory): Azure Databricks connects seamlessly with Microsoft Entra ID for access control and authentication. Customers do not have to build this integration themselves, because the Microsoft and Databricks engineering teams have built it natively into Azure Databricks.

Azure Data Lake Storage (ADLS Gen2): Databricks can natively read and write data in ADLS Gen2, which has been jointly optimised for fast data access, enabling efficient data processing and analytics. Integrating Azure Databricks with Data Lake and Blob Storage simplifies data tasks.

Azure Monitor and Log Analytics: Azure Monitor and Log Analytics provide insights into Azure Databricks clusters and jobs.

The Databricks extension for Visual Studio Code connects the local development environment directly to an Azure Databricks workspace.
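To make the ADLS Gen2 item above more concrete, here is a minimal Python sketch of how a notebook might address ADLS Gen2 data. It assumes the standard `abfss://<container>@<account>.dfs.core.windows.net/<path>` URI form. The container names, the storage account name, and both helper functions are hypothetical, and `spark` is the session that a Databricks notebook normally provides.

```python
def adls_uri(container: str, account: str, path: str = "") -> str:
    """Build an abfss:// URI for a path inside an ADLS Gen2 container."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def copy_parquet_to_delta(spark, source_uri: str, target_uri: str) -> None:
    """Read Parquet files from one ADLS Gen2 location and write them as Delta.

    `spark` is an existing SparkSession; the cluster is assumed to already
    have permission to access the storage account.
    """
    df = spark.read.parquet(source_uri)
    df.write.format("delta").mode("overwrite").save(target_uri)


# Hypothetical container and account names, for illustration only.
raw_uri = adls_uri("raw", "examplestorageacct", "/sales/2024/")
curated_uri = adls_uri("curated", "examplestorageacct", "sales")
print(raw_uri)
print(curated_uri)
```

In a notebook, the copy would then be a single call such as `copy_parquet_to_delta(spark, raw_uri, curated_uri)`.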

Integrated, valuable services

Power BI: Power BI offers interactive visualizations and self-service business insight. All business users can benefit from the performance and technology of Azure Databricks when it is used with Power BI. Power BI Desktop connects to Azure Databricks clusters and SQL warehouses. Power BI's enterprise semantic modelling and calculation features enable customer-relevant computations, hierarchies, and business logic, and the Azure Databricks Lakehouse orchestrates data flows into the model.

Publishers can publish Power BI reports to the Power BI service and allow users to access Azure Databricks data using SSO with the same Microsoft Entra ID credentials. Direct Lake mode is a unique feature of Power BI Premium and Microsoft Fabric FSKU (Fabric Capacity/SKU) capacity that works with Azure Databricks. With a Premium Power BI licence, you can Direct Publish from Azure Databricks to create Power BI datasets from Unity Catalog tables and schemas. Direct Lake mode analyses very large data sets by loading Parquet-formatted files directly from a data lake. This capability is beneficial for analysing large models quickly and for models whose data sources are updated frequently.

Azure Data Factory (ADF): ADF natively imports data from over 100 sources into Azure. Easy to build, configure, deploy, and monitor in production, it offers graphical data orchestration and monitoring. ADF can execute notebook, Java Archive (JAR) file, and Python code activities, and it integrates with Azure Databricks via a linked service to enable scalable data orchestration pipelines that ingest data from various sources and curate it in the Lakehouse.

Azure OpenAI: Azure Databricks features AI Functions, a built-in Databricks SQL function, to access Large Language Models (LLMs) straight from SQL. With this rollout, users can immediately test LLMs on their company data via a familiar SQL interface. Once the right LLM prompt has been developed, a production pipeline can be created rapidly using Databricks capabilities such as Delta Live Tables or scheduled Jobs.

Microsoft Purview: Microsoft Azure's data governance solution interfaces with the catalogue, lineage, and policy APIs of Azure Databricks Unity Catalog. This lets Microsoft Purview handle discovery and access requests while Unity Catalog remains the operational catalogue for Azure Databricks. Microsoft Purview syncs metadata with Azure Databricks Unity Catalog, including metastore catalogues, schemas, tables, and views. This connection also discovers Lakehouse data and brings its metadata into Data Map, allowing a scan of the whole Unity Catalog metastore or of selected catalogues. The combination of Microsoft Purview data governance policies with Databricks Unity Catalog creates a single window for data and analytics governance.
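As a rough illustration of the ADF item above, the sketch below builds the JSON definition of a pipeline activity that runs a Databricks notebook through a linked service. The `DatabricksNotebook` activity type and the `notebookPath` and `baseParameters` properties follow ADF's pipeline JSON. The activity name, linked service name, notebook path, parameters, and the `notebook_activity` helper itself are hypothetical.

```python
import json


def notebook_activity(name, linked_service, notebook_path, parameters=None):
    """Return an ADF activity definition that runs a Databricks notebook."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        # The linked service holds the workspace URL and authentication details.
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Key/value pairs passed to the notebook as parameters.
            "baseParameters": parameters or {},
        },
    }


# Hypothetical names, for illustration only.
activity = notebook_activity(
    name="CurateSalesData",
    linked_service="AzureDatabricksLinkedService",
    notebook_path="/Shared/curate_sales",
    parameters={"load_date": "2024-01-01"},
)
print(json.dumps(activity, indent=2))
```

The printed JSON is the kind of fragment that would sit in the `activities` array of a pipeline definition; in practice it is usually authored in the ADF graphical designer instead.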

The best of Azure Databricks and Microsoft Fabric

Microsoft Fabric is a complete data and analytics platform for organizations. It integrates Data Engineering, Data Factory, Data Science, Data Warehouse, Real-Time Intelligence, and Power BI on a SaaS foundation. Microsoft Fabric includes OneLake, an open, governed, unified SaaS data lake for organizational data. Microsoft Fabric creates Delta-Parquet shortcuts to files, folders, and tables in OneLake to simplify data access. These shortcuts allow all Microsoft Fabric engines to act on data without moving or copying it, and without disrupting how the host engine uses it.

Creating a shortcut to Azure Databricks Delta Lake tables lets clients easily serve Lakehouse data to Power BI using Direct Lake mode. Power BI Premium, a core component of Microsoft Fabric, offers Direct Lake mode to serve data directly from OneLake without querying an Azure Databricks Lakehouse or warehouse endpoint. This eliminates the need to duplicate or import data into a Power BI model and enables very fast performance directly over OneLake data instead of ADLS Gen2. Unlike on other public clouds, Microsoft Azure clients can use Azure Databricks or Microsoft Fabric, both built on the Lakehouse architecture, to get the most from their data. With better development pipeline connectivity, Azure Databricks and Microsoft Fabric may simplify organisations' data journeys.

2. Regional performance and availability

Scalability and performance are strong for Azure Databricks:

Azure Databricks compute optimisation: GPU-enabled instances, jointly optimised with Databricks engineering, speed up machine learning and deep learning workloads. Azure Databricks creates about 10 million VMs daily.

Azure Databricks is available in 43 regions worldwide, and that number is growing.

3. Secure and compliant

Prioritising customer needs, Azure Databricks uses Azure's enterprise-grade security and compliance:

Azure Security Center monitors and protects Azure Databricks. It automatically collects, analyses, and integrates log data from a range of resources. Security Center displays a prioritised list of security alerts, together with the information needed to quickly investigate an attack and options for remediating it. Data can be encrypted with Azure Databricks.

Azure Databricks workloads meet regulatory standards thanks to Azure's industry-leading compliance certifications. Azure Databricks is certified for PCI-DSS (Classic) and HIPAA (Databricks SQL Serverless, Model Serving).

Only Azure offers Azure Confidential Computing (ACC). End-to-end data encryption is possible with Azure Databricks confidential computing. AMD-based Azure Confidential Virtual Machines (VMs) provide comprehensive VM encryption with no performance impact, while hardware-based Trusted Execution Environments (TEEs) encrypt data in use.

Encryption: Azure Databricks natively supports customer-managed keys from Azure Key Vault and Managed HSM. This feature enhances encryption security and control.

4. Unique partnership: Databricks and Microsoft

The unique relationship between Azure Databricks and Microsoft is a highlight. Why is it special?

Joint engineering: Databricks and Microsoft create products together for optimal integration and performance. This includes increased Azure Databricks engineering investments and dedicated Microsoft technical resources for resource providers, workspace, and Azure Infra integrations, as well as customer support escalation management.

Operations and support: Azure Databricks, a first-party solution, is only available in the Azure portal, simplifying deployment and management. Microsoft supports it under the same SLAs, security rules, and support contracts as other Azure services, ensuring speedy ticket resolution in coordination with Databricks support teams.

Azure Databricks costs can be managed transparently alongside other Azure services with unified billing.

Go-to-market and marketing: Events, funding programmes, marketing campaigns, joint customer testimonials, account planning, co-marketing, GTM collaboration, and co-sell activities between the two organisations improve customer care and support throughout the customer's data journey.

Commercial: Large strategic organizations select Microsoft for Azure Databricks sales, technical support, and partner enablement. Microsoft offers specialized sales, business development, and planning teams for Azure Databricks to suit all clients' needs globally.

Use Azure Databricks to enhance productivity

Selecting the correct data analytics platform is critical. Data professionals can boost productivity, cost savings, and ROI with Azure Databricks, a sophisticated data analytics and AI platform that is well integrated, well maintained, and secure. It is an attractive option for organisations seeking efficiency, creativity, and intelligence from their data estate, thanks to Azure's global presence, workload integration, security, compliance, and unique relationship with Microsoft.

Read more on Govindhtech.com

#microsoft#azure#azuredatabricks#MicrosoftAzure#MicrosoftFabric#OneLake#DataFactory#lakehouse#ai#technology#technews#news


Text

Visualpath offers the Best Azure Data Engineer Course online training conducted by real-time experts. Our Azure Data Engineer Course training is available in Hyderabad and is provided to individuals globally in the USA, UK, Canada, Dubai, and Australia. Contact us at +91-9989971070.

WhatsApp: https://www.whatsapp.com/catalog/919989971070

Visit: https://www.visualpath.in/azure-data-engineer-online-training.html

#freedemo#visualpathedu#dataengineeronline#azuredataengineer#Azure#onlineclasses#training#DataEngineer#softwaredevelopment#softwaretraining#DataEngineerTraining#azurelake#AzureDatabricks#AzureDataFactory#azurecloud#handsonlearning#RealTimeProjects#AzureData#AzureDataEngineering


Text

Key Features of Azure Databricks

Unified Analytics: Single platform for data engineering, data science, and analytics tasks.

Scalability: Efficiently scales to handle large datasets and varying workloads.

Performance Optimization: Optimized for high-performance data processing and analytics.

Integration with Azure Services: Seamless integration with various Azure services.

Collaboration: Shared notebooks, version control, and collaborative coding features.

Built-in Libraries: Includes libraries for machine learning, graph processing, and stream processing.

Security and Compliance: Implements security measures and complies with industry standards.

Automated Cluster Management: Streamlines cluster provisioning and management.

Azure Databricks is a powerful tool for organizations looking to streamline and optimize their big data analytics, data engineering, and machine learning workflows in the cloud.

#magistersign#onlinetraining#support#usa#AzureDatabricks#BigDataTraining#DataAnalytics#CloudTraining#DatabricksLearning#AzureTraining


Text

Greetings from Ashra Technologies. We are hiring!

#ashra#ashratechnologies#jobs#hiring#jobalert#jobsearch#jobhunt#recruiting#recruitingpost#experience#azure#azuredatabricks#azuresynapse#azuredatafactory#azurefunctions#logicapps#scala#apachespark#python#pyspark#sql#azuredevops#pune#chennai#hybrid#hybridwork#linkedin#linkedinprofessionals#linkedinlearning#linkedinads


Text

Visualpath offers the best Azure Synapse Analytics Training in Ameerpet. It is the No. 1 institute in Hyderabad providing online training classes. Our faculty has real-time experience and provides Azure Synapse training with real-time projects and placement assistance. Contact us at +91-9989971070.

whatsApp: https://www.whatsapp.com/catalog/917032290546/

VisitBlog: https://visualpathblogs.com/ Visit: https://visualpath.in/azure-synapse-python-azuredatabricks-online-training.html

#AzureSynapseAnalyticsTraining#AzureSynapseAnalyticsCoursesOnline#AzureSynapseAnalyticsonlineTraininginHyderabad#AzureSynapseTraininginHyderabad#AzureSynapseAnalyticsOnlineTraining#AzureSynapseAnalyticsTraininginHyderabad#AzureSynapseAnalyticsTraininginAmeerpet#AzureSynapseOnlineTrainingCourseHyderabad#AzureSynapseTraining.


Text

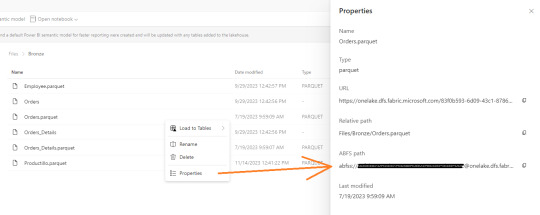

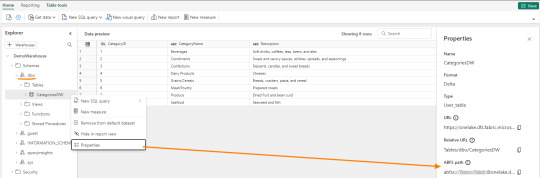

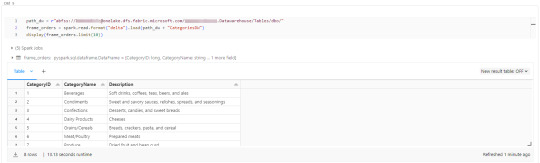

[Fabric] Read and write storage with Databricks

Many releases and tools within a single platform, bringing together both technical users (data engineers, data scientists, or data analysts) and end users. Fabric brought all of these stakeholders into a single space. That said, it does not mean we have to use each and every one of the tools it offers.

If we already have an excellent data cleansing, transformation, or processing workflow built on the hugely popular Databricks, we can keep using it.

In previous posts we mentioned that Fabric brings next-generation lake storage with an open data format. This means we can store data in the most popular file formats, and that its file system works with conventional open-source structures. In other words, we can connect to our storage from any tool that can read it. We have also shown a little of Fabric notebooks and how they ease the development experience.

In this simple tip we will see how to read from and write to our Fabric Lakehouse from Databricks.

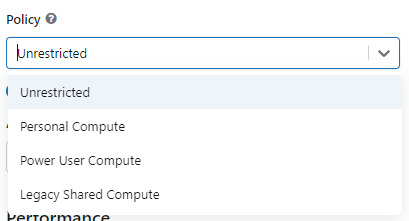

To communicate between Databricks and Fabric, the first step is to create an Azure Databricks Premium Tier resource. The second is to make sure of two things on our cluster:

1. Use an "unrestricted" or "power user compute" policy.

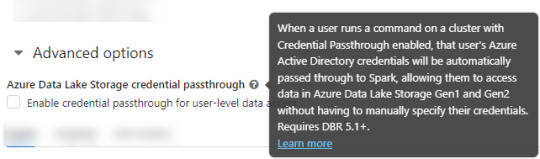

2. Make sure Databricks can pass our credentials through Spark. We can enable this in the advanced options.

NOTE: I am not going to go into more detail on cluster creation. I leave the rest of the compute options for you to explore, or I assume you already know them if you are reading this post.

With the cluster created, we will create a notebook and start reading data in Fabric. We will do this with ABFS (Azure Blob File System), an open-format addressing scheme whose driver is included in Azure Databricks.

The address should look something like the following string:

oneLakePath = 'abfss://[email protected]/myLakehouse.lakehouse/Files/'

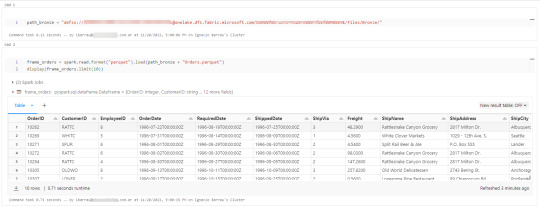

Knowing that address, we can start working as usual. Let's look at a simple notebook that reads a parquet file from a Fabric Lakehouse.

Thanks to the cluster configuration, the process is as simple as spark.read.
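The original notebook cell was posted as a screenshot, so what follows is only a minimal sketch of what such a read might look like. It assumes the documented OneLake URI form `abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<lakehouse>.lakehouse/Files/...`. The workspace name, lakehouse name, file name, and both helper functions are hypothetical placeholders, and `spark` is the session a Databricks notebook provides.

```python
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_files_path(workspace: str, lakehouse: str, relative: str = "") -> str:
    """Build the abfss:// path to the Files area of a Fabric Lakehouse."""
    return (
        f"abfss://{workspace}@{ONELAKE_HOST}/"
        f"{lakehouse}.lakehouse/Files/{relative.lstrip('/')}"
    )


def read_parquet(spark, path: str):
    """Read a Parquet file or folder; relies on credential passthrough on the cluster."""
    return spark.read.parquet(path)


# Hypothetical workspace, lakehouse, and file names, for illustration only.
bronze_path = onelake_files_path("myWorkspace", "myLakehouse", "bronze/sales.parquet")
print(bronze_path)
```

In a notebook, `df = read_parquet(spark, bronze_path)` followed by `display(df)` would then show the data.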

Writing will be just as simple.

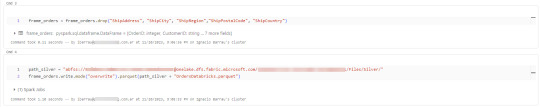

Starting with a cleanup of unnecessary columns and a simple [frame].write, we will have the clean table in silver.
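A minimal sketch of that cleanup-and-write step, assuming the result is saved in Delta format under the Lakehouse `Tables` area so that Fabric lists it as a table. The column names, the target table name, the workspace and lakehouse names, and the `write_silver` helper are all hypothetical.

```python
def write_silver(df, drop_columns, target_path):
    """Drop unneeded columns and overwrite the target location as a Delta table.

    `df` is a Spark DataFrame; the cleaned DataFrame is returned for reuse.
    """
    cleaned = df.drop(*drop_columns)
    cleaned.write.format("delta").mode("overwrite").save(target_path)
    return cleaned


# Hypothetical target location, for illustration only.
silver_path = (
    "abfss://myWorkspace@onelake.dfs.fabric.microsoft.com/"
    "myLakehouse.lakehouse/Tables/sales_silver"
)
print(silver_path)
```

In a notebook this might be called as `write_silver(df, ["_c0", "comments"], silver_path)`, where the column names depend on the data being cleaned.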

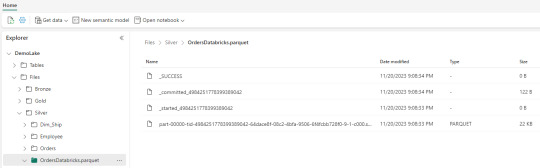

We go to Fabric and can find it in our Lakehouse.

That concludes our Databricks processing on a Fabric lakehouse, but not the article. We have not yet covered the other storage type on this blog, but we will mention what is relevant to this read.

Warehouses in Fabric are also built on a next-generation lake structure. Their main difference is that they provide a 100% SQL-based user experience, as if we were working in a database. Behind the scenes, however, we find Delta, as in a Spark catalog or metastore.

The path should look similar to this:

path_dw = "abfss://[email protected]/WarehouseName.Datawarehouse/Tables/dbo/"

Keeping in mind that Fabric expects Delta content both in its Lakehouse Spark Catalog (tables) and in its Warehouse, we read the Warehouse table in Delta format.
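The example in the original post was an image, so here is a minimal sketch of a Delta read from a Warehouse. It follows the `<WarehouseName>.Datawarehouse/Tables/dbo/` layout given in the path above and assumes the OneLake host `onelake.dfs.fabric.microsoft.com`. The workspace, warehouse, and table names and both helper functions are hypothetical.

```python
def warehouse_table_path(workspace: str, warehouse: str, table: str, schema: str = "dbo") -> str:
    """Build the abfss:// path to a table stored in a Fabric Warehouse."""
    return (
        f"abfss://{workspace}@onelake.dfs.fabric.microsoft.com/"
        f"{warehouse}.Datawarehouse/Tables/{schema}/{table}"
    )


def read_delta(spark, path: str):
    """Load a Delta table from a storage path into a Spark DataFrame."""
    return spark.read.format("delta").load(path)


# Hypothetical names, for illustration only.
customers_path = warehouse_table_path("myWorkspace", "WarehouseName", "customers")
print(customers_path)
```

In a notebook, `read_delta(spark, customers_path)` would return the table as a DataFrame.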

That now concludes our article, showing how we can use Databricks to work with Fabric storage.

#fabric#microsoftfabric#fabric cordoba#fabric jujuy#fabric argentina#fabric tips#fabric tutorial#fabric training#fabric databricks#databricks#azure databricks#pyspark


Video

youtube

SQL Performance Tuning Training Classes From SQL School

Performance Tuning Training Highlights: Basic to Advanced SQL DB Tuning Detailed Indexing Strategies Execution Plans Analysis Big Data Partitioning, Statistics Index Management, Tuning Tools Lock Management, Deadlocks Lock Hints, LIVE Locks, Query Traces Perfmon Tool, Activity Monitor Real-time Case Study: E-Commerce

Why SQL School? 100% Real-time Completely Practical Concept wise FAQs Real-time Scenarios On-Job Operations Wise Mock Interviews OLTP & DWH Tuning Big Data Loads - Tuning Interview Guidance, Resume

This Performance Tuning (Query Tuning) course is useful for:

1. SQL Developers

2. SQL DBAs

3. Azure Data Engineers

4. Power BI Data Analysts

5. BI Developers & Architects

Details at: www.sqlschool.com

Reach Us For Free Demo: [email protected] +91 9666 44 0801 (India) +1 (956) 825-0401 (USA)

You can reach your trainer directly at: https://wa.me/+919030040801

#azurecloud #AzureDataFactory #AzureDataLake #AzureDatabricks #DeltaLake #AzureDataEngineering #AzureBI #AzureAnalytics #AzureTraining #DataEngineerProjects #sqlschool


Text

Quiz Time: What tools are used in the pull request process?

Comment your answer below!

To build a successful career in Azure Administration, DevOps, or Data Engineering, take the first step by joining Azure Trainings.

The best training institute in Hyderabad

For more details, contact

Phone: +91 9882498844

Email: [email protected]

Website: https://azuretrainings.in/

#azure#azureadmin#AzureDevOps#azuredataengineer#QuizTime#pullrequestprocess#AzureTraining#SkillUp2025#courses#azuredatabricks#hyderabad#trainingacademy#kukatpallyhyderabad


Text

Creole Studios Launches Comprehensive Data Engineering Services with Azure Databricks Partnership

Creole Studios launches comprehensive data engineering services in partnership with Azure Databricks, empowering businesses to unlock insights, ensure compliance, and drive growth with expert solutions. From data strategy and consulting to advanced analytics and data quality assurance, Creole Studios offers tailored services designed to maximize the potential of your data.

#DataEngineering#AzureDatabricks#DataStrategy#AdvancedAnalytics#BigData#DataQuality#DataOps#DataGovernance#Compliance#BusinessInsights#CreoleStudios


Link

Features of Azure Databricks integration

Look at this architecture to understand how Azure Databricks can be an invaluable tool for your organization when optimized. We can help you do so with custom Azure Databricks solutions & consulting.

Highlighting some Key Features of Azure Databricks integration:

✔Apache Spark environment

✔Analytics for all

✔Choice of language

✔Interactive Workspace

✔Native Integrations

✔Security

We can help you design, create, and implement a new solution or revamp an existing one. Contact us today


Text

youtube

Azure Data Engineer Online Recorded Demo Video

Mode of Training: Online

Contact +91-9989971070

Visit: https://visualpath.in/

WhatsApp: https://www.whatsapp.com/catalog/91998997107

Subscribe Visualpath channel https://youtu.be/sg_rnfEEIsw?si=HiqASlu0kLCzSgvw

Watch demo video@ https://youtu.be/YPLOg1NIEUY?si=GkVD4-i_hV3TYGlo

#azuredataengineer#Azure#visualpathedu#onlineclasses#training#DataEngineer#softwaredevelopment#softwaretraining#DataEngineerTraining#azurelake#AzureDatabricks#AzureDataFactory#azurecloud#handsonlearning#RealTimeProjects#AzureData#AzureDataEngineering#Youtube


Photo

for more details contact : +91 9989445901 \ +91 9502131327


Text

youtube

Mode of Training: Online

Contact us: +91 9989971070.

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit Blog: https://visualpathblogs.com/

Visit: https://www.visualpath.in/online-azuredatabricks-training.html

To subscribe to the Visualpath channel & get regular updates on further courses: https://www.youtube.com/@VisualPath

Watch demo video@ https://youtu.be/UL3HPRt6dbo?si=rAq3kcq8QuspGrvJ

#Azure Data Bricks training#Azure Data Bricks Online#Azure Data Bricks Online Training#Azure Data Bricks Course#Azure Data Bricks Training in Hyderabad#Azure Data Bricks Online Training in Hyderabad#Azure Data Bricks Certification Training#Youtube


Video

youtube

In this Azure Databricks tutorial for beginners, you will learn what Azure Databricks is, why we need it, how it works, the various Databricks offerings available, and how to integrate Azure Databricks with Azure Blob Storage in detail.
