#databricks


Text

Data Dose of the Day: Day 4

🔹 Tip: Choose optimal partitioning strategies when working with big data (e.g., Parquet/Delta).

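🔸 Why: The right partitioning lets queries skip irrelevant files, which means faster reads and smaller scans. Partition by low-cardinality fields that your queries actually filter on (like event_date or region); high-cardinality keys tend to produce many tiny files.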
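To make the tip concrete, here is a minimal sketch of a Hive-style partitioned layout and the pruning it allows. It uses only the Python standard library and CSV files rather than Parquet or Delta, so it illustrates the idea and is not how Spark or Delta Lake implement it. The helper names `write_partitioned` and `read_with_pruning` and the sample `events` rows are made up for this example.

```python
import csv
import tempfile
from pathlib import Path


def write_partitioned(rows, root, partition_col):
    """Write rows to root/<partition_col>=<value>/part-0.csv (Hive-style layout)."""
    buckets = {}
    for row in rows:
        buckets.setdefault(row[partition_col], []).append(row)
    for value, group in buckets.items():
        part_dir = Path(root) / f"{partition_col}={value}"
        part_dir.mkdir(parents=True, exist_ok=True)
        with open(part_dir / "part-0.csv", "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=list(group[0].keys()))
            writer.writeheader()
            writer.writerows(group)


def read_with_pruning(root, partition_col, wanted):
    """Open only the matching partition directory; return (rows, files_opened)."""
    rows, files_opened = [], 0
    for part_dir in sorted(Path(root).iterdir()):
        if part_dir.name != f"{partition_col}={wanted}":
            continue  # pruned: files in this directory are never opened
        for path in sorted(part_dir.glob("*.csv")):
            files_opened += 1
            with open(path, newline="") as f:
                rows.extend(csv.DictReader(f))
    return rows, files_opened


# Hypothetical sample data: three distinct dates, so three partition directories.
events = [
    {"event_date": "2024-01-01", "region": "eu", "user_id": "1"},
    {"event_date": "2024-01-02", "region": "eu", "user_id": "2"},
    {"event_date": "2024-01-02", "region": "us", "user_id": "3"},
    {"event_date": "2024-01-03", "region": "us", "user_id": "4"},
]

with tempfile.TemporaryDirectory() as tmp:
    write_partitioned(events, tmp, "event_date")
    matched, opened = read_with_pruning(tmp, "event_date", "2024-01-02")
    print(f"rows matched: {len(matched)}, files opened: {opened}")
```

A filter on the partition column touches one directory instead of all three. Partitioning the same data by `user_id` would create one directory per user, which is the many-small-files problem the tip warns about.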


Text

Move over, Salesforce and Microsoft! Databricks is shaking things up with their game-changing AI/BI tool. Get ready for smarter, faster insights that leave the competition in the dust.

Who's excited to see what this powerhouse can do?


Text

Top 10 Predictive Analytics Tools to Strive in 2024

Predictive analytics has become a crucial tool for businesses, thanks to its ability to forecast key metrics like customer retention, ROI growth, and sales performance. The adoption of predictive analytics tools is growing rapidly as businesses recognize their value in driving strategic decisions. According to Statista, the global market for predictive analytics tools is projected to reach $41.52 billion by 2028, highlighting its increasing importance.

What Are Predictive Analytics Tools?

Predictive analytics tools are essential for managing supply chains, understanding consumer behavior, and optimizing business operations. They help organizations assess their current position and make informed decisions for future growth. Tools like Tableau, KNIME, and Databricks offer businesses a competitive advantage by transforming raw data into actionable insights. By identifying patterns within historical data, these tools enable companies to forecast trends and implement effective growth strategies. For example, many retail companies use predictive analytics to improve inventory management and enhance customer experiences.

Top 10 Predictive Analytics Tools

SAP: Known for its capabilities in supply chain, logistics, and inventory management, SAP offers an intuitive interface for creating interactive visuals and dashboards.

Alteryx: This platform excels in building data models and offers a low-code environment, making it accessible to users with limited coding experience.

Tableau: Tableau is favored for its data processing speed and user-friendly interface, which allows for the creation of easy-to-understand visuals.

Amazon QuickSight: A cloud-based service, QuickSight offers a low-code environment for automating tasks and creating interactive dashboards.

Altair AI Studio: Altair provides robust data mining and predictive modeling capabilities, making it a versatile tool for business intelligence.

IBM SPSS: Widely used in academia and market research, SPSS offers a range of tools for statistical analysis with a user-friendly interface.

KNIME: This open-source tool is ideal for data mining and processing tasks, and it supports machine learning and statistical analysis.

Microsoft Azure: Azure offers a comprehensive cloud computing platform with robust security features and seamless integration with Microsoft products.

Databricks: Built on Apache Spark, Databricks provides a collaborative workspace for data processing and machine learning tasks.

Oracle Data Science: This cloud-based platform supports a wide range of programming languages and frameworks, offering a collaborative environment for data scientists.

Conclusion

As businesses continue to embrace digital transformation, predictive analytics tools are becoming increasingly vital. Companies looking to stay competitive should carefully select the right tools to harness the full potential of predictive analytics in today’s business landscape.

#databricks#oracle data science#sap#alteryx#microsoft#microsoft azure#knime#ibm spss#altair studio#amazon quick sight


Text

#dataengineer#onlinetraining#freedemo#cloudlearning#azuredatlake#Databricks#azuresynapse#AzureDataFactory#Azure#SQL#MySQL#NewTechnolgies#software#softwaredevelopment#visualpathedu#onlinecoaching#ADE#DataLake#datalakehouse#AzureDataEngineering


Text

Navigating the Data Landscape: A Deep Dive into ScholarNest's Corporate Training

In the ever-evolving realm of data, mastering the intricacies of data engineering and PySpark is paramount for professionals seeking a competitive edge. ScholarNest's Corporate Training offers an immersive experience, providing a deep dive into the dynamic world of data engineering and PySpark.

Unlocking Data Engineering Excellence

Embark on a journey to become a proficient data engineer with ScholarNest's specialized courses. Our Data Engineering Certification program is meticulously crafted to equip you with the skills needed to design, build, and maintain scalable data systems. From understanding data architecture to implementing robust solutions, our curriculum covers the entire spectrum of data engineering.

Pioneering PySpark Proficiency

Navigate the complexities of data processing with PySpark, the Python API for Apache Spark. ScholarNest's PySpark course, hailed as one of the best online, caters to both beginners and advanced learners. Explore the full potential of PySpark through hands-on projects, gaining practical insights that can be applied directly in real-world scenarios.

Azure Databricks Mastery

As part of our commitment to offering the best, our courses also cover Azure Databricks. Azure Databricks, which integrates with other Azure services, is a pivotal tool in the modern data landscape. ScholarNest ensures that you not only understand its functionality but can also apply it effectively to solve complex data challenges.

Tailored for Corporate Success

ScholarNest's Corporate Training goes beyond generic courses. We tailor our programs to meet the specific needs of corporate environments, ensuring that the skills acquired align with industry demands. Whether you are aiming for data engineering excellence or mastering PySpark, our courses provide a roadmap for success.

Why Choose ScholarNest?

Best PySpark Course Online: Our PySpark courses are recognized for their quality and depth.

Expert Instructors: Learn from industry professionals with hands-on experience.

Comprehensive Curriculum: Covering everything from fundamentals to advanced techniques.

Real-world Application: Practical projects and case studies for hands-on experience.

Flexibility: Choose courses that suit your level, from beginner to advanced.

Navigate the data landscape with confidence through ScholarNest's Corporate Training. Enrol now to embark on a learning journey that not only enhances your skills but also propels your career forward in the rapidly evolving field of data engineering and PySpark.

#data engineering#pyspark#databricks#azure data engineer training#apache spark#databricks cloud#big data#dataanalytics#data engineer#pyspark course#databricks course training#pyspark training


Text

Streaming Analytics with Azure Databricks, Event Hub, and Delta Lake: A Step-by-Step Demo

In this video, we’ll show you how to build a real-time data pipeline for advanced analytics using Azure Databricks, Event Hub, and … source


Text

🚀 Azure Data Engineer Online Training – Build Your Cloud Data Career with VisualPath!

Step confidently into one of the most in-demand IT roles with VisualPath’s Azure Data Engineer Course Online. Whether you’re a fresher, a working professional, or an enterprise team seeking corporate upskilling, this practical program will help you master the skills to design, develop, and manage scalable data solutions on Microsoft Azure.

💡 Key Skills You’ll Gain:

🔹 Azure Data Factory – Create and automate robust data pipelines

🔹 Azure Databricks – Handle big data and deliver real-time analytics

🔹 Power BI – Build interactive dashboards and data visualizations

📞 Reserve Your FREE Demo Spot Today – Limited Seats Available!

📲 WhatsApp Now: https://wa.me/c/917032290546

🔗 Visit: https://www.visualpath.in/online-azure-data-engineer-course.html

📖 Blog: https://visualpathblogs.com/category/azure-data-engineering/

#visualpathedu#Azure#AzureDataEngineer#MicrosoftAzure#AzureCloud#DataEngineering#CloudComputing#AzureTraining#AzureCertification#BigData#ETL#SQL#PowerBI#Databricks#AzureDataFactory#DataAnalytics#CloudEngineer#MachineLearning#AI#BusinessIntelligence#Snowflake#AzureDataScience


Text

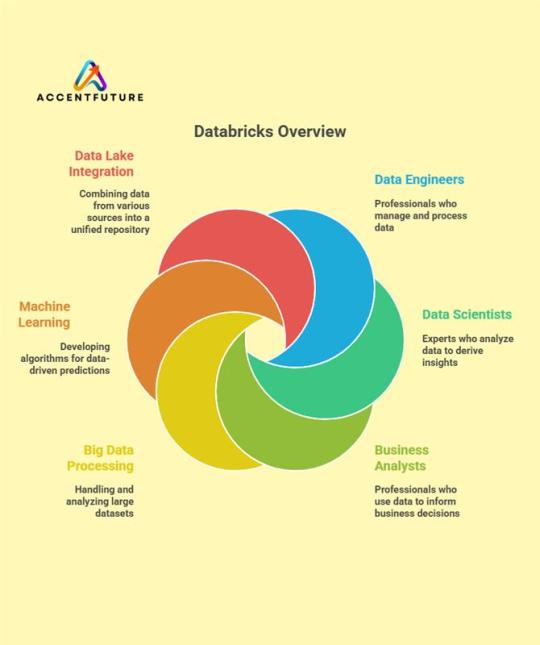

Discover how Databricks empowers organizations through a unified data ecosystem! This visual breaks down the core components and roles:

🔴 Data Lake Integration – Centralizing data from various sources

🔵 Data Engineers – Managing and processing large volumes of data

🟢 Data Scientists – Deriving insights through data analysis

🟡 Business Analysts – Using data to drive smart business decisions

🟠 Big Data Processing – Handling and analyzing massive datasets

🟤 Machine Learning – Building algorithms for predictive analytics

Whether you're a beginner or an experienced data professional, understanding these roles helps you leverage Databricks to its full potential. 🚀

#Databricks#DataScience#MachineLearning#BigData#DataEngineers#Accentfuture#BusinessAnalytics#DataLake


Text

Uncovering the Real ROI: How Data Science Services Are Driving Competitive Advantage in 2025

Introduction

What if you could predict your customer’s next move, optimize every dollar spent, and uncover hidden growth levers—all from data you already own? In 2025, the real edge for businesses doesn’t come from owning the most data, but from how effectively you use it. That’s where data science services come in.

Too often, companies pour resources into data collection and storage without truly unlocking its value. The result? Data-rich, insight-poor environments that frustrate leadership and slow innovation. This post is for decision-makers and analytics leads who already know the fundamentals of data science but need help navigating the growing complexity and sophistication of data science services.

Whether you’re scaling a data team, outsourcing to a provider, or rethinking your analytics strategy, this blog will help you make smart, future-ready choices. Let’s break down the trends, traps, and tangible strategies for getting maximum impact from data science services.

Section 1: The Expanding Scope of Data Science Services in 2025

Gone are the days when data science was just about modeling customer churn or segmenting audiences. Today, data science services encompass everything from real-time anomaly detection to predictive maintenance, AI-driven personalization, and prescriptive analytics for operational decisions.

Predictive & Prescriptive Modeling: Beyond simply forecasting, top-tier data science service providers now help businesses simulate outcomes and optimize strategies with scenario analysis.

AI-Driven Automation: From smart inventory management to autonomous marketing, data science is fueling a new level of automation.

Real-Time Analytics: With the rise of edge computing and faster data streams, businesses expect insights in seconds, not days.

Embedded Analytics: Service providers are helping companies build intelligence directly into products, not just dashboards.

These services now touch nearly every business function—HR, operations, marketing, finance—with increasingly sophisticated tools and technologies.

Section 2: Choosing the Right Data Science Services Partner

Selecting the right partner is arguably more critical than the tools themselves. A good fit ensures strategic alignment, faster time to value, and fewer rework cycles.

Domain Expertise: Don’t just look for technical brilliance. Look for providers who understand your industry’s unique metrics, workflows, and regulations.

Tech Stack Compatibility: Ensure your provider is fluent in your existing environment—whether it’s Snowflake, Databricks, Azure, or open-source tools.

Customization vs. Standardization: The best data science services strike a balance between reusable IP and tailored solutions.

Transparency & Collaboration: Look for vendors who co-build with your internal teams, not just ship over-the-wall solutions.

Real-World Example: A retail chain working with a generic vendor struggled with irrelevant models. Switching to a vertical-focused data science services provider with retail-specific datasets improved demand forecasting accuracy by 22%.

Section 3: Common Pitfalls That Derail Data Science Projects

Despite strong intent, many data science initiatives still fail to deliver ROI. Here are common traps and how to avoid them:

Lack of a Clear Business Goal: Many teams jump into modeling without aligning on the problem statement or success metrics.

Dirty or Incomplete Data: If your foundational data layers are unstable, no algorithm can fix the problem.

Overemphasis on Accuracy: A highly accurate model that no one uses is worthless. Focus on adoption, interpretability, and stakeholder buy-in.

Skills Gap: Without a strong bridge between data scientists and business users, insights never make it into workflows.

Solution: The best data science services include data engineers, business translators, and UI/UX designers to ensure end-to-end delivery.

Section 4: Unlocking Hidden Opportunities with Advanced Analytics

In 2025, forward-thinking firms are using data science services not just for problem-solving, but for uncovering growth levers:

Customer Lifetime Value Optimization: Predictive models that help decide how much to spend and where to focus retention.

Dynamic Pricing: Real-time adjustment based on demand, inventory, and competitor moves.

Fraud Detection & Risk Management: ML models can now flag anomalies within seconds, preventing millions in losses.

ESG & Sustainability Metrics: Data science is enabling companies to report and optimize environmental and social impact.

Real-World Use Case: A logistics firm used data science services to optimize delivery routes using real-time weather, traffic, and vehicle condition data, reducing fuel costs by 19%.

Section 5: How to Future-Proof Your Data Science Strategy

Data science isn’t a one-time investment—it’s a moving target. To remain competitive, your strategy must evolve.

Invest in Data Infrastructure: Cloud-native platforms, version control for data, and real-time pipelines are now baseline requirements.

Prioritize Model Monitoring: Drift happens. Build feedback loops to track model accuracy and retrain when needed.

Embrace Responsible AI: Ensure fairness, explainability, and data privacy compliance in all your models.

Build a Culture of Experimentation: Foster a test-learn-scale mindset across teams to embrace insights-driven decision-making.
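As one illustration of the model-monitoring point above, the sketch below tracks accuracy over a rolling window of recent predictions and flags when a retrain may be worth considering. It is a minimal example under stated assumptions, not a production monitoring setup: the class name `AccuracyMonitor`, the window size, and the threshold are all hypothetical, and real systems often track input drift as well as accuracy.

```python
from collections import deque


class AccuracyMonitor:
    """Rolling-window accuracy tracker with a simple retrain signal."""

    def __init__(self, window=100, threshold=0.8):
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, predicted, actual):
        """Store whether a prediction matched the label that arrived later."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retrain(self):
        """Signal a retrain only once the window is full and accuracy is low."""
        acc = self.accuracy()
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return acc is not None and window_full and acc < self.threshold


monitor = AccuracyMonitor(window=4, threshold=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_retrain())
```

Waiting for a full window before signalling avoids reacting to the first few labelled outcomes, at the cost of a slower response when accuracy drops.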

Checklist for Evaluating Data Science Service Providers:

Do they offer multi-disciplinary teams (data scientists, engineers, analysts)?

Can they show proven case studies in your industry?

Do they prioritize ethics, security, and compliance?

Will they help upskill your internal teams?

Conclusion

In 2025, businesses can’t afford to treat data science as an experimental playground. It’s a strategic function that drives measurable value. But to realize that value, you need more than just data scientists—you need the right data science services partner, infrastructure, and mindset.

When chosen wisely, these services do more than optimize KPIs—they uncover opportunities you didn’t know existed. Whether you’re trying to grow smarter, serve customers better, or stay ahead of risk, the right partner can be your unfair advantage.

If you’re ready to take your analytics game from reactive to proactive, it may be time to evaluate your current data science service stack.

#DataScience2025#FutureOfAnalytics#AdvancedAnalytics#AITransformation#MachineLearningModels#PredictiveAnalytics#PrescriptiveAnalytics#RealTimeData#EdgeComputing#DataDrivenDecisions#RetailAnalytics#SupplyChainOptimization#SmartLogistics#CustomerInsights#DynamicPricing#FraudDetection#SaaSAnalytics#MarketingAnalytics#ESGAnalytics#HRAnalytics#DataEngineering#MLOps#SnowflakeDataCloud#AzureDataServices#Databricks#BigQuery#PythonDataScience#CloudDataPlatform#DataPipelines#ModelMonitoring


Text

Enterprises are embracing Hadoop to Databricks migration to improve performance and scalability and to reduce operational overhead. Migrating to Databricks enables organizations to streamline their data infrastructure, improve processing efficiency, and scale more easily. Many businesses report shorter runtimes and better efficiency after this migration. Check out our Exadata to Databricks migration case study to see how organizations have successfully reduced runtime and improved overall performance. Ready to optimize your data infrastructure? Learn more about how Hadoop to Databricks migration can take your business to the next level.

#HadoopToDatabricksMigration#DataOptimization#CloudMigration#Databricks#PerformanceImprovement#DataEfficiency


Text

youtube

Databricks Metrics - create a semantic layer and improve data engineering

Stop hard-coding business numbers: use Metrics Views in Databricks! 📊 Move magic numbers like 4 / 5 / 7 out of your code, store them as governed measures, and let the business own the updates, all inside Unity Catalog.

🪄 How to set it up (60 sec):

1️⃣ CREATE METRICS VIEW – write a simple SQL CREATE METRICS VIEW … AS SELECT that defines each measure.

2️⃣ REGISTER IN UNITY CATALOG – add the view to the catalog so lineage, ACLs, and search work automatically.

3️⃣ LET THE BUSINESS EDIT – analysts can update those metrics right in the UC UI; your pipelines pick up changes instantly.

Result: cleaner code, single source of truth, and zero last-minute “change the constant!” panics. 🚀

📚 Learn More

End the Data-Engineering Nightmare with Metrics (deep dive & sample SQL): https://ift.tt/xP4cZeS

Databricks Metrics Views: SQL Analytics Guide (Sunny Data blog): https://ift.tt/l0cQngH

🔔 Subscribe for weekly Lakehouse shorts: https://www.youtube.com/@hubert_dudek/?sub_confirmation=1

☕ Support the channel: https://ift.tt/407CdkM

🔎 Related Phrases / Tags: databricks metrics view, unity catalog metrics, databricks measures, hard-coded numbers, data engineering best practices, governed metrics, databricks sql tutorial, create metrics view databricks, business logic decoupling, databricks unity catalog tutorial, lakehouse governance, data observability, metrics view demo, databricks short, data pipeline tips

via databricks by Hubert Dudek https://www.youtube.com/channel/UCR99H9eib5MOHEhapg4kkaQ July 07, 2025 at 03:59PM
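The core idea in the post above, moving magic numbers out of pipeline code into one governed definition, can be sketched in plain Python. This is only an analogy and does not use any Databricks or Unity Catalog API: `Measure`, `REGISTRY`, and `count_high_ratings` are hypothetical names, and the in-memory dictionary stands in for whatever governed store holds the definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """A named business threshold with an owner (hypothetical structure)."""
    name: str
    threshold: int
    owner: str


# Hypothetical central registry standing in for a governed metrics store.
REGISTRY = {
    "high_rating": Measure(name="high_rating", threshold=4, owner="analytics-team"),
}


def count_high_ratings(ratings, registry=REGISTRY):
    """Count ratings at or above the governed cutoff instead of a hard-coded 4."""
    cutoff = registry["high_rating"].threshold
    return sum(1 for r in ratings if r >= cutoff)


print(count_high_ratings([3, 4, 5]))
```

Because the pipeline function reads the cutoff at call time, changing the definition in the registry changes the result without editing the pipeline code.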

#databricks#dataengineering#machinelearning#sql#dataanalytics#ai#databrickstutorial#databrickssql#databricksai#Youtube


Text

Databricks Delta Live Tables (DLT) with Demo

https://youtu.be/gySjb0rITf4


Text

SAP and Databricks Forge Strategic Alliance to Accelerate Enterprise AI Innovation

Royal Cyber is excited to highlight the groundbreaking partnership between SAP and Databricks, announced in their recent press release. This collaboration is set to redefine how enterprises leverage AI, data analytics, and cloud technologies to drive digital transformation.

Why This Partnership Matters

Seamless Data Integration: The alliance bridges SAP’s industry-leading ERP solutions with Databricks’ unified data analytics platform, enabling businesses to harness real-time insights from their SAP data.

AI & Machine Learning at Scale: Enterprises can now accelerate AI-driven decision-making by combining SAP’s structured business data with Databricks’ advanced AI/ML capabilities.

Accelerated Cloud Modernization: Joint customers can unlock the full potential of their data across hybrid and multi-cloud environments, ensuring agility and scalability.

Key Takeaways for Businesses

✔ Enhanced Analytics: Deeper integration between SAP and Databricks simplifies complex data workflows.

✔ Faster AI Adoption: Pre-built connectors and optimized pipelines reduce time-to-value for AI projects.

✔ Future-Ready Enterprises: A unified approach to data and AI empowers organizations to stay competitive in a data-driven world.

As a trusted partner for SAP and Databricks solutions, Royal Cyber is ready to help businesses maximize this alliance—whether through migration, integration, or AI strategy development.

Read the full announcement here, and let us know how we can support your AI and cloud journey!


Text

Azure Databricks Interview Questions 2025 [WITH REAL-TIME SCENARIOS]

Databricks | PySpark | Azure | Databricks Interview Questions In this video, I’ll take you through latest Databricks interview … source


Text

🚀 Master Databricks for a Future-Proof Data Engineering Career!

Looking to accelerate your career in data engineering? Our latest blog dives deep into expert strategies for mastering Databricks—focusing purely on data engineering, not data science!

✅ Build real-time & batch data pipelines

✅ Work with Apache Spark & Delta Lake

✅ Automate workflows with Airflow & Databricks Jobs

✅ Learn performance tuning, CI/CD, and cloud integrations

Start your journey with AccentFuture’s expert-led Databricks Online Training and get hands-on with tools used by top data teams.

📖 Read the full article now and take your engineering skills to the next level! 👉 www.accentfuture.com

#Databricks#DataEngineering#ApacheSpark#DeltaLake#BigData#ETL#DatabricksTraining#AccentFuture#CareerGrowth
