#atomic force microscope manufacturer


Revolutionary X-ray microscope unveils sound waves deep within crystals

Researchers at the Department of Energy's SLAC National Accelerator Laboratory, Stanford University, and Denmark Technical University have designed a cutting-edge X-ray microscope capable of directly observing sound waves at the tiniest of scales: the lattice level within a crystal. These findings, published last week in Proceedings of the National Academy of Sciences, could change the way scientists study ultrafast changes in materials and the resulting properties. "The atomic structure of crystalline materials gives rise to their properties and associated 'use-case' for an application," said one of the researchers, Leora Dresselhaus-Marais, an assistant professor at Stanford and SLAC. "The crystalline defects and atomic scale displacements describe why some materials strengthen while others shatter in response to the same force. Blacksmiths and semiconductor manufacturing have perfected our ability to control some types of defects, however, few techniques today can image these dynamics in real-time at the appropriate scales to resolve how those distortions connect to the bulk properties."


#Materials Science#Science#X Rays#Microscopy#Sound#Crystal structure#Defects#Materials characterization


For developing designers, there’s magic in 2.737 (Mechatronics)


The field of mechatronics is multidisciplinary and interdisciplinary, occupying the intersection of mechanical systems, electronics, controls, and computer science. Mechatronics engineers work in a variety of industries — from space exploration to semiconductor manufacturing to product design — and specialize in the integrated design and development of intelligent systems. For students wanting to learn mechatronics, it might come as a surprise that one of the most powerful teaching tools available for the subject matter is simply a pen and a piece of paper.

“Students have to be able to work out things on a piece of paper, and make sketches, and write down key calculations in order to be creative,” says MIT professor of mechanical engineering David Trumper, who has been teaching class 2.737 (Mechatronics) since he joined the Institute faculty in the early 1990s. The subject is electrical and mechanical engineering combined, he says, but more than anything else, it’s design.

“If you just do electronics, but have no idea how to make the mechanical parts work, you can’t find really creative solutions. You have to see ways to solve problems across different domains,” says Trumper. “MIT students tend to have seen lots of math and lots of theory. The hands-on part is really critical to build that skill set; with hands-on experiences they’ll be more able to imagine how other things might work when they’re designing them.”


Audrey Cui ’24, now a graduate student in electrical engineering and computer science, confirms that Trumper “really emphasizes being able to do back-of-the-napkin calculations.” This simplicity is by design, and the critical thinking it promotes is essential for budding designers.

“Sitting behind a computer terminal, you’re using some existing tool in the menu system and not thinking creatively,” says Trumper. “To see the trade-offs, and get the clutter out of your thinking, it helps to work with a really simple tool — a piece of paper and, hopefully, multicolored pens to code things — you can design so much more creatively than if you’re stuck behind a screen. The ability to sketch things is so important.”

Trumper studies precision mechatronics, broadly, with a particular interest in mechatronic systems for demanding resolutions. Examples include projects that employ magnetic levitation, linear motors for driving precision manufacturing for semiconductors, and spacecraft attitude control. His work also explores lathes, milling applications, and even bioengineering platforms.

Class 2.737, which is offered every two years, is lab-based. Sketches and concepts come to life in focused experiences designed to expose students to key principles in a hands-on way and are very much informed by what Trumper has found important in his research. The two-week-long lab explorations range from controlling a motor to evaluating electronic scales to vibration isolation systems built on a speaker. One year, students constructed a working atomic force microscope.

“The touch and sense of how things actually work is really important,” Trumper says. “As a designer, you have to be able to imagine. If you think of some new configuration of a motor, you need to imagine how it would work and see it working, so you can do design iterations in your imagined space — to make that real requires that you’ve had experience with the actual thing.”

He says his late colleague, Woodie Flowers SM ’68, MEng ’71, PhD ’73, used to call it “running the movie.” Trumper explains, “once you have the image in your mind, you can more easily picture what’s going on with the problem: what’s getting hot, where’s the stress, what do I like and not like about this design. If you can do that with a piece of paper and your imagination, now you design new things pretty creatively.”

Flowers had been the Pappalardo Professor Emeritus of Mechanical Engineering at the time of his passing in October 2019. He is remembered for pioneering approaches to education, and was instrumental in shaping MIT’s hands-on approach to engineering design education.

Class 2.737 tends to attract students who like to design and build their own things. “I want people who are heading toward being hardware geeks,” says Trumper, laughing. “And I mean that lovingly.” He says his most important objective for this class is that students learn real tools that they will find useful years from now in their own engineering research or practice.

“Being able to see how multiple pieces fit in together and create one whole working system is just really empowering to me as an aspiring engineer,” says Cui.

For fellow 2.737 student Zach Francis, the course offered foundations for the future along with a meaningful tie to the past. “This class reminded me about what I enjoy about engineering. You look at it when you’re a young kid and you’re like ‘that looks like magic!’ and then as an adult you can now make that. It’s the closest thing I’ve been to a wizard, and I like that a lot.”

#applications#approach#atomic#bioengineering#Classes and programs#code#computer#Computer Science#Computer science and technology#course#Design#designers#development#domains#driving#education#Electrical Engineering&Computer Science (eecs)#electronic#Electronics#Engineer#engineering#engineers#Faculty#flowers#Future#hands-on#Hardware#how#how to#Industries


Transplant Diagnostics Market Size, Share, Growth, Trends [2032]

The Transplant Diagnostics Market report provides an in-depth analysis of the market state of Transplant Diagnostics manufacturers, including key facts and figures, an overview, a definition, SWOT analysis, expert opinions, and the most current global developments. The research also calculates market size, price, revenue, cost structure, gross margin, sales, and market share, as well as forecasts and growth rates. The report helps determine the revenue earned from sales of this technology across different application areas.

Geographically, this report is segmented into several key regions, covering the sales, revenue, market share, and growth rate of Transplant Diagnostics in each region through the forecast period:

North America

Middle East and Africa

Asia-Pacific

South America

Europe

Key Highlights of the Transplant Diagnostics Market Report:

The report offers a comprehensive and broad perspective on the global Transplant Diagnostics Market.

The market statistics presented for the different Transplant Diagnostics segments offer a complete picture of the industry.

Market growth drivers and the challenges affecting the development of Transplant Diagnostics are analyzed in detail.

The report will help in analyzing the major competitive market scenarios and market dynamics of Transplant Diagnostics.

A study of major stakeholders, key Transplant Diagnostics companies, investment feasibility, and new market entrants is offered.

The development scope of Transplant Diagnostics in each market segment is covered in this report. The macroeconomic and microeconomic factors affecting the advancement of the Transplant Diagnostics Market are elaborated in this report. The upstream and downstream components of Transplant Diagnostics and a comprehensive value chain are explained.

Browse more details on this report at https://www.globalgrowthinsights.com/market-reports/transplant-diagnostics-market-100551

Global Growth Insights

Web: https://www.globalgrowthinsights.com



Elevate Your Research with the Best Physics Lab Equipment Manufacturers

Are you looking to push the boundaries of scientific discovery? To unlock the mysteries of the universe? The quality of your research depends heavily on the tools at your disposal. When it comes to physics, precision, reliability, and innovation are paramount. That's why partnering with the best lab equipment manufacturer is crucial.

Why Quality Lab Equipment Matters

In the realm of physics, where experiments often hinge on minute details and intricate measurements, the significance of high-quality lab equipment cannot be overstated. Whether you're studying quantum mechanics, electromagnetism, or astrophysics, the accuracy and reliability of your instruments directly impact the validity and reproducibility of your results.

Key Considerations in Choosing a Manufacturer

1. Precision Engineering: Look for manufacturers known for their precision engineering. Instruments must be built to exacting standards to ensure accurate measurements and dependable performance.

2. Durability and Reliability: Physics experiments can be rigorous, subjecting equipment to extreme conditions. Choose equipment known for its durability and reliability to withstand the demands of your research.

3. Innovation and Technology: Advancements in physics often stem from breakthroughs in instrumentation. Partner with manufacturers at the forefront of technological innovation to access cutting-edge equipment that can propel your research forward.

4. Customization Options: Every research project is unique, with its own set of requirements. Opt for manufacturers who offer customization options, allowing you to tailor equipment to your specific needs.

5. Support and Service: Beyond the initial purchase, consider the level of support and service offered by the manufacturer. Timely maintenance, technical support, and readily available spare parts are essential for uninterrupted research.

Leading Physics Lab Equipment Manufacturers

1. Keysight Technologies: Renowned for its precision measurement solutions, Keysight Technologies offers a comprehensive range of instruments for fundamental research in physics, including signal analyzers, oscilloscopes, and spectrum analyzers.

2. Thorlabs: Specialising in photonics and optomechanics, Thorlabs provides high-quality equipment for applications ranging from quantum optics to laser spectroscopy.

3. Newport Corporation: With a focus on precision motion control and optical technologies, Newport Corporation offers a diverse portfolio of products ideal for experimental setups in atomic, molecular, and optical physics.

4. Bruker Corporation: Known for its advanced analytical instrumentation, Bruker Corporation provides cutting-edge solutions for materials research and nanotechnology, including atomic force microscopes and scanning electron microscopes.

5. Lake Shore Cryotronics: For researchers exploring low-temperature phenomena, Lake Shore Cryotronics offers a range of cryogenic and temperature measurement instruments crucial for condensed matter physics and quantum computing.

Conclusion

In the pursuit of scientific discovery, the right tools can make all the difference. By partnering with the best physics lab equipment manufacturers, you can elevate your research to new heights, unlocking the mysteries of the universe and driving innovation in the field of physics. Choose wisely, invest in quality, and embark on a journey of exploration and discovery that knows no bounds.

For more information, visit us: https://supertekedu.com/physics/
Mail us: [email protected]
Call us: (+91-171-2699537 / 2699297)
Location: 23, Industrial Estate, Ambala Cantt-133006


Exploring Laboratory Testing Equipment: Tools for Precision and Accuracy

Laboratory testing equipment plays a very important role in scientific research, quality control, and product development across various industries. From understanding chemical compositions and physical properties to detecting contaminants and ensuring compliance with regulatory standards, specialized tools are essential for conducting accurate and reliable testing procedures. Before you invest in such tools, check the melt flow indexer price in UAE and confirm that it fits your budget.

Basics of Laboratory Testing Equipment in UAE

Laboratory testing equipment includes a wide range of instruments, devices, and apparatus used to measure and evaluate the properties and characteristics of materials, substances, and samples. These tools are used in laboratories, research facilities, manufacturing plants, and quality control laboratories to conduct experiments, perform analyses, and validate the safety and quality of products. If you want to buy a melt flow indexer, check the melt flow indexer price in UAE first.

Different types of Laboratory Testing equipment in UAE

Different types of laboratory testing equipment are specially designed for specific applications and testing requirements.

Analytical instruments are used to determine the chemical composition, structure, and properties of substances. Common examples include spectrometers, chromatographs, and mass spectrometers.

Physical testing equipment measures the physical properties and characteristics of materials, such as hardness, strength, and density. Examples include tensile testers and viscometers.

Environmental testing equipment is used to understand the impact of environmental factors on materials, products, and ecosystems. Examples include temperature chambers, humidity chambers, and environmental chambers.

Microscopic equipment is used to examine and analyze materials at the microscopic level. Examples include light microscopes, electron microscopes, and atomic force microscopes. You can check the melt flow indexer price in UAE if you plan to buy any equipment here.

Importance of Laboratory Testing Equipment in UAE

Laboratory testing equipment plays a very important role in scientific research, quality assurance, and regulatory compliance across different industries.

Laboratory testing equipment provides accurate results, so you can make informed decisions and draw meaningful conclusions based on empirical data.

Laboratory testing equipment is very important for maintaining quality control and assurance in the manufacturing process. It ensures that your products meet the required specifications, standards, and regulatory requirements.

Laboratory testing equipment allows you to develop new products, materials, and technologies by conducting experiments and identifying opportunities for improvement and innovation.

Additionally, laboratory testing equipment is used to understand the environmental impact of industrial processes. You can monitor air and water quality and ensure compliance with environmental regulations and safety standards.

Important components of laboratory testing equipment

Sensors and detectors are used to measure parameters such as temperature, pressure, and conductivity.

Sample handling and preparation systems are used to collect, process, and prepare samples for analysis, ensuring that they are representative and suitable for testing.

Control systems regulate the operation of laboratory testing equipment. They allow you to adjust the settings, parameters, and conditions whenever you need to.

Data acquisition and analysis software is used to collect, store, and analyze the data generated during testing procedures. It provides insights into and interpretation of the results.

In short, laboratory testing equipment in UAE plays a very important role in scientific research, quality control, and product development across different industries, and specialized tools are essential for conducting accurate and reliable testing procedures. By understanding the applications and importance of laboratory testing equipment, you can harness these tools to advance knowledge and ensure the safety and quality of products and processes.


Polymer Testing Methods for Better Product Development

Polymer testing is a critical aspect of product development, ensuring the quality, durability, and performance of materials. In this article, we'll delve into the various testing methods employed in the polymer industry and how they contribute to enhanced product development.

Introduction

The world of polymer development is ever-evolving, and as manufacturers strive for excellence, the need for robust testing methods becomes paramount. But what are the challenges faced in this dynamic landscape, and how do polymer material testing methods address them?

Unravelling the Challenges

In an industry marked by innovation and complexity, manufacturers grapple with the perplexities of creating products that not only meet but exceed expectations. How can polymer testing methods be the solution to this puzzle, ensuring that the final product stands out in the market?

The Foundation: Understanding Polymer Properties

Mechanical Testing

One of the cornerstones of polymer testing is mechanical analysis. This involves assessing the material's response to applied forces, uncovering its strength, elasticity, and resilience. How does mechanical testing lay the foundation for better product development, and what insights does it provide to engineers and manufacturers?

Thermal Analysis

Understanding how polymers react to temperature changes is crucial. Thermal analysis techniques delve into the material's behavior under different temperature conditions. How does this contribute to product development, ensuring the end product can withstand diverse environmental challenges?

Ensuring Quality: Chemical Testing

Spectroscopy

Polymer chemical composition plays a pivotal role in product quality. Spectroscopy techniques, such as FTIR and NMR, allow scientists to analyze the molecular structure of polymers. How does unraveling the chemical makeup enhance the quality of polymer products?

Chromatography

Polymer purity is non-negotiable in product development. Chromatography methods aid in separating and analyzing polymer components, ensuring the end product meets stringent quality standards. How does chromatography contribute to the precision required in polymer development?

Advanced Techniques for Enhanced Insights

Rheology

Understanding the flow and deformation behavior of polymers is essential for certain applications. Rheological testing provides insights into viscosity, elasticity, and other flow properties. How does this advanced technique refine the development process, particularly in industries demanding precise material behavior?

Microscopy

In the microscopic world of polymers, details matter. Microscopy techniques, including electron and atomic force microscopy, offer a close-up view of polymer structures. How do these techniques provide a microscopic perspective for better decision-making in product development?

Conclusion

As we conclude our exploration of polymer testing methods, the answers to our initial questions become evident. Polymer testing is not merely a requisite but a catalyst for superior product development. The challenges of innovation are met with solutions rooted in understanding mechanical, thermal, and chemical aspects.

In this intricate journey of development, polymer testing methods stand as the beacon guiding manufacturers towards excellence. From unraveling molecular mysteries to assessing material behavior under stress, each technique contributes to a comprehensive understanding.

So, can product development truly excel without a meticulous examination of polymer properties? The resounding answer lies in the depth of testing methods employed. As manufacturers embrace these techniques, they not only ensure product quality but also pave the way for groundbreaking innovations.

In the ever-advancing realm of polymer science, one thing is clear—testing methods aren't just tools; they are the architects of progress, shaping a future where polymer products set new standards of excellence.


"Beyond Surface-Level: Delving Deep with Failure Analysis Equipment"

Failure analysis equipment refers to a variety of tools, instruments, and techniques used to investigate and determine the root causes of failures in materials, components, or systems. This equipment is crucial for identifying and understanding the factors that contribute to failures, allowing engineers and researchers to develop effective solutions and prevent future failures.

One common type of failure analysis equipment is microscopy tools. Optical microscopes, electron microscopes (such as scanning electron microscopes and transmission electron microscopes), and atomic force microscopes enable detailed examination of the surface, structure, and composition of materials at various scales. These microscopes help identify features like cracks, voids, deformations, corrosion, or other anomalies that may have led to failure.

Another important category of equipment is analytical instruments. These include spectrometers, X-ray diffraction (XRD) systems, energy-dispersive X-ray spectroscopy (EDS) detectors, Fourier transform infrared spectroscopy (FTIR), and thermal analysis instruments. These analytical tools help analyze the chemical composition, crystal structure, and thermal behavior of materials, providing insights into the failure mechanisms.

Mechanical testing machines, such as universal testing machines, hardness testers, and impact testers, are also critical in failure analysis. These instruments assess the mechanical properties, strength, and performance of materials under different loading conditions. By subjecting failed specimens to controlled tests, engineers can determine the mechanical factors that contributed to failure, such as excessive stress, poor material properties, or design flaws.

Other specialized equipment used in failure analysis includes thermal imaging cameras, acoustic emission detectors, ultrasonic flaw detectors, and electrical test instruments. These tools enable the detection and characterization of thermal anomalies, acoustic signals, ultrasonic waves, or electrical faults associated with failures.

In addition to equipment, failure analysis often involves sample preparation tools, such as cutting, polishing, and etching equipment, which are used to prepare specimens for microscopic examination or further testing.

The data and information obtained from failure analysis equipment play a crucial role in improving product design, enhancing manufacturing processes, and ensuring the reliability and safety of materials and components. By understanding the causes of failure, engineers can implement design modifications, select more suitable materials, optimize manufacturing processes, or improve maintenance practices to prevent similar failures in the future.

In summary, failure analysis equipment encompasses a range of tools, instruments, and techniques used to investigate and determine the causes of failures in materials, components, or systems. These tools enable detailed examination, chemical analysis, mechanical testing, and detection of anomalies associated with failures. The insights gained from failure analysis equipment help engineers develop solutions, improve designs, and enhance the reliability and performance of materials and products.

Read more @ https://techinforite.blogspot.com/2023/05/uncovering-truth-importance-of-failure.html


Nanotechnology: Pushing Cannabis into a New Era

Nanotechnology refers to science and engineering involving the design, construction, and manipulation of single atoms and molecules. In the cannabis industry, this technology is commonly utilized in hydrosoluble nanoemulsions. This process involves using water and oil to encapsulate the microscopic cannabinoids.

This technology opens up new opportunities for the development of functional and safe cannabis products, similar to the manufacture of over-the-counter medications.

Nano-Emulsion

The concept of nano-emulsification was first utilized in the pharmaceutical sector to deliver drugs. It can be applied to producing compounds related to the cannabis plant, such as its components.

Once the small particles of cannabinoids have been broken down into smaller ones, they are then recombined with fat to form a type of lipid nanoformulation. This allows the compounds to remain protected from the effects of digestion.

Through the use of nanotechnology, the production of cannabis can be significantly reduced, allowing producers to achieve the same or better quality products. This process can also help them save money on manufacturing their raw materials. The savings can be used to develop new edible products by focusing on high-quality ingredients.

The nanoparticles’ small size and protective layer allow the cannabinoids to easily bypass the liver and take effect quickly when ingested. This is comparable to the experience of vaping marijuana.

Nano Technology For Right Now

Water, oil, and emulsifiers are the primary ingredients used in the production of nanoemulsions. Manufacturers can now use different methods to create them. These fall into two broad groups: high-energy ("brute force") methods and low-energy ("persuasion") methods.

Ultrasonic

One of the most common methods used for the production of nanoemulsions is by using ultrasonic waves. This process works by moving the molecules rapidly through mechanical vibrations, which causes them to break down and form evenly distributed droplets.

High-Pressure Homogenization

Another widely used technique for the production of nanoemulsions is by using high-pressure homogenization. This process involves placing a large amount of pressure on the particles, which causes them to break down.

Microfluidization

One of the most efficient and complex methods for the production of nanoemulsions is microfluidization. This process requires a number of components, such as a static mixer and several reservoirs.

Phase inversion

In addition to being incredibly complex, phase inversion is also a method that involves self-emulsifying compositions. This process can lead to changing the compatibilities of a substance’s various components.

Getting the most out of the nanoemulsion process can be challenging for new brands and companies in the cannabis industry. This technology provides them with numerous opportunities to improve their processes and create new products. Through the use of this technology, the middleman between the producers and the consumers can also find a lucrative opportunity.


Atomic Force Microscope Courses

Atomic force microscopes are becoming essential tools for university research and industrial inspection. Thus, educators from high schools to research universities are establishing courses so that their students can learn about atomic force microscopes. With AFM Workshop products, educators are able to teach students about atomic force microscopy at a depth that matches the level of exposure desired and the time available.

At AFM Workshop we have identified three levels of training that educators are creating coursework for:

Give Exposure to Nanoscale 3-D images

During a workshop that lasts only a few hours, samples are imaged so that students can directly see the nanometer-sized features on a surface. Students have several hours to operate the AFM so they can learn that it is possible to create a magnified view of a surface with a scanning tip.

Learn How To Operate An AFM

At this level of training, students are able to understand how to operate the AFM. Beyond that, students learn how to use the instrument to acquire images of several types of samples. During the course, students get exposure to the basic operation of the Atomic Force Microscope, including how the instrument scans and how the feedback control works. This educational experience prepares the student for operating AFM instruments in research and industrial environments. The duration of training can be from one week to an entire semester.
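To make the scanning and feedback idea concrete, here is a minimal Python sketch of a single scan line. It is a toy model under stated assumptions, not AFM Workshop's control software: the function name `scan_line`, the parameters `setpoint`, `gain`, and `steps_per_pixel`, and the linear deflection model are all hypothetical and chosen only for illustration. The loop moves a simulated z-piezo until the cantilever deflection matches the setpoint, and the piezo position is then read out as the height at that pixel.

```python
def scan_line(surface, setpoint=1.0, gain=0.5, steps_per_pixel=20):
    """Simulate one scan line of a contact-mode AFM under integral feedback.

    surface: true surface heights, one value per pixel (arbitrary units).
    Returns the estimated height at each pixel, read from the z-piezo position.
    """
    z = 0.0  # z-piezo position, carried over from pixel to pixel
    image = []
    for height in surface:
        for _ in range(steps_per_pixel):
            # Toy model (assumption): deflection grows linearly as the
            # surface rises toward the cantilever base at position z.
            deflection = height - z
            error = deflection - setpoint
            # Integral action: move the piezo a fraction of the error.
            z += gain * error
        # Once the loop has settled, z + setpoint tracks the surface height.
        image.append(z + setpoint)
    return image


if __name__ == "__main__":
    step_sample = [0.0, 0.0, 5.0, 5.0, 0.0]  # a 5-unit-tall step feature
    print([round(h, 3) for h in scan_line(step_sample)])
```

With a gain of 0.5 the remaining error halves on every iteration, so 20 iterations per pixel are more than enough for the loop to settle in this model. Real instruments face noise, piezo nonlinearity, and tip-sample forces that this sketch ignores.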

Designing and Repairing an AFM

The goal of this level of training is for educators to help students build a potential career in instrumentation design or instrument customer service. In this course, students come to understand the design and construction of an Atomic Force Microscope. This level of training also helps researchers who want to modify or repair their own instruments. During this type of course, students build their own AFMs and learn how to acquire images of standard reference samples. A one-week intensive 40-hour course can accomplish this group's objective.

AFM Workshop is a leading atomic force microscope manufacturing company headquartered in the USA. Through its global distribution partners, AFM Workshop sells to a global market. Contact AFM Workshop to inquire about atomic force microscope information, pricing, training, application help, customer support, and technical service. Our technical experts are available to assist you with selecting the right atomic force microscope for your application needs.

To learn more, feel free to visit https://www.afmworkshop.com.

#Atomic Force Microscope#Atomic Force Microscopes#AFM Microscope Price#AFM Workshops#Affordable Atomic Force Microscope#Atomic Force Microscope Manufacturer#Atomic Force Microscopy Companies#AFM#AFM Atomic Force Microscopy


Video

youtube

Introducing NanoScience Center Europe

NanoScience Center Europe of Park Systems, located in Mannheim, Germany, is equipped with a full range of academic and industrial research AFMs, including the Park NX10, a small-sample AFM for material and life science.

To learn more, contact us at https://www.parksystems.com


Text

The Nobel Prize in Physics 2018

Tools made of light

The inventions being honoured this year have revolutionised laser physics. Extremely small objects and incredibly fast processes now appear in a new light. Not only physics, but also chemistry, biology and medicine have gained precision instruments for use in basic research and practical applications.

Arthur Ashkin invented optical tweezers that grab particles, atoms and molecules with their laser beam fingers. Viruses, bacteria and other living cells can be held too, and examined and manipulated without being damaged. Ashkin’s optical tweezers have created entirely new opportunities for observing and controlling the machinery of life.

Gérard Mourou and Donna Strickland paved the way towards the shortest and most intense laser pulses created by mankind. The technique they developed has opened up new areas of research and led to broad industrial and medical applications; for example, millions of eye operations are performed every year with the sharpest of laser beams.

Travelling in beams of light

Arthur Ashkin had a dream: imagine if beams of light could be put to work and made to move objects. In the cult series that started in the mid-1960s, Star Trek, a tractor beam can be used to retrieve objects, even asteroids in space, without touching them. Of course, this sounds like pure science fiction. We can feel that sunbeams carry energy – we get hot in the sun – although the pressure from the beam is too small for us to feel even a tiny prod. But could its force be enough to push extremely tiny particles and atoms?

Immediately after the invention of the first laser in 1960, Ashkin began to experiment with the new instrument at Bell Laboratories outside New York. In a laser, light waves move coherently, unlike ordinary white light in which the beams are mixed in all the colours of the rainbow and scattered in every direction.

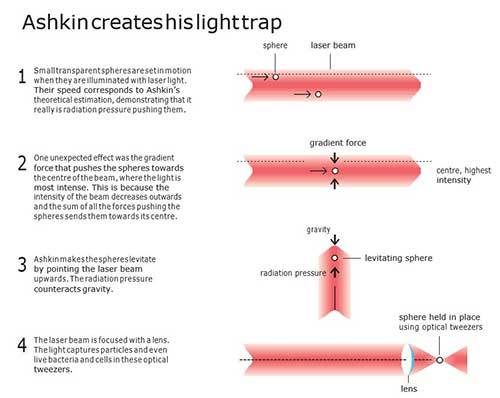

Ashkin realised that a laser would be the perfect tool for getting beams of light to move small particles. He illuminated micrometre-sized transparent spheres and, sure enough, he immediately got the spheres to move. At the same time, Ashkin was surprised by how the spheres were drawn towards the middle of the beam, where it was most intense. The explanation is that however sharp a laser beam is, its intensity declines from the centre out towards the sides. Therefore, the radiation pressure that the laser light exerts on the particles also varies, pressing them towards the middle of the beam, which holds the particles at its centre.

To also hold the particles in the direction of the beam, Ashkin added a strong lens to focus the laser light. The particles were then drawn towards the point that had the greatest light intensity. A light trap was born; it came to be known as optical tweezers.

Figure 1. Ashkin creates a light trap, which becomes known as optical tweezers.

Living bacteria captured by light

After several years and many setbacks, individual atoms could also be caught in the trap. There were many difficulties: one was that stronger forces were needed for the optical tweezers to be able to grab the atoms, and another was the heat vibrations of the atoms. It was necessary to find a way of slowing down the atoms and packing them into an area smaller than the full-stop at the end of this sentence. Everything fell into place in 1986, when optical tweezers could be combined with other methods for stopping atoms and trapping them.

While slowing down atoms became an area of research in itself, Arthur Ashkin discovered an entirely new use for his optical tweezers – studies of biological systems. It was chance that led him there. In his attempts to capture ever smaller particles, he used samples of small mosaic viruses. After he happened to leave them open overnight, the samples were full of large particles that moved hither and thither. Using a microscope, he discovered these particles were bacteria that were not just swimming around freely – when they came close to the laser beam, they were caught in the light trap. However, his green laser beam killed the bacteria, so a weaker beam was necessary for them to survive. In invisible infrared light the bacteria stayed unharmed and were able to reproduce in the trap.

Accordingly, Ashkin’s studies then focused on numerous different bacteria, viruses and living cells. He even demonstrated that it was possible to reach into the cells without destroying the cell membrane.

Ashkin opened up a whole world of new applications with his optical tweezers. One important breakthrough was the ability to investigate the mechanical properties of molecular motors, large molecules that perform vital work inside cells. The first one to be mapped in detail using optical tweezers was a motor protein, kinesin, and its stepwise movement along microtubules, which are part of the cell’s skeleton.

Figure 2. The optical tweezers map the molecular motor kinesin as it walks along the cell skeleton.

From science fiction to practical applications

Over the last few years, many other researchers have been inspired to adopt Ashkin’s methods and further refine them. The development of innumerable applications is now driven by optical tweezers that make it possible to observe, turn, cut, push and pull – without touching the objects being investigated. In many laboratories, laser tweezers are therefore standard equipment for studying biological processes, such as individual proteins, molecular motors, DNA or the inner life of cells. Optical holography is among the most recent developments, in which thousands of tweezers can be used simultaneously, for example to separate healthy blood cells from infected ones, something that could be broadly applied in combatting malaria.

Arthur Ashkin never ceases to be amazed over the development of his optical tweezers, a science fiction that is now our reality. The second part of this year’s prize – the invention of ultrashort and super-strong laser pulses – also once belonged to researchers’ unrealised visions of the future.

New technology for ultrashort high-intensity beams

The inspiration came from a popular science article that described radar and its long radio waves. However, transferring this idea to the shorter optical light waves was difficult, both in theory and in practice. The breakthrough was described in the article that was published in December 1985 and was Donna Strickland’s first scientific publication. She had moved from Canada to the University of Rochester in the US, where she became attracted to laser physics by the green and red beams that lit the laboratory like a Christmas tree and, not least, by the visions of her supervisor, Gérard Mourou. One of these has now been realised – the idea of amplifying short laser pulses to unprecedented levels.

Laser light is created through a chain reaction in which the particles of light, photons, generate even more photons. These can be emitted in pulses. Ever since lasers were invented, almost 60 years ago, researchers have endeavoured to create more intense pulses. However, by the mid-1980s, the end of the road had been reached. For short pulses it was no longer practically possible to increase the intensity of the light without destroying the amplifying material.

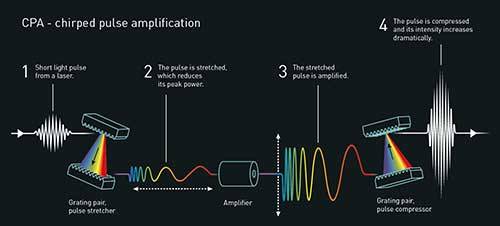

Strickland and Mourou’s new technique, known as chirped pulse amplification, CPA, was both simple and elegant. Take a short laser pulse, stretch it in time, amplify it and squeeze it together again. When a pulse is stretched in time, its peak power is much lower so it can be hugely amplified without damaging the amplifier. The pulse is then compressed in time, which means that more light is packed together within a tiny area of space – and the intensity of the pulse then increases dramatically.
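The stretch, amplify and compress sequence can be followed with rough arithmetic. The sketch below is only an illustration: it approximates peak power as pulse energy divided by pulse duration, assumes a lossless chain that compresses back to the original duration, and uses made-up numbers. The function name `cpa_peak_powers` and every parameter value are hypothetical and do not come from Strickland and Mourou's experiment.

```python
def cpa_peak_powers(seed_energy_j, seed_duration_s, stretch_factor, gain):
    """Return the peak power (in watts) at each stage of a simplified CPA chain.

    Peak power is approximated as pulse energy divided by pulse duration.
    Losses are ignored and the compressor is assumed to restore the
    original pulse duration exactly.
    """
    stretched_duration_s = seed_duration_s * stretch_factor
    amplified_energy_j = seed_energy_j * gain
    return {
        "seed": seed_energy_j / seed_duration_s,
        "stretched": seed_energy_j / stretched_duration_s,
        "amplified": amplified_energy_j / stretched_duration_s,
        "compressed": amplified_energy_j / seed_duration_s,
    }


# Hypothetical numbers, chosen only to illustrate the trend.
stages = cpa_peak_powers(
    seed_energy_j=1e-9,       # 1 nanojoule seed pulse
    seed_duration_s=100e-15,  # 100 femtoseconds long
    stretch_factor=1_000,     # stretched a thousandfold in time
    gain=1e6,                 # amplifier multiplies the energy a millionfold
)
for name, watts in stages.items():
    print(f"{name:>10}: {watts:.3g} W")
```

In this toy model the amplifier only ever sees the "amplified" peak power, which is lower than the final "compressed" value by exactly the stretch factor. That gap is what protects the amplifying material.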

It took a few years for Strickland and Mourou to combine everything successfully. As usual, a wealth of both practical and conceptual details caused difficulties. For example, the pulse was to be stretched using a newly acquired 2.5 km-long fibre optic cable. But no light came out – the cable had broken somewhere in the middle. After a great deal of trouble, 1.4 km had to be enough. One major challenge was synchronising the various stages in the equipment, getting the beam stretcher to match the compressor. This was also solved and, in 1985, Strickland and Mourou were able to prove for the first time that their elegant vision also worked in practice.

The CPA-technique invented by Strickland and Mourou revolutionised laser physics. It became standard for all later high-intensity lasers and a gateway to entirely new areas and applications in physics, chemistry and medicine. The shortest and most intense laser pulses ever could now be created in the laboratory.

The world’s fastest film camera

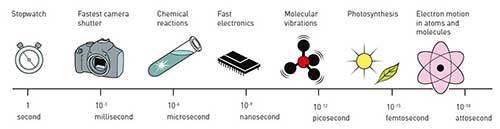

How are these ultrashort and intense pulses used? One early area of use was the rapid illumination of what happens between molecules and atoms in the constantly changing microworld. Things happen quickly, so quickly that for a long time it was only possible to describe the before and after. But with pulses as short as a femtosecond, one millionth of a billionth of a second, it is possible to see events that previously appeared to be instantaneous.

A laser’s extremely high intensity also makes its light a tool for changing the properties of matter: electrical insulators can be converted to conductors, and ultra-sharp laser beams make it possible to cut or drill holes in various materials extremely precisely – even in living matter.

For example, lasers can be used to create more efficient data storage, as the storage is not only built on the surface of the material, but also in tiny holes drilled deep into the storage medium. The technology is also used to manufacture surgical stents, micrometre-sized cylinders of stretched metal that widen and reinforce blood vessels, the urinary tract and other passageways inside the body.

There are innumerable areas of use, which have not yet been fully explored. Every step forward allows researchers to gain insights into new worlds, changing both basic research and practical applications.

One of the new areas of research that has arisen in recent years is attosecond physics. Laser pulses shorter than a hundred attoseconds (one attosecond is a billionth of a billionth of a second) reveal the dramatic world of electrons. Electrons are the workhorses of chemistry; they are responsible for the optical and electrical properties of all matter and for chemical bonds. Now they are not only observable, but they can also be controlled.

Image above: The faster the light pulses, the faster the movements that can be observed. The almost inconceivably short laser pulses are as fast as a few femtoseconds and can even be a thousand times faster, attoseconds. This allows sequences of events, which could once only be guessed at, to be filmed; the movement of electrons around an atomic nucleus can now be observed with an attosecond camera.

Towards even more extreme light

Many applications for these new laser techniques are waiting just around the corner – faster electronics, more effective solar cells, better catalysts, more powerful accelerators, new sources of energy, or designer pharmaceuticals. No wonder there is tough competition in laser physics.

Donna Strickland is now continuing her research career in Canada, while Gérard Mourou, who has returned to France, is involved in a pan-European initiative in laser technology, among other projects. He initiated and led the early development of Extreme Light Infrastructure (ELI). Three sites – in the Czech Republic, Hungary and Romania – will be complete in a few years’ time. The planned peak power is 10 petawatts, which is equivalent to an incredibly short flash from a hundred thousand billion light bulbs.
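The light bulb comparison can be checked with integer arithmetic. This is a minimal sketch that assumes "billion" means 10^9; the variable names are illustrative only.

```python
PETA = 10**15

planned_peak_power_w = 10 * PETA    # 10 petawatts, as stated in the text
number_of_bulbs = 100_000 * 10**9   # "a hundred thousand billion"

# Power that each bulb would have to contribute for the comparison to hold.
watts_per_bulb = planned_peak_power_w // number_of_bulbs
print(watts_per_bulb)  # 100
```

Under that assumption the comparison implies a bulb of about 100 watts.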

These sites will specialise in different areas – attosecond research in Hungary, nuclear physics in Romania and high energy particle beams in the Czech Republic. New and even more powerful facilities are being planned in China, Japan, the US and Russia.

There is already speculation about the next step: a tenfold increase in power, to 100 petawatts. Visions for the future of laser technology do not stop there. Why not the power of a zettawatt (one million petawatts, 10²¹ watts), or pulses down to zeptoseconds, which are equivalent to the almost inconceivably tiny sliver of time of 10⁻²¹ seconds? New horizons are opening up, from studies of quantum physics in a vacuum to the production of intense proton beams that can be used to eradicate cancer cells in the body. However, even now these celebrated inventions allow us to rummage around in the microworld in the best spirit of Alfred Nobel – for the greatest benefit to humankind.


Text

Best inventions of all time-final part

Technology is an integral part of the human experience. Since the earliest days of our species, we have developed tools to tame the physical world.

Any attempt to count the major technological inventions is certainly questionable, but here are some key advances that should likely be on such a list (in chronological order):

list of best inventions of all time

1. FIRE - It can be argued that fire was discovered rather than invented. Early humans certainly witnessed natural fires, but it wasn't until they figured out how to control and produce fire on their own that people were really able to take advantage of all that this new tool had to offer. The first use of fire dates back two million years, while widespread use of this technology dates back around 125,000 years. Fire gave us warmth and protection and led to a variety of other inventions and key skills like cooking. The ability to cook has helped us obtain the nutrients needed to support our expanding brain and has given us a clear advantage over other primates.

2. WHEEL - The wheel was created by the Mesopotamians around 3500 BC, originally for making pottery. About 300 years later, the wheel was put on a chariot and the rest is history. Wheels are ubiquitous in our daily life and make our transportation and trade easier.

3. NAIL - The first known use of this very simple but very useful metal fastener dates back to ancient Egypt around 3400 BC. If you like screws more, they've been around since the ancient Greeks (1st or 2nd century BC).

4. OPTICAL LENSES - From glasses to microscopes to telescopes, optical lenses have vastly expanded the possibilities of our vision. They have a long history, first developed by the ancient Egyptians and Mesopotamians, with key theories about light and vision given by the ancient Greeks. Optical lenses were also instrumental in developing media technologies for photography, film, and television.

5. COMPASS - This navigational device was an important force in human exploration. The first compasses were made from lodestone in China between 300 and 200 BC.

6. PAPER - Paper was invented in China around 100 BC and was essential for us to write and share our ideas.

7. GUNPOWDER - Invented in China in the 9th century, this chemical explosive was an important factor in military technology (and, more broadly, in the wars that changed the course of human history).

8. PRINTING PRESS - This device was invented in 1439 by the German Johannes Gutenberg and in many ways laid the foundation for our modern age. It allowed ink to be transferred mechanically to paper using movable type. This revolutionized the spread of knowledge and religion, as earlier books were usually written by hand (often by monks).

9. ELECTRICITY - The use of electricity is a process that a number of brilliant minds have contributed to for thousands of years. Early observations go back to ancient Egypt and ancient Greece, where Thales of Miletus carried out the first research on the phenomenon. The 18th-century American Renaissance man Benjamin Franklin is generally credited with a significant improvement in our understanding of electricity, if not its discovery. It is hard to overestimate how important electricity has become to humankind, as it powers the majority of our devices and shapes the way we live. The invention of the light bulb, while a separate contribution attributed to Thomas Edison in 1879, is certainly a major expansion in the ability to use electricity. It has fundamentally changed the way we live, work, and how our cities look and function.

10. STEAM ENGINE - Invented between 1763 and 1775 by Scottish inventor James Watt (who drew on ideas from earlier attempts at a steam engine, such as Newcomen's of 1712), the steam engine powered trains, ships, factories and the industrial revolution as a whole.

12. TELEPHONE - While he was not the only one working on this type of technology, Scottish inventor Alexander Graham Bell received the first patent for an electric telephone in 1876. This instrument has certainly revolutionized the way we communicate.

13. VACCINATION - Although sometimes controversial, the practice of vaccination is responsible for eradicating diseases and extending the human lifespan. The first vaccine (against smallpox) was developed by Edward Jenner in 1796. A rabies vaccine was developed in 1885 by the French chemist and biologist Louis Pasteur, who is credited with laying much of the groundwork for today's vaccines. Pasteur is also responsible for inventing the pasteurization food safety process that bears his name.

14. CARS - Cars have fundamentally changed the way we travel, the way our cities are designed, and brought the concept of the assembly line into the mainstream. They were invented in their modern form by a number of people at the end of the 19th century, with the German Karl Benz being particularly responsible for creating the first practical car in 1885.

15. AIRPLANE - Invented by the American Wright brothers in 1903, airplanes brought the world closer together and enabled us to travel great distances quickly. This technology expanded the mind through tremendous cultural exchanges - but it also increased the scope of the world wars that were soon to break out and the severity of any war that followed.

16. PENICILLIN - This drug was discovered by Scottish scientist Alexander Fleming in 1928 and transformed medicine with its ability to cure infectious bacterial diseases. The era of antibiotics began.

17. ROCKET - While the invention of the first rocket is attributed to the ancient Chinese, the modern rocket is a 20th-century contribution to humanity that has transformed military capabilities and enabled the human exploration of space.

18. NUCLEAR FISSION - This process of splitting atoms to release a large amount of energy has led to the creation of nuclear reactors and atomic bombs. It was the culmination of the work of a number of prominent scientists (mostly Nobel Prize winners) of the 20th century, but the specific discovery of nuclear fission is generally attributed to Germans Otto Hahn and Fritz Strassmann, who collaborated with Austrians Lise Meitner and Otto


Text

TAFAKKUR: Part 221

NUCLEATION

Every day we boil water in our homes for tea, cooking and various other reasons, and during the summer months we usually ensure that there is a constant supply of cold water in the fridge. While some of us can drink cold water direct from the refrigerator, others can only drink it lukewarm. In our daily lives, we continuously transform water, the substance that the Creator sends to provide life to everything on earth, from one form to another without even remembering the actual freezing or boiling processes; the only thing that we are aware of is the fact that if we want to cool the water, it should be placed in the refrigerator, but if we want to transform water into ice, it must be put in the deep freeze. The temperature inside the refrigerator is above zero, whereas in the deep-freeze compartment it is below zero. So what happens if we reduce the temperature of water to 0 °C and keep it at this temperature?

If we try to fill a glass of soda without letting it overflow, we usually notice the bubbles or froth of the drink. As we fill the glass, bubbles form on the surface and these tiny bubbles grow. Reaching a certain size, the bubbles escape from the liquid surface, and vanish into the air. If we put a finger or a straw into the soda, as most of us did as children, we immediately notice that tiny bubbles of gas form on the object immersed in the glass. Just like in the freezing of water or in the escape of gas from soda, a precise energy exchange occurs at the initial stage of any phase transformation. Completion of any phase transformation, whether freezing or condensation (clouds transforming to rain), is impossible without such precise energy exchange. The fact that all these phase transformations occur with precise energy calculations in the best possible temperature ranges to support life is a clear proof that nothing in the universe was created by mere coincidence, and that everything occurs by the command of the Almighty.

We know that everything in the universe obeys the minimum energy principle. If we want to freeze water, all we have to do is to cool it to a temperature below 0°C, and the transition from water to ice begins. Water molecules tend to gather together to form clusters. When five to ten of these molecules bond together, however, a difficulty is encountered. The formation of solid-liquid, solid-gas, or liquid-gas interfaces requires a specific amount of energy. In the beginning, the surfaces of these clusters are quite large as compared to their volumes such that the energy they receive to form an interface is much greater than the energy they release; therefore the state of minimum energy is not reached. To explain this to you in another way: let us assume that we manufacture beads for the production of costume jewelry and garments, and the surface of the beads requires treatment. If the beads we manufacture are smaller than the specific size, they will be more expensive to treat, and therefore will not cover the costs, so only producing beads exceeding the specific size will be profitable to the manufacturer. The main aspect here is actually the size of the beads, so if manufacturing beads which exceed the specific size is simpler and more profitable, rejecting the beads smaller than these specifications would be inevitable.

As in this example, because of their high energy value, the molecular clusters formed initially (embryos) return to a liquid form. Then once again the particles begin to bond, but again the result is the same. An embryo must grow to a certain size for its surface area to decrease in comparison to its volume and thus reduce its energy. This is only feasible when many atoms bond, for only when a sufficient number of atoms join together does the embryo transform into a nucleus, and then begin to crystallize and eventually become solid. The process called homogeneous nucleation is only possible under certain conditions: the liquid must be at a temperature of around –40 °C for the balance of energy to shift and for the water molecules to bond into a solid nucleus. If we keep pure water totally motionless in the deep freeze at approximately –8 °C, we will have supercooled water that has not yet transformed into ice; the temperature gap between the freezing point and the nucleation point is called supercooling. Supercooling is a metastable condition in which a liquid or gas remains below its transition temperature without actually changing phase, but the slightest intervention or movement can cause the substance to transform into a solid. The tiny bubbles of carbon dioxide in soda are also in a metastable condition, for as soon as the bubbles have the opportunity, they escape from the liquid and vanish into the air. If we immerse a straw or finger into a glass of soda, this forms an added surface, which also facilitates a solid-gas interface, and if we add a teaspoon of sugar to the soda, this induces the drink to froth and bubble at great speed. Water boiled in a saucepan actually nucleates on the wall of the container.
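The competition between surface cost and volume gain described above is usually written down in the textbook model known as classical nucleation theory. The sketch below uses that standard model, which the text itself does not name, for a spherical cluster. The function names and the two numerical values are hypothetical placeholders, not measured properties of water.

```python
import math


def cluster_energy(radius_m, surface_energy, volume_energy_release):
    """Net energy (J) needed to form a spherical cluster of the given radius.

    surface_energy: cost per unit area of the new interface (J/m^2).
    volume_energy_release: energy released per unit volume on freezing (J/m^3).
    """
    surface_cost = 4.0 * math.pi * radius_m**2 * surface_energy
    volume_gain = (4.0 / 3.0) * math.pi * radius_m**3 * volume_energy_release
    return surface_cost - volume_gain


def critical_radius(surface_energy, volume_energy_release):
    """Radius at which the net energy peaks; larger clusters tend to grow."""
    return 2.0 * surface_energy / volume_energy_release


# Illustrative placeholder values only.
gamma = 0.03   # J/m^2
dg_v = 5.0e7   # J/m^3

r_star = critical_radius(gamma, dg_v)
print(f"critical radius: {r_star:.2e} m")
for factor in (0.5, 1.0, 1.5):
    energy = cluster_energy(factor * r_star, gamma, dg_v)
    print(f"r = {factor:.1f} r*: {energy:.2e} J")
```

Clusters smaller than the critical radius lower their energy by shrinking, which corresponds to the embryos that return to liquid. Clusters larger than it lower their energy by growing, which corresponds to the nucleus that goes on to crystallize.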

Supercooling is a metastable form of the substance. Every substance or solution has a specific temperature value for cooling. For instance, liquid copper transforms into a solid at 1083 °C. Its homogeneous nucleation requires the bonding of 310 atoms and supercooling of approximately 236 °C.

Under normal conditions, substances which have more than one type of molecule undergo a phase transformation known as heterogeneous nucleation. In this case, the nuclei form primarily on the walls of a container, on particles of impurity, or on minute solid particles in the liquid, and this significantly reduces the surface energy barrier for nucleation. So for a moment let us return to the bead example. We have discovered that instead of directly manufacturing smaller beads, it would reduce the costs of decorating the surface of the beads to coat and treat larger beads, so the beads are being produced in this way, thus reducing losses.

Supercooling can occur at temperatures even as high as 2–3 °C, and this is very important. The condensation of water or supercooled water droplets in clouds must reach a specific size and weight in order to fall to the earth as raindrops. Here, the solid microscopic particles combine to form nuclei. Even if the clouds are much lower in temperature, rain cannot form without nuclei. Particles of salt which escape from the sea, sand that rises from the desert, the sulphate released from the ashes of volcanic activity or molecules of dimethyl sulphide emitted by certain plankton are driven into the atmosphere by the wind and form nuclei. As the Almighty, the Creator of the universe revealed in Al-Hijr, verse 22 of the Qur’an: “And We send the winds to fertilize, and so We send down water from the sky, and give it to you to drink (and use in other ways)” indicating that one of the duties of the wind is fertilization. Even the particles in smoke released irresponsibly by humans from industrial chimneys, or from car exhausts form nuclei that eventually transform into rain.

During the foundry process, solid substances are added to liquid metals for certain purposes, such as enabling metal to set more rapidly, or increasing the metal’s durability. When liquid metal is cooled, its atoms form nuclei on microscopic solid impurities. These nuclei increase in size and assemble into groups called grains. The irregular zone between these groups is known as the grain boundary. The grain boundary forces the compressed atoms to move and weld, thus increasing the durability of the metal. This method known as infusion or grain contraction ensures an increase in the formation of nuclei, and also in the durability of the metal. Cloud seeding, a topic which mainly comes to light when there is a lack of rain, is actually inducing the clouds to form artificial nuclei that will in turn produce rain.

Some creatures on earth protect themselves with mechanisms bestowed by their Creator, and one of these creatures is the wood frog. As the water in its cells begins to freeze, the antifreeze protein found in its blood surrounds the forming nuclei and prevents them from increasing in size. The frog remains frozen and motionless until the temperature increases. If we touched a wood frog in this condition, its cells too would freeze suddenly, and the frog would die. It is impossible for a frog to know how to cool to the point of freezing, and nucleate. It is also impossible for a frog to adapt to such a mechanism because this would require practice and experience, which would of course be deadly. Therefore, is the frog’s ability to freeze, and its process of nucleation not a clear indication of the providence and blessing of God the Almighty?

#allah#god#prophet#Muhammad#quran#ayah#sunnah#hadith#islam#muslim#muslimah#hijab#help#revert#convert#religion#reminder#dua#salah#pray#prayer#welcome to islam#how to convert to islam#new convert#new revert#new muslim#revert help#convert help#islam help#muslim help


Text

The Top 10 Greatest Russian Mysteries of All Time

Russia is a vast country, by far the largest country on earth in terms of land mass. The Soviet Union was even larger. It's no surprise that some of the most famous unsolved mysteries in the world come out of that part of the planet. Join us as we glance at 10 of the most famous unsolved mysteries from there.

10 - Arkaim

Photo of Arkaim by Rafikova m - Own work, CC BY-SA 4.0

Arkaim is an ancient citadel built nearly 4,000 years ago that is full of mystery. The entire city was built around the spiritual idea of "reproducing the model of the universe" which was derived from ancient Indian literature. It was built in three concentric rings and featured the ancient swastika religious symbol found throughout the region at the time. It held as many as 2,500 people at the time, making it the largest citadel in the region. The city has also been associated with ancient Aryan culture (not the Hitler variety) and may have been the source of Vedic knowledge in India as well as the Asgard mentioned in ancient Germanic literature.

9 - Russian Ghost Cities

Photo By Laika ac from USA - Kadykchan, CC BY-SA 2.0

The USSR or Soviet Union built dozens of cities with controlled access, now known as Ghost Cities. These cities were completely cut off from the outside world except for gated roads coming in and out of the towns. Most were built around nuclear power or research centers. These towns are nearly all abandoned now, a testament to the iconic Brutalist architecture of the period.

8 - Lake Vostok

Lake Vostok is by far the most remote location heavily researched by Russian scientists. Lake Vostok is not in Russia. It is a lake under about 2.5 miles of ice. Bizarrely enough, Russian researchers have been fascinated with drilling through the ice to see what was underneath. Beginning in the 1950s until 2011, from the height of the Cold War to Putin's Russia, they drilled through the ice to see what ancient forms of life may lie underneath. The unrelenting focus on getting through the ice of a lake in Antarctica has left many of us scratching our heads.

7 - The Petrozavodsk Phenomenon

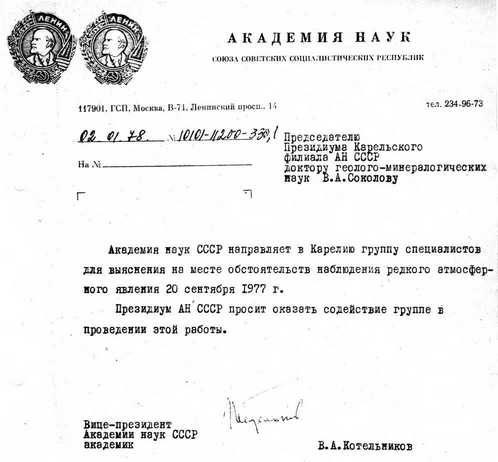

The Petrozavodsk object stands out in UFO research as one of the most puzzling and well documented cases of all time. On September 20th, 1977, one of the most widely viewed celestial events of all time unfolded over the city of Petrozavodsk, Russia (the USSR at the time). The event was witnessed from Copenhagen, Denmark all the way to Helsinki in Finland. According to eyewitnesses, 48 unidentified luminescent objects appeared in the atmosphere starting around 1:00 AM. The objects were observed for over two hours in various cities around the region. They appeared to shift direction abruptly, sometimes being stationary and sometimes traveling at enormous speeds. They were witnessed by military officials as well and there were official investigations into the issue. Nearly all of the eyewitnesses also reported that the object emitted other glowing objects and that the objects were entirely silent.

6 - Psychotronics: The Russian MKULTRA

The Russian MKULTRA was as large as its American counterpart. From 1917 to 2003, the Soviet Union and the modern Russian government spent nearly $1 billion on "psychotronics", similar to the MKULTRA program in the United States. One area of the program focused on a Soviet idea that the brain emits high-frequency electromagnetic waves which can be received by other brains. Research seemed to show that it could change the magnetic polarity of hydrogen nuclei and stimulate immune systems in various organisms. They even made a device which could store and generate the energy, known as a "cerpan".

5 - The Voronezh UFO

On September 27th, 1989, a UFO appeared to a group of children in the city of Voronezh in the Soviet Union. The children reported that the craft landed in front of them and that a three-eyed alien popped out with a robot. The alien mind-controlled another witness, freezing them in their tracks. The craft then left and returned five minutes later to abduct a 16-year-old boy. There were as many as 12 eyewitnesses to all of that. Interestingly, the craft was also reported by the local police department.

4 - UVB-76: The Buzzer

Somewhere in Russia, there is a radio tower which has been broadcasting an inexplicable series of sounds since at least 1973. To really grasp the strangeness of it, listen to the example audio above. The sound has evolved over time. It was mostly a chirping sound until 1990, when it changed to a buzzer sound. Nobody knows what it means. One interesting detail is that, on occasion, conversations have been heard in the background, which seems to indicate that the sound is going through an open mic.

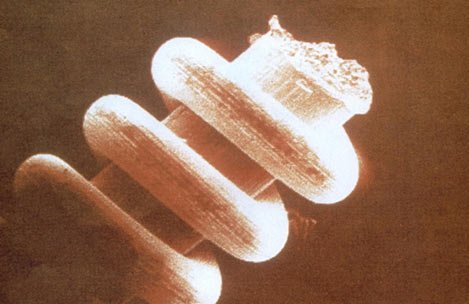

3 - Nano Spirals and Coils of the Ural Mountains

An example of a nanocoil found in the Ural Mountains. In the mysterious Ural Mountains, where our number one mystery also took place, researchers from the Russian Academy of Sciences discovered thousands of tiny nano-components which appear to be designed and very precisely manufactured. They date to about 300,000 years ago, and there is no known natural explanation for how they got there or what they were designed to do. The objects, also known as Out of Place Artifacts or Ooparts, continue to amaze scientists. There are coils, shafts, spirals and other mechanical parts which are so small that they can only be appreciated with a microscope.

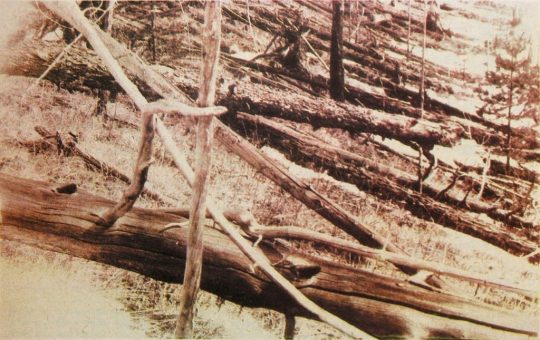

2 - The Tunguska Event

The Tunguska Event was a massive explosion in remote Siberia which still baffles scientists. On June 30th, 1908, a massive explosion in Siberia knocked down 80 million trees, covering an area of 830 square miles. The explosion had the power of as many as 30 megatons of energy, more than 1,000 times the energy of the atomic bomb which was dropped on Hiroshima. This was, of course, well before any atomic weapons had been invented by humans. Since there is no crater, speculation has ranged from UFOs to a meteorite exploding before it hit the ground.

1 - The Dyatlov Pass Incident

The Dyatlov Pass Incident is the most mysterious Russian event of modern times. The Dyatlov Pass Incident is without a doubt one of the greatest unsolved mysteries of the 20th century. Ten skiers from the Ural Polytechnic Institute set out to travel to a nearby ski town. The trip was not thought to be dangerous. One turned back due to illness and the other nine were all killed by an "unknown compelling force". While they were camping on the side of Dead Mountain, something forced the hikers to run out of the tent in a hurry. Their tent was cut open from the inside. Two of the hikers were found near a fire pit in only their underwear. One hiker had high levels of radiation on his clothing. Three hikers suffered massive crushing injuries but had no signs of physical violence. Read our full article on The Dyatlov Pass Incident here.

#aliens#arkaim#featured#ghostcities#lakevostok#nano-spirals#Petrozavodsk#psychotronics#russia#russianhikers#sovietunion#TheBuzzer#tunguskaevent#ufo#uralmountains#UVB-76#Voronezh

Text

I Found This Interesting. Joshua Damien Cordle

Discovery of a fundamental law of friction leads to new materials that can minimize energy loss

Professor of Chemical and Biomolecular Engineering Elisa Riedo and her team have discovered a fundamental friction law that is leading to a deeper understanding of energy dissipation in friction and the design of two-dimensional materials capable of minimizing energy loss.

Friction is an everyday phenomenon; it allows drivers to stop their cars by braking and dancers to execute complicated moves on various floor surfaces. It can, however, also be an unwanted effect that drives the waste of large amounts of energy in industrial processes, the transportation sector, and elsewhere. Tribologists (those who study the science of interacting surfaces in relative motion) have estimated that one-quarter of global energy losses are due to friction and wear.

While friction is extremely widespread and relevant in technology, the fundamental laws of friction are still obscure, and only recently have scientists been able to use advances in nanotechnology to understand, for example, the microscopic origin of da Vinci's law, which holds that frictional forces are proportional to the applied load.

Now, Riedo and her NYU Tandon postdoctoral researcher Martin Rejhon have found a new method to measure the interfacial shear between two atomic layers and discovered that this quantity is inversely related to friction, following a new law.

This work, conducted in collaboration with NYU Tandon graduate student Francesco Lavini and colleagues from the International School for Advanced Studies, the International Center for Theoretical Physics in Trieste, Italy, and Prague's Charles University, could lead to more efficient manufacturing processes, greener vehicles, and a generally more sustainable world.

"The interaction between a single atomic layer of a material and its substrate governs its electronic, mechanical, and chemical properties," Riedo explains, "so gaining insight into that topic is important, on both fundamental and technological levels, in finding ways to reduce the energy loss caused by friction."

The researchers studied bulk graphite and epitaxial graphene films grown with different stacking orders and twisting, measuring the hard-to-access interfacial transverse shear modulus of an atomic layer on a substrate. They discovered that the modulus (a measure of the material's ability to resist shear deformations and remain rigid) is largely controlled by the stacking order and the atomic layer-substrate interaction and demonstrated its importance in controlling and predicting sliding friction in supported two-dimensional materials. Their experiments showed a general reciprocal relationship between friction force per unit contact area and interfacial shear modulus for all the graphite structures they investigated.
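As a rough illustration of what a reciprocal relationship means here, the sketch below assumes the simplest possible form, friction per unit contact area equal to a constant divided by the interfacial shear modulus. The function name, the constant `k`, and the sample values are hypothetical and in arbitrary units; they are not taken from the paper, which may use a different functional form and fitted parameters.

```python
def friction_per_area(shear_modulus: float, k: float = 1.0) -> float:
    """Friction force per unit contact area under an ASSUMED inverse law f = k / G.

    shear_modulus: interfacial shear modulus G (arbitrary units, must be > 0)
    k: hypothetical proportionality constant (not a value from the paper)
    """
    if shear_modulus <= 0:
        raise ValueError("shear modulus must be positive")
    return k / shear_modulus


# Hypothetical structures with made-up shear moduli, for illustration only.
samples = {"structure A": 2.0, "structure B": 4.0, "structure C": 8.0}

for name, g in samples.items():
    print(f"{name}: G = {g:.1f}, friction per area = {friction_per_area(g):.3f}")
```

Under this assumed form, doubling the shear modulus halves the friction per unit area, which is the qualitative trend the article describes: stiffer interfaces slide with less friction.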

Their 2022 paper, "Relation between interfacial shear and friction force in 2D materials," was published online in Nature Nanotechnology and was funded by the U.S. Department of Energy Office of Science and the U.S. Army Research Office.

"Our results can be generalized to other 2D materials as well," Riedo, who heads NYU Tandon's PicoForce Lab, asserts. "This presents a way to control atomic sliding friction and other interfacial phenomena, and has potential applications in miniaturized moving devices, the transportation industry, and other realms."

"Elisa's work is a great example of NYU Tandon's commitment to a more sustainable future," Dean Jelena Kovačević says, "and a testament to the research being done at our newly launched Sustainable Engineering Initiative, which focuses on tackling climate change and environmental contamination through a four-pronged approach we're calling AMRAd, for Avoidance, Mitigation, Remediation and Adaptation."

Story Source:

Materials provided by NYU Tandon School of Engineering. Note: Content may be edited for style and length.

Journal Reference:

Martin Rejhon, Francesco Lavini, Ali Khosravi, Mykhailo Shestopalov, Jan Kunc, Erio Tosatti, Elisa Riedo. Relation between interfacial shear and friction force in 2D materials. Nature Nanotechnology, 2022; DOI: 10.1038/s41565-022-01237-7

#Joshua Damien Cordle#josh cordle#Damien Cordle#Joshua Cordle#Damien Joshua Cordle#Cordle Damien#Cordle Joshua#Cordle Josh#Josh Damien Cordle

Text

"Advancing Reliability: Leveraging Failure Analysis Equipment for Quality Assurance"

Failure analysis equipment refers to a variety of tools, instruments, and techniques used to investigate and determine the root causes of failures in materials, components, or systems. This equipment is crucial for identifying and understanding the factors that contribute to failures, allowing engineers and researchers to develop effective solutions and prevent future failures.

One common type of failure analysis equipment is microscopy tools. Optical microscopes, electron microscopes (such as scanning electron microscopes and transmission electron microscopes), and atomic force microscopes enable detailed examination of the surface, structure, and composition of materials at various scales. These microscopes help identify features like cracks, voids, deformations, corrosion, or other anomalies that may have led to failure.

Another important category of equipment is analytical instruments. These include spectrometers, X-ray diffraction (XRD) systems, energy-dispersive X-ray spectroscopy (EDS) detectors, Fourier transform infrared spectroscopy (FTIR), and thermal analysis instruments. These analytical tools help analyze the chemical composition, crystal structure, and thermal behavior of materials, providing insights into the failure mechanisms.

Mechanical testing machines, such as universal testing machines, hardness testers, and impact testers, are also critical in failure analysis. These instruments assess the mechanical properties, strength, and performance of materials under different loading conditions. By subjecting failed specimens to controlled tests, engineers can determine the mechanical factors that contributed to failure, such as excessive stress, poor material properties, or design flaws.

Other specialized equipment used in failure analysis includes thermal imaging cameras, acoustic emission detectors, ultrasonic flaw detectors, and electrical test instruments. These tools enable the detection and characterization of thermal anomalies, acoustic signals, ultrasonic waves, or electrical faults associated with failures.

In addition to equipment, failure analysis often involves sample preparation tools, such as cutting, polishing, and etching equipment, which are used to prepare specimens for microscopic examination or further testing.

The data and information obtained from failure analysis equipment play a crucial role in improving product design, enhancing manufacturing processes, and ensuring the reliability and safety of materials and components. By understanding the causes of failure, engineers can implement design modifications, select more suitable materials, optimize manufacturing processes, or improve maintenance practices to prevent similar failures in the future.

In summary, failure analysis equipment encompasses a range of tools, instruments, and techniques used to investigate and determine the causes of failures in materials, components, or systems. These tools enable detailed examination, chemical analysis, mechanical testing, and detection of anomalies associated with failures. The insights gained from failure analysis equipment help engineers develop solutions, improve designs, and enhance the reliability and performance of materials and products.

Read more @ https://techinforite.blogspot.com/2023/05/uncovering-truth-importance-of-failure.html
