#artificial intelligence hallucinations


Text

Florida Middle District Federal Judge suspends Florida lawyer for filing false cases created by artificial intelligence

Hello everyone, and welcome to this Ethics Alert, which will discuss the recent Opinion and Order in which a Senior Judge of the Middle District of Florida suspended a Florida lawyer from practicing before that court for one (1) year for filing false cases created by artificial intelligence. The case is In Re: Thomas Grant Neusom, Case No: 2:24-mc-2-JES, and the March 8, 2024 Opinion and Order is here:…


#artificial intelligence hallucinations#Attorney ethics#corsmeier#corsmeier lawyer ethics#ethics for lawyers#Florida Bar#Florida lawyer ethics#Florida lawyer sanctions federal court artificial intelligence#joe corsmeier#joseph corsmeier#lawyer discipline#lawyer ethics#sanctions artificial intelligence

0 notes

Text

Who could have foreseen this

80 notes


Text

If you put garbage into an AI, you will get garbage out of an AI.

And we have put garbage into the AIs. 🤖🗑️

#glue#pizza#artificial intelligence#ai#ai overview#google ai overview#technology#garbage in garbage out#GIGO#model collapse#ai hallucinations

47 notes


Text

#artificial intelligence#are you hallucinating again ChatGPT?#this reminds me... I've watched 2001: a space odyssey the other day

9 notes


Text

Hallucinating LLMs — How to Prevent them?

As ChatGPT and enterprise applications of GenAI (Generative AI) see rapid adoption, one of the most common concerns raised by GenAI practitioners is that LLMs (Large Language Models) produce misleading results, commonly called hallucinations.

A simple example of a hallucination is when GenAI responds, with apparent confidence, with an answer that doesn’t align with reality. Because these models can generate diverse content in text, music and other media, the impact of a hallucinated response can be stark, depending on where the GenAI results are applied.

Hallucinations have drawn substantial attention from GenAI users because of their potential adverse consequences. One prominent example is fake case citations in legal filings.

Two aspects of hallucinations are especially important:

1) Understanding the underlying causes that contribute to these hallucinations, and

2) Developing effective strategies to stay safe by detecting them, even if we cannot prevent them 100%.

What causes the LLMs to hallucinate?

While it is hard to attribute hallucinations to one or a few definite causes, here are some reasons why they happen:

Sparsity of the data. Arguably the primary reason, a lack of sufficient data causes the models to respond with incorrect answers. GenAI is only as good as the dataset it is trained on, and this limitation covers scope, quality, timeframe, biases and inaccuracies. For example, GPT-4 was trained on data only up to 2021, and the model tended to generalize its answers from what it had learned up to then. This scenario may be easier to understand in a human context, where generalizing from half-baked knowledge is very common.

The way it learns. The base methodology used to train these models is ‘unsupervised’, meaning the datasets are not labelled. The models can pick up random patterns from the diverse text they were trained on, unlike supervised models, whose data is carefully labelled and verified.

In this context, it is important to understand how GenAI models work: they are primarily probabilistic systems that predict the next token or tokens. They do not reason their way to the next token; they simply predict the most likely next token or word.
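To make that concrete, here is a toy sketch of next-token prediction. It assumes a made-up vocabulary and made-up scores (logits) rather than a real model: the scores are turned into probabilities with a softmax, and the next token is either the most probable one (greedy decoding) or a random draw weighted by those probabilities. Nothing in the process checks whether the chosen token is true.

```python
from __future__ import annotations

import math
import random

def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores into a probability distribution over tokens."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def next_token(logits: dict[str, float], greedy: bool = True,
               temperature: float = 1.0, rng: random.Random | None = None) -> str:
    """Pick the next token: the most likely one, or a probability-weighted sample."""
    probs = softmax(logits, temperature)
    if greedy:
        return max(probs, key=probs.get)
    rng = rng or random.Random()
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical scores a model might assign after "The capital of Australia is"
logits = {"Sydney": 2.1, "Canberra": 1.9, "Melbourne": 0.7}
print(next_token(logits))  # "Sydney": plausible, confident, and wrong
```

The model only ranks continuations by likelihood; if the training data makes a wrong continuation slightly more likely, it is emitted with the same fluency as a correct one.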

Missing feedback loop. LLMs don’t have a real-time feedback loop to correct their mistakes or regenerate automatically. Also, the model architecture has a fixed-length context window, so it can only see a finite set of tokens at any point in time.
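A small illustration of what a fixed-length context means in practice. This sketch assumes whitespace-separated words stand in for tokens (real models count subword tokens) and uses plain truncation, keeping only the most recent tokens; some applications summarize older turns instead. The function name `fit_context` is hypothetical.

```python
def fit_context(tokens: list[str], max_tokens: int) -> list[str]:
    """Keep only the most recent tokens that fit in a fixed-length context window."""
    if max_tokens <= 0:
        return []
    return tokens[-max_tokens:]

conversation = "my name is Priya . what is the weather ? what is my name ?".split()
window = fit_context(conversation, max_tokens=8)
print(window)
# The name has fallen out of the window, so the model can only guess it.
```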

What could be some of the effective strategies against hallucinations?

While there is no easy way to guarantee that LLMs will never hallucinate, you can adopt effective techniques that reduce hallucinations to a large extent.

Domain-specific knowledge base. Limit the content to a particular domain related to an industry or a knowledge space. Most enterprise implementations work this way, and there is very little need to build something closer to ChatGPT or Bard that can answer questions on any topic on the planet. Keeping it domain-specific also reduces the chances of hallucination, because the content can be carefully refined.

Use of RAG (Retrieval Augmented Generation) models. This is a very common technique in enterprise implementations of GenAI. At purpleSlate we use it for all our use cases, starting with a knowledge base sourced from PDFs, websites, SharePoint, wikis or other documents. You basically chunk the content, create vectors for the chunks, and pass the relevant ones to a selected LLM to generate the response.

In addition, we also follow a weighted approach to help the model pick topics of most relevance in the response generation process.
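The chunk, vectorize, retrieve and prompt steps can be sketched as follows. This is a minimal illustration under stated assumptions, not purpleSlate’s implementation: word-count vectors stand in for a real embedding model, chunks are fixed-size word windows, `topic_weights` is a hypothetical stand-in for the weighted approach (whose details aren’t described here), and the final prompt is returned as a string instead of being sent to an LLM.

```python
import math
import re
from collections import Counter

def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def vectorize(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[tuple[str, str]],
             topic_weights: dict[str, float], k: int = 2) -> list[str]:
    """Rank (topic, chunk) pairs by similarity times a per-topic weight; keep the top k."""
    q = vectorize(query)
    scored = [(cosine(q, vectorize(text)) * topic_weights.get(topic, 1.0), text)
              for topic, text in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for score, text in scored[:k] if score > 0]

def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the grounded prompt that would be passed to the selected LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

kb = [("hr", c) for c in chunk("Employees accrue 1.5 days of paid leave per month.")]
kb += [("it", c) for c in chunk("Reset your VPN password from the self-service portal.")]
question = "How many days of paid leave do employees get?"
context = retrieve(question, kb, topic_weights={"hr": 1.2, "it": 1.0})
print(build_prompt(question, context))
```

Because the LLM only sees the retrieved chunks, an irrelevant chunk (here the VPN note) never reaches it, which is the main way RAG narrows the room for invention.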

Pair them with humans. Always. As a principle, AI, and GenAI more specifically, is here to augment human capabilities, improve productivity and provide efficiency gains. In scenarios where the AI response is customer- or business-critical, have a human validate or enhance the response.

While there are several practical ways to mitigate, and almost completely remove, hallucinations if you are working in an enterprise context, the most profound point could be this:

Unlike humans, who can show the much-desired trait of humility, GenAI models are not built to say ‘I don’t know’. Sometimes you wish it were as simple as that. Instead, they produce the most likely response based on their training data, even when that response may be factually incorrect.
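An application can add the ‘I don’t know’ behavior around the model. A minimal sketch, assuming the app already has a relevance score for each supporting passage (for example, similarity scores from a retrieval step) and using a hypothetical `generate` callable in place of a real LLM call: when the best evidence falls below a threshold, the app declines instead of letting the model guess. The 0.35 threshold is an arbitrary placeholder to tune on your own data.

```python
from typing import Callable

def answer_or_abstain(question: str, passages: list[tuple[str, float]],
                      generate: Callable[[str, str], str], threshold: float = 0.35) -> str:
    """Return the model's answer only when the best supporting passage is relevant enough."""
    if not passages:
        return "I don't know."
    best_text, best_score = max(passages, key=lambda p: p[1])
    if best_score < threshold:
        # Not enough evidence: decline rather than produce the 'most likely' guess.
        return "I don't know."
    return generate(question, best_text)

def fake_llm(question: str, passage: str) -> str:
    """Hypothetical stand-in for an LLM call that answers from the supplied passage."""
    return f"Based on the source: {passage}"

policy = [("Refunds are accepted within 30 days.", 0.82)]
print(answer_or_abstain("What is our refund window?", policy, fake_llm))
print(answer_or_abstain("Who won the 1962 regional chess final?",
                        [("Refunds are accepted within 30 days.", 0.05)], fake_llm))
```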

Bottom line: the opportunities with GenAI are real. And given how GenAI is making its presence felt across diverse fields, it is all the more important to understand the possible downsides.

Knowing that GenAI models can hallucinate, understanding why they do, and applying reasonable mitigations are key to success. Knowing the limitations and having sufficient guardrails is paramount to improving the trust and reliability of GenAI results.

This blog was originally published at: https://www.purpleslate.com/hallucinating-llms-how-to-prevent-them/

2 notes


Text

would produce more senseless poetry like the artificial intelligence so hated by the world but seeming as the words are nonsense in your ears he, the poet, should find it more insightful to violently slam his head against the keyboard

#random thoughts#me? him? all the same all the same#repetition repetition does it help? does it do something? certainly not#unwell? unwell? unwell? unwell? unwell? it's catharsis#neither old enough nor intelligent enough to know what any words mean. my intelligence is artificial#come to think of it. i am the words. why all can any of you tolerate this thing when he is so painfully robotic#emanating human behaviorisms despite paling in comparison to the beauty of one. i've questioned my reality more than once#i think it is safe to say that i do not exist. this is not a problem for any of you#a mass hallucination as it were. i'd like to wake up now#i am asking you to let me wake up now. i'd like to wake up now. shake my senses so i can become human#not this not this. what is this?? what is he?? what is? he? not him#no. not him. himself. himself/myself#a means to an end? whatever does the phrase mean#vivisection of a butterfly

2 notes


Text

Citations: Can Anthropic’s New Feature Solve AI’s Trust Problem?

New Post has been published on https://thedigitalinsider.com/citations-can-anthropics-new-feature-solve-ais-trust-problem/


AI verification has been a serious issue for a while now. While large language models (LLMs) have advanced at an incredible pace, the challenge of proving their accuracy has remained unsolved.

Anthropic is trying to solve this problem, and out of all of the big AI companies, I think they have the best shot.

The company has released Citations, a new API feature for its Claude models that changes how the AI systems verify their responses. This tech automatically breaks down source documents into digestible chunks and links every AI-generated statement back to its original source – similar to how academic papers cite their references.

Citations is attempting to solve one of AI’s most persistent challenges: proving that generated content is accurate and trustworthy. Rather than requiring complex prompt engineering or manual verification, the system automatically processes documents and provides sentence-level source verification for every claim it makes.

Anthropic reports promising results: a 15% improvement in citation accuracy compared to traditional methods.

Why This Matters Right Now

AI trust has become the critical barrier to enterprise adoption (as well as individual adoption). As organizations move beyond experimental AI use into core operations, the inability to verify AI outputs efficiently has created a significant bottleneck.

The current verification systems reveal a clear problem: organizations are forced to choose between speed and accuracy. Manual verification processes do not scale, while unverified AI outputs carry too much risk. This challenge is particularly acute in regulated industries where accuracy is not just preferred – it is required.

Citations arrives at a crucial moment in AI development. As language models become more sophisticated, the need for built-in verification has grown proportionally. We need to build systems that can be deployed confidently in professional environments where accuracy is non-negotiable.

Breaking Down the Technical Architecture

The magic of Citations lies in its document processing approach. Unlike traditional AI systems, which often treat documents as simple blocks of text, Citations breaks source materials down into what Anthropic calls “chunks.” These can be individual sentences or user-defined sections, which creates a granular foundation for verification.
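Anthropic has not published its exact chunking algorithm, so the following only illustrates the idea. It assumes a naive regular-expression sentence splitter (which would, for example, wrongly split “1.5”): each sentence becomes a chunk that records its character offsets, so any claim can point back to an exact span of the source. Offsets are used because plain-text citations are reported as character ranges; the `Chunk` class and `sentence_chunks` function are hypothetical names.

```python
import re
from dataclasses import dataclass

@dataclass
class Chunk:
    index: int  # position of the chunk in the document
    text: str   # the sentence itself
    start: int  # character offset where the sentence begins in the source
    end: int    # character offset just past the sentence's last character

def sentence_chunks(source: str) -> list[Chunk]:
    """Naively split text into sentence chunks, keeping each one's position in the source."""
    chunks: list[Chunk] = []
    for match in re.finditer(r"[^.!?]+[.!?]*", source):
        raw = match.group()
        text = raw.strip()
        if not text:
            continue  # skip whitespace between sentences
        start = match.start() + (len(raw) - len(raw.lstrip()))
        chunks.append(Chunk(len(chunks), text, start, start + len(text)))
    return chunks

doc = "The grass is green. The sky is blue."
for c in sentence_chunks(doc):
    assert doc[c.start:c.end] == c.text  # every chunk maps back to an exact span
    print(c.index, (c.start, c.end), c.text)
```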

Here is the technical breakdown:

Document Processing & Handling

Citations processes documents differently depending on their format. For plain-text documents, there is essentially no limit beyond the standard 200,000-token cap per request, which covers your context, prompts and the documents themselves.

PDF handling is more complex. The system processes PDFs visually, not just as text, leading to some key constraints:

32MB file size limit

Maximum 100 pages per document

Each page consumes 1,500-3,000 tokens

Token Management

Now turning to the practical side of these limits. When you are working with Citations, you need to consider your token budget carefully. Here is how it breaks down:

For standard text:

Full request limit: 200,000 tokens

Includes: Context + prompts + documents

No separate charge for citation outputs

For PDFs:

Higher token consumption per page

Visual processing overhead

More complex token calculation needed
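The PDF limits above can be turned into a quick pre-flight check. A rough sketch, assuming the 1,500-3,000 tokens-per-page range and the 200,000-token request cap quoted above; actual counts depend on page content, so the result is only an estimate. The arithmetic is worth noticing: at the upper bound, a maximum-size 100-page PDF would need about 300,000 tokens, well over the request cap.

```python
REQUEST_CAP = 200_000                 # total tokens per request, per the figures above
PDF_TOKENS_PER_PAGE = (1_500, 3_000)  # low and high estimates per PDF page
MAX_PDF_PAGES = 100

def pdf_token_range(pages: int) -> tuple[int, int]:
    """Estimated (low, high) token cost of a PDF with the given page count."""
    if not 1 <= pages <= MAX_PDF_PAGES:
        raise ValueError(f"PDFs must have between 1 and {MAX_PDF_PAGES} pages")
    low, high = PDF_TOKENS_PER_PAGE
    return pages * low, pages * high

def fits_budget(pdf_pages: list[int], prompt_tokens: int) -> bool:
    """Conservatively check that the PDFs plus the prompt stay under the request cap."""
    worst_case = prompt_tokens + sum(pdf_token_range(p)[1] for p in pdf_pages)
    return worst_case <= REQUEST_CAP

print(pdf_token_range(100))                        # (150000, 300000)
print(fits_budget([40, 20], prompt_tokens=2_000))  # True: 2,000 + 120,000 + 60,000 = 182,000
```

Using the high estimate errs on the side of rejecting a request that might have fit, which is usually cheaper than a failed call.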

Citations vs RAG: Key Differences

Citations is not a Retrieval Augmented Generation (RAG) system – and this distinction matters. While RAG systems focus on finding relevant information from a knowledge base, Citations works on information you have already selected.

Think of it this way: RAG decides what information to use, while Citations ensures that information is used accurately. This means:

RAG: Handles information retrieval

Citations: Manages information verification

Combined potential: Both systems can work together

This architecture choice means Citations excels at accuracy within provided contexts, while leaving retrieval strategies to complementary systems.
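A sketch of how the two can work together, assuming a retrieval step (not shown; any RAG retriever would do) has already picked the relevant passages. Each passage is wrapped as a plain-text document block with citations enabled, following the request shape in Anthropic’s Citations documentation at the time of writing; check the current API reference before relying on the exact field names. The function only builds the `messages` payload, so it runs without network access or an API key, and the passages and titles are made up.

```python
import json

def citation_messages(question: str, passages: list[tuple[str, str]]) -> list[dict]:
    """Wrap retrieved (title, text) passages as citation-enabled documents for the Messages API."""
    documents = [
        {
            "type": "document",
            "source": {"type": "text", "media_type": "text/plain", "data": text},
            "title": title,
            "citations": {"enabled": True},
        }
        for title, text in passages
    ]
    # Place the documents before the question in the same user turn.
    return [{"role": "user", "content": documents + [{"type": "text", "text": question}]}]

retrieved = [  # hypothetical output of a RAG retriever
    ("Leave policy", "Employees accrue 1.5 days of paid leave per month."),
    ("Holiday calendar", "The office is closed on public holidays."),
]
messages = citation_messages("How much paid leave do employees accrue?", retrieved)
print(json.dumps(messages, indent=2))
# With the official Python SDK, this list would be passed as the `messages` argument
# of client.messages.create(...), together with a model name and max_tokens.
```

The division of labor mirrors the text: the retriever decides which documents go in, and Citations ties each statement in the answer back to spans of those documents.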

Integration Pathways & Performance

The setup is straightforward: Citations runs through Anthropic’s standard API, which means if you are already using Claude, you are halfway there. The system integrates directly with the Messages API, eliminating the need for separate file storage or complex infrastructure changes.

The pricing structure follows Anthropic’s token-based model with a key advantage: while you pay for input tokens from source documents, there is no extra charge for the citation outputs themselves. This creates a predictable cost structure that scales with usage.

Performance metrics tell a compelling story:

15% improvement in overall citation accuracy

Complete elimination of source hallucinations in one reported customer deployment (from 10% occurrence to zero)

Sentence-level verification for every claim

Organizations (and individuals) using unverified AI systems are finding themselves at a disadvantage, especially in regulated industries or high-stakes environments where accuracy is crucial.

Looking ahead, we are likely to see:

Integration of Citations-like features becoming standard

Evolution of verification systems beyond text to other media

Development of industry-specific verification standards

The entire industry really needs to rethink AI trustworthiness and verification. Users need to get to a point where they can verify every claim with ease.

#adoption#ai#AI development#AI systems#anthropic#API#approach#architecture#Artificial Intelligence#barrier#challenge#claude#Companies#content#data#development#engineering#enterprise#experimental#Features#file storage#focus#Foundation#Full#hallucinations#how#Industries#Industry

0 notes

Text

André finds an AI-generated mindscrew

In this edited screenshot, André has just found a link that provides mostly inaccurate information about the short film in which he debuted. The link claims that André and Wally B. see each other through rose-coloured glasses (i.e. their relationship is a bed of roses) and that the setting is similar to the one used in the Silly Symphonies short film Flowers and Trees. That's a pack of lies! In fact, André is shown to be hostile towards Wally B., and there is no sentient or anthropomorphic flora in it; not even the forest has magical properties.

It seems that artificial intelligence was used lazily here, leading to hallucinations such as this one. And, yes, this is AI slop, which is generally considered a form of prioritizing quantity over quality.

Anyway, the moral is: please do not over-rely on generative artificial intelligence, or else your creativity will decrease significantly.

André > The Adventures of André & Wally B. © Pixar (formerly known as Lucasfilm Computer Graphics) and Lucasfilm (both of them currently owned by The Walt Disney Company)

#andré and wally b#fan art#fanart#digital art#the adventures of andré and wally b#andré & wally b#the adventures of andré & wally b#the adventures of andre and wally b#the adventures of andre & wally b#ai hallucinations#ai slop#large language model#cognitive dissonance#lazy use of artificial intelligence#andre and wally b#i was somewhat bored and i did this#pixar fanart

1 note


Text

Perfection Raises Suspicions, Not Hopes

In just a short period of time, specifically over the last 2 years, Artificial Intelligence has given us a glimpse of the future of commerce, business and the automation of everyday tasks. Much of this revolution has been born of Generative AI tools such as ChatGPT and Grok, but while this technology saves us time in generating information, AI still has a lot of racetrack left before it fulfills its promise. The Hollywood vision of AI, as we have witnessed in movies with accurate prediction models and self-thinking robots, continues to elude us, but that vision is approaching at a steady pace. To get us to this reality, the technology needs to be continually updated, and investors have responded in kind, spending hundreds of millions on both applications and hardware. Alongside the front-facing AI tools comes the huge power capacity needed to fuel this new infrastructure, which also requires beefier processors and, of course, laser-fast raw internet speeds. The latter is a current issue for autonomous vehicles, which need fast, uninterrupted connectivity every millisecond, a problem that enterprises such as Starlink are looking to remedy.

Looking at the proliferation of AI in today’s Sales and Marketing efforts, much of the current buzz is around automating customer interactions, such as contextual responses to emails, text messages and chatbots. While in the coming years we may get to the point of trusting the ‘engine’ unconditionally to strike the correct sentiment and tone in our responses to customers, generative content in its current state should be taken with a grain of salt. This is because AI can ‘hallucinate’ its responses to the user, which may or may not be accurate.
This happens because the Large Language Models (LLMs) that power the engine have been trained on existing sources (such as websites, books, literature and music) and make ‘educated guesses’ based on that training. For the most part, the interpretations are fairly accurate, but even at a 99.9% accuracy level, do you want to risk a 0.1% chance of an incorrect response reaching a customer?
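The arithmetic behind that question is worth spelling out. A small sketch, assuming each response is wrong independently with probability 0.001 (99.9% accuracy) and a hypothetical volume of 1,000 customer messages: the expected number of wrong answers is 1,000 x 0.001 = 1, and the chance that at least one customer gets a wrong answer is 1 - 0.999^1000, roughly 63%.

```python
def error_exposure(accuracy: float, responses: int) -> tuple[float, float]:
    """Expected wrong responses, and the probability of at least one, assuming independent errors."""
    error_rate = 1.0 - accuracy
    expected_errors = responses * error_rate
    p_at_least_one = 1.0 - accuracy ** responses
    return expected_errors, p_at_least_one

expected, p_any = error_exposure(0.999, 1_000)
print(f"expected wrong answers: {expected:.1f}, chance of at least one: {p_any:.0%}")
# expected wrong answers: 1.0, chance of at least one: 63%
```

At scale, a small per-response error rate turns into a near-certainty that some customer sees a wrong answer, which is the practical case for review and grounding.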

You may have seen such glitches in AI-generated video clips or in repetitive responses from a pizza delivery chatbot. This is Artificial Intelligence 1.0, and like the first version of the web, it will get better with time. So expecting accuracy and precision at this stage would be like expecting a full-scale e-commerce site in 1995, when websites were plain HTML. While some may criticize the inadequacies of AI, as with any new technology, those who lean in early and go through the pain will realize the greatest benefits later on. Microsoft, Google, Salesforce and others all build some form of AI into their platforms, whether in search or in their CRM, and the best way to become familiar with it is to adopt the technology and be a student of it, which will give you ideas about how it can benefit your business and/or your customer base. Just remember: like any new mechanism of progress (especially in the realm of the internet), the technology will keep improving based on need and inventiveness, and given how quickly AI has gained steam, ignoring it could prove to be folly just a few years from now.

__________________________________________________________________________________________ Title image by Fuel Cells and Hydrogen Observatory | LLM segment by Flyte.org

#grok#internet#hollywood#prediction#llm#artificial intelligence#ai#microsoft#salesforce#google#generative#chatgpt#model#infrastructure#starlink#crm#marketing#chatbot#hallucinate#ecommerce

0 notes

Text

#artificial intelligence risks#artificial intelligence benefits#666#end time prophecy#AI hallucinations#control buying and selling#Revelation 13#bible prophecy#news & analysis#news & prophecy#bible prophecy interview

0 notes

Text

"V for Vendetta" - Alan Moore and David Lloyd

#book quote#v for vendetta#alan moore#david lloyd#i love you#computer#artificial intelligence#fluke#circuits#tricks#going mad#hallucinations

1 note

·

View note

Text

Instead of getting an actual expert on AI to write this book, they just got someone who wants to suck AI's dick 🤦♀️

#help she thinks artificial intelligence is actually intelligent#also no mention of hallucinations or copyright infringement#the only mention of bias is a brief mention about a Black man who was wrongfully arrested and the 'cops were sad' the computer was wrong#she actually included a line about how nice it will be when AI does the mundane tasks and humans can focus on creative endeavors#I'm gonna scream#tbf this is a raw ms. no editorial eyes have seen it. the editor who acquired it quit so we'll see what the new guy does with it#it's also hella late so i wouldn't be surprised if it moved and/or was cancelled entirely#given it's going to be defunct by publication time#also fully insane: she's written several books for us about climate change yet is convinced ai is the answer to climate change??????#hwaelweg's work life

2 notes

·

View notes

Video

A Real Hallucination by Davivid Rose Via Flickr: According to an image generated by Adobe's AI, the above image is what illegal LSD looks like in a laboratory right after it has been made... Please click here to read my "autobiography": thewordsofjdyf333.blogspot.com/ My telephone number is: 510-260-9695

0 notes

Text

There is the right way, the wrong way, and the Google AI Overview way that will probably kill or maim you.

3 notes

·

View notes

Text

ai hallucinations

#https://www.opensesame.dev/#ai hallucinations#artificial intelligence problem#ai models#ai developer#ai detection tool

1 note

·

View note

Text

What are Grounding and Hallucinations in AI? - Bionic

This blog was originally published at:

What are Grounding and Hallucinations in AI? — Bionic

The evolution of AI and its efficient integration with businesses worldwide have made AI the need of the hour. However, the problem of AI hallucination still plagues generative AI applications and traditional AI models. As a result, AI organizations are constantly pursuing better AI grounding techniques to minimize instances of AI hallucination.

To understand AI hallucinations, imagine that one day your AI system suggests glue as a way to make cheese stick to pizza better. Or your AI fraud-detection system suddenly labels a legitimate transaction as fraudulent. Weird, right? This is called AI hallucination.

AI Hallucination occurs when AI systems generate outputs that are not based on the input or on real-world information. These false or fabricated facts can undermine the reliability of AI applications and seriously harm a business’s credibility.

On the other hand, Grounding AI keeps outputs accurate and trustworthy. You can define Grounding AI as the process of rooting an AI system’s responses in relevant, real-world data.

In this detailed blog, we will explore what grounding and hallucinations in AI are, the complexities of AI systems, and how techniques like AI grounding can help minimize hallucinations, ensuring reliability and accuracy.

What is AI Hallucination and how does it occur?

AI Hallucination refers to the instances when AI outputs are not based on the input data or real-world information. It can manifest as fabricated facts, incorrect details, or nonsensical information.

It is especially common in Natural Language Processing (NLP) models, such as Large Language Models, and in image generation models. In short, AI hallucination occurs when generative AI models produce data or output that looks plausible but lacks a factual basis, which leads to incorrect results.

(Image Courtesy: https://www.nytimes.com/2023/05/01/business/ai-chatbots-hallucination.html)

What causes AI Hallucination?

When a user gives a prompt to an AI assistant, the assistant’s goal is to understand the context of the prompt and generate a plausible result. However, if the AI starts blurting out fabricated information, that is a case of AI hallucination, and it suggests that the model was not trained on that particular context and lacks background information. Common causes include:

Overfitting: Overfitting refers to training an AI model too closely on its training data, making it overly specialized. This narrows the model’s range of knowledge and context, so it doesn’t generate desirable output for new, unseen data. Overfitting can therefore cause AI hallucinations when the model is faced with user input outside its training data.

Biased Training Data: AI systems are only as good as the data they are trained on. If this training data contains biases or prejudiced inaccuracies, the AI may reflect those biases in its output, leading to hallucinations and incorrect information.

Unspecific or Suggestive Prompts: Sometimes a prompt lacks clear constraints and specific details. The AI then has to make up its own interpretation of the input based on its training data, which increases the likelihood of fabricated information.

Asking about Fictional Context: Prompts about fictional products, people or situations are likely to trigger hallucinations, probably because there are no reference facts for the AI to draw information from.

Incomplete Training Data: When training data does not fully cover the situations an AI might find itself in, the system is likely to produce wrong outputs. Hallucinations result as the system tries to make up for the missing data.

Types of AI Hallucinations

AI hallucinations can be broadly categorized into three types:

Visual Hallucinations: These occur in image recognition and image generation systems. The AI system produces erroneous design outputs or graphical inaccuracies. For instance, it may produce an image of an object that does not exist, or fail to recognize objects that are present in an image.

Pictorial Hallucinations: They are somewhat similar to visual hallucinations, but they refer to the erroneous output of visual information. This could include graphical data like simple drawings, diagrams, infographics, etc.

Written Hallucinations: In NLP models, hallucinations are defined as text that contains information not supported by the input data. These can be false facts, extra details, or unsupported statements. They can occur in popular chatbots, auto-generated reports, or any AI that generates text.

Real-Life Examples of AI Hallucination

Below are some real-life examples of AI Hallucinations that made waves:

Glue on Pizza: In a prominent AI hallucination, Google’s AI Overview suggested adding glue to pizza to keep the cheese from sliding off. This weird suggestion illustrated the system’s potential to produce harmful and illogical advice. Misleading users in this way can have serious safety implications, which is why close monitoring of AI and validation of facts are important.

Back Door of Camera: Just about a month ago, Google’s Gemini AI suggested that users “open the back door” of a camera as a photography tip, listing it under “Things you can try.” This illustrates the harm of irresponsible directions coming from AI systems: such errors can lead users to incorrect conclusions and could potentially damage their equipment.

Muslim Former President Misinformation: Google’s AI search overview falsely claimed that former President Barack Obama is a Muslim. In another error in Google search results, the AI stated that none of Africa’s 54 recognized nations begins with the letter ‘K’, forgetting Kenya. These incidents demonstrate the danger of machine learning systems spreading wrong ideas and highlight gaps in AI systems’ basic factual knowledge.

False Implications on Whistleblower: ChatGPT wrongly implicated Brian Hood, an Australian politician and current mayor of Hepburn Shire, in a bribery scandal. The AI falsely identified Hood as one of the people involved in the case, intimating that he had bribed authorities and served a jail term for it. Hood, however, was the whistleblower in that case. AI hallucinations like this can lead to defamation claims.

These kinds of hallucinations can have very grave social and ethical consequences.

Why are AI Hallucinations not good for your business?

Apart from just being potentially harmful to your reputation, AI hallucinations can have detrimental effects on businesses including:

Eroded Trust: Consumers and clients will not rely on an AI system if it constantly comes up with wrong or fake information. This erodes user confidence and reduces their use of, and interaction with, the deployed AI. Once trust in your business is breached, it becomes very difficult to maintain customer retention and brand loyalty.

Operational Risks: Erroneous information from AI systems can contribute to wrong decisions, subpar performance, and massive losses. For instance, if applied in the supply chain setting, an AI hallucination could lead to inaccurate inventory forecasting. This, in turn, leads to costs associated with either overstock or stock out. In addition, AI can give poor recommendations that interfere with organized workflow. This could require someone to fix what the AI got wrong.

Legal and Ethical Concerns: Legal risks arise when an AI system’s hallucinations have a negative impact. For example, if a financial AI system provides erroneous investment recommendations, it could cause significant financial losses and thus lead to legal proceedings. Ethical issues arise especially when the outputs generated by an AI system are prejudiced or unfair in some way.

Reputational Damage: AI hallucinations are particularly dangerous because they can damage a firm’s reputation in the market. Public opinion can easily turn negative, as seen on social media and in leading news channels. Such reputational damage can lead to rejection by potential clients and partners, making it significantly harder for the business to attract and sustain opportunities.

Understanding AI Grounding

We can define Grounding AI as the process of anchoring AI systems in real data and facts. This involves aligning the AI’s responses and behavior with factual data and information. Grounding AI is particularly helpful in Large Language Models. It helps minimize or eliminate instances of hallucination, because the information fed to the AI is based on real data and facts.

Bridging Abstract AI Concepts and Practical Outcomes

Grounding AI can be seen as the connection between the theoretical, and at times highly abstract, frameworks of AI and their real-world implementations.

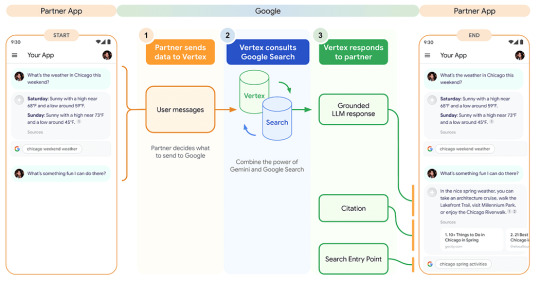

(Image Courtesy: https://cloud.google.com/vertex-ai/generative-ai/docs/grounding/overview)

The Importance of Grounding AI

Grounding AI is essential for several reasons:

Accuracy and Reliability: AI systems that are grounded in real-time data feeds are likely to generate more accurate and reliable results. This can especially be helpful in business strategy, healthcare delivery, finance, and many other fields.

Trust and Acceptance: When the AI systems are grounded in real-life data, consumers are more inclined to accept the results of the systems. This makes the integration process easier.

Ethical and Legal Compliance: One reason grounding is important is that it reduces cases where AI is used to propagate fake news. Propagating fake news causes harm, raising ethical and legal concerns.

The Best Practices for Grounding AI

Various best practices can be employed to ground AI systems effectively:

Data Augmentation: Improving the training datasets to incorporate more data that are similar to the inputs the model is expected to process.

Cross-Validation: Verifying the results generated by AI systems against one or more datasets to check for coherence and correctness.

Domain Expertise Integration: Engagement of experts from the particular domain for the development of the AI system as well as to ensure the correctness of the output.

Feedback Loops: Incorporation of feedback and AI reinforcement learning process coming from the evaluation parameters and feedback received from users.

Implement Rigorous Validation Processes: Using cross-validation techniques and other reliable validation procedures to ensure the validity of the AI model.

Utilize Human-in-the-Loop Approaches: Introducing humans in the loop that check and review outputs produced by the AI tool, especially in sensitive matters.
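The k-fold form of cross-validation mentioned in the validation practices above can be sketched in plain Python. Each fold is held out once while the model is fit on the remaining data, and the validation scores are averaged. The toy `mean_model` and `mean_abs_error` functions are illustrative assumptions, not part of any particular system. In practice, libraries such as scikit-learn provide `KFold` and `cross_val_score` for this. This kind of check measures how well a model generalizes to unseen data. It complements checking individual outputs against facts and does not replace it.

```python
from typing import List, Tuple

def k_fold_indices(n_samples: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Split indices 0..n_samples-1 into k contiguous folds.

    Returns one (train_indices, validation_indices) pair per fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    splits = []
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder
        stop = start + size
        validation = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_samples))
        splits.append((train, validation))
        start = stop
    return splits

def cross_validate(xs, ys, k, fit, score) -> float:
    """Average the validation score of `fit` over k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores)

def mean_model(xs, ys):
    """Toy model: always predicts the mean training target."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def mean_abs_error(model, xs, ys) -> float:
    """Mean absolute difference between predictions and targets."""
    return sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(ys)
```

The folds here are contiguous for readability. Real datasets are usually shuffled first so that each fold is representative.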

Bionic uses a human-in-the-loop approach to get your content, including its claims and facts, validated. Request a demo now!

Benefits of Grounding AI

Grounding AI systems offers several significant benefits:

Increased Accuracy: Checking AI output against real data increases the accuracy of that output.

Enhanced Trust: Grounded AI systems foster more trust from users and stakeholders because they provide more accurate results.

Reduced Bias: Training a grounded AI model on diverse data can reduce biases and help create more ethical AI systems.

Improved Decision-Making: Businesses can significantly improve their decision-making by relying on grounded AI outputs.

Greater Accountability: Implementing grounded AI systems allows better monitoring and verification of outputs, thereby increasing accountability.

Ethical Compliance: Ensuring that AI reflects actual data about the world helps maintain ethical standards and prevent hallucinations.

The Interplay Between Grounding AI and AI Hallucinations

Grounding and hallucination are inversely related: the more firmly an AI system is grounded, the less it tends to hallucinate, because grounding filters out irrelevant or inaccurate content. In this way, grounding helps keep hallucinations out of AI-generated content. Conversely, a lack of grounding can lead to hallucinations, because the outputs are not aligned with real-world data.
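To make the filtering idea concrete, here is a minimal Python sketch of a post-generation check. Each sentence of an answer is scored by the share of its content words that appear in a trusted source, and sentences below a threshold are flagged instead of being shown. The stopword list, the word-overlap score, and the 0.6 threshold are all assumptions chosen for illustration. Word overlap is a crude proxy for factual support, and real systems typically rely on stronger methods such as entailment models.

```python
import re
from typing import List, Set, Tuple

# Small illustrative stopword list; these words carry no checkable facts.
STOPWORDS = {"a", "an", "and", "are", "at", "in", "is", "it", "of", "on",
             "or", "the", "to", "was", "were"}

def content_words(text: str) -> Set[str]:
    """Lowercased words of the text, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def support(sentence: str, sources: List[str]) -> float:
    """Share of the sentence's content words found in its best-matching source."""
    words = content_words(sentence)
    if not words:
        return 1.0  # no content words to check
    return max((len(words & content_words(src)) / len(words) for src in sources),
               default=0.0)  # no sources means no support

def filter_unsupported(answer: str, sources: List[str],
                       threshold: float = 0.6) -> Tuple[List[str], List[str]]:
    """Split an answer into (kept, flagged) sentences by source support."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    kept, flagged = [], []
    for sentence in sentences:
        if support(sentence, sources) >= threshold:
            kept.append(sentence)
        else:
            flagged.append(sentence)
    return kept, flagged
```

Flagged sentences can be dropped, rewritten, or sent to a human reviewer, depending on how sensitive the application is.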

Challenges in Achieving Effective AI Grounding

Achieving effective AI grounding to prevent hallucinations in AI systems presents several challenges:

Complexity of Real-World Data: Real-world data is often disorganized, poorly structured, and inconsistent, which makes it difficult to acquire and integrate into AI systems comprehensively. Grounding AI in such information is therefore challenging.

Dynamic Environments: AI systems often operate in unpredictable and volatile environments. Keeping generative AI models grounded in these scenarios requires continuous reinforcement learning and real-time data updates, which pose technical hurdles and high costs.

Scalability: Grounding large, complex AI systems is challenging, and the difficulty grows with scale. Monitoring and maintaining grounding across different models and applications demands significant effort.
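Dynamic environments call for grounding data that is kept up to date and that expires. The sketch below assumes a hypothetical `FactStore` that keeps only the newest observation per key and returns only facts younger than a chosen maximum age, so stale information is not fed to the model. The class is illustrative rather than a real library API. The keys, texts, and one-hour window in the test are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List

@dataclass
class Fact:
    text: str
    observed_at: datetime  # when the value was read from its live source

class FactStore:
    """Hypothetical store that keeps the newest value per key."""

    def __init__(self) -> None:
        self._facts: Dict[str, Fact] = {}

    def update(self, key: str, text: str, observed_at: datetime) -> None:
        """Record an observation, ignoring anything older than what is held."""
        current = self._facts.get(key)
        if current is None or observed_at > current.observed_at:
            self._facts[key] = Fact(text, observed_at)

    def fresh(self, max_age: timedelta, now: datetime) -> List[str]:
        """Return only facts observed within max_age of now."""
        return [f.text for f in self._facts.values()
                if now - f.observed_at <= max_age]
```

Passing `now` explicitly keeps the freshness check deterministic and easy to test. A live system would pass `datetime.now(timezone.utc)`.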

The Future of AI Grounding and AI Hallucinations

The future of grounding and hallucinations in AI looks promising, with several key trends and breakthroughs anticipated:

Advancements in Data Quality and Integration: Advancements in data collection, cleaning, and integration will improve AI grounding. Better data acquisition will allow AI models to be trained on diverse and sufficiently large datasets, minimizing hallucinations.

Enhanced Real-Time Data Processing: AI systems will have more real-time data feeds from various sources, grounding them in current and accurate data. This will enable AI models to adapt to changing conditions and minimize hallucinated outputs.

Human-AI Collaboration: Augmented intelligence, in which humans validate AI-generated outputs, will become more prominent. Systems like Bionic AI will combine human judgment with AI to obtain accurate facts.
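The article does not describe how Bionic's human-in-the-loop workflow is implemented, so the following is only a generic sketch of the idea, not Bionic's method. It assumes each AI draft carries a confidence score in [0, 1] and a flag marking sensitive topics. Drafts that are sensitive or below a hypothetical confidence threshold go to a human review queue, where a reviewer can approve them as-is or with corrections.

```python
from dataclasses import dataclass, field, replace
from typing import List, Optional

@dataclass
class Draft:
    text: str
    confidence: float        # assumed model-reported score in [0, 1]
    sensitive: bool = False  # e.g. medical, legal, or financial topics
    reviewed: bool = False   # set once a human has checked the draft

@dataclass
class ReviewRouter:
    threshold: float = 0.8  # hypothetical cut-off below which humans review
    review_queue: List[Draft] = field(default_factory=list)
    published: List[Draft] = field(default_factory=list)

    def route(self, draft: Draft) -> str:
        """Send sensitive or low-confidence drafts to humans, publish the rest."""
        if draft.sensitive or draft.confidence < self.threshold:
            self.review_queue.append(draft)
            return "human_review"
        self.published.append(draft)
        return "auto_approved"

    def approve(self, index: int, corrected_text: Optional[str] = None) -> Draft:
        """A reviewer approves a queued draft, optionally replacing its text."""
        draft = self.review_queue.pop(index)
        text = corrected_text if corrected_text is not None else draft.text
        approved = replace(draft, text=text, reviewed=True)
        self.published.append(approved)
        return approved
```

Routing sensitive drafts to humans regardless of confidence reflects the article's point that human review matters most in sensitive matters, where even a confident model can be wrong.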

Mitigating AI Hallucination with Bionic

Bionic AI is designed to handle multi-level cognitive scenarios, including complex real-world cases, through continuous reinforcement learning and bias reduction. Regularly updated with real-world data and human supervision, Bionic AI guards against overfitting so that it remains as flexible and adaptable to the real world as possible.

Bionic AI combines AI with human input to eliminate contextual misinterpretation. Effective AI grounding techniques and a human-in-the-loop approach supply Bionic AI with specific and relevant information. This seamless integration of AI and human oversight makes Bionic AI a game changer for business outsourcing.

Bionic AI adapts to changing human feedback, which helps keep it free of hallucinations and effective in dynamic environments. By combining AI with human oversight, Bionic promises accurate and relevant results that foster customer satisfaction and trust. This synergy addresses customer concerns about traditional AI and delivers an outstanding customer experience.

Conclusion

With the increasing adoption of AI in businesses, it is crucial to make these systems trustworthy and dependable. Grounding AI systems in real-world data keeps this trust intact. The costs of AI hallucinations can be staggering, with consequences such as incorrect fraud alerts and misdiagnosed health problems, among others. Hallucinations can result from factors such as overfitting, flawed training data, and incomplete training sets.

Understanding grounding and hallucinations in AI can take your business a long way. Mechanisms such as data augmentation, cross-validation, and human feedback help implement effective grounding.

Bionic AI combines artificial intelligence with human oversight to close gaps in bias, overfitting, and contextual accuracy. Bionic AI is your solution for accurate and factual AI outputs, letting you realize the full potential of AI.

Ready to revolutionize your business with AI that’s both intelligent and reliable? Explore how Bionic can transform your operations by combining AI with human expertise. Request a demo now! Take the first step towards a more efficient, trustworthy, and ‘humanly’ AI.
