#api automation framework

From Intent to Execution: How Microsoft is Transforming Large Language Models into Action-Oriented AI

New Post has been published on https://thedigitalinsider.com/from-intent-to-execution-how-microsoft-is-transforming-large-language-models-into-action-oriented-ai/

Large Language Models (LLMs) have changed how we handle natural language processing. They can answer questions, write code, and hold conversations. Yet, they fall short when it comes to real-world tasks. For example, an LLM can guide you through buying a jacket but can’t place the order for you. This gap between thinking and doing is a major limitation. People don’t just need information; they want results.

To bridge this gap, Microsoft is turning LLMs into action-oriented AI agents. By enabling them to plan, decompose tasks, and engage in real-world interactions, they empower LLMs to effectively manage practical tasks. This shift has the potential to redefine what LLMs can do, turning them into tools that automate complex workflows and simplify everyday tasks. Let’s look at what’s needed to make this happen and how Microsoft is approaching the problem.

What LLMs Need to Act

For LLMs to perform tasks in the real world, they need to go beyond understanding text. They must interact with digital and physical environments while adapting to changing conditions. Here are some of the capabilities they need:

Understanding User Intent

To act effectively, LLMs need to understand user requests. Inputs like text or voice commands are often vague or incomplete. The system must fill in the gaps using its knowledge and the context of the request. Multi-step conversations can help refine these intentions, ensuring the AI understands before taking action.

Turning Intentions into Actions

After understanding a task, the LLM must convert it into actionable steps. This might involve clicking buttons, calling APIs, or controlling physical devices. The LLM needs to tailor its actions to the specific task, adapting to the environment and solving challenges as they arise.

Adapting to Changes

Real-world tasks don’t always go as planned. LLMs need to anticipate problems, adjust steps, and find alternatives when issues arise. For instance, if a necessary resource isn’t available, the system should find another way to complete the task. This flexibility ensures the process doesn’t stall when things change.

Specializing in Specific Tasks

While LLMs are designed for general use, specialization makes them more efficient. By focusing on specific tasks, these systems can deliver better results with fewer resources. This is especially important for devices with limited computing power, like smartphones or embedded systems.

By developing these skills, LLMs can move beyond just processing information. They can take meaningful actions, paving the way for AI to integrate seamlessly into everyday workflows.

How Microsoft is Transforming LLMs

Microsoft’s approach to creating action-oriented AI follows a structured process. The key objective is to enable LLMs to understand commands, plan effectively, and take action. Here’s how they’re doing it:

Step 1: Collecting and Preparing Data

In the first phase, they collected data related to their specific use case, the UFO Agent (described below). The data includes user queries, environmental details, and task-specific actions. Two types of data are collected in this phase. First, task-plan data helps LLMs outline the high-level steps required to complete a task. For example, “Change font size in Word” might involve steps like selecting text and adjusting the toolbar settings. Second, task-action data enables LLMs to translate these steps into precise instructions, like clicking specific buttons or using keyboard shortcuts.

This combination gives the model both the big picture and the detailed instructions it needs to perform tasks effectively.
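As a rough illustration of the two kinds of data described above, the sketch below pairs a task-plan record with task-action records. The class names, field names (`task`, `steps`, `control`, `operation`), and example values are assumptions made for illustration; the article does not describe the actual data format Microsoft uses.

```python
from dataclasses import dataclass, field


@dataclass
class TaskPlan:
    """High-level steps for a user request (task-plan data)."""
    task: str
    steps: list[str] = field(default_factory=list)


@dataclass
class TaskAction:
    """One concrete UI operation that realizes a plan step (task-action data)."""
    step: str
    control: str      # hypothetical name of the UI element to act on
    operation: str    # hypothetical operation, e.g. "click" or "select"


plan = TaskPlan(
    task="Change font size in Word",
    steps=["Select the text", "Adjust the font size in the toolbar"],
)

# Each plan step is matched with the precise instruction that carries it out.
actions = [
    TaskAction(step=plan.steps[0], control="Document", operation="select"),
    TaskAction(step=plan.steps[1], control="Font Size", operation="click"),
]

for action in actions:
    print(f"{action.step} -> {action.operation} on '{action.control}'")
```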

Step 2: Training the Model

Once the data is collected, LLMs are refined through multiple training stages. First, they are trained for task planning by teaching them how to break down user requests into actionable steps. Expert-labeled data is then used to teach them how to translate these plans into specific actions. To further enhance their problem-solving capabilities, the LLMs go through a self-boosting exploration process, which lets them tackle unsolved tasks and generate new examples for continuous learning. Finally, reinforcement learning is applied, using feedback from successes and failures to further improve their decision-making.

Step 3: Offline Testing

After training, the model is tested in controlled environments to ensure reliability. Metrics like Task Success Rate (TSR) and Step Success Rate (SSR) are used to measure performance. For example, testing a calendar management agent might involve verifying its ability to schedule meetings and send invitations without errors.
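The two metrics can be sketched in a few lines. The article does not define them, so this assumes the usual reading of the names: a task counts as successful only if every one of its steps succeeded, and the step rate pools all steps across tasks. The sample outcomes are made up.

```python
def task_success_rate(runs):
    """Fraction of tasks in which every step succeeded (assumed definition)."""
    if not runs:
        return 0.0
    return sum(all(steps) for steps in runs) / len(runs)


def step_success_rate(runs):
    """Fraction of individual steps that succeeded, pooled across all tasks."""
    total = sum(len(steps) for steps in runs)
    if total == 0:
        return 0.0
    return sum(sum(steps) for steps in runs) / total


# Each inner list holds the per-step outcomes for one task (made-up data).
runs = [
    [True, True, True],
    [True, False, True],
    [True, True],
]

print(task_success_rate(runs))
print(step_success_rate(runs))
```

Reporting both numbers is useful because an agent can get most steps right and still fail many tasks: a single wrong click is enough to sink an otherwise correct run.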

Step 4: Integration into Real Systems

Once validated, the model is integrated into an agent framework. This allows it to interact with real-world environments, for example by clicking buttons or navigating menus. Tools like UI Automation APIs help the system identify and manipulate user interface elements dynamically.

For example, if tasked with highlighting text in Word, the agent identifies the highlight button, selects the text, and applies formatting. A memory component helps the LLM keep track of past actions, enabling it to adapt to new scenarios.

Step 5: Real-World Testing

The final step is online evaluation. Here, the system is tested in real-world scenarios to ensure it can handle unexpected changes and errors. For example, a customer support bot might guide users through resetting a password while adapting to incorrect inputs or missing information. This testing ensures the AI is robust and ready for everyday use.

A Practical Example: The UFO Agent

To showcase how action-oriented AI works, Microsoft developed the UFO Agent. This system is designed to execute real-world tasks in Windows environments, turning user requests into completed actions.

At its core, the UFO Agent uses an LLM to interpret requests and plan actions. For example, if a user says, “Highlight the word ‘important’ in this document,” the agent interacts with Word to complete the task. It gathers contextual information, like the positions of UI controls, and uses this to plan and execute actions.

The UFO Agent relies on tools like the Windows UI Automation (UIA) API. This API scans applications for control elements, such as buttons or menus. For a task like “Save the document as PDF,” the agent uses the UIA to identify the “File” button, locate the “Save As” option, and execute the necessary steps. By structuring data consistently, the system ensures smooth operation from training to real-world application.
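The idea of scanning an application for control elements and then acting on them can be sketched without the Windows API. The example below is a simplified, in-memory stand-in: the `Control` class, the `find_control` helper, and the control tree are all hypothetical, and none of this is the UIA interface or the UFO Agent's own code.

```python
from dataclasses import dataclass, field


@dataclass
class Control:
    """Simplified stand-in for a UI element exposed by an accessibility API."""
    name: str
    control_type: str
    children: list["Control"] = field(default_factory=list)


def find_control(root, name):
    """Depth-first search for the first control with the given name."""
    if root.name == name:
        return root
    for child in root.children:
        match = find_control(child, name)
        if match is not None:
            return match
    return None


# Hypothetical control tree for a word processor window.
window = Control("Word", "Window", [
    Control("File", "Button", [
        Control("Save As", "MenuItem"),
        Control("Print", "MenuItem"),
    ]),
    Control("Home", "TabItem"),
])

# The plan for "Save the document as PDF" becomes a sequence of lookups.
for target in ["File", "Save As"]:
    control = find_control(window, target)
    print(f"click {control.control_type} '{control.name}'")
```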

Overcoming Challenges

While this is an exciting development, creating action-oriented AI comes with challenges. Scalability is a major issue. Training and deploying these models across diverse tasks requires significant resources. Ensuring safety and reliability is equally important. Models must perform tasks without unintended consequences, especially in sensitive environments. And as these systems interact with private data, maintaining ethical standards around privacy and security is also crucial.

Microsoft’s roadmap focuses on improving efficiency, expanding use cases, and maintaining ethical standards. With these advancements, LLMs could redefine how AI interacts with the world, making them more practical, adaptable, and action-oriented.

The Future of AI

Transforming LLMs into action-oriented agents could be a game-changer. These systems can automate tasks, simplify workflows, and make technology more accessible. Microsoft’s work on action-oriented AI and tools like the UFO Agent is just the beginning. As AI continues to evolve, we can expect smarter, more capable systems that don’t just interact with us—they get jobs done.

#Action-Oriented AI#agent#Agentic AI#agents#ai#AI AGENTS#API#APIs#applications#approach#Artificial Intelligence#automation#bot#bridge#buttons#Calendar#change#code#computing#continuous#data#deploying#details#development#devices#efficiency#Environment#Environmental#evaluation#framework

500 mods? LET'S PRAY WE DON'T CRASH!

Welcome to the blog where I document my Stardew more-mods-than-needed journey.

Give me recommendations for mods to add btw!!!

active mod list, will be updated as we go:

SMAPI - Stardew Modding API

Content Patcher

Stardew Valley Expanded

-stardew valley expanded-

Frontier Farm

Grandpa's Farm

Immersive Farm 2 Remastered

Grampleton Fields

Farm Type Manager (FTM)

CJB Cheats Menu

Generic Mod Config Menu

CJB Item Spawner

NPC Map Locations

Automate

Skull Cavern Elevator

Gift Taste Helper Continued x2

Chests Anywhere

Ridgeside Village

Custom Companions

SpaceCore

Winter Grass

Portraiture

Better Ranching

Bigger Backpack

StardewHack

Canon-Friendly Dialogue Expansion

Gender Neutrality Mod Tokens

Experience Bars

Elle's Seasonal Buildings

Ladder Locator

Miss Coriel's Unique Courtship Response CORE

Elle's New Barn Animals

Hats Won't Mess Up Hair

East Scarp

DaisyNiko's Tilesheets

Destroyable Bushes

Lumisteria Tilesheets - Indoor

Lumisteria Tilesheets - Outdoor

Mapping Extensions and Extra Properties (MEEP)

Better Artisan Good Icons

Happy Birthday

Stardust Core

Happy Birthday English Content Pack

Fast Animations

More Grass

Diverse Stardew Valley - Seasonal Outfits (DSV)

Cross-Mod Compatibility Tokens (CMCT)

Sprites in Detail

PolyamorySweet

Elle's New Coop Animals

Part of the Community

Better Crafting

No More Bowlegs

Show Birthdays

Custom Kissing Mod

Simple Crop Label

Romanceable Rasmodius - SVE Compatible

Mail Framework Mod

Loved Labels

PPJA - Artisan Valley

Artisan Valley

Artisan Valley - CustomCaskMod Add-On

Artisan Valley - Miller Time Add-On

Json Assets

Expanded Preconditions Utility

Producer Framework Mod

Project Populate JsonAssets Content Pack Collection

Event Lookup

Overgrown Flowery Interface

Overgrown Flowery DigSpots

Overgrown Flowery Overlays

Industrial Furniture Set - For CP and CF

Mi's and Magimatica Country Furniture

Custom Furniture

Convenient Inventory

Elle's New Horses

Dynamic Reflections

To-Dew

GMCM Options

DeepWoods

Rustic Country Town Interiors

Elle's Cat Replacements

Wildflower Grass Field

Range Display

Elle's Town Animals

Industrial Kitchen and Interior

PPJA - Fruits and Veggies

Nyapu's Portraits inspired by Dong

Vibrant Pastoral Redrawn

MixedBag's Tilesheets

Pony Weight Loss Program

Zoom Level

Date Night

Date Night Free Love Version

Event Repeater - A useful tool for Content Patcher Modding

Elle's Dog Replacements

The Farmer's Children (LittleNPC)

LittleNPCs

PPJA - More Trees

Project Populate JsonAssets Content Pack Collection

Rustic Country Walls and Floors

Rustic Country Walls and Floors for Custom Walls and Floors

Better Junimos

Hot Spring Farm Cave

Immersive Farm 2 Remastered (SVE) compatible version

Programming object lesson of the day:

A couple days ago, one of the side project apps I run (rpthreadtracker.com) went down for no immediately obvious reason. The issue seems to have ended up being that the backend was running on .NET Core 2.2, which the host was no longer supporting, and I had to do a semi-emergency upgrade of all the code to .NET 6, a pretty major update that required a lot of syntactic changes and other fixes.

This is, of course, an obvious lesson in keeping an eye on when your code is using a library out of date enough not to be well supported anymore. (I have some thoughts on whether .NET Core 2.2 is old enough to have been dumped like this, but nevertheless I knew it was going out of support and could have been more prepared.) But that's all another post.

What really struck me was how valuable it turned out to be that I had already written an integration test suite for this application.

Historically, at basically every job I've worked for and also on most of my side projects, automated testing tends to be the thing most likely to fall by the wayside. When you have 376428648 things you want to do with an application and only a limited number of hours in the day, getting those 376428648 things to work feels very much like the top priority. You test them manually to make sure they work, and think, yeah, I'll get some tests written at some point, if I have time, but this is fine for now.

And to be honest, most of the time it usually is fine! But a robust test suite is one of those things that you don't need... until you suddenly REALLY FUCKING NEED IT.

RPTT is my baby, my longest running side project, the one with the most users, and the one I've put the most work into. So in a fit of side project passion and wanting to Do All The Right Things For Once, I actively wrote a massive amount of tests for it a few years ago. The backend has a full unit test suite that is approaching 100% coverage (which is a dumb metric you shouldn't actually stress about, but again, a post for another day). I also used Postman, an excellently full-featured API client, to write a battery of integration tests which would hit all of the API endpoints in a defined order, storing variables and verifying values as it went to take a mock user all the way through their usage life cycle.

And goddamn was that useful to have now, years later, as I had to fix a metric fuckton of subtle breakage points while porting the app to the updated framework. With one click, I could send the test suite through every endpoint in the backend and get quick feedback on everywhere that it wasn't behaving exactly the way it behaved before the update. And when I was ready to deploy the updated version, I could do so with solid confidence that from the front end's perspective, nothing would be different and everything would slot correctly into place.
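For anyone who hasn't seen this style of test, here is a minimal sketch of the idea in Python rather than Postman: hit the endpoints in a fixed order, store values from each response, and check them in later steps. The `FakeApi` class, its methods, and the thread example are hypothetical stand-ins so the sketch runs on its own; they are not RPTT's actual endpoints, and a real suite would send HTTP requests to the backend.

```python
class FakeApi:
    """In-memory stand-in for a backend; a real suite would send HTTP requests."""

    def __init__(self):
        self.users = {}
        self.threads = {}
        self.next_id = 1

    def register(self, username):
        self.users[username] = {"token": f"token-{username}"}
        return {"status": 201, "token": self.users[username]["token"]}

    def create_thread(self, token, title):
        if token not in {user["token"] for user in self.users.values()}:
            return {"status": 401}
        thread_id = self.next_id
        self.next_id += 1
        self.threads[thread_id] = title
        return {"status": 201, "id": thread_id}

    def get_thread(self, token, thread_id):
        if thread_id not in self.threads:
            return {"status": 404}
        return {"status": 200, "title": self.threads[thread_id]}


def run_suite(api):
    """Walk a mock user through the API in order, carrying values forward."""
    variables = {}

    response = api.register("mock-user")
    assert response["status"] == 201
    variables["token"] = response["token"]      # stored for later requests

    response = api.create_thread(variables["token"], "First thread")
    assert response["status"] == 201
    variables["thread_id"] = response["id"]

    response = api.get_thread(variables["token"], variables["thread_id"])
    assert response["status"] == 200
    assert response["title"] == "First thread"
    return variables


print(run_suite(FakeApi()))
```

Because each step depends on values stored by the one before it, a behavior change anywhere in the chain shows up as a failed assertion at a specific step, which is what makes this kind of suite handy during a framework upgrade.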

I don't say this at all to shame anyone for not prioritizing writing tests - I usually don't, especially on my side projects, and this was a fortuitous outlier. But it was a really good reminder of why tests are a valuable tool in the first place and why they do deserve to be prioritized when it's possible to do so.

#bjk talks#coding#codeblr#programming#progblr#web development#I'm trying to finally get back to streaming this weekend so maybe the upcoming coding stream will be about#setting up one of these integration test suites in postman

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

They build secure, transparent, and unchangeable systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
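To make the unit testing and automated testing entries above concrete, here is a minimal sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical unit under test, invented for this example.

```python
import unittest


def apply_discount(price, percent):
    """Return price reduced by percent; a hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


# Build and run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```

Rerunning the same suite after every change is what turns these unit tests into regression tests.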

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
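The latency, jitter, and packet loss figures mentioned above can be computed from packet timestamps. The sketch below uses the running interarrival jitter estimate described in RFC 3550 (the RTP specification), where each new sample moves the estimate by one sixteenth of the difference. The timestamps and packet counts are made up, and the function names are chosen for this example.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate in the style of RFC 3550, in the input's units."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in transit time between consecutive packets.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


def packet_loss_percent(sent, received):
    """Share of sent packets that never arrived, as a percentage."""
    if sent == 0:
        return 0.0
    return 100.0 * (sent - received) / sent


# Made-up timestamps in milliseconds: packets sent every 20 ms.
send = [0, 20, 40, 60]
recv = [50, 70, 95, 110]

latencies = [r - s for s, r in zip(send, recv)]
print(sum(latencies) / len(latencies))   # mean one-way latency
print(interarrival_jitter(send, recv))
print(packet_loss_percent(sent=200, received=197))
```

Measuring one-way latency this way assumes the sender and receiver clocks are synchronized; jitter does not, because it only looks at differences between consecutive packets.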

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

The Evolution of Web Development: A Journey Through the Years

Web development is the work involved in developing a website for the Internet (World Wide Web) or an intranet.

Origin / Web 1.0:

Tim Berners-Lee created the World Wide Web in 1989 at CERN. The primary goal in the development of the Web was to fulfill the automated information-sharing needs of academics affiliated with institutions and various global organizations. Consequently, HTML was developed in 1993.

Web 2.0:

Web 2.0 introduced increased user engagement and communication. It evolved from the static, read-only nature of Web 1.0 and became an integrated network for engagement and communication. It is often referred to as a user-focused, read-write online network.

Web 3.0:

Web 3.0, considered the third and current version of the web, was introduced in 2014. Web 3.0 aims to turn the web into a sizable, organized database, providing more functionality than traditional search engines.

This evolution transformed static websites into dynamic and responsive platforms, setting the stage for the complex and feature-rich web applications we have today.

Static HTML Pages (1990s)

Introduction of CSS (late 1990s)[13]

JavaScript and Dynamic HTML (1990s - early 2000s)[14][15]

AJAX (1998)[16]

Rise of Content management systems (CMS) (mid-2000s)

Mobile web (late 2000s - 2010s)

Single-page applications (SPAs) and front-end frameworks (2010s)

Server-side JavaScript (2010s)

Microservices and API-driven development (2010s - present)

Progressive web apps (PWAs) (2010s - present)

JAMstack Architecture (2010s - present)

WebAssembly (Wasm) (2010s - present)

Serverless computing (2010s - present)

AI and Machine Learning Integration (2010s - present)

Reference:

Harnessing the Power of Data Engineering for Modern Enterprises

In the contemporary business landscape, data has emerged as the lifeblood of organizations, fueling innovation, strategic decision-making, and operational efficiency. As businesses generate and collect vast amounts of data, the need for robust data engineering services has become more critical than ever. SG Analytics offers comprehensive data engineering solutions designed to transform raw data into actionable insights, driving business growth and success.

The Importance of Data Engineering

Data engineering is the foundational process that involves designing, building, and managing the infrastructure required to collect, store, and analyze data. It is the backbone of any data-driven enterprise, ensuring that data is clean, accurate, and accessible for analysis. In a world where businesses are inundated with data from various sources, data engineering plays a pivotal role in creating a streamlined and efficient data pipeline.

SG Analytics’ data engineering services are tailored to meet the unique needs of businesses across industries. By leveraging advanced technologies and methodologies, SG Analytics helps organizations build scalable data architectures that support real-time analytics and decision-making. Whether it’s cloud-based data warehouses, data lakes, or data integration platforms, SG Analytics provides end-to-end solutions that enable businesses to harness the full potential of their data.

Building a Robust Data Infrastructure

At the core of SG Analytics’ data engineering services is the ability to build robust data infrastructure that can handle the complexities of modern data environments. This includes the design and implementation of data pipelines that facilitate the smooth flow of data from source to destination. By automating data ingestion, transformation, and loading processes, SG Analytics ensures that data is readily available for analysis, reducing the time to insight.
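The ingestion, transformation, and loading steps described above can be sketched as a tiny pipeline using only the Python standard library. The sample CSV, the `orders` table, and the function names are assumptions made for illustration; this is a generic sketch, not a description of SG Analytics' tooling.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; a real pipeline would read from files, APIs, or databases.
RAW_CSV = """order_id,amount,currency
1001, 25.50 ,usd
1002,,usd
1003, 40.00 ,eur
"""


def extract(text):
    """Ingest: parse raw CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount and normalize types and casing."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        cleaned.append((int(row["order_id"]), float(amount), row["currency"].upper()))
    return cleaned


def load(rows, connection):
    """Load: write the cleaned rows into a table ready for analysis."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, currency TEXT)"
    )
    connection.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    connection.commit()


connection = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), connection)
print(connection.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
```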

One of the key challenges businesses face is dealing with the diverse formats and structures of data. SG Analytics excels in data integration, bringing together data from various sources such as databases, APIs, and third-party platforms. This unified approach to data management ensures that businesses have a single source of truth, enabling them to make informed decisions based on accurate and consistent data.

Leveraging Cloud Technologies for Scalability

As businesses grow, so does the volume of data they generate. Traditional on-premise data storage solutions often struggle to keep up with this exponential growth, leading to performance bottlenecks and increased costs. SG Analytics addresses this challenge by leveraging cloud technologies to build scalable data architectures.

Cloud-based data engineering solutions offer several advantages, including scalability, flexibility, and cost-efficiency. SG Analytics helps businesses migrate their data to the cloud, enabling them to scale their data infrastructure in line with their needs. Whether it’s setting up cloud data warehouses or implementing data lakes, SG Analytics ensures that businesses can store and process large volumes of data without compromising on performance.

Ensuring Data Quality and Governance

Inaccurate or incomplete data can lead to poor decision-making and costly mistakes. That’s why data quality and governance are critical components of SG Analytics’ data engineering services. By implementing data validation, cleansing, and enrichment processes, SG Analytics ensures that businesses have access to high-quality data that drives reliable insights.

Data governance is equally important, as it defines the policies and procedures for managing data throughout its lifecycle. SG Analytics helps businesses establish robust data governance frameworks that ensure compliance with regulatory requirements and industry standards. This includes data lineage tracking, access controls, and audit trails, all of which contribute to the security and integrity of data.

Enhancing Data Analytics with Natural Language Processing Services

In today’s data-driven world, businesses are increasingly turning to advanced analytics techniques to extract deeper insights from their data. One such technique is natural language processing (NLP), a branch of artificial intelligence that enables computers to understand, interpret, and generate human language.

SG Analytics offers cutting-edge natural language processing services as part of its data engineering portfolio. By integrating NLP into data pipelines, SG Analytics helps businesses analyze unstructured data, such as text, social media posts, and customer reviews, to uncover hidden patterns and trends. This capability is particularly valuable in industries like healthcare, finance, and retail, where understanding customer sentiment and behavior is crucial for success.

NLP services can be used to automate various tasks, such as sentiment analysis, topic modeling, and entity recognition. For example, a retail business can use NLP to analyze customer feedback and identify common complaints, allowing them to address issues proactively. Similarly, a financial institution can use NLP to analyze market trends and predict future movements, enabling them to make informed investment decisions.

By incorporating NLP into their data engineering services, SG Analytics empowers businesses to go beyond traditional data analysis and unlock the full potential of their data. Whether it’s extracting insights from vast amounts of text data or automating complex tasks, NLP services provide businesses with a competitive edge in the market.

Driving Business Success with Data Engineering

The ultimate goal of data engineering is to drive business success by enabling organizations to make data-driven decisions. SG Analytics’ data engineering services provide businesses with the tools and capabilities they need to achieve this goal. By building robust data infrastructure, ensuring data quality and governance, and leveraging advanced analytics techniques like NLP, SG Analytics helps businesses stay ahead of the competition.

In a rapidly evolving business landscape, the ability to harness the power of data is a key differentiator. With SG Analytics’ data engineering services, businesses can unlock new opportunities, optimize their operations, and achieve sustainable growth. Whether you’re a small startup or a large enterprise, SG Analytics has the expertise and experience to help you navigate the complexities of data engineering and achieve your business objectives.


Text

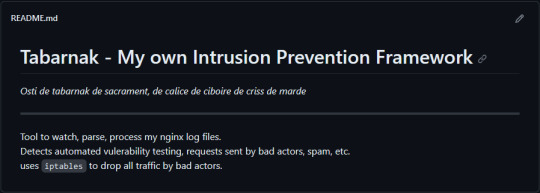

(this is a small story of how I came to write my own intrusion detection/prevention framework and why I'm really happy with that decision, don't mind me rambling)

Preface

About two weeks ago I was faced with a pretty annoying problem. Whilst I was going home by train I noticed that my server at home had been running hot and had slowed down a lot. This prompted me to check my nginx logs (nginx being the only service that is indirectly available to the public, more on that later), which made me realize that - due to poor access control - someone had been sending hundreds of thousands of huge DNS requests to my server, most likely testing for vulnerabilities. I added an iptables rule to drop all traffic from that source and redirected the remaining traffic to a backup NextDNS instance that I had set up previously with the same overrides and custom records as my own DNS, so that the service would not have any downtime while my server cooled down. I stopped the DNS service on my server at home and then used the remaining train ride to think. How would I stop this from happening in the future? I pondered multiple possible solutions for this problem: whether to use fail2ban, whether to just add better access control, or whether to just stick with the NextDNS instance.

I ended up going with a completely different option: making a solution myself that is a perfect fit for my server.

My Server Structure

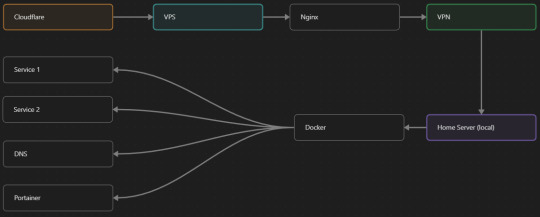

So, I should probably explain how I host and why only nginx is public despite me hosting a bunch of services under the hood.

I have a public-facing VPS that only allows traffic to nginx. That traffic then gets forwarded through a VPN connection to my home server so that I don't have to have any public-facing ports on said home server. The VPS only really acts as the public interface for the home server, with access control and logging sprinkled in throughout my configs to get more layers of security. Some services can only be interacted with through the VPN or a local connection, so not everything is actually forwarded - only what I need/want to be.

I actually do have fail2ban installed on both my VPS and home server, so why make another piece of software?

Tabarnak - Succeeding at Banning

I had a few requirements for what I wanted to do:

Only allow HTTP(S) traffic through Cloudflare

Only allow DNS traffic from given sources (location filtering, explicit white-/blacklisting)

Webhook support for logging

Should be interactive (e.g. POST /api/ban/{IP})

Detect automated vulnerability scanning

Integration with the AbuseIPDB (for checking and reporting)

As I started working on this, I realized that this would soon become more complex than I had thought at first.

Webhooks for logging

This was probably the easiest requirement to check off my list: I just wrote my own log() function that would call a webhook. Sadly, the rest wouldn't be as easy.
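A log() function like that could look roughly like the sketch below. This is not Tabarnak's actual code: the webhook URL, the JSON payload shape and the `send` parameter (only there so the function can be tried without a network) are all assumptions made for illustration.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with whatever your webhook receiver expects.
WEBHOOK_URL = "https://example.invalid/hooks/tabarnak"


def build_webhook_request(url, message, level="info"):
    """Build a JSON POST request carrying one log message (assumed payload shape)."""
    payload = json.dumps({"level": level, "message": message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def log(message, level="info", url=WEBHOOK_URL, send=urllib.request.urlopen):
    """Print the message locally and forward it to the webhook."""
    print(f"[{level}] {message}")
    request = build_webhook_request(url, message, level)
    try:
        send(request, timeout=5)
    except OSError as error:
        # URLError is a subclass of OSError; a failed webhook must not stop the parser.
        print(f"[warning] webhook delivery failed: {error}")
```

Catching the delivery error keeps the log parser running even when the webhook receiver is down.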

Allowing only Cloudflare traffic

This was still doable: I only needed to add a filter in my nginx config for my domain to only allow Cloudflare IP ranges and disallow the rest. I ended up doing something slightly different. I added a new default nginx config that would just return a 404 on every route and log access to a different file so that I could detect connection attempts that would be made without Cloudflare and handle them in Tabarnak myself.

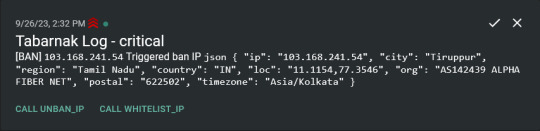

Integration with AbuseIPDB

Also not yet the hard part: just call AbuseIPDB with the parsed IP and, if the abuse confidence score is within a configured threshold, flag the IP. When that happens I receive a notification that asks me whether to whitelist or to ban the IP - I can also do nothing and let everything proceed as it normally would. If the IP gets flagged a configured number of times, it gets banned unless it has been whitelisted by then.
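The flag-then-ban logic described here can be sketched without any network calls. The class name, the default values and the reading of "within a configured threshold" as "at or above the threshold" are assumptions; the score itself would come from the AbuseIPDB lookup, which is left out.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FlagTracker:
    """Hypothetical helper: counts flags per IP and bans after too many."""

    score_threshold: int = 50  # assumed: flag when the score is at or above this
    max_flags: int = 3         # assumed: ban once an IP has this many flags
    flags: Counter = field(default_factory=Counter)
    whitelist: set = field(default_factory=set)
    banned: set = field(default_factory=set)

    def observe(self, ip: str, abuse_score: int) -> str:
        """Record one lookup result and return the resulting state of the IP."""
        if ip in self.whitelist:
            return "allowed"
        if ip in self.banned:
            return "banned"
        if abuse_score < self.score_threshold:
            return "ok"
        self.flags[ip] += 1
        if self.flags[ip] >= self.max_flags:
            self.banned.add(ip)
            return "banned"
        return "flagged"
```

A "flagged" result is the point where a notification with whitelist/ban buttons would be sent; adding the IP to `whitelist` before the next flag prevents the automatic ban.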

Location filtering + Whitelist + Blacklist

This is where it starts to get interesting. I had to know where a request comes from, because the real people who actually connect to the DNS are all in similar locations. I didn't want to outright ban everyone else, as there could be valid requests from other sources. So for every new IP that triggers a callback (this would only be triggered after a certain number of either flags or requests), I now need to get the location. I do this by just calling the ipinfo API and checking the supplied location. To avoid sending too many requests I cache results (even though ipinfo should never be called twice for the same IP anyway) and save results to a database. I made my own class that inherits from collections.UserDict which, when accessed, tries to find the entry in memory; if it can't, it searches through the DB and returns the result. This works for setting, deleting, adding and checking for records. Flags, AbuseIPDB results, whitelist entries and blacklist entries also get stored in the DB to achieve persistent state even when I restart.
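A dictionary with a database fallback along those lines might look like this. It is only a sketch: the class name, the use of sqlite3, and the single key/value table with text values are assumptions, not the schema Tabarnak uses.

```python
import sqlite3
from collections import UserDict


class PersistentDict(UserDict):
    """Dict that answers from memory first and falls back to a SQLite table."""

    def __init__(self, conn):
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS records (key TEXT PRIMARY KEY, value TEXT)"
        )
        super().__init__()

    def __missing__(self, key):
        # Called by UserDict.__getitem__ when the key is not in memory.
        row = self.conn.execute(
            "SELECT value FROM records WHERE key = ?", (key,)
        ).fetchone()
        if row is None:
            raise KeyError(key)
        self.data[key] = row[0]  # cache the value for the next lookup
        return row[0]

    def __setitem__(self, key, value):
        self.data[key] = value
        self.conn.execute(
            "INSERT OR REPLACE INTO records (key, value) VALUES (?, ?)", (key, value)
        )
        self.conn.commit()

    def __delitem__(self, key):
        self.data.pop(key, None)
        self.conn.execute("DELETE FROM records WHERE key = ?", (key,))
        self.conn.commit()

    def __contains__(self, key):
        try:
            self[key]
        except KeyError:
            return False
        return True
```

Because every write goes straight to the table, a fresh instance created after a restart starts with an empty memory cache and still finds the old entries.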

Detection of automated vulnerability scanning

For this, I went through my old nginx logs, looking for the smallest number of paths I need to block to catch the largest number of automated vulnerability scan requests. So I did some data science magic and wrote a route blacklist. It doesn't just end there. Since I know the routes of valid requests that I would be receiving (which are all mentioned in my nginx configs), I could just parse those configs and match the requested route against them. To achieve this I wrote some really simple regular expressions to extract all location blocks from an nginx config, alongside whether that location is absolute (an exact match, preceded by an =) or relative. After I get the locations I can test the requested route against the valid routes and get back whether the request was made to a valid URL (I can't just look for 404 return codes here, because some valid pages return a 404 on purpose). I also parse the request method from the logs and match the received method against the HTTP standard request methods (which are all methods that services on my server use). That way I can easily catch requests like:

XX.YYY.ZZZ.AA - - [25/Sep/2023:14:52:43 +0200] "145.ll|'|'|SGFjS2VkX0Q0OTkwNjI3|'|'|WIN-JNAPIER0859|'|'|JNapier|'|'|19-02-01|'|'||'|'|Win 7 Professional SP1 x64|'|'|No|'|'|0.7d|'|'|..|'|'|AA==|'|'|112.inf|'|'|SGFjS2VkDQoxOTIuMTY4LjkyLjIyMjo1NTUyDQpEZXNrdG9wDQpjbGllbnRhLmV4ZQ0KRmFsc2UNCkZhbHNlDQpUcnVlDQpGYWxzZQ==12.act|'|'|AA==" 400 150 "-" "-"

I probably over complicated this - by a lot - but I can't go back in time to change what I did.
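To make the route and method checks above a bit more concrete, here is a minimal sketch. The regular expressions and function names are my own guesses at the approach, and the sketch only understands plain and `=` locations (nginx's `~`, `~*` and `^~` modifiers are ignored).

```python
import re

# "location = /exact {" or "location /prefix/ {"; regex locations are not handled.
LOCATION_RE = re.compile(r"^\s*location\s+(=\s*)?(/\S*)\s*\{", re.MULTILINE)
# The quoted request line of an access-log entry: "METHOD PATH PROTOCOL" STATUS
LOG_RE = re.compile(r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3})')
STANDARD_METHODS = {
    "GET", "HEAD", "POST", "PUT", "DELETE", "CONNECT", "OPTIONS", "TRACE", "PATCH",
}


def parse_locations(config):
    """Return (path, is_exact) for every location block found in the config text."""
    return [(m.group(2), m.group(1) is not None) for m in LOCATION_RE.finditer(config)]


def is_valid_route(path, locations):
    """Exact locations must match fully; the others are treated as prefixes."""
    for location, is_exact in locations:
        if is_exact and path == location:
            return True
        if not is_exact and path.startswith(location):
            return True
    return False


def is_suspicious(log_line, locations):
    """Flag lines with an unparsable request, an unknown method or an unknown route."""
    match = LOG_RE.search(log_line)
    if match is None:
        return True
    if match.group("method") not in STANDARD_METHODS:
        return True
    return not is_valid_route(match.group("path"), locations)
```

A log line like the one shown above fails the method check, because whatever sits where the method should be is not a standard HTTP method. Note that a config containing a plain `location /` block would make every path count as valid in this sketch.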

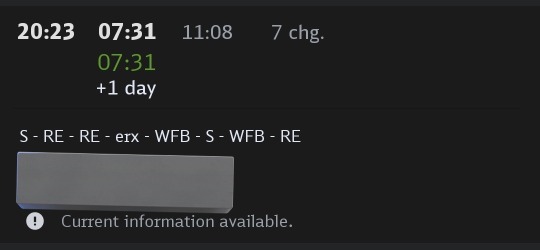

Interactivity

As I showed and mentioned earlier, I can manually white-/blacklist an IP. This forced me to add threads to my previously single-threaded program. Since I was too stubborn to use websockets (I have a distaste for websockets), I opted for probably the worst option I could've taken. It works like this: I have a main thread, which does all the log parsing, processing and handling, and a side thread, which watches a FIFO file that is created on startup. I can append commands to the FIFO file, which are mapped to the functions they are supposed to call. When the FIFO reader detects a new line, it looks through the map, gets the function and executes it on the supplied IP.

Doing all of this manually would be way too tedious, so I made an API endpoint on my home server that would append the commands to the file on the VPS. That also meant that I had to secure that API endpoint so that I couldn't just be spammed with random requests. Now that I could interact with Tabarnak through an API, I needed to make this user friendly - even I don't like to curl and sign my requests manually. So I integrated logging to my self-hosted instance of https://ntfy.sh and added action buttons that would send the request for me. All of this just because I refused to use sockets.
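The FIFO reader and its command map could be sketched as follows. The command format (`ban <ip>` / `whitelist <ip>`), the function names and the shape of `state` are assumptions for illustration; `os.mkfifo` is only available on POSIX systems.

```python
import ipaddress
import os


def ban(ip, state):
    state["banned"].add(ip)
    state["whitelisted"].discard(ip)


def whitelist(ip, state):
    state["whitelisted"].add(ip)
    state["banned"].discard(ip)


# Maps the command word at the start of a line to the function it triggers.
COMMANDS = {"ban": ban, "whitelist": whitelist}


def handle_line(line, state):
    """Run one '<command> <ip>' line; return False if it is malformed or unknown."""
    parts = line.split()
    if len(parts) != 2:
        return False
    name, ip = parts
    try:
        ipaddress.ip_address(ip)
    except ValueError:
        return False
    func = COMMANDS.get(name)
    if func is None:
        return False
    func(ip, state)
    return True


def watch_fifo(path, state):
    """Side-thread loop: block on the FIFO and dispatch every line written to it."""
    if not os.path.exists(path):
        os.mkfifo(path)
    while True:
        # open() blocks until a writer connects; the for loop ends when it closes.
        with open(path) as fifo:
            for line in fifo:
                handle_line(line, state)
```

The watcher would be started next to the main parsing loop with something like `threading.Thread(target=watch_fifo, args=(path, state), daemon=True).start()`, and a command can then be sent with `echo "ban 203.0.113.7" > path`.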

First successes and why I'm happy about this

After not too long, the bans started to happen. The traffic to my server decreased and I could finally breathe again. I may have over complicated this, but I don't mind. It was a really fun experience to write something new and learn more about log parsing and processing. Tabarnak probably won't last forever and I could replace it with solutions that are way easier to deploy and way more general. But what matters is that I liked doing it. It was a really fun project - which is why I'm writing this - and I'm glad that I ended up doing this. Of course I could have just used fail2ban, but I never would've been able to write all of the extras that I ended up making (I don't want to take the explanation ad absurdum so just imagine that I added cool stuff) and I never would've learned what I actually did.

So whenever you are faced with a dumb problem and could write something yourself, I think you should at least try. This was a really fun experience and it might be for you as well.

Post Scriptum

First of all, apologies for the English - I'm not a native speaker so I'm sorry if some parts were incorrect or anything like that. Secondly, I'm sure that there are simpler ways to accomplish what I did here, however this was more about the experience of creating something myself rather than using some pre-made tool that does everything I want to (maybe even better?). Third, if you actually read until here, thanks for reading - hope it wasn't too boring - have a nice day :)


Text

From Beginner to Pro: Dominate Automated Testing with Our Selenium Course

Welcome to our comprehensive Selenium course designed to help individuals from all backgrounds, whether novice or experienced, enhance their automated testing skills and become proficient in Selenium. In this article, we will delve into the world of Selenium, an open-source automated testing framework that has revolutionized software testing. With our course, we aim to empower aspiring professionals with the knowledge and techniques necessary to excel in the field of automated testing.

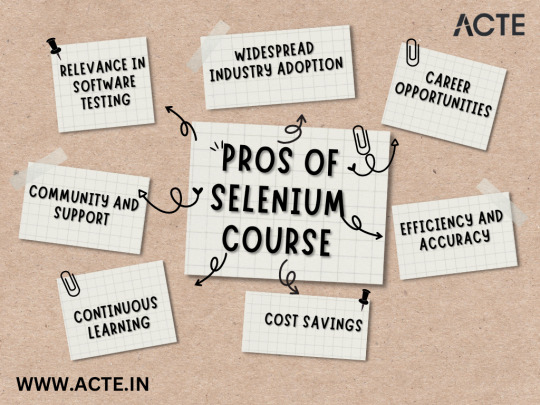

Why Choose Selenium?

Selenium offers a wide array of features and capabilities that make it the go-to choice for automated testing in the IT industry.

It allows testers to write test scripts in multiple programming languages, including Java, Python, C#, and more, ensuring flexibility and compatibility with various project requirements.

Selenium’s compatibility with different web browsers such as Chrome, Firefox, Safari, and Internet Explorer makes it a versatile choice for testing web applications.

The ability to leverage Selenium WebDriver, which provides a simple and powerful API, allows for seamless interaction with web elements, making automating tasks easier than ever before.

Selenium’s Key Components:

Selenium IDE:

Selenium Integrated Development Environment (IDE) is a browser extension, originally a Firefox plugin and now also available for Chrome, that is primarily used for recording and playing back test cases. It offers a user-friendly interface, allowing even non-programmers to create basic tests effortlessly.

Although Selenium IDE is a valuable tool for beginners, our course primarily focuses on Selenium WebDriver due to its advanced capabilities and wider scope.

Selenium WebDriver:

Selenium WebDriver is the most critical component of the Selenium framework. It provides a programming interface to interact with web elements and perform actions programmatically.

WebDriver’s functionality extends beyond basic browser automation; it also enables testers to handle alerts, pop-ups, frames, and various other web application interactions.

Our Selenium course places significant emphasis on WebDriver, equipping learners with the skills to automate complex test scenarios efficiently.

Selenium Grid:

Selenium Grid empowers testers by allowing them to execute tests on multiple machines and browsers simultaneously, making it an essential component for testing scalability and cross-browser compatibility.

Through our Selenium course, you’ll gain a deep understanding of Selenium Grid and learn how to harness its capabilities effectively.

The Benefits of Our Selenium Course

Comprehensive Curriculum: Our course is designed to cover everything from the fundamentals of automated testing to advanced techniques in Selenium, ensuring learners receive a well-rounded education.

Hands-on Experience: Practical exercises and real-world examples are incorporated to provide learners with the opportunity to apply their knowledge in a realistic setting.

Expert Instruction: You’ll be guided by experienced instructors who have a profound understanding of Selenium and its application in the industry, ensuring you receive the best possible education.

Flexibility: Our course offers flexible learning options, allowing you to study at your own pace and convenience, ensuring a stress-free learning experience.

Industry Recognition: Completion of our Selenium course will provide you with a valuable certification recognized by employers worldwide, enhancing your career prospects within the IT industry.

Who Should Enroll?

Novice Testers: If you’re new to the world of automated testing and aspire to become proficient in Selenium, our course is designed specifically for you. We’ll lay a strong foundation and gradually guide you towards becoming a pro in Selenium automation.

Experienced Testers: Even if you already have experience in automated testing, our course will help you enhance your skills and keep up with the latest trends and best practices in Selenium.

IT Professionals: Individuals working in the IT industry, such as developers or quality assurance engineers, who want to broaden their skillset and optimize their testing processes, will greatly benefit from our Selenium course.

In conclusion, our Selenium course is a one-stop solution for individuals seeking to dominate automated testing and excel in their careers. With a comprehensive curriculum, hands-on experience, expert instruction, and industry recognition, you’ll be well-prepared to tackle any automated testing challenges that come your way. Make the smart choice and enroll in our Selenium course at ACTE Technologies today to unlock your full potential in the world of software testing.


Text

Building Robust API Test Suites with the Best Automation Frameworks

Introduction

Introduce the role of robust API test suites in maintaining the functionality, reliability, and performance of modern applications. Emphasize the importance of selecting the right automation framework to build these test suites effectively.

Selecting the Right Framework

Discuss the criteria for choosing an API automation framework, such as ease of use, compatibility with protocols (REST, SOAP, GraphQL), and integration with CI/CD pipelines. Mention popular frameworks like Postman, RestAssured, and Karate.

Structuring Test Suites for Maintainability

Explain how a well-structured API test suite can reduce maintenance overhead. Discuss best practices for organizing tests, such as grouping tests by functionality, and using reusable components (like request templates and utility functions).

Coverage and Depth of Testing

Highlight the need for comprehensive coverage, including functional testing, error handling, and edge cases. Point out how API automation frameworks should allow testing of different request methods (GET, POST, PUT, DELETE) and support for data-driven testing.
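As a rough illustration of data-driven testing across request methods, the sketch below runs a table of cases against a stand-in API. `FakeUserAPI` is a hypothetical in-memory substitute for a real HTTP client, and the endpoints and status codes are assumptions; with a framework such as Postman, RestAssured, or Karate the same table would drive real requests.

```python
from dataclasses import dataclass
from typing import Optional


class FakeUserAPI:
    """Hypothetical in-memory stand-in for a /users REST API."""

    def __init__(self):
        self.users = {}

    def request(self, method, path, body=None):
        parts = path.strip("/").split("/")
        if parts[0] != "users":
            return 404, None
        user_id = parts[1] if len(parts) > 1 else None
        if method == "POST" and user_id is None:
            if not body or "name" not in body:
                return 400, None  # error handling: missing required field
            new_id = str(len(self.users) + 1)
            self.users[new_id] = dict(body)
            return 201, {"id": new_id, **body}
        if user_id is None:
            return 405, None
        if method in ("GET", "PUT", "DELETE") and user_id not in self.users:
            return 404, None
        if method == "GET":
            return 200, self.users[user_id]
        if method == "PUT":
            self.users[user_id] = dict(body or {})
            return 200, self.users[user_id]
        if method == "DELETE":
            del self.users[user_id]
            return 204, None
        return 405, None


@dataclass
class Case:
    name: str
    method: str
    path: str
    body: Optional[dict]
    expected_status: int


# The test data lives in one table; adding a scenario means adding one row.
CASES = [
    Case("create user", "POST", "/users", {"name": "Ada"}, 201),
    Case("reject empty body", "POST", "/users", {}, 400),
    Case("read user", "GET", "/users/1", None, 200),
    Case("update user", "PUT", "/users/1", {"name": "Grace"}, 200),
    Case("delete user", "DELETE", "/users/1", None, 204),
    Case("read deleted user", "GET", "/users/1", None, 404),
    Case("unsupported method", "PATCH", "/users/1", None, 405),
]


def run_cases(api, cases):
    """Run the cases in order and return the names of those that failed."""
    failures = []
    for case in cases:
        status, _ = api.request(case.method, case.path, case.body)
        if status != case.expected_status:
            failures.append(case.name)
    return failures
```

Keeping the cases as data and the runner as a small reusable function is one way to get the lower maintenance overhead mentioned under test suite structure.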

Parallel Execution for Scalability

Explore the importance of frameworks that support parallel execution, enabling faster test runs across multiple environments and API endpoints, which is critical for large-scale systems.

Conclusion

Summarize the key steps to building robust API test suites and how using the best automation frameworks enhances the overall development process and quality assurance.

#api automation#api automation framework#api automation testing#api automation testing tools#api automation tools#api testing framework#api testing techniques#api testing tools#api tools#codeless test automation#codeless testing platform#test automation software#automated qa testing#no code test automation tools


Text

Exploring Essential Laravel Development Tools for Building Powerful Web Applications

Laravel has emerged as one of the most popular PHP frameworks, providing developers with a robust and efficient platform for building web applications. Central to the success of Laravel projects are the development tools that streamline the development process, enhance productivity, and ensure code quality. In this article, we will delve into the essential Laravel development tools that every developer should be familiar with.

1. Composer: Composer is a dependency manager for PHP that lets you declare the libraries your project depends on and manages them for you. Laravel itself relies heavily on Composer for package management, making it an essential tool for Laravel developers. With Composer, you can easily add, remove, or update packages, ensuring that your Laravel project stays up to date with the latest dependencies.

2. Artisan: Artisan is the command-line interface included with Laravel, providing a range of helpful commands for scaffolding, managing migrations, generating controllers and models, and much more. Laravel developers use Artisan to automate repetitive tasks and streamline development workflows, thereby increasing efficiency and productivity.

3. Laravel Debugbar: Debugging is an essential part of software development, and Laravel Debugbar simplifies the debugging process by providing detailed insights into the application's performance, queries, views, and more. It's a handy tool for identifying and resolving issues during development, ensuring the smooth functioning of your Laravel application.

4. Laravel Telescope: Similar to Laravel Debugbar, Laravel Telescope is a debugging assistant for Laravel applications, providing real-time insights into requests, exceptions, database queries, and more. With its intuitive dashboard, developers can monitor the application's behavior, identify performance bottlenecks, and optimize accordingly.

5. Laravel Mix: Laravel Mix offers a fluent API for defining webpack build steps for your Laravel application. It simplifies asset compilation and preprocessing tasks such as compiling SASS or LESS files, concatenating and minifying JavaScript files, and handling versioning. Laravel Mix significantly streamlines the frontend development process, allowing developers to focus on building great user experiences.

6. Laravel Horizon: Laravel Horizon is a dashboard and configuration system for Laravel's Redis-powered queues, providing insights into job throughput, runtime metrics, and more. It enables developers to monitor and manage queued jobs efficiently, ensuring optimal performance and scalability for Laravel applications that rely on background processing.

7. Laravel Envoyer: Laravel Envoyer is a deployment tool designed for Laravel applications, facilitating deployment workflows with zero downtime. It automates the deployment process, from pushing code changes to multiple servers to executing deployment scripts, thereby minimizing the risk of errors and ensuring smooth deployments.

8. Laravel Dusk: Laravel Dusk is an end-to-end browser testing tool for Laravel applications, built on top of ChromeDriver and the php-webdriver library. It allows developers to write expressive and reliable browser tests, ensuring that critical user interactions and workflows function as expected across different browsers and environments.

9. Laravel Valet: Laravel Valet provides a lightweight development environment for Laravel applications on macOS, offering seamless integration with tools like MySQL, NGINX, and PHP. It simplifies the setup process, allowing developers to focus on writing code instead of configuring their development environment.

In conclusion, mastering the essential Laravel development tools mentioned above is important for building robust, efficient, and scalable web applications with Laravel. Whether it's managing dependencies, debugging issues, optimizing performance, or streamlining deployment workflows, these tools empower Laravel developers to deliver high-quality solutions that meet the demands of modern web development. Embracing these tools will improve your Laravel development experience and accelerate your journey toward becoming a proficient Laravel developer.


Text

20 Best Android Development Practices in 2023

Introduction:

In today's competitive market, creating high-quality Android applications requires adherence to best development practices. Android app development agencies in Vadodara (Gujarat, India), like Nivida Web Solutions Pvt. Ltd., play a crucial role in delivering exceptional applications. This article presents the 20 best Android development practices to follow in 2023, ensuring the success of your app development projects.

1. Define Clear Objectives:

Begin by defining clear objectives for your Android app development project. Identify the target audience, the app's purpose, and the specific goals you aim to achieve. This clarity will guide the development process and result in a more focused and effective application.

2. Embrace the Material Design Guidelines:

Google's Material Design guidelines provide a comprehensive set of principles and guidelines for designing visually appealing and intuitive Android applications. Adhering to these guidelines ensures consistency, enhances usability, and delivers an optimal user experience.

3. Optimize App Performance:

Performance optimization is crucial for user satisfaction. Focus on optimizing app loading times, minimizing network requests, and implementing efficient caching mechanisms. Profiling tools like Android Profiler can help identify performance bottlenecks and improve overall app responsiveness.

4. Follow a Modular Approach:

Adopting a modular approach allows for easier maintenance, scalability, and code reusability. Breaking down your app into smaller, manageable modules promotes faster development, reduces dependencies, and enhances collaboration among developers.

5. Implement Responsive UI Designs:

Designing a responsive user interface (UI) ensures that your app adapts seamlessly to various screen sizes and orientations. Utilize Android’s resources, such as ConstraintLayout, to create dynamic and adaptive UIs that provide a consistent experience across different devices.

6. Prioritize Security:

Android app security is of paramount importance. Employ secure coding practices, validate user inputs, encrypt sensitive data, and regularly update libraries and dependencies to protect your app against vulnerabilities and potential attacks.

7. Opt for Kotlin as the Preferred Language:

Kotlin has gained immense popularity among Android developers due to its conciseness, null safety, and enhanced interoperability with existing Java code. Embrace Kotlin as the primary programming language for your Android app development projects to leverage its modern features and developer-friendly syntax.

8. Conduct Thorough Testing:

Testing is crucial to ensure the reliability and stability of your Android applications. Employ a combination of unit testing, integration testing, and automated UI testing using frameworks like Espresso to catch bugs early and deliver a robust app to your users.

9. Optimize Battery Consumption:

Battery life is a significant concern for Android users. Optimize your app's battery consumption by minimizing background processes, reducing network requests, and implementing efficient power management techniques. Platform features such as Doze and App Standby, together with WorkManager for deferrable background work, can help streamline power usage.

10. Implement Continuous Integration and Delivery (CI/CD):

Adopting CI/CD practices facilitates frequent code integration, automated testing, and seamless deployment. Tools like Jenkins and Bitrise enable developers to automate build processes, run tests, and deploy app updates efficiently, resulting in faster time-to-market and improved quality.

11. Leverage Cloud Technologies:

Integrating cloud technologies, such as cloud storage and backend services, can enhance your app's scalability, performance, and reliability. Services like Firebase offer powerful tools for authentication, database management, push notifications, and analytics.

12. Ensure Accessibility:

Make your Android app accessible to users with disabilities by adhering to accessibility guidelines. Provide alternative text for images, support screen readers, and use colour contrast appropriately to ensure inclusivity and a positive user experience for all users.

13. Optimize App Size:

Large app sizes can deter users from downloading and installing your application. Optimize your app's size by eliminating unused resources, compressing images, and utilizing Android App Bundles to deliver optimized APKs based on device configurations.

14. Implement Offline Support:

Provide offline capabilities in your app to ensure users can access essential features and content even when offline. Implement local caching, synchronize data in the background, and notify users of limited or no connectivity to deliver a seamless user experience.

15. Implement Analytics and Crash Reporting:

Integrate analytics and crash reporting tools, such as Google Analytics and Firebase Crashlytics, to gain insights into user behaviour, identify areas for improvement, and address crashes promptly. This data-driven approach helps in refining your app's performance and user engagement.

16. Keep Up with Android OS Updates:

Stay up to date with the latest Android OS updates, new APIs, and platform features. Regularly update your app to leverage new functionalities, enhance performance, and ensure compatibility with newer devices.

17. Provide Localized Versions:

Cater to a global audience by providing localized versions of your app. Translate your app's content, user interface, and notifications into different languages to expand your user base and increase user engagement.

18. Ensure App Store Optimization (ASO):

Optimize your app's visibility and discoverability in the Google Play Store by utilizing appropriate keywords, engaging app descriptions, compelling screenshots, and positive user reviews. ASO techniques can significantly impact your app's download and conversion rates.

19. Follow Privacy Regulations and Guidelines:

Adhere to privacy regulations, such as GDPR and CCPA, and ensure transparent data handling practices within your app. Obtain user consent for data collection, storage, and usage, and provide clear privacy policies to establish trust with your users.

20. Regularly Update and Maintain Your App:

Continuously monitor user feedback, track app performance metrics, and release regular updates to address bugs, introduce new features, and enhance user experience. Regular maintenance ensures that your app remains relevant, competitive, and secure.

Conclusion:

Adopting these 20 best Android development practices in 2023 will help Android app development companies in India create exceptional applications. By focusing on objectives, embracing Material Design, optimizing performance, and following modern development approaches, your Android apps will stand out in the market, delight users, and achieve long-term success. Also, by partnering with an Android App Development Company in India (Gujarat, Vadodara), you can leverage their expertise.

#Android App development company in India #Android App development agencies in India #Android App development companies in India #Android App development company in Gujarat #Android App development company in Vadodara #Android App development agencies in Vadodara #Android App development agencies in Gujarat #Android App development companies in Vadodara #Android App development companies in Gujarat

Text

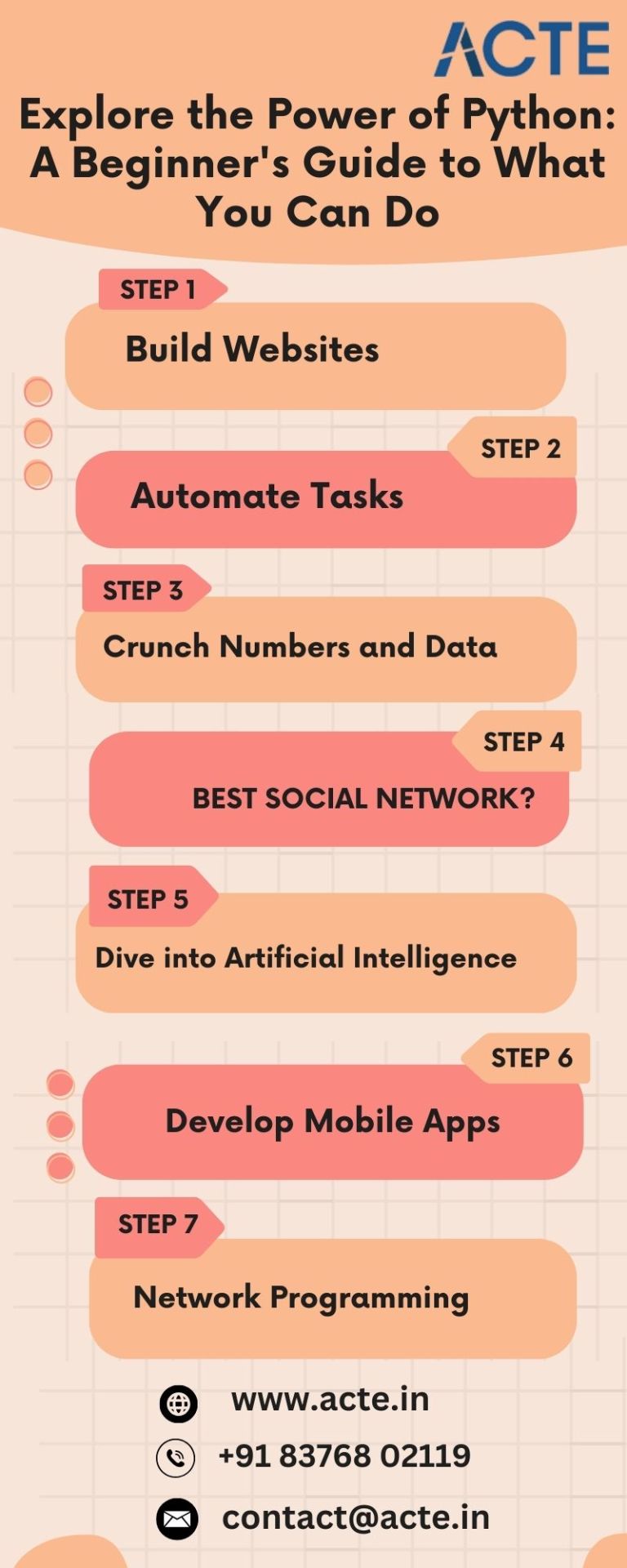

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.
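To make one of these ideas concrete, here is a minimal sketch of the "simple blockchain" from project 16, using only the standard library. The names `Block`, `append_block`, and `is_valid` are illustrative choices, and the sketch assumes a single in-memory chain with no networking, mining, or consensus. It only shows the core idea that each block stores the hash of the block before it.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    index: int
    data: str
    prev_hash: str

    def digest(self) -> str:
        # Hash a canonical JSON encoding of the block's fields.
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list[Block], data: str) -> None:
    # Link the new block to the digest of the current tip
    # (or to a string of zeros for the very first block).
    prev_hash = chain[-1].digest() if chain else "0" * 64
    chain.append(Block(index=len(chain), data=data, prev_hash=prev_hash))


def is_valid(chain: list[Block]) -> bool:
    # Every block after the first must reference the digest of its predecessor.
    return all(
        chain[i].prev_hash == chain[i - 1].digest() for i in range(1, len(chain))
    )
```

Changing the data in any earlier block changes its digest, so the next block's `prev_hash` no longer matches and `is_valid` returns `False`. A portfolio version would likely add timestamps, persistence, and some form of proof-of-work.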

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

If you want to know more, click here: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects #top python projects #best python projects #analytics jobs #python #coding #programming #machine learning

Text

Generative AI, innovation, creativity & what the future might hold - CyberTalk

New Post has been published on https://thedigitalinsider.com/generative-ai-innovation-creativity-what-the-future-might-hold-cybertalk/

Stephen M. Walker II is CEO and Co-founder of Klu, an LLM App Platform. Prior to founding Klu, Stephen held product leadership roles at Productboard, Amazon, and Capital One.

Are you excited about empowering organizations to leverage AI for innovative endeavors? So is Stephen M. Walker II, CEO and Co-Founder of the company Klu, whose cutting-edge LLM platform empowers users to customize generative AI systems in accordance with unique organizational needs, resulting in transformative opportunities and potential.

In this interview, Stephen not only discusses his innovative vertical SaaS platform, but also addresses artificial intelligence, generative AI, innovation, creativity and culture more broadly. Want to see where generative AI is headed? Get perspectives that can inform your viewpoint, and help you pave the way for a successful 2024. Stay current. Keep reading.

Please share a bit about the Klu story:

We started Klu after seeing how capable the early versions of OpenAI’s GPT-3 were when it came to common busy-work tasks related to HR and project management. We began building a vertical SaaS product, but needed tools to launch new AI-powered features, experiment with them, track changes, and optimize the functionality as new models became available. Today, Klu is actually our internal tools turned into an app platform for anyone building their own generative features.

What kinds of challenges can Klu help solve for users?

Building an AI-powered feature that connects to an API is pretty easy, but maintaining that over time and understanding what’s working for your users takes months of extra functionality to build out. We make it possible for our users to build their own version of ChatGPT, built on their internal documents or data, in minutes.

What is your vision for the company?

The founding insight that we have is that there’s a lot of busy work that happens in companies and software today. I believe that over the next few years, you will see each company form AI teams, responsible for the internal and external features that automate this busy work away.

I’ll give you a good example for managers: Today, if you’re a senior manager or director, you likely have two layers of employees. During performance management cycles, you have to read feedback for each employee and piece together their strengths and areas for improvement. What if, instead, you received a briefing for each employee with these already synthesized and direct quotes from their peers? Now think about all of the other tasks in business that take several hours and that most people dread. We are building the tools for every company to easily solve this and bring AI into their organization.

Please share a bit about the technology behind the product:

In many ways, Klu is not that different from most other modern digital products. We’re built on cloud providers, use open source frameworks like Next.js for our app, and have a mix of TypeScript and Python services. But with AI, what’s unique is the need to lower latency, manage vector data, and connect to different AI models for different tasks. We built on Supabase using pgvector to build our own vector storage solution. We support all major LLM providers, but we partnered with Microsoft Azure to build a global network of embedding models (Ada) and generative models (GPT-4), and use Cloudflare edge workers to deliver the fastest experience.
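For readers unfamiliar with vector data, the lookup a vector store performs can be sketched in a few lines of plain Python. This is an in-memory illustration only, not Klu's implementation: the function names, document names, and three-dimensional vectors are made up, and a real system would store model-generated embeddings and run the search inside the database.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Cosine of the angle between two vectors; 1.0 means same direction.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], documents: dict[str, list[float]], k: int = 2) -> list[str]:
    # Rank document names by similarity to the query vector, best first.
    ranked = sorted(
        documents,
        key=lambda name: cosine_similarity(query, documents[name]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical embeddings for three internal documents.
documents = {
    "hr_policy": [1.0, 0.0, 0.0],
    "roadmap": [0.0, 1.0, 0.0],
    "reviews": [0.9, 0.1, 0.0],
}
nearest = top_k([1.0, 0.0, 0.0], documents)
```

Here `nearest` contains the two documents whose vectors point most nearly in the same direction as the query, which is how text related to a user's question is typically picked out before it is passed to a generative model.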

What innovative features or approaches have you introduced to improve user experiences/address industry challenges?

One of the biggest challenges in building AI apps is managing changes to your LLM prompts over time. The smallest changes might break for some users or introduce new and problematic edge cases. We’ve created a system similar to Git in order to track version changes, and we use proprietary AI models to review the changes and alert our customers if they’re making breaking changes. This concept isn’t novel for traditional developers, but I believe we’re the first to bring these concepts to AI engineers.
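The idea of tracking prompt versions the way Git tracks files can be illustrated with a small sketch. Everything here is an assumption for illustration: the `PromptHistory` class and its methods are hypothetical, and Klu's actual system, including its AI-based review of changes, is not described in enough detail in the interview to reproduce.

```python
import difflib
import hashlib


class PromptHistory:
    """Keeps an ordered list of prompt versions, identified by content hash."""

    def __init__(self) -> None:
        self._versions: list[tuple[str, str]] = []  # (version id, prompt text)

    def commit(self, text: str) -> str:
        # Derive a short version id from the prompt text itself.
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        # Skip the append when the text is identical to the latest version.
        if not self._versions or self._versions[-1][0] != version_id:
            self._versions.append((version_id, text))
        return version_id

    def diff_latest(self) -> list[str]:
        # Unified diff between the two most recent versions, if there are two.
        if len(self._versions) < 2:
            return []
        (old_id, old_text), (new_id, new_text) = self._versions[-2:]
        return list(
            difflib.unified_diff(
                old_text.splitlines(),
                new_text.splitlines(),
                fromfile=old_id,
                tofile=new_id,
                lineterm="",
            )
        )


history = PromptHistory()
first = history.commit("Summarize the feedback.\nUse bullet points.")
second = history.commit("Summarize the feedback.\nUse numbered lists.")
changes = history.diff_latest()
```

The diff in `changes` is the raw material a reviewer, human or automated, would inspect to judge whether a prompt edit is likely to change behavior for existing users.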

How does Klu strive to keep LLMs secure?

Cyber security is paramount at Klu. From day one, we created our policies and system monitoring for SOC2 auditors. It’s crucial for us to be a trusted partner for our customers, but it’s also top of mind for many enterprise customers. We also have a data privacy agreement with Azure, which allows us to offer GDPR-compliant versions of the OpenAI models to our customers. And finally, we offer customers the ability to redact PII from prompts so that this data is never sent to third-party models.

Internally, we have pentest hackathons to understand where things break and to proactively understand potential threats. We use classic tools like Metasploit and Nmap, but the most interesting results have been finding ways to mitigate unintentional denial of service attacks. We proactively test what happens when we hit endpoints with hundreds of parallel requests per second.
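The PII redaction mentioned in this answer can be sketched with two regular expressions. This is a deliberately simple illustration, not Klu's method: the patterns, the `redact` function, and the placeholder tokens are assumptions, and they cover only email addresses and phone-like digit runs. Production redaction usually needs broader detection, such as names, addresses, and ID numbers.

```python
import re

# Simplified patterns; real-world email and phone formats are more varied.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(prompt: str) -> str:
    # Replace matches with placeholder tokens before the prompt leaves the system.
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return PHONE.sub("[PHONE]", prompt)


cleaned = redact("Contact jane.doe@example.com or +1 (555) 010-9999 about the review.")
# cleaned == "Contact [EMAIL] or [PHONE] about the review."
```

Running redaction before the request is built means the third-party model never receives the original values, which matches the goal stated in the answer.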

What are your perspectives on the future of LLMs (predictions for 2024)?

This (2024) will be the year for multi-modal frontier models. A frontier model is just a foundational model that is leading the state of the art for what is possible. OpenAI will roll out GPT-4 Vision API access later this year and we anticipate this exploding in usage next year, along with competitive offerings from other leading AI labs. If you want to preview what will be possible, ChatGPT Pro and Enterprise customers have access to this feature in the app today.