#TensorFlow 2.0

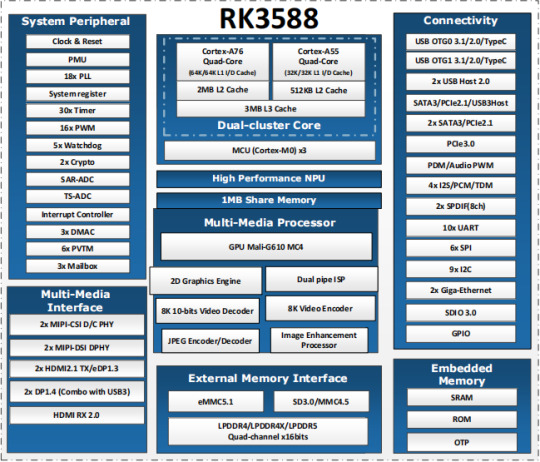

Introduction to RK3588

What is RK3588?

RK3588 is a general-purpose ARM-based SoC that integrates a quad-core Cortex-A76 (big cores) and a quad-core Cortex-A55 (little cores). Its G610 MP4 GPU handles complex graphics workloads smoothly, and the embedded 3D GPU makes RK3588 fully compatible with OpenGL ES 1.1, 2.0 and 3.2, OpenCL up to 2.2, and Vulkan 1.2. A dedicated 2D hardware engine with an MMU maximizes display performance and keeps operation smooth. A 6 TOPS NPU supports a variety of AI scenarios, enabling local offline AI computing, complex video stream analysis, and other applications. A range of built-in hardware engines supports 8K@60fps H.265 and VP9 decoding, 8K@30fps H.264 decoding, and 4K@60fps AV1 decoding, as well as 8K@30fps H.264 and H.265 encoding, a high-quality JPEG encoder/decoder, and a dedicated image pre-processor and post-processor.

RK3588 also introduces a new generation of fully hardware-based ISP (Image Signal Processor) supporting up to 48 megapixels. It implements many algorithm accelerators, such as HDR, 3A, LSC, 3DNR, 2DNR, sharpening, dehaze, fisheye correction, and gamma correction, which have a wide range of applications in graphics post-processing. RK3588 integrates Rockchip's new-generation NPU, which supports INT4/INT8/INT16/FP16 hybrid computing. Its strong compatibility makes it easy to convert network models from frameworks such as TensorFlow, MXNet, PyTorch, and Caffe. RK3588 also has a high-performance 4-channel external memory interface (LPDDR4/LPDDR4X/LPDDR5) capable of supporting demanding memory bandwidth.

RK3588 Block Diagram

Advantages of RK3588?

Computing: RK3588 integrates a quad-core Cortex-A76 and a quad-core Cortex-A55, a G610 MP4 graphics processor, and a separate NEON coprocessor. It also integrates Rockchip's self-developed third-generation NPU with 6 TOPS of computing power, which meets the requirements of most artificial intelligence models.

Vision: supports multi-camera input, ISP 3.0, and high-quality audio;

Display: supports multi-screen display, 8K high-quality output, 3D display, etc.;

Video processing: supports 8K video and multiple 4K codecs;

Communication: supports multiple high-speed interfaces such as PCIe 2.0 and PCIe 3.0, USB 3.0, and Gigabit Ethernet;

Operating system: Android 12 is supported. Linux and Ubuntu support will follow;

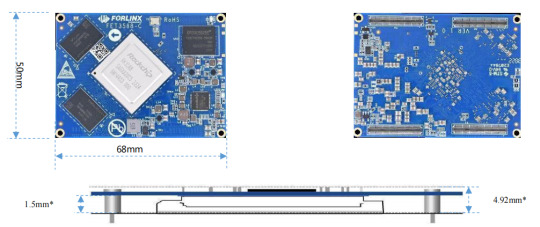

FET3588-C SoM based on Rockchip RK3588

The Forlinx FET3588-C SoM inherits all the advantages of RK3588. The following sections describe its structure and hardware design.

1. Structure:

The SoM measures 50mm x 68mm, smaller than most RK3588 SoMs on the market;

Ultra-thin 100-pin connectors join the SoM to the carrier board. The combined height of the connectors is 1.5mm, which greatly reduces the thickness of the assembly. Four mounting holes with a diameter of 2.2mm are reserved at the four corners of the SoM, so products used in vibrating environments can be secured with fixing screws to improve connection reliability.

2. Hardware Design:

The FET3588-C SoM uses a 12V power supply. A higher supply voltage raises the upper limit of deliverable power and reduces line loss, ensuring that the SoM can run stably at full load for long periods. The power supply adopts Rockchip's single-PMIC solution, which supports dynamic frequency scaling.

The FET3588-C SoM uses four 100-pin connectors, for a total of 400 pins. Every function that can be brought out from the processor is brought out, and there are enough ground return pins for the high-speed signals, as well as enough power and return pins, to ensure signal integrity and power integrity.

The default memory configuration of the FET3588-C SoM is 4GB/8GB (up to 32GB) of LPDDR4/LPDDR4X-4266; the default storage configuration is 32GB/64GB of eMMC (larger capacities are optional);

Every interface signal and power rail of the SoM and carrier board has been strictly tested to ensure that signal quality is good and power ripple stays within the specified range.

PCB layout: Forlinx uses a top layer-GND-POWER-bottom layer stack-up to ensure the continuity and stability of signals.

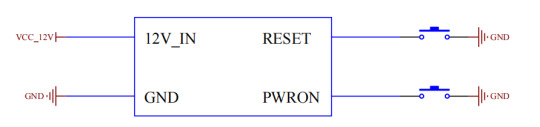

RK3588 SoM hardware design Guide

The FET3588-C SoM integrates the power supply and storage circuits in a small module, so the required external circuitry is very simple. A minimal system needs only a power supply and startup configuration to run, as shown in the figure below:

The minimal system includes the SoM power supply, the system flashing circuit, and the debugging serial port circuit. Its schematic can be found in the "OK3588-C_Hardware Manual". In general, it is recommended to connect some external devices, such as the debugging serial port; otherwise the user cannot tell whether the system has started. With the minimal system in place, add the functions you need according to the default interface definition of the RK3588 SoM provided by Forlinx.

RK3588 Carrier Board Hardware Design Guide

The Forlinx Embedded OK3588-C development board brings out a rich set of interfaces, which makes customers' development and testing much more convenient. The OK3588-C development board has also passed rigorous tests and can provide stable performance for customers' high-end applications.

To facilitate secondary development, Forlinx provides RK3588 hardware design guidelines that annotate the problems likely to be encountered when designing with RK3588. We want to help users make research and development simpler and more efficient, and make customers' products smarter and more stable. Because of the large amount of content, only a few interface design guidelines are listed here. For details, contact us online to obtain the "OK3588-C_Hardware Manual".

KServe Providers Serve NIMble Cloud & Data Centre Inference

It’s going to get easier than ever to implement generative AI in the workplace.

NVIDIA NIM, an array of microservices for generative AI inference, will integrate with KServe, an open-source programme that automates the deployment of AI models at the scale of cloud computing applications.

Because of this combination, generative AI can be implemented similarly to other large-scale enterprise applications. Additionally, it opens up NIM to a broad audience via platforms from other businesses, including Red Hat, Canonical, and Nutanix.

NVIDIA’s solutions are now available to clients, ecosystem partners, and the open-source community thanks to the integration of NIM on KServe. With a single API call via NIM, all of them may benefit from the security, performance, and support of the NVIDIA AI Enterprise software platform – the current programming equivalent of a push button.

AI provisioning on Kubernetes

Originally, KServe was a part of Kubeflow, an open-source machine learning toolkit built on top of Kubernetes, the open-source system for deploying and managing the software containers that hold all the components of big distributed systems.

KServe was created as Kubeflow’s work on AI inference grew, and it eventually developed into its own open-source project.

The KServe software is currently used by numerous organisations, including AWS, Bloomberg, Canonical, Cisco, Hewlett Packard Enterprise, IBM, Red Hat, Zillow, and NVIDIA. Several organisations have contributed to and used the software.

Behind the Scenes With KServe

In essence, KServe is a Kubernetes add-on that runs AI inference like a powerful cloud application. It runs with optimal performance, adheres to a common protocol, and supports TensorFlow, Scikit-learn, PyTorch, and XGBoost without requiring users to be familiar with the specifics of those AI frameworks.

These days, with the rapid emergence of new large language models (LLMs), the software is very helpful.

KServe makes it simple for users to switch between models to see which one best meets their requirements. Additionally, a KServe feature known as “canary rollouts” automates the process of meticulously validating and progressively releasing an updated model into production when one is available.

GPU autoscaling is an additional feature that effectively controls model deployment in response to fluctuations in service demand, resulting in optimal user and service provider experiences.
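The idea behind a canary rollout can be sketched in a few lines of Python. This is only an illustration of percentage-based traffic splitting, not KServe's implementation: the `route` and `rollout` helpers, the 100-bucket scheme, and the 10/50/100 schedule are all assumptions made for the example.

```python
def route(request_id: int, canary_percent: int) -> str:
    """Send a fixed share of requests to the canary model, the rest to stable."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Bucket each request into 0..99; buckets below the threshold hit the canary.
    return "canary" if request_id % 100 < canary_percent else "stable"


def rollout(schedule, requests_per_step=1000):
    """Walk a hypothetical rollout schedule and count where traffic lands."""
    results = []
    for percent in schedule:
        hits = sum(route(i, percent) == "canary" for i in range(requests_per_step))
        results.append((percent, hits, requests_per_step - hits))
    return results


if __name__ == "__main__":
    # Hypothetical schedule: 10% of traffic, then 50%, then full promotion.
    for percent, canary, stable in rollout([10, 50, 100]):
        print(f"{percent:>3}% canary -> canary={canary}, stable={stable}")
```

In a real deployment the step to the next percentage would be gated on validation of the new model; here the schedule simply advances so the traffic split is easy to see.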

KServe API

The benefits of KServe will now be accessible with the convenience of NVIDIA NIM.

All the complexity is handled by a single API request when using NIM. Whether their application is running in their data centre or on a remote cloud service, enterprise IT administrators receive the metrics they need to make sure it is operating as efficiently and effectively as possible. This is true even if they decide to switch up the AI models they’re employing.

With NIM, IT workers may alter their organization’s operations and become experts in generative AI. For this reason, numerous businesses are implementing NIM microservices, including Foxconn and ServiceNow.

NIM Runs on Numerous Kubernetes Platforms

Because of its integration with KServe, users will be able to access NIM on numerous corporate platforms, including Red Hat’s OpenShift AI, Canonical’s Charmed Kubeflow and Charmed Kubernetes, Nutanix GPT-in-a-Box 2.0, and many more.

Yuan Tang, a principal software engineer at Red Hat, contributes to KServe. “Red Hat and NVIDIA are making open source AI deployment easier for enterprises,” Tang said. By upgrading KServe and adding NIM support to Red Hat OpenShift AI, the two companies can simplify Red Hat clients’ access to NVIDIA’s generative AI platform.

“NVIDIA NIM inference microservices will enable consistent, scalable, secure, high-performance generative AI applications from the cloud to the edge with Nutanix GPT-in-a-Box 2.0,” stated Debojyoti Dutta, vice president of engineering at Nutanix, whose team also contributes to KServe and Kubeflow.

Andreea Munteanu, MLOps product manager at Canonical, stated, “We’re happy to offer NIM through Charmed Kubernetes and Charmed Kubeflow as a company that also contributes significantly to KServe. Their combined efforts will enable users to fully leverage the potential of generative AI, with optimal performance, ease of use, and efficiency.”

NIM benefits dozens of other software companies just by virtue of their use of KServe in their product offerings.

Contributing to the Open-Source Community

Regarding the KServe project, NVIDIA has extensive experience. NVIDIA Triton Inference Server uses KServe’s Open Inference Protocol, as mentioned in a recent technical blog. This allows users to execute several AI models concurrently across multiple GPUs, frameworks, and operating modes.
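To make the Open Inference Protocol mentioned above more concrete, the following Python sketch builds the JSON body of a protocol-style inference request. The tensor name `input-0`, the model name `my-model`, and the helper functions are hypothetical; the sketch only constructs the payload and the request path and does not contact any server.

```python
import json


def build_infer_request(name, shape, datatype, data):
    """Build an inference request body with a single input tensor."""
    expected = 1
    for dim in shape:
        expected *= dim
    # The flattened data must contain exactly one value per tensor element.
    if len(data) != expected:
        raise ValueError(f"shape {shape} needs {expected} values, got {len(data)}")
    return {
        "inputs": [
            {
                "name": name,
                "shape": list(shape),
                "datatype": datatype,
                "data": list(data),
            }
        ]
    }


def infer_path(model_name):
    """Path of the inference endpoint for a model (model name is hypothetical)."""
    return f"/v2/models/{model_name}/infer"


if __name__ == "__main__":
    body = build_infer_request("input-0", [2, 2], "FP32", [1.0, 2.0, 3.0, 4.0])
    print("POST", infer_path("my-model"))
    print(json.dumps(body, indent=2))
```

Because every compliant server accepts the same request shape, a client written this way should not need to change when the serving runtime behind the endpoint changes.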

NVIDIA concentrates on use cases with KServe that entail executing a single AI model concurrently across numerous GPUs.

NVIDIA intends to actively contribute to KServe as part of the NIM integration, expanding on its portfolio of contributions to open-source software, which already includes TensorRT-LLM and Triton. In addition, NVIDIA actively participates in the Cloud Native Computing Foundation, which promotes open-source software for several initiatives, including generative AI.

Using the Llama 3 8B or Llama 3 70B LLM models, try the NIM API in the NVIDIA API Catalogue right now. NIM is being used by hundreds of NVIDIA partners throughout the globe to implement generative AI.

Read more on Govindhtech.com

#kserve#DataCentre#microservices#NVIDIANIM#aimodels#redhat#machinelearning#kubernetes#aws#ibm#PyTorch#nim#gpus#Llama3#generativeAI#LLMmodels#News#technews#technology#technologynews#technologytrands#govindhtech

Navigating Mobile Application Development Trends in 2024

In the ever-evolving landscape of technology, mobile applications continue to play a pivotal role in shaping our daily lives. As we step into 2024, the realm of mobile application development is witnessing a transformative phase, driven by cutting-edge technologies and evolving user demands. From augmented reality (AR) to artificial intelligence (AI) integration, developers are exploring new avenues to craft immersive, intuitive, and innovative mobile experiences. Let's delve into the key trends shaping mobile application development in 2024.

1. Embracing Augmented Reality (AR) and Virtual Reality (VR):

2. Integration of Artificial Intelligence (AI) and Machine Learning (ML):

AI and Machine Learning are reshaping the way mobile applications function, enabling personalized user experiences, predictive analytics, and intelligent automation. In 2024, expect to see AI-driven chatbots offering seamless customer support, AI-powered recommendation engines enhancing content discovery, and ML algorithms optimizing app performance based on user behavior patterns. Developers are leveraging frameworks like TensorFlow and PyTorch to integrate AI capabilities into their mobile apps effectively.

3. Progressive Web Applications (PWAs) for Enhanced Accessibility:

Progressive Web Applications (PWAs) are gaining momentum as a cost-effective solution for delivering native app-like experiences through web browsers. In 2024, PWAs are set to redefine mobile app development by offering enhanced accessibility, offline capabilities, and cross-platform compatibility. With advancements in web technologies like Service Workers and WebAssembly, PWAs are becoming a preferred choice for businesses aiming to reach a broader audience without compromising on user experience.

4. Focus on Privacy and Security:

With growing concerns about data privacy and security breaches, mobile app developers are prioritizing robust security measures to safeguard user information. In 2024, expect to see stringent data encryption protocols, biometric authentication mechanisms, and compliance with evolving privacy regulations such as GDPR and CCPA. Developers are integrating advanced security frameworks like OAuth 2.0 and OpenID Connect to mitigate security risks and build trust among users.

5. Cross-Platform Development with Flutter and React Native:

Cross-platform development frameworks like Flutter and React Native continue to gain traction in 2024, enabling developers to build high-performance mobile apps that run seamlessly across multiple platforms. With Flutter's hot reload feature and React Native's extensive component libraries, developers can accelerate the development process while maintaining a consistent user experience across iOS and Android devices. As businesses seek cost-effective solutions and faster time-to-market, cross-platform development is poised to remain a dominant trend in mobile app development.

Conclusion:

As we navigate the mobile application development landscape in 2024, it's evident that innovation and user-centric design will drive the evolution of mobile experiences. From immersive AR/VR applications to AI-powered intelligent assistants, the possibilities are limitless. By embracing emerging technologies, adhering to stringent security standards, and focusing on user privacy, developers can create mobile applications that resonate with today's digitally savvy consumers, setting new benchmarks for innovation and usability in the years to come.

Visit Our Website : www.impulsion.in

#MobileAppDevelopment#ARVR#ArtificialIntelligence#MachineLearning#ProgressiveWebApps#Privacy#Security#CrossPlatformDevelopment#Flutter#ReactNative#UserExperience#Innovation#TechTrends#DigitalTransformation#EmergingTechnologies

Today Chinese stores list a new Android TV-Box, the MXQ G28, which stands out for its new and powerful Amlogic S928X processor.

MXQ G28 TV-Box Overview

Given its very high price, the box does not yet seem to be seriously on sale. It will be one of the few to carry the five-core Amlogic S928X, the most powerful processor from this brand. The operating system is Android 11, although we hope it will eventually be updated to Android 13.

The rest of its configuration is quite standard and unremarkable. One negative point is the absence of Widevine L1 certification, which makes it a bad choice for watching paid streaming in high quality. It is an expensive product and far from complete, so it is better to consider other options if you are looking for a streaming device.

Specification of MXQ G28 TV-Box

| Feature | Details |
| --- | --- |
| Product Name | MXQ G28 TV-Box with Amlogic S928X Processor |
| Processor | Penta Core Amlogic S928X (12nm) |
| CPU Architecture | 1 x ARM Cortex-A76 Core, 4 x ARM Cortex-A55 Cores |
| GPU | ARM-G57 MC2 with OpenGL ES 3.2, Vulkan 1.2, OpenCL 2.0 |
| AI Accelerator | 3.2 TOPS with TensorFlow and Caffe Support |
| Operating System | Android 11 (Potential for Future Update to Android 13) |
| RAM Options | 4/8 GB DDR4 |
| Storage Options | 16/32/64/128 GB eMMC |
| Expandable Storage | Micro SD Card Reader |
| Wireless Connectivity | Wi-Fi 6 with Internal Antenna; Bluetooth 5.2 |
| Wired Connectivity | RJ45 Gigabit Network Port |
| Audio Output | SPDIF Digital Audio Output |
| USB Ports | 1 x USB 3.0; 1 x USB 2.0 |
| Video Output | HDMI 2.1a (Up to 8K at 60fps) |
| HDR Support | HDR10, HLG, AV1, VP9, H.265, AVS3 |
| Included Accessories | Infrared Remote Control; HDMI Cable; Power Transformer |
| Price on AliExpress | Starting from $210 (Expected to decrease soon) |

Connectivity and other features

Wireless connectivity is provided by Wi-Fi 6 with an internal antenna and Bluetooth 5.2. Wired connections are a Gigabit RJ45 network port, an SPDIF digital audio output, a USB 3.0 connector, a USB 2.0 connector, and an HDMI 2.1a video output capable of resolutions up to 8K at 60 fps.

An infrared remote control, an HDMI cable and a power transformer are supplied with this TV-box.

Price and availability

The MXQ G28 TV-Box with Amlogic S928X processor can be purchased from $210 on AliExpress, a price that is expected to drop by half soon.

What is Azure Synapse Analytics?

Azure Synapse Analytics, formerly known as SQL Data Warehouse, is a cloud-based analytics service provided by Microsoft as part of its Azure cloud platform. It represents a significant leap in cloud data warehousing and analytics, offering a unified experience to ingest, prepare, manage, and serve data for immediate business intelligence and machine learning needs. The service integrates various big data and data warehouse technologies into a cohesive analytics service, simplifying the complex architecture typically required for large-scale data tasks.

The essence of Azure Synapse Analytics lies in its ability to bring together big data and data warehousing into a single, cohesive service that offers unmatched analytics capabilities. Unlike traditional approaches that often involve separate systems for data processing and analytics, Azure Synapse Analytics provides a unified environment for all types of data tasks. This integration not only simplifies the data architecture but also significantly reduces the time and effort required to extract insights from data.

One of the key features of Azure Synapse Analytics is its support for both on-demand and provisioned resources, giving users the flexibility to choose the most appropriate and cost-effective option for their workload. With on-demand query processing, users can run big data analytics without the need to set up or manage any resources, paying only for the queries they run. On the other hand, provisioned resources allow for more predictable performance and cost, ideal for regular workloads. Apart from this, Azure training can help you advance your career in Azure, and an Artificial Intelligence course lets you demonstrate your grasp of the basics of implementing popular algorithms such as CNN, RCNN, RNN, LSTM, and RBM with the TensorFlow 2.0 package in Python, along with many other fundamental concepts.

Azure Synapse Analytics stands out for its exceptional performance. It leverages a massively parallel processing architecture, which allows it to handle large volumes of data efficiently. This capability is crucial for organizations dealing with big data, as it ensures rapid processing and analysis, enabling faster decision-making and more efficient operations. The service also integrates deeply with other Azure services like Azure Machine Learning and Azure Data Lake Storage, enhancing its analytics capabilities.

Another distinguishing aspect of Azure Synapse Analytics is its powerful data exploration and visualization tools. It includes native integration with Microsoft Power BI, one of the leading business intelligence tools. This integration allows users to visualize and analyze data seamlessly, creating interactive reports and dashboards that can provide valuable insights into business operations. Additionally, it supports various data formats and offers a comprehensive set of analytics and machine learning tools, making it suitable for a wide range of applications.

Furthermore, Azure Synapse Analytics places a strong emphasis on security and compliance, which are critical considerations for any data platform. It offers advanced security features such as data masking, encryption, and access control, ensuring that sensitive data is protected. Microsoft also ensures that the service complies with various global and industry-specific regulatory standards, providing peace of mind for businesses concerned about data governance and compliance.

In the context of an increasingly data-driven world, Azure Synapse Analytics represents a powerful tool for organizations looking to harness the full potential of their data. Its combination of big data and data warehousing capabilities, along with robust performance, scalability, and security features, make it a leading solution in the field of cloud analytics. Whether for generating business insights, driving data-driven decision-making, or building sophisticated machine learning models, Azure Synapse Analytics provides a comprehensive, scalable, and efficient platform to meet a wide range of data analytics needs.

Python Course online

We cover Python programming (basic to advanced), a learning roadmap, and why you should learn Python.

We also offer a Python course with a certificate and guaranteed job opportunities.

Python: -

Python is a high-level, general-purpose, and interpreted programming language used in various sectors including machine learning, artificial intelligence, data analysis, web development, and many more. Python is known for its ease of use, powerful standard library, and dynamic semantics. It also has a large community of developers who keep on contributing towards its growth. The major focus behind creating it is making it easier for developers to read and understand, also reducing the lines of code.

History: -

Python, first created in the late 1980s by Guido van Rossum, is one of the most popular programming languages. During his research at the National Research Institute for Mathematics and Computer Science in the Netherlands, he created Python as a language that is very easy to read and use. The first version was released in 1991 and had only a few built-in data types and basic functionality.

Later, once it had gained popularity among scientists for numerical computation and data analysis, Python 1.0 was released in 1994 with extra features such as the map, lambda, and filter functions. After that, new versions with added functionality followed regularly:

Python 1.5 released in 1997

Python 2.0 released in 2000

Python 3.0 released in 2008, bringing newer functionality

Python 3.10 released in 2021
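As a small illustration of the map, lambda, and filter features mentioned above, the snippet below uses them in present-day Python 3. The sample list is made up for the example; note that in Python 3 `map` and `filter` return lazy iterators, so they are wrapped in `list` here.

```python
numbers = [1, 2, 3, 4, 5, 6]

# lambda creates a small anonymous function; map applies it to every item.
squares = list(map(lambda n: n * n, numbers))

# filter keeps only the items for which the function returns a true value.
evens = list(filter(lambda n: n % 2 == 0, numbers))

print(squares)
print(evens)
```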

Python Advance: -

This part of our Python tutorial is aimed at advanced programmers. Advanced Python topics are too difficult for beginners, which is also reflected in our image: the trail requires experience, and beginners with insufficient experience could easily get dizzy. Those who have successfully completed our beginner's tutorial or who have acquired sufficient Python experience elsewhere should not have any problems. As everywhere in our tutorial, we introduce the topics as gently as possible.

Most people will probably ask themselves immediately whether they are in the right place here. As in various other areas of life and science, it is not easy to find and describe the dividing line between beginners and advanced learners in Python. It is also difficult to decide which topics are appropriate for this part of the tutorial. Some people consider certain topics particularly difficult, while others rate them as easy. It is like the hiking trail in the picture: for people with mountain experience it is a walk, while people without experience or with a fear of heights dread the mere sight of the picture.

In deciding which topics count as advanced, i.e. Python topics that are too difficult for beginners, I was guided by my experience from numerous Python training courses.

Python Roadmap for Beginners: -

Basics- Syntax, variables, data types, conditionals, loops, exceptions, functions, lists, tuples, sets, dictionaries.

Advanced- List comprehensions, generators, expressions, closures, regex, decorators, iterators, lambdas, functional programming, map, reduce, filter, threading, magic methods

OOP- Classes, inheritance, methods

Web frameworks- Django, Flask, FastAPI

Data Science- NumPy, Pandas, Seaborn, Scikit-learn, TensorFlow, PyTorch.

Testing- Unit testing, integration testing, end-to-end testing, load testing

Automation- File manipulation, web scraping, GUI automation, network automation

DSA- Arrays and linked lists, heaps, stacks, queues, hash tables, binary search trees, recursion, sorting algorithms.

Package Managers- pip, Conda

This roadmap describes the areas Python covers and how deep the language goes, and it makes the importance of Python clearly visible.
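A few of the "Advanced" roadmap items can be seen working together in one short Python example. The function names below (`count_calls`, `square`, `evens_up_to`) are invented for illustration and are not part of any course material.

```python
import functools


def count_calls(func):
    """Decorator: wrap a function and count how often it is called."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@count_calls
def square(n):
    return n * n


def evens_up_to(limit):
    """Generator: lazily yield the even numbers from 0 up to limit."""
    for n in range(0, limit + 1, 2):
        yield n


# List comprehension: build a list by looping over the generator.
squares_of_evens = [square(n) for n in evens_up_to(6)]

print(squares_of_evens)
print(square.calls)
```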

Our institute, School of Core AI, assures you of job placement with leading brands or in the AI industry.

Our Python mentors and trainers each have more than 5 years of professional experience.

#python#datascience#technology#data analytics#artificial intelligence#ninjago pythor#pythor p chumsworth#machine learning

Machine Learning Notes (5): Keras 2.0

Photo by energepic.com on Pexels.com

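Among the deep learning frameworks we commonly use, TensorFlow and Keras are the top two in the popularity rankings. TensorFlow is very flexible: you can think of it as a differentiator, and you can even use it for things entirely outside deep learning, because what it does is compute derivatives for you. Once you have the derivatives, you can use them for things like gradient descent. But a toolkit this flexible takes some effort to learn.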
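The "differentiator plus gradient descent" idea can be sketched without TensorFlow at all. The example below is a minimal pure-Python illustration that uses forward-mode dual numbers to get derivatives automatically; it is not how TensorFlow is implemented, and the `Dual` class, the `loss` function, and the learning rate are all assumptions made for the example.

```python
class Dual:
    """A number that carries its own derivative along with its value."""

    def __init__(self, value, grad=0.0):
        self.value = value
        self.grad = grad

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.value + other.value, self.grad + other.grad)

    __radd__ = __add__

    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.value - other.value, self.grad - other.grad)

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.grad * other.value + self.value * other.grad)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants, i.e. with derivative 0."""
    return x if isinstance(x, Dual) else Dual(x)


def derivative(f, x):
    """Evaluate df/dx at x by seeding the input's derivative with 1."""
    return f(Dual(x, 1.0)).grad


def gradient_descent(f, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the derivative to approach a minimum of f."""
    w = start
    for _ in range(steps):
        w -= learning_rate * derivative(f, w)
    return w


def loss(w):
    # Hypothetical loss with its minimum at w = 3.
    return (w - 3) * (w - 3)


if __name__ == "__main__":
    print(derivative(loss, 0.0))
    print(gradient_descent(loss, start=0.0))
```

The optimisation loop never needs a hand-written formula for the derivative of `loss`; the differentiator supplies it, and that is the same role TensorFlow plays underneath a higher-level toolkit such as Keras.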

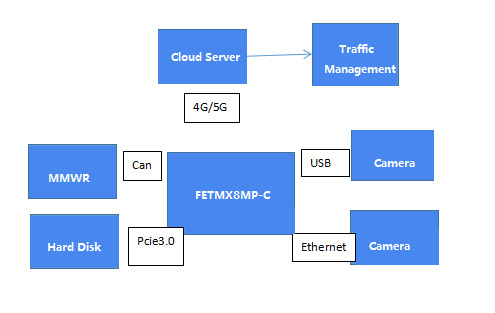

Realization of Radar-Vision Integration Machine Based on FETMX8MP-C SoM

Road sensors are mainly used to collect data on road conditions and provide raw data for the roadside sensing network. Intelligent transportation increasingly demands digitized, intelligent, real-time, and accurate information, and the perception of road information is becoming more and more sophisticated, which places higher demands on road sensors.

Traditional sensors can only obtain information about the lane and speed of a vehicle in one section or at a specific moment. By contrast, the radar-vision fusion integrated system uses mmWave radar, high-definition video processing, and AI deep learning algorithms to achieve all-weather, holistic perception of the road.

It can detect the flow, speed, type, queue length, and debris of vehicles on the road.

It can detect and identify accidents such as traffic congestion, breakdowns, and collisions on the road, as well as pedestrians, obstacles, and animals. It is also capable of automatically issuing warnings.

The radar-vision fusion integrated system has a wide range of applications and can be installed at the roadside, either independently on its own poles or on existing street lamp poles. It is commonly applied in crucial areas of urban transportation, key bridges, tunnels, key sections of highways, and critical monitoring areas.

Forlinx Embedded recommends using FETMX8MP-C SoM. The core board is designed based on the NXP i.MX 8M Plus processor, equipped with four Cortex-A53 cores with a maximum frequency of up to 1.6GHz and one Cortex-M7 core for real-time control.

High Performance: It is equipped with a built-in NPU that provides up to 2.3 TOPS of AI computing power to meet lightweight edge computing requirements. It is paired with 32-bit LPDDR4 memory with a data rate of up to 4.0 GT/s.

Low Power Consumption: Built on a 14nm FinFET process, it draws less than 2.0W when running applications and less than 20mW in deep sleep mode, meeting the power requirements of mobile devices.

Powerful Image Recognition: Dual hardware ISP (Image Signal Processor) supports resolutions of up to 12MP and provides an input rate of up to 375M pixels per second. This significantly enhances the image quality.

Mainstream AI Frameworks: TensorFlow Lite, Arm NN, OpenCV, Caffe, etc., to facilitate machine learning.

Advanced multimedia features: supports multiple hardware codec types at 1080p60: H.265/H.264, VP9, VP8.

Rich High-speed Interface Resources: 2 Gigabit Ethernet, 2 dual-purpose USB 3.0/2.0, PCIe Gen 3, 3 SDIO 3.0, 2 CAN FD, bringing more possibilities for high-speed signal transmission.
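Two of the figures quoted above lend themselves to quick back-of-the-envelope arithmetic. The Python sketch below derives a theoretical peak memory bandwidth from the 32-bit bus width and 4.0 GT/s data rate, and an upper bound on frame rate from the 375M pixels per second ISP input rate at 12MP. Both results are idealized ceilings under the assumption that the quoted figures apply to a single memory channel and a single camera stream; real-world throughput will be lower.

```python
def peak_bandwidth_gb_s(bus_width_bits, transfer_rate_gt_s):
    """Theoretical peak bandwidth: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfer_rate_gt_s


def max_frame_rate(pixel_rate_mpix_s, frame_megapixels):
    """Upper bound on frames per second for a given ISP pixel input rate."""
    return pixel_rate_mpix_s / frame_megapixels


if __name__ == "__main__":
    # 32-bit LPDDR4 at 4.0 GT/s
    print(peak_bandwidth_gb_s(32, 4.0), "GB/s")
    # 375 Mpixel/s ISP input rate at 12 MP per frame
    print(max_frame_rate(375, 12), "fps")
```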

Originally published at www.forlinx.net.

NVIDIA hopes to open new doors for the development of generative artificial intelligence (AI) models with AI Workbench. The enterprise toolkit aims to make AI development more streamlined, efficient, and accessible. Its reported capabilities range from scaling models across any infrastructure, including PCs, workstations, data centers, and public clouds, to seamless collaboration and deployment. The complexities involved in tuning, scaling, and deploying AI models can be eased with a unified platform, allowing developers to harness the full potential of AI for specific use cases. Demos at a recent event showed custom image generation with Stable Diffusion XL and a Llama 2 model fine-tuned for medical reasoning, developed with AI Workbench.

Challenges in Enterprise AI Development

Developing generative AI models involves multiple stages, each with its own challenges and demands. After selecting a pretrained model, such as a large language model (LLM), developers often want to fine-tune it for specific applications. This process requires infrastructure that can handle varied computing demands and integrate smoothly with tools such as GitHub, Hugging Face, NVIDIA NGC, and self-hosted servers. The journey demands expertise in machine learning, data manipulation techniques, Python, and frameworks such as TensorFlow. Added to that is the complexity of managing credentials, data access, and dependencies between components. With the proliferation of sensitive data, security is paramount and calls for robust measures to ensure confidentiality and integrity. On top of everything, managing workflows across different machines and platforms adds to the complexity.

AI Workbench Features

AI Workbench aims to simplify the development process by addressing these challenges with:

A user-friendly development platform with tools such as JupyterLab and VS Code and services such as GitHub.

A focus on transparency and reproducibility to foster better collaboration across teams.

Client-server deployment for switching between local and remote resources, which eases the scaling process.

Customization in text and image workflows.

For enterprises looking to explore the powerful world of generative AI, it may be a crucial step toward accelerating adoption and integration.

The Future of Enterprise AI Development

NVIDIA AI Workbench is particularly significant for enterprises, as it promises to streamline the development process with new avenues for customization, scalability, and cost-effective solutions. By addressing the challenges of technical expertise, data security, and workflow management, NVIDIA's toolkit could be a game changer for companies leveraging AI across a range of applications.

Featured image: JHVEPhoto/Shutterstock


Text

Free TensorFlow 2.0 Complete Course https://www.kdnuggets.com/2023/02/free-tensorflow-20-complete-course.html?utm_source=dlvr.it&utm_medium=tumblr&utm_campaign=free-tensorflow-2-0-complete-course


Text

Unlocking the Power of Deep Learning with TensorFlow 2.0

In the rapidly evolving field of artificial intelligence and machine learning, TensorFlow has emerged as a leading open-source library for building and deploying deep learning models. The TensorFlow 2.0 course offers a comprehensive learning experience that equips individuals with the skills and knowledge to harness the power of deep learning. In this blog post, we will explore how this course can strengthen your skills and discuss the diverse career paths that can be pursued after mastering TensorFlow 2.0.

Deep learning has revolutionized various industries, including healthcare, finance, retail, and autonomous systems. TensorFlow 2.0 provides a powerful framework for implementing deep neural networks and solving complex problems. By enrolling in this course, you will gain a deep understanding of the principles and techniques behind deep learning and learn how to leverage TensorFlow 2.0 to build and deploy state-of-the-art models.

The course focuses on hands-on learning, allowing you to apply theoretical concepts to real-world scenarios. Through a series of practical exercises and projects, you will develop proficiency in using TensorFlow 2.0 for tasks such as image classification, natural language processing, and generative modeling. This hands-on approach not only strengthens your skills but also enhances your problem-solving abilities and builds your confidence in working with deep learning models.

TensorFlow 2.0 introduces significant improvements and simplifications compared to its predecessor. This course guides you through its main features, including eager execution enabled by default, automatic differentiation, and the integrated Keras API. By mastering TensorFlow 2.0, you will be equipped with a versatile toolset for implementing various deep learning architectures and algorithms.
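To make two of these features concrete, here is a minimal sketch of eager execution and automatic differentiation in TensorFlow 2.x. It is not taken from the course itself; the tensor values and the toy loss `w * w` are assumptions chosen only for illustration.

```python
import tensorflow as tf

# Eager execution: operations run immediately and return concrete values,
# with no separate graph-building and session step.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
y = tf.matmul(x, x)
print(y.numpy())

# Automatic differentiation: tf.GradientTape records the operations applied
# to a variable so that gradients can be computed afterwards.
w = tf.Variable(3.0)  # illustrative value, not from the source
with tf.GradientTape() as tape:
    loss = w * w  # toy loss: d(loss)/dw = 2 * w
grad = tape.gradient(loss, w)
print(float(grad))
```

The same `GradientTape` pattern is what a custom training loop would use to obtain gradients of a real loss with respect to a model's trainable variables.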

The course covers a wide range of deep learning techniques, allowing you to build cutting-edge models for image recognition, natural language processing, and more. You will learn about convolutional neural networks (CNNs), recurrent neural networks (RNNs), generative adversarial networks (GANs), and transfer learning. These skills are highly sought after by industries that rely on pattern recognition, data analysis, and prediction.
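As a rough illustration of what a convolutional network looks like with the Keras API, the sketch below defines a very small CNN classifier. The input shape (28x28 grayscale images), the layer sizes, and the ten output classes are assumptions for the example, not details from the course.

```python
import tensorflow as tf

# Assumed setup: 28x28 single-channel images and 10 classes (illustrative only).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(8, kernel_size=3, activation="relu"),  # learn local image features
    tf.keras.layers.MaxPooling2D(pool_size=2),                    # downsample feature maps
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation="softmax"),              # class probabilities
])
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

# Forward pass on a dummy batch of four all-zero images to check the shapes.
batch = tf.zeros((4, 28, 28, 1))
probs = model(batch)
print(probs.shape)
```

Training would then be a call to `model.fit` on a labeled image dataset; the model here is untrained, so its output probabilities carry no meaning beyond confirming that the shapes line up.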

Completing the TensorFlow 2.0 course opens up exciting career paths in the field of artificial intelligence and machine learning. Here are a few of them:

Deep Learning Engineer: As a deep learning engineer, you will specialize in designing and implementing deep neural networks to solve complex problems. You will work on cutting-edge projects, research new techniques, and optimize models for performance and efficiency.

Data Scientist: With expertise in TensorFlow 2.0, you can pursue a career as a data scientist. Your deep learning skills will complement your knowledge of data analysis, statistical modeling, and machine learning, allowing you to extract insights from complex datasets and make data-driven decisions.

AI Researcher: If you have a passion for pushing the boundaries of artificial intelligence, a career as an AI researcher may be the path for you. With expertise in TensorFlow 2.0, you can contribute to advancements in deep learning algorithms, develop new architectures, and drive innovation in the field.

AI Consultant: As an AI consultant, you will work with businesses to identify opportunities for implementing deep learning solutions. Your expertise in TensorFlow 2.0 will enable you to provide strategic guidance, develop custom models, and assist in the integration of deep learning into existing systems.

Entrepreneur: Armed with deep learning skills and TensorFlow 2.0 knowledge, you can embark on an entrepreneurial journey by developing innovative AI-based products or starting your own AI consulting firm. The demand for AI solutions is growing rapidly, presenting numerous opportunities for entrepreneurial ventures.

The TensorFlow 2.0 course is a gateway to the world of deep learning, providing you with the skills and knowledge needed to excel in the field of artificial intelligence. Through hands-on learning, you will gain proficiency in building and deploying deep learning models using TensorFlow 2.0. This expertise opens doors to various career paths, including deep learning engineer, data scientist, AI researcher, AI consultant, and entrepreneur. So, seize the opportunity and enroll in the TensorFlow 2.0 course to unlock your potential in the exciting world of deep learning and AI.
